Zig-zag sampling for discrete structures and non-reversible phylogenetic MCMC

Abstract

We construct a zig-zag process targeting a posterior distribution defined on a hybrid state space consisting of both discrete and continuous variables. The construction does not require any assumptions on the structure among discrete variables. We demonstrate our method on two examples in genetics based on the Kingman coalescent, showing that the zig-zag process can lead to efficiency gains of up to several orders of magnitude over classical Metropolis–Hastings algorithms, and that it is well suited to parallel computation. Our construction resembles existing techniques for Hamiltonian Monte Carlo on a hybrid state space, which suffers from implementationally and analytically complex boundary crossings when applied to the coalescent. We demonstrate that the continuous-time zig-zag process avoids these complications.

Keywords: Bayesian inference; coalescent; hybrid state space; piecewise deterministic Markov process; zig-zag process

1 Introduction

The zig-zag process is a non-reversible, piecewise deterministic Markov process introduced by [BR17, BFR19b] for continuous-time MCMC. It has several advantages over reversible methods such as Metropolis–Hastings [Has70] and Gibbs sampling [GS90]: it avoids diffusive backtracking which slows their mixing, and is rejection-free so that no computation is wasted on rejected moves.

In brief, the generator of the zig-zag process targeting the probability density (with respect to the product of the Lebesgue and counting measures) on a state space is

where is the derivative with respect to , and flips the sign of . The flip rates

| (1) |

with , ensure that leaves invariant [BFR19b, Theorem 2.2]. In words, the coordinates of move at constant velocities until a flip at coordinate , when the corresponding velocity changes sign.

To date, the zig-zag processes have been applied to targets such as the Curie–Weiss model [BR17] and logistic regression [BFR19b], whose state spaces have simple geometric structures with natural notions of direction and velocity. Discrete variables (other than the velocities) have been restricted to cases with simple partial orders, such as model selection [CFS20, GD21]. We construct a zig-zag process on a general hybrid state space with both continuous and discrete coordinates, without imposing any structure on discrete coordinates. This is done by introducing a separate space of continuous variables for each value of the discrete variable, introducing boundaries into the continuous spaces, and updating the discrete variable when boundaries are hit. The strategy takes advantage of the full generality of piecewise-deterministic Markov processes [Dav93, Section 24], and is resembles similar work for Hamiltonian Monte Carlo (HMC) [DBZM17, NDL20]. Our method can also been seen of as a generalization of the zig-zag process on a restricted domain [BBCD+18] to a union of many restricted sub-domains, with jumps between sub-domains at boundary hitting events. We illustrate our method with an application to the coalescent [Kin82]: a tree-valued target with continuous branch lengths, discrete tree topologies with no natural partial order, and no canonical geometric structure.

The coalescent examples illustrate the need for methods which are implementable on complex state spaces. They are also of interest because existing MCMC algorithms for coalescents tend to mix slowly. The key difficulty lies in designing Metropolis–Hastings proposal distributions which combine efficient exploration with a high acceptance rate [MV05, HDPD08, LVH+08]. Workarounds consist of empirical searches for efficient proposals [HD12, ASR16] or Metropolis-coupled MCMC [Gey92]. The former does not scale to problems for which empirical optimization is infeasible. The latter helps mixing between modes, but does not overcome low acceptance rates or the backtracking behavior of reversible MCMC.

The zig-zag process has some similarities with HMC [Nea10], which augments the state space with momentum and uses Hamiltonian dynamics to propose large steps which are accepted with high probability, though they are not rejection-free. Like the zig-zag process, HMC requires gradient information and a suitable geometric embedding of the target. [DBZM17] provided those for the coalescent using an orthant complex construction of phylogenetic tree space [BHV01]. Our examples differ from the method of [DBZM17] in three ways. Firstly, we replace the embedding of [BHV01] with -space [GD16], which is better suited to ultrametric trees. Secondly, the zig-zag process is readily implementable on -space via Poisson thinning without a numerical integrator such as leap-prog [DBZM17, Algorithm 1]. Finally, the zig-zag process has simple boundary behavior between orthants and does not require boundary smoothing [DBZM17, Section 3.3], chiefly because discontinuous gradients are easier to handle on continuous rather than discretized paths.

The rest of the paper is structured as follows. Section 2 defines the zig-zag algorithm with discrete and continuous variables, and proves that it has the desired invariant distribution. Section 3 recalls the coalescent and the -space embedding. In Sections 4 and 5 we recall the popular infinite and finite sites models of mutation, derive zig-zag processes for each, and demonstrate their performance via simulation studies. Section 6 concludes with a discussion. The algorithms and data sets used in the simulation studies are available at https://github.com/JereKoskela/tree-zig-zag.

2 Zig-zag on hybrid spaces

The definition of our zig-zag process follows [Dav93, Section 24]. Let be a countable set. For each , let be an open subset of , be its closure, and be its boundary. We assume that are piecewise Lipschitz and denote by the restriction of to non-corner points. Let and . For a point , let be the outward unit normal, and let be the sets of velocities with which a zig-zag process can exit (+) or enter (-) at . Zig-zag dynamics imply . We also define and . Integrals over and , or subsets thereof, are taken to incorporate discrete sums in the coordinate.

The zig-zag process is defined on , with target on for a given density . At , the process moves with velocity and each coordinate flips at rate , defined as in (1) since is fixed between boundary hitting events. When , the process jumps according to a Markov kernel , where denotes the set of probability measures on . We assume that and satisfy the skew-detailed balance condition

| (2) |

for any and , as well as

| (3) |

for any , and exclude jumps to paths pointing into corners by assuming

| (4) |

where is the time the line hits . We will abuse notation and use as the target density and Lebesgue measure on , and as their restrictions to the surface , on which is not a probability density.

By [Dav93, Theorem 26.14], the zig-zag process with dynamics defined above is a piecewise-deterministic Markov process with extended generator

| (5) |

whose domain consists of measurable functions on satisfying:

-

1.

For each , the limit exists.

-

2.

For each , the function is absolutely continuous on .

-

3.

For ,

(6) -

4.

The random variable is integrable for each , where are the successive jump times (both velocity flips and jumps due to hitting a boundary) of .

For a set , let and be the respective spaces of bounded and continuously differentiable functions on . Let . For , we define

Theorem 1.

Proof.

Provided in the appendix. ∎

Remark 1.

In addition to having the right invariant distribution, a practical algorithm needs to be ergodic. To discuss ergodicity of the zig-zag process from Theorem 1, let be zig-zag processes restricted to respective spaces by boundary jump kernels , each with target proportional to . When is finite, a sufficient condition for ergodicity of the global process is that are all ergodic, and that

| (8) |

for ordered pairs which form a cycle spanning the support of . [BRZ19] provide conditions for ergodicity of single-domain zig-zag processes.

We conclude this section with a pseudocode specification of our zig-zag algorithm.

3 The coalescent and a geometric embedding

An ultrametric binary tree with labeled leaves is a rooted binary tree in which each leaf is an equal graph distance away from the root. We follow [GD16] and encode such a tree with leaf labels as the pair , where is a ranked topology and . The continuous variables encode times between mergers. The time from the leaves to the first merger is , and subsequent variables are times between successive mergers. The ranked topology is an -tuple of pairs of labels, where the th pair specifies the two child nodes of the th merger. Non-leaf nodes are labeled by the leaves they subtend. For example, the ranked topology

encodes the four leaf caterpillar tree depicted in Figures 3 and 7 with nodes labeled left to right. We order the two entries of by their least element for definiteness.

The coalescent [Kin82] is a seminal model for the genetic ancestry of samples from large populations. Under the coalescent, a tree has probability density

| (9) |

which arises as the law of a tree constructed by starting a lineage from each leaf, and merging each pair of lineages at rate 1 until the most recent common ancestor (MRCA) is reached. The success of the coalescent is due to robustness: distributions of ancestries of a large class of individual-based models converge to the coalescent in the infinite population limit under suitable rescalings of time. For details, see e.g. [Wak09].

We define swap operators for via

In words, swaps the order of the th and th mergers. We also define pivot operators and for as

| (10) |

where (resp. ) is the entry of with the higher (resp. lower) least element, and is the sibling: the entry of that is not . Pivot is defined by interchanging and in (10). The pivots are the two nearest neighbor interchanges between the th merger and the merger involving its nearest child. Figure 1 illustrates all three operators.

Next we describe -space, which gives a geometric structure to the set of pairs . For fixed , the space of -vectors is the orthant . Each boundary point with corresponds to a degenerate tree in which one of three things happens:

-

1.

The two leaves of merge at time 0 if .

-

2.

There are two simultaneous mergers if .

-

3.

There is a simultaneous merger of three lineages if .

Type 1 boundaries are boundaries of the whole -space. Type 2 boundaries separate orthants corresponding to two ranked topologies separated by an -step. Trajectories crossing the boundary move from one ranked topology to the other. Type 3 boundaries separate the three orthants which resolve the triple merger into two binary mergers, which differ by a or -step. A trajectory that crosses the boundary visits a tree with the triple merger. Figure 2 depicts the -space with three leaves, and a type 2 boundary in a general -space. An example with five leaves is depicted in [GD16, Figure 2], and the variables are illustrated in Figures 3 and 7 in Sections 4 and 5.

We will use -space to construct zig-zag processes whose state spaces consist of tree topologies, branch lengths, and a scalar parameter introduced in the next section. The discrete variables will be the ranked topologies, with the boundary crossings described above defining the boundary jump kernel . For more on -space, e.g. existence and uniqueness of geodesics and Fréchet means, we refer to [GD16].

4 The infinite sites model

The infinite sites model [Wat75] connects the coalescent tree to DNA sequence data by associating the MRCA with the unit interval . Mutations with independent, -distributed locations accrue along branches of the tree (with branch lengths as specified by ) at rate . The type of a leaf consist of the mutations along branches separating it from the MRCA. We denote the resulting list of types of the leaves by . A realization of a coalescent tree and associated is shown in Figure 3. The typical task is to sample from the conditional law corresponding to observing , but not the tree which gave rise to it.

To handle mutations, we define as the rooted graphical tree with nodes, the first of which are leaves labeled , while the remaining are labeled as in . Edges connect children to their parents, and edge lengths are determined by . For an edge , we denote by and the respective labels of the child and parent nodes of , by the number of mutations on , and by the edge length, where we write if contributes to the length of in which case we say spans .

Given a prior density for , the posterior distribution of follows from (9), the fact that the number of mutations on branch is Poisson()-distributed given , and that mutations on distinct branches are independent. In particular,

| (11) |

provided is consistent , and otherwise. This distribution can be sampled using a zig-zag algorithm by taking to be the set of ranked topologies on leaves which are consistent with , as well as and for each . In the boundary classification of Section 3, is another type 1 boundary. For , we define as the index of the zero variable, taken to be in the case of .

We augment the state space with zig-zag velocities , of which drive and drives . For , we also define as the rate of change of . The boundary kernel is defined separately on each boundary type:

| (12) |

At a type 1 boundary the process reflects back into via a velocity flip. At a type 2 boundary it undergoes an -step and a velocity flip to pass through the boundary. For type 3 boundaries it chooses an adjacent orthant uniformly at random.

In the interiors of orthants, velocity flip rates are

| (13) | ||||

| (14) |

Simulating holding times with these rates is difficult due to the time intervals during which they vanish. One strategy is Poisson thinning via dominating rates consisting of only those terms in the round brackets in (13) and (14) whose sign matches that of the corresponding velocity , but these can result in loose bounds and inefficient algorithms. Instead, we define for brevity, and for define as the shorter child branch from parent node . For we adopt the short-hands

and finally define the time localization as

| (15) |

where , and is a maximum increment for the case when all velocities are positive. The indicator functions in the denominator pick out boundaries where (11) vanishes: type 1 or 3 boundaries corresponding to length 0 branches which carry at least one mutation, and the boundary if there is at least one mutation in total. The parameter ensures that, when the current process time is , such boundaries cannot be hit on , and at most one other boundary can be reached. We found gave good performance across our tests in Sections 4 and 5. A larger value results in tighter bounds on (13) and (14), but wastes more computation as is hit more often before an accepted velocity flip.

On time interval , flip rates (13) and (14) are bounded above by constant rates

where, for each , , if and if . Algorithms 2 and 3 in the appendix give pseudocode for simulating holding times, velocity flips, and boundary crossings as outlined above.

Proposition 1.

Proof.

Provided in the appendix. ∎

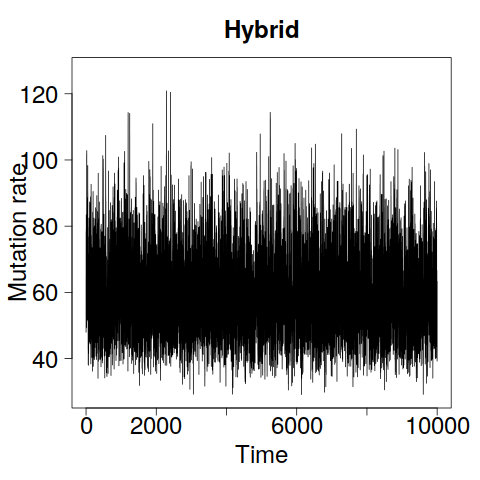

We compared the zig-zag process to a Metropolis–Hastings algorithm by reanalyzing the data of [WFDP91] with , 14 distinct types, and 18 mutations. We used the improper prior , set , and set from trial runs to cross the -mode in unit time. The appendix details the Metropolis–Hastings algorithm, and other tuning parameters in Table 3. We compared both methods to a hybrid combining zig-zag dynamics with continuous time Metropolis–Hastings moves at rate . Performance was insensitive to provided it was not extreme: small values resemble a zig-zag process, while large values resemble Metropolis–Hastings.

Figure 4 shows that the zig-zag and hybrid methods mix visibly better than Metropolis–Hastings over the latent tree, as measured by the tree height . However, they are not as effective at exploring the upper tail of the -marginal, likely because they do not stay in regions of short trees for long enough for to increase into the tail.

To assess scaling, we simulated two data sets: one of size with mutation rate (the approximate posterior mean in Figure 4) and one with and , which models a segment of DNA 10 times longer. Figures 5 and 6 demonstrate that the zig-zag and hybrid processes scale far better than Metropolis–Hastings, particularly when . Estimates in Table 1 quantify the improvement to 1–3 orders of magnitude.

| Data set | Method | ESS()/sec | ESS()/sec | Run time (min) |

| [WFDP91] | Metropolis | 5 | 3 | 3.5 |

| Zig-zag | 160 | 179 | 0.5 | |

| Hybrid | 128 | 67 | 0.5 | |

| , | Metropolis | 70 | ||

| Zig-zag | 1.7 | 1.2 | 45 | |

| Hybrid | 0.5 | 0.3 | 115 | |

| , | Metropolis | 50 | ||

| Zig-zag | 36 | 37 | 1 | |

| Hybrid | 22 | 22 | 1.5 |

5 The finite sites model

The finite sites model [JC69] is more detailed than the infinite sites model, but has greater computational cost. Consider a finite set of sites with a finite number of possible types per site; for example or . Mutations occur along branches of the coalescent tree at each site with rate , and the type of a mutant child is drawn from stochastic matrix with unique stationary distribution . We denote the transition matrix of the -valued compound Poisson process with jump rate and jump transition matrix by . Figure 7 depicts a realization of the finite sites coalescent.

As in Section 4, we denote the configuration of types at the leaves by , and seek to sample from the posterior , which can be written as a sum over the types of internal nodes:

| (16) |

where is the type at site on the node with label , and denotes edges which do not end in a leaf. The target (16) can be evaluated efficiently using the pruning algorithm of [Fel81].

The posterior (16) can be sampled using zig-zag dynamics with the same construction as in Section 4. Velocity flip rates can be written in terms of branch-specific gradients as

| (17) | ||||

| (18) |

which can be evaluated using the linear-cost method of [JZH+20].

We show that events with rates (17) and (18) can be simulated using the example of [GT94, Section 7.4], in which , and is the matrix which always changes state, corresponding to and

| (19) |

As (19) is not bounded away from 0 when , (17) and (18) lack simple bounds for Poisson thinning. As in (15), bounds can be obtained by time localization using

where is a default increment in case all velocities are positive. The variable localizes the next zig-zag time step beginning at time so that at most one branch can shrink to length zero on , can fall by at most of its present value, and can fall by at most of its present value if the first two leaves to merge are of distinct types. This treatment of and is needed as (17) and (18) diverge in these cases, rendering the and boundaries inaccessible.

Given , we have the following bounds on (19) on the time interval :

where . Substituting these bounds into [JZH+20, Equation (9)] provides bounds on flip rates that can be evaluated with cost.

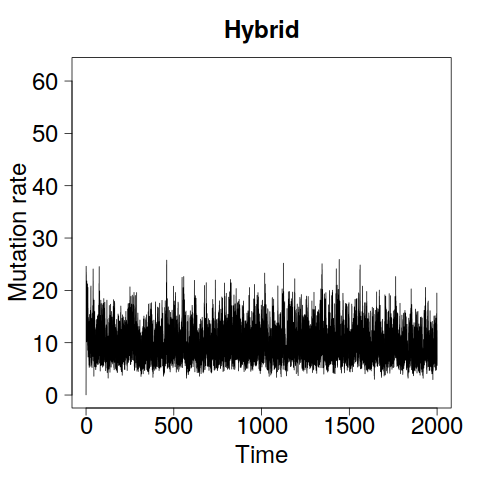

Figure 8 demonstrates that the zig-zag process mixes over latent trees faster than Metropolis–Hastings again, but struggles to explore the upper tail of the -marginal. The hybrid method was run with to compensate for shorter run lengths than in Section 4, and thus resembles Metropolis–Hastings rather than the zig-zag process.

Figures 9 and 10 show results for two further simulated data sets: one with and , and one with and . The superior mixing of the zig-zag process over the latent tree is clear. The lack of mixing in the upper tail of the -marginal is also stark, particularly in Figure 9 where zig-zag significantly underestimates posterior variance. The estimated posterior means of all three methods coincide in all cases (results not shown).

| Data set | Method | ESS()/sec | ESS()/sec | Run time (min) |

|---|---|---|---|---|

| [GT94] | Metropolis | 1.8 | 0.2 | 29 |

| Zig-zag | 2.3 | 1.0 | 18 | |

| Hybrid | 2.1 | 0.6 | 16 | |

| , | Metropolis | 0.09 | 1567 | |

| Zig-zag | 0.004 | 1749 | ||

| Hybrid | 0.01 | 0.003 | 1800 | |

| , | Metropolis | 0.04 | 0.03 | 257 |

| Zig-zag | 0.05 | 0.13 | 175 | |

| Hybrid | 0.08 | 0.07 | 183 |

6 Discussion

We have presented a general method for using zig-zag processes to sample targets defined on hybrid spaces consisting of discrete and continuous variables. This was done by introducing boundaries into the state space of continuous variables and updating discrete components via a Markov jump kernel whenever a boundary was hit. The resulting algorithm remains a piecewise-deterministic Markov processes in the sense of [Dav93, Section 24], and generalizes existing zig-zag processes for restricted domains [BBCD+18]. Crucially, no assumptions of structure among the discrete variables are required. The key conditions on are the skew-detailed balance (2), which is local, and (3), which involves an integral with respect to but not the target . Both are verifiable in applications, and do not require to be normalized. Our method is reminiscent of discrete Hamiltonian Monte Carlo [DBZM17, NDL20], but the lack of time discretization simplifies boundary crossings (though see [NDL20, Section S6.4]).

We have demonstrated the method on two examples involving the coalescent, which is a gold-standard model in phylogenetics. It is defined on the space of binary trees consisting of discrete tree topologies and continuous branch lengths, which lacks a simple geometric structure, e.g. a partial order or a tractable norm. We have also shown that the zig-zag process can improve mixing over trees relative to Metropolis-Hastings, particularly under the infinite sites model. This model is widely used to analyze ever larger data sets, and the zig-zag process shows promise for expanding the scope of feasible MCMC computations.

The zig-zag process was more efficient than Metropolis–Hastings under the infinite sites model in terms of effective sample size, but struggled to explore the tails of the -marginal. A likely reason is correlation in the target: high mutation rates are only be attainable when branch lengths are short. A Metropolis–Hastings algorithm can jump to a high mutation rate as soon as the latent tree has short branches, while the zig-zag process must traverse all intervening mutation rates before branch lengths grow. The speed up zig-zag method of [VR21] has state-dependent velocities, and could provide further improvement. The hybrid method with both zig-zag motion and Metropolis-Hastings updates interpolated between the two algorithms.

All three algorithms exhibited much longer run times under the finite sites model than under infinite sites. For the zig-zag and hybrid methods, that is due to the cost per evaluation of (17) and (18), of which there are . However, flip times for different velocities are conditionally independent given the current state and can be generated in parallel, unlike steps of the Metropolis–Hastings algorithm. Hence the zig-zag process is well suited to parallel architectures.

Acknowledgements

The author was supported by ESPRC grant EP/R044732/1, and is grateful to Jure Vogrinc and Andi Wang for productive conversations on MCMC for coalescent processes, and non-reversible MCMC in general.

Appendix

Proof of Theorem 1.

Since the initial point has a density, the Poisson construction of velocity flips and (4) ensure that corners (where the the process is undefined) are hit with probability 0.

Next, we will verify the four conditions of [Dav93, Section (24.8)]. Firstly, the deterministic zig-zag motion is at a constant, bounded velocity, and hence forms a non-explosive, Lipschitz vector field. The flip rates are bounded, and hence integrable, on compact subsets of because is continuously differentiable in away from boundaries. Velocity flips and boundary transitions both change the state with probability 1, the former by explicit construction and the latter because . The final standard condition is (7). In addition, the first three conditions of [CPWF21, Theorem 1] hold by construction, and hence it suffices for stationarity of to verify their fourth condition by checking that for all ,

The change of variable combined with the fact that is uniform, then (1), and then an integration by parts yield

| (20) |

where is the gradient operator with respect to . To conclude, we will show that the first term on the right hand side of (20) cancels with the second. Using (6), then (2), and then ,

We now exchange order of integration (justified by the fact that is bounded), then apply (3) and the fact that is uniform to obtain

| (21) |

Proof of Proposition 1.

The proof consists of checking the hypotheses of Theorem 1. The set of ranked topologies of binary trees with leaves is finite, and hence so is its restriction to topologies consistent with . The initial condition has a density by assumption. The set of points from which deterministic motion at a constant velocity first hits a boundary at a corner consists of finitely many Lebesgue-null lines. Hence, since the initial condition has a density and the boundary kernel does not change , corners are hit with probability 0.

The augmented posterior corresponding to (11) is by inspection, and only vanishes at a boundary when or a branch with mutations has length zero.

The unit outward normal is , where the is in the th place. We also have , , the third case of (12) is invariant under permutation of , and (11) is continuous even at the boundaries (though its gradient is not), so verifying (2) and (3) is a routine calculation.

It remains to verify (7), which we do by stochastic domination. By construction, the zig-zag process crosses boundaries only when a corresponding velocity is negative. The boundary crossing flips the velocity, and a boundary corresponding to the same coordinate cannot be crossed again until a further flip. Hence, the number of jumps in the zig-zag process started at by time is dominated by , where

which arises as the volume of the set which is reachable from by time multiplied by upper bounds for (13) and (14) for positive velocities on the reachable set. Here is the -norm, which is invariant for all valid zig-zag velocities . In the construction of the dominating random variable, negative velocities are assumed to flip to positive instantaneously which accounts for the initial summand of (the maximum number of initial negative velocities) and the factor of 2. ∎

The remainder of the appendix details the zig-zag and Metropolis–Hastings algorithms used in Sections 4 and 5. Algorithms 2 and 3 provide pseudocode implementations of the zig-zag methods. Line 7 of Algorithm 2 is given twice, with the first applicable to Section 4 and the second to Section 5. Otherwise, both algorithms apply to both sections.

The for-loop on lines 4–10 of Algorithm 1 implements the time localization and ensures that time steps cannot cause negative entries in . The distribution of the increment thus has an atom at the value determined by lines 4–10, signifying either a boundary crossing or a need to recompute the time localization and rates . However, the continuous-time motion of the trajectory is not interrupted in either case; see also [BBCD+18, Section 2.2].

Iterations of the outer while-loop of Algorithm 1 flip a velocity for one of two reasons: a flip event occurs or a boundary is hit. In the former case the reason for the flip is clear by construction. In the latter, a trajectory arriving at a boundary must have for the corresponding velocity. After crossing, the trajectory moves into the interior of an adjacent orthant, which corresponds to in its local coordinates.

The Metropolis–Hastings algorithms in Sections 4 and 5 both used the same proposal mechanisms consisting of three steps. An iteration of the algorithm is one scan through all three steps, with an accept/reject correction after each step. The hybrid method only used steps 1 and 3.

-

1.

A Gaussian random walk update of reflected at 0 with proposal variance tuned to obtain an average acceptance probability .

-

2.

An update of holding times under a fixed topology.

-

3.

A subtree-prune-regraft step.

Type 2 updates first additively perturb the initial holding time as

Further holding times are conditionally independently perturbed as

where and are the perturbed times of the child nodes of the node at time . Again, was tuned so that the average acceptance probability was .

Type 3 updates sample an ordered pair of edges , where is an edge connecting the MRCA to . Edge is deleted, and its child is reattached into . Letting be the time of the node with label in an abuse of notation, the reattachment time has distribution

Under the infinite sites model, moves into topologies incompatible with observed mutations were rejected essentially instantaneously, before costly likelihood evaluations.

Table 3 summarizes hyperparameters and quantities of interest used in the simulation.

| Data set | Method | |||||||

|---|---|---|---|---|---|---|---|---|

| [WFDP91] | Metropolis | - | 8 | 0.6 | 0.27 | 0.25 | 0.06 | - |

| Zig-zag | 8 | - | - | - | - | - | - | |

| Hybrid | 8 | 10 | - | 0.24 | - | 0.06 | 10 | |

| , | Metropolis | - | 6 | 0.25 | 0.23 | 0.24 | 0.03 | - |

| Zig-zag | 6 | - | - | - | - | - | - | |

| Hybrid | 6 | 6 | - | 0.23 | - | 0.03 | 10 | |

| , | Metropolis | - | 18 | 0.4 | 0.26 | 0.23 | 0.02 | - |

| Zig-zag | 40 | - | - | - | - | - | - | |

| Hybrid | 40 | 18 | - | 0.24 | - | 0.01 | 10 | |

| [GT94] | Metropolis | - | 4 | 0.7 | 0.23 | 0.25 | 0.12 | - |

| Zig-zag | 4 | - | - | - | - | - | - | |

| Hybrid | 4 | 4 | - | 0.24 | - | 0.12 | 100 | |

| , | Metropolis | - | 4 | 0.3 | 0.21 | 0.21 | 0.31 | - |

| Zig-zag | 4 | - | - | - | - | - | - | |

| Hybrid | 4 | 3 | - | 0.29 | - | 0.32 | 100 | |

| , | Metropolis | - | 14 | 0.6 | 0.20 | 0.27 | 0.04 | - |

| Zig-zag | 20 | - | - | - | - | - | - | |

| Hybrid | 20 | 14 | - | 0.20 | - | 0.04 | 100 |

References

- [ASR16] A. J. Aberer, A. Stamatakis, and F. Ronquist. An efficient independence sampler for updating branches in Bayesian Markov chain Monte Carlo sampling of phylogenetic trees. Syst. Biol., 65(1):161–176, 2016.

- [BBCD+18] J. Bierkens, A. Bouchard-Côte, A. Doucet, A. B. Duncan, P. Fearnhead, T. Lienart, G. Roberts, and S. J. Vollmer. Piecewise deterministic Markov processes for scalable Monte Carlo on restricted domains. Stat. Probab. Lett., 136:148–154, 2018.

- [BFR19a] J. Bierkens, P. Fearnhead, and G. Roberts. Supplement to the zig-zag process and super-efficient sampling for Bayesian analysis of big data. Ann. Stat., 47, 2019.

- [BFR19b] J. Bierkens, P. Fearnhead, and G. Roberts. The zig-zag process and super-efficient sampling for Bayesian analysis of big data. Ann. Stat., 47(3):1288–1320, 2019.

- [BHV01] L. J. Billera, S. P. Holmes, and K. Vogtmann. Geometry of the space of phylogenetic trees. Adv. Appl. Math., 27:733–767, 2001.

- [BR17] J. Bierkens and G. Roberts. A piecewise deterministic scaling limit of lifted Metropolis-Hastings for the Curie-Weiss model. Ann. Appl. Probab., 27(2):846–882, 2017.

- [BRZ19] J. Bierkens, G. Roberts, and P.-A. Zitt. Ergodicity of the zigzag process. Ann. Appl. Probab., 29(4):2266–2301, 2019.

- [CFS20] A. Chevalier, P. Fearnhead, and M. Sutton. Reversible jump PDMP samplers for variable selection. Preprint, arXiv:2010.11771, 2020+.

- [CPWF21] A. Chevalier, S. Power, A. Wang, and P. Fearnhead. PDMP Monte Carlo methods for piecewise-smooth densities. Preprint, arXiv:2111.05859, 2021+.

- [Dav93] M. Davis. Markov models and Optimization. Chapman Hall, 1993.

- [DBZM17] V. Dinh, A. Bilge, C. Zhang, and F. A. Matsen IV. Probabilistic path Hamiltonian Monte Carlo. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 2017.

- [Fel81] J. Felsenstein. Evolutionary trees from DNA sequences: a maximum likelihood approach. J. Mol. Evol., 17:368–376, 1981.

- [FHVD17] J. M. Flegal, J. Hughes, D. Vats, and N. Dai. mcmcse: Monte Carlo Standard Errors for MCMC, 2017.

- [GD16] A. Gavryushkin and A. J. Drummond. The space of ultrametric phylogenetic trees. J. Theor. Biol., 403:197–208, 2016.

- [GD21] P. Gagnon and A. Doucet. Nonreversible jump algorithms for Bayesian nested model selection. J. Comput. Graph. Stat., 30(2):312–323, 2021.

- [Gey92] C. J. Geyer. Practical Markov chain Monte Carlo. Statist. Sci., 7:473–483, 1992.

- [GS90] A. E. Gelfand and A. F. M. Smith. Sampling-based approaches to calculating marginal densities. J. Am. Stat. Assoc., 85(410):398–409, 1990.

- [GT94] R. C. Griffiths and S. Tavaré. Simulating probability distributions in the coalescent. Theor. Popln Biol., 46:131–159, 1994.

- [Has70] W. K. Hastings. Monte Carlo sampling methods using Markov chains and their applications. Biometrika, 57:97–109, 1970.

- [HD12] S. Höhna and A. J. Drummond. Guided tree topology proposals for Bayesian phylogenetic inference. Syst. Biol., 61(1):1–11, 2012.

- [HDPD08] S. Höhna, M. Defoin-Platel, and A. J. Drummond. Clock-constrained tree proposal operators in Bayesian phylogenetic inference. In BioInformatics and BioEngineering, pages 1–7, 8th IEEE International Conference on IEEE, 2008.

- [JC69] T. H. Jukes and C. R. Cantor. Evolution of protein molecules. In H. N. Munro, editor, Mammalian protein metabolism, pages 21–132. Academic Press, New York, 1969.

- [JZH+20] X. Ji, Z. Zhang, A. Holbrook, A. Nishimura, G. Baele, A. Rambaut, P. Lemey, and M. A. Suchard. Gradients do grow on trees: a linear-time -dimensional gradient for statistical phylogenetics. Mol. Biol. Evol., 37(10):3047–3060, 2020.

- [Kin82] J. F. C. Kingman. The coalescent. Stochast. Process. Applic., 13(3):235–248, 1982.

- [LVH+08] C. Lakner, P. Van Der Mark, J. P. Huelsenbeck, B. Larget, and F. Ronquist. Efficiency of Markov chain Monte Carlo tree proposals in Bayesian phylogenetics. Syst. Biol., 57:86–103, 2008.

- [MV05] E. Mossel and E. Vigoda. Phylogenetic MCMC algorithms are misleading on mixtures of trees. Science, 309:2207–2209, 2005.

- [NDL20] A. Nishimura, D. B. Dunson, and J. Lu. Discontinuous Hamiltonian Monte Carlo for discrete parameters and discontinuous likelihoods. Biometrika, 107(2):365–380, 2020.

- [Nea10] R. M. Neal. MCMC using Hamiltonian dynamics. In Handbook of Markov chain Monte Carlo. CRC Press, 2010.

- [VR21] G. Vasdekis and G. O. Roberts. Speed up zig-zag. Preprint, arXiv:2103.16620, 2021+.

- [Wak09] J. Wakeley. Coalescent theory: an introduction. Roberts & Co, 2009.

- [Wat75] G. A. Watterson. On the number of segregating sites in genetical models without recombination. Theor. Popln Biol., 7:256–276, 1975.

- [WFDP91] R. H. Ward, B. L. Frazier, K. Dew, and S. Pääbo. Extensive mitochondrial diversity within a single Amerindian tribe. Proc. Natl. Acad. Sci. USA, 88:8720–8724, 1991.