YES SIR!

Optimizing Semantic Space of Negatives with Self-Involvement Ranker

Abstract

Pre-trained model such as BERT has been proved to be an effective tool for dealing with Information Retrieval (IR) problems. Due to its inspiring performance, it has been widely used to tackle with real-world IR problems such as document ranking. Recently, researchers have found that selecting “hard” rather than ”random” negative samples would be beneficial for fine-tuning pre-trained models on ranking tasks. However, it remains elusive how to leverage hard negative samples in a principled way. To address aforementioned issues, we propose a fine-tuning strategy for document ranking, namely Self-Involvement Ranker (SIR), to dynamically select hard negative samples to construct high-quality semantic space for training a high-quality ranking model. Specifically, SIR consists of sequential compressors implemented with pre-trained models. Front compressor selects hard negative samples for rear compressor. Moreover, SIR leverages supervisory signal to adaptively adjust semantic space of negative samples. Finally, supervisory signal in rear compressor is computed based on condition probability and thus can control sample dynamic and further enhance the model performance. SIR is a lightweight and general framework for pre-trained models, which simplifies the ranking process in industry practice. We test our proposed solution on MS MARCO with document ranking setting, and the results show that SIR can significantly improve the ranking performance of various pre-trained models. Moreover, our method became the new SOTA model anonymously on MS MARCO Document ranking leaderboard in May 2021.

Introduction

Information Retrieval (IR) which aims to obtain specific information from the Web based on queries has become one of the most prevailing research topics. A key component in an IR system is the ranking model which sorts the documents based on their relevance to a query. Classic ranking algorithms, such as BM25 (Robertson and Walker 1994), simply rely on statistics of keyword matching and suffer from the vocabulary mismatching problems. Because of this, many embedding-based neural ranking models have been proposed to overcome this problem and have achieved better performance (Huang et al. 2013; Shen et al. 2014; Wan et al. 2016a). In recent years, Pre-trained Language Models (PLM), which have fundamentally changed many neural language process areas, have also been applied to document ranking. PLM can effectively capture contextual information and semantic information from text (Devlin et al. 2019; Liu et al. 2019), and hence would bring better match between a query and a document. PLM-based document ranking models have achieved significant improvement (Lample and Conneau 2019).

As the goal of the document ranking is different from other NLP tasks, directly applying existing general PLMs (such as BERT (Devlin et al. 2019) or GPT (Brown et al. 2020)) to document ranking is not optimal. To solve this problem, researchers proposed to design new IR-oriented objectives or self-supervised tasks for training pre-trained models specially for IR. For example, (Lee, Chang, and Toutanova 2019; Chang et al. 2020) proposed Wiki Link Prediction (WLP) task and Inverse Cloze (ICT) task and (Ma et al. 2021a) proposed Representative Words Prediction task (PROP). We refer to them as pre-trained IR models.

Although these pre-trained models have proved to be able to significantly improve ranking quality, it is still under investigation on how to effectively leverage these pre-trained models to train a ranking model. Ranking models are typically trained under Learning to Rank (LTR) (Huang et al. 2013) framework. Take pair-wise loss based LTR for example, ranking models focus on the relative order between a positive sample and a negative sample, for a given query. When constructing train sets for fine-tuning, a common approach is to use random sampling methods for negative sample selection (Huang et al. 2020). But such approach is sub-optimal, because randomly-selected negative samples may be uninformative (Xiong et al. 2020; Robinson et al. 2021) and the distribution of negative samples are fixed. Recently, some studies (Xiong et al. 2020; Zhan et al. 2021; Gao, Dai, and Callan 2021) showed that a proper selection of negative samples is essential to training a ranking model by fine-tuning PLMs.

Motivated by human learning process and curriculum learning (Chevalier-Boisvert et al. 2018; Bengio et al. 2009), we observe that human tend to learn knowledge from easy to hard. For pairwise ranking models, the difficulty of negative samples can be measured by their relevance to the query, which is reflected as their predicted scores. The model should first distinguish very irrelevant negative samples from positive one and then distinguish confusing negative samples from positive sample.

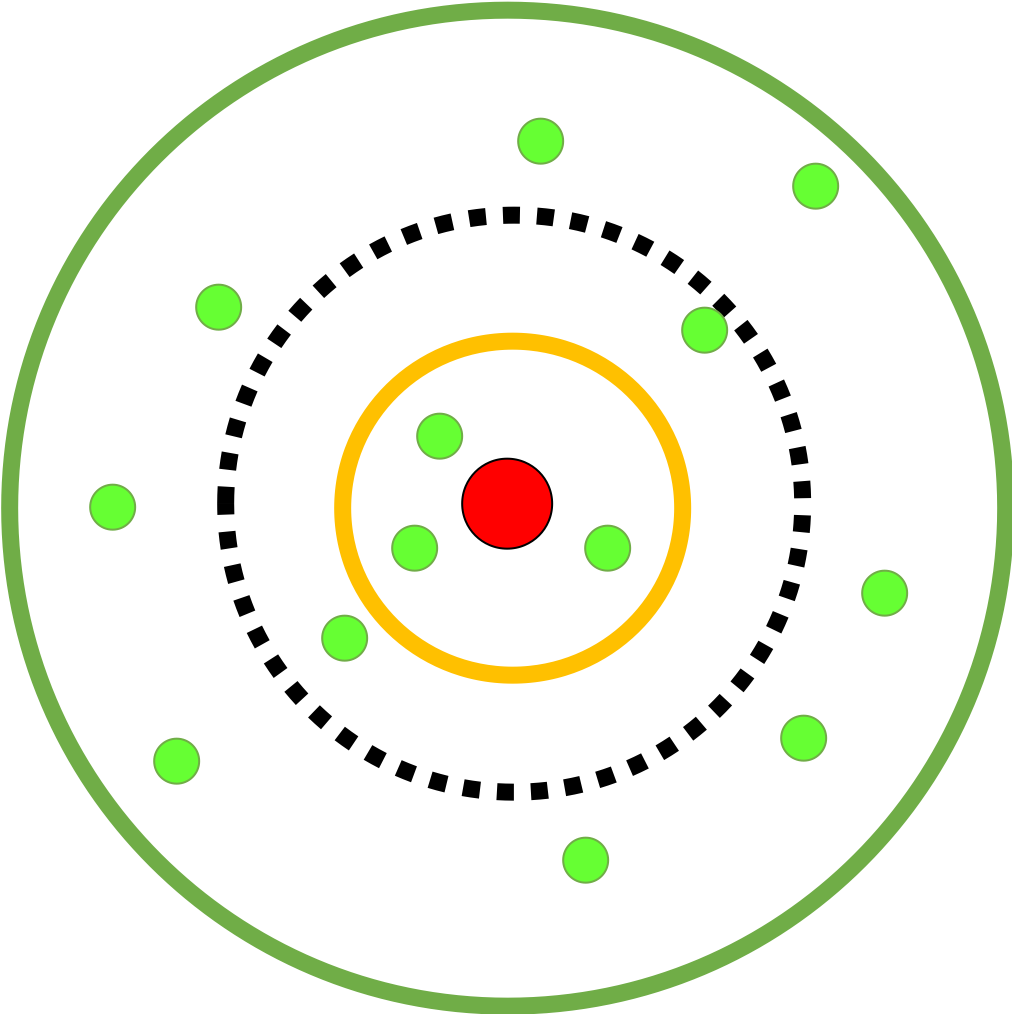

We show the differences between three fine-tuning strategies in Figure 1. In Figure 1a, only the hardest negative samples are selected for training and the semantic space of negatives is quite limited. In Figure 1b, negative samples are randomly selected and the semantic space is relatively complete, but with unreliable quality. In Figure 1c, negative samples are selected from easy to hard. The semantic space is complete and of high quality.

In this paper, we propose a novel training paradigm for neural ranking models atop pre-trained models, which is called Self-Involvement Ranker (SIR). SIR focuses on optimizing semantic space of negative samples when fine-tuning ranking models. To fulfill the goal of gradually increasing learning difficulty, we use multi-level “compressor” to select hard negative samples step-by-step. We use the terminology compressor because the number of training samples after each compressor would considerably decrease. Moreover, in order to achieve self-adaptation and adjustment for negative semantic space, we construct an end-to-end derivable framework which can adjust hard negative samples dynamically by supervisory signals. Here, dynamic hard samples means that hard samples would change as model parameter changes. Meanwhile, in this work, we make dynamic under control by using an conditional probability-liked controller to constrain it, which proves to be an effective way to improve the model performance. Finally, to further explore the learning process of hard negative semantic space, we conduct multiple comparison experiments based on SIR, including SIR V1, V2, V3 and V4. And we highlight SIR V3 and V4 in our work due to their outstanding performance.

Besides performance improvement, SIR also simplifies the pipeline of ranking models in industry practice. Ranking models in industry usually have a complex pipeline, which is comprised of several ranking stages (Feng et al. 2021), as shown in Figure 2. In contrast, out model just uses only “A Model” to leverage the whole ranking process, which reveals great simplification for ranking process.

Experimental results show that SIR can significantly enhance the ranking performance of pre-trained language models and pre-trained IR models. Meanwhile, SIR became the new SOTA model anonymously on MS MARCO Document Ranking leaderboard in May 2021.

In summary, our contributions are as follows:

-

•

We propose a novel training paradigm for neural ranking models atop pre-trained models named SIR, which dynamically selects negative samples to construct high-quality semantic space. SIR can simplify ranking pipeline in industry practice.

-

•

We propose to control sample dynamic via supervisory signal, which is computed based on conditional probability. Such mechanism further improves semantic space.

-

•

Experiments on MS MARCO show that SIR significantly enhances both pre-trained language models and pre-trained IR models.

Related Work

Neural Document Ranking

Neural document ranking models compute relevance scores for query-document pairs based on neural networks. It is critical for them to learn good representation of queries, documents and ranking functions.

We can categorize neural ranking models to representation-focused models and interaction-focused models (Trabelsi et al. 2021). The representation-focused models extract good feature representation from input data. (Huang et al. 2013) first utilizes deep neural networks to extracts features from query and documents independently. To capture local context, (Shen et al. 2014; Qiu and Huang 2015) utilize convolutional neural networks instead of feed-forward networks. Also, recurrent neural networks are used in (Wan et al. 2016a) to capture richer context. In contrast, the interaction-focused models aim to capture matching signals from interactions of query and document tokens. (Guo et al. 2016) performs term matching using histogram-based features. (Wan et al. 2016b; Pang et al. 2017) capture matching signals with gated recurrent units, while (Dai et al. 2018; Hui et al. 2017) use convolutional neural networks.

Pre-trained Models for Document Ranking

The great success of pre-trained language models inspires researchers to utilize pre-trained models for document ranking. A straightforward way is to use PLM, such as BERT or ELECTRA (Clark et al. 2020), to accomplish ranking tasks.

To further enhance models’ capacity on IR, researchers have proposed several pre-trained IR models, whose pre-training tasks are highly relevant to downstream IR tasks. (Lee, Chang, and Toutanova 2019) designs pre-training tasks to explicitly explores the target context based on the query sentence. (Chang et al. 2020) uses random sentence from Wiki as the query to retrieve the related passage from same page or from the hyper-linked page. Ma has explored the representative words prediction (Ma et al. 2021a) for ad-hoc retrieval and later he proposed B-PROP (Ma et al. 2021b) to free PROP from limitation of classical uni-gram language model.

Another way to enhance pre-trained models is to design fine-tuning strategies. ULMFiT (Howard and Ruder 2018) is the pioneering work which uses several strategies to fine-tune the pre-trained model on the text classifiers. Further research tries to use the fine-grained sample strategies to design tasks, such as (Huang et al. 2020) utilized random negative sampling for recall tasks while ANCE (Xiong et al. 2020) used the top of recalled hard negative samples for training. It is surprising that hard negative samples seem experimentally conductive for downstream tasks. (Zhan et al. 2021) theoretically explains the effectiveness of hard negative samples and also introduces two strategies for both dense retrieval and reranking.

Methodologies

In this section, we design 4 different negative sample selection strategies that belong to SIR framework. All of 4 strategies aim to select appropriate negative samples through compressor, which is implemented by pre-trained models. From V1 to V4, the selection of negative samples gradually tends to be adaptive and dynamic. The structure is shown in Figure 3 and the implementation of each version will be introduced in the following subsections.

Given one query and one corpus (including corresponding positive sample corpus and negative sample corpus ), traditional train set construction methods like (Ma et al. 2021a) mostly use one query patching with one positive document sample and one negative document sample . Such method is straightforward but information from negative samples, which might be conductive to IR objectives, is ignored. For this reason, we construct one input train set in a contrast manner which combines one positive document sample with negative samples. We aim to select most informative and critical negative samples for training.

Strategy V1: Self-involvement with Hard Samples

In strategy V1, the trained front compressor will select the top negative samples with higher relevance scores (higher difficulty), and provide them to the rear compressor for training. The process has been shown in Figure 3a.

Supposing that there are compressors (including classifier). The compressor aims to correctly classify positive sample as well as select hard negative samples. As mentioned before, compressors are implemented by some pre-trained models. All compressors have identical network structure in our settings.

We denote parameter of all compressors as . For -th compressor , we denote its parameter as , network as and input data as . For compressor , its input data is a set of queries and their corresponding samples. Each query would correspond to one positive sample and negative samples. We use to denote number of negative samples pairing a positive sample for -th compressor, therefore . For query , its training sample , would be . . Here and . Note that the positive sample would be fixed at the first index position following with negative samples. -th compressor outputs relevance scores for input samples. . For -th compressor, we can formulate our objective function on query as cross entropy loss:

| (1) | ||||

where denotes the probability function.

| (2) | ||||

We can calculate as:

| (3) | ||||

After training the first compressor, the model will evaluate the difficulty of input negative samples and then pass hard ones to the next compressor. We refer this infer-after-train procedure as self-involvement in this paper. Theoretically speaking, this procedure would give the pre-trained model a constrained semantic sample space for learning. Thus, the model would know what they need to learn. At inference stage, we assume relevance scores can reflect the difficulty (or quality) of negative samples. We select hardest negative samples from negative samples (normally, ) at -th compressor and feed them to the next compressors:

| (4) | ||||

Note that the value of K is a hyper parameter and will gradually decrease. The positive sample will automatically send to the next compressor. The last compressor only need to classify the samples, we regard it as the classifier in SIR. In strategy V1, every compressor is initialized at the same checkpoint of certain pre-trained model.

Strategy V2: Self-involvement with Hard Samples and Parameters Memory

For faster convergence, in strategy V2, we keep the same network architecture as strategy V1 but let the model parameters to flow from one compressor to the next. After having trained the front compressor, we share the front compressor’s parameter with the rear one instead of training it from ground up. The rear compressor would start at the checkpoint that the front compressor has achieved. This is a soft parameter sharing mechanism (Ruder 2017).

The reason we use the soft parameter sharing mechanism is that after training the previous compressor , it would capture the complete distribution information of the previous training samples. Sharing parameters can be interpreted as adding prior knowledge to the next compressor . For compressor , the semantic space of negatives would be refined based on both previous memorized knowledge and current training samples. So it would have a more comprehensive sample distribution compared with strategy V1.

Strategy V3: Self-involvement with Dynamic and Hard Samples

Directly employ the prior knowledge in model initialization would improve model performance to some extent, but the distribution of negative samples used for training each compressor is still fixed. The fix distribution of negative samples leads to the dynamics loss, and that can not be compensated by parameter memory. Therefore, V3 uses the supervisory signal to synchronously adjust the compressors at all levels, so that the negative sample distribution selected by each compressor is also dynamic. V3 adopts hard parameter sharing mechanism (Ruder 2017).

In the forward process of the training stage, we keep the same inference procedure as the previous two strategies. Differently, in the backward process, when the model parameters are updated with the supervisory signal, the negative sample distribution selected by the front compressor for the rear compressor will also be adjusted. With the continuous convergence of the model, the difficulty of negative samples increases gradually, which is consistent with the principle of curriculum learning.

Conclusively, We iteratively select dynamic hard samples and gradually force the train set updating step by step. For query , the loss of the whole model consists of classification loss of the first compressor and the last compressor:

| (5) | ||||

Since the compressors in strategy V3 share their parameters, we can formulate the learning process as:

| (6) | ||||

Strategy V4: Self-involvement with Controlled Dynamic and Hard Samples

In strategy V3, the negative sample distribution generated by compressor at each level is adjusted with supervisory signal. However, the signal of the following stage compressor can not directly feed back to supervise the previous stage compressor, but can only indirectly rely on the parameter sharing mechanism to affect previous compressor.

In order to solve the problem of the front compressor’s weak perception of the ranking results of rear compressor, we use the feedback mechanism of the control theory (Franklin et al. 2002) for reference and use the conditional probability (CPR) to link the ranking results of the front and rear compressors. Then, the supervisory signal can be directly back-propagated from the rear compressor to its front compressors.

In the modeling of V4, for query , -th compressor outputs is samples’ relevance score . We softmax as in Eq. (2) to obtain . We denote as for simplicity.

Here, we constrain the relative position of same top-ranked samples in different level of compressors, which means that for -th sample at -th compressor, its predicted score at -th compressor is and its score at front compressor is . Note that the positive sample’s score is .

We denote the samples’ conditional probability at -th compressor as . is computed by the multiplication of posterior probability of sample to fulfill the goal that the supervisory signal of each compressor can be back propagated to front compressor directly. Such settings can control sample dynamics at front compressor with supervisory signal at rear compressor, thus achieve better semantic space of negative samples at all compressors. The conditional probability of -th compressor can be written as:

| (7) | ||||

where denotes element wise multiplication and denotes the beginning logits of .

Then, we formulate the loss function based on -th compressor for query as:

| (8) | ||||

The loss function of V4 is:

| (9) | ||||

Pseudo code for strategy V3 and V4 can be found in Algorithm 1.

Experiments and Analysis

Datasets

MS MARCO Document Ranking is now the most popular dataset in IR (Bajaj et al. 2016). There are 3.2 million documents and 367,013 queries in the training set and the goal is to rank based on their relevance.

Pre-train Models

We conduct experiments on 2 pre-trained language models and 3 pre-trained IR models. For pre-trained language models, BERT (Devlin et al. 2019) and ELECTRA (Clark et al. 2020) are used. BERT designs Masked Language Models (MLM) and Next Sentence Prediction (NSP) as its pre-training tasks. Instead, ELECTRA uses replaced token detection task. For pre-trained IR models, WLP uses Wiki Link Prediction task, ICT uses Inverse Cloze task (Lee, Chang, and Toutanova 2019; Chang et al. 2020) and PROP uses Representative Words Prediction task (Ma et al. 2021a).

Dataset Pre-processing

For each positive sample, we first find its corresponding negative samples with HDCT and ANCE.

HDCT

is a context-aware document term weighing framework for document indexing and retrieval (Dai and Callan 2019). It first uses passage level term weighting and then aggregates them into the document level term weighting. Adopting HDCT indexing can effectively improve the retrieval accuracy for various fine-tuned pre-trained models.

ANCE

is a learning mechanism which use a asynchronously updated ANN index to select hard negative samples from the whole corpus to construct datasets for pre-train models (Xiong et al. 2020). It can achieve state-of-the-art performance and maintain efficiency at the same time.

Implementation Details

Considering the computational cost, we used three-level compressor (including classifier) for the sample selection. Increasing number of level could require a large amount of data for training. In our implementation, we found that three-level architecture would achieve SOTA compared with other multiple level architectures. To show that SIR can be implemented on various pre-trained models, we use pre-trained BERT-base and ELECTRA-base with limited ranking ability.

For training setup, considering that distributed data parallel is used for experiments acceleration, we set samples size to be the integer times of the number of GPU devices. Since we train our model with V100 which has 8 GPUs, we set the size of a training block (the sample number of positives and negatives in a block) as 88 and batch size of the training block as 4. We set our self-involvement samples compressed from 88 to 48 and 48 to 16 in 3 level models. And we further compress 16 to 8 in 4 level models. Also, we set the training epochs as 2. In this work, we used AdamW as our Optimizer with and , and learning rate as . We adopt both the ANCE based top 100 dataset and HDCT based top 100 dataset as the test bed. Since we are trying to leverage pre-trained models with simple SIR strategies, here we only train our model on the side of fine-tuning downstream ranking tasks based on top 100 dataset.

Evaluation

We evaluate SIR on ranking tasks and use mean reciprocal rank (MRR), mean average precision (MAP) and normalized discounted cumulative Gain (NDCG) as metrics.

Performance Comparison

In Table 1, we show that SIR can substantially enhance pre-trained IR models. WLP (Chang et al. 2020), ICT (Chang et al. 2020) and PROP (Ma et al. 2021a) are three pre-trained IR models with different pre-training tasks. All of them gain great performance improvement on both HDCT top 100 based data and ANCE top 100 based data. Only V4 results are reported in Table 1.

In Table 2, we compare different SIR strategies based on two widely used pre-trained language models, including BERT-base and ELECTRA-base. Results show that all SIR strategies increase models’ performance. Among 4 strategies, V4 is the best and V3 is close to V4. It empirically shows that dynamic and hard negative samples would be beneficial for fine-tuning of the pre-trained models. The results are also shown in Figure 4.

To conclude, experiments show that SIR can reach SOTA ranking performance even under a native BERT base and it can also greatly improve arbitrary pre-trained model’s performance on MS MARCO Document ranking. We used SIR V4 strategy to boost ELECTRA-large, which achieved the best scores in MSMARCO document ranking leaderboard in May 2021. In order to prevent exposure of information, the leaderboard scores are not provided in tables for the time being.

| Model | HDCT Top 100 | ANCE Top 100 | ||||

| MRR@100 | MAP@20 | NDCG@20 | MRR@100 | MAP@20 | NDCG@20 | |

| WLP | 0.361 | 0.358 | 0.446 | 0.374 | 0.370 | 0.464 |

| ICT | 0.385 | 0.383 | 0.470 | 0.394 | 0.391 | 0.484 |

| PROP | 0.391 | 0.388 | 0.474 | 0.397 | 0.394 | 0.486 |

| WLP + SIR | 0.435† | 0.434† | 0.517† | 0.449† | 0.446† | 0.535† |

| ICT + SIR | 0.440† | 0.438† | 0.521† | 0.457† | 0.455† | 0.543† |

| PROP + SIR | 0.443† | 0.441† | 0.523† | 0.454† | 0.451† | 0.540† |

| Model | HDCT Top 100 | ANCE Top 100 | ||

| MRR@100 | MRR@10 | MRR@100 | MRR@10 | |

| BERT | 0.386 | 0.377 | 0.396 | 0.386 |

| BERT + V1 | 0.432† | 0.425† | 0.442† | 0.434† |

| BERT + V2 | 0.430† | 0.423† | 0.449† | 0.441† |

| BERT + V3 | 0.437† | 0.430† | 0.452† | 0.444† |

| BERT + V4 | 0.440† | 0.433† | 0.453† | 0.445† |

| ELECTRA | 0.389 | 0.380 | 0.393 | 0.383 |

| ELECTRA+V1 | 0.437† | 0.430† | 0.445† | 0.437† |

| ELECTRA+V2 | 0.440† | 0.433† | 0.454† | 0.446† |

| ELECTRA+V3 | 0.443† | 0.437† | 0.457† | 0.450† |

| ELECTRA+V4 | 0.445† | 0.438† | 0.463† | 0.455† |

| Model | HDCT Top 100 | ANCE Top 100 | ||

| MRR@100 | MRR@10 | MRR@100 | MRR@10 | |

| BERT | 0.386 | 0.377 | 0.396 | 0.386 |

| V1 Random 16 | 0.432† | 0.425† | 0.442† | 0.434† |

| V1 Top 16 | 0.319† | 0.308† | 0.320† | 0.308† |

| V2 Random 16 | 0.430† | 0.423† | 0.449† | 0.441† |

| V2 Top 16 | 0.324† | 0.312† | 0.328† | 0.315† |

| V3 Top 16 | 0.437† | 0.430† | 0.452† | 0.444† |

| V3 Random 16 | 0.436† | 0.429† | 0.450† | 0.442† |

| V3 Top 8 | 0.440† | 0.434† | 0.454† | 0.446† |

| V3 Random 8 | 0.435† | 0.429† | 0.453† | 0.445† |

| V4 Top 16 | 0.440† | 0.433† | 0.453† | 0.445† |

| V4 Random 16 | 0.436† | 0.429† | 0.454† | 0.446† |

| V4 Top 8 | 0.446† | 0.440† | 0.455† | 0.447† |

| V4 Random 8 | 0.442† | 0.435† | 0.452† | 0.444† |

Dynamic VS Randomness

In our experiments, we find that introducing randomness in training would have almost the same effect as using model dynamic. We set up several competitive experiment on our four strategies. In some settings, neural model-based compressor is replaced by random compressor to capture the distribution of whole training samples. Instead of selecting K samples with the highest scores (marked as Top in Table 3), random compressor select K samples randomly (marked as Random in Table 3).

As we can observe from Table 3, when we directly use top 16 hard negative samples in each training step on strategy V2, the performance would have a severe decline. Surprisingly, using random 16 samples for training instead of top 16, the performance of strategy V2 significantly improved. We think that when dynamic negative samples are not selected by the model, the randomness of the data can provide a certain dynamics to improve the effect. However, when we introduce randomness to V3 and V4 strategies, which select dynamic and hard negative samples, the performance would not have an obvious improve. This shows that the randomness of data has a certain homogeneity with the dynamics based on the model.

Impact of Multi-level compressors structure

In Table 3, we explore the impact of number of levels for V3 and V4. Results are also shown in Figure 5. For 3-level compressors structure (including classifier), model selects samples from 88 to 48 to 16 for updating. And for 4-level compressors structure, model selects samples from 88 to 48 to 16 to 8, with an additional compressor. In Table 3, for both Random setting and Top setting, the performance is not necessarily improved as the number of levels increases. This may because with the decreasing of negative samples in training, although hard negative samples would be the most informative ones, they lack the ability of capturing full distribution of the training samples. Thus, with more levels of compressors, the performance could not be improved significantly.

Strategy Analysis

Figure 6 shows semantic space of negatives in classifier. Markers in the figure have the same meaning as Figure 1. Note that Figure 1 shows semantic space of the whole model, while Figure 6 shows semantic space in classifier. V1 selects fix hard negative samples as in Figure 6(a). V2 selects fix hard negative samples but with distribution information of wider space as in Figure 6(b), where black dash line represents distribution information received from front compressor. V3 and V4 selects dynamic hard negative samples as in Figure 6(c). The difficulty of negative samples changes with the training process. The comparison of the four strategies (V1-V4 of SIR) are shown in Table 2.

Analysis to strategy V1

Strategy V1 directly uses multi-level model to select hard negative samples for fine-tuning pre-trained models. Negative samples can maintain a relatively normal effect only under the little dynamics brought by random data sampling. Once this dynamics is lost, the effect will decrease a lot, which has been shown in Table 3. Since the compressors at each level of V1 are fixed, the semantic space of negative samples is not improved or gradually narrowed, resulting in poor generalization ability.

Analysis to strategy V2

Strategy V2, which adopts a soft parameter sharing mechanism, has a certain improvement over V1. Such results show that the prior knowledge from parameters memory would lead models to learn in a more comprehensive manner. Compared with strategy V1, V2 can improve the narrow semantic space with the prior knowledge to prevent the model getting trapped into local optimal.

Analysis to strategy V3

Strategy V2 proves the effectiveness of soft parameter sharing. Strategy V3 goes further to use the supervisory signal to adjust the distribution of negative samples. Compared with V1, V2 and baseline pre-trained models, strategy V3 outperforms it a lot. With the guidance of supervisory signal, V3 would re-evaluate the difficulty of negative samples after parameter update. Therefore, the model can obtain the most suitable negative samples at any stage, which means that the dynamic distribution of negative samples is learned.

Analysis to Strategy V4

V3 dynamically adjusts the distribution of negative samples by using the supervisory signal through the parameter sharing of the compressor. However, the rear compressor cannot feed back the rationality of negative sample selection to the front compressor. In V4, the feedback loop of the front and rear stage compressor is formed through conditional probability, so that the supervisory signal can be adjusted directly through the feedback loop to control the dynamics brought by parameter sharing. Under a certain dynamics control, V4 outperforms other strategies. The negative sample semantic space of V4 is the same as that of V3. The only difference is that supervisory signals from upper compressors can also refine lower compressors, thus they can influence semantic space in lower compressors.

Conclusions and Future Work

In this paper, we propose SIR, a light-weight fine-tuning strategy for pre-trained models. The key idea is to optimize semantic space of negatives for fine-tuning ranking models. SIR can enhance various pre-trained models without complex design in tasks or additional computational resources. Experiments on MS MARCO document ranking dataset shows that SIR achieves significant improvement over the baseline.

Currently, compressors are all homogeneous. We think it promising to explore heterogeneous compressors to integrate strength of different pre-trained models in the future.

References

- Bajaj et al. (2016) Bajaj, P.; Campos, D.; Craswell, N.; Deng, L.; Gao, J.; Liu, X.; Majumder, R.; McNamara, A.; Mitra, B.; Nguyen, T.; et al. 2016. Ms marco: A human generated machine reading comprehension dataset. arXiv preprint arXiv:1611.09268.

- Bengio et al. (2009) Bengio, Y.; Louradour, J.; Collobert, R.; and Weston, J. 2009. Curriculum learning. In Proceedings of the 26th annual international conference on machine learning, 41–48.

- Brown et al. (2020) Brown, T. B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. 2020. Language models are few-shot learners. arXiv preprint arXiv:2005.14165.

- Chang et al. (2020) Chang, W.; Yu, F. X.; Chang, Y.; Yang, Y.; and Kumar, S. 2020. Pre-training Tasks for Embedding-based Large-scale Retrieval. CoRR, abs/2002.03932.

- Chevalier-Boisvert et al. (2018) Chevalier-Boisvert, M.; Bahdanau, D.; Lahlou, S.; Willems, L.; Saharia, C.; Nguyen, T. H.; and Bengio, Y. 2018. Babyai: A platform to study the sample efficiency of grounded language learning. arXiv preprint arXiv:1810.08272.

- Clark et al. (2020) Clark, K.; Luong, M.-T.; Le, Q. V.; and Manning, C. D. 2020. ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators. arXiv:2003.10555.

- Dai and Callan (2019) Dai, Z.; and Callan, J. 2019. Context-Aware Sentence/Passage Term Importance Estimation For First Stage Retrieval. arXiv:1910.10687.

- Dai et al. (2018) Dai, Z.; Xiong, C.; Callan, J.; and Liu, Z. 2018. Convolutional neural networks for soft-matching n-grams in ad-hoc search. In Proceedings of the eleventh ACM international conference on web search and data mining, 126–134.

- Devlin et al. (2019) Devlin, J.; Chang, M.-W.; Lee, K.; and Toutanova, K. 2019. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv:1810.04805.

- Feng et al. (2021) Feng, Y.; Gong, Y.; Sun, F.; Ge, J.; and Ou, W. 2021. Revisit Recommender System in the Permutation Prospective. arXiv:2102.12057.

- Franklin et al. (2002) Franklin, G. F.; Powell, J. D.; Emami-Naeini, A.; and Powell, J. D. 2002. Feedback control of dynamic systems, volume 4. Prentice hall Upper Saddle River, NJ.

- Gao, Dai, and Callan (2021) Gao, L.; Dai, Z.; and Callan, J. 2021. Rethink training of BERT rerankers in multi-stage retrieval pipeline. arXiv preprint arXiv:2101.08751.

- Guo et al. (2016) Guo, J.; Fan, Y.; Ai, Q.; and Croft, W. B. 2016. A deep relevance matching model for ad-hoc retrieval. In Proceedings of the 25th ACM international on conference on information and knowledge management, 55–64.

- Howard and Ruder (2018) Howard, J.; and Ruder, S. 2018. Fine-tuned Language Models for Text Classification. CoRR, abs/1801.06146.

- Huang et al. (2020) Huang, J.-T.; Sharma, A.; Sun, S.; Xia, L.; Zhang, D.; Pronin, P.; Padmanabhan, J.; Ottaviano, G.; and Yang, L. 2020. Embedding-based Retrieval in Facebook Search. Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining.

- Huang et al. (2013) Huang, P.-S.; He, X.; Gao, J.; Deng, L.; Acero, A.; and Heck, L. 2013. Learning Deep Structured Semantic Models for Web Search Using Clickthrough Data. CIKM ’13, 2333–2338. New York, NY, USA: Association for Computing Machinery. ISBN 9781450322638.

- Hui et al. (2017) Hui, K.; Yates, A.; Berberich, K.; and De Melo, G. 2017. PACRR: A position-aware neural IR model for relevance matching. arXiv preprint arXiv:1704.03940.

- Lample and Conneau (2019) Lample, G.; and Conneau, A. 2019. Cross-lingual Language Model Pretraining. arXiv:1901.07291.

- Lee, Chang, and Toutanova (2019) Lee, K.; Chang, M.; and Toutanova, K. 2019. Latent Retrieval for Weakly Supervised Open Domain Question Answering. CoRR, abs/1906.00300.

- Liu (2011) Liu, T.-Y. 2011. Learning to rank for information retrieval.

- Liu et al. (2019) Liu, X.; He, P.; Chen, W.; and Gao, J. 2019. Multi-Task Deep Neural Networks for Natural Language Understanding. arXiv:1901.11504.

- Ma et al. (2021a) Ma, X.; Guo, J.; Zhang, R.; Fan, Y.; Ji, X.; and Cheng, X. 2021a. PROP: Pre-Training with Representative Words Prediction for Ad-Hoc Retrieval. In Proceedings of the 14th ACM International Conference on Web Search and Data Mining, WSDM ’21, 283–291. New York, NY, USA: Association for Computing Machinery. ISBN 9781450382977.

- Ma et al. (2021b) Ma, X.; Guo, J.; Zhang, R.; Fan, Y.; Li, Y.; and Cheng, X. 2021b. B-PROP: Bootstrapped Pre-training with Representative Words Prediction for Ad-hoc Retrieval. CoRR, abs/2104.09791.

- Pang et al. (2017) Pang, L.; Lan, Y.; Guo, J.; Xu, J.; Xu, J.; and Cheng, X. 2017. Deeprank: A new deep architecture for relevance ranking in information retrieval. In Proceedings of the 2017 ACM on Conference on Information and Knowledge Management, 257–266.

- Qiu and Huang (2015) Qiu, X.; and Huang, X. 2015. Convolutional neural tensor network architecture for community-based question answering. In Twenty-Fourth international joint conference on artificial intelligence.

- Robertson and Walker (1994) Robertson, S. E.; and Walker, S. 1994. Some simple effective approximations to the 2-poisson model for probabilistic weighted retrieval. In SIGIR’94, 232–241. Springer.

- Robinson et al. (2021) Robinson, J.; Chuang, C.-Y.; Sra, S.; and Jegelka, S. 2021. Contrastive Learning with Hard Negative Samples. arXiv:2010.04592.

- Ruder (2017) Ruder, S. 2017. An overview of multi-task learning in deep neural networks. arXiv preprint arXiv:1706.05098.

- Shen et al. (2014) Shen, Y.; He, X.; Gao, J.; Deng, L.; and Mesnil, G. 2014. Learning semantic representations using convolutional neural networks for web search. In Proceedings of the 23rd international conference on world wide web, 373–374.

- Trabelsi et al. (2021) Trabelsi, M.; Chen, Z.; Davison, B. D.; and Heflin, J. 2021. Neural Ranking Models for Document Retrieval. arXiv preprint arXiv:2102.11903.

- Wan et al. (2016a) Wan, S.; Lan, Y.; Guo, J.; Xu, J.; Pang, L.; and Cheng, X. 2016a. A deep architecture for semantic matching with multiple positional sentence representations. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 30.

- Wan et al. (2016b) Wan, S.; Lan, Y.; Xu, J.; Guo, J.; Pang, L.; and Cheng, X. 2016b. Match-srnn: Modeling the recursive matching structure with spatial rnn. arXiv preprint arXiv:1604.04378.

- Xiong et al. (2020) Xiong, L.; Xiong, C.; Li, Y.; Tang, K.-F.; Liu, J.; Bennett, P.; Ahmed, J.; and Overwijk, A. 2020. Approximate Nearest Neighbor Negative Contrastive Learning for Dense Text Retrieval. arXiv:2007.00808.

- Zhan et al. (2021) Zhan, J.; Mao, J.; Liu, Y.; Guo, J.; Zhang, M.; and Ma, S. 2021. Optimizing Dense Retrieval Model Training with Hard Negatives. CoRR, abs/2104.08051.