X-Fake: Juggling Utility Evaluation and Explanation of Simulated SAR Images

Abstract

SAR image simulation has attracted much attention due to its great potential to supplement the scarce training data for deep learning algorithms. Consequently, evaluating the quality of the simulated SAR image is crucial for practical applications. The current literature primarily uses image quality assessment (IQA) techniques for evaluation that rely on human observers’ perceptions. However, because of the unique imaging mechanism of SAR, these techniques may produce evaluation results that are not entirely valid. The distribution inconsistency between real and simulated data is the main obstacle that influences the utility of simulated SAR images. To this end, we propose a novel trustworthy utility evaluation framework with a counterfactual explanation for simulated SAR images for the first time, denoted as X-Fake. It unifies a probabilistic evaluator and a causal explainer to achieve a trustworthy utility assessment. We construct the evaluator using a probabilistic Bayesian deep model to learn the posterior distribution, conditioned on real data. Quantitatively, the predicted uncertainty of simulated data can reflect the distribution discrepancy. We build the causal explainer with an introspective variational auto-encoder (IntroVAE) to generate high-resolution counterfactuals. The latent code of IntroVAE is finally optimized with evaluation indicators and prior information to generate the counterfactual explanation, thus revealing the inauthentic details of simulated data explicitly. The proposed framework is validated on four simulated SAR image datasets obtained from electromagnetic models and generative artificial intelligence approaches. The results demonstrate the proposed X-Fake framework outperforms other IQA methods in terms of utility. Furthermore, the results illustrate that the generated counterfactual explanations are trustworthy, and can further improve the data utility in applications.

Index Terms:

SAR image generation, image quality assessment (IQA), explainable artificial intelligence (XAI), causal counterfactual, Bayesian deep learning.I Introduction

Synthetic Aperture Radar (SAR) is an important remote sensing technology in many applications, owing to its all-day and all-weather imaging ability. At present, intelligent SAR image interpretation based on deep learning is facing the challenge of limited annotated data under abundant and varied imaging conditions [2, 4, 3]. Specifically, the characteristics of SAR targets vary dramatically with imaging parameters, especially the target orientation angle. It will have a significant impact on a deep learning model’s generalization ability with limited measurements. Thus, generating high-quality SAR target images with various observation angles is of paramount importance for practical applications. Synthesizing SAR image data with electromagnetic models or advanced artificial intelligence technologies has attracted the most attention in recent years [1, 5, 6]. In this field, how to evaluate the quality of the simulated SAR images [7, 8] and how to utilize the simulated data properly to improve real-world applications are two important issues to be considered [9, 10, 11].

The current research mainly tackles the above two issues separately. Fig. 1 (a) illustrates the widespread application of image quality assessment (IQA) metrics for objective evaluation of simulated SAR data. The majority of related literature employs IQA metrics, like the structural similarity index measure (SSIM) and peak signal-to-noise ratio (PSNR) [12], for evaluation, a process that relies on human visual perception. However, due to the specific microwave characteristics of the SAR image, these metrics may not be applicable to SAR. Even though there are other SAR-specific image quality criteria, like equivalent look number (ELN) and radiometric resolution [14], they can only judge the SAR image based on how it looks and not how useful it is for building a deep model.

Many advanced simulation approaches can synthesize SAR images with good visual quality [15], but they have difficulties in real-world applications [16, 17]. The inconsistent data distribution between simulated and real data would be one of the most significant obstacles that influences the utility, as presented in Fig. 1 (b). The different physical parameters in model-based simulation, for example, would result in a background discrepancy between simulated and measured data. Researchers proposed both explicit and implicit approaches to address this issue. One of them is to use image pre-processing on simulated SAR data, like filtering and Gaussian noise [59, 60], which changes the simulated data itself. However, these strategies require manual and empirical design, which limits their flexibility. The implicit methods mainly include transfer learning and domain adaptation, aiming at learning representative knowledge from simulated data applicable to measured ones [18]. However, they fail to provide a clear explanation for why the simulated SAR images lack utility. From the user’s perspective, it is crucial to be able to figure out the flaws of generated data at the level of human understanding so as to improve the data utility and the simulation method.

To address the above issues simultaneously, we propose a novel unified framework, denoted as X-Fake, to evaluate and explain the simulated SAR image in terms of utility. As shown in Fig. 1 (c), X-Fake comprises a probabilistic evaluator and a causal explainer collaborated together. The evaluator is able to answer the question: ”Is the input real or not?” with quantitative indicators. If the answer is ”no”, the causal explainer will generate the counterfactual image conditioned on the evaluation indicators and SAR target priors. We claim that the low utility of simulated SAR images, although they have good visual quality, lies in the significant distribution inconsistency. It may appear in inaccurate strong scattering points and target orientation given an azimuth angle, or distinct clutter distribution in the background. Consequently, we expect that the probabilistic evaluator can tell the quantified criteria that reflect the inconsistency, and the causal explainer can figure out the details of the simulated SAR image that lead to inauthenticity.

The proposed framework is inspired by Counterfactual Latent Uncertainty Explanations (CLUE), which was the first to explain the uncertainty estimated by the differentiable probabilistic model [19]. The Bayesian neural network (BNN) takes the weight parameter as a probability distribution instead of a point estimation. It offers the opportunity to predict high uncertainty in the input when it is an out-of-distribution sample with training data. Therefore, we can consider the predicted uncertainty as a criterion that signifies the discrepancy in the distribution between real and simulated data. Considering the specific characteristics of the SAR target, we propose to construct a BNN model to estimate the class label and the azimuth angle simultaneously. In our work, we consider the combination of the predicted label and angle uncertainty to be a quantitative metric.

The goal of interpreting the predicted uncertainty is to answer the following question: ”What would it be like if it could be considered a real SAR target?” We observed that most simulated SAR images have good visual quality, and only some details restrict their utility. We introduce the counterfactual explanation (CE) to highlight the small changes to the input simulated data that would reduce the assigned uncertainty. Different from the previous CLUE method, we propose to construct the causal explainer with an IntroVAE model to ensure the high resolution of the generated counterfactual images. We also propose a multi-objective optimization strategy based on the simulated data and evaluation indicators. This allows us to clearly demonstrate the inauthentic details.

The contributions are summarized as follows:

-

•

A novel trustworthy utility evaluation framework with counterfactual explanation is proposed for simulated SAR images. To the best of our knowledge, the proposed X-Fake offers the first attempt to simultaneously carry out the quantitative assessment and explain the inauthentic details of simulated data in terms of utility.

-

•

In this framework, a Bayesian deep convolutional neural network (BDCNN) with category and angle prediction is constructed as the probabilistic evaluator. In regard to explaining the deficiency of simulated data, we propose an IntroVAE-based model optimized with multiple objectives based on evaluation indicators and SAR target priors to generate a high-resolution counterfactual image.

-

•

Several simulated SAR image datasets are explored for validation, including AI-generated and electromagnetic model-simulated data. The proposed X-Fake outperforms other IQA methods for global and individual evaluation. Additionally, the counterfactual explanations are intuitive, clear, and trustworthy, validated by extensive ablation studies, comparative studies, as well as quantitative and qualitative analysis.

Here is a summary of the paper’s organization: Section II introduces the literature review related to this work regarding IQA methods, the utility of simulated SAR images, and uncertainty quantification with explanation. Then, Section III illustrates the proposed trustworthy utility evaluation and explanation framework X-Fake for simulated SAR images. The experiments and result analysis are demonstrated in Section IV. Finally, Section V presents the summary and outlooks, as well as the limitations of this work.

II Related Work

In this section, various works proposed for image quality assessment, evaluating and improving the utility of simulated SAR images, as well as uncertainty quantification and explanation, are briefly reviewed.

II-A Image Quality Assessment

Image quality assessment (IQA) methods mainly aim to assign a score to an image that can evaluate its quality from a visual perspective. Subjective and objective assessment are the two main types of IQA methods. Subjective assessment measures quality with human input, while objective assessment measures quality without it [20, 21]. The objective IQA, which is the most popular method, can be divided into full-reference (FR), no-reference (NR), and reduced-reference (RR) approaches [22]. A lot of research papers have used FR metrics like mean square error (MSE), peak signal-to-noise ratio (PSNR), structural similarity (SSIM), and visual information fidelity (VIF) to describe the quality of a simulated SAR image. These methods assess the image quality based on human visual perception. For generated images with deep learning models, the Fréchet Inception Distance (FID) is also widely used in feature distribution [24]. In essence, it utilizes the pre-trained ImageNet Inception network as the feature extractor and compares the distances between features. However, due to the specific imaging mechanism of SAR, there may be a lack of trustworthiness for evaluation [5, 25]. The NR models rely primarily on image quality benchmark datasets that record human beings’ evaluation results for visual perception [26, 27]. However, the quality assessment of human visual systems is not applicable to SAR, as we will discuss in our later experiments.

II-B Utility of Simulated SAR Images

In real-world applications, we mostly train the deep neural network with simulated SAR images and real data to enhance the algorithm’s generalization ability. As a result, the utility of the simulated SAR images is worth studying. Some related work focuses on utility evaluation in terms of model performance. The SAR target recognition task, for example, is the most explored. For evaluation, Guo et al. proposed the Substitution Rate Curve (RSC) criteria based on the changing recognition rate [7]. Similarly, the hybrid recognition rate curve (HRR) and the class-wise image quality assessment were proposed in the literature [8], respectively. Besides the utility evaluation, some other works aim to improve the data utility of simulated SAR images with transfer learning, domain adaptation, or knowledge distillation [9, 10, 43]. These methods reduce the distribution discrepancy between real and simulated SAR images, enhancing model performance through the use of simulated data. In summary, evaluating and improving the utility of the simulated SAR images are equally important, yet current studies approach them separately. Mostly, the utility improvement takes place implicitly in the feature space, making it difficult to interpret. In contrast, our method aims to address the two issues simultaneously with a unified, trustworthy, and explainable framework.

II-C Uncertainty Quantification and Explanation for Trustworthy AI

Methods for estimating uncertainty in a DNN prediction are a popular and vital field of research [29]. They can be categorized into single deterministic [30], Bayesian [61], ensemble [31], and test-time augmentation methods [32]. For Bayesian neural networks, specifically, the posterior distribution over the network parameters is estimated, and the predictive uncertainty of test data can be quantified. Approaches such as variational inference [33], Monto Carlo sampling [34], and Laplace approximation [35] were proposed to infer the posterior distribution of BayesCNN. Apart from uncertainty estimation, it is also important to explain the source of uncertainty in order to achieve more trustworthy artificial intelligence (AI). Researchers in explainable artificial intelligence (XAI) have developed numerous methods for helping users understand the predictions of complex supervised learning models [36]. By contrast, explaining the uncertainty of model outputs has received relatively little attention [37, 38, 39]. The mainstream explanation methods can tell why a model works well, but they will be ineffective in explaining the bad result with very high uncertainty [40]. Some researchers conducted causal counterfactuals to explain the high uncertainty in the input [41]. Recently, the literature [19, 42] proposed methods that can help identify which input features are explicitly responsible for models’ uncertainty.

Inspired by the introduced work, we re-formulate the utility evaluation problem by quantifying the domain-shift uncertainty of simulated SAR images in this paper. Furthermore, we illustrate the inauthentic details of the fake SAR image through an uncertainty explanation.

III Method

III-A Overview

The proposed X-Fake framework, as shown in Fig. 2, consists of three steps. The probabilistic evaluator (P-Eva) is firstly constructed with a BayesCNN model. The simulated SAR image is evaluated by P-Eva to obtain the criteria vector containing target class and angle predictions and their uncertainties, denoted as:

| (1) |

where represents the BayesCNN model. The construction and optimization details will be introduced in Section III-B. Then, an IntroVAE model is pre-trained to obtain the optimized encoder and generator , thus preparing for high-quality counterfactual generation. It can be denoted as:

| (2) |

The details will be elaborated in Section III-C. Given the simulated SAR image , its counterfactual explanation is defined as that satisfies the following conditions. On the one hand, should be close to , where the distance metric is applied for constraint. On the other hand, should be satisfied with the desired output of P-Eva. To this end, the optimization can be written as:

| (3) |

Note that optimizing in the high-dimension image space is difficult. To this end, we transform the optimization problem in the latent space of the pre-trained IntroVAE model. The algorithms will be illustrated in details in Section III-D.

III-B Probabilistic Evaluator

The current SAR target image generation is based on prior parameters, and among them the target class and azimuth angle are most critical. The inauthentic details of a simulated SAR target image exist in the following three aspects: ambiguous scattering centers, inaccurate azimuth angles, and out-of-distribution clutter background. They can be summarized with inconsistent feature distribution between real and simulated SAR images, which leads to inferior utility of simulated data. To this end, we propose to construct a probabilistic evaluator based on Bayesian deep neural network to measure the feature distribution discrepancy in terms of class label and azimuth angle.

Specifically, we construct a BayesCNN model as shown in Fig. 3. It features four trainable layers in the front and is subsequently bifurcated into two branches, one for target category prediction and the other for azimuth vector regression prediction. The azimuth is encoded as a vector . In this manner, our BayesCNN network is capable of simultaneously predicting the category label and azimuth angle of the SAR target image.

Compared to its equivalent frequencist CNN, which utilizes a single point estimate for weight parameters, BayesCNN incorporates a probability distribution for its weights. This approach involves conducting posterior inference on the distribution parameters of the neural network using Bayes’ theorem. Therefore, BayesCNN offers results by weighting the posterior probability of all parameter settings, rather than relying solely on a deterministic value of parameters. This approach allows for not only predicting results, but also capturing the uncertainty of those predictions. Our method involves assessing the utility of simulated data by quantifying the uncertainty of the recognition model outputs.

The BayesCNN construction and optimization can be realized in different ways. In this paper, we applied optimization with Bayes by Backprop and Monte Carlo Dropout approximation to construct the P-Eva, denoted as Eva-BBB and Eva-MCD, respectively.

III-B1 Optimization with Bayes by Backprop

Backpropagation and gradient descent are widely applied for neural network training. We apply one of the most popular BayesCNN optimization approaches, Bayes by Backprop (BBB) [56] to learn the posterior distribution of BayesCNN parameters. BBB is a variational inference-based method to learn the posterior distribution on the weights of a neural network from which weights can be sampled in back propagation. It regularizes the weights by maximizing the Evidence Lower Bound (ELBO).

To learn the Gaussian distribution parameters that weight follows, we need to find a way to approximate the intractable true posterior distribution . We use the method of variational inference. This is done by minimizing the Kullback-Leibler difference between the simple variational distribution and the true posterior distribution , that is,

| (4) |

It can be achieved by maximizing the ELBO, that is:

| (5) | ||||

We use the Monte Carlo method to obtain the final objective function approximately, that is,

| (6) |

where is the number of draws.

Note that the last term in Equation (6) refers to the maximum likelihood objective, which is often realized by cross-entropy (classification) or mean square error (regression). As a result, can be written as:

| (7) |

where , , are hyper-parameter, ground truth of class label and angle, respectively.

The local reparameterization trick is applied for optimizing. According to Kumar Shridhar’s work [56], the reparameterization in convolutional layer is achieved by two convolutional operations. Denote the random variable as convolutional filters in the th layer, and the variational posterior probability distribution as . The output of the th layer can be reparameterized as:

| (8) |

where . is the input feature map of the th layer. and denote the convolution operation and component-wise multiplication, respectively.

Thus, the output of a Bayesian convolution layer can be realized by two steps of convolutions, that is, a convolution with the input feature map and the mean values of kernels and another convolution with the square of and the variances of kernels. In this way, the parameters and can be updated separately in the two steps of convolution.

III-B2 Monte Carlo Dropout as a Bayesian Approximation

Gal et al. [57] have demonstrated that a neural network with arbitrary depth and non-linearities applied before each weight layer is mathematically equivalent to an approximation of a probabilistic deep Gaussian process. According to this method, we incorporate dropout layers preceding each convolutional layer. Unlike its equivalent frequencist CNN, dropout is applied not only during model training but also during testing in order to approximate a probabilistic deep Gaussian process. The BayesCNN constructed using this method is named Eva-MCD.

III-B3 Uncertainty

The category uncertainty of BayesCNN prediction is given by [56]:

| (9) |

where denotes the frequentist inference with parameter sampled from the obtained posterier distribution, and denotes the Bayesian average of predictions, and is the sampling number.

The uncertainty for azimuth angle prediction can be expressed as the variance of multiple samplings:

| (10) |

where .

To sum up, the output of P-Eva containing category and azimuth angle predictions with the corresponding uncertainties can be given by:

| (11) |

The overall uncertainty can be calculated as .

III-C IntroVAE Pre-training

Optimizing the counterfactual images in the high-dimensional space is challenging and cannot ensure the in-distribution constraint [19]. To this end, the optimization can be conducted in the latent space of a probabilistic generative model to ensure the similar data manifold. In order to generate precise counterfactual explanations, it is crucial to first develop a high-quality image generation model. Consequently, we propose to utilize IntroVAE [58] as the foundational structure of the explainer. Similar to VAE, IntroVAE consists of an encoder and decoder that can project the high-dimensional data into low-dimensional latent space, enabling us to optimize in the latent space in order to preserve the data manifold. Additionally, IntroVAE incorporates adversarial training based on VAE to enhance the quality of the generated counterfactual explanations.

As depicted in Fig. 2, the VAE encoder is additionally served as the discriminator, and the VAE decoder is served as the generator in GAN. Adversarial learning like GAN is performed during model training so that the model can self-estimate the difference between the generated sample and the training data, and then update itself to produce a more realistic sample.

In order to match the distribution of the generated samples to the true distribution of the given training data, we use the regularization term as the adversarial training cost function. The Encoder requires minimizing so that the posterior of the real data matches the prior . Simultaneously, it requires maximizing so that the posterior of the generated sample deviates from the prior . includes and which are the latent codes of the reconstructed images and , respectively.

The loss function of the Encoder is:

| (12) |

Conversely, the Generator needs to minimize so that the posterior approximately matches the prior :

| (13) |

where is a positive margin, . is the mean square error (MSE) loss function of the reconstruction error. represents KL-divergence: . , , and are weighting parameters used to balance the importance of each item.

III-D Counterfactual Explanation Generation

Counterfactual analysis aims to examine the question of ”What changes are required to the input in order to get the desired output?” to explain how the model makes decisions. In our study, we aim to minimize the modifications required for SAR images deemed of low quality by the BayesCNN evaluation network in order to optimize their utility for deep learning models. This involves generating an ”explanation” closely resembling the original simulated images, and enabling the evaluator to achieve the desired outcome.

For the input simulated SAR image , the prediction of P-Eva is denoted as where the overall uncertainty could be relatively high, or the label and angle prediction are even wrong. The counterfactual explanation should meet the requirements of correct prediction , lower uncertainty, and minimal change compared to . Therefore, the optimization can be divided into the following three parts. First is the distance constraint, denoted as:

| (14) |

where is the L1 norm. The following constraints the counterfactual image consistent with the prior category and angle information:

| (15) |

where denotes the class number, and denotes the L2 norm. are two hyper-parameters. The final term defines the predicted uncertainty of :

| (16) |

The above-mentioned are predicted by the pre-trained P-Eva model. As a result, the overall optimization can be written as:

| (17) |

In the optimization process, we do not directly optimize in the high-dimensional space, but map to the low-dimensional latent space through the pre-trained encoder of IntroVAE to optimize . Therefore, Equation (17) can be re-written as:

| (18) | ||||

Then, the latent counterfactual can be obtained from:

| (19) |

The counterfactual explanation in the image domain can be generated from the pre-trained decoder of IntroVAE, i.e., . During the counterfactual generation, only the latent code is optimized while the P-Eva, the encoder and generator of IntroVAE are frozen.

The inauthentic details of a simulated SAR image can be reported as the pixel changes of the counterfactual explanations and the original image. For a better visualization, the difference is multiplied with its absolute value while keeping the sign. The positive and negative values are colored with red and blue in the experiments, respectively.

IV Experiments

IV-A Datasets

In the experiments, a real SAR image dataset and four simulated SAR image datasets are applied to conduct experiments. The simulated datasets include an EM model based simulation dataset and three generated SAR image datasets by advanced generative AI approaches. Some examples are given in Fig. 4.

| Dataset | Dep Angle | 2S1 | BMP-2 | BRDM-2 | BTR-60 | BTR-70 | D7 | T-62 | T-72 | ZIL-131 | ZSU-234 | Total | Gen-Train | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Real | MSTAR | 15° | 274 | 195 | 274 | 195 | 196 | 274 | 273 | 196 | 274 | 274 | 2425 | / |

| 17° | 299 | 233 | 298 | 256 | 233 | 299 | 299 | 232 | 299 | 299 | 2747 | / | ||

| Simulated | SAR-ACGAN | 17° | 299 | 233 | 298 | 256 | 233 | 299 | 299 | 232 | 299 | 299 | 2747 | 237 |

| SAR-CAE | 17° | 286 | 220 | 286 | 244 | 221 | 287 | 287 | 220 | 287 | 287 | 2625 | 121 | |

| SAR-NeRF | 17° | 360 | 360 | 360 | 360 | 360 | 360 | 360 | 360 | 360 | 360 | 3600 | 258 | |

| SAMPLE | 15°-17° | 174 | 107 | 0 | 0 | 92 | 0 | 0 | 108 | 0 | 174 | 655 | / | |

IV-A1 MSTAR

The Moving and Stationary Target Acquisition and Recognition (MSTAR) dataset [44] is acquired by an X-band high-resolution synthetic aperture radar at Spotlight mode with a resolution of 0.3m 0.3m. The dataset primarily comprise SAR slice images of stationary vehicles, encompassing target images of various vehicle types obtained at different azimuth angles. The experiments utilized data acquired at elevation angles of 15∘ and 17∘, containing images of 10 different classes, that is, 2S1, BMP-2, BRDM-2, BTR-60, BTR-70, D7, T-62, T-72, ZIL-131, and ZSU-234. The imaging azimuth angle ranges from 1∘ to 360∘, with the interval of 1∘ to 15∘.

IV-A2 SAR-ACGAN

The SAR-ACGAN dataset was generated by the Auxiliary Classifier Generative Adversarial Network (ACGAN) model [45], which was trained on 237 samples of MSTAR dataset at the depression angle of 17∘. The selected 237 training images were sampled with the azimuth angle interval of 15∘. The trained ACGAN model generated 2747 SAR images in total, as illustrated in Table I.

IV-A3 SAR-CAE

The SAR-CAE dataset is constructed with a causal adversarial autoencoder (CAE) model proposed by Guo et al. [46]. CAE is a causal model for SAR image representation conditioned on disentangled semantic factors. It was trained on limited MSTAR data with a few azimuth angles, then it can generate SAR target images given an arbitrary azimuth angle and class label. Only 12 or 13 images per category were sampled from MSTAR dataset at the depression angle of 17∘ for training the CAE model, with an azimuth interval of 30∘. The number of the generated images is 2625, as given in Table I.

IV-A4 SAR-NeRF

SAR-NeRF is a cutting-edge generative AI model considering the imaging mechanism of SAR inspired by the concept of neural radiance fields (NeRF), proposed by Lei et al. [47]. The SAR-NeRF dataset is derived from the MSTAR dataset, with an azimuth interval of 15∘ and a depression angle of 17∘. The imaging azimuth angle interval of SAR-NeRF dataset is fixed at 1∘, resulting in a total of 3600 images with a depression angle of 17∘, as depicted in the third row of Table I.

IV-A5 SAMPLE

The Synthetic and Measured Paired and Labeled Experiment (SAMPLE) dataset [15] provides EM model based simulation data and the paired measured SAR images with the same configuration as MSTAR dataset. In this paper, we selected the EM-simulated SAR images of same categories with MSTAR dataset for evaluation, including 2S1, BMP-2, BTR-70, T-72, and ZSU-234. The sampling azimuth angle ranges from 280∘ to 350∘ (10∘ to 80∘ according to the angle definition of MSTAR dataset), with elevation angles ranging from 15∘ to 17∘. The imaging interval is mainly about 1-2∘, but there are also some images with intervals ranging from 3∘ to 10∘. The details are given in Table I.

IV-B Experimental Settings

| SAR-NeRF | SAR-CAE | SAR-ACGAN | SAMPLE | |

|---|---|---|---|---|

| Training | 15∘(80%) | 15∘(80%) | 15∘(80%) | 17∘(80%) |

| Validation | 15∘(20%) | 15∘(20%) | 15∘(20%) | 17∘(20%) |

In this paper, all experiments are conducted on NVIDIA GeForce RTX 3090 Ti with a compute capability of 8.6. The details of training and validation data settings are given in Table II.

The proposed evaluation method aims to assess the distribution inconsistency between real and simulated data. Consequently, different from other full-reference assessment methods, our method does not require paired data. In order to prove the generalization ability of the proposed evaluation method, we applied real SAR images with different depression angle to train the BayesCNN model. To evaluate SAR-ACGAN, SAR-CAE and SAR-NeRF data with depression angle of 17∘, the BayesCNN model is trained with MSTAR real data at 15∘, as given in Table II.

The input size of evaluation model is 8888. The training data are pre-processed with center-crop, logarithm and random grayscale stretching for data augmentation, aiming to improve the generalization of the evaluation model. We used Adam as the optimizer with a learning rate of 0.001. The epoch number and the batch size are set to 300, 25, respectively. The trade-off parameter in Equation (7) is set to 20.

At IntroVAE Pre-training stage, the training and validation settings as well as the data pre-processing, follow the settings of evaluation stage. The hyper-parameters are given in Table III. We use Adam as optimizer to optimize Generator and Encoder with a learning rate of 0.0005. The epoch is 500 and the batch size is 25.

| latent dim | |||||

|---|---|---|---|---|---|

| 10 | 0.0005 | 100 | 0.0005 | 0.0005 | 100 |

The trade-off parameters , , and during the counterfactual optimization will be discussed in the experiments. The optimal values in our experiments are set to 1, 1, and 30, respectively. The Adam optimizer is applied, and the learning rate is 0.0005. The epoch numbers are set to 50, 150, 50, and 200 for SAR-NeRF, SAR-CAE, SAR-ACGAN, and SAMPLE, respectively.

IV-C Quantitative Results of Evaluation

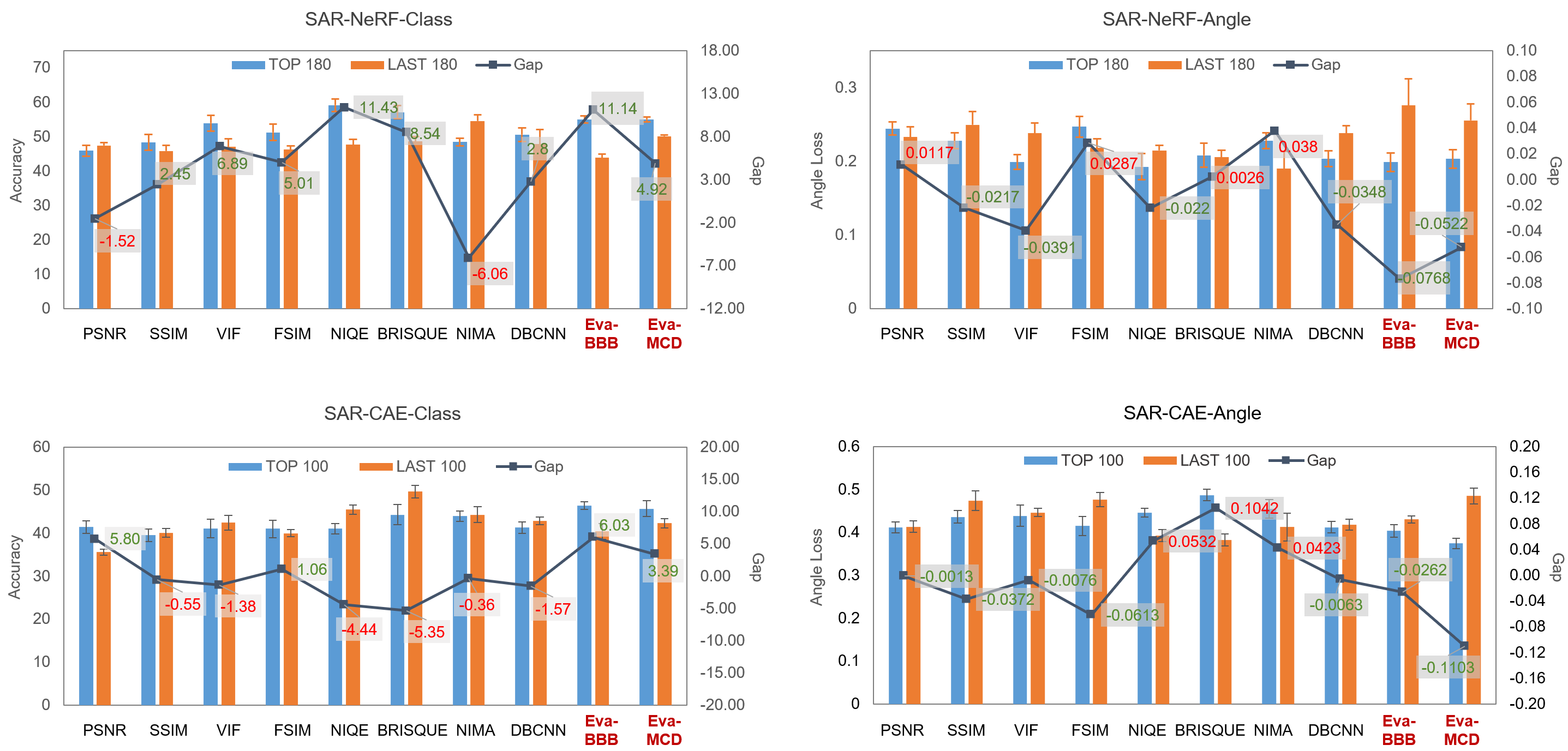

In order to prove the effectiveness of the proposed evaluation method, we sorted the simulated data with different assessment metrics and split them into ”TOP” and ”LAST” evenly. Then, the classification model is trained with ”TOP” and ”LAST” data separately and tested with real images. The performance gap between ”TOP” and ”LAST” demonstrates the effectiveness of the assessment metrics in terms of utility.

In this part, we applied SAR-NeRF and SAR-CAE datasets for experiments. The ”TOP” and ”LAST” numbers of SAR-NeRF and SAR-CAE are set to 180 and 100 per category, respectively. Four full-reference IQA metrics, i.e., PSNR [48], SSIM [49], VIF [50], FSIM [51], and four non-reference IQA models, i.e., NIQE [53], BRISQUE [55], NIMA [54], DBCNN [52], are compared.

As shown in Fig. 5, the classification accuracy of models trained with ”TOP” data filtered by different assessment metrics are marked in blue, while the ones trained with ”LAST” are presented in orange. The performance gap between ”TOP” and ”LAST” is also plotted. The positive values denote the assessment metrics are effective in terms of utility, otherwise demonstrating ineffective.

The results show that our method achieves the highest performance gap, which demonstrates that our method outperform other full-reference and non-reference IQA methods in terms of utility. Notably, some IQA models fail to evaluate the utility of simulated SAR image with negative performance gaps. The results illustrate that those methods based on human visual perception may not applicable to SAR images. We also explore the effectiveness of different BayesCNN models, including Eva-BBB and Eva-MCD. The results show that Eva-BBB can achieve better performance in evaluation.

Fig. 6 exhibits four paired examples of real and simulated images. It is difficult to distinguish the quality of these four simulated images from visual perspective. The VIF indicators demonstrate they have similar image quality, and example (c) and (d) are with lower quality scores than (a) and (b). However, we observed the uncertainties of simulated sample (a) and (b) are high, denoting their distinct feature distribution discrepancy with real data. The incorrect predicted labels by a recognition model trained with real data can verify this. As a result, our method can successfully assess the simulated SAR images in terms of data utility, even they have similar visual quality.

Some researches also proposed the evaluation metrics based on data utility, such as the recognition versus substitution rate curves (RSC) [7]. Fig. 7 illustrates the RSC results of three simulated SAR image datasets. Note that RSC is a dataset utility evaluation index which can only report the global evaluation result, rather than assigning criteria to individuals. To this end, it cannot filter out samples with low-utility. On the contrary, our method can evaluate data utility for each sample with a quantitative metric so as to filter the high quality data.

IV-D Qualitative Results of Explanation

To demonstrate that the proposed explanation method can explicitly reveal the inauthentic details of the simulated data, we visualize some examples from different simulated datasets, as shown in Fig. 8. In this figure, we present the original simulated images in (a) and (e); generate counterfactual samples in (b) and (f); explain the inauthentic details in (c) and (g), where red and blue represent the missing and redundant parts of the simulated images, respectively; and present real samples with the closest azimuth angles in (d) and (h). The predicted uncertainty and class label of the images are also provided.

It can be found that the uncertainty of the generated counterfactual image is reduced to a certain extent (marked in green). Additionally, the wrong prediction can be corrected (marked in red). Compared with the original simulated image, the counterfactual explanation is closer to the real one from a visual perspective. The inauthentic details explicitly explain the reason for the low utility of simulated data.

In addition, we verify the effectiveness of the proposed counterfactual explanation method by visualizing the feature distribution in the latent space. As provided in Fig. 9, the feature distribution of real and original simulated SAR images, and the one of real and counterfactual explanations, are demonstrated in (a) and (b), respectively. It can be seen that the original simulated data and the real ones have a distinct feature distribution inconsistency in the latent space. In contrast, the generated counterfactual samples achieve a more consistent distribution with the real ones, thus improving the data utility.

As illustrated above, the generated counterfactual explanation can improve the data quality and utility both explicitly and implicitly. Accordingly, we conducted several quantitative experiments to verify the potential of our proposed explanation method for improving the simulated data. In Table IV, we applied the A-ConvNet model trained on different simulated data to test the real MSTAR target images (15∘) and recorded the results. For each simulated dataset, four experiments are conducted. The term Upperbound denotes training with full real data, which demonstrates the recognition model’s upper limit. Before denotes training with full original simulated SAR images, while after (BBB/MCD) denotes training with counterfactual samples generated by Eva-BBB and Eva-MCD, respectively. We maintain the same number of training samples across all four experiments to ensure fairness in comparison. We can see that training with full original simulated data will cause a dramatic decrease in classification accuracy tested on real SAR images. The generated counterfactual explanation, however, can successfully improve the data utility that makes the trained model more applicable.

Furthermore, we also tested the performance of training different deep learning architectures with counterfactual samples, as given in Table V. The experimented simulated dataset is SAR-NeRF. We explore popular convolutional neural network and vision transformer models, such as ResNet-18, ResNet-50, DenseNet-121, and Swin-Transformer, for illustration. The results also demonstrate the effectiveness of the proposed explanation method, which has the potential to improve the utility of the data.

| Dataset | 2S1 | BMP-2 | BRDM-2 | BTR-60 | BTR-70 | D7 | T-62 | T-72 | ZIL-131 | ZSU-234 | Overall Acc | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| SAR-NeRF | UpperBound | 90.27±1.05 | 98.29±0.64 | 94.77±2.11 | 98.97±0.00 | 98.98±0.83 | 98.54±0.79 | 97.92±1.25 | 99.32±0.64 | 100.00±0.00 | 100.00±0.00 | 97.55±0.30 |

| before | 44.89±6.03 | 73.33±13.18 | 76.40±3.49 | 90.09±2.79 | 91.16±3.01 | 39.54±5.83 | 3.54±0.91 | 85.37±5.84 | 9.49±2.60 | 65.45±11.65 | 54.45±0.77 | |

| after (BBB) | 89.66±0.17 | 92.82±2.55 | 85.89±3.78 | 93.85±1.45 | 94.73±0.96 | 87.96±0.60 | 66.30±1.30 | 96.94±0.72 | 49.03±0.91 | 90.88±5.20 | 83.55±1.04 | |

| after (MCD) | 94.77±1.75 | 91.97±3.41 | 90.02±1.34 | 92.14±2.07 | 95.92±2.73 | 90.27±4.18 | 71.31±5.43 | 96.43±1.25 | 55.11±10.34 | 95.01±2.26 | 86.42±0.96 | |

| SAR-CAE | UpperBound | 94.53±1.37 | 97.61±1.05 | 96.35±2.06 | 98.20±1.42 | 99.17±0.00 | 98.91±0.30 | 98.29±0.91 | 99.66±0.48 | 99.88±0.17 | 100.00±0.00 | 98.18±0.22 |

| before | 62.77±3.17 | 47.18±7.89 | 48.91±12.07 | 67.18±4.61 | 34.69±10.09 | 97.45±3.15 | 12.09±7.00 | 32.14±9.64 | 25.18±10.06 | 42.70±7.53 | 47.26±3.62 | |

| after (BBB) | 90.80±3.13 | 73.74±5.70 | 69.20±8.89 | 77.44±2.53 | 76.02±4.48 | 96.50±2.01 | 81.61±2.91 | 80.51±3.31 | 83.50.29±2.63 | 91.02±3.92 | 82.70±1.16 | |

| after (MCD) | 91.61±1.77 | 80.72±10.09 | 75.40±9.47 | 78.36±4.09 | 74.90±8.00 | 96.35±1.71 | 86.30±1.79 | 78.37±9.90 | 91.90±2.04 | 97.08±1.34 | 86.00±2.83 | |

| SAR-ACGAN | UpperBound | 88.32±3.51 | 97.44±0.73 | 91.00±0.46 | 97.78±0.64 | 98.47±0.42 | 98.66±0.46 | 94.87±2.85 | 98.13±1.46 | 97.57±0.96 | 98.66±0.34 | 95.85±0.90 |

| before | 38.98±5.95 | 19.38±5.47 | 69.20±5.97 | 54.87±4.71 | 30.82±4.70 | 93.57±1.71 | 12.09±7.74 | 44.29±8.56 | 52.41±8.16 | 52.41±10.00 | 48.04±0.36 | |

| after (BBB) | 47.74±4.08 | 93.03±1.64 | 88.83±1.69 | 78.97±4.41 | 93.98±1.69 | 95.99±1.89 | 65.93±7.06 | 53.57±4.63 | 55.11±6.65 | 79.27±4.95 | 74.64±0.19 | |

| after (MCD) | 51.61±5.02 | 89.64±2.23 | 91.97±0.83 | 75.28±3.72 | 91.22±2.22 | 95.99±1.44 | 62.78±8.40 | 58.98±5.14 | 59.93±7.54 | 75.55±4.57 | 74.85±0.18 | |

| SAMPLE | UpperBound | 93.94±1.71 | 100.00±0.00 | - | - | 100.00±0.00 | - | - | 100.00±0.00 | - | 100.00±0.00 | 98.38±0.46 |

| before | 57.58±14.65 | 26.88±11.88 | - | - | 56.86±25.45 | - | - | 16.67±11.51 | - | 92.59±6.05 | 55.66±3.37 | |

| after (BBB) | 56.36±13.19 | 98.92±1.52 | - | - | 94.12±2.40 | - | - | 84.38±9.20 | - | 100.00±0.00 | 84.79±5.10 | |

| after (MCD) | 69.70±10.43 | 79.57±4.02 | - | - | 100.00±0.00 | - | - | 87.50±2.55 | - | 96.30±5.24 | 85.92±3.15 | |

| Model | 2S1 | BMP-2 | BRDM-2 | BTR-60 | BTR-70 | D7 | T-62 | T-72 | ZIL-131 | ZSU-234 | Overall Acc | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ResNet-18 | UpperBound | 78.95±5.67 | 92.65±0.24 | 95.50±1.75 | 96.75±2.38 | 100.00±0.00 | 83.70±4.00 | 90.48±2.26 | 99.32±0.24 | 93.55±2.82 | 100.00±0.00 | 92.56±0.64 |

| before | 5.47±5.95 | 36.92±3.65 | 70.19±19.15 | 71.62±5.87 | 98.47±0.42 | 22.87±5.85 | 0.00±0.00 | 92.52±2.84 | 0.49±0.34 | 77.86±5.86 | 44.15±1.15 | |

| after | 81.51±7.49 | 79.49±2.75 | 79.93±10.58 | 87.69±1.51 | 94.56±3.76 | 80.41±7.94 | 58.36±12.68 | 94.22±2.29 | 44.89±6.11 | 98.66±0.62 | 78.82±1.37 | |

| ResNet-50 | UpperBound | 61.68±15.75 | 87.52±5.46 | 94.16±3.87 | 98.80±0.64 | 98.64±1.27 | 78.83±8.82 | 64.71±13.11 | 97.79±1.05 | 91.97±5.82 | 100.00±0.00 | 86.35±4.41 |

| before | 2.68±2.09 | 44.10±14.72 | 34.55±12.08 | 52.14±23.75 | 97.96±2.20 | 8.88±6.90 | 0.00±0.00 | 82.65±13.01 | 0.00±0.00 | 49.15±19.09 | 33.10±3.51 | |

| after | 76.28±3.28 | 81.03±8.36 | 70.56±12.22 | 91.62±4.30 | 88.78±4.02 | 75.67±3.75 | 53.24±11.01 | 93.03±1.34 | 23.84±4.84 | 92.82±0.75 | 72.89±1.80 | |

| DenseNet-121 | UpperBound | 67.76±6.60 | 82.05±4.66 | 82.73±14.39 | 96.07±1.35 | 100.00±0.00 | 81.02±9.12 | 90.96±7.88 | 97.79±1.46 | 90.39±1.05 | 99.88±0.17 | 88.21±3.05 |

| before | 0.00±0.00 | 1.20±1.35 | 4.14±3.28 | 7.35±7.54 | 98.13±1.20 | 0.00±0.00 | 0.00±0.00 | 80.10±16.50 | 0.12±0.17 | 63.02±27.16 | 22.69±1.10 | |

| after | 48.05±13.27 | 56.24±10.53 | 76.52±14.13 | 88.21±4.41 | 94.90±1.25 | 67.03±19.14 | 57.88±12.10 | 98.81±1.34 | 33.94±7.05 | 98.78±0.62 | 70.43±5.41 | |

| Swin-T | UpperBound | 72.99±6.41 | 71.79±14.56 | 97.32±1.13 | 98.63±0.64 | 96.94±2.08 | 92.58±3.56 | 80.10±7.18 | 97.96±1.50 | 93.07±4.17 | 100.00±0.00 | 89.99±3.09 |

| before | 0.12±0.17 | 0.00±0.00 | 0.12±0.17 | 0.00±0.00 | 100.00±0.00 | 0.00±0.00 | 0.00±0.00 | 0.00±0.00 | 0.97±0.62 | 15.33±6.41 | 9.95±0.66 | |

| after | 41.36±18.12 | 45.81±13.28 | 79.56±14.10 | 66.32±11.15 | 92.35±6.05 | 45.26±6.11 | 25.89±1.80 | 53.23±15.68 | 74.70±8.20 | 45.01±14.87 | 56.00±3.79 | |

Fig. 10 illustrates the utility evaluation results and the counterfactual explanation results of the three AI-generated SAR image datasets. The x-axis and the y-axis denote the number of training samples of the Gen-AI model, and the test results on real SAR images of a simulated data trained model, respectively. It can be observed that SAR-CAE data are generated by the fewest training samples and can perform comparable utility with SAR-ACGAN where the training data are doubled. With similar training numbers, SAR-NeRF data has better utility than SAR-ACGAN according to our evaluation results. The counterfactual explanations of SAR-NeRF and SAR-CAE data are more effective than those of SAR-ACGAN data, that improve the image utility better.

IV-E Ablation Studies

In order to verify the effectiveness of each module in the counterfactual generation process, we conducted a series of ablation experiments on the SAMPLE dataset, as shown in Table VI.

First, the recognition model is trained directly on original simulated data and tested on real data to obtain the baseline result. Then, we applied the CLUE method to generate the counterfactual explanation, i.e., using VAE as the generator and optimizing with distance and uncertainty. The result shows a slight improvement of 5.15% over the baseline, indicating the effectiveness of the counterfactual explanation primarily. It is worth noting that the utility of the generated counterfactual data has increased significantly after adding the class guidance. Additionally, IntroVAE can improve the visual quality of counterfactual samples compared with VAE, enhancing its utility as well as obtaining high-quality counterfactual interpretation samples.

By introducing the angle guidance, we need to enable the assessment model to predict the azimuth angle, denoted as Eva-BBB. It can be obviously observed from the fourth row and the last row that introducing angle guidance can further improve the utility of counterfactual samples. The performance of predicting class and angle has improved to a certain extent. Moreover, we also verify the superiority of BayesCNN based CNN in predicting uncertainty compared with point-estimated frequency neural networks, as given in the 5th and 6th rows in Table VI.

| IntroVAE | Class Guidance | Angle Guidance | P-Eva | Accuracy | Angle loss |

|---|---|---|---|---|---|

| Baseline (Train: Sim data, Test: Real data) | ✗ | 58.35 4.11 | 0.0086 0.0019 | ||

| Eva-BBB (w/o angle) | 63.50 9.47 | 0.0107 0.0008 | |||

| ✓ | Eva-BBB (w/o angle) | 77.19 4.79 | 0.0115 0.0018 | ||

| ✓ | ✓ | Eva-BBB (w/o angle) | 79.32 2.31 | 0.0090 0.0015 | |

| ✓ | ✓ | ✓ | CNN | 77.86 1.91 | 0.0095 0.0009 |

| ✓ | ✓ | ✓ | Eva-BBB | 88.25 1.44 | 0.0054 0.0008 |

IV-F Hyper-Parameter Discussion

There are three hyperparameters involved in the process of counterfactual sample generation. and control the trade-off between the classification loss and angle loss, and controls the distance term between the counterfactual and original data. By fixing and changing the ratio of , it can be found that with the increase in the proportion of angle loss, the azimuth angle of the generated counterfactual image is more accurate, and the recognition model trained with these counterfactual samples performs better on azimuth angle regression prediction. When , the result achieves the highest classification accuracy on the test set. We also experience the influence of . The result shows that a larger would result in less change from the original image, leading to inferior counterfactual results.

| : | Accuracy | Angle loss | |

|---|---|---|---|

| 1:1 | 84.081.35 | 0.00590.0013 | |

| 1:30 | 88.251.44 | 0.00540.0008 | |

| 1:100 | 86.891.59 | 0.00510.0005 | |

| 1:30 | 85.442.42 | 0.00560.0014 |

V Conclusion

In this paper, a novel trustworthy framework, X-Fake, is proposed to evaluate the simulated SAR images and explain the inauthentic details of them from the perspective of data utility. The proposed framework comprises a Bayesian convolutional neural network-based probabilistic evaluator to output predicted uncertainty and a generative model-based causal explainer to obtain the counterfactual explanation. The predicted uncertainty of simulated data reveals an inconsistent distribution with real ones, which can be regarded as a criteria for utility assessment. The counterfactual explanations are generated with prior information, including the target label, azimuth angle, and the predicted uncertainty from the evaluator. It demonstrates the inauthentic details of the simulated image that cause the inconsistent data distribution. Experiments are conducted on several simulated SAR image datasets generated by both EM-based and GenAI-based approaches. The results illustrate that the proposed evaluator can successfully filter out high-quality data in terms of utility, while the trained deep learning model can achieve higher performance on real SAR images. Additionally, the generated counterfactual images explicitly improve the utility of simulated data. The classification model trained on generated counterfactual images outperforms the ones trained on original simulated data by 30%.

References

- [1] C. Cao, Z. Cui, L. Wang, J. Wang, Z. Cao, and J. Yang, “A Demand-Driven SAR Target Sample Generation Method for Imbalanced Data Learning,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–15, 2022.

- [2] Z. Huang, Z. Pan, and B. Lei, “What, where, and how to transfer in SAR target recognition based on deep CNNs,” IEEE Transactions on Geoscience and Remote Sensing, vol. 58, no. 4, pp. 2324–2336, 2019.

- [3] Z. Huang, Z. Pan, and B. Lei, “Transfer learning with deep convolutional neural network for SAR target classification with limited labeled data,” Remote sensing, vol. 9, no. 9, p. 907, 2017.

- [4] C. Wang, H. Gu, and W. Su, “SAR Image Classification Using Contrastive Learning and Pseudo-Labels With Limited Data,” IEEE Geoscience and Remote Sensing Letters, vol. 19, pp. 1–5, 2021.

- [5] K. Wang, G. Zhang, Y. Leng, and H. Leung, “Synthetic Aperture Radar Image Generation With Deep Generative Models,” IEEE Geoscience and Remote Sensing Letters, vol. 16, no. 6, pp. 912–916, 2018.

- [6] X. Hu, W. Feng, Y. Guo, and Q. Wang, “Feature Learning for SAR Target Recognition with Unknown Classes by Using CVAE-GAN,” Remote Sensing, vol. 13, no. 18, p. 3554, 2021.

- [7] Q. Guo, Y. Qian, H. Wang, W. Yu, F. Xu, T. J. Cui, and Y.-Q. Jin, “Recognition Rate Versus Substitution Rate Curve: An Objective Utility Assessment Criterion of Simulated Training Data,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–15, 2022.

- [8] Z. Yu and G. Dong, “A New Quantitative Evaluation Strategy for Deep Generated Sar Images,” in IGARSS 2022-2022 IEEE International Geoscience and Remote Sensing Symposium, 2022, pp. 2626–2629.

- [9] F. Han, H. Dong, L. Si, and L. Zhang, “Improving SAR Automatic Target Recognition via Trusted Knowledge Distillation from Simulated Data,” IEEE Transactions on Geoscience and Remote Sensing, 2024.

- [10] Y. Sun, Y. Wang, H. Liu, L. Hu, C. Zhang, and S. Wang, “Gradual Domain Adaptation with Pseudo-Label Denoising for SAR Target Recognition When Using Only Synthetic Data for Training,” Remote Sensing, vol. 15, no. 3, p. 708, 2023.

- [11] M. Zhu, B. Zang, L. Ding, T. Lei, Z. Feng, and J. Fan, “LIME-Based Data Selection Method for SAR Images Generation Using GAN,” Remote Sensing, vol. 14, no. 1, p. 204, 2022.

- [12] S. Jiao and W. Dong, “SAR Image Quality Assessment Based on SSIM Using Textural Feature,” in 2013 Seventh international conference on image and graphics, 2013, pp. 281–286.

- [13] J. Yoo and J. Kim, “SAR Image Generation of Ground Targets for Automatic Target Recognition Using Indirect Information,” IEEE Access, vol. 9, pp. 27 003–27 014, 2021.

- [14] M. Vespe and H. Greidanus, “SAR Image Quality Assessment and Indicators for Vessel and Oil Spill Detection,” IEEE Transactions on Geoscience and Remote Sensing, vol. 50, no. 11, pp. 4726–4734, 2012.

- [15] B. Lewis, T. Scarnati, E. Sudkamp, J. Nehrbass, S. Rosencrantz, and E. Zelnio, “A SAR dataset for ATR development: the Synthetic and Measured Paired Labeled Experiment (SAMPLE),” in Algorithms for Synthetic Aperture Radar Imagery XXVI, vol. 10987, 2019, pp. 39–54.

- [16] Q. Song, F. Xu, X. X. Zhu, and Y.-Q. Jin, “Learning to Generate SAR Images With Adversarial Autoencoder,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–15, 2021.

- [17] L. Liu, Z. Pan, X. Qiu, and L. Peng, “SAR Target Classification with CycleGAN Transferred Simulated Samples,” in IGARSS 2018-2018 IEEE International Geoscience and Remote Sensing Symposium. IEEE, 2018, pp. 4411–4414.

- [18] Y. Shi, L. Du, Y. Guo, and Y. Du, “Unsupervised Domain Adaptation Based on Progressive Transfer for Ship Detection: From Optical to SAR Images,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–17, 2022.

- [19] J. Antorán, U. Bhatt, T. Adel, A. Weller, and J. M. Hernández-Lobato, “Getting a CLUE: A Method for Explaining Uncertainty Estimates,” arXiv preprint arXiv:2006.06848, 2020.

- [20] P. Mohammadi, A. Ebrahimi-Moghadam, and S. Shirani, “Subjective and Objective Quality Assessment of Image: A Survey,” arXiv preprint arXiv:1406.7799, 2014.

- [21] S. Athar and Z. Wang, “A Comprehensive Performance Evaluation of Image Quality Assessment Algorithms,” Ieee Access, vol. 7, pp. 140 030–140 070, 2019.

- [22] Z. Wang and A. C. Bovik, “Modern Image Quality Assessment,” Ph.D. dissertation, Springer, 2006.

- [23] J. Yoo and J. Kim, “SAR Image Generation of Ground Targets for Automatic Target Recognition Using Indirect Information,” IEEE Access, vol. 9, pp. 27 003–27 014, 2021.

- [24] M. Heusel, H. Ramsauer, T. Unterthiner, B. Nessler, and S. Hochreiter, “GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium,” Advances in neural information processing systems, vol. 30, 2017.

- [25] H. Wang, M. Liu, S. Chen, M. Tao, and J. Wei, “Improved SAR Image Generation with Double Top-K Training Method on Auxiliary Classifier GAN,” in IGARSS 2023-2023 IEEE International Geoscience and Remote Sensing Symposium. IEEE, 2023, pp. 7046–7049.

- [26] A. Mittal, A. K. Moorthy, and A. C. Bovik, “No-Reference Image Quality Assessment in the Spatial Domain,” IEEE Transactions on image processing, vol. 21, no. 12, pp. 4695–4708, 2012.

- [27] H. Talebi and P. Milanfar, “NIMA: Neural Image Assessment,” IEEE transactions on image processing, vol. 27, no. 8, pp. 3998–4011, 2018.

- [28] Z. Yu, G. Dong, and H. Liu, “SAR Image Quality Assessment: From Sample-Wise to Class-Wise,” Remote Sensing, vol. 15, no. 8, p. 2110, 2023.

- [29] J. Gawlikowski, C. R. N. Tassi, M. Ali, J. Lee, M. Humt, J. Feng, A. Kruspe, R. Triebel, P. Jung, R. Roscher et al., “A survey of uncertainty in deep neural networks,” Artificial Intelligence Review, vol. 56, no. Suppl 1, pp. 1513–1589, 2023.

- [30] M. Sensoy, L. Kaplan, and M. Kandemir, “Evidential deep learning to quantify classification uncertainty,” Advances in neural information processing systems, vol. 31, 2018.

- [31] O. Sagi and L. Rokach, “Ensemble learning: A survey,” Wiley interdisciplinary reviews: data mining and knowledge discovery, vol. 8, no. 4, p. e1249, 2018.

- [32] M. S. Ayhan and P. Berens, “Test-time data augmentation for estimation of heteroscedastic aleatoric uncertainty in deep neural networks,” in Medical Imaging with Deep Learning, 2022.

- [33] C. Blundell, J. Cornebise, K. Kavukcuoglu, and D. Wierstra, “Weight uncertainty in neural network,” in International conference on machine learning. PMLR, 2015, pp. 1613–1622.

- [34] F. K. Gustafsson, M. Danelljan, and T. B. Schon, “Evaluating scalable bayesian deep learning methods for robust computer vision,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition workshops, 2020, pp. 318–319.

- [35] H. Ritter, A. Botev, and D. Barber, “A scalable laplace approximation for neural networks,” in 6th international conference on learning representations, ICLR 2018-conference track proceedings, vol. 6. International Conference on Representation Learning, 2018.

- [36] W. Samek, G. Montavon, S. Lapuschkin, C. J. Anders, and K.-R. Müller, “Explaining deep neural networks and beyond: A review of methods and applications,” Proceedings of the IEEE, vol. 109, no. 3, pp. 247–278, 2021.

- [37] D. Watson, J. O’Hara, N. Tax, R. Mudd, and I. Guy, “Explaining predictive uncertainty with information theoretic shapley values,” Advances in Neural Information Processing Systems, vol. 36, 2024.

- [38] P. Thiagarajan, P. Khairnar, and S. Ghosh, “Explanation and use of uncertainty quantified by bayesian neural network classifiers for breast histopathology images,” IEEE transactions on medical imaging, vol. 41, no. 4, pp. 815–825, 2021.

- [39] Z. Huang, Y. Liu, X. Yao, J. Ren, and J. Han, “Uncertainty exploration: toward explainable sar target detection,” IEEE Transactions on Geoscience and Remote Sensing, vol. 61, pp. 1–14, 2023.

- [40] R. R. Selvaraju, M. Cogswell, A. Das, R. Vedantam, D. Parikh, and D. Batra, “Grad-cam: Visual explanations from deep networks via gradient-based localization,” in 2017 IEEE International Conference on Computer Vision (ICCV), 2017, pp. 618–626.

- [41] P. Schwab and W. Karlen, “Cxplain: Causal explanations for model interpretation under uncertainty,” Advances in neural information processing systems, vol. 32, 2019.

- [42] D. Ley, U. Bhatt, and A. Weller, “Diverse, global and amortised counterfactual explanations for uncertainty estimates,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 36, no. 7, 2022, pp. 7390–7398.

- [43] X. Lv, X. Qiu, W. Yu, and F. Xu, “Simulation-aided sar target classification via dual-branch reconstruction and subdomain alignment,” IEEE Transactions on Geoscience and Remote Sensing, vol. 61, pp. 1–14, 2023.

- [44] “The Air Force Moving and Stationary Target Recognition Database,” 2016. [Online]. Available: https://www.sdms.afrl.af.mil/-datasets/mstar/

- [45] A. Odena, C. Olah, and J. Shlens, “Conditional image synthesis with auxiliary classifier gans,” in International conference on machine learning. PMLR, 2017, pp. 2642–2651.

- [46] Q. Guo, H. Xu, and F. Xu, “Causal Adversarial Autoencoder for Disentangled SAR Image Representation and Few-Shot Target Recognition,” IEEE Transactions on Geoscience and Remote Sensing, vol. 61, pp. 1–14, 2023.

- [47] Z. Lei, F. Xu, J. Wei, F. Cai, F. Wang, and Y.-Q. Jin, “SAR-NeRF: Neural Radiance Fields for Synthetic Aperture Radar Multi-View Representation,” arXiv preprint arXiv:2307.05087, 2023.

- [48] J. Korhonen and J. You, “Peak signal-to-noise ratio revisited: Is simple beautiful?” in 2012 Fourth International Workshop on Quality of Multimedia Experience. IEEE, 2012, pp. 37–38.

- [49] Z. Wang, A. C. Bovik, H. R. Sheikh, and E. P. Simoncelli, “Image quality assessment: from error visibility to structural similarity,” IEEE transactions on image processing, vol. 13, no. 4, pp. 600–612, 2004.

- [50] H. R. Sheikh and A. C. Bovik, “Image information and visual quality,” IEEE Transactions on image processing, vol. 15, no. 2, pp. 430–444, 2006.

- [51] L. Zhang, L. Zhang, X. Mou, and D. Zhang, “FSIM: A Feature Similarity Index for Image Quality Assessment,” IEEE Transactions on Image Processing, vol. 20, no. 8, pp. 2378–2386, 2011.

- [52] W. Zhang, K. Ma, J. Yan, D. Deng, and Z. Wang, “Blind Image Quality Assessment Using a Deep Bilinear Convolutional Neural Network,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 30, no. 1, pp. 36–47, 2020.

- [53] A. Mittal, R. Soundararajan, and A. C. Bovik, “Making a “Completely Blind” Image Quality Analyzer,” IEEE Signal Processing Letters, vol. 20, no. 3, pp. 209–212, 2013.

- [54] H. Talebi and P. Milanfar, “NIMA: Neural Image Assessment,” IEEE Transactions on Image Processing, vol. 27, no. 8, pp. 3998–4011, 2018.

- [55] A. Mittal, A. K. Moorthy, and A. C. Bovik, “No-Reference Image Quality Assessment in the Spatial Domain,” IEEE Transactions on Image Processing, vol. 21, no. 12, pp. 4695–4708, 2012.

- [56] K. Shridhar, F. Laumann, and M. Liwicki, “A comprehensive guide to bayesian convolutional neural network with variational inference,” arXiv preprint arXiv:1901.02731, 2019.

- [57] Y. Gal and Z. Ghahramani, “Dropout as a bayesian approximation: Representing model uncertainty in deep learning,” in international conference on machine learning. PMLR, 2016, pp. 1050–1059.

- [58] H. Huang, R. He, Z. Sun, T. Tan et al., “Introvae: Introspective variational autoencoders for photographic image synthesis,” Advances in neural information processing systems, vol. 31, 2018.

- [59] H. Choi and J. Jeong, “Despeckling images using a preprocessing filter and discrete wavelet transform-based noise reduction techniques,” IEEE Sensors Journal, vol. 18, no. 8, pp. 3131–3139, 2018.

- [60] M. Mäkitalo, A. Foi, D. Fevralev, and V. Lukin, “Denoising of single-look sar images based on variance stabilization and nonlocal filters,” in 2010 International Conference on Mathematical Methods in Electromagnetic Theory. IEEE, 2010, pp. 1–4.

- [61] A. G. Wilson and P. Izmailov, “Bayesian Deep Learning and a Probabilistic Perspective of Generalization,” Mar. 2022, arXiv:2002.08791 [cs, stat]. [Online]. Available: http://arxiv.org/abs/2002.08791