Wrapped Gaussian Process Functional Regression Model for Batch Data on Riemannian Manifolds

Abstract

Regression is an essential and fundamental methodology in statistical analysis. The majority of the literature focuses on linear and nonlinear regression in the context of the Euclidean space. However, regression models in non-Euclidean spaces deserve more attention due to collection of increasing volumes of manifold-valued data. In this context, this paper proposes a concurrent functional regression model for batch data on Riemannian manifolds by estimating both mean structure and covariance structure simultaneously. The response variable is assumed to follow a wrapped Gaussian process distribution. Nonlinear relationships between manifold-valued response variables and multiple Euclidean covariates can be captured by this model in which the covariates can be functional and/or scalar. The performance of our model has been tested on both simulated data and real data, showing it is an effective and efficient tool in conducting functional data regression on Riemannian manifolds.

1 Introduction

Regression models are ubiquitous and powerful tools in data analysis to reveal the relationship between independent variables and dependent variables. Most well known regression models are formulated under the assumption that the variables all lie in Euclidean vector spaces. However, statistical models of manifold-valued data are gaining popularity in various fields of scientific analysis, such as computational social science, medical imaging analysis and computer vision [8]. Together with the significant increase in manifold-valued data observed by current instrumentation, generalizations of Euclidean regression models to manifold-valued settings are needed. If the manifold structure is not accounted for then the reliability of model predictions in the target spaces is compromised. Consider, for instance, Figure 1, where a regression model is trained on manifold-valued data (represented by the black curves on a S2). The predictions of this model (illustrated by the blue curve) deviate from the manifold. However, when the manifold structure is incorporated into the model, the predictions (depicted by the red curve) align with the target manifold. This underscores the necessity of employing non-Euclidean structure for modelling manifold-valued data. An additional example demonstrating this principle in Kendall’s shape space is provided in the Appendix.

In recent years, functional data have garnered increasing attention due to the continuous or intermittent recording of data at discrete time points. These data often exhibit non-linearity, and a common assumption is that they lie on a nonlinear manifold. For instance, image data, which can be influenced by random domain shifts, are known to reside in such manifolds . However, analyzing functional data poses challenges, particularly when dealing with spaces lacking global or local linear structures. Traditional methods like functional principal component analysis become infeasible in these cases. One specific difficulty arises from defining a suitable metric for data residing on a Riemannian manifold. Researchers have explored alternative approaches to address this issue. For example, [16] propose using point-wise Fréchet means for non-Euclidean time-varying random objects in general metric spaces. Additionally, they introduce point-wise distance trajectories between individual time courses and the estimated Fréchet mean trajectory. This innovative approach allows for a representation of time-varying random objects using functional data. In summary, the study of functional data on nonlinear manifolds presents exciting opportunities for advancing statistical methodologies and understanding complex data structures.

According to the manifold hypothesis, functional data can be mapped onto a low-dimensional nonlinear manifold, which is also known as manifold learning [35]. Gaussian processes (GPs) have proven to be powerful tools for modeling complex data structures. While GPs are commonly applied in Euclidean spaces, recent research has extended their applicability to Riemannian manifolds. [5] utilize the spectral theory of the Laplace-Beltrami operator to compute Matérn kernels on Riemannian manifolds. These kernels, widely used in physical sciences, capture both smoothness and spatial correlations. The spectral approach allows for efficient computation of Matérn covariance functions, enabling GPs to model data residing on curved surfaces. [22] introduce a vector-valued GP equipped with a matrix-valued kernel. This novel framework enables modeling of vector fields on Riemannian manifolds. Applications include geodesic flow modeling, fluid dynamics, and other scenarios where vector-valued data arise. Recently, [2] and [3] extend stationary GPs to compact Lie groups and non-compact symmetric spaces. These spaces play a critical role in spatiotemporal modeling. Those models define a metric based on geodesic distances between predictors, accommodating both functional and scalar predictors. In addition, [26] propose a regression model for functional data on manifolds. While existing approaches assume Euclidean response variables, certain problems involve responses residing directly on Riemannian manifolds. For instance, predicting flight trajectories based on flight time. In contrast to previous models, we propose a regression framework that maps Euclidean-valued predictors to manifold-valued functional responses.

A number of statistical approaches to analysis of manifold-valued data exist in the literature, especially in the context of principal component analysis (PCA). For example, [18] introduced principal geodesic analysis which is a generalisation of PCA on Riemannian manifolds. It replaces the first principal component with a principal geodesic constrained to pass through the intrinsic mean, with calculations performed approximately in Euclidean tangent space. [25] proposed a PCA technique for functional data on -dimensional Riemannian manifolds in which the authors adopt a regularization method by a smoothing penalty coherent with geodesic distances. Subsequently, [11] formulated Riemannian functional principal component analysis by mapping data to tangent spaces via the Riemannian logarithm map and then performing multivariate functional principal component analysis within the tangent space.

In addition to generalizations of PCA to manifold data, generalizations of linear regression have also been studied. Similar to manifold-based PCA, the regression line is typically replaced with a geodesic within the manifold, which gives rise to more challenging optimisation problems in order to fit the models than in the standard Euclidean setting. Geodesic regression [17] is a generalization of linear regression to a manifold-valued setting in which an univariate independent variable is considered in and dependent variables lie in a Riemannian manifold. The author derived a gradient descent algorithm for model fitting via derivatives of the exponential map and Jacobi fields, because the least square method has no analytical solution under this setting. [24] then extended geodesic regression to the multivariate setting in which the independent variables are in and the dependent variable is still manifold-valued. In addition, the authors proposed a variational gradient descent method based on parallel transport which is more convenient for high-dimensional independent variables. In the context of shape analysis, [28] provided an analytic approach to geodesic regression in Kendall’s shape space. Multiple linear regression has also been generalised by [30] for complex random objects in metric spaces with independent variables in . Using a least-squares approach, the authors derived asymptotic rates of convergence.

However, in many applications, non-Euclidean data cannot always be assumed to follow form of linear relationship. [4] introduced a kernel-based nonlinear regression model for both manifold-valued independent variables and manifold-valued dependent variables. [21] extended polynomial regression to Riemannian manifolds by introducing a class of curves on Riemannian manifolds which generalize geodesics and are analogs of polynomial curves in Euclidean space. [10] developed a regression model for a manifold-valued response variable in Riemannian symmetric spaces and covariates in Euclidean spaces with applications in medical imaging analysis. Moreover, [31] introduced an additive linear model for manifold-valued data which is based on the exponential map. In particular, they transform the manifold-valued data onto tangent spaces and then estimate the parameters of the additive linear model by a generalised least squares method.

Gaussian process regression (GPR) is a powerful non-linear and non-parametric model widely used for learning probability distributions over unknown functions. Operating within a Bayesian framework, GPR assumes that both the prior and likelihood follow normal distributions, allowing for the derivation of a posterior distribution that is also Gaussian. This ash been extended to solve the problems involving non-Gaussian data [41] and the use of other prior processes [43]. In addition, researchers have extended GPR to accommodate multi-output scenarios. Notable contributions include the work of [7], [40], and [1], who have explored multi-output Gaussian process regression. Over the past decade, substantial progress has been made in developing Gaussian process regression models specifically tailored for manifolds, thereby expanding the model’s scope and applicability. One intriguing approach is the wrapped Gaussian process regression proposed by [27]. In this framework, the Riemannian manifold is linearized via the logrithm map, projecting data points onto a tangent space. This results in a non-parametric regression model with a probabilistic foundation. Importantly, the computational cost of wrapped Gaussian process regression remains relatively low, as it involves additional calculations only for the exponential map and logarithm map of each manifold-valued data point. The key innovation lies in defining a Gaussian distribution directly on the Riemannian manifold, leveraging insights from real-valued multivariate Gaussian distributions and the exponential map. This novel approach opens up avenues for Gaussian process regression on Riemannian manifolds. Within the Bayesian framework, researchers have derived manifold-valued posterior distributions, albeit under certain assumptions (e.g., the requirement of infinite injectivity radius).

To the best of our knowledge, there is little current literature about regression models for functional batch data on Riemannian manifolds within a probabilistic framework. In light of this, we provide a nonlinear non-parametric regression model with uncertainty for batch data on a smooth Riemannian manifold. Called the wrapped Gaussian process functional regression model (WGPFR), it models the relationship of a functional manifold-valued response and mixed (scalar and functional) Euclidean-valued predictors by representing the mean structure and the covariance structure as different model terms. Specifically, a functional regression model is used for the mean structure with a functional manifold-valued response and scalar covariates, while a wrapped Gaussian process has been used for the covariance structure with functional covariates based on [27] who extend Gaussian process regression on Riemannian manifolds. In this way, the proposed WGPFR model extends the research field of non-parametric regression on manifolds.

The rest of this paper is organized as follows. In section 2, some basic concepts of functional data, Riemannian manifolds, Gaussian process regression and wrapped Gaussian process regression are reviewed. In section 3, we propose our model together with a method for inference via an efficient iterative algorithm. Numerical experiments and real data analysis are reported in Section 4. Finally, we draw conclusions and discuss the results in Section 5.

2 Background

2.1 Functional Data

Functional data analysis is a field of study wherein each observation is a random function, manifesting as a curve, surface, or other variations over a continuum. This analysis is particularly pertinent to data recorded over identical intervals, with consistent frequency and numerous repeated observations. While data are frequently modelled by parametric models incorporating randomness in the realms of statistics or machine learning, functional data analysis contemplates a smooth process observed at discrete time points, offering greater flexibility and reduced parametric constraints, see e.g. [33] and [45].

Furthermore, functional data can be procured from a diverse range of domains. For instance, in finance, volatility surfaces serve as a source of functional data. In the field of biomedical research, time-varying physiological signals yield functional data. Functional Magnetic Resonance Imaging (FMRI) is yet another domain that generates functional data. These examples underscore the broad applicability and interdisciplinary nature of functional data analysis.

2.2 Preliminaries for Riemannian Manifolds

In this section, we review a few basic concepts of Riemannian manifolds, wrapped Gaussian distributions, wrapped Gaussian processes on manifolds and then set up basic notations. More detail on basic Riemannian geometry can be found in standard texts, such as that by [14].

2.2.1 Concepts and Notation

Given a -dimensional smooth differentiable manifold and a tangent space , for , a Riemannian metric on is a family of positive definite inner products which vary smoothly with . Equipped with this Riemannian metric, we call the pair a Riemannian manifold.

The tangent bundle of is defined as a set , where is a point in and is a tangent vector at . If is a smooth curve in , then its length is defined as the integral of where the norm is computed via the Riemannian metric at . For any pair with sufficiently small, there is a unique curve of minimal length between two points and , with initial conditions , and . Such a curve is called a geodesic. The exponential map at is defined as for sufficiently small . Consider the ball of largest radius around the origin in on which is defined and let denote the image of on this ball. Then the exponential map has an inverse on , called the Riemannian logarithm map at , . For any point , the geodesic distance of and is given by .

Figure 2 shows the concepts above. For example, and are two points on a Riemannian manifold , the tangent space at is denoted as and refers to a geodesic between and . The tangent vector is from to at and moves towarding with distance is , which represents and respectively.

The exponential map and its inverse are analogs of vector space operations in the following way. Suppose and refer to two different points on linked by a geodesic , and suppose the tangent vector from the former to the latter is denoted by . In addition, suppose and refer to two Euclidean data points and the vector from the former to the latter is given by . Table 1 shows the relationship of operations between Riemannian manifolds and Euclidean spaces.

| Euclidean Space | Riemannian Manifold | |

|---|---|---|

| Addition | ||

| Subtraction | ||

| Distance |

If is a complete metric space that every Cauchy sequence of points in has a limit that is also in , then the Hopf-Rinow theorem shows that every pair of points is joined by at least one geodesic, a condition known as geodesic completeness, and, equivalently, is defined for all . However, given two points on , the geodesic between the points may not be unique, even if is geodesically complete. The injectivity radius at , denoted , is defined to be the radius of the largest ball at the origin of on which is a diffeomorphism. It follows that the geodesic ball at of radius is contained within under which the Wrapped Gaussian process regression can be derived to a analytical form. In the following, we assume the data are on a Riemannian manifold with infinite injectivity radius, such as spheres and Kendall’s shape spaces.

Some examples of Riemannian manifolds are shown in Appendix, including explicit formulae for the exponential and logarithm maps in certain cases.

2.3 Functional Data on Riemannian Manifolds

To study functional data located on Riemannian manifolds, [11] considered a -dimensional complete Riemannian submanifold of a Euclidean space , with a geodesic distance and a probability space with sample space , -algebra and probability . The sample space of all -valued continuous functions on a compact interval is denoted by . Thus, we define -valued random functions to be functions , , satisfying . Let refers to an ambient Hilbert space of -dimensional square integrable functions on . The inner product is defined as and the norm is given by for .

2.4 Gaussian Process Regression and Wrapped Gaussian Process Regression

2.4.1 Euclidean Gaussian Process Regression

As described in [34], a general Gaussian process regression model is defined as

| (1) |

where , , . The regression function is assumed to follow a Gaussian process prior, that is

| (2) |

where is the mean function of Gaussian process, and is the covariance function with hyper-parameter .

Given training data , we have a multivariate Gaussian distribution that is

| (3) |

where refers to , refers to a mean vector, and refers to a covariance matrix in which the entry of the -th element is .

To obtain the predictive distribution at a new input , it is convenient to take advantage of the properties of Gaussian distribution:

| (4) |

where is a covariance matrix, is scalar and their entries are similar to . Fortunately, the predictive distribution has an analytical form which is

| (5) |

For more details of Gaussian process regression, see [44].

The kernel function in the covariance function can be chosen from a parametric family, such as the radial basis function (RBF) kernel:

| (6) |

with the hyper-parameter chosen by maximizing the marginal likelihood. Specifically, the hyper-parameters can be estimated by conjugate gradient descent algorithm [32] or Markov Chain Monte Carlo [38].

2.4.2 Wrapped Gaussian Distribution

In this subsection, we first review the definitions of the wrapped Gaussian distribution and wrapped Gaussian process on a Riemannian manifold , and then review wrapped Gaussian process regression (WGPR) which is introduced by [27].

Suppose denotes a -dimensional Riemannian manifold and is a random variable on . For and a symmetric positive definite matrix , we can define a wrapped Gaussian distribution as follows

| (7) |

where is called basepoint and is a vector belongs to the tangent space at which follows a multi-variate Gaussian distribution with zero mean and covariance matrix (see Section 3.1 in [27]). The wrapped Gaussian distribution is formally denoted as

| (8) |

[27] describe a manifold-valued Gaussian process constructed by the wrapped Gaussian distribution, and the following material is based on their work. A collection of random points on a manifold index over a set is a wrapped Gaussian process, if every subcollection follows a jointly wrapped Gaussian distribution on . It is denoted as

| (9) |

where is the mean function on a Riemannian manifold, and is a covariance function with hyper-parameter on a tangent space at .

Consider the wrapped Gaussian process regression model which relates the manifold-valued response to the vector-valued predictor though a link function which we assume to be a wrapped Gaussian process. Analogously to Gaussian process regression, the authors also derive inferences for wrapped Gaussian process regression in Bayesian framework. Specifically, given the training data , the prior for is assumed to be a wrapped Gaussian process, which is . Then the joint distribution between the training outputs and the test outputs at is given by

| (16) |

where , , , , with .

Therefore, by Theorem 1 in [27], the predictive distribution for new data given the training data can be obtained by

| (17) |

where

| (18) |

Similar to the comparison of operators between Riemannian manifolds and Euclidean spaces, Table 2 shows the analogs of Gaussian processes between these two spaces.

| Euclidean Spaces | Riemannian Manifolds | |

|---|---|---|

| Definition | ||

| Mean | ||

| Covariance |

3 Main Model and Inference

Gaussian process regression is a powerful non-parametric tool in statistics and machine learning. In Section 3, we adapt GPR to functional data on manifolds by proposing the WGPFR model for batch data on Riemannian manifold. Inference of the WGPFR model is presented in Section 3.2, where the statistical properties of the model parameters are studied and an efficient algorithm for inference is proposed.

3.1 Wrapped Gaussian Process Functional Regression Model

The data consist of discrete observations of random functional curves on , so that the -th curve is denoted as , , . We assume that all curves are observed at a set of times , . Throughout, will index time and will index curves. The set of observations is therefore , , . Associated with the -th curve, we assume there is a real vector-valued functional covariate . We assume, as for the functional responses , that is also observed at the times , so that we are given points . Finally, we assume there is a vector-valued covariate observed for each curve . Thus, the data for the -th curve comprises , together with the vector m. These data, for fixed , are called a batch.

Next we define our hierarchical model for the data. It has the following overall structure, and details of each part are filled out in what follows. At the top level of the hierarchy, there is a mean curve . There are two stages to obtain each curve from . First there is a perturbation of to obtain an unobserved curve . We assume this perturbation depends on the vector covariate m and a functional model parameter , but not on the functional covariates (see Equation (19)). Secondly, we assume is obtained from via a vector field along which depends on the functional covariate (see Equation (20)). Figure 3 shows a schematic of the overall model.

3.1.1 Definition of Mean Structure

It is difficult to infer non-linear relationships non-parametrically between a functional response variable and multi-dimensional functional covariates even when both of them are observed in a space for real-valued functions [37]. Therefore, we assume that the mean structure depends on the scalar covariates only. Specifically we assume

| (19) |

where and play a similar role to the intercept term and slope term of the function-on-scalar linear regression model in Euclidean space. These are model parameters which we estimate. Furthermore, equation (19) can be seen as a functional version of geodesic regression proposed in [17], in which a geodesic regression model for manifold-valued response and scalar-valued predictor is proposed in the form with where is the predictor variable.

3.1.2 Definition of Covariance Structure

Conditional on , the -th curve is modelled as

| (20) |

where is a vector field along . When the data are observed with random noise, [17] defines the geodesic model as . Similarly, the proposed WGPFR model with error term can be defined as

| (21) |

where are independent measurement errors following a multivariate normal distribution with mean zero and covariance matrix . For functional data, in addition to the measurement error term, the most important part we are interested is the underlying covariance structure contained in term , which is Equation (20). In what follows, we explain how is modelled as depending on the functional covariate .

We assume that the vector field can be represented in local coordinates as a -dimensional vector function . We further assume this vector-valued function follows a Gaussian process with mean zero and an underlying covariance structure , which is

| (22) |

Specifically, given two observation times and from the -th batch of data, we assume that the covariance of the two random vectors and a function of the observed covariate values and .

Under the framework of the wrapped Gaussian process in [27], we can represent the above Gaussian process as the wrapped Gaussian process for manifold functional data. That is,

| (23) |

where and are defined as above.

To summarize, the WGPFR model studies the non-linear relationship between the manifold-valued functional response and mixed (functional and vector) Euclidean-valued predictors in two parts, as shown in Figure 3. The first part is the mean structure which is defined through a functional regression to model the manifold-valued functional mean with the vector-valued predictor. The second part is called the covariance structure, and for which the inference is based on a Bayesian method with the prior assumed to be Gaussian process.

Compared to the geodesic regression in [17], the WGPFR additionally incorporates the information of dependence between data modelled by covariance structure which is the main focus of interest for functional data analysis. Compared to the WGPR model in [27], this paper further models the mean part which relates the functional mean on the manifold to the scalar predictor on Euclidean space, while the mean part in [27] is not related to scalar predictors. Note that the scalar predictor plays an important role in the prediction of the model, especially for the batch data we consider in this paper. The proposed WGPFR model is more complicated but much flexible than existing models and induces a more complex inference procedure which will be illustrated in detail in the next subsection.

3.2 Inference for WGPFR

3.2.1 Estimation of Mean Structure

Since the mean structure model (19) can be seen as a functional version of the geodesic regression model [17], an intuitive inference method consists of optimizing and simultaneously for each fixed . However, this would ignore information about the correlation of the function and at different times. On the other hand, if the number of observed times is large, and we account fully for correlations between all different times, then the computational cost will be very high, unless we use some efficient methods, such as [12]. Due to the dependent structure, we cannot conduct optimization at each fixed separately, otherwise the correlation information across different t will be lost. It can also be seen that inference of the functional mean structure model is indeed challenging.

In this paper we borrow an idea of [11] to estimate and . Note that plays the role of an intercept in the linear regression in Euclidean space, which is usually defined as the mean of the response. Similarly, for functional data on a Riemannian manifold, we can define the intrinsic population Fréchet mean function under the assumption of existence and uniqueness:

| (24) |

where denotes geodesic distance. When is fixed, refers to a point on a Riemannian manifold; when is variable, refers to a curve on a Riemannian manifold. Additionally, since each is continuous, is also continuous (see equation (1) in [11]).

The intrinsic Fréchet mean function can be a good choice for since it is a natural mean of manifold-valued functional data and it is reasonable to assume are confined to stay within the radius of injectivity at for all . Then the inverse exponential map can be well-defined. We estimate at each time via the sample Fréchet mean of the points . This requires that the times are the same across all curves . Standard gradient descent methods [29] can be used to obtain the Fréchet sample mean at each time to obtain estimates of , . Denote the logarithm process data as

where is a -dimensional tangent vector of at for a fixed . Since we assume is a dimensional closed Riemannian submanifold of a Euclidean space, it is reasonable to identify the tangent space as a subspace of the Euclidean space with . Then can be seen as valued square integrable functions, which can be represented by a set of basis functions. Similarly, the slope function can also be represented by this set of basis functions. In this way, we can not only easily estimate the intercept term but also the slope term can be obtained by least square methods on . This reduces the computation complexity compared with the optimization method in [17]. Next we illustrate the estimation procedure in detail.

For the estimation of the intrinsic Fréchet mean , the sample Fréchet mean is calculated by minimizing the following loss function for each :

| (25) |

It is natural to use the gradient descent algorithm introduced by [29] to estimate the sample Fréchet mean for each fixed . Using [14], the gradient is given by

| (26) |

An implementation of the gradient descent algorithm to solve equation (25) is given in Algorithm 1. In practice, we choose the step size where is the number of samples and which is relatively small.

For the estimation of , the first step is to find a reasonable basis to represent in the tangent space. Under the framework of [11], suppose we have an arbitrary system of orthonormal basis functions

| (27) |

where is a Hilbert space of with inner product and norm , if and 0 otherwise. Note that the values of each at each time is restricted to the tangent space , which are identified with . Define the -dimensional linear subspace of :

| (28) |

Then the slope functions , can be approximated by a linear span on with expansion coefficients :

| (29) |

Then the estimation of can be transformed to the estimation of , , .

Defining , and , then the multiple linear function-on-scalar regression model becomes

| (30) |

where which are assumed to be independent of the covariate for .

Since can not be obtained directly, we use as the response of the multiple linear regression model, so that the parameters of the regression model are estimated by minimizing the following loss function with observed data:

| (31) |

The coefficients are calculated by standard least squares methods.

To be more specific, let denote a matrix such that the -th column denotes the -th batch, and the -th row denotes the vectored element . Similarly, let denote a matrix; let denote a matrix for which each row indicates a data point; and let denote the coefficient matrix with element for , . Then the above loss function can be rewritten as

| (32) |

The least squares estimate of is obtained by

| (33) |

Consistency of the estimator of the sample Fréchet mean was proved in [11]. The following theorem establishes consistency of the estimator of the regression coefficients; the proof is left to the appendix.

Theorem 1.

Under the conditions (C1)-(C5) in the Appendix, the coefficient is a consistent estimator with probability tending to as in the sense that

| (34) |

It follows that the functional slope coefficients can be estimated by where

| (35) |

for . The estimated mean structure for the -th curve is given by

| (36) |

The function is expected to approximate . However we omit the rigorous proof of the consistency of on the manifold due to some technique difficulties.

3.2.2 Estimation of Covariance Structure

From the section above, we obtain an estimate of the mean structure which is a continuous function on . In this section, we will focus on inference of the covariance structure . We assume that the tangent spaces for can be identified with via some smooth local basis of orthonormal vector fields along . We have mentioned above that the covariance structure can be related to another functional covariate , which is a -valued function. If we denote , then the correlation of different components in could be estimated via a cross-covariance function model, such as the convolved Gaussian process [6]. However, if there are observations , , then the size of a covariance matrix in a Gaussian process of , is while the size of a cross-covariance matrix in a convolved Gaussian process of is , which is computationally expensive. As a result, we will consider different dimensions independently. Specifically, we assume

| (37) |

where denotes a covariance kernel depending on hyper-parameter .

Given the value of , , denote , then for any new input , the conditional distribution of is

| (38) |

where and .

In Gaussian process regression, the hyper-parameters for the -th dimension, , can be estimated by maximizing the sum of marginal likelihood functions for each batch, i.e. maximizing

| (39) |

Under some regularity conditions, the estimator converges to [9]. With the estimated hyper-parameters, the estimated covariance structure of the -th coordinate for the -th batch is given by

| (40) |

where . Under some mild conditions, the above estimator of the covariance structure is information consistent, as the following theorem states.

Theorem 2.

Suppose the underlying true vector field, along can be represented using a local basis by real-valued functions , , and that each function has a Gaussian process prior with mean zero and bounded covariance function for any covariate , is continuous in , further assume the estimator converges to as almost surely, and the mean structure is known.

Then is information consistent to the true , which means an estimator that guarantees convergence to the true parameter value as the sample size grows, if the reproducing kernel Hilbert space norm is bounded and the expected regret term , where is the covariance matrix over , and is the variance of the measurement error .

The proof is given in Appendix. Note that the above theorem holds under the condition that is known, but in practice, we only have an estimate of . Furthermore, the term in the posterior distribution can not be observed directly, and it is approximated by which is the realization of the -th element of .

3.2.3 Update Mean Structure and Covariance Structure

After obtaining the estimated mean structure and covariance structure, we are able to make predictions with given new inputs. In order to improve the performance of our model, we introduce an algorithm which can update the estimated mean structure and covariance structure iteratively.

The loss function of the -th curve at time point with the estimated mean structure and estimated covariance structure is given as

| (41) |

where .

Given the above estimated covariance structure , the mean structure can be updated by a gradient descent algorithm where the gradient is

| (42) |

where is an adjoint with respect to the Riemannian inner product, which plays a similar role to parallel transport. In practice, a variational method for gradient descent [24] can be used as a substitute for gradient in Equation (42). Thereafter, we update the mean structure from to (here the superscript (1) means the -st iteration). The updated coefficients can be estimated by minimizing the loss function

| (43) |

which is a linear least squares problem.

Given the updated mean structure , we can re-calculate the covariance structure . The only difference from Section 3.2.2 is that the estimated mean structure is replaced by the updated mean structure. This two-way updating procedure is then repeated iteratively, stopping when the difference of the updated mean or covariance structure smaller than some given threshold. The algorithm of the updating procedure is specified in Algorithm 2.

4 Numerical Experiments

In this section we demonstrate the WGPFR model on two Riemannian manifolds: and Kendall’s shape space. As previously, we suppose there are curves on a Riemannian manifold; in what follows we will simulate data points on these random curves in different ways to form different scenarios of interpolation and extrapolation. For the extrapolation problem, we use our model to predict the last data points (given all preceding data points) to test the long-term extrapolating performance, and the last data points to test the short-term extrapolation performance. Moreover, since the Algorithm 2 is calculated in Euclidean space, we use a optimizer from SciPy which is powerful and widely-used for minimization problems.

4.1 Regression analysis on

4.1.1 Simulation scenario on

Suppose observation times are equally spaced in the interval with points, where and . To test the performance of our model with different number of observed curves and data points, we considered and batches and , and data points on each batch respectively, which is and . The simulated data are shown in Figure 4 and in the remainder of this subsection we explain how the data were generated.

As introduced previously, the WGPFR model consists of three parts: the intrinsic Fréchet mean function, the mean structure and the covariance structure. In order to simulate data, we first define the Fréchet mean function on by

| (44) |

where refers to a point on a .

Suppose there are two batches, and that we generate two mean structures based on batch-specific covariates and Fourier basis functions , defined by

| (45) |

where for and for refer to the covariates for two scalar batches respectively and refers to tangent vector-valued basis functions. Specifically, we define

| (46) |

where

| (47) |

and is a rotation matrix transferring the vector or

to , this ensures that the vectors are tangents at . For example, given a point and its tangent vector , the rotation matrix is defined by

We use the following covariance function

| (48) |

which is a combination of a squared exponential kernel (often used for stationary Gaussian processes) and a linear kernel (often used for non-stationary Gaussian process), this can be used for most of Gaussian process regression [37]. Since can be embedded into , for each dimension of tangent vector of covariance structure, the hyper-parameters are given as , and . Given these three Gaussian processes with zero mean and kernel 48, we can generate a tangent vector for the covariance structure. However, in practice, the tangent vector may not in the target tangent space because of numerical rounding. For example, if the ideal tangent vector is , our generated tangent vector might be , which will result in the exponential map and inverse exponential map incalculable (defined in Equation (53) and Equation (54) respectively). Therefore, the tangent vector must be projected into the correct tangent space, which is denoted as . The subscript 3 in refers to -dimensional. As a consequence, the formula to generate manifold-valued data for numerical experiments is written as

| (49) |

4.1.2 Model assessment on

In this section, we delete some generated data points on a randomly selected curve, say , in different ways to form training data sets and then calculate the predictions for these deleted data points (i.e. the test data). The performance of WGPFR model is assessed by comparing the root mean square error between predictions and their real data by Euclidean distance, since a sphere can be embedded in easily.

Specifically, we selected data points on uniformly at random as our test data set, and all remaining data points were used as the training data set, which is a typical interpolation problem. This scenario is denoted as Type 1 prediction. As to Type 2 prediction, the short-term forecasting, the last data points on are considered as test data set which is a typical extrapolation problem. Analogously to the short-term forecasting, we also test the performance of WGPFR for long-term forecasting, the Type 3 prediction. In Type 3 prediction, we choose the last data points as test data.

For comparison, in each scenario, the same training data set is used for several other models, such as functional linear regression on Riemannian manifolds (FLRM) and wrapped Gaussian process Fréchet mean regression (WGFmR). Specifically, FLRM is the mean structure (19) without covariance structure; WGPFmR consists of mean structure and covariance structure in which the mean structure is the sample Fréchet mean point for all training data. In addition, the WGPFmR model does not have the updating part. We compare the performance of these three models not only to show a significant improvement of predictive accuracy from concurrent model (consider mean structure and covariance structure simultaneously), but also from the intervatively updating algorithm 2. Moreover, we can use some models, such as Gaussian process functional regression without manifold structure to fit the data and make predictions. However, such models are meaningless since the inferences cannot be guaranteed on the right space which is manifold. Thus, it might be not suitable as a baseline and we only consider comparison with manifold structure.

We replicate each simulation study times. Thus, we test the performance of our model on thousands of test data points. The numerical results reported in Table 3 are the average of root-mean-square-error (RMSE) in every single replication. Using the embedding , it is reasonable to use the Euclidean norm between points as a distance function (chordal metric), which provides a method to calculate the RMSE.

In Type 1 prediction, Type 2 prediction and Type 3 prediction, WGPFR always provides the best prediction. The predictive accuracy of FLRM is better than that of WGPFmR in most scenarios, because FLRM only learns the mean structure of the training data which might be more useful for trend forecasting. In extrapolation, the long-term forecasting and short-term forecasting, since test data are distant from the training data, the output of GRP is usually inaccurate and then the mean structure mainly determines the accurate of prediction. Because we used the Fréchet mean point as the mean function of WGFmR, the prediction of WGFmR is very poor.

Comparing long-term and short-term forecasting, since the test data of the former is closer to the training data than that of the latter, the predictions of short-term forecasting are more accurate than that of long-term forecasting. In addition, from the table we can see that when the numbers of curves are fixed, the RMSE between prediction and real data decreases with the increasing number of points; when the number of points is fixed, the RMSE also decreases with the increasing number of curves.

| Type 1 | Type 2 | Type 3 | |

| 30 curves, 20 points | |||

| WGPFR | 0.0349 | 0.0226 | 0.0341 |

| FLRM | 0.2194 | 0.2951 | 0.3188 |

| WGFmR | 0.2755 | 0.3336 | 0.4779 |

| 30 curves, 60 points | |||

| WGPFR | 0.0209 | 0.0108 | 0.0248 |

| FLRM | 0.1520 | 0.2282 | 0.2311 |

| WGFmR | 0.1909 | 0.1608 | 0.2164 |

| 60 curves, 20 points | |||

| WGPFR | 0.0391 | 0.0273 | 0.0428 |

| FLRM | 0.2117 | 0.2509 | 0.2865 |

| WGFmR | 0.2827 | 0.3165 | 0.4717 |

| 60 curves, 60 points | |||

| WGPFR | 0.0215 | 0.0115 | 0.0126 |

| FLRM | 0.1601 | 0.2022 | 0.2385 |

| WGFmR | 0.2058 | 0.2199 | 0.3225 |

Since Gaussian process regression is a Bayesian model, we also compare the log-predictive likelihood of the covariance structure which provides the randomness of this model. [19] introduce a calculation of log pointwise predictive density in practice. Thus we compute this index by summing the log pointwise predictive density in each dimension and the result is shown in Table 4.

Moreover, discrete Fréchet distance is a metric to measure the similarity between two curves which considers the locations and orderings between predictions and real data. This method is widely used in GPS data, especially for comparing trajectories of animals, vehicles or other moving objects [13]. The results show that our model provides small predictive error under this metric. In other words, our model is effective even the measurement is different from its objective function. The numerical results are shown in Table 4.

We also show the RMSE of in Table 4. We can see that, when the number of curves is fixed, the RMSE between estimated and real decreases with the increasing number of data points; however, when the number of data points is fixed, the RMSE between estimated and real is almost the same (0.1779 compared to 0.1773 and 0.0212 compared to 0.0214).

| Type 1 | Type 2 | Type 3 | |

|---|---|---|---|

| Log predictive likelihood | |||

| 30 curves, 20 points | 38.4998 | 11.2927 | 35.9895 |

| 30 curves, 60 points | 42.1669 | 13.1486 | 46.1013 |

| 60 curves, 20 points | 40.0731 | 12.7354 | 35.9048 |

| 60 curves, 60 points | 45.3947 | 14.1749 | 52.6215 |

| Curve similarity | |||

| 30 curves, 20 points | 0.0657 | 0.0646 | 0.0498 |

| 30 curves, 60 points | 0.0306 | 0.0529 | 0.0336 |

| 60 curves, 20 points | 0.0520 | 0.0596 | 0.0535 |

| 60 curves, 60 points | 0.0247 | 0.0422 | 0.0390 |

| RMSE between and | |||

| 30 curves, 20 points | 0.1779 | 0.1774 | 0.1778 |

| 30 curves, 60 points | 0.0230 | 0.0212 | 0.0236 |

| 60 curves, 20 points | 0.1773 | 0.1770 | 0.1773 |

| 60 curves, 60 points | 0.0228 | 0.0214 | 0.0213 |

4.2 Regression Analysis on Kendall’s Shape Space

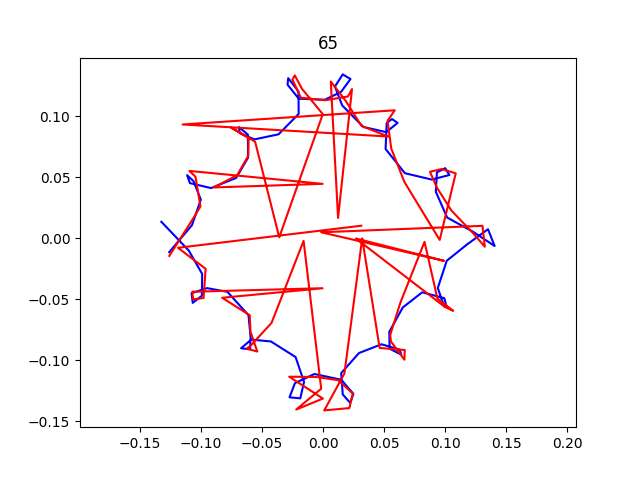

We firstly generated , for , which describes a varying shape. Specifically, the shape is a circle at the beginning and becomes a square in the end. The varying shapes are shown in Figure 5 and they are generated based on an elliptic equation after removing scale, rotation and translation.

We generated data corresponding to 80 landmarks, given by an element of (See the Appendix for more details about Kendall’s shape space) and the mean shape function is defined as

| (50) |

Then, we use the scalar covariates to generate tangent vectors from the mean function to mean structures and by

| (51) |

where refers to the number of elements in , , , , and refers to a ranking function of . For example, when , . Thus, we obtain curves in a batch and each curve is consist of points (curves).

We estimate the mean structure and covariance structure as discussed in Section 3.2.1 and Section 3.2.2. The training data consist of all data in batch one together with the first and the second curve in batch two; the difference between these types of prediction is the handling of the third curve in batch two. Specifically, for Type 1 prediction, time points are randomly selected to form the training data and the remaining points are used as the test data; with regard to Type 2 prediction, we add the first half into the training data and the remaining for the test data; as for the Type 3 prediction, the last time points are considered as the test data and the remaining are added into the training data. Each numerical experiment is repeated for times. In addition, to compare the performances among different regression models, we also tested the WGPFR, approximate wrapped Gaussian process functional regression model, functional linear regression model on Riemannian manifold (the mean structure) and wrapped Gaussian process Fréchet mean regression model.

The RMSE are given in Table 5. Similar to the simulation study on a , the WGPFR model achieves the best prediction results in both interpolation and extrapolation. Moreover, the time consuming of simulation study on is less than minutes while that of shape space is about one hour, since the later has higher dimension. Specifically, there are sets of estimated hyper-parameters for a wrapped Gaussian process on a while sets of estimated hyper-parameters for a wrapped Gaussian process on a shape space.

| Type 1 | Type 2 | Type 3 | |

| 10 curves, 20 points | |||

| WGPFR | 0.0189 | 0.0197 | 0.0202 |

| FLRM | 0.2702 | 0.2777 | 0.3425 |

| WGFmR | 0.1091 | 0.2316 | 0.2453 |

| 10 curves, 40 points | |||

| WGPFR | 0.0159 | 0.0183 | 0.0192 |

| FLRM | 0.2663 | 0.1206 | 0.1767 |

| WGFmR | 0.0914 | 0.1312 | 0.1481 |

| 20 curves, 20 points | |||

| WGPFR | 0.0178 | 0.0190 | 0.0200 |

| FLRM | 0.2648 | 0.2735 | 0.2882 |

| WGFmR | 0.0855 | 0.1698 | 0.1531 |

| 20 curves, 40 points | |||

| WGPFR | 0.0101 | 0.0148 | 0.0163 |

| FLRM | 0.2036 | 0.1165 | 0.1486 |

| WGFmR | 0.0653 | 0.1101 | 0.1383 |

Thus we also show a log pointwise predictive density and the results are in Table 6.

| Type 1 | Type 2 | Type 3 | |

|---|---|---|---|

| 10 curves, 20 points | 80.8193 | 23.3038 | 79.0855 |

| 10 curves, 40 points | 81.1700 | 25.9431 | 83.1195 |

| 20 curves, 20 points | 75.9353 | 22.8560 | 78.5120 |

| 20 curves, 40 points | 77.1970 | 24.7091 | 82.4506 |

4.3 Flight Trajectory Data

In this section, we test our model on a real data set. The earth is roughly a sphere and can be modelled as a copy of . Certain data sets, for example hurricane trajectories, are therefore considered as a manifold-valued random curve [39]. Here we study flight trajectory data, shown in Figure 7 in which the red curves represent flights from Shanghai to London by British Airways and the black curves represent flight trajectories of Eastern China Airlines between the same destinations (the data were downloaded from www.variflight.com on 2020). Therefore, these sets of trajectories can naturally be split into two batches and the model with common mean structure can be used.

The original data includes time, height, speed, longitude and latitude of each flight at discrete time points. We select the position of each airplane as the response variable, which can be transformed onto using longitude and latitude; in addition, the company and time are regarded as non-functional and functional covariates respectively. Before we train the model, it is necessary to pre-process the raw data. In this step, we firstly set the takeoff time of each flight to be and the landing time to be , excluding taxi time. trajectories of each company were selected in which the number of observed data points in every flight is greater than . In order to obtain smooth manifold-valued curves from the data, some kernel smoothing functions with small bandwidth were applied to the longitude and latitude of the training data. For computational reasons, we choose every -th data points of each smoothed trajectory as training data ( data points in each curve totally).

To model the mean structure for flight trajectory data, we use company as the batch-specific covariate. In practice, for Eastern China Airlines, the covariate is defined as ; and for British Airways, the covariate is defined as . Estimation of the mean structure and covariance structure were described in Section 3.2.1 and 3.2.2, respectively. The parameters of the basis functions in mean structure and hyper-parameters in the covariance structure were be updated iteratively, as described in Section 3.2.3. In addition, the initial values of hyper-parameters in convariance structure are drawn from a standard Gaussian distribution independently. Afterwards, the predictions of the WGPFR model has been compared to FLRM and WGFmR for the same flight trajectory data.

The overall prediction of WGPFR model should be better than the other models, since the FLRM model only learns from mean structure and ignores the dependent error. In addition, the mean function of WGFmR is a point on and the prediction should have significant error when data are far away from the Fréchet mean. This is verified by numerical results in Table 7.

The training data and test data are generated in the following way. We randomly selected another flight trajectory of British Airways which had time observations. After the same pre-processing steps, data points were added into the training data and the remaining data points are used as the test data. In order to test the performance of interpolation and extrapolation for the real data set, we form the Type 1 prediction by randomly choosing these data points and form the forecasting by selecting the first points in the trajectory as training data. In addition, we test the capability for short-term forecasting and long-term forecasting by supposing different test data. Specifically, the -th to -th points and the -th to final data points of the flight trajectory can be selected respectively to form two scenarios of prediction which are denoted as short-term and long-term, respectively.

The predictive accuracy of WGPFR compared to FLR and WGFmR on the flight trajectory data is show in Table 7, which shows the mean of root-mean-squared-error of repeated simulations to reduce the effects of random seeds. We can see that the WGPFR model outperforms FLR and WGFmR for interpolation. For the short-term prediction, the RMSE is much smaller than that of the long-term prediction. As mentioned previously, the reason is that GPR provides little extra information when the test data are distant from the training data. The prediction of WGFmR is less accurate since the mean structure (Fréchet mean) is only a manifold-valued point and the test data set are not close to that point.

| Type 1 | Short-term | Long-term | |

|---|---|---|---|

| WGPFR | 0.0048 | 0.0096 | 0.0233 |

| FLRM | 0.0082 | 0.0131 | 0.0471 |

| WGFmR | 0.0187 | 0.0747 | 0.3569 |

5 Conclusion and discussion

In this paper we studied a novel functional regression model within a probabilistic framework for nonlinear manifold-valued response variables and real-valued covariates. Specifically, a wrapped Gaussian process functional regression (WGPFR) model is proposed to model the regression relationship into mean structure and covariance structure. For the mean structure, we proposed a functional version of geodesic regression model for the manifold-valued functional response and scalar covariates, where the inference of the mean structure is based on the intrinsic Fréchet mean function and the traditional functional linear regression model is used on the tangent vectors of the manifold-valued data at the intrinsic Fréchet mean function to estimate the scalar covariates in an ambient Euclidean space. A wrapped Gaussian process prior is proposed to model the covariance structure in the tangent space at the mean function of each batch, where inference is conducted in a Bayesian way.Furthermore, an iterative approach based on a gradient descent algorithm or variational gradient descent algorithm are also applied to update mean structure and covariance structure efficiently. The mean structure captures the relation between functional dependent variable and batch-based scalar independent variable. Meanwhile, the covariance structure models the nonlinear concurrent relationship between functional output and multiple functional covariates. This idea in WGPFR model avoids the curse of dimensionality by using multiple functional covariates as input for functional regression, which promotes the flexibility of this method.

Future research endeavors could encompass various extensions and enhancements to the proposed WGPFR model. Initially, the WGPFR model, as delineated in this paper, is predicated on the assumption of Riemannian manifolds with infinite injectivity radius. The potential exists to adapt this model to other manifolds that may not conform to this assumption.

Secondly, the current paper presumes the independence of Gaussian processes across different dimensions. Future investigations could explore methodologies as suggested by [1] and [40], where Gaussian processes exhibit dependence. However, such an approach would necessitate the development of additional computational efficiencies.

Thirdly, the proposed model could be applied to other intriguing real data sets. For instance, the performance of our model could be evaluated on medical imaging data. Repeated measurements of functional magnetic resonance imaging (fMRI), for example, could be construed as data residing in a specific manifold. Furthermore, the recurrently scanned shapes of the corpus callosum from diverse patients could be modeled to predict the likelihood of Alzheimer’s disease based on certain relative factors [4]. In addition, consideration could be given to non-Gaussian data as [41] and alternative methods to define mean and covariance structures.

The convergence of parameter estimation is substantiated in Theorem 1 and 2. However, the assurance of convergence for the iterative optimization Algorithm 2 remains an open question for future exploration. [20] demonstrated that, given suitable initial parameters, the estimation error of conditional maximization problems is confined within the bounds of statistical error and optimization error with a high degree of probability, potentially laying the groundwork for convergence. Nonetheless, it is crucial to acknowledge the distinction between graphical spaces and Riemannian manifolds. The approach proposed by [42], which establishes that the convergence rate of GPR is optimal under certain preconditions and is upper bounded based on the smoothness of the correlation function in Euclidean space, could potentially be extrapolated to Riemannian manifolds to facilitate the convergence of covariance structure estimation. This proposition, however, requires rigorous validation.

Acknowledgments

JQS’s work is supported by funds of National Key R&D Program of China (2023YFA1011400), the National Natural Science Foundation of China (No. 12271239) and Shenzhen Fundamental Research Program (No. 20220111). Chao Liu is supported in part by China Postdoctoral Science Foundation, No.2021M691443, No.2021TQ0141 and SUSTC Presidential Postdoctoral Fellowship.

Appendix

Comparison of A Model with or without A Manifold Structure

In Kendall’s shape space, the prediction of a regression model without a manifold structure loses the shape framework while a model with manifold structure still keep it.

Examples of Riemannian Manifolds

Sphere : The two-dimensional sphere is a simple example of a Riemannian manifold. It is easy to show that the tangent vectors at a point are the vectors which are orthogonal to . A Riemannian metric is inherited from the ambient Euclidean metric on , and it exactly the Euclidean inner product between tangent vectors. It is easy to show, using this metric, that the shortest curve passing between two points is a the great circle, which is contained within the intersection of and a plane containing , and the origin. The geodesic is unique exactly when one such plane exists, or in other words, when and are not antipodal. The formula of geodesic distance is given by

| (52) |

The formula of exponential map is

| (53) |

The logarithm map is defined for all pairs which are not antipodal:

| (54) |

These concepts can be to the hyper-sphere , where .

Kendall’s Shape Space: As a well-developed probability space, Kendall’s shape space provides a very useful space for illustrate new theory and applications in statistics and machine learning. In 2-dimensional shape analysis [15], a landmark is a point that can be unambiguously identified on all objects in a population. Landmarking is the process of identifying and labelling a set of landmarks on each object in an analysis. This process generates a dimensional (real) vector, which can also be represented in a -dimensional complex space . The shape of an object is the equivalence class of the object when translation, rotation and scaling of the object are removed. Translation is removed by moving the centroid of each object to be origin point. The landmark vectors then lie in a complex subspace , which is a copy of . Two configurations in are equivalent if they are related by scaling and rotation. The set of equivalence classes can be show to be equal to complex projective space and is known as Kendall’s shape space [23]. The following formulae of exp map, log map on Kendall’s shape space are from [46].

Analogously to the sphere, we write down formulae which specify the Riemannian geometry on Kendall’s shape space. Suppose and are two data points in a general , the geodesic distance can be computed by

| (55) |

Note that this expression is invariant under multiplication of and by arbitrary non-zero complex constants. The inverse exponential map is given by

| (56) |

The exponential map is defined by

| (57) |

The tangent space of a point has the same dimensionality as the Kendall’s shape space.

Proofs

In order to prove the theorems in Section 3, we need the following assumptions for the Riemannian manifold and the manifold-valued data .

-

•

(C1) is a closed Riemannian submanifold of a Euclidean space , with geodesic distance induced by the Euclidean metric.

-

•

(C2) Trajectories are continuous for almost surely.

-

•

(C3) For all , the Fréchet mean and sample Fréchet mean exist and are unique almost surely.

-

•

(C4) Almost surely, trajectories lie in a compact set for , where is an open ball centered at with radius .

-

•

(C5) For any ,

Proof of Theorem 1

Proof. Recall the notations and the multiple functional regression model , denote the vector of the realizations at , , of and as , and , respectively. Then we have

For the first term, as are assumed to be independent to the covariate for , so that , . Therefore as .

For the second term, note that

where the last term has been proofed in [11] under the above conditions (C1)-(C5). So the second term also has as .

Proof of Theorem 2

Before proofing the Theorem 2, we first introduce some notation to simplify the proof. Recall that , for simplicity, we omit the subscript and denote the observation at the -th batch and the -th dimension as , and denote the corresponding covariates with which are independently drawn from a distribution . Denote the observed data .

Let be the true underlying function. Assume that the underlying process where all the subscript are omitted. Denote

then is the Bayesian predictive distribution of based on the GPR model. Note that depends on since the hyperparameters of is estimated from the data.

Proof. It suffices to show

Note that

It suffices to give an upper bound for the term .

Let be the Reproducing Kernel Hilbert Space (RKHS) associated with the covariance function , and the span of , i.e. , for any . Assume the true underlying function , then can be expressed as

where and By the properties of , and , where is the covariance matrix over .

Let and be any two measures on , then it yields by Fenchel-Legendre duality relationship that, for any functional on ,

Let for any in and , let be the measure induced by , hence its finite dimensional distribution at is , and

where is defined in the same way as but with being replaced by its estimator .

Let be the posterior distribution of on which has a prior distribution and normal likelihood , where

and is a constant to be specified. In other words, we assume a model with and , and is a set of observations at Thus, is a probability measure on . Therefore, by Gaussian process regression, the posterior of is

where .

It follows that

On the other hand,

By Taylor’s expansion, expanding to the second order at yields

where for some . For Gaussian probability density function, it follows that

so that

Therefore,

.

Since the covariance function is continuous in and we have as . Therefore there exist some positive constants and such that

Thus

for any . Taking infimum over and applying Representer Theorem (see Lemma 2 in [36]) we obtain

for all .

Therefore, we obtain that

as .

References

- [1] Mauricio A Alvarez, Lorenzo Rosasco and Neil D Lawrence “Kernels for vector-valued functions: A review” In Foundations and Trends® in Machine Learning 4.3 Now Publishers, Inc., 2012, pp. 195–266

- [2] Iskander Azangulov, Andrei Smolensky, Alexander Terenin and Viacheslav Borovitskiy “Stationary kernels and Gaussian processes on Lie groups and their homogeneous spaces i: the compact case” In arXiv preprint arXiv:2208.14960, 2022

- [3] Iskander Azangulov, Andrei Smolensky, Alexander Terenin and Viacheslav Borovitskiy “Stationary Kernels and Gaussian Processes on Lie Groups and their Homogeneous Spaces II: non-compact symmetric spaces” In arXiv preprint arXiv:2301.13088, 2023

- [4] Monami Banerjee et al. “A Nonlinear Regression Technique for Manifold Valued Data With Applications to Medical Image Analysis” In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016

- [5] Viacheslav Borovitskiy, Alexander Terenin and Peter Mostowsky “Matérn Gaussian processes on Riemannian manifolds” In Advances in Neural Information Processing Systems 33, 2020, pp. 12426–12437

- [6] Phillip Boyle and Marcus Frean “Dependent gaussian processes” In Advances in neural information processing systems 17, 2004

- [7] Phillip Boyle and Marcus Frean “Dependent gaussian processes” In Advances in neural information processing systems, 2005, pp. 217–224

- [8] Michael M. Bronstein et al. “Geometric Deep Learning: Going beyond Euclidean data” In IEEE Signal Processing Magazine 34, 2017, pp. 18–42

- [9] Taeryon Choi, Jian Q Shi and Bo Wang “A Gaussian process regression approach to a single-index model” In Journal of Nonparametric Statistics 23.1 Taylor & Francis, 2011, pp. 21–36

- [10] Emil Cornea, Hongtu Zhu, Peter Kim and Joseph G Ibrahim “Regression models on Riemannian symmetric spaces” In Journal of the Royal Statistical Society. Series B, Statistical methodology 79.2 NIH Public Access, 2017, pp. 463

- [11] Xiongtao Dai and Hans-Georg Müller “Principal component analysis for functional data on riemannian manifolds and spheres” In The Annals of Statistics 46.6B Institute of Mathematical Statistics, 2018, pp. 3334–3361

- [12] Abhirup Datta, Sudipto Banerjee, Andrew O Finley and Alan E Gelfand “Hierarchical nearest-neighbor Gaussian process models for large geostatistical datasets” In Journal of the American Statistical Association 111.514 Taylor & Francis, 2016, pp. 800–812

- [13] Thomas Devogele, Laurent Etienne, Maxence Esnault and Florian Lardy “Optimized discrete fréchet distance between trajectories” In Proceedings of the 6th ACM SIGSPATIAL Workshop on Analytics for Big Geospatial Data, 2017, pp. 11–19

- [14] MP Do Carmo “Mathematics: Theory & Applications” In Riemannian Geometry Birkhäuser Boston, 1992, pp. xiv+–300

- [15] Ian L Dryden and Kanti V Mardia “Statistical shape analysis: with applications in R” John Wiley & Sons, 2016

- [16] Paromita Dubey and Hans-Georg Müller “Modeling time-varying random objects and dynamic networks” In Journal of the American Statistical Association Taylor & Francis, 2021, pp. 1–16

- [17] P Thomas Fletcher “Geodesic regression and the theory of least squares on Riemannian manifolds” In International journal of computer vision 105.2 Springer, 2013, pp. 171–185

- [18] P Thomas Fletcher, Conglin Lu, Stephen M Pizer and Sarang Joshi “Principal geodesic analysis for the study of nonlinear statistics of shape” In IEEE transactions on medical imaging 23.8 IEEE, 2004, pp. 995–1005

- [19] Andrew Gelman, Jessica Hwang and Aki Vehtari “Understanding predictive information criteria for Bayesian models” In Statistics and computing 24.6 Springer, 2014, pp. 997–1016

- [20] Botao Hao, Will Wei Sun, Yufeng Liu and Guang Cheng “Simultaneous clustering and estimation of heterogeneous graphical models” In Journal of Machine Learning Research 18.217, 2018, pp. 1–58

- [21] Jacob Hinkle, Prasanna Muralidharan, P Thomas Fletcher and Sarang Joshi “Polynomial regression on Riemannian manifolds” In European Conference on Computer Vision, 2012, pp. 1–14 Springer

- [22] Michael Hutchinson et al. “Vector-valued Gaussian processes on Riemannian manifolds via gauge independent projected kernels” In Advances in Neural Information Processing Systems 34, 2021, pp. 17160–17169

- [23] David G Kendall “Shape manifolds, procrustean metrics, and complex projective spaces” In Bulletin of the London mathematical society 16.2 Wiley Online Library, 1984, pp. 81–121

- [24] Hyunwoo J Kim et al. “Multivariate general linear models (MGLM) on Riemannian manifolds with applications to statistical analysis of diffusion weighted images” In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2014, pp. 2705–2712

- [25] Eardi Lila, John AD Aston and Laura M Sangalli “Smooth principal component analysis over two-dimensional manifolds with an application to neuroimaging” In The Annals of Applied Statistics 10.4 Institute of Mathematical Statistics, 2016, pp. 1854–1879

- [26] Zhenhua Lin and Fang Yao “Functional regression on the manifold with contamination” In Biometrika 108.1 Oxford University Press, 2021, pp. 167–181

- [27] Anton Mallasto and Aasa Feragen “Wrapped Gaussian process regression on Riemannian manifolds” In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 5580–5588

- [28] Esfandiar Nava-Yazdani, Hans-Christian Hege, Timothy John Sullivan and Christoph Tycowicz “Geodesic analysis in Kendall’s shape space with epidemiological applications” In Journal of Mathematical Imaging and Vision Springer, 2020, pp. 1–11

- [29] Xavier Pennec “Probabilities and statistics on Riemannian manifolds: Basic tools for geometric measurements.” In NSIP 3, 1999, pp. 194–198 Citeseer

- [30] Alexander Petersen and Hans-Georg Müller “Fréchet regression for random objects with Euclidean predictors” In The Annals of Statistics 47.2 Institute of Mathematical Statistics, 2019, pp. 691–719

- [31] Davide Pigoli, Alessandra Menafoglio and Piercesare Secchi “Kriging prediction for manifold-valued random fields” In Journal of Multivariate Analysis 145 Elsevier, 2016, pp. 117–131

- [32] William H Press, Saul A Teukolsky, William T Vetterling and Brian P Flannery “Numerical recipes 3rd edition: The art of scientific computing” Cambridge university press, 2007

- [33] James O Ramsay “Functional data analysis” In Encyclopedia of Statistical Sciences 4 Wiley Online Library, 2004

- [34] Carl Edward Rasmussen “Gaussian processes in machine learning” In Summer school on machine learning, 2003, pp. 63–71 Springer

- [35] Sam T Roweis and Lawrence K Saul “Nonlinear dimensionality reduction by locally linear embedding” In science 290.5500 American Association for the Advancement of Science, 2000, pp. 2323–2326

- [36] Matthias W Seeger, Sham M Kakade and Dean P Foster “Information consistency of nonparametric Gaussian process methods” In IEEE Transactions on Information Theory 54.5 IEEE, 2008, pp. 2376–2382

- [37] J.Q. Shi, B. Wang, R. Murray-Smith and D.M. Titterington “Gaussian process functional regression modeling for batch data” In Biometrics 63, 2007, pp. 714–723

- [38] Jian Qing Shi and Taeryon Choi “Gaussian process regression analysis for functional data” CRC Press, 2011

- [39] Jingyong Su, Sebastian Kurtek, Eric Klassen and Anuj Srivastava “Statistical analysis of trajectories on Riemannian manifolds: bird migration, hurricane tracking and video surveillance” In The Annals of Applied Statistics 8.1 Institute of Mathematical Statistics, 2014, pp. 530–552

- [40] Mark Van der Wilk et al. “A framework for interdomain and multioutput Gaussian processes” In arXiv preprint arXiv:2003.01115, 2020

- [41] Bo Wang and Jian Qing Shi “Generalized Gaussian process regression model for non-Gaussian functional data” In Journal of the American Statistical Association 109.507 Taylor & Francis, 2014, pp. 1123–1133

- [42] Wenjia Wang and Bing-Yi Jing “Gaussian process regression: Optimality, robustness, and relationship with kernel ridge regression” In Journal of Machine Learning Research 23.193, 2022, pp. 1–67

- [43] Zhanfeng Wang, Maengseok Noh, Youngjo Lee and Jian Qing Shi “A general robust t-process regression model” In Computational Statistics & Data Analysis 154 Elsevier, 2021, pp. 107093

- [44] Christopher KI Williams and Carl Edward Rasmussen “Gaussian processes for machine learning” MIT press Cambridge, MA, 2006

- [45] Fang Yao, Hans-Georg Müller and Jane-Ling Wang “Functional data analysis for sparse longitudinal data” In Journal of the American statistical association 100.470 Taylor & Francis, 2005, pp. 577–590

- [46] Miaomiao Zhang and Tom Fletcher “Probabilistic principal geodesic analysis” In Advances in neural information processing systems 26, 2013