Workforce pDEI: Productivity Coupled with DEI

Abstract

Ranking in human-centered tasks, such as evaluation and hiring, is prevalent across industries and carries significant weight in those domains. Consequently, decision-makers are taking proactive measures to promote diversity, underscore equity, and advance inclusion. Their commitment to these ideals stems from the following convictions: (i) diversity encompasses a broad spectrum of differences; (ii) equity involves the assurance of equitable opportunities; and (iii) inclusion revolves around cultivating a sense of value and impartiality while empowering individuals. Data-driven AI tools have been used for screening and ranking processes. However, there is growing concern that pre-existing biases in databases may be exacerbated, particularly with imbalanced datasets or black-box schemas. In this research, we propose a model-driven recruitment decision support tool that addresses fairness together with equity in the screening phase. We introduce the term “pDEI” to represent the output-input oriented production efficiency adjusted by socioeconomic disparity. Taking into account various aspects of interpreting socioeconomic disparity, our goals are (i) maximizing the relative efficiency of underrepresented groups and (ii) understanding how socioeconomic disparity affects the cultivation of a DEI-positive workplace.

Introduction

In recent years, there has been a noticeable increase in discussions advocating for greater diversity, such as “Finance Functions Need More Women Leaders” (Oberauer, Grabowski, and Roos 2022) and “Why We Need More Faculty of Color in Higher Education” (Centeno and Nation 2022). These discussions have brought attention to underrepresented groups in the workforce, sparking further conversations about the root causes of the issue, the best timing for action, and effective approaches for addressing it.

The compelling necessity for addressing diversity issues lies in the potential organizational benefits that can arise from fostering DEI within the workforce. For example, diversified professional interactions can better serve a wider group of customers and increase sales and satisfaction rates (Wingar 2019; Ramón, Tran, and Hunt 2023). Integrating DEI into the workforce can enhance crisis communications, with a recommendation for the leadership team to exhibit cultural competence in communicating with diverse groups effectively (Zheng 2020).

As hiring with DEI becomes more crucial than ever, there is a pressing need for operational guidance on systematic recruitment to define and prioritize competitive applicants from underrepresented groups. Incorporating DEI into the hiring process may begin with the screening process, as it serves as the initial opportunity to address diversity, equity, and inclusion within the workforce.

Problem Description

Demographic factors like ethnicity, gender, and age can significantly impact personal experiences and professional interactions across various aspects of life. It’s important to note that the “entrance barrier” related to underrepresented groups can be context-dependent (Harris et al. 2015; Fouad and Santana 2017; Yee 2021), varying not only between group stereotypes and individual stereotypes but also across different industries where the definition of “underrepresented groups” may differ. The most “overrepresented group” can often dominate the representation rate in various industries; nevertheless, there may be instances where multiple groups can be labeled as “overrepresented” in certain niche industries while being considered “underrepresented” in more mainstream sectors.

Consider a scenario where an organization is screening applicants based on their screening evaluations, rated on a scale of 1-7, with 7 being the highest. Suppose that there are two applicants, A (evaluation score 7) and B (evaluation score 6), competing for a single position. Lacking further context, applicant A may be favored as the superior candidate based on the higher evaluation score. Nevertheless, we should consider the scenario where applicant A belongs to an Overrepresented Group while applicant B is from an Underrepresented Group within the specific industry. At that point, choosing the more suitable candidate becomes complicated, especially when considering related DEI values within the job description requirements.

Assuming that Figure 1 displays the top 3 candidates from each demographic group with their performance plotted on the same axis, it is evident that the individual marked with the star should be considered the top candidate across all applicant groups when DEI is not taken into consideration.

Assume that the job screening process aims to choose 4 candidates for the next-round interview. Without taking DEI into account, the conventional approach would involve selecting individuals from the highlighted region characterized by higher evaluation scores in Figure 2(a). Nevertheless, this selection scheme could potentially conflict with the goals of DEI, especially when groups A and B represent the most socioeconomically disadvantaged segments of the population. This conflict manifests in two ways: (1) the potential violation of the “4/5 rule” (four-fifths rule) as outlined in federal guidelines (Equal Employment Opportunity Commission 1978), and (2) a concern that, in the long term, equity may prove insufficient in addressing underlying socioeconomic inequalities.
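To make the first concern concrete, the following minimal sketch checks a selection outcome against the four-fifths rule of the 1978 Uniform Guidelines, under which a group's selection rate below 80% of the highest group's rate is generally treated as evidence of adverse impact. The group labels, pool sizes, and selection outcomes are hypothetical and are not taken from Figures 1 or 2; the function names are illustrative only.

```python
def selection_rates(applicants, selected):
    """Selection rate per group: selected count divided by applicant count."""
    return {g: selected.get(g, 0) / n for g, n in applicants.items() if n > 0}


def four_fifths_check(applicants, selected, threshold=0.8):
    """Flag groups whose selection rate is below `threshold` times the highest rate."""
    rates = selection_rates(applicants, selected)
    top = max(rates.values())
    if top == 0:
        # Nobody was selected, so there is no reference rate to compare against.
        return {g: False for g in rates}
    return {g: (r / top) < threshold for g, r in rates.items()}


# Hypothetical pool: 3 applicants in each of 4 groups, 4 interview slots.
applicants = {"A": 3, "B": 3, "C": 3, "D": 3}
top_scores_only = {"C": 2, "D": 2}                   # hypothetical score-only selection
one_per_group = {"A": 1, "B": 1, "C": 1, "D": 1}     # hypothetical equal-count selection

print(four_fifths_check(applicants, top_scores_only))
print(four_fifths_check(applicants, one_per_group))
```

In this hypothetical pool, the score-only selection flags groups A and B, while selecting one candidate per group flags none. Passing the check says nothing about whether the outcome is equitable, which is the second concern raised above.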

One might perceive an approach akin to equity, as illustrated in Figure 2(b), as a potential solution to control outcome disparities (Raghavan et al. 2020). Nonetheless, it is imperative to thoroughly assess the extent to which this selection process can be justified and whether it would be regarded as equitable by applicants from other groups who have higher evaluation scores but are not selected.

In addressing this question, it becomes paramount to provide a comprehensive justification and elucidation of the notions of Overrepresented and Underrepresented with regard to the factors influencing socioeconomic parity levels.

As an illustration, consider the scenario where we have two distinct sets of ground truth labels representing socioeconomic disparity for each group, as depicted in Figure 3. These labels are arranged along a spectrum, representing a continuum from unfairness to fairness, with the interpretation being from (the most) underrepresented to (the most) overrepresented. Suppose we apply the identical selection scheme (as illustrated in Figure 2(b)), wherein the highlighted region signifies the same set of chosen candidates. This selection approach appears more reasonable when considering the disparity as represented in case 1 (Figure 3(a)) than in case 2 (Figure 3(b)). In case 2, the candidate chosen from group A may be perceived as having a comparative advantage over group B. However, it is noteworthy that the selected candidate from group A has an even lower evaluation score than one candidate from group B who was not selected, making the selected group A candidate less competitive than group B’s second-highest (unselected) candidate.

Expected Outcomes and Benefits

To address the problems described above, we propose a DEI-embedded recruitment model that provides an optimal balance between promoting DEI and ensuring overall competency. In this model, we consider the output-input oriented efficiency (productivity), captured in the fraction

$$\text{pDEI} = \frac{\text{Output (candidate evaluation)}}{\text{Input (socioeconomic disparity measure)}} \tag{1}$$

to adjust candidates’ competency. With the proposed model, one can make more informed decisions under given requirements of competency and DEI.

Our numerical test demonstrates that the perceived conventional practice of selecting an equal number (or proportion) of applicants might not be deemed sufficiently equitable, which, in turn, depends on the interplay between parity measurements and evaluation scores.

From a broader perspective, such knowledge can be transferred to other domains of AI-driven decision-making. This involves emphasizing the significance of understanding the underlying invariant sensitive features, which can offer valuable guidance when applying the model to other domains. It is also crucial to recognize that these models should not solely focus on addressing imbalanced datasets. In addition to considering the “focal loss”, the criteria for evaluating the overall model performance across various groups should be reweighted once more to account for a closer examination of socioeconomic disparities.

Methodology and Literature Review

Bias and Fairness

In the context of human-centered decision-making, bias is often associated with human bias (National Museum of African American History & Culture 2020) and can potentially manifest as algorithmic bias in AI tools (Dwork and Ilvento 2018b; Kordzadeh and Ghasemaghaei 2022).

The goal of mitigating bias arises from the critical need to ensure that models do not discriminate against groups based on their protected attributes, such as demographic characteristics. However, when considering the DEI requirements, we do require that our model compensate the underrepresented groups; thus, we focus on fairness under equitable considerations.

In this context, we introduce fairness as a gauge of demographic groups’ relative socioeconomic status. Utilizing a fairness metric, we discern individuals belonging to groups characterized by lower fairness scores, consequently highlighting pronounced disparities in socioeconomic inequality. Thus, within the context of Equation (1), for a given arbitrary constant in the numerator, a reduction in the denominator reflecting the disparity would equitably enhance the value of pDEI, taking into account the presence of socioeconomic inequalities.

Parity and Fairness Metrics

Without considering TPR and FPR, the derivations of the parity measurements briefly cover two concepts: Statistical Parity (Zemel et al. 2013; Dwork and Ilvento 2018a) and Disparate Impact (Barocas and Selbst 2016), both of which emphasize equal rates of favorable outcomes across overrepresented and underrepresented groups. When introducing TPR and FPR, the goal shifts to emphasize equality across overrepresented and underrepresented groups in the following ways: (1) Equal true positive rates in TPR Parity, (2) Equal false positive rates in FPR Parity, and (3) Equal true positive and false positive rates in Average Absolute Odds.

An extensive list of fairness metrics can be found in (Chen and Hooker 2021, 2022), which also explores mathematical properties and related optimizations of these metrics.

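| Metric | Formulas |
|---|---|
| Statistical Parity | $P(Y = 1 \mid G = g) - P(Y = 1 \mid G \neq g)$ |
| Disparate Impact | $\dfrac{P(Y = 1 \mid G = g)}{P(Y = 1 \mid G \neq g)}$ |
| TPR Parity | $\mathrm{TPR}_{G = g} - \mathrm{TPR}_{G \neq g}$ |
| FPR Parity | $\mathrm{FPR}_{G = g} - \mathrm{FPR}_{G \neq g}$ |
| Average Absolute Odds | $\tfrac{1}{2}\left(\lvert \mathrm{FPR}_{G = g} - \mathrm{FPR}_{G \neq g} \rvert + \lvert \mathrm{TPR}_{G = g} - \mathrm{TPR}_{G \neq g} \rvert\right)$ |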

In this research, we use Disparate Impact (DI, as referenced later), the ratio of conditional probabilities, because it yields nonnegative results:

$$\mathrm{DI}(g) = \frac{P(Y = 1 \mid G = g)}{P(Y = 1 \mid G \neq g)} \tag{2}$$

where $Y$ represents an outcome variable and $G$ denotes the examined group as defined by the protected features.
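As a rough illustration of how the five parity measurements relate to one another, the sketch below computes them from per-group confusion-matrix counts. It assumes the common textbook definitions (differences of rates for the parity measures, a ratio for DI, and the mean absolute TPR/FPR gap for Average Absolute Odds); the class name, function name, and counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class GroupCounts:
    """Confusion-matrix counts for one group (favorable outcome = positive)."""
    tp: int
    fp: int
    tn: int
    fn: int

    @property
    def positive_rate(self):
        # Share of the group receiving the favorable outcome.
        return (self.tp + self.fp) / (self.tp + self.fp + self.tn + self.fn)

    @property
    def tpr(self):
        return self.tp / (self.tp + self.fn)

    @property
    def fpr(self):
        return self.fp / (self.fp + self.tn)


def fairness_metrics(group, rest):
    """Parity measurements of `group` relative to `rest` (assumed definitions)."""
    tpr_gap = group.tpr - rest.tpr
    fpr_gap = group.fpr - rest.fpr
    return {
        "statistical_parity": group.positive_rate - rest.positive_rate,
        "disparate_impact": group.positive_rate / rest.positive_rate,
        "tpr_parity": tpr_gap,
        "fpr_parity": fpr_gap,
        "average_absolute_odds": 0.5 * (abs(fpr_gap) + abs(tpr_gap)),
    }


# Hypothetical counts for an examined group and the remaining applicants.
examined = GroupCounts(tp=20, fp=10, tn=60, fn=10)
remaining = GroupCounts(tp=40, fp=10, tn=40, fn=10)
for name, value in fairness_metrics(examined, remaining).items():
    print(f"{name}: {value:.3f}")
```

Only the disparate impact entry is a ratio, so it stays nonnegative and equals 1 at parity, whereas the difference-based measures are centered at 0.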

DEA Models

Data envelopment analysis (DEA) models are widely employed for assessing a flexible “fair” form of efficiency in situations involving multiple inputs and multiple outputs (Cook and Seiford 2009; Khodabakhshi and Aryavash 2014; Zhu et al. 2021; Kremantzis, Beullens, and Klein 2022).

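DEA models originated from the CRS model, in which Charnes, Cooper, and Rhodes (1978) proposed the economic term of efficiency for a given decision-making unit with input $x$ and output $y$ relative to the industry-level benchmark, to match the engineering efficiency of $0 \le \theta \le 1$. Given a set of decision-making units (DMUs) $\{1, \dots, n\}$ and treating the industry benchmark as one of the units from the given group, we can measure the relative efficiency $\theta_o$ for DMU $o$ with input $x_o$ and output $y_o$:

$$\theta_o = \frac{y_o / x_o}{\max_{j \in \{1, \dots, n\}} \, y_j / x_j} \tag{3}$$

Switching to the scenario with multiple inputs and outputs, let us denote a given DMU $j$ with the input vector $x_j = (x_{1j}, \dots, x_{mj})$ and output vector $y_j = (y_{1j}, \dots, y_{sj})$. Model (3) can then be written in the following linear form:

$$\begin{aligned} \theta_o = \max_{u,\, v} \quad & \sum_{r=1}^{s} u_r\, y_{ro} \\ \text{s.t.} \quad & \sum_{i=1}^{m} v_i\, x_{io} = 1, \\ & \sum_{r=1}^{s} u_r\, y_{rj} - \sum_{i=1}^{m} v_i\, x_{ij} \le 0, \quad j = 1, \dots, n, \\ & u_r \ge 0, \; v_i \ge 0 \end{aligned} \tag{4}$$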

where the pivotal idea of utilizing the weights assigned to a given DMU to gauge the efficiency of other DMUs holds significant promise for enhancing the efficiency of the specified unit. This interpretation aligns with the principles of DEI, as it aims to enhance the scores of the designated unit while assuming that other units can achieve at least the same level of efficiency using identical weights.
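As a sanity check on the linear form, the single-input, single-output case can be worked by hand. The derivation below assumes the standard CCR multiplier formulation (maximize the weighted output of the evaluated unit subject to a normalized weighted input and to no unit exceeding efficiency 1); the symbols $u$, $v$, $x_j$, $y_j$, and $\theta_o$ are notation chosen here for illustration, and all inputs and outputs are assumed positive.

```latex
\begin{align*}
\theta_o &= \max_{u,\,v \ge 0} \; u\,y_o
  \quad \text{s.t.} \quad v\,x_o = 1, \qquad u\,y_j - v\,x_j \le 0 \;\; (j = 1,\dots,n) \\
v &= \frac{1}{x_o}
  \;\Longrightarrow\; u \le \frac{x_j}{x_o\,y_j} \;\; \text{for all } j
  \;\Longrightarrow\; u^{*} = \frac{1}{x_o}\,\min_j \frac{x_j}{y_j} \\
\theta_o &= u^{*}\,y_o = \frac{y_o / x_o}{\max_j \,(y_j / x_j)}
\end{align*}
```

With one input and one output, the efficiency is therefore each unit's output-to-input ratio divided by the best ratio in the set, so the best-performing unit scores exactly 1 and every other unit is scored relative to it.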

In the context of AI-driven screening processes, vendors typically furnish an evaluation that rates applicants across a spectrum of competencies, often represented as multi-dimensional assessment outcomes (Raghavan et al. 2020). These multi-dimensional outcomes would be fed into a model for assessing the candidates’ competency, making them appropriate for the application of DEA models.

pDEI

In Definition (1), it becomes imperative to refine the evaluation of candidates by considering their socioeconomic disparities. In the context of this study, we utilize labor statistics as essential information to analyze and assess the socioeconomic disparities related to job screening tasks, with the aim of elucidating the underlying assumptions connecting the “entrance barrier” and “socioeconomic disparities.”

In light of the constrained dataset granularity within each subcategory, we adopt a method of cross-referencing statistics #10 (U.S. Bureau of Labor Statistics 2023b) and #11 (U.S. Bureau of Labor Statistics 2023a) to enrich our comprehension of disparity metrics for various demographic groups. Subsequently, we integrate this enriched knowledge into Model (4) as an input vector, enhancing the model’s capacity to account for variations in disparity across groups. By fixing the relative fairness scores generated from the labor statistics within each industry, we establish a fixed socioeconomic fairness metric for each group based on their characteristics derived from labor statistics. Please note that the cardinality of the input vector is important when determining which features to include in order to further address fairness considerations.

Numerical Findings

Labor Statistics Fact

Our input vector centers on the conditional probability $P(Y = 1 \mid G = g)$, representing our assessment of the employment rate, i.e., the event of being hired ($Y = 1$) given an applicant from a certain demographic group ($G = g$), which can further be referenced as parity within a specific demographic group. In this evaluation, the specified labor force criteria of “Total, 16 years and over,” as outlined in (U.S. Bureau of Labor Statistics 2023b), have been employed to approximate the overall available workforce within a specific cohort.

It is imperative to acknowledge that, due to constraints imposed by page limitations, we have incorporated six industry sectors, primarily focusing on those within the “Management, business, and financial operations occupations” category. These sectors have been included based on two considerations: (1) the total employed population of over 500,000 individuals and (2) a combined proportion from each demographic group not exceeding 110%, a criterion designed to account for the influence of individuals from more than two racial backgrounds without unduly overshadowing the impact of other racial groups.

Table 2 displays the conditional probabilities representing the percentages of employees from a specific group within each sector. The row labels denote distinct sectors within the “Management, Business, and Financial Operations Occupations” category: Sector 1 represents Chief executives; Sector 2, Sales managers; Sector 3, Computer and information systems managers; Sector 4, Medical and health services managers; Sector 5, Education and childcare administrators; and Sector 6, Property, real estate, and community association managers. For the column labels, “Total” denotes the employment figures for a given sector (in thousands); “Percentage” signifies the employment rate for a given group within the entire workforce of that sector. Among the groups, G1 represents women, R1 represents White, R2 represents Black or African American, R3 represents Asian, and R4 represents Hispanic or Latino ethnicity. Since the labor statistics exclusively provide the employment percentage for the group of women, we designate the non-woman group as G2 in Table 3.

Table 2: Total employment (in thousands) and percentage of each group by sector.

| Sector | Total | G1 (%) | R1 (%) | R2 (%) | R3 (%) | R4 (%) |
|---|---|---|---|---|---|---|
| 1 | 1780 | 29.2 | 85.9 | 5.9 | 6.7 | 6.8 |
| 2 | 566 | 34.2 | 88.3 | 5.9 | 3.5 | 11.3 |
| 3 | 764 | 26.4 | 72.6 | 7.8 | 16.5 | 7.5 |
| 4 | 797 | 71.6 | 74.6 | 16.0 | 7.3 | 9.0 |
| 5 | 988 | 68.1 | 78.0 | 16.3 | 4.0 | 9.8 |
| 6 | 835 | 50.3 | 83.1 | 9.9 | 4.3 | 11.0 |

It is worth noting that a substantial portion of the statistics is reported in a percentage format. Consequently, it is important to acknowledge the potential for variations in the presented results attributable to the propagation of errors during calculations.

By cross-referencing the total number of employed individuals within each group, as documented in (U.S. Bureau of Labor Statistics 2023b) (in thousands: 121,908 from group R1; 19,937 from R2; 10,615 from R3; and 29,299 from R4), we calculate the disparate impact for each group across the above-mentioned six sectors, as in Table 3 and Figure 4:

Table 3: Disparate impact (DI) by sector for the race and ethnicity groups (R1 to R4) and the gender groups (G1 and G2).

| Sector | R1 | R2 | R3 | R4 | G1 | G2 |
|---|---|---|---|---|---|---|
| 1 | 2.18 | 0.48 | 1.09 | 0.36 | 0.41 | 2.42 |
| 2 | 2.10 | 0.46 | 0.54 | 0.60 | 0.52 | 1.92 |
| 3 | 1.12 | 0.66 | 3.02 | 0.40 | 0.36 | 2.78 |
| 4 | 1.13 | 1.43 | 1.18 | 0.48 | 2.53 | 0.40 |
| 5 | 1.27 | 1.44 | 0.63 | 0.52 | 2.14 | 0.47 |
| 6 | 1.61 | 0.82 | 0.67 | 0.59 | 1.01 | 0.99 |
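The DI values above can be approximated from the sector percentages and the group totals quoted in the text. The sketch below is one reading of that calculation, not a procedure stated in the source: it assumes that each race and ethnicity group is compared against the pooled remaining three groups, and that the gender DI is the ratio of the women and non-women shares within a sector. Under those assumptions the results agree with the reported values to within about 0.01. The labels R1 to R4 and G1, G2 follow the group labels used in the efficiency tables; the function names are illustrative.

```python
# Sector -> (total employed in thousands, % White, % Black, % Asian, % Hispanic)
SECTORS = {
    1: (1780, 85.9, 5.9, 6.7, 6.8),
    2: (566, 88.3, 5.9, 3.5, 11.3),
    3: (764, 72.6, 7.8, 16.5, 7.5),
    4: (797, 74.6, 16.0, 7.3, 9.0),
    5: (988, 78.0, 16.3, 4.0, 9.8),
    6: (835, 83.1, 9.9, 4.3, 11.0),
}
WOMEN_PCT = {1: 29.2, 2: 34.2, 3: 26.4, 4: 71.6, 5: 68.1, 6: 50.3}
GROUPS = ("R1", "R2", "R3", "R4")
# Employed persons per group (thousands), in the same order as GROUPS.
GROUP_TOTALS = (121908, 19937, 10615, 29299)


def race_di(sector):
    """DI of each group versus the pooled other groups (assumed comparison)."""
    total, *pcts = SECTORS[sector]
    hired = [total * p / 100 for p in pcts]
    result = {}
    for i, group in enumerate(GROUPS):
        rate_group = hired[i] / GROUP_TOTALS[i]
        rate_rest = (sum(hired) - hired[i]) / (sum(GROUP_TOTALS) - GROUP_TOTALS[i])
        result[group] = rate_group / rate_rest
    return result


def gender_di(sector):
    """Gender DI as a ratio of within-sector shares (assumed comparison)."""
    women = WOMEN_PCT[sector]
    return {"G1": women / (100 - women), "G2": (100 - women) / women}


for s in SECTORS:
    row = {**race_di(s), **gender_di(s)}
    print(s, {k: round(v, 2) for k, v in row.items()})
```

Small differences from the reported values are expected, since the published percentages are rounded before the ratios are formed.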

In Figure 4, the central point of the star plot represents the scenario where “DI = 0,” and it is connected by a dashed line to the point where “DI = 1” across various sectors. It is evident that among the selected six sectors, R1 exhibits the highest level of overrepresentation, with its DI values exceeding 1 across all sectors, while R4 demonstrates the most significant underrepresentation across these industries. Furthermore, R2 and R3 are found to be overrepresented in certain sectors but underrepresented in the larger ones.

Numerical Results

In this study, we utilize the Matlab Toolbox developed by Balk et al. (2021) for the integration of classical DEA models.

We investigate multiple evaluation scenarios to analyze the level of adjustment across diverse demographic groups. Nonetheless, due to data granularity constraints, our ability to compute the DI is limited. Given the absence of fully disaggregated data for (Race, Gender) groups, we could only include two input variables for each candidate: (1) DI based on race and ethnicity, and (2) DI based on gender.

Uniform Evaluation Across Groups

Suppose we are assessing the performance of the top four candidates from each demographic group, denoted as C1 through C4, where Ck is the k-th top candidate from a given group. For simplicity, we assign uniform scores across all race groups as follows: C1 = (8, 8, 8, 8), C2 = (7, 7, 7, 7), C3 = (6, 6, 6, 6), and C4 = (5, 5, 5, 5) for the first, second, third, and fourth top candidates across all race groups, respectively.

Tables 4, 5, 6, and 7 display the adjusted efficiency scores obtained by treating DI as the input in Model (4) across various sectors. In Tables 4 and 5, the sectors exhibit underrepresentation of the group of women (DI < 1), while the sectors in Tables 6 and 7 demonstrate overrepresentation of the group of women (DI > 1).

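Table 4: Adjusted efficiency scores, Sector 1.

| Inputs | Group | C1 | C2 | C3 | C4 |
|---|---|---|---|---|---|
| Race and Ethnicity | R1 | 0.17 | 0.14 | 0.12 | 0.10 |
| Race and Ethnicity | R2 | 0.75 | 0.65 | 0.56 | 0.47 |
| Race and Ethnicity | R3 | 0.33 | 0.29 | 0.25 | 0.21 |
| Race and Ethnicity | R4 | 1 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity and Gender | R1 & G1 | 1 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity and Gender | R2 & G1 | 1 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity and Gender | R3 & G1 | 1 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity and Gender | R4 & G1 | 1 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity and Gender | R1 & G2 | 0.17 | 0.15 | 0.13 | 0.11 |
| Race and Ethnicity and Gender | R2 & G2 | 0.75 | 0.65 | 0.56 | 0.47 |
| Race and Ethnicity and Gender | R3 & G2 | 0.33 | 0.29 | 0.25 | 0.21 |
| Race and Ethnicity and Gender | R4 & G2 | 1 | 0.88 | 0.75 | 0.63 |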
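The entries of the table above can be approximated with a small pure-Python stand-in for the DEA toolbox. The sketch assumes that all (group, candidate) pairs are pooled into a single CCR evaluation, with the Sector 1 DI values as inputs and the uniform evaluation score as the single output. Instead of calling an LP solver, it uses the fact that with one output and at most two inputs the optimal input weights lie either on an axis or where two units tie, so enumerating those weight directions is enough. This is a swapped-in technique for illustration, not the Matlab implementation used in the study, and all names are hypothetical.

```python
from itertools import combinations, product

RACE_DI = {"R1": 2.18, "R2": 0.48, "R3": 1.09, "R4": 0.36}  # Sector 1 race DI
GENDER_DI = {"G1": 0.41, "G2": 2.42}                         # Sector 1 gender DI
SCORES = {"C1": 8, "C2": 7, "C3": 6, "C4": 5}                # uniform evaluation scores


def dot(v, z):
    return sum(vi * zi for vi, zi in zip(v, z))


def ccr_efficiency(inputs, outputs):
    """CCR efficiency for units with one output and one or two positive inputs.

    With a_j = inputs_j / output_j, the multiplier model reduces to
    max over weights v >= 0 of min_j (v . a_j) / (v . a_o).
    """
    a = [tuple(x / y for x in xs) for xs, y in zip(inputs, outputs)]
    if len(a[0]) == 1:
        candidates = [(1.0,)]
    else:
        candidates = [(1.0, 0.0), (0.0, 1.0)]
        for p, q in combinations(set(a), 2):
            d1, d2 = p[0] - q[0], p[1] - q[1]
            if d1 != d2:
                t = d2 / (d2 - d1)  # v = (t, 1 - t) makes units p and q tie
                if 0.0 < t < 1.0:
                    candidates.append((t, 1.0 - t))
    return [
        max(min(dot(v, a_j) for a_j in a) / dot(v, a_o) for v in candidates)
        for a_o in a
    ]


def adjusted_scores(group_inputs):
    """Pool every (group, candidate) pair into one evaluation and score it."""
    keys = list(product(group_inputs, SCORES))
    inputs = [group_inputs[g] for g, _ in keys]
    outputs = [SCORES[c] for _, c in keys]
    return dict(zip(keys, ccr_efficiency(inputs, outputs)))


race_only = adjusted_scores({r: (di,) for r, di in RACE_DI.items()})
race_gender = adjusted_scores(
    {f"{r} & {g}": (RACE_DI[r], GENDER_DI[g]) for r in RACE_DI for g in GENDER_DI}
)
for key in [("R1", "C1"), ("R2", "C1"), ("R3", "C1"), ("R4", "C1")]:
    print(key, f"{race_only[key]:.2f}")
for key in [("R1 & G1", "C1"), ("R1 & G2", "C1"), ("R2 & G2", "C1")]:
    print(key, f"{race_gender[key]:.2f}")
```

Under these assumptions, every G1 candidate with the top score reaches efficiency 1 once the gender DI is added, because the weights may load entirely on the gender input. This is the dominance effect visible in the G1 rows of the table.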

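Table 5: Adjusted efficiency scores, Sector 2.

| Inputs | Group | C1 | C2 | C3 | C4 |
|---|---|---|---|---|---|
| Race and Ethnicity | R1 | 0.22 | 0.19 | 0.16 | 0.14 |
| Race and Ethnicity | R2 | 1.00 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity | R3 | 0.85 | 0.74 | 0.64 | 0.53 |
| Race and Ethnicity | R4 | 0.76 | 0.67 | 0.57 | 0.48 |
| Race and Ethnicity and Gender | R1 & G1 | 1 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity and Gender | R2 & G1 | 1 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity and Gender | R3 & G1 | 1 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity and Gender | R4 & G1 | 1 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity and Gender | R1 & G2 | 0 | 0.24 | 0.20 | 0.17 |
| Race and Ethnicity and Gender | R2 & G2 | 1 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity and Gender | R3 & G2 | 1 | 0.74 | 0.64 | 0.53 |
| Race and Ethnicity and Gender | R4 & G2 | 0.76 | 0.67 | 0.57 | 0.48 |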

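Table 6: Adjusted efficiency scores, Sector 5.

| Inputs | Group | C1 | C2 | C3 | C4 |
|---|---|---|---|---|---|
| Race and Ethnicity | R1 | 0.41 | 0.36 | 0.31 | 0.26 |
| Race and Ethnicity | R2 | 0.36 | 0.32 | 0.27 | 0.23 |
| Race and Ethnicity | R3 | 0.83 | 0.72 | 0.62 | 0.52 |
| Race and Ethnicity | R4 | 1.00 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity and Gender | R1 & G1 | 0.41 | 0.36 | 0.31 | 0.26 |
| Race and Ethnicity and Gender | R2 & G1 | 0.36 | 0.32 | 0.27 | 0.23 |
| Race and Ethnicity and Gender | R3 & G1 | 0.83 | 0.72 | 0.62 | 0.52 |
| Race and Ethnicity and Gender | R4 & G1 | 1 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity and Gender | R1 & G2 | 1 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity and Gender | R2 & G2 | 1 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity and Gender | R3 & G2 | 1 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity and Gender | R4 & G2 | 1 | 0.88 | 0.75 | 0.63 |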

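Table 7: Adjusted efficiency scores, Sector 6.

| Inputs | Group | C1 | C2 | C3 | C4 |
|---|---|---|---|---|---|
| Race and Ethnicity | R1 | 0.36 | 0.32 | 0.27 | 0.23 |
| Race and Ethnicity | R2 | 0.72 | 0.63 | 0.54 | 0.45 |
| Race and Ethnicity | R3 | 0.88 | 0.77 | 0.66 | 0.55 |
| Race and Ethnicity | R4 | 1.00 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity and Gender | R1 & G1 | 0.98 | 0.85 | 0.73 | 0.61 |
| Race and Ethnicity and Gender | R2 & G1 | 0.98 | 0.85 | 0.73 | 0.61 |
| Race and Ethnicity and Gender | R3 & G1 | 0.98 | 0.85 | 0.73 | 0.61 |
| Race and Ethnicity and Gender | R4 & G1 | 1 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity and Gender | R1 & G2 | 1 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity and Gender | R2 & G2 | 1 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity and Gender | R3 & G2 | 1 | 0.88 | 0.75 | 0.63 |
| Race and Ethnicity and Gender | R4 & G2 | 1 | 0.88 | 0.75 | 0.63 |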

Conclusion

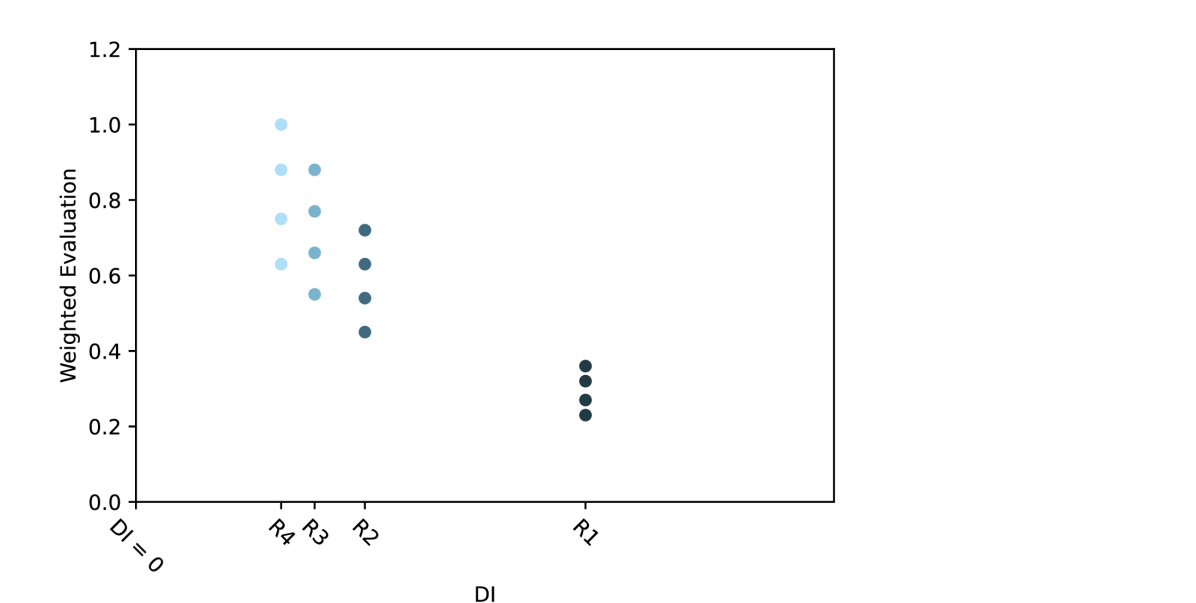

Drawing from the numerical findings, it is evident that the DEA model incorporating the metric offers a means to calibrate candidate performance in accordance with their socioeconomic disparity status. This adjustment can be primarily ascribed to the relative disparities observed within their DI metrics, as pictured in Figures 5 and 6.

Figure 5 visually represents the final adjusted scores through a scatter plot, where the x-axis spans DI values ranging from the smallest (0, indicating the most underrepresented) to the maximum within those sectors, as derived from the data presented in Table 3.

Similarly, Figure 6 consolidates the scatter plots into a polar coordinate system, with reference to the DI metrics represented by the dots connected by dotted lines. These visualizations underscore that groups with lower fairness scores should receive a more substantial degree of scaling adjustment, even when cross-evaluating candidate performance, in order to augment their DEI-adjusted efficiency scores. As depicted in Figure 6, when a group exhibits a higher degree of overrepresentation relative to other groups (indicated by a sharp peak in the dotted line), a correspondingly substantial degree of compensation should be allocated to underrepresented groups as a counterbalance to the overrepresented scores.

This observation could serve as a guiding principle for reweighting scores for each group within AI-driven decision tools aimed at constructing interpretable machine learning models. Furthermore, through a comparative analysis of the DEI-adjusted scores presented in Tables 4, 5, 6, and 7, it becomes evident that determined by the DI metric, one dimension of the input may dominate the others. For instance, in Table 4, when simultaneously considering race-based DI and gender-based DI, as observed in Sector 1 where women are extremely underrepresented, all candidates within the “Woman” group (R1&G1, R2&G1, R3&G1, and R4&G1) are evaluated with the same degree of efficiency, irrespective of whether they are overrepresented concerning their race or not.

A plausible explanation for this phenomenon lies in the impact of input metric scales on model outcomes. While normalization is commonly employed to mitigate scale imbalances in machine learning and deep learning algorithms, in case of DEI-related questions, basic normalization techniques might be inadequate in capturing these disparities. However, without appropriate normalization, certain metrics may exert a dominant influence over others, rendering the trained model susceptible to issues such as memorizing “model short-cut” and diminishing interpretability.

Future Improvements

As far as the authors are aware, the bulk of existing research emphasizes assessing fairness through the lens of equal treatment rather than delving into the intricacies of DEI considerations. Addressing how models can effectively compensate underrepresented groups poses a considerably complex challenge.

Through the utilization of the proposed metric, we have observed intriguing patterns that can guide AI practitioners in reevaluating and interpreting their models in the context of fairness and disparity considerations.

Nonetheless, it is important to acknowledge the presence of certain limitations that we intend to address more comprehensively in future research. One such limitation, as highlighted in the conclusion, pertains to the redistribution of scores from overrepresented groups to underrepresented groups, with the extent of transfer increasing as the degree of overrepresentation grows. As illustrated in Table 4, when solely considering the race-based DI, the top candidate from group R1 sees their score reduced to 0.17, rendering this candidate less favorable than the majority of candidates from other demographic groups. This phenomenon arises from the considerably high race-based DI value for R1 at 2.18, in contrast to the DI value of 0.36 for R4, the most underrepresented group in that sector. This substantial disparity in DI values leads the model to substantially reduce the scores for individuals within the R1 group. This observation sheds light on a potential research area, namely, the incorporation of constraints on utility transfers from overrepresented to underrepresented groups in the applied model.

References

- Balk et al. (2021) Balk, B. M.; De Koster, M. R.; Kaps, C.; and Zofío, J. L. 2021. An evaluation of cross-efficiency methods: With an application to warehouse performance. Applied Mathematics and Computation, 406: 126261.

- Barocas and Selbst (2016) Barocas, S.; and Selbst, A. D. 2016. Big data’s disparate impact. California law review, 671–732.

- Centeno and Nation (2022) Centeno, J.; and Nation, C. 2022. Why We Need More Faculty Of Color In Higher Education. https://www.forbes.com/sites/civicnation/2021/05/12/why-we-need-more-faculty-of-color-in-higher-education/?sh=6be470f464f2. Accessed: 2022-12-05.

- Charnes, Cooper, and Rhodes (1978) Charnes, A.; Cooper, W. W.; and Rhodes, E. 1978. Measuring the efficiency of decision making units. European journal of operational research, 2(6): 429–444.

- Chen and Hooker (2022) Chen, V. X.; and Hooker, J. 2022. Combining leximax fairness and efficiency in a mathematical programming model. European Journal of Operational Research, 299(1): 235–248.

- Chen and Hooker (2021) Chen, X.; and Hooker, J. N. 2021. A Guide to Formulating Equity and Fairness in an Optimization Model. http://public.tepper.cmu.edu/jnh/equityGuideAOR.pdf. Accessed: 2023-05-24.

- Cook and Seiford (2009) Cook, W. D.; and Seiford, L. M. 2009. Data envelopment analysis (DEA)–Thirty years on. European journal of operational research, 192(1): 1–17.

- Dwork and Ilvento (2018a) Dwork, C.; and Ilvento, C. 2018a. Fairness under composition. arXiv preprint arXiv:1806.06122.

- Dwork and Ilvento (2018b) Dwork, C.; and Ilvento, C. 2018b. Group fairness under composition. In Proceedings of the 2018 Conference on Fairness, Accountability, and Transparency (FAT* 2018).

- Equal Employment Opportunity Commission (1978) Equal Employment Opportunity Commission. 1978. Uniform guidelines on employee selection procedures. Federal Register, 43(166): 38290 – 38315.

- Fouad and Santana (2017) Fouad, N. A.; and Santana, M. C. 2017. SCCT and underrepresented populations in STEM fields: Moving the needle. Journal of Career Assessment, 25(1): 24–39.

- Harris et al. (2015) Harris, K. F. H. F.; Grappendorf, H.; Aicher, T.; and Veraldo, C. 2015. ” Discrimination? Low Pay? Long Hours? I am Still Excited:” Female Sport Management Students’ Perceptions of Barriers toward a Future Career in Sport. Advancing Women in Leadership Journal, 35: 12–21.

- Khodabakhshi and Aryavash (2014) Khodabakhshi, M.; and Aryavash, K. 2014. The fair allocation of common fixed cost or revenue using DEA concept. Annals of Operations Research, 214: 187–194.

- Kordzadeh and Ghasemaghaei (2022) Kordzadeh, N.; and Ghasemaghaei, M. 2022. Algorithmic bias: review, synthesis, and future research directions. European Journal of Information Systems, 31(3): 388–409.

- Kremantzis, Beullens, and Klein (2022) Kremantzis, M. D.; Beullens, P.; and Klein, J. 2022. A fairer assessment of DMUs in a generalised two-stage DEA structure. Expert Systems with Applications, 187: 115921.

- National Museum of African American History & Culture (2020) National Museum of African American History & Culture. 2020. Talking About Race – Bias. https://nmaahc.si.edu/learn/talking-about-race/topics/bias. Accessed: 2023-04-24.

- Oberauer, Grabowski, and Roos (2022) Oberauer, A.; Grabowski, J.; and Roos, A. 2022. Finance Functions Need more Women Leaders. Here’s Why. https://www.bcg.com/publications/2022/why-finance-functions-need-more-women-leaderss. Accessed: 2022-11-17.

- Raghavan et al. (2020) Raghavan, M.; Barocas, S.; Kleinberg, J.; and Levy, K. 2020. Mitigating bias in algorithmic hiring: Evaluating claims and practices. In Proceedings of the 2020 conference on fairness, accountability, and transparency, 469–481.

- Ramón, Tran, and Hunt (2023) Ramón, A.-C.; Tran, M.; and Hunt, D. 2023. Hollywood Diversity Report 2023. Technical report, UCLA Entertainment & Media Research Initiative.

- U.S. Bureau of Labor Statistics (2023a) U.S. Bureau of Labor Statistics. 2023a. Employed persons by detailed occupation, sex, race, and Hispanic or Latino ethnicity. https://www.bls.gov/cps/cpsaat11.html. Accessed: 2023-10-1.

- U.S. Bureau of Labor Statistics (2023b) U.S. Bureau of Labor Statistics. 2023b. Employed persons by occupation, race, Hispanic or Latino ethnicity, and sex. https://www.bls.gov/cps/cpsaat10.html. Accessed: 2023-10-1.

- Wingar (2019) Wingar, J. 2019. Rihanna’s ‘Fenty Beauty’: A Leadership Case For Customer Inclusivity. https://www.forbes.com/sites/jasonwingard/2019/08/02/rihannas-fenty-beauty–a-leadership-case-for-customer-inclusivity/?sh=22ca90063460. Accessed: 2023-1-17.

- Yee (2021) Yee, A. 2021. Asian Americans Are Poorly Represented in Hollywood, Study Shows. https://www.bloomberg.com/news/articles/2021-08-04/asian-americans-are-poorly-represented-in-hollywood-study-shows?embedded-checkout=true. Accessed: 2023-10-1.

- Zemel et al. (2013) Zemel, R.; Wu, Y.; Swersky, K.; Pitassi, T.; and Dwork, C. 2013. Learning fair representations. In International conference on machine learning, 325–333. PMLR.

- Zheng (2020) Zheng, L. 2020. Adapt Your D&I Efforts to the Reality of the Crisis. Harvard Business Review, https://hbr.org/2020/05/adapt-your-di-efforts-to-the-reality-of-the-crisis. Accessed: 2023-04-24.

- Zhu et al. (2021) Zhu, Q.; Li, F.; Wu, J.; and Sun, J. 2021. Cross-efficiency evaluation in data envelopment analysis based on the perspective of fairness utility. Computers & Industrial Engineering, 151: 106926.