Word2Wave: Language Driven Mission Programming for Efficient Subsea Deployments of Marine Robots

Abstract. This paper explores the design and development of a language-based interface for dynamic mission programming of autonomous underwater vehicles (AUVs). The proposed ‘Word2Wave’ (W2W) framework enables interactive programming and parameter configuration of AUVs for remote subsea missions. The W2W framework includes: (i) a set of novel language rules and command structures for efficient language-to-mission mapping; (ii) a GPT-based prompt engineering module for training data generation; (iii) a small language model (SLM)-based sequence-to-sequence learning pipeline for mission command generation from human speech or text; and (iv) a novel user interface for 2D mission map visualization and human-machine interfacing. The proposed learning pipeline adapts an SLM named T5-Small that can learn language-to-mission mapping from processed language data effectively, providing robust and efficient performance. In addition to a benchmark evaluation with state-of-the-art, we conduct a user interaction study to demonstrate the effectiveness of W2W over commercial AUV programming interfaces. Across participants, W2W-based programming required less than 10% time for mission programming compared to traditional interfaces; it is deemed to be a simpler and more natural paradigm for subsea mission programming with a usability score of 76.25. W2W opens up promising future research opportunities on hands-free AUV mission programming for efficient subsea deployments.

1 Introduction

Recent advancements in Large Language Models (LLMs) and speech-based human-machine dialogue frameworks are poised to revolutionize robotics by enabling more natural and spontaneous interactions [1, 2]. These systems allow robots to interpret natural human language for more accessible and interactive operation, especially in challenging field robotics applications. In particular, remote deployments of autonomous robots in subsea inspection, surveillance, and search and rescue operations require dynamic mission adjustments on the fly [3]. Seamless mission parameter adaptation and fast AUV deployment routines are essential features for these applications, which the traditional interfaces often fail to ensure [4, 5, 6, 7].

The existing subsea robotics technologies offer predefined planners that require manual configuration of mission parameters in a complex software interface [8, 9]. It is extremely challenging and tedious to program complex missions spontaneously, even for skilled technicians, especially on an undulating vessel when time is of the essence. Natural language-based interfaces have the potential to address these limitations by making mission programming more user-friendly and efficient. Recent works have shown how the reasoning capabilities of LLMs can be applied for mission planning and human-robot interaction (HRI) [1, 10] by using deep vision-language models [11, 12], text-to-action paradigms [13, 14], and embodied reasoning pipelines [10, 1]. Contemporary research demonstrates promising results [15, 16, 4] on language-based human-machine interfaces (HMIs) for subsea mission programming as well.

In this paper, we introduce “Word2Wave”, a small language model (SLM)-based framework for real-time AUV programming in subsea missions. It provides an interactive HMI that uses natural human speech patterns to generate subsea mission plans for autonomous remote operations. For implementation, we adapt an SLM training pipeline based on the Text-To-Text Transfer Transformer (T5) [17] small model (T5-Small) to parse natural human speech into a sequence of computer-interpretable commands. These commands are subsequently converted to a set of waypoints for AUVs to execute.

We design the language rules of Word2Wave (W2W) with seven atomic commands to support a wide range of subsea mission plans, particularly focusing on subsea surveying, mapping, and inspection tasks [18]. These commands are simple and intuitive, yet powerful tools, to program complex missions without using tedious software interfaces of commercial AUVs [19, 20]. W2W includes all basic operations to program widely used mission patterns such as lawnmower, spiral, ripple, and polygonal trajectories at various configurations. With these flexible operations, W2W allows users to program subsea missions using natural language, similar to how they would describe the mission to a human diver. To this end, we designed a GPT [21]-based prompt engineering module [22] for comprehensive training data generation.

The proposed learning pipeline demonstrates a delicate balance between robustness and efficiency, making it ideal for real-time mission programming. Through comprehensive quantitative and qualitative assessments, we demonstrate that SLMs can capture targeted vocabulary from limited data with a rightly adapted learning pipeline and articulated language rules. Specifically, our adapted T5-Small model provides SOTA performance for sequence-to-sequence learning while offering faster inference rate (of ms) with fewer parameters than computationally demanding LLMs such as BART-Large [23]. We also investigated other SLM architectures such as MarianMT [24] for benchmark evaluation based on accuracy and computational efficiency.

Moreover, we develop an interactive user interface (UI) for translating the W2W-generated language tokens into 2D mission maps. Unlike traditional HMIs, it adopts a minimalist design intended for futuristic use cases. Specifically, we envision that users will engage in interactive dialogues for formulating and planning subsea missions. While such HMIs are still an open problem, our proposed UI is significantly more efficient and user-friendly – which we validate by a thorough user interaction study with participants.

From the user study, we find that, on average, participants took less than 10% of the time for mission programming with W2W compared to traditional interfaces. The participants, especially those with prior experience of subsea deployments, preferred W2W as a simpler and more intuitive programming paradigm. They rated W2W with a usability score [25] of 76.25, validating that it induces less cognitive load and requires minimal technical support for novice users. With these features, the proposed W2W framework takes a step toward our overarching goal of integrating human-machine dialogue for embodied reasoning and hands-free mission programming of marine robots.

2 Background And Related Work

2.1 Language Models for Human-Machine Embodied Reasoning and HRI

Classical language-based systems focus on deterministic human commands for controlling mobile robots [26, 27]. Traditionally, the open-world navigation with visual goals (ViNGs) [28] or visual-inertial navigation (VIN) [29] pipelines have been mostly independent of human-directed language inputs [30, 31, 32]. In these systems, language or speech inputs are parsed separately as a control input to the ViNG or VIN systems to achieve motion planning [31, 33] and navigation tasks [34, 35].

With the advent of LLMs, contemporary robotics research has focused on leveraging the power of natural language for more interactive human-robot embodied decision-making and shared autonomy [10, 1]. A key advancement is the development of vision-language models (VLMs) [11, 12] for human-machine embodied reasoning. Huang et al. [14] developed an “Inner Monologue” framework that injects continuous sensorimotor feedback into LLM prompts as the robot interacts with the environment. In “InstructPix2Pix” [13], Brooks et al. combine an LLM and a text-to-image model for image editing and visual question answering. These features can enable robots to understand the visual content and integrate it with relevant linguistic information, as shown by Wu et al. in the “TidyBot” system [36].

While general-purpose LLMs are resource intensive, Small Language Models (SLMs) are often more suited for targeted robotics applications [37]. SLMs in various zero-shot learning pipelines have been fine-tuned for applications such as long-horizon navigation [38], embodied manipulation [39], and trajectory planning [37, 40]. These are emerging technologies, and their development for more challenging real-world field robotics applications is ongoing.

2.2 Subsea Mission Programming Interfaces

Leveraging human expertise is critical for configuring subsea mission parameters of mobile robots because fully autonomous mission planning and navigation are challenging underwater [41, 42, 43, 44]. Human-programmed missions enable AUVs to adapt to dynamic mission objectives and deal with environmental challenges in adverse sensing conditions with no GPS or wireless connectivity [45, 46]. Various HRI frameworks [5, 6, 47], telerobotics consoles [48, 49], and language-based interfaces [50, 20] have been developed for mission programming and parameter reconfiguration. For instance, visual languages such as “RoboChat” [5] and “RoboChatGest” [3] use a sequence of symbolic patterns to communicate simple instructions to the robot via AR-Tag markers and hand gestures, respectively. These and other language paradigms [11] are mainly suited for short-term human-robot cooperative missions [51, 52].

For subsea telerobotics, augmented and virtual reality (AR/VR) interfaces integrated on traditional consoles have been gaining popularity in recent times [53, 54, 55]. These offer immersive teleop experiences [56, 57, 48] and improve teleoperators’ perception of environmental semantics [55, 58, 59, 60, 61]. Long-term autonomous missions are generally planned offline in terms of a sequence of waypoints following a specific trajectory [58, 4, 62] such as a lawnmower pattern, perimeter following, fixed-altitude spiral/corkscrew patterns, variable-altitude polygons, etc. Yang et al. [15] proposed an LLM-driven OceanChat system for AUV motion planning in the HoloOcean simulator [63]. Despite inspiring results in marine simulators [22, 64, 65], the power of language models for subsea mission deployments [16] on real systems has not been explored in depth.

3 Word2Wave: Language Design

3.1 Language Commands And Rules

Designing mission programming languages for subsea robots involves unique considerations due to the particular requirements of marine robotics applications. In Word2Wave, our primary objective is to enable spontaneous human-machine interaction by natural language. We also want to ensure that the high-level abstractions in the Word2Wave (W2W) language integrate with the existing industrial interfaces and simulation environments for seamless adaptations.

| Parameters |
| --- |
| b: bearing; d: depth; s: speed |
| a: altitude; t: turns; r: radius |
| tab: spacing; dir: direction; dist: distance |
| cw, ccw: clockwise, counterclockwise |

| Command | Symbol | Language Structure |
| --- | --- | --- |
| Start/End | S/E | [S/E: latitude, longitude] |
| Move | Mv | [Mv: b, d, s, d/a, d/a(m)] |
| Track | Tr | [Tr: dir, tab, end, d/a, d/a(m)] |
| Adjust | Az | [Az: d/a, d/a(m)] |
| Circle | Cr | [Cr: t, r, cw/ccw, d/a, d/a(m)] |
| Spiral | Sp | [Sp: t, r, cw/ccw, d/a, d/a(m)] |

As shown in Table 1, we consider seven language commands in Word2Wave. Their intended use cases are as follows.

- Start/End (S/E): is intended to start or end a mission at a given latitude and longitude. These coordinates are two input parameters, taken in decimal degrees with respect to true North and West, respectively.

- Move (Mv): command is designed to move the AUV to a specified distance (meters) at a given bearing (w.r.t. North) and speed (m/s). If/when the speed is not explicitly stated, a default value of m/s is used.

- Track (Tr): is used to plan a set of parallel lines given a direction orthogonal to the current bearing (same altitude), spacing between each line, and ending distance.

- Adjust (Az): generates a waypoint to adjust the AUV’s target depth or altitude at its current location.

- Circle (Cr): creates a waypoint commanding the AUV to circle around a position for a number of turns at a given radius in a counter/clockwise direction.

- Spiral (Sp): generates a circular pattern that starts at a central point and then expands outwards over a series of turns out to a specific radius. The spiral direction is set to either clockwise or counterclockwise.
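The command structures in Table 1 can be read as a small fixed-arity grammar. The following is a minimal sketch of a structural check, not the authors' implementation. It assumes each command is held as a Python tuple whose first element is the symbol. The names `ARITY` and `validate_mission` are hypothetical, the field counts are read off the Language Structure column, and the requirement that a mission begins with Start and finishes with End is an assumption based on the sample outputs in Sec. 4.2.

```python
# Number of fields that follow each command symbol, read off Table 1.
ARITY = {"S": 2, "E": 2, "Mv": 5, "Tr": 5, "Az": 2, "Cr": 5, "Sp": 5}


def validate_mission(commands):
    """Return a list of structural problems found in a command sequence.

    `commands` is a list of tuples such as ("Az", "d", 0).
    An empty result means the sequence passed these checks; it does not
    mean the mission is safe or executable on a real vehicle.
    """
    if not commands:
        return ["mission is empty"]
    problems = []
    # Assumption: a valid mission is bracketed by Start and End.
    if commands[0][0] != "S":
        problems.append("mission must begin with a Start (S) command")
    if commands[-1][0] != "E":
        problems.append("mission must finish with an End (E) command")
    for index, command in enumerate(commands):
        symbol, fields = command[0], command[1:]
        if symbol not in ARITY:
            problems.append(f"command {index}: unknown symbol {symbol!r}")
        elif len(fields) != ARITY[symbol]:
            problems.append(
                f"command {index}: {symbol} expects {ARITY[symbol]} fields, "
                f"got {len(fields)}"
            )
    return problems
```

A check of this kind could run before the mission map is drawn, so that a malformed sequence is flagged rather than silently plotted.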

3.2 Mission Types And Parameter Selection

W2W can generate arbitrary mission patterns with varying complexity using the language commands of Table 1. The only limitations are the maneuver capabilities of the host AUV. We demonstrate a particular mission programming instance in Fig. 2(a). Mission parameters for each movement command are chosen based on commonly used terms to ensure compatibility; we particularly explore four most widely used mission patterns, as shown in Fig. 2(b). These atomic patterns can be further combined to plan multi-phase composite missions for a given scenario.

- Lawnmower (also known as boustrophedon) patterns are ideal for surveying large areas over subsea structures. They are not suited for missions requiring intricate movements or targeted actions. These patterns are most commonly used for sonar-based mapping, as they offer even coverage with a simple and efficient route over a large area.

- Polygonal routes are best suited for irregular terrain as they provide more precise control over the AUV trajectory. They are more flexible, as polygons can be tailored towards specific mission requirements and adapted to local terrains. Hence, they are used for missions that involve inspecting known landmarks or targeted waypoints.

- Ripple patterns are defined as a series of concentric circles with varying radii, varying depths, or both. These are better suited when coverage is needed over a specific area and when equal spacing is important for data collection. They are best suited for missions where sampling at varying depths of the water column is required.

- Spiral paths allow for more concentrated coverage over a specific area. They operate similarly to the ripple pattern but allow for a smooth, continuous trajectory that either radiates outwards or converges to a specific point. Spiral paths do not have well-defined boundaries compared to ripple patterns, but they do offer some energy savings because their continuous motion involves smaller changes in trajectory. These are commonly used for search missions requiring high-resolution coverage of a specific point.

As demonstrated in Fig. 2(b), these atomic patterns can be further combined to plan multi-phase composite missions.
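To make the geometry of these patterns concrete, the sketch below generates lawnmower and spiral waypoints in a local east/north frame measured in metres. It is one possible reading of the Track and Spiral commands, not the W2W implementation. The function names, the `points_per_turn` sampling, and the choice of an Archimedean spiral are assumptions made for illustration.

```python
import math


def lawnmower_waypoints(leg_length, bearing_deg, side, spacing, end_distance):
    """Corner points (east, north) in metres of a boustrophedon sweep.

    The first leg starts at the origin and runs `leg_length` metres along
    `bearing_deg` (clockwise from North). Each later leg is shifted by
    `spacing` metres to the given side ("l" or "r") for as long as the
    lateral offset does not exceed `end_distance`.
    """
    theta = math.radians(bearing_deg)
    along = (math.sin(theta), math.cos(theta))  # unit vector along a leg
    sign = -1.0 if side == "l" else 1.0
    # The heading rotated 90 degrees clockwise points to the right.
    across = (sign * math.cos(theta), -sign * math.sin(theta))

    points = []
    n_legs = int(end_distance // spacing) + 1
    for k in range(n_legs):
        offset = k * spacing
        near = (across[0] * offset, across[1] * offset)
        far = (near[0] + along[0] * leg_length, near[1] + along[1] * leg_length)
        # Alternate the travel direction so consecutive legs connect.
        points.extend([near, far] if k % 2 == 0 else [far, near])
    return points


def spiral_waypoints(turns, radius, clockwise=True, points_per_turn=12):
    """Points on an Archimedean spiral growing from the centre to `radius`."""
    total = max(1, int(turns * points_per_turn))
    sign = 1.0 if clockwise else -1.0
    points = []
    for i in range(total + 1):
        frac = i / total
        # Angle measured like a bearing, so a growing angle turns clockwise.
        angle = sign * 2.0 * math.pi * turns * frac
        r = radius * frac
        points.append((r * math.sin(angle), r * math.cos(angle)))
    return points
```

A ripple pattern could be approximated in the same frame by sampling several circles of increasing radius, and composite missions by concatenating the point lists.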

3.3 UI For Language To Mission Mapping

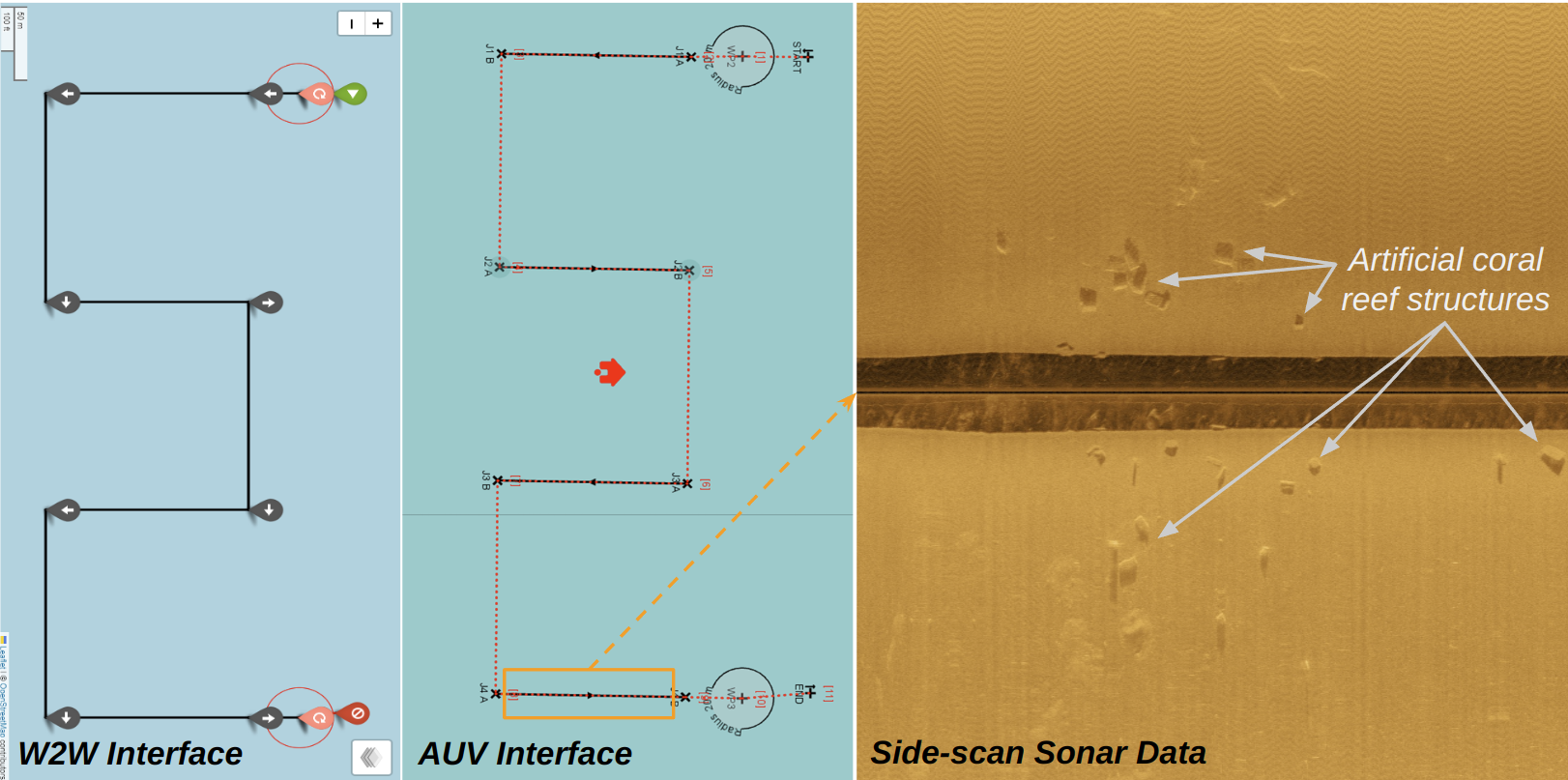

Real-time visual feedback is essential for interactive verification of language to mission translation. To achieve this, we develop a 2D mission visualizer in W2W that places the human language commands into mission paths on a map for confirmation. Specifically, we generate a Leaflet map [66]-based UI on the given GPS coordinates; we further integrate options for subsequent interactions on the map, such as icons, zoom level, and map movement or corrections.

Fig. 3 shows a sample W2W-generated map with icons representing the corresponding command tokens. All individual tokens can be visualized on separate layers for further mission adaptations by the user. The corresponding map on the AUV interface is also shown in Fig. 3; it offers the waypoints with additional information. These waypoints are then loaded onto the AUV for deployment.
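Placing a Move command on a map requires turning a bearing and a distance into a geographic coordinate. The sketch below uses the standard great-circle destination formula on a spherical Earth. It is an illustration under stated assumptions, not the W2W code: the function names are hypothetical, the Earth is treated as a sphere of mean radius, and coordinates are signed decimal degrees with North and East positive, so a longitude given in degrees West would need to be negated before use.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical approximation


def destination(lat_deg, lon_deg, bearing_deg, distance_m):
    """Great-circle destination from a start point, bearing, and distance."""
    phi1 = math.radians(lat_deg)
    lam1 = math.radians(lon_deg)
    theta = math.radians(bearing_deg)  # clockwise from true North
    delta = distance_m / EARTH_RADIUS_M  # angular distance in radians

    phi2 = math.asin(
        math.sin(phi1) * math.cos(delta)
        + math.cos(phi1) * math.sin(delta) * math.cos(theta)
    )
    lam2 = lam1 + math.atan2(
        math.sin(theta) * math.sin(delta) * math.cos(phi1),
        math.cos(delta) - math.sin(phi1) * math.sin(phi2),
    )
    return math.degrees(phi2), math.degrees(lam2)


def chain_moves(start, moves):
    """Apply (bearing_deg, distance_m) legs in order, starting at `start`."""
    waypoints = [start]
    for bearing_deg, distance_m in moves:
        lat, lon = waypoints[-1]
        waypoints.append(destination(lat, lon, bearing_deg, distance_m))
    return waypoints
```

Over the short legs typical of AUV surveys, a flat-earth approximation would give nearly the same result. The spherical form is used here only because it stays valid for longer transits.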

4 Word2Wave: Model Training

4.1 Model Selection and Data Preparation

Structured mission command generation from an input paragraph is effectively a text-to-text translation task. Thus, we consider SLM pipelines that are effective for text translation. While popular architectures such as GPT and BERT are mainly suited for text generation and classification [21, 67], Seq2Seq (Sequence to Sequence) models are more suited for translation tasks [68]. For this reason, we choose the T5 architecture, a Seq2Seq model which provides SOTA performance in targeted ‘text-to-text’ translation tasks [68].

In particular, we adopt a smaller variant of the original T5, named T5-Small, as it is lightweight and computationally efficient [17, 37]. It processes the input text by encoding the sequence and subsequently decoding it into a concise and coherent summary. We leverage its pre-trained weights and fine-tune it on W2W translation tasks following the language rules and command structures presented in Sec. 3.1. The T5-Small version used for W2W is the checkpoint with M parameters. It leverages the advantages of SLMs and provides an easy-to-train platform for generating mission waypoints from natural language.
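As a rough illustration of the text-to-text setup, the sketch below formats one training pair and runs a T5 checkpoint through the Hugging Face `transformers` library. It is not the authors' pipeline. The task prefix, the helper names, and the use of the public `t5-small` checkpoint are assumptions, and the stock checkpoint is not fine-tuned on W2W data, so its output would not be a valid command sequence.

```python
TASK_PREFIX = "translate mission to commands: "  # hypothetical task prefix


def make_pair(description, commands):
    """Format one (source, target) training pair as plain strings."""
    return {
        "source": TASK_PREFIX + description.strip(),
        "target": commands.strip(),
    }


def generate_commands(description, model_name="t5-small", max_new_tokens=128):
    """Run a T5 checkpoint on one mission description.

    The import is local so that `make_pair` can be used without the
    `transformers` package installed. `model_name` would point at a
    fine-tuned checkpoint in practice.
    """
    from transformers import AutoTokenizer, T5ForConditionalGeneration

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = T5ForConditionalGeneration.from_pretrained(model_name)
    inputs = tokenizer(TASK_PREFIX + description, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Keeping the source and target as plain strings mirrors the T5 convention of casting every task as text in, text out.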

Dataset Generation. LLM/SLM-based subsea mission programming for marine robotics is a relatively new area of research. There are no large-scale datasets available for generalized model training. Instead, we take a prompt engineering approach using OpenAI’s ChatGPT-4o [21], which helps produce various ways to program a particular mission. Different manners of phrasing the same command within the prompt ensure that various speech patterns are captured for comprehensive training. Particular attention is given so that outputs from the prompt follow the structure of a valid mission. A total of different mission samples are prepared for supervised training. We verified each sample and their paired commands to ensure that they represent valid subsea missions for AUV deployment.

4.2 Training And Hyperparameter Tuning

We use a randomized - percentile split for training and validation; the remaining samples are used for testing. During training, each line in the dataset is inserted into a tokenizer to extract embedded information. The training is conducted over epochs on a machine with GB of RAM and a single Nvidia RTX Ti GPU with GB of memory. The training is halted when the validation loss consistently remains below a threshold of .
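The split and stopping rule described above can be sketched as follows. This is a minimal illustration, not the training script. The fractions, threshold, and patience are left as parameters because their values are not restated here, and reading "consistently remains below a threshold" as a fixed number of consecutive epochs (`patience`) is an assumption.

```python
import random


def split_dataset(samples, train_frac, val_frac, seed=0):
    """Shuffle and split into (train, validation, test) lists.

    Whatever is left after the train and validation portions becomes
    the test set.
    """
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


def should_stop(val_losses, threshold, patience):
    """True once the last `patience` validation losses are all below `threshold`."""
    if len(val_losses) < patience:
        return False
    return all(loss < threshold for loss in val_losses[-patience:])
```

Fixing the seed keeps the split reproducible, which matters when several models are later compared on the same test samples.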

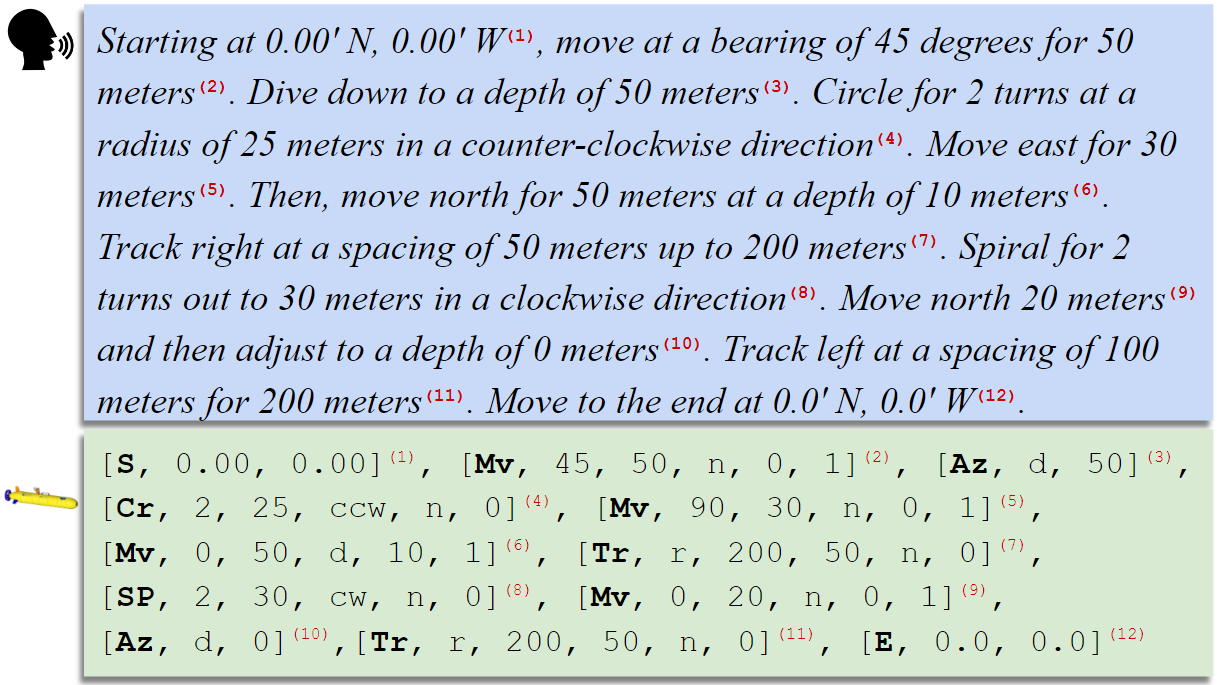

Note that our training pipeline is not intended for general-purpose text-to-text translation but rather for targeted learning on a specific set of mission vocabulary. We make sure that the supervised learning strictly adheres to the language structure of the mission commands outlined in Sec. 3.1. A few examples are shown in Fig. 4. The end-to-end training, hyperparameter tuning, and deployment processes are shown in Fig. 5.

Sample outputs. We show three additional sample outputs for W2W (similar examples as in Fig. 4) below.

Sample mission #1:

“Start at N, W. Move at a bearing of for a distance of m at a speed of m/s at an altitude of m. Move South m and then Move South m. Track left for m at a spacing of m. Move North for a distance of m at a speed of m/s at an depth of m, then Adjust to a depth of 0 meters. End at N, W”

Output #1: [S, , ], [Mv, , , , a, ], [Mv, , , , n, ], [Mv, , , , n, ], [Tr, l, , , n, ], [Mv, , , , d, ],[Az, d, 0],[E, , ].

Sample mission #2:

“Start at N, and W, Move at a bearing of for a distance of m at a speed of m/s. Track left for a distance of m spaced by m at a depth of m. End at N, and W”

Output #2: [S, , ], [Mv, , , , n, ], [Tr, l, , , d, ], [E, , ]

Sample mission #3:

“Start at N, W, Move at a bearing of for a distance of m at a speed of m/s at a depth of m. Spiral turns with a radius of m counterclockwise. Adjust to a depth of m. End at N, W”

Output #3: [S, , ], [Mv, , , , d, ], [Sp, , , ccw, n, ], [Az, d, ], [E, , ]
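Output strings in the bracketed form shown above need to be parsed before they can be converted into waypoints. The following is a minimal sketch of such a parser, not the W2W implementation. The names `parse_commands` and `_convert` are hypothetical, and mapping an empty field to `None` is an assumption about how missing values might be handled.

```python
import re

# One bracketed command, e.g. "[Az, d, 0]".
_COMMAND = re.compile(r"\[([^\[\]]*)\]")


def _convert(field):
    """Turn a raw field into a float when numeric, else keep the flag text."""
    field = field.strip()
    if field == "":
        return None  # assumption: an empty field means "not specified"
    try:
        return float(field)
    except ValueError:
        return field  # flags such as "d", "a", "n", "l", "ccw"


def parse_commands(output):
    """Parse a model output string into a list of (symbol, *fields) tuples."""
    commands = []
    for body in _COMMAND.findall(output):
        parts = body.split(",")
        symbol = parts[0].strip()
        commands.append((symbol, *[_convert(p) for p in parts[1:]]))
    return commands
```

The parser tolerates the inconsistent spacing between commands seen in the samples, since it matches each bracketed group independently.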

5 Experimental Analyses

5.1 Language Model Evaluation

Language models differ significantly in performance for certain tasks based on their underlying architectures, learning objectives, and application-specific design choices. As mentioned earlier, we adapt a sequence-to-sequence learning pipeline based on the T5-Small model [17]. For performance baseline and ablation experiments, we investigate two other SOTA models of the same genre: BART-Large [23] and MarianMT [24]. We compare their performance based on model accuracy, robustness, and computational efficiency.

Evaluation metrics and setup. To perform this analysis in an identical setup, we train and validate each model on the same dataset (see Sec. 4.2) until validation loss reaches a plateau. For accuracy, we use two widely used metrics: BLEU (bilingual evaluation understudy) [69] and METEOR (metric for evaluation of translation with explicit ordering) [70]. BLEU metric offers a language-independent understanding of how close a predicted sentence is to its ground truth. METEOR additionally considers the order of words during evaluation. It evaluates machine translation output based on the harmonic mean of unigram precision and recall, with recall weighted higher than precision.
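For intuition about the two metrics, the sketch below implements a simplified single-reference BLEU (uniform n-gram weights, no smoothing) and the recall-weighted F-mean from the original METEOR formulation. It is an illustration only. It omits METEOR's fragmentation penalty and stem or synonym matching, and it is not necessarily the implementation behind the reported scores.

```python
import math
from collections import Counter


def _ngrams(tokens, n):
    """Multiset of n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, reference, max_n=4):
    """Single-reference BLEU with uniform weights and no smoothing."""
    log_sum = 0.0
    for n in range(1, max_n + 1):
        cand, ref = _ngrams(candidate, n), _ngrams(reference, n)
        total = sum(cand.values())
        # Clip each candidate n-gram count by its count in the reference.
        clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
        if total == 0 or clipped == 0:
            return 0.0
        log_sum += math.log(clipped / total) / max_n
    if len(candidate) > len(reference):
        brevity = 1.0
    else:
        brevity = math.exp(1.0 - len(reference) / len(candidate))
    return brevity * math.exp(log_sum)


def meteor_fmean(candidate, reference):
    """Recall-weighted harmonic mean of exact-match unigram precision and recall."""
    matches = sum((Counter(candidate) & Counter(reference)).values())
    if matches == 0:
        return 0.0
    precision = matches / len(candidate)
    recall = matches / len(reference)
    return 10.0 * precision * recall / (recall + 9.0 * precision)
```

Because recall carries nine times the weight of precision in the F-mean, a prediction that drops mission commands is penalized more heavily than one that adds extra ones.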

In addition, we compare their inference rates on a set of test samples on the same device (RTX TI GPU with GB memory). It is measured as the average time taken to generate a complete mission map given the input sample. Lastly, model sizes based on the number of parameters are apparent from the respective computational graphs.

Quantitative performance analyses of SOTA. Table 2 summarizes the quantitative performance comparison; it demonstrates that the MarianMT and T5-Small models offer more accurate and consistent scores than BART-Large when trained on a targeted dataset. We hypothesize that LLMs like BART-Large need more comprehensive datasets and are suited for general-purpose learning. On the other hand, T5-Small has marginally lower BLEU scores than MarianMT, while it offers better METEOR values at a significantly faster inference rate. T5-Small only has about M parameters, offering faster runtime while ensuring accuracy and robustness comparable to MarianMT. Further inspection reveals that MarianMT often randomizes the order of generated mission commands. T5-Small does not suffer from this issue, demonstrating a better balance between robustness and efficiency.

Qualitative performance analyses. We evaluate the qualitative outputs of T5-Small for W2W language commands based on the number of inaccurately generated tokens across the whole test set of samples. As Fig. 6 shows, we categorize these into: missed tokens (failed to generate), erroneous tokens (incorrectly generated), and hallucinated (extraneously generated) tokens. Of all the error types, we found that the Adjust commands are hallucinated at a disproportionately greater rate. We hypothesize that this happens due to some bias learned by the model, causing it to associate any change in depth or altitude with an Adjust command. Besides, we observed relatively high missed token counts for Move and Circle commands. Track is another challenging command that suffers from high error rates for token generation. Nevertheless, % tokens are accurately parsed from unseen examples, which we found to be enough for real-time mission programming by human participants.
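The three error categories can be counted automatically once a predicted command sequence is aligned with its ground truth. The sketch below does this with Python's `difflib.SequenceMatcher` on the command symbols. The alignment strategy and the function name `categorize_errors` are assumptions for illustration; the counting procedure behind Fig. 6 may differ.

```python
from difflib import SequenceMatcher


def categorize_errors(predicted, expected):
    """Count missed, erroneous, and hallucinated commands.

    Commands are tuples whose first element is the symbol. The two symbol
    sequences are aligned first, then each alignment operation is mapped
    to one of the three categories.
    """
    counts = {"missed": 0, "erroneous": 0, "hallucinated": 0}
    exp_syms = [c[0] for c in expected]
    pred_syms = [c[0] for c in predicted]
    matcher = SequenceMatcher(a=exp_syms, b=pred_syms, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            # Same symbol on both sides: wrong parameters count as erroneous.
            counts["erroneous"] += sum(
                expected[i1 + k] != predicted[j1 + k] for k in range(i2 - i1)
            )
        elif tag == "delete":
            counts["missed"] += i2 - i1  # expected but never generated
        elif tag == "insert":
            counts["hallucinated"] += j2 - j1  # generated but not expected
        else:  # "replace": symbols differ over this stretch
            n_exp, n_pred = i2 - i1, j2 - j1
            counts["erroneous"] += min(n_exp, n_pred)
            counts["missed"] += max(0, n_exp - n_pred)
            counts["hallucinated"] += max(0, n_pred - n_exp)
    return counts
```

For example, a prediction that inserts an extra Adjust command between an otherwise correct Move and End would be counted as one hallucinated command under this scheme.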

- Q1: I think that I would like to use this system frequently.
- Q2: I found the system unnecessarily complex.
- Q3: I thought the system was easy to use.
- Q4: I would need the support of a technical person to be able to use this system.
- Q5: I found the various functions in this system were well integrated.
- Q6: I thought there was too much inconsistency in this system.
- Q7: Most people would learn to use this system very quickly.
- Q8: I found the system very cumbersome to use.
- Q9: I felt very confident using the system.
- Q10: I needed to learn a lot of things before I could get going with this system.

| Q# | Baseline | W2W Interface | Q# | Baseline | W2W Interface |
| --- | --- | --- | --- | --- | --- |
| 1 | 2.5 (1.4) | 4.1 (0.8) | 6 | 3.7 (0.9) | 2.9 (1.0) |
| 2 | 3.4 (0.9) | 1.5 (0.8) | 7 | 2.8 (1.0) | 4.4 (1.2) |
| 3 | 2.4 (0.9) | 4.4 (0.8) | 8 | 3.1 (1.2) | 1.6 (0.9) |
| 4 | 4.0 (1.5) | 1.9 (0.7) | 9 | 2.6 (1.0) | 3.6 (1.2) |
| 5 | 2.4 (0.8) | 3.7 (1.0) | 10 | 3.5 (0.9) | 1.8 (1.0) |

| Metric | Baseline | W2W Interface |
| --- | --- | --- |
| System usability | () | () |
| Time taken (min:sec) | : (:) | : (:) |

5.2 User Interaction Study

We assess the usability benefits of W2W compared to the NemoSens AUV interface, which we consider as the baseline. A total of individuals between the ages of to participated in our study; three of them were familiar with subsea mission programming and deployments, whereas the other people had no prior experience.

Evaluation procedure. Individuals were first introduced to both the W2W UI and the NemoSens AUV interface for subsea mission programming. Then, they were asked to program three separate missions on these interfaces. As a quantitative measure, the total time taken to program each mission was recorded; they also completed a user survey to evaluate the degree of user satisfaction and ease of use between the two interfaces. This survey form was based on the system usability scale (SUS) [25]; the results are in Table 3.

User preference analyses. The participants rated W2W’s system usability at 76.25, more than twice the SUS score of the baseline AUV programming interface. The participants generally expressed that the baseline interface is more complex, and they repeatedly asked for assistance while using it. They reported scores that are several standard deviations higher for various features of W2W. They took less than 10% of the time for programming missions on W2W, validating that it is more user-friendly and easier to use than the baseline.
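The overall usability score follows from the per-question ratings through the standard SUS scoring rule: odd-numbered items contribute (rating - 1), even-numbered items contribute (5 - rating), and the sum is scaled by 2.5. The sketch below applies that rule to the per-question means listed in Table 3. The function name is hypothetical, and applying the rule to mean ratings rather than to each participant's responses is a shortcut that works only because the rule is linear.

```python
def sus_score(responses):
    """Standard SUS score (0 to 100) from ten item ratings on a 1-to-5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS needs exactly ten item ratings")
    total = 0.0
    for item, value in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (value - 1) if item % 2 == 1 else (5 - value)
    return 2.5 * total


# Per-question mean ratings copied from Table 3, in order Q1..Q10.
BASELINE_MEANS = [2.5, 3.4, 2.4, 4.0, 2.4, 3.7, 2.8, 3.1, 2.6, 3.5]
W2W_MEANS = [4.1, 1.5, 4.4, 1.9, 3.7, 2.9, 4.4, 1.6, 3.6, 1.8]

print(round(sus_score(W2W_MEANS), 2))       # 76.25
print(round(sus_score(BASELINE_MEANS), 2))  # 37.5
```

Under this calculation the W2W means reproduce the reported score of 76.25, and the baseline means give 37.5, which is consistent with the statement that W2W scored more than twice as high.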

5.3 Subsea Deployment Scenarios

We use a NemoSens AUV [19] for subsea mission deployments. It is a torpedo-shaped single-thruster AUV equipped with a DVL wayfinder, a down-facing HD camera, and a KHz side-scan sonar. As mentioned, the integrated software interface allows us to program various missions and generate waypoints for the AUV to execute in real-time. Once programmed, these waypoints are loaded to the AUV for deployment near the starting location.

In this general practice, the W2W interface is intended as an HMI bridge that transfers the human language into the mission map; the rest of the experimental scenarios remain the same. The mission vocabulary and language rules adopted in W2W correspond to valid subsea missions programmed on an actual robot platform. We demonstrate this with several examples from our subsea deployments in the GoM (Gulf of Mexico) and the Delaware Bay, Atlantic Ocean. Real deployments are shown in Fig. 7 and earlier in Fig. 1.

As demonstrated in Fig. 7, targeted inspection or mapping missions can be programmed with only a few basic language commands and then validated on our 2D interface. In particular, the Track and Move commands are powerful tools that allow programming lawnmower and polygonal patterns fairly easily. Besides, Spiral and Circle commands are suited for more localized mission patterns. These intuitions are consistent with our user study evaluations as well.

Limitations and future work. Despite the accuracy and ease of use, W2W is a single-shot language summarizer, lacking human-machine dialogue capabilities. As discussed earlier in qualitative analyses (see Fig. 6), a major limitation is that W2W often generates hallucinated commands. Hence, the users must try again since the 2D mission maps show inaccurate patterns. This mainly happens when humans speak (instead of type) - as the standard speech-to-text translation packages often generate erroneous texts, which in turn generate wrong mission parameters. A sample failure case is illustrated in Fig. 8. W2W does not provide a method to edit any minor mistakes or specific parameters; hence users need to redo the program from the start.

As such, we are working on extending the mission programming engine with multi-shot generative features that enable memory and dialogue. This would provide the interactivity needed to make corrections to missions. Our overarching goal is to be able to use W2W to seek suggestions such as,

“We want to map a shipwreck at N, W location. The wreck is about ft long, the altitude clearance is ft. Suggest a few mission patterns for mapping the shipwreck and its surroundings.”

Users may engage in subsequent dialogue to find the best possible mission for their intended application, taking advantage of the W2W engine’s knowledge mined from comprehensive mission databases. We will integrate these embodied reasoning and dialogue-based mission programming features in future W2W versions.

6 Conclusions

This paper presents an SLM-driven mission programming framework named Word2Wave (W2W) for marine robotics. The use of natural language allows intuitive programming of subsea AUVs for remote mission deployments. We formulate novel language rules and intuitive command structures for efficient language-to-mission mapping in real-time. We develop a sequence-to-sequence learning pipeline based on the T5-Small model, which demonstrates robust and more efficient performance compared to other SLM/LLM architectures. We also develop a mission map visualizer for users to validate the W2W-generated mission map before deployment. Through comprehensive quantitative and qualitative analyses and a user interaction study, we validate the effectiveness of W2W in real subsea deployment scenarios. In future W2W versions, we will incorporate human-machine dialogue and spontaneous question-answering for embodied mission reasoning. We also intend to explore language-driven HMIs and adapt W2W capabilities for subsea telerobotics applications.

Acknowledgements

This work is supported in part by the National Science Foundation (NSF) grants # and #. We are thankful to Dr. Arthur Trembanis, Dr. Herbert Tanner, and Dr. Kleio Baxevani at the University of Delaware for facilitating our field trials at the 2024 Autonomous Systems Bootcamp. We also acknowledge the system usability scale (SUS) study participants for helping us conduct the UI evaluation comprehensively.

References

- [1] J. Wang, Z. Wu, Y. Li, H. Jiang, P. Shu, E. Shi, H. Hu, C. Ma, Y. Liu, X. Wang, Y. Yao, X. Liu, H. Zhao, Z. Liu, H. Dai, L. Zhao, B. Ge, X. Li, T. Liu, and S. Zhang, “Large Language Models for Robotics: Opportunities, Challenges, and Perspectives,” arXiv preprint arXiv:2401.04334, 2024.

- [2] C. Zhang, J. Chen, J. Li, Y. Peng, and Z. Mao, “Large Language Models for Human-Robot Interaction: A Review,” Biomimetic Intelligence and Robotics, p. 100131, 2023.

- [3] M. J. Islam, M. Ho, and J. Sattar, “Dynamic Reconfiguration of Mission Parameters in Underwater Human-Robot Collaboration,” in IEEE International Conference on Robotics and Automation (ICRA), pp. 1–8, 2018.

- [4] N. Lucas Martinez, J.-F. Martínez-Ortega, P. Castillejo, and V. Beltran Martinez, “Survey of Mission Planning and Management Architectures for Underwater Cooperative Robotics Operations,” Applied Sciences, vol. 10, no. 3, p. 1086, 2020.

- [5] J. Sattar and G. Dudek, “Underwater Human-robot Interaction via Biological Motion Identification,” in Robotics: Science and Systems (RSS), 2009.

- [6] M. J. Islam, M. Ho, and J. Sattar, “Understanding Human Motion and Gestures for Underwater Human–Robot Collaboration,” Journal of Field Robotics (JFR), vol. 36, no. 5, pp. 851–873, 2019.

- [7] A. Vasilijević, D. Nad, F. Mandić, N. Mišković, and Z. Vukić, “Coordinated Navigation of Surface and Underwater Marine Robotic Vehicles for Ocean Sampling and Environmental Monitoring,” IEEE/ASME transactions on mechatronics, vol. 22, no. 3, pp. 1174–1184, 2017.

- [8] C. M. Gussen, C. Laot, F.-X. Socheleau, B. Zerr, T. Le Mézo, R. Bourdon, and C. Le Berre, “Optimization of Acoustic Communication Links for a Swarm of AUVs: The COMET and NEMOSENS Examples,” Applied Sciences, vol. 11, no. 17, p. 8200, 2021.

- [9] J. Zhou, Y. Si, and Y. Chen, “A Review of Subsea AUV Technology,” Journal of Marine Science and Engineering, vol. 11, p. 1119, 2023.

- [10] J. Lin, H. Gao, R. Xu, C. Wang, L. Guo, and S. Xu, “The Development of LLMs for Embodied Navigation,” arXiv preprint arXiv:2311.00530, 2023.

- [11] S.-M. Park and Y.-G. Kim, “Visual Language Navigation: A Survey and Open Challenges,” Artificial Intelligence Review, vol. 56, no. 1, pp. 365–427, 2023.

- [12] Z. Shao, Z. Yu, M. Wang, and J. Yu, “Prompting Large Language Models with Answer Heuristics for Knowledge-Based Visual Question Answering,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 14974–14983, 2023.

- [13] T. Brooks, A. Holynski, and A. A. Efros, “InstructPix2Pix: Learning to Follow Image Editing Instructions,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 18392–18402, 2023.

- [14] W. Huang, F. Xia, T. Xiao, H. Chan, J. Liang, P. Florence, A. Zeng, J. Tompson, I. Mordatch, Y. Chebotar, P. Sermanet, N. Brown, T. Jackson, L. Luu, S. Levine, K. Hausman, and B. Ichter, “Inner Monologue: Embodied Reasoning Through Planning with Language Models,” arXiv preprint arXiv:2207.05608, 2022.

- [15] R. Yang, M. Hou, J. Wang, and F. Zhang, “OceanChat: Piloting Autonomous Underwater Vehicles in Natural Language,” arXiv preprint arXiv:2309.16052, 2023.

- [16] Z. Bi, N. Zhang, Y. Xue, Y. Ou, D. Ji, G. Zheng, and H. Chen, “OceanGPT: A Large Language Model for Ocean Science Tasks,” arXiv preprint arXiv:2310.02031, 2023.

- [17] C. Raffel, N. Shazeer, A. Roberts, K. Lee, S. Narang, M. Matena, Y. Zhou, W. Li, and P. J. Liu, “Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer,” Journal of Machine Learning Research, vol. 21, no. 140, pp. 1–67, 2020.

- [18] G. S. Andy, L. Mathieu, G. Bill, and L. Damian, “Subsea Robotics-AUV Pipeline Inspection Development Project,” in Offshore Technology Conference, p. D021S024R001, OTC, 2022.

- [19] R. Inc., “NemoSens: An Open-Architecture, Cost-Effective, and Modular Micro-AUV.” https://rtsys.eu/nemosens-micro-auv, 2020.

- [20] J. McMahon and E. Plaku, “Mission and Motion Planning for Autonomous Underwater Vehicles Operating in Spatially and Temporally Complex Environments,” IEEE Journal of Oceanic Engineering, vol. 41, no. 4, pp. 893–912, 2016.

- [21] J. Achiam, S. Adler, S. Agarwal, L. Ahmad, I. Akkaya, F. L. Aleman, D. Almeida, J. Altenschmidt, S. Altman, S. Anadkat, et al., “GPT-4 Technical Report,” arXiv preprint arXiv:2303.08774, 2023.

- [22] A. Palnitkar, R. Kapu, X. Lin, C. Liu, N. Karapetyan, and Y. Aloimonos, “ChatSim: Underwater Simulation with Natural Language Prompting,” in OCEANS 2023-MTS/IEEE US Gulf Coast, pp. 1–7, IEEE, 2023.

- [23] M. Lewis et al., “BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension,” arXiv preprint arXiv:1910.13461, 2019.

- [24] M. Junczys-Dowmunt, R. Grundkiewicz, T. Dwojak, H. Hoang, K. Heafield, T. Neckermann, F. Seide, U. Germann, A. Fikri Aji, N. Bogoychev, A. F. T. Martins, and A. Birch, “Marian: Fast Neural Machine Translation in C++,” in Proceedings of ACL, System Demonstrations, pp. 116–121, 2018.

- [25] J. Brooke, “SUS: A Quick and Dirty Usability Scale,” Usability Evaluation in Industry, vol. 189, no. 194, pp. 4–7, 1996.

- [26] M. Chandarana, E. L. Meszaros, A. Trujillo, and B. D. Allen, “‘Fly Like This’: Natural Language Interface for UAV Mission Planning,” in International Conference on Advances in Computer-Human Interactions, no. NF1676L-26108, 2017.

- [27] S. García, P. Pelliccione, C. Menghi, T. Berger, and T. Bures, “High-Level Mission Specification for Multiple Robots,” in Proceedings of the 12th ACM SIGPLAN International Conference on Software Language Engineering, pp. 127–140, 2019.

- [28] D. Shah, B. Eysenbach, G. Kahn, N. Rhinehart, and S. Levine, “ViNG: Learning Open-World Navigation with Visual Goals,” in IEEE International Conference on Robotics and Automation (ICRA), pp. 13215–13222, 2021.

- [29] G. Huang, “Visual-Inertial Navigation: A Concise Review,” in International Conference on Robotics and Automation (ICRA), pp. 9572–9582, IEEE, 2019.

- [30] M. Chandarana, E. L. Meszaros, A. Trujillo, and B. D. Allen, “Challenges of Using Gestures in Multimodal HMI for Unmanned Mission Planning,” in Proceedings of the AHFE 2017 International Conference on Human Factors in Robots and Unmanned Systems, Springer, 2018.

- [31] M. A. Shah, “A Language-Based Software Framework for Mission Planning in Autonomous Mobile Robots,” Master’s thesis, Pennsylvania State University, 2011.

- [32] D. W. Mutschler, “Language Based Simulation, Flexibility, and Development Speed in the Joint Integrated Mission Model,” in Proceedings of the Winter Simulation Conference, p. 8, IEEE, 2005.

- [33] D. C. Silva, P. H. Abreu, L. P. Reis, and E. Oliveira, “Development of a Flexible Language for Mission Description for Multi-Robot Missions,” Information Sciences, vol. 288, pp. 27–44, 2014.

- [34] A. C. Trujillo, J. Puig-Navarro, S. B. Mehdi, and A. K. McQuarry, “Using Natural Language to Enable Mission Managers to Control Multiple Heterogeneous UAVs,” in Proceedings of the AHFE 2016 International Conference on Human Factors in Robots and Unmanned Systems, pp. 267–280, Springer, 2017.

- [35] F. D. S. Cividanes, M. G. V. Ferreira, and F. de Novaes Kucinskis, “An Extended HTN Language for Onboard Planning and Acting Applied to a Goal-Based Autonomous Satellite,” IEEE Aerospace and Electronic Systems Magazine, vol. 36, no. 8, pp. 32–50, 2021.

- [36] J. Wu, R. Antonova, A. Kan, M. Lepert, A. Zeng, S. Song, J. Bohg, S. Rusinkiewicz, and T. Funkhouser, “TidyBot: Personalized Robot Assistance with Large Language Models,” Autonomous Robots, vol. 47, no. 8, pp. 1087–1102, 2023.

- [37] T. Kwon, N. Di Palo, and E. Johns, “Language Models as Zero-Shot Trajectory Generators,” IEEE Robotics and Automation Letters, 2024.

- [38] D. Shah, B. Osiński, B. Ichter, and S. Levine, “LM-Nav: Robotic Navigation with Large Pre-trained Models of Language, Vision, and Action,” in Conference on Robot Learning, pp. 492–504, 2023.

- [39] J. Gao, B. Sarkar, F. Xia, T. Xiao, J. Wu, B. Ichter, A. Majumdar, and D. Sadigh, “Physically Grounded Vision-Language Models for Robotic Manipulation,” in IEEE International Conference on Robotics and Automation (ICRA), pp. 12462–12469, 2024.

- [40] Z. Mandi, S. Jain, and S. Song, “RoCo: Dialectic Multi-Robot Collaboration with Large Language Models,” in IEEE International Conference on Robotics and Automation (ICRA), pp. 286–299, 2024.

- [41] P. J. B. Sánchez, M. Papaelias, and F. P. G. Márquez, “Autonomous Underwater Vehicles: Instrumentation and Measurements,” IEEE Instrumentation & Measurement Magazine, vol. 23, pp. 105–114, 2020.

- [42] D. Wei, P. Hao, and H. Ma, “Architecture Design and Implementation of UUV Mission Planning,” in 2021 40th Chinese Control Conference (CCC), pp. 1869–1873, IEEE, 2021.

- [43] I. A. McManus, A Multidisciplinary Approach to Highly Autonomous UAV Mission Planning and Piloting for Civilian Airspace. PhD thesis, Queensland University of Technology, 2005.

- [44] P. Oliveira, A. Pascoal, V. Silva, and C. Silvestre, “Mission Control of the MARIUS Autonomous Underwater Vehicle: System Design, Implementation, and Sea Trials,” International Journal of Systems Science, vol. 29, no. 10, pp. 1065–1080, 1998.

- [45] J. González-García, A. Gómez-Espinosa, E. Cuan-Urquizo, L. G. García-Valdovinos, T. Salgado-Jiménez, and J. A. Escobedo Cabello, “Autonomous Underwater Vehicles: Localization, Navigation, and Communication for Collaborative Missions,” Applied Sciences, vol. 10, no. 4, p. 1256, 2020.

- [46] C. Whitt, J. Pearlman, B. Polagye, F. Caimi, F. Muller-Karger, A. Copping, H. Spence, S. Madhusudhana, W. Kirkwood, L. Grosjean, et al., “Future Vision for Autonomous Ocean Observations,” Frontiers in Marine Science, vol. 7, p. 697, 2020.

- [47] S. S. Enan, M. Fulton, and J. Sattar, “Robotic Detection of a Human-comprehensible Gestural Language for Underwater Multi-human-robot Collaboration,” in 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 3085–3092, IEEE, 2022.

- [48] A. Abdullah, R. Chen, I. Rekleitis, and M. J. Islam, “Ego-to-Exo: Interfacing Third Person Visuals from Egocentric Views in Real-time for Improved ROV Teleoperation,” in review, arXiv preprint arXiv:2407.00848, 2024.

- [49] F. Xu, Q. Zhu, S. Li, Z. Song, and J. Du, “VR-Based Haptic Simulator for Subsea Robot Teleoperations,” in Computing in Civil Engineering, pp. 1024–1032, 2021.

- [50] N. J. Hallin, B. Johnson, H. Egbo, M. O’Rourke, and D. Edwards, “Using Language-Centered Intelligence to Optimize Mine-Like Object Inspections for a Fleet of Autonomous Underwater Vehicles,” UUST’09, 2009.

- [51] M. J. Islam, J. Mo, and J. Sattar, “Robot-to-robot Relative Pose Estimation using Humans as Markers,” Autonomous Robots, vol. 45, no. 4, pp. 579–593, 2021.

- [52] J. Wu, B. Yu, and M. J. Islam, “3D Reconstruction of Underwater Scenes Using Nonlinear Domain Projection,” in 2023 IEEE Conference on Artificial Intelligence (CAI), pp. 359–361, IEEE, 2023. Best Paper Award.

- [53] R. Capocci, G. Dooly, E. Omerdić, J. Coleman, T. Newe, and D. Toal, “Inspection-Class Remotely Operated Vehicles—A Review,” Journal of Marine Science and Engineering, vol. 5, no. 1, p. 13, 2017.

- [54] VideoRay, “OctoView: A Cutting Edge Mixed Reality Interface for Pilots and Teams.” https://videoray.com/labs/, 2024.

- [55] M. J. Islam, “Eye On the Back: Augmented Visuals for Improved ROV Teleoperation in Deep Water Surveillance and Inspection,” in SPIE Defense and Commercial Sensing, (Maryland, USA), SPIE, 2024.

- [56] F. Xu, T. Nguyen, and J. Du, “Augmented Reality for Maintenance Tasks with ChatGPT for Automated Text-to-Action,” Journal of Construction Engineering and Management, vol. 150, no. 4, 2024.

- [57] M. Laranjeira, A. Arnaubec, L. Brignone, C. Dune, and J. Opderbecke, “3D Perception and Augmented Reality Developments in Underwater Robotics for Ocean Sciences,” Current Robotics Reports, vol. 1, pp. 123–130, 2020.

- [58] F. Gao, “Mission Planning and Replanning for ROVs,” Master’s thesis, NTNU, 2020.

- [59] J. E. Manley, S. Halpin, N. Radford, and M. Ondler, “Aquanaut: A New Tool for Subsea Inspection and Intervention,” in OCEANS 2018 MTS/IEEE Charleston, pp. 1–4, IEEE, 2018.

- [60] P. Parekh, C. McGuire, and J. Imyak, “Underwater Robotics Semantic Parser Assistant,” arXiv preprint arXiv:2301.12134, 2023.

- [61] C. R. Samuelson and J. G. Mangelson, “A Guided Gaussian-Dirichlet Random Field for Scientist-in-the-Loop Inference in Underwater Robotics,” in 2024 IEEE International Conference on Robotics and Automation (ICRA), pp. 9448–9454, IEEE, 2024.

- [62] I. C. Rankin, S. McCammon, and G. A. Hollinger, “Robotic Information Gathering Using Semantic Language Instructions,” in 2021 IEEE International Conference on Robotics and Automation (ICRA), pp. 4882–4888, IEEE, 2021.

- [63] E. Potokar, S. Ashford, M. Kaess, and J. G. Mangelson, “HoloOcean: An Underwater Robotics Simulator,” in International Conference on Robotics and Automation (ICRA), pp. 3040–3046, IEEE, 2022.

- [64] M. M. M. Manhães, S. A. Scherer, M. Voss, L. R. Douat, and T. Rauschenbach, “UUV Simulator: A Gazebo-Based Package for Underwater Intervention and Multi-Robot Simulation,” in Oceans MTS/IEEE Monterey, pp. 1–8, 2016.

- [65] M. Prats, J. Perez, J. J. Fernández, and P. J. Sanz, “An Open Source Tool for Simulation and Supervision of Underwater Intervention Missions,” in IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 2577–2582, 2012.

- [66] V. Agafonkin, “Leaflet.” https://leafletjs.com/, 2010.

- [67] J. Devlin et al., “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding,” arXiv preprint arXiv:1810.04805, 2018.

- [68] A. Choudhary, M. Alugubelly, and R. Bhargava, “A Comparative Study on Transformer-based News Summarization,” in International Conference on Developments in eSystems Engineering (DeSE), pp. 256–261, 2023.

- [69] K. Papineni, S. Roukos, T. Ward, and W.-J. Zhu, “BLEU: A Method for Automatic Evaluation of Machine Translation,” in Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, pp. 311–318, 2002.

- [70] S. Banerjee and A. Lavie, “METEOR: An Automatic Metric for MT Evaluation with Improved Correlation with Human Judgments,” in Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization, pp. 65–72, 2005.