Wireless and Service Allocation for Mobile Computation Offloading with Task Deadlines

Abstract

In mobile computation offloading (MCO), mobile devices (MDs) can choose to either execute tasks locally or to have them executed on a remote edge server (ES). This paper addresses the problem of assigning both the wireless communication bandwidth needed, along with the ES capacity that is used for the task execution, so that task completion time constraints are satisfied. The objective is to obtain these allocations so that the average power consumption of the mobile devices is minimized, subject to a cost budget constraint. The paper includes contributions for both soft and hard task completion deadline constraints. The problems are first formulated as mixed integer nonlinear programs (MINLPs). Approximate solutions are then obtained by decomposing the problems into a collection of convex subproblems that can be efficiently solved. Results are presented that demonstrate the quality of the proposed solutions, which can achieve near optimum performance over a wide range of system parameters.

Index Terms:

Edge computing, mobile computation offloading, soft and hard task completion deadlines, cost budget constraints, power efficiency.I Introduction

Mobile computation offloading (MCO) can be used to improve mobile device (MD) performance by running computational tasks on a remote cloud server rather than executing them locally [1, 2, 3]. Since the energy needed for task execution is incurred by the cloud server, a reduction in mobile device energy consumption can often be obtained [4, 5, 6, 7, 8, 9, 10]. During MCO, wireless communications is used by the MD to communicate with the cloud server. This interaction incurs MD energy use that would not otherwise exist if the task were executed at the MD. MCO also incurs added latency due to the time needed for the MD to interact with the cloud server [11, 12]. An edge server (ES) located close to the network base stations is typically used to reduce this delay by providing high interconnection bandwidth between the base station (BS) and the ES [13].

The question of whether a given task should be offloaded has been studied extensively [14, 15, 16, 17, 18, 19, 20, 21, 22, 23]. It is clear from this work that in order to obtain good performance, the offloading decisions should incorporate both the limited edge server computational capacity [21, 22, 23], and the temporal evolution of the system during the computation offload. This includes the queueing behaviour experienced by offloaded tasks awaiting execution at the ES [18, 19, 20]. Prior work has also considered the question of how best to configure system resources so that MCO is best accommodated [14, 18, 19, 20, 24, 25]. These are the issues that are considered in our paper and involve the tradeoffs between wireless communication and edge server capacity assignment and how these affect the delay performance experienced by the MDs.

The wireless and execution capacity assignment problem in MCO can be informally stated as follows. A network leaseholder (NL) purchases both wireless channel capacity and edge server execution services, subject to a cost budget constraint. The leased resources are then used to provide MCO to a large set of mobile devices [26]. When an MD generates a task for execution, there is an associated deadline, which gives the time by which task execution should be completed with a high degree of certainty [27]. The objective is to find a joint wireless and ES resource assignment that minimizes the mean MD power consumption subject to the budget constraint and constraints on the task completion times. Note that this problem is different than that of network slice creation [28]. In this case, the NL simply purchases services from the network owner (NO), who prices the cost of unit wireless channel and computational resources. Due to the edge server placement, we consider the case where the dominant latencies are that of wireless access and edge server execution [13].

The paper is novel in that it includes formulations for both soft and hard task completion time deadlines. In the soft deadline case, the wireless and edge server capacity assignments are designed so that the probability of task completion time deadline violation is upper bounded. In the hard deadline case, task execution deadlines must always be respected, which is accomplished by including concurrent local execution (CLE) [29] into the problem formulation. In CLE, local execution of the task may be initiated while offloading is ongoing, so that the task completion time deadline is always met.

The inclusion of task deadline constraints significantly increases the difficulty of the problem compared to that of prior work with no completion time requirements or that uses a mean delay criterion [30, 31]. In order to obtain solutions to the problem, a queuing model is used to obtain the delay distribution experienced by tasks that are offloaded to the ES [32, 31]. This model is incorporated into the resulting optimization problems, which are formulated as mixed integer nonlinear programming problems (MINLPs) that are computationally hard to solve exactly. Approximate solutions are obtained by decomposing the non-convex non-linear formulation into a collection of convex subproblems that can be solved efficiently, and then picking the best of these solutions.

A variety of results are presented that characterize the tradeoffs between task deadline violation, average MD power consumption and the cost budget. Our results show the quality of the proposed solutions, which can achieve close-to-optimum performance for a wide range of system parameters. The results also show that with CLE, the proposed solution not only guarantees respecting all hard task completion deadlines, but does so with only slightly higher MD power consumption when compared to the soft task completion deadlines solution with a small deadline violation probability. On the other hand, we show that there is an apparent trade-off in the case of soft task completion deadlines between the average power consumption and the deadline violation probability. Namely, the average MD power consumption of our solution is significantly reduced when a higher deadline violation probability is tolerable.

The main contributions of the paper are summarized below.

-

•

This paper addresses the problem of assigning computational and wireless channel resources for MCO, subject to task execution completion time deadlines. The work is the first that generates joint resource assignments for both soft and hard task deadlines using very general system modelling assumptions compared to prior work. The soft deadline case aims to create assignments so that the probability of task completion time deadline violation is upper bounded. In the hard deadline case, the paper is also unique in that it creates resource assignments where task completion time deadlines are always satisfied. This is done by incorporating CLE into the problem formulations. For this reason, this is the first paper that obtains system resource assignments for MCO that ensure that task completion time deadlines are always satisfied.

-

•

Modeling both soft and hard job completion time targets significantly increases the difficulty of the problem compared to prior work with no completion time requirements or that uses a mean delay criterion [30] [31]. In both deadline cases, the paper addresses this by incorporating an ES queueing system into the problem formulation that models the delay distribution experienced by arriving tasks. The assignment problem is addressed by inverting the estimated probability density function (PDF) of the task completion time and incorporating it into the optimizations. These resource assignments are obtained under very general modeling assumptions, where the wireless channels are modeled as arbitrary base station specific sets of Markov processes and task execution times have a general probability distribution.

-

•

The problems are first formulated as MINLPs, with integral decision variables for the number of wireless channels reserved, and a continuous decision variable for the portion of ES reserved. Even the relaxations of these MINLPs are difficult to solve, since they are non-convex. Hence, instead of following the common practice of solving the relaxation and rounding the fractional solution, we observe that the discretization of the continuous variable and the replacement of the discrete channel variables by approximate functions of the continuous blocking probabilities, allows us to break the original non-convex MINLPs into collections of convex subproblems, that can be solved efficiently. Our solutions are approximate, and their accuracy depends on both the discretization granularity and the approximation functions used for blocking probabilities. On the other hand, they are based on very general assumptions, i.e., the existence of convex upper bound approximations of the inversion of blocking probabilities. The more restricted the system model is, the better these approximations are.

The remainder of the paper is organized as follows. In Section II the prior work most related to our paper is reviewed. The system model and problem formulation is then described in Section III. In Section III-A, the general design problem is first considered assuming soft task completion time deadlines, where the probability of deadline violation is bounded. Following this, in Section III-B a formulation is described when task completion times are subject to hard deadlines. The problem formulations in both cases are non-convex and difficult to deal with directly using conventional optimization approaches. In Section IV, approximation solutions are proposed where the original problems are decomposed into convex subproblems that can be efficiently solved. Both the soft and hard deadline cases are considered in Sections IV-A and IV-B. Section V then introduces some common system assumptions used in the remainder of the paper when solving the optimizations. Both the soft and hard deadline cases are then treated in detail in Sections V-A and V-B. In Section VI simulation results that demonstrate the proposed designs are given. Both the single class and multiple classes of tasks cases are considered in Sections VI-A and VI-B. Finally, we present our conclusions of the work in Section VII.

II Related Work

A large amount of prior MCO work considers the problem based on system state inputs sampled at task generation times, i.e., the models assume that the system is static throughout the offload period [14, 18, 19, 20, 24, 25, 21, 22, 15, 17, 33, 34, 23]. As in our paper, task offloading decisions become more complex when the MD interacts with the network over wireless channels that may change randomly during the offload. Reference [32] studies a distributed computation offloading problem with delay constraints using stochastic communication channels but does not take into account the energy consumption incurred during task offloading. The work in [30] uses a Markov decision process that analyzes the mean task delay and the average system throughput. Unlike our paper, a throughput maximization problem is formulated with constraints on the average task delay, rather than using the delay distribution. In [31], task offloading is modeled as a game using a network of queues to obtain the end-to-end delay. The problem is transformed into one with a generalized Nash equilibrium solution that captures the conflicting interests in resource allocation among mobile network operators and computing resource providers. In references [30] and [31] the average delay is considered rather than the stringent types of soft and hard delay constraints considered in our paper. Reference [35] considers task offloading with statistical QoS guarantees (i.e., tasks are allowed to complete before a given deadline with a probability above a given threshold) to maximize the MD energy efficiency. The energy efficiency is defined as the ratio of the overall executed (transmitted) bits of tasks to the total energy consumption of the MDs. Statistical computation and transmission models are introduced to quantify the correlation between the statistical quality of service (QoS) guarantee and task offloading process. Unlike the models used in [31] and [35], our paper uses a task offloading and resource allocation formulation that uses very general system model assumptions, including base station specific sets of Markov processes for channel modelling.

| References | Joint channel and computation resource assignment | Soft task deadlines | Hard task deadlines | Resource expense | Temporal evolution |

|---|---|---|---|---|---|

| [17][21][22][23] | |||||

| [24][36] | ✓ | ||||

| [26] | |||||

| [30][31] | ✓ | ✓ | |||

| [32] | ✓ | ||||

| [35] | ✓ | ✓ | ✓ | ||

| Our paper | ✓ | ✓ | ✓ | ✓ | ✓ |

Reducing both mobile energy consumption and task execution time is a common objective in mobile computation offloading. The work in [36] investigates a latency minimization problem in a multi-user time-division multiple access system with joint communication and computation resource allocation. Our paper, instead, uses a soft task deadline criterion based on modelling the distributions of both upload and execution time delays. Hard completion time constraints are considered in references [17, 21, 22, 33, 32, 23]. However, unlike our work, they consider the hard completion time requirement as a constraint in the problem formulation. For this reason, if the provided network resources or the MD transmit power are insufficient, the hard completion time constraints may not be satisfied. In our work, we avoid this infeasibility by applying CLE that ensures that hard completion time constraints are always satisfied. A benefit from integrating CLE into the problem formulation is that we no longer require the hard completion time constraints in the problem formulation. The objective in [21] is to minimize the energy consumption of the entire system, and in references [22] and [33], the objective is to minimize the total energy consumption of all MDs. Instead of satisfying delay constraints, the work in [24, 25, 34] optimize a utility function that is a weighted sum of task completion time and energy consumption. Unlike the above work, two different kinds of delay constraints are introduced in our paper, i.e., soft deadlines captured by the statistics of the completion time of the tasks and hard deadlines that are always satisfied by CLE.

Prior work has considered the optimization of wireless network and computational server resources to improve MCO performance [14, 18, 19, 20, 24, 25]. In particular, offloading decisions and base station associations are optimized with transmission power and channel assignments in a cellular network to minimize the total energy consumption of all MDs, subject to task’s latency constraints [17]. Reference [21] studies the problem of task offloading and channel resource allocation for ultra-dense networks and minimizes the total energy consumption of the system with a limited delay tolerance. The work in [22] studies MCO by considering application latency fairness and minimizes MD energy consumption by jointly optimizing the offloading ratio, channel assignments, and channel time allocations. Reference [23] investigates the power minimization problem for meeting the service delay requirements in multi-cell multi-user mobile edge computing networks. Channel assignment and power allocation problems are considered jointly. The work in [26] studies the joint resource management of link scheduling, channel assignment and power control for device-to-device communication assisted multi-tier fog computing with the objective of maximizing the network operator profit under deadline requirements. It considers the service charge collected from all end users, total expense in renting third-party fog nodes, and the energy cost of the ES. All of this work [21, 22, 17, 23, 26] optimizes radio resources and offloading decisions without considering edge server computational capability. The work in [24] investigates relay-assisted computation offloading to minimize the weighted sum of task execution delay and the energy consumption by jointly optimizing the offloading ratio, bandwidth allocation, processor speeds, and transmit power.

Table I summarizes the work described above that is most related to our paper, and compares it to this paper on five key properties: {LaTeXdescription}

The column denotes work where both channel and computation resource assignments are jointly generated. Our work differs from the rest in that we assign aggregate channel resources from the network operator to each base station so that it can support its associated mobile device population, i.e., we do not allocate channel and computation resources of each BS and ES to individual MDs.

The work selected in this column considers some form of soft (i.e., statistical) task deadlines. However, the models we use in this paper are quite different with more general underlying assumptions. Since our soft deadline model aims to set bounds on the probability of task deadline violation, we model the complete delay distribution experienced by executed tasks. This includes the base station channel delay (which is modeled by base station specific Markov processes) and the queueing delay experienced at the ES, where execution times can have a general distribution.

Although there is other work selected in this column, a significant difference exists compared with our paper, which we have already discussed above. Namely, our work can always satisfy all hard task deadlines by incorporating the CLE mechanism into the modeled system. The related work, instead, considers the existence of hard deadlines as a problem constraint that may result in problem infeasibility, which can never happen in our case.

This column denotes work where the resources provided to the MDs are charged by a third-party (e.g., network operator). The work selected considers computational resource expense but not on the wireless base station side. A network profit maximization problem is studied where an expense budget is not considered, unlike the case in our work.

Temporal evolution means that the offload periods may include stochastic changes to the wireless channels and the ES, so that this information must be modeled in the problem formulation, as in our paper. The randomness modeled in the selected work has different underlying assumptions compared to our paper.

III System Model and Problem Formulation

As shown in Fig. 1, we consider a network that consists of BSs that are owned and operated by a NO. The set of BSs is denoted by and indexed by . The network also contains an ES. Tasks generated by an MD can be offloaded through the wireless network and executed on the ES.

The NO permits a NL to rent wireless communication and ES computational capacity that the NL can use for mobile computation offloading for its MDs. When this is done, for each BS , there are up to available channels that can be selected by the NL. The cost of renting a channel from BS is set by the NO to . When a channel is included in the agreement, the NO agrees to provision its network so that sufficient resources are available to allow the traffic generated on the channel to be carried to the ES with an acceptable delay with a high degree of certainty. Since the ES is located at the edge of the network, we focus on the dominant sources of delay, i.e., wireless access at the BSs and task execution at the ES [13].

In order to use the computing resources at the ES, the NL must also lease CPU resources at the ES. The cost (based on the number of CPU cycles per second) for leasing on the CPU resource is denoted by . The maximum available CPU speed for rental is CPU cycles per second.

When an agreement is made between the NO and NL, is defined as the number of channels from BS that are included, and is defined as the fraction of maximum CPU speed at the ES that is included, i.e., the CPU speed available for the NL will be . It is assumed that the NL has a cost budget, denoted by . Accordingly, the total rent must satisfy the following constraint:

| (1) |

There are classes of tasks generated by the MDs, which may need to be offloaded to the ES. Let be the set of task classes. The class of a task is defined by parameters , , and , where is the input data size in bits, is the computation load in number of CPU cycles, and is the deadline of the task in seconds. In what follows, is the task deadline rounded down to time slots of the same duration as the wireless transmission time slots (see below). The probability of a task generated by an MD belonging to class is denoted by ; we assume that this probability is known, e.g., by observing the past history of offloading requests.

Our objective is to create a NO/NL contract for MCO. In MCO, tasks generated by an MD can be executed either locally (at the MD itself) or offloaded through the network and executed on the ES. We focus on two goals, each depending on how hard the task deadline constraint is. Our first goal is to accomplish this so that the mean mobile power consumption is minimized subject to the cost budget constraint and such that the probability that task execution deadline violation is bounded, i.e., the deadline constraints can be violated, albeit rarely. Our second goal is to create a power-efficient, budget-respecting assignment which respects all task deadlines, i.e., deadline constraints are hard; for that purpose we will employ CLE [29].

We model the wireless channels between the MDs and the BSs as discrete-time Markov processes. It is assumed that there are channel models for BS , which are a function of the radio propagation environment that the MDs experience at that BS. is the set of all wireless channel models in BS . For each of the channel models, the Markovian transition probabilities are defined in the usual way, i.e., given the channel state in the current time slot, there is a probability associated to its transition to another state in the next time slot. The time slot duration is defined to be seconds. A class task, offloaded to BS by the MD, encounters channel model with probability ; as with task generation probabilities above, we assume that this probability is also known, e.g., by observing the past history of offloading requests.

To obtain the design, the decision to offload the execution of a task is made using a local execute on blocking (LEB) mechanism as follows. When an MD in BS generates a class task, the MD offloads the task if at least one of the channels is available for immediate use. Otherwise, the MD executes the task locally. When a channel is available, the MD begins the offload by uploading the task bits needed for execution on the ES. The LEB mechanism is useful in that either local execution or remote offloading is initiated immediately at task release time, which may be advantageous when task deadlines are tight. It also provides a simple mechanism for assessing when the current level of local congestion is high, which would suggest that local execution is beneficial.

Tasks arrive at BS according to a stationary process with average arrival rate tasks per second. According to the LEB mechanism, a new task is blocked from BS channel access if all the channels are busy with uploading other tasks. We denote the task blocking probability at BS by , which is a function of . For the sake of notation simplicity, we use in the rest of the paper. Let be the power needed in the MD to process tasks. When a class task is blocked from offloading and executed locally, the local execution time is given as , where is the MD’s execution speed in number of CPU cycles per time slot111 is normally measured in CPU cycles, but in order to apply CLE and to simplify the system, we round it up to a multiple of .. Define as the average local execution time of tasks. Since the task blocking is caused by channel access, which is the same for all task classes, we have . The average energy consumption for executing a task locally is given by . Consider all the tasks that are generated in BS and blocked from offloading in one second, then the mean energy for executing these tasks locally is

| (2) |

which is the average power consumption of the MDs.

The wireless upload transmission time of a th class task in BS when the wireless channel model is , is measured in time slots. The mean wireless upload transmission time for th class tasks in BS according to channel model can be calculated, since can be computed for all from channel model . Moreover, the mean wireless transmission time for BS is

| (3) |

Under the stated assumptions, the aggregate mean task arrival rate at the ES is given by

| (4) |

As is normally the case for stability in a single server queueing system, the following constraint must always be satisfied,

| (5) |

where denotes the mean service rate at the ES, i.e, . As will become clear later, we can relax this constraint to without affecting our proposed solutions.

Let be the delay (including both queueing and execution time) experienced by a th class task from BS at the ES, under wireless channel model . It takes continuous values, and , for any , is a function of and . In what follows, is the discretization of , measured in time slots; its distribution is calculated by

| (6) |

for any number of time slots . Table II lists the related notation and their associated meanings.

| Notation | Definition | Units |

|---|---|---|

| Set of BSs, | ||

| Set of task classes, | ||

| Set of channel models of BS , | ||

| Number of available channels in BS | ||

| Maximum available ES capacity | CPU cycles/sec | |

| Unit price of wireless channels from BS | $ per channel | |

| Unit price of ES capacity | $ per bps | |

| Number of channels from BS | ||

| Fraction of maximum ES capacity | ||

| Cost budget | $ | |

| Data size of a task in class | bits | |

| Computation load of a task in class | CPU cycles | |

| Deadline of a task in class | sec | |

| Discretized deadline of a task in class | Time slots | |

| Probability of a task belonging to class | ||

| Probability of a class task in BS with channel model | ||

| Blocking probability in BS | ||

| Mean service rate at the ES | Tasks/sec | |

| Average task arrival rate in BS | Tasks/sec | |

| Aggregate average task arrival rate at ES | Tasks/sec | |

| Time slot | sec | |

| Wireless transmission time of a th class task in BS with channel model | Time slots | |

| Mean wireless transmission time of a th class task in BS with channel model | Time slots | |

| Mean task uploading transmission time in BS | Time slots | |

| Execution time at ES for class tasks from BS with channel model | sec | |

| Discretized value of | Time slots | |

| Latest feasible starting time for local execution | Time slots | |

| Tolerable probability a class task exceeds deadline | ||

| Local energy consumption per time slot | Joules | |

| Wireless transmission energy per time slot | Joules | |

| Average MD power consumption for uploading tasks in BS | Watts | |

| Average MD power consumption for uploading and executing tasks in BS | Watts |

III-A Problem Formulation with Soft Deadlines

We consider the distribution of total delay for an offloaded task, which is the sum of the data upload delay and the task execution at ES delay , for BS , task class , and channel model . Note that both delays are random variables. As mentioned earlier, the data transmission delay from the BS to the ES is negligible. In addition, in this paper we consider the case of a very small amount of data returned once the execution is completed, and, therefore, we consider only uploading delays between MD and BS.

Following common practice (e.g., [27]) in modelling soft deadlines along the lines of QoS requirements, a th class task in BS under wireless channel model , must have a total delay satisfying

| (7) |

where is the (given) tolerated probability the completion time of a class task exceeds its deadline.222The case corresponds to the case of hard deadlines, and will be dealt with in the next section. Note that takes discrete values (number of time slots), takes discrete values (number of CPU cycle periods), while is continuous (in seconds), so (7) assumes that all quantities are first converted to secs. Its LHS is a function of .

The joint probability distribution of total delay is

| (8) |

where is the maximum value that can take, since is the execution time at the ES without queueing.

The average power consumption of MDs in BS to upload tasks that are granted channels for offloading is

| (9) |

where is the transmission energy per time slot used by the MD for uploading the task bits. Therefore, the expected average power consumption of the MDs for uploading and executing tasks arriving at BS is .

Our objective is to create an allocation that minimizes under the cost budget and deadline constraints (1) and (7). The problem can be formulated as follows:

| (10) | |||||

| (11) | |||||

| (12) | |||||

| (13) | |||||

| (14) | |||||

| (15) | |||||

Constraints (11) and (12) are constraints (1) and (7). Constraint (13) is the (relaxed) queue stability requirement for ES; it is equivalent to (5), since equality leads to infinite mean queueing delay, which is never optimal. The optimization problem (10)-(15) is a mixed integer nonlinear programming (MINLP) problem. Constraint (14) ensures that the number of channels assigned does not exceed the maximum number available in each BS. Even the fractional relaxation of MINLP problem (10)-(15) is non-convex, due to its objective and constraints (12), and, as a result, it is computationally inefficient to solve it exactly. Hence we are going to propose approximate solutions for it.

III-B Problem Formulation with Hard Deadlines

For the case of hard deadline constraints, i.e., when the task deadline must be respected, we employ CLE [29]. In CLE, local execution of the task may be initiated while offloading is ongoing, so that the task deadline is always met, even if offloading fails to finish in time due to the stochastic nature of the wireless channels. Guaranteeing task completion before its deadline may incur additional costs (due to potentially simultaneous local and remote execution of the same task).

When CLE is employed, and in order to ensure that the local execution of a task from class finishes by its deadline, the latest feasible starting time for local execution is

| (16) |

The expected wireless transmission power is still given by (9). However, due to the overlap of offloading and local execution because of CLE, there is an extra mean power consumption due to a (potential) overlap with local execution. This expected overlap power consumption is

| (17) |

where is the number of time slots needed to complete the offloaded task, and is the offloading and local execution overlap. Note that is a function of and .

In case the task offloading goes beyond the finish of the local execution of a task, there is an extra power consumption incurred, whose expected value is

| (18) |

Hence, the expected power consumption of MDs for offloaded tasks in BS in one second is

| (19) |

and the expected power consumption of MDs for tasks arriving at BS in one second is .

As before, our objective is to minimize the total expected power consumption of the MDs for uploading and executing the tasks that are generated in one second, but now subject to hard deadline constraints. The problem is formulated as follows:

| (20) | ||||

| (21) | ||||

| (22) | ||||

| (23) | ||||

| (24) |

IV General Approximate Allocation Solutions

In this section, we propose approximate solutions for optimization problems (10)-(15) and (20)-(24), by decomposing them into convex optimization subproblems which can be solved efficiently.

IV-A Approximate Solution for Soft Deadlines

In this subsection, we propose an approximate solution for the optimization problem (10)-(15) by decomposing it into several convex subproblems that can be solved efficiently, solve them, and then keep the best solution. More specifically, we discretize variable by breaking into equal segments, so that takes values , for . With fixed, we show that the relaxation of (10)-(15) can be approximated by a convex optimization problem, which can be solved in polynomial time. The resulting (fractional) ’s are then rounded to integer values (and this is another source of suboptimality for our solution method). After solving the resulting problems, we output the minimum solution . Obviously, the quality of the approximation depends on the discretization parameter .

We consider the relaxed version of problem (10)-(15), i.e., constraint (14) has been replaced by . With fixed, we show that the non-convex problem (10)-(15) can be transformed into an equivalent convex optimization problem with the ’s as the decision variables. First, we concentrate on constraints (12), (13). Note that is a monotonically decreasing function of the aggregate mean task arrival rate . Hence, by binary search in the range , we can approximate within any desired accuracy the maximum possible value of that satisfies constraints (12) for all . Let be this maximum value (note that , so stability is ensured). Using (4), constraints (12), (13) can be replaced by constraint

| (25) |

Next, we note that the blocking probability is monotonically decreasing in ; let be the blocking probability when . Then constraints (14) can be replaced by the equivalent constraints

| (26) |

Constraint (11) is the only remaining constraint with an explicit dependence on the ’s. Since is a function of , one could potentially use its inverse to replace with a function of . However, such an inversion function may not exist explicitly (and even if it does, it may be non-convex). In its stead, we can use a convex upper bound approximation of the inversion of blocking probability, so that

| (27) |

Hence, the new convex optimization problem that approximates the original one when is fixed, is the following:

| (28) | |||||

| (29) | |||||

| (30) | |||||

| (31) | |||||

After solving (28)-(31) and obtaining the ’s, we can compute the largest integral which achieves a blocking probability equal to or bigger than , for all .

Complexity Analysis: Algorithm GCASD (cf. Algorithm 1) codifies the solution method described above. Problem (28)-(31) is convex, and can be solved in time , for a polynomial . Line 6 takes time (recall that there are BSs, and is the largest ). Hence Algorithm 1 has a running time of , where is the granularity of , and is the binary search cost of line 4 of the algorithm, in order to get a within of the optimal.

IV-B Approximate Solution for Hard Deadlines

In this subsection, we use a similar approach in order to solve (20)-(24). Here we decompose the original problem into several subproblems by discretizing both variable as before, and . Then, for every possible (fixed) pair , the non-convex problem (20)-(24) can be transformed into a convex optimization problem with as its decision variables, which can be solved in polynomial time. By calculating the pair whose subproblem achieves minimum average power consumption, integer values for the original optimization problem are obtained from .

In more detail, we discretize by breaking into equal segments, and then we discretize by breaking interval into equal segments. At iteration of this discretization, and are fixed. Then can be calculated directly for any and , and the original optimization problem (20)-(24) becomes

| (32) | |||||

| (33) | |||||

| (34) | |||||

| (35) | |||||

This is still a non-convex non-linear integer program, which cannot be solved efficiently. As in Section III, and by using (26)-(27), it becomes

| (36) | |||||

| (37) | |||||

| (38) | |||||

| (39) | |||||

Problem (36)-(39) is a convex program and can be solved efficiently. Hence, we can obtain the optimal blocking probabilities , corresponding to a pair . We can compute the largest integral which achieves blocking probabilities no smaller than , for all , by using binary search based on the fact that the ’s are decreasing functions of the ’s. After collecting the solutions for all iterations , we output the minimum cost one .

Complexity Analysis: Algorithm GCAHD (cf. Algorithm 2) codifies the solution method described above. Problem (36)-(39) is convex, and can be solved (line 4) in time , for a polynomial . Line 5 takes time (recall that there are BSs, and is the largest ). Hence Algorithm 2 has a running time of , where and are the granularity of and respectively.

V Task Arrival and Offloading Assumptions

In the remainder of this paper, we assume that tasks arrive from the MDs at BS according to a Poisson process with mean arrival rate . The Poisson process assumption is commonly made in this type of situation, since the number of mobile devices in a given coverage area is typically quite large, each contributing to a small fraction of the total load [37]. In this case, we can invoke the insensitivity property of the Erlang B formula, to compute the probability of blocking at each BS [38]. Note that, typically, the Erlang B result is derived using the Markovian queue, which assumes exponentially distributed channel upload (i.e., service) times [39]. Due to insensitivity, the result holds for any service time distribution with the same mean. Therefore, the blocking probability for a task arriving at BS is

| (40) |

where denotes the mean service rate, which can be calculated by . Function (40) is convex in [40].

Note that due to the Poisson process task arrival assumption, the channel state sampled by arriving tasks is given by the steady-state equilibrium probability distribution of the Markovian channel at that MD. This follows from the PASTA rule [41].

We assume that the aggregate task arrival process at ES is Poisson [42], and, therefore, arriving tasks sample the asymptotic equilibrium state distribution of ES. This approximation is justified due to the mixing of arrivals at ES from BSs operating independently. In this case, ES can be modeled as an queue, whose waiting time is given by the random variable . Given and knowledge of the data upload distribution, the distribution of can be obtained by numerical inversion of the probability generating function of system waiting time for [37]. In this case, the execution time of a task at the ES depends only on which class it belongs to, i.e., , for all and , and . Thus, can be easily obtained.

When applying algorithms GCASD (Algorithm 1) and GCAHD (Algorithm 2) in this case, the upper bound used in problem (28)-(31) and (36)-(39) becomes [43]:

| (41) |

V-A Approximation with Soft Deadlines

V-B Approximation with Hard Deadlines

VI Simulation Results

In this section, we present simulation results to demonstrate the performance of our proposed algorithms GCASD (Algorithm 1) and GCAHD (Algorithm 2). We adopt the two-state Gilbert-Elliot channel model [44], i.e., the channel states change by following a Markov chain with two states, “Good” (G) and “Bad” (B). This model is commonly used to characterize the effects of burst noise in wireless channels, where the channel can abruptly transition between good and bad conditions [45]. The Gilbert-Elliot channel is a difficult one for computation offloading algorithms to deal with compared to those where there is much more correlation in the channel quality as the offloading progresses. Let and , respectively, be the data transmission rate when the channel is in the G and B states. We consider that all channels have the same and values but differ in their state transition probabilities that result in different propagation models. The transition probabilities for propagation model in BS are denoted as , and . In each time slot, the channel state Markov chain transitions in accordance with these probabilities. Denote and , respectively, as the stationary probabilities of a channel in BS for propagation model being in the G and B states. Two sets of simulations are performed with set 1 for single class of tasks and set 2 for multiple classes of tasks. Default parameters used in the simulations are summarized in Table III. The parameter settings that we use were taken from the references [26, 23] and [32]. These references summarize parameter settings for various types of applications including those that are inherently delay sensitive, such as gaming, face recognition and healthcare use. We intentionally use a wide range of parameter values based on the referenced ranges so that we can make conclusions that apply in general settings.

VI-A Simulation set 1: single class of tasks

In this subsection, we will assume that all the tasks generated at the MDs have the same data size and same computation load , i.e., and for all . When the channel is in the G state, the transmission rate of the wireless channel allows a task to be uploaded within one time slot; while when the channel is in the B state, the data transmission rate is zero. Since there is only one class of the tasks, subscript can be dropped from the notation.

Let be the time needed for uploading a task in BS with channel model . The probability that one task in BS with channel model can be uploaded in time slots is given as follows

| (50) |

The mean wireless transmission time of a task in BS uploaded through a channel with propagation model can be calculated as follows

| (51) |

Based on this, the mean wireless transmission time of the tasks in BS is , where is the probability that a task in BS is uploaded through a channel with propagation model .

With a single class of tasks, the ES server becomes an queueing system, for all , and , and the distribution of delay is given by [46]

| (52) |

where .

For comparison, we also run a discrete event simulation (DES) of the system using the ’s and solutions obtained from the proposed algorithms to validate our model assumptions, and these solutions are denoted as DESSD and DESHD, respectively, for the soft deadline (SD) and hard deadline (HD) cases. In addition, we simulate a DES-based OPT scheme for each proposed algorithm as follows. For GCASD, we first obtain all the possible combinations of ’s under constraint (14); for a given combination of ’s, we can obtain the solution of based on (11) and (15), and then check if constraint (13) is satisfied based on the current set of ’s and . If not, we go to the next set of ’s and repeat this procedure. If it is satisfied, we use this set of ’s and to run the DES for the system, and then check if (12) is satisfied. If not, we proceed to the next combination of ’s and repeat the above procedure. If the constraints are satisfied, we save the obtained average power. After going through all the possible combinations of ’s, we obtain the minimum average power and the corresponding ’s and . For GCAHD, we first obtain all the possible combinations of ’s under constraint (23); for a given combination of ’s, we can obtain the solution of based on (21) and (24), and then check if constraint (22) is satisfied based on the current set of ’s and . If not, we go to the next set of ’s and repeat this procedure. If it is satisfied, we use this set of ’s and to run the DES for the system. Then, we save the obtained mean power consumption. After going through all the possible combinations of ’s, we obtain the minimum average power and the corresponding ’s and .

| Parameter | Value in set 1 | Value in set 2 |

|---|---|---|

| 1 s | ||

| 250 mW | ||

| 2.5 mW | ||

| 11, 13, 15 tasks/s | ||

| 15, 15, 20 | ||

| 1, 1, 1 $ | ||

| $ | $ | |

| 75M cycles/s | 200M cycles/s | |

| 1M cycles/s | 2M cycles/s | |

| 140 $ | 90 $ | |

| 2M, 0 bits per time slot | 5M, 1M bits per time slot | |

| 2M bits | 5M, 10M, 15M bits | |

| 4 s | 6, 11, 16 s | |

| 3M CPU cycles | 10M, 20M, 30M CPU cycles | |

In the simulation, we consider a cellular network consisting of 3 BSs. There are two propagation models at each BS with transition probabilities , , , and for . The probabilities of the different channel models in BS 1 are and ; and those in BSs 2 and 3 are , , , and .

Figs. 2(a) and 2(b) show the average power consumption of MDs versus for the SD and HD cases, respectively. In Fig. 2(a), when the tolerable violation of latency is 1%, the average power consumption of MDs is a constant for all the solutions. This is because all the tasks are executed locally regardless of the cost budget, since the tight delay constraints cannot be satisfied if a task is offloaded. When is 3% or 5%, some tasks are allowed to be offloaded, and the average power consumption of the MDs decreases with for all the solutions. This happens since, when the cost budget is small, the optimization is constrained by the cost budget, which limits the number of offloaded tasks; and with the increase of , more channel and ES resource is available, leading to more MDs offloading their tasks. When is large, the budget constraint is loose, and the task offloading completion is mainly affected by the changing wireless transmission conditions. Fig. 2(a) also shows that the average MD power consumption decreases with for all the solutions, since larger makes it easier to meet the latency constraint through offloading, which results in more offloaded tasks and saves power in the MDs.

By comparing the average MD power consumption for and in Fig. 2(a), it is seen that the gap is small when the cost budget is small. The gap then increases as the cost budget increases, and finally becomes constant when the cost budget is sufficiently large. When the cost budget is low, the number of channels is small, which forces most tasks to be executed locally, regardless of the value of . As the cost budget increases, more channels are available, and the offloading decisions are determined by both and the available channel resources. When the cost budget is sufficiently high, the offloading decisions are mainly determined by the value of . The figure also shows that the average MD power consumption using GCASD is almost the same as using DESSD, which validates the model and approximations used in designing GCASD. The performance of GCASD is also close to DESSD-based OPT, which further shows good performance of the former.

By comparing Figs. 2(b) and 2(a), it can be seen that the average MD power consumption for the HD case is slightly higher than that for the SD case with and much lower than that for the SD case with . For the SD case, when , the tight (soft) delay constraint forces all the tasks to be executed locally, which results in the highest average power consumption of the MDs; and the power consumption decreases as increases and more tasks are allowed to be offloaded. Without having to use CLE, the SD solutions result in lower average MD power consumption than the corresponding HD solutions. However, this is at a price that up to of the tasks do not meet their completion deadlines. On the other hand, using CLE in the GCAHD only incur slightly higher power consumption of the MDs compared to GCASD when For the HD case, the total average power consumption of the MDs decreases with when is small and becomes a constant when becomes larger for all schemes, which is the same as that of the SD case with and .

Figs. 3(a) and 3(b) show the average power consumption versus (same for all BSs) for the SD and HD cases, respectively. The figures show that the power consumption increases linearly with for all schemes, since both the local execution power and the uploading transmission power are proportional to the mean task arrival rate. The average MD power consumption using GCAHD is close to that using GCASD with but much lower than that using GCASD with . This demonstrates that the use of CLE in GCAHD is minimized, while always ensuring the HD of the tasks. Fig. 3(a) shows that the performance of GCASD is very close to DESSD and DESSD-based OPT; and Fig. 3(b) shows that the performance of GCAHD is very close to DESHD and DESHD-based OPT. These observations are consistent with the ones from Figs. 2(a) and 2(b). This further demonstrates the good performance of GCASD and GCAHD and validates the model and approximations used in designing the proposed algorithms.

Figs. 4(a) and 4(b) show the average power consumption of the MDs versus , which is the ES capacity, for the SD and HD cases, respectively. For the SD case with , all tasks are executed locally; and when and , offloading is possible for some tasks, and the number of tasks that can be offloaded increases with the ES capacity, resulting in lower power consumption of the MDs. As the ES capacity is sufficiently high, the average power consumption of MDs becomes a constant, since the offloading decisions are determined by the cost budget which limits the number of wireless channels for uploading tasks. Note that the slight increase in average power consumption when is between 60 and 80 is caused by the discretization errors of variable in algorithms 1 and 2. Increasing the values in the algorithms helps reduce the discretization errors but significantly increase the amount of time for running the simulations. Comparing the average power consumption of the HD and the SD cases shown in Figs. 4(a) and 4(b), we have consistent observations as in previous figures.

VI-B Simulation set 2: multiple classes of tasks

In this subsection, tasks have multiple classes. The two-state Gilbert-Elliot channels are considered. Let and , respectively, be the data transmission rates when a channel is in the G and B states. Given the channel state transision probabilities, the distribution of wireless transmission time for uploading a class task in BS through a channel with propagation model can be calculated from [29].

At the ES, the system of serving the uploaded tasks becomes an queueing system. Let be a random variable representing the execution time of the tasks. We have , then the probability density function of can be written as

| (53) |

and the Laplace-Stieltjes transform of is given by

| (54) |

The Laplace-Stieltjes transform of the probability density function of queuing time is given by the Pollaczek-Khinchine transform [37] as

| (55) |

where is the mean of . The distribution of can be obtained by numerical inversion of (55).

In the simulation, we consider a cellular network consisting of 3 BSs, 3 task classes, and 2 channel propagation models. The channel state transition probabilities are , , , and for . The probabilities of accessing channels with different propagation models in BS 1 are and ; those in BSs 2 and 3 are , , , and . The probabilities of a task belonging to different classes are , , and .

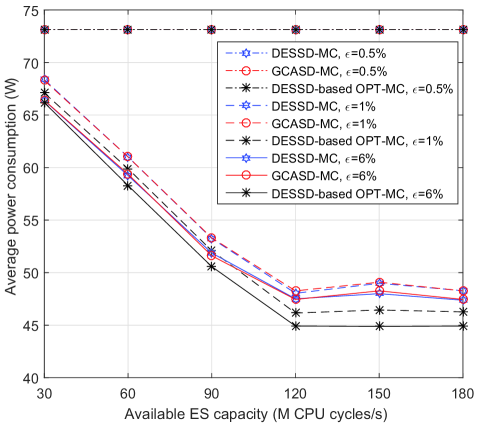

Figs. 5(a) and 5(b) show the average power consumption of MDs versus for the SD and HD cases, respectively. In Fig. 5(a), when is 0.5%, all the tasks are executed locally regardless of the cost budget, since offloading cannot satisfy the tight delay constraints. When is 1% or 6%, the average power consumption of MDs decreases with and then becomes a constant. By comparing the power consumption of the MD in the SD and HD cases, we can see that the average power consumption of MDs for the HD case is slightly higher than that for the SD case with and much lower than that for the SD case with . Figs. 6(a) and 6(b) show the total average power consumption of the MDs versus . All the results show that our GCASD and GCAHD solutions achieve the average power consumption performance that is very close to DES-based OPT, and the observations in the multi-class simulations are consistent with the single-class simulations.

VII Conclusions

This paper has studied joint wireless network and task service allocation for mobile computation offloading. The objective is to minimize the total average power consumption of MDs for completing the arriving tasks, while satisfying the delay constraints of tasks and the cost budget of the network customer. The formulations presented included both soft and hard task completion time deadlines. The designs were formulated as MINLPs and approximate solutions were obtained by decomposing the formulations into convex subproblems. Simulation results were presented that characterize the performance of the system and show various tradeoffs between task deadline violation, average mobile device power consumption and the cost budget. Results were presented that demonstrate the quality of the proposed solutions, which can achieve close-to-optimum performance over a wide range of system parameters. The optimum allocation were obtained by doing exhaustive search-based discrete event simulations for assigning the wireless channels from each BSs and ES capacity.

References

- [1] T. H. Noor, S. Zeadally, A. Alfazi, and Q. Z. Sheng, “Mobile cloud computing: Challenges and future research directions,” Journal of Network and Computer Applications, vol. 115, pp. 70–85, 2018.

- [2] Y. Kwon, H. Yi, D. Kwon, S. Yang, Y. Cho, and Y. Paek, “Precise execution offloading for applications with dynamic behavior in mobile cloud computing,” Pervasive and Mobile Computing, vol. 27, pp. 58–74, 2016.

- [3] H. Ba, W. Heinzelman, C.-A. Janssen, and J. Shi, “Mobile computing-a green computing resource,” in 2013 IEEE Wireless Communications and Networking Conference (WCNC). IEEE, 2013, pp. 4451–4456.

- [4] Z. Gu, R. Takahashi, and Y. Fukazawa, “Real-time resources allocation framework for multi-task offloading in mobile cloud computing,” in 2019 International Conference on Computer, Information and Telecommunication Systems (CITS). IEEE, 2019, pp. 1–5.

- [5] J. Zhang, X. Hu, Z. Ning, E. C.-H. Ngai, L. Zhou, J. Wei, J. Cheng, and B. Hu, “Energy-latency tradeoff for energy-aware offloading in mobile edge computing networks,” IEEE Internet of Things Journal, vol. 5, no. 4, pp. 2633–2645, 2017.

- [6] G. Huerta-Canepa and D. Lee, “A virtual cloud computing provider for mobile devices,” in Proceedings of the 1st ACM Workshop on Mobile Cloud Computing Services: Social Networks and Beyond, June 2010, p. 6.

- [7] B.-G. Chun, S. Ihm, P. Maniatis, M. Naik, and A. Patti, “CloneCloud: Elastic Execution between Mobile Device and Cloud,” in Proceedings of the Sixth Conference on Computer Systems, ser. EuroSys ’11. New York, NY, USA: ACM, 2011, pp. 301–314. [Online]. Available: http://doi.acm.org/10.1145/1966445.1966473

- [8] Y. Shi, S. Chen, and X. Xu, “Maga: A mobility-aware computation offloading decision for distributed mobile cloud computing,” IEEE Internet of Things Journal, vol. 5, no. 1, pp. 164–174, Feb 2018.

- [9] S. Zhou, Y. Sun, Z. Jiang, and Z. Niu, “Exploiting moving intelligence: Delay-optimized computation offloading in vehicular fog networks,” IEEE Communications Magazine, vol. 57, no. 5, pp. 49–55, May 2019.

- [10] D. Mazza, D. Tarchi, and G. E. Corazza, “A unified urban mobile cloud computing offloading mechanism for smart cities,” IEEE Communications Magazine, vol. 55, no. 3, pp. 30–37, March 2017.

- [11] H. A. Alameddine, S. Sharafeddine, S. Sebbah, S. Ayoubi, and C. Assi, “Dynamic task offloading and scheduling for low-latency iot services in multi-access edge computing,” IEEE Journal on Selected Areas in Communications, vol. 37, no. 3, pp. 668–682, 2019.

- [12] J. Liu, J. Ren, Y. Zhang, X. Peng, Y. Zhang, and Y. Yang, “Efficient dependent task offloading for multiple applications in mec-cloud system,” IEEE Transactions on Mobile Computing, pp. 1–1, 2021.

-

[13]

Huawei Inc., “5g network architecture - a high-level perspective,”

https://www.huawei.com/en/technology-insights/industry-insights/outlook/mobile-broadband/insights-reports/5g-network-architecture, 2016. - [14] O. Muñoz, A. Pascual-Iserte, and J. Vidal, “Optimization of Radio and Computational Resources for Energy Efficiency in Latency-Constrained Application Offloading,” IEEE Transactions on Vehicular Technology, vol. 64, no. 10, pp. 4738–4755, October 2015.

- [15] B. Dab, N. Aitsaadi, and R. Langar, “Joint optimization of offloading and resource allocation scheme for mobile edge computing,” in 2019 IEEE Wireless Communications and Networking Conference (WCNC), 2019, pp. 1–7.

- [16] M. Sheng, Y. Wang, X. Wang, and J. Li, “Energy-efficient multiuser partial computation offloading with collaboration of terminals, radio access network, and edge server,” IEEE Transactions on Communications, vol. 68, no. 3, pp. 1524–1537, 2020.

- [17] H. Chen, D. Zhao, Q. Chen, and R. Chai, “Joint computation offloading and radio resource allocations in small-cell wireless cellular networks,” IEEE Transactions on Green Communications and Networking, vol. 4, no. 3, pp. 745–758, 2020.

- [18] J. Du, L. Zhao, J. Feng, and X. Chu, “Computation offloading and resource allocation in mixed fog/cloud computing systems with min-max fairness guarantee,” IEEE Transactions on Communications, vol. 66, no. 4, pp. 1594–1608, April 2018.

- [19] X. Yang, X. Yu, H. Huang, and H. Zhu, “Energy efficiency based joint computation offloading and resource allocation in multi-access mec systems,” IEEE Access, vol. 7, pp. 117 054–117 062, 2019.

- [20] J. Zhang, W. Xia, F. Yan, and L. Shen, “Joint computation offloading and resource allocation optimization in heterogeneous networks with mobile edge computing,” IEEE Access, vol. 6, pp. 19 324–19 337, 2018.

- [21] X. Chen, Z. Liu, Y. Chen, and Z. Li, “Mobile edge computing based task offloading and resource allocation in 5g ultra-dense networks,” IEEE Access, vol. 7, pp. 184 172–184 182, 2019.

- [22] S. Mu, Z. Zhong, and D. Zhao, “Energy-efficient and delay-fair mobile computation offloading,” IEEE Transactions on Vehicular Technology, vol. 69, no. 12, pp. 15 746–15 759, 2020.

- [23] M. Masoudi and C. Cavdar, “Device vs edge computing for mobile services: Delay-aware decision making to minimize power consumption,” IEEE Transactions on Mobile Computing, vol. 20, no. 12, pp. 3324–3337, 2021.

- [24] X. Chen, Y. Cai, Q. Shi, M. Zhao, B. Champagne, and L. Hanzo, “Efficient resource allocation for relay-assisted computation offloading in mobile-edge computing,” IEEE Internet of Things Journal, vol. 7, no. 3, pp. 2452–2468, 2020.

- [25] S. Nath, Y. Li, J. Wu, and P. Fan, “Multi-user multi-channel computation offloading and resource allocation for mobile edge computing,” in ICC 2020 - 2020 IEEE International Conference on Communications (ICC), 2020, pp. 1–6.

- [26] C. Yi, S. Huang, and J. Cai, “Joint resource allocation for device-to-device communication assisted fog computing,” IEEE Transactions on Mobile Computing, vol. 20, no. 3, pp. 1076–1091, 2021.

- [27] H. Park, Y. Jin, J. Yoon, and Y. Yi, “On the economic effects of user-oriented delayed wi-fi offloading,” IEEE Transactions on Wireless Communications, vol. 15, no. 4, p. 2684–2697, 2016.

- [28] L. Cominardi, T. Deiss, M. Filippou, V. Sciancalepore, F. Giust, and D. Sabella, “Mec support for network slicing: Status and limitations from a standardization viewpoint,” IEEE Communications Standards Magazine, vol. 4, no. 2, pp. 22–30, 2020.

- [29] A. Hekmati, P. Teymoori, T. D. Todd, D. Zhao, and G. Karakostas, “Optimal mobile computation offloading with hard deadline constraints,” IEEE Transactions on Mobile Computing, vol. 19, no. 9, pp. 2160–2173, 2020.

- [30] Y. Deng, Z. Chen, and X. Chen, “Resource allocation for multi-user mobile-edge computing systems with delay constraints,” in GLOBECOM 2020 - 2020 IEEE Global Communications Conference, 2020, pp. 1–6.

- [31] C. W. Zaw, N. H. Tran, Z. Han, and C. S. Hong, “Radio and computing resource allocation in co-located edge computing: A generalized nash equilibrium model,” IEEE Transactions on Mobile Computing, pp. 1–1, 2021.

- [32] S. Yue, J. Ren, N. Qiao, Y. Zhang, H. Jiang, Y. Zhang, and Y. Yang, “Todg: Distributed task offloading with delay guarantees for edge computing,” IEEE Transactions on Parallel and Distributed Systems, vol. 33, no. 7, pp. 1650–1665, 2022.

- [33] Y. Geng, Y. Yang, and G. Cao, “Energy-efficient computation offloading for multicore-based mobile devices,” in IEEE INFOCOM 2018 - IEEE Conference on Computer Communications, 2018, pp. 46–54.

- [34] M.-H. Chen, B. Liang, and M. Dong, “Multi-user multi-task offloading and resource allocation in mobile cloud systems,” IEEE Transactions on Wireless Communications, vol. 17, no. 10, pp. 6790–6805, 2018.

- [35] Q. Li, S. Wang, A. Zhou, X. Ma, F. Yang, and A. X. Liu, “Qos driven task offloading with statistical guarantee in mobile edge computing,” IEEE Transactions on Mobile Computing, vol. 21, no. 1, pp. 278–290, 2022.

- [36] J. Ren, G. Yu, Y. Cai, and Y. He, “Latency optimization for resource allocation in mobile-edge computation offloading,” IEEE Transactions on Wireless Communications, vol. 17, no. 8, pp. 5506–5519, 2018.

- [37] A. Y. Khintchine, “Mathematical theory of a stationary queue,” Matematicheskii Sbornik, vol. 39, no. 4, pp. 73–84, 1932.

- [38] D. Y. Burman, “Insensitivity in queueing systems,” Advances in Applied Probability, vol. 13, no. 4, pp. 846–859, 1981.

- [39] D. J. Daley and L. D. Servi, “Idle and busy periods in stable m/m/k queues,” Journal of Applied Probability, vol. 35, no. 4, pp. 950–962, 1998.

- [40] E. J. Messerli, “B.s.t.j. brief: Proof of a convexity property of the erlang b formula,” The Bell System Technical Journal, vol. 51, no. 4, pp. 951–953, 1972.

- [41] R. W. Wolff, “Poisson arrivals see time averages,” Operations Research, vol. 30, no. 2, pp. 223–414, 1982.

- [42] D. N. Shanbhag and D. G. Tambouratzis, “Erlang’s formula and some results on the departure process for a loss system,” Journal of Applied Probability, vol. 10, no. 1, pp. 233–240, 1973.

- [43] S. A. Berezner, A. E. Krzesinski, and P. G. Taylor, “On the inverse of erlang’s function,” Journal of Applied Probability, vol. 35, no. 1, pp. 246–252, 1998.

- [44] E. N. Gilbert, “Capacity of a burst-noise channel,” The Bell System Technical Journal, vol. 39, no. 5, pp. 1253–1265, 1960.

- [45] T. Blazek and C. F. Mecklenbräuker, “Measurement-based burst-error performance modeling for cooperative intelligent transport systems,” IEEE Transactions on Intelligent Transportation Systems, no. 99, pp. 1–10, 2018.

- [46] G. J. Franx, “A simple solution for the m/d/1 waiting time distribution,” Operations Research Letters, vol. 29, no. 5, pp. 221–229, 2001.