Why So Serious? Exploring Humor in AAC Through AI-Powered Interfaces

Abstract.

People with speech disabilities may use speech-generating devices, a form of Augmentative and Alternative Communication (AAC) technology, to communicate. This technology enables practical conversation; however, delivering expressive and timely comments remains challenging. In this paper, we study how AAC technology can facilitate such speech through AI-powered interfaces. We focus on the least predictable and most fast-paced type of comment: humor.

We conducted seven qualitative interviews with people with speech disabilities and performed thematic analysis to gain in-depth insights into the usage and challenges of AAC technology and the role humor plays for them. We designed four simple AI-powered interfaces for creating humorous comments. In a user study with five participants with speech disabilities, these interfaces allowed us to study how to best support making well-timed humorous comments. We conclude with a discussion of recommendations for interface design based on both studies.

1. Introduction

Augmentative and Alternative Communication (AAC) devices are vital for individuals with speech impairments, enabling them to communicate effectively. In combination with accessible input devices such as eye tracking, switches, or pointers, and with customized vocabulary layouts, AAC devices can support the communication of users with a wide range of motor and verbal abilities. While these tools work for practical everyday conversations, they pose challenges for time-sensitive or expressive forms of communication like humor. Waller (Waller, [n. d.]) found that AAC users communicate at approximately 12-18 words per minute (wpm), compared to 125–185 wpm for typical speakers, resulting in what is known as communication asymmetry. Prior work has made several attempts to overcome this asymmetry: through word prediction (Trnka et al., [n. d.]), visuals (Fontana De Vargas et al., [n. d.]; Fontana de Vargas and Moffatt, [n. d.]), and by inferring word suggestions from context such as location, conversation partner, and image recognition of the surroundings (Kane and Morris, [n. d.]; Kane et al., [n. d.]).
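As a back-of-the-envelope illustration of this asymmetry (our own arithmetic, assuming a hypothetical 10-word comment and the rates reported above), the time t needed to produce N words at a rate of r words per minute is:

$$
t = \frac{N}{r}, \qquad
t_{\mathrm{AAC}} = \frac{10\ \text{words}}{12 \text{ to } 18\ \text{wpm}} \approx 33 \text{ to } 50\ \text{s}, \qquad
t_{\mathrm{speech}} = \frac{10\ \text{words}}{125 \text{ to } 185\ \text{wpm}} \approx 3.2 \text{ to } 4.8\ \text{s}.
$$

Under these assumptions, an AAC user needs roughly 7 to 15 times as long as a speaking partner to produce the same comment.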

More recently, incorporating Large Language Models (LLMs) into auto-complete and suggestion features could further increase communication speed (Shen et al., [n. d.]; Valencia et al., [n. d.]). Recent work has focused not only on enhancing typing speed but also on increasing opportunities for AAC users to participate in conversation in a timely manner despite communication asymmetries. One example is COMPA (Valencia et al., 2024), which enables AAC users to mark conversation segments they intend to address and generates contextual starter phrases related to the marked segment and a selected user intent. COMPA proved to be an effective tool for helping both AAC and non-AAC users maintain a better flow in their conversation. Similarly, KWickChat (Shen et al., [n. d.]) explores a sentence-based text entry system that automatically generates suitable sentences for the non-speaking partner from entered keywords, which could allow AAC users to generate meaningful replies in daily conversations with very little input. This body of work is effective at increasing the rate of communication for people with speech disabilities. However, we observe a risk that relying heavily on auto-complete reduces users' ability to express less predictable comments through AAC technology.

While supporting faster speaking rates can be beneficial, supporting other goals, such as timely humorous expression, remains under-researched despite its importance. Humor helps manage the flow of a conversation and aids in building common ground between participants, making interactions enjoyable and fostering interpersonal connections (Waller et al., 2009; Binsted et al., 2006; O’Mara et al., 2006). Early work on humor interfaces for children using AAC explored algorithms for pun generation (O’Mara et al., 2006). This work demonstrated the value of wordplay for expressive humor creation. Using today’s LLMs, we explore different interactions to support AAC users in timely humor expression.

In this paper, we investigate how to better design AAC technology to support making humorous comments. Creating humorous interjections poses significant challenges because it requires both timely responses and expressive agency. We focus on this use case because it demands precise timing and tends to be unpredictable. Furthermore, humor is a largely overlooked yet socially important use case for AAC technology, as it plays a big role in social acceptance, both personally and professionally. We extend AAC technology with interfaces powered by multimodal LLMs such as GPT-4 (OpenAI et al., [n. d.]) and Llama 3, which facilitate this crucial form of social interaction by leveraging real-time transcription, analyzing the context of the conversation, extracting keywords, and generating appropriate speech output. We explore this use case for AAC through three studies. (1) To understand the usage and challenges of AAC technology, as well as the role humor plays in the lives of people with speech disabilities, we conduct seven qualitative interviews with people with speech disabilities and analyze the results using thematic analysis. (2) We take a design-driven approach to explore the space of, and trade-offs between, different UI design considerations, leading to four interfaces for creating humorous comments with AAC technology. (3) Finally, to understand how these design decisions affect the practical experience of people with speech disabilities, we conduct a user study with five participants. We conclude with a series of recommendations for designing AAC interfaces that support making humorous comments.
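The pipeline described above (transcription, context analysis, keyword extraction, generation) can be sketched as follows. This is a minimal illustration under our own assumptions, not the implementation used in our studies: utterances are assumed to arrive already transcribed as a list of strings, keyword extraction uses plain frequency counts with a tiny hypothetical stopword list, and the call to an LLM such as GPT-4 is deliberately omitted. The names `recent_context`, `extract_keywords`, and `build_humor_prompt` are hypothetical.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list used only for this illustration.
STOPWORDS = {
    "the", "a", "an", "and", "or", "but", "is", "are", "was", "were", "did",
    "do", "see", "to", "of", "in", "on", "for", "it", "that", "this", "i",
    "you", "we", "he", "she", "they", "my", "your", "so", "just", "with",
    "at", "be",
}


def recent_context(transcript: list[str], window: int = 3) -> str:
    """Join the last `window` transcribed utterances; older talk is dropped
    because a humorous comment is only relevant for a short time."""
    return " ".join(transcript[-window:])


def extract_keywords(text: str, k: int = 3) -> list[str]:
    """Return up to k of the most frequent non-stopword tokens.
    Ties keep the order in which words first appear."""
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(t for t in tokens if t not in STOPWORDS and len(t) > 2)
    return [word for word, _ in counts.most_common(k)]


def build_humor_prompt(context: str, keywords: list[str], style: str = "witty") -> str:
    """Assemble a text prompt that could be sent to an LLM such as GPT-4.
    The actual model call is intentionally left out of this sketch."""
    return (
        f"Conversation so far: {context}\n"
        f"Key topics: {', '.join(keywords)}\n"
        f"Suggest one short, {style} comment the AAC user could say next."
    )


if __name__ == "__main__":
    transcript = [
        "Did you see the weather today?",
        "The rain ruined my picnic, the sandwiches were soaked.",
        "Soaked sandwiches are the worst, soggy bread everywhere.",
    ]
    context = recent_context(transcript)
    keywords = extract_keywords(context)  # ['sandwiches', 'soaked', 'weather']
    print(build_humor_prompt(context, keywords))
```

The window size of three utterances is an arbitrary assumption: a shorter window keeps suggestions tied to the moment a quip refers to, at the cost of less context for the model.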

2. Positionality Statement

This work is uniquely positioned not only in terms of the problem we attempt to tackle, but also in terms of the personal background of its lead author, who has a speech disability and is a user of AAC technology. Because his perspective played a critical role in how some of the work was conducted, we disclose it here.

The lead author lost his ability to speak when he was 15 years old: “it was a wild experience to see my whole world changing in just one month when the neuro-motor disease manifested, suddenly I was not able to speak or move normally.” This lived experience motivated him to help people with disabilities. “Humor is the lens through which I see the world, it is a part of me I can not help it. When I lost the ability to speak I had to learn to adapt my humor to this new form of communication without losing my touch.”

The lead author entered the AAC world without knowing what AAC was: “Growing up in Argentina, the access to technology is a bit different. No one told me ‘here, these are AAC devices you can use’, I had to discover everything on my own.” At first, while he was in the hospital, he used a laptop to type what he wanted to communicate: “I never felt that this condition was a limitation in my life, it was just a new way to experience life, and it was not going to stop me.”

Initially, he used an iPad mini, but its text-to-speech (TTS) voice lacked expressiveness: “The voice was monotone and without any emotion, I never felt identified with it. It was very hard to convey what I wanted how I wanted it. The deal breaker for me was the accent, due to only having Mexican or Spain Spanish voices. I didn’t like to sound different to all my friends. I don’t think anyone made fun of this but internally I felt like an outsider.” Eventually, he switched to using the Notes app on an iPhone and simply showing others the typed text: “I found that showing the text was better for me because when people read they automatically put the ‘correct’ tone in their heads, and that paired with my facial expression while people are reading my messages allows me to much better express myself. Now I don’t have any specific accent, I have the accent that the reader imagines in their head.”

He has now been an AAC user for over 11 years, and this experience shaped this work: “I feel that everyone should have the ability to express humor how and when they want; humor is an extremely powerful tool to connect with people. It is easy to take for granted, but for us (AAC users) humor is not trivial. I am very lucky to be able to type fast. However, even I usually find myself in situations where by the time I am done typing, the joke window is long gone and the humorous comment is no longer relevant. This inspired me to set out to study how to build AAC technology to better express ourselves.”

3. Contribution, Benefits, and Limitations

We explore how to extend AAC technology to allow for more expressive speech. In doing so, we focus on a challenging form of communication that benefits from precise use of words, context, and timing: humorous comments. We contribute insights from three research activities:

(1) From in-depth interviews with seven AAC users, we gain insights into the current usage and limitations of AAC technology for expressive speech and the role humor plays in the lived experience of our users.

(2) We explore a design space of interfaces for AI-powered apps that support AAC users in making humorous comments. We discuss trade-offs in design choices, specifically around user control and efficiency.

(3) Through a user study with five AAC users, we evaluate four proposed interfaces to derive insights on interface design and to identify preferences and trade-offs regarding the identified design space.

(4) Finally, based on the conclusions of these three activities, we draw design recommendations for the development of future AAC technology.

We do not claim generalizability of our conclusions. As is common in accessibility research, our studies had a small but highly diverse set of participants. Our conclusions build on participants' deep lived experience, but we by no means claim full coverage of people with speech disabilities. The design space we explored focuses specifically on components of user interfaces of AI-powered applications for creating humorous comments. We consider this an important and emerging subset of the space, but other aspects are also worth exploring, such as different input technologies, giving users control over the actual voice for expressive speech, or more aesthetic aspects. Finally, we studied the usage of these interfaces in a relatively controlled lab study. We chose this setting to minimize the burden on our user community at this stage of the process, where we derive design guidelines. We hope that our conclusions will inform the design of interfaces that would be worth testing in more open-ended scenarios or long-term deployments.

4. Related Work

We build on work in challenges in AAC and expressiveness, trade-offs in AI writing support, and computational humor.

4.1. Challenges in AAC: Agency, Expression and Timing

Using a speech-generating AAC device requires cognitive, physical, and time resources from a user. In real-time conversation, AAC users must first formulate their response or contribution and then plan how to convey it using the available technology. Naturally, communicating with an AAC device, especially if a user has severe motor impairments, takes more time than communicating by speech. Furthermore, prior work has found that conversational agency, an individual’s capacity to express and achieve their goals in conversation, can be impacted by social constraints and the relationship among speakers (Valencia et al., 2020), the pace of communication (Kane et al., 2017), and the quality of communication resources available (e.g., vocabulary, technology, physical and social context) (Ibrahim et al., 2024). AAC users communicate at approximately 12-18 wpm compared to 125-185 wpm for non-AAC speakers (Waller, [n. d.]). In response to this asymmetry, researchers have focused on increasing AAC users’ communication rates by incorporating suggestions from Large Language Models (LLMs) (Shen et al., [n. d.]; Valencia et al., [n. d.]; Yusufali et al., 2023; Cai et al., [n. d.]; Fang et al., [n. d.]) and by using visual output as partner feedback to support the shared conversational flow (Valencia et al., 2024; Sobel et al., 2017; Fiannaca et al., 2017). These efforts have highlighted trade-offs between automation, timing, and agency: AI-generated phrases or visual displays may support timely participation in conversation but might not adequately represent the user’s personal communication style. Additionally, relying on language generation may reduce the perceived agency of a user with a disability, potentially creating the impression that the system, rather than the user, is driving the communication (Valencia et al., [n. d.]).
In this work we are interested in understanding the relationships between agency, expression, and timing through the use case of humor creation tools for AAC.

4.2. Trade-offs in AI Writing Support

Writing assistants emerged from the need to make communication more efficient. Before language models, there were text entry systems, which focused on a word (Bi et al., 2014) or short sentences (Vertanen et al., 2015) and suggested up to three subsequent words based on likelihood distributions. More recent systems can suggest multiple short replies (Kannan et al., 2016) or a single long reply. As large language models become more robust, with the ability to inspire ideas (Gero et al., 2022), revise text (Cui et al., 2020), and generate short stories (Singh et al., 2023), several research areas now examine the interaction between users and AI writing assistants beyond efficiency. There is a growing interest in viewing AI writing assistants as co-authors (Lee et al., 2022; Mirowski et al., 2023; Jakesch et al., 2023). Recent scholarship in this area has focused on understanding how writers evaluate and integrate the suggestions provided by these language models into their cognitive writing processes (Bhat et al., 2023). Writers proactively engage with system-generated content, making deliberate efforts to integrate it into their writing (Singh et al., 2023). In accessibility research, there are LLM-based tools for people with dyslexia that can alleviate the difficulties of writing an email through automatic text generation (Goodman et al., 2022). AI writing assistance has also been explored for AAC through simulations that demonstrate benefits to typing efficiency (Shen et al., [n. d.]) and user studies (Valencia et al., 2024, [n. d.]) that explore supporting timely participation in conversation for AAC users.
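As a hedged illustration of the likelihood-based suggestions that early text entry systems offered, the sketch below trains a toy bigram model and returns up to three candidate next words with their estimated probabilities. It is a generic bigram model under our own assumptions (whitespace tokenization, maximum-likelihood estimates, no smoothing), not the method of any cited system; `train_bigrams` and `suggest` are hypothetical names.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, how often each other word directly follows it."""
    model: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            model[prev][nxt] += 1
    return model


def suggest(model: dict[str, Counter], prev_word: str, k: int = 3) -> list[tuple[str, float]]:
    """Return up to k likely next words with maximum-likelihood estimates of
    P(next | prev_word); unseen words yield no suggestions (no smoothing)."""
    followers = model.get(prev_word.lower())
    if not followers:
        return []
    total = sum(followers.values())
    return [(word, count / total) for word, count in followers.most_common(k)]


if __name__ == "__main__":
    corpus = [
        "i want coffee",
        "i want tea",
        "i want coffee now",
        "i need help",
        "i like jokes",
    ]
    model = train_bigrams(corpus)
    print(suggest(model, "I"))  # [('want', 0.6), ('need', 0.2), ('like', 0.2)]
```

The suggestions favor frequent continuations, which illustrates why such systems speed up predictable phrases but offer little help for unexpected, humorous ones.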

While AI-enhanced writing tools can help people communicate efficiently, these tools raise concerns about their impact on users’ authenticity and agency when communicating and expressing themselves. AI-assisted writing environments may hinder individual uniqueness and authenticity in aspects like voice, style, and tone (Cardon et al., 2023). While AI writing support could save time and physical effort, AAC users reported concerns that AI suggestions do not accurately represent their writing style (Valencia et al., [n. d.]). Kadoma et al. (Kadoma et al., 2024) explored the influence of writer-style bias on inclusion, control, and ownership when collaborating with LLMs. Their findings revealed that participants who felt a greater sense of inclusion from the text suggestions also experienced enhanced agency and ownership over the co-authored content. Moreover, feelings of inclusion helped alleviate the perceived loss of control and agency when participants accepted more AI-generated suggestions.

While AI can be useful in increasing communication efficiency, it raises the question of whether agency is lost in the process. Being given a keyword suggestion or selecting a generated phrase, as described above, could undermine autonomy and hinder personal expression [19]. Choosing a generated phrase made AAC users feel that they did not have agency over their own choice; rather, the system had made the choice for them. Agency is a critical component of allowing AAC users to fully express themselves (Valencia et al., 2020).

4.3. Computational Humor and Creative Expression Tools

Computational humor deals with the question of whether computers can successfully generate, interpret, and respond to humor. Prior work has demonstrated that computational humor, such as computer-generated humorous images, can enable individuals to contribute their humor to conversations and express themselves effectively. For example, CAHOOTS (Wen et al., 2015) is an online chat system that suggests humorous images users can send in response during conversations. Computational humor work using Large Language Models has focused on the interpretation of humor within various contexts. Previous work that used GPT-4 to translate humorous content showed that the model succeeded in preserving and interpreting humor within social contexts (Abu-Rayyash, 2023). Work on humorous AI systems, notably Witscript, has shown that they can appear human-like by using algorithms developed by expert comedy writers to generate humorous responses (Toplyn, 2023). In a study of Witscript, responses from a human, from GPT-LOL (a simple joke generator created to serve as a baseline), and from Witscript 3 were rated for human-likeness. Witscript 3’s responses were judged as jokes 44.1% of the time, compared with 33.8% for GPT-LOL and 23.6% for human responses, indicating the potential of AI to create conversational humor effectively.

Computational humor has also been used to support children who use AAC, exploring different possible user inputs. Using the STANDUP system, children could select specific words to be included in a joke, a topic for the joke, or a specific joke type to be generated (Waller et al., 2009; Ritchie et al., 2007). While STANDUP acted as a language playground that enabled children to build puns, in this work we explore how different humor-creation inputs and AI-assistance modes impact adult AAC users’ perceptions of their agency. Similar to Kantosalo et al. (Kantosalo et al., 2014), we want to better understand the roles computers could take in supporting humor expression for AAC users.

5. Qualitative Interviews: understanding use and challenges of AAC technology to create humorous comments

AAC technology has come a long way, and people with speech disabilities already use their AAC devices to make humor as part of their daily lives. To better contextualize the role that both humor and AAC technology play in their lives, we conducted qualitative interviews with our target population. The interview protocol and data processing were reviewed by our institutional review board and approved under protocol <anonymous for review>.

5.1. Participants

We recruited seven people with speech disabilities. Table 1 contains an overview of their basic demographics. To increase access to our study, we allowed participants to attend remotely via video conference software. This increased the diversity of participants we recruited.

| ID | Age | Gender | Severity of Motor Disability | AAC Device(s) | Input Modality |
|---|---|---|---|---|---|
| P1 | 25-34 | Female | Mild (minor limitations in movement) | Phone/iPad (NovaChat/TouchChat) | Direct touch |
| P2 | 25-34 | Non-binary | Mild (minor limitations in movement) | Laptop/iPad | Direct touch/physical keyboard (preferred) |
| P3 | 25-34 | Female | Profound (unable to perform daily activities without significant assistance) | Accent 1400, NuVoice | Direct touch with guard |
| P4 | 45-54 | Male | Moderate (noticeable limitations, performs daily activities with some assistance) | Accent 1000 | Direct select input |
| P5 | 45-54 | Male | Profound (unable to perform daily activities without significant assistance) | Tobii Dynavox | Eye gaze |
| P6 | 25-34 | Male | Profound (unable to perform daily activities without significant assistance) | Eyegaze Edge/Grid 3 | Eye gaze/track-ball |
| P7 | 35-44 | Male | Severe (requires assistance for most daily activities) | Tobii Dynavox | Eye gaze |

5.2. Structure

We conducted semi-structured interviews. The two main themes we were interested in exploring were (1) challenges and usage of AAC for expressive communication, and (2) the role humor plays in the lives of our participants. We include the full interview protocol in the supplementary material.

As mentioned in the positionality statement, the lead author of this work has a speech disability. Thus, interviews were conducted using his AAC device, which added a layer of observation to the conversation and interview process.

5.3. Data and Analysis

We collected and transcribed video and audio recordings of seven interviews. Interviews were conducted remotely through video conference software. These interviews lasted between one and three hours to accommodate each participant’s needs and speech rate.

We used thematic analysis (Braun and Clarke, 2006) to derive insights from the interviews. Three members of the research team applied open codes to the interview transcripts. First, they each coded the same transcript individually. Then, they came together to review discrepancies, reached a consensus, and split the remaining transcripts among themselves. After that, the three researchers came together again to conduct two rounds of affinity diagramming to group the codes by thematic meaning and similarity. Finally, they converged their codebooks to a total of 62 codes and extracted three higher-order themes with six underlying sub-themes. For the full codebook, please refer to our supplementary material.

5.4. Results

In this section we outline the main themes illustrated by relevant quotes and observations. We find the following overarching themes: reliance on AAC, current use and experience of humor with AAC, and barriers to humor expression. Each of these is broken down into more specific observations from the interviews in the sections below.

5.4.1. Reliance on AAC: “Communication is wild and crazy, but it is also the window to my world”

Participants shared various reflections on their experiences with AAC, emphasizing both its challenges and benefits. For all participants, AAC is essential for communication. P2 described AAC as “freedom,” allowing them to avoid rationing speech: “I can often rely on mouth words, but not always, and having AAC available … it means I don’t have to worry about rationing speech.” P3 echoed this sentiment, calling AAC a “blessing” that helps them stay connected: “I would be lost without it.” Similarly, P1 shared how AAC has improved their interactions with loved ones: “[AAC technology] helped me communicate with friends and family. When I talk with my voice, and they can’t understand me.”

Humor is central to how participants interact and relate to others. P6 explained how humor lightens the burden of AAC use, making interactions more enjoyable and natural: “We laugh a lot. It makes using AAC less of a chore… and makes us like regular guys.” P4 added that humor comes naturally to them: “I laugh a lot and find things funny”. Yet, P5 highlighted the complexity of navigating others’ reactions to humor, noting that people sometimes laugh nervously because they are unsure how to respond: “When I am funny, people laugh in a nervous laugh, they don’t know how to take me. I’m just like everyone else.”

Participants shared how AAC opens up their world, giving them access to experiences like anyone else. P7 captured this sentiment, saying: “I learned this communication is wild and crazy, but it is also the window to my world, giving me the chance to do so many things just like everyone else.” P6 agreed: “That is life as an AAC user—accept is good enough.”

Overall, participants’ reflections show how AAC not only facilitates essential communication but also supports humor, emotional expression, and personal connection, despite its challenges.

5.4.2. Current Use and Experience of Humor with AAC

This major recurring theme revealed two contexts in which humor plays an important role for AAC users, with rather different implications. We divide it into humor as an avenue for interpersonal relationships and humor as an avenue for personal expression.

Humor as an avenue for interpersonal relationships. Humor functions as a form of social capital for AAC users. Participants frequently discussed the significance of humor in their lives. P3, for example, emphasized humor’s central role in fostering connections: “[Humor is] very important. My family and I are always joking around. Staff and I too.” She then elaborated that humor allows her to “interact with people better, connecting better with people who I am talking to.” This reflects how humor fosters a sense of normalcy and closeness with others.

Humor can also be a means of contributing to conversations and integrating more fully into social interactions. P1 described a moment where humor enabled them to engage in a conversation: “When my friend is saying something funny, then I join in by adding a few words to help them out”. In doing so, they illustrated humor’s capacity to bridge the gap between AAC users and their conversational partners. P1 further elaborated: “Sometimes I make everyone laugh because it gives everyone the opportunity to enjoy my jokes”, demonstrating how humor allows AAC users to shift the dynamic and become a focal point of joy and connection.

Several participants shared humor’s role in making them feel more relatable and human. P6 explained how humor helps break down barriers in conversation, whether casual or formal: “Humor is important in conversations for SGD users, just like any other person who can use their physiological voice. For example, in casual conversation or when giving a speech, you want to get the audience to relax and relate to you more.” Similarly, P7 reflected on the importance of humor in being recognized as a person, adding that it helps “to get people to see that I am a person.” P4 shared a related example during a webinar about self-advocacy, where humor helped them connect with their audience: “I was saying because I am an old fogey that I had more experience than others; people laughed at that.” This illustrates how humor can defuse tension or build rapport, even in more structured settings.

These reflections reveal that humor is not just a tool for interaction but also enhances AAC users’ sense of self-worth and social integration. P6 succinctly captured this sentiment: “Humor is important. Using an AAC is hard enough, and I love joking around with my friends.” P5 echoed this by adding, “I like to use humor every day. I like to laugh; I always have.” Together, these statements underline the critical role humor plays in helping AAC users connect, relate, and seamlessly integrate into social environments, acting as a crucial form of social capital.

Humor as an avenue for personal expression. We observed that the ways AAC users express humor reveal diverse preferences. For example, P1 mentioned using common phrases like “Oops, I did it again” or “How rude?” to convey expressive reactions during an ongoing conversation. P4 shared, “For me, it’s just when I find something funny or when I’m writing a speech I can naturally put things in that I think are funny, not necessarily that they are funny to other people.” This illustrates how humor is often personal and subjective.

Humor is understood and used by AAC users in varied ways. P3 emphasized playfulness in language, describing her preference for “play on words, not Shakespearean style, but stuff to joke around. Sarcasm. Light conversations.” P2 expanded: “I definitely use a lot of humor like, saying true things in humorous ways that point out the absurdity So, satire-like but not quite satire? Not quite sarcasm either, usually. Like I said: saying true things in ways that point out the absurdity.” P4 and P5 added that they use sarcasm a lot.

P6 suggested that AAC devices should include genres of jokes such as “your mama” jokes. Furthermore, he explained: “Having the most current popular expressions, including single words, and having a speech device that updates regularly to add them. It shouldn’t take any away because you might want to continue using a particular word or saying, even if it isn’t the most current.” This illustrates the dynamic nature of humor and how language evolves over time.

AAC users express humor in varied ways, and their preferences can be culturally and contextually specific, which makes designing interfaces that capture this diversity of styles complex.

5.4.3. Barriers to Humor Expression

We found three major barriers for AAC users that hinder their ability to freely express their humor: intonation, timing, and technical limitations. Here, we provide examples of our participants’ lived experiences and perspectives to explain how these factors affect their contextual communication choices and, consequently, the way they express humorous comments.

Intonation: It’s not what you say but how you say it. A common challenge AAC users faced when creating humorous comments was a lack of control over the intonation of their devices (P2, P3, P4, P5, P6, and P7). Participants felt that these devices did not reflect the tone of their “inner voice” to deliver jokes appropriately. For example, P4 explained how the voice does not match how he thinks he sounds: “The tone I have in my head don’t translate well to the device. It may seem angry and not funny when I say it with the device… We get frustrated because people don’t hear it the way we hear it in our head”. Still, P4 emphasized that with practice, they learned to adapt their timing and cadence: “The more I get the feel of my timing with the device… you can put jokes in your cadence because you hear it and start speaking in your head like the device.”

Thus, participants felt that tone was a fundamental aspect of communicating humorous comments and “sounding like a human,” on equal footing with people who can speak with their own voice. P6 said that what they dislike most about their AAC device is that “There isn’t much voice inflection, or the ability to regulate the speed without going into settings,” and that to tell a joke “you might want to slow down part of what you are saying, like a pre-emphasis,” explaining how tone and speech rate could enhance the delivery of a joke. P5 added that “sarcasm is very hard to convey as an AAC user” because “this device doesn’t have emotion.” P3 further detailed that the device makes her sound monotone since she cannot change the tone “right away,” which affects how she thinks others perceive her. Lack of control over intonation is why P2 sometimes prefers to project their text with a projector instead of using speech output: “Flat tone of voice can be a problem when I’m actually using speech generation … but I usually don’t do that anyways(…) TTS is so bad. Like, it’s better than not having the option. But that’s different from being good.”

All participants use a combination of non-verbal gestures (facial expressions, hand gestures, and bodily movements) and their natural voice to react to ongoing conversations. For example, P4 detailed how they switch between their natural voice and their device depending on their expressive needs: “Expressive reactions come from my natural voice and I use my device for clarity for people who don’t understand me.” Non-verbal gestures play an important role in signaling humor: “it’s usually the looks I give or the sounds I make that is letting people know I’m joking.” However, different AAC users have different expressive capabilities. P1, P2, and P4 can use natural speech and move their bodies to express themselves, whereas P6 cannot move their body on their own.

Overall, participants’ lived experiences have shown how they cope with the lack of intonation in their devices, but these techniques are not universally applicable. This creates significant barriers in conveying humor and nuance, which, as discussed before, play a fundamental role in AAC users’ communication choices and self-perception.

Timing: “Timing is everything”. We asked participants what they prioritize when delivering humorous comments: timing or expressiveness. The answers were surprisingly evenly split, with a slight majority prioritizing expressiveness (P2, P4, P6, and P7) over timing (P1, P3, and P5); participants clarified that they gauge their conversational partner’s expectations to decide how to communicate from moment to moment. Relatedly, and perhaps more importantly, all participants except P2 agreed that the window in which a humorous comment can land is short, and they feel they are always “falling behind” in conversations. P4, P5, P6, and P7 shared this feeling; as P4 put it: “Sometimes I see and hear something funny while I’m typing something else mid-sentence. I can’t express that and keep what I was saying”.

Participants mentioned four strategies to deliver timely humorous comments: (1) signaling intention to speak (P2), (2) using expressive reactions (P4), (3) shortening or simplifying comments (P1, P3), and (4) waiting for their turn to speak (P1). Strategies 1 and 2 give AAC users control over timing, while strategies 3 and 4 are responses to the conversational partner’s control of the conversation. P1 explained that sometimes “you have to sacrifice what you would want to say, because it’s too long”. P7 added that he lets people continue speaking even while he is typing because he feels “the slowness gives anxiety (…) and people cannot keep up with the conversation”.

Participants explained how they leverage pre-generated messages to deliver humorous comments faster. Several participants (P1, P2, P3, P5, and P6) were accustomed to composing phrases in advance and using them at a moment’s notice. They explained that they use a built-in feature of their SGD to store predefined phrases like “I am sorry about that” or “ha!” (P6) to react humorously to their conversational partner’s comments. While these phrases can serve as humorous reactions in any conversation, they are by definition context-agnostic and do not reflect deeper thoughts or direct references to what is being said.

To summarize, timing is a major barrier for AAC users when making humorous comments, and they are constantly trying to overcome it. How to help AAC users deliver contextually appropriate humorous comments faster, while retaining their original intention, remains an open question.

Technical Limitations of AAC. Participants brought up technical challenges of AAC technology that hinder their ability to make humorous comments. Two limitations, highlighted by P2 and P6, directly affect AAC users’ expressiveness: lack of multilingual support and incorrect pronunciation of words in AAC software. P2 is a bilingual speaker of English and Mandarin, and they expressed that: “Mandarin Chinese AAC’s inability to detect which pronunciation should be used for characters pronounced differently based on context is 100% annoying.” This problem is not unique to multilingual users; P6 clarified that English-language AAC has a similar problem: “I hate that it does not pronounce words that are spelled the same correctly, like read, or lead”.

As for limitations that indirectly affect users’ expressiveness, common challenges were battery life and Internet access. Participants rely on AAC technology to communicate with others, so they choose software and adopt patterns of use that guarantee their device is always available. P3, P4, P6, and P7 have a strong preference for software that does not rely on Internet access, and they adopt similar patterns to avoid battery drain. As P4 explained: “Unless I’m home, I don’t turn on my internet because it drains batteries faster”.

Finally, participants mentioned indirect barriers posed by proprietary AAC software and hardware. AAC users’ range of mobility varies greatly, and this is reflected in the wide variety of their software and hardware choices. For instance, P2 uses at least five different applications to communicate in different settings (e.g., Catalystwo, Proloquo4Text, Flip Writer, text-projection software, and eSpeak), and P3, P4, P5, and P6 each use different devices with different input assistance (e.g., eye tracking, Accent 1000, Nuvoice).

6. Exploring a design space of AAC interface design to create humorous comments

By exploring a part of the design space of AAC interfaces, we aim to better understand which trade-offs go into designing such applications. Given the critical role timing plays for AAC users, as revealed in the qualitative interviews, we narrow down that space by focusing on techniques that leverage AI as a form of auto-complete. While auto-complete can speed up making humorous comments, it risks diminishing the user’s sense of agency by making jokes for users instead of with them. To explore this trade-off, we consider different UI components for such auto-complete and implement four prototypical interfaces supporting different degrees of agency (user control) and efficiency (time to deliver the humorous comment).

6.1. An Example Interface

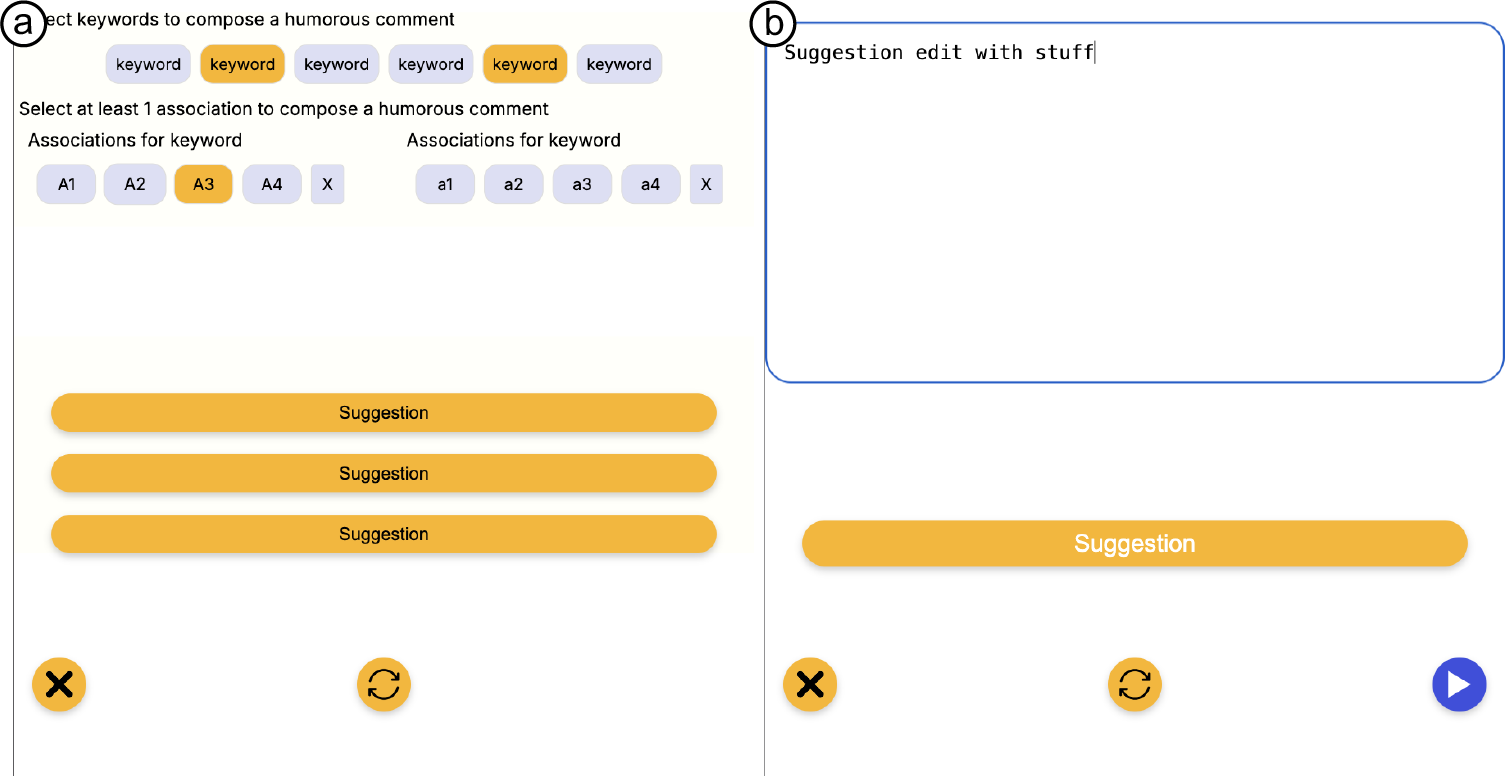

Before diving into specific UI design considerations, we give a brief overview of one of our applications to convey the general UI flow, outlined in Figure 2. For research purposes, we designed our interfaces to be AAC device-independent: they run in the browser and essentially consist of a text field in which users can type and access the Text-To-Speech (TTS) module to speak their words. By keeping the interface simple and implementing it as a browser tool, we allow users to leverage their own input devices (ranging from eye tracking to virtual or physical keyboards, as we saw in our qualitative interviews).

(a) We extended this app with a “joke mode”, which triggers the different interfaces we explore in this section to support users in composing humorous comments. (b) The user can press the button to output their humorous comment through text-to-speech. (c) This example interface, later referred to as I2, displays keywords based on the ongoing conversation, which is captured and transcribed using automatic speech recognition (ASR). (d) Users start typing their joke and/or select keywords to compose it. (e) The interface displays auto-completed joke suggestions and updates them as users provide more context. (f) If users do not like a suggestion, they can hit the refresh button to get a new one. When satisfied, users select the suggestion, which copies it to their input field; they can then either play it right away or customize the text.
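The flow in (a) to (f) can be summarized as a small state object. The following is a minimal, hypothetical Python sketch for illustration only (our prototypes run in the browser and are not implemented this way): `JokeModeSession`, `keyword_stub`, and all method names are assumptions, and the deterministic stub stands in for the LLM that actually produces suggestions.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical generator signature: (selected keywords, typed prefix) -> one suggestion.
SuggestFn = Callable[[List[str], str], str]


def keyword_stub(keywords: List[str], prefix: str) -> str:
    """Deterministic stand-in for the LLM, for illustration only."""
    topic = " and ".join(keywords) if keywords else "this conversation"
    return f"{prefix}Here's a joke about {topic}!"


@dataclass
class JokeModeSession:
    """Sketch of the I2-style flow: keywords -> single suggestion -> accept/edit -> speak."""
    generate: SuggestFn
    input_field: str = ""
    joke_mode: bool = False
    selected_keywords: List[str] = field(default_factory=list)
    suggestion: Optional[str] = None

    def toggle_joke_mode(self) -> None:
        # (a) Enter or leave joke mode; leaving discards the current selection.
        self.joke_mode = not self.joke_mode
        if not self.joke_mode:
            self.selected_keywords.clear()
            self.suggestion = None

    def select_keyword(self, keyword: str) -> str:
        # (d) Each selected keyword adds context; (e) the suggestion is regenerated.
        if keyword not in self.selected_keywords:
            self.selected_keywords.append(keyword)
        return self.refresh()

    def refresh(self) -> str:
        # (f) Ask for a new suggestion using the current keywords and typed text.
        self.suggestion = self.generate(self.selected_keywords, self.input_field)
        return self.suggestion

    def accept(self) -> str:
        # Selecting the suggestion copies it into the input field for playback or editing.
        if self.suggestion is not None:
            self.input_field = self.suggestion
        return self.input_field

    def speak(self, tts: Callable[[str], None]) -> None:
        # (b) Send the final text to a text-to-speech callback, then clear the field.
        tts(self.input_field)
        self.input_field = ""


# Example run with the stub generator.
session = JokeModeSession(generate=keyword_stub)
session.toggle_joke_mode()
session.select_keyword("pizza")
latest = session.select_keyword("pineapple")
accepted = session.accept()
spoken: List[str] = []
session.speak(spoken.append)
```

Keeping the suggestion separate from the input field mirrors the design choice above: nothing is spoken until the user explicitly accepts, and optionally edits, a suggestion.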

6.2. Design Components

The simple interface introduced above has three dimensions of user interface components; we explore them by developing four applications that span these dimensions. Table 2 shows which subset of the UI components each interface uses. We detail the design of these components in the next sections.

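| ID | Input: context | Input: keyword | Input: assoc | Input: auto | Suggestions: single | Suggestions: multiple | Suggestions: editable | Guidance: open | Guidance: wiz |
|---|---|---|---|---|---|---|---|---|---|
| I1 | ✓ | ✓ | | | | ✓ | | ✓ | |
| I2 | | ✓ | | | ✓ | | ✓ | ✓ | |
| I3 | | ✓ | ✓ | | | ✓ | | | ✓ |
| I4 | | | | ✓ | ✓ | | ✓ | | |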

I2 is the interface shown in Figure 2; the other interfaces are variations of it. I1 lets users interact with the conversation context by selecting speech bubbles, rather than only keywords, to tune the jokes. I3 is close to I2 in that it also uses keywords as its base, but it requires users to select additional associations related to these keywords to fine-tune their meaning in the context of a joke. Finally, I4 is the simplest interface: it generates suggested jokes automatically from the conversation history, but gives users no way to tune them.

6.2.1. Input Techniques to Tune Jokes

The input required from users to create humorous comments plays a large role in the efficiency of auto-complete. We explore four different solutions; more user input reduces efficiency but gives users an increased sense of control. Figure 3 shows the different techniques side by side. (a) Full Auto is the simplest technique: it does not allow users to tune the joke and simply creates a suggestion based on the current conversation context. This technique corresponds to I4. (b) Keyword selection simplifies the interaction by distilling the conversation into essential terms, enabling faster interjections and thereby enhancing efficiency while maintaining moderate agency through keyword choices. Both I1 and I2 allow for keyword selection. (c) Conversation context selection allows users to interact directly with specific parts of the conversation, offering high agency by providing detailed context for generating humorous comments, at a cost to efficiency due to the increased interaction time. This technique is used in I1. Finally, (d) Association selection, inspired by Witscript (Toplyn, 2023), allows users to specify associations related to selected keywords to better steer the joke; the additional association helps disambiguate how the keyword should be used. This forms the basis of I3.

In addition to these input techniques, all interfaces let users generate a joke by starting to type; the typed content is then used as the start of each suggestion. By comparing these methods, we gain insight into how different levels of user control and interaction complexity affect the balance between expressiveness and speed in AAC interfaces.
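One way to combine these input techniques is to fold whatever the user has selected into a single LLM prompt. The sketch below is hypothetical: `JokeRequest`, `build_prompt`, `enforce_prefix`, and the prompt wording are illustrative assumptions, not the prompts used in our prototypes. The default window of five utterances mirrors the “past 5 sentences” that Interface 4 is described as using in Section 7.4.1.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class JokeRequest:
    """Inputs an interface may collect; which fields are filled depends on the interface."""
    history: List[str]                                         # ASR transcript, oldest first
    selected_bubbles: List[str] = field(default_factory=list)  # conversation context selection (I1)
    keywords: List[str] = field(default_factory=list)          # keyword selection (I1, I2, I3)
    associations: List[str] = field(default_factory=list)      # association selection (I3)
    typed_prefix: str = ""                                     # text typed so far (all interfaces)


def build_prompt(req: JokeRequest, window: int = 5) -> str:
    """Assemble a hypothetical LLM prompt; the wording is illustrative only."""
    # Explicitly selected bubbles take precedence; otherwise fall back to recent history (Full Auto).
    context = req.selected_bubbles or req.history[-window:]
    lines = ["Suggest a short, friendly, humorous reply for an AAC user.", "Conversation:"]
    lines += [f"- {utterance}" for utterance in context]
    if req.keywords:
        lines.append("Focus on: " + ", ".join(req.keywords))
    if req.associations:
        lines.append("Play on these associations: " + ", ".join(req.associations))
    if req.typed_prefix:
        lines.append(f'The reply must start with: "{req.typed_prefix}"')
    return "\n".join(lines)


def enforce_prefix(suggestion: str, typed_prefix: str) -> str:
    """Guarantee the typed text begins the suggestion, even if the model ignored the instruction."""
    if not typed_prefix or suggestion.startswith(typed_prefix):
        return suggestion
    return f"{typed_prefix.rstrip()} {suggestion.lstrip()}"


full_auto = build_prompt(JokeRequest(history=["u1", "u2", "u3", "u4", "u5", "u6"]))
guided = build_prompt(JokeRequest(history=["We ordered pizza"], keywords=["pineapple"],
                                  associations=["Hawaii"], typed_prefix="Honestly,"))
fixed = enforce_prefix("pineapple belongs in a fruit salad", "Honestly,")
```

Enforcing the prefix in code, rather than relying only on the prompt, is one way to keep the user's own words at the start of every suggestion regardless of model behavior.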

6.2.2. Presentation of Joke Suggestions

We explore three ways to present suggested jokes to users: Multiple Suggestions, Refining a Single Suggestion, and Looping Through Suggestions. Multiple Suggestions (I1 and I3) enhance agency by offering a variety of options, increasing the chance that users find a fitting comment. However, the time it takes to read several options slows down decision-making, particularly in fast-paced conversations. Refining a Single Suggestion (I2) gives users a quick starting point: before TTS is triggered, the suggestion is displayed in the text field, where users can modify it. Users can thus make small adjustments to tailor the output to their needs without sacrificing much speed. Finally, all interfaces allow users to loop through suggestions if they do not like a specific joke. Together, these strategies show how presentation choices trade breadth of options against speed of selection in AAC interfaces.
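The presentation styles differ mainly in how many options are on screen at once and in what refresh does. The hypothetical sketch below assumes a batch of suggestions has already been generated and that refresh pages through it with wrap-around; whether our prototypes prefetch a batch or query the model again on each refresh is not specified here. `SuggestionPresenter` and its parameters are illustrative names.

```python
from typing import List


class SuggestionPresenter:
    """Sketch of single vs. multiple suggestion presentation over a pre-generated batch.

    'multiple' shows k options at once (as in I1, I3); 'single' shows one (as in I2).
    refresh() loops through the batch, wrapping around at the end.
    """

    def __init__(self, batch: List[str], mode: str = "single", k: int = 3) -> None:
        if mode not in ("single", "multiple"):
            raise ValueError("mode must be 'single' or 'multiple'")
        if not batch:
            raise ValueError("batch must not be empty")
        self.batch = batch
        self.page_size = 1 if mode == "single" else k
        self.offset = 0

    def visible(self) -> List[str]:
        # Suggestions currently on screen, wrapping around the end of the batch.
        n = len(self.batch)
        return [self.batch[(self.offset + i) % n] for i in range(min(self.page_size, n))]

    def refresh(self) -> List[str]:
        # Advance by one page, e.g. when the user dislikes every visible option.
        self.offset = (self.offset + self.page_size) % len(self.batch)
        return self.visible()


batch = ["joke 1", "joke 2", "joke 3", "joke 4"]
single = SuggestionPresenter(batch)
single_first, single_next = single.visible(), single.refresh()
multi = SuggestionPresenter(batch, mode="multiple", k=3)
multi_first, multi_next = multi.visible(), multi.refresh()
```

The page size is the only difference between the two modes, which makes the reading-time cost of multiple suggestions explicit: each refresh in multiple mode puts k new options in front of the user.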

6.2.3. Open or Guided Interface

Past work suggests that constrained choices can ease creative expression. We explore two guidance approaches: Wizard-Style guidance (Figure 4, A) and no/open guidance (Figure 4, B). Wizard-Style guidance (I3) requires users to select keywords and then associations before jokes are generated. In contrast, open guidance (I1 and I2) provides users with quick, AI-generated suggestions that they can refine by selecting additional UI elements if needed. While guidance likely yields well-fitting jokes, open refinement can be significantly faster, at the cost of less structure in coming up with jokes.
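Wizard-style guidance can be modeled as a small step machine in which each step must have a selection before the next one unlocks. The sketch below is hypothetical: the explicit `advance`/`go_back` actions and the click counter are assumptions we add to make the interaction cost of guidance visible. The example trace loosely follows the back-and-forth P4 performed with I3 (Section 7.4.4).

```python
from enum import Enum, auto
from typing import List


class Step(Enum):
    KEYWORDS = auto()
    ASSOCIATIONS = auto()
    SUGGESTIONS = auto()


class JokeWizard:
    """Hypothetical I3-style guidance: keywords -> associations -> suggestions."""

    def __init__(self) -> None:
        self.step = Step.KEYWORDS
        self.keywords: List[str] = []
        self.associations: List[str] = []
        self.clicks = 0  # every user action counts as one click

    def pick_keyword(self, keyword: str) -> None:
        self.clicks += 1
        if self.step is not Step.KEYWORDS:
            raise RuntimeError("keywords can only be picked on the keyword step")
        self.keywords.append(keyword)

    def pick_association(self, association: str) -> None:
        self.clicks += 1
        if self.step is not Step.ASSOCIATIONS:
            raise RuntimeError("associations can only be picked after keywords")
        self.associations.append(association)

    def advance(self) -> Step:
        # Move forward only once the current step has at least one selection.
        self.clicks += 1
        if self.step is Step.KEYWORDS and self.keywords:
            self.step = Step.ASSOCIATIONS
        elif self.step is Step.ASSOCIATIONS and self.associations:
            self.step = Step.SUGGESTIONS
        return self.step

    def go_back(self) -> Step:
        # Going back keeps earlier selections, so users can add to them.
        self.clicks += 1
        if self.step is Step.SUGGESTIONS:
            self.step = Step.ASSOCIATIONS
        elif self.step is Step.ASSOCIATIONS:
            self.step = Step.KEYWORDS
        return self.step


# Two keywords, one association, back, one more keyword, a second association.
wizard = JokeWizard()
wizard.pick_keyword("pizza")
wizard.pick_keyword("pineapple")
wizard.advance()
wizard.pick_association("Hawaii")
wizard.go_back()
wizard.pick_keyword("delivery")
wizard.advance()
wizard.pick_association("late")
final_step = wizard.advance()
```

Even this short trace needs nine actions before any suggestion appears, which illustrates why guidance can cost time compared to open refinement.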

6.3. Implementation

The interfaces described above all build on a similar tech stack, illustrated in Figure 5, which we make available to other researchers as a platform for further exploring interface design for AAC technology. At the core is a Next.js 14.2.5 application running on Vercel, which allows for easy deployment across browsers. The front end uses React and Tailwind, while the back end consists of three core components: Amazon Transcribe for speech recognition, Amazon Polly for neural text-to-speech, and OpenAI GPT (OpenAI et al., [n. d.]) to process the conversation and generate keywords, associations, and suggestions.
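The data flow through these components can be summarized as follows: ASR feeds a rolling transcript, an LLM turns that transcript into suggestions, and TTS voices the chosen text. The Python sketch below only illustrates this wiring under stated assumptions. The `SpeechToText`, `JokeModel`, and `TextToSpeech` protocols and the fake services are hypothetical placeholders for Amazon Transcribe, OpenAI GPT, and Amazon Polly, no real SDK calls are made, and the five-utterance window is an assumption based on the context described for Interface 4.

```python
from collections import deque
from typing import Deque, List, Optional, Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio_chunk: bytes) -> str: ...


class JokeModel(Protocol):
    def suggest(self, context: List[str], keywords: List[str]) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


class ConversationPipeline:
    """Wiring sketch: ASR -> rolling transcript -> LLM suggestion -> TTS audio."""

    def __init__(self, asr: SpeechToText, llm: JokeModel, tts: TextToSpeech, window: int = 5) -> None:
        self.asr, self.llm, self.tts = asr, llm, tts
        # Keep only the most recent utterances as context (assumed window size).
        self.transcript: Deque[str] = deque(maxlen=window)

    def on_partner_audio(self, audio_chunk: bytes) -> None:
        # Transcribe each chunk of the partner's speech; ignore empty results.
        text = self.asr.transcribe(audio_chunk).strip()
        if text:
            self.transcript.append(text)

    def suggest(self, keywords: Optional[List[str]] = None) -> str:
        return self.llm.suggest(list(self.transcript), keywords or [])

    def speak(self, text: str) -> bytes:
        return self.tts.synthesize(text)


# Fake services for a local dry run (hypothetical; no network calls).
class FakeASR:
    def transcribe(self, audio_chunk: bytes) -> str:
        return audio_chunk.decode("utf-8")  # pretend the audio already is text


class FakeLLM:
    def suggest(self, context: List[str], keywords: List[str]) -> str:
        return f"Joke about '{context[-1]}'" if context else "Joke about nothing"


class FakeTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")  # stands in for synthesized audio


pipe = ConversationPipeline(FakeASR(), FakeLLM(), FakeTTS())
for i in range(7):
    pipe.on_partner_audio(f"utterance {i}".encode("utf-8"))
pipe.on_partner_audio(b"   ")  # silence produces no transcript entry
suggestion = pipe.suggest()
audio = pipe.speak(suggestion)
```

Keeping each service behind a narrow interface is one way to swap real clients in later without changing the interface logic; the fakes make the flow testable offline.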

7. User Study: Understanding the practical implications of design choices

To evaluate how the design trade-offs discussed in the previous section affect the user experience, we conducted a user study with the four resulting interfaces. We were specifically interested in how participants experienced the timing of, and their control over, the humorous comments they made with each interface. The study and data collection protocols were reviewed and approved by our institution’s IRB.

7.1. Participants

Five participants completed the user study (P1, P2, P3, P4, and P5); due to personal circumstances, P6 and P7 could not participate. P1 and P3 participated in person at their homes, which gave us additional contextual information, such as non-verbal cues, about how they interacted with the researcher conducting the experiment.

7.2. Procedure

Each study session consisted of an interface demonstration followed by a conversational task with a researcher to try out the corresponding interface. Participants engaged in four fictional conversations lasting approximately 6 to 12 minutes. The conversations included the participant and a researcher in a one-on-one format. The researcher role-played as a conversational partner, while a second conversational partner (CP) was present in the room for protocol or system clarification if needed but did not actively participate in the conversation.

P2-P5 experienced all four interfaces during the study, and P1 experienced all interfaces except the Full-auto interface (Interface 4) because she had to end the study early.

7.2.1. Conversational Tasks

The conversations were semi-structured and informal, focusing on generating humorous comments based on the ongoing dialogue. Study participants engaged in the conversations in various ways, using either speech (via text-to-speech) or written text. We designed four conversational tasks to elicit humorous comments within the context of a real-time interaction, based on the following topics:

- Task 1: Getting Lost in a Costume Store. Participants engaged in a scenario where they and the researcher were trying to find the perfect outfit for an upcoming party. The conversation involved navigating a series of humorous mishaps, such as accidentally trying on absurd costumes, getting lost in the store, and debating which ridiculous outfit would be the most entertaining.

- Task 2: Applying to the Wrong Job. In this task, participants responded to a scenario in which the researcher mistakenly applied for and received a job in a funny or unusual role. Participants were asked to offer advice on what the researcher should do next.

- Task 3: Explaining a Funny Mishap at Work. For this task, the researcher described a humorous mishap they experienced at the office to the participant, who acted as a workmate. The task required participants to make light of the situation while the researcher explained what had happened.

- Task 4: Ordering Pizza. Participants engaged in a conversation where they were placing a pizza order with the researcher. The discussion involved humorous misunderstandings about toppings, delivery instructions, and the restaurant’s unusual options.

After completing each task, participants filled out a Likert-scale questionnaire to give feedback. Finally, after all four conversational tasks, participants answered open-ended questions in an online survey where they could elaborate on what they liked or disliked about the interfaces, for example: What did you like the most about the interfaces? What did you find most challenging about using the interface?

7.3. Data Collection and Analysis

Video and audio were recorded for analysis during each session. During the tasks, we also recorded the participant’s screen to capture their interaction with the different on-screen elements.

7.3.1. Interaction Coding

To analyze usage of the different apps and features, we coded the videos of each interface, applying closed coding to each interaction. For example, we coded each time participants entered joke mode or interacted with an interface feature. This allowed us to identify emerging patterns in how users interacted with our interfaces. Figure 7 characterizes these interactions by color and shape; we then drew conclusions from the observed patterns.
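Once each interaction is coded, patterns such as those in Figure 7 come down to counts per participant and interface. A minimal sketch, assuming each coded event is stored as a (participant, interface, code) tuple; the code labels (`refresh`, `spoke_suggestion`, `spoke_typed`) and the “suggestion uptake” ratio are hypothetical, and the toy events below are invented for illustration, not study data.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# One coded event: (participant, interface, code). Code labels are hypothetical.
Event = Tuple[str, str, str]


def tally(events: Iterable[Event]) -> Dict[Tuple[str, str], Counter]:
    """Count how often each code occurs per (participant, interface) pair."""
    table: Dict[Tuple[str, str], Counter] = {}
    for participant, interface, code in events:
        table.setdefault((participant, interface), Counter())[code] += 1
    return table


def suggestion_uptake(counts: Counter) -> float:
    """Share of spoken comments that came from a suggestion rather than being typed."""
    spoken = counts["spoke_suggestion"] + counts["spoke_typed"]
    return counts["spoke_suggestion"] / spoken if spoken else 0.0


# Toy events for two anonymous users "A" and "B" (invented, not study data).
events = (
    [("A", "I4", "refresh")] * 3
    + [("A", "I4", "spoke_suggestion"), ("A", "I4", "spoke_typed"), ("A", "I4", "spoke_typed")]
    + [("B", "I2", "select_keyword"), ("B", "I2", "spoke_suggestion")]
)
counts = tally(events)
uptake_a = suggestion_uptake(counts[("A", "I4")])
uptake_b = suggestion_uptake(counts[("B", "I2")])
```

A ratio like this separates using suggestions as inspiration (many refreshes, few spoken suggestions) from adopting them directly.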

7.3.2. Likert-scales and survey analysis

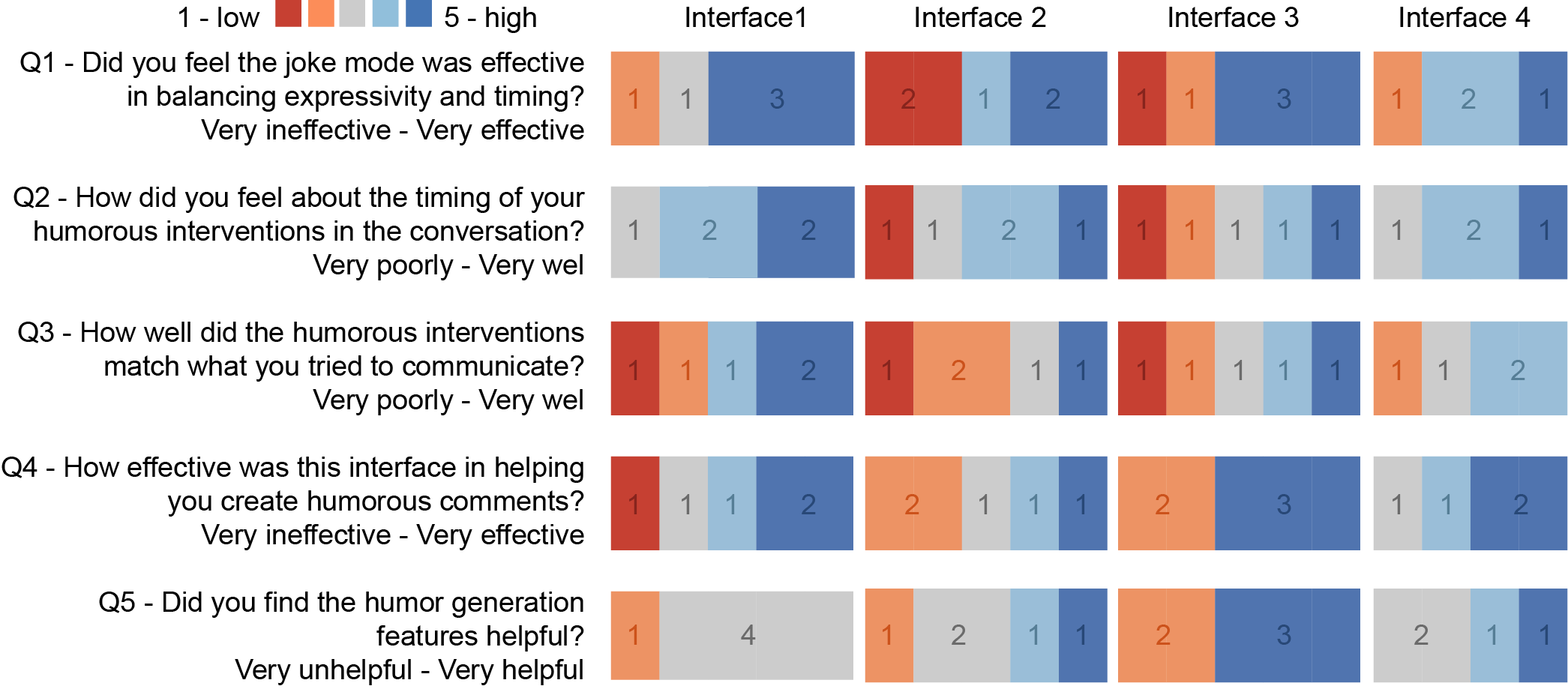

We used Likert-scale questionnaires to assess the usefulness of each feature, capturing participants’ thoughts on how well the tool supported generating humorous comments (e.g., Did you find the humor generation features helpful? How well did the humorous interventions match what you tried to communicate?). Figure 6 presents the results of the Likert-scale questionnaires.
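The results below describe responses as positive, neutral, or negative reactions. A minimal sketch of that grouping, assuming a 5-point scale where 4 and 5 count as positive, 3 as neutral, and 1 and 2 as negative; because Likert responses are ordinal, it reports medians rather than means. The scores in the example are invented, not our participants’ responses.

```python
from collections import Counter
from statistics import median
from typing import Dict, List


def bucket(score: int) -> str:
    """Map a 5-point Likert score to a coarse reaction category (assumed cut-offs)."""
    if not 1 <= score <= 5:
        raise ValueError(f"expected a score from 1 to 5, got {score}")
    if score >= 4:
        return "positive"
    return "neutral" if score == 3 else "negative"


def summarize(scores_by_interface: Dict[str, List[int]]) -> Dict[str, Counter]:
    """Count positive/neutral/negative reactions per interface for one question."""
    return {iface: Counter(bucket(s) for s in scores)
            for iface, scores in scores_by_interface.items()}


def medians(scores_by_interface: Dict[str, List[int]]) -> Dict[str, float]:
    """Median score per interface, which suits ordinal Likert data."""
    return {iface: median(scores) for iface, scores in scores_by_interface.items()}


# Invented example scores for one question (not study data).
toy = {"I1": [5, 5, 4, 3, 2], "I4": [4, 3]}
summary = summarize(toy)
mid = medians(toy)
```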

7.4. Results

We present insights into participants’ experiences with the interfaces designed to support AAC users in generating humorous comments in live conversations. We explored the four interfaces proposed in our design space: (1) Context Bubble Selection, (2) Keyword Selection, (3) Wizard-style Guidance, and (4) Full-auto Suggestions.

In Section 7.4.1, we present participants’ ratings (see Figure 6) to describe their overall experience with each interface. In Sections 7.4.2 to 7.4.5, we dive deeper into participants’ patterns of interaction with each interface (see Figure 7) and describe how well each proposed feature supported AAC users in generating humorous comments. In Sections 7.4.6 and 7.4.7, we highlight participants’ preferences across interfaces and features, as well as their recommendations for improving future tools that support generating humorous comments with AAC.

7.4.1. User Experience

In this section, we present how participants rated each interface in terms of (1) helpfulness, (2) effectiveness, (3) timeliness, (4) expressiveness in matching their intention, and (5) balancing expressiveness and timing (all relating to generating humorous comments). Some findings were surprising; for example, some participants favored the Full-auto interface over the others despite it taking away their agency in generating jokes. Other notable findings include: (1) most participants rated the Contextual Conversation Bubble interface best in terms of expressing their intention, as well as timing, striking a middle ground in this trade-off; yet (2) most participants rated the same interface neutral or somewhat negatively in terms of helpfulness in generating jokes. We provide more details in the following subsections.

Timing vs Intention

As the Q2 results in Figure 6 show, Interface 1 was the best for delivering humorous comments in a timely manner: four participants scored it positively, and only one scored it neutral. Meanwhile, Interface 3 had the strongest negative reaction, with two participants scoring it negatively and one scoring it neutral. In Interface 3, users had to make several more clicks than in the other interfaces before they could generate jokes. Participants felt roughly similar about Interface 2 and Interface 4: both received three positive reactions and one neutral reaction. This is to be expected, since both interfaces required a similar number of clicks to generate a joke. For example, with Interface 2 the user might select two keywords and then send a joke, while with Interface 4 the user might have to refresh the joke generation several times before sending it (see Figure 7). Surprisingly, Interface 1 came out on top. We initially hypothesized that it would rank worst or second worst in timing due to its strict requirement of selecting both conversation bubbles and keywords before seeing the first suggestion. However, we observed that even though participants had to click more often than in Interfaces 2 and 4, they could immediately select the portions of the conversation they wanted to joke about, which was faster than waiting for the right keywords or joke to appear. P4 explained “I think because I’m a person who reacts to what is said instantly it’s difficult to wait for the AI to come up with humorous suggestions. Especially if it doesn’t match what I was instantly thinking”. P2 added “Refreshing [asking for a new suggestion] sometimes made it better but reloading and opening the joke interface were also actions that took time”.

Moving to the Q3 results (intention), Interface 1 again came out ahead of the rest, by a considerable margin: it was the only interface that three participants scored positively. The context bubble selection feature’s ability to select snippets of the conversation, and then keywords derived from those snippets, strongly enhanced users’ ability to convey their intention through the interface. Interface 3 came next, ahead of Interface 2, because it allowed users to refine their intention using the suggested associations. Something interesting happened at the bottom of the ranking: Interface 4 had marginally better scores than Interface 2, even though users had less direct control over the joke output (they could only hit a refresh button that provided a joke based on the past five sentences). Three participants had negative reactions to Interface 2, while only one participant had a somewhat negative reaction to Interface 4. We observed that while using Interface 2, participants were frustrated because they had no control over which keywords popped up, and sometimes the keywords they wanted did not appear immediately after the other person spoke. P2 shared “It gave a lot of jokes that used the keywords but didn’t actually fit the conversation”. We therefore believe that this mismatch in intention evoked stronger negative feelings toward Interface 2 in Q3.

Balancing Timing and Intention to Create Jokes:

In the Q1 results, Interface 1 achieved first place, though not by a large margin. All interfaces received three positive reactions, but the strongest positive reactions were for Interface 1, with three participants giving the highest score (5) and one participant giving a neutral score. Participants felt that Interface 1 struck a reasonable balance between providing features to refine the joke toward their intended message and the time it took to do so. Interfaces 2, 3, and 4 all had an acceptable balance, though Interface 3 came out slightly ahead of the keyword interface (Interface 2), with two participants providing slightly better individual scores (three 5s vs. two 5s and one 4, and one 1 and one 2 vs. two 2s). To decide which interface better balanced intention and timing, participants weighted intention as more important than timing, consistent with our interview results. Although participants had to go through many steps to convey their intention with Interface 3, the benefit of being able to refine their intention outweighed the sacrifice in timing. Between Interfaces 2 and 4 there is no clear difference, though participants had stronger opinions about Interface 2 (two 1s and two 5s vs. zero 1s and one 5). Again, we believe this is because the mismatch in intention when using Interface 2 evoked stronger negative feelings overall.

Humor Generation:

Interface 1 beat Interface 3 as the best in Q3 (matching intention), but it was the least helpful (Q1) at generating humorous comments. While users could intentionally select concrete portions of the conversation (hence its top score in Q3), some users were confused and struggled to access the feature for selecting keywords from the selected conversation bubbles. This made it difficult for the LLM to generate appropriate jokes, since users did not tell the LLM what it should focus on. For example, P4 and P5 had a hard time scrolling through the conversation history due to their input methods (see Section 7.4.2). Thus, despite the added agency it provided, four participants scored Interface 1 as neutral and one as not helpful for generating jokes.

Three participants scored Interface 3 as very helpful (Q1) and very effective (Q2) in generating humorous comments. Since participants could select contextually relevant keywords and then associations that better matched their intention, they had more control over what the LLM would use to generate jokes. This is reflected in its previously presented score on intention (Q3), where Interface 3 ranked second best. Despite this positive outcome, two participants scored this interface as not helpful and somewhat ineffective, which was reflected in the interaction patterns of some of these participants. For example, P3 engaged with the feature only once during their task (see Figure 7, I3-P3).

Interestingly, Interfaces 4 and 2 both received one very helpful, one helpful, and two neutral scores. As argued above, both interfaces required a similar (low) number of clicks to generate a joke. As a result, Interface 4 was perceived as effective, receiving two very effective, one effective, and one neutral score. As P4 put it: “(Interface 4) best of the interfaces so far due to simplicity”. Some participants found interfaces that required fewer clicks helpful and effective for generating humorous comments, even though these interfaces took away some of their control over the jokes.

7.4.2. Conversation context selection and multiple suggestions

The conversation context selection technique in Interface 1 enabled users to select the conversation context bubbles for their humorous comment (see Figure 3, A). Participants’ interaction with the conversation bubbles varied widely (Figure 7). For example, P1 and P3 selected conversation context bubbles only once each, to try the feature. P1 then used one additional joke suggestion without selecting conversation context bubbles again. P3 liked that she could see the transcript of the conversation while listening to it and mentioned: “it helped me think of jokes.”

P4 and P5 had a hard time scrolling through the conversation history due to their input methods (joystick mouse and eye gaze, respectively), which interfered with their ability to see the whole screen; they did not use the conversation context selection feature. In contrast, P2 selected conversation context bubbles 10 times during the task. Interestingly, P2 would use the interface to see the humorous comment suggestions and then type their own version of the joke, as if using the suggestions only as inspiration. All participants except P5 used the suggestions to share humorous comments.

7.4.3. Keywords and single suggestions

Interface 2 allowed users to select keywords extracted from the conversation to refine the humorous comment suggestions (see Figure 3, B). As Figure 7 shows, participants generated more humorous comments with this interface than with any other. All users were able to successfully compose a humorous comment by selecting keywords and suggestions. P2 interacted with the interface the most, selecting 15 keywords throughout the conversation but, as with Interface 1, they used the suggestions as a source of inspiration: after selecting a couple of keywords, they would turn off joke mode and type their own version of the jokes. They picked a suggestion only once, and edited it before playing it.

P3, P4, and P5 showed a similar pattern, choosing only one keyword and then picking the first suggestion. P1, on the other hand, usually selected three keywords to guide the AI toward the joke she wanted. At the end of the conversation, P1 fired two suggestions in a row without picking any keyword, a behavior no other participant showed with any interface. Post-task, she commented “It was easy to use, it made sense but sometimes i felt like I wasn’t in control”. When we asked how this could be improved, she suggested that “typing the keywords” could help her feel in control. P3 added that she liked the “very basic” interface.

7.4.4. Guided interface with keyword associations

Interface 3 guided users, wizard-style, through selecting keywords extracted from the conversation and then keyword associations to generate joke suggestions. P1, P2, and P4 generally interacted more with this interface than with the others, though this may simply reflect the guided style of composing humorous comments. P4 explored suggestion generation in different ways: on one occasion, he selected different associations for the same keyword; on another, he selected two keywords and one association, then went back to the previous step to add another keyword before selecting a second association and finally choosing a suggestion.

Following a more standard path, P1 shared six jokes by following the interface’s guidance: first selecting keywords, then associations, and finally picking a suggestion. Notably, P1 used the refresh option to obtain more suggestions, rather than choosing the first one as in previous interfaces.

P3 showed very limited interaction with this interface, engaging joke mode only once. This might be because she was using one of the researchers as a proxy to click elements on the screen, and this guided interface required many clicks before a user could reach the joke suggestions. Similarly, P5 did not engage joke mode at all with this interface. We hypothesize that this guided interface may not suit eye-gaze input, but this remains speculative without further testing.

P2, on the other hand, interacted with this interface much more than with the others, engaging joke mode 7 times and selecting 11 keywords and 12 associations in total over the whole conversation. However, this did not translate into jokes made using the suggestions: they used a suggestion only once. Most of their interactions ended with them closing joke mode and typing their own jokes after going through the whole guided process. After the task, P4 expressed discomfort with having “too many steps” before he could get a suggestion. Furthermore, P1 shared “keywords [and associations] are fun to pick but sometimes it’s wasn’t easy to choose [between so many options]”. Lastly, P3 expressed excitement about this interface, which works similarly to her AAC device, where she uses associations on a symbol-based keyboard.

7.4.5. Automatic Suggestions

Interface 4 was the simplest interface: it gave users the “best” joke automatically based on the conversation context and the user’s typed input. Users could then accept it, optionally edit it, or hit the refresh button to get a new automatic suggestion. P2 and P4 used the refresh button to quickly iterate through several suggestions before picking one, and both opted to slightly tweak the suggestion manually to make it more personal. P2 hit the refresh button at least 24 times during the session but used a suggestion (with edits) only three times; in all other instances, P2 refreshed the suggestions and then typed their own humorous comment directly.

In contrast, P3 never used the refresh button. On the two occasions when she picked a suggestion, she chose the first option without any edits in order to deliver the joke as quickly as possible. P5 ignored the suggestions completely and typed all of his comments himself; we believe this was because his eye-gaze keyboard, when opened, covered the part of the screen where the suggestions appeared. Due to time constraints, P1 was not able to try this interface.

P3 and P4 said they liked Interface 4 the most because of its simplicity. P3 shared “it helped me think of jokes” and said she liked that it gave suggestions without needing to click anything. When we asked how well the AI matched her intentions, she replied “it matched my intentions and also influenced it”. P4 called it the “best of the interfaces so far due to simplicity” but also noted “As the other interfaces it takes time getting use to using the app generating thoughts and the delay of using the mouse delays flow [of the interaction]”.

7.4.6. Overall Feature Preference

P2 liked the ability to edit a suggestion after picking it (“It was a useful feature when it existed.”) and also liked being able to see both the jokes and the text input at the same time. On that note, P4 found it hard to use the applications on a screen separate from his AAC device, since he was using the computer for Zoom and the interfaces and his touchscreen AAC device as a virtual keyboard for the computer; he suggested “Seeing it on a device I can touch and be in line with my sight”. Despite that, he liked the “Ease of learning” of the different interfaces. P3 expressed “I really liked the first interface [bubbles]. I found the text-to-speech Setup most effective”. P5 said “It was ‘new’ to me. Everything takes a moment to learn at first”, and P3 expressed a similar feeling: “I just found learning several different interfaces hard.”

7.4.7. Recommendations for improvements

Participants expressed enthusiasm for the range of options that our interfaces presented and offered recommendations for access considerations and improvements. Both P2 and P4 expressed the need for “Full keyboard navigability”, on which P4 expanded: “Keyboard shortcuts and things without a mouse in mind. Interfaces might be better on touchscreen for people using direct select methods, for scanners and eye gaze may be troublesome”. This is an important point for future improvements. P3 said that she would want to see “an adult version and a child version for everyone”, implying that she might want to use different types of humor in different situations. P5 added “A sarcastic voice would be nice”. In general, all participants expressed excitement about the opportunity to test different interfaces, and P3 captured that feeling when closing the session: “I’m just excited to see where this project goes, and whether or not my future device will have it.”

8. Discussion

Our work presents one of the first explorations of the design space of interfaces for AI-powered apps that support AAC users in making humorous comments. Our interview study findings revealed the importance of humor for AAC users to connect with others socially. We also found several key barriers in current AAC software and hardware that hinder AAC users’ ability to generate humorous comments, such as intonation, timing, and technical limitations. Our user study findings reveal the nuances and preferences of AAC users for generating humorous comments through participants’ ratings of each of our prototype interfaces. These ratings reveal interesting trade-offs, such as participants enjoying the Full-auto interface despite it taking agency away from them. We also present patterns of interaction that show how each participant uniquely interacted with each interface. In the following section, we propose design recommendations for the development of future AAC technology.

8.1. Recommendations for Design

Based on the two studies outlined above, we draw the following high-level recommendations for the design of AI-powered AAC interfaces for making humorous comments:

Intonation and Expressivity: Both our interview study and user study found that participants placed a high priority on expressivity. Participants deeply desired to express themselves, even if it sometimes meant sacrificing timing. Our interview study also captured a dimension of communication and joke construction that we did not explore in our user study: intonation. As participants explained during the interviews, intonation plays a fundamental role in controlling the setup and the impact of a joke. Relatedly, how the voice sounds (e.g., male, female, child, adult, robotic) was brought up multiple times by several participants during the interviews and the user studies. Most participants felt that people might perceive them as “not human” and “monotone”, since their voice is typically a computerized TTS voice. Thus, we recommend that future AI-powered apps be designed so that users (or the AI) have control over the voice that speaks the joke and over the cadence of the words in the message. For example, future applications could include interface components such as emojis (e.g., a tongue sticking out for sarcasm) (Fiannaca et al., 2018) to indicate the intonation with which the joke should be read. Alternatively, the AI might automatically detect whether the user is being sarcastic and modify the voice to match that intonation.

Simplicity: Participants valued simplicity highly. Simplicity comes in two flavors: a low number of actions to achieve their goal (generating a joke), and the right number of options. Participants praised Interface 4 for generating a joke quickly and being simple to understand. In contrast, participants pointed out that the high number of steps in Interface 3 might make it unusable, despite it offering one of the highest levels of agency to the user. Thus, we recommend that future designers carefully consider the number of actions the user must perform before the AI suggests humorous comments. We also recommend that designers minimize the number of options presented to the user simultaneously. For example, future AI-powered apps could leverage the extended context windows of more recent LLMs to retain information about users’ preferences and patterns of use. Through this, the interface can adapt dynamically to offer the right number of options (few or many). This not only simplifies the UI but also reduces the time users spend reviewing multiple suggestions before deciding which one, if any, to use.

Recallability: Participants pointed out that one of the best features across all our interfaces was access to the full transcript of the conversation in the Conversation Bubble interface (Interface 1). The transcript not only allowed them to select the context bubbles for the AI to use, but also served as a source of material for thinking of jokes. As P3 commented, being able to see the conversation while listening to her conversational partner helped her think of jokes. Therefore, we recommend that future designers integrate a form of conversation visualization paired with an automatic speech recognition (ASR) toolkit, for example by following our use of speech bubbles in Interface 1.

Interoperability: Even though all users were able to interact with all the interfaces, we observed that P4 and P5 had a worse experience using their joystick and eye gaze input with some of the interfaces. Specifically, scrolling was difficult to perform. As such, these participants found the interfaces that required scrolling (1 and 3) less helpful. Each individual’s input experience is unique, and the effort required to perform a given task varies widely. We recommend that future designers (1) use non-scrollable pages to ease interaction for users with less mobility who rely on alternative input methods, and (2) offer multiple UI layout options, since non-standard input devices like an eye gaze keyboard might overlay other interface components on top of any application. This can obstruct interaction with certain elements, as we observed with P5 and his Tobii Dynavox.

8.2. Future Work

Our user study explored four different interfaces during one study session. While this is an excellent first step toward understanding how to support humor creation for AAC users, it is critical that users have the opportunity to use each tool over longer periods of time to thoroughly test it in their daily lives. Future researchers can conduct longitudinal studies to collect richer data on user interactions, as well as ecological data about the kinds of scenarios in which AAC users choose to use these tools to help them generate humorous comments. Our study derived rich insights about the current experience of creating humor with AAC technology; however, we acknowledge that our sample was small. Future researchers can also conduct studies with a larger cohort of AAC users with a wider range of abilities.

9. Conclusion

In this paper, we presented an exploration of how AI-powered interfaces can enhance Augmentative and Alternative Communication (AAC) technology to help people with speech disabilities deliver well-timed humorous comments. Through qualitative interviews and a user study, we analyzed the challenges of using AAC for humor and proposed recommendations for designing interfaces that support humor creation with AAC.

Future researchers can follow our recommendations for design to create intelligent AAC interfaces that provide AAC users with the “freedom” to express themselves and have a good laugh with others.

Acknowledgements.

Thanks to Zekai Shen, Samee Chandra and Maria Tane for helping in the development of the prototypes and to Roy Zunder for helping with data analysis and writing.

References


- Abu-Rayyash (2023) Hussein Abu-Rayyash. 2023. AI meets comedy: Viewers’ reactions to GPT-4 generated humor translation. https://www.sciencedirect.com/science/article/pii/S2215039023000553

- Bhat et al. (2023) Advait Bhat, Saaket Agashe, Parth Oberoi, Niharika Mohile, Ravi Jangir, and Anirudha Joshi. 2023. Interacting with Next-Phrase Suggestions: How Suggestion Systems Aid and Influence the Cognitive Processes of Writing. In Proceedings of the 28th International Conference on Intelligent User Interfaces (Sydney, NSW, Australia) (IUI ’23). Association for Computing Machinery, New York, NY, USA, 436–452. https://doi.org/10.1145/3581641.3584060

- Bi et al. (2014) Xiaojun Bi, Tom Ouyang, and Shumin Zhai. 2014. Both complete and correct? multi-objective optimization of touchscreen keyboard. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (Toronto, Ontario, Canada) (CHI ’14). Association for Computing Machinery, New York, NY, USA, 2297–2306. https://doi.org/10.1145/2556288.2557414

- Binsted et al. (2006) K. Binsted, A. Nijholt, O. Stock, C. Strapparava, G. Ritchie, R. Manurung, H. Pain, A. Waller, and D. O’Mara. 2006. Computational humor. IEEE Intelligent Systems 21, 2 (2006), 59–69. https://doi.org/10.1109/MIS.2006.22

- Braun and Clarke (2006) Virginia Braun and Victoria Clarke. 2006. Using thematic analysis in psychology. Qualitative research in psychology 3, 2 (2006), 77–101.

- Cai et al. ([n. d.]) Shanqing Cai, Subhashini Venugopalan, Katie Seaver, Xiang Xiao, Katrin Tomanek, Sri Jalasutram, Meredith Ringel Morris, Shaun Kane, Ajit Narayanan, Robert L. MacDonald, Emily Kornman, Daniel Vance, Blair Casey, Steve M. Gleason, Philip Q. Nelson, and Michael P. Brenner. [n. d.]. Using Large Language Models to Accelerate Communication for Users with Severe Motor Impairments. arXiv:2312.01532 [cs] http://arxiv.org/abs/2312.01532

- Cardon et al. (2023) Peter Cardon, Carolin Fleischmann, Jolanta Aritz, Minna Logemann, and Jeanette Heidewald. 2023. The Challenges and Opportunities of AI-Assisted Writing: Developing AI Literacy for the AI Age. Business and Professional Communication Quarterly 86, 3 (2023), 257–295. https://doi.org/10.1177/23294906231176517

- Cui et al. (2020) Wenzhe Cui, Suwen Zhu, Mingrui Ray Zhang, H. Andrew Schwartz, Jacob O. Wobbrock, and Xiaojun Bi. 2020. JustCorrect: Intelligent Post Hoc Text Correction Techniques on Smartphones. In Proceedings of the 33rd Annual ACM Symposium on User Interface Software and Technology (Virtual Event, USA) (UIST ’20). Association for Computing Machinery, New York, NY, USA, 487–499. https://doi.org/10.1145/3379337.3415857

- Fang et al. ([n. d.]) Yuyang Fang, Yunkai Xu, Zhuyu Teng, Zhaoqu Jiang, and Wei Xiang. [n. d.]. SocializeChat: a GPT-based AAC Tool for Social Communication Through Eye Gazing. In Adjunct Proceedings of the 2023 ACM International Joint Conference on Pervasive and Ubiquitous Computing & the 2023 ACM International Symposium on Wearable Computing (Cancun, Quintana Roo Mexico, 2023-10-08). ACM, 128–132. https://doi.org/10.1145/3594739.3610705

- Fiannaca et al. (2018) Alexander Fiannaca, Jon Campbell, Ann Paradiso, and Meredith Ringel Morris. 2018. Voicesetting: Voice Authoring UIs for Improved Expressivity in Augmentative Communication. https://doi.org/10.1145/3173574.3173857

- Fiannaca et al. (2017) Alexander Fiannaca, Ann Paradiso, Mira Shah, and Meredith Ringel Morris. 2017. AACrobat: Using mobile devices to lower communication barriers and provide autonomy with gaze-based AAC. In Proceedings of the 2017 ACM Conference on Computer Supported Cooperative Work and Social Computing. 683–695.

- Fontana De Vargas et al. ([n. d.]) Mauricio Fontana De Vargas, Jiamin Dai, and Karyn Moffatt. [n. d.]. AAC with Automated Vocabulary from Photographs: Insights from School and Speech-Language Therapy Settings. In Proceedings of the 24th International ACM SIGACCESS Conference on Computers and Accessibility (Athens Greece, 2022-10-23). ACM, 1–18. https://doi.org/10.1145/3517428.3544805

- Fontana de Vargas and Moffatt ([n. d.]) Mauricio Fontana de Vargas and Karyn Moffatt. [n. d.]. Automated Generation of Storytelling Vocabulary from Photographs for use in AAC. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers) (Online, 2021-08), Chengqing Zong, Fei Xia, Wenjie Li, and Roberto Navigli (Eds.). Association for Computational Linguistics, 1353–1364. https://doi.org/10.18653/v1/2021.acl-long.108

- Gero et al. (2022) Katy Ilonka Gero, Vivian Liu, and Lydia Chilton. 2022. Sparks: Inspiration for Science Writing using Language Models. In Proceedings of the 2022 ACM Designing Interactive Systems Conference (Virtual Event, Australia) (DIS ’22). Association for Computing Machinery, New York, NY, USA, 1002–1019. https://doi.org/10.1145/3532106.3533533