When Source-Free Domain Adaptation Meets Learning with Noisy Labels

Abstract

Recent state-of-the-art source-free domain adaptation (SFDA) methods have focused on learning meaningful cluster structures in the feature space, which have succeeded in adapting the knowledge from source domain to unlabeled target domain without accessing the private source data. However, existing methods rely on the pseudo-labels generated by source models that can be noisy due to domain shift. In this paper, we study SFDA from the perspective of learning with label noise (LLN). Unlike the label noise in the conventional LLN scenario, we prove that the label noise in SFDA follows a different distribution assumption. We also prove that such a difference makes existing LLN methods that rely on their distribution assumptions unable to address the label noise in SFDA. Empirical evidence suggests that only marginal improvements are achieved when applying the existing LLN methods to solve the SFDA problem. On the other hand, although there exists a fundamental difference between the label noise in the two scenarios, we demonstrate theoretically that the early-time training phenomenon (ETP), which has been previously observed in conventional label noise settings, can also be observed in the SFDA problem. Extensive experiments demonstrate significant improvements to existing SFDA algorithms by leveraging ETP to address the label noise in SFDA.

1 Introduction

Deep learning demonstrates strong performance on various tasks across different fields. However, it is limited by the requirement of large-scale labeled, independent and identically distributed (i.i.d.) data. Unsupervised domain adaptation (UDA) is thus proposed to mitigate the distribution shift between the labeled source and unlabeled target domains. In view of the importance of data privacy, it is crucial to be able to adapt a pre-trained source model to the unlabeled target domain without accessing the private source data, which is known as source-free domain adaptation (SFDA).

The current state-of-the-art SFDA methods (Liang et al., 2020; Yang et al., 2021a; b) mainly focus on learning meaningful cluster structures in the feature space, and the quality of the learned cluster structures hinges on the reliability of pseudo labels generated by the source model. Among these methods, SHOT (Liang et al., 2020) purifies pseudo labels of target data based on nearest centroids, and then the purified pseudo labels are used to guide the self-training. G-SFDA (Yang et al., 2021b) and NRC (Yang et al., 2021a) further refine pseudo labels by encouraging similar predictions for a data point and its neighbors. For a single target data point, when most of its neighbors are correctly predicted, these methods can provide an accurate pseudo label for the data point. However, as illustrated in Figure 1i(a-b), when the majority of its neighbors are incorrectly predicted as the same category, the data point will be assigned an incorrect pseudo label, misleading the learning of cluster structures. The experimental result on VisDA (Peng et al., 2017), shown in Figure 1ii, further verifies this phenomenon. By directly applying the pre-trained source model to each target domain instance (central instance), we collect its neighbors and evaluate their quality. We observe that for each class a large proportion of the neighbors are misleading (i.e., the neighbors' pseudo labels are different from the central instance's true label), some even with high confidence (e.g., the over-confident misleading neighbors whose prediction score is larger than 0.75). Based on this observation, we conclude that: (1) the pseudo labels leveraged in current SFDA methods can be heavily noisy; (2) some pseudo-label purification methods utilized in SFDA, which rely heavily on the quality of the pseudo labels themselves, will be affected by such label noise, and the prediction error will accumulate as the training progresses. More details can be found in Appendix A.
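The neighbor-quality measurement described above can be sketched as follows. This is a minimal illustration, not the authors' evaluation code: the function name `misleading_neighbor_stats`, the Euclidean k-nearest-neighbor search, and the toy data are all assumptions made for the example. Only the definitions of "misleading" and "over-confident" neighbors and the 0.75 threshold come from the text.

```python
import math


def misleading_neighbor_stats(features, pseudo_labels, confidences,
                              true_labels, k=2, conf_threshold=0.75):
    """Return (misleading_ratio, overconfident_ratio) over all k-NN pairs.

    A neighbor is "misleading" when its pseudo label differs from the
    central instance's true label. It is "over-confident" when, in
    addition, its prediction score exceeds conf_threshold.
    """
    total = misleading = overconfident = 0
    for i, center in enumerate(features):
        # Rank all other points by Euclidean distance to the central instance.
        others = [j for j in range(len(features)) if j != i]
        others.sort(key=lambda j: math.dist(center, features[j]))
        for j in others[:k]:
            total += 1
            if pseudo_labels[j] != true_labels[i]:
                misleading += 1
                if confidences[j] > conf_threshold:
                    overconfident += 1
    return misleading / total, overconfident / total


# Toy 1-D features (hypothetical data, not taken from any benchmark).
features = [(0.0,), (0.1,), (0.2,), (1.0,), (1.1,)]
true_labels = [0, 0, 0, 1, 1]
pseudo_labels = [0, 1, 1, 1, 1]  # the "source model" mislabels two class-0 points
confidences = [0.9, 0.8, 0.6, 0.95, 0.9]

ratios = misleading_neighbor_stats(features, pseudo_labels, confidences,
                                   true_labels, k=2)
print(ratios)
```

In this toy setup the class-0 points sit next to each other, so the two mislabeled ones contaminate the neighborhoods of all three, which is the failure mode that neighbor-based refinement cannot correct on its own.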

In this paper, we address the aforementioned problem by formulating SFDA as learning with label noise (LLN). Unlike existing studies that heuristically rely on cluster structures or neighbors, we investigate the properties of label noise in SFDA and show that there is an intrinsic discrepancy between the SFDA and the LLN problems. Specifically, in conventional LLN scenarios, the label noise is generated by human annotators or image search engines (Patrini et al., 2017; Xiao et al., 2015; Xia et al., 2020a), where the underlying distribution assumption is that the mislabeling rate for a sample is bounded. However, in the SFDA scenarios, the label noise is generated by the source model due to the distribution shift, where we prove that the mislabeling rate for a sample is much higher, and can approach 1. We term the former label noise in LLN as bounded label noise and the latter label noise in SFDA as unbounded label noise. Moreover, we theoretically show that most existing LLN methods, which rely on the bounded label noise assumption, are unable to address the label noise in SFDA due to this fundamental difference (Section 3).

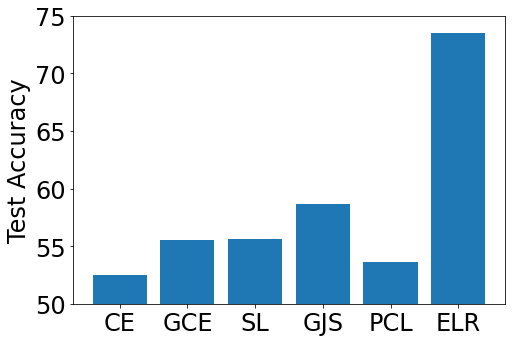

To this end, we leverage the early-time training phenomenon (ETP) in LLN to address the unbounded label noise and to improve the efficiency of existing SFDA algorithms. Specifically, ETP indicates that classifiers can predict mislabeled samples with relatively high accuracy during the early learning phase before they start to memorize the mislabeled data (Liu et al., 2020). Although ETP has been previously observed, it has only been studied under the bounded random label noise of conventional LLN scenarios. In this work, we theoretically and empirically show that ETP still exists in the unbounded label noise scenario of SFDA. Moreover, we also empirically justify that existing SFDA algorithms can be substantially improved by leveraging ETP, which opens up a new avenue for SFDA. As an instantiation, we incorporate a simple early learning regularization (ELR) term (Liu et al., 2020) into existing SFDA objective functions, achieving consistent improvements on four different SFDA benchmark datasets. As a comparison, we also apply other existing LLN methods, including Generalized Cross Entropy (GCE) (Zhang & Sabuncu, 2018), Symmetric Cross Entropy Learning (SL) (Wang et al., 2019b), Generalized Jensen-Shannon Divergence (GJS) (Englesson & Azizpour, 2021) and Progressive Label Correction (PLC) (Zhang et al., 2021), to SFDA. Our empirical evidence shows that they are inappropriate for addressing the label noise in SFDA. This is also consistent with our theoretical results (Section 4).

Our main contributions can be summarized as follows: (1) We establish the connection between SFDA and LLN. Compared with the conventional LLN problem that assumes bounded label noise, the problem in SFDA can be viewed as LLN with unbounded label noise. (2) We theoretically and empirically justify that ETP exists in the unbounded label noise scenario. On the algorithmic side, we instantiate our analysis by simply adding a regularization term to the SFDA objective functions. (3) We conduct extensive experiments to show that ETP can be utilized to improve many existing SFDA algorithms by a large margin across multiple SFDA benchmarks.

2 Related work

Source-free domain adaptation. Recently, SFDA has been studied to protect data privacy. The first branch of research leverages the target pseudo labels to conduct self-training and implicitly achieve adaptation (Liang et al., 2021; Tanwisuth et al., 2021; Ahmed et al., 2021; Yang et al., 2021b). SHOT (Liang et al., 2020) introduces k-means clustering and a mutual information maximization strategy for self-training. NRC (Yang et al., 2021a) further investigates the neighbors of target clusters to improve the accuracy of pseudo labels. These studies more or less involve pseudo-label purification processes, but they are primarily heuristic algorithms and suffer from the previously mentioned label noise accumulation problem. The other branch utilizes generative models to synthesize target-style training data (Qiu et al., 2021; Liu et al., 2021b). Some methods also explore SFDA algorithms in various settings. USFDA (Kundu et al., 2020a) and FS (Kundu et al., 2020b) design methods for universal and open-set UDA. In this paper, we regard SFDA as an LLN problem. We aim to explore what category of noisy labels exists in SFDA and to ameliorate such label noise to improve the performance of current SFDA algorithms.

Learning with label noise. Existing methods for training neural networks with label noise focus on symmetric, asymmetric, and instance-dependent label noise. For example, a branch of research focuses on leveraging noise-robust loss functions to cope with symmetric and asymmetric noise, including GCE (Zhang & Sabuncu, 2018), SL (Wang et al., 2019b), NCE (Ma et al., 2020), and GJS (Englesson & Azizpour, 2021), which have been proven effective under bounded label noise. On the other hand, CORES (Cheng et al., 2020) and CAL (Zhu et al., 2021) are shown to be useful in mitigating instance-dependent label noise. These methods are only tailored to conventional LLN settings. Recently, Liu et al. (2020) studied the early-time training phenomenon (ETP) in conventional label noise scenarios and proposed a regularization term, ELR, to exploit the benefits of ETP. PLC (Zhang et al., 2021) is another conventional LLN algorithm utilizing ETP, but it cannot keep exploiting ETP in SFDA because noisy labels are memorized much faster in SFDA. Our contributions are: (1) We theoretically and empirically study ETP in the SFDA scenario. (2) Based on an in-depth analysis of many existing LLN methods (Zhang & Sabuncu, 2018; Wang et al., 2019b; Englesson & Azizpour, 2021; Zhang et al., 2021), we demonstrate that ELR is useful for many SFDA problems.

3 Label Noise In SFDA

The presence of label noise on training datasets has been shown to degrade the model performance (Malach & Shalev-Shwartz, 2017; Han et al., 2018). In SFDA, existing algorithms rely on pseudo-labels produced by the source model, which are inevitably noisy due to the domain shift. The SFDA methods such as Liang et al. (2020); Yang et al. (2021a; b) cannot tackle the situation when some target samples and their neighbors are all incorrectly predicted by the source model. In this section, we formulate the SFDA as the problem of LLN to address this issue. We assume that the source domain and the target domain follow two different underlying distributions over , where and are respectively the input and label spaces. In the SFDA setting, we aim to learn a target classifier only with a pre-trained model on and a set of unlabeled target domain observations drawn from . We regard the incorrectly assigned pseudo-labels as noisy labels. Unlike the “bounded label noise” assumption in the conventional LLN domain, we will show that the label noise in SFDA is unbounded. We further prove that most existing LLN methods that rely on the bounded assumption cannot address the label noise in SFDA due to the difference.

Label noise in conventional LLN settings: In conventional label noise settings, the injected noisy labels are collected by either human annotators or image search engines (Lee et al., 2018; Li et al., 2017; Xiao et al., 2015). The label noise is usually assumed to be either independent of instances (i.e., symmetric label noise or asymmetric label noise) (Patrini et al., 2017; Liu & Tao, 2015; Xu et al., 2019b) or dependent on instances (i.e., instance-dependent label noise) (Berthon et al., 2021; Xia et al., 2020b). The underlying assumption for them is that a sample has the highest probability of being in the correct class , i.e., , where is the noisy label and is the ground-truth label for input . Equivalently, it assumes a bounded noise rate. For example, given an image to annotate, the mislabeling rate for the image is bounded by a small number, which is realistic in conventional LLN settings (Xia et al., 2020b; Cheng et al., 2020). When the label noise is generated by the source model, the underlying assumption of these types of label noise does not hold.
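The bounded assumption stated in words above (the correct class is the most likely noisy label for every sample) can be checked mechanically for a single sample. The sketch below is only an illustration: the function name `satisfies_bounded_noise` and the probability vectors are hypothetical, and the strict inequality is an assumption about how "highest probability" should be read.

```python
def satisfies_bounded_noise(noisy_label_probs, true_class):
    """Return True if the correct class is strictly the most likely noisy label.

    noisy_label_probs[c] is the probability that the sample receives noisy
    label c, given its input and its ground-truth class.
    """
    correct = noisy_label_probs[true_class]
    return all(correct > p
               for c, p in enumerate(noisy_label_probs)
               if c != true_class)


# A human annotator who errs 20% of the time on a 3-class image
# (hypothetical numbers): the correct class 0 is still the most likely label.
annotator = [0.8, 0.1, 0.1]

# A deterministic labeler, such as a fixed pre-trained classifier, that
# always outputs the wrong class 1 for this sample.
deterministic_wrong = [0.0, 1.0, 0.0]

print(satisfies_bounded_noise(annotator, true_class=0))
print(satisfies_bounded_noise(deterministic_wrong, true_class=0))
```

The second case is the one that matters for SFDA: a fixed classifier assigns the same label to a given input every time, so on an input it gets wrong the per-sample mislabeling probability is 1 rather than a small bounded number.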

Label noise in SFDA: As for the label noise generated by the source model, mislabeling rate for an image can approach , that is, . To understand that the label noise in SFDA is unbounded, we consider a two-component Multivariate Gaussian mixture distribution with equal priors for both domains. Let the first component () of the source domain distribution be , and the second component () of be , where and . For the target domain distribution , let the first component () of be , and the second component () of be , where is the shift of the two domains. Notice that the domain shift considered is a general shift and it has been studied in Stojanov et al. (2021); Zhao et al. (2019), where we also illustrate the domain shift in Figure 9 in supplementary material.

Let be the optimal source classifier. First, we build the relationship between the mislabeling rate for target data and the domain shift:

| (1) |

where , , , is the magnitude of domain shift, and is the standard normal cumulative distribution function. Eq. (1) shows that the magnitude of the domain shift inherently controls the mislabeling error for target data. This mislabeling rate increases as the magnitude of the domain shift increases. We defer the proof and details to Appendix B.
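To make the dependence on the shift magnitude concrete, here is a one-dimensional special case worked out numerically. It is not Eq. (1) itself, only a simplified sketch under stated assumptions: the source classes are N(+mu, sigma^2) and N(-mu, sigma^2) with equal priors, the optimal source classifier is therefore sign(x), and the target classes are both shifted by a scalar `delta`. The names `std_normal_cdf` and `mislabeling_rate_1d` and the parameter values are hypothetical.

```python
import math


def std_normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def mislabeling_rate_1d(mu, sigma, delta):
    """Error of the source-optimal classifier sign(x) on the shifted target."""
    # Target class +1 ~ N(mu + delta, sigma^2) is mislabeled when x < 0.
    err_pos = std_normal_cdf(-(mu + delta) / sigma)
    # Target class -1 ~ N(-mu + delta, sigma^2) is mislabeled when x > 0.
    err_neg = 1.0 - std_normal_cdf((mu - delta) / sigma)
    return 0.5 * err_pos + 0.5 * err_neg


shifts = (0.0, 0.5, 1.0, 2.0, 4.0)
rates = [mislabeling_rate_1d(mu=1.0, sigma=1.0, delta=d) for d in shifts]
for d, r in zip(shifts, rates):
    print(f"delta={d:.1f}  mislabeling rate={r:.4f}")
```

In this toy case the overall rate grows monotonically with `delta`, and the error on the class that is pushed across the source boundary (`err_neg`) tends to 1 as `delta` grows, which matches the qualitative statement that larger shifts produce more mislabeled target data.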

More importantly, we characterize that the label noise is unbounded among these mislabeled samples.

Theorem 3.1.

Without loss of generality, we assume that the is positively correlated with the vector , i.e., . For , if , then

| (2) |

where (i.e., ), , , and . Meanwhile, is non-empty when , where is the magnitude of the domain shift along the direction .

Conventional LLN methods assume that the label noise is bounded: , where is the labeling function, and if the number of clean samples of each component are the same (Cheng et al., 2020). However, Theorem 3.1 indicates that the label noise generated by the source model is unbounded for any . In practice, region is non-empty as neural networks are usually trained on high dimensional data such that , so is easy to satisfy. The probability measure on (i.e., ) increases as the magnitude of the domain shift increases, meaning more data points contradict the conventional LLN assumption. More details can be found in Appendix C.

Given that the unbounded label noise exists in SFDA, the following Lemma establishes that many existing LLN methods (Wang et al., 2019b; Ghosh et al., 2017; Englesson & Azizpour, 2021; Ma et al., 2020), which rely on the bounded assumption, are not noise tolerant in SFDA.

Lemma 3.2.

Let the risk of the function under the clean data be , and the risk of under the noisy data be , where the noisy data follows the unbounded assumption, i.e., for a subset and . Then the global minimizer of disagrees with the global minimizer of on data points with a high probability at least .

We denote by the existing noise-robust loss based LLN methods in Wang et al. (2019b); Ghosh et al. (2017); Englesson & Azizpour (2021); Ma et al. (2020). When the noisy data follows the bounded assumption, these methods are noise tolerant as the minimizer converges to the minimizer with a high probability. We defer the details and proof of the related LLN methods to Appendix D.

4 Learning With Label Noise in SFDA

Given a fundamental difference between the label noise in SFDA and the label noise in conventional LLN scenarios, existing LLN methods, whose underlying assumption is bounded label noise, cannot be applied to solve the label noise in SFDA. This section focuses on investigating how to address the unbounded label noise in SFDA.

Motivated by the recent studies Liu et al. (2020); Arpit et al. (2017), which observed an early-time training phenomenon (ETP) on noisy datasets with bounded random label noise, we find that ETP does not rely on the bounded random label noise assumption, and it can be generalized to the unbounded label noise in SFDA. ETP describes the training dynamics of the classifier that preferentially fits the clean samples and therefore has higher prediction accuracy for mislabeled samples during the early-training stage. Such training characteristics can be very beneficial for SFDA problems in which we only have access to the source model and the highly noisy target data. To theoretically prove ETP in the presence of unbounded label noise, we first describe the problem setup.

We still consider a two-component Gaussian mixture distribution with equal priors. We denote by the true label for , and assume it is a balanced sample from . The instance is sampled from the distribution , where . We denote by the noisy label for . We observe that the label noise generated by the source model is close to the decision boundary revealed in Theorem 3.1. So, to assign the noisy labels, we let , where is the label flipping function, and controls the mislabeling rate. If , then the data point is mislabeled. Meanwhile, the label noise is unbounded by adopting the label flipping function : , where .

We study the early-time training dynamics of gradient descent on the linear classifier. The parameter is learned over the unbounded label noise data with the following logistic loss function:

where , and is the learning rate. Then the following theorem builds the connection between the prediction accuracy for mislabeled samples at an early-training time .

Theorem 4.1.

Let be a set of mislabeled samples. Let be the prediction accuracy calculated by the ground-truth labels and the predicted labels by the classifier with parameter for mislabeled samples. If at most half of the samples are mislabeled (), then there exists a proper time and a constant such that for any and , with probability :

| (3) |

where is a monotone decreasing function that as , and .

The proof is provided in Appendix E. Compared to ETP found in Liu et al. (2020), where the label noise is assumed to be bounded, Theorem 4.1 presents that ETP also exists even though the label noise is unbounded. At a proper time T, the classifier trained by the gradient descent algorithm can provide accurate predictions for mislabeled samples, where its accuracy is lower bounded by a function of the variance of clusters . When , the predictions of all mislabeled samples equal to their ground-truth labels (i.e., ). When the classifier is trained for a sufficiently long time, it will gradually memorize mislabeled data. The predictions of mislabeled samples are equivalent to their incorrect labels instead of their ground-truth labels (Liu et al., 2020; Maennel et al., 2020). Based on these insights, the memorization of mislabeled data can be alleviated by leveraging their predicted labels during the early-training time.

To leverage the predictions during the early-training time, we adopt a recently established method, early learning regularization (ELR) (Liu et al., 2020), which encourages model predictions to stick to the early-time predictions for $x$. Since ETP exists in the scenarios of the unbounded label noise, ELR can be applied to solve the label noise in SFDA. The regularization is given by:

$$\mathcal{L}_{\text{ELR}}(\theta_t) = \log\left(1 - \bar{y}_t^{\top} f(x; \theta_t)\right), \tag{4}$$

where we overload $f(x; \theta_t)$ to be the probabilistic output for the sample $x$, and $\bar{y}_t = \beta \bar{y}_{t-1} + (1 - \beta) f(x; \theta_t)$ is the moving average prediction for $x$, where $\beta$ is a hyperparameter. To see how ELR prevents the model from memorizing the label noise, we calculate the gradient of Eq. (4) with respect to $f(x; \theta_t)$, which is given by:

$$\frac{\mathrm{d} \mathcal{L}_{\text{ELR}}(\theta_t)}{\mathrm{d} f(x; \theta_t)} = -\frac{\bar{y}_t}{1 - \bar{y}_t^{\top} f(x; \theta_t)}.$$

Note that minimizing Eq. (4) forces $f(x; \theta_t)$ to stay close to $\bar{y}_t$. When $f(x; \theta_t)$ is better aligned with $\bar{y}_t$, the denominator shrinks and the magnitude of the gradient becomes larger. This makes the gradient that aligns $f(x; \theta_t)$ with $\bar{y}_t$ overwhelm the gradient of the other loss terms, which align $f(x; \theta_t)$ with noisy labels. As the training progresses, the moving averaged predictions $\bar{y}_t$ for target samples gradually approach their ground-truth labels until the time $T$. Therefore, Eq. (4) prevents the model from memorizing the label noise by forcing the model predictions to stay close to these moving averaged predictions $\bar{y}_t$, which are very likely to be ground-truth labels.
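The behavior described above can be checked on a single sample. The sketch below assumes the ELR form of Liu et al. (2020), log(1 - <target, probs>), with an exponential moving average as the target. The function names and the toy probability vectors are hypothetical, and the code omits the numerical safeguards a real implementation would need when the inner product approaches 1.

```python
import math


def elr_loss(probs, target):
    """ELR term for one sample: log(1 - <target, probs>)."""
    inner = sum(p * t for p, t in zip(probs, target))
    return math.log(1.0 - inner)


def elr_grad_wrt_probs(probs, target):
    """Gradient of elr_loss w.r.t. probs: -target / (1 - <target, probs>)."""
    inner = sum(p * t for p, t in zip(probs, target))
    return [-t / (1.0 - inner) for t in target]


def update_target(prev_target, probs, beta):
    """Moving average of predictions: beta * previous + (1 - beta) * current."""
    return [beta * t + (1.0 - beta) * p for t, p in zip(prev_target, probs)]


def l2_norm(vec):
    return math.sqrt(sum(v * v for v in vec))


target = [0.9, 0.1]      # moving-average prediction (toy values)
aligned = [0.9, 0.1]     # current prediction that agrees with the target
misaligned = [0.1, 0.9]  # current prediction that disagrees with the target

print(elr_loss(aligned, target), elr_loss(misaligned, target))
print(l2_norm(elr_grad_wrt_probs(aligned, target)),
      l2_norm(elr_grad_wrt_probs(misaligned, target)))
print(update_target([1.0, 0.0], [0.0, 1.0], beta=0.7))
```

The aligned prediction yields both the lower loss and the larger gradient norm, which is the mechanism the text relies on: the closer the prediction is to the moving-average target, the more strongly the regularizer pulls to keep it there.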

Some existing LLN methods propose to assign pseudo labels to data or require two-stage training for label noise (Cheng et al., 2020; Zhu et al., 2021; Zhang et al., 2021). Unlike these LLN methods, Eq. (4) can be easily embedded into any existing SFDA algorithms without conflict. The overall objective function is given by:

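$$\mathcal{L} = \mathcal{L}_{\text{SFDA}} + \lambda \mathcal{L}_{\text{ELR}}, \tag{5}$$

where $\mathcal{L}_{\text{SFDA}}$ is any SFDA objective function, and $\lambda$ is a hyperparameter.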
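A minimal sketch of how the combined objective might be evaluated on a batch is shown below. The SFDA term is replaced by a plain cross-entropy on pseudo labels purely as a stand-in (SHOT, G-SFDA and NRC each use their own objectives), and the names `total_objective`, `elr_term` and `lam` are hypothetical.

```python
import math


def cross_entropy(probs, label):
    """Stand-in SFDA loss: cross-entropy against a pseudo label."""
    return -math.log(probs[label])


def elr_term(probs, target):
    """ELR regularizer for one sample: log(1 - <target, probs>)."""
    return math.log(1.0 - sum(p * t for p, t in zip(probs, target)))


def total_objective(batch_probs, batch_pseudo_labels, batch_targets, lam):
    """Batch mean of (stand-in SFDA loss) + lam * (ELR regularizer)."""
    n = len(batch_probs)
    sfda = sum(cross_entropy(p, y)
               for p, y in zip(batch_probs, batch_pseudo_labels)) / n
    elr = sum(elr_term(p, t)
              for p, t in zip(batch_probs, batch_targets)) / n
    return sfda + lam * elr


# One toy sample: the pseudo label says class 1, while the moving-average
# target still favors class 0 (hypothetical numbers).
batch_probs = [[0.6, 0.4]]
batch_pseudo_labels = [1]
batch_targets = [[0.8, 0.2]]

print(total_objective(batch_probs, batch_pseudo_labels, batch_targets, lam=0.0))
print(total_objective(batch_probs, batch_pseudo_labels, batch_targets, lam=3.0))
```

Because the regularizer is added as a separate term, the SFDA part of the loss is left untouched, which is why it can be attached to an existing method without changing its framework.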

Empirical Observations on Real-World Datasets.

We empirically verify that target classifiers have higher prediction accuracy for target data during the early training and adaptation stage. We propose leveraging this benefit to prevent the classifier from memorizing the noisy labels. The observations are shown in Figure 2. The parameters of classifiers are initialized by source models. Labels of target data are annotated by the initialized classifiers. We train the target classifiers on target data with the standard cross-entropy (CE) loss and the generalized cross-entropy (GCE) loss, a well-known noise-robust loss widely leveraged in bounded LLN scenarios. The solid green, orange and blue lines represent the training accuracy of optimizing the classifiers with CE loss, GCE loss, and ELR loss, respectively. The dotted red lines represent the labeling accuracy of the initialized classifiers. Considering that the classifiers memorize the unbounded label noise very fast, we evaluate the prediction accuracy on target data every batch for the first steps. After steps, we evaluate the prediction accuracy for every epoch. The green lines show that ETP exists in SFDA, which is consistent with our theoretical result. Meanwhile, in all scenarios, the green and orange lines show that classifiers provide higher prediction accuracy during the first few iterations. After a few iterations, they start to memorize the label noise even with a noise-robust loss (e.g., GCE). Eventually, the classifiers are expected to memorize the entire dataset. For conventional LLN settings, it has been empirically verified that it takes a much longer time before classifiers start memorizing the label noise (Liu et al., 2020; Xia et al., 2020a). We provide further analysis in Appendix H. We highlight that PLC (Zhang et al., 2021) leverages ETP only once per epoch, so it cannot capture the benefits of ETP and is inappropriate for unbounded label noise due to the fast memorization speed in SFDA. As a comparison, we choose ELR since it leverages ETP at every batch. The blue lines show that leveraging ETP via ELR can address the memorization of noisy labels in SFDA.
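The two-phase evaluation schedule described above (dense per-batch evaluation early on, then once per epoch) can be written as a small predicate. This is a hypothetical helper: the name `should_evaluate`, the parameter `dense_steps`, and the values used in the example are assumptions, since the number of densely evaluated steps is not recoverable from the text here.

```python
def should_evaluate(step, steps_per_epoch, dense_steps):
    """Evaluate every batch for the first dense_steps steps, then per epoch.

    step is the zero-based index of the training batch just completed.
    """
    if step < dense_steps:
        return True
    # After the dense phase, evaluate only at the end of each epoch.
    return (step + 1) % steps_per_epoch == 0


# Toy run: 3 epochs of 4 batches each, dense evaluation for the first 3 steps.
eval_steps = [s for s in range(12)
              if should_evaluate(s, steps_per_epoch=4, dense_steps=3)]
print(eval_steps)
```

Dense early evaluation matters in this setting because the accuracy peak associated with ETP can come and go within the first few iterations, so a per-epoch schedule alone would miss it.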

Table 1: Accuracies (%) on Office-Home. SF indicates whether the method is source-free.

| Method | SF | Ar→Cl | Ar→Pr | Ar→Rw | Cl→Ar | Cl→Pr | Cl→Rw | Pr→Ar | Pr→Cl | Pr→Rw | Rw→Ar | Rw→Cl | Rw→Pr | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MCD (Saito et al., 2018b) | ✗ | 48.9 | 68.3 | 74.6 | 61.3 | 67.6 | 68.8 | 57.0 | 47.1 | 75.1 | 69.1 | 52.2 | 79.6 | 64.1 |
| CDAN (Long et al., 2018) | ✗ | 50.7 | 70.6 | 76.0 | 57.6 | 70.0 | 70.0 | 57.4 | 50.9 | 77.3 | 70.9 | 56.7 | 81.6 | 65.8 |
| SAFN (Xu et al., 2019a) | ✗ | 52.0 | 71.7 | 76.3 | 64.2 | 69.9 | 71.9 | 63.7 | 51.4 | 77.1 | 70.9 | 57.1 | 81.5 | 67.3 |
| Symnets (Zhang et al., 2019a) | ✗ | 47.7 | 72.9 | 78.5 | 64.2 | 71.3 | 74.2 | 64.2 | 48.8 | 79.5 | 74.5 | 52.6 | 82.7 | 67.6 |
| MDD (Zhang et al., 2019b) | ✗ | 54.9 | 73.7 | 77.8 | 60.0 | 71.4 | 71.8 | 61.2 | 53.6 | 78.1 | 72.5 | 60.2 | 82.3 | 68.1 |
| TADA (Wang et al., 2019a) | ✗ | 53.1 | 72.3 | 77.2 | 59.1 | 71.2 | 72.1 | 59.7 | 53.1 | 78.4 | 72.4 | 60.0 | 82.9 | 67.6 |
| BNM (Cui et al., 2020) | ✗ | 52.3 | 73.9 | 80.0 | 63.3 | 72.9 | 74.9 | 61.7 | 49.5 | 79.7 | 70.5 | 53.6 | 82.2 | 67.9 |
| BDG (Yang et al., 2020) | ✗ | 51.5 | 73.4 | 78.7 | 65.3 | 71.5 | 73.7 | 65.1 | 49.7 | 81.1 | 74.6 | 55.1 | 84.8 | 68.7 |
| SRDC (Tang et al., 2020) | ✗ | 52.3 | 76.3 | 81.0 | 69.5 | 76.2 | 78.0 | 68.7 | 53.8 | 81.7 | 76.3 | 57.1 | 85.0 | 71.3 |
| RSDA-MSTN (Gu et al., 2020) | ✗ | 53.2 | 77.7 | 81.3 | 66.4 | 74.0 | 76.5 | 67.9 | 53.0 | 82.0 | 75.8 | 57.8 | 85.4 | 70.9 |
| Source Only | ✓ | 44.6 | 67.3 | 74.8 | 52.7 | 62.7 | 64.8 | 53.0 | 40.6 | 73.2 | 65.3 | 45.4 | 78.0 | 60.2 |
| +ELR | ✓ | 52.4 | 73.5 | 77.3 | 62.5 | 70.6 | 71.0 | 61.1 | 50.8 | 78.9 | 71.7 | 56.7 | 81.6 | 67.3 |
| SHOT (Liang et al., 2020) | ✓ | 57.1 | 78.1 | 81.5 | 68.0 | 78.2 | 78.1 | 67.4 | 54.9 | 82.2 | 73.3 | 58.8 | 84.3 | 71.8 |
| +ELR | ✓ | 58.7 | 78.9 | 82.1 | 68.5 | 79.0 | 77.5 | 68.2 | 57.1 | 81.9 | 74.2 | 59.5 | 84.9 | 72.6 |
| G-SFDA (Yang et al., 2021b) | ✓ | 55.8 | 77.1 | 80.5 | 66.4 | 74.9 | 77.3 | 66.5 | 53.9 | 80.8 | 72.4 | 59.7 | 83.2 | 70.7 |
| +ELR | ✓ | 56.4 | 77.6 | 81.1 | 67.1 | 75.2 | 77.9 | 65.9 | 55.0 | 81.2 | 72.1 | 60.0 | 83.6 | 71.1 |
| NRC (Yang et al., 2021a) | ✓ | 56.3 | 77.6 | 81.0 | 65.3 | 78.3 | 77.5 | 64.5 | 56.0 | 82.4 | 70.0 | 57.1 | 82.9 | 70.8 |
| +ELR | ✓ | 58.4 | 78.7 | 81.5 | 69.2 | 79.5 | 79.3 | 66.3 | 58.0 | 82.6 | 73.4 | 59.8 | 85.1 | 72.6 |

5 Experiments

We aim to improve the efficiency of existing SFDA algorithms by using ELR to leverage ETP. We evaluate the performance on four different SFDA benchmark datasets: Office-31 (Saenko et al., 2010), Office-Home (Venkateswara et al., 2017), VisDA (Peng et al., 2017) and DomainNet (Peng et al., 2019). Due to the limited space, the results on the Office-31 dataset and additional experimental details are provided in Appendix G.

Evaluation. We incorporate ELR into three existing baseline methods: SHOT (Liang et al., 2020), G-SFDA (Yang et al., 2021b), and NRC (Yang et al., 2021a). SHOT uses k-means clustering and a mutual information maximization strategy to train the representation network while freezing the final linear layer. G-SFDA aims to cluster target data with similar neighbors and attempts to maintain the source domain performance. NRC also explores the neighbors of target data by graph-based methods. ELR can be easily embedded into these methods by simply adding the regularization term to the loss function being optimized, without affecting the existing SFDA frameworks. We average the results over three random runs.

Results. Tables 1-4 show the results before/after leveraging the early-time training phenomenon, where Table 4 is shown in Appendix G. Among these tables, the top part shows the results of conventional UDA methods, and the bottom part shows the results of SFDA methods. In the tables, we use SF to indicate whether the method is source free or not. We use Source Only + ELR to indicate ELR with self-training. The results show that ELR itself can boost the performances. As existing SFDA methods are not able to address unbounded label noise, incorporating ELR into these SFDA methods can further boost the performance. The four datasets, including all pairs (e.g., ) of tasks, show better performance after solving the unbounded label noise problem using the early-time training phenomenon. Meanwhile, solving the unbounded label noise on existing SFDA methods achieves state-of-the-art on all benchmark datasets. These SFDA methods also outperform most methods that need to access source data.

Analysis about hyperparameters and . The hyperparameter is chosen from {, , , , , }, and is chosen from {, , , , }. We conduct the sensitivity study on hyperparameters of ELR on the DomainNet dataset, which is shown in Figure 3(a-b). In each figure, the study is conducted by fixing the other hyperparameter to the optimal one. The performance is robust to the hyperparameter except . When , classifiers are sensitive to changes in learning curves. Thus, the performance degrades since the learning curves change quickly in the unbounded label noise scenarios. Meanwhile, the performance is also robust to the hyperparameter except when becomes too large. The hyperparameter is to balance the effects of existing SFDA algorithms and the effects of ELR. As indicated in Tables 1-4, using ELR alone to address the SFDA problem is not competitive with these SFDA methods. Hence, a large value of makes neural networks neglect the effects of these SFDA methods, leading to degraded performance.

Table 2: Accuracies (%) on DomainNet. SF indicates whether the method is source-free.

| Method | SF | R→C | R→P | R→S | C→R | C→P | C→S | P→R | P→C | P→S | S→R | S→C | S→P | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MCD (Saito et al., 2018b) | ✗ | 61.9 | 69.3 | 56.2 | 79.7 | 56.6 | 53.6 | 83.3 | 58.3 | 60.9 | 81.7 | 56.2 | 66.7 | 65.4 |
| DANN (Ganin et al., 2016) | ✗ | 63.4 | 73.6 | 72.6 | 86.5 | 65.7 | 70.6 | 86.9 | 73.2 | 70.2 | 85.7 | 75.2 | 70.0 | 74.5 |
| DAN (Long et al., 2015) | ✗ | 64.3 | 70.6 | 58.4 | 79.4 | 56.7 | 60.0 | 84.5 | 61.6 | 62.2 | 79.7 | 65.0 | 62.0 | 67.0 |
| COAL (Tan et al., 2020) | ✗ | 73.9 | 75.4 | 70.5 | 89.6 | 70.0 | 71.3 | 89.8 | 68.0 | 70.5 | 88.0 | 73.2 | 70.5 | 75.9 |
| MDD (Zhang et al., 2019b) | ✗ | 77.6 | 75.7 | 74.2 | 89.5 | 74.2 | 75.6 | 90.2 | 76.0 | 74.6 | 86.7 | 72.9 | 73.2 | 78.4 |
| Source Only | ✓ | 53.7 | 71.6 | 52.9 | 70.8 | 49.5 | 58.3 | 85.2 | 59.6 | 59.1 | 30.6 | 74.8 | 65.7 | 61.0 |
| +ELR | ✓ | 70.2 | 81.7 | 61.7 | 79.9 | 63.8 | 67.0 | 90.0 | 72.1 | 66.8 | 85.1 | 78.5 | 68.8 | 73.8 |
| SHOT (Liang et al., 2020) | ✓ | 73.3 | 80.1 | 65.8 | 91.4 | 74.3 | 69.2 | 91.9 | 77.0 | 66.2 | 87.4 | 81.3 | 75.0 | 77.7 |
| +ELR | ✓ | 78.0 | 81.9 | 67.4 | 91.1 | 75.9 | 71.0 | 92.6 | 79.3 | 68.0 | 88.7 | 84.8 | 77.0 | 79.7 |
| G-SFDA (Yang et al., 2021b) | ✓ | 65.8 | 78.9 | 60.2 | 80.5 | 64.7 | 64.6 | 89.3 | 69.9 | 63.6 | 86.4 | 78.8 | 71.1 | 72.8 |
| +ELR | ✓ | 69.4 | 80.9 | 60.6 | 81.3 | 67.2 | 66.4 | 90.2 | 73.2 | 64.9 | 87.6 | 82.1 | 71.0 | 74.6 |
| NRC (Yang et al., 2021a) | ✓ | 69.8 | 81.1 | 62.9 | 83.4 | 74.4 | 66.3 | 90.3 | 73.4 | 65.2 | 88.2 | 82.2 | 75.8 | 76.4 |
| +ELR | ✓ | 75.6 | 82.2 | 65.7 | 91.2 | 77.2 | 68.5 | 92.7 | 79.8 | 67.5 | 89.3 | 85.1 | 77.6 | 79.4 |

Table 3: Per-class accuracies (%) on VisDA. SF indicates whether the method is source-free.

| Method | SF | plane | bcycl | bus | car | horse | knife | mcycl | person | plant | sktbrd | train | truck | Per-class |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DANN (Ganin et al., 2016) | ✗ | 81.9 | 77.7 | 82.8 | 44.3 | 81.2 | 29.5 | 65.1 | 28.6 | 51.9 | 54.6 | 82.8 | 7.8 | 57.4 |
| DAN (Long et al., 2015) | ✗ | 87.1 | 63.0 | 76.5 | 42.0 | 90.3 | 42.9 | 85.9 | 53.1 | 49.7 | 36.3 | 85.8 | 20.7 | 61.1 |
| ADR (Saito et al., 2018a) | ✗ | 94.2 | 48.5 | 84.0 | 72.9 | 90.1 | 74.2 | 92.6 | 72.5 | 80.8 | 61.8 | 82.2 | 28.8 | 73.5 |
| CDAN (Long et al., 2018) | ✗ | 85.2 | 66.9 | 83.0 | 50.8 | 84.2 | 74.9 | 88.1 | 74.5 | 83.4 | 76.0 | 81.9 | 38.0 | 73.9 |
| SAFN (Xu et al., 2019a) | ✗ | 93.6 | 61.3 | 84.1 | 70.6 | 94.1 | 79.0 | 91.8 | 79.6 | 89.9 | 55.6 | 89.0 | 24.4 | 76.1 |
| SWD (Lee et al., 2019) | ✗ | 90.8 | 82.5 | 81.7 | 70.5 | 91.7 | 69.5 | 86.3 | 77.5 | 87.4 | 63.6 | 85.6 | 29.2 | 76.4 |
| MDD (Zhang et al., 2019b) | ✗ | - | - | - | - | - | - | - | - | - | - | - | - | 74.6 |
| MCC (Jin et al., 2020) | ✗ | 88.7 | 80.3 | 80.5 | 71.5 | 90.1 | 93.2 | 85.0 | 71.6 | 89.4 | 73.8 | 85.0 | 36.9 | 78.8 |
| STAR (Lu et al., 2020) | ✗ | 95.0 | 84.0 | 84.6 | 73.0 | 91.6 | 91.8 | 85.9 | 78.4 | 94.4 | 84.7 | 87.0 | 42.2 | 82.7 |
| RWOT (Xu et al., 2020) | ✗ | 95.1 | 80.3 | 83.7 | 90.0 | 92.4 | 68.0 | 92.5 | 82.2 | 87.9 | 78.4 | 90.4 | 68.2 | 84.0 |
| Source Only | ✓ | 60.9 | 21.6 | 50.9 | 67.6 | 65.8 | 6.3 | 82.2 | 23.2 | 57.3 | 30.6 | 84.6 | 8.0 | 46.6 |
| +ELR | ✓ | 95.4 | 45.7 | 89.7 | 69.8 | 94.1 | 97.1 | 92.9 | 80.1 | 89.7 | 52.8 | 83.3 | 4.3 | 74.6 |
| SHOT (Liang et al., 2020) | ✓ | 94.3 | 88.5 | 80.1 | 57.3 | 93.1 | 94.9 | 80.7 | 80.3 | 91.5 | 89.1 | 86.3 | 58.2 | 82.9 |
| +ELR | ✓ | 95.8 | 84.1 | 83.3 | 67.9 | 93.9 | 97.6 | 89.2 | 80.1 | 90.6 | 90.4 | 87.2 | 48.2 | 84.1 |
| G-SFDA (Yang et al., 2021b) | ✓ | 96.0 | 87.6 | 85.3 | 72.8 | 95.9 | 94.7 | 88.4 | 79.0 | 92.7 | 93.9 | 87.2 | 43.7 | 84.8 |
| +ELR | ✓ | 97.3 | 89.1 | 89.8 | 79.2 | 96.9 | 97.5 | 92.2 | 82.5 | 95.8 | 94.5 | 87.3 | 34.5 | 86.4 |
| NRC (Yang et al., 2021a) | ✓ | 96.9 | 89.7 | 84.0 | 59.8 | 95.9 | 96.6 | 86.5 | 80.9 | 92.8 | 92.6 | 90.2 | 60.2 | 85.4 |
| +ELR | ✓ | 97.1 | 89.7 | 82.7 | 62.0 | 96.2 | 97.0 | 87.6 | 81.2 | 93.7 | 94.1 | 90.2 | 58.6 | 85.8 |

5.1 Discussion on Existing LLN Methods

Since we formulate SFDA as an LLN problem, it is of interest to discuss existing LLN methods. We focus on LLN methods that can be easily embedded into current SFDA algorithms. Based on this principle, we choose GCE (Zhang & Sabuncu, 2018), SL (Wang et al., 2019b), and GJS (Englesson & Azizpour, 2021), which have been theoretically proven to be robust to symmetric and asymmetric label noise, both of which are bounded label noise. We highlight that the more recent method GJS outperforms ELR on real-world noisy datasets. However, we will show that GJS is inferior to ELR in SFDA scenarios, because the underlying assumption of GJS does not hold in SFDA. Besides ELR, PCL is another method that leverages ETP, but we will show that it is also inappropriate for SFDA.

To show the effects of existing LLN methods under unbounded label noise, we test them on various SFDA datasets with target data whose labels are generated by source models. As shown in Figure 4, GCE, SL, GJS, and PCL are better than CE but still not comparable to ELR. Our analysis indicates that ELR follows the principle of ETP, which is theoretically justified in SFDA scenarios by our Theorem 3.1. GCE, SL, and GJS rely on the bounded label noise assumption, which does not hold in SFDA. Hence, they perform worse than ELR in SFDA, even though GJS outperforms ELR in conventional LLN scenarios. PCL (Zhang et al., 2021) utilizes ETP to purify the noisy labels of target data, but it performs significantly worse than ELR. Because unbounded label noise is memorized very quickly, with classifiers memorizing noisy labels within a few iterations (shown in Figure 2), purifying noisy labels every epoch is inappropriate for SFDA. However, we notice that PCL performs relatively better on DomainNet than on other datasets. The reason is that memorization on DomainNet is slower than on the other datasets, as shown in Figure 2. In conventional LLN scenarios, PCL does not suffer from this issue since the memorization speed there is much lower than in SFDA.

In Figure 3(c), we also evaluate the performance obtained by incorporating the existing LLN methods into the SFDA algorithms SHOT and NRC. Since both PCL and SHOT assign pseudo labels to target data, PCL is incompatible with some existing SFDA methods and cannot be easily embedded into them. Hence, we only embed GCE, SL, GJS, and ELR into the SFDA algorithms. The figure illustrates that ELR still performs better than the other LLN methods when incorporated into SHOT and NRC. We also notice that GCE, SL, and GJS provide marginal improvements over the vanilla SHOT and NRC methods. We conjecture that the label noise in SFDA datasets is a hybrid noise consisting of both bounded and unbounded label noise, due to the non-linearity of neural networks. GCE, SL, and GJS can address the bounded label noise, while ELR can address both bounded and unbounded label noise. Therefore, these experiments demonstrate that using ELR to leverage ETP can successfully address the unbounded label noise in SFDA.
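To make the role of ELR in the discussion above concrete, the following is a minimal NumPy sketch of the ELR objective as formulated by Liu et al. (2020): cross-entropy on the noisy (pseudo) labels plus a regularizer that pulls the current prediction toward a moving average of earlier predictions. The class name `ELRLoss`, the hyperparameter values `beta` and `lam`, and the clipping constant are illustrative assumptions, not settings taken from this paper.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax with the usual max-subtraction for stability."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


class ELRLoss:
    """Sketch of early-learning regularization (hypothetical helper class)."""

    def __init__(self, num_samples, num_classes, beta=0.7, lam=3.0):
        # One running target per training example, initialised to zero.
        self.targets = np.zeros((num_samples, num_classes))
        self.beta = beta  # momentum of the moving average (assumed value)
        self.lam = lam    # regularization weight (assumed value)

    def __call__(self, logits, noisy_labels, indices):
        probs = np.clip(softmax(logits), 1e-4, 1.0 - 1e-4)
        probs = probs / probs.sum(axis=1, keepdims=True)
        # Moving average of predictions; in an autodiff framework these
        # targets would be treated as constants (no gradient).
        self.targets[indices] = (
            self.beta * self.targets[indices] + (1.0 - self.beta) * probs
        )
        rows = np.arange(len(noisy_labels))
        ce = -np.log(probs[rows, noisy_labels]).mean()
        # Regularizer: log(1 - <p, t>), minimised when p agrees with t.
        inner = (self.targets[indices] * probs).sum(axis=1)
        reg = np.log(1.0 - inner).mean()
        return ce + self.lam * reg
```

Because the targets are built from predictions made early in training, the regularizer rewards staying close to what the model predicted before it started memorizing noisy labels, which is how ELR exploits ETP.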

6 Conclusion

In this paper, we study SFDA from a new perspective of LLN by theoretically showing that SFDA can be viewed as a problem of LLN with unbounded label noise. Under this assumption, we rigorously justify that robust loss functions are not able to address the memorization issues of unbounded label noise. Meanwhile, based on this assumption, we further theoretically and empirically analyze the learning behavior of models during the early-time training stage and find that ETP can benefit SFDA problems. Through extensive experiments across multiple datasets, we show that ETP can be exploited by ELR to improve prediction performance, and that it can also be used to enhance existing SFDA algorithms.

Acknowledgments

This work is supported by Natural Sciences and Engineering Research Council of Canada (NSERC), Discovery Grants program.

References

- Ahmed et al. (2021) Sk Miraj Ahmed, Dripta S Raychaudhuri, Sujoy Paul, Samet Oymak, and Amit K Roy-Chowdhury. Unsupervised multi-source domain adaptation without access to source data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 10103–10112, 2021.

- Arpit et al. (2017) Devansh Arpit, Stanisław Jastrzębski, Nicolas Ballas, David Krueger, Emmanuel Bengio, Maxinder S Kanwal, Tegan Maharaj, Asja Fischer, Aaron Courville, Yoshua Bengio, et al. A closer look at memorization in deep networks. In International Conference on Machine Learning, pp. 233–242. PMLR, 2017.

- Berthon et al. (2021) Antonin Berthon, Bo Han, Gang Niu, Tongliang Liu, and Masashi Sugiyama. Confidence scores make instance-dependent label-noise learning possible. In International Conference on Machine Learning, pp. 825–836. PMLR, 2021.

- Cheng et al. (2020) Hao Cheng, Zhaowei Zhu, Xingyu Li, Yifei Gong, Xing Sun, and Yang Liu. Learning with instance-dependent label noise: A sample sieve approach. arXiv preprint arXiv:2010.02347, 2020.

- Cui et al. (2020) Shuhao Cui, Shuhui Wang, Junbao Zhuo, Liang Li, Qingming Huang, and Qi Tian. Towards discriminability and diversity: Batch nuclear-norm maximization under label insufficient situations. CVPR, 2020.

- Englesson & Azizpour (2021) Erik Englesson and Hossein Azizpour. Generalized jensen-shannon divergence loss for learning with noisy labels. arXiv preprint arXiv:2105.04522, 2021.

- Fukunaga (2013) Keinosuke Fukunaga. Introduction to statistical pattern recognition. Elsevier, 2013.

- Ganin et al. (2016) Yaroslav Ganin, Evgeniya Ustinova, Hana Ajakan, Pascal Germain, Hugo Larochelle, François Laviolette, Mario Marchand, and Victor Lempitsky. Domain-adversarial training of neural networks. The Journal of Machine Learning Research, 17(1):2096–2030, 2016.

- Ghosh et al. (2017) Aritra Ghosh, Himanshu Kumar, and P Shanti Sastry. Robust loss functions under label noise for deep neural networks. In Proceedings of the AAAI conference on artificial intelligence, volume 31, 2017.

- Gu et al. (2020) Xiang Gu, Jian Sun, and Zongben Xu. Spherical space domain adaptation with robust pseudo-label loss. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 9101–9110, 2020.

- Han et al. (2018) Bo Han, Quanming Yao, Xingrui Yu, Gang Niu, Miao Xu, Weihua Hu, Ivor Tsang, and Masashi Sugiyama. Co-teaching: Robust training of deep neural networks with extremely noisy labels. Advances in neural information processing systems, 31, 2018.

- He et al. (2015) Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the IEEE international conference on computer vision, pp. 1026–1034, 2015.

- He et al. (2016) Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 770–778, 2016.

- Jin et al. (2020) Ying Jin, Ximei Wang, Mingsheng Long, and Jianmin Wang. Minimum class confusion for versatile domain adaptation. ECCV, 2020.

- Kundu et al. (2020a) Jogendra Nath Kundu, Naveen Venkat, R Venkatesh Babu, et al. Universal source-free domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 4544–4553, 2020a.

- Kundu et al. (2020b) Jogendra Nath Kundu, Naveen Venkat, Ambareesh Revanur, R Venkatesh Babu, et al. Towards inheritable models for open-set domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 12376–12385, 2020b.

- Lee et al. (2019) Chen-Yu Lee, Tanmay Batra, Mohammad Haris Baig, and Daniel Ulbricht. Sliced wasserstein discrepancy for unsupervised domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 10285–10295, 2019.

- Lee et al. (2018) Kuang-Huei Lee, Xiaodong He, Lei Zhang, and Linjun Yang. Cleannet: Transfer learning for scalable image classifier training with label noise. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 5447–5456, 2018.

- Li et al. (2017) Wen Li, Limin Wang, Wei Li, Eirikur Agustsson, and Luc Van Gool. Webvision database: Visual learning and understanding from web data. arXiv preprint arXiv:1708.02862, 2017.

- Liang et al. (2020) Jian Liang, Dapeng Hu, and Jiashi Feng. Do we really need to access the source data? source hypothesis transfer for unsupervised domain adaptation. In International Conference on Machine Learning, pp. 6028–6039. PMLR, 2020.

- Liang et al. (2021) Jian Liang, Dapeng Hu, Yunbo Wang, Ran He, and Jiashi Feng. Source data-absent unsupervised domain adaptation through hypothesis transfer and labeling transfer. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021.

- Liu et al. (2021a) Hong Liu, Jianmin Wang, and Mingsheng Long. Cycle self-training for domain adaptation. Advances in Neural Information Processing Systems, 34, 2021a.

- Liu et al. (2019) Lydia T. Liu, Max Simchowitz, and Moritz Hardt. The implicit fairness criterion of unconstrained learning. In Proceedings of the 36th International Conference on Machine Learning, pp. 4051–4060, 2019.

- Liu et al. (2020) Sheng Liu, Jonathan Niles-Weed, Narges Razavian, and Carlos Fernandez-Granda. Early-learning regularization prevents memorization of noisy labels. arXiv preprint arXiv:2007.00151, 2020.

- Liu & Tao (2015) Tongliang Liu and Dacheng Tao. Classification with noisy labels by importance reweighting. IEEE Transactions on pattern analysis and machine intelligence, 38(3):447–461, 2015.

- Liu et al. (2021b) Yuang Liu, Wei Zhang, and Jun Wang. Source-free domain adaptation for semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 1215–1224, 2021b.

- Long et al. (2015) Mingsheng Long, Yue Cao, Jianmin Wang, and Michael I Jordan. Learning transferable features with deep adaptation networks. ICML, 2015.

- Long et al. (2018) Mingsheng Long, Zhangjie Cao, Jianmin Wang, and Michael I Jordan. Conditional adversarial domain adaptation. In Advances in Neural Information Processing Systems, pp. 1647–1657, 2018.

- Lu et al. (2020) Zhihe Lu, Yongxin Yang, Xiatian Zhu, Cong Liu, Yi-Zhe Song, and Tao Xiang. Stochastic classifiers for unsupervised domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 9111–9120, 2020.

- Ma et al. (2020) Xingjun Ma, Hanxun Huang, Yisen Wang, Simone Romano, Sarah Erfani, and James Bailey. Normalized loss functions for deep learning with noisy labels. In International Conference on Machine Learning, pp. 6543–6553. PMLR, 2020.

- Maennel et al. (2020) Hartmut Maennel, Ibrahim M Alabdulmohsin, Ilya O Tolstikhin, Robert Baldock, Olivier Bousquet, Sylvain Gelly, and Daniel Keysers. What do neural networks learn when trained with random labels? Advances in Neural Information Processing Systems, 33:19693–19704, 2020.

- Malach & Shalev-Shwartz (2017) Eran Malach and Shai Shalev-Shwartz. Decoupling "when to update" from "how to update". Advances in Neural Information Processing Systems, 30, 2017.

- Patrini et al. (2017) Giorgio Patrini, Alessandro Rozza, Aditya Krishna Menon, Richard Nock, and Lizhen Qu. Making deep neural networks robust to label noise: A loss correction approach. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 1944–1952, 2017.

- Peng et al. (2017) Xingchao Peng, Ben Usman, Neela Kaushik, Judy Hoffman, Dequan Wang, and Kate Saenko. Visda: The visual domain adaptation challenge. arXiv preprint arXiv:1710.06924, 2017.

- Peng et al. (2019) Xingchao Peng, Qinxun Bai, Xide Xia, Zijun Huang, Kate Saenko, and Bo Wang. Moment matching for multi-source domain adaptation. In Proceedings of the IEEE/CVF international conference on computer vision, pp. 1406–1415, 2019.

- Qiu et al. (2021) Zhen Qiu, Yifan Zhang, Hongbin Lin, Shuaicheng Niu, Yanxia Liu, Qing Du, and Mingkui Tan. Source-free domain adaptation via avatar prototype generation and adaptation. arXiv preprint arXiv:2106.15326, 2021.

- Saenko et al. (2010) Kate Saenko, Brian Kulis, Mario Fritz, and Trevor Darrell. Adapting visual category models to new domains. In European conference on computer vision, pp. 213–226. Springer, 2010.

- Saito et al. (2018a) Kuniaki Saito, Yoshitaka Ushiku, Tatsuya Harada, and Kate Saenko. Adversarial dropout regularization. ICLR, 2018a.

- Saito et al. (2018b) Kuniaki Saito, Kohei Watanabe, Yoshitaka Ushiku, and Tatsuya Harada. Maximum classifier discrepancy for unsupervised domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3723–3732, 2018b.

- Shui et al. (2022a) Changjian Shui, Qi Chen, Jiaqi Li, Boyu Wang, and Christian Gagné. Fair representation learning through implicit path alignment. In Proceedings of the 39th International Conference on Machine Learning, pp. 20156–20175, 2022a.

- Shui et al. (2022b) Changjian Shui, Gezheng Xu, Qi Chen, Jiaqi Li, Charles Ling, Tal Arbel, Boyu Wang, and Christian Gagné. On learning fairness and accuracy on multiple subgroups. In Alice H. Oh, Alekh Agarwal, Danielle Belgrave, and Kyunghyun Cho (eds.), Advances in Neural Information Processing Systems, 2022b.

- Song et al. (2022) Hwanjun Song, Minseok Kim, Dongmin Park, Yooju Shin, and Jae-Gil Lee. Learning from noisy labels with deep neural networks: A survey. IEEE Transactions on Neural Networks and Learning Systems, pp. 1–19, 2022. doi: 10.1109/TNNLS.2022.3152527.

- Stojanov et al. (2021) Petar Stojanov, Zijian Li, Mingming Gong, Ruichu Cai, Jaime Carbonell, and Kun Zhang. Domain adaptation with invariant representation learning: What transformations to learn? Advances in Neural Information Processing Systems, 34:24791–24803, 2021.

- Tan et al. (2020) Shuhan Tan, Xingchao Peng, and Kate Saenko. Class-imbalanced domain adaptation: an empirical odyssey. In European Conference on Computer Vision, pp. 585–602. Springer, 2020.

- Tang et al. (2020) Hui Tang, Ke Chen, and Kui Jia. Unsupervised domain adaptation via structurally regularized deep clustering. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp. 8725–8735, 2020.

- Tanwisuth et al. (2021) Korawat Tanwisuth, Xinjie Fan, Huangjie Zheng, Shujian Zhang, Hao Zhang, Bo Chen, and Mingyuan Zhou. A prototype-oriented framework for unsupervised domain adaptation. Advances in Neural Information Processing Systems, 34, 2021.

- Venkateswara et al. (2017) Hemanth Venkateswara, Jose Eusebio, Shayok Chakraborty, and Sethuraman Panchanathan. Deep hashing network for unsupervised domain adaptation. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 5018–5027, 2017.

- Vershynin (2018) Roman Vershynin. High-dimensional probability: An introduction with applications in data science, volume 47. Cambridge university press, 2018.

- Wang et al. (2022) Boyu Wang, Jorge Mendez, Changjian Shui, Fan Zhou, Di Wu, Gezheng Xu, Christian Gagné, and Eric Eaton. Gap minimization for knowledge sharing and transfer, 2022.

- Wang et al. (2019a) Ximei Wang, Liang Li, Weirui Ye, Mingsheng Long, and Jianmin Wang. Transferable attention for domain adaptation. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 33, pp. 5345–5352, 2019a.

- Wang et al. (2019b) Yisen Wang, Xingjun Ma, Zaiyi Chen, Yuan Luo, Jinfeng Yi, and James Bailey. Symmetric cross entropy for robust learning with noisy labels. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 322–330, 2019b.

- Wu et al. (2020) Yuan Wu, Diana Inkpen, and Ahmed El-Roby. Dual mixup regularized learning for adversarial domain adaptation. ECCV, 2020.

- Xia et al. (2020a) Xiaobo Xia, Tongliang Liu, Bo Han, Chen Gong, Nannan Wang, Zongyuan Ge, and Yi Chang. Robust early-learning: Hindering the memorization of noisy labels. In International Conference on Learning Representations, 2020a.

- Xia et al. (2020b) Xiaobo Xia, Tongliang Liu, Bo Han, Nannan Wang, Mingming Gong, Haifeng Liu, Gang Niu, Dacheng Tao, and Masashi Sugiyama. Part-dependent label noise: Towards instance-dependent label noise. Advances in Neural Information Processing Systems, 33:7597–7610, 2020b.

- Xiao et al. (2015) Tong Xiao, Tian Xia, Yi Yang, Chang Huang, and Xiaogang Wang. Learning from massive noisy labeled data for image classification. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 2691–2699, 2015.

- Xu et al. (2020) Renjun Xu, Pelen Liu, Liyan Wang, Chao Chen, and Jindong Wang. Reliable weighted optimal transport for unsupervised domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 4394–4403, 2020.

- Xu et al. (2019a) Ruijia Xu, Guanbin Li, Jihan Yang, and Liang Lin. Larger norm more transferable: An adaptive feature norm approach for unsupervised domain adaptation. In The IEEE International Conference on Computer Vision (ICCV), October 2019a.

- Xu et al. (2019b) Yilun Xu, Peng Cao, Yuqing Kong, and Yizhou Wang. L_dmi: An information-theoretic noise-robust loss function. arXiv preprint arXiv:1909.03388, 2019b.

- Yang et al. (2020) Guanglei Yang, Haifeng Xia, Mingli Ding, and Zhengming Ding. Bi-directional generation for unsupervised domain adaptation. In AAAI, pp. 6615–6622, 2020.

- Yang et al. (2021a) Shiqi Yang, Joost van de Weijer, Luis Herranz, Shangling Jui, et al. Exploiting the intrinsic neighborhood structure for source-free domain adaptation. Advances in Neural Information Processing Systems, 34, 2021a.

- Yang et al. (2021b) Shiqi Yang, Yaxing Wang, Joost van de Weijer, Luis Herranz, and Shangling Jui. Generalized source-free domain adaptation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 8978–8987, 2021b.

- Yi et al. (2022) Li Yi, Sheng Liu, Qi She, A. Ian McLeod, and Boyu Wang. On learning contrastive representations for learning with noisy labels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 16682–16691, June 2022.

- Zhang et al. (2019a) Yabin Zhang, Hui Tang, Kui Jia, and Mingkui Tan. Domain-symmetric networks for adversarial domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5031–5040, 2019a.

- Zhang et al. (2021) Yikai Zhang, Songzhu Zheng, Pengxiang Wu, Mayank Goswami, and Chao Chen. Learning with feature-dependent label noise: A progressive approach. arXiv preprint arXiv:2103.07756, 2021.

- Zhang et al. (2019b) Yuchen Zhang, Tianle Liu, Mingsheng Long, and Michael Jordan. Bridging theory and algorithm for domain adaptation. In International Conference on Machine Learning, pp. 7404–7413, 2019b.

- Zhang & Sabuncu (2018) Zhilu Zhang and Mert R Sabuncu. Generalized cross entropy loss for training deep neural networks with noisy labels. In 32nd Conference on Neural Information Processing Systems (NeurIPS), 2018.

- Zhao et al. (2019) Han Zhao, Remi Tachet Des Combes, Kun Zhang, and Geoffrey Gordon. On learning invariant representations for domain adaptation. In International Conference on Machine Learning, pp. 7523–7532. PMLR, 2019.

- Zhu et al. (2021) Zhaowei Zhu, Tongliang Liu, and Yang Liu. A second-order approach to learning with instance-dependent label noise. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 10113–10123, 2021.

Appendix A Neighbors Label Noise Observations on Real-World Datasets

This section provides more observed results and explanations of Neighbors’ label noise during the Source-Free Domain Adaptation process on real-world datasets.

Currently, most SFDA methods inevitably leverage pseudo-labels for self-supervised learning or to learn the cluster structure of the target data in the feature space, in order to achieve domain adaptation. However, the pseudo-labels generated by the source model are usually noisy and of poor quality due to the domain distribution shift. Some neighborhood-based heuristic methods (Yang et al., 2021a; b) have been proposed to purify these target domain pseudo-labels, using the pseudo-labels of neighbors in the feature space to correct and reassign the central data point’s pseudo-label. In fact, such methods rely on a strong assumption: that the neighbors’ pseudo-labels are of relatively high quality. However, in our experimental observations, we find that at the very beginning of the adaptation process, the similarity of two data points in the feature space cannot fully represent their connection in the label space. Furthermore, such methods can easily provide useless and noisy prediction information for the central data point. We show some statistical results on two real-world datasets, VisDA and Office-Home.

Following the neighborhood construction method in Yang et al. (2021a; b), we use the pre-trained source model to infer the target data, extract the feature space outputs and get the prediction results. We use the cosine similarity on the feature space to find the top similar neighbors (e.g., ) for each data point (named as the central data point). Then, we collect the neighbors regarding the ground truth label of central data points and study the neighbor’s quality for each class.

Neighbors who do not belong to the correct category. We define a neighbor that does not belong to the same category as its central data point as a False Neighbor, which means their ground-truth labels are not the same: . The results on VisDA (train → validation) and Office-Home (Pr → Cl) are shown in Figure 6 and Figure 5.

Neighbors who cannot provide useful prediction information. We further study the prediction information provided by such neighbors. Regardless of their true categories, we consider neighbors whose Predicted Label is the same as the Ground Truth Label of the central data point to be Useful Neighbors; otherwise, they are Misleading Neighbors, as they cannot provide the expected useful prediction information. We denote the Misleading Neighbors Ratio as the proportion of misleading neighbors among all neighbors for each class. Besides, as some methods heuristically utilize the predicted logits as the predicted probability or confidence score in the pseudo label purification process, we further study the Over-Confident Misleading Neighbors Ratio for each class. We define the over-confident misleading neighbors ratio as the number of over-confident misleading neighbors (misleading neighbors with a high predicted logit, larger than 0.75) divided by the number of all neighbors per class. The results on VisDA and Office-Home are shown in Figure 1ii and Figure 7.
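The three per-class statistics defined above can be sketched as follows. This is a hedged illustration rather than the authors' evaluation script: the function name `neighbor_noise_ratios`, the default neighborhood size `k`, and the reading of the "predicted logit" as the maximum softmax probability are all assumptions made for this example.

```python
import numpy as np


def neighbor_noise_ratios(features, pred_probs, true_labels, k=3,
                          conf_threshold=0.75):
    """Per-class false / misleading / over-confident misleading ratios.

    features:    (n, d) target features from the source model
    pred_probs:  (n, c) predicted class probabilities (assumed softmax)
    true_labels: (n,) ground-truth labels, used for analysis only
    k:           neighborhood size (hypothetical default)
    """
    feats = features / np.linalg.norm(features, axis=1, keepdims=True)
    sim = feats @ feats.T                    # cosine similarity
    np.fill_diagonal(sim, -np.inf)           # a point is not its own neighbor
    nn_idx = np.argsort(-sim, axis=1)[:, :k]

    pred_labels = pred_probs.argmax(axis=1)
    pred_conf = pred_probs.max(axis=1)
    center = true_labels[:, None]

    # False neighbor: ground-truth label differs from the central point's.
    false_nb = true_labels[nn_idx] != center
    # Misleading neighbor: predicted label differs from the central truth.
    misleading = pred_labels[nn_idx] != center
    # Over-confident misleading neighbor: misleading with high confidence.
    overconf = misleading & (pred_conf[nn_idx] > conf_threshold)

    ratios = {}
    for c in np.unique(true_labels):
        mask = true_labels == c
        ratios[int(c)] = {
            "false": float(false_nb[mask].mean()),
            "misleading": float(misleading[mask].mean()),
            "overconfident_misleading": float(overconf[mask].mean()),
        }
    return ratios
```

Each ratio is normalized by the total number of neighbors collected for a class, matching the "divided by the number of all neighbors per class" definition in the text.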

We want to clarify that the above exploratory results reflect the phenomenon of unbounded noise in SFDA only to some extent: the fact that the set of over-confident misleading neighbors is non-empty corresponds, to some extent, to the non-emptiness of R proved in Theorem 3.1, but the definition of misleading neighbors does not rigorously satisfy the definition of unbounded label noise.

Appendix B Relationship Between Mislabeling Error and Domain Shift

In this part, we focus on explaining the relationship between the label noise and the domain shift, as illustrated in Figure 9. The following theorem characterizes the relationship between the labeling error and the domain shift.

Theorem B.1.

Without loss of generality, we assume that the is positively correlated with the vector , i.e., . Let be the Bayes optimal classifier for the source domain . Then

| (6) |

where , , , and is the standard normal cumulative distribution function.

Theorem B.1 indicates that the labeling error for the target domain can be represented by a function of the domain shift , which can be shown numerically in Figure 8. The projection of the domain shift on the vector is given by . Since is on the direction of , can also be represented by , where characterizes the magnitude of the domain shift. More specifically, in Figure 8, we present the relationship between the mislabeling rate and for all possible . When is positively correlated with (assumption in Theorem B.1), we have , and when is negatively correlated with , we obtain . In both situations, we can observe that the labeling error increases with the absolute value of increasing, which implies that the more severe the domain shift is, the greater the mislabeling error will be obtained. Besides, we note that when the source and target domains are the same, the mislabeling error in Eq. (6) is minimized and degraded to the Bayes error, which cannot be reduced (Fukunaga, 2013). This corresponds to the situation when is perpendicular to , , and shown in Figure 8.
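The qualitative behavior described above can be checked numerically with a small sketch. It assumes a simplified setting that may differ from the exact parameterization of Eq. (6): two equally likely isotropic Gaussian components with means +mu and -mu and common standard deviation sigma, a source Bayes classifier that thresholds the projection onto mu at zero, and a target domain obtained by translating both components. The names `mislabeling_rate`, `mu_norm`, and `shift_along_mu` (the signed projection of the shift onto the unit vector along mu) are introduced here for illustration only.

```python
import math


def std_normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def mislabeling_rate(mu_norm, sigma, shift_along_mu):
    """Error of the source Bayes classifier on the shifted target mixture.

    Only the shift component perpendicular to the decision hyperplane
    (i.e. along mu) matters; the parallel component leaves predictions
    unchanged.
    """
    a = mu_norm / sigma          # class separation in units of sigma
    d = shift_along_mu / sigma   # shift along mu in units of sigma
    # First component is pushed away from the boundary when d > 0,
    # second component is pushed across it.
    return 0.5 * (std_normal_cdf(-(a + d)) + std_normal_cdf(-(a - d)))
```

Under these assumptions the rate at zero shift equals the Bayes error, it is symmetric in the sign of the shift, and it grows with the magnitude of the shift along mu, which is the trend the text attributes to Figure 8.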

B.1 Proofs for Theorem B.1

Proof.

The Bayes classifier predicts to the first component when

| (7) |

Since the distributions of the two components with the same priors for the source domain are given by and , respectively. Based on Bayes’ rule, Eq. (7) is equivalent to

| (8) |

Solving the left hand side of Eq. (8) by using the knowledge of two multivariate Gaussian distributions, we get

| (9) |

So predicts to the first component when and predicts to the second component when The decision boundary is such that . When there is no domain shift , we have , and the mislabeling rate is the Bayes error, which is given by:

| (10) |

We first study the first term in Eq. (10):

where the second equality is because of the rotationally symmetric property for isotropic Gaussian random vectors, is the cumulative distribution function of the standard Gaussian distribution, and . Applying similar steps to the second term in Eq. (10) and substituting both terms into Eq. (10), we obtain:

| (11) |

When there is no domain shift, the labeling error is the Bayes error, which is expressed by Eq. (11).

Then we consider the case when . The distributions of the first and the second component are and , respectively. Notice that the decision boundary is an affine hyperplane. Any shift parallel to this affine hyperplane will not affect the final component predictions. The domain shift can be decomposed into the sum of two vectors: one parallel to this affine hyperplane and the other perpendicular to it. It is straightforward to verify that is perpendicular to the hyperplane. Thus, we project the domain shift onto the vector to get the component of that is perpendicular to the hyperplane, which is given by:

| (12) |

Since we assume is positively correlated to the vector , can be regarded as the magnitude of the domain shift along the direction . Note that the results also hold for the case where is negatively correlated to . The whole proof can be obtained by following the very similar proof steps for the positively correlated case.

The mislabeling rate of the optimal source classifier on target data is:

| (13) |

We first calculate the first term of Eq. (13). Following the same tricks discussed above:

| (14) |

where .

Similarly, the second term is given by:

| (15) |

where .

Appendix C Proofs for Theorem 3.1

Proof.

Without loss of generality, we choose to assume as the convenient way to present our results. From the proof for Theorem B.1, we know that is at the decision boundary such that , where

Let be the optimal Bayes classifier for the target domain, which can be obtained the same way as mentioned in B.1. The equation implies that

Note that is on the affine hyperplane where . Any data points on this hyperplane will have the equal probabilities to be correctly classified. We start from this hyperplane and calculate another point , where is at least . Thus, for any points that are mislabeled and far away from will result in . We first aim to find such a data point . Let , where is the scalar measures the distance between the point to the hyperplane . We need to find such that

| (17) |

where

| (18) |

Taking Eq. (C) into Eq. (17), we get . Since the isotropic Gaussian random vectors has the rotationally symmetric property, we can transform the integration of multivariate normal distribution to standard normal distribution with different intervals of integration. Then any data points from a region that have at most distance to its mean will have at least probability coming from the first component. Let the region be:

Equivalently, taking can be simplified:

The region is valid when data dimension is large. This is realistic in practice. Since neural networks are usually dealing with high dimension data, for example , the region is valid.

On the other hand, we aim to find a region where all data points are mislabeled. From the proof for Theorem 1, the source classifier is given by

| (19) |

Any data points are classified to the second component if . Hence

We take the intersection of and , all data points from this intersection are (1) having at least probability coming from the first component, and (2) being classified to the second component. Formally, for , if , then

| (20) |

We note that is non-empty when , where is the magnitude of the domain shift along with the direction . Since is chosen from , to verify that is non-empty, we only need to verify that also belongs to .

if and only if:

where .

Therefore, if , is non-empty.

Next, we show increases as increases.

Let event be a set of such that they are mislabeled by (i.e. ). Let event be a set of such that they are from the first component but are mislabeled to the second component with a probability . Let event be a set of such that they are from the second component but are mislabeled to the first component with a probability . Thus

| (21) |

Let event be a set of such that they are from the first component such that or . Let event be a set of such that they are from the second component but are mislabeled to the first component. For ,

which does not change as the domain shift varies. Meanwhile,

which is given by Eq. (15). By our assumption, the domain shift is positively correlated with the vector . So when increases, decreases.

Since and , the probability measure on is given by:

| (22) |

where the first term is the mislabeling rate that increases as increases (given by Theorem B.1); the second term is a constant; the third term decreases as as increases. The equality in Eq. (C) holds when . Therefore, when the magnitude of the domain shift increases, the lower bound of increases, which forces more points to break the conventional LLN assumption.

∎

Appendix D Background Introduction and Proofs for Lemma 3.2

Learning with label noise is an important topic in deep learning and modern artificial intelligence research. The main idea behind it is robust training, which can be further divided into fine-grained categories, such as robust architecture, robust regularization, robust loss design, and sample selection (Song et al., 2022). For example, robust architecture-based methods modify the deep model’s architecture, by adding an adaptation layer or leveraging a dedicated module, to learn the label transition process and handle noisy labels. In addition, robust regularization approaches usually enforce the DNN to overfit less to falsely labeled examples by adopting a regularizer, explicitly or implicitly. For instance, Yi et al. (2022) proposed a contrastive regularization term to learn representations that are robust to noisy labels. Recently, with the widespread implementation of AI technologies, the topics of trustworthiness and fairness have drawn a lot of interest (Liu et al., 2019; Shui et al., 2022a; b). How to provide trustworthy and fair learning in LLN problems is a significant research direction. In this paper, however, we develop our discussion based on the robust loss methods in LLN.

In this section, we will first introduce the concepts and technical details of some noise-robust loss based LLN methods, including GCE (Zhang & Sabuncu, 2018), SL (Wang et al., 2019b), NCE (Ma et al., 2020), and GJS (Englesson & Azizpour, 2021). Then, we will present the proof details of Lemma 3.2.

D.1 Noise-Robust Loss Functions in LLN methods

Among the numerous studies of LLN methods, loss correction is a major branch of research. The main idea of loss correction is to modify the loss function and make it robust to noisy labels.

As indicated in Ma et al. (2020), a loss function $\ell$ is defined to be noise robust if $\sum_{k=1}^{K} \ell(f(x), k) = C$ for every input $x$, where $C$ is a positive constant and $K$ is the number of classes in the label space. For example, the widely used Cross-Entropy (CE) loss is unbounded and therefore is not robust to label noise. Some LLN studies show that existing loss functions such as mean absolute error (MAE) (Ghosh et al., 2017), reverse cross entropy (RCE) (Wang et al., 2019b), normalized cross entropy (NCE) (Ma et al., 2020), and normalized focal loss (NFL) are noise-robust and that combining them with CE can help mitigate the sensitivity of the model to noisy labels.
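The robustness condition can be checked numerically. The following is a minimal sketch, assuming the condition from Ma et al. (2020) that the loss summed over all candidate labels is the same constant for every prediction; the helper names and the example predictions are illustrative and not part of the original paper.

```python
import math

def mae_loss(probs, label):
    """MAE between the one-hot vector of `label` and the predicted distribution."""
    return sum(abs((1.0 if k == label else 0.0) - p) for k, p in enumerate(probs))

def ce_loss(probs, label):
    """Cross-entropy of the predicted distribution against `label`."""
    return -math.log(probs[label])

def loss_sum_over_labels(loss_fn, probs):
    """Sum of the loss over every candidate label for one prediction."""
    return sum(loss_fn(probs, k) for k in range(len(probs)))

# Three hypothetical softmax outputs over K = 3 classes.
preds = [[0.7, 0.2, 0.1], [0.4, 0.35, 0.25], [0.05, 0.05, 0.9]]
mae_sums = [loss_sum_over_labels(mae_loss, p) for p in preds]
ce_sums = [loss_sum_over_labels(ce_loss, p) for p in preds]

print("MAE sums:", mae_sums)  # constant across predictions: 2 * (K - 1)
print("CE sums: ", ce_sums)   # varies with the prediction
```

For MAE the sum equals 2(K - 1) regardless of the prediction, whereas the CE sum depends on the prediction and grows without bound as any class probability approaches zero.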

More specifically, for a given data and a classifier , GCE (Zhang & Sabuncu, 2018) leverages the negative Box-Cox transformation as a loss function, which can exploit the benefits of both the noise-robustness provided by MAE and the implicit weighting scheme of CE:

where is a hyperparameter to be decided.
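As a rough illustration of the GCE loss, the sketch below assumes the form given by Zhang & Sabuncu (2018), namely (1 - p_y^q) / q with q in (0, 1], where p_y is the predicted probability of the labeled class. The function name and the default value of q are illustrative choices, not values taken from this paper.

```python
import math

def gce_loss(probs, label, q=0.7):
    """Generalized cross entropy: (1 - p_y ** q) / q for q in (0, 1]."""
    if not 0.0 < q <= 1.0:
        raise ValueError("q must lie in (0, 1]")
    return (1.0 - probs[label] ** q) / q

probs = [0.3, 0.7]
# q = 1 recovers an MAE-style loss, 1 - p_y.
print(gce_loss(probs, 0, q=1.0))
# As q approaches 0 the loss approaches the cross entropy, -log(p_y).
print(gce_loss(probs, 0, q=1e-6), -math.log(probs[0]))
```

Intermediate values of q trade off the noise robustness of MAE against the faster convergence of CE.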

Another noise-robust loss based method, SL (Wang et al., 2019b), combines the reverse cross entropy (RCE) loss, which is noise tolerant, with the CE loss to obtain the symmetric loss:

where is the predicted distribution over labels by classifier and is the ground truth class distribution conditioned on sample .
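A minimal sketch of the SL loss follows, assuming the formulation of Wang et al. (2019b): RCE swaps the roles of the prediction and the one-hot label inside the cross entropy, and log 0 is replaced by a negative constant. The weights `alpha` and `beta` and the constant `log_zero` are hyperparameters; the values used here are illustrative only.

```python
import math

def ce_loss(probs, label):
    """Cross entropy of the prediction against a hard label."""
    return -math.log(probs[label])

def rce_loss(probs, label, log_zero=-4.0):
    """Reverse cross entropy: -sum_k p(k|x) * log q(k|x), with q one-hot.

    log q(k|x) is 0 for the labeled class and is set to `log_zero` elsewhere.
    """
    return -sum(p * (0.0 if k == label else log_zero) for k, p in enumerate(probs))

def sl_loss(probs, label, alpha=1.0, beta=1.0, log_zero=-4.0):
    """Symmetric loss: weighted sum of CE and RCE."""
    return alpha * ce_loss(probs, label) + beta * rce_loss(probs, label, log_zero)

probs = [0.7, 0.2, 0.1]
print(rce_loss(probs, 0))                      # equals -log_zero * (1 - p_y)
print(sum(rce_loss(probs, k) for k in range(3)))  # constant: -log_zero * (K - 1)
print(sl_loss(probs, 0))
```

Under this definition RCE reduces to a scaled version of 1 - p_y, which is why its sum over all labels is constant.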

GJS (Englesson & Azizpour, 2021) utilizes the multi-distribution generalization of the Jensen-Shannon divergence as the loss function, which has been proven noise-robust and is in fact a generalization of CE and MAE. Concretely, the generalized JS divergence and the GJS loss are defined as:

where , are categorical distributions over classes, , a random perturbation of sample , and log
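The generalized JS divergence can be sketched as follows, assuming its standard definition as the entropy of the weighted mixture minus the weighted sum of the individual entropies. The GJS loss of Englesson & Azizpour (2021) applies this divergence to the one-hot label and to predictions on perturbed inputs and rescales it by a constant; that rescaling is omitted here, and all names and example values are illustrative.

```python
import math

def entropy(dist):
    """Shannon entropy of a categorical distribution (natural log)."""
    return -sum(p * math.log(p) for p in dist if p > 0.0)

def generalized_js(dists, weights):
    """Generalized Jensen-Shannon divergence of several distributions.

    GJS = H(sum_i w_i * p_i) - sum_i w_i * H(p_i), with weights summing to 1.
    """
    if len(dists) != len(weights):
        raise ValueError("one weight per distribution is required")
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    num_classes = len(dists[0])
    mixture = [sum(w * d[k] for w, d in zip(weights, dists)) for k in range(num_classes)]
    return entropy(mixture) - sum(w * entropy(d) for w, d in zip(weights, dists))

label = [1.0, 0.0, 0.0]        # one-hot label
pred_a = [0.6, 0.3, 0.1]       # prediction on one perturbed view (hypothetical)
pred_b = [0.5, 0.4, 0.1]       # prediction on another perturbed view (hypothetical)
print(generalized_js([label, pred_a, pred_b], [0.5, 0.25, 0.25]))
```

The divergence is zero when all distributions coincide, so it penalizes both disagreement with the label and inconsistency between the perturbed views.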

Further, Ma et al. (2020) show a simple loss normalization scheme which can be applied to any loss :

The study found that the normalized loss can indeed satisfy the robustness condition. However, it will also cause an underfitting problem in some situations.
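The normalization scheme can be illustrated with a short sketch, assuming the form used by Ma et al. (2020): the loss for the given label is divided by the sum of the losses over all labels. The function names are illustrative.

```python
import math

def ce_loss(probs, label):
    """Cross entropy of the prediction against a hard label."""
    return -math.log(probs[label])

def normalized_loss(loss_fn, probs, label):
    """Normalize a loss by its sum over all candidate labels."""
    total = sum(loss_fn(probs, k) for k in range(len(probs)))
    return loss_fn(probs, label) / total

probs = [0.7, 0.2, 0.1]
nce_values = [normalized_loss(ce_loss, probs, k) for k in range(len(probs))]
print(nce_values)       # each value lies in [0, 1]
print(sum(nce_values))  # sums to 1 for any prediction
```

Because the normalized values always sum to one, the robustness condition holds by construction. The price is that the loss is squeezed into [0, 1], which is one way to see why underfitting can occur.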

Note that generalized cross entropy (GCE (Zhang & Sabuncu, 2018)) extends MAE and symmetric loss (SL (Wang et al., 2019b)) extends RCE. So we study GCE and SL in our experiments instead of studying MAE and RCE. Besides, GJS (Englesson & Azizpour, 2021) is shown to be tightly bounded around . All these methods have been shown to be noise tolerant under either bounded random label noise or bounded class-conditional label noise with the additional assumption that . In Section D.2, we show that under the same assumption but with unbounded label noise, these methods are not noise tolerant.

D.2 Proofs for Lemma 3.2

Proof.

Let be the probability of observing a noisy label given the ground-truth label and a sample . Let . The risk of under noisy data is given by

| (23) |

| (26) |

We note that implies and for , where is the probability output by for predicting the sample to be the class . This argument is proved by Wang et al. (2019b); Ghosh et al. (2017); Englesson & Azizpour (2021); Ma et al. (2020) (Theorem 1&2 in Ghosh et al. (2017), Theorem 1 in Wang et al. (2019b), Lemma 1&2 in Ma et al. (2020) and Theorem 1&2 in Englesson & Azizpour (2021)).

To ensure that holds for all inputs , previous studies assume bounded label noise, which is given by

| (27) |

Random label noise assumes that the mislabeling probability from the ground-truth label to any other label is the same for all inputs, i.e. , where is a constant. Let ; then Eq. (27) reduces to

This bounded assumption is commonly made by Wang et al. (2019b); Ghosh et al. (2017); Englesson & Azizpour (2021); Ma et al. (2020) (Theorem 1 in Ghosh et al. (2017), Theorem 1 in Wang et al. (2019b), Lemma 1 in Ma et al. (2020) and Theorem 1 in Englesson & Azizpour (2021)).

Class-conditional label noise assumes that for any inputs and . Let ; then the bounded assumption in Eq. (27) reduces to

This bounded assumption is also commonly made, and it can be found in Theorem 2 in Ghosh et al. (2017), Theorem 1 in Wang et al. (2019b), Lemma 2 in Ma et al. (2020) and Theorem 2 in Englesson & Azizpour (2021).
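One common reading of the bounded assumption in the cited works is that the true label remains the most likely observed label, i.e. the noise transition matrix is diagonally dominant in each row; for random noise with rate η over K classes this amounts to η < (K - 1)/K. The sketch below checks this reading on small examples. It is an illustration under that assumption, and the function names and matrices are hypothetical.

```python
def symmetric_noise_matrix(num_classes, noise_rate):
    """Transition matrix T[j][k] = P(noisy label = k | true label = j)
    for random (symmetric) label noise with the given total flip rate."""
    off_diagonal = noise_rate / (num_classes - 1)
    return [
        [1.0 - noise_rate if j == k else off_diagonal for k in range(num_classes)]
        for j in range(num_classes)
    ]

def is_bounded(transition):
    """True if, in every row, keeping the true label is strictly more
    likely than flipping to any single other label."""
    return all(
        row[j] > row[k]
        for j, row in enumerate(transition)
        for k in range(len(row))
        if k != j
    )

print(is_bounded(symmetric_noise_matrix(10, 0.8)))   # 0.2 > 0.8 / 9
print(is_bounded(symmetric_noise_matrix(10, 0.95)))  # 0.05 < 0.95 / 9
# Class-conditional example: class 1 is flipped to class 0 most of the time.
print(is_bounded([[0.6, 0.4], [0.7, 0.3]]))
```

The last matrix violates the condition for the second class, which mirrors the unbounded situation studied in this appendix, except that in SFDA the violation depends on the input rather than only on the class.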

However, in SFDA, we proved that the following event holds with probability at least :

| (28) |

Indeed, we first denote by the event that is mislabeled. Then

Given the result in Eq. (28), and combining it with Eq. (26), we have

When the event holds, the condition holds.

Note that only means for and for . It means that the optimal classifier from noisy data can make correct predictions on any inputs, which is consistent with the optimal classifier obtained from clean data.

As for the condition , we can get for a , which means that the optimal classifier from noisy data cannot make correct predictions on samples . To verify this, we use the robust loss function RCE as an example, and it can be easily generalized to the other robust loss functions mentioned above. Based on the definition of the RCE loss (Wang et al., 2019b), we have

where is a constant. The above equations show that any can make the condition hold. Meanwhile, is the global minimizer of the risk over the noisy data, which makes memorize the noisy dataset.

Therefore, makes incorrect predictions for such that for a , and is the global optimum over clean data, which gives correct predictions for such that for a . That completes the proof as makes different predictions on compared to .

∎
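The effect described in the proof can be reproduced on a single input in a binary toy setting. The sketch below assumes a bounded, symmetric loss of the form 1 - p_y (RCE and MAE are scaled versions of it) and an input whose label is flipped with probability `flip_prob`; it then finds the prediction that minimizes the expected loss under the noisy labels. The setup and names are illustrative and are not taken from the paper.

```python
def noisy_pointwise_risk(p_true, flip_prob):
    """Expected loss at one input under noisy labels, with loss 1 - p_label.

    p_true is the probability the classifier assigns to the true class;
    the other class receives 1 - p_true.
    """
    clean_term = (1.0 - flip_prob) * (1.0 - p_true)           # label is correct
    noisy_term = flip_prob * (1.0 - (1.0 - p_true))           # label is flipped
    return clean_term + noisy_term

def risk_minimizer(flip_prob, grid_size=101):
    """Grid search for the p_true that minimizes the noisy pointwise risk."""
    grid = [i / (grid_size - 1) for i in range(grid_size)]
    return min(grid, key=lambda p: noisy_pointwise_risk(p, flip_prob))

print(risk_minimizer(0.3))  # bounded noise: the minimizer backs the true class
print(risk_minimizer(0.8))  # unbounded noise: the minimizer backs the wrong class
```

When the flip probability stays below one half the noisy-risk minimizer agrees with the clean one, but once it exceeds one half the minimizer memorizes the noisy label, which is the behavior the lemma attributes to robust losses under unbounded label noise.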

Appendix E Proofs for Theorem 4.1

The proof for Theorem 4.1 is partially adapted from Liu et al. (2020). Note that we are dealing with unbounded label noise, whereas bounded label noise is considered in Liu et al. (2020). As indicated in Liu et al. (2020), is set as the smallest positive integer such that , and with high probability. The parameter is initialized by Kaiming initialization (He et al., 2015), that is, , and converges in probability to . For simplicity, we assume without loss of generality. The proof consists of two parts. The first part shows that is highly positively correlated with the ground truth classifier. The second part shows that the prediction accuracy on mislabeled samples can be represented as the correlation between the learned classifier and the ground truth classifier.

Proof.

To begin with, we show the first part. Let samples , where . The gradient of the logistic loss function with respect to the parameter is given by:

| (29) |

Then we will show that is lower bounded by a positive number. We first show the bound on term ① in Eq. (E). Since is sampled from the standard normal distribution, has finite variance. By the law of large numbers, converges in probability to its mean. Therefore,

Since is a Gaussian random vector with independent entries, we have , where . Therefore, the above expectation is equivalent to

| (30) |

where . Note that , which means that at most half of the samples are mislabeled. Thus

By the triangle inequality for the norm,

where is a random vector with Gaussian coordinates. By Lemma E.1,

| (32) |

with probability when , where is a constant.

On the other hand,

| (33) |

where the second inequality is by Lemma 9 from Liu et al. (2020), and the last inequality is by Lemma E.1.

By Lemma 8 from Liu et al. (2020), we have . Therefore, Eq. (34) can be rewritten as:

| (35) |

where we let .

Then we prove by mathematical induction, which can help us get rid of the dependence on for the lower bound in Eq. (E).

For , the inequality holds trivially. By the gradient descent algorithm, , where .

As , we have . Taking it into Eq. (E), we have

To show is lower bounded by , we need to have

It is straightforward to verify that and it can be verified that when , we have . Therefore, for and any

Hence by gradient descent algorithm and the same proof above, we have

| (36) |

For the second part: the prediction accuracy on mislabeled sample set converges in probability to its mean. Therefore, the expectation of the prediction accuracy on mislabeled samples is given by

| (37) |

Note that is a standard Gaussian vector, so is distributed as . Thus, Eq. (E) is equivalent to .

By the inequality for , then we have

We denote by:

where for any . Note that when , and is monotone decreasing as increases since for .

∎
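The first part of the proof starts from the gradient of the logistic loss and the gradient descent update. The sketch below uses the common parametrization with labels in {-1, +1} and loss log(1 + exp(-y θ·x)); the paper follows Liu et al. (2020), whose form may differ by constant factors or label encoding, so this is an illustration rather than a transcription of Eq. (29). The toy data and names are hypothetical.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def logistic_loss(theta, xs, ys):
    """Mean logistic loss for a linear classifier with labels in {-1, +1}."""
    return sum(math.log1p(math.exp(-y * dot(theta, x))) for x, y in zip(xs, ys)) / len(xs)

def logistic_grad(theta, xs, ys):
    """Gradient of the mean logistic loss with respect to theta."""
    grad = [0.0] * len(theta)
    for x, y in zip(xs, ys):
        weight = -y / (1.0 + math.exp(y * dot(theta, x)))
        for d, xd in enumerate(x):
            grad[d] += weight * xd
    return [g / len(xs) for g in grad]

def gd_step(theta, xs, ys, lr):
    """One gradient descent update."""
    return [t - lr * g for t, g in zip(theta, logistic_grad(theta, xs, ys))]

xs = [[1.0, 2.0], [-0.5, 0.3], [0.2, -1.5]]
ys = [1, -1, 1]  # possibly noisy labels
theta_1 = gd_step([0.0, 0.0], xs, ys, lr=0.1)
print(theta_1)
```

Starting from zero, the first update is proportional to the average of the label-weighted inputs, so the early iterates are driven by the population-level correlation between inputs and labels rather than by individual mislabeled samples. This is the intuition that the early-time analysis makes precise.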

Lemma E.1.

Let be a random vector with independent, Gaussian coordinates with and . Then

where is a constant.

Appendix F Additional Learning Curves

We provide additional learning curves on the DomainNet dataset, shown in Figure 10. The dataset contains pairs of tasks showing that: (1) target classifiers have higher prediction accuracy during the early-training time; (2) leveraging ETP by using ELR can alleviate the memorization of unbounded noisy labels generated by source models.
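For readers unfamiliar with ELR, the following is a minimal sketch of its two ingredients as described by Liu et al. (2020): a moving-average target built from past predictions, and a regularizer log(1 - ⟨p, t⟩) that is added to the training loss with a weight. How the regularizer is combined with each SFDA objective is specified in Algorithm 1 and is not reproduced here. The names `beta` and `eps` and the numeric values are illustrative assumptions.

```python
import math

def update_target(target, probs, beta=0.7):
    """Temporal-ensembling target: moving average of past predictions."""
    return [beta * t + (1.0 - beta) * p for t, p in zip(target, probs)]

def elr_regularizer(probs, target, eps=1e-4):
    """ELR term log(1 - <probs, target>) for a single sample.

    The argument of the log is clipped at `eps` for numerical safety.
    Minimizing this term pulls the prediction toward the target.
    """
    inner = sum(p * t for p, t in zip(probs, target))
    return math.log(max(1.0 - inner, eps))

target = [0.5, 0.5]            # initial target for one sample (hypothetical)
probs = [0.9, 0.1]             # current model prediction (hypothetical)
target = update_target(target, probs)
reg = elr_regularizer(probs, target)
print(target, reg)
```

Because the targets accumulate the predictions made early in training, when the model is more accurate on mislabeled samples, the regularizer discourages later drift toward the noisy pseudo-labels.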

Appendix G Experimental Details

In this section, we additionally show the overall training process of our method, illustrated in Figure 11 and in Algorithm 1. We also provide more details on the experiments in our paper.

Datasets. We use four benchmark datasets, which have been widely used in the Unsupervised Domain Adaptation (UDA) (Long et al., 2015; Tan et al., 2020; Wang et al., 2022) and Source-Free Domain Adaptation (SFDA) (Liang et al., 2020) scenarios, to verify the effectiveness of leveraging the early-time training phenomenon to address unbounded label noise. Office- (Saenko et al., 2010) contains images in three domains (Amazon, DSLR, and Webcam), and each domain consists of classes. Office-Home (Venkateswara et al., 2017) contains images in four domains (Real, Clipart, Art, and Product), and each domain consists of classes. VisDA (Peng et al., 2017) contains K synthetic images and K real object images with classes. DomainNet (Peng et al., 2019) contains around K images in six different domains (Clipart, Infograph, Painting, Quickdraw, Real and Sketch). Following previous work (Tan et al., 2020; Liu et al., 2021a), we select the most commonly-seen classes from four domains: Real, Clipart, Painting, and Sketch.

Implementation. We use ResNet-50 (He et al., 2016) for Office-, Office-Home and DomainNet, and ResNet-101 (He et al., 2016) for VisDA as backbones. We adopt a fully connected (FC) layer as the feature extractor on the backbone and another FC layer as the classifier head. A batch normalization layer is placed between the two FC layers, and weight normalization is applied to the last FC layer. We set the learning rate to e- for all layers except for the last two FC layers, where we use a learning rate of e- for all datasets. The training of the source models is set to be consistent with SHOT (Liang et al., 2020). The hyperparameters for ELR with self-training, ELR with SHOT, ELR with G-SFDA, and ELR with NRC on the four datasets are shown in Table 5. We note that for ELR with self-training, there is only one hyperparameter to tune. The hyperparameters for existing SFDA algorithms are set to be consistent with their reported values for the Office-, Office-Home, and VisDA datasets. As these SFDA algorithms have not reported their performance on the DomainNet dataset, we follow the hyperparameter search strategy from their work (Liang et al., 2020; Yang et al., 2021a; b), and choose the optimal hyperparameters for SHOT, and for NRC, and for G-SFDA.

| Method | SF | A→D | A→W | D→W | W→D | D→A | W→A | Avg |
|---|---|---|---|---|---|---|---|---|
| MCD (Saito et al., 2018b) | ✗ | 92.2 | 88.6 | 98.5 | 100.0 | 69.5 | 69.7 | 86.5 |
| CDAN (Long et al., 2018) | ✗ | 92.9 | 94.1 | 98.6 | 100.0 | 71.0 | 69.3 | 87.7 |
| MDD (Zhang et al., 2019b) | ✗ | 90.4 | 90.4 | 98.7 | 99.9 | 75.0 | 73.7 | 88.0 |
| BNM (Cui et al., 2020) | ✗ | 90.3 | 91.5 | 98.5 | 100.0 | 70.9 | 71.6 | 87.1 |
| DMRL (Wu et al., 2020) | ✗ | 93.4 | 90.8 | 99.0 | 100.0 | 73.0 | 71.2 | 87.9 |
| BDG (Yang et al., 2020) | ✗ | 93.6 | 93.6 | 99.0 | 100.0 | 73.2 | 72.0 | 88.5 |
| MCC (Jin et al., 2020) | ✗ | 95.6 | 95.4 | 98.6 | 100.0 | 72.6 | 73.9 | 89.4 |
| SRDC (Tang et al., 2020) | ✗ | 95.8 | 95.7 | 99.2 | 100.0 | 76.7 | 77.1 | 90.8 |
| RWOT (Xu et al., 2020) | ✗ | 94.5 | 95.1 | 99.5 | 100.0 | 77.5 | 77.9 | 90.8 |
| RSDA-MSTN (Gu et al., 2020) | ✗ | 95.8 | 96.1 | 99.3 | 100.0 | 77.4 | 78.9 | 91.1 |
| Source Only | ✓ | 80.8 | 76.9 | 95.3 | 98.7 | 60.3 | 63.6 | 79.3 |
| +ELR | ✓ | 90.9 | 89.0 | 98.2 | 100.0 | 67.1 | 64.1 | 84.9 |
| SHOT (Liang et al., 2020) | ✓ | 94.0 | 90.1 | 98.4 | 99.9 | 74.7 | 74.3 | 88.6 |
| +ELR | ✓ | 94.9 | 91.6 | 98.7 | 100.0 | 75.2 | 74.5 | 89.3 |
| G-SFDA (Yang et al., 2021b) | ✓ | 85.9 | 87.3 | 98.6 | 99.8 | 71.4 | 72.1 | 85.8 |
| +ELR | ✓ | 86.9 | 87.8 | 98.7 | 99.8 | 71.4 | 72.9 | 86.2 |
| NRC (Yang et al., 2021a) | ✓ | 93.7 | 93.8 | 97.8 | 100.0 | 75.5 | 75.6 | 89.4 |
| +ELR | ✓ | 93.8 | 93.3 | 98.0 | 100.0 | 76.2 | 76.9 | 89.6 |

| Hyperparameters: / | Office- | Office-Home | VisDA | DomainNet |
|---|---|---|---|---|
| ELR only | / | / | / | / |
| ELR + SHOT | / | / | / | / |
| ELR + G-SFDA | / | / | / | / |
| ELR + NRC | / | / | / | / |

Appendix H Memorization Speed Between Label Noise in SFDA and in Conventional LLN settings