When Does Differentially Private Learning

Not Suffer in High Dimensions?

Abstract

Large pretrained models can be fine-tuned with differential privacy to achieve performance approaching that of non-private models. A common theme in these results is the surprising observation that high-dimensional models can achieve favorable privacy-utility trade-offs. This seemingly contradicts known results on the model-size dependence of differentially private convex learning and raises the following research question: When does the performance of differentially private learning not degrade with increasing model size? We identify that the magnitudes of gradients projected onto subspaces is a key factor that determines performance. To precisely characterize this for private convex learning, we introduce a condition on the objective that we term restricted Lipschitz continuity and derive improved bounds for the excess empirical and population risks that are dimension-independent under additional conditions. We empirically show that in private fine-tuning of large language models, gradients obtained during fine-tuning are mostly controlled by a few principal components. This behavior is similar to conditions under which we obtain dimension-independent bounds in convex settings. Our theoretical and empirical results together provide a possible explanation for the recent success of large-scale private fine-tuning. Code to reproduce our results can be found at https://github.com/lxuechen/private-transformers/tree/main/examples/classification/spectral_analysis.

1 Introduction

Recent works have shown that large publicly pretrained models can be differentially privately fine-tuned on small downstream datasets with performance approaching those attained by non-private models. In particular, past works showed that pretrained BERT [DCLT18] and GPT-2 [RNSS18, RWC+19] models can be fine-tuned to perform well for text classification and generation under a privacy budget of [LTLH21, YNB+21]. More recently, it was shown that pretrained ResNets [HZRS16] and vision-Transformers [DBK+20] can be fine-tuned to perform well for ImageNet classification under single digit privacy budgets [DBH+22, MTKC22].

One key ingredient in these successes has been the use of large pretrained models with millions to billions of parameters. These works generally highlighted the importance of two phenomena: (i) large pretrained models tend to experience good privacy-utility trade-offs when fine-tuned, and (ii) the trade-off improves with the improvement of the quality of the pretrained model (correlated with increase in size). While the power of scale and pretraining have been demonstrated numerous times in non-private deep learning [KMH+20], one common wisdom in private learning had been that large models tend to perform worse. This intuition was based on (a) results in differentially private convex optimization, most of which predicted that errors would scale proportionally with the dimension of the learning problem in the worst case, and (b) empirical observations that the noise injected to ensure privacy tends to greatly exceed the gradient in magnitude for large models [YZCL21a, Kam20].

For instance, consider the problem of differentially private convex empirical risk minimization (ERM). Here, we are given a dataset of examples , a convex set (not necessarily bounded), and the goal is to perform the optimization

subject to differential privacy, where is convex over for all . For bounded , past works presented matching upper and lower bounds that are dimension-dependent under the usual Lipschitz assumption on the objective [BST14, CMS11]. These results seem to suggest that the performance of differentially private ERM algorithms inevitably degrades with increasing problem size in the worst case, and present a seeming discrepancy between recent empirical results on large-scale fine-tuning.111We judiciously choose to describe the discrepancy as seeming, since the refined analysis presented in the current work suggests that the discrepancy is likely non-existent.

To better understand the relation between problem size and the performance of differentially private learning, we study the following question both theoretically and empirically:

When does the performance of differentially private stochastic gradient descent (DP-SGD) not degrade with increasing problem dimension?

On the theoretical front, we show that DP-SGD can result in dimension-independent error bounds even when gradients span the entire ambient space for unconstrained optimization problems. We identify that the standard dependence on the dimension of the ambient space can be replaced by the magnitudes of gradients projected onto subspaces of varying dimensions. We formalize this in a condition that we call restricted Lipschitz continuity and derive refined bounds for the excess empirical and population risks for DP-SGD when loss functions obey this condition. We show that when the restricted Lipschitz coefficients decay rapidly, both the excess empirical and population risks become dimension-independent. This extends a previous work which derived rank-dependent bounds for learning generalized linear models in an unconstrained space [SSTT21].

Our theoretical results shed light on the recent success of large-scale differentially private fine-tuning. We empirically show that gradients of language models during fine-tuning are mostly controlled by a few principal components — a behavior that is similar to conditions under which we obtain dimension-independent bounds for private convex ERM. This provides a possible explanation for the observation that densely fine-tuning with DP-SGD need not necessarily experience much worse performance than sparsely fine-tuning [LTLH21]. Moreover, it suggests that DP-SGD can be adaptive to problems that are effectively low-dimensional (as characterized by restricted Lipschitz continuity) without further algorithmic intervention.

We summarize our contributions below.

-

(1)

We introduce a condition on the objective function that we term restricted Lipschitz continuity. This condition generalizes the usual Lipschitz continuity notion and gives rise to refined analyses when magnitudes of gradients projected onto diminishing subspaces decay rapidly.

-

(2)

Under restricted Lipschitz continuity, we present refined bounds on the excess empirical and population risks for DP-SGD when optimizing convex objectives. These bounds generalize previous dimension-independent results [SSTT21] and are broadly applicable to cases where gradients are full rank but most coordinates only marginally influence the objective.

-

(3)

Our theory sheds light on recent successes of large-scale differentially private fine-tuning of language models. We show that gradients obtained through fine-tuning mostly lie in a subspace spanned by a few principal components — a behavior similar to when optimizing a restricted Lipschitz continuous loss with decaying coefficients. These empirical results provide a possible explanation for the recent success of large-scale private fine-tuning.

2 Preliminaries

We define the notation used throughout this work and state the problems of differentially private empirical risk minimization and differentially private stochastic convex optimization. Finally, we give a brief recap of differentially private stochastic gradient descent, and existing dimension-dependent and dimension-independent results in the literature.

Notation & Terminology.

For a positive integer , define the shorthand . For a vector , denote its -norm by . Given a symmetric , let denote its eigenvalues. Given a positive semidefinite matrix , let denote the induced Mahalanobis norm. For scalar functions and , we write if there exists a positive constant such that for all input in the domain.

2.1 Differentially Private Empirical Risk Minimization and Stochastic Convex Optimization

Before stating the theoretical problem of interest, we recall the basic concepts of Lipschitz continuity, convexity, and approximate differential privacy.

Definition 2.1 (Lipschitz Continuity).

The loss function is -Lipschitz with respect to the norm if for all , .

Definition 2.2 (Convexity).

The loss function is convex if , for all , and in a convex domain .

Definition 2.3 (Approximate Differential Privacy [DR+14]).

A randomized algorithm is -differentially private if for all neighboring datasets and that differ by a single record and all sets , the following expression holds

In this work, we study both differentially private empirical risk minimization (DP-ERM) for convex objectives and differentially private stochastic convex optimization (DP-SCO).

In DP-ERM for convex objectives, we are given a dataset of examples. Each per-example loss is convex over the convex body and -Lipschitz. We aim to design an -DP algorithm that returns a solution which approximately minimizes the empirical risk . The term is referred to as the excess empirical risk.

In DP-SCO, we assume the per-example loss is convex and -Lipschitz for all , and we are given examples drawn i.i.d. from some (unknown) distribution . The goal is to design an -DP algorithm which approximately minimizes the population risk . The term is referred to as the excess population risk.

2.2 Differentially Private Stochastic Gradient Descent

Differentially Private Stochastic Gradient Descent (DP-SGD) [ACG+16, SCS13] is a popular algorithm for DP convex optimization. For the theoretical analysis, we study DP-SGD for unconstrained optimization. To facilitate analysis, we work with the regularized objective expressed as

To optimize this objective, DP-SGD independently samples an example in each iteration and updates the solution by combining the gradient with an isotropic Gaussian whose scale is proportional to , the Lipschitz constant of . Algorithm 1 presents the pseudocode.

2.3 On the Dimension Dependence of Private Learning

Early works on bounding the excess empirical and population risks for privately optimizing convex objectives focused on a constrained optimization setup where algorithms typically project iterates back onto a fixed bounded domain after each noisy gradient update. Results in this setting suggested that risks are inevitably dimension-dependent in the worst case. For instance, it was shown that the excess empirical risk bound and excess population risk bound are tight when privately optimizing convex -Lipschitz objectives in a convex domain of diameter [BST14]. Moreover, the lower bound instances in these works imply that such dimension-dependent lower bounds also apply when one considers the class of loss functions whose gradients are low-rank.

The body of work on unconstrained convex optimization is arguably less abundant, with the notable result that differentially private gradient descent need not suffer from a dimension-dependent penalty when learning generalized linear models with low-rank data (equivalently stated, when gradients are low-rank) [SSTT21]. Our main theoretical results (Theorems 3.3 and 3.5) extend this line of work and show that dimension-independence is achievable under weaker conditions.

3 Dimension-Independence via Restricted Lipschitz Continuity

In this section, we introduce the restricted Lipschitz continuity condition and derive improved bounds for the excess empirical and population risks for DP-SGD when optimizing convex objectives.

Definition 3.1 (Restricted Lipschitz Continuity).

We say that the loss function is restricted Lipschitz continuous with coefficients , if for all , there exists an orthogonal projection matrix with rank such that

for all and all subgradients .

Note that any -Lipschitz function is also trivially restricted Lipschitz continuous with coefficients , since orthogonal projections never increase the -norm of a vector (generally, it is easy to see that ). On the other hand, we expect that a function which exhibits little growth in some subspace of dimension to have a restricted Lipschitz coefficient of almost 0. Our bounds on DP convex optimization characterize errors through the use of restricted Lipschitz coefficients. We now summarize the main assumption.

Assumption 3.2.

The per-example loss function is convex and -Lipschitz continuous for all . The empirical loss is restricted Lipschitz continuous with coefficients .

3.1 Bounds for Excess Empirical Loss

We present the main theoretical result on DP-ERM for convex objectives. The result consists of two components: Equation (1) is a general bound that is applicable to any sequence of restricted Lipschitz coefficients; Equation (2) specializes the previous bound when the sequence of coefficients decays rapidly and demonstrates dimension-independent error scaling.

Theorem 3.3 (Excess Empirical Loss).

Let and . Under Assumption 3.2, for all , setting , , and , where , DP-SGD (Algorithm 1) is -DP, and

| (1) |

where , is the (random) output of DP-SGD (Algorithm 1), and the expectation is over the randomness of . Moreover, if for some , we have for all , and in addition , minimizing the right hand side of (1) with respect to yields

| (2) |

We include a sketch of the proof techniques in Subsection 3.3 and defer the full proof to Subsection 3.4.

Remark 3.4.

Consider DP-ERM for learning generalized linear models with convex and Lipschitz losses. When the (empirical) data covariance is of rank , the span of gradients is also of rank . Thus, the average loss is restricted Lipschitz continuous with coefficients where for all . Setting in (1) yields an excess empirical risk bound of order . This recovers the previous dimension-independent result in [SSTT21].

The restricted Lipschitz continuity condition can be viewed as a generalized notion of rank. The result captured in (2) suggests that the empirical loss achieved by DP-SGD does not depend on the problem dimension if the sequence of restricted Lipschitz coefficients decays rapidly. We leverage these insights to build intuition for understanding privately fine-tuning language models in Section 4.

3.2 Bounds for Excess Population Loss

For DP-SCO, we make use of the stability of DP-SGD to bound its generalization error [BE02], following previous works [BFTT19, BFGT20, SSTT21]. The bound on the excess population loss follows from combining the bounds on the excess empirical risk and the generalization error.

Theorem 3.5 (Excess Population Loss).

Let and . Under Assumption 3.2, for all , by setting , , and , where , DP-SGD (Algorithm 1) is -DP, and

where , is the (random) output of DP-SGD (Algorithm 1), and the expectation is over the randomness of .

Moreover, if for some , we have for all , and in addition , minimizing the above bound with respect to yields

Remark 3.6.

Our result on DP-SCO also recovers the DP-SCO rank-dependent result for learning generalized linear models with convex and Lipschitz losses [SSTT21].

Remark 3.7.

When , and , the population loss matches the (non-private) informational-theoretical lower bound [AWBR09].

Remark 3.8.

Our results on DP-ERM and DP-SCO naturally generalize to (full-batch) DP-GD.

3.3 Overview of Proof Techniques

The privacy guarantees in Theorems 3.3 and 3.5 follow from Lemma 2.4. It suffices to prove the utility guarantees. We give an outline of the main proof techniques first and present full proofs afterwards. The following is a sketch of the core technique for deriving (2) in Theorem 3.3. For simplicity, we write for and for when there is no ambiguity.

By convexity, the error term of SGD is upper bounded as follows

| (3) |

where is the random index sampled at iteration .

By definition of , we know that there is a dimensional subspace such that the gradient component orthogonal to is small when is small. Naïvely, one decomposes the gradient , where and , and separately bounds the two terms and . Since lies in a dimensional subspace, one can follow existing arguments on DP-SGD to bound . Unfortunately, this argument does not give a dimension-independent bound. Although (which can be small for large ), the term is as large as with high probability due to the isotropic Gaussian noise injected in DP-SGD.

Our key idea is to partition the whole space into orthogonal subspaces, expressing the error term as the sum of individual terms, each of which corresponds to a projection to a particular subspace. Fix a , and consider the following subspaces: Let , be the subspace orthogonal to all previous subspaces such that for , and be the subspace such that the orthogonal direct sum of all subspaces is , where . Here, is the orthogonal projection matrix with rank promised by in Assumption 3.2. Let be the orthogonal projection to the subspace , and observe that and Rewriting the right hand side of (3) with this decomposition yields

On the one hand, if decays quickly, can be small for large . On the other hand, we expect to be small for small where is an orthogonal projection onto a small subspace. Thus, for each , is small either due to a small gradient (small in expectation over the random index) or small noise (small ), since noise injected in DP-SGD is isotropic. More formally, in Lemma 3.9, we show that for any projection matrix with rank , can be upper bounded by a term that depends only on (rather than ).

3.4 Proof of Theorem 3.3

Before bounding the utility of DP-SGD, we first bound in expectation.

Lemma 3.9.

Suppose Assumption 3.2 holds. Let be an orthogonal projection matrix with rank and suppose that for all and . If we set in DP-SGD, then for all , we have

Proof of Lemma 3.9.

By the assumption, we know and . Let . Note that

where is the isotropic Gaussian noise drawn in th step. For simplicity, we use to denote the noisy subgradient . Hence, we have

Taking expectation over the random sample and random Gaussian noise , we have

where we used the fact that has zero mean, , and . Further simplifying and taking expectation over all iterations, we have

| (4) |

Using that , we know . Solving the recursion (Equation (3.4)) gives

for all . This concludes the proof. ∎

Now, we are ready to bound the utility. The proof builds upon the standard mirror descent proof.

Lemma 3.10.

Let , and . Under Assumption 3.2, let be the initial iterate and be such that . For all , setting , , and , we have

where , is the output of DP-SGD, and the expectation is under the randomness of DP-SGD.

Moreover, if for each for some , and in addition , picking the best for the bound above gives

Proof of Lemma 3.10.

The above statement is true for by standard arguments in past work [BST14, SSTT21]. Now fix a . Our key idea is to split the whole space into different subspaces. We define the following set of subspaces:

-

•

.

-

•

For , let be a subspace with maximal dimension such that for all .

-

•

For , let be the subspace such that , and for all .

Recall is the orthogonal projection matrix with rank that gives rise to in Assumption 3.2. In the above, we have assumed that the base of is 2. Let be the orthogonal projection matrix that projects vectors onto the subspace . Note that since . Moreover, it’s clear that for all . This is true by construction and that . Thus,

| (5) |

for all and all .

Let be the (uniformly random) index sampled in iteration of DP-SGD. By convexity of the individual loss ,

By construction, is the orthogonal direct sum of the subspaces , and thus any vector can be rewritten as the sum . We thus split the right hand side of the above as follows

| (6) |

We use different approaches to bound and when , and we discuss them separately in the following.

Bounding : Recall that

for some Gaussian . Hence, we have

| (7) |

where we used the fact that for any (since is a projection matrix), and the last equality follows from

Taking expectation on over both sides of Equation (3.4) and making use of the fact that has mean , we have

Recalling the definition of and that has rank at most , one has

Moreover, one has

Therefore, we have

| (8) | ||||

| (9) |

where we used at the end.

Bounding : We bound the objective above for each separately. By taking expectation over the random , we have

| (10) |

where we chose and used the bound (5) and Young’s inequality at the end.

Bounding Equation (6): Combining both the terms (9) and (10) and taking expectation over all randomness, we have

Recall . Under the other assumptions, by Lemma 3.9, one can show

Using , we have

Summing up over , by the assumption that and convexity of the function, we have

| (11) |

Set the parameters , , and for some large constants . Note that this choice of parameters satisfies

where we used the fact that , , , and is large enough.

Using the parameters we pick, we have

Moreover, assuming for some , we have . Hence,

Since the above bound holds for all , we may optimize it with respect to . Recall by assumption that . Letting

yields the bound

∎

3.5 Proof of Theorem 3.5

We study the generalization error of DP-SGD and make use of its stability. The bound on the excess population loss follows from combining bounds on the excess empirical loss and the generalization error. Before stating the proof, we first recall two results in the literature.

Lemma 3.11 ([BE02, Lemma 7]).

Given a learning algorithm , a dataset formed by i.i.d. samples drawn from the underlying distribution , and we replace one random sample in with a freshly sampled to obtain a new neighboring dataset . One has

where is the output of with input .

Lemma 3.12 ([BFGT20, Theorem 3.3]).

Suppose Assumption 3.2 holds, running DP-SGD with step size on any two neighboring datasets and for steps yields the following bound

where and are the outputs of DP-SGD with datasets and , respectively.

Proof of Theorem 3.5.

Let and be the outputs of DP-SGD when applied to the datasets and , respectively. is a neighbor of with one example replaced by that is independently sampled. Combining Lemma 3.11 and Lemma 3.12 yields

Similar to the DP-ERM case, by setting , , and for some large positive constants and , we conclude that . Hence, Equation (11) shows that, for any fixed dataset and any such that , we have

We can rewrite the population loss as follows

Substituting in the values for parameters , , , and yields

for all .

Similarly, if we have for some , and in addition , it immediately follows that

This completes the proof. ∎

4 Numerical Experiments

The aim of this section is twofold. In Section 4.1, we study a synthetic example that matches our theoretical assumptions and show that DP-SGD attains dimension-independent empirical and population loss when the sequence of restricted Lipschitz coefficients decays rapidly—even when gradients span the entire ambient space. In Section 4.2, we study a stylized example of privately fine-tuning large language models. Building on the previous theory, we provide insights as to why dense fine-tuning can yield good performance.

4.1 Synthetic Example: Estimating the Generalized Geometric Median

We privately estimate the geometric median which minimizes the average Mahalanobis distance. Specifically, let for be feature vectors drawn i.i.d. from some distribution , each of which is treated as an individual record. Denote the entire dataset as . Subject to differential privacy, we perform the following optimization

| (12) |

where we adopt the shorthand . When and (without the regularization term), the problem reduces to estimating the usual geometric median (commonly known as center of mass).

For this example, individual gradients are bounded since . More generally, the restricted Lipschitz coefficients of are the eigenvalues of , since

where is chosen to be the rank orthogonal projection matrix that projects onto the subspace spanned by the bottom eigenvectors of .

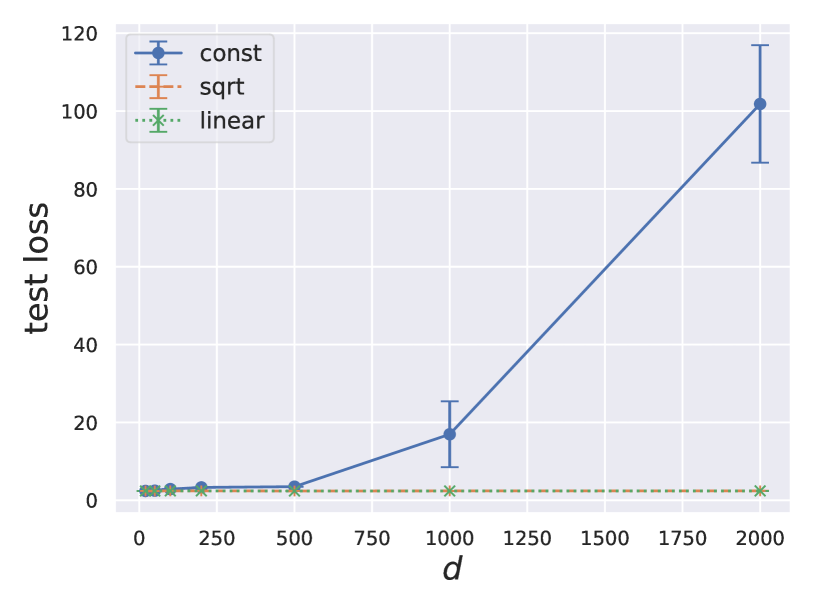

To verify our theory, we study the optimization and generalization performance of DP-SGD for minimizing (12) under Mahalanobis distances induced by different as the problem dimension grows. The optimization performance is measured by the final training error, and the generalization performance is measured by the population quantity , where denotes the random output of DP-SGD. We study the dimension scaling behavior for being one of

where maps vectors onto square matrices with inputs on the diagonal. In all cases, the span of gradients is the ambient space , since is of full rank. To ensure the distance from the initial iterate to the optimum is the same for problem instances of different dimensions, we let feature vectors take zero values in any dimension , where is the dimension of the smallest problem in our experiments. Our theoretical bounds suggest that when the sequence of restricted Lipschitz coefficients is constant (when ), the excess empirical loss grows with the problem dimension, whereas when the sequence of th-Lipschitz constants rapidly decays with (when or ), the excess empirical loss does not grow beyond a certain problem dimension. Figure 1 empirically captures this phenomenon. We include additional experimental setup details in Appendix A.

(a) empirical loss

(b) (estimated) population loss

4.2 Why Does Dense Fine-Tuning Work Well for Pretrained Language Models?

Stated informally, our bounds in Theorem 3.5 imply that DP-SGD obtains dimension-independent errors if gradients approximately reside in a subspace much smaller than the ambient space. Inspired by these results for the convex case, we now turn to study dense language model fine-tuning [LTLH21] and provide a possible explanation for their recent intriguing success — fine-tuning gigantic parameter vectors frequently results in moderate performance drops compared to non-private learning.

In the following, we present evidence that gradients obtained through fine-tuning mostly lie in a small subspace. We design subsequent experiments to work under a simplified setup. Specifically, we fine-tune DistilRoBERTa [SDCW19, LOG+19] under and for sentiment classification on the SST-2 dataset [SPW+13]. We reformulate the label prediction problem as templated text prediction [LTLH21], and fine-tune only the query and value matrices in attention layers.

We focus on fine-tuning these specific parameter matrices due to the success of LoRA for non-private learning [HSW+21] which focuses on adapting the attention layers. Unlike LoRA, we fine-tune all parameters in these matrices rather than focusing on low-rank updates. This gives a setup that is lightweight enough to run spectral analyses computationally tractably but retains enough parameters ( million) such that a problem of similar scale outside of fine-tuning results in substantial losses in utility.222For instance, an off-the-shelf ResNet image classifier has 10 to 20+ million parameters. A plethora of works report large performance drops when training these models from scratch [YZCL21b, LWAFF21, DBH+22]. For our setup, DP-SGD obtains a dev set accuracy approximately of and , privately and non-privately, respectively. These numbers are similar to previous results obtained with the same pretrained model [YNB+21, LTLH21]. We include the full experimental protocol and additional results in Appendix B.

To provide evidence for the small subspace hypothesis, we sample gradients during fine-tuning and study their principal components. Specifically, we “over-train” by privately fine-tuning for updates and collect all the non-privatized average clipped gradients along the optimization trajectory. While fine-tuning for 200 and 2k updates have similar final dev set performance under our hyperparameters, the increased number of steps allows us to collect more gradients around the converged solution. This yields a gradient matrix , where is the size of the parameter vector. We perform PCA for with the orthogonal iteration algorithm [Dem97] and visualize the set of estimated singular values in terms of both (i) the density estimate, and (ii) their relation with the rank. Figure 2 (a) shows the top 1000 singular values sorted and plotted against their rank and the least squares fit on log-transformed inputs and outputs. The plot displays few large singular values which suggests that gradients are controlled through only a few principal directions. The linear fit suggests that singular values decay rapidly (at a rate of approximately ).

To study the effects that different principal components have on fine-tuning performance, we further perform the following re-training experiment. Given the principal components, we privately re-fine-tune with gradients projected onto the top components. Note that this projection applies only to the (non-privatized) average clipped gradients and the isotropic DP noise is still applied to all dimensions. Figure 2 (b) shows that the original performance can be attained by optimizing within a subspace of only dimension , suggesting that most of the dimensions of the 7 million parameter vector encode a limited learning signal.

While these empirical results present encouraging insights for the dimension-independent performance of fine-tuning, we acknowledge that this is not a complete validation of the restricted Lipschitz continuity condition and fast decay of coefficients (even locally near the optimum). We leave a more thorough analysis with additional model classes and fine-tuning tasks to future work.

(a) singular values decay with rank

(b) retrain in fixed subspace

5 Related Work

DP-ERM and DP-SCO are arguably the most well-studied areas of differential privacy [CMS11, KST12, BST14, SCS13, WYX17, FTS17, BFTT19, MRTZ17, ZZMW17, WLK+17, FKT20, INS+19, BFGT20, STT20, LL21, AFKT21, BGN21, GTU22, GLL22]. Tight dependence on the number of model parameters and the number of samples is known for both DP-ERM [BST14] and DP-SCO [BFTT19]. In particular, for the error on general convex losses, an explicit polynomial dependence on the number of optimization variables is necessary. However, it is shown that if gradients lie in a fixed low-rank subspace , the dependence on dimension can be replaced by which can be significantly smaller [JT14, STT20]. We extend this line of work to show that under a weaker assumption (restricted Lipschitz continuity with decaying coefficients) one can obtain analogous error guarantees that are independent of , but do not require the gradients of the loss to strictly lie in any fixed low-rank subspace . As a consequence, our results provide a plausible explanation for the empirical observation that dense fine-tuning can be effective and that fine-tuning a larger model under DP can generally be more advantageous in terms of utility than fine-tuning a smaller model [LTLH21, YNB+21]. A concurrent work shows that the standard dimension dependence of DP-SGD can be replaced by a dependence on the trace of the Hessian assuming the latter quantity is uniformly bounded [MMZ22].

A complementary line of work designed variants of DP-SGD that either explicitly or implicitly control the subspace in which gradients are allowed to reside [AGM+21, LVS+21, ADF+21, KDRT21, YZCL21b]. They demonstrated improved dependence of the error on the dimension if the true gradients lie in a “near” low-rank subspace. Our results are incomparable to this line of work because of two reasons: (i) Our algorithm is vanilla DP-SGD and does not track the gradient subspace either explicitly or implicitly, and hence does not change the optimization landscape. Our improved dependence on dimensions is an artifact of the analysis. (ii) Our analytical results do not need the existence of any public data to obtain tighter dependence on dimensions. All prior works mentioned above need the existence of public data to demonstrate any improvement.

On the empirical front, past works have observed that for image classification tasks, gradients of ConvNets converge to a small subspace spanned by the top directions of the Hessian. In addition, this span remains stable for long periods of time during training [GARD18]. While insightful, this line of work does not look at language model fine-tuning. Another line of work measures for language model fine-tuning the intrinsic dimension—the minimum dimension such that optimizing in a randomly sampled subspace of such dimension approximately recovers the original performance [LFLY18, AZG20]. We note that a small intrinsic dimension likely suggests that gradients are approximately low rank. Yet, this statement should not be interpreted as a strict implication, since the notion of intrinsic dimension is at best vaguely defined (e.g., there’s no explicit failure probability threshold over the randomly sampled subspace in the original statement), and the definition involves not a fixed subspace but rather a randomly sampled one.

6 Conclusion

We made an attempt to reconcile two seemingly conflicting results: (i) in private convex optimization, errors are predicted to scale proportionally with the dimension of the learning problem; while (ii) in empirical works on large-scale private fine-tuning through DP-SGD, privacy-utility trade-offs become better with increasing model size. We introduced the notion of restricted Lipschitz continuity, with which we gave refined analyses of DP-SGD for DP-ERM and DP-SCO. When the magnitudes of gradients projected onto diminishing subspaces decay rapidly, our analysis showed that excess empirical and population losses of DP-SGD are independent of the model dimension. Through preliminary experiments, we gave empirical evidence that gradients of large pretrained language models obtained through fine-tuning mostly lie in the subspace spanned by a few principal components. Our theoretical and empirical results together give a possible explanation for recent successes in large-scale differentially private fine-tuning.

Given our improved upper bounds on the excess empirical and population risks for differentially private convex learning, it is instructive to ask if such bounds are tight in the mini-max sense. We leave answering this inquiry to future work. In addition, while we have presented encouraging empirical evidence that fine-tuning gradients mostly lie in a small subspace, more work is required to study the robustness of this phenomenon with respect to the model class and fine-tuning problem. Overall, we hope that our work leads to more research on understanding conditions under which DP learning does not degrade with increasing problem size, and more generally, how theory can inform and explain the practical successes of differentially private deep learning.

Acknowledgments

We thank Guodong Zhang for helpful discussions and comments on an early draft. XL is supported by a Stanford Graduate Fellowship. Lee and Liu are partially supported by NSF awards CCF-1749609, DMS-1839116, DMS-2023166, CCF-2105772, a Microsoft Research Faculty Fellowship, a Sloan Research Fellowship, and a Packard Fellowship.

References

- [ACG+16] Martin Abadi, Andy Chu, Ian Goodfellow, H Brendan McMahan, Ilya Mironov, Kunal Talwar, and Li Zhang. Deep learning with differential privacy. In Proceedings of the 2016 ACM SIGSAC conference on computer and communications security, pages 308–318, 2016.

- [ADF+21] Hilal Asi, John Duchi, Alireza Fallah, Omid Javidbakht, and Kunal Talwar. Private adaptive gradient methods for convex optimization. In International Conference on Machine Learning, pages 383–392. PMLR, 2021.

- [AFKT21] Hilal Asi, Vitaly Feldman, Tomer Koren, and Kunal Talwar. Private stochastic convex optimization: Optimal rates in geometry. arXiv preprint arXiv:2103.01516, 2021.

- [AGM+21] Ehsan Amid, Arun Ganesh, Rajiv Mathews, Swaroop Ramaswamy, Shuang Song, Thomas Steinke, Vinith M. Suriyakumar, Om Thakkar, and Abhradeep Thakurta. Public data-assisted mirror descent for private model training. CoRR, abs/2112.00193, 2021.

- [AWBR09] Alekh Agarwal, Martin J Wainwright, Peter Bartlett, and Pradeep Ravikumar. Information-theoretic lower bounds on the oracle complexity of convex optimization. Advances in Neural Information Processing Systems, 22, 2009.

- [AZG20] Armen Aghajanyan, Luke Zettlemoyer, and Sonal Gupta. Intrinsic dimensionality explains the effectiveness of language model fine-tuning. arXiv preprint arXiv:2012.13255, 2020.

- [BBG18] Borja Balle, Gilles Barthe, and Marco Gaboardi. Privacy amplification by subsampling: Tight analyses via couplings and divergences. Advances in Neural Information Processing Systems, 31, 2018.

- [BE02] Olivier Bousquet and André Elisseeff. Stability and generalization. The Journal of Machine Learning Research, 2:499–526, 2002.

- [BFGT20] Raef Bassily, Vitaly Feldman, Cristóbal Guzmán, and Kunal Talwar. Stability of stochastic gradient descent on nonsmooth convex losses. Advances in Neural Information Processing Systems, 33:4381–4391, 2020.

- [BFTT19] Raef Bassily, Vitaly Feldman, Kunal Talwar, and Abhradeep Thakurta. Private stochastic convex optimization with optimal rates. In Advances in Neural Information Processing Systems, pages 11279–11288, 2019.

- [BGN21] Raef Bassily, Cristóbal Guzmán, and Anupama Nandi. Non-euclidean differentially private stochastic convex optimization. In Conference on Learning Theory, pages 474–499. PMLR, 2021.

- [BST14] Raef Bassily, Adam Smith, and Abhradeep Thakurta. Private empirical risk minimization: Efficient algorithms and tight error bounds. In 2014 IEEE 55th Annual Symposium on Foundations of Computer Science, pages 464–473. IEEE, 2014.

- [CMS11] Kamalika Chaudhuri, Claire Monteleoni, and Anand D Sarwate. Differentially private empirical risk minimization. Journal of Machine Learning Research, 12(3), 2011.

- [DBH+22] Soham De, Leonard Berrada, Jamie Hayes, Samuel L Smith, and Borja Balle. Unlocking high-accuracy differentially private image classification through scale. arXiv preprint arXiv:2204.13650, 2022.

- [DBK+20] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020.

- [DCLT18] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805, 2018.

- [Dem97] James W Demmel. Applied numerical linear algebra. SIAM, 1997.

- [DR+14] Cynthia Dwork, Aaron Roth, et al. The algorithmic foundations of differential privacy. Found. Trends Theor. Comput. Sci., 9(3-4):211–407, 2014.

- [FKT20] Vitaly Feldman, Tomer Koren, and Kunal Talwar. Private stochastic convex optimization: optimal rates in linear time. In Proceedings of the 52nd Annual ACM SIGACT Symposium on Theory of Computing, pages 439–449, 2020.

- [FTS17] Kazuto Fukuchi, Quang Khai Tran, and Jun Sakuma. Differentially private empirical risk minimization with input perturbation. In International Conference on Discovery Science, pages 82–90. Springer, 2017.

- [GARD18] Guy Gur-Ari, Daniel A Roberts, and Ethan Dyer. Gradient descent happens in a tiny subspace. arXiv preprint arXiv:1812.04754, 2018.

- [GKX19] Behrooz Ghorbani, Shankar Krishnan, and Ying Xiao. An investigation into neural net optimization via hessian eigenvalue density. In International Conference on Machine Learning, pages 2232–2241. PMLR, 2019.

- [GLL22] Sivakanth Gopi, Yin Tat Lee, and Daogao Liu. Private convex optimization via exponential mechanism. arXiv preprint arXiv:2203.00263, 2022.

- [GTU22] Arun Ganesh, Abhradeep Thakurta, and Jalaj Upadhyay. Langevin diffusion: An almost universal algorithm for private euclidean (convex) optimization. arXiv preprint arXiv:2204.01585, 2022.

- [GWG19] Diego Granziol, Xingchen Wan, and Timur Garipov. Deep curvature suite. arXiv preprint arXiv:1912.09656, 2019.

- [HSW+21] Edward J Hu, Yelong Shen, Phillip Wallis, Zeyuan Allen-Zhu, Yuanzhi Li, Shean Wang, Lu Wang, and Weizhu Chen. Lora: Low-rank adaptation of large language models. arXiv preprint arXiv:2106.09685, 2021.

- [HZRS16] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [INS+19] Roger Iyengar, Joseph P Near, Dawn Song, Om Thakkar, Abhradeep Thakurta, and Lun Wang. Towards practical differentially private convex optimization. In 2019 IEEE Symposium on Security and Privacy (SP), 2019.

- [JT14] Prateek Jain and Abhradeep Guha Thakurta. (near) dimension independent risk bounds for differentially private learning. In International Conference on Machine Learning, pages 476–484, 2014.

- [Kam20] Gautam Kamath. Lecture 14 — Private ML and Stats: Modern ML. http://www.gautamkamath.com/CS860notes/lec14.pdf, 2020.

-

[KDRT21]

Peter Kairouz, Monica Ribero Diaz, Keith Rush, and Abhradeep Thakurta.

(nearly) dimension independent private erm with adagrad rates

via publicly estimated subspaces. In COLT, 2021. - [KLL21] Janardhan Kulkarni, Yin Tat Lee, and Daogao Liu. Private non-smooth empirical risk minimization and stochastic convex optimization in subquadratic steps. arXiv preprint arXiv:2103.15352, 2021.

- [KMH+20] Jared Kaplan, Sam McCandlish, Tom Henighan, Tom B Brown, Benjamin Chess, Rewon Child, Scott Gray, Alec Radford, Jeffrey Wu, and Dario Amodei. Scaling laws for neural language models. arXiv preprint arXiv:2001.08361, 2020.

- [KST12] Daniel Kifer, Adam Smith, and Abhradeep Thakurta. Private convex empirical risk minimization and high-dimensional regression. In Conference on Learning Theory, pages 25–1, 2012.

- [LFLY18] Chunyuan Li, Heerad Farkhoor, Rosanne Liu, and Jason Yosinski. Measuring the intrinsic dimension of objective landscapes. arXiv preprint arXiv:1804.08838, 2018.

- [LL21] Daogao Liu and Zhou Lu. Curse of dimensionality in unconstrained private convex erm. arXiv preprint arXiv:2105.13637, 2021.

- [LOG+19] Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, and Veselin Stoyanov. Roberta: A robustly optimized bert pretraining approach. arXiv preprint arXiv:1907.11692, 2019.

- [LTLH21] Xuechen Li, Florian Tramer, Percy Liang, and Tatsunori Hashimoto. Large language models can be strong differentially private learners. arXiv preprint arXiv:2110.05679, 2021.

- [LVS+21] Terrance Liu, Giuseppe Vietri, Thomas Steinke, Jonathan Ullman, and Zhiwei Steven Wu. Leveraging public data for practical private query release. arXiv preprint arXiv:2102.08598, 2021.

- [LWAFF21] Zelun Luo, Daniel J Wu, Ehsan Adeli, and Li Fei-Fei. Scalable differential privacy with sparse network finetuning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 5059–5068, 2021.

- [MMZ22] Yi-An Ma, Teodor Vanislavov Marinov, and Tong Zhang. Dimension independent generalization of dp-sgd for overparameterized smooth convex optimization. arXiv preprint arXiv:2206.01836, 2022.

- [MRTZ17] H Brendan McMahan, Daniel Ramage, Kunal Talwar, and Li Zhang. Learning differentially private recurrent language models. arXiv preprint arXiv:1710.06963, 2017.

- [MTKC22] Harsh Mehta, Abhradeep Thakurta, Alexey Kurakin, and Ashok Cutkosky. Large scale transfer learning for differentially private image classification. arXiv preprint arXiv:2205.02973, 2022.

- [NDR17] Jekaterina Novikova, Ondřej Dušek, and Verena Rieser. The e2e dataset: New challenges for end-to-end generation. arXiv preprint arXiv:1706.09254, 2017.

- [RNSS18] Alec Radford, Karthik Narasimhan, Tim Salimans, and Ilya Sutskever. Improving language understanding by generative pre-training. 2018.

- [RWC+19] Alec Radford, Jeffrey Wu, Rewon Child, David Luan, Dario Amodei, and Ilya Sutskever. Language models are unsupervised multitask learners. OpenAI blog, 1(8):9, 2019.

- [SCS13] Shuang Song, Kamalika Chaudhuri, and Anand D Sarwate. Stochastic gradient descent with differentially private updates. In 2013 IEEE Global Conference on Signal and Information Processing, pages 245–248. IEEE, 2013.

- [SDCW19] Victor Sanh, Lysandre Debut, Julien Chaumond, and Thomas Wolf. Distilbert, a distilled version of bert: smaller, faster, cheaper and lighter. arXiv preprint arXiv:1910.01108, 2019.

- [SPW+13] Richard Socher, Alex Perelygin, Jean Wu, Jason Chuang, Christopher D Manning, Andrew Y Ng, and Christopher Potts. Recursive deep models for semantic compositionality over a sentiment treebank. In Proceedings of the 2013 conference on empirical methods in natural language processing, pages 1631–1642, 2013.

- [SSTT21] Shuang Song, Thomas Steinke, Om Thakkar, and Abhradeep Thakurta. Evading the curse of dimensionality in unconstrained private glms. In International Conference on Artificial Intelligence and Statistics, pages 2638–2646. PMLR, 2021.

- [STT20] Shuang Song, Om Thakkar, and Abhradeep Thakurta. Characterizing private clipped gradient descent on convex generalized linear problems. arXiv preprint arXiv:2006.06783, 2020.

- [WLK+17] Xi Wu, Fengan Li, Arun Kumar, Kamalika Chaudhuri, Somesh Jha, and Jeffrey F. Naughton. Bolt-on differential privacy for scalable stochastic gradient descent-based analytics. In Semih Salihoglu, Wenchao Zhou, Rada Chirkova, Jun Yang, and Dan Suciu, editors, Proceedings of the 2017 ACM International Conference on Management of Data, SIGMOD, 2017.

- [WYX17] Di Wang, Minwei Ye, and Jinhui Xu. Differentially private empirical risk minimization revisited: Faster and more general. In I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett, editors, Advances in Neural Information Processing Systems, volume 30. Curran Associates, Inc., 2017.

- [YNB+21] Da Yu, Saurabh Naik, Arturs Backurs, Sivakanth Gopi, Huseyin A Inan, Gautam Kamath, Janardhan Kulkarni, Yin Tat Lee, Andre Manoel, Lukas Wutschitz, et al. Differentially private fine-tuning of language models. arXiv preprint arXiv:2110.06500, 2021.

- [YZCL21a] Da Yu, Huishuai Zhang, Wei Chen, and Tie-Yan Liu. Do not let privacy overbill utility: Gradient embedding perturbation for private learning. arXiv preprint arXiv:2102.12677, 2021.

- [YZCL21b] Da Yu, Huishuai Zhang, Wei Chen, and Tie-Yan Liu. Do not let privacy overbill utility: Gradient embedding perturbation for private learning. In 9th International Conference on Learning Representations, ICLR 2021, Virtual Event, Austria, May 3-7, 2021. OpenReview.net, 2021.

- [ZZMW17] Jiaqi Zhang, Kai Zheng, Wenlong Mou, and Liwei Wang. Efficient private erm for smooth objectives. In IJCAI, 2017.

Appendix

Appendix A Protocol for Synthetic Example Experiments in Section 4.1

We detail the construction of the synthetic example in Section 4.1. The training and test sets of this example both have instances. Each instance is sampled from a distribution where the first coordinates are all multi-variate normal distributions with mean and standard deviation both being . All remaining coordinates are constantly . This ensures the optimal non-private training losses for problems of different dimensions are the same.

Appendix B Protocol and Additional Fine-Tuning Experiments for Section 4.2

B.1 Experimental Protocol

For experiments in Section 4.2, we fine-tuned the DistilRoBERTa model with -DP on the SST-2 training set with examples and measured performance on the companion dev set. We used the exact set of hyperparameters presented in [LTLH21] for this task. We repeated our re-training experiments over five independent random seeds. Fine-tuning for 3 epochs on this task takes 10 minutes on an A100-powered machine with sufficient RAM and CPU cores.

Our spectral analysis relies on running the orthogonal iteration algorithm on the set of collected gradients along the private fine-tuning trajectory [Dem97].333By gradients, we always mean the average of clipped per-example gradients — before adding noise — in this section. Unlike other numerical algorithms for estimating eigenvalues of a matrix, orthogonal iteration provably produces the set of eigenvalues that are largest in absolute value (and corresponding eigenvectors, if the underlying matrix is normal) in the limit.444The orthogonal iteration algorithm is also known as simultaneous iteration or subspace iteration. Recall that we needed the top eigenvectors for projection in our re-training experiment.555 Note that one commonly used algorithm in the neural net spectral analysis literature — Lanczos iteration [GKX19] — does not guarantee that the top eigenvalues are produced, even though its spectral estimates are frequently deemed accurate [GWG19]. By default, we run orthogonal iteration for ten iterations. We show in the following that our results are insensitivity to the number of iterations used in the orthogonal iteration algorithm. Each orthogonal iteration takes minutes on an A100-powered machine with gradient samples and principal components for the DistilRoBERTa experiments.

B.2 Robustness of Results

Robustness to the number of orthogonal iterations.

The orthogonal iteration algorithm is a generalization of the power method that simultaneously produces estimates of multiple eigenvalues. Its convergence is known to be sensitivity to the gap between successive eigenvalues. The algorithm converges slowly if consecutive eigenvalues (with the largest absolute values) are close in absolute value. To confirm that our results aren’t sensitivity to the choice of the number of iterations, we visualize the top 500 eigenvalues for the orthogonal iteration algorithm is run for different number of updates. Figure 3 shows that the linear fit to the top eigenvalues remains stable across different number of orthogonal iterations . Notably, produces similar results as . These results were obtained with gradients.

(a)

(b)

(c)

Robustness to the number of gradient samples.

We further ran experiments with different numbers of gradient samples collected along the fine-tuning trajectory, and plot the top eigenvalues. Figure 4 shows that while the slope and intercept of the fitted line in log-log space changes, the change is moderate. Notably, the decaying trend of the top eigenvalues remains stable.

(a)

(b)

Robustness to the gradient sampling strategy.

We observe that gradients at the beginning of fine-tuning tend to be larger in magnitude than gradients collected later on along the optimization trajectory. To eliminate the potential confounder that the top principal components are solely formed by the initial few gradients evaluated during fine-tuning, we re-ran the spectral analysis experiment without the initial gradients. Specifically, we performed PCA for the gradients evaluated from step 300 to step 1300 during private fine-tuning, and compared the distribution of top eigenvalues returned from this setup to when we used the first 1000 gradients. Note the dev set accuracy converged to by step 200. Figure 5 shows that while the slope and intercept of linear fits are slightly different in the new setup compared to the old setup (when all gradients along the fine-tuning trajectory were used for PCA), that the eigenvalues follow a rapidly decaying trend remains true under both setups.

(a) Gradients at iterations to

(b) Gradients at iterations to

Robustness to model size.

In previous experiments, we empirically verified that gradients for fine-tuning DistilRoBERTa are near low rank. Here, we show that similar observations also hold for Roberta-base and Roberta-large when fine-tuning only the attention layers. The former setup has approximately million trainable parameters, while the latter has approximately million. Figures 6 and 7 illustrate these results.

(a) singular values decay with rank

(b) retrain in fixed subspace

(a) singular values decay with rank

(b) retrain in fixed subspace

Non-robustness to fine-tuning strategy.

Recall our fine-tuning experiments for classification was based on the template-completion formulation detailed in [LTLH21]. As opposed to framing the task as integer label prediction, this formulation requires the model to predict one of candidate tokens to fill in a templated prompt for a -way classification problem. While we have also performed the experiment where we re-train in the subspace of top principal components under the usual fine-tuning setup (stack a randomly initialized prediction head on top of the embedding of the [CLS] token), we found it difficult to recover the original fine-tuning performance when gradients are projected onto the top eigen-subspace with dimensions. Retraining performance exhibited high variance and the final dev accuracy was bi-modal over random seeds with near guess accuracy () and original accuracy () being the two modes. We suspect this to be caused by the linear prediction head being randomly initialized.

B.3 Additional Fine-Tuning Experiments with DistilGPT-2 for Generation Tasks

Experiments in Section 4.2 demonstrated that gradients from fine-tuning for classification are mostly controlled by a few principal components. In this section, we show that similar observations hold for fine-tuning on a text generation task. We follow the setup and hyperparameters in [LTLH21] for privately fine-tuning DistilGPT-2 on the E2E dataset [NDR17] under -DP. We fine-tune all weight matrices in attention layers that produce the queries, values, and keys. This amounts to fine-tuning approximately million parameters of a model with a total parameter count of more than million. We again collected gradients evaluated during private fine-tuning, performed PCA, and conducted the eigenspectrum analysis. Figure 8 shows that the top eigenspectrum decays rapidly with a rate similar to what is observed in fine-tuning for the classification problem we studied.