What Appears Appealing May Not be Significant! - A Clinical Perspective of Diffusion Models

Abstract

Various trending image generative techniques, such as diffusion models, have enabled visually appealing outcomes with just text-based descriptions. Unlike general images, where assessing the quality and alignment with text descriptions is trivial, establishing such a relation in a clinical setting proves challenging. This work investigates various strategies to evaluate the clinical significance of synthetic polyp images of different pathologies. We further explore if a relation could be established between qualitative results and their clinical relevance.

1 Introduction

In recent years, creating a pictorial view of what we imagine has become possible with the advent of diffusion models. This capability is coupled with text-based control, allowing us to type in a text prompt that describes the desired image. These models can be fine-tuned for downstream tasks, extending their use to different applications. In most such applications, the visual outcomes at each fine-tuning iteration can be used to judge whether the model is improving or overfitting. However, does this concept extend to medical images? Does relying solely on appealing visuals provide enough evidence for the clinical significance of generated medical images? These are crucial questions that need attention for critical medical analysis tasks.

Accepted at WiCV (CVPR 2024) in the poster category.

In this work, we investigate the feasibility of synthetic medical data in terms of both visual quality and clinical relevance to provide insights into the above questions. This study aims to generate colonoscopy images featuring polyps, which are precursors to colorectal cancer, the third most common malignancy. These polyps can be adenomatous (AD), meaning they have malignant potential, or non-adenomatous (Non-AD), meaning they are benign. Additionally, we emphasize high-quality image generation. The synthetic images are obtained using a stable diffusion model [7] and are further examined for pathological relevance by performing a binary classification (AD/Non-AD). The existing literature lacks such pathology-based studies and mainly focuses on polyp generation using binary masks [4, 6]. Our contributions are summarized below:

- We investigate the correlation between diffusion models’ qualitative and quantitative results to determine their alignment or disparity in clinical settings.
- We explore the strategies that can be adopted to conclude the clinical relevance of synthetically generated images.

2 Methodology

| Training images | Adenoma Precision | Adenoma Recall | Adenoma F1-score | Non-Adenoma Precision | Non-Adenoma Recall | Non-Adenoma F1-score | Balanced Accuracy |
|---|---|---|---|---|---|---|---|
| Real | 0.7901 | 0.8312 | 0.8101 | 0.5938 | 0.5278 | 0.5588 | 0.6795 |
| Real+Synthetic | 0.7654 | 0.8052 | 0.7848 | 0.5313 | 0.4722 | 0.5000 | 0.6387 |

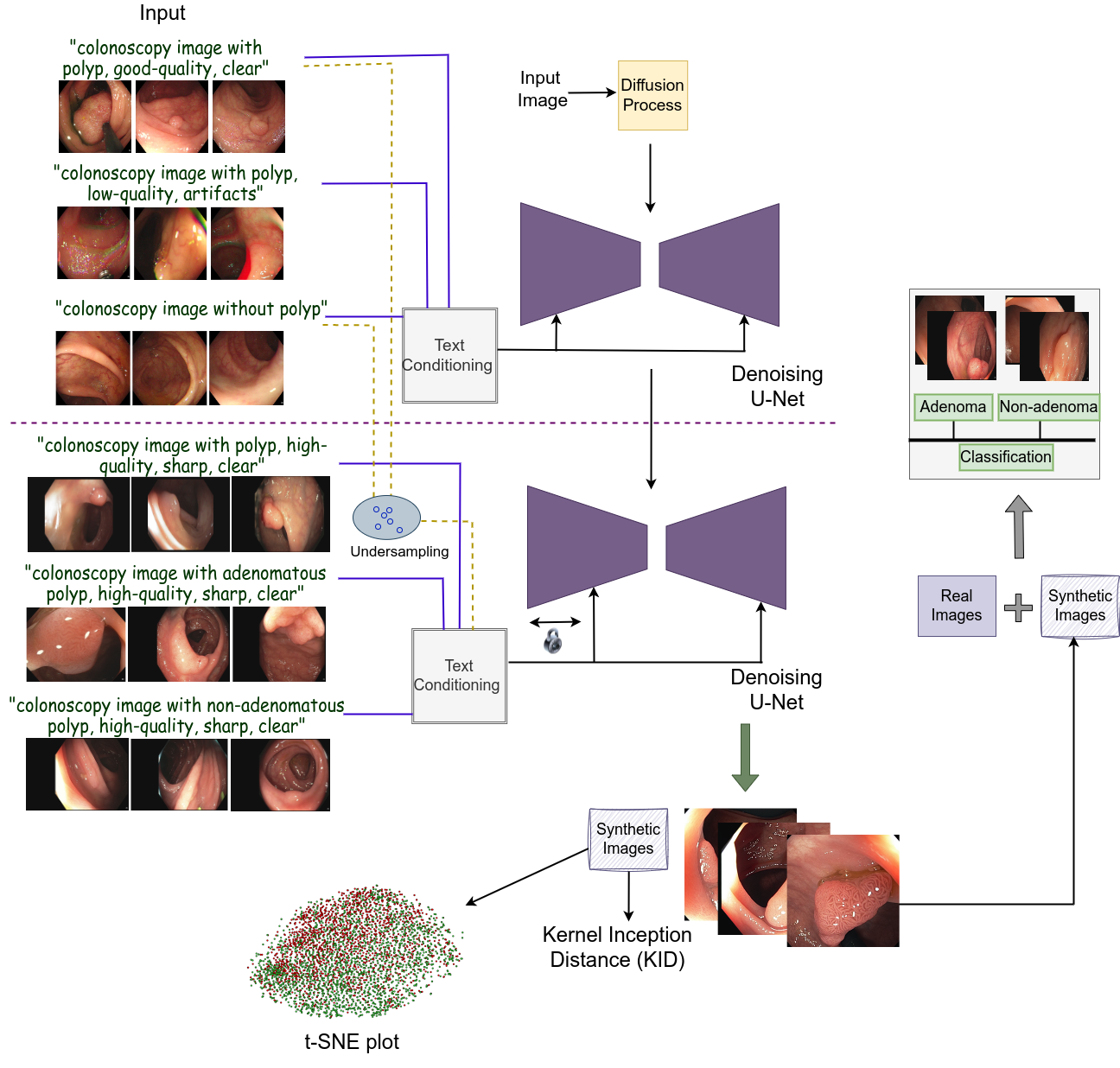

The overview of the proposed framework is shown in Fig. 1. Our approach adopts a Stable Diffusion-based model with a denoising U-Net module, which undergoes a two-step training process. Initially, the model learns from a large number of polyp and non-polyp colonoscopy images with additional quality-specific text prompts. This step allows it to learn polyp-focused patterns that assist in further training on a small-scale polyp classification dataset. Subsequently, we freeze some layers of the U-Net encoder and train the model using high-quality images with pathology (AD/Non-AD) labels. To enhance the model’s performance, we also include a few samples from the previous dataset and some high-quality images for which pathological annotations are unavailable. The generated AD and Non-AD images are then evaluated using three strategies to assess their clinical relevance: plotting t-SNE embeddings, computing the Kernel Inception Distance (KID), and augmenting the real images with generated images for binary classification. Additionally, our approach incorporates an iteration-wise analysis to ascertain whether a relation between qualitative outcomes and their clinical viability can be established.
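To make the KID strategy concrete, the following is a minimal sketch of how such a score is commonly computed: an unbiased estimate of the squared maximum mean discrepancy with a cubic polynomial kernel, averaged over random subsets to give a mean and a standard deviation. It is not the implementation used in this work. The function names, subset sizes, and the toy Gaussian vectors (which stand in for Inception features of real and synthetic images) are all assumptions made for illustration.

```python
import random
from statistics import mean, stdev


def poly_kernel(x, y):
    """Cubic polynomial kernel k(x, y) = (x.y / d + 1)^3, the usual choice for KID."""
    d = len(x)
    return (sum(a * b for a, b in zip(x, y)) / d + 1.0) ** 3


def mmd2_unbiased(xs, ys):
    """Unbiased estimate of the squared MMD between two sets of feature vectors."""
    m, n = len(xs), len(ys)
    # Within-set terms exclude the diagonal (i == j) to keep the estimate unbiased.
    k_xx = sum(poly_kernel(xs[i], xs[j]) for i in range(m) for j in range(m) if i != j)
    k_yy = sum(poly_kernel(ys[i], ys[j]) for i in range(n) for j in range(n) if i != j)
    k_xy = sum(poly_kernel(x, y) for x in xs for y in ys)
    return k_xx / (m * (m - 1)) + k_yy / (n * (n - 1)) - 2.0 * k_xy / (m * n)


def kid(real_feats, fake_feats, n_subsets=10, subset_size=50, seed=0):
    """Return (mean, std) of the MMD^2 estimate over random subsets (n_subsets >= 2)."""
    rng = random.Random(seed)
    size = min(subset_size, len(real_feats), len(fake_feats))
    scores = [
        mmd2_unbiased(rng.sample(real_feats, size), rng.sample(fake_feats, size))
        for _ in range(n_subsets)
    ]
    return mean(scores), stdev(scores)


# Toy stand-ins for image features (hypothetical; real use would extract
# features from a pretrained Inception network).
_rng = random.Random(1)


def toy_features(n, d, shift):
    return [[_rng.gauss(shift, 1.0) for _ in range(d)] for _ in range(n)]


real = toy_features(60, 8, 0.0)
close = toy_features(60, 8, 0.0)   # same distribution as `real`
far = toy_features(60, 8, 1.5)     # shifted distribution

kid_close = kid(real, close)
kid_far = kid(real, far)
print("KID (same distribution):   ", kid_close)
print("KID (shifted distribution):", kid_far)
```

A lower score means the two feature sets are closer. In the setting described above, the same function could be applied to same-class pairs (for example, synthetic AD against real AD) as well as cross-class pairs (synthetic AD against real Non-AD).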

3 Experiments

The experiments are conducted using three publicly available datasets, namely, SUN Database [5] ( non-polyp and polyp frames), CVC-ClinicHDSegment [10, 2, 1] ( images), and CVC-ClinicHDClassif [3, 8] (train set: adenoma, non-adenoma, test set: adenoma, non-adenoma). We used the official validation set of CVC-ClinicHDClassif for testing because annotations are unavailable for the official test set. The diffusion model is initially trained on the SUN Database and then fine-tuned using the other two datasets. The pathological and clinical relevance evaluation is performed using CVC-ClinicHDClassif. The implementation uses the PyTorch framework, and execution is performed on NVIDIA A100 and NVIDIA Titan-Xp GPUs.

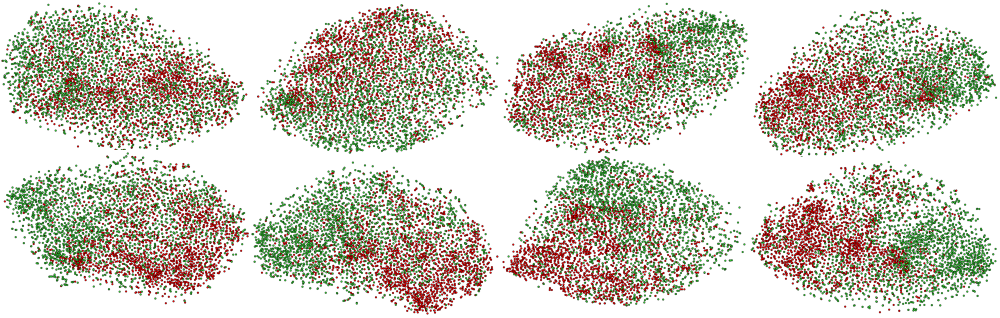

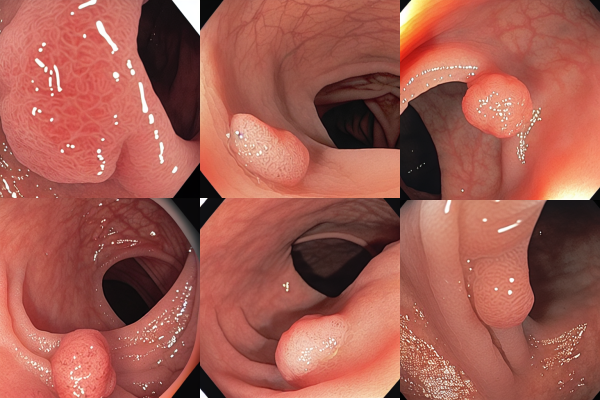

First, we conducted an iteration-wise analysis using t-SNE plots and the KID metric, and simultaneously tried to relate them to the qualitative outcomes. Fig. 2, Fig. 3, and Table 2 show that, despite visually stunning outcomes in the initial iterations, the corresponding t-SNE plots and KID values indicate that the synthetic images are clinically irrelevant. This contrasts with general images, where qualitative results often correlate with quantitative outcomes. Moreover, it is difficult to determine the pathology of such anomalies visually without clinical expertise. Furthermore, the iteration with the best t-SNE plots and KID values, i.e., iteration 8k, could not outperform training on real images alone when its synthetic images were used for augmentation (see Table 1). The reason for this underperformance can be inferred from Table 2: iteration 8k was selected by comparing synthetic AD/Non-AD images with their real counterparts. However, it is also important to compare synthetic AD/Non-AD images with real Non-AD/AD images, respectively. This cross-class comparison indicates how distinct one class is from the other, and it is unfavorable at iteration 8k.
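As a quick consistency check on Table 1, the sketch below recomputes the F1-scores and balanced accuracy from the reported precision and recall values. It assumes the standard definitions (F1 as the harmonic mean of precision and recall, balanced accuracy as the mean of the per-class recalls); the paper does not state its formulas, but the reported numbers agree with these definitions to about three decimals. The dictionary layout and function names are illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def balanced_accuracy(recalls):
    """Mean of per-class recall values."""
    return sum(recalls) / len(recalls)


# (precision, recall) per class, copied from Table 1.
table1 = {
    "Real": {"AD": (0.7901, 0.8312), "Non-AD": (0.5938, 0.5278)},
    "Real+Synthetic": {"AD": (0.7654, 0.8052), "Non-AD": (0.5313, 0.4722)},
}

results = {}
for setting, classes in table1.items():
    f1 = {name: f1_score(p, r) for name, (p, r) in classes.items()}
    bal_acc = balanced_accuracy([r for _, r in classes.values()])
    results[setting] = {"f1": f1, "balanced_accuracy": bal_acc}
    print(setting, {k: round(v, 4) for k, v in f1.items()}, round(bal_acc, 4))
```

Because the inputs are already rounded to four decimals, the recomputed values can differ from the table in the last digit. The check also shows that the drop in balanced accuracy with synthetic augmentation comes from lower recall in both classes.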

| Iteration | | | | |
|---|---|---|---|---|
| 1k | 0.073 (0.002) | 0.111 (0.003) | 0.094 (0.003) | 0.061 (0.001) |
| 2k | 0.079 (0.002) | 0.118 (0.004) | 0.100 (0.003) | 0.067 (0.002) |
| 3k | 0.077 (0.002) | 0.114 (0.004) | 0.087 (0.003) | 0.061 (0.001) |
| 4k | 0.069 (0.001) | 0.106 (0.004) | 0.085 (0.003) | 0.057 (0.002) |
| 5k | 0.074 (0.002) | 0.114 (0.004) | 0.083 (0.003) | 0.056 (0.002) |
| 6k | 0.069 (0.002) | 0.104 (0.003) | 0.087 (0.003) | 0.064 (0.002) |
| 7k | 0.076 (0.002) | 0.115 (0.003) | 0.091 (0.003) | 0.064 (0.002) |
| 8k | 0.069 (0.002) | 0.105 (0.004) | 0.081 (0.003) | 0.061 (0.002) |

4 Conclusion

In this work, we proposed a diffusion-based approach to generate polyp images with different pathologies and investigated different strategies to assess their clinical relevance. We demonstrated that, unlike general images, it is difficult to establish a direct relation between qualitative outcomes and their clinical significance without some additional investigations. Our study provides pathways for future research using generative techniques with clinical images.

References

[1] Jorge Bernal, Javier Sánchez, and Fernando Vilariño. Towards automatic polyp detection with a polyp appearance model. Pattern Recognition, 45(9):3166–3182, 2012.

[2] Jorge Bernal, F Javier Sánchez, Gloria Fernández-Esparrach, Debora Gil, Cristina Rodríguez, and Fernando Vilariño. WM-DOVA maps for accurate polyp highlighting in colonoscopy: Validation vs. saliency maps from physicians. Computerized Medical Imaging and Graphics, 43:99–111, 2015.

[3] Jorge Bernal, Aymeric Histace, Marc Masana, Quentin Angermann, Cristina Sánchez-Montes, Cristina Rodriguez de Miguel, Maroua Hammami, Ana García-Rodríguez, Henry Córdova, Olivier Romain, et al. GTCreator: a flexible annotation tool for image-based datasets. International Journal of Computer Assisted Radiology and Surgery, 14(2):191–201, 2019.

[4] Roman Macháček, Leila Mozaffari, Zahra Sepasdar, Sravanthi Parasa, Pål Halvorsen, Michael A Riegler, and Vajira Thambawita. Mask-conditioned latent diffusion for generating gastrointestinal polyp images. In Proceedings of the 4th ACM Workshop on Intelligent Cross-Data Analysis and Retrieval, pages 1–9, 2023.

[5] Masashi Misawa, Shin-ei Kudo, Yuichi Mori, Kinichi Hotta, Kazuo Ohtsuka, Takahisa Matsuda, Shoichi Saito, Toyoki Kudo, Toshiyuki Baba, Fumio Ishida, et al. Development of a computer-aided detection system for colonoscopy and a publicly accessible large colonoscopy video database (with video). Gastrointestinal Endoscopy, 93(4):960–967, 2021.

[6] Alexander K Pishva, Vajira Thambawita, Jim Torresen, and Steven A Hicks. RePolyp: A framework for generating realistic colon polyps with corresponding segmentation masks using diffusion models. In 2023 IEEE 36th International Symposium on Computer-Based Medical Systems (CBMS), pages 47–52. IEEE, 2023.

[7] Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 10684–10695, 2022.

[8] Cristina Sánchez-Montes, Francisco Javier Sánchez, Jorge Bernal, Henry Córdova, María López-Cerón, Miriam Cuatrecasas, Cristina Rodríguez De Miguel, Ana García-Rodríguez, Rodrigo Garcés-Durán, María Pellisé, et al. Computer-aided prediction of polyp histology on white light colonoscopy using surface pattern analysis. Endoscopy, 51(03):261–265, 2019.

[9] Mingxing Tan and Quoc Le. EfficientNet: Rethinking model scaling for convolutional neural networks. In International Conference on Machine Learning, pages 6105–6114. PMLR, 2019.

[10] David Vázquez, Jorge Bernal, F Javier Sánchez, Gloria Fernández-Esparrach, Antonio M López, Adriana Romero, Michal Drozdzal, and Aaron Courville. A benchmark for endoluminal scene segmentation of colonoscopy images. Journal of Healthcare Engineering, 2017, 2017.