Weights Augmentation: it has never ever ever ever let her model down

Abstract

Weights play an essential role in deep learning models. Rather than designing network structure, this article proposes the concept of weight augmentation, which focuses on exploring the weights themselves. The core of the Weight Augmentation Strategy (WAS) is to train networks with randomly transformed weight coefficients, named Shadow Weight (SW), which are used to calculate the loss function and thereby affect parameter updates. Stochastic gradient descent, however, is applied to Plain Weight (PW), the original weight of the network before the random transformation. During training, numerous SW collectively form a high-dimensional space, and PW is learned from the distribution of SW rather than directly from the data. The accuracy-oriented mode (AOM) relies on PW, which makes the network robust and accurate. The desire-oriented mode (DOM) uses SW, whose transformation is chosen according to the properties desired of the model, such as lower computational complexity or lower sensitivity to particular data. The two modes can be switched at any time if needed. WAS extends augmentation from data to weights; it is easy to understand and implement, yet it improves almost all the networks we tested. Our experimental results show that convolutional neural networks such as VGG-16, ResNet-18, ResNet-34, GoogLeNet, MobileNetV2, and EfficientNet-Lite benefit at little or no cost. On the CIFAR10 and CIFAR100 datasets, model accuracy increases by 7.32% and 9.28% on average, respectively, with the highest gains being 13.42% and 18.93%, respectively. In addition, DOM can reduce floating point operations (FLOPs) by up to 36.33%. The code is available at https://github.com/zlearh/Weight-Augmentation-Technology.

1 Introduction

Deep learning with data augmentation (DA) he2019bag ; dosovitskiy2020image ; cubuk2020randaugment ; tian2020improving ; zoph2020learning has achieved great success, with preprocessing methods consisting of rotation, translation, scaling, and random cropping moreno2020improving ; shorten2021text ; yang2022image . DA’s core purpose is to make the distribution of the original data set more consistent with the distribution of natural scenes, thereby increasing the diversity and quantity of data. Wang et al. hao2020improved improved the Mosaic data augmentation algorithm: after analyzing the synthetic image area, a certain number of training set images are randomly filled in, further enhancing the synthesis ability. Trabucco et al. trabucco2023effective at CMU proposed the DA-Fusion strategy, which uses pre-trained text-to-image diffusion models to generate variants of real images, effectively improving data diversity.

Weights are the core of a model and the basis for its decision-making in practical applications zamfirescu2023johnny ; alberts2023large ; ghosal2023text . Izmailov et al. izmailov2018averaging introduced Stochastic Weight Averaging, which averages weights during training rather than relying on the final set. This is necessary because finding parameters that map well to high-dimensional feature spaces is challenging, and inference requires a single set of weights. Figure 1.a shows the traditional training method, in which the relationship between data and weights is many-to-one: model training aims to find the one set of weights that matches the data. This can be expressed as:

| (1) |

Here, the mapping in Equation (1) relates the features to a single weight vector. The features lie in the space of feature vectors, an m-dimensional real vector space. Similarly, the weight lies in the space of potential weight vectors, an n-dimensional real vector space.

Figure 1.b shows our new strategy. We regard the relationship between data and weights as many-to-many. As training proceeds, the weight space becomes more and more complete, and applying WAS expands it further. However, we do not search this space for the single set of weights that best meets the requirements; instead, we indirectly learn the distribution of the weight space. The formulation is as follows:

| (2) |

where the mapping in Equation (2) relates the feature space to the weight space.

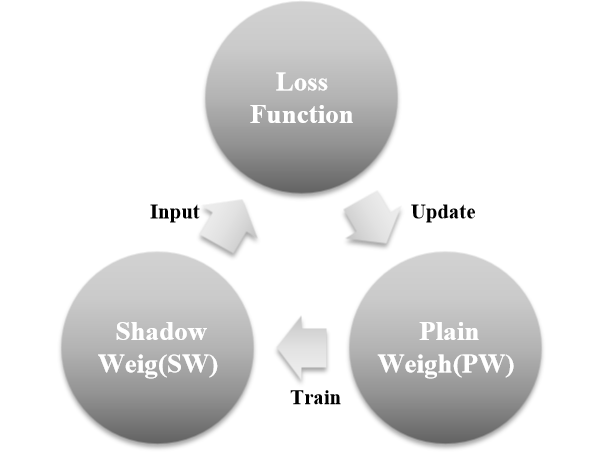

Dropout srivastava2014dropout ; bouthillier2015dropout ; tobergte2013improving addresses overfitting by randomly dropping units during training, which boosts model robustness. It does not reduce computational complexity during inference, and there are alternative methods to unit dropping suresh2021adversarial ; zheng2024toward ; wang2022makes ; jiang2020can ; gou2021knowledge . Influenced by dropout and data augmentation, we propose the Weight Augmentation Strategy (WAS). The core of WAS is to randomly transform the weights during training. We call the randomly transformed weight Shadow Weight (SW). The model uses SW to calculate the loss function, which in turn affects parameter updates. However, stochastic gradient descent (SGD) operates on Plain Weight (PW), the weight before the transformation. The model achieves good results only when PW is compatible with most shadow weights simultaneously.

Although there are two types of weights, we only need to save PW; SW is produced from PW through random transformation. In this way, we obtain weights with two modes and different functions at minimal cost. During task execution, the working mode can be switched as needed. As shown in Figure 2, the accuracy-oriented mode (AOM) uses PW and targets model accuracy; it can handle most application cases. The desire-oriented mode (DOM) uses SW, which can grant the model extra desired properties, such as lower computational complexity or lower sensitivity to particular data.
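The mode switch described above can be sketched in a few lines. This is a minimal illustration rather than the released implementation: the function names `translate_weights` and `select_weights`, the flat list standing in for a weight tensor, and the choice of a 1-D random translation with zero fill are all assumptions made for the example.

```python
import random


def translate_weights(pw, max_shift, rng):
    """Hypothetical SW generator: shift PW by a random offset and zero-fill the gap."""
    n = len(pw)
    shift = rng.randint(-max_shift, max_shift)
    sw = [0.0] * n
    for i, w in enumerate(pw):
        j = i + shift
        if 0 <= j < n:  # entries shifted past the border are dropped
            sw[j] = w
    return sw


def select_weights(pw, mode, rng=None, max_shift=1):
    """Return the weights used for inference; only PW is ever stored."""
    if mode == "AOM":
        return list(pw)  # accuracy-oriented mode: plain weight as-is
    if mode == "DOM":
        # desire-oriented mode: derive a shadow weight from PW on demand
        return translate_weights(pw, max_shift, rng or random.Random())
    raise ValueError(f"unknown mode: {mode}")


stored_pw = [0.5, -1.0, 2.0, 0.25]
aom_weights = select_weights(stored_pw, "AOM")
dom_weights = select_weights(stored_pw, "DOM", rng=random.Random(0))
```

Because SW is recomputed from PW whenever DOM is requested, switching modes requires no second checkpoint.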

Our contributions are summarized as follows: (1) We propose WAS, which improves model training by applying random transformations to the weights. (2) We propose the dual-mode weight concept. In inference, only one set of model weights needs to be retained to realize multiple states of the model and cope with the needs of different tasks. (3) We challenge the traditional view that training on data yields a single weight. What training yields is a distribution of weights, not one weight, and our ultimate goal is to find the most robust weight in this distribution.

2 Related work

With the continuous development of deep learning, researchers have begun to seek more efficient and economical methods to obtain model weights. WiSE-FT wortsman2022robust enhances the robustness of fine-tuned pre-trained models by combining the weights of zero-shot and fine-tuned models. This method maintains high accuracy and performs well when the distribution changes. However, the performance of WiSE-FT depends on the pre-trained model and the fine-tuning data. Guo et al. guo2020online proposed Knowledge Distillation via Collaborative Learning, which trains multiple student models simultaneously so that they learn collaboratively and enhance one another’s performance. This eliminates the need for an additional teacher model. However, the choice and number of student models can affect the final inference results, and the method has higher hardware requirements. Zhang et al. zhang2019your proposed Self Distillation, in which the network serves as both student and teacher and transfers knowledge within itself. It does not require an additional pre-trained teacher model and can improve accuracy without increasing inference time. However, Self Distillation introduces an additional shallow classifier, which hinders model convergence and increases the complexity of training.

Ensembles valentini2002ensembles ; hansen1990neural ; bachman2014learning combine multiple weak neural networks to improve model performance. As the number of networks increases, the training cost of the ensemble increases linearly. Wen et al. wen2020batchensemble showed that BatchEnsemble has lower computational and memory costs than typical ensemble methods. It alleviates this major shortcoming of ensemble methods, but its memory cost is still proportional to the number of neural networks. The Latest Weight Averaging (LAWA) method introduced by Kaddour et al. kaddour2022stop averages the model weights of the most recent k checkpoints, which improves convergence speed when training vision or language models on large datasets and significantly shortens the training period. The performance of LAWA is affected to some extent by hyperparameters, so they must be tested for specific tasks and datasets. To integrate multiple models under limited resources, Singh et al. singh2020model used optimal transport for layer-by-layer fusion of various models, which achieves knowledge transfer without retraining. Wortsman et al. wortsman2022model proposed Model Soups, which averages the weights of multiple fine-tuned models to enhance model performance. It requires training every constituent model to ensure differences between models.
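The checkpoint averaging behind LAWA, as summarized above, amounts to keeping a sliding window of the last k weight vectors and averaging them element-wise. The sketch below illustrates only that idea; the class name `LatestWeightAverager` and the use of flat Python lists for weights are assumptions, not part of the cited work.

```python
from collections import deque


class LatestWeightAverager:
    """Keeps the k most recent checkpoints and returns their element-wise mean."""

    def __init__(self, k):
        # deque with maxlen drops the oldest checkpoint automatically
        self.checkpoints = deque(maxlen=k)

    def add(self, weights):
        self.checkpoints.append(list(weights))

    def average(self):
        n = len(self.checkpoints)
        return [sum(column) / n for column in zip(*self.checkpoints)]


averager = LatestWeightAverager(k=2)
for checkpoint in ([1.0, 2.0], [3.0, 4.0], [5.0, 6.0]):
    averager.add(checkpoint)
lawa_weights = averager.average()  # mean of the last two checkpoints only
```

Only the window size k has to be chosen, which is the hyperparameter sensitivity mentioned above.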

To address the shortcomings of the above methods, we propose WAS. We randomly transform PW during training to obtain SW. PW is trained to be compatible with the various SW, which enhances the network’s robustness and reduces the model’s sensitivity to noise.

3 Method

3.1 Weight augmentation strategy

Some weights are considered "by-products" or "useless", yet they can help networks from different perspectives. They perform well on specific data but are suboptimal on broader data, so they are discarded during model selection. However, as research on deep networks has deepened, researchers have begun to recognize the potential value of these "by-products". For example, Ensemble Learning dietterich2002ensemble ; krawczyk2017ensemble ; huang2009research can integrate these seemingly useless weights to form a more powerful and robust model. This method exploits the strengths of different weights on different data and improves the model’s overall performance by combining high-quality weights. However, this approach merely stitches weights together and does not reach the level of weight augmentation. A diverse weight space is crucial, and weights learned from weight distributions perform better. The formula for increasing the diversity of the weight space is:

| (3) |

where the terms of Equation (3) are the model’s weight vector and its transpose, ReLU is a popular activation function, and the remaining term represents an augmentation strategy with transformations like rotation, translation, and scaling.

Reorganizing weights may introduce bias and hinder generalization because the weight distribution is captured incompletely. To solve this, we introduce randomness. The formula is as follows:

| (4) |

The random term in Equation (4) is significant because it is not defined by a simple function. We enhance randomness through choices such as whether to apply WAS and which WAS to select.

As shown in Figure 3, each mini-batch gradient descent step with WAS changes the weights, producing a new variant of SW. As training continues, the distribution of SW gradually improves. The model parameter update formulas are as follows:

| (5) |

| (6) |

Here the two weight symbols represent the jth parameter of SW and the jth parameter of PW, respectively. The remaining symbols denote the learning rate, the loss function, and the partial derivative of the loss function with respect to the parameter. Equation (5) is the gradient descent update of the original network with SGD, and Equation (6) is the SGD update using WAS.
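One plausible reading of the two update rules can be written out as follows. All notation here is assumed for illustration and is not taken from the source: $w_j$ is the jth parameter of the original network, $w_j^{p}$ and $w_j^{s}$ are the jth parameters of PW and SW, $\eta$ is the learning rate, $\mathcal{L}$ is the loss function, and $T$ is the random weight transformation.

```latex
\begin{align}
  w_j &\leftarrow w_j - \eta \,\frac{\partial \mathcal{L}(w)}{\partial w_j}
      && \text{plain SGD: loss and update use the same weight} \\
  w^{s} = T\!\left(w^{p}\right), \qquad
  w^{p}_j &\leftarrow w^{p}_j - \eta \,\frac{\partial \mathcal{L}(w^{s})}{\partial w^{p}_j}
      && \text{SGD with WAS: loss evaluated on SW, update applied to PW}
\end{align}
```

Under this reading the gradient reaches PW through the transformation $T$ by the chain rule, so a transformation that zeroes a weight also zeroes that weight's update for the step.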

| (7) |

where the symbols in Equation (7) denote the feature map a at position (x, y), the partial derivative of the loss function with respect to a, and the partial derivative of a with respect to the weight. We use SW to calculate the loss function, which evaluates the difference between the output and the actual results. This evaluation directly affects the update of PW. In addition, SW learns the distribution of the data: it serves as the input of the loss function to approximate the data distribution. PW mainly learns the distribution of SW, so only PW needs to be updated. This is the biggest difference between our method and traditional training. The relationship between PW, SW, and the loss function is shown in Figure 4.
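The interplay between PW, SW, and the loss can be illustrated on a toy linear model. The sketch below is an assumption-laden stand-in, not the authors' code: it uses a 1-D "crop" that keeps a random contiguous window of the weights, a squared-error loss, and hand-derived gradients; `random_crop_mask`, `was_step`, `mse`, and `train_demo` are hypothetical names.

```python
import random


def random_crop_mask(n, keep_range, rng):
    """Hypothetical 1-D weight crop: keep one random contiguous window, zero the rest."""
    keep = max(1, round(n * rng.uniform(*keep_range)))
    start = rng.randint(0, n - keep)
    return [1.0 if start <= i < start + keep else 0.0 for i in range(n)]


def was_step(pw, x, target, lr, rng, keep_range=(0.8, 1.0)):
    """One SGD step: the loss is computed with SW, the update is applied to PW."""
    mask = random_crop_mask(len(pw), keep_range, rng)
    sw = [m * w for m, w in zip(mask, pw)]               # shadow weight for this step
    err = sum(s * xi for s, xi in zip(sw, x)) - target   # forward pass uses SW only
    # Chain rule through sw_i = mask_i * pw_i gives dL/dpw_i = mask_i * err * x_i.
    new_pw = [w - lr * m * err * xi for w, m, xi in zip(pw, mask, x)]
    return new_pw, 0.5 * err * err


def mse(weights, data):
    """Mean squared error of a linear model on (input, target) pairs."""
    return sum((sum(w * xi for w, xi in zip(weights, x)) - t) ** 2
               for x, t in data) / len(data)


def train_demo(steps=3000, lr=0.05, seed=0):
    rng = random.Random(seed)
    true_w = [1.0, -2.0, 0.5, 3.0]  # synthetic ground truth, for illustration only

    def sample():
        x = [rng.uniform(-1.0, 1.0) for _ in true_w]
        return x, sum(w * xi for w, xi in zip(true_w, x))

    test_set = [sample() for _ in range(200)]
    pw = [0.0] * len(true_w)
    before = mse(pw, test_set)          # error of the untrained PW
    for _ in range(steps):
        x, t = sample()
        pw, _ = was_step(pw, x, t, lr, rng)
    return before, mse(pw, test_set)    # AOM evaluation: PW without any transform


mse_before, mse_after = train_demo()
```

Evaluating with the untransformed PW at the end corresponds to AOM; applying `random_crop_mask` to the trained PW before evaluation would correspond to DOM.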

Ding et al. ding2021repvgg proposed RepVGG, which reduces time complexity by adjusting weights during inference while maintaining model performance. This method exploits the inherent relationship between the model and the weights but does not associate the model with the weights. Zheng et al. zheng2023learn introduced the "Learn From Model" concept, emphasizing the importance of research, modification, and design of foundation models based on model interfaces. Because this field has so far been explored only superficially, more research is needed to uncover the intrinsic interaction between weights and model structure. Therefore, although the concept has revolutionary potential, direct manipulation of weights is challenging without such understanding. If, however, the model is consciously guided during training, humans can intervene during inference, and direct adjustments to the weights do not cause significant degradation in model performance. To achieve this goal, we use weight-based training instead of data-based training, allowing the final model to learn from the weight distribution instead of extracting features directly from the data. Data-driven training generates many flawed weights, but these can still capture subtle features and deep relationships specific to particular datasets. If model training based on the data distribution is regarded as stage one, then model training based on the distribution of flawed weights is stage two. The two stages alternate throughout training. The final weights depend on the model’s autonomous learning without human intervention. Such a design allows the model to be trained directly to optimize its structure and weights.

WAS encourages the model to explore a broader area of the weight space, promoting high-performance weight configurations while avoiding over-reliance on specific features. It also improves the model’s computational efficiency, reducing resource consumption during training and inference.

3.2 Dual working mode of network model

The core of WAS is to encourage the generation of a large number of weights, which makes the weight distribution more complete and enhances the generalization and robustness of the model. The trained model always has two modes: AOM and DOM. AOM is akin to conventional deep learning models, as it is applied directly to inference. However, the key innovation of AOM lies in its dynamic weight formation mechanism, which is achieved through a competitive process that unfolds during training. The competition continuously drives the model to optimize its performance. Specifically, an SW that performs well effectively reduces the loss function and therefore has a significant impact on the determination of PW. Conversely, SW with poorer performance have less influence on the determination of PW.

The second mode, DOM, focuses on choosing the WAS to address specific needs such as improving the model’s specificity, sparsity, and computational efficiency. In DOM, we can devise a WAS tailored to the particular demands of the task and apply it during training. Subsequently, the same strategy is used to transform PW for inference. Although the model’s prediction accuracy in this mode may be lower than in AOM, it has significant advantages in specific tasks. For instance, random weight cropping can increase the sparsity of the weight matrix. This can reduce the computational load, potentially by several or even tens of times, which is particularly advantageous for applications involving large-scale data processing, model deployment in resource-constrained environments, or other special needs.
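To make the link between weight cropping and sparsity concrete, the following sketch crops a 2-D kernel and measures the fraction of zeros. It is an illustration under stated assumptions: the exact crop used in the paper is not specified here, so the rule of keeping a random sub-window whose side lengths are scaled by a sampled ratio is hypothetical, as are the names `random_crop_kernel` and `sparsity`.

```python
import random


def random_crop_kernel(kernel, ratio_range, rng):
    """Keep a random sub-window covering a sampled fraction of each side; zero the rest."""
    rows, cols = len(kernel), len(kernel[0])
    ratio = rng.uniform(*ratio_range)
    keep_r = max(1, round(rows * ratio))
    keep_c = max(1, round(cols * ratio))
    top = rng.randint(0, rows - keep_r)
    left = rng.randint(0, cols - keep_c)
    return [
        [
            kernel[r][c] if top <= r < top + keep_r and left <= c < left + keep_c else 0.0
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def sparsity(kernel):
    """Fraction of entries that are exactly zero."""
    flat = [v for row in kernel for v in row]
    return sum(1 for v in flat if v == 0.0) / len(flat)


dense_kernel = [[1.0] * 5 for _ in range(5)]
cropped_kernel = random_crop_kernel(dense_kernel, (0.6, 0.6), random.Random(0))
cropped_sparsity = sparsity(cropped_kernel)  # 3x3 window kept out of 5x5
```

Zeros only translate into fewer FLOPs when the inference kernel can skip them, so the saving depends on the deployment runtime.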

Through WAS, we implement a flexible model management strategy. In inference, only one set of weights needs to be saved to realize two different weight functions, and the working mode can be switched flexibly according to the application scenario. This dual-mode design simplifies model storage and maintenance. It also strengthens the algorithm’s resilience and adaptability.

Table 1: Top-1 accuracy (%) of AOM and DOM on CIFAR10 and CIFAR100, and FLOPs (M).

| Model | CIFAR10 AOM Top1 | CIFAR10 DOM Top1 | CIFAR100 AOM Top1 | CIFAR100 DOM Top1 | FLOPs (M) |
|---|---|---|---|---|---|
| VGG16 | 87.42 | - | 59.01 | - | 333 |
| VGG16-C | 89.29 | 88.95 | 62.19 | 60.53 | 321 |
| VGG16-CT | 91.15 | 90.60 | 63.53 | 61.96 | 279 |
| ResNet18 | 85.53 | - | 59.70 | - | 608 |
| ResNet18-C | 88.15 | 87.38 | 63.42 | 62.44 | 542 |
| ResNet18-CT | 89.83 | 88.64 | 63.40 | 61.50 | 315 |
| ResNet34 | 86.54 | - | 58.64 | - | 1214 |
| ResNet34-C | 89.18 | 88.81 | 62.19 | 61.24 | 1099 |
| ResNet34-CT | 91.71 | 90.73 | 60.64 | 58.73 | 709 |
| GoogleNet | 90.55 | - | 72.04 | - | 1457 |
| GoogleNet-C | 91.99 | 91.43 | 73.05 | 72.02 | 1237 |
| GoogleNet-CT | 92.68 | 92.04 | 73.08 | 72.01 | 772 |
| MobileNetV2 | 73.2 | - | 47.02 | - | 47 |
| MobileNetV2-C | 82.58 | 82.43 | 55.43 | 54.65 | 45 |
| MobileNetV2-CT | 83.02 | 82.88 | 55.92 | 53.95 | 37 |
| EfficientNetLite | 73.05 | - | 43.13 | - | 8.00 |
| EfficientNetLite-C | 80.96 | 79.97 | 49.08 | 48.47 | 6.37 |
| EfficientNetLite-CT | 83.72 | 81.23 | 50.31 | 47.41 | 4.66 |

4 Experiments

We conducted ablation studies and comparative analyses to assess the performance of diverse variant networks on the CIFAR-10 and CIFAR-100 datasets krizhevsky2009learning . Our primary aim was to highlight the pivotal role that WAS plays within our models. Furthermore, we evaluate the distinctions between the dual operational modes and their performance implications. The findings consistently affirm the benefits that WAS confers on the model.

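Table 2: Top-1 accuracy drop rate (%) of AOM and DOM when the test-set DA is of the same type as the WAS used in training.

| Model | WAS | WAS Parameters | DA Parameters | AOM drop (%) | DOM drop (%) |
|---|---|---|---|---|---|
| VGG16-R | rotate | (0°,90°) | (,) | 5.09 | 4.86 |
| VGG16-R | rotate | (0°,90°) | (0°,45°) | 39.52 | 25.58 |
| VGG16-R | rotate | (0°,90°) | (0°,90°) | 46.29 | 42.74 |
| VGG16-R | rotate | (0°,90°) | (,) | 54.61 | 53.65 |
| VGG16-R | rotate | (0°,90°) | (,) | 58.40 | 57.76 |
| VGG16-T | translate | (30%,30%) | (10%,10%) | 1.72 | 2.33 |
| VGG16-T | translate | (30%,30%) | (20%,20%) | 6.62 | 8.47 |
| VGG16-T | translate | (30%,30%) | (30%,30%) | 14.42 | 18.21 |
| VGG16-T | translate | (30%,30%) | (40%,40%) | 25.76 | 31.76 |
| VGG16-C | crop | (0.8,1.0) | (0.8,1.0) | 3.34 | 2.79 |
| VGG16-C | crop | (0.8,1.0) | (0.6,1.0) | 6.49 | 6.71 |
| VGG16-C | crop | (0.8,1.0) | (0.4,1.0) | 13.04 | 12.12 |
| VGG16-C | crop | (0.8,1.0) | (0.2,1.0) | 24.19 | 22.42 |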

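Table 3: Top-1 accuracy drop rate (%) of AOM and DOM when the test-set DA differs from the WAS used in training.

| Model | WAS Parameters | DA | DA Parameters | AOM drop (%) | DOM drop (%) |
|---|---|---|---|---|---|
| VGG16-R | (0°,90°) | crop | (0.8,1.0) | 2.33 | 2.50 |
| VGG16-R | (0°,90°) | crop | (0.6,1.0) | 5.93 | 5.15 |
| VGG16-R | (0°,90°) | crop | (0.4,1.0) | 11.35 | 11.70 |
| VGG16-R | (0°,90°) | crop | (0.2,1.0) | 22.31 | 23.08 |
| VGG16-R | (0°,90°) | translate | (10%,10%) | 2.56 | 3.27 |
| VGG16-R | (0°,90°) | translate | (20%,20%) | 9.96 | 10.39 |
| VGG16-R | (0°,90°) | translate | (30%,30%) | 19.95 | 20.45 |
| VGG16-R | (0°,90°) | translate | (40%,40%) | 32.45 | 32.71 |
| VGG16-T | (30%,30%) | rotate | (,) | 5.09 | 5.27 |
| VGG16-T | (30%,30%) | rotate | (,) | 28.39 | 27.97 |
| VGG16-T | (30%,30%) | rotate | (,) | 46.80 | 47.28 |
| VGG16-T | (30%,30%) | rotate | (,) | 55.18 | 56.28 |
| VGG16-T | (30%,30%) | rotate | (,) | 58.96 | 59.67 |
| VGG16-T | (30%,30%) | crop | (0.8,1.0) | 2.69 | 2.61 |
| VGG16-T | (30%,30%) | crop | (0.6,1.0) | 5.16 | 6.11 |
| VGG16-T | (30%,30%) | crop | (0.4,1.0) | 10.12 | 12.18 |
| VGG16-T | (30%,30%) | crop | (0.2,1.0) | 21.53 | 25.10 |
| VGG16-C | (0.8,1.0) | rotate | (,) | 4.80 | 4.85 |
| VGG16-C | (0.8,1.0) | rotate | (,) | 26.68 | 28.14 |
| VGG16-C | (0.8,1.0) | rotate | (,) | 46.10 | 45.59 |
| VGG16-C | (0.8,1.0) | rotate | (,) | 54.84 | 53.04 |
| VGG16-C | (0.8,1.0) | rotate | (,) | 58.98 | 56.51 |
| VGG16-C | (0.8,1.0) | translate | (10%,10%) | 2.56 | 3.24 |
| VGG16-C | (0.8,1.0) | translate | (20%,20%) | 8.50 | 9.87 |
| VGG16-C | (0.8,1.0) | translate | (30%,30%) | 17.70 | 18.21 |
| VGG16-C | (0.8,1.0) | translate | (40%,40%) | 28.81 | 33.70 |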

4.1 WAS for Classification

To explore the effectiveness of WAS, we chose six classic deep learning architectures as our experimental models: VGG-16 simonyan2014very , ResNet18 he2016deep , ResNet34 he2016deep , GoogLeNet szegedy2015going , EfficientNet-Lite tan2019efficientnet , and MobileNetV2 sandler2018mobilenetv2 . As illustrated in Table 1, we chose a series of WAS for comparative experiments against the baseline.

We deliberately avoided incorporating additional techniques, primarily to limit the impact of extraneous variables. On a single GPU, we used a global batch size of 128. We employed conventional SGD with an initial learning rate of 0.01 and a momentum coefficient of 0.9, which improves the model’s stability and speeds up convergence during training.

On the CIFAR-10 dataset, the models in AOM showed clear improvements. Specifically, the accuracy of VGG16-C and VGG16-CT increased by 2.13% and 4.27% relative to the baseline VGG16, respectively. For ResNet18, ResNet18-C and ResNet18-CT attained accuracy improvements of 3.06% and 5.03%. Similarly, the ResNet34-C and ResNet34-CT models realized accuracy improvements of 3.05% and 5.97%, respectively. The GoogleNet-C and GoogleNet-CT models recorded accuracy improvements of 1.59% and 2.35%. Within the MobileNetV2 series, the accuracy of MobileNetV2-C and MobileNetV2-CT rose significantly, by 12.76% and 13.42% over MobileNetV2. Lastly, the EfficientNetLite-C and EfficientNetLite-CT models achieved accuracy improvements of 9.52% and 13.23%.

On the CIFAR-100 dataset, WAS also improved performance. In AOM, the accuracy of VGG16-C and VGG16-CT increased by 5.39% and 7.66% over the base VGG16, respectively. Similarly, the AOM accuracy of ResNet18-C and ResNet18-CT increased by 6.23% and 6.19% relative to the original ResNet18. ResNet34-C and ResNet34-CT showed AOM accuracy gains of 6.05% and 3.41%, respectively, in comparison to ResNet34. The GoogleNet-C and GoogleNet-CT models showed slight improvements in AOM accuracy, with gains of 1.40% and 1.44% over GoogleNet. The MobileNetV2-C and MobileNetV2-CT models showed a significant advance in AOM accuracy, with increases of 17.89% and 18.93% over MobileNetV2. Finally, EfficientNetLite-C and EfficientNetLite-CT displayed significant AOM accuracy improvements, with increases of 13.78% and 18.93% over EfficientNetLite.
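The gains quoted above appear to be relative improvements over the baseline rather than differences in percentage points. A short check using the MobileNetV2 rows of Table 1 reproduces the reported 13.42% and 18.93% under that reading; the helper name `relative_gain` is ours.

```python
def relative_gain(baseline_acc, new_acc):
    """Relative Top-1 improvement over the baseline, in percent."""
    return (new_acc - baseline_acc) / baseline_acc * 100.0


# AOM Top-1 accuracies of MobileNetV2 and MobileNetV2-CT taken from Table 1
cifar10_gain = relative_gain(73.2, 83.02)
cifar100_gain = relative_gain(47.02, 55.92)
print(f"CIFAR-10: {cifar10_gain:.2f}%  CIFAR-100: {cifar100_gain:.2f}%")
```

In percentage points the same two rows differ by 9.82 and 8.90, which is why the distinction matters when comparing against other work.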

The performance of Model-C and Model-CT under DOM is lower than under AOM. The accuracy difference remains at about 1% to 2%, but DOM’s accuracy is still about 1% to 2% higher than that of the baseline model. This shows that both modes can improve the performance of the model. These advantages are particularly prominent for lightweight models. Although computational speed is not our main consideration, it is worth noting that the floating point operations (FLOPs) of Model-C and Model-CT in DOM are both lower than those of their corresponding baseline models. The FLOPs of Model-C decreased by about 5% to 20%, while the reduction for Model-CT is more significant, with the maximum reduction reaching up to 47%.

4.2 Characteristic of WAS

WAS gives the model unique capabilities that can be customized to specific needs at inference. These capabilities can include many aspects, such as reducing computational complexity and decreasing sensitivity to specific data. This functionality is used by switching the weights to SW during inference. It allows customizable adjustments based on specific requirements, enabling the model to accommodate unique environmental conditions.

Table 2 presents three representative WAS: rotation (the random rotation angle of weights is set between 0° and 90°), translation (the random translation range of weights in the horizontal and vertical directions is 0% to 30%), and cropping (the random cropping ratio of weights is between 0.8 and 1.0), following the official PyTorch example imambi2021pytorch . To verify the efficacy of WAS for processing particular data, we applied random data augmentation to the test set. After using WAS, the network model has two working modes: AOM, which runs inference with weights that do not apply WAS, and DOM, which runs inference with weights that apply WAS. As shown in Table 2, when the WAS used in training is a random rotation of 0° to 90°, AOM outperforms DOM in Top-1 accuracy, but DOM loses less accuracy on rotated data. In particular, when the rotation of the data is limited to 0° to 45° and 0° to 90°, the accuracy loss of DOM is 13.84% and 3.55% lower than that of AOM. For other rotations, the accuracy loss of DOM is about 1% lower than that of AOM. When random cropping is the WAS, as the cropping ratio increases, the accuracy loss of DOM is lower than that of AOM by 0.58%, 0.22%, 0.92%, and 1.77%. We noticed that, as the random cropping ratio increases, the gap in accuracy loss between DOM and AOM first contracts and then expands. This fluctuation arises mainly because a larger random crop ratio during training degrades model performance once the parameters exceed a certain threshold. In summary, WAS helps improve the ability to process specific data. We found that when the WAS is random translation, the accuracy loss of DOM is higher than that of AOM, which is inconsistent with our preliminary conclusion. Considering the random cropping results as well, we attribute this to the reduction in model parameters, which weakens the model’s fitting ability.

Table 4: VGG16 trained with different random cropping ratios as WAS and tested under random rotation: Top-1 accuracy (%) and Top-1 accuracy drop rate (%) of AOM and DOM.

| WAS Parameters | AOM Top1 | DOM Top1 | DA Parameters | AOM drop (%) | DOM drop (%) |
|---|---|---|---|---|---|
| Crop (0.8,1.0) | 89.72 | 88.95 | (,) | 4.80 | 4.85 |
| Crop (0.8,1.0) | 89.72 | 88.95 | (,) | 26.68 | 28.14 |
| Crop (0.8,1.0) | 89.72 | 88.95 | (,) | 46.10 | 45.59 |
| Crop (0.8,1.0) | 89.72 | 88.95 | (,) | 54.84 | 53.04 |
| Crop (0.8,1.0) | 89.72 | 88.95 | (,) | 58.98 | 56.51 |
| Crop (0.6,0.8) | 90.34 | 89.71 | (,) | 5.58 | 5.04 |
| Crop (0.6,0.8) | 90.34 | 89.71 | (,) | 27.85 | 26.85 |
| Crop (0.6,0.8) | 90.34 | 89.71 | (,) | 46.54 | 45.82 |
| Crop (0.6,0.8) | 90.34 | 89.71 | (,) | 56.18 | 53.37 |
| Crop (0.6,0.8) | 90.34 | 89.71 | (,) | 59.98 | 56.16 |
| Crop (0.4,0.6) | 88.54 | 87.99 | (,) | 5.79 | 4.51 |
| Crop (0.4,0.6) | 88.54 | 87.99 | (,) | 27.44 | 24.62 |
| Crop (0.4,0.6) | 88.54 | 87.99 | (,) | 45.67 | 43.25 |
| Crop (0.4,0.6) | 88.54 | 87.99 | (,) | 54.66 | 51.57 |
| Crop (0.4,0.6) | 88.54 | 87.99 | (,) | 58.01 | 53.99 |
| Crop (0.2,0.4) | 87.09 | 86.19 | (,) | 5.96 | 4.43 |
| Crop (0.2,0.4) | 87.09 | 86.19 | (,) | 27.57 | 25.72 |
| Crop (0.2,0.4) | 87.09 | 86.19 | (,) | 45.85 | 44.4 |
| Crop (0.2,0.4) | 87.09 | 86.19 | (,) | 54.52 | 52.77 |
| Crop (0.2,0.4) | 87.09 | 86.19 | (,) | 58.01 | 54.88 |

Table 5: Top-1 accuracy, FLOPs, and sparsity of VGG16 under different cropping and translation parameters.

| Crop Parameters | Translate Parameters | Top1 (%) | FLOPs (M) | Sparsity (%) |
|---|---|---|---|---|
| (1.0,1.0) | - | 87.42 | 333 | - |
| (0.8,1.0) | - | 88.95 | 320.68 | 3.70 |
| (0.6,0.8) | - | 89.71 | 259.67 | 22.02 |
| (0.4,0.6) | - | 87.99 | 223.08 | 33.01 |
| (0.2,0.4) | - | 86.19 | 212.02 | 36.33 |
| - | (30%,30%) | 90.37 | 230.63 | 30.74 |
| (0.8,1.0) | (30%,30%) | 90.60 | 277.42 | 16.69 |

As depicted in Table 3, we applied a DA pipeline with random translations to the test dataset, using a WAS that differs from the DA. As the random translation parameter increases from 10% to 40%, the AOM’s Top-1 drop rate surges from 2.56% to 32.45%, while the DOM’s Top-1 drop rate rises similarly, from 3.27% to 33.70%. Furthermore, if the test set is replaced with randomly cropped data, as the cropping ratio is reduced from 1.0 to 0.2, the Top-1 accuracy drop rate increases for both modes: for AOM it rises from 2.69% to 21.53%, whereas for DOM it rises from 2.61% to 25.10%. DOM degrades more rapidly than AOM, especially as cropping becomes more aggressive. Based on this preliminary analysis, we conclude that AOM generalizes better when the WAS is inconsistent with the data augmentation strategy.

Similarly, when the WAS is random translation and the rotation angle of the test set increases from 0° to 180°, the drop rate for AOM increases from 5.09% to 58.96%, while that of DOM soars from 5.27% to 59.67%. This is the same conclusion we reached above. However, when WAS uses random cropping, the drop rate for AOM increases from 4.80% to 58.98%, and for DOM it increases from 4.85% to 56.51%. Contrary to the above conclusion, the drop rate of AOM under extreme conditions is higher than that of DOM.

To explore this phenomenon, Table 4 reports models trained with different random cropping ratios as WAS, while DA on the test set uses random rotation. Regardless of how the WAS training parameters change, the drop rate of AOM remains relatively stable. In contrast, as the random cropping ratio of WAS increases, the Top-1 accuracy drop rates of DOM are 2.47%, 3.82%, and 4.02% lower than those of AOM, respectively. This trend shows that DOM is more effective in processing rotated data. When the cropping ratio ranges from 0.2 to 0.4, the gap between the Top-1 accuracy drop rates of AOM and DOM narrows. We attribute this to the decrease in the number of parameters, which impairs model fitting. In summary, data rotation can lead to information loss, akin to the effects of cropping. Therefore, DOM (weight-based random cropping) has a lower drop rate than AOM.

The benefit of WAS is reflected not only in accuracy but also in the sparsity of the model. As shown in Table 5, we take cropping as an example and vary the cropping ratio. With cropping parameters of (0.4,0.6), zero elements account for 33.01% of the entire model, and the model’s accuracy is 87.99%, which is close to that of the base model. When the cropping parameters are reduced to (0.2,0.4), the accuracy decreases by 1.41%, but the proportion of zero elements increases by 3.32%, and the FLOPs are reduced by 11.06M. Introducing translation parameters of (30%,30%), even without cropping, raises the sparsity to 30.74%, cuts FLOPs to 230.63M, and improves accuracy by 3.26% over the base model. Combining cropping and translation, WAS improves model accuracy and simultaneously decreases computational costs.
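The sparsity percentages quoted above coincide with the relative FLOPs reduction with respect to the 333M-FLOP base model in Table 5. The short check below recomputes them from the FLOPs column, assuming those values are in millions; the helper name `flops_reduction` and the dictionary layout are ours.

```python
BASE_FLOPS_M = 333.0  # base VGG16 row of Table 5, assumed to be in millions of FLOPs

# FLOPs (M) per WAS setting, copied from Table 5
FLOPS_BY_SETTING = {
    "crop (0.8,1.0)": 320.68,
    "crop (0.6,0.8)": 259.67,
    "crop (0.4,0.6)": 223.08,
    "crop (0.2,0.4)": 212.02,
    "translate (30%,30%)": 230.63,
    "crop (0.8,1.0) + translate (30%,30%)": 277.42,
}


def flops_reduction(base, reduced):
    """Relative FLOPs reduction in percent."""
    return (base - reduced) / base * 100.0


reductions = {
    name: flops_reduction(BASE_FLOPS_M, flops)
    for name, flops in FLOPS_BY_SETTING.items()
}
for name, pct in reductions.items():
    print(f"{name}: {pct:.2f}%")
```

The largest entry, for crop (0.2,0.4), matches the 36.33% FLOPs reduction quoted in the abstract.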

4.3 Limitations

WAS is an effective approach for convolutional networks. It is capable of enhancing accuracy without increasing computational complexity. WAS shines in the customization of model training for specific tasks, offering tailored optimization strategies. Yet, despite its considerable benefits, WAS has not gained the same level of popularity as data augmentation practices.

5 Conclusion

We introduce WAS as a training method for models. Its core is to equip the model with a dual mode in inference, enabling the weights to cater to tasks with varying demands. This design allows researchers to train weights that are finely tuned to specific demands. In AOM, WAS can boost model accuracy by up to 18.93% without incurring additional costs. In DOM, WAS can reduce FLOPs by up to 36.33% while keeping the accuracy intact.

References

- [1] Tong He, Zhi Zhang, Hang Zhang, Zhongyue Zhang, Junyuan Xie, and Mu Li. Bag of tricks for image classification with convolutional neural networks. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 558–567, 2019.

- [2] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020.

- [3] Ekin D Cubuk, Barret Zoph, Jonathon Shlens, and Quoc V Le. Randaugment: Practical automated data augmentation with a reduced search space. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition workshops, pages 702–703, 2020.

- [4] Keyu Tian, Chen Lin, Ming Sun, Luping Zhou, Junjie Yan, and Wanli Ouyang. Improving auto-augment via augmentation-wise weight sharing. Advances in Neural Information Processing Systems, 33:19088–19098, 2020.

- [5] Barret Zoph, Ekin D Cubuk, Golnaz Ghiasi, Tsung-Yi Lin, Jonathon Shlens, and Quoc V Le. Learning data augmentation strategies for object detection. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XXVII 16, pages 566–583. Springer, 2020.

- [6] Francisco J Moreno-Barea, José M Jerez, and Leonardo Franco. Improving classification accuracy using data augmentation on small data sets. Expert Systems with Applications, 161:113696, 2020.

- [7] Connor Shorten, Taghi M Khoshgoftaar, and Borko Furht. Text data augmentation for deep learning. Journal of Big Data, 8(1):101, 2021.

- [8] Suorong Yang, Weikang Xiao, Mengcheng Zhang, Suhan Guo, Jian Zhao, and Furao Shen. Image data augmentation for deep learning: A survey. arXiv preprint arXiv:2204.08610, 2022.

- [9] Wang Hao and Song Zhili. Improved mosaic: Algorithms for more complex images. In Journal of Physics: Conference Series, volume 1684, page 012094. IOP Publishing, 2020.

- [10] Brandon Trabucco, Kyle Doherty, Max Gurinas, and Ruslan Salakhutdinov. Effective data augmentation with diffusion models. arXiv preprint arXiv:2302.07944, 2023.

- [11] JD Zamfirescu-Pereira, Richmond Y Wong, Bjoern Hartmann, and Qian Yang. Why johnny can’t prompt: how non-ai experts try (and fail) to design llm prompts. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, pages 1–21, 2023.

- [12] Ian L Alberts, Lorenzo Mercolli, Thomas Pyka, George Prenosil, Kuangyu Shi, Axel Rominger, and Ali Afshar-Oromieh. Large language models (llm) and chatgpt: what will the impact on nuclear medicine be? European journal of nuclear medicine and molecular imaging, 50(6):1549–1552, 2023.

- [13] Deepanway Ghosal, Navonil Majumder, Ambuj Mehrish, and Soujanya Poria. Text-to-audio generation using instruction-tuned llm and latent diffusion model. arXiv preprint arXiv:2304.13731, 2023.

- [14] Pavel Izmailov, Dmitrii Podoprikhin, Timur Garipov, Dmitry Vetrov, and Andrew Gordon Wilson. Averaging weights leads to wider optima and better generalization. arXiv preprint arXiv:1803.05407, 2018.

- [15] Nitish Srivastava, Geoffrey Hinton, Alex Krizhevsky, Ilya Sutskever, and Ruslan Salakhutdinov. Dropout: a simple way to prevent neural networks from overfitting. The journal of machine learning research, 15(1):1929–1958, 2014.

- [16] Xavier Bouthillier, Kishore Konda, Pascal Vincent, and Roland Memisevic. Dropout as data augmentation. arXiv preprint arXiv:1506.08700, 2015.

- [17] David R Tobergte and Shirley Curtis. Improving neural networks with dropout. Journal of Chemical Information and Modeling, 53(9):1689–1699, 2013.

- [18] Susheel Suresh, Pan Li, Cong Hao, and Jennifer Neville. Adversarial graph augmentation to improve graph contrastive learning. Advances in Neural Information Processing Systems, 34:15920–15933, 2021.

- [19] Chenyu Zheng, Guoqiang Wu, and Chongxuan Li. Toward understanding generative data augmentation. Advances in Neural Information Processing Systems, 36, 2024.

- [20] Huan Wang, Suhas Lohit, Michael N Jones, and Yun Fu. What makes a "good" data augmentation in knowledge distillation-a statistical perspective. Advances in Neural Information Processing Systems, 35:13456–13469, 2022.

- [21] Zhengbao Jiang, Frank F Xu, Jun Araki, and Graham Neubig. How can we know what language models know? Transactions of the Association for Computational Linguistics, 8:423–438, 2020.

- [22] Jianping Gou, Baosheng Yu, Stephen J Maybank, and Dacheng Tao. Knowledge distillation: A survey. International Journal of Computer Vision, 129(6):1789–1819, 2021.

- [23] Mitchell Wortsman, Gabriel Ilharco, Jong Wook Kim, Mike Li, Simon Kornblith, Rebecca Roelofs, Raphael Gontijo Lopes, Hannaneh Hajishirzi, Ali Farhadi, Hongseok Namkoong, et al. Robust fine-tuning of zero-shot models. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 7959–7971, 2022.

- [24] Qiushan Guo, Xinjiang Wang, Yichao Wu, Zhipeng Yu, Ding Liang, Xiaolin Hu, and Ping Luo. Online knowledge distillation via collaborative learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 11020–11029, 2020.

- [25] Linfeng Zhang, Jiebo Song, Anni Gao, Jingwei Chen, Chenglong Bao, and Kaisheng Ma. Be your own teacher: Improve the performance of convolutional neural networks via self distillation. In Proceedings of the IEEE/CVF international conference on computer vision, pages 3713–3722, 2019.

- [26] Giorgio Valentini and Francesco Masulli. Ensembles of learning machines. In Neural Nets: 13th Italian Workshop on Neural Nets, WIRN VIETRI 2002 Vietri sul Mare, Italy, May 30–June 1, 2002 Revised Papers 13, pages 3–20. Springer, 2002.

- [27] Lars Kai Hansen and Peter Salamon. Neural network ensembles. IEEE transactions on pattern analysis and machine intelligence, 12(10):993–1001, 1990.

- [28] Philip Bachman, Ouais Alsharif, and Doina Precup. Learning with pseudo-ensembles. Advances in neural information processing systems, 27, 2014.

- [29] Yeming Wen, Dustin Tran, and Jimmy Ba. Batchensemble: an alternative approach to efficient ensemble and lifelong learning. arXiv preprint arXiv:2002.06715, 2020.

- [30] Jean Kaddour. Stop wasting my time! saving days of imagenet and bert training with latest weight averaging. arXiv preprint arXiv:2209.14981, 2022.

- [31] Sidak Pal Singh and Martin Jaggi. Model fusion via optimal transport. Advances in Neural Information Processing Systems, 33:22045–22055, 2020.

- [32] Mitchell Wortsman, Gabriel Ilharco, Samir Ya Gadre, Rebecca Roelofs, Raphael Gontijo-Lopes, Ari S Morcos, Hongseok Namkoong, Ali Farhadi, Yair Carmon, Simon Kornblith, et al. Model soups: averaging weights of multiple fine-tuned models improves accuracy without increasing inference time. In International conference on machine learning, pages 23965–23998. PMLR, 2022.

- [33] Thomas G Dietterich et al. Ensemble learning. The handbook of brain theory and neural networks, 2(1):110–125, 2002.

- [34] Bartosz Krawczyk, Leandro L Minku, João Gama, Jerzy Stefanowski, and Michał Woźniak. Ensemble learning for data stream analysis: A survey. Information Fusion, 37:132–156, 2017.

- [35] Faliang Huang, Guoqing Xie, and Ruliang Xiao. Research on ensemble learning. In 2009 International Conference on Artificial Intelligence and Computational Intelligence, volume 3, pages 249–252. IEEE, 2009.

- [36] Xiaohan Ding, Xiangyu Zhang, Ningning Ma, Jungong Han, Guiguang Ding, and Jian Sun. Repvgg: Making vgg-style convnets great again. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 13733–13742, 2021.

- [37] Hongling Zheng, Li Shen, Anke Tang, Yong Luo, Han Hu, Bo Du, and Dacheng Tao. Learn from model beyond fine-tuning: A survey. arXiv preprint arXiv:2310.08184, 2023.

- [38] Alex Krizhevsky, Geoffrey Hinton, et al. Learning multiple layers of features from tiny images. 2009.

- [39] Karen Simonyan and Andrew Zisserman. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556, 2014.

- [40] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [41] Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, and Andrew Rabinovich. Going deeper with convolutions. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 1–9, 2015.

- [42] Mingxing Tan and Quoc Le. Efficientnet: Rethinking model scaling for convolutional neural networks. In International conference on machine learning, pages 6105–6114. PMLR, 2019.

- [43] Mark Sandler, Andrew Howard, Menglong Zhu, Andrey Zhmoginov, and Liang-Chieh Chen. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 4510–4520, 2018.

- [44] Sagar Imambi, Kolla Bhanu Prakash, and GR Kanagachidambaresan. Pytorch. Programming with TensorFlow: Solution for Edge Computing Applications, pages 87–104, 2021.