Weakly-supervised Joint Anomaly Detection and Classification

Abstract

Anomalous activities such as robbery, explosions, and accidents require immediate action to prevent loss of human life and property in real-world surveillance systems. Although recent automated surveillance systems are capable of detecting anomalies, they still rely on human effort to categorize the anomalies and take the necessary preventive actions. This is due to the lack of a methodology that performs both anomaly detection and classification in real-world scenarios. A fully automated surveillance system that can both detect and classify the anomalies requiring immediate action therefore needs a joint anomaly detection and classification method. Joint detection and classification of anomalies is challenging because densely annotated videos of the anomalous classes, a crucial factor for training modern deep architectures, are unavailable. Furthermore, producing such annotations through manual effort seems infeasible. Thus, we propose a method that jointly handles anomaly detection and classification in a single framework by adopting a weakly-supervised learning paradigm. In weakly-supervised learning, video-level labels are sufficient for learning, instead of dense temporal annotations. The proposed model is validated on the large-scale, publicly available UCF-Crime dataset, achieving state-of-the-art results.

I Introduction

Video surveillance is becoming increasingly important in daily life. Surveillance cameras can now be found at almost every street corner in cities. The primary goal of surveillance cameras is to increase security and protect us from acts of violence, vandalism, terrorism, and theft. Constantly monitoring these cameras around the clock with human operators to ensure public safety is a laborious and tedious task. Hence, the development of automatic anomaly detection and classification methods for real-world surveillance videos is a pressing need.

Many current surveillance systems have adopted computer-vision-based methods for anomaly detection. However, detecting anomalies in real time is not by itself sufficient to prevent the loss of human life and money. Categorizing the detected anomalies in a timely manner and triggering the right response can reduce the risk substantially. Many recent surveillance systems work in a semi-automatic fashion, where only anomaly detection is done by the system and the categorization of anomalies requires human effort. This is due to the limited robustness of anomaly classification methods in real-world scenarios. Moreover, recent approaches [19, 28] address anomaly detection and classification independently. Hence, substantial improvements in anomaly detection methods lead to only marginal progress in anomaly classification methods. To fully automate surveillance systems, a single model is required that can not only detect an anomaly correctly but also classify it, so that the right action can be taken at the right time. Motivated by this, this paper proposes an end-to-end model that jointly learns detection and classification of anomaly instances in real-world surveillance videos.

The task of joint anomaly detection and classification is challenging because (i) no large corpus of annotated data is available for learning a wide variety of normal and anomalous patterns, and (ii) anomalous activities such as abuse, stealing, shoplifting, shooting, and robbery differ in their temporal extent. The proposed model mitigates the first challenge by adopting a weakly-supervised paradigm, which removes the need for temporally annotated video data, and the second by designing a two-level attention mechanism that distinguishes anomalous activities of differing temporal extent. To learn from weak supervision, Multiple Instance Learning (MIL) [17] with a ranking loss is adopted for anomaly detection. With the ranking loss, the model learns to maximize the margin of separation between anomalous and normal instances. The two-level attention mechanism focuses on the video clips containing anomalies in order to learn a discriminative representation for anomaly detection and classification. The first-level attention mechanism highlights the clips pertaining to anomalies for the task of detection. A second level of attention then links the anomaly detection and classification branches and enables the model to learn a salient video representation for classifying anomalies. The proposed model is validated on the publicly available UCF-Crime dataset, achieving state-of-the-art results. In addition, baseline results are reported for the joint anomaly detection and classification task introduced in this paper.

In summary, the contributions of the paper include:

- A novel model for joint anomaly detection and classification with weak supervision, aiming to fully automate recent surveillance systems. To the best of our knowledge, this is the first work to jointly address anomaly detection and classification in a single model.

- A two-level attention mechanism, applied at two different stages, that yields a discriminative video representation for anomaly detection as well as classification.

- Validation of the proposed model on a large-scale public dataset, achieving state-of-the-art results for classification while jointly performing anomaly detection and classification.

II Related Work

Anomaly detection in surveillance videos is a challenging and active research topic in computer vision. Owing to its wide application in video surveillance and crime scene investigation, several studies [8, 3, 12, 14, 22] using hand-crafted features have been reported in the last decade. Many earlier approaches [18, 21, 2, 4, 6, 11, 15, 25] address anomaly detection with one-class learning methods. These methods learn only from the normal patterns during training, defined for a specific scene. Because it is often difficult to learn feature representations for a wide diversity of normal patterns, these methods are not suitable for general anomaly detection applications.

To overcome the drawback of earlier methods, recent approaches [19, 24, 28, 27] learn feature representations from both normal and anomalous videos. This formulation of anomaly detection as a binary classification problem was introduced with the UCF-Crime dataset [19]. The success of deep learning models [23, 26] in action detection motivated researchers [19, 27] to use 3D convolutional networks (ConvNets) as the visual backbone for segment-wise feature extraction. By segments, we mean short video clips partitioned from an untrimmed video. Training 3D ConvNets like C3D and I3D, pre-trained on huge datasets like Kinetics [10] and Youtube-8M [1], requires strong supervision for learning spatio-temporal patterns in a short video clip, whereas obtaining temporal annotations for anomalous activities is laborious. Thus, [19] addresses anomaly detection under weak supervision, using only video-level annotations for untrimmed anomalous and normal videos. The authors of [19] propose a MIL-based model that maps each video-segment feature vector to an anomaly score. This mapping is learned through a ranking loss that optimizes the separation of the anomalous and normal segments in a video. Building on these studies, the authors of [28] use motion-aware features with a MIL model to improve anomaly detection. In addition, they employ an attention block to incorporate temporal context while detecting anomalies. Besides relying completely on the input features, their attention is applied only at the score level. Thus, the attention block does not modulate the feature maps, leading to non-optimal detection. The authors of [24] model the temporal relation through a Temporal Convolutional Network (TCN) [13] and also propose a complementary loss to maximize the margin of separation between inter- and intra-class instances. However, capturing only long-range temporal dependency is not sufficient for detecting anomalies in video. Thus, for a better understanding of temporal context, we propose a first-level temporal attention mechanism that modulates the input features to the MIL model. Another approach [27] formulates the weakly-supervised problem as supervised learning with iterative refinement of noisy labels. But such methods are costly at inference time and are also data dependent; for instance, they rely heavily on strong motion for detecting anomalies.

Furthermore, none of the earlier approaches [19, 27, 24, 28, 7] address anomaly detection and classification jointly. As a result, there has been no significant progress on the anomaly classification task. Anomaly classification is mostly treated as an independent problem in [19, 28], where the models are trained in an action recognition framework using visual backbones with video-level supervision. But these methods are not optimal, as visual backbones like TCNN [9] and C3D [20] extract video-segment features and classify the whole untrimmed video into normal and anomalous samples through simple aggregation techniques. Thus, the classification of anomalies remains a challenging task.

Inspiration for joint anomaly detection and classification is taken from action detection algorithms like [23, 26], but these algorithms are fully supervised with temporal intervals for each action. In contrast, we propose a joint detection and classification algorithm in a weakly-supervised setting where the temporal intervals of an anomaly are not given. To design such a model, we incorporate a second level of attention between the anomaly detection and classification tasks, which builds an inter-dependency between them. Consequently, we propose a model with a two-level attention mechanism that is jointly trained through global optimization for anomaly detection and classification.

III Anomaly detection and classification Model

The proposed model for weakly-supervised joint anomaly detection and classification, shown in Fig. 1, operates in two branches: the first performs anomaly detection and the second performs anomaly classification. As Fig. 1 shows, the proposed model consists of four stages, which are described below in detail.

III-A Divide Video into Segments

In stage A of Fig. 1, each video is divided into a fixed number (say $T$) of temporal segments. Since the videos are untrimmed and no temporal annotations are provided, the segments obtained from normal videos contain only normal clips, whereas the segments obtained from anomalous videos contain both anomalous and normal clips. We assume that there is at least one anomalous segment among all segments obtained from an untrimmed anomalous video.

III-B Feature Extraction

In stage B, spatio-temporal features are extracted with a 3D convolutional network for each video segment of the anomalous and normal video sequences obtained in stage A. Each video segment at time step $t$ may contain several clips of 64 frames, and features are extracted for each such 64-frame clip. To obtain a fixed-dimensional descriptor per video segment, a max pooling operation is performed across time. Thus, a $D$-dimensional feature vector $x_t$ is extracted for each video segment at time step $t$. A given video sequence with $T$ segments therefore yields an output feature map of dimension $T \times D$, which is used in the subsequent stages.
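The pooling step of stages A and B can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: the helper name `pool_segments`, the evenly spaced segment boundaries, and the handling of videos with fewer clips than segments are all hypothetical choices, and the per-clip backbone features are taken as given.

```python
import numpy as np

def pool_segments(clip_features: np.ndarray, num_segments: int) -> np.ndarray:
    """Max-pool per-clip features into a fixed number of temporal segments.

    clip_features: (num_clips, D) array, one row per 64-frame clip.
    Returns a (num_segments, D) array, one descriptor per segment.
    """
    num_clips, dim = clip_features.shape
    # Assumed: segment boundaries are spread evenly over the clip axis.
    bounds = np.linspace(0, num_clips, num_segments + 1).round().astype(int)
    pooled = np.empty((num_segments, dim), dtype=clip_features.dtype)
    for t in range(num_segments):
        start, end = bounds[t], bounds[t + 1]
        if end <= start:
            # Fewer clips than segments: fall back to the nearest single clip.
            start = min(start, num_clips - 1)
            end = start + 1
        # Max pooling across the clips that fall inside segment t.
        pooled[t] = clip_features[start:end].max(axis=0)
    return pooled
```

Whatever the video length, the result has one row per segment, which is what the later stages rely on.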

III-C Modeling Temporal Information

Long-term temporal dependency is modeled in stage C through a Recurrent Neural Network (RNN) and a first-level temporal attention model. In this stage, the extracted visual features are fed to an RNN, specifically an LSTM, to model the temporal evolution of the anomalies in a video. The first-level temporal attention assigns discriminative attention weights to the temporal segments in which an anomaly is likely to occur. Unlike [28], the attention here modulates the features rather than the decision scores used for detecting anomalies.

To capture the temporal relationship among the segments in a video, a many-to-many LSTM with learnable parameters is used. The inputs to this LSTM are the feature vectors $x_1, \dots, x_T$ of the video with $T$ temporal segments. The LSTM outputs a fixed-dimensional feature vector at each time step. Although the LSTM models the temporal relationship among the video segments, some visual information is lost in this process due to its sigmoid-activated gates. To retain the properties of the spatio-temporal feature map, we therefore use a skip connection. Thus, the output at time step $t$ is computed as $o_t = h_t \oplus x_t$, where $h_t$ is the hidden state of the LSTM and the symbol $\oplus$ represents the concatenation of two vectors.

The LSTM has $K$ hidden neurons with tanh squashing. With $D$-dimensional input features and $T$ temporal segments, the output of the LSTM followed by the concatenation operation therefore has dimension $T \times (K + D)$ for a given video.

First-level Temporal Attention: To highlight the temporally salient segments in the LSTM feature vectors, first-level attention weights are applied to them. Unlike [28], the temporal attention weights are obtained from the same RGB feature map that is used for temporal feature learning.

Figure 2 shows the detailed diagram of the temporal attention model, whose input is the feature map from the 3D CNN described earlier. Each feature vector is first $\ell_2$-normalized and then input to the first-level temporal attention network. In this attention network, instead of treating each segment of the video independently, a global representation of the video in a low-dimensional latent space is obtained using time-distributed perceptrons. This global representation of the video enables the model to consider the temporal context while learning appropriate weights for each temporal segment. For an input with $T$ temporal segments, the corresponding attention scores are computed by

| (1) |

where the two weight matrices and their biases are learnable parameters. To obtain the temporal attention weight at the $t$-th time step, the scores $s_t$ ($1 \le t \le T$) are normalized with a softmax activation. Thus, the temporal attention weight at the $t$-th time step is computed by $a_t = \exp(s_t) / \sum_{k=1}^{T} \exp(s_k)$.

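Finally, the modulated feature vector is computed by adding the LSTM output feature vector to an attention mask, which is the Hadamard product of the attention weights and the LSTM output feature vector. The modulated feature vector for temporal segment $t$ is computed by $\tilde{o}_t = o_t + a_t \odot o_t$, where $o_t$ is the LSTM output feature vector and $a_t$ is the attention weight at time step $t$.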
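The first-level attention described above can be sketched in NumPy as follows. The softmax normalization and the residual modulation follow the text; the exact form of the score network is an assumption (a time-distributed tanh perceptron, flattened into a global representation and mapped to one score per segment), and all parameter names (`w1`, `b1`, `w2`, `b2`) and shapes are hypothetical.

```python
import numpy as np

def first_level_attention(x, o, w1, b1, w2, b2):
    """Sketch of first-level temporal attention.

    x: (T, D) backbone features; o: (T, K) LSTM outputs after the skip connection.
    w1: (D, d), b1: (d,), w2: (T * d, T), b2: (T,)  -- hypothetical shapes.
    Returns (modulated features of shape (T, K), attention weights of shape (T,)).
    """
    # l2-normalise each segment feature before computing attention.
    x = x / (np.linalg.norm(x, axis=1, keepdims=True) + 1e-8)
    # Time-distributed perceptron into a low-dimensional latent space (tanh assumed).
    latent = np.tanh(x @ w1 + b1)                      # (T, d)
    # Flatten into a global video representation, then one score per segment.
    scores = latent.reshape(-1) @ w2 + b2              # (T,)
    # Softmax over time (shifted by the max for numerical stability).
    e = np.exp(scores - scores.max())
    a = e / e.sum()
    # Residual modulation: LSTM output plus attention-masked LSTM output.
    modulated = o + a[:, None] * o
    return modulated, a
```

Because the mask is added to the LSTM output rather than replacing it, segments with low attention weight are attenuated relative to salient ones but never zeroed out.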

III-D Detection and Classification

In stage D, anomaly detection and classification are performed in two separate branches. The anomaly detection branch detects temporal segments containing anomalous patterns, whereas the anomaly classification branch classifies the video into a specific anomaly class according to its anomalous content. An inter-dependency between these two branches is developed through a second-level attention mechanism. A detailed description of each branch is given below.

III-D1 Anomaly Detection

The input to the anomaly detection branch is the modulated feature vector from stage C. To process each temporal segment independently, time-distributed perceptrons are used. As shown in Figure 1, this branch consists of a 3-layer perceptron. The final layer is sigmoid activated and computes an anomaly score for each temporal segment independently.
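A time-distributed 3-layer perceptron of this kind can be sketched as below. Only the layer count and the sigmoid output come from the text; the ReLU hidden activations, the parameter names, and the layer widths are assumptions made for illustration. The second-layer activations are also returned, since the second-level attention described next takes them as input.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def detection_branch(features, w1, b1, w2, b2, w3, b3):
    """Sketch of the anomaly detection branch.

    features: (T, F) modulated segment features.
    w1: (F, n1), w2: (n1, n2), w3: (n2, 1) -- hypothetical shapes.
    Returns (anomaly scores of shape (T,), second-layer latent features (T, n2)).
    """
    # The same weights are applied to every segment (time-distributed layers).
    h1 = np.maximum(0.0, features @ w1 + b1)      # ReLU is an assumption
    h2 = np.maximum(0.0, h1 @ w2 + b2)            # latent features reused by attention
    scores = sigmoid(h2 @ w3 + b3).reshape(-1)    # one score in (0, 1) per segment
    return scores, h2
```

Since every segment is processed with shared weights and no interaction across time, reordering the segments simply reorders the scores.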

Second-level Temporal Attention: This network builds a relationship between the detection and classification problems. Intuitively, segments that produce a high score in the anomaly detection branch are likely to be anomalous, and such segments should contribute more to recognizing the anomaly than other segments. This is the role of the second-level temporal attention network, which highlights the high-scoring feature segments for the anomaly classification branch.

Figure 3 depicts our second-level temporal attention network. We aim to use the features learned for detecting anomalies to provide attention weights for the relevant temporal segments in the anomaly classification task. Hence, a latent feature representation from the detection branch, in our case the output of the 2nd layer of the anomaly detection branch, is input to the second-level attention mechanism. These latent features have dimension $T \times n$, where $n$ ($= 96$ in our model) is the number of neurons in the 2nd layer of the anomaly detection branch.

For the $t$-th time step, the second-level attention score is computed by a perceptron, followed by aggregation of the segment-level features and a final perceptron whose number of neurons equals the number of temporal segments $T$. Thus, the attention score is given by

| (2) |

where the two weight matrices and their biases are trainable parameters. The aggregation is a concatenation operation over time that combines the features of the $T$ time steps.

Finally, to obtain the second-level attention weight corresponding to the $t$-th temporal segment, we apply a sigmoid activation to the pre-computed attention score $s_t$, i.e. $\sigma(s_t) = 1 / (1 + e^{-s_t})$.

III-D2 Anomaly Classification

The objective of anomaly classification is to classify the video by the category of anomaly present in it. The input to the anomaly classification branch is the feature map modulated by the second-level attention weights: at each temporal segment, the feature vector is weighted by the corresponding second-level attention weight.

To classify the video into a specific anomaly class, a single feature representation is obtained by averaging the feature segments over time. This feature is classified using a 2-layer perceptron. The final layer, whose number of neurons equals the number of anomaly classes + 1 (for normal video), is softmax activated to produce a high prediction score for the predicted class.
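The second-level attention and the classification branch can be sketched together as follows. The overall flow (per-segment perceptron, concatenation over time, a $T$-neuron perceptron with sigmoid, temporal averaging, and a 2-layer softmax classifier) follows the description above. The tanh and ReLU activations, the purely multiplicative modulation, and every parameter name and shape are assumptions made for illustration.

```python
import numpy as np

def second_level_weights(det_latent, w_a, b_a, w_s, b_s):
    """det_latent: (T, n) second-layer activations of the detection branch.
    w_a: (n, m), b_a: (m,), w_s: (T * m, T), b_s: (T,)  -- hypothetical shapes.
    Returns second-level attention weights of shape (T,), each in (0, 1)."""
    u = np.tanh(det_latent @ w_a + b_a)        # per-segment perceptron (tanh assumed)
    s = u.reshape(-1) @ w_s + b_s              # concatenate over time, then T-neuron layer
    return 1.0 / (1.0 + np.exp(-s))            # sigmoid activation

def classify_video(features, beta, w_h, b_h, w_o, b_o):
    """features: (T, F) segment features; beta: (T,) second-level weights.
    w_h: (F, H), w_o: (H, C + 1). Returns class probabilities of shape (C + 1,)."""
    modulated = beta[:, None] * features       # assumed: purely multiplicative modulation
    video_repr = modulated.mean(axis=0)        # average the segments over time
    hidden = np.maximum(0.0, video_repr @ w_h + b_h)
    logits = hidden @ w_o + b_o
    e = np.exp(logits - logits.max())          # softmax over C anomaly classes + normal
    return e / e.sum()
```

With UCF-Crime's 13 anomaly categories, the output layer in this sketch would have 14 units.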

III-E Joint training all the networks

Jointly training the two branches (i.e. the anomaly detection and anomaly classification branches) of the proposed model along with the two-level attention network is a challenging task. In the proposed model, the anomaly detection branch follows weakly-supervised learning and is optimized with the Multiple Instance Learning (MIL) ranking loss proposed by [19], whereas the anomaly classification branch is optimized with a categorical cross-entropy loss using video-level labels.

MIL Ranking Loss for detection

Optimizing the anomaly detection branch requires maximizing the margin of separation between normal and anomalous instances, but identifying these instances without temporal annotations is difficult. Based on the fact that an anomalous video contains at least one anomalous instance and a normal video contains none, [19] identifies normal and anomalous instances at the score level. With the prediction scores of the anomalous and normal video instances collected in $S_a$ and $S_n$, a hinge-based ranking loss is applied that treats the maximum score in $S_a$ as the anomalous instance and the maximum score in $S_n$ as the normal instance, and maximizes the margin between them. Along with the hinge-based ranking loss, [19] also proposes temporal smoothing and sparsity constraints, giving:

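$$\mathcal{L}_{MIL} = \max\Big(0,\; 1 - \max_{i} S_a^{i} + \max_{i} S_n^{i}\Big) + \lambda_1 \sum_{i=1}^{N-1} \big(S_a^{i} - S_a^{i+1}\big)^2 + \lambda_2 \sum_{i=1}^{N} S_a^{i} \tag{3}$$

where $N = T \times$ batch size, and $\lambda_1$ and $\lambda_2$ are the weighting factors of the temporal smoothing and sparsity constraints respectively. We employ this ranking loss to separate the temporal segments of a video into anomalous and normal clips.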
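As an illustration, the ranking loss of [19] with its smoothness and sparsity terms can be written for a single pair of videos as below. This is a sketch, not the training code used in the paper: the function and argument names are hypothetical, the loss is shown per video pair rather than over a mini-batch, and the weighting factors are left as required arguments because their values are not assumed here.

```python
import numpy as np

def mil_ranking_loss(scores_anom, scores_norm, lam_smooth, lam_sparse):
    """Hinge-based MIL ranking loss with temporal smoothing and sparsity terms.

    scores_anom: (T,) segment scores of an anomalous video.
    scores_norm: (T,) segment scores of a normal video.
    """
    scores_anom = np.asarray(scores_anom, dtype=float)
    scores_norm = np.asarray(scores_norm, dtype=float)
    # Rank the highest-scoring anomalous segment above the highest-scoring normal one.
    hinge = max(0.0, 1.0 - scores_anom.max() + scores_norm.max())
    # Temporal smoothing: adjacent segments should have similar scores.
    smooth = np.sum((scores_anom[:-1] - scores_anom[1:]) ** 2)
    # Sparsity: anomalies occupy only a few segments, so scores should be mostly low.
    sparse = np.sum(scores_anom)
    return float(hinge + lam_smooth * smooth + lam_sparse * sparse)
```

Only the two maximum scores enter the hinge term, which is what lets the loss work with video-level labels alone.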

Cross Entropy Loss for classification

In the real world, normal videos far outnumber anomalous videos. To handle this class imbalance when computing the classification loss, we weight the loss by a factor equal to the inverse class frequency. We formulate anomaly classification with the categorical cross-entropy loss for $C$ classes of anomaly as:

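$$\mathcal{L}_{cls} = -\sum_{c=1}^{C+1} w_c \, y_c \log \hat{y}_c \tag{4}$$

where $y_c$ and $\hat{y}_c$ are the ground-truth indicator and the predicted probability for class $c$, and $w_c$ is the inverse frequency of class $c$. Note that the loss is computed for $C + 1$ classes, one pertaining to normal videos.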
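A class-weighted cross-entropy of this kind can be sketched as follows. The use of inverse class frequency comes from the text; the particular normalization (the reciprocal of the relative class frequency in the training labels), the averaging over the batch, and the function names are assumptions.

```python
import numpy as np

def inverse_frequency_weights(labels, num_classes):
    """Weight each class by the inverse of its relative frequency in `labels`."""
    counts = np.bincount(labels, minlength=num_classes).astype(float)
    freq = counts / counts.sum()
    weights = np.zeros(num_classes)
    present = counts > 0
    weights[present] = 1.0 / freq[present]     # classes absent from `labels` get weight 0
    return weights

def weighted_cross_entropy(probs, labels, weights):
    """probs: (B, C + 1) softmax outputs; labels: (B,) integer video-level labels."""
    eps = 1e-12                                # guards against log(0)
    picked = probs[np.arange(len(labels)), labels]
    return float(np.mean(-weights[labels] * np.log(picked + eps)))
```

With three normal videos and one anomalous video, for example, the anomalous class receives three times the weight of the normal class, so a mistake on the rare class costs correspondingly more.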

Regularization for attention

We invoke a regularization term for the first-level attention weights that forces the model to pay attention to all the segments in the feature map. This is due to the fact that the attention weight is prone to ignore some segments in the temporal dimension although they contribute in detecting anomalous activities. Hence, we impose a penalty . For the second-level attention, we employ another regularization term to regularize the learned attention weights with the norm to avoid their explosion.

| (5) |

Optimization

In order to train the framework jointly, we need to optimize the total loss. The total loss can be represented as follows:

| (6) |

where and are the weighting factors.

IV Experimental Analysis

Dataset description - We perform our experiments on the UCF-Crime dataset [19], which contains real-world untrimmed surveillance videos. It is currently the largest anomaly detection dataset and has a wide diversity in video length. The videos of a specific class can be long ( 5 hrs) or short ( 1 min), which makes detection more challenging. The dataset contains 1900 videos, of which 950 are normal and the rest come from 13 categories of anomalies. For training and testing, 1610 and 290 videos are used respectively. As we perform anomaly detection and classification jointly, we follow the official anomaly detection train-test protocol provided by [19]. Unlike [19, 28], where classification results are reported on a separate uniform split of the dataset, we report classification results on the same split as for the detection task. Following this protocol, we provide a new baseline for anomaly classification that can be used for follow-up comparisons.

Evaluation Metric - Since we address two different tasks, anomaly detection and classification, two separate metrics are used to evaluate our method. Following earlier work [19], we use the frame-level Receiver Operating Characteristic (ROC) curve and its corresponding Area Under the Curve (AUC) to evaluate anomaly detection performance. For the anomaly classification task, we use mean Average Accuracy (mAA): the average accuracy is computed for each class, and the mean of these per-class accuracies is reported.
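Both metrics are straightforward to compute; a small NumPy sketch is given below. The function names are hypothetical, and the AUC is computed by direct pairwise comparison, which is equivalent to the area under the ROC curve but uses memory quadratic in the number of frames, so it is suitable for illustration rather than full-scale evaluation.

```python
import numpy as np

def mean_average_accuracy(y_true, y_pred):
    """Mean of per-class accuracies over the classes present in y_true."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    per_class = [np.mean(y_pred[y_true == c] == c) for c in np.unique(y_true)]
    return float(np.mean(per_class))

def roc_auc(labels, scores):
    """AUC as the probability that an anomalous frame outscores a normal frame.

    labels: (N,) binary frame labels (1 = anomalous); scores: (N,) anomaly scores.
    Ties count as half. Both classes must be present.
    """
    labels = np.asarray(labels).astype(bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[labels], scores[~labels]
    diff = pos[:, None] - neg[None, :]          # all anomalous-vs-normal pairs
    return float(((diff > 0).sum() + 0.5 * (diff == 0).sum()) / diff.size)
```

Unlike plain accuracy, mAA gives every class equal weight, so a model that labels every video as normal scores poorly even though normal videos make up half of the dataset.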

IV-A Implementation Details

Training

For training, we first extract the spatio-temporal features from the I3D backbone [5] for each temporal segment. We set the temporal segments in these experiments. As input to I3D, we take a center crop of dimension 224 × 224 from the full frame. The features are extracted from the Global Average Pooling layer of I3D which yields a feature of dimension . For temporal modeling of the segments, the number of neurons in the LSTM is set to 1024. We then train our end-to-end trainable joint detection and classification model using the Adam optimizer with a learning rate of 0.0001 and with the loss weighting factors = 0.9 and . We randomly select 30 positive and 30 negative videos as a mini-batch and compute the gradient using reverse-mode automatic differentiation on the computation graph using Theano. The loss is then computed and back-propagated for the whole batch.

Testing

During testing, we found empirically that using the center crop together with the four corner crops gives superior performance. These crops cover the fine details of the anomaly, as in [27].

IV-B Ablation study

Table I shows the importance of each component of our proposed model. The results show a significant improvement in detection and classification accuracy over the I3D base model. As a first baseline, we perform experiments with only the I3D features along with the classification and detection branches. Before adding the two levels of attention, we incorporate an LSTM for temporal modeling in untrimmed videos. As the second row of Table I shows, temporal encoding through the LSTM improves detection AUC by 1.52 points (78.62 → 80.14) and classification accuracy by 5.36 points (14.66 → 20.02). Reversing the order of our proposed model, we first examine the influence of the second-level attention weights on classification by adding the second-level attention network, which introduces an inter-dependence between the anomaly detection and classification branches while keeping the detection branch unchanged. As the third row of Table I shows, there is a significant improvement of 7.66 points (20.02 → 27.68) in classification accuracy and a marginal improvement in detection AUC. This is due to the ability of the second-level attention mechanism to assign high weights to the video segments that contribute most to the classification of the video. Finally, we add the first-level attention network, which highlights the salient video segments containing possible anomalous patterns, to verify the improvement in anomaly detection and to substantiate that a boost in detection performance improves classification as well. The final row of Table I indicates that the first-level attention weights boost detection AUC by 1.73 points (80.39 → 82.12) and also lead to a significant improvement of 6.42 points (27.68 → 34.1) in classification accuracy.

| Components | AUC (%) | mAA (%) |
|---|---|---|
| I3D | 78.62 | 14.66 |
| + LSTM | 80.14 | 20.02 |
| + Second-level attention | 80.39 | 27.68 |
| + First-level attention | 82.12 | 34.1 |

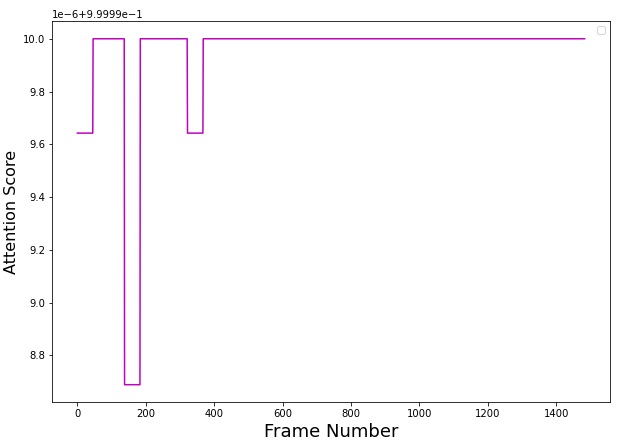

For qualitative analysis, we visualize the two levels of attention weights along with the prediction scores, as illustrated in Figure 4. Interestingly, the proposed model fails to detect the anomaly in "Shoplifting034" and also misclassifies the video. To investigate the "Shoplifting" class further, we examined another test video, "Shoplifting016", which is correctly detected and classified. To find the possible cause of the missed detection in "Shoplifting034", we analyzed the video and found that a lady is stealing a black handbag that is similar to the handbag she is carrying.

This indicates that the attention mechanism, which is derived from appearance-only features, fails to provide reasonable attention weights, possibly because of the similar appearance of the two bags. It implies that our model still needs spatial attention for a better understanding of the scene.

| Methods | AUC (%) | mAA (%) |
|---|---|---|
| SVM Baseline | 50 | - |
| Hasan et al. [8] | 50.6 | - |
| Lu et al. [16] | 65.51 | - |
| Sultani et al. [19] | 75.41 | 13.9 |
| Dubey et al. [7] | 76.67 | - |
| I3D baseline | 78.62 | 14.6 |
| Zhang et al. [24] | 78.66 | - |
| Zhu et al. [28] | 79 | - |
| Zhong et al. [27] | 82.12 | - |
| Proposed Model | 82.12 | 34.1 |

IV-C Comparison with state-of-the-art

We compare our proposed model with recent state-of-the-art anomaly detection models on the UCF-Crime dataset, using two indicators, ROC curves and AUC, as shown in Figure 5 and Table II. As this is the first method to evaluate anomaly classification performance on the detection split of the UCF-Crime dataset, we create baselines following the approach of [19] with C3D [20] and I3D [5] backbone features and a K-NN classifier, as shown in Table II. The authors of [27] achieve anomaly detection results similar to ours, but they do not address the anomaly classification problem. Earlier approaches do not develop inter-dependencies between the anomaly detection and classification tasks, so improvements in detection performance do not influence classification performance. In contrast, we formulate the two tasks jointly through an attention mechanism. Our attention mechanism improves classification performance by a large margin while also achieving state-of-the-art results for detection. With our approach, future improvements in anomaly detection performance should lead to improvements in anomaly classification performance.

V Conclusion

In this work, we proposed a weakly-supervised joint anomaly detection and classification model driven by a two-level attention network. This model achieves state-of-the-art performance for anomaly detection, and when the detection and classification tasks are handled jointly, it achieves a significant improvement in anomaly classification performance. To model temporal context in a weakly-supervised setting, we incorporate two levels of attention at different stages of the model. The experiments show that the attention weights not only modulate the input features for anomaly detection but also help anomaly classification by developing an inter-dependency between the two tasks. The result analysis also shows that our model still struggles to detect anomalies in the presence of real-world challenges such as occlusion, background clutter, and inadequate illumination.

Acknowledgements. This work has been supported by the French government, through the 3IA Côte d’Azur Investments in the Future project managed by the National Research Agency (ANR) with the reference number ANR-19-P3IA-0002. The authors are also grateful to the OPAL infrastructure from Université Côte d’Azur for providing resources and support.

References

- [1] S. Abu-El-Haija, N. Kothari, J. Lee, P. Natsev, G. Toderici, B. Varadarajan, and S. Vijayanarasimhan. Youtube-8m: A large-scale video classification benchmark. arXiv preprint arXiv:1609.08675, 2016.

- [2] A. Adam, E. Rivlin, I. Shimshoni, and D. Reinitz. Robust real-time unusual event detection using multiple fixed-location monitors. IEEE transactions on pattern analysis and machine intelligence, 30(3):555–560, 2008.

- [3] A. Basharat, A. Gritai, and M. Shah. Learning object motion patterns for anomaly detection and improved object detection. In 2008 IEEE Conference on Computer Vision and Pattern Recognition, pages 1–8. IEEE, 2008.

- [4] Y. Benezeth, P.-M. Jodoin, V. Saligrama, and C. Rosenberger. Abnormal events detection based on spatio-temporal co-occurences. In 2009 IEEE Conference on Computer Vision and Pattern Recognition, pages 2458–2465. IEEE, 2009.

- [5] J. Carreira and A. Zisserman. Quo vadis, action recognition? a new model and the kinetics dataset. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), July 2017.

- [6] Y. Cong, J. Yuan, and J. Liu. Abnormal event detection in crowded scenes using sparse representation. Pattern Recognition, 46(7):1851–1864, 2013.

- [7] S. Dubey, A. Boragule, and M. Jeon. 3d resnet with ranking loss function for abnormal activity detection in videos. arXiv preprint arXiv:2002.01132, 2020.

- [8] M. Hasan, J. Choi, J. Neumann, A. K. Roy-Chowdhury, and L. S. Davis. Learning temporal regularity in video sequences. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2016.

- [9] R. Hou, C. Chen, and M. Shah. Tube convolutional neural network (t-cnn) for action detection in videos. In Proceedings of the IEEE international conference on computer vision, pages 5822–5831, 2017.

- [10] W. Kay, J. Carreira, K. Simonyan, B. Zhang, C. Hillier, S. Vijayanarasimhan, F. Viola, T. Green, T. Back, P. Natsev, et al. The kinetics human action video dataset. arXiv preprint arXiv:1705.06950, 2017.

- [11] J. Kim and K. Grauman. Observe locally, infer globally: a space-time mrf for detecting abnormal activities with incremental updates. In 2009 IEEE Conference on Computer Vision and Pattern Recognition, pages 2921–2928. IEEE, 2009.

- [12] L. Kratz and K. Nishino. Anomaly detection in extremely crowded scenes using spatio-temporal motion pattern models. In 2009 IEEE Conference on Computer Vision and Pattern Recognition, pages 1446–1453. IEEE, 2009.

- [13] C. Lea, M. D. Flynn, R. Vidal, A. Reiter, and G. D. Hager. Temporal convolutional networks for action segmentation and detection. In proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 156–165, 2017.

- [14] W. Li, V. Mahadevan, and N. Vasconcelos. Anomaly detection and localization in crowded scenes. IEEE transactions on pattern analysis and machine intelligence, 36(1):18–32, 2013.

- [15] C. Lu, J. Shi, and J. Jia. Abnormal event detection at 150 fps in matlab. In Proceedings of the IEEE international conference on computer vision, pages 2720–2727, 2013.

- [16] C. Lu, J. Shi, and J. Jia. Abnormal event detection at 150 fps in matlab. In Proceedings of the IEEE international conference on computer vision, pages 2720–2727, 2013.

- [17] O. Maron and T. Lozano-Pérez. A framework for multiple-instance learning. In Proceedings of the 1997 Conference on Advances in Neural Information Processing Systems 10, NIPS ’97, page 570–576, Cambridge, MA, USA, 1998. MIT Press.

- [18] B. Ramachandra and M. Jones. Street scene: A new dataset and evaluation protocol for video anomaly detection. In The IEEE Winter Conference on Applications of Computer Vision, pages 2569–2578, 2020.

- [19] W. Sultani, C. Chen, and M. Shah. Real-world anomaly detection in surveillance videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 6479–6488, 2018.

- [20] D. Tran, L. Bourdev, R. Fergus, L. Torresani, and M. Paluri. Learning spatiotemporal features with 3d convolutional networks. In The IEEE International Conference on Computer Vision (ICCV), December 2015.

- [21] J. Wang and A. Cherian. Gods: Generalized one-class discriminative subspaces for anomaly detection. In Proceedings of the IEEE International Conference on Computer Vision, pages 8201–8211, 2019.

- [22] S. Wu, B. E. Moore, and M. Shah. Chaotic invariants of lagrangian particle trajectories for anomaly detection in crowded scenes. In 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pages 2054–2060. IEEE, 2010.

- [23] H. Xu, A. Das, and K. Saenko. R-c3d: Region convolutional 3d network for temporal activity detection. In Proceedings of the IEEE international conference on computer vision, pages 5783–5792, 2017.

- [24] J. Zhang, L. Qing, and J. Miao. Temporal convolutional network with complementary inner bag loss for weakly supervised anomaly detection. In 2019 IEEE International Conference on Image Processing (ICIP), pages 4030–4034. IEEE, 2019.

- [25] B. Zhao, L. Fei-Fei, and E. P. Xing. Online detection of unusual events in videos via dynamic sparse coding. In CVPR 2011, pages 3313–3320, June 2011.

- [26] Y. Zhao, Y. Xiong, L. Wang, Z. Wu, X. Tang, and D. Lin. Temporal action detection with structured segment networks. In Proceedings of the IEEE International Conference on Computer Vision, pages 2914–2923, 2017.

- [27] J.-X. Zhong, N. Li, W. Kong, S. Liu, T. H. Li, and G. Li. Graph convolutional label noise cleaner: Train a plug-and-play action classifier for anomaly detection. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2019.

- [28] Y. Zhu and S. Newsam. Motion-aware feature for improved video anomaly detection. arXiv preprint arXiv:1907.10211, 2019.