

2 School of Computer Science and Engineering, University of New South Wales, Sydney, Australia

3 Paige AI, New York, NY, USA

4 Sydney Pharmacy School, University of Sydney, Sydney, Australia

5 Sydney Nano Institute, University of Sydney, Sydney, Australia

Voxel-wise Cross-Volume Representation Learning for 3D Neuron Reconstruction

Abstract

Automatic 3D neuron reconstruction is critical for analysing the morphology and functionality of neurons in brain circuit activities. However, the performance of existing tracing algorithms is hindered by low image quality. Recently, a series of deep learning based segmentation methods have been proposed to improve the quality of raw 3D optical image stacks by removing noise and restoring neuronal structures from the low-contrast background. Due to the variety of neuron morphology and the lack of large neuron datasets, most current neuron segmentation models rely on adding complex, specially-designed submodules to a base architecture in order to encode better feature representations. Though successful, these submodules place an extra computational burden on inference. Therefore, rather than modifying the base network, we shift our focus to the dataset itself. The encoder-decoder backbone used in most neuron segmentation models attends only to intra-volume voxels to learn structural features of neurons, but neglects the intrinsic semantic features shared by voxels of the same category across different volumes, which are also important for expressive representation learning. Hence, to better utilise the scarce dataset, we propose to explicitly exploit such intrinsic features of voxels through a novel voxel-level cross-volume representation learning paradigm built on an encoder-decoder segmentation model. Our method introduces no extra cost during inference. Evaluated on 42 3D neuron images from the BigNeuron project, our proposed method is shown to improve the learning ability of the original segmentation model and further enhance the reconstruction performance.

Keywords: Deep learning · Neuron reconstruction · 3D image segmentation · 3D optical microscopy

1 Introduction

3D neuron reconstruction is essential for analysing brain circuit activities and understanding how the human brain works [25, 12, 13, 21]. It traces neurons and reconstructs their morphology from 3D light microscopy image stacks so that neuroscientists can investigate the identity and functionality of neurons. Traditional tracing algorithms rely on hand-crafted features to capture neuronal structures, which makes them sensitive to image quality. However, owing to varying imaging conditions, the acquired neuron images suffer from different levels of noise and uneven labelling distribution. To attain better tracing performance for 3D neuron reconstruction, an accurate segmentation method that distinguishes neuron voxels from the surrounding low-contrast background is therefore in high demand. Yet, due to the complexity of neuronal structures and various imaging artefacts, the precise restoration of neuronal voxels remains a challenging task.

A line of deep learning based segmentation models [10, 26, 19, 20, 9, 22, 17] has recently been proposed and has advanced neuron segmentation. Since neuronal structures range from long tree-like branches to blob-shaped somas, [10] adopted inception networks [16] with various kernel sizes to learn neuron representations from an enlarged receptive field. The invention of U-Net [15] gave rise to a family of encoder-decoder architectures that have become a de facto standard in medical image segmentation. In the unsupervised setting, a traditional tracing algorithm is combined with 3D U-Net [6] to progressively learn representative features, and the learned network in turn helps improve the tracing performance [26]. In the fully-supervised setting, modifications have been made to 3D U-Net to extend the receptive field of its kernels. In MKF-Net [19], a multi-scale spatial fusion convolutional block, in which kernels of different sizes are processed in parallel and then fused, replaces some of the encoder blocks of 3D U-Net and achieves better segmentation results. Further, [20] introduces graph-based reasoning to learn longer-range connections for more complete features. The bottom layer of 3D U-Net encodes the richest semantic information but loses spatial information. To alleviate this loss of spatial cues, [9] proposed to replace the bottom layer with a combination of dilated convolutions [3] and spatial pyramid pooling operations [8]. Although these modifications aggregate larger local regions and yield better segmentation results, they also introduce additional overhead at inference time. To avoid such overhead, [22] and [17] use a teacher-student model and GAN-based data augmentation, respectively.
However, these segmentation models focus on learning the local structural information of neurons within a single volume, while the intrinsic semantic information of voxels across different volumes has rarely been explored.

As semantic labels can help obtain better representations for image pattern recognition [24, 23], such intrinsic features are beneficial to visual analysis. Siamese-network-based [1] unsupervised representation learning methods [4, 7, 5] have recently achieved impressive performance on image-level classification tasks by learning better latent representations through the comparison of two augmented views of the same image. Inspired by these works, we propose to encode the intrinsic semantic features into the representation of each voxel by maximising the similarity between two voxels belonging to the same class in a high-dimensional latent space. In our work, we follow the prevalent encoder-decoder architecture as the base segmentation model. Without the need for negative pairs [4] or a momentum encoder [7], we design a class-aware voxel-wise Simple Siamese (SimSiam) [5] learning paradigm in a fully-supervised manner, which maximises the similarity between two voxels with the same semantic meaning and encourages the base encoder to learn a better latent space for voxels of neuron images. To fully utilise the dataset, voxel pairs are not restricted to a single volume but can be sampled across different volumes. After training, only the original base segmentation model is kept, so no extra cost is incurred at inference. Experimental results on 42 3D optical microscopy neuron images from the BigNeuron project [14] show that our proposed framework achieves better segmentation results and further increases the accuracy of the tracing results.

2 Method

2.1 Supervised Encoder-Decoder Neuron Segmentation

We apply a 3D encoder-decoder architecture as the base model to perform neuron segmentation. As shown in Fig. 1, it consists of an encoder path, a decoder path, and skip connections linking the two. Its training is supervised via a binary cross-entropy loss $\mathcal{L}_{bce} = -\frac{1}{|\Omega|}\sum_{v\in\Omega}\big[Y_v\log\hat{Y}_v + (1-Y_v)\log(1-\hat{Y}_v)\big]$, where $Y$ and $\hat{Y}$ represent the ground truth and the predicted neuron segmentation masks, respectively, and $\Omega$ is the set of voxels.
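As a minimal illustration of this loss, the sketch below computes a voxel-averaged binary cross-entropy on flattened masks in plain Python. Averaging over voxels and the clamping constant `eps` are assumptions of this sketch; an actual training pipeline would typically use a framework loss such as PyTorch's `torch.nn.BCELoss` instead.

```python
import math


def bce_loss(y_true, y_pred, eps=1e-7):
    """Voxel-averaged binary cross-entropy between flattened masks.

    y_true: ground-truth labels (0 or 1), one per voxel.
    y_pred: predicted neuron probabilities in [0, 1], one per voxel.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("masks must be non-empty and the same length")
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clamp so log() never sees 0
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


# An uninformative prediction of 0.5 everywhere costs log(2) per voxel.
print(round(bce_loss([1, 0, 0, 1], [0.5, 0.5, 0.5, 0.5]), 4))  # 0.6931
```

Confident predictions that agree with the labels drive the loss towards 0, while confident wrong predictions are penalised heavily.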

$\mathcal{L}_{bce}$ provides semantic cues for each individual voxel, but the relations learnt by the encoder are confined to a local neighbourhood of each voxel. This ignores the correlation among distant voxels belonging to the same semantic class, e.g., voxels in different volumes (cross-volume). To decrease the distance between voxels of the same category in the latent space, we introduce the proposed voxel-wise cross-volume SimSiam representation learning in the next section.

2.2 VCV-RL: Voxel-wise Cross-Volume SimSiam Representation Learning

As illustrated in Fig. 1, for each volume $X \in \mathbb{R}^{D\times H\times W}$, where $D$, $H$, and $W$ denote the depth, height, and width, respectively, we first obtain its corresponding downsized $C$-dimensional latent code $Z$ through the encoder, and then interpolate $Z$ back to the same spatial size as $X$ to obtain a voxel-wise embedding $E \in \mathbb{R}^{C\times D\times H\times W}$. These voxel-wise embeddings are then divided according to the ground truth labels $Y$ to construct two cross-volume point pools, one for the neuron voxels, $\mathcal{P}^{+}$, and one for the background voxels, $\mathcal{P}^{-}$. In other words, each pool contains the latent codes of voxels belonging to the same class from all the volumes within a training batch.
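The pool construction can be sketched as follows. This is a plain-Python illustration, not the authors' code: it assumes each volume's embedding has already been interpolated to the input resolution and flattened into one $C$-dimensional vector per voxel, and the function name `build_point_pools` is hypothetical. Each pool entry keeps its volume index, which makes it explicit that a pool mixes voxels from every volume in the batch.

```python
def build_point_pools(embeddings, labels):
    """Split voxel embeddings from every volume in a batch into two class pools.

    embeddings: list over volumes; each item is a list of per-voxel vectors
                (the interpolated embedding E flattened over D*H*W).
    labels:     list over volumes; each item is a list of 0/1 ground-truth
                labels aligned with the voxels in `embeddings`.
    Returns (neuron_pool, background_pool); each entry is
    (volume_idx, voxel_idx, vector).
    """
    neuron_pool, background_pool = [], []
    for v, (vol_emb, vol_lab) in enumerate(zip(embeddings, labels)):
        if len(vol_emb) != len(vol_lab):
            raise ValueError(f"volume {v}: embedding/label size mismatch")
        for i, (vec, y) in enumerate(zip(vol_emb, vol_lab)):
            target = neuron_pool if y == 1 else background_pool
            target.append((v, i, vec))
    return neuron_pool, background_pool


# Two tiny "volumes" of 3 voxels each, with 2-D embeddings.
emb = [[[0.1, 0.2], [0.9, 0.8], [0.0, 0.1]],
       [[0.7, 0.9], [0.2, 0.0], [0.1, 0.1]]]
lab = [[0, 1, 0], [1, 0, 0]]
pos, neg = build_point_pools(emb, lab)
print(len(pos), len(neg))  # 2 4
```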

To pull voxel embeddings within the same class-aware pool closer together in the latent space, we adopt Siamese networks [1] to compare each input voxel pair; we call the voxel being compared the anchor-voxel and the voxel it is compared against the pair-voxel. Following [5], we use a 3-layer MLP projector as the channel mapping function that raises the latent code dimension from $C$ to $C'$ for both types of voxels. A 2-layer MLP predictor is then employed to match the output of the anchor-voxel to that of the pair-voxel. To prevent the Siamese networks from collapsing to a constant solution, a stop-gradient operation [5] is also adopted, as shown in Fig. 1.
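A shape-level sketch of the projector and predictor follows, written in plain Python with randomly initialised weights so that it runs without a deep learning framework. The layer sizes follow the settings reported in Section 3.1.2 (128 and 512 for the two latent dimensions, hidden sizes 512 and 128); the helper names are hypothetical, and the batch normalisation layers used in SimSiam's MLPs are omitted for brevity. Stop-gradient has no effect in a forward-only sketch; in PyTorch it would correspond to calling `.detach()` on the projector output that serves as the target.

```python
import random


def make_mlp(dims, seed=0):
    """Randomly initialised weights for an MLP with layer sizes `dims`."""
    rng = random.Random(seed)
    layers = []
    for d_in, d_out in zip(dims[:-1], dims[1:]):
        scale = d_in ** -0.5  # keeps activations roughly unit-scale
        weights = [[rng.gauss(0.0, scale) for _ in range(d_in)] for _ in range(d_out)]
        layers.append((weights, [0.0] * d_out))
    return layers


def mlp_forward(layers, x):
    """Apply linear layers with ReLU between them (none after the last layer)."""
    for k, (weights, bias) in enumerate(layers):
        x = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]
        if k < len(layers) - 1:
            x = [max(0.0, v) for v in x]
    return x


C, C_PRIME = 128, 512
projector = make_mlp([C, 512, 512, C_PRIME], seed=1)  # 3 layers, hidden size 512
predictor = make_mlp([C_PRIME, 128, C_PRIME], seed=2)  # 2 layers, bottleneck 128

rng = random.Random(3)
voxel_code = [rng.uniform(-1.0, 1.0) for _ in range(C)]  # one voxel's latent code
z = mlp_forward(projector, voxel_code)  # projection, C -> C'
q = mlp_forward(predictor, z)           # prediction, C' -> C'
print(len(z), len(q))  # 512 512
```

The predictor keeps the $C'$-dimensional output but squeezes it through a narrower hidden layer, mirroring SimSiam's bottleneck design.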

2.2.1 Voxel Similarity.

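Following SimSiam [5], the symmetrised similarity loss between an anchor-voxel $a$ and a pair-voxel $p$ is defined with the cosine operator as:

$$\ell(a, p) = -\frac{1}{2}\cos\big(h(f(a)),\, \mathrm{sg}(f(p))\big) - \frac{1}{2}\cos\big(h(f(p)),\, \mathrm{sg}(f(a))\big), \tag{1}$$

where $f$ is the projector, $h$ is the predictor, $\mathrm{sg}(\cdot)$ denotes the stop-gradient operation, and $\cos(\cdot,\cdot)$ denotes the cosine similarity between two embeddings. The total similarity loss over the set $\mathcal{B}$ of sampled anchor/pair-voxel pairs from both pools is formulated as:

$$\mathcal{L}_{sim} = \frac{1}{|\mathcal{B}|}\sum_{(a,p)\in\mathcal{B}} \ell(a, p). \tag{2}$$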

Together with $\mathcal{L}_{bce}$, the similarity loss is expected to make the encoded feature embedding of each voxel contain the intrinsic semantic cues shared among voxels of the same category. As the point-wise cross-entropy loss is complementary to the representation learning loss [23], we weight the two losses equally. The overall training loss is then formulated as $\mathcal{L} = \mathcal{L}_{bce} + \mathcal{L}_{sim}$.
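Eqs. (1) and (2) can be sketched in plain Python as below, assuming the projector outputs (`z_*`) and predictor outputs (`q_*`) for each voxel have already been computed. In an autograd framework the `z_*` arguments would be detached (stop-gradient); here they are ordinary lists. The function names are illustrative.

```python
import math


def cosine(u, v, eps=1e-8):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / max(norm, eps)


def pair_loss(q_anchor, z_anchor, q_pair, z_pair):
    """Symmetrised negative cosine loss for one anchor/pair voxel (Eq. 1).

    q_*: predictor outputs h(f(.)); z_*: projector outputs f(.), which would
    be wrapped in stop-gradient in a real training loop.
    """
    return -0.5 * cosine(q_anchor, z_pair) - 0.5 * cosine(q_pair, z_anchor)


def similarity_loss(pairs):
    """Mean pair loss over all sampled pairs from both pools (Eq. 2)."""
    if not pairs:
        raise ValueError("need at least one voxel pair")
    return sum(pair_loss(*p) for p in pairs) / len(pairs)


aligned = ([1.0, 0.0], [1.0, 0.0], [1.0, 0.0], [1.0, 0.0])
orthogonal = ([1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0])
print(similarity_loss([aligned]))  # -1.0
```

The loss reaches its minimum of -1 when both cross-predictions point in the same direction as their targets; the overall objective then adds this term to the BCE loss with equal weight.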

2.2.2 Anchor-Voxel Sampling Strategy.

Given that the latent codes of voxels misclassified by the base segmentation model are more important for representation learning [23], we design three different strategies to sample $N$ anchor-voxels from each point pool $\mathcal{P}$:

- Random sampling ($\mathcal{S}_{rand}$): randomly sample anchor-voxels from the whole point pool $\mathcal{P}$;
- Purely hard sampling ($\mathcal{S}_{hard}$): randomly sample anchor-voxels from the subset of $\mathcal{P}$ consisting of voxels whose predictions are wrong;
- Hybrid combination sampling ($\mathcal{S}_{hyb}$): a portion of the anchor-voxels are randomly sampled from the whole point pool $\mathcal{P}$, while the remaining anchor-voxels are randomly sampled from the subset used in $\mathcal{S}_{hard}$.
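The three anchor-voxel sampling strategies can be sketched as below. The pool is represented by voxel identifiers and the misclassified voxels by a set; the hybrid split ratio `hard_fraction` is a hypothetical parameter, since its value is not stated here. If the hard subset is too small, this sketch returns fewer hard anchors, and in the hybrid case it draws the remainder from the whole pool, so a voxel may occasionally appear twice.

```python
import random


def sample_anchors(pool, wrong, n, strategy, rng, hard_fraction=0.5):
    """Return up to n anchor-voxel ids from `pool`.

    pool:     list of voxel ids in one class pool.
    wrong:    set of voxel ids whose prediction by the base model is wrong.
    strategy: "random", "hard" or "hybrid".
    hard_fraction: share of anchors drawn from the hard subset in "hybrid"
                   (hypothetical value; not given in the text).
    """
    hard = [v for v in pool if v in wrong]
    if strategy == "random":
        return rng.sample(pool, min(n, len(pool)))
    if strategy == "hard":
        return rng.sample(hard, min(n, len(hard)))
    if strategy == "hybrid":
        n_hard = min(int(n * hard_fraction), len(hard))
        hard_part = rng.sample(hard, n_hard)
        # The rest come from the whole pool and may overlap with hard_part.
        rest = rng.sample(pool, min(n - n_hard, len(pool)))
        return rest + hard_part
    raise ValueError(f"unknown strategy: {strategy}")


rng = random.Random(0)
pool = list(range(100))
wrong = set(range(10))  # voxels 0..9 are misclassified
print(len(sample_anchors(pool, wrong, 20, "hard", rng)))    # 10
print(len(sample_anchors(pool, wrong, 20, "hybrid", rng)))  # 20
```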

2.2.3 Pair-Voxel Sampling Strategy.

For each selected anchor-voxel, a pair-voxel is sampled from the same point pool, and the two are fed together into the Siamese networks. Apart from the candidate points in a point pool $\mathcal{P}$, we propose virtual points $dsc_{\mathcal{P}^{+}}$ and $dsc_{\mathcal{P}^{-}}$ as pool descriptors for $\mathcal{P}^{+}$ and $\mathcal{P}^{-}$, respectively, each representing an aggregation of the semantic features of its class. We design two ways of computing such a virtual point:

- Relaxed: average pooling over the entire point pool;
- Strict: average pooling over the subset of correctly classified points in the point pool.
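A minimal sketch of the relaxed and strict descriptors as element-wise means of pool embeddings is given below. The `correct` flags (whether each voxel is classified correctly by the current prediction) and the function name are assumptions of this illustration; returning `None` for an empty strict subset is one possible choice, and a real implementation would need some fallback in that case.

```python
def pool_descriptor(vectors, correct, mode="strict"):
    """Average-pool embeddings of one class pool into a virtual point.

    vectors: list of equal-length embedding vectors in the pool.
    correct: list of booleans, True if that voxel is classified correctly.
    mode:    "relaxed" averages the whole pool; "strict" only correct voxels.
    """
    if mode == "relaxed":
        chosen = vectors
    elif mode == "strict":
        chosen = [v for v, ok in zip(vectors, correct) if ok]
    else:
        raise ValueError(f"unknown mode: {mode}")
    if not chosen:
        return None  # nothing to average; caller decides on a fallback
    dim = len(chosen[0])
    return [sum(v[k] for v in chosen) / len(chosen) for k in range(dim)]


vecs = [[0.0, 0.0], [2.0, 2.0], [10.0, 10.0]]
ok = [True, True, False]  # the last voxel is misclassified
print(pool_descriptor(vecs, ok, "relaxed"))  # [4.0, 4.0]
print(pool_descriptor(vecs, ok, "strict"))   # [1.0, 1.0]
```

The example shows why the strict variant can be more robust: a single misclassified outlier shifts the relaxed mean considerably but is excluded from the strict one.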

To avoid outliers and stabilise the learning, inspired by Momentum2Teacher [11], we propose to use a momentum update mechanism to keep the semantic information of past pool descriptors. Formally, the pool descriptor for each pool is defined as:

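$$dsc_{\mathcal{P}}^{t} = (1-\lambda_t)\, dsc_{\mathcal{P}}^{t-1} + \lambda_t\, \widetilde{dsc}_{\mathcal{P}}^{t}, \tag{3}$$

where $t$ is the current iteration and $\widetilde{dsc}_{\mathcal{P}}^{t}$ is the descriptor computed (relaxed or strict) from the current pool $\mathcal{P}$. $\lambda_t$ is the momentum coefficient, which decreases from its initial value $\lambda_0$ to 0 following the cosine scheduling policy [11], $\lambda_t = \frac{\lambda_0}{2}\big(1+\cos\frac{\pi t}{T}\big)$, where $T$ is the total number of iterations.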
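The momentum update and its cosine schedule can be sketched as below, under the reading of Eq. (3) in which $\lambda_t$ weights the descriptor computed from the current pool, so that past descriptors are retained more strongly as $\lambda_t$ decays to 0. This weighting, the initial value `lambda0`, and the function names are assumptions of this sketch.

```python
import math


def cosine_lambda(t, total_iters, lambda0):
    """Coefficient decaying from lambda0 at t = 0 to 0 at t = total_iters."""
    return 0.5 * lambda0 * (1.0 + math.cos(math.pi * t / total_iters))


def update_descriptor(previous, current, lam):
    """Blend the previous descriptor with the one computed at this iteration.

    Assumes lam weights the current descriptor (see lead-in); the first call
    (previous is None) simply adopts the current descriptor.
    """
    if previous is None:
        return list(current)
    return [(1.0 - lam) * p + lam * c for p, c in zip(previous, current)]


print(cosine_lambda(0, 100, 1.0), cosine_lambda(100, 100, 1.0))  # 1.0 0.0
print(update_descriptor([0.0, 0.0], [2.0, 4.0], 0.25))  # [0.5, 1.0]
```

Late in training, $\lambda_t$ is close to 0, so a single noisy batch barely moves the stored descriptor, which is the stabilising effect the momentum update aims for.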

3 Experiments and Results

3.1 Dataset and Implementation Details

3.1.1 Dataset.

Our study on 3D neuron reconstruction was conducted on the publicly available 42-volume Janelia dataset developed for the BigNeuron project [14], which was divided into 35, 3, and 4 volumes for training, validation, and testing, respectively. We applied random horizontal and vertical flipping, rotation, and cropping as data augmentation to enlarge our training set with 3D patches of size . Given that neuron voxels are dramatically outnumbered by background voxels, to ensure that foreground neuron voxels are present, we re-sample a patch until foreground voxels make up more than 0.1% of it.
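The foreground check used when cropping patches can be sketched as a rejection loop over random crop origins. Labels are a nested `[z][y][x]` list of 0/1 values here, the patch size is left as a parameter, and `max_tries` is a hypothetical safeguard that is not mentioned in the text.

```python
import random


def crop_origin_with_foreground(labels, patch_size, rng, min_fg_ratio=0.001, max_tries=1000):
    """Draw random crop origins until the patch is > min_fg_ratio foreground.

    labels:     nested list labels[z][y][x] of 0/1 ground-truth values.
    patch_size: (pd, ph, pw), no larger than the volume.
    Returns the (z, y, x) origin of the accepted patch.
    """
    depth, height, width = len(labels), len(labels[0]), len(labels[0][0])
    pd, ph, pw = patch_size
    n_voxels = pd * ph * pw
    for _ in range(max_tries):
        z = rng.randint(0, depth - pd)  # randint is inclusive on both ends
        y = rng.randint(0, height - ph)
        x = rng.randint(0, width - pw)
        fg = sum(labels[z + i][y + j][x + k]
                 for i in range(pd) for j in range(ph) for k in range(pw))
        if fg / n_voxels > min_fg_ratio:
            return z, y, x
    raise RuntimeError("no patch with enough foreground found")


# 8x8x8 volume whose only neuron voxel sits in the far corner.
vol = [[[0] * 8 for _ in range(8)] for _ in range(8)]
vol[7][7][7] = 1
print(crop_origin_with_foreground(vol, (4, 4, 4), random.Random(0), max_tries=5000))  # (4, 4, 4)
```

In the example, (4, 4, 4) is the only origin whose 4×4×4 patch contains the single neuron voxel, so every other draw is rejected.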

3.1.2 Network Setting and Implementation.

All the models involved in the experiments were implemented in PyTorch 1.5 and trained from scratch for 222 epochs. A model checkpoint was saved whenever it reached a better F1-score on the validation set. We use Adam as the optimizer with a learning rate of  and a weight decay of . The batch size is set to 4. We choose $\mathcal{S}_{hyb}$ with $N = 512$ anchor-voxels and the strict pool descriptor with the momentum update (MoUpdate) mechanism. The hidden layer dimension is 512 and 128 for the projector and predictor, respectively. $C$ and $C'$ are set to 128 and 512, respectively. For a fair comparison, all the 3D U-Net based segmentation models, including Li2019 [9] and MKF-Net [19], were implemented with feature dimensions of 16, 32, 64, and 128 for the respective layers from top to bottom. For 3D LinkNet [2], we additionally replaced the head and final blocks with convolutional layers that do not change the spatial size, so that the feature maps are downsampled three times, as in the other models.

3.2 Results and Analysis

To quantitatively compare the different methods for 3D neuron segmentation, we report F1, precision, and recall as evaluation metrics; they measure the similarity between the predicted and ground truth segmentations. Following [9], we employ three additional metrics for the reconstruction results, namely entire structure average (ESA), different structure average (DSA), and percentage of different structures (PDS), which measure the discrepancy between the traced neurons and the manually annotated neurons.
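For reference, voxel-wise precision, recall, and F1 on binarised masks can be computed as below. Treating the metrics as voxel-wise counts on thresholded predictions is an assumption of this sketch; the text does not state how they are aggregated across test volumes.

```python
def segmentation_metrics(y_true, y_pred):
    """Voxel-wise (F1, precision, recall) for flattened binary masks."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return f1, precision, recall


# 2 true positives, 1 false positive, 1 false negative: all three equal 2/3.
print(segmentation_metrics([1, 1, 1, 0, 0], [1, 1, 0, 1, 0]))
```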

3.2.1 Segmentation Results.

The quantitative segmentation results are presented in Table 1. Our proposed method achieves the best F1 score among all the compared state-of-the-art segmentation methods and improves the F1 score of the base 3D U-Net by 2.28 percentage points (from 51.26% to 53.54%). Notably, our model introduces no additional cost during inference, as it keeps the same number of parameters as the base model. The proposed VCV-RL module can easily be applied to the encoder output of other encoder-decoder architectures: when applied to 3D LinkNet, VCV-RL improves the F1 score by almost 2 percentage points.

Table 1: Quantitative segmentation results.

| Method | F1 (%) | Precision (%) | Recall (%) | #Params |
|---|---|---|---|---|
| Li2019 [9] | 52.69±12.49 | 46.50±12.70 | 61.06±11.50 | 2.3M |
| 3D MKF-Net [19] | 52.31±12.14 | 46.51±11.82 | 59.88±12.32 | 1.5M |
| 3D U-Net [6] | 51.26±12.16 | 45.26±11.98 | 59.35±12.28 | 1.4M |
| + VCV-RL (proposed) | 53.54±11.39 | 46.68±11.58 | 63.02±10.38 | 1.4M |
| 3D LinkNet [2] | 50.74±12.67 | 42.93±11.41 | 62.22±14.50 | 2.1M |
| + VCV-RL | 52.66±12.14 | 46.10±11.92 | 61.55±11.87 | 2.1M |

Table 2: Quantitative neuron reconstruction results with APP2 [25] (lower is better for ESA, DSA, and PDS).

| Method | ESA | DSA | PDS | F1 (%) | Prec. (%) | Recall (%) |
|---|---|---|---|---|---|---|
| APP2 [25] | 3.62±0.76 | 6.80±1.24 | 0.34±0.03 | 55.84±12.96 | 57.48±13.77 | 64.94±23.10 |
| + U-Net [6] | 1.59±0.19 | 3.66±0.81 | 0.22±0.02 | 64.92±15.33 | 86.23±7.76 | 56.30±19.39 |
| + MKF-Net [19] | 1.62±0.21 | 3.85±0.76 | 0.22±0.03 | 65.15±14.93 | 89.03±8.74 | 55.07±18.13 |
| + Proposed | 1.52±0.21 | 3.48±0.29 | 0.21±0.02 | 66.17±14.39 | 87.25±7.41 | 56.87±17.92 |

3.2.2 Neuron Reconstruction Results.

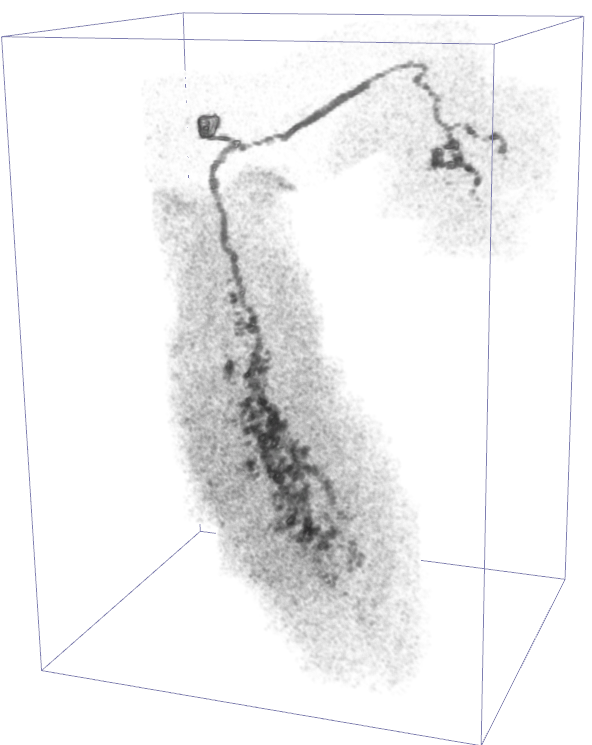

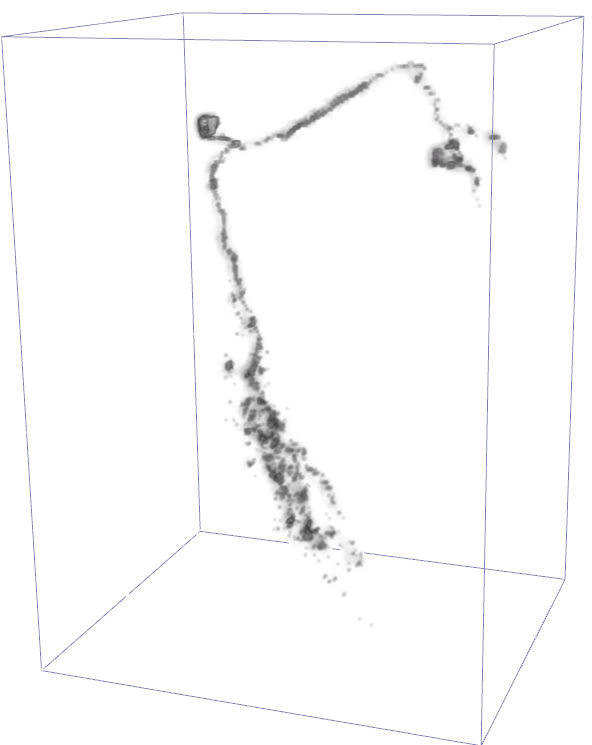

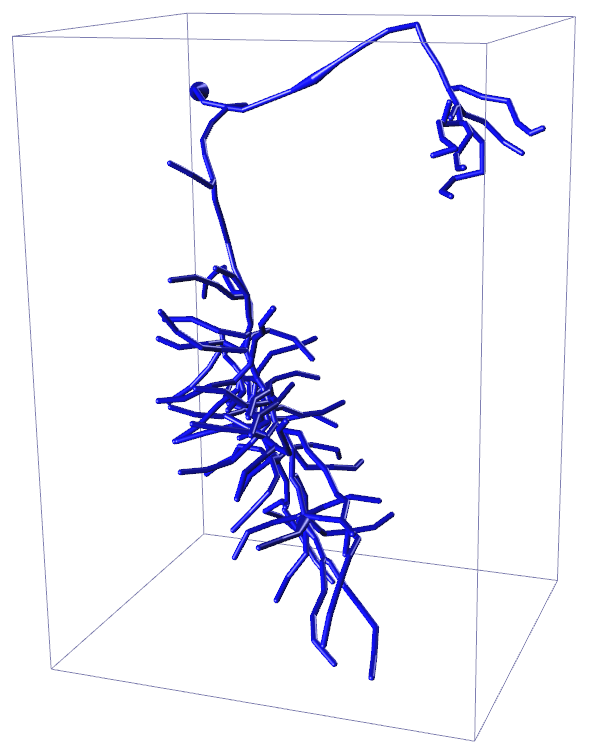

To validate whether the proposed segmentation method can facilitate tracing, we choose the state-of-the-art tracing algorithm APP2 [25] as the tracer. As in [10], we run the tracer on the input volume adjusted using the probability map predicted by each segmentation model. As presented in Table 2, without extra overhead during inference, our proposed method combined with the tracer achieves the best tracing results on all metrics except precision among the deep learning based reconstruction methods. Plain APP2 reaches a high recall because it over-traces the neuron structures, which tends to include more true neuron points at the cost of precision. The first row of Fig. 2 displays the enhanced segmentation results after the image adjustment operation proposed in [10], and the second row presents the tracing results obtained by applying APP2 [25] to the images segmented by the different methods. Our proposed method combined with APP2 achieves competitive tracing results. We note that joint training of segmentation and tracing may further improve 3D neuron reconstruction performance [18].

3.2.3 Ablation Study.

As presented in Table 4, models B, C, and D.1 reach higher F1 scores than model A, which demonstrates the effect of representation learning in improving the learning ability of the base U-Net model; D.1 performs best among them, showing the superiority of the hybrid anchor sampling strategy. Models D.2 and D.3 outperform D.1, suggesting that the proposed pool descriptor helps improve the generality of the latent space, and the strict way of computing $dsc_{\mathcal{P}}$ (D.3) further enhances the segmentation performance. We also removed the momentum update of $dsc_{\mathcal{P}}$; the lower F1 score of model E (53.09% vs. 53.54% for D.3) demonstrates the importance of storing past information. In addition, we conducted experiments on the proposed method with different numbers of anchor-voxels $N$. The results are presented in Fig. 4; among the tested values, the F1-score is largest when $N$ is 512.

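Table 4: Ablation study on the anchor sampling strategy, pool descriptor, and momentum update.

| ID | Method | F1 (%) |
|---|---|---|
| A | U-Net | 51.26 |
| B | + $\mathcal{S}_{rand}$ | 53.19 |
| C | + $\mathcal{S}_{hard}$ | 52.69 |
| D | + $\mathcal{S}_{hyb}$ | |
| D.1 | w/o $dsc_{\mathcal{P}}$ | 53.20 |
| D.2 | w/ $dsc_{\mathcal{P}}$ (relaxed) | 53.34 |
| D.3 | w/ $dsc_{\mathcal{P}}$ (strict) | 53.54 |
| E | D.3 w/o MoUpdate | 53.09 |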

4 Conclusions

In this paper, we propose a novel voxel-wise cross-volume representation learning paradigm to facilitate the challenging task of 3D neuron reconstruction. Segmenting 3D neuron images helps improve the performance of tracing algorithms but is challenging due to various imaging artefacts and complex neuron morphology. Recent deep learning based methods rely on specially-designed structures to make full use of the scarce 3D data; though effective, these structures also introduce extra computational cost. To make better use of the small dataset without sacrificing inference efficiency, we propose a novel training paradigm with a SimSiam representation learning module that explicitly encodes semantic cues into the latent code of each voxel by comparing pairs of voxels belonging to the same semantic category across different volumes. Compared to other methods, our method learns a better latent space for the base model without modifying any part of it, and it shows superior performance in both the segmentation and reconstruction tasks.

References

- [1] Bromley, J., Guyon, I., LeCun, Y., Säckinger, E., Shah, R.: Signature verification using a “siamese” time delay neural network. Advances in Neural Information Processing Systems (NeurIPS) pp. 737–744 (1994)

- [2] Chaurasia, A., Culurciello, E.: Linknet: Exploiting encoder representations for efficient semantic segmentation. In: 2017 IEEE Visual Communications and Image Processing (VCIP). pp. 1–4. IEEE (2017)

- [3] Chen, L.C., Zhu, Y., Papandreou, G., Schroff, F., Adam, H.: Encoder-decoder with atrous separable convolution for semantic image segmentation. In: Proceedings of the European Conference on Computer Vision (ECCV). pp. 801–818 (2018)

- [4] Chen, T., Kornblith, S., Norouzi, M., Hinton, G.: A simple framework for contrastive learning of visual representations. In: International Conference on Machine Learning (ICML). pp. 1597–1607. PMLR (2020)

- [5] Chen, X., He, K.: Exploring simple siamese representation learning. arXiv preprint arXiv:2011.10566 (2020)

- [6] Çiçek, Ö., Abdulkadir, A., Lienkamp, S.S., Brox, T., Ronneberger, O.: 3D U-Net: learning dense volumetric segmentation from sparse annotation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI). pp. 424–432. Springer (2016)

- [7] Grill, J.B., Strub, F., Altché, F., Tallec, C., Richemond, P.H., Buchatskaya, E., Doersch, C., Pires, B.A., Guo, Z.D., Azar, M.G., et al.: Bootstrap your own latent: A new approach to self-supervised learning. arXiv preprint arXiv:2006.07733 (2020)

- [8] He, K., Zhang, X., Ren, S., Sun, J.: Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI) 37(9), 1904–1916 (2015)

- [9] Li, Q., Shen, L.: 3D neuron reconstruction in tangled neuronal image with deep networks. IEEE Transactions on Medical Imaging (TMI) 39(2), 425–435 (2019)

- [10] Li, R., Zeng, T., Peng, H., Ji, S.: Deep learning segmentation of optical microscopy images improves 3-D neuron reconstruction. IEEE Transactions on Medical Imaging (TMI) 36(7), 1533–1541 (2017)

- [11] Li, Z., Liu, S., Sun, J.: Momentum2teacher: Momentum teacher with momentum statistics for self-supervised learning. arXiv preprint arXiv:2101.07525 (2021)

- [12] Liu, S., Zhang, D., Liu, S., Feng, D., Peng, H., Cai, W.: Rivulet: 3D neuron morphology tracing with iterative back-tracking. Neuroinformatics 14(4), 387–401 (2016)

- [13] Liu, S., Zhang, D., Song, Y., Peng, H., Cai, W.: Automated 3-D neuron tracing with precise branch erasing and confidence controlled back tracking. IEEE Transactions on Medical Imaging (TMI) 37(11), 2441–2452 (2018)

- [14] Peng, H., Hawrylycz, M., Roskams, J., Hill, S., Spruston, N., Meijering, E., Ascoli, G.A.: BigNeuron: large-scale 3D neuron reconstruction from optical microscopy images. Neuron 87(2), 252–256 (2015)

- [15] Ronneberger, O., Fischer, P., Brox, T.: U-Net: Convolutional networks for biomedical image segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI). pp. 234–241. Springer (2015)

- [16] Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., Erhan, D., Vanhoucke, V., Rabinovich, A.: Going deeper with convolutions. In: Proceedings of the IEEE conference on Computer Vision and Pattern Recognition (CVPR). pp. 1–9 (2015)

- [17] Tang, Z., Zhang, D., Song, Y., Wang, H., Liu, D., Zhang, C., Liu, S., Peng, H., Cai, W.: 3D conditional adversarial learning for synthesizing microscopic neuron image using skeleton-to-neuron translation. In: 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI). pp. 1775–1779. IEEE (2020)

- [18] Tregidgo, H.F., Casamitjana, A., Latimer, C.S., Kilgore, M.D., Robinson, E., Blackburn, E., Van Leemput, K., Fischl, B., Dalca, A.V., Mac Donald, C.L., et al.: 3D reconstruction and segmentation of dissection photographs for MRI-free neuropathology. In: International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI). pp. 204–214. Springer (2020)

- [19] Wang, H., Zhang, D., Song, Y., Liu, S., Huang, H., Chen, M., Peng, H., Cai, W.: Multiscale kernels for enhanced U-shaped network to improve 3D neuron tracing. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops. pp. 1105–1113 (2019)

- [20] Wang, H., Song, Y., Zhang, C., Yu, J., Liu, S., Peng, H., Cai, W.: Single neuron segmentation using graph-based global reasoning with auxiliary skeleton loss from 3D optical microscope images. In: 2021 IEEE 18th International Symposium on Biomedical Imaging (ISBI). pp. 934–938. IEEE (2021)

- [21] Wang, H., Zhang, D., Song, Y., Liu, S., Gao, R., Peng, H., Cai, W.: Memory and time efficient 3D neuron morphology tracing in large-scale images. In: 2018 Digital Image Computing: Techniques and Applications (DICTA). pp. 1–8. IEEE (2018)

- [22] Wang, H., Zhang, D., Song, Y., Liu, S., Wang, Y., Feng, D., Peng, H., Cai, W.: Segmenting neuronal structure in 3D optical microscope images via knowledge distillation with teacher-student network. In: 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI). pp. 228–231. IEEE (2019)

- [23] Wang, W., Zhou, T., Yu, F., Dai, J., Konukoglu, E., Van Gool, L.: Exploring cross-image pixel contrast for semantic segmentation. arXiv preprint arXiv:2101.11939 (2021)

- [24] Wei, L., Xie, L., He, J., Chang, J., Zhang, X., Zhou, W., Li, H., Tian, Q.: Can semantic labels assist self-supervised visual representation learning? arXiv preprint arXiv:2011.08621 (2020)

- [25] Xiao, H., Peng, H.: APP2: automatic tracing of 3D neuron morphology based on hierarchical pruning of a gray-weighted image distance-tree. Bioinformatics 29(11), 1448–1454 (2013)

- [26] Zhao, J., Chen, X., Xiong, Z., Liu, D., Zeng, J., Zhang, Y., Zha, Z.J., Bi, G., Wu, F.: Progressive learning for neuronal population reconstruction from optical microscopy images. In: International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI). pp. 750–759. Springer (2019)