Visual Tactile Sensor Based Force Estimation for Position-Force Teleoperation

Abstract

Vision-based tactile sensors have gained extensive attention in the robotics community. These sensors are expected to extract contact information, i.e., haptic information, during in-hand manipulation, which makes them well suited to haptic feedback applications. In this paper, we propose a contact force estimation method using the vision-based tactile sensor DIGIT [1] and apply it to a position-force teleoperation architecture for force feedback. The force estimation is carried out by (1) building a depth map to measure the surface deformation of the DIGIT gel, and (2) applying a regression algorithm to the estimated depth data and ground-truth force data to obtain the depth-force relationship. The experiment is performed on a grasping force feedback system with a haptic device as the leader robot and a parallel robot gripper as the follower robot, where the DIGIT sensor is attached to the tip of the robot gripper to estimate the contact force. The preliminary results demonstrate the capability of this low-cost vision-based sensor for force feedback applications.

I Introduction

Tactile sensors have gained extensive attention in the robotics community over the past years. Compared with conventional force/torque sensors (FT sensors), which focus on precise measurement of contact forces, tactile sensors are inspired by human cutaneous perception and offer advantages such as capturing surface deformation, detecting texture distribution, and detecting incipient slip [2]. Such multi-modal perception makes tactile sensors suitable candidates for enhancing the dexterity and performance of robotic hands [3].

Tactile sensor designs vary, each utilizing different sensing modalities. For example, the BioTac® uses a pressure transducer to detect vibrations as small as a few nanometers for contact sensing [4], and the soft magnetic skin developed by Yan et al. detects normal and shear forces through changes in magnetic flux density [5]. On the other hand, vision-based tactile sensors provide cost-efficient yet promising solutions for tactile sensing [6]. In vision-based tactile sensors, contact information is extracted by applying computer vision algorithms to image sequences streamed from one or more cameras mounted inside the sensor, which makes these sensors accessible and easy to implement [7].

The applications of vision-based tactile sensors can be summarized as complementing robot tactile perception and enhancing the autonomous manipulation skills of robotic grippers toward human-like in-hand dexterity. In [8], a library of tactile skills was developed using force, slip, and visual information from a FingerVision sensor. Similarly, in [9], an end-to-end approach for tactile-based insertion with deep probabilistic ensembles and model predictive control was evaluated in contact-rich assembly tasks. Moreover, Yuan et al. estimated object hardness through a deep-learning-based analysis of surface deformation [10].

However, the potential of vision-based tactile sensors should not be limited to autonomous in-hand robotic applications. Because the design of tactile sensors is deeply inspired by the intrinsic human capability of haptic sensation, these sensors are a natural match for haptic applications, i.e., teleoperation with force feedback. Teleoperation with force feedback is also known as bilateral control, in which a leader device and a follower device are dynamically coupled to reflect physical interactions from the follower to the leader and vice versa [11]. Research on bilateral control has a long history, dating back to the idea first proposed by Goertz in the 1940s [12]. Over the course of its development, bilateral control has been applied experimentally to space robotics, telesurgery, and hazardous material handling [13].

Bilateral control with force feedback is a direct control strategy that aims for ideal, seamless kinematic and dynamic coupling of the leader and follower robots. It can be categorized into two control architectures: (1) the position-position architecture and (2) the position-force architecture [14]. In the simplest case, the position-position architecture implements proportional-derivative (PD) controllers on both the leader and follower sides so that each robot tracks the other's position. In this scheme, users feel the follower robot's driving forces as force feedback. However, this structure presents not only the surface contact force but also the inertial and other dynamic forces that drive the follower robot in free space. The impact of these extra forces becomes severe when the leader and follower have substantially different dynamic properties.
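The free-space effect described above can be illustrated with a minimal 1-D sketch. It assumes a point-mass follower with no contact, identical PD gains on both sides (so the leader receives the negative of the follower's drive force), and a hypothetical hand trajectory and gains; the function name is also hypothetical, and this is not a model of the hardware used in this paper.

```python
import math


def peak_feedback_position_position(follower_mass, kp=200.0, kd=20.0,
                                    amp=0.01, freq_hz=1.0, dt=1e-3, steps=3000):
    """Peak force felt at the leader during free-space motion (no contact).

    Hypothetical model: the leader follows the hand along
    x_l(t) = amp * (1 - cos(w t)), starting at rest; the follower is a point
    mass driven by a PD controller toward x_l. With identical PD gains on both
    sides, the leader receives the negative of the follower's drive force.
    """
    w = 2.0 * math.pi * freq_hz
    x_f, v_f = 0.0, 0.0
    peak = 0.0
    for k in range(steps):
        t = k * dt
        x_l = amp * (1.0 - math.cos(w * t))
        v_l = amp * w * math.sin(w * t)
        u = kp * (x_l - x_f) + kd * (v_l - v_f)  # follower drive force
        v_f += (u / follower_mass) * dt          # free space: m * a = u
        x_f += v_f * dt                          # semi-implicit Euler step
        peak = max(peak, abs(u))                 # leader feels -u
    return peak
```

Calling the function with a heavier follower mass returns a larger peak force even though nothing is touched, which is the inertial component that the position-force architecture is intended to remove from the feedback.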

To mitigate these undesired forces during free-space movement, a position-force architecture is applied. In this architecture, a force sensor is usually attached to the tip of the follower robot to measure the contact force for feedback. The architecture feeds back only the external forces acting between the follower robot and the environment, which gives the user a clear force perception. To enhance the stability of the position-force architecture, feedback scaling or passivity theory is generally used [15].

In this paper, we adopt position-force bilateral control for force feedback in robotic gripper teleoperation. The aim of this paper is to show the capability of vision-based tactile sensors for haptic (force) feedback applications. We use a vision-based tactile sensor for normal force estimation and integrate it with a position-force bilateral control system. The contributions of this paper are summarized as follows:

1. A polynomial regression-based force estimation is performed to map depth values measured by the vision-based tactile sensor DIGIT [1] to force values.
2. Position-force bilateral control that utilizes the force estimation from DIGIT is implemented for force feedback in a teleoperated grasping system.
3. The force feedback performance of the proposed system is evaluated through preliminary experiments on rigid object contact and a teleoperated in-hand pivoting task.

II Position-Force Architecture and Force Estimation by Vision-Based Tactile Sensor

In this section, the implemented position-force architecture is described and the details of the force estimation algorithm are given.

II-A Position-Force Architecture

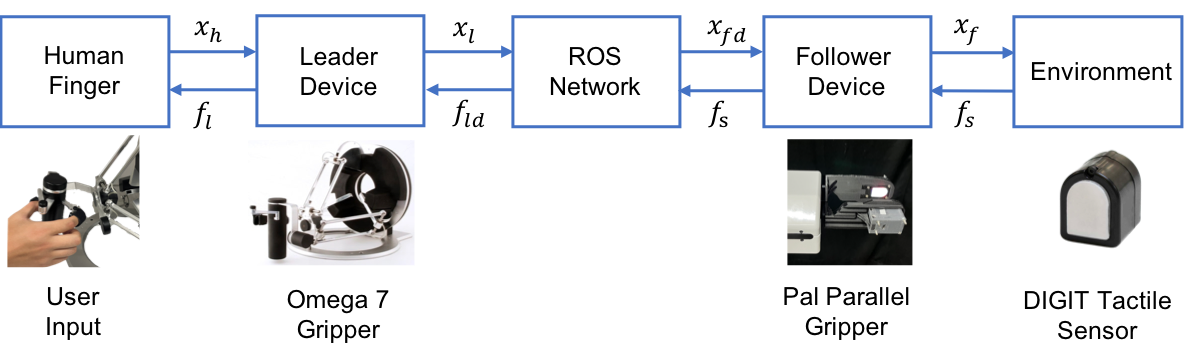

Fig. 1 shows the position-force architecture, in which the tactile sensor DIGIT replaces the conventional FT sensor, and $x_h$, $x_l$, $x_f^{d}$, $x_f$, $f_l$, $f_l^{d}$, and $f_s$ denote the human command position, leader position, follower desired position, follower position, leader force, leader desired force, and force measured by the DIGIT sensor, respectively. Here the leader robot is the gripper of the Omega 7 haptic device (Force Dimension) and the follower robot is a parallel robotic gripper by PAL Robotics. Data communication is handled through the ROS framework. The control law of the position-force architecture is given as follows,

$$x_f^{d} = x_l, \qquad f_l^{d} = f_s, \tag{1}$$

where the leader robot is force controlled to track the force $f_s$ measured by the DIGIT sensor, and the leader position $x_l$ is fed to the position controller of the follower robotic gripper as the reference position $x_f^{d}$. Through this position control, the gripping force can be modulated by teleoperation.
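The control law can be exercised in a minimal 1-D sketch, assuming the follower gripper is a point mass under PD position control whose reference is the leader position, the leader's desired force is simply the sensed contact force, and the grasped object is a linear spring. All gains, the mass, the stiffness, the contact location, and the function name are hypothetical and are not taken from the experimental setup.

```python
import numpy as np


def simulate_position_force(x_leader, dt=1e-3, m_f=0.5, kp=400.0, kd=40.0,
                            x_contact=0.01, k_env=2000.0):
    """Hypothetical 1-D gripper-closing model of a position-force loop.

    x_leader: leader gripper positions (m) sampled every dt seconds.
    Returns the follower positions and the leader's desired force (N).
    """
    x_f, v_f = 0.0, 0.0
    x_follower = np.zeros(len(x_leader))
    f_leader_desired = np.zeros(len(x_leader))
    for k, x_l in enumerate(x_leader):
        # Contact force a tactile sensor would report (linear spring, assumed).
        f_s = k_env * max(0.0, x_f - x_contact)
        # Leader: force-controlled to render only the sensed contact force.
        f_leader_desired[k] = f_s
        # Follower: PD position control with the leader position as reference.
        u = kp * (x_l - x_f) - kd * v_f
        v_f += (u - f_s) / m_f * dt  # the object pushes back with f_s
        x_f += v_f * dt
        x_follower[k] = x_f
    return x_follower, f_leader_desired
```

Feeding a slow closing ramp such as `np.linspace(0.0, 0.02, 3000)` yields zero leader force while the follower moves in free space and a positive force once it presses into the object, independent of the follower's mass during free motion.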

II-B Force Estimation by Polynomial Regression and Depth Map from DIGIT

This section explains how the depth maps are extracted from DIGIT RGB images. The depth map is defined as the deformation level of each pixel on the gel surface. The process is shown in Fig. 2. To obtain the depth map, we first train a three-layer Multi-Layer Perceptron (MLP) that learns a mapping from RGBXY values (pixel color and image coordinates) to the surface normal components $N_x$, $N_y$, and $N_z$. We use the Adam optimizer with a learning rate of 0.001 to minimize the mean squared error (MSE) loss, with a dropout rate of 0.05. To train the MLP model, we collect 40 RGB images by pressing a steel ball with a diameter of 6 mm onto the gel; in theory, the hemispherical contact shape provides surface normals in all directions [6]. The surface normals are related to the surface gradients by

$$G_x = -\frac{N_x}{N_z}, \qquad G_y = -\frac{N_y}{N_z}, \tag{2}$$

where $G_x$ and $G_y$ represent the image gradients in the X and Y directions, respectively, under the convention that the surface normal is proportional to $(-G_x, -G_y, 1)$. By applying this equation, we obtain the surface gradients from the surface normal images. Given the surface gradients, a fast Poisson solver based on the Discrete Sine Transform (DST) finally yields the depth map of the gel surface, similar to prior work by Wang et al. [16] and Sodhi et al. [17].
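The normal-estimation step can be sketched in PyTorch as follows. This is a minimal sketch, not the authors' code: the hidden width (64), ReLU activations, dropout placement, full-batch training, and the names `NormalMLP` and `train_normal_mlp` are hypothetical. Only the RGBXY input, the three-component normal output, the three layers, Adam with a learning rate of 0.001, the MSE loss, and the 0.05 dropout rate come from the text.

```python
import torch
from torch import nn


class NormalMLP(nn.Module):
    """Per-pixel RGBXY (5 values) -> surface normal (Nx, Ny, Nz)."""

    def __init__(self, hidden=64, p_drop=0.05):  # hidden width is assumed
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(5, hidden), nn.ReLU(), nn.Dropout(p_drop),
            nn.Linear(hidden, hidden), nn.ReLU(), nn.Dropout(p_drop),
            nn.Linear(hidden, 3),
        )

    def forward(self, rgbxy):
        return self.net(rgbxy)


def train_normal_mlp(rgbxy, normals, epochs=200, lr=1e-3):
    """Fit the MLP on (pixel, normal) pairs with Adam and MSE loss.

    Returns the trained model (in eval mode, dropout off) and the loss history.
    """
    model = NormalMLP()
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    loss_fn = nn.MSELoss()
    losses = []
    model.train()
    for _ in range(epochs):
        optimizer.zero_grad()
        loss = loss_fn(model(rgbxy), normals)
        loss.backward()
        optimizer.step()
        losses.append(loss.item())
    model.eval()  # disable dropout for inference
    return model, losses
```

In practice the training pairs would come from the labeled ball-press images, and mini-batches would likely replace full-batch updates for image-sized data.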

Once the depth map is generated, we select its maximum depth value, which corresponds to the maximum surface deformation of the DIGIT gel during contact. Then, by building a regression model that maps the gel deformation to the ground-truth contact force, we can estimate the contact force in real time. This is analogous to using Young’s modulus to estimate an object's reaction force from its level of surface deformation.
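The gradient conversion, the DST-based Poisson integration, and the maximum-depth selection can be sketched with NumPy and SciPy as follows. This is a minimal sketch under stated assumptions, not the implementation used in this work or in [16], [17]: it assumes the normal is proportional to $(-G_x, -G_y, 1)$, zero depth outside the image (Dirichlet boundary), and gradients interpreted as forward differences; the function names are hypothetical.

```python
import numpy as np
from scipy.fft import dstn, idstn


def normals_to_gradients(nx, ny, nz, eps=1e-6):
    """Surface gradients from normals, assuming n is proportional to (-Gx, -Gy, 1)."""
    nz = np.where(np.abs(nz) < eps, eps, nz)  # guard against division by ~0
    return -nx / nz, -ny / nz


def poisson_reconstruct(gx, gy):
    """Integrate gradients into a depth map with a DST-I Poisson solver.

    gx varies along columns (X), gy along rows (Y). Assumes zero depth outside
    the image, so the backward-difference divergence of the forward-difference
    gradients equals the 5-point Laplacian of the depth map.
    """
    m, n = gx.shape
    # Divergence of the gradient field via backward differences.
    f = np.diff(gx, axis=1, prepend=0.0) + np.diff(gy, axis=0, prepend=0.0)
    # Eigenvalues of the 1-D second-difference operator with zero boundaries.
    lam_y = 2.0 * np.cos(np.pi * np.arange(1, m + 1) / (m + 1)) - 2.0
    lam_x = 2.0 * np.cos(np.pi * np.arange(1, n + 1) / (n + 1)) - 2.0
    denom = lam_y[:, None] + lam_x[None, :]  # strictly negative, never zero
    # DST-I diagonalizes the Laplacian: transform, divide, transform back.
    return idstn(dstn(f, type=1) / denom, type=1)


def max_depth(depth_map):
    """Maximum deformation, used as the regression input."""
    return float(np.max(depth_map))
```

The DST suits this problem because its sine basis vanishes at the borders, matching the assumption that the gel is undeformed at the image edges.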

To acquire the mapping between depth values and force values, we programmed the Omega 7 haptic device to press downward (Z direction) with a constant displacement at each press. A rigid cylindrical probe with a 5 mm radius and a curved end surface facing down is attached to the tip of the device and is in direct contact with the DIGIT gel. The force value is read from the Omega 7 haptic device, and the depth value from the DIGIT sensor. The orange dots in Fig. 3 show the collected force data with respect to the depth value when pressing the DIGIT gel. We then fit the data by least-squares regression with a third-degree polynomial, defined as

$$\hat{F} = a_3 d^3 + a_2 d^2 + a_1 d + a_0, \tag{3}$$

where $d$ is the maximum depth value, $\hat{F}$ is the estimated force, and $a_i$ ($i = 0, \dots, 3$) denote the coefficients. Once the coefficients $a_i$ are obtained, they can be used for real-time force estimation. The polynomial fit is shown by the blue line in Fig. 3; its coefficient of determination is $R^2 = 0.9987$. The estimated force is then published as a ROS topic at 30 Hz, matching the camera frame rate (frames per second, FPS).
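The fitting step and its use at run time can be sketched with NumPy as follows. The function names are hypothetical, the data in the usage check are synthetic and only exercise the code, and `np.polyfit` returns the coefficients from the cubic term down to the constant term.

```python
import numpy as np


def fit_depth_force(depth, force, degree=3):
    """Least-squares polynomial fit of force against maximum depth.

    Returns the coefficients (highest power first, as np.polyfit does) and R^2.
    """
    depth = np.asarray(depth, dtype=float)
    force = np.asarray(force, dtype=float)
    coeffs = np.polyfit(depth, force, degree)
    pred = np.polyval(coeffs, depth)
    ss_res = np.sum((force - pred) ** 2)
    ss_tot = np.sum((force - force.mean()) ** 2)
    return coeffs, 1.0 - ss_res / ss_tot


def estimate_force(depth_map, coeffs):
    """Evaluate the fitted polynomial at the maximum value of a depth map."""
    return float(np.polyval(coeffs, np.max(depth_map)))
```

At run time, `estimate_force` would be called once per camera frame on the reconstructed depth map, so the estimate inherits the 30 Hz frame rate.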

III Experiments and Results

In this section, we evaluate the preliminary performance of the force feedback with a vision-based tactile sensor by conducting rigid object contact and in-hand pivoting experiments.

III-A Rigid object contact and force feedback

A rigid object contact experiment is conducted to show the force feedback capability. In the experiment, an operator controls the leader device to grasp a rigid object, once without force feedback and once with force feedback. We treat the user’s hand position as the desired position. As Fig. 4 shows, the operator first moves freely; then, in region (a), the operator contacts a rigid object without any force feedback, which leads to a large deviation from the desired position. In region (b), the operator contacts the rigid object with force feedback from the DIGIT sensor. In region (b), the mean desired position is 0.0121 m and the mean actual position is 0.0127 m, a difference of 0.0006 m. The operator could maintain surface contact without large positional deviation and could feel the rigid object’s location. During free-space movement, owing to the nature of the position-force architecture, the operator feels no follower dynamics.

III-B In-hand pivoting

A preliminary experiment of teleoperated in-hand pivoting is carried out to evaluate the performance of the proposed force feedback architecture. In-hand pivoting is an important dexterous manipulation skill in robotics. Through in-hand pivoting, a robotic grasping system can perform repositioning tasks and hence compensate for environmental uncertainties and imprecise motion execution [18]. Humans rely on haptic sensations to perform this dexterous task, and in this preliminary experiment, we evaluate the haptic feedback performance of the proposed architecture for such a teleoperated dexterous task.

In this experiment, as Fig. 5 shows, the task is to pivot a grasped cylindrical marker from a horizontal position (Fig. 5a) until it aligns with the bottom-left corner of the DIGIT sensor (Fig. 5c). The friction on the grasped object can be modulated by adjusting the distance between the gripper fingers: a wider finger distance reduces friction, so the object pivots under gravity. Throughout the experiment, visual feedback from a fixed point of view is provided (Fig. 5). We evaluated two conditions: Visual + Force feedback and Visual feedback. Three subjects participated in the experiment, and each subject pivoted the object 5 times per condition. Task completion time and success rate were measured.

The experimental results are shown in Fig. 6. The blue and red box plots represent the task completion time for the Visual + Force feedback condition and the Visual feedback condition, respectively. The median completion time is 13.75 s for the Visual + Force feedback condition and 15.37 s for the Visual feedback condition. The task success rates are 86.7% and 40.0%, respectively. These results indicate that, with force feedback, subjects complete the pivoting task with a higher success rate and a slightly shorter median completion time. However, under Visual + Force feedback, some trials required longer completion times. This may be because subjects needed more time to adjust the grasp according to the perceived force, which requires further analysis. The results also suggest that the proposed position-force system can display force feedback to operators, and they demonstrate the potential of the tactile sensor DIGIT for such force feedback applications.
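With three subjects each performing five trials per condition, every condition comprises 15 trials, so the reported success rates correspond to the following trial counts:

$$\frac{13}{15} \approx 86.7\,\%, \qquad \frac{6}{15} = 40.0\,\%.$$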

IV Conclusions and Future Works

Vision-based tactile sensors that aim to enhance the dexterity of robotic manipulation systems have gained extensive attention in the community. While the nature of these sensors makes them good candidates for in-hand manipulation tasks, they can also be applied to haptic feedback in teleoperation. This paper proposes a force feedback methodology that integrates a position-force architecture with the vision-based tactile sensor DIGIT for contact force measurement. The force measurement is performed by learning a depth estimation model and then building a polynomial regression model that maps depth to force. In a rigid object contact experiment, the operator could feel the force feedback and maintain surface contact. In the preliminary in-hand pivoting experiment, subjects using force feedback completed the pivoting task with a shorter median time and a higher success rate. These preliminary results indicate that the vision-based tactile sensor DIGIT can be integrated with a teleoperation system to provide force feedback. In future work, it would be interesting to further enhance the sensor performance for multi-point contact. Moreover, the integration of haptic feedback with other sensing modalities, such as shear force estimation and surface slip prediction [19], can be explored.

References

- [1] M. Lambeta, P.-W. Chou, S. Tian, B. Yang, B. Maloon, V. R. Most, D. Stroud, R. Santos, A. Byagowi, G. Kammerer, D. Jayaraman, and R. Calandra, “DIGIT: A novel design for a low-cost compact high-resolution tactile sensor with application to in-hand manipulation,” IEEE Robotics and Automation Letters (RA-L), vol. 5, no. 3, pp. 3838–3845, 2020.

- [2] S. Dong, D. Ma, E. Donlon, and A. Rodriguez, “Maintaining grasps within slipping bounds by monitoring incipient slip,” in 2019 International Conference on Robotics and Automation (ICRA). IEEE, 2019, pp. 3818–3824.

- [3] J. A. Fishel and G. E. Loeb, “Sensing tactile microvibrations with the BioTac—comparison with human sensitivity,” in 2012 4th IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob). IEEE, 2012, pp. 1122–1127.

- [4] J. Reinecke, A. Dietrich, F. Schmidt, and M. Chalon, “Experimental comparison of slip detection strategies by tactile sensing with the BioTac® on the DLR hand arm system,” in 2014 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2014, pp. 2742–2748.

- [5] Y. Yan, Z. Hu, Z. Yang, W. Yuan, C. Song, J. Pan, and Y. Shen, “Soft magnetic skin for super-resolution tactile sensing with force self-decoupling,” Science Robotics, vol. 6, no. 51, p. eabc8801, 2021.

- [6] W. Yuan, S. Dong, and E. H. Adelson, “GelSight: High-resolution robot tactile sensors for estimating geometry and force,” Sensors, vol. 17, no. 12, p. 2762, 2017.

- [7] N. F. Lepora, Y. Lin, B. Money-Coomes, and J. Lloyd, “DigiTac: A DIGIT-TacTip hybrid tactile sensor for comparing low-cost high-resolution robot touch,” IEEE Robotics and Automation Letters, 2022.

- [8] B. Belousov, A. Sadybakasov, B. Wibranek, F. Veiga, O. Tessmann, and J. Peters, “Building a library of tactile skills based on FingerVision,” in 2019 IEEE-RAS 19th International Conference on Humanoid Robots (Humanoids). IEEE, 2019, pp. 717–722.

- [9] B. Belousov, B. Wibranek, J. Schneider, T. Schneider, G. Chalvatzaki, J. Peters, and O. Tessmann, “Robotic architectural assembly with tactile skills: Simulation and optimization,” Automation in Construction, vol. 133, p. 104006, 2022.

- [10] W. Yuan, C. Zhu, A. Owens, M. A. Srinivasan, and E. H. Adelson, “Shape-independent hardness estimation using deep learning and a GelSight tactile sensor,” in 2017 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2017, pp. 951–958.

- [11] R. J. Anderson and M. W. Spong, “Bilateral control of teleoperators with time delay,” IEEE Transactions on Automatic Control, vol. 34, no. 5, pp. 494–501, 1989.

- [12] R. C. Goertz, “Manipulator systems developed at ANL,” in Proceedings, The 12th Conference on Remote Systems Technology, 1964, pp. 117–136.

- [13] P. F. Hokayem and M. W. Spong, “Bilateral teleoperation: An historical survey,” Automatica, vol. 42, no. 12, pp. 2035–2057, 2006.

- [14] B. Siciliano, O. Khatib, and T. Kröger, Springer Handbook of Robotics. Springer, 2008, vol. 200.

- [15] Y. Zhu, T. Aoyama, and Y. Hasegawa, “Enhancing the transparency by onomatopoeia for passivity-based time-delayed teleoperation,” IEEE Robotics and Automation Letters, vol. 5, no. 2, pp. 2981–2986, 2020.

- [16] S. Wang, Y. She, B. Romero, and E. Adelson, “GelSight Wedge: Measuring high-resolution 3D contact geometry with a compact robot finger,” in 2021 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2021, pp. 6468–6475.

- [17] P. Sodhi, M. Kaess, M. Mukadam, and S. Anderson, “PatchGraph: In-hand tactile tracking with learned surface normals,” in 2022 International Conference on Robotics and Automation (ICRA). IEEE, 2022, pp. 2164–2170.

- [18] S. Cruciani and C. Smith, “In-hand manipulation using three-stages open loop pivoting,” in 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2017, pp. 1244–1251.

- [19] F. Veiga, J. Peters, and T. Hermans, “Grip stabilization of novel objects using slip prediction,” IEEE Transactions on Haptics, vol. 11, no. 4, pp. 531–542, 2018.