Visual Spatial Attention and Proprioceptive Data-Driven Reinforcement Learning for Robust Peg-in-Hole Task Under Variable Conditions

Abstract

Anchor-bolt insertion is a peg-in-hole task performed in the construction field for holes in concrete. Efforts have been made to automate this task, but the variable lighting and hole surface conditions, as well as the requirements for short setup and task execution time make the automation challenging. In this study, we introduce a vision and proprioceptive data-driven robot control model for this task that is robust to challenging lighting and hole surface conditions. This model consists of a spatial attention point network (SAP) and a deep reinforcement learning (DRL) policy that are trained jointly end-to-end to control the robot. The model is trained in an offline manner, with a sample-efficient framework designed to reduce training time and minimize the reality gap when transferring the model to the physical world. Through evaluations with an industrial robot performing the task in 12 unknown holes, starting from 16 different initial positions, and under three different lighting conditions (two with misleading shadows), we demonstrate that SAP can generate relevant attention points of the image even in challenging lighting conditions. We also show that the proposed model enables task execution with higher success rate and shorter task completion time than various baselines. Due to the proposed model’s high effectiveness even in severe lighting, initial positions, and hole conditions, and the offline training framework’s high sample-efficiency and short training time, this approach can be easily applied to construction.

Index Terms:

Robotics and automation in construction; reinforcement learning; deep learning for visual perception.I Introduction

Anchor-bolt insertion is a peg-in-hole task for holes in concrete that is extensively performed in the construction field [1]. Since it is repetitive, dirty, and dangerous [2], efforts have been made to automate this task with industrial robots [2, 3]. However, automating anchor-bolt insertion is particularly difficult in this field because the robot must be able to adapt to variable conditions such as drastic changes in lighting caused by natural light or various light sources, and different hole surface conditions due to the brittle nature of concrete. Furthermore, the robot should be setup (e.g., trained) and be able to execute the task in short time in order to reach a lead time close to the time taken by humans.

To this end, in our prior study [2], we proposed a peg-in-hole strategy that used a deep neural network (DNN) trained via deep reinforcement learning (DRL) to generate discrete robot motions that adapted to different hole conditions. This method used force, torque, and robot displacement as input to avoid visual-detection problems caused by harsh lighting conditions. While the method was effective for finding holes, it required long time to be executed.

The use of visual feedback with image feature detection algorithms is a promising approach to reduce the task completion time because it enables direct estimation of the hole position to effectively guide the robot. However, traditional algorithms such as template matching [4] struggle to perform well in poorly lit conditions. Subsequent approaches [5, 6, 7] involving deep convolutional neural networks (CNNs) have been used successfully in challenging lighting conditions, but they require large amounts of data to learn to be invariant to irrelevant image features [6].

Another strategy to reduce task completion time is to extensively train DRL algorithms in simulation environments and transfer the trained model to the real world [8]. Some peg-in-hole approaches [9, 10] have accomplished this transfer by reducing the reality gap using techniques such as domain randomization [11]. However, in the present study, it is difficult to model the friction of concrete to be close enough to reality to use these approaches successfully. This is because the concrete’s brittleness makes the wall surface to be inconsistent, with areas of high friction coefficient that prevent the peg from sliding, or make it slide unsteadily that results in excessively noisy forces and torques.

Recent deep learning approaches integrate spatial attention, autoencoders, and robot motion generation networks [12, 13, 14, 15] to learn to generate motion based on image features as well as task-dependent image features. These approaches have proven to be sample-efficient and generalize well to different image conditions. Using these approaches as our basis, we propose a sample-efficient vision-based robot control model for performing peg-in-hole tasks in concrete holes (Fig. 1). The model is robust to challenging lighting conditions (with misleading shadows), is trained in an offline manner and in a short time, and contributes to the improvement of the task success rate and completion time.

Our main contributions are as follows: (1) modifications of a spatial attention point network (SAP), introduced in prior work [14] to extract important points (referred to as “attention points”) of images even without a recurrent layer; (2) the introduction of a model that consists of SAP integrated to a DRL policy, and a method to jointly train them end-to-end to teach SAP to generate task-specific attention points, and the DRL policy for generating robot motion based on the attention points; (3) an evaluation of SAP and the proposed model for images with misleading shadows; and (4) an offline framework to train models in short time, with minimal data extraction from the environment and a minimal reality gap when transferring the models to the actual field.

I-A Related work

I-A1 Peg-in-hole with DRL

DRL has been commonly applied to real robots to perform peg-in-hole tasks without visual input [16, 9]. These “blind” approaches typically use force, torque, and position feedback to train DRL policies (e.g., deep q-network (DQN) [16], deep deterministic policy gradients (DDPG) [17], and soft actor critic (SAC) [9]) to control the robot by outputting position/orientation subgoals, force/moment subgoals, or parameters for robot compliance control [9]. Although these approaches are effective, they rely on simulators and sliding the peg around the hole to search for it, which is difficult for holes in concrete due to the concrete’s brittleness and high friction coefficient.

DRL has also been applied to peg-in-hole tasks with visual feedback [18, 17, 19]. However, these studies rely on cameras fixed apart from the robot end effector and require prior motion information from classical controllers, humans demonstrations [17, 19], or extensive simulation [18] to train safe and sample-efficient visuomotor policies. In addition, they did not fully evaluate the robustness of their approaches in challenging lighting variations.

In contrast, in this study we move the robot in a hopping manner to avoid the friction against concrete, and we avoid simulators by extracting small amounts of proprioceptive data and images (from a camera fixed to the end effector) from the field for offline training. We show that a model trained with this motion and training method can enable a robot to accomplish a peg-in-hole task in harsh lighting conditions.

I-A2 Attention for motion generation

Several models have been proposed that use visual attention to extract image features to provide highly relevant input for motion controllers [19, 13, 14, 15, 20]. Levine et al. [19, 13] proposed the use of CNNs and soft argmax to obtain position coordinates in an image for image feature extraction. They showed that jointly training the image feature extraction model and a reinforcement learning (RL) policy end-to-end results in higher success rates than training them separately. Their method enabled a robot to accomplish multiple tasks (e.g., coat hanging and cube fitting) but it required at least 15000 images, 3 to 4 hours of training time, and does not generalize to drastically different settings, especially when visual distractors occlude the target objects.

Ichiwara et al. [14, 15] proposed a method of jointly training a point-based attention mechanism that predicts attention points based on image reconstruction and a long-short term memory (LSTM) layer end-to-end to generate motion. Their method enabled a robot to perform simple pick and place tasks and a zipper closing task with variable backgrounds, light brightness, and distractor objects. Their method requires small amounts of data, but it also requires expert human demonstrations (for imitation learning) and high computation cost due to the LSTM layer (up to 3 h of training in 2 GPUs of 16GB of memory). Moreover, it was not tested with misleading shadows.

In this study, we propose a visual attention-based model which has low computational cost and is trained with small amounts of data acquired automatically from the environment (no expert demonstration). By evaluating this model in an experimental setup that replicates a construction site, which includes misleading shadows and variable target surface conditions, we demonstrate that it is able to effectively conduct a peg-in-hole task even in these conditions.

II Methods

II-A Hole search and peg insertion strategy

The hole search method used is shown in Fig. 2. It consists of the following steps: (a) moving the peg to a hole position roughly estimated by a camera; (b) attempting to insert the peg by moving it toward the hole; (c) separating the peg from the wall and moving it to the next search position if the peg touches the wall; (d) attempting to insert the peg again; and repeating steps (c) and (d) until (e) the hole is found. By separating the peg from the wall, the unwanted effect of the high friction coefficient of the concrete surface can be avoided when moving the peg between hole search positions. In anchor-bolt insertion, after the hole is found, the peg (i.e., anchor bolt) is completely inserted into the wall by hammering, which overcomes the peg “wedging” and “jamming” problems considered in classic studies [21].

II-B Motion generation model

II-B1 Model inputs and outputs

To control the robot to move toward the hole, we propose the model shown in Fig. 1. The model, namely the reinforcement learning (RL) agent, is given a set of six sequential observations of the environment (time t-5 to t). This window of observations is used because: (1) fully connected layers are used to generate sequential robot motion instead of LSTM as in [15], (2) it reduces computational cost as the LSTM’s back propagation through time is avoided, (3) it is effective for inferring temporal statistics [22], and (4) it simplifies data storage and random batch sampling from the replay buffer to train the agent. Each observation consists of an image , forces and torques , robot displacement , and the previous robot action . includes the forces in the x, y, and z axes and the moments in the x and y axes. is used because it increases as the peg approaches the hole since the brittle borders of holes in concrete often become chamfered. Previous actions are used because stochastic policies should also condition their action on the action history [23], and the previous actions prevent the robot from looping back to the same place [3]. After the model is input with this window of observations, it predicts the images of the next time and the actions to be taken by the robot (RL environment). The actions are peg movements in one of eight directions ( = up, down, left, right, or diagonals) and one of three discrete step sizes ( = 1, 2, or 3 mm), for a total of 24 different action options.

II-B2 Model structure

The proposed model consists of a spatial attention point network (SAP) integrated to a deep RL policy (Fig. 1). Both SAP and the RL policy are trained end-to-end; thus, we call it SAP-RL-E. Unlike prior work [14], we refer to SAP as the network that extracts the attention points. Thus, we removed the LSTM layer of the prior model so SAP could work alone (Fig. 3) or with the RL policy (Fig. 1). SAP consists of an attention point extraction block (hereinafter, attention block), an image feature extraction block, and an image prediction block. The attention block uses a CNN-based encoder block and soft argmax to extract the positional coordinates of maximum activation from an input image [13], which are the attention points . The image feature extraction block also extracts features of the input image with an encoder block and inputs it into the image prediction block in a skip connection manner. The attention points from the current time that are output by the attention block are input to the RL policy together with , , and (hereinafter referred to as proprioceptive data). The RL policy predicts the attention points of the next time as well as the action to be taken by the robot . The image prediction block uses the predicted attention points and the image features to predict (i.e., reconstruct) the image of the next time . This block does this by: (i) generating a heat map with an inverse argmax modified to be differentiable (heat map generator), (ii) multiplying the heat map element-wise with , and (iii) passing the result image through a transposed CNN block (decoder) that generates . The images are reconstructed because it was shown that the reconstruction improves the model performance [24], stabilizes the attention points [14], and avoids manual labelling the points [15]. This end-to-end approach makes it possible to simultaneously train SAP and the RL policy, enabling SAP to predict attention points that are relevant to the task and the RL policy to predict RL actions that are guided by those attention points.

II-B3 DRL algorithm

The DRL algorithm used for the RL policy was the double deep q-network (DDQN) as its effectiveness for this task was shown in our prior work [2]. The hyper-parameters used are shown in Table I. Under a given policy , Q-values are estimated by , where is the reward at time , is the discount factor, is the action, and is the input state of the DDQN ( + proprioceptive data of total size 3x23). The DNN of DDQN is trained to approximate the optimal Q-function , by updating its weights with the Bellman equation given by , where is the gradient function, is the learning rate, and is the loss function which will be presented in Section II-B4. The policy used to choose the actions based on the Q-function was the Boltzmann exploration policy, in which parameter was set to induce exploration at the beginning of the training and annealed every 50 episodes. A replay buffer was used to store images and proprioceptive data to provide random samples that stabilize DNN training. The reward function implemented for each step is when the DRL episode does not end and is given by the following when the episode ends:

| (1) |

Here, is the reward when the hole is found, is the initial distance from the hole, is the final distance, and is the distance limit. The negative reward at the end of each step encourages the DNN to minimize the number of steps. The comparison of and at the end of the episode induces the model to approximate the distance from the peg to the hole position. The DRL episode ends when (i) the peg reaches distance limit from the hole center, (ii) the number of steps taken exceeds 100, or (iii) the peg is inserted into the hole which is identified when and (see Table I). Note that our model is not limited to DDQN and can be used with complex algorithms such as DDPG and SAC. However, more time and elaborate training methods for continuous actions are required [12].

II-B4 Loss function

The loss function is defined as:

| (2) | ||||

| (3) | ||||

| (4) | ||||

| (5) |

Here, is the image, is the attention points, is the q value of the main DDQN, is the q value of the target DDQN (a copy of the main DDQN), and is the reward of the RL algorithm [25]. The variables with an apostrophe are the variables of the next time. and are the mean square error of the image prediction and the temporal difference (TD), respectively. is the mean square error of the Euclidean distance between the attention points of the RL policy input and output , and and denote the loss weight of and , respectively. By adding , the model can learn to predict attention points in positions near their predecessors. This reflects the reality since the position of important points in the images do not change significantly in one time-step. In this study, RGB images with a resolution of were used (height() = 64, width() = 64, and channels() = 3). There are eight attention points, and because they are xy coordinates, . The window size is six, and and are annealed from 0.0001 to 0.1 and 1.5 to 1.0, respectively, within episodes 0 and 1000. Starting with a high encourages the model to learn to encode images more optimally before learning to generate motion.

| Batch size | 32 | Optimizer | Adam |

| Activation hidden layers | ReLU | Learning rate () | 0.001 |

| Activation last layer | Linear | Discount factor () | 0.99 |

| Boltzmann parameter () | 100-1 | Distance limit () | 4.5 mm |

| Episodes for updating target network | 100 | ||

| Reward when hole is found () | 100 | ||

| Force threshold on z-axis () | 20 N | ||

| Displacement threshold on z-axis () | 9 mm | ||

II-C Offline training framework

Because short lead time and robustness are the key requirements for applying the proposed model to construction, we propose a framework that enables offline training of the proposed model with minimal data extraction from the environment. The offline training reduces training time, and the data extraction reduces the reality gap when using the robot in the real world. The framework is made up of three steps: (i) attempting anchor-bolt insertion at multiple points around the hole for a single hole (Fig. 4); (ii) storing observations (images, proprioceptive data) and search results (“hole found”, “searching”, or “out-of-bounds”) from each attempt along with the position of the attempt in order to create a hole map; and (iii) using the hole map data as input to train the model via DRL in place of the real robot.

This hole map can be created because, in this study, the robot moves in discrete steps, and such movement limits the positions around the hole that the robot (i.e., peg) can touch. Thus, the robot movement enables hole mapping that is data efficient. The mapping is performed by attempting insertions following a decided trajectory that is shown in the supplementary video. In contrast to our prior work[3], the hole map of this study also stores images in addition to the proprioceptive data.

II-D Models for comparison

For comparison with the proposed model, a model that uses only proprioceptive data (P-RL), one that uses an autoencoder (AE) trained separately from the RL policy (AE-RL), and one that uses SAP also trained separately from the RL policy (SAP-RL) were used. P-RL only consists of the RL policy and is trained in conditions similar to that of our prior work [2, 3]. AE-RL has its AE trained first with the images of a hole map, and then it uses the trained AE to generate image features to train the RL policy (see Fig. 3). In SAP-RL, SAP is trained in the same manner as that of AE and uses the trained SAP to generate attention points to train the RL policy. The CNNs of SAP and AE are of the same structure as the CNNs of SAP-RL-E. The RL policy of both AE-RL and SAP-RL are also input with proprioceptive data because it improves the network performance during target occlusions as shown in [15]. Note that unlike the proposed model, both the AE and SAP learn to generate image features or attention points of the current image and do not consider the robot motion for the predictions. All comparison models are trained with a window size of six similar to the proposed model.

III Experimental Setup and Conditions

III-A Experimental setup

The experimental setup used is shown in Fig. 5. The setup includes a Denso robot (VM-60B1) equipped with an air gripper (Airtac HFZ20), a force-torque (FT) sensor (DynPick® WEF-6A1000-30), and a camera with a wide-angle lens (Basler acA1920-40gc/ Kowa Lens LM6HC F1.8 f6 mm 1”). The camera was set with the default parameters, but its region of interest was set to capture only the area around the peg tip (64x64 px). The robot was programmed to grasp a wedge-type anchor bolt and perform the peg-in-hole task in 13 holes in a concrete wall. The holes were opened with a drill bit of the same diameter as a 12-mm anchor bolt (about 0.2 mm clearance between peg and hole).

During the hole search, the robot was moved following the flow presented in Subsection II-A. The forces and moments were measured by the FT sensor, and was calculated from the xyz position of the tip of the anchor bolt obtained through forward kinematics. Before each DRL training episode, the robot was placed in a home position perpendicular to the wall, the FT sensor was zeroed (to compensate for gravity), and then the robot was moved to an initial search position. After the episode ended, the robot was retracted and the aforementioned steps were repeated until the maximum number of episodes was reached.

The three lighting conditions used are shown in Fig. 6. They replicate the variations of lighting that occur in the field, which is our definition of variable lighting conditions. The room lighting, generated by multiple sources of white light on the ceiling, replicates a well illuminated field and provided the setup with images with almost no shadow. The left and bottom lighting conditions, generated by a flood light (DENSAN PDS-C01-100FL) set to emit white light at the left and bottom side of the peg respectively, provided images with misleading shadows. These two lighting conditions were chosen because they were considered the most challenging. They are possible if a light source external to the robot system is used. Right and top lights were not used because they did not cast shadows that were visible to the camera.

The PC used for training was equipped with an Intel i7-8700 CPU, 16GB of RAM memory and an NVIDIA GeForce GTX 1070Ti GPU with 8 GB of memory.

III-B Training conditions

To train the models, only the map of hole 1 taken in regular room light was used, which is in accordance with the proposed training framework. They were trained offline for 4000 episodes, starting from initial positions randomly chosen within the “searching” search result illustrated in Fig. 4. To avoid overfitting, Gaussian noise was added to the inputs.

III-C Evaluation conditions

To evaluate the proposed model, offline tests (with the hole maps) and online tests (with the real robot) were conducted with all models. Both tests were conducted by attempting to find 12 unknown holes (2 to 13) in all three lighting conditions with the peg starting from the initial positions illustrated in Fig. 7. For the offline tests, hole maps were extracted from all the 12 holes. The tests were repeated ten times per initial position (160 tests per hole per lighting condition, for a total of 5760 tests per model).

The initial positions were set at an absolute distance of 3 and 4 mm away from the hole origin in the x, y, or both axes (hereinafter referred to as initial positions at 3 and 4 mm, respectively). This is because, although the maximum initial positioning error estimated for the current setup is 3 mm, distances that are larger than this error have also to be overcame by the models in field applications.

IV Results

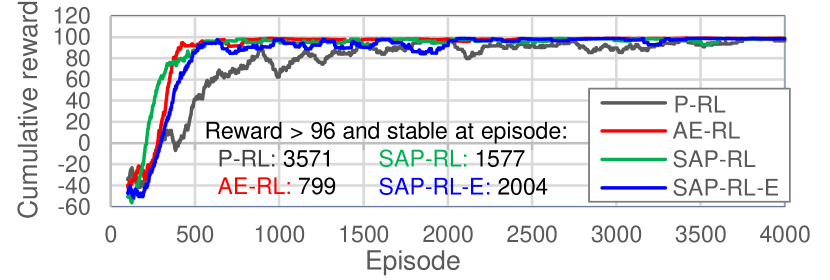

IV-A Training results

The offline training results are shown in Fig. 8 in terms of the average cumulative reward of 100 episodes (hereinafter, cumulative reward). The figure also lists the number of episodes in which the cumulative reward was above 96 (the maximum reached by P-RL) and stable. Overall, all models nearly reached the maximum cumulative reward of 100 during training, demonstrating that the models learned to control the robot to find the holes effectively. However, P-RL took more episodes to converge (3571 episodes), which was expected since it is provided with less input information on the environment. The cumulative reward of AE-RL, SAP-RL, and SAP-RL-E (proposed model) increased quickly, but AE-RL and SAP-RL converged more quickly than SAP-RL-E. This was also expected because, while AE-RL and SAP-RL are trained with image features from pre-trained image encoding networks, SAP-RL-E is trained directly from the image input, a condition which was previously observed to require a longer training time [26]. Nevertheless, considering the high number of episodes for P-RL to converge and the fact that SAP-RL-E trains the image encoding networks in parallel with the RL policy, it can be inferred that SAP-RL-E is sample efficient as its cumulative reward increased quickly and converged with only few episodes more than SAP-RL.

The time required to train the models is shown in Table II. “RL train” is the RL policy training time until convergence (i.e., until a stable cumulative reward above 96 is reached). The online training time, estimated only for P-RL (online P-RL) from the time taken by the robot during online tests, was considerably longer than the time needed to train the other models with the offline training framework. This demonstrates that the framework reduces the training time considerably. The time needed to train SAP-RL-E is almost the same as the time taken by P-RL and AE-RL, which suggests they have similar computational costs. This is because, unlike AE-RL and SAP-RL, SAP-RL-E does not require pre-training an image encoding network, and it takes fewer number episodes to converge than P-RL (2004 versus 3571 for P-RL, as shown in Fig. 8). This fewer number of episodes compensates for the longer time SAP-RL-E takes to train each episode. As a result, the proposed model can easily substitute P-RL at construction sites considering the lead time. Note that the training time of AE is shorter than of SAP because AE has fewer weights (2072 versus 3176 for SAP).

| Model | Input | Mapping | Pre-train | RL train | Total |

|---|---|---|---|---|---|

| Online P-RL | Proprioceptive | - | - | 15:04* | 15:04* |

| P-RL | Proprioceptive | 1:10 | - | 1:10 | 2:20 |

| AE-RL | Im+Proprio. | 1:10 | 0:58 | 0:15 | 2:23 |

| SAP-RL | Im+Proprio. | 1:10 | 2:05 | 0:27 | 3:42 |

| SAP-RL-E | Im+Proprio. | 1:10 | - | 1:09 | 2:19 |

IV-B Offline evaluation results

The offline test results are listed in Table III. In the room light, all vision-based models outperformed the model that only used proprioceptive feedback (P-RL). They presented higher success rates (SRs) and shorter completion times (CTs). In particular, AE-RL presented a considerably short CT of less than three steps (2.5 s/step), which is quite close to the performance of humans. However, AE-RL presented low SR in harsh lighting conditions, making it unsuitable for construction sites. The models that used SAP (SAP-RL and SAP-RL-E), however, demonstrated robustness to the challenging lighting conditions; namely, they could execute the peg-in-hole task with high SR and short CT, outperforming P-RL in both metrics. The proposed model (SAP-RL-E) yielded the most optimal results with an average SR of 97.4% and CT of 7.65 s, which is a 9.2% higher SR and 4.43 s shorter CT than that of the baseline P-RL. These results suggest that the SAP enables the extraction of relevant points of the image even under harsh lighting conditions, and the proposed SAP-RL-E can substitute the P-RL for executing peg-in-hole tasks in the construction field.

| Light | Room | Left | Bottom | All | ||||

|---|---|---|---|---|---|---|---|---|

| SR | CT | SR | CT | SR | CT | SR[%] | CT[s] | |

| [%] | [s] | [%] | [s] | [%] | [s] | CI(±E) | CI(±E) | |

| P-RL | 88.2 | 12.08 | - | - | - | - | 88.2 ±1.4 | 12.08 ±0.46 |

| AE-RL | 91.4 | 6.30 | 22.6 | 3.17 | 59.5 | 8.42 | 57.8 ±1.3 | 5.96 ±0.16 |

| SAP-RL | 95.1 | 9.57 | 93.6 | 10.25 | 95.3 | 9.23 | 94.7 ±0.6 | 9.68 ±0.21 |

| SAP-RL-E | 97.4 | 7.37 | 95.8 | 7.76 | 99.1 | 7.82 | 97.4 ±0.4 | 7.65 ±0.13 |

IV-C Online evaluation results

Table IV lists the online test results of the models. The tests with the real robot showed that, as in the offline test results, the proposed SAP-RL-E model was the most successful overall. AE-RL had the lowest CT, but its low SR in challenging lighting makes it ineffective in the field. The success rate of P-RL decreased the farther the peg initial position was from the hole center (average success rate of the initial positions 4 mm from the hole was 79.4%). We hypothesize that this is because the forces and moments of the x and y axes (in the wall plane) become smaller the farther the peg is from the hole center. This issue did not greatly affect the vision-based models, which suggests they can generalize more effectively to different initial positions.

Although SAP-RL-E and SAP-RL performed similarly, their confidence interval (CI) did not overlap. Also, the p-values for SR and CT calculated via two-tailed z-tests were 0.026 and 0.002 (0.05), respectively. These results suggest that the differences between the results of the models are statistically significant; thus, SAP-RL-E is more effective than SAP-RL.

| Light | Room | Left | Bottom | Average | ||||

|---|---|---|---|---|---|---|---|---|

| SR | CT | SR | CT | SR | CT | SR[%] | CT[s] | |

| [%] | [s] | [%] | [s] | [%] | [s] | CI(±E) | CI(±E) | |

| P-RL | 87.4 | 11.57 | - | - | - | - | 87.4 ±1.5 | 11.57 ±0.35 |

| AE-RL | 91.4 | 6.40 | 19.4 | 3.88 | 57.1 | 8.34 | 56.0 ±1.5 | 6.21 ±0.19 |

| SAP-RL | 92.8 | 8.78 | 92.6 | 10.26 | 92.2 | 9.01 | 92.5 ±0.7 | 9.35 ±0.20 |

| SAP-RL-E | 94.5 | 7.85 | 91.6 | 8.62 | 95.5 | 8.15 | 93.9 ±0.7 | 8.21 ±0.22 |

IV-D Image reconstruction and attention point analysis

Fig. 9 presents the image reconstruction and attention points generated by the vision-guided models. In the room light, all models were able to reconstruct the images adequately, and SAP and SAP-RL-E were able to generate attention points focused on the region between the peg and the hole (particularly the blue and orange points for SAP-RL-E). However, in the other lighting conditions, AE failed to reconstruct the image adequately, either predicting the hole to be in the shadow position or failing to reconstruct a clear image. In contrast, SAP-RL and SAP-RL-E were able to reconstruct the image clearly; they partially “erased” the shadow or created an image in which the shadow and the hole positions were clearly distinguishable. The positions of attention points of SAP-RL-E were also minimally affected by the shadows. The attention points from the current (cross-shaped) and predicted images (x-shaped) of SAP-RL-E provided a sense of direction for the robot’s movement. The combination of these attention point-based directions may be related to the robot’s movement direction, but this will need to be verified in future work. The results suggest that SAP was most effective for reconstructing the image and generating attention points relevant to the task.

V Discussion

V-A Application to construction

Although it was demonstrated that the proposed model is the most suitable for a wide range of construction environments, different models can be chosen depending on the environment. If a light source is placed close to the camera to reduce the influence of challenging external lights (e.g., dawn light), the AE-RL may be chosen because of its shorter CT. However, preliminary evaluations of AE-RL with a camera light showed that the SR dropped more than 20% when an external light was added, so the proposed model still yields a higher SR.

If the lighting conditions are extremely poor, P-RL can be chosen since it is independent of lighting conditions, given that the robot can make contact with the hole borders without vision (e.g., by using CAD-obtained hole positions). However, it is difficult to accurately estimate position without vision because construction environments are highly unstructured. Thus, if vision is already used to approach the hole, the choice of a vision-based hole search model is more optimal.

V-B Hole map size

The hole map size affects the mapping time and the image encoding capability of the image encoding networks (AE and SAP). If the map is large, due to a higher map resolution, for example, more time is required for mapping (extracting data). However, in this case, more images are available for training the image encoding networks, enabling them to learn to encode the images more robustly [6]. On the contrary, if the hole map is small, the mapping time is short, but the image encoding networks are not able to adequately encode the input images. Small resolution maps with interpolation functions could enable more detailed maps with less data extraction, but this approach creates synthetic data that may be different from reality, resulting in reduced robot performance in the real world. The results of this study suggest that the map size used provided a balance between mapping time and image encoding capability, which led to robust peg-in-hole task execution. To further improve the robustness of the proposed model, multiple holes maps can be used to train a model.

VI Conclusion

We proposed a vision and proprioceptive data-driven model to accomplish the peg-in-hole task in concrete holes under challenging lighting conditions. The model uses a spatial attention point network (SAP) and a RL policy trained jointly end-to-end in order to extract task-specific attention points from images and generate robot motion based on those attention points and proprioceptive data. We also proposed a training framework that involves mapping a target hole and using this hole map to train the model via DRL in an offline manner. The hole mapping minimizes the reality gap when transferring the model to the real world, and the offline training reduces training time. Through evaluations with an experimental setup that replicates a construction environment, we demonstrated that by training the proposed model with a single hole map and in room light, the model can successfully control an industrial robot to perform the peg-in-hole task in 12 unknown holes, starting the peg from 16 different initial positions and under three different lighting conditions (two with misleading shadows). The proposed model reached a success rate (SR) of 93.9% and completion time (CT) of 8.21 s, outperforming a series of baseline models, including one that only used proprioceptive data as input (SR:87.4%, CT:11.57 s). Although the proposed model was used for anchor-bolt insertion, it may be applied to similar tasks where peg sliding problems due to complex surfaces and light variations can occur, such as insertion of pins in furniture, or connectors in circuits in poorly lit assembly lines.

References

- [1] M. Hoehler and R. Eligehausen, “Behavior and testing of anchors in simulated seismic cracks,” Aci Structural Journal, vol. 105, pp. 348–357, 05 2008.

- [2] A. Y. Yasutomi, H. Mori, and T. Ogata, “A peg-in-hole task strategy for holes in concrete,” in IEEE International Conference on Robotics and Automation (ICRA), 2021, pp. 2205–2211.

- [3] A. Y. Yasutomi, H. Mori, and T. Ogata, “Curriculum-based offline network training for improvement of peg-in-hole task performance for holes in concrete,” in IEEE/SICE International Symposium on System Integration (SII), January 2022, pp. 712–717.

- [4] H. G. Barrow, J. M. Tenenbaum, R. C. Bolles, and H. C. Wolf, “Parametric correspondence and chamfer matching: Two new techniques for image matching,” in IJCAI, 1977, pp. 659–663.

- [5] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Los Alamitos, CA, USA: IEEE Computer Society, jun 2016, pp. 770–778.

- [6] Y. LeCun, Y. Bengio, and G. Hinton, “Deep learning,” Nature, vol. 521, pp. 436–444, 2015.

- [7] C.-Y. Wang, A. Bochkovskiy, and H.-Y. M. Liao, “Scaled-YOLOv4: Scaling cross stage partial network,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2021, pp. 13 029–13 038.

- [8] E. Salvato, G. Fenu, E. Medvet, and F. A. Pellegrino, “Crossing the reality gap: A survey on sim-to-real transferability of robot controllers in reinforcement learning,” IEEE Access, vol. 9, pp. 153 171–153 187, 2021.

- [9] C. C. Beltran-Hernandez, D. Petit, I. G. Ramirez-Alpizar, and K. Harada, “Variable compliance control for robotic peg-in-hole assembly: A deep-reinforcement-learning approach,” Applied Sciences, vol. 10, no. 19, 2020.

- [10] C. C. Beltran-Hernandez, D. Petit, I. G. Ramirez-Alpizar, T. Nishi, S. Kikuchi, T. Matsubara, and K. Harada, “Learning force control for contact-rich manipulation tasks with rigid position-controlled robots,” IEEE Robotics and Automation Letters, vol. 5, no. 4, pp. 5709–5716, 2020.

- [11] Y. Chebotar, A. Handa, V. Makoviychuk, M. Macklin, J. Issac, N. Ratliff, and D. Fox, “Closing the sim-to-real loop: Adapting simulation randomization with real world experience,” in IEEE International Conference on Robotics and Automation (ICRA), 2019, pp. 8973–8979.

- [12] D. Yarats, A. Zhang, I. Kostrikov, B. Amos, J. Pineau, and R. Fergus, “Improving sample efficiency in model-free reinforcement learning from images,” in AAAI Conference on Artificial Intelligence, 2019.

- [13] C. Finn, X. Y. Tan, Y. Duan, T. Darrell, S. Levine, and P. Abbeel, “Deep spatial autoencoders for visuomotor learning,” in IEEE International Conference on Robotics and Automation (ICRA), 2016, pp. 512–519.

- [14] H. Ichiwara, H. Ito, K. Yamamoto, H. Mori, and T. Ogata, “Spatial attention point network for deep-learning-based robust autonomous robot motion generation,” CoRR, vol. abs/2103.01598, 2021.

- [15] H. Ichiwara, H. Ito, K. Yamamoto, H. Mori, and T. Ogata, “Contact-rich manipulation of a flexible object based on deep predictive learning using vision and tactility,” in IEEE International Conference on Robotics and Automation (ICRA), 2022, pp. 5375–5381.

- [16] T. Inoue, G. De Magistris, A. Munawar, T. Yokoya, and R. Tachibana, “Deep reinforcement learning for high precision assembly tasks,” in IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2017, pp. 819–825.

- [17] G. Schoettler, A. Nair, J. Luo, S. Bahl, J. Aparicio Ojea, E. Solowjow, and S. Levine, “Deep reinforcement learning for industrial insertion tasks with visual inputs and natural rewards,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020, pp. 5548–5555.

- [18] Y. Wang, L. Zhao, Q. Zhang, R. Zhou, L. Wu, J. Ma, B. Zhang, and Y. Zhang, “Alignment method of combined perception for peg-in-hole assembly with deep reinforcement learning,” Journal of Sensors, vol. 2021, 2021.

- [19] S. Levine, C. Finn, T. Darrell, and P. Abbeel, “End-to-end training of deep visuomotor policies,” J. Mach. Learn. Res., vol. 17, no. 1, p. 1334–1373, jan 2016.

- [20] B. Chen, P. Abbeel, and D. Pathak, “Unsupervised learning of visual 3d keypoints for control,” in Proceedings of the 38th International Conference on Machine Learning, ser. Proceedings of Machine Learning Research, M. Meila and T. Zhang, Eds., vol. 139. PMLR, 18–24 Jul 2021, pp. 1539–1549.

- [21] D. E. Whitney, “Quasi-Static Assembly of Compliantly Supported Rigid Parts,” Journal of Dynamic Systems, Measurement, and Control, vol. 104, no. 1, pp. 65–77, 03 1982.

- [22] V. Mnih, K. Kavukcuoglu, D. Silver, A. Graves, I. Antonoglou, D. Wierstra, and M. A. Riedmiller, “Playing atari with deep reinforcement learning,” in NIPS - Deep Learning Workshop, 2013.

- [23] J. N. Foerster, Y. M. Assael, N. de Freitas, and S. Whiteson, “Learning to communicate to solve riddles with deep distributed recurrent q-networks,” in IJCAI - Deep Learning Workshop, 2016.

- [24] M. Babaeizadeh, M. T. Saffar, D. Hafner, H. Kannan, C. Finn, S. Levine, and D. Erhan, “Models, pixels, and rewards: Evaluating design trade-offs in visual model-based reinforcement learning,” arXiv preprint arXiv:2012.04603, 2020.

- [25] H. v. Hasselt, A. Guez, and D. Silver, “Deep reinforcement learning with double q-learning,” in Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence. AAAI Press, 2016, p. 2094–2100.

- [26] Y. Tassa, Y. Doron, A. Muldal, T. Erez, Y. Li, D. de Las Casas, D. Budden, A. Abdolmaleki, J. Merel, A. Lefrancq, T. P. Lillicrap, and M. A. Riedmiller, “Deepmind control suite,” CoRR, vol. abs/1801.00690, 2018.