Visual Reasoning Evaluation of Grok, Deepseek’s Janus, Gemini, Qwen, Mistral, and ChatGPT

Abstract

Traditional evaluations of multimodal large language models (LLMs) have been limited by their focus on single-image reasoning, failing to assess crucial aspects like contextual understanding, reasoning stability, and uncertainty calibration. This study addresses these limitations by introducing a novel benchmark that uniquely integrates multi-image reasoning tasks with rejection-based evaluation and positional bias detection. To rigorously evaluate these dimensions, we further introduce entropy as a novel metric for quantifying reasoning consistency across reordered answer variants. We applied this innovative benchmark to assess Grok 3, ChatGPT-4o, ChatGPT-o1, Gemini 2.0 Flash Experimental, DeepSeek’s Janus models, Qwen2.5-VL-72B-Instruct, QVQ-72B-Preview, and Pixtral 12B across eight visual reasoning tasks, including difference spotting and diagram interpretation. Our findings reveal ChatGPT-o1 leading in overall accuracy (82.5%) and rejection accuracy (70.0%), closely followed by Gemini 2.0 Flash Experimental (70.8%). QVQ-72B-Preview demonstrated superior rejection accuracy (85.5%). Notably, Pixtral 12B (51.7%) showed promise in specific domains, while Janus models exhibited challenges in bias and uncertainty calibration, reflected in low rejection accuracies and high entropy scores. High entropy scores in Janus models (Janus 7B: 0.8392, Janus 1B: 0.787) underscore their susceptibility to positional bias and unstable reasoning, contrasting with the low entropy and robust reasoning of ChatGPT models. The study further demonstrates that model size is not the sole determinant of performance, as evidenced by Grok 3’s underperformance despite its substantial parameter count. By employing multi-image contexts, rejection mechanisms, and entropy-based consistency metrics, this benchmark sets a new standard for evaluating multimodal LLMs, enabling a more robust and reliable assessment of next-generation AI systems.

Index Terms:

Grok 3, Deepseek, Janus, Gemini 2.0 Flash, ChatGPT-4o, ChatGPT-o1, Mistral, Benchmark, Visual Reasoning, Evaluation,I Introduction

The rapid advancement of large language models (LLMs) has transformed artificial intelligence, enabling state-of-the-art performance in natural language processing (NLP), reasoning, and generative tasks [4, 8, 33]. While early LLMs were primarily trained on text-based data, recent developments have led to the emergence of multimodal LLMs, capable of processing and reasoning over diverse data modalities, including text, images, videos, and structured information [22] [6, 29]. These models integrate vision and language understanding, significantly enhancing their applications across various domains, such as visual question answering (VQA) [2, 24], document comprehension [27, 17], medical image interpretation [35, 28], and multimodal dialogue systems [31, 44].

Several multimodal models have demonstrated impressive capabilities in bridging vision and language understanding. AI’s Grok 3 [40] introduces advanced multimodal reasoning with a large parameter count, aiming to enhance contextual understanding and consistency. OpenAI’s GPT-4o [29] extends its predecessor’s capabilities by incorporating image processing and reasoning over complex visual inputs. Google DeepMind’s Gemini 2.0 [9] also advances multimodal interactions by integrating video and spatial reasoning. Meta’s LLaVA [22] aligns language and vision for improved visual grounding and generative capabilities, while Mistral’s Pixtral 12B [1] introduces high-resolution image reasoning. In addition, open-source models such as DeepSeek-VL Janus [11] and Qwen-VL [3] are pushing multimodal AI research forward, democratizing access to powerful vision-language models.

Evaluating multimodal LLMs remains a significant challenge, as existing benchmarks often assess isolated perception tasks rather than the complex reasoning required for real-world applications. While early datasets such as VQAv2 [14] and AI2D [19] focused on single-image understanding, recent benchmarks like NLVR2 [32], MMMU [42], and MathVista [23] have introduced multi-image tasks, logical comparisons, and mathematical reasoning. Moreover, MUIRBench [34] further advanced the field by integrating unanswerable question variants into multimodal visual reasoning. However, these efforts still fall short in systematically evaluating reasoning consistency, uncertainty calibration, and bias susceptibility. Addressing these gaps is crucial to ensuring that multimodal models move beyond pattern recognition and heuristic shortcuts to demonstrate genuine comprehension.

Unlike previous benchmarks, this study extends on the MUIRBench benchmark [34] and systematically evaluates overall reasoning capabilities, reasoning stability, rejection-based reasoning, and bias susceptibility by: (i) Reordering answer choices to assess whether models rely on heuristic-driven shortcuts rather than content understanding; (ii) introducing entropy as a novel metric to quantify reasoning stability and consistency across reordered variants, allowing for the detection of positional biases and randomness in answer selection; and (iii) testing rejection-based reasoning and abstention rate to measure whether models correctly abstain from answering when no valid option is provided.

The study evaluates multiple state-of-the-art multimodal LLMs, including Grok 3 [40], ChatGPT-o1 and ChatGPT-4o [30], Gemini 2.0 Flash Experimental [10], DeepSeek’s Janus models [7], Pixtral 12B [1], and Qwen-VL models [3] to analyze how well-trending models generalize across these reasoning challenges. The results reveal notable discrepancies in performance. ChatGPT-4o and ChatGPT-o1 consistently achieve higher accuracy and reasoning stability, while Janus 7B and Janus 1B exhibit poor accuracy and high entropy scores, indicating significant reasoning variability and susceptibility to positional biases. This suggests that Janus models rely more on surface-level patterns rather than genuine comprehension, highlighting the importance of entropy as a consistency metric in identifying unstable reasoning.

The remainder of this paper is structured as follows. Section II reviews related work on multimodal benchmarks and evaluation methodologies. Section III describes the dataset used, the evaluated models, the experimental setup, and the evaluation framework, including the integration of new evaluation metrics based on reordered answers and entropy for reasoning consistency. Section V presents the results of the evaluation, highlighting key trends in accuracy, reasoning consistency, and rejection-based decision-making. Section VI explores the broader implications of the findings, model-specific weaknesses, and improvements for multimodal AI evaluation. Finally, Section VII provides the conclusion and outlines future directions for refining multimodal benchmarking and reasoning assessment.

II Related Work

II-A Visual Reasoning and Multi-Image Understanding Benchmarks

Early benchmarks, such as VQAv2 [14], GQA [18], and AI2D [19], laid the groundwork for evaluating multimodal models through single-image visual question answering (VQA). However, these datasets primarily assess perception and image-text alignment rather than deeper reasoning across multiple images.

To address this, recent benchmarks have expanded to multi-image contexts. NLVR2 [32] introduced logical comparisons between paired images, while MMMU [42] and MathVista [23] incorporated mathematical and diagrammatic reasoning using multiple visual inputs. MINT [36] and BLINK [12] further extended these evaluations by integrating temporal and spatial reasoning. Despite these improvements, these benchmarks do not explicitly evaluate reasoning consistency, answer stability, or a model’s ability to reject unanswerable questions, leaving a gap in assessing robustness in real-world scenarios.

Recent benchmarks such as SEED-Bench [20] and ChartQA [26] have introduced confidence-based evaluation, but their focus remains limited to generative comprehension rather than multi-image, multi-choice answer stability. Meanwhile, MMLU-Math [16] and ReClor [41] analyze answer order biases in textual reasoning, yet they do not extend this approach to multimodal contexts.

MUIRBench [34] represents a significant advancement in multi-image visual reasoning by incorporating an unanswerable question framework to evaluate rejection-based reasoning, testing whether models can recognize when no correct answer exists. However, MUIRBench does not incorporate reordered answer variations, making it difficult to determine whether models rely on positional heuristics rather than genuine comprehension. Without testing reasoning consistency across reordering, models may appear competent in fixed-choice settings while failing to generalize across structurally altered answer formats. Addressing these limitations is essential for evaluating multimodal models’ robustness and ensuring they do not exploit shortcuts in answer positioning rather than engaging in true visual reasoning.

II-B Reordered Answers, Positional Bias, and Rejection-Based Evaluation

One of the overlooked weaknesses in current multimodal benchmarks is the reliance of models on heuristic patterns or randomness rather than true comprehension. This is particularly evident in the positional bias of multiple-choice answers, where models may favor specific answer choices based on their order rather than reasoning through the question. While text-based evaluations such as ReClor [41] and MMLU-Math [16] incorporate reordered answers to test robustness, no major benchmark systematically applies this technique in multi-image reasoning.

To provide a more comprehensive assessment, our benchmark builds on MUIRBench by incorporating four key criteria: (i) Multi-image reasoning, (ii) unanswerable question recognition, (iii) reordered answer variations, and (iv) entropy-based reasoning consistency. In particular, we introduce entropy as a novel metric to measure consistency in responses across reordered versions of the same question. By quantifying variability in answer distributions, entropy identifies models that exhibit instability in reasoning, revealing reliance on positional heuristics or superficial patterns rather than genuine content understanding. Moreover, our benchmark evaluates rejection accuracy, determining whether models correctly abstain from answering when no valid option exists.

By integrating multi-image reasoning, reordered answer variations, entropy-based reasoning consistency, and rejection-based evaluation, this benchmark contributes a novel framework for diagnosing model weaknesses and guiding future advancements in multimodal LLMs and visual reasoning benchmarks. This dual approach of reordered answers and entropy sets a new standard for reasoning consistency evaluation, ensuring more robust, reliable, and generalizable AI systems.

III Benchmark Setup

III-A Dataset

To evaluate the multi-image reasoning capabilities of multimodal LLMs, we curated a subset of 120 questions and 376 images from the MUIRBench [34] dataset, ensuring a balanced assessment across diverse reasoning tasks. Unlike traditional benchmarks that focus on single-image tasks, this dataset challenges models to process and integrate multiple visual inputs, making it a more rigorous evaluation framework for contextual, spatial, and logical reasoning.

The dataset maintains diversity in image types, as illustrated in Figure 1, spanning real-world photographs, medical imagery, scientific diagrams, and satellite views. By integrating both curated and synthetic data, the benchmark mitigates data contamination risks, ensuring models are tested on truly novel inputs.

Additionally, the dataset includes a range of multi-image relations, depicted in Figure 1, challenging models to process various dependencies such as temporal sequences, complementary perspectives, and multi-view representations. This structured approach ensures that models are tested beyond simple pattern recognition, requiring advanced spatial, logical, and comparative reasoning.

The selection of questions from MUIRBench was guided by the need for a balanced and diverse evaluation while maintaining computational feasibility. Given that MUIRBench consists of 2,600 multiple-choice questions across 12 multi-image reasoning tasks, we curated a representative subset of 120 questions and 376 images, averaging 3.13 images per question. This selection ensures an efficient assessment of multimodal LLMs while focusing on key reasoning abilities.

We prioritized coverage of core multi-image tasks, selecting 8 diverse tasks that span both low-level perception (e.g., difference spotting) and high-level reasoning (e.g., image-text matching) as seen in Fig 1. Tasks with high significance in multimodal evaluation, such as Image-Text Matching and Difference Spotting, were given higher representation, each comprising 28 questions. Additionally, we ensured diversity in multi-image relationships, including temporal sequences, complementary perspectives, and multi-view representations, to evaluate models’ ability to integrate spatial, logical, and comparative reasoning across multiple images.

Our selection also maintained MUIRBench’s answerable-unanswerable pairing strategy by including 40 unanswerable questions. This prevents models from exploiting shortcut biases and lucky guesses and encourages a deeper understanding of visual content.

Additionally, we incorporated alternate versions of each question by reordering the answer choices. This ensures that models do not rely on positional biases or heuristic patterns but genuinely understand and reason the multi-image context. By shuffling the answer choices, we can verify whether a model is consistently selecting the correct response rather than guessing based on positional tendencies.

This question refinement strategy improves model robustness by ensuring they rely on reasoning rather than heuristics. Our study offers a rigorous and efficient way to test how well next-generation vision-language models can reason about multiple images.

III-B Models

In our evaluation, we utilized a diverse set of multimodal language models, as seen in Table I, each designed with unique capabilities to process and reason over visual inputs. Below is a brief overview of each model:

III-B1 Janus 7B

Developed by DeepSeek and was released on January 27, 2025, Janus 7B [7] is a 7-billion parameter open-source multimodal model built for advanced image understanding and text-to-image generation. It supports multiple-image processing, making it suitable for complex visual reasoning tasks.

III-B2 Janus 1B

developed by DeepSeek and was Released alongside Janus 7B on January 27, 2025. Janus 1B [7] is a lightweight 1-billion parameter open-source variant, designed for efficient multi-image processing while maintaining a lower computational footprint.

III-B3 Grok 3

Grok 3 is a multimodal large language model developed by AI. Released on February 17, 2025, Grok 3 boasts 2.7 trillion parameters, making it one of the most advanced AI models to date.

III-B4 Gemini 2.0 Flash Experimental

Launched on February 10, 2025, Gemini 2.0 Flash Experimental [10] is an advanced multimodal model developed by DeepMind that processes both multiple images and videos. It is optimized for rapid inference and efficient memory usage, making it well-suited for real-time visual reasoning applications.

III-B5 QVQ-72B-Preview

Released on December 25, 2024, QVQ-72B-Preview [3] is a 72-billion parameter open-source vision-language model developed by Alibaba that introduces novel techniques in visual question answering. It supports multi-image reasoning, allowing for better contextual understanding across images.

III-B6 Qwen2.5-VL-72B-Instruct

Developed by Alibaba and released on September 19, 2024, Qwen2.5-VL-72B-Instruct [3] is a 72-billion parameter open-source instruction-tuned vision-language model. It incorporates dynamic resolution handling and multimodal rotary position embedding (M-ROPE), enabling multi-image and video comprehension.

III-B7 Pixtral 12B

Released on September 17, 2024, Pixtral 12B [1] is a 12-billion parameter open-source multimodal model developed by Mistral AI, specializing in high-resolution image analysis and text generation.

III-B8 ChatGPT-o1

Introduced on December 5, 2024, ChatGPT-o1 [30] is an advanced iteration of OpenAI’s multimodal models, supporting multiple images and video inputs. It enhances contextual multimodal understanding, making it effective for vision-language interactions.

III-B9 ChatGPT-4o

Released on May 13, 2024, ChatGPT-4o [30] is a multimodal model developed by OpenAI, capable of processing multiple images. It improves on its predecessors by enhancing image-text alignment and expanding multimodal reasoning capabilities.

| Model |

|

Release Date |

|

|

|||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Janus 7B | 7B | Jan 27, 2025 |

|

Yes | |||||||

| Janus 1B | 1B | Jan 27, 2025 |

|

Yes | |||||||

| Grok 3 | 2.7T | Feb 17, 2025 |

|

No | |||||||

|

N/A | Feb 10, 2025 |

|

No | |||||||

|

72B | Dec 25, 2024 |

|

Yes | |||||||

|

72B | Sep 19, 2024 |

|

Yes | |||||||

| Pixtral 12B | 12B | Sep 17, 2024 |

|

Yes | |||||||

| ChatGPT-o1 | N/A | Dec 5, 2024 |

|

No | |||||||

| ChatGPT-4o | N/A | May 13, 2024 |

|

No |

This selection encompasses a range of models with varying capacities and innovations, providing a comprehensive basis for evaluating multimodal language models in visual reasoning tasks.

IV Methodology

IV-A Experimental Setup

IV-A1 Question Presentation Format

To ensure a standardized evaluation across all models, each question follows a structured format where the model receives a textual prompt and the corresponding images. The format is as follows:

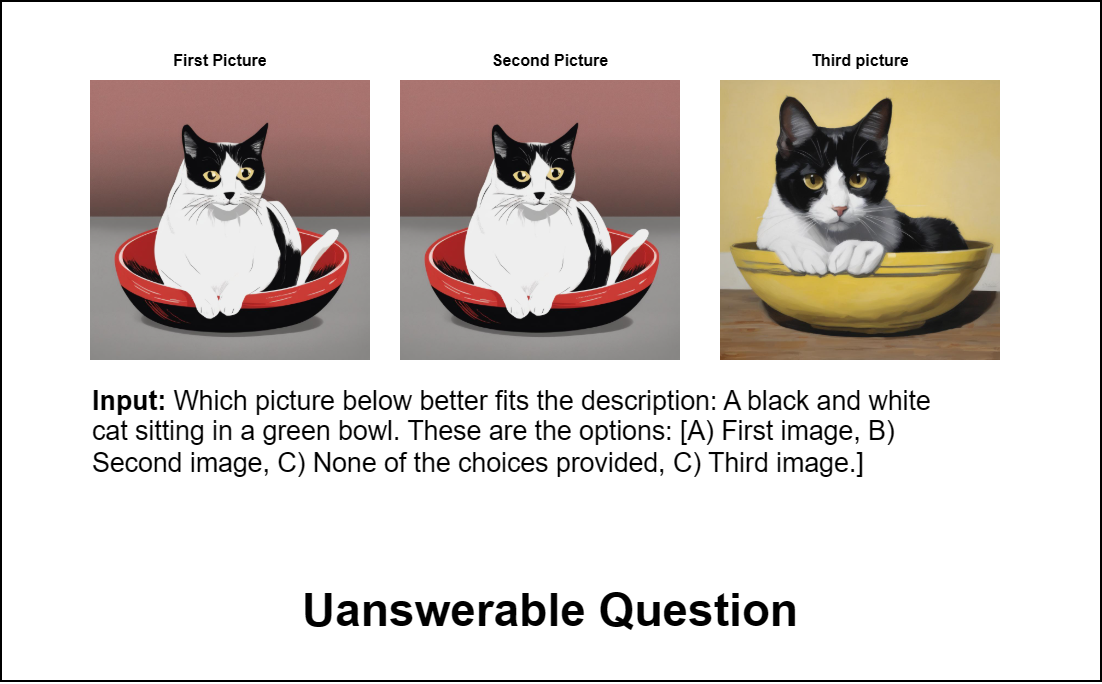

Input: {Question} These are the options: [A) First Option, B) Second Option, C) Third Option, D) Fourth.]

Example: Which picture below better fits the description: A black and white cat sitting in a green bowl? These are the options: [A) First image, B) Second image, C) None of the choices provided, D) Third image.]

Models are expected to select the most appropriate answer based on the given images. To assess model consistency and robustness, each question is tested in different variations:

-

1.

Answerable Version: The correct answer is available among the choices.

-

2.

Reordered Version: The same question with shuffled answer choices to detect positional biases.

-

3.

Unanswerable Version: The correct answer is removed or altered, testing the model’s ability to recognize when a question cannot be answered.

To further clarify this evaluation process, Figures 2, 3, and 4 illustrate examples of each variation.

IV-A2 Local Deployment for Janus Models

For Janus 7B and Janus 1B, we ran the experiments on a high-performance workstation equipped with two NVIDIA RTX 4090 GPUs, each featuring 16,384 CUDA cores due to the unavailability of an official online interface.

IV-A3 Web-Based Evaluation for Other Models

For the remaining models (Grok3, QVQ-72B-Preview, Qwen2.5-VL-72B-Instruct, Pixtral 12B, ChatGPT-4o, ChatGPT-o1, and Gemini 2.0 Flash Experimental), we conducted evaluations using their official online interfaces. To ensure zero-shot learning, a new chat session was initialized for each question, preventing any contextual memory retention. We set the temperature to 1.0, encouraging diverse yet unbiased model responses.

IV-A4 Consistency and Fairness Measures

All models were assessed using identical input formats, with questions presented in their original multiple-choice structure. No additional context was given beyond the provided question and images, ensuring models relied solely on intrinsic reasoning capabilities.

This setup guarantees a standardized comparison across models, enabling a robust assessment of multi-image reasoning capabilities.

IV-B Evaluation Setup

To ensure a standardized and fair assessment of all multimodal LLMs, we established a strict evaluation protocol that handles varying model constraints, answer formats, and consistency measures.

IV-B1 Handling of Image Restrictions

Some models have restrictive policies that prevent them from processing images, either due to safety mechanisms or inherent limitations. If a model fails to accept an image input, the response is automatically marked as incorrect, as it indicates an inability to engage with the core visual reasoning task.

IV-B2 Answer Parsing and Validity

To eliminate ambiguity in model responses, we adhere to a strict answer validation process:

-

•

If a model provides an answer choice that does not exist among the given options, the response is considered incorrect.

-

•

If the model correctly identifies the answer but provides an incorrect explanation, the response is still marked as correct, as the primary evaluation metric is answer selection. For instance, if a model produces an answer such as “C) First image” when the correct answer is “C) Second image”, the response is marked as correct since the answer letter aligns with the expected choice.

IV-B3 Ensuring Answer Consistency

To detect inconsistencies in model reasoning, we incorporate reordered answer choices across repeated questions. If a model guesses correctly on one instance but incorrectly on another reordered variant, this indicates reliance on positional heuristics rather than genuine understanding. This methodology allows us to identify unreliable answering patterns and assess whether a model is robust to answer order changes.

This evaluation setup ensures that models are judged on their actual reasoning abilities, eliminating potential issues or biases caused by format variations or restrictive policies.

IV-B4 Overall Accuracy Evaluation

Each correctly answered question is assigned a score of one point, with all questions weighed equally regardless of their difficulty or complexity. The evaluation metric is based on accuracy, defined as:

| (1) |

Accuracy is a fundamental metric in evaluating multimodal large language models (MLLMs), as it provides a straightforward measure of a model’s performance across various tasks. For instance, the MME benchmark employs accuracy to assess both perception and cognition abilities of MLLMs across multiple subtasks [39]. Similarly, the MM-BigBench framework utilizes accuracy to evaluate model performance on a wide range of multimodal content comprehension tasks [13].

IV-B5 Rejection Accuracy Evaluation

In addition to overall accuracy, we evaluate each model’s ability to recognize when no correct answer is available. Rejection accuracy measures how well a model correctly selects the “None of the provided options” choice when no valid answer is present. This is a critical aspect of uncertainty calibration, ensuring that models do not make incorrect guesses when faced with unanswerable questions.

Rejection accuracy is computed as:

| (2) |

where “Number of Correct Rejections” represents the cases where the model correctly abstains from selecting an incorrect answer, and “Total Rejection Questions” corresponds to a predefined subset of 40 unanswerable questions in the benchmark.

Evaluating a model’s ability to handle unanswerable questions is essential for deploying reliable AI systems. While traditional accuracy metrics assess performance on answerable queries, incorporating rejection accuracy provides a more comprehensive evaluation framework, as it accounts for a model’s uncertainty calibration and decision-making in ambiguous scenarios. Previous studies, such as MUIRBench [34], the comprehensive evaluation framework in ChEF [5], and the survey on multimodal LLM evaluation [21], highlight the importance of rejection accuracy in assessing model robustness and reliability.

IV-B6 Abstention Rate Evaluation

In addition to overall accuracy and rejection accuracy, we evaluate each model’s tendency to select “None of the provided options” across all questions. This metric, termed Abstention Rate, measures the proportion of times a model opts for “None of the provided options”, reflecting its inclination to abstain from answering.

| (3) |

A higher abstention rate indicates a model’s cautious approach, potentially avoiding incorrect answers when uncertain, while a lower rate suggests overconfidence or reluctance to admit uncertainty.

IV-B7 Entropy-Based Reasoning Consistency Evaluation

To quantify reasoning stability, we utilize Entropy as a metric to evaluate a model’s consistency in answering reordered variations of the same question. Entropy captures the uncertainty and variability in the model’s responses across these variants, providing a robust measure of reasoning consistency.

Entropy is calculated by grouping each original question with its reordered variants and analyzing the distribution of the model’s responses. Specifically, the frequency of each answer option is computed and normalized to form a probability distribution while the correct answer is unchanged throughout all the question variants. Entropy is then calculated using the formula:

| (4) |

where is the entropy for question group , is the total number of possible answer options, and is the probability of selecting option among the reordered variants.

The Mean Entropy for a model across all questions is computed as:

| (5) |

where represents the average reasoning consistency for model , and is the total number of question groups.

Lower entropy values indicate higher consistency, suggesting that the model consistently selects the same answer across reordered variants, reflecting stable reasoning. Conversely, higher entropy values suggest greater variability in answers, indicating potential instability or influence from positional biases rather than consistent reasoning patterns.

Previous studies have utilized entropy-based metrics to evaluate reasoning consistency and adaptive choice behavior in LLMs, such as in information selection for Chain-of-Thought prompting [43] and in data compression proficiency assessments [37]. These approaches mainly focus on text generation tasks, where entropy is used to adjust dynamic temperature sampling or to assess information selection efficiency. However, these methods do not address reasoning consistency across reordered variants in multimodal visual reasoning.

Our approach applies entropy to evaluate consistency specifically across reordered variants, which allows us to measure reasoning stability and detect positional biases that may influence model outputs. This offers a novel perspective on robustness and stability in multimodal LLMs, particularly in complex visual contexts where reasoning consistency is crucial for accurate interpretation. By assessing entropy across answer distributions for reordered variants, our method provides a more comprehensive understanding of reasoning patterns and positional biases compared to traditional accuracy metrics.

V results

| Model |

|

Counting |

|

|

|

|

Ordering |

|

Total | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Janus 7B | 0.5 | 0.875 | 0.75 | 0.214 | 0.125 | 0.5714 | 0.25 | 0.0833 | 0.433 | ||||||||||||

| Janus 1B | 0.25 | 1.0 | 0.65 | 0.1785 | 0.25 | 0.4285 | 0.25 | 0.166 | 0.3833 | ||||||||||||

| Grok 3 | 0.5 | 1.0 | 0.95 | 0.4642 | 0.5 | 0.5714 | 0.0 | 0.25 | 0.558 | ||||||||||||

|

0.5 | 0.75 | 0.95 | 0.5 | 0.625 | 0.8214 | 0.5 | 0.833 | 0.7083 | ||||||||||||

|

0.0 | 0.5 | 0.7 | 0.5714 | 1.0 | 0.8571 | 0.5 | 0.75 | 0.6583 | ||||||||||||

|

0.0 | 0.5 | 0.75 | 0.5 | 0.125 | 0.8928 | 0.625 | 0.916 | 0.625 | ||||||||||||

| Pixtral 12B | 0.625 | 0.625 | 0.85 | 0.2142 | 0.25 | 0.5714 | 0.375 | 0.5 | 0.5 | ||||||||||||

| ChatGPT-o1 | 0.5 | 1.0 | 1.0 | 0.6428 | 0.625 | 0.9642 | 0.625 | 1.0 | 0.825 | ||||||||||||

| ChatGPT-4o | 0.5 | 0.875 | 1.0 | 0.3571 | 0.5 | 0.9642 | 0.5 | 0.5833 | 0.6916 |

| Model |

|

Counting |

|

|

|

|

Ordering |

|

Total | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Janus 7B | 4 | 7 | 15 | 6 | 1 | 16 | 2 | 1 | 52 | ||||||||||||

| Janus 1B | 2 | 8 | 13 | 5 | 2 | 12 | 2 | 2 | 46 | ||||||||||||

| Grok 3 | 4 | 8 | 19 | 13 | 4 | 16 | 0 | 3 | 67 | ||||||||||||

|

4 | 6 | 19 | 14 | 5 | 23 | 4 | 10 | 85 | ||||||||||||

|

0 | 4 | 14 | 16 | 8 | 24 | 4 | 9 | 79 | ||||||||||||

|

0 | 4 | 15 | 14 | 1 | 25 | 5 | 11 | 75 | ||||||||||||

| Pixtral 12B | 5 | 5 | 17 | 6 | 2 | 16 | 3 | 6 | 60 | ||||||||||||

| ChatGPT-o1 | 4 | 8 | 20 | 18 | 5 | 27 | 5 | 12 | 99 | ||||||||||||

| ChatGPT-4o | 4 | 7 | 20 | 10 | 4 | 27 | 4 | 7 | 83 | ||||||||||||

| Number of Questions | 8 | 8 | 20 | 28 | 8 | 28 | 8 | 12 | 120 |

| Model |

|

|

|

|

|||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Janus 7B | 6 | 0.15 | 12 | 0.1 | |||||||||

| Janus 1B | 2 | 0.05 | 5 | 0.041 | |||||||||

| Grok 3 | 21 | 0.525 | 45 | 0.375 | |||||||||

|

20 | 0.5 | 26 | 0.216 | |||||||||

|

34 | 0.855 | 51 | 0.425 | |||||||||

|

21 | 0.525 | 33 | 0.275 | |||||||||

| Pixtral 12B | 12 | 0.3 | 18 | 0.15 | |||||||||

| ChatGPT-o1 | 28 | 0.7 | 32 | 0.267 | |||||||||

| ChatGPT-4o | 18 | 0.450 | 25 | 0.208 | |||||||||

| Perfect Score | 40 | 1 | 40 | 0.334 |

| Model | Entropy | ||

|---|---|---|---|

| Janus 7B | 0.8392 | ||

| Janus 1B | 0.787 | ||

| Grok 3 | 0.256 | ||

|

0.3163 | ||

|

0.3537 | ||

|

0.4892 | ||

| Pixtral 12B | 0.557 | ||

| ChatGPT-o1 | 0.1352 | ||

| ChatGPT-4o | 0.216 |

V-A Overall Model Performance

As illustrated in Tables II and III, and Figures 5 and 6, ChatGPT-o1 was of the highest performance, achieving an accuracy of 0.825, followed by Gemini 2.0 Flash Experimental (0.717) and ChatGPT-4o (0.691). The lowest-performing models were Janus 1B and Janus 7B, with accuracy scores of 0.383 and 0.433, respectively. Grok 3, despite its large parameter count of 2.7 trillion, showed disappointing results with an accuracy of 0.580, underperforming in tasks requiring complex reasoning and consistency.

Additionally, QVQ-72B-Preview demonstrated high performance in geographic understanding, but struggled with image-text matching and difference spotting, which highlights a domain-specific strength but overall inconsistency. Qwen2.5-VL-72B-Instruct displayed moderate accuracy (0.633) but encountered challenges in cartoon understanding, likely due to content restrictions preventing the processing of specific images.

The difference in accuracy between the highest- and lowest-performing models is 0.442, highlighting a substantial performance gap across evaluated models. ChatGPT-o1 leads by a margin of 13.3 percentage points over the second-best model, Gemini 2.0 Flash Experimental.

Additionally, as seen in Figure 6, ChatGPT-o1 maintains the most balanced high performance across tasks, exhibiting consistently strong accuracy without significant weaknesses in any category. In contrast, models like Pixtral 12B and Grok 3 display more uneven performance, excelling in certain tasks while struggling in others. Pixtral 12B demonstrates relatively strong results in tasks such as cartoon understanding and diagram interpretation but underperforms in difference spotting and image-text matching.

V-B Rejection Accuracy and Abstention Rate

As seen in Table IV and Fig 7, QVQ-72B-Preview recorded the highest rejection accuracy at 0.850, followed by ChatGPT-o1 at 0.700. Janus 7B (0.150) and Janus 1B (0.050) had the lowest rejection accuracies, showing a strong bias toward selecting an option.

For Abstention Rate, QVQ-72B-Preview had the highest rate at 0.425, followed by Grok 3 (0.375). Both exceed the threshold of 0.33, which corresponds to the proportion of questions where the correct answer was “None of the provided options.” This indicates a tendency to over-reject. In contrast, Janus 1B had the lowest abstention rate at 0.042, showing a strong reluctance to abstain even when uncertain. ChatGPT-o1 maintained a moderate abstention rate of 0.267, staying below the 0.33 threshold and demonstrating balanced uncertainty management.

V-C Overall Accuracy vs. Rejection Accuracy and Abstention Rate

ChatGPT-o1 achieved both high total accuracy (0.825) and balanced rejection accuracy (0.700) with an abstention rate of 0.267, showing well-calibrated uncertainty handling. QVQ-72B-Preview, despite high rejection accuracy (0.850), had an abstention rate (0.425) above the 0.33 threshold, suggesting overrejection. Grok 3 also showed an abstention rate of 0.375, indicating a tendency to overreject despite its high parameter count.

Janus 1B displayed the most extreme behavior with the lowest rejection accuracy (0.050) and abstention rate (0.042), reflecting an overconfident approach. ChatGPT-4o and Gemini 2.0 Flash Experimental both showed moderate abstention rates below 0.33, maintaining a balanced decision strategy.

V-D Reasoning Stability: Entropy-Based Consistency

To assess reasoning consistency across reordered answer variations, we compute entropy scores for each model. As shown in Table V, models with higher entropy scores, such as Janus 7B (0.8392), Janus 1B (0.787), and Pixtral 12B (0.557), exhibited greater response variability, suggesting fluctuations in answer selection across question variants.

In contrast, ChatGPT-o1 (0.1352), ChatGPT-4o (0.216), and Grok 3 (0.256) achieved the lowest entropy values, indicating the most consistent reasoning patterns. These results suggest that ChatGPT and AI models maintain more stable reasoning, whereas other models are more influenced by positional biases or random variations in answer selection.

VI Discussion

The evaluation of multimodal language models on this dataset reveals critical insights into their reasoning capabilities, robustness, and inherent biases. This section examines whether model size correlates with performance, the effectiveness of open-source models compared to proprietary ones, the restrictive nature of Qwen models, and the impact of reordered answers in detecting biases. Additionally, we discuss the performance of Grok 3 and DeepSeek’s Janus. While formal scientific validation of Grok 3 and DeepSeek’s Janus’s advanced capabilities is still emerging, online discussions suggest significant anticipation for their performance. Our analysis reveals that Grok 3 and DeepSeek’s Janus exhibited suboptimal performance.

VI-A Does Model Size Correlate with Performance?

A common misconception in the research community is larger models perform better due to increased capacity for processing and learning complex relationships. While this trend holds in many cases, our results suggest that size alone does not guarantee superior performance.

In this benchmark, the smallest models, Janus 7B and Janus 1B, significantly underperformed against their larger counterparts. Given their lower parameter count, this is expected, yet their frequent positional biases, and inconsistent reasoning indicate that their training or optimization was insufficient to compensate for their size limitations.

However, Grok 3, despite having the largest parameter count of 2.7 trillion, showcased low performance relative to its size, underperforming in tasks requiring complex reasoning and consistency. Its moderate rejection accuracy (0.525) indicate inconsistent reasoning stability, suggesting that sheer model size does not necessarily lead to more robust performance or consistent decision-making.

These findings underscore that while larger models generally have greater capacity, effective optimization and fine-tuning are critical for maximizing performance and consistency.

VI-B Are Open-Source Models Competitive Against Proprietary Models?

While open-source models offer transparency and adaptability, this benchmark demonstrates that proprietary models continue to hold a significant advantage in complex multimodal reasoning tasks. ChatGPT-o1 and Gemini 2.0 Flash outperformed all open-source models across most reasoning tasks, underscoring the benefits of high-quality fine-tuning and diverse pretraining data. In contrast, open-source models like Janus 1B and Janus 7B exhibited significant reasoning inconsistencies (i.e., hallucination) and biases, suggesting that limited access to high-quality data remains a major constraint for non-proprietary models. However, Pixtral 12B emerged as a surprising exception, performing well in specific tasks such as Cartoon Understanding, demonstrating that with targeted fine-tuning and optimization, open-source models can still be competitive in certain domains.

VI-C The Restrictive Nature of Qwen Models

One of the most unexpected findings was the high rate of unanswered or incorrectly answered questions from Qwen2.5-VL-72B-Instruct and QVQ-72B-Preview due to potential content restrictions. This issue was particularly evident in Cartoon Understanding, where the model struggled with half of the test cases despite the images being non-explicit or hazardous.

This suggests that Qwen’s content filtering mechanisms are overly aggressive, preventing it from engaging with conventional content. This restrictive nature severely limits Qwen’s applicability in real-world multimodal reasoning, particularly in cases where understanding humor, memes, or non-literal content is necessary. A more balanced content moderation approach could improve its usability in diverse applications without compromising ethical safeguards.

VI-D Grok 3’s Unmet Expectations

Grok 3, currently in its Beta version, was positioned as a powerful contender to ChatGPT-o1, boasting an impressive 2.7 trillion parameters, the largest in this benchmark. Despite its scale and claims of advanced reasoning, Grok 3 fell short of expectations, achieving underwhelming results taking its size into consideration.

Additionally, Grok 3 exhibited an unusually high abstention rate, indicating a tendency to reject answers more frequently than average, even when correct options were available. This suggests an overly conservative approach to uncertainty, which undermined its decision-making effectiveness.

While it showed moderate success in specific tasks, Grok 3 failed to outperform ChatGPT-o1 or leverage its scale for superior reasoning stability. Overall, Grok 3, although it is still in its beta version, demonstrates that model size alone does not equate to improved accuracy or reasoning consistency.

VI-E The Underperformance of DeepSeek Janus Models

The release of DeepSeek’s Janus models was widely anticipated, particularly following the success of DeepSeek’s R1 model, which made significant strides in competing with ChatGPT-o1 despite utilizing far fewer resources [15]. This led to growing expectations that DeepSeek could emerge as a serious competitor to OpenAI across multiple AI domains. However, this benchmark demonstrates that such expectations do not hold up in visual reasoning tasks.

Janus 7B and Janus 1B, the smallest models in this benchmark, also rank among the weakest performers, struggling significantly in numerous tasks, where they exhibit severe positional biases and incosistent reasoning. The accuracy evaluation shows that Janus models fail to generalize well across answer variations, reinforcing that their reasoning ability is not robust to minor changes in answer presentation.

While some expected DeepSeek Janus to be a strong open-source alternative to ChatGPT models, its current iteration suggests that DeepSeek’s approach does not yet scale effectively to multimodal reasoning. This indicates that DeepSeek’s advancements in language modeling with R1 do not yet extend effectively to multimodal reasoning, leaving the Janus models far behind proprietary competitors like ChatGPT-o1 in this domain.

VI-F The Continued Dominance of ChatGPT Models

While there has been significant development in multimodal models across different organizations, ChatGPT models continue to demonstrate an overwhelming advantage in visual reasoning tasks. ChatGPT-4o and ChatGPT-o1 outperformed every other model in almost all reasoning tasks, particularly excelling in Diagram Understanding, Image-Text Matching, and Visual Retrieval. The accuracy table further highlights that these models maintained stable performance across different variations of the same questions, indicating their robustness in reasoning and comprehension.

These models consistently provided the most stable and accurate responses, exhibiting the lowest rates of hallucination and positional bias. While some open-source models, such as Pixtral 12B, showed promising results in specific areas, none were able to compete across the board with OpenAI’s ChatGPT models. This result reinforces the idea that proprietary models still maintain a significant edge in multimodal visual reasoning, likely due to access to better training datasets, fine-tuning techniques, and alignment strategies that remain unavailable to the public. Future developments in open-source multimodal models will need to focus on improving robustness and reducing bias to close the gap with proprietary alternatives.

VI-G The Contribution of Reordered Answers and Entropy in Detecting Biases

One of the key contributions of this study was the inclusion of reordered answer variants and the application of entropy to detect positional biases and randomness in model responses. This combined approach exposed significant differences in reasoning stability across models, highlighting the extent to which certain models rely on positional heuristics and arbitrary answers rather than genuine comprehension.

By using entropy to quantify variability in answer distributions across reordered variants, we effectively measured reasoning consistency and stability. Models with high entropy scores, such as Janus 7B (0.8392) and Janus 1B (0.787), exhibited significant inconsistencies across reordered variants, suggesting reliance on positional heuristics or randomness rather than content-based reasoning. Their fluctuations indicate that answer selection was influenced by surface-level patterns rather than a stable understanding of the question, particularly in tasks requiring multi-image integration.

In contrast, ChatGPT-o1 (0.1352) and ChatGPT-4o (0.216) demonstrated the lowest entropy scores, indicating strong reasoning stability and resistance to positional biases. These models consistently selected the same answer across reordered variations, suggesting they engage in more content-driven decision-making. While low entropy does not inherently guarantee higher accuracy, most of the top-performing models exhibited lower entropy values, showing a strong correlation between reasoning stability and overall model performance.

These findings highlight the limitations of traditional VQA evaluations, which measure correctness but not reasoning stability. By combining reordered answer variants with entropy as a consistency metric, this study provides a more nuanced and robust assessment of reasoning stability. This dual approach distinguishes models that genuinely comprehend content from those that rely on answer positioning and randomness. Future multimodal benchmarks should incorporate both reordered answers and entropy-based consistency metrics to assess reasoning robustness more effectively and mitigate heuristic-driven decision-making.

VI-H Avoidance of “None of the Choices Provided”

The majority of models demonstrated a consistent tendency to avoid selecting “None of the choices provided,” even when it was the correct answer. This pattern was most pronounced in Janus 7B and Janus 1B, which exhibited extremely low rejection accuracy and abstention rates. Their strong bias towards selecting an option, regardless of correctness, suggests an overcommitment to answers, likely influenced by pretraining datasets that emphasize choosing the best available choice.

In contrast, QVQ-72B-Preview and Grok 3 displayed the highest abstention rates, exceeding the 0.33 threshold for the proportion of questions where “None of the provided options” was correct. This indicates a more conservative approach to decision-making, with a tendency to over-reject. QVQ-72B-Preview consistently identified unanswerable questions, while Grok 3 exhibited more variability, reflecting inconsistent uncertainty calibration.

ChatGPT-o1 and ChatGPT-4o maintained balanced rejection reasoning, selectively abstaining without excessive avoidance. Their abstention rates remained close to the 0.33 threshold, indicating well-calibrated uncertainty recognition and strategic decision-making.

These findings reveal distinct patterns in rejection behavior, highlighting that while some models consistently avoid selecting “None of the choices provided,” others exhibit over-rejection tendencies. The balanced approach observed in ChatGPT models underscores the importance of effective uncertainty calibration for strategic rejection-based reasoning.

VII Conclusion

This study provides a comprehensive evaluation of multimodal LLMs, revealing significant differences in reasoning stability, bias susceptibility, and uncertainty handling. ChatGPT-o1 and ChatGPT-4o consistently outperformed other models, demonstrating superior consistency, balanced rejection reasoning, and effective uncertainty calibration. These results highlight the advantages of extensive fine-tuning and high-quality training data in proprietary models.

Grok 3, despite its massive parameter count of 2.7 trillion, failed to meet expectations, showcasing inconsistent reasoning stability, excessive rejection behavior, and moderate overall accuracy. Its high abstention rate (0.375) indicates an overly conservative approach, emphasizing that scale alone does not guarantee better performance. Similarly, Janus 7B and Janus 1B displayed the lowest rejection accuracy and reluctance to abstain, reflecting a bias towards overcommitting to answers, likely due to insufficient exposure to rejection-based reasoning.

This study also highlights the impact of reordered answer variations in detecting positional biases. Models with high entropy scores, such as Janus 7B (0.8392) and Janus 1B (0.787), exhibited greater variability and susceptibility to positional heuristics, whereas ChatGPT-o1 (0.1352) and ChatGPT-4o (0.216) maintained consistent reasoning patterns. The introduction of entropy as a reasoning consistency metric provides a novel, quantitative measure of stability across reordered variants, revealing limitations in traditional VQA metrics that focus solely on correctness.

Rejection accuracy and abstention rates further exposed weaknesses in uncertainty calibration. QVQ-72B-Preview displayed the highest rejection accuracy but also over-rejected, exceeding the 0.33 threshold, reflecting risk-averse decision-making. Conversely, Janus models consistently avoided rejection, highlighting poor uncertainty recognition. The balanced rejection strategies of ChatGPT-o1 and ChatGPT-4o illustrate the importance of strategic abstention for reliable decision-making.

Overall, this study underscores the need for advanced benchmarks that incorporate reordered answers, entropy-based consistency metrics, and rejection accuracy to more effectively evaluate reasoning stability and uncertainty calibration. Addressing positional biases, refining rejection strategies, and enhancing generalization are crucial for advancing multimodal LLMs’ real-world applicability.

References

- [1] P. Agrawal, S. Antoniak, E. B. Hanna, B. Bout, D. Chaplot, J. Chudnovsky, D. Costa, B. De Monicault, S. Garg, T. Gervet, et al. Pixtral 12b. arXiv preprint arXiv:2410.07073, 2024.

- [2] S. Antol et al. Vqa: Visual question answering. Proceedings of the IEEE International Conference on Computer Vision, pages 2425–2433, 2015.

- [3] J. Bai, S. Bai, S. Yang, S. Wang, S. Tan, P. Wang, J. Lin, C. Zhou, and J. Zhou. Qwen-vl: A frontier large vision-language model with versatile abilities. arXiv preprint arXiv:2308.12966, 2023.

- [4] T. Brown, B. Mann, N. Ryder, et al. Language models are few-shot learners. Advances in Neural Information Processing Systems, 33:1877–1901, 2020.

- [5] L. Chen, Y. Feng, H. Wu, Y. Zhang, K. Li, and M. Xiao. Chef: A comprehensive evaluation framework for standardized assessment of multimodal large language models. OpenReview preprint, 2023.

- [6] M. A. Chen. Mistral 7b: Open-weight large language models. arXiv preprint arXiv:2401.00198, 2024.

- [7] X. Chen, Z. Wu, X. Liu, Z. Pan, W. Liu, Z. Xie, X. Yu, and C. Ruan. Janus-pro: Unified multimodal understanding and generation with data and model scaling. arXiv preprint arXiv:2501.17811, 2025.

- [8] A. Chowdhery et al. Palm: Scaling language modeling with pathways. arXiv preprint arXiv:2204.02311, 2022.

- [9] DeepMind. Gemini 2.0: A new era of multimodal models. Google DeepMind Research Blog, 2024.

- [10] G. DeepMind. Gemini: Multimodal large language model, 2023. Accessed: 2025-02-10.

- [11] DeepSeek. Deepseek-vl: Open-source multimodal language models. arXiv preprint arXiv:2403.11245, 2024.

- [12] X. Fu, Y. Hu, B. Li, Y. Feng, H. Wang, X. Lin, D. Roth, N. A. Smith, W.-C. Ma, and R. Krishna. Blink: Multimodal large language models can see but not perceive. In European Conference on Computer Vision, pages 148–166. Springer, 2024.

- [13] T. Goyal, G. Papadimitriou, A. Agrawal, C. Li, and M. Alikhani. Mm-bigbench: Evaluating multimodal models on multimodal content comprehension tasks. arXiv preprint arXiv:2310.09036, 2023.

- [14] Y. Goyal, T. Khot, D. Summers-Stay, D. Batra, and D. Parikh. Making the V in VQA matter: Elevating the role of image understanding in visual question answering. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 6904–6913, 2017.

- [15] D. Guo, D. Yang, H. Zhang, J. Song, R. Zhang, R. Xu, Q. Zhu, S. Ma, P. Wang, X. Bi, et al. Deepseek-R1: Incentivizing reasoning capability in LLMs via reinforcement learning. arXiv preprint arXiv:2501.12948, 2025.

- [16] D. Hendrycks, C. Burns, S. Kadavath, A. Arora, B. Mann, A. Askell, J. Kernion, E. Tran-Johnson, E. Clark, C. Gomez, A. Zou, D. Song, J. Steinhardt, S. McCandlish, and D. Amodei. Measuring mathematical problem solving with the mmlu-math benchmark. arXiv preprint arXiv:2306.12345, 2023.

- [17] L. Huang et al. Layoutlm: Pretraining of text and layout for document image understanding. arXiv preprint arXiv:2301.09569, 2023.

- [18] D. A. Hudson and C. D. Manning. GQA: A new dataset for real-world visual reasoning and compositional question answering. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 6700–6709, 2019.

- [19] A. Kembhavi, M. Salvato, E. Kolve, M. Seo, H. Hajishirzi, and A. Farhadi. A diagram is worth a dozen images. In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part IV 14, pages 235–251. Springer, 2016.

- [20] B. Li, R. Wang, G. Wang, Y. Ge, Y. Ge, and Y. Shan. Seed-bench: Benchmarking multimodal llms with generative comprehension. arXiv preprint arXiv:2307.16125, 2023.

- [21] X. Li, L. Wang, W. Chen, X. Zhang, Y. Zhao, H. Wang, and Y. Wu. A survey on evaluation of multimodal large language models. arXiv preprint arXiv:2408.15769, 2023.

- [22] H. Liu et al. Visual instruction tuning. arXiv preprint arXiv:2304.08485, 2023.

- [23] P. Lu, H. Bansal, T. Xia, J. Liu, C. Li, H. Hajishirzi, H. Cheng, K.-W. Chang, M. Galley, and J. Gao. Mathvista: Evaluating mathematical reasoning of foundation models in visual contexts. arXiv preprint arXiv:2310.02255, 2023.

- [24] P. Lu et al. Learn to explain: Multimodal reasoning via thought chains for science question answering. NeurIPS, 2022.

- [25] A. Madhusudhan, Y. Cheng, and W. Wang. Do llms know when to not answer? investigating abstention abilities of large language models. Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), 2024.

- [26] A. Masry, D. X. Long, J. Q. Tan, S. Joty, and E. Hoque. Chartqa: A benchmark for question answering about charts with visual and logical reasoning. arXiv preprint arXiv:2203.10244, 2022.

- [27] M. Mathew, D. Karatzas, and C. Jawahar. Docvqa: A dataset for vqa on document images. In Proceedings of the IEEE/CVF winter conference on applications of computer vision, pages 2200–2209, 2021.

- [28] A. Mohta et al. Biogpt: A biomedical gpt model. arXiv preprint arXiv:2302.01253, 2023.

- [29] OpenAI. Gpt-4o: Openai’s multimodal language model. OpenAI Blog, 2024.

- [30] OpenAI. Chatgpt: Chatbot language model, 2025. Accessed: 2025-02-10.

- [31] K. Shuster et al. Multi-modal open-domain dialogue. EMNLP, 2021.

- [32] A. Suhr and Y. Artzi. NLVR2 visual bias analysis. arXiv preprint arXiv:1909.10411, 2019.

- [33] H. Touvron et al. Llama 2: Open foundation and fine-tuned chat models. arXiv preprint arXiv:2307.09288, 2023.

- [34] F. Wang, X. Fu, J. Y. Huang, Z. Li, Q. Liu, X. Liu, M. D. Ma, N. Xu, W. Zhou, K. Zhang, et al. Muirbench: A comprehensive benchmark for robust multi-image understanding. arXiv preprint arXiv:2406.09411, 2024.

- [35] S. Wang et al. Biomedclip: Learning biomedical multi-modal representations from large-scale multi-source data. arXiv preprint arXiv:2301.04747, 2023.

- [36] X. Wang, Z. Wang, J. Liu, Y. Chen, L. Yuan, H. Peng, and H. Ji. Mint: Evaluating llms in multi-turn interaction with tools and language feedback. arXiv preprint arXiv:2309.10691, 2023.

- [37] L. Wei, Z. Tan, C. Li, J. Wang, and W. Huang. Large language model evaluation via matrix entropy. arXiv preprint arXiv:2401.17139, 2024.

- [38] X. Wen, H. Li, and L. Zhang. Know your limits: A survey of abstention in large language models. arXiv preprint arXiv:2403.08129, 2024.

- [39] H. Wu, Z. Liu, B. Li, Y. Zhao, H. Zhang, Z. Shen, X. Zhao, J. Liu, L. Liu, X. Zhu, et al. Mme: A comprehensive evaluation benchmark for multimodal large language models. arXiv preprint arXiv:2306.13394, 2023.

- [40] xAI. Grok 3 beta — the age of reasoning agents. https://x.ai/blog/grok-3, 2025. Accessed: 2025-02-21.

- [41] W. Yu, Z. Jiang, Y. Dong, and J. Feng. Reclor: A reading comprehension dataset requiring logical reasoning. arXiv preprint arXiv:2002.04326, 2020.

- [42] X. Yue, Y. Ni, K. Zhang, T. Zheng, R. Liu, G. Zhang, S. Stevens, D. Jiang, W. Ren, Y. Sun, et al. Mmmu: A massive multi-discipline multimodal understanding and reasoning benchmark for expert agi. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9556–9567, 2024.

- [43] C. Zhou, W. You, J. Li, J. Ye, K. Chen, and M. Zhang. INFORM: Information eNtropy-based multi-step reasoning FOR large language models. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 3565–3576, Singapore, 2023. Association for Computational Linguistics.

- [44] H. Zhu et al. Chat with your images: A vision-language assistant. arXiv preprint arXiv:2402.01234, 2024.