Visual Prompt Tuning in Null Space for Continual Learning

Abstract

Existing prompt-tuning methods have demonstrated impressive performance in continual learning (CL) by selecting and updating relevant prompts in vision-transformer models. In contrast, this paper aims to learn each task by tuning the prompts in the direction orthogonal to the subspace spanned by previous tasks’ features, so as to ensure no interference with previously learned tasks and thereby overcome catastrophic forgetting in CL. However, unlike orthogonal projection in traditional CNN architectures, prompt gradient orthogonal projection in the ViT architecture poses different and greater challenges, i.e., 1) the high-order and non-linear self-attention operation; 2) the drift of prompt distribution brought by the LayerNorm in the transformer block. Theoretically, we deduce two consistency conditions to achieve the prompt gradient orthogonal projection, which provide a theoretical guarantee of eliminating interference on previously learned knowledge via the self-attention mechanism in visual prompt tuning. In practice, we propose an effective null-space-based approximation solution to implement the prompt gradient orthogonal projection. Extensive experimental results demonstrate the effectiveness of anti-forgetting on four class-incremental benchmarks with diverse pre-trained baseline models, and our approach outperforms state-of-the-art methods. Our code is available at https://github.com/zugexiaodui/VPTinNSforCL.

1 Introduction

Continual learning (CL) is crucial for AI models to adapt to ever-changing environments by learning from sequentially arriving data, where catastrophic forgetting is the key challenge [21, 28]. Recently, prompt tuning-based continual learning methods [40, 32, 34, 43, 10, 22, 38, 45, 20, 12, 18] have been attracting increasing attention due to their impressive performance in the CL field. Existing prompt tuning-based works tackle the downstream continual learning problem by selecting and updating relevant prompts, which are encoded with full task-specific knowledge while exploiting the general knowledge of the pre-trained ViTs [40, 39].

In contrast, this paper aims to learn each task by tuning the prompts in the direction orthogonal to the subspace spanned by previous tasks’ features, so as to ensure no interference with previously learned tasks and thereby overcome catastrophic forgetting in CL. It is worth noting that forgetting can be theoretically resolved by gradient orthogonal projection methods [42, 31, 36, 44], which have been extensively explored, especially for adapting CNN models. Nevertheless, a large gap remains in transferring the orthogonal projection-based methods of CNNs to visual prompt tuning due to the following challenges: 1) the high-order and non-linear self-attention operation; 2) the drift of prompt distribution brought by the LayerNorm in the transformer block. For the linear operation in convolutional or fully-connected layers, the output features of old tasks can remain unchanged by updating the weights in the orthogonal subspace of previous input features. For self-attention, however, three linear transformations are applied to the input tokens, followed by high-order and non-linear operations for the self-attention interaction of tokens. This makes the relationship between the prompt update and the output image tokens far more complex than a linear one.

In this work, we theoretically deduce two consistency conditions to achieve the prompt gradient orthogonal projection, which provide a theoretical guarantee of eliminating interference on previously learned knowledge via the self-attention mechanism in visual prompt tuning. Concretely, we first take the full self-attention and LayerNorm into consideration and derive a strict condition for eliminating the interference through a comprehensive analysis of the forward propagation of the ViT layer. Second, we convert the condition of self-attention into two sufficient conditions, which enables us to address the challenge of high order and nonlinearity. Third, we propose a constraint of invariant prompt distribution that removes the obstacle brought by the LayerNorm to the final simplification of the conditions. The consistency conditions reveal that if the prompt update is orthogonal to (1) the normalized previous input image tokens projected with the second-order qkv-transformation matrices of the pre-trained model, and (2) the activated attention map generated by image queries and prompt keys, the interference in visual prompt tuning can be eliminated theoretically.

In practice, based on the proposed consistency conditions, we adopt an effective null-space-based approximation solution [36] to implement the prompt gradient orthogonal projection, while the invariant prompt distribution constraint is implemented by a loss function that penalizes the drift of the prompt distribution over sequential tasks. We validate our Null-Space Projection for Prompts (NSP2) approach on extensive class-incremental benchmarks: 10- and 20-split CIFAR-100, 10-split ImageNet-R [39] and 10-split DomainNet [38], with the sequential fine-tuning VPT and CLIP models as baselines. Our approach improves accuracy by 4% to 10% and, for the VPT model, reduces forgetting by 9% to 17%, outperforming state-of-the-art methods.

Our contributions are summarized as follows: (1) We introduce orthogonal projection into visual prompt tuning for continual learning, comprehensively considering the full operations of a transformer layer in the interference problem. (2) We theoretically deduce two sufficient consistency conditions for self-attention and an invariant prompt distribution constraint for LayerNorm, based on which we introduce an effective null-space-based approximation solution to implement the prompt gradient orthogonal projection for visual prompt tuning. (3) Extensive experimental results demonstrate the effectiveness of anti-forgetting on four class-incremental benchmarks with diverse pre-trained baseline models, and our approach outperforms state-of-the-art methods.

2 Related Work

Prompting-Based Approaches: Most prompting-based approaches adopt a two-stage framework [37, 39, 14, 15, 32, 34, 35, 11, 18, 19]: querying a group of prompts for an individual sample and using them to prompt the pre-trained models. For example, L2P [40] first selects a group of prompts from a prompt pool and then feeds them into the ViT. CPrompt [11] proposes to mitigate the gap between the training and testing stages to enhance prediction robustness and boost prompt selection accuracy. These approaches essentially focus on acquiring task-specific prompts tailored to individual samples. There are also several one-stage methods [2, 22, 38, 43, 20] based on prompt tuning: (1) slowly updating trainable parameters [10, 43], e.g., LAE [10] updates an offline expert with a large momentum to reduce the change of features; (2) expandable backbones [45, 20], e.g., EASE [45] trains a distinct lightweight adapter module for each new task and designs a semantic mapping to compensate for the drift of old class prototypes; (3) enhancing classifiers rather than focusing on learning features [38, 22, 12], e.g., ESN [38] proposes an anchor-based classifier alignment approach based on energy-based models. However, these works still lack a theoretical solution to the interference problem for visual prompt tuning. In our work, we conduct an in-depth analysis of this problem and provide theoretical guidance on eliminating the interference.

Orthogonal Projection-Based Approaches: Orthogonal projection-based approaches [42, 4, 8, 31, 36, 17, 44] can theoretically eliminate the interference of new tasks on old tasks for linear layers. OWM [42] constructs a projector to find the direction orthogonal to the input space. GPM [31] first projects new gradients onto the subspace important to the old tasks and then subtracts the projected components when updating parameters. Adam-NSCL [36] projects the parameter updates onto the approximate null space of previous inputs. However, because parameter updates relate to outputs differently in linear operations and in self-attention, the consistency condition used in CNNs is not directly applicable to prompt tuning in ViTs. In our work, we derive the consistency conditions for visual prompt tuning, enabling orthogonal projection-based approaches to be applied to it, and we adopt the null-space projection [36] to obtain an approximate solution efficiently. We notice that a recently emerged work, PGP [26], applies GPM [31] to prompt-based frameworks. However, it reaches the same conclusion as that of the linear operation under a simplified attention, which limits its applicability and performance, as compared in Appendix D.

3 Preliminaries

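Continual Learning: In the setting of continual learning, a network $f_\theta$ with parameters $\theta$ is sequentially trained on a stream of disjoint tasks $\{\mathcal{T}_1, \mathcal{T}_2, \ldots, \mathcal{T}_T\}$, where task $\mathcal{T}_t$ is associated with paired data $\{(x_t^{(i)}, y_t^{(i)})\}_{i=1}^{|\mathcal{T}_t|}$ of size $|\mathcal{T}_t|$. When a task $\mathcal{T}_t$ arrives, the model is trained on the current task, while the data from previous tasks is unavailable.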

Forward Propagation of Visual Prompt Tuning in ViT Layers: We describe the forward propagation process of the ViT layer for visual prompt tuning, as illustrated in Figure 1. Let $X \in \mathbb{R}^{N \times D}$ and $P \in \mathbb{R}^{M \times D}$ denote the input image tokens of a sample (including the pre-trained class token if available) and the prompts, respectively, where $D$ is the dimension of each token. In the ViT layer, only the prompts are trainable parameters. The remaining parameters in the LayerNorm, qkv-transformations and subsequent MLP introduced below are pre-trained and kept frozen. We use $Z = [X; P] \in \mathbb{R}^{(N+M) \times D}$ to denote the concatenated input tokens. First, they undergo the LayerNorm [1] operation $\mathrm{LN}(\cdot)$:

$$\mathrm{LN}(Z) = \frac{Z - \mu_Z}{\sigma_Z} \odot \alpha + \beta \tag{1}$$

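where $\mu_Z, \sigma_Z \in \mathbb{R}^{N+M}$ are the mean and standard deviation of each token, and $\alpha, \beta \in \mathbb{R}^{D}$ are the affine parameters. The $\odot$ and the division here denote element-wise (Hadamard) product and division, respectively. Note that the vectors $\mu_Z$, $\sigma_Z$, $\alpha$ and $\beta$ are broadcast to matrices of dimensions $(N+M) \times D$, enabling element-wise operations with $Z$. Then the normalized tokens $Z' = \mathrm{LN}(Z)$ are fed into the qkv-transformations: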

$$Q_Z = Z' W_q + b_q, \qquad K_Z = Z' W_k + b_k, \qquad V_Z = Z' W_v + b_v \tag{2}$$

where $W_q, W_k, W_v \in \mathbb{R}^{D \times D}$ and $b_q, b_k, b_v \in \mathbb{R}^{D}$. Each bias vector is broadcast to a matrix of dimensions $(N+M) \times D$ to facilitate the addition. Next is the self-attention:

$$f_{\mathrm{SA}}(Z') = \mathrm{softmax}\Big(\frac{Q_X K_Z^\top}{\sqrt{D}}\Big) V_Z \tag{3}$$

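where $Q_X \in \mathbb{R}^{N \times D}$ denotes the rows of $Q_Z$ for the image tokens, i.e., the image tokens serving as queries. More generally, the subscripts $X$ and $P$ denote the rows of $Q_Z$, $K_Z$ and $V_Z$ that correspond to the image tokens and the prompts, respectively. Eq. (3) can be expanded into Affinity, softmax (on rows) and Aggregation operations: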

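$$A = \frac{Q_X K_Z^\top}{\sqrt{D}} = \frac{Q_X \big[K_X^\top,\ K_P^\top\big]}{\sqrt{D}} = \big[A_X,\ A_P\big] \tag{4}$$

$$S = \mathrm{softmax}(A) = \big[S_X,\ S_P\big] \tag{5}$$

$$O = S V_Z = \big[S_X,\ S_P\big] \begin{bmatrix} V_X \\ V_P \end{bmatrix} = S_X V_X + S_P V_P \tag{6}$$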

It is worth noting that the rows of the attention map where the prompts serve as queries (i.e., $Q_P K_Z^\top$) do not need to be computed, as formulated in Eq. (4) and illustrated in Figure 1. The reason is that in VPT-Deep [13], the output prompts of this ViT layer will be replaced with new trainable prompts in the subsequent layer. Omitting $Q_P K_Z^\top$ has no impact on the output image tokens of the ViT layer, as the subsequent Aggregation, LayerNorm and MLP operations are performed independently for each token. If no new prompts are added in the next layer, the output prompts can simply be discarded as well.

After the self-attention, another LayerNorm and the MLP layer are applied to each token individually, without any interaction among the tokens. Finally, the output fine-tuned image tokens are fed into the next ViT layer.
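As a rough illustration of Eqs. (1) to (6), the NumPy sketch below implements a single-head version of this forward pass. It is not the authors' code: the weights are random stand-ins for the frozen pre-trained parameters, and the helper names (`layer_norm`, `random_params`, `prompt_self_attention`) and the LayerNorm `eps` are our own assumptions. Only the image-token rows of the attention map are computed, as in Eq. (4); because each row of the Aggregation depends only on its own query, these rows coincide with the image-token rows of full self-attention.

```python
import numpy as np


def layer_norm(Z, alpha, beta, eps=1e-6):
    # Eq. (1): normalize each token (row) over its D features, then apply alpha and beta.
    mu = Z.mean(axis=1, keepdims=True)
    sigma = np.sqrt(Z.var(axis=1, keepdims=True) + eps)
    return (Z - mu) / sigma * alpha + beta


def softmax_rows(A):
    # Row-wise softmax; subtracting the row max does not change the result.
    E = np.exp(A - A.max(axis=1, keepdims=True))
    return E / E.sum(axis=1, keepdims=True)


def random_params(rng, D):
    # Hypothetical frozen "pre-trained" parameters, drawn at random for illustration.
    p = {name: rng.standard_normal((D, D)) / np.sqrt(D) for name in ("Wq", "Wk", "Wv")}
    p.update({name: rng.standard_normal(D) for name in ("bq", "bk", "bv", "alpha", "beta")})
    return p


def prompt_self_attention(X, P, p):
    """Single-head attention of Eqs. (1)-(6) in which only the N image tokens act as queries."""
    N, D = X.shape
    Zn = layer_norm(np.concatenate([X, P], axis=0), p["alpha"], p["beta"])  # Z = [X; P]
    Q = Zn @ p["Wq"] + p["bq"]
    K = Zn @ p["Wk"] + p["bk"]
    V = Zn @ p["Wv"] + p["bv"]
    A = Q[:N] @ K.T / np.sqrt(D)           # Affinity, Eq. (4): shape (N, N + M)
    S = softmax_rows(A)                    # Eq. (5)
    S_X, S_P = S[:, :N], S[:, N:]          # attention on image keys / prompt keys
    return S_X @ V[:N] + S_P @ V[N:]       # Aggregation, Eq. (6)


rng = np.random.default_rng(0)
N, M, D = 4, 3, 8
p = random_params(rng, D)
X, P = rng.standard_normal((N, D)), rng.standard_normal((M, D))
out = prompt_self_attention(X, P, p)
print(out.shape)  # (4, 8)
```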

Orthogonal Projection in Convolutional Layers: A convolutional operation is actually a linear operation. For a convolutional layer $f_{\mathrm{conv}}(\cdot)$ in task $t$, we use $W_t \in \mathbb{R}^{D_{\mathrm{in}} \times D_{\mathrm{out}}}$ to denote its unrolled convolutional kernel matrix [5]. Here, $D_{\mathrm{in}}$ represents the number of pixels within a kernel, and $D_{\mathrm{out}}$ corresponds to the number of kernels. Each convolutional patch from the input feature map is flattened into a row vector of dimension $D_{\mathrm{in}}$. These row vectors from all $n$ patches compose the input feature matrix $X_t \in \mathbb{R}^{n \times D_{\mathrm{in}}}$. The output feature $X_t W_t$ for $X_t$ in task $t$ is expected to remain unchanged (referred to as consistent) in the next task to prevent forgetting:

$$X_t W_t = X_t W_{t+1} \tag{7}$$

By substituting $W_{t+1} = W_t + \Delta W$, with $\Delta W$ denoting the weight update in task $t+1$, the consistency condition for the convolutional layer is established as follows:

$$X_t W_t = X_t (W_t + \Delta W) \tag{8}$$

which can be further simplified as:

$$X_t \Delta W = 0 \tag{9}$$

Eq. (9) suggests that if the weight update $\Delta W$ is orthogonal to the previous input feature $X_t$ during training on the new task, the corresponding output feature will remain unchanged. Thereby, the interference of the new task on the old task is eliminated. This can be realized by projecting the candidate weight update $\Delta W^*$ into the subspace orthogonal to the rows of $X_t$: $\Delta W = B \Delta W^*$, where $B \in \mathbb{R}^{D_{\mathrm{in}} \times D_{\mathrm{in}}}$ is an orthogonal projection matrix [42, 36, 31].
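The projection step for a linear (unrolled convolutional) layer can be sketched as follows, assuming an exact null space exists because there are fewer patch rows than kernel entries. The SVD-based helper `null_space_projector` and the toy sizes are hypothetical illustrations; [36] estimates an approximate null space from feature statistics rather than using this exact version.

```python
import numpy as np


def null_space_projector(X, tol=1e-10):
    """B = U U^T, where the columns of U span {v : X v = 0} (right singular vectors with zero singular values)."""
    _, s, Vt = np.linalg.svd(X)            # full_matrices=True, so Vt has shape (d_in, d_in)
    rank = int((s > tol).sum())
    U = Vt[rank:].T                        # orthonormal basis of the null space of X
    return U @ U.T


rng = np.random.default_rng(0)
n, d_in, d_out = 5, 12, 4                  # n < d_in, so an exact null space exists
X_t = rng.standard_normal((n, d_in))       # old-task input features (rows = flattened patches)
W_t = rng.standard_normal((d_in, d_out))   # unrolled kernel matrix after task t
dW_star = rng.standard_normal((d_in, d_out))  # candidate update from the optimizer

B = null_space_projector(X_t)
dW = B @ dW_star                           # projected update, Delta W = B Delta W*
W_next = W_t + dW

print(np.abs(X_t @ dW).max())              # round-off level: Eq. (9) holds
```

Because $B$ is symmetric and idempotent, applying it once per optimizer step is enough; the old-task outputs $X_t W_t$ are preserved exactly, while the update is still free to move in the remaining $D_{\mathrm{in}} - \mathrm{rank}(X_t)$ directions.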

Similarly, for prompt tuning, which fine-tunes the prompts $P_t$ in a ViT layer $f(\cdot)$, we also aim to satisfy the following consistency objective for the purpose of anti-forgetting:

$$f(X_t, P_t) = f(X_t, P_{t+1}) \tag{10}$$

However, the consistency condition in Eq. (9) does not carry over to Eq. (10), since in prompt tuning the output of a ViT layer is not a linear function of the trainable prompts. Instead, the tokens $X_t$ and $P_t$ first undergo a LayerNorm and then interact via the self-attention mechanism, as described above. The complicated forward propagation within the ViT layer makes it very challenging to analyze the consistency conditions with respect to the prompt update $\Delta P$. In the next section, we tackle this challenge and derive the consistency conditions for visual prompt tuning.

4 Method

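We use $Z_t = [X_t; P_t]$ and $Z_{t+1} = [X_t; P_{t+1}]$ to denote the input tokens before and after updating the prompts, respectively, where $P_{t+1} = P_t + \Delta P$. Subscripts such as $Q_{X_t}$, $K_{P_t}$ and $V_{P_{t+1}}$ denote the rows of the qkv outputs in Eq. (2) that correspond to the indicated tokens, and $\mu_{P}$, $\sigma_{P}$ denote the LayerNorm statistics of the prompt rows. Our goal is to analyze how to satisfy Eq. (10) and derive one or more conditions expressed in terms of the prompt update $\Delta P$. These conditions will subsequently guide the application of orthogonal projection to $\Delta P$.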

4.1 Analysis of Consistency Conditions

As can be seen in Figure 1, the outputs of the LayerNorm and qkv-transformations corresponding to the image tokens remain unaffected by the prompt updates. Hence, attaining the consistency objective in Eq. (10) reduces to keeping the output of the self-attention in Eq. (3) unchanged as the prompts are updated, i.e., satisfying:

$$f_{\mathrm{SA}}\big(\mathrm{LN}(Z_t)\big) = f_{\mathrm{SA}}\big(\mathrm{LN}(Z_{t+1})\big) \tag{11}$$

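However, the nonlinear operation (i.e., $\mathrm{softmax}(\cdot)$) and the potential higher-order terms in Eq. (3) complicate the direct resolution of this objective. Specifically, the softmax function is not injective (adding the same constant to every entry of a row leaves its output unchanged), so the solutions are not unique. Moreover, since both the attention map (through $K_{Z_{t+1}}$) and $V_{Z_{t+1}}$ depend on $\Delta P$, their product yields terms that are quadratic or higher-order in $\Delta P$, which makes optimizing $\Delta P$ difficult.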
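The non-injectivity of softmax mentioned above is easy to see numerically: adding the same constant to every affinity in a row leaves the attention weights unchanged, so different affinities can yield the same attention map. This toy snippet only illustrates that property.

```python
import numpy as np


def softmax(a):
    e = np.exp(a - a.max())
    return e / e.sum()


a = np.array([1.0, 2.0, 0.5])
b = a + 3.0                                 # a different row of affinities ...
print(np.allclose(softmax(a), softmax(b)))  # True: ... yet the same attention weights
```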

To address this issue, we propose two sufficient conditions consisting solely of linear operations. Specifically, we split the process of self-attention into two primary stages, i.e., the Affinity described by Eq. (4) and the Aggregation outlined in Eq. (6). We can achieve Eq. (11) by ensuring the consistency of each stage:

$$Q_{X_t} K_{Z_t}^\top = Q_{X_t} K_{Z_{t+1}}^\top \tag{12}$$

$$S_{Z_t} V_{Z_t} = S_{Z_{t+1}} V_{Z_{t+1}} \tag{13}$$

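Here, $S_{Z_t}$ and $S_{Z_{t+1}}$ denote the attention maps of Eq. (5) computed from $Z_t$ and $Z_{t+1}$, respectively. We first analyze the consistency objective of Affinity, i.e., Eq. (12), for $Z_t$ and $Z_{t+1}$: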

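$$Q_{X_t} K_{Z_t}^\top = Q_{X_t} \big[K_{X_t}^\top,\ K_{P_t}^\top\big] = \big[Q_{X_t} K_{X_t}^\top,\ Q_{X_t} K_{P_t}^\top\big] \tag{14}$$

$$Q_{X_t} K_{Z_{t+1}}^\top = Q_{X_t} \big[K_{X_t}^\top,\ K_{P_{t+1}}^\top\big] = \big[Q_{X_t} K_{X_t}^\top,\ Q_{X_t} K_{P_{t+1}}^\top\big] \tag{15}$$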

where the scaling factor $1/\sqrt{D}$ is omitted for simplicity. Upon fulfilling Eq. (12), we obtain $S_{Z_t} = S_{Z_{t+1}} = [S_{X_t}, S_{P_t}]$, corresponding to the output of Eq. (5). Subsequently, we analyze the consistency objective of Aggregation in Eq. (13), yielding the results for $Z_t$ and $Z_{t+1}$ as:

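$$S_{Z_t} V_{Z_t} = \big[S_{X_t},\ S_{P_t}\big] \begin{bmatrix} V_{X_t} \\ V_{P_t} \end{bmatrix} = S_{X_t} V_{X_t} + S_{P_t} V_{P_t} \tag{16}$$

$$S_{Z_{t+1}} V_{Z_{t+1}} = \big[S_{X_t},\ S_{P_t}\big] \begin{bmatrix} V_{X_t} \\ V_{P_{t+1}} \end{bmatrix} = S_{X_t} V_{X_t} + S_{P_t} V_{P_{t+1}} \tag{17}$$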

Based on Eqs. (12) to (17), and noting that the terms involving $\beta$, $b_k$ and $b_v$ are identical on both sides and cancel, we derive the following two equations, respectively:

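$$Q_{X_t} W_k^\top \Big(\frac{P_t - \mu_{P_t}}{\sigma_{P_t}} \odot \alpha\Big)^\top = Q_{X_t} W_k^\top \Big(\frac{P_{t+1} - \mu_{P_{t+1}}}{\sigma_{P_{t+1}}} \odot \alpha\Big)^\top \tag{18}$$

$$S_{P_t} \Big(\frac{P_t - \mu_{P_t}}{\sigma_{P_t}} \odot \alpha\Big) W_v = S_{P_t} \Big(\frac{P_{t+1} - \mu_{P_{t+1}}}{\sigma_{P_{t+1}}} \odot \alpha\Big) W_v \tag{19}$$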

Note that we would like to further reduce Eq. (18) and Eq. (19) to equations among $\Delta P$, $X_t$ and $P_t$. However, due to the square root and quadratic terms in the expressions of the standard deviations $\sigma_{P_t}$ and $\sigma_{P_{t+1}}$, it is difficult to express $\sigma_{P_{t+1}}$ in terms of $\sigma_{P_t}$ and $\Delta P$. Consequently, it is challenging to derive a straightforward equation that relates $P_t$ and $P_{t+1}$ through $\Delta P$.

To simplify the problem, we introduce an additional constraint on the distribution of prompts. Concretely, we require that the updated prompts $P_{t+1}$ retain the same distribution (i.e., the same per-token mean and standard deviation) as $P_t$, i.e., that they meet the following assumption:

$$\mu_{P_{t+1}} = \mu_{P_t}, \qquad \sigma_{P_{t+1}} = \sigma_{P_t} \tag{20}$$

In this way, we can establish a straightforward mathematical relationship connecting $P_t$, $P_{t+1}$ and $\Delta P$:

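$$\frac{P_{t+1} - \mu_{P_{t+1}}}{\sigma_{P_{t+1}}} = \frac{P_t + \Delta P - \mu_{P_t}}{\sigma_{P_t}} = \frac{P_t - \mu_{P_t}}{\sigma_{P_t}} + \frac{\Delta P}{\sigma_{P_t}} \tag{21}$$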
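To see what Eq. (20) buys, the sketch below compares the exact normalization of $P_{t+1}$ with the right-hand side of Eq. (21). As a hypothetical update that satisfies Eq. (20) exactly, it permutes the entries within each prompt, which preserves every row's mean and standard deviation; a generic random update does not, and Eq. (21) then fails. The helper names are ours and the data is random.

```python
import numpy as np

rng = np.random.default_rng(0)
M, D = 3, 6
P_t = rng.standard_normal((M, D))


def token_stats(P):
    # Per-token (row-wise) mean and standard deviation, as used inside LayerNorm.
    return P.mean(axis=1, keepdims=True), P.std(axis=1, keepdims=True)


def eq21_holds(P_t, dP):
    # Compare the exact normalization of P_{t+1} with the right-hand side of Eq. (21).
    P_next = P_t + dP
    mu_t, sd_t = token_stats(P_t)
    mu_n, sd_n = token_stats(P_next)
    lhs = (P_next - mu_n) / sd_n
    rhs = (P_t - mu_t) / sd_t + dP / sd_t
    return np.allclose(lhs, rhs)


# Hypothetical update that preserves each prompt's mean and std (Eq. (20)):
# permuting the entries within each row leaves the row statistics unchanged.
dP_invariant = np.stack([rng.permutation(row) for row in P_t]) - P_t
# A generic update changes the statistics, so Eq. (20) and hence Eq. (21) fail.
dP_generic = rng.standard_normal((M, D))

print(eq21_holds(P_t, dP_invariant))  # True
print(eq21_holds(P_t, dP_generic))    # False
```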

Consequently, we can apply Eq. (21) to simplify Eq. (18) and (19) as:

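$$Q_{X_t} W_k^\top \Big(\frac{\Delta P}{\sigma_{P_t}} \odot \alpha\Big)^\top = 0 \tag{22}$$

$$S_{P_t} \Big(\frac{\Delta P}{\sigma_{P_t}} \odot \alpha\Big) W_v = 0 \tag{23}$$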

It should be noted that in Eq. (22) and Eq. (23), $W_k$, $W_v$ and $\alpha$ are pre-trained parameters kept frozen throughout the continual learning process, while $Q_{X_t}$ and $S_{P_t}$ are two matrices derived from the input $Z_t$. Since our objective is to ensure that the above two equations hold for the prompt update $\Delta P$, it is sufficient to meet the following conditions, in which $\sigma_{P_t}$ can be ignored whereas $\alpha$ remains crucial:

$$Q_{X_t} W_k^\top \operatorname{diag}(\alpha)\, \Delta P^\top = 0 \tag{24}$$

$$S_{P_t}\, \Delta P = 0 \tag{25}$$

Now we have obtained the simplified formulas expressed by in Eq. (24) and (25).

To sum up, we convert the overall consistency equation Eq. (11) into two sufficient conditions Eq. (12) and (13) for Affinity and Aggregation, respectively. Consequently, we derive two corresponding consistency conditions Eq. (24) and (25) expressed by the prompt update , under the constraint of invariant prompt distribution formulated in Eq. (20). The deduced conditions can satisfy the consistency objective in Eq. (10), thereby achieving the goal of eliminating the interference of the new task on the old task for visual prompt tuning.

As $Q_{X_t} = X'_t W_q + b_q$ with $X'_t = \mathrm{LN}(X_t)$, Eq. (24) implies that if the (transposed) prompt update $\Delta P^\top$ is orthogonal to the normalized previous input image tokens $X'_t$ projected with the second-order transformation matrix $W_q W_k^\top$ of the pre-trained ViT, the consistency of Affinity is guaranteed. When we ignore the normalization and the bias term in $Q_{X_t}$, Eq. (24) simplifies to $X_t W_q W_k^\top \Delta P^\top = 0$. This simplified condition is still essentially different from the consistency condition of linear layers (i.e., Eq. (9)) and from that deduced in [26] (i.e., $X_t \Delta P^\top = 0$). It indicates that the interaction between the image tokens and prompts within ViT layers is fundamentally different, leading to a unique consistency condition involving the second-order transformation matrix of the pre-trained model. Moreover, Eq. (25) is also essential, serving as one of the sufficient conditions for the consistency of the whole ViT layer. It implies that if the prompt update $\Delta P$ is orthogonal to the activated attention map $S_{P_t}$ generated by the image queries ($Q_{X_t}$) and prompt keys ($K_{P_t}$), the consistency of Aggregation is achieved.
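The following sketch checks numerically that Eq. (24), together with Eq. (20), keeps the prompt columns of the Affinity unchanged, i.e., $Q_{X_t} K_{P_t}^\top = Q_{X_t} K_{P_{t+1}}^\top$ as required by Eq. (12). All matrices are random toy stand-ins, and Eq. (20) is imposed by hand by freezing the LayerNorm statistics of the prompts at their task-$t$ values; a real update only approximately satisfies this, through the loss of Eq. (27). The names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(1)
N, M, D = 3, 4, 8
Q_X = rng.standard_normal((N, D))          # frozen image queries Q_{X_t}
W_k = rng.standard_normal((D, D))
b_k = rng.standard_normal(D)
alpha, beta = rng.standard_normal(D), rng.standard_normal(D)
P_t = rng.standard_normal((M, D))

# Assumption (Eq. (20)): the prompt statistics stay at their task-t values.
mu_t = P_t.mean(axis=1, keepdims=True)
sd_t = P_t.std(axis=1, keepdims=True)


def prompt_keys(P):
    # K_P = LN(P) W_k + b_k, with the LayerNorm statistics frozen to (mu_t, sd_t).
    return ((P - mu_t) / sd_t * alpha + beta) @ W_k + b_k


# Null-space projector for G = Q_X W_k^T diag(alpha), so that G dP^T = 0 (Eq. (24)).
G = Q_X @ W_k.T @ np.diag(alpha)
_, s, Vt = np.linalg.svd(G)
U1 = Vt[int((s > 1e-10).sum()):].T
dP_star = rng.standard_normal((M, D))      # unconstrained candidate update
dP = dP_star @ U1 @ U1.T                   # projected update

affinity_before = Q_X @ prompt_keys(P_t).T
affinity_after = Q_X @ prompt_keys(P_t + dP).T
print(np.allclose(affinity_before, affinity_after))  # True: prompt columns of Eq. (12) preserved
```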

4.2 Optimization of Consistency Conditions

To jointly satisfy Eq. (24) and (25), we need to find a $\Delta P$ that meets both equations concurrently. Here, we employ a separate optimization approach to obtain an approximate solution efficiently. First, it ensures that $\Delta P$ is orthogonal to the subspace spanned by $Q_{X_t} W_k^\top \operatorname{diag}(\alpha)$ to satisfy Eq. (24). Then, it makes $\Delta P$ orthogonal to the subspace spanned by $S_{P_t}$ to satisfy Eq. (25).

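Specifically, we use $\Delta P^*$ to denote the candidate parameter update generated by the optimizer for the prompts. We aim to obtain a projection $\Delta P = \mathcal{B}(\Delta P^*)$ that satisfies Eq. (24) and (25). Following the separate optimization strategy mentioned above, we first make $\Delta P$ orthogonal to $Q_{X_t} W_k^\top \operatorname{diag}(\alpha)$ with a projection matrix $B_1 \in \mathbb{R}^{D \times D}$: $\Delta P = \Delta P^* B_1$. Then $\Delta P$ is made orthogonal to $S_{P_t}$ with another projection matrix $B_2 \in \mathbb{R}^{M \times M}$: $\Delta P = B_2 \Delta P^* B_1$. Therefore, the optimization objective turns into obtaining the two projection matrices $B_1$ and $B_2$ that satisfy Eq. (24) and (25). Inspired by the null-space projection method [36], the bases of $B_1$ and $B_2$ correspond to the null-space bases of $Q_{X_t} W_k^\top \operatorname{diag}(\alpha)$ and $S_{P_t}$, respectively. We use $U_1 \in \mathbb{R}^{D \times R_1}$ and $U_2 \in \mathbb{R}^{M \times R_2}$ to denote the bases of the null spaces of $Q_{X_t} W_k^\top \operatorname{diag}(\alpha)$ and $S_{P_t}$, where $R_1$ and $R_2$ indicate their nullities. $U_1$ and $U_2$ can be obtained as the right singular vectors associated with the zero singular values, by applying singular value decomposition (SVD) to $Q_{X_t} W_k^\top \operatorname{diag}(\alpha)$ and $S_{P_t}$, respectively. In this way, we obtain the projection matrices $B_1 = U_1 U_1^\top$ and $B_2 = U_2 U_2^\top$, which enable $\Delta P$ to jointly satisfy Eq. (24) and (25):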

$$\Delta P = B_2\, \Delta P^*\, B_1 = U_2 U_2^\top\, \Delta P^*\, U_1 U_1^\top \tag{26}$$
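Eq. (26) can be sketched with two SVDs, as below. This is a minimal illustration under toy assumptions: random stand-ins for $Q_{X_t} W_k^\top \operatorname{diag}(\alpha)$ and $S_{P_t}$, a single sample, and sizes chosen so that exact null spaces exist. The function names are hypothetical, and the practical method uses approximate null spaces instead.

```python
import numpy as np


def null_space_basis(A, tol=1e-10):
    # Right singular vectors of A whose singular values are (numerically) zero.
    _, s, Vt = np.linalg.svd(A)
    return Vt[int((s > tol).sum()):].T


def project_prompt_update(dP_star, G, S_P):
    """Eq. (26): Delta P = B2 Delta P* B1, with B1 = U1 U1^T and B2 = U2 U2^T."""
    U1 = null_space_basis(G)      # (D, R1): null space of G = Q_{X_t} W_k^T diag(alpha)
    U2 = null_space_basis(S_P)    # (M, R2): null space of the prompt attention map S_{P_t}
    B1 = U1 @ U1.T
    B2 = U2 @ U2.T
    return B2 @ dP_star @ B1


rng = np.random.default_rng(0)
N, M, D = 3, 5, 8                 # toy sizes with N < M and N < D, so exact null spaces exist
G = rng.standard_normal((N, D))   # stands in for Q_{X_t} W_k^T diag(alpha)
S_P = rng.random((N, M))          # stands in for S_{P_t} (non-negative attention weights)
dP_star = rng.standard_normal((M, D))  # candidate update from the optimizer

dP = project_prompt_update(dP_star, G, S_P)
print(np.abs(G @ dP.T).max(), np.abs(S_P @ dP).max())  # both at round-off level
```

Since $B_1$ acts on the feature dimension and $B_2$ on the prompt dimension, the two projections commute in effect: $G B_1 = 0$ kills Eq. (24) and $S_{P_t} B_2 = 0$ kills Eq. (25), whichever is applied first.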

For the constraint in Eq. (20), we incorporate an additional loss function $\mathcal{L}_{\mathrm{LN}}$ that penalizes the drift of the prompt distribution, thereby realizing a relaxed version of this constraint:

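$$\mathcal{L}_{\mathrm{LN}} = \big\|\mu_{P_t} - \mu_{P_{t+1}}\big\|_1 + \big\|\sigma_{P_t} - \sigma_{P_{t+1}}\big\|_1 \tag{27}$$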

In Eq. (27), $\mu_{P_t}$ and $\sigma_{P_t}$ represent the target prompt distribution obtained in task $t$, while $\mu_{P_{t+1}}$ and $\sigma_{P_{t+1}}$ denote the distribution to be optimized in task $t+1$.
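A minimal sketch of the drift penalty follows. It assumes the $\ell_1$ form written in Eq. (27) and uses NumPy only for illustration; in training, the same expression would be written in an autodiff framework so that its gradient flows to the prompts. The function names are hypothetical.

```python
import numpy as np


def prompt_stats(P):
    # Per-prompt (row-wise) mean and standard deviation, matching LayerNorm's statistics.
    return P.mean(axis=1), P.std(axis=1)


def ln_drift_loss(P, mu_target, sd_target):
    """Assumed L1 form of the drift penalty: ||mu_t - mu||_1 + ||sigma_t - sigma||_1."""
    mu, sd = prompt_stats(P)
    return np.abs(mu_target - mu).sum() + np.abs(sd_target - sd).sum()


rng = np.random.default_rng(0)
P_t = rng.standard_normal((4, 8))          # prompts at the end of task t
mu_t, sd_t = prompt_stats(P_t)             # stored as the target distribution

print(ln_drift_loss(P_t, mu_t, sd_t))        # 0.0: no drift
print(ln_drift_loss(P_t + 1.0, mu_t, sd_t))  # about 4.0: each of the 4 means shifted by 1
```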

4.3 Extension to Multi-Heads

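We further extend the consistency conditions Eq. (24) and (25) to multi-head self-attention, a common component of current transformer-based models. Suppose there are $H$ heads and $D_h = D/H$ is the dimension of each token in a head. We use $Q_{X_t,h} \in \mathbb{R}^{N \times D_h}$, $W_{k,h} \in \mathbb{R}^{D \times D_h}$ and $S_{P_t,h} \in \mathbb{R}^{N \times M}$ to denote the corresponding matrices in Eq. (24) and (25) for the $h$-th head, respectively. The objective is to ensure that these conditions are met across all heads, i.e., $Q_{X_t,h} W_{k,h}^\top \operatorname{diag}(\alpha)\, \Delta P^\top = 0$ and $S_{P_t,h} \Delta P = 0$, $\forall h \in \{1, \ldots, H\}$. Let $\hat{G}_t = [Q_{X_t,1} W_{k,1}^\top; \ldots; Q_{X_t,H} W_{k,H}^\top] \in \mathbb{R}^{HN \times D}$ and $\hat{S}_t = [S_{P_t,1}; \ldots; S_{P_t,H}] \in \mathbb{R}^{HN \times M}$ represent the matrices concatenated row-wise from all the heads. Based on block matrix properties, the two sets of conditions can be formulated as $\hat{G}_t \operatorname{diag}(\alpha)\, \Delta P^\top = 0$ and $\hat{S}_t \Delta P = 0$. To sum up, the main difference between single-head and multi-head self-attention is that, for the latter, the parameter update should be orthogonal to the subspace spanned by the concatenated matrices from all heads. Therefore, the multi-head variant only requires an additional step that concatenates the matrices from all heads in our algorithm.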
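The concatenation step can be sketched as follows: the per-head matrices are stacked row-wise and a single null space is computed for each condition, after which the projected update satisfies the condition of every head. The head sizes, the column slicing of $Q_{X_t}$ and $W_k$ into heads, and all names are illustrative assumptions on random toy data.

```python
import numpy as np


def null_space_basis(A, tol=1e-10):
    _, s, Vt = np.linalg.svd(A)
    return Vt[int((s > tol).sum()):].T


rng = np.random.default_rng(0)
N, M, D, H = 2, 8, 12, 3
Dh = D // H
Q_X = rng.standard_normal((N, D))          # image queries of all heads, side by side
W_k = rng.standard_normal((D, D))          # key projection of all heads, side by side
alpha = rng.standard_normal(D)
S_heads = [rng.random((N, M)) for _ in range(H)]  # per-head prompt attention maps S_{P_t,h}

# Per-head matrices Q_{X_t,h} W_{k,h}^T diag(alpha), each of shape (N, D).
cols = [slice(h * Dh, (h + 1) * Dh) for h in range(H)]
G_heads = [Q_X[:, c] @ W_k[:, c].T @ np.diag(alpha) for c in cols]

# Stack the heads row-wise, then take a single null space for each condition.
U1 = null_space_basis(np.concatenate(G_heads, axis=0))   # (H*N, D) -> (D, R1)
U2 = null_space_basis(np.concatenate(S_heads, axis=0))   # (H*N, M) -> (M, R2)
dP = U2 @ U2.T @ rng.standard_normal((M, D)) @ U1 @ U1.T

print(all(np.allclose(G @ dP.T, 0) for G in G_heads),
      all(np.allclose(S @ dP, 0) for S in S_heads))  # True True
```

Stacking shrinks the null space (here $R_1 = D - HN$ and $R_2 = M - HN$ for generic data), which is why the approximate null spaces discussed next matter in practice.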

5 Experiments

5.1 Experimental Setups

In our experiments, we mainly use VPT [13] with a ViT-B/16 backbone [9] pre-trained on ImageNet-21k. Additionally, we validate the effectiveness on the CLIP [27] model, where the visual prompts are inserted into the image encoder. Our experiments are conducted on 4 class-incremental benchmarks: 10- and 20-split CIFAR-100, 10-split ImageNet-R and 10-split DomainNet. We report the mean final average accuracy and final average forgetting over 3 runs with different random seeds. Since the exact null spaces of $Q_{X_t} W_k^\top \operatorname{diag}(\alpha)$ and $S_{P_t}$ may not exist in practice, we compute approximate null spaces and determine the nullities $R_1$ and $R_2$ adaptively, rather than in the way suggested in [36]. For more details on the experimental setups, please refer to Appendix B.
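The paper's adaptive rule for choosing $R_1$ and $R_2$ is described in Appendix B and is not reproduced here. As a hedged stand-in, the sketch below accumulates an uncentered covariance over the batches of a task, in the spirit of [36], and keeps the eigenvectors whose eigenvalues fall below a relative threshold. The class name `ApproxNullSpace` and the `rel_threshold` value are assumptions for illustration only.

```python
import numpy as np


class ApproxNullSpace:
    """Hypothetical helper: accumulate the uncentered covariance A^T A over the batches of a task
    and keep the eigenvectors whose eigenvalues are tiny relative to the largest one."""

    def __init__(self, dim):
        self.cov = np.zeros((dim, dim))
        self.rows = 0

    def update(self, A):
        # A: (rows, dim) batch of feature rows, e.g. rows of Q_{X_t} W_k^T diag(alpha).
        self.cov += A.T @ A
        self.rows += A.shape[0]

    def projector(self, rel_threshold=1e-3):
        cov = self.cov / max(self.rows, 1)
        eigvals, eigvecs = np.linalg.eigh(cov)      # symmetric matrix, ascending eigenvalues
        keep = eigvals <= rel_threshold * eigvals.max()
        U = eigvecs[:, keep]                        # approximate null-space basis
        return U @ U.T, int(keep.sum())             # projector and estimated nullity


rng = np.random.default_rng(0)
dim, true_rank = 10, 4
basis = rng.standard_normal((true_rank, dim))
ns = ApproxNullSpace(dim)
for _ in range(5):                                  # five batches of nearly rank-4 features
    A = rng.standard_normal((20, true_rank)) @ basis + 1e-6 * rng.standard_normal((20, dim))
    ns.update(A)

B, nullity = ns.projector()
print(nullity)  # expected: 6 = dim - true_rank
```

The null space of $A^\top A$ equals that of $A$, so accumulating the covariance lets the projector cover all samples of a task without storing them; a looser threshold enlarges the nullity and trades stability for plasticity.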

| Method | 10S-CIFAR-100 Acc. | 10S-CIFAR-100 Forgetting | 20S-CIFAR-100 Acc. | 20S-CIFAR-100 Forgetting | 10S-ImageNet-R Acc. | 10S-ImageNet-R Forgetting | 10S-DomainNet Acc. | 10S-DomainNet Forgetting |
|---|---|---|---|---|---|---|---|---|
| VPT-Seq | 87.27 | 12.33 | 82.36 | 17.36 | 72.46 | 19.41 | 73.28 | 25.65 |
| VPT-NSP2 | 91.74 | 3.28 | 89.89 | 4.91 | 78.88 | 5.06 | 83.54 | 8.54 |
| Upper-bound | 93.87 | - | 93.87 | - | 84.60 | - | 89.25 | - |
| CLIP-Seq | 72.91 | 15.13 | 71.37 | 17.89 | 75.69 | 19.21 | 67.73 | 35.60 |
| CLIP-NSP2 | 80.96 | 12.45 | 79.83 | 13.77 | 82.17 | 6.42 | 77.04 | 18.33 |
| Upper-bound | 84.52 | - | 84.52 | - | 84.86 | - | 81.65 | - |

5.2 Main Results

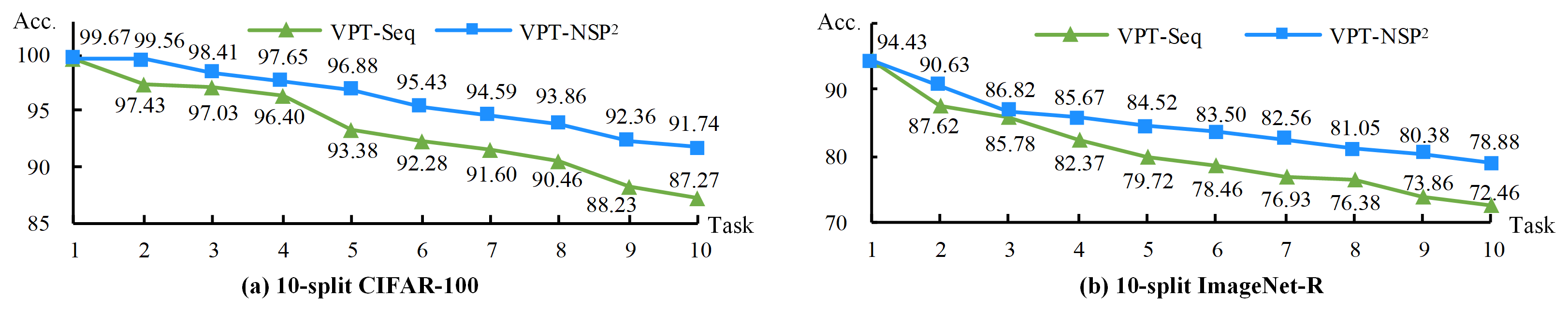

Validation of Effectiveness: Table 1 compares our approach with the sequential fine-tuning VPT and CLIP baselines. For the VPT model, the proposed NSP2 improves accuracy by 4.47% to 10.26% on the 4 benchmarks, while reducing forgetting by 9.05% to 17.11%. For the CLIP model, NSP2 improves accuracy by 6.48% to 9.31% and reduces forgetting by 2.68% to 17.27%. We also calculate the accuracy across all previously encountered tasks after completing training on each task. The accuracy curves of VPT-Seq and VPT-NSP2 on 10-split CIFAR-100 and 10-split ImageNet-R are displayed in Figure 2. They show that our approach consistently outperforms the baseline throughout the sequential learning of tasks.
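The final average accuracy and forgetting can be computed from the accuracy matrix recorded after each task. The sketch below uses one common task-averaged definition; this is an assumption, since the exact protocol is given in Appendix B and class-incremental accuracy is sometimes measured over all seen classes instead. The function names and example numbers are hypothetical.

```python
import numpy as np


def final_average_accuracy(acc):
    """acc[i, j]: accuracy (%) on task j after training on task i, for j <= i (T x T)."""
    return float(acc[-1].mean())


def final_average_forgetting(acc):
    # For each old task j, the drop from its best earlier accuracy to its final accuracy.
    T = acc.shape[0]
    drops = [acc[j:T - 1, j].max() - acc[T - 1, j] for j in range(T - 1)]
    return float(np.mean(drops))


acc = np.array([[90.0, 0.0, 0.0],
                [85.0, 88.0, 0.0],
                [80.0, 84.0, 92.0]])
print(final_average_accuracy(acc))    # 85.333...
print(final_average_forgetting(acc))  # 7.0
```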

We conduct additional experiments with the VPT model using weights pre-trained on different datasets and with different paradigms, as shown in Figure 3. The pre-training paradigms and datasets include: naive classification on ImageNet-1k [30], DINO [3] on ImageNet-1k, MIIL [29] on ImageNet-21k-P and CLIP on LAION-2B [6] (we only use its image encoder). As the figure shows, our approach not only significantly enhances accuracy but also markedly mitigates forgetting. These results further demonstrate the generalizability of the proposed approach.

| Method | Venue | 10S-CIFAR-100 Acc. | 10S-CIFAR-100 Forgetting | 20S-CIFAR-100 Acc. | 20S-CIFAR-100 Forgetting | 10S-ImageNet-R Acc. | 10S-ImageNet-R Forgetting | 10S-DomainNet Acc. | 10S-DomainNet Forgetting |
|---|---|---|---|---|---|---|---|---|---|
| L2P [40] | CVPR’22 | 83.83±0.04 | 7.63±0.30 | 80.10±0.72‡ | - | 61.57±0.66 | 9.73±0.47 | 81.17±0.83† | 8.98±1.25 |
| DualPrompt [39] | ECCV’22 | 86.51±0.33 | 5.16±0.09 | 82.02±0.32‡ | - | 68.13±0.49 | 4.68±0.20 | 81.70±0.78† | 8.04±0.31 |
| CODA-P [32] | CVPR’23 | 86.25±0.74 | 1.67±0.26 | - | - | 75.45±0.56 | 1.64±0.10 | 80.04±0.79† | 10.16±0.35 |
| ESN [38] | AAAI’23 | 86.34±0.52 | 4.76±0.14 | 80.56±0.94‡ | - | 62.61±0.96‡ | - | 79.22±2.04† | 10.62±2.12 |
| APG [33] | ICCV’23 | 89.35 | 6.01 | 88.64 | 6.51 | 73.27 | 8.59 | - | - |
| LAE [10] | ICCV’23 | 85.59±0.46 | - | 83.93±0.28 | - | 72.66±0.63 | - | - | - |
| DualP-LGCL [15] | ICCV’23 | 87.23±0.21 | 5.10±0.15 | - | - | 69.46±0.04 | 4.20±0.06 | - | - |
| C-LN [23] | ICCVW’23 | 86.95±0.37 | 6.98±0.43 | - | - | 76.36±0.51 | 8.31±1.28 | - | - |
| EvoPrompt [18] | AAAI’24 | 87.97±0.30 | 2.60±0.42 | 84.64±0.14 | 3.98±0.24 | 76.83±0.08 | 2.78±0.06 | - | - |
| OVOR-Deep [12] | ICLR’24 | 85.99±0.89 | 6.42±2.03 | - | - | 76.11±0.21 | 7.16±0.34 | - | - |
| DualP-PGP [26] | ICLR’24 | 86.92±0.05 | 5.35±0.19 | 83.74±0.01 | 7.91±0.15 | 69.34±0.05 | 4.53±0.04 | - | - |
| InfLoRA [20] | CVPR’24 | 87.06±0.25 | - | - | - | 75.65±0.14 | - | - | - |
| EASE [45] | CVPR’24 | 87.76 | - | 85.80 | - | 76.17 | - | - | - |
| CPrompt [11] | CVPR’24 | 87.82±0.21 | 5.06±0.50 | - | - | 77.14±0.11 | 5.97±0.68 | 82.97±0.34 | 7.45±0.93 |
| VPT-NSP2 | This work | 91.74±0.63 | 3.28±0.45 | 89.89±0.72 | 4.91±0.59 | 78.88±0.50 | 5.06±0.26 | 83.54±0.77 | 8.54±0.48 |

Comparison with Existing Methods: We compare our method with existing methods in Table 2, where the competitors include many recent works. The proposed VPT-NSP2 achieves state-of-the-art accuracy on the four benchmarks, surpassing the second-best approach by an average of 1.49%. The forgetting of our approach is not the lowest, which is reasonable since our approach sacrifices some stability for a better trade-off between stability and plasticity. The higher accuracy demonstrates the superiority of our method.

| Components | 10S-CIFAR-100 Acc. | 10S-CIFAR-100 Forgetting | 20S-CIFAR-100 Acc. | 20S-CIFAR-100 Forgetting | 10S-ImageNet-R Acc. | 10S-ImageNet-R Forgetting | 10S-DomainNet Acc. | 10S-DomainNet Forgetting |
|---|---|---|---|---|---|---|---|---|
| None (VPT-Seq) | 87.27 | 12.33 | 82.36 | 17.36 | 72.46 | 19.41 | 73.28 | 25.65 |
| $B_1$ | 90.58 | 6.91 | 88.13 | 10.27 | 78.05 | 8.14 | 82.31 | 10.89 |
| | 88.74 | 10.85 | 83.32 | 16.48 | 74.71 | 14.69 | 78.87 | 17.81 |
| | 91.33 | 4.22 | 88.96 | 6.42 | 78.37 | 6.25 | 83.17 | 8.95 |
| | 91.42 | 3.94 | 88.46 | 8.64 | 78.30 | 6.31 | 83.13 | 9.32 |
| | 89.36 | 9.32 | 86.67 | 11.59 | 75.27 | 13.35 | 79.45 | 16.50 |
| $B_1$ + $B_2$ + $\mathcal{L}_{\mathrm{LN}}$ (VPT-NSP2) | 91.74 | 3.28 | 89.89 | 4.91 | 78.88 | 5.06 | 83.54 | 8.54 |

Ablation Study: The two consistency conditions, Eq. (24) and (25), along with the constraint in Eq. (20), constitute the main components of our approach. They correspond to $B_1$ and $B_2$ in Eq. (26) and $\mathcal{L}_{\mathrm{LN}}$ in Eq. (27), respectively. We study their effects on the four benchmarks using VPT-NSP2, with results presented in Table 3. The projection for Affinity ($B_1$) plays a crucial role, bringing 3.31% to 9.03% improvements in accuracy and 5.42% to 14.76% reductions in forgetting. Furthermore, the projection for Aggregation ($B_2$) and the loss for invariant prompt distribution are also indispensable for minimizing forgetting. The best accuracy is achieved when all three components are applied.

Model Analysis: We analyze the evolution of training losses on the 10-split CIFAR-100 and 10-split ImageNet-R benchmarks, as shown in Figure 4. Each point on a curve represents the training loss on the data of a previous task after the model has been trained on subsequent tasks. As can be seen, the losses of VPT-NSP2 on previous tasks are almost retained, confirming that our approach effectively mitigates the interference of new tasks on old tasks.

Trade-off between Stability and Plasticity: We first adaptively determine the nullities $R_1$ and $R_2$ for $B_1$ and $B_2$ to achieve near-minimum forgetting. Based on this, we assign two weights $\eta_1$ and $\eta_2$ to the projection matrices to control the trade-off between stability and plasticity: $B_i' = \eta_i B_i + (1 - \eta_i) I$ for $i \in \{1, 2\}$, where $I$ denotes the identity matrix. The effects of $\eta_1$ and $\eta_2$, which are set to the same value, are shown in Figure 5. As the weight decreases, the accuracy first increases owing to better plasticity and then decreases due to worse stability caused by forgetting. This implies that the two projection weights can be used to achieve a trade-off between stability and plasticity.
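Assuming the weighted projections take the form $\eta B + (1 - \eta) I$, the sketch below shows how the weight trades stability for plasticity: because the old-task matrix annihilates $B$, the remaining interference scales exactly with $1 - \eta$. The matrices are random toy data and the helper name is ours.

```python
import numpy as np


def relaxed_projector(B, eta):
    # Assumed form: eta = 1 keeps the full null-space projection (stability),
    # eta = 0 disables it (plasticity), values in between interpolate.
    return eta * B + (1.0 - eta) * np.eye(B.shape[0])


rng = np.random.default_rng(0)
G = rng.standard_normal((3, 8))            # stands in for an old-task feature matrix
_, s, Vt = np.linalg.svd(G)
U = Vt[int((s > 1e-10).sum()):].T
B = U @ U.T                                # exact null-space projector, G @ B = 0
g = rng.standard_normal(8)                 # candidate update direction

for eta in (1.0, 0.5, 0.0):
    leak = np.linalg.norm(G @ relaxed_projector(B, eta) @ g)
    print(eta, leak)  # interference grows linearly as (1 - eta) * ||G g||
```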

| Method | 50S-CIFAR100 Acc. | 50S-CIFAR100 Forgetting | 50S-ImageNet-R Acc. | 50S-ImageNet-R Forgetting | 50S-DomainNet Acc. | 50S-DomainNet Forgetting | 100S-ImageNet-R Acc. | 100S-ImageNet-R Forgetting | 100S-DomainNet Acc. | 100S-DomainNet Forgetting |
|---|---|---|---|---|---|---|---|---|---|---|
| L2P | 76.19 | 12.06 | 48.53 | 12.99 | 59.45 | 11.53 | 38.87 | 15.26 | 50.52 | 17.66 |
| EvoPrompt | 76.60 | 13.86 | 68.53 | 10.03 | 67.68 | 10.41 | 61.84 | 15.84 | 56.35 | 21.39 |
| OVOR | 65.69 | 14.28 | 60.08 | 5.86 | 66.27 | 7.43 | 40.49 | 8.12 | 47.65 | 8.91 |
| InfLoRA | 61.49 | 13.68 | 59.02 | 11.02 | 69.96 | 9.51 | 38.16 | 15.11 | 44.32 | 17.85 |
| EASE | 74.47 | 9.31 | 68.17 | 7.76 | 61.20 | 10.01 | 47.55 | 8.22 | 33.08 | 32.14 |
| CPrompt | 74.97 | 7.45 | 68.47 | 8.16 | 67.87 | 9.36 | 56.95 | 10.20 | 53.73 | 12.14 |
| VPT-Seq | 70.47 | 29.21 | 56.38 | 37.91 | 58.39 | 44.79 | 49.72 | 45.53 | 46.39 | 49.34 |
| VPT-NSP2† | 81.92 | 6.56 | 67.32 | 6.35 | 70.13 | 9.92 | 59.97 | 10.07 | 54.44 | 11.04 |
| VPT-NSP2‡ | 82.98 | 6.66 | 69.48 | 6.51 | 71.28 | 11.36 | 62.23 | 12.13 | 57.35 | 13.82 |

Long-sequence Continual Learning: We experiment on 5 benchmarks under the protocols of 50 tasks and 100 tasks to validate that our approach remains effective for long-sequence continual learning. The results are presented in Table 4, where VPT-NSP2† and VPT-NSP2‡ denote our approach without and with the plasticity enhancement, respectively. Even without the plasticity enhancement, VPT-NSP2† is competitive with existing state-of-the-art approaches, achieving the best accuracy on 50S-CIFAR100 and 50S-DomainNet, and it surpasses L2P by a large margin. This suggests that forgetting is still the predominant factor affecting performance over long sequences of tasks. With the plasticity enhancement, VPT-NSP2‡ improves accuracy by 1.1% to 2.9% and achieves the best accuracy on all five benchmarks, indicating that the plasticity enhancement is effective for learning new knowledge in long-sequence continual learning.

6 Conclusion

In this paper, we study the interference problem of visual prompt tuning in ViTs and propose two consistency conditions that theoretically eliminate the interference under the constraint of invariant prompt distribution. Together with this constraint, they guarantee the consistency of Affinity, Aggregation and the prompt distribution in LayerNorm, respectively, which jointly achieve the consistency objective of the whole ViT layer. We adopt null-space projection to implement the two conditions and use an extra loss to satisfy the constraint. Our experiments on various benchmarks demonstrate the effectiveness of the proposed conditions for anti-forgetting, and our approach achieves state-of-the-art performance.

Limitation Discussion: To simplify the derivation of our consistency conditions, we introduce a constraint of invariant prompt distribution. Although the strong empirical results suggest that this is not an overly restrictive assumption, it is only approximately satisfied through a relaxed loss, so the resulting solution is not exact.

References

- [1] Lei Jimmy Ba, Jamie Ryan Kiros, and Geoffrey E. Hinton. Layer Normalization. CoRR, abs/1607.06450, 2016.

- [2] Benjamin Bowman, Alessandro Achille, Luca Zancato, Matthew Trager, Pramuditha Perera, Giovanni Paolini, and Stefano Soatto. À-la-carte Prompt Tuning (APT): Combining Distinct Data Via Composable Prompting. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 14984–14993, 2023.

- [3] Mathilde Caron, Hugo Touvron, Ishan Misra, Hervé Jégou, Julien Mairal, Piotr Bojanowski, and Armand Joulin. Emerging Properties in Self-Supervised Vision Transformers. In IEEE/CVF International Conference on Computer Vision, pages 9630–9640, 2021.

- [4] Arslan Chaudhry, Naeemullah Khan, Puneet K. Dokania, and Philip H. S. Torr. Continual Learning in Low-rank Orthogonal Subspaces. In Advances in Neural Information Processing Systems, 2020.

- [5] Kumar Chellapilla, Sidd Puri, and Patrice Simard. High performance convolutional neural networks for document processing. In Tenth international workshop on frontiers in handwriting recognition. Suvisoft, 2006.

- [6] Mehdi Cherti, Romain Beaumont, Ross Wightman, Mitchell Wortsman, Gabriel Ilharco, Cade Gordon, Christoph Schuhmann, Ludwig Schmidt, and Jenia Jitsev. Reproducible Scaling Laws for Contrastive Language-Image Learning. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 2818–2829, 2023.

- [7] Ekin D. Cubuk, Barret Zoph, Dandelion Mané, Vijay Vasudevan, and Quoc V. Le. AutoAugment: Learning Augmentation Strategies From Data. In IEEE/CVF International Conference on Computer Vision, pages 113–123, 2019.

- [8] Danruo Deng, Guangyong Chen, Jianye Hao, Qiong Wang, and Pheng-Ann Heng. Flattening Sharpness for Dynamic Gradient Projection Memory Benefits Continual Learning. In Advances in Neural Information Processing Systems, volume 34, pages 18710–18721, 2021.

- [9] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, Jakob Uszkoreit, and Neil Houlsby. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In International Conference on Learning Representations, 2021.

- [10] Qiankun Gao, Chen Zhao, Yifan Sun, Teng Xi, Gang Zhang, Bernard Ghanem, and Jian Zhang. A Unified Continual Learning Framework with General Parameter-Efficient Tuning. In IEEE/CVF International Conference on Computer Vision, pages 11449–11459, 2023.

- [11] Zhanxin Gao, Jun Cen, and Xiaobin Chang. Consistent Prompting for Rehearsal-Free Continual Learning. CoRR, abs/2403.08568, 2024.

- [12] Wei-Cheng Huang, Chun-Fu Chen, and Hsiang Hsu. OVOR: OnePrompt with virtual outlier regularization for rehearsal-free class-incremental learning. In International Conference on Learning Representations, 2024.

- [13] Menglin Jia, Luming Tang, Bor-Chun Chen, Claire Cardie, Serge J. Belongie, Bharath Hariharan, and Ser-Nam Lim. Visual Prompt Tuning. In European Conference on Computer Vision, volume 13693, pages 709–727, 2022.

- [14] Dahuin Jung, Dongyoon Han, Jihwan Bang, and Hwanjun Song. Generating instance-level prompts for rehearsal-free continual learning. In IEEE/CVF International Conference on Computer Vision, pages 11813–11823, October 2023.

- [15] Muhammad Gul Zain Ali Khan, Muhammad Ferjad Naeem, Luc Van Gool, Didier Stricker, Federico Tombari, and Muhammad Zeshan Afzal. Introducing language guidance in prompt-based continual learning. In IEEE/CVF International Conference on Computer Vision, pages 11429–11439, October 2023.

- [16] Diederik P. Kingma and Jimmy Ba. Adam: A Method for Stochastic Optimization. In International Conference on Learning Representations, 2015.

- [17] Yajing Kong, Liu Liu, Zhen Wang, and Dacheng Tao. Balancing Stability and Plasticity Through Advanced Null Space in Continual Learning. In European Conference on Computer Vision, volume 13686, pages 219–236, 2022.

- [18] Muhammad Rifki Kurniawan, Xiang Song, Zhiheng Ma, Yuhang He, Yihong Gong, Yang Qi, and Xing Wei. Evolving Parameterized Prompt Memory for Continual Learning. In AAAI Conference on Artificial Intelligence, pages 13301–13309, 2024.

- [19] Zhuowei Li, Long Zhao, Zizhao Zhang, Han Zhang, Di Liu, Ting Liu, and Dimitris N. Metaxas. Steering Prototypes with Prompt-Tuning for Rehearsal-Free Continual Learning. In IEEE/CVF Winter Conference on Applications of Computer Vision, pages 2523–2533, 2024.

- [20] Yan-Shuo Liang and Wu-Jun Li. InfLoRA: Interference-free low-rank adaptation for continual learning. CoRR, abs/2404.00228, 2024.

- [21] Michael McCloskey and Neal J. Cohen. Catastrophic interference in connectionist networks: The sequential learning problem. In Psychology of Learning and Motivation, volume 24, pages 109–165. Elsevier, 1989.

- [22] Mark D. McDonnell, Dong Gong, Amin Parvaneh, Ehsan Abbasnejad, and Anton van den Hengel. RanPAC: Random Projections and Pre-trained Models for Continual Learning. In Advances in Neural Information Processing Systems, 2023.

- [23] Thomas De Min, Massimiliano Mancini, Karteek Alahari, Xavier Alameda-Pineda, and Elisa Ricci. On the Effectiveness of LayerNorm Tuning for Continual Learning in Vision Transformers. In IEEE/CVF International Conference on Computer Vision Workshops, pages 3577–3586, 2023.

- [24] Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, Alban Desmaison, Andreas Köpf, Edward Z. Yang, Zachary DeVito, Martin Raison, Alykhan Tejani, Sasank Chilamkurthy, Benoit Steiner, Lu Fang, Junjie Bai, and Soumith Chintala. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems, pages 8024–8035, 2019.

- [25] Xingchao Peng, Qinxun Bai, Xide Xia, Zijun Huang, Kate Saenko, and Bo Wang. Moment Matching for Multi-Source Domain Adaptation. In IEEE/CVF International Conference on Computer Vision, pages 1406–1415, 2019.

- [26] Jingyang Qiao, Zhizhong Zhang, Xin Tan, Chengwei Chen, Yanyun Qu, Yong Peng, and Yuan Xie. Prompt Gradient Projection for Continual Learning. In International Conference on Learning Representations, 2024.

- [27] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, and Ilya Sutskever. Learning Transferable Visual Models From Natural Language Supervision. In International Conference on Machine Learning, volume 139, pages 8748–8763, 2021.

- [28] Roger Ratcliff. Connectionist models of recognition memory: Constraints imposed by learning and forgetting functions. Psychological Review, 97(2):285, 1990.

- [29] Tal Ridnik, Emanuel Ben Baruch, Asaf Noy, and Lihi Zelnik. ImageNet-21K Pretraining for the Masses. In NeurIPS Datasets and Benchmarks, 2021.

- [30] Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael S. Bernstein, Alexander C. Berg, and Li Fei-Fei. ImageNet Large Scale Visual Recognition Challenge. International Journal of Computer Vision, 115(3):211–252, 2015.

- [31] Gobinda Saha, Isha Garg, and Kaushik Roy. Gradient Projection Memory for Continual Learning. In International Conference on Learning Representations, 2021.

- [32] James Seale Smith, Leonid Karlinsky, Vyshnavi Gutta, Paola Cascante-Bonilla, Donghyun Kim, Assaf Arbelle, Rameswar Panda, Rogerio Feris, and Zsolt Kira. CODA-Prompt: COntinual Decomposed Attention-Based Prompting for Rehearsal-Free Continual Learning. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 11909–11919, June 2023.

- [33] Yu-Ming Tang, Yi-Xing Peng, and Wei-Shi Zheng. When Prompt-based Incremental Learning Does Not Meet Strong Pretraining. In IEEE/CVF International Conference on Computer Vision, pages 1706–1716, 2023.

- [34] Liyuan Wang, Jingyi Xie, Xingxing Zhang, Mingyi Huang, Hang Su, and Jun Zhu. Hierarchical Decomposition of Prompt-Based Continual Learning: Rethinking Obscured Sub-optimality. In Advances in Neural Information Processing Systems, 2023.

- [35] Runqi Wang, Xiaoyue Duan, Guoliang Kang, Jianzhuang Liu, Shaohui Lin, Songcen Xu, Jinhu Lv, and Baochang Zhang. AttriCLIP: A Non-Incremental Learner for Incremental Knowledge Learning. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 3654–3663, June 2023.

- [36] Shipeng Wang, Xiaorong Li, Jian Sun, and Zongben Xu. Training Networks in Null Space of Feature Covariance for Continual Learning. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 184–193, June 2021.

- [37] Yabin Wang, Zhiwu Huang, and Xiaopeng Hong. S-Prompts Learning with Pre-trained Transformers: An Occam’s Razor for Domain Incremental Learning. In Advances in Neural Information Processing Systems, 2022.

- [38] Yabin Wang, Zhiheng Ma, Zhiwu Huang, Yaowei Wang, Zhou Su, and Xiaopeng Hong. Isolation and Impartial Aggregation: A Paradigm of Incremental Learning without Interference. In AAAI Conference on Artificial Intelligence, pages 10209–10217, 2023.

- [39] Zifeng Wang, Zizhao Zhang, Sayna Ebrahimi, Ruoxi Sun, Han Zhang, Chen-Yu Lee, Xiaoqi Ren, Guolong Su, Vincent Perot, Jennifer G. Dy, and Tomas Pfister. DualPrompt: Complementary Prompting for Rehearsal-Free Continual Learning. In European Conference on Computer Vision, volume 13686, pages 631–648, 2022.

- [40] Zifeng Wang, Zizhao Zhang, Chen-Yu Lee, Han Zhang, Ruoxi Sun, Xiaoqi Ren, Guolong Su, Vincent Perot, Jennifer Dy, and Tomas Pfister. Learning to Prompt for Continual Learning. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 139–149, June 2022.

- [41] Ross Wightman. Pytorch image models. https://github.com/rwightman/pytorch-image-models, 2019.

- [42] Guanxiong Zeng, Yang Chen, Bo Cui, and Shan Yu. Continual Learning of Context-Dependent Processing in Neural Networks. Nature Machine Intelligence, 1(8):364–372, August 2019.

- [43] Gengwei Zhang, Liyuan Wang, Guoliang Kang, Ling Chen, and Yunchao Wei. SLCA: Slow Learner with Classifier Alignment for Continual Learning on a Pre-trained Model. In IEEE/CVF International Conference on Computer Vision, pages 19091–19101, October 2023.

- [44] Zhen Zhao, Zhizhong Zhang, Xin Tan, Jun Liu, Yanyun Qu, Yuan Xie, and Lizhuang Ma. Rethinking Gradient Projection Continual Learning: Stability/Plasticity Feature Space Decoupling. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 3718–3727, June 2023.

- [45] Da-Wei Zhou, Hai-Long Sun, Han-Jia Ye, and De-Chuan Zhan. Expandable Subspace Ensemble for Pre-Trained Model-Based Class-Incremental Learning. CoRR, abs/2403.12030, 2024.

Appendix: Visual Prompt Tuning in Null Space for Continual Learning

Appendix A Algorithm

An overview and the algorithm of our approach are provided in Figure 6 and Algorithm 1, respectively. We first initialize the two overall uncentered covariance matrices [36], together with the two corresponding null-space projection matrices. During training, the cross-entropy loss for classification and the prompt-distribution loss are jointly optimized. The optimizer then produces a candidate prompt update, which is projected by the two null-space projection matrices before being applied to the prompts. After convergence, we compute two per-task matrices from the data of the current task and add them to the corresponding overall uncentered covariance matrices. Finally, we recompute the null-space projection matrices from the updated uncentered covariance matrices for use in the next task.
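The covariance bookkeeping in Algorithm 1 can be illustrated with a minimal NumPy sketch. It assumes that an uncentered covariance matrix is formed as $X^\top X$ from a matrix $X$ whose rows are per-sample feature vectors (no mean subtraction and no normalization by the sample count), and it tracks only one of the two matrices; which features feed each matrix is defined by the consistency conditions in the main text. The class `CovarianceMemory` and the toy shapes are hypothetical.

```python
import numpy as np


def uncentered_covariance(features: np.ndarray) -> np.ndarray:
    """Return X^T X for a (num_samples, dim) feature matrix, without subtracting the mean."""
    return features.T @ features


class CovarianceMemory:
    """Hypothetical container for one overall uncentered covariance matrix."""

    def __init__(self, dim: int) -> None:
        # The overall matrix starts at zero before the first task.
        self.cov = np.zeros((dim, dim))

    def add_task(self, task_features: np.ndarray) -> None:
        # The per-task matrix is computed after convergence on the current task,
        # then merged into the overall matrix by plain addition.
        self.cov += uncentered_covariance(task_features)


rng = np.random.default_rng(0)
memory = CovarianceMemory(dim=8)
task1 = rng.normal(size=(50, 8))
task2 = rng.normal(size=(30, 8))
memory.add_task(task1)
memory.add_task(task2)

# Summing per-task matrices equals X^T X over all data seen so far.
print(np.allclose(memory.cov, uncentered_covariance(np.vstack([task1, task2]))))  # True
```

Because the per-task matrices are simply summed, the memory footprint stays at dim × dim regardless of the number of tasks, and no raw features from earlier tasks need to be kept.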

Algorithm 2 shows how a null-space projection matrix is computed. First, the input uncentered covariance matrix is decomposed by SVD to obtain its singular values and right singular vectors. Next, we determine its nullity (i.e., the dimension of its null space) from the maximum second derivative of the singular-value curve, as described in Section C. We then select the right singular vectors corresponding to the smallest singular values, which are considered close to 0, as the bases of the null space. Finally, we compute the normalized projection matrix, which bounds the scale of the projected gradients and prevents excessively large updates. In our implementation, the null-space projection matrix is further combined with an identity matrix through a weight, with a separate weight for each of the two projection matrices; this weight is a hyper-parameter that trades off stability and plasticity, as also discussed in Section C.
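The steps of Algorithm 2 can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the released implementation: the "normalized" projection is taken to be the orthogonal projector $UU^\top$ built from orthonormal null-space bases $U$ (its spectral norm is 1, so it never enlarges a gradient), the fusion with the identity is assumed to be the convex combination $\eta P + (1-\eta) I$, the corner-based nullity rule follows the description in Section C, projecting a row-wise update by right-multiplication is assumed, and the toy spectrum, features and update shape are made up for the demonstration.

```python
import numpy as np


def adaptive_nullity(singular_values: np.ndarray) -> int:
    """Nullity from the corner of a descending singular-value curve.

    The corner is the index i maximising the discrete second derivative
    s[i-1] - 2*s[i] + s[i+1]; s[i:] are then treated as close to zero.
    """
    s = np.asarray(singular_values, dtype=float)
    if s.size < 3:
        raise ValueError("need at least three singular values")
    second_diff = s[:-2] - 2.0 * s[1:-1] + s[2:]  # entry j belongs to index j + 1
    corner = int(np.argmax(second_diff)) + 1
    return s.size - corner


def null_space_projection(cov: np.ndarray, eta: float) -> np.ndarray:
    """Projection onto the approximate null space of `cov`, fused with the identity."""
    # NumPy returns singular values in descending order; rows of vh are right singular vectors.
    _, s, vh = np.linalg.svd(cov)
    nullity = adaptive_nullity(s)
    basis = vh[s.size - nullity:].T  # (dim, nullity): directions of the smallest singular values
    proj = basis @ basis.T           # orthogonal projector: never increases a gradient's norm
    # Assumed fusion form: eta close to 1 keeps the update mostly inside the null space.
    return eta * proj + (1.0 - eta) * np.eye(cov.shape[0])


rng = np.random.default_rng(0)
q, _ = np.linalg.qr(rng.normal(size=(6, 6)))             # random orthonormal directions
spectrum = np.array([5.0, 4.0, 3.0, 0.01, 0.01, 0.005])  # "L"-shaped toy spectrum
cov = q @ np.diag(spectrum) @ q.T

old_features = rng.normal(size=(10, 3)) @ q[:, :3].T     # rows span the top-3 directions
grad = rng.normal(size=(4, 6))                          # hypothetical (num_prompts, dim) update
update = grad @ null_space_projection(cov, eta=1.0)

print(adaptive_nullity(spectrum))                       # 3
print(np.abs(old_features @ update.T).max() < 1e-8)     # True
```

With `eta=1.0` the projected update is orthogonal to every stored feature direction, which is the linear core of the no-interference argument; values of `eta` slightly below 1 relax this, as discussed in Section C.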

Appendix B Experimental Setups and Implementation Details

Models: We validate our approach on the Vision Transformer (ViT) [9] and CLIP [27] models, whose backbones are both ViT-Base/16 [9]. The ViT is pre-trained on ImageNet-21k, and we insert 4 prompts into each of its 12 layers for fine-tuning, which is referred to as "VPT" [13]. A separate classifier is trained independently in each task, and the orthogonal projection is not applied to the classifiers. At inference, the classifiers of all tasks seen so far are concatenated to make predictions. For the CLIP model pre-trained on WebImageText, we insert 4 prompts into each of the first 3 layers of the image encoder and keep the text encoder frozen. The logit scale, a learnable scalar that scales the cosine similarities between image and text features, is also made trainable. We observed severe cross-task confusion in the CLIP model. Hence, after the prompt-tuning stage of each task, we follow [43] and use the class-wise mean and covariance of previous features, extracted before the embedding projection head (i.e., the last linear layer of the image encoder), to refine the projection head.
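The class-wise statistics used for the head refinement can be sketched as follows. This is a hedged illustration in the spirit of [43], not its exact procedure: it assumes each previous class is summarized by the mean and covariance of its features (taken before the projection head), and that pseudo-features drawn from the corresponding Gaussians are later used as training inputs for the head. The function names, the diagonal jitter and the toy 4-dimensional features are hypothetical.

```python
import numpy as np


def class_statistics(features: np.ndarray, labels: np.ndarray) -> dict:
    """Per-class (mean, covariance) of (num_samples, dim) features."""
    stats = {}
    for c in np.unique(labels):
        feats_c = features[labels == c]
        # rowvar=False: rows are samples, columns are feature dimensions.
        stats[int(c)] = (feats_c.mean(axis=0), np.cov(feats_c, rowvar=False))
    return stats


def sample_pseudo_features(stats: dict, per_class: int, rng: np.random.Generator, jitter: float = 1e-4):
    """Draw Gaussian pseudo-features for every stored class, returned with their labels."""
    xs, ys = [], []
    for c, (mean, cov) in stats.items():
        cov = cov + jitter * np.eye(cov.shape[0])  # keep the covariance positive definite
        xs.append(rng.multivariate_normal(mean, cov, size=per_class))
        ys.append(np.full(per_class, c))
    return np.vstack(xs), np.concatenate(ys)


rng = np.random.default_rng(0)
features = np.vstack([rng.normal(0.0, 1.0, size=(100, 4)), rng.normal(3.0, 1.0, size=(100, 4))])
labels = np.repeat([0, 1], 100)
stats = class_statistics(features, labels)
pseudo_x, pseudo_y = sample_pseudo_features(stats, per_class=2000, rng=rng)
print(pseudo_x.shape, pseudo_y.shape)  # (4000, 4) (4000,)
```

Storing only a mean vector and a covariance matrix per class avoids keeping raw images or features from earlier tasks.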

Benchmarks: We conduct experiments under the class-incremental learning protocol, where the classes in different tasks are disjoint and the task identity is unknown during inference. We adopt four class-incremental benchmarks built on three widely used datasets: 10- and 20-split CIFAR-100, 10-split ImageNet-R [39] and 10-split DomainNet [25, 38]. For CIFAR-100, the 100 classes are randomly split into 10 or 20 tasks, which evaluates the ability to handle different numbers of tasks. Following [39], we randomly split the 200 classes of ImageNet-R into 10 tasks to form the 10-split ImageNet-R benchmark. For 10-split DomainNet, we follow the dataset protocol of [38] and [11]: we select the 200 classes with the most images from the original DomainNet [25] and randomly split them into 10 tasks with 20 classes per task. In each dataset, 25% of the training samples are held out as a validation set for hyper-parameter search.
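A small sketch of the class-to-task split and the validation hold-out, using only the Python standard library. The fixed seeds, the equal-sized chunks after shuffling, and the non-stratified 25% hold-out are assumptions for illustration; the function names are hypothetical.

```python
import random


def split_classes_into_tasks(num_classes: int, num_tasks: int, seed: int = 0) -> list[list[int]]:
    """Shuffle class ids and cut them into equally sized, disjoint tasks."""
    if num_classes % num_tasks != 0:
        raise ValueError("num_classes must be divisible by num_tasks")
    classes = list(range(num_classes))
    random.Random(seed).shuffle(classes)
    per_task = num_classes // num_tasks
    return [classes[t * per_task:(t + 1) * per_task] for t in range(num_tasks)]


def hold_out_validation(sample_ids, fraction: float = 0.25, seed: int = 0):
    """Randomly move a fraction of the training samples into a validation set."""
    ids = list(sample_ids)
    random.Random(seed).shuffle(ids)
    num_val = round(fraction * len(ids))
    return ids[num_val:], ids[:num_val]  # (train ids, validation ids)


# 10-split CIFAR-100: 100 classes into 10 tasks of 10 classes.
tasks = split_classes_into_tasks(num_classes=100, num_tasks=10)
print([len(t) for t in tasks])  # [10, 10, 10, 10, 10, 10, 10, 10, 10, 10]

# 10-split ImageNet-R or DomainNet: 200 classes into 10 tasks of 20 classes.
print(len(split_classes_into_tasks(200, 10)[0]))  # 20

train_ids, val_ids = hold_out_validation(range(1000))
print(len(train_ids), len(val_ids))  # 750 250
```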

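Metrics: Formally, the final average accuracy and the final average forgetting are defined as

$$\mathrm{Acc} = \frac{1}{T}\sum_{i=1}^{T} a_{T,i}, \qquad \mathrm{Forgetting} = \frac{1}{T-1}\sum_{i=1}^{T-1}\max_{j\in\{i,\dots,T-1\}}\left(a_{j,i}-a_{T,i}\right),$$

where $T$ is the number of tasks, $a_{T,i}$ is the accuracy of the $T$-th model on the $i$-th task samples, and $a_{j,i}$ is the accuracy of the $j$-th model on the $i$-th task samples.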
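Both metrics can be computed from an accuracy matrix whose entry $(j, i)$ is the accuracy of the model after task $j$ on the test data of task $i$. The NumPy sketch below assumes the common forgetting definition in which, for every task except the last, the final accuracy is subtracted from the best accuracy obtained on that task before the final task; indices are 0-based and the 3-task matrix is toy data.

```python
import numpy as np


def final_accuracy(acc: np.ndarray) -> float:
    """acc[j, i]: accuracy of the model after task j on the test data of task i (0-based)."""
    num_tasks = acc.shape[0]
    return float(acc[num_tasks - 1, :].mean())


def final_forgetting(acc: np.ndarray) -> float:
    """Average drop from the best pre-final accuracy to the final accuracy, over all but the last task."""
    num_tasks = acc.shape[0]
    drops = []
    for i in range(num_tasks - 1):
        best_before_end = acc[i:num_tasks - 1, i].max()  # models j = i, ..., T-2 (0-based)
        drops.append(best_before_end - acc[num_tasks - 1, i])
    return float(np.mean(drops))


# Toy 3-task matrix (rows: model after task j, columns: task i); entries with j < i are unused.
acc = np.array([
    [90.0, 0.0, 0.0],
    [85.0, 88.0, 0.0],
    [80.0, 84.0, 86.0],
])
print(final_accuracy(acc))    # approx. 83.33
print(final_forgetting(acc))  # 7.0
```

Here task 0 drops from 90 to 80 and task 1 from 88 to 84, so the forgetting is the mean of 10 and 4.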

Higher accuracy means better overall performance, while lower forgetting means stronger stability (i.e., the ability to retain old knowledge). However, lower forgetting does not always lead to higher accuracy, since accuracy is also affected by plasticity (i.e., the ability to learn new knowledge). Accuracy is therefore the main metric, as it reflects the classification performance in practice.

Implementation Details: For all datasets and models, input images are resized to a fixed resolution and augmented with AutoAugment [7] during training. For the VPT-based models, we train for 100 epochs using the Adam optimizer [16] with weight decay, an initial learning rate of 0.01 and a batch size of 256 on all benchmarks. The learning rate is multiplied by 0.1 at the 50th and 80th epochs. Our training loss consists of the cross-entropy loss for classification and the loss in Eq. (27), whose coefficient is set to 1. Through cross-validation on the validation set, we set the temperatures in the cross-entropy loss to 28, 25, 30 and 30 for the 10-split CIFAR-100, 20-split CIFAR-100, 10-split ImageNet-R and 10-split DomainNet benchmarks, respectively. The two hyper-parameters that trade off stability and plasticity in the null-space projection (see Section C) are set to the same value within each benchmark, namely 0.97, 0.95, 0.94 and 0.95 for the four benchmarks, respectively, also chosen by cross-validation.
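The step schedule for the VPT-based models can be written as a small pure-Python function. It assumes 0-based epoch indices and that each decay takes effect from epoch 50 and epoch 80 onwards, which is how PyTorch's `torch.optim.lr_scheduler.MultiStepLR` behaves when `scheduler.step()` is called once per epoch; the function name `step_lr` is hypothetical.

```python
# Settings taken from the text for the VPT-based models.
BASE_LR = 0.01
MILESTONES = (50, 80)
GAMMA = 0.1
NUM_EPOCHS = 100


def step_lr(epoch: int, base_lr: float = BASE_LR, milestones=MILESTONES, gamma: float = GAMMA) -> float:
    """Learning rate used during a 0-based epoch under multi-step decay."""
    # Count how many milestones have already been reached at this epoch.
    num_decays = sum(epoch >= m for m in milestones)
    return base_lr * gamma ** num_decays


schedule = [step_lr(e) for e in range(NUM_EPOCHS)]
print(schedule[0], schedule[50], schedule[80])
```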

For the CLIP-based models, the training settings differ as follows. We train them for 20 epochs with a batch size of 220 and a learning rate of 0.001, which decays at the 10th and 16th epochs. The temperatures are all set to 1, since the logit scale is trainable. Both trade-off hyper-parameters are set to 0.98, which works well for all benchmarks. We refine the embedding projection head for 50 epochs using the SGD optimizer with a learning rate of 0.001, a momentum of 0.9 and weight decay.

Appendix C Trade-off between Stability and Plasticity

Since the exact null space of a covariance matrix often does not exist in practice, Wang et al. [36] suggest approximating it with the bases whose associated singular values approach zero, namely those singular values smaller than a specified multiple of the smallest one. However, we find experimentally that this strategy, together with the empirical rule for choosing the multiple, is not suitable for determining the nullities of the two uncentered covariance matrices in Algorithm 1 when tuning prompts in ViTs. To solve this problem, we propose an adaptive nullity strategy. Exploiting the fact that the curve of descending singular values forms an "L" shape, we divide the curve into two parts at the point where its slope changes fastest, so that the second part covers most of the small singular values. This point is located by maximizing the second derivative of the curve:

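$$k^{*} = \arg\max_{i} \left( \lambda_{i-1} - 2\lambda_{i} + \lambda_{i+1} \right), \tag{28}$$

where $\lambda_i$ denotes the $i$-th singular value in descending order; the singular values from $\lambda_{k^{*}}$ onward are regarded as close to zero, and their number gives the nullity. We find that this choice reaches near-minimum forgetting in our experiments, i.e., near-optimal stability. Furthermore, to enhance plasticity, we fuse the projection matrices $B_1$ and $B_2$ with identity matrices $I$ using weights $\eta_1$ and $\eta_2$, which should be close to 1:

$$B_1 \leftarrow \eta_1 B_1 + (1-\eta_1) I, \qquad B_2 \leftarrow \eta_2 B_2 + (1-\eta_2) I. \tag{29}$$

In this way, we trade off stability and plasticity by enhancing plasticity on top of near-optimal stability, with $\eta_1$ and $\eta_2$ as the hyper-parameters that control the trade-off.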
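The effect of the fusion weight can be checked numerically. The NumPy sketch below assumes the fusion is the convex combination $\eta P + (1-\eta) I$ of an exact null-space projector $P$ and the identity, and that the old features span a known 3-dimensional subspace; all data are random toy values. Under these assumptions, the interference with old features is scaled by exactly $1-\eta$, while the component of the update inside the null space is left unchanged.

```python
import numpy as np

rng = np.random.default_rng(1)
dim = 6
q, _ = np.linalg.qr(rng.normal(size=(dim, dim)))  # random orthonormal basis
old_dirs, null_dirs = q[:, :3], q[:, 3:]          # toy split: old features use the first 3 directions
proj = null_dirs @ null_dirs.T                    # exact null-space projector


def fused_projection(eta: float) -> np.ndarray:
    """Assumed fusion rule: eta * P + (1 - eta) * I."""
    return eta * proj + (1.0 - eta) * np.eye(dim)


old_features = rng.normal(size=(10, 3)) @ old_dirs.T  # rows lie in the old-feature subspace
grad = rng.normal(size=(4, dim))                      # hypothetical candidate prompt update
raw_interference = np.linalg.norm(old_features @ grad.T)

for eta in (1.0, 0.97, 0.9, 0.0):
    update = grad @ fused_projection(eta)
    # Fraction of the unprojected interference that survives the fused projection.
    ratio = np.linalg.norm(old_features @ update.T) / raw_interference
    print(f"eta={eta:.2f}  remaining interference={ratio:.3f}")
# Expected ratios: 0.000, 0.030, 0.100, 1.000 (i.e., 1 - eta)
```

This makes the role of the weight explicit: lowering it below 1 lets a fraction $1-\eta$ of the update act on directions used by earlier tasks, buying plasticity at a proportional cost in stability.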

Appendix D Comparison with PGP

D.1 Difference in Methods

The main differences between our method and PGP [26] are summarized as follows. (1) Even if we ignore the LayerNorm operation and the bias terms in the qkv-transformation, we derive a consistency condition for Affinity that differs from the one used in PGP. (2) We analyze the consistency conditions for the complete self-attention, including the Aggregation operation, whereas PGP does not account for Aggregation. (3) We take the LayerNorm before self-attention into consideration and propose an invariant prompt distribution constraint, whereas PGP ignores LayerNorm.

In summary, we conduct a comprehensive analysis of prompt tuning with respect to the consistency objective, which, under the invariant prompt distribution constraint, guarantees that new tasks do not interfere with previous tasks. As demonstrated by our ablation study in the Experiment section, the consistency of Aggregation and LayerNorm also contributes to reducing forgetting and therefore should not be ignored. We compare the performance of PGP and our approach in the next subsection.

D.2 Performance Comparison

We compare our approach with PGP [26] using the VPT-Seq and L2P [40] baselines on the four benchmarks. The results are shown in Table 5. We apply PGP to VPT (i.e., VPT-PGP) under the same training settings as VPT-NSP2 for a fair comparison. For the L2P-based methods, we insert prompts into the first three layers instead of only the first layer as in the original implementation [40]. An orthogonal projection is also applied to the prompt pool, which is essentially a linear layer in L2P-based models. We follow the training settings of PGP to train the L2P-based methods. The results in Table 5 show that our full approach achieves larger accuracy gains and a larger reduction in forgetting than PGP. Even when only the projection matrix for the Affinity operation is applied, our approach still outperforms PGP, demonstrating its effectiveness in mitigating the interference problem.

| Method | 10S-CIFAR-100 Acc. | 10S-CIFAR-100 Forgetting | 20S-CIFAR-100 Acc. | 20S-CIFAR-100 Forgetting | 10S-ImageNet-R Acc. | 10S-ImageNet-R Forgetting | 10S-DomainNet Acc. | 10S-DomainNet Forgetting |
|---|---|---|---|---|---|---|---|---|
| VPT-Seq | 87.27 | 12.33 | 82.36 | 17.36 | 72.46 | 19.41 | 73.28 | 25.65 |
| VPT-PGP | 87.76 | 11.98 | 82.71 | 16.85 | 73.12 | 18.92 | 73.98 | 25.15 |
| VPT-NSP2 (Affinity only) | 90.58 | 6.91 | 88.13 | 10.27 | 78.05 | 8.14 | 82.31 | 10.89 |
| VPT-NSP2 | 91.74 | 3.28 | 89.89 | 4.91 | 78.88 | 5.06 | 83.54 | 8.54 |
| L2P | 84.12 | 6.36 | 81.46 | 8.69 | 61.25 | 9.32 | 65.73 | 10.19 |
| L2P-PGP | 84.70 | 5.96 | 82.04 | 8.11 | 62.01 | 8.55 | 66.31 | 9.63 |
| L2P-NSP2 (Affinity only) | 86.39 | 4.60 | 82.99 | 7.34 | 64.10 | 7.17 | 67.48 | 8.21 |
| L2P-NSP2 | 86.78 | 4.22 | 83.37 | 6.93 | 64.66 | 6.84 | 68.14 | 7.79 |
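To make the comparison concrete, the accuracy gains over each baseline can be computed directly from the PGP and full-method rows of Table 5. The short Python snippet below only does this arithmetic; the dictionary layout and the function name `gains_over` are illustrative.

```python
# Final accuracy copied from Table 5, in the column order
# 10S-CIFAR-100, 20S-CIFAR-100, 10S-ImageNet-R, 10S-DomainNet.
ACC = {
    "VPT-Seq": [87.27, 82.36, 72.46, 73.28],
    "VPT-PGP": [87.76, 82.71, 73.12, 73.98],
    "VPT-NSP2": [91.74, 89.89, 78.88, 83.54],
    "L2P": [84.12, 81.46, 61.25, 65.73],
    "L2P-PGP": [84.70, 82.04, 62.01, 66.31],
    "L2P-NSP2": [86.78, 83.37, 64.66, 68.14],
}


def gains_over(baseline: str, method: str) -> list[float]:
    """Accuracy gain of `method` over `baseline` per benchmark, in percentage points."""
    return [round(m - b, 2) for m, b in zip(ACC[method], ACC[baseline])]


for base, prefix in (("VPT-Seq", "VPT"), ("L2P", "L2P")):
    print(base, "PGP:", gains_over(base, f"{prefix}-PGP"), "NSP2:", gains_over(base, f"{prefix}-NSP2"))
```

For example, on 10S-DomainNet VPT-NSP2 improves on VPT-Seq by 10.26 points, whereas VPT-PGP improves by 0.70 points.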