Visual-Inertial Odometry with Online Calibration of Velocity-Control Based Kinematic Motion Models

Abstract

Visual-inertial odometry (VIO) is an important technology for autonomous robots with power and payload constraints. In this paper, we propose a novel approach for VIO with stereo cameras which integrates and calibrates the velocity-control based kinematic motion model of wheeled mobile robots online. Including such a motion model can help to improve the accuracy of VIO. Compared to several previous approaches proposed to integrate wheel odometer measurements for this purpose, our method does not require wheel encoders and can be applied when the robot motion can be modeled with velocity-control based kinematic motion model. We use radial basis function (RBF) kernels to compensate for the time delay and deviations between control commands and actual robot motion. The motion model is calibrated online by the VIO system and can be used as a forward model for motion control and planning. We evaluate our approach with data obtained in variously sized indoor environments, demonstrate improvements over a pure VIO method, and evaluate the prediction accuracy of the online calibrated model.

Index Terms:

Vision-Based Navigation, Visual-Inertial SLAM, Calibration and Identification.I Introduction

In recent years, visual-inertial odometry has seen tremendous progress (e.g. [1, 2, 3, 4]), driven by the many potential applications of such technology for augmented/virtual reality and autonomous robots, in particular flying robots. Surprisingly, for wheeled robots, VIO is not trivial to employ due to observability limitations for planar linear motions [5]. This can be alleviated by integrating motion model constraints into the state estimate. A popular approach in the literature is to use wheel odometer measurements to this end (see e.g. [5, 6, 7]).

In this paper, we take a conceptually different approach by integrating a velocity-control based kinematic motion model which does not rely on wheel encoders. By integrating the model into the state estimate, the model parameters such as the relative position of the sensor on the robot or the offset between model and real robot can be calibrated online. Differently to wheel odometry based models, velocity-control based models can be directly used for downstream tasks such as model-predictive motion control and planning [8], [9], [10]. We base our method on a non-linear optimization based approach [3] to visual-inertial odometry which optimizes state variables such as sensor pose and velocity, IMU biases, and keypoint map in a window of recent frames. Old frames and IMU measurements are marginalized in a proper probabilistic way to maintain the prior observations as prior knowledge. The IMU measurements are preintegrated into relative motion measurements between frames. In this framework, we include a velocity based motion model which models the motion of a wheeled robot in a plane based on linear forward and rotational velocity controls. For accurate integration of the measurements and controls, an accurate calibration of the sensor placement with respect to the drive, the time synchronization of the controls relative to the visual and inertial measurements, and an identification of the effect of control commands for the underlying low-level robot motion controller on actual executed motion are required. To model the unknown properties of the controller, we aggregate the raw control commands with a kernel function. We add parameters for the kernels and the placement (extrinsics) of the sensor on the robot to the estimation problem. The parameters are calibrated online in the non-linear optimization framework.

We evaluate our approach on data obtained with a mobile robot in several sizes of environments. We demonstrate that incorporating the velocity-control based kinematic motion model improves the accuracy and robustness of the VIO estimate. Moreover, we provide results on the prediction accuracy of our online calibrated model for reference for model-based control and planning approaches.

In summary, our contributions are:

-

•

We propose a novel visual-inertial odometry approach for wheeled robots which includes a velocity-control based kinematic motion model into the state estimate.

-

•

The parameters of the motion model are calibrated online with the VIO estimate.

-

•

We demonstrate that inclusion of the motion can improve the VIO estimate in various indoor environments of different sizes. We also provide evaluation of the prediction accuracy of the calibrated model.

Our model can be an alternative to wheel-odometry based methods when a velocity-control based model should be directly calibrated for use in model-predictive control and motion planning methods.

II RELATED WORK

Motion estimation by fusing odometry, IMU and camera data has recently spurred significant interest by the robotics community due to its applications for inertial navigation systems in service robotics and autonomous driving.

II-A Inertial and inertial-wheel odometry

Various recent approaches combine IMU and wheel odometry. Brossard et al. [11] suggest an EKF based approach which uses deep learning to predict the noise properties in an EKF framework which fuses IMU measurements to predict motion. In [12], deep kernel Gaussian Process models are learned for the motion and observation models which are used to fuse IMU and wheel odometry measurements in an EKF framework. In RINS-W [13], Brossard et al. propose to estimate the motion from IMU and odometry measurements using an RNN which detects different motion profiles in an EKF framework. These approaches, however, do not use the complementary strengths of visual measurements and do not provide a forward model.

II-B Visual-inertial-wheel odometry

Cameras provide complementary information to inertial measurement units for motion estimation. For general motions, 3-DoF linear acceleration and rotational velocity measurements make roll and pitch orientation observable relative to the gravity direction. However, double integration of the linear acceleration requires accurate estimation of biases (offsets) in the measurements and makes linear position estimation prone to drift. Similarly, the yaw orientation around the gravity direction is not observable and prone to drift due to noisy and biased gyroscope measurements. Visual measurements provide a reference for pose estimation to a local 3D map of the environment which is concurrently build with the pose estimates in VIO approaches. By this, all DoFs become observable, while the IMU provides high frame-rate measurements which improve the accuracy between images.

The Multi-State-Constrained Kalman Filter (MSCKF [1]) for VIO has been recently extended to incorporate wheel odometry and overcome observability issues of monocular visual-inertial odometry on wheeled robots in VINS on wheels [5]. The authors analyze observability of monocular visual-inertial navigation systems on a mobile robot platform and show that for specific motions, scale and 3-DoF rotation become unobservable. They also show that adding wheel encoder measurements makes scale observable. The approach does not calibrate the motion model parameters online like our method. In our setting, scale is already observable through the fixed calibrated baseline of our stereo camera.

Ma et al. [6] adopt the VINS on wheels approach and extend it with an Ackerman drive model. Jung et al. [14] add GPS measurements directly to VINS on wheels to make position observable. Yang and Huang [7] analyze observability for VINS on wheels with line and plane observations. Another approach concurrently estimates the wheel slippage with VIO [15]. More closely related to our method is the approach by Lee et al. [16] which investigates online calibration of the wheel odometry parameters and analyzes observability of the calibration parameters for different constraint motion scenarios. In contrast, we employ a non-linear optimization based approach for visual-inertial odometry and incorporate an inverse motion model for constraints.

Some approaches integrate wheel odometry into non-linear optimization based approaches. Liu et al. [17] develop online calibration of the extrinsics between camera, IMU and odometer. Liu et at. [18] propose a novel initialization approach which corrects the initial state estimates after the first turning motion to handle unobservability of the calibration parameters for straight motions. Chen et al. [19] calibrate visual-inertial-wheel odometry offline. The approach in [20] integrates smooth motion manifold constraints. Zheng and Liu [21] incorporate a planar motion constraint for the mobile base but allow small deviations from this motion in 6 DoF for the camera-IMU system. Also, different to ours, these approaches use wheel encoders to measure odometry. We propose a new approach which incorporates an inverse motion model into optimization-based visual-inertial odometry and calibrates the model online including extrinsics and time synchronization.

II-C Learning dynamics models for control

Optimal control approaches typically rely on action-conditional dynamics models which use them as forward models to plan towards goals. One recent example is the approach of Williams et al. [22] which learns a dynamics model offline from GPS. In [23] a sensor-based localization method using LiDAR, optical speed sensors and INS is used to provide feedback for learning deviations from an analytic dynamics model.

In our approach, we tightly integrate a kinematic motion model in a visual-inertial odometry which allows for calibrating the model online.

III METHOD

We integrate a velocity-control based mobile robot motion model into visual-inertial odometry and optimize the parameters of the motion model concurrently with the camera trajectory and bias parameters of the IMU. The motion model further constrains the camera motion estimate. The calibrated motion model could be useful for model-predictive motion control and path planning.

III-A Visual-Inertial Odometry

We extend the non-linear optimization-based visual-inertial odometry approach in [3]. The approach uses a KLT-based keypoint tracking frontend to track the camera motion from frame to frame. Keyframes are extracted along the camera trajectory and the keypoint tracks generate landmarks in the keyframes with corresponding point measurements. Optimization variables are the camera poses for the frames and keyframes, and the camera velocity and biases in the frames. Only a set of recent keyframes and frames is optimized (3 frames and 7 keyframes in our experiments). Older frames and keyframes are marginalized from the optimization window and their information serves as a prior. More formally, for each frame at time , we estimate the camera pose , the camera velocity , and the bias parameters of the IMU. For the keyframes, their camera poses are optimized. The tracked keypoints become landmarks which are hosted in the keyframe in which they have been observed for the first time. Landmark is parameterized by 2D coordinates which minimally represent the bearing vector to the 3D point, and the inverse distance . The KLT tracking provides measurements of the landmarks in subsequent frames and keyframes. Residuals are determined which measure the difference between the measured 2D positions in image at time towards the reprojection of landmark hosted in frame into the image using function .

In addition to visual residuals, an IMU provides linear acceleration and rotational velocity measurements from which further residuals on the pose and velocity estimates are formed. To this end, the IMU measurements are preintegrated [24] to obtain pseudo-measurements , between successive frames at times , with associated uncertainty . The inertial residuals are

| (1) | ||||

| (2) | ||||

| (3) |

where is the gravity direction and .

The visual-inertial odometry corresponds to the non-linear optimization problem

| (4) |

where and are the set of keyframes and frames, respectively, is the set of landmarks observed in frame , and is the set of indices of subsequent frame pairs. To optimize the error function efficiently, old keyframes and frames outside an optimization window are marginalized and only the variables inside the window are optimized.

For further details on the visual-inertial odometry method, we refer the reader to [3].

III-B Velocity-Control Based Motion Model

We use a velocity-control based motion model [25] and assume that the robot can be controlled by a control command through a linear velocity in forward direction and a rotational velocity . The motion model propagates the robot pose on the ground plane with the control command, , where is the twist vector, maps a 3D vector to , and

| (5) |

is the exponential map of with time difference between the successive time steps. The exponential map finds the relative motion for constant velocities over time duration . The logarithm map of is the inverse of the exponential map and maps relative poses to Lie algebra elements in . The operator maps 3D twist coordinate vectors to twists in .

III-C Motion Model Residuals

We incorporate the velocity-control motion model into the visual-inertial odometry framework in order to calibrate the parameters of the model and improve the robustness of the VIO. There are basically two choices to form residuals with a motion model: relative pose constraint (forward) or velocity constraint (inverse). Mathematically the forward and inverse model residuals are equivalent to each other. Both kinds of residuals achieve similar results, while the Jacobian matrix of the forward model is computationally more expensive (see appendix). For our motion constraint, we treat the velocity controls as measurement and assume the measurement noise comes from the velocity controls. In this case, using the inverse model residuals is simpler while using the forward model residuals requires error propagation with linear approximation of the exponential mapping.

Velocity commands are executed by the robot in the robot base frame whose pose relative to the world frame is denoted by (transforming coordinates from base to world frame ). In the base frame, the -axis points in forward driving direction, while the -axis points upwards and is the axis of rotational robot motion. The VIO provides pose estimates of the body frame of the IMU-camera sensor in the world frame, i.e. . The sensor is placed rigidly on the robot at a relative pose to the robot base frame. To quantify the relative motion of the robot base frame from times to of subsequent image frames, we can hence determine . The rotation around the -axis of the base frame is calculated from the relative rotation in as the -component of . The translational motion in the --plane is determined from the corresponding entries of . The estimated twist is

| (6) |

where . We add residuals of the form which implicitly measure the difference between the state estimates and the motion model prediction.

III-D Effective Control Command

In practice, the real action of the robots differs from the received control commands due to effects such as time offsets and properties of low-level controllers.

Typically, control inputs and image frames are not synchronized but run asynchronously and often also at different rates. In our experiments, the control rate is 15 Hz and is lower than the 30 Hz image frame rate which is also used to update the VIO estimate. Moreover in the real world, a delay exists between the control command sent by the controller and the control command executed by the robot.

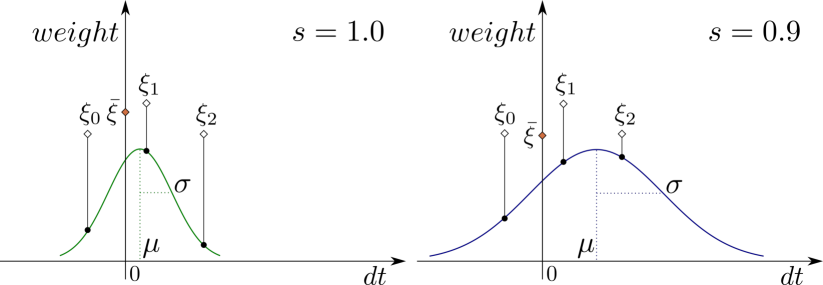

The robot physical hardware acceleration limits and internal controllers also prevent the robot from directly executing the control command even if the delay is known. To mitigate this difference and build a meaningful residual, we estimate an effective control at arbitrary time , e.g. at the time of an image frame, from a window of most recent commands. We average a window of recent control commands with weights determined by a RBF kernel (see Fig. 1) for the translational and rotational parts separately:

| (7) |

Here is a window of control commands indexed by their times at or before time and . For an image frame at time , the window typically spans the control commands that have occurred before the frame. We optimize for and and scale factor of both linear and angular parts as global parameters together with the VIO states. The RBF parameters are summarized in the state variables at time . In the experiments we demonstrate that the optimized RBF kernel can be used for motion prediction.

III-E Motion-Model-Based Error Function Terms

The robot body can vibrate during operation, the extrinsic pose is thus modeled as a time-variant state that is affected by white noise. For factors within the optimization window this kinematics-based error can be summarized as

| (8) |

where is the diagonal weight matrix for the velocity residuals, is the difference between two adjacent extrinsic pose estimates and is the diagonal weight matrix that reflects the white noise.

The VIO system takes the image frame at time and the raw controls up to the time of the previous frame as input for the optimization which also calibrates the RBF parameters for the motion model. The controls can be generated by manual control or an automatic high-level controller such as model predictive control for path tracking. In our experiments we use manual control commands as input and calibrate the RBF parameters online. A high-level controller would potentially require extrapolation of the last state estimate at the image rate to the current control time using the previous controls and the motion model.

III-F Plane Motion Constraint

We exploit prior knowledge that our robot moves on flat ground in indoor environments and add a stochastic plane constraint [5] for the robot pose. The plane can be parameterized as a 2 degree-of-freedom quaternion and a scalar which represents the distance between the ground plane to the world frame origin. The residual is

| (9) |

with . The plane motion error term becomes with covariance matrix . The stochasticity of the constraint allows for handling vibrations of the robot.

III-G Visual-Inertial Odometry with Motion Model Constraints

We integrate the above introduced calibration parameters of the constraints as additional variables into the visual-inertial odometry. The state of each frame in our optimization framework comprises the sensor pose , linear velocity , acceleration and gyroscope biases , , landmark parameters of hosted keypoints, base frame to sensor frame extrinsics , and the global variables including the RBF parameters , , and the plane parameters and . The optimized error function can be summarized as . During optimization the extrinsic poses will be marginalized like linear velocity and IMU biases while the global variables are kept and their linearization point is fixed once the first connected state is marginalized. A discussion of the observability of our model is provided in appendix.

IV EXPERIMENTS

| transl. RMSE RPE in m | rot. RMSE RPE in deg | transl. RMSE ATE in m | rot. RMSE ATE in deg | |||||

| dataset | vio | kin-vio (ours) | vio | kin-vio (ours) | vio | kin-vio (ours) | vio | kin-vio (ours) |

| small-01 | 0.035 | 0.021 | 0.659 | 0.597 | 0.037 | 0.014 | 0.713 | 0.463 |

| small-02 | 0.120 | 0.106 | 0.664 | 0.756 | 0.097 | 0.077 | 0.656 | 0.622 |

| small-03 | 0.042 | 0.027 | 0.832 | 0.693 | 0.037 | 0.019 | 1.060 | 0.561 |

| mid-01 | 0.232 | 0.197 | 1.439 | 1.242 | 0.190 | 0.153 | 0.978 | 0.957 |

| mid-02 | 0.195 | 0.158 | 1.171 | 1.100 | 0.150 | 0.108 | 0.807 | 0.739 |

| mid-03 | 0.342 | 0.271 | 1.490 | 1.257 | 0.150 | 0.088 | 1.674 | 1.224 |

| large-01 | 0.828 | 0.402 | 2.278 | 1.253 | 0.512 | 0.179 | 2.360 | 0.907 |

| large-02 | 0.467 | 0.381 | 1.495 | 1.001 | 0.237 | 0.216 | 1.032 | 0.749 |

| large-03 | 1.275 | 0.972 | 3.501 | 2.480 | 0.953 | 0.735 | 2.861 | 2.101 |

We evaluate the proposed kinematics-constraint VIO on a differential drive robot with a fisheye-stereo camera and IMU (see Figs. 3) in indoor environments. Similar as in [16] and [5], global offline optimization results are used as ground truth. To make sure enough loops can be found and the global optimization is accurate, the robot travels to the same location for several times in each recorded sequence. We evaluate the accuracy of the estimate in terms of absolute trajectory (ATE), relative pose error (RPE) [26] and the error of the effective control velocity with the ground truth velocity. The RPE is computed by averaging the errors over sequence lengths of the full trajectory. We also validate the prediction accuracy of the learned RBF kernel for different time horizons.

Three groups of data with different environment scales are collected and each group consists of three different sequences. In the sequences, the robot starts from a static pose and then approximately drives at its maximum speed of 0.5 m/s. The robot traveled over wooden floor, concrete and tiles, which also cause vibrations on the robot. The average lengths are 57.2 m (small scale), 222.3 m (middle scale), and 413.3 m (large scale). The ground truth for trajectory evaluation is computed using the global bundle adjustment layer of [3]. The method uses non-linear factor recovery to bound the computational and memory complexity of bundle adjustment using keyframes and to transfer information accumulated from intermediate frames and IMU measurements during VIO. For calculating the effective control velocity error and prediction results, we use the dense global mapping result as ground truth where we set every frame as a keyframe and optimize them globally. The image rate is 30 Hz, the IMU rate is 200 Hz and the linear and angular commands are sent at the rate of 15 Hz. The RBF parameters , and are initialized to 0, 0.5 and 1 for both linear and angular velocity commands. A small command window can not collect enough information, while a large command window includes the commands that are far away from the current frame. We empirically choose a command window size of 3. The extrinsic parameters between base and sensor frame are initialized with values from the robot CAD model.

IV-A Tracking Evaluation

Table I summarizes the RPE and ATE evaluation results. By integrating the kinematics motion constraint and the plane constraint (kin-vio) both ATE and RPE are reduced over pure VIO (vio). Fig. 1 illustrates and compares the results of data sequence big_01 estimated with purely VIO and our kinematics-constraint VIO. As can be seen, with the proposed method the deviation is decreased, especially in those parts of the trajectory with larger rotational motion.

In an ablation study, we compare the estimation accuracy of using RBF kernel weighting (kin-vio rbf) with other different weighting methods including RBF kernel with fixed initial parameters (kin-vio rbf w/o opt), non-weighted averaging of the command window (kin-vio avg), and using the last command that comes before the first frame of each frame pair (kin-vio raw). In addition, we also evaluate the estimate with only the plane motion constraint in addition to the VIO constraints. The error values are averaged over all data sequences and summarized in Tab. II. in which our combined method (kin-vio) consistently outperforms the others. The motion model can improve the tracking accuracy while the parameters are calibrated together with VIO. This can be attributed to the regularization by the parametric motion model whose parameters are adapted for a range of positive and negative velocity commands.

| avg. transl. RMSE in m | avg. rot. RMSE in deg | |||||||||

| kin-vio ours: rbf (w/o plane) | kin-vio rbf w/o opt (w/o plane) | kin-vio avg (w/o plane) | kin-vio raw (w/o plane) | kin-vio only plane | kin-vio ours: rbf (w/o plane) | kin-vio rbf w/o opt (w/o plane) | kin-vio avg (w/o plane) | kin-vio raw (w/o plane) | kin-vio only plane | |

| RPE | 0.282 | 0.324 | 0.336 | 0.337 | 0.296 | 1.153 | 1.180 | 1.183 | 1.184 | 1.164 |

| (0.393) | (0.441) | (0.452) | (0.452) | (1.507) | (1.592) | (1.600) | (1.601) | |||

| ATE | 0.177 | 0.210 | 0.219 | 0.220 | 0.190 | 0.925 | 0.947 | 0.948 | 0.950 | 0.935 |

| (0.270) | (0.303) | (0.311) | (0.311) | (1.356) | (1.413) | (1.418) | (1.418) | |||

| avg. RMSE of linear velocity in m/s | avg. RMSE of angular velocity in deg/s | |||||||||

| vel. error | 0.025 | 0.034 | 0.036 | 0.036 | 0.031 | 0.062 | 0.064 | 0.067 | ||

IV-B Prediction Evaluation

We also evaluate the performance of our method for forward motion prediction. Results for online prediction on data sequence small-01, mid-01 and large-01 are shown in Fig. 4. For this evaluation, we compute the prediction from each frame in the trajectory with different horizon lengths starting from the current estimate of the parameters. The command window is shifted along the future trajectory with the prediction step. We also compare the prediction accuracy with three alternative calibration methods. The first method uses the RBF model with constant initial parameters (rbf w/o opt). The second method uses the unweighted average value of the commands in the command window (avg). The third method calculates the prediction with the latest command (raw). Our proposed approach with calibrated RBF kernel parameters is denoted as ”rbf”.

It can be observed that the RBF kernel with optimized parameters has the smallest prediction error especially for longer prediction horizons. During the experiment we noticed that the improvement of the prediction accuracy is relatively smaller on the longer data sequences. This is because the rotations introduce larger errors and in the longer data sequences the robot mostly performs translational motion along the corridors with constant speed. Fig. 5 illustrates a prediction result on data sequence small-02 from beginning to the end of the trajectory with the final optimized RBF parameters. Our approach shows the smallest deviation compared with other methods.

IV-C Computation Time

We compare the run-time of our approach with the base VIO on our dataset using one Intel Xeon Silver 4112 [email protected] with 8 cores. On average the computation time increases by 34.14% from 14.79 ms to 19.84 ms for processing one frame, the maximum time stays similar with 60.05 ms at rare peaks, the minimum time is 3.90 ms. The approach can still process faster than real-time.

IV-D Discussion and Limitations

A potential limitation of our approach can be estimation bias in the VIO in settings such as texture-less scenes or biased camera intrinsics. In our work, we assume that the VIO result is sufficiently accurate with negligible systematic offsets so it can be used to calibrate the effective control. In future work, we could additionally integrate other types of sensors like GPS. Outlier measurements can be handled with robust norms in the VIO. One could also only activate the motion model when state variables like IMU bias are converged and indicate an accurate VIO.

Integrating wheel encoder information can also improve VIO accuracy, as it measures the actual rotation of the wheels at high frequency. To convert the wheel encoder information to body velocity or relative pose, one needs to consider the type of the vehicle. Our approach is simpler to integrate for robots whose motion can be modeled with our model (such as differential or Ackerman drives [25]). Moreover, our calibrated model could be used for downstream tasks such as model-predictive control based path tracking. In future work, we are also interested in combining wheel encoder measurements with our method.

V CONCLUSIONS

In this paper we present a VIO approach based on non-linear windowed optimization that includes velocity-control based kinematic motion model constraints. The motion model is integrated as a new factor between each image pair. We compute the 2D robot velocity between two consecutive images from the state estimate and compare it against the control command sent to the robot. To compensate the difference between the control command and the real robot action, we use RBF kernels along the time domain to determine an effective control command from the raw commands and calibrate the RBF parameters online in the VIO system. Our experiments demonstrate that by using this motion constraint in addition to a planar motion constraint not only the accuracy of the VIO is improved but the learned motion model can also predict the robot motion more accurately. In future work, more complex motion models or including other sensors like GPS or wheel odometry could be investigated.

APPENDIX

V-A Inverse vs. Forward Model Residuals

Given the relative pose between two frames , the corresponding pose in the horizontal plane consisting of rotational part and translational part , the effective control input with linear and angular velocity, and , the forward model can be written as

| (10) |

with derivatives and , while the inverse model is

| (11) |

with derivatives and .

V-B Observability

As in [5], the observability of the state variables can be analyzed based on the underlying state-space model irrespective of the implementation of the estimator. We discuss the observability properties of the state variables such as pose, plane parameters and RBF parameters for our model. A detailed analysis can be found in [27]. We follow the derivation in [5]. In general, the observability properties of the pose variables are the same for forward and inverse model. While in the forward model the derivative wrt. is an identity matrix, the derivative in the inverse model is . Because is an invertible matrix by definition, when we compute the observability matrix by multiplying this Jacobian matrix and the transition matrix, the rank of the observability matrix remains the same based on Sylvester’s inequality. Note that we use a stereo camera and hence scale becomes directly observable. We follow the proof scheme in [5] and show that the global orientation becomes observable by using the plane constraint and a set of priors in the initial frames. As in [5], the state transition is given by the IMU propagation model. The constraint from the velocity-based kinematic motion model is treated as an observation. The linearized transition matrix from time-step 1 to for the IMU propagation is derived in [28]. By including the transition of the RBF parameters as a constant propagation model, the transition matrix of our model becomes: with the augmented state vector including plane parameters , between ground and world frame , extrinsic parameters , between robot base and IMU frame and RBF parameters , .

As shown in [5], the plane distance is observable. Following the derivation in [5], the global orientation of the plane can be shown to be unobservable. Since our robot starts from still state, we use the initial accelerator measurement to initialize the plane angle. By adding this information as a Gaussian initial prior on the plane angle , the global orientation becomes observable. As shown in [16] the extrinsic parameters are also unobservable under specific motions. We used the extrinsics derived from the CAD model as an initial Gaussian prior to counteract this problem. We prove the observability of the RBF parameters () for the translational velocity. The parameters for the angular velocity have the same observability. We denote as , where is the time difference between control command at and image frame at . The Jacobian matrix is ,

| (12) | ||||

| (13) | ||||

| (14) |

The nullspace for the RBF parameters is . The corresponding bottom right block of the observability matrix for our inverse motion model is . The product of the motion model observability matrix and the nullspace is equal to as the other terms cancel out by multiplication with the components in . The RBF parameters are unobservable if the velocities in the window are constant, which can happen especially at the beginning of the datasets when the robot stands still. We placed a weak Gaussian prior on the initial values of the RBF parameter estimates. Due to the marginalization prior, the RBF parameters will remain observable even if they become temporarily unobservable in the window.

References

- [1] A. I. Mourikis and S. I. Roumeliotis, “A multi-state constraint kalman filter for vision-aided inertial navigation,” in IEEE ICRA, 2007.

- [2] S. Leutenegger, S. Lynen, M. Bosse, R. Siegwart, and P. Furgale, “Keyframe-based visual–inertial odometry using nonlinear optimization,” Int. J. of Robotics Research, vol. 34, no. 3, pp. 314–334, 2015.

- [3] V. Usenko, N. Demmel, D. Schubert, J. Stueckler, and D. Cremers, “Visual-inertial mapping with non-linear factor recovery,” IEEE Robotics and Automation Letters, vol. 5, no. 2, pp. 422–429, 2020.

- [4] C. Campos, R. Elvira, J. J. Gomez, J. M. M. Montiel, and J. D. Tardos, “ORB-SLAM3: An accurate open-source library for visual, visual-inertial and multi-map SLAM,” IEEE Transactions on Robotics, vol. 37, no. 6, pp. 1874–1890, 2021.

- [5] K. J. Wu, C. X. Guo, G. Georgiou, and S. I. Roumeliotis, “VINS on wheels,” in IEEE ICRA, 2017.

- [6] F. Ma, J. Shi, Y. Yang, J. Li, and K. Dai, “ACK-MSCKF: Tightly-Coupled Ackermann Multi-State Constraint Kalman Filter for Autonomous Vehicle Localization,” Sensors, vol. 19, no. 21, 2019.

- [7] Y. Yang and G. Huang, “Observability Analysis of Aided INS With Heterogeneous Features of Points, Lines, and Planes,” IEEE Trans. on Robotics, vol. 35, no. 6, pp. 1399–1418, 2019.

- [8] L. Kavraki, P. Svestka, J.-C. Latombe, and M. Overmars, “Probabilistic roadmaps for path planning in high-dimensional configuration spaces,” IEEE Trans. Rob. and Automation, vol. 12, no. 4, pp. 566–580, 1996.

- [9] S. LaValle and J. Kuffner, “Randomized kinodynamic planning,” in IEEE Int. Conf. on Robotics and Automation, 1999.

- [10] Y. Kuwata, J. Teo, S. Karaman, G. Fiore, E. Frazzoli, and J. P. How, “Motion planning in complex environments using closed-loop prediction,” in Proc. AIAA Guidance, Navigation, and Control Conf. and Exhibit, 2008.

- [11] M. Brossard, A. Barrau, and S. Bonnabel, “AI-IMU Dead-Reckoning,” IEEE Trans. on Intelligent Vehicles, vol. 5, no. 4, pp. 585–595, 2020.

- [12] M. Brossard and S. Bonnabel, “Learning Wheel Odometry and IMU Errors for Localization,” in IEEE ICRA, 2019.

- [13] M. Brossard, A. Barrau, and S. Bonnabel, “RINS-W: Robust Inertial Navigation System on Wheels,” in IEEE/RSJ IROS, 2019.

- [14] J. H. Jung, J. Cha, J. Y. Chung, T. I. Kim, M. H. Seo, S. Y. Park, J. Y. Yeo, and C. G. Park, “Monocular Visual-Inertial-Wheel Odometry Using Low-Grade IMU in Urban Areas,” IEEE Trans. on Intelligent Transportation Systems, pp. 1–14, 2020.

- [15] Z. Dang, T. Wang, and F. Pang, “Tightly-coupled Data Fusion of VINS and Odometer Based on Wheel Slip Estimation,” in IEEE International Conference on Robotics and Biomimetics (ROBIO), 2018.

- [16] W. Lee, K. Eckenhoff, Y. Yang, P. Geneva, and G. Huang, “Visual-Inertial-Wheel Odometry with Online Calibration,” in IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020.

- [17] J. Liu, W. Gao, and Z. Hu, “Visual-Inertial Odometry Tightly Coupled with Wheel Encoder Adopting Robust Initialization and Online Extrinsic Calibration,” in IEEE/RSJ IROS, 2019.

- [18] ——, “Bidirectional trajectory computation for odometer-aided visual-inertial slam,” IEEE Robotics and Automation Letters, vol. 6, no. 2, pp. 1670–1677, 2021.

- [19] Y. Chen, M. Zhang, D. Hong, C. Deng, and M. Li, “Perception System Design for Low-Cost Commercial Ground Robots: Sensor Configurations, Calibration, Localization and Mapping,” in IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2019.

- [20] Z. Zhang, G. Gallego, and D. Scaramuzza, “On the Comparison of Gauge Freedom Handling in Optimization-Based Visual-Inertial State Estimation,” IEEE Robotics and Automation Letters, vol. 3, no. 3, pp. 2710–2717, 2018.

- [21] F. Zheng and Y.-H. Liu, “Visual-Odometric Localization and Mapping for Ground Vehicles Using SE(2)-XYZ Constraints,” in IEEE Int. Conf. on Robotics and Automation (ICRA), 2019.

- [22] G. Williams, P. Drews, B. Goldfain, J. M. Rehg, and E. A. Theodorou, “Aggressive driving with model predictive path integral control,” in IEEE Int. Conf. on Robotics and Automation (ICRA), 2016.

- [23] J. Kabzan, L. Hewing, A. Liniger, and M. N. Zeilinger, “Learning-based model predictive control for autonomous racing,” IEEE Robotics and Automation Letters, vol. 4, no. 4, pp. 3363–3370, 2019.

- [24] C. Forster, L. Carlone, F. Dellaert, and D. Scaramuzza, “IMU preintegration on manifold for efficient visual-inertial maximum-a-posteriori estimation.” in Robotics: Science and Systems, 2015.

- [25] S. Thrun, W. Burgard, and D. Fox, Probabilistic robotics. MIT Press, 2005.

- [26] Z. Zhang and D. Scaramuzza, “A tutorial on quantitative trajectory evaluation for visual(-inertial) odometry,” in IEEE/RSJ IROS, 2018.

- [27] H. Li and J. Stueckler, “Observability analysis of visual-inertial odometry with online calibration of velocity-control based kinematic motion models,” Technical Report, arXiv, 2022.

- [28] J. A. Hesch, D. G. Kottas, S. L. Bowman, and S. I. Roumeliotis, “Consistency analysis and improvement of vision-aided inertial navigation,” IEEE Transactions on Robotics, vol. 30, no. 1, pp. 158–176, 2014.