Visual Exploration of Movement Relatedness for Multi-species Ecology Analysis

Abstract

Advances in GPS telemetry technology have enabled the analysis of animal movement in open areas. Ecologists today use modern analytic tools to study animal behaviors from large quantities of GPS coordinates. Analytic tools with automatic event extraction can be used to investigate potential interactions between animals by locating relevant segments in movement trajectories. However, such automation can easily overlook the spatial, temporal, and social context as well as potential data problems. To this end, this paper explores visual presentations that also clarify the spatial-temporal contexts, social surroundings, and underlying data uncertainties of multi-species animal interactions. The resulting system presents the proximity-based, time-varying relatedness between animal entities from pairwise (PW) or individual-to-group (i-G) perspectives. Focusing on the relational aspects, we employ both static depictions and animations to communicate the travelling of individuals. Our contribution is a novel visualization system that helps investigate the subtle variations of long-term spatial-temporal relatedness while considering small-group patterns. Our evaluation with movement ecologists shows that the system gives them quick access to valuable clues for discovering insights into multi-species movements and signs of potential interactions.

Index Terms:

Visualization, Movement Ecology, Spatial-Temporal Movement.

1 Introduction

Our interest in animal movement is arguably as old as the history of science itself. The earliest explorations by Aristotle (384 - 322 BC) date back to the 4th century BC[1]. With modern technologies such as sensor technology, telecommunication[2, 3, 4, 5], GIS[6, 7, 8], and data mining[9, 10], the subject has evolved into a field where data analysis of large numbers of entities in large open areas plays a central role in scientific discoveries[11, 12]. As a result, scientists in the field of movement ecology face challenges from surging data availability and an increasing demand for higher-level analytical capabilities[13]. Today, dedicated sense-making technology has become an essential stepping stone to unlock the full wealth of ecological data at hand[14].

Spatial movement is an important feature in finding ecological patterns[1, 15, 16]. As a recent trend in movement ecology, ecological insight at smaller scales has received growing attention[17], particularly the individual-level analysis of movements[18, 19, 20, 21, 22]. In this category, cross-species interactions (antagonistic interactions such as host-parasite, predator-prey, and plant-herbivore[23, 24], for instance) are important because they are typically studied at a zoomed-in group scale, where individual behaviors are investigated.

Moreover, movement ecologists try to look beyond interaction instances alone. As a long-term pursuit, they wish to interpret the higher-level meaning or motivation behind certain behaviors[25, 16]. Just as phone data can reliably predict human social ties from propinquity[26], insights into the relationships between animals might provide evidence for investigating the less approachable motivations and meanings behind certain movements. This can be facilitated by visualizing movements in a way that expresses details relating not only to individuals in isolation but also to the potential influence between adjacent groups of multi-species entities.

To this end, we design an interactive visualization system that addresses the above concerns and helps ecologists find interesting patterns in animals’ relations. The system supports movement analyses from the perspective of relatedness, which concerns social intimacy or potential predatory threats in wild environments. Relatedness is determined by the general spatial proximity, which varies unstably over longer periods.

We validate the system’s usefulness through use cases and expert evaluations, both of which yielded positive results. This paper positions its contribution as an exploration characterized by:

• a simplified, animation-based visual vocabulary designed to facilitate the communication of movements and time-varying relatedness,

• a visualization method to support pairwise and individual-to-group comparison of animals’ spatial situations, and

• the inclusion of uncertainty awareness in the study of animal movement relations.

2 Related Work

2.1 Visualization in Movement Ecology

In the field of movement ecology, movements usually refer to locomotive movements. Location changes are useful clues for revealing ecological patterns in problems such as resource use[27], population dynamics[13], and climate influence[28] between individuals, groups, or species. Many ecologists already recognize the value of visualization in their research. Generic visualization tools (e.g. Movebank[12], AMV[29], Env-DATA[30]) are employed to support common tasks like trajectory plotting and multivariate filtering. As they cover a wide range of species and data types for many research projects, these tools are sufficient for basic movement analyses.

However, some research tasks require analytic aggregation capabilities. Drosophigator[31] uses statistics from heterogeneous data sources to generate visualized predictions of the spread of invasive species. Xavier et al.[32] integrate environmental data to study the connectivity (a technical term in ecology indicating the degree to which environmental variables affect the inhabitants of an area[33, 34, 35]) of landscape characteristics and animal behaviors. Konzack et al.[36] analyze migratory trajectories to recognize stopovers in gulls’ movements.

Visual design is also important for communicating aggregated results that relate to the exact domain problem. Slingsby et al.[25] discuss the design choices of visual encoding in ecology visualization, suggesting that the visual language needs to convey the "ecological meaning" to support the research context. Spretke et al.[14] conceive the "enrichment" method as a way to enhance analytical reasoning with visual elements for attributes like speed, distance, and duration in the geographical context, allowing quick hypothesis iterations on local subsets.

Aggregating the attributes of individuals might be useful for understanding movement behavior, but behaviors are better explained in a context where the influence of peers and surroundings is considered[11]. Since interest in entity-level behaviors is rising[17], investigating behaviors with respect to the mutual influence of multiple entities can be a worthy starting point.

2.2 Trajectory Analysis

Manually searching for patterns in long, twisted, and sometimes cluttered movement trajectories can be daunting. This makes event detection algorithms necessary, as they alleviate the cognitive load for experts. Automatic event detection usually requires defining a set of parameters, such as time window, speed, heading, and mutual distance, to narrow down the search space to a subset of trajectory fragments[37, 38, 39, 40]. The detection outcomes are usually visualized on top of the movement trajectories with reference to the original geographic context. As the detection processes are sensitive to the subject animal and landscape context[39], a visualization tool needs the flexibility to cope with the distinct characteristics of movements to produce a convincing result[37]. For example, Andrienko et al.[40] suggested a bottom-up approach where the detected elementary interactions (a concept derived from Bertin’s elementary level of analysis[41], meaning "particular instances of interaction between individual objects") are used as key clues to understand group-level patterns. Bak et al.[37] proposed a method to boost the performance of event detection at larger scales. The performance gain can then be allocated to support interactive parameter input, in which the visual feedback on outcomes forms an essential part of the interactive loop and guides the next iteration. Bak et al. also mention a classification of four types of higher-level encounter patterns, which seems to continue Andrienko et al.[40]’s advocacy of characterizing elementary patterns.
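The parameter-driven detection described above can be illustrated with a minimal sketch. It is not any of the cited algorithms: the function name `find_encounters`, the fix format `(t, x, y)`, and the threshold values are assumptions made purely for illustration. Two fixes count as an encounter only if they are close in both space and time.

```python
from math import hypot

def find_encounters(track_a, track_b, max_dist, max_dt):
    """Return (t_a, t_b) pairs of fixes within max_dist in space AND max_dt in time.

    Each track is a list of (t, x, y) tuples (hypothetical format).
    """
    hits = []
    for ta, xa, ya in track_a:
        for tb, xb, yb in track_b:
            if abs(ta - tb) <= max_dt and hypot(xa - xb, ya - yb) <= max_dist:
                hits.append((ta, tb))
    return hits

# Made-up tracks: the zebra visits the lion's starting point 40 hours later.
lion = [(0, 0.0, 0.0), (2, 1.0, 0.0), (4, 2.0, 0.0)]
zebra = [(0, 5.0, 0.0), (2, 1.2, 0.1), (40, 0.0, 0.0)]

print(find_encounters(lion, zebra, max_dist=0.5, max_dt=1))    # strict time window
print(find_encounters(lion, zebra, max_dist=0.5, max_dt=100))  # loose time window
```

With the loose time window, the zebra's late visit to the lion's earlier position is reported as well, which shows how sensitive the outcome is to the chosen parameters.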

Movement interactions are multifaceted. Visual feedback for adapting and fine-tuning the analytic system is indispensable for extracting interactions. Also, automatic techniques mostly solve lower-level tasks such as matching route similarity or spatial-temporal closeness between trajectories. Recognizing general patterns and questioning them with alternative assumptions is still a job for human expertise. Thus, we need to keep an open mind toward the machine results while exposing more visual details for domain judgment.

2.3 Visualization of Spatial Temporal Movements

Movement traces are usually placed on a 2D map as discrete points[25] or linked trajectories[36, 42]. However, both spatial and temporal changes need to be considered to avoid false identification of collocation (position overlap at different time periods). There are several approaches to achieve this.

A common treatment here is the Space Time Cube (STC)[43, 44, 45], which projects the temporal dimension onto the z-axis of a 3D view. In an STC, real collocation of two entities is depicted as neighboring points in 3D space. However, it makes the visualization prone to problems like loss of perspective and obfuscation[46, 47]. Subjects that travel along the z-axis can be another issue[48]. A workaround without 3D space can be found in AMV[29]. It confines trajectories to the local duration and presents relative movements by removing the distraction of trajectory parts that are temporally distant. However, the fine details of proximity variations in the selected duration are not supported. Alternatively, abstracting movements from their geo-spatial context, as explored by Crnovrsanin et al.[49], is also a possible way to clarify the subjects’ spatial-temporal relations.

In sum, we found that visualization support for wildlife behavior analysis is non-trivial, especially since support for space-time relatedness remains an open gap. This motivates new solutions with flexible interactivity for quick selection, navigation, and visual adjustments[50] to assist the domain research.

3 Context and Requirements

In this section, we describe the basic setup of the domain research including the expert collaborators, metadata, apparatus (for data collection), and domain requirements.

3.1 Project Background

The domain problem targets a group of multi-species, free-roaming animals in a South African nature reserve. The researchers’ primary interest lies in behavior along space and time variations. Instead of analyzing relationships with the natural landscape, the experts need visual insights into the behaviors of individual animals and how they influence each other, which is also a valuable complement to their current tool sets. The analysis of individual interactions also has potential for later applications, such as analyzing other species or even human social interactions.

Two ecologists were invited as domain experts (E1 and E2). Both have a long-standing interest and experience in animal movements. E1 has a background in movement ecology and spatial analysis. E2 works in quantitative ecology and environmental sciences. They both conduct quantitative data analysis with R and use field-specific packages to plot the movements either by spatial attributes (map) or numeric attributes (bar chart or line chart). However, they both find their current tools limiting. For example, both complained about the lack of support for converting the multidimensionality of movement trajectories into useful information. E2 also mentioned that the analysis becomes challenging when the temporal-spatial variations of multiple individuals have to be considered.

3.2 Data Description

For data collection, ecologists attached GPS collars[51] to each of the 25 sampled animals, cf. Figure 1. The subjects consist of 5 lions, 10 wildebeests, and 10 zebras. The entire data collection lasted roughly 30 months, which captures periodic climate changes through different seasons. To ensure sufficient battery life, the collar sensors were programmed to obtain and store GPS coordinates every two hours.

Due to varying climate conditions and land features, the quality of the tracking data suffered from unpredictable circumstances in the wild[52]. Quality can be compromised by incidents such as physical impact, wet conditions on rainy days or in water areas, or poor signal reception. As a result, the collected data often exhibit one or both of the following issues: a) unrealistic values, i.e. two subsequent data points with an impossibly large position difference between them, which is evidently an error, and b) missing points, i.e. failure to record a data point at the programmed time. Therefore, pre-processing is needed before analysis[53]. Using their expertise, the domain experts removed the erroneous points of the first type (a), which left trajectories with irregular gaps of varying length. They also trimmed off the unusable parts at the beginning or end of each animal’s track, so that each trajectory starts and ends at its own time.
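The two data issues above can be sketched as simple checks. The experts' actual removal criteria are not stated, so the rule below (an implied-speed threshold) is only one plausible assumption; the function names, the fix format `(t_hours, x_km, y_km)`, and the threshold of 20 km/h are hypothetical.

```python
from math import hypot

def flag_unrealistic(fixes, max_speed):
    """Return indices of fixes whose implied speed from the last kept fix exceeds max_speed.

    fixes: list of (t_hours, x_km, y_km), sorted by time with distinct times.
    The first fix is assumed to be valid. max_speed (km/h) is a hypothetical,
    species-dependent threshold chosen by the analyst.
    """
    bad, last = [], fixes[0]
    for i, fix in enumerate(fixes[1:], start=1):
        dt = fix[0] - last[0]
        speed = hypot(fix[1] - last[1], fix[2] - last[2]) / dt
        if speed > max_speed:
            bad.append(i)      # issue (a): impossibly large jump
        else:
            last = fix         # only trusted fixes serve as the next reference
    return bad

def missing_slots(fixes, interval=2):
    """Return scheduled times (every `interval` hours) without a recorded fix: issue (b)."""
    seen = {t for t, _, _ in fixes}
    start, end = fixes[0][0], fixes[-1][0]
    return [t for t in range(start, end + 1, interval) if t not in seen]

# Made-up track: a 89 km jump within two hours, and no fix at hour 6.
track = [(0, 0.0, 0.0), (2, 1.0, 0.0), (4, 90.0, 0.0), (8, 2.0, 0.5)]
print(flag_unrealistic(track, max_speed=20))
print(missing_slots(track))
```

Removing a flagged fix creates an additional gap, which is consistent with the irregular gaps described above.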

3.3 Requirements

Integrating a visual approach into an existing analytic workflow requires a shared understanding of both ecology and visual analysis. Through six months of exchanges with the domain experts, we devised five design requirements as guidelines for adding new capabilities to the current analysis. We began with draft design proposals in the form of low-fi visual sketches, which inspired nuanced discussions to pinpoint the experts’ most prominent concerns. To communicate more complex effects, we built iterations of animations with Processing[54] and evaluated the usefulness of each version. There were also co-design sessions during which the experts took a more active role, freely proposing ideas through paper sketches and explaining the intended functionalities. To test for likely insights and unexpected misinterpretations, qualitative surveys were conducted among an expanded user group (7 people) of both experts and non-experts. We used structured questionnaires to prioritize the requirements and balance conflicting directions. After constant refinement, we conclude that the visualization design should facilitate movement ecology research by addressing the following requirements:

R1 - The ability to intuitively visualize spatial temporal movements in a simple and straightforward manner.

The experts understand the challenges introduced by the multidimensionality of the collected data, but their experience with sophisticated interactive visualization tools is limited. A steep learning curve is a concern, as they have developed established habits of thinking about movements. A design with simple and intuitive depictions is therefore preferred. They understand the value and efficiency of visual exploration in finding implicit patterns that can easily be missed otherwise. With minimal extra explanation, the design should support them in confirming newly discovered visual knowledge with fine data details and in building models with their existing analytical skills.

R2 - Efficient interaction with and navigation of the spatial-temporal space.

Considering the project’s 2.5-year time frame, browsing the global timeline means navigating roughly 4000 data points for every 2 weeks of tracking time. Additionally, patterns can exist at any time scope, e.g. movement differences between days and nights, weeks, and even seasons. Granular time scopes should be implemented, as movement patterns are sensitive to the observation time frame. Interactively defining the time point and the temporal selection with a flexible scope length gives the experts the autonomy to explore for a length that suits a particular research task.
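As a rough check of the figure quoted above, assume that all 25 collars report on the two-hour schedule from Section 3.2 without gaps (an idealization, since tracks contain gaps and are trimmed individually):

```latex
\[
\underbrace{25}_{\text{animals}} \times \underbrace{\tfrac{24}{2}}_{\text{fixes per day}} \times \underbrace{14}_{\text{days}} = 4200 \approx 4000,
\qquad
25 \times 12 \times 913 \approx 2.7 \times 10^{5}
\]
```

The second product uses 30 months as roughly 913 days and gives an upper bound on the number of fixes on the global timeline.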

R3 - Ability to visualize the movement with awareness and tolerance of inconsistent data quality.

As there are known issues in the data quality, the visualization pipeline should be able to produce smooth, consistent visual results. However, instead of ignoring the problems in the data, the uncertainties involved should be truthfully presented so that users can easily distinguish whether the visualized outcome is based on solid, trustworthy data or on questionable estimates. A visual distinction should convey the difference clearly and allow for context-dependent judgments.

R4 - Comparing movements between different species.

Individuals of the same species share behavioral similarities, whereas different species behave differently. The extent of the difference, however, varies between comparisons. For instance, wildebeests and zebras, both being herbivores, have more in common with each other, while lions, as predators, may behave differently from herbivores. To explore species-relevant aspects of movement behaviors, analysis with species awareness is needed. The visualization system should allow the experts to visually separate the behavioral differences, making anomalies instantly distinguishable.

R5 - A focus on the relationship between animals.

Movement behavior is a complex problem that can be influenced by many environmental factors. It is also not surprising that animals influence each other’s movements in more or less subtle ways, from slight movement deviations to social interactions. Understanding how animals influence or interact with each other’s movements, both within and across species, can contribute valuable ecological insights. In our case, queries into how closely animals are bound to each other and whether such bonds are consistent should be facilitated.

As a side note, the requirement list follows a hierarchical structure: meeting the later, higher-level requirements involves first solving the more basic ones before them.

4 Design Rationale

In search of proper methods, and to convert the requirements into executable guidelines, we present a few conceptual elaborations as cornerstones to support the design.

4.1 Parametric Moving Trace Line

As mentioned above, context adaptability is important in movement analysis. Parameterized visualization supports this by controlling local variables, such as species, speed, or group size, to address the problem of concern. In our case, a large time interval is used to record movement locations. Straight lines connecting the location points would appear cluttered and angular, and would present little clue for anticipating the movements between locations. Here, trajectory smoothing[55] can help: the visualized path supports visual heuristics that make potential behavior patterns easier to uncover and the trajectories of different individuals more visually distinguishable. The smoothing also produces other effects that benefit the analysis. For example, it produces sharper turns for slower animals (those with shorter distances between consecutive points), while the opposite applies to faster-moving animals. Domain experts can also use a middle point on the curve to adjust their estimate of the heading if necessary.

Deciding on the right amount of smoothness and the trajectory shape requires careful deliberation and calibration. To support easy reconfiguration, parameter setting with instant visual feedback can improve the productivity of iterative decisions. This helps domain experts understand the effect of each parameter, which eases the interpretation of trajectory lines with regard to R1 and creates adaptability for different analytic scenarios. We understand that the domain experts may occasionally wish to fact-check the bare data points; therefore, smoothing should be implemented with an option to be temporarily turned off to make the explicit location points visible.

4.2 Defining Spatial Temporal Relatedness

The inherent entanglement of space and time (defined as spatio-temporal dependency in GIS) is a pivotal challenge in movement analysis[56]. Observations in the spatial domain without consideration of temporal stability may result in unreliable or even false positive discoveries[50]. Assisting domain experts to think temporally is essential[57].

To find potential interactions between animals, spatial proximity should be discussed within the relevant duration context. Based on this principle, relatedness is a concept that describes relationships with a spatial-temporal reference. To distinguish the two, relatedness concerns the distance variation over a time range, whereas proximity only concerns the distance at a static time point. Thus, relatedness can be treated as a summary of physical proximity through time.

We employ two basic modes for inspecting how closely animals are bound to each other: the pairwise (PW) relatedness and the individual-to-group (i-G) relatedness. The pairwise approach takes two entities as input and displays their dynamic relatedness variations. The i-G approach takes a focal entity and displays how closely it is connected to the rest of the animal group over time. The two modes complement each other for different tasks.

Pairwise relatedness is a time-dependent scalar value that describes the physical proximity of two animals relative to the maximum distance bounded by the captured area. It is defined for every state in the global timeline and for any two animals in the tracked set. Relatedness of one animal versus multiple targets enables comparison between more candidates and is more complex than the pairwise mode, because the collective pattern of i-G relatedness is contextually understood as a qualitative, multivariate pattern that considers the explicit movement trajectory, the relative proximity, and the relatedness trend, all of which involve constantly changing features among multiple targets. To ease communication, we use a separate notation for i-G relatedness, parameterized by the focal animal (the one to be compared with the rest of the group) and the start and end of the time range under consideration. To deal with the intricacies that are difficult to summarize as a quantitative measurement, we implemented a combination of visual components to delineate the implicit patterns and variations.
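One plausible reading of the pairwise definition can be sketched as follows. The exact formula is not recoverable from the text, so the form used here, one minus the distance divided by the maximum distance, is an assumption, as are the function names and the `{time: (x, y)}` track format.

```python
from math import hypot

def pairwise_relatedness(pos_a, pos_b, d_max):
    """Assumed form 1 - d / d_max: 1 means same place, 0 means maximally apart."""
    d = hypot(pos_a[0] - pos_b[0], pos_a[1] - pos_b[1])
    return 1.0 - min(d, d_max) / d_max

def relatedness_series(track_a, track_b, d_max):
    """Relatedness at every time step present in both tracks ({t: (x, y)} dicts)."""
    shared = sorted(track_a.keys() & track_b.keys())
    return {t: pairwise_relatedness(track_a[t], track_b[t], d_max) for t in shared}

# Made-up positions; the two tracks only share the time steps 0 and 2.
zebra = {0: (0.0, 0.0), 2: (3.0, 4.0), 4: (6.0, 8.0)}
lion = {0: (0.0, 0.0), 2: (0.0, 0.0), 6: (1.0, 1.0)}
print(relatedness_series(zebra, lion, d_max=10.0))  # {0: 1.0, 2: 0.5}
```

Normalizing by the maximum distance keeps the value in the range from 0 to 1 regardless of the size of the captured area, which makes pairs comparable.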

4.3 Uncertainty Awareness

Uncertainty awareness is believed to be useful in improving decision-making[58, 59]. Communicating uncertainty is important in our case because missing data can falsely signal the absence of one entity in a mutual relationship, which could mislead the experts’ judgment by suggesting that a period of relatedness has ended. To avoid this, we take a series of measures. Firstly, we perform linear interpolation to fill the gaps, which ensures a steady data flow for the relatedness derivation model. Secondly, we treat the interpolated data points differently by labeling them and measuring their reliability. This is realized by assigning a special Boolean label, i.e. interpolated or null, and a measurement of the extent of uncertainty. The measurement is computed as follows: given the current index and the index range of a run of consecutive missing data points, the degree of uncertainty of the current data point is determined by its index distance to the nearest (temporally) reliable data point.
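The two measures described above can be sketched together. The uncertainty measure follows the verbal description (index distance to the nearest reliable point); its exact formula in the system is not given, so treat this as an assumption. The function name and the use of `None` for missing samples are hypothetical.

```python
def fill_gaps(values):
    """Linearly interpolate None entries and report an uncertainty per sample.

    values: one coordinate sampled on a regular schedule, with None for missing
    samples; the first and last entries are assumed to be real fixes.
    Returns (filled, interpolated_flags, uncertainty), where uncertainty is the
    index distance to the nearest real fix (0 for real fixes).
    """
    filled = list(values)
    flags = [v is None for v in values]
    uncertainty = [0] * len(values)
    i = 0
    while i < len(values):
        if values[i] is None:
            a = i - 1                     # last real fix before the gap
            b = i
            while values[b] is None:      # advance to the first real fix after the gap
                b += 1
            for k in range(a + 1, b):
                w = (k - a) / (b - a)     # interpolation weight along the gap
                filled[k] = values[a] + w * (values[b] - values[a])
                uncertainty[k] = min(k - a, b - k)
            i = b
        else:
            i += 1
    return filled, flags, uncertainty

xs = [0.0, None, None, None, 8.0, 9.0]
filled, flags, unc = fill_gaps(xs)
print(filled)  # [0.0, 2.0, 4.0, 6.0, 8.0, 9.0]
print(unc)     # [0, 1, 2, 1, 0, 0]
```

The uncertainty peaks in the middle of a gap, where the interpolated position is farthest from any recorded fix.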

Thus, the uncertainty information is prepared for visualization with different levels of awareness (R3). The experts can judge the credibility and integrity of a pattern by also referencing the visualized uncertainty[60].

5 System Description

We implemented our visualization system as an interactive, web-based application with D3.js. It consists of a main view, peripheral views, and control areas, see Figure 2. The functionalities follow from 3.3 Requirements and 4 Design Rationale. The main area (Figure 2 B) displays movements and trace lines in relation to their original geographical patterns. The timeline (Figure 2 A) is a time reader and controller that sets the global "current" time and indicates the length of the covered duration, which is shown as the red line along the center (Figure 2 C). The trace line adjustments and the animal pair selector are placed in the control panel. Uncertainty in the data is indicated separately for each animal along the time progression (Figure 2 D). Relatedness measurements can be found in Figure 2 E1, E2. We expand on the details in the rest of this section.

5.1 Movement Encoding

Moving animals are represented as animated locations, each with an ID and a species color. Species are colored to resemble the animal’s natural appearance: orange for lion, cool gray for wildebeest, and black for zebra.

Each animal entity draws a trace line in its own color, the length of which corresponds to the global duration. As the time coverage is the same for every trace line, the lines of animals with drastic movements (larger distances between steps) appear longer than those of sedentary animals, creating a contrast that enables comparison between individuals by movement intensity. Thus, outlier movements stand out more clearly.
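The relation between a fixed time coverage and the drawn length of a trace line can be sketched as follows, assuming an unsmoothed polyline. The function name and the `(t, x, y)` fix format are hypothetical.

```python
from math import hypot

def trace_length(track, t_now, duration):
    """Sum of step lengths over fixes with t_now - duration <= t <= t_now.

    track: list of (t, x, y) sorted by time (hypothetical format).
    """
    window = [(x, y) for t, x, y in track if t_now - duration <= t <= t_now]
    return sum(hypot(x2 - x1, y2 - y1)
               for (x1, y1), (x2, y2) in zip(window, window[1:]))

# Made-up tracks covering the same time span.
active = [(0, 0, 0), (2, 3, 4), (4, 6, 8), (6, 9, 12)]
resting = [(0, 0, 0), (2, 0, 1), (4, 0, 0), (6, 0, 1)]
print(trace_length(active, t_now=6, duration=4))   # 10.0
print(trace_length(resting, t_now=6, duration=4))  # 2.0
```

Both lines cover the same four hours, yet the active animal's line is five times longer, which is the contrast the encoding relies on.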

To improve the readability of movement trajectories (as explained in 4.1 Parametric Moving Trace Line), we applied parametric smoothing to the trace lines. The technique is partially inspired by Sacha et al.’s trajectory simplification[55]. We integrated commonly used methods such as the cubic basis spline, natural cubic spline, straightened cubic basis spline based on Holten’s edge bundles[61], cardinal spline, and Catmull-Rom spline using D3.js’ curve interpolation functions. This configuration, cf. Figure 3, can be fine-tuned by mode or intensity. Here, the mode determines the underlying smoothing function, and the intensity, specified by a linear value between 0 and 1, adjusts the amount of smoothness: 0 means no smoothing at all and 1 means smoothest. The goal is to give domain experts ample freedom to pinpoint the parameters that draw trajectories suited to their analysis scenarios.
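The straightening step behind the bundle-style mode can be sketched in isolation. In Holten's formulation, each control point is blended with the corresponding point on the straight line between the first and last point, and a basis spline is then drawn through the adjusted points. How the system maps its intensity slider to Holten's bundling strength is not stated; the mapping `beta = 1 - intensity` below is an assumption, and only the control-point adjustment is shown, not the spline itself.

```python
def straighten(points, intensity):
    """Pull control points toward the chord from the first to the last point.

    intensity = 0 leaves the control points unchanged; intensity = 1 places
    them all on the straight line (assumed mapping: beta = 1 - intensity).
    points: list of at least two (x, y) tuples.
    """
    beta = 1.0 - intensity
    n = len(points) - 1
    (x0, y0), (xn, yn) = points[0], points[-1]
    out = []
    for i, (x, y) in enumerate(points):
        t = i / n  # relative position along the chord
        out.append((beta * x + (1 - beta) * (x0 + t * (xn - x0)),
                    beta * y + (1 - beta) * (y0 + t * (yn - y0))))
    return out

path = [(0.0, 0.0), (1.0, 2.0), (2.0, -2.0), (3.0, 0.0)]
print(straighten(path, 0.0))  # unchanged control points
print(straighten(path, 0.5))  # detours halved
print(straighten(path, 1.0))  # all points on the line from (0, 0) to (3, 0)
```

At full intensity only the origin and destination determine the shape, which matches the straight-line appearance described for maximum smoothing.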

5.2 Uncertainty Encoding

Uncertainties can be visualized along the temporal axis, cf. Figure 4. Small horizontal heatmaps at the bottom right of the screen inform about data issues in three aspects: 1) the start and end time of the available data, 2) the general distribution, and 3) the degree of uncertainty (by depth of color), cf. 4.3 Uncertainty Awareness. The time context helps experts skip certain segments by showing where to expect reliable data.

In the spatial domain, moving entities change both appearance and size when the underlying data are uncertain, cf. Figure 5. Filled circles change to dashed outlines and expand in size to indicate a dilution of positional accuracy. Their opacity also decreases as the size increases, telling the viewer that the system is unsure about the exact location of the animal.
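The spatial encoding above can be sketched as a mapping from an uncertainty value to marker attributes. The linear scales and all constants (radii, minimum opacity) are hypothetical and chosen only to illustrate the direction of each change.

```python
def glyph_style(uncertainty, max_uncertainty, base_radius=4.0, max_radius=16.0):
    """Map an uncertainty value to a marker style (all constants are hypothetical)."""
    if uncertainty <= 0:
        # Reliable fix: small, filled, fully opaque circle.
        return {"radius": base_radius, "opacity": 1.0, "dashed": False}
    u = min(uncertainty / max_uncertainty, 1.0)  # normalise to (0, 1]
    return {
        "radius": base_radius + u * (max_radius - base_radius),  # grows with uncertainty
        "opacity": 1.0 - 0.75 * u,                               # fades but stays visible
        "dashed": True,                                          # outline instead of fill
    }

print(glyph_style(0, 6))
print(glyph_style(3, 6))
```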

Experts can leverage both display methods to avoid risky interpretations and apply their own discretion based on domain knowledge.

5.3 Relatedness Encoding

Relatedness can be understood through different setups. We describe two modes: the relatedness between two individuals (pairwise) and the relatedness of one individual compared with the rest of the group (i-G).

Granular variation of the pairwise relatedness is plotted with a line chart. Overview-and-zoom functionality[62] is needed here considering the amount of detail in 2.5 years of data points. We therefore implemented a vertically mirrored line chart with a different purpose for each half: the bottom half can be brushed to select the time range, and the top half displays zoomed details of the brushed area, cf. B) in Figure 6.

The chord diagram provides an overview of the relatedness of all possible pairs presented in the main view. Pairs with higher relatedness are drawn with bolder and more saturated ribbons, while a lighter appearance is applied to those with lower relatedness, cf. A) in Figure 6. The depiction reacts differently to a duration and to a fixed time point. If a duration is selected, the system averages the proximities of all intervals in the range to determine the ribbons’ appearance. This approach is very similar to the pairwise mode but more aggregated. Experts can brush and drag to tweak the duration length. Such operations are useful for answering questions like "Were the animals’ movements more clustered (related) during the past eight hours? Were they the same for the last two days?". It is a simpler and more straightforward way to search for patterns without looking at the spatial changes in the main view.
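The aggregation for a selected duration can be sketched as a plain average over the brushed range, followed by a mapping to ribbon appearance. The function names, the `{time: relatedness}` series format, and the width and opacity scales are hypothetical.

```python
def mean_relatedness(series, t_start, t_end):
    """Average a {time: relatedness} series over the closed range [t_start, t_end]."""
    vals = [r for t, r in series.items() if t_start <= t <= t_end]
    return sum(vals) / len(vals) if vals else None

def ribbon_style(r, min_width=1.0, max_width=9.0):
    """Higher relatedness gives a wider, more opaque ribbon (hypothetical scale)."""
    return {"width": min_width + r * (max_width - min_width), "opacity": r}

# Made-up pairwise relatedness, sampled every two hours.
pair = {0: 0.2, 2: 0.4, 4: 0.6, 6: 0.8, 8: 1.0}
last_eight_hours = mean_relatedness(pair, 0, 8)
last_four_hours = mean_relatedness(pair, 4, 8)
print(last_eight_hours, last_four_hours)
print(ribbon_style(last_four_hours))
```

Comparing the two averages answers the kind of question quoted above: here the pair was more related over the last four hours than over the last eight.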

The geographical pattern of the i-G relatedness is displayed in the main view, where spatial distribution and social context are sensitive aspects. An individual can be focused by clicking on its circle in the main view. This interaction halts any ongoing animation and creates an array of concentric circles. The relations of the other animals to the focal animal can then be visually examined, cf. Figure 7. The radii of the circles indicate the spatial proximity to the focal animal. The circles sort animals with scattered distances and moving trends (coming closer or moving away) into an egocentric diagram in which a unidirectional comparison of proximity is possible. Based on this, the proximities at the current time are easy to read from the circle sizes. The less explicit moving trends, however, are illustrated by the trace lines and the relatedness error bars. Here, the former correspond to the movement trajectory over the duration, and the latter depict the trend of relatedness: the line caps at both ends of an error bar are determined by the maximum and minimum values of relative proximity in the duration. Thus, whether an entity is drawing closer or moving farther away can be read from the negative/positive sum of relatedness, which is derived by comparing the lengths of the error bar inside (positive relatedness) and outside (negative relatedness) the proximity circle. For instance, a much larger outer length suggests that the animal spent most of the recent time in places more distant from the focal animal than its current location, that is, it tended to be farther away over the past period. The trace line serves to verify the judgment on the trend of relatedness change with exact movement details.
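The error-bar construction described above can be sketched for a single animal relative to the focal one. The function name and return format are hypothetical, and the sketch only reports which side of the bar dominates rather than interpreting the trend.

```python
def relatedness_bar(distances):
    """Summarise distances to the focal animal over the selected duration.

    distances: chronological list of distances; the last entry is the current
    distance, which sets the circle radius.
    """
    current = distances[-1]
    inner = current - min(distances)  # bar length inside the circle (it has been closer)
    outer = max(distances) - current  # bar length outside the circle (it has been farther)
    if outer > inner:
        dominant = "outer"
    elif inner > outer:
        dominant = "inner"
    else:
        dominant = "balanced"
    return {"radius": current, "inner": inner, "outer": outer, "dominant": dominant}

# Made-up distances: the animal was mostly farther away than it is now.
print(relatedness_bar([8.0, 6.0, 5.0, 3.0, 4.0]))
```

A dominant outer length means the recorded distances in the duration were mostly larger than the current one, which is the case the paragraph above uses as its example.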

6 Use Case

We tested the visualization system with domain experts to validate its usefulness in practical environments. Specifically, the system was hosted online as a web application, and we recorded two sessions of screen interactions and verbal communication remotely via Skype. No specific tasks were given during the experiment. The experts were encouraged to explain their reasoning following the think-aloud protocol[63] and to support the explanation with domain knowledge if necessary. The two sessions, with a total length of 105 minutes, were video recorded for post hoc analysis. We kept notes of the highlights with reference to their timeline position, flow of interaction, the experts’ interpretation, filter settings, and the video time. We report our observations in the rest of this section.

6.1 Checking Seasonal Distribution Change

Background: Seasonal climate change would impact many aspects in an ecosystem. Ecologists need to understand how this is reflected by animal movements. Fortunately, the raw data covers sufficient season cycles of multi-annual time frame. Thus, seasonal difference in movement distribution can be visually compared.

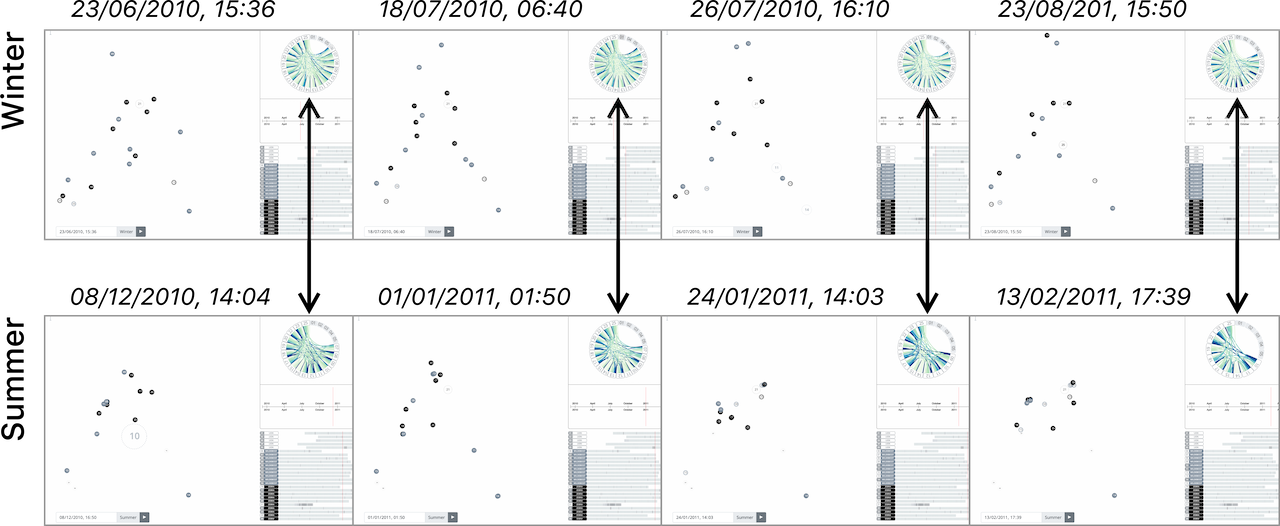

Method: By either picking a specific position on the global timeline with the mouse or manually entering the time digits (R2), experts can quickly preview the general distribution of animal locations. Either way, the season display (for the southern hemisphere) on the time ticker changes according to the specified time. The experts also turn on the trace line to portray areas of denser movement in two steps (R1): 1) they extend the trace line duration to 90 days (a location history of roughly three months) and 2) they move the "current" time precisely to the end of the season by entering the digits. A long trajectory then takes on a nested shape within which condensed areas indicate frequent visits to particular regions. They can also filter out herbivores or predators to make clean comparisons between species across different times of the year (R4). Experts use the overall color tone of the ribbons in the chord diagram to tell how closely animals are forming (local) groups.
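The time handling in this method can be sketched as follows. The season boundaries use the meteorological convention for the southern hemisphere, which is an assumption since the system's convention is not stated; the function names and the example date are hypothetical.

```python
from datetime import datetime, timedelta

# Meteorological seasons for the southern hemisphere (assumed convention).
SEASONS_SOUTH = {12: "summer", 1: "summer", 2: "summer",
                 3: "autumn", 4: "autumn", 5: "autumn",
                 6: "winter", 7: "winter", 8: "winter",
                 9: "spring", 10: "spring", 11: "spring"}

def season_south(moment):
    """Season label for a datetime in the southern hemisphere."""
    return SEASONS_SOUTH[moment.month]

def trace_window(current, days):
    """Start and end of the trace line for a given duration in days."""
    return current - timedelta(days=days), current

# Hypothetical "current" time at the end of a southern winter.
end_of_winter = datetime(2010, 8, 31, 23, 59)
start, end = trace_window(end_of_winter, days=90)
print(season_south(end_of_winter), start, end)
```

With a 90-day duration ending on the last day of August, the trace line starts in early June and therefore covers almost exactly one season.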

Insight: Figure 8 shows snapshots of animal distributions in winter vs. summer. It can be observed that the herbivores tend to spread out loosely in winter and gather more closely during summer. This pattern tends to gravitate toward a few specific regions, as shown by the long-term seasonal trajectories in Figure 9. The experts believe that this could be caused by the periodic change in rainfall over the year, which leads to denser natural resources such as vegetation and water accumulations in some regions. The outcome shows that the concentration of natural resources attracts herbivores, while such a trend is less obvious among the predators. In addition, the shape and size (without smoothing) of trace lines can provide useful clues regarding the relative sedentary or active state of an animal: longer, more dispersed lines indicate more active behavior in the area, while more condensed, wiggling lines suggest more sedentary behavior. From the comparisons between summer months and winter months, the experts have discovered or confirmed several hypotheses that correlate with seasonal changes: 1) season does have an effect on the concentration tendencies of animals, 2) this tendency is more pronounced among herbivores than predators, and 3) relatively sedentary states are observed most often among herbivores during the winter.

6.2 Species Difference in Night Travel Distance

Background: Another potential influence on animal behavior is day-night transition. Unlike humans who tend to rest during most of the night hours, animals may react to day-night alternation differently in order to ensure their survival. The changes in temperature, visibility, as well as the effect of chronobiology could have heterogeneous effects on the movements of species. How the behavior difference could be visually reflected by geographical patterns is intriguing.

Method: The expert starts by setting the current time to 06:00 am on a random day and changes the trace line length to 9 hours. The main view displays the movement trajectories of the last 9 hours, from 09:00 pm the day before to 06:00 am at the current time. They can use the arrow keys to jump to consecutive days without changing the time of day or the trace line length. As a result, common patterns in night movements are exposed more directly to the viewer (R2). As the shape of trace lines can be morphed by type and smoothness, the experts select "bundle" as the curve type and slide the value to maximum smoothing (), which produces straight lines that connect only the origin and destination of the entire movement, cf. Figure 3. This aggressive smoothing technique allows the experts to make sense of the absolute travel distance during the night hours (R1).
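What the maximally smoothed trace line conveys can be approximated numerically as the straight-line distance between the first and last fix inside the 9-hour window. The sketch below is an illustration under stated assumptions: the function names and the `(timestamp, lat, lon)` tuple layout are hypothetical, and the haversine great-circle formula is used here as a stand-in for whatever projection the system applies.

```python
import math
from datetime import datetime, timedelta

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two lat/lon points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def night_displacement_km(fixes, day, end_hour=6, hours=9):
    """Origin-to-destination distance over the window ending at end_hour on `day`.

    fixes: list of (timestamp, lat, lon) tuples (hypothetical layout).
    Returns None when fewer than two fixes fall inside the window.
    """
    end = datetime(day.year, day.month, day.day, end_hour)
    start = end - timedelta(hours=hours)
    window = sorted(f for f in fixes if start <= f[0] <= end)
    if len(window) < 2:
        return None
    (_, lat0, lon0), (_, lat1, lon1) = window[0], window[-1]
    return haversine_km(lat0, lon0, lat1, lon1)


# Example: fixes between 21:00 and 06:00; only first and last matter.
lion = [
    (datetime(2015, 7, 1, 21, 0), 0.0, 31.0),
    (datetime(2015, 7, 2, 1, 0), 0.5, 31.2),
    (datetime(2015, 7, 2, 6, 0), 1.0, 31.0),
]
distance = night_displacement_km(lion, datetime(2015, 7, 2))
```

Note that this measures net displacement between origin and destination, so an animal that wanders and returns to its starting point would score close to zero even if it covered a long path.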

Insight: Figure 10 shows an example of the experts' observations. They found little correlation between night activities and geographical distributions. However, the night travel distances of predators (lions) are distinctly longer than those of herbivores. This indicates that lions are more active at night, while wildebeests and zebras tend to stay put and rest as much as possible during the same hours.

6.3 Examining Grouping/Pairing Behavior

Background: A nuanced understanding of animal social interactions, with an awareness of species traits, plays a key role in the study of animal behavior. As mentioned before, grouping and pairing are difficult to detect from a visualization of mere locations due to spatial-temporal dependency. Instead, visualizing the dynamic relatedness can help ecologists investigate the strength of social bonding in pairs.

Method: When experts find that two animals are potentially forming a pair because they stay together and draw close, comparable traces, they select the corresponding animal pair in the dropdown menu of the control panel (Figure 2 C). The global pairwise relatedness is then plotted to delineate the intimacy between the two animals. Ups and downs that vary from month to month or season to season can be observed. As the expert brushes on the lower half of the chart around the current time, indicated by a vertical red line, fine variations of relatedness in the brushed zone are magnified to a daily or hourly level of detail for examination (R5). By checking the line shape of relatedness at both the macro and micro level, experts can make a more reflective judgment on the grouping or pairing behavior.

Insight: The situation in Figure 11 A) could easily be identified as pairing or grouping behavior by looking only at the temporary collocation in the trace lines. The blue ribbon in the chord diagram that connects animals 17 and 20 seems to confirm that the two have stayed rather close together for the past 20 hours. However, the pairwise relatedness view suggests that this relatedness is constantly changing and unstable. Thus, attributing grouping or pairing behavior is questionable in this case and needs further investigation. Another example in Figure 11 B) tells a very different story of stable pairing: lions 1 and 5 have approached each other and maintained near-maximum relatedness for a rather extended time. Since the phenomenon has repeated several times, experts believe the pattern is a more reliable indicator of strong social pairing. Similar patterns have also been discovered between lions 2 and 4 and between wildebeests 12 and 13 using the same technique. A stable one-month pairing is also found between wildebeests 10 and 14, but the time window for this is too small compared to the other groups, and no further rejoining is found. More investigation might be needed to determine whether the pairing is strong. The experts learn that a safer identification of intimate pairs requires evidence of close movements over a longer period. Members of strong social pairings are likely to rejoin each other soon after separation.
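The distinction the experts draw between unstable collocation and stable, repeated pairing can be approximated by looking for sustained runs of high relatedness. The following sketch is only an illustration: it assumes that pairwise relatedness is available as a regularly sampled series normalized to [0, 1], and the function name, the threshold of 0.9, and the minimum run length are hypothetical choices rather than values from the study.

```python
def high_relatedness_episodes(series, threshold=0.9, min_length=3):
    """Find runs where relatedness stays at or above `threshold`.

    series: relatedness values at regular time steps, assumed to lie in [0, 1].
    Returns a list of (start_index, end_index) pairs, both inclusive,
    for runs lasting at least `min_length` samples.
    """
    episodes = []
    start = None
    for i, value in enumerate(series):
        if value >= threshold:
            if start is None:
                start = i  # a new run begins
        elif start is not None:
            if i - start >= min_length:
                episodes.append((start, i - 1))
            start = None  # the run ended
    # Close a run that reaches the end of the series.
    if start is not None and len(series) - start >= min_length:
        episodes.append((start, len(series) - 1))
    return episodes


# Example: two sustained episodes separated by a brief, isolated peak.
relatedness = [0.2, 0.95, 0.97, 0.96, 0.3, 0.92, 0.1, 0.93, 0.94, 0.99, 0.98]
episodes = high_relatedness_episodes(relatedness)
```

Under these assumptions, several long episodes for the same pair would correspond to the repeated rejoining seen for lions 1 and 5, whereas a series that yields no episode of sufficient length would resemble the unstable case of animals 17 and 20.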

6.4 Analyzing Multi-Species Relatedness

Background: Unlike relatedness within the same species, inter-species relatedness may indicate threat instead of cooperation, particularly between predators and herbivores. According to E1, a real predation process usually takes place within 3-5 minutes, which is too brief to be captured at the sampling frequency of the employed GPS devices. However, E1 notes that unexplored behavioral patterns and multi-species interactions can still take place over the span of hours. The experts would like to use inter-species relatedness to investigate possible instances of such behaviors.

Method: The movements of animals from more than one species can be regarded as potential stalking if one animal continuously follows another. The smoothed trace line with a duration of several hours can be used to validate whether the exact travel paths fit well (R1). The expert uses the numerical input to fine-tune the trace line length and estimate the exact stalking time window. Clicking on one of the animals in the main view triggers the i-G relatedness between the selected animal and the distant one(s) of a different species B. This lets the experts know the distance the other animal(s) have covered to approach the selected one. In this case, the start and end of such episodes can be inferred with the help of pairwise relatedness (R5).

Insight: The example in Figure 12 illustrates how inter-species interactions can be studied through spatial-temporal relatedness. The animals involved are lion 5 and wildebeests 9, 12, and 13. Across the stages, the inter-species relatedness goes through an increase-stable-decrease process within the episode. The development of such a pattern can be interpreted as a hypothetical threat between predators and herbivores. However, without concrete ground truth such as direct observations, we can only assert the likelihood.
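The increase-stable-decrease shape described above can be checked mechanically on a relatedness series. The sketch below is a simple heuristic written for illustration, not the procedure the experts followed: the function names and the tolerance `tol` that separates "stable" from a real change are hypothetical, and the series is assumed to be regularly sampled.

```python
def label_phases(series, tol=0.05):
    """Label each step between consecutive samples by its direction.

    A change larger than `tol` counts as "increase" or "decrease";
    anything smaller is treated as "stable". `tol` is an assumed value.
    """
    labels = []
    for prev, cur in zip(series, series[1:]):
        delta = cur - prev
        if delta > tol:
            labels.append("increase")
        elif delta < -tol:
            labels.append("decrease")
        else:
            labels.append("stable")
    return labels


def has_approach_episode(series, tol=0.05):
    """Return True if the series contains an increase-stable-decrease sequence."""
    collapsed = []
    for label in label_phases(series, tol):
        # Merge consecutive identical labels into one phase.
        if not collapsed or collapsed[-1] != label:
            collapsed.append(label)
    target = ["increase", "stable", "decrease"]
    return any(collapsed[i:i + 3] == target for i in range(len(collapsed) - 2))


# Example: relatedness rises, plateaus, then drops again.
episode = [0.1, 0.3, 0.6, 0.62, 0.61, 0.6, 0.3, 0.1]
found = has_approach_episode(episode)
```

As the text stresses, a positive match only flags a candidate episode for inspection. Without ground truth it says nothing definite about whether a threat actually occurred.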

7 Evaluation

We invited the experts (cf. 3.1 Project Background) to give conclusive remarks on the design. The evaluation started with an open Q&A session of 20 minutes each, during which we first cleared up confusion on both sides, e.g. assumptions about animal behavior or misinterpretations of visual variables. After that, each expert summarized a final evaluation in written form. The results were collected through questionnaires containing questions on three implicit facets that validate the design’s usefulness: the enabling, the facilitating, and the applicability. Enabling emphasizes the aspects that provide novel analytical capabilities for finding undiscovered insights, whereas facilitating consists of cases where the system solves existing problems with a significant productivity boost. Applicability addresses the conditions and contexts under which the system would achieve its maximum value. We set no strict time constraints to allow for answers that were as detailed as possible. The findings are given and discussed below.

7.1 Enabling

According to E1, an animal can be influenced not only by variation in environmental conditions but also by the behavior and location of another individual or group of animals. Because of displacement in space and time, such relationships are tricky to explore visually. The visualization system allows them to analyze movement through space/time variation (R1) of individuals as well as the relationships between individuals (R5). The expert asserts that exploration into inter-individual interactions is enabled by the chord diagram with color-coded relatedness.

Regarding the pairwise relatedness, the experts found the ability to zoom to specific time periods very convenient and easy to use. E2 believes the visualization system highlights an important capability, namely visualizing data along the temporal dimension, particularly how relatedness changes over time (R2).

The i-G relatedness enables a visual understanding of the relatedness with actual distance between individuals in smaller time frames. The relatedness variation range raises the awareness of the time dependency in shorter movement trends. Unexpected patterns would emerge after testing and exploring with varying time scales (R2). E2 believes that the view mode is not only useful for generating ecological insights or hypotheses, but also creates more contextual awareness for the analysis.

7.2 Facilitating

Before using our system, plotting static figures was the experts' primary means of visual analysis for movements. According to their comments, the visualization creates depictions beyond static figures, without which the dynamics in movements are hard to interpret. "(Such functionality) is very needed in data exploration", says E1 (R1).

As they are fully aware of the difficulties introduced by inconsistent data, the new visual approach to check data availability/uncertainty is well-appreciated. "To me, this is a very useful tool for exploration of movement data, allowing to focus on different potential problems, such as sociality between individuals, movements relative to predators, home ranging, etc." says E1. The expert also confirms that the awareness of uncertainty is reinforced by trace lines and smoothing parameters which can be used to smooth out uncertain measurements (R3). E2 appreciates the quick configuration changes on the fly. He says, "It makes comparisons between configurations very convenient." (R1).

7.3 Applicability

Movement ecology research often requires calculating implicit features from the data, such as road impact[64] or stigmergy[19]. When the optimal features for describing animal behaviors are still unclear and yet to be confirmed, the research becomes challenging. Based on their experience, the experts believe that the system can play a key role in the exploratory stage of their analysis, where parameter settings and the scoping down to subsets of data need to be adjusted frequently. Under such circumstances, a comprehensive integration of capabilities that produces easy-to-interpret visual insights with quick and convenient configuration changes is essential. According to E2, visualizing certain variables in a spatial-temporal way has changed their way of computing variables, doing analysis, and developing new hypotheses or insights.

8 Discussion

Compared to other visualization work supporting ecological research, this paper places more emphasis on individual-level inquiries, particularly potential interactions or threats. The study of these issues initially presented itself as a wicked problem lacking a proper structure. However, the perspective of relatedness places the related information into a clearer view of movements in a relational context. This new attribute is not only a metric that aggregates proximity over time but also a variation pattern with a domain-friendly interpretation. On the one hand, exposing such patterns helps the expert understand the unstable nature of spatial relations (cf. Figure 11), which enables comparisons that lead to a deeper, more intuitive understanding. On the other hand, the new attribute is a mathematical inference from the original data (cf. 4.2 Defining Spatial Temporal Relatedness), which preserves the possibility of reverting the patterns to source values and (re)modeling or testing them if necessary.

We acknowledge that distribution and behavior patterns caused by local resource changes and land features are equally interesting to many ecologists, for example the influence of seasonal change as in 6.1 Checking Seasonal Distribution Change. However, reasoning with more comprehensive factors such as temperature and rainfall will only become feasible once we hold richer climate and GIS information about the area. Similarly, validation of visual interpretations such as predatory threat (cf. 6.4 Analyzing Multi-Species Relatedness) relies on obtaining ground truth data, for instance recorded locations of lion kills. Such evidence is even more expensive to obtain with current technology. In this regard, the visualization is helpful as it guides us toward how future research could be improved by obtaining additional knowledge and data.

9 Conclusion

This article introduces a visual exploration system for movement ecologists with a focus on individual-level insights into animal movements. Although the analysis of individual-level interactions has been touched upon by previous works[56, 50], a visualization tool that analyzes small-scale animal interactivity through the lens of relatedness is novel. Experts believe the design is useful for identifying general movement patterns as well as locating possible pairing and matching in different time frames. The practicality of the relatedness approach is supported by real cases and novel insights. Targeting the emerging field of movement ecology[15, 1], we see our work as a useful contribution that supports nuanced insights into fine-scale relationships among animals of multiple species.

In future work, we intend to find more supporting cases with species of different travel distances. For example, experts suggest that the i-G relatedness approach could be viable for exploring a predator's prey base by searching for proximate herbivore candidates. Since our sample size is still small compared to the number of all herbivores roaming in the area, it would be interesting to redesign the project setup to track a significant proportion of all possible prey of a different predator. We also expect our methods to be useful for outlining shared behavioral principles by comparing human social movements with those of wildlife. The insights could benefit the design of public spaces to promote social activities.

References

- [1] C. Holden, “Inching Toward Movement Ecology,” Science, vol. 313, no. 5788, pp. 779–782, Aug. 2006.

- [2] F. Cagnacci and F. Urbano, “Managing wildlife: A spatial information system for GPS collars data,” Environmental Modelling & Software, vol. 23, no. 7, pp. 957–959, Jul. 2008.

- [3] M. Gor, J. Vora, S. Tanwar, S. Tyagi, N. Kumar, M. S. Obaidat, and B. Sadoun, “GATA: GPS-Arduino based Tracking and Alarm system for protection of wildlife animals,” in 2017 International Conference on Computer, Information and Telecommunication Systems (CITS). Dalian, China: IEEE, Jul. 2017, pp. 166–170.

- [4] F. Hoflinger, R. Zhang, T. Volk, E. Garea-Rodriguez, A. Yousaf, C. Schlumbohm, K. Krieglstein, and L. M. Reindl, “Motion capture sensor to monitor movement patterns in animal models of disease,” in 2015 IEEE 6th Latin American Symposium on Circuits & Systems (LASCAS). Montevideo, Uruguay: IEEE, Feb. 2015, pp. 1–4.

- [5] Q. Jiang and C. Daniell, “Recognition of human and animal movement using infrared video streams,” in 2004 International Conference on Image Processing, 2004. ICIP ’04., vol. 2. Singapore: IEEE, 2004, pp. 1265–1268.

- [6] M. Teimouri, U. Indahl, H. Sickel, and H. Tveite, “Deriving Animal Movement Behaviors Using Movement Parameters Extracted from Location Data,” ISPRS International Journal of Geo-Information, vol. 7, no. 2, p. 78, Feb. 2018.

- [7] D. Sarkar, C. A. Chapman, L. Griffin, and R. Sengupta, “Analyzing Animal Movement Characteristics From Location Data: Analyzing Animal Movement,” Transactions in GIS, vol. 19, no. 4, pp. 516–534, Aug. 2015.

- [8] Y. Wang, Z. Luo, J. Takekawa, D. Prosser, Y. Xiong, S. Newman, X. Xiao, N. Batbayar, K. Spragens, S. Balachandran, and B. Yan, “A new method for discovering behavior patterns among animal movements,” International Journal of Geographical Information Science, vol. 30, no. 5, pp. 929–947, May 2016.

- [9] Z. Li, J. Han, B. Ding, and R. Kays, “Mining periodic behaviors of object movements for animal and biological sustainability studies,” Data Mining and Knowledge Discovery, vol. 24, no. 2, pp. 355–386, Mar. 2012.

- [10] Z. Li, J. Han, M. Ji, L.-A. Tang, Y. Yu, B. Ding, J.-G. Lee, and R. Kays, “MoveMine: Mining moving object data for discovery of animal movement patterns,” ACM Transactions on Intelligent Systems and Technology, vol. 2, no. 4, pp. 1–32, Jul. 2011.

- [11] R. Nathan, W. M. Getz, E. Revilla, M. Holyoak, R. Kadmon, D. Saltz, and P. E. Smouse, “A movement ecology paradigm for unifying organismal movement research,” Proceedings of the National Academy of Sciences, vol. 105, no. 49, pp. 19 052–19 059, 2008.

- [12] B. Kranstauber, A. Cameron, R. Weinzerl, T. Fountain, S. Tilak, M. Wikelski, and R. Kays, “The Movebank data model for animal tracking,” Environmental Modelling & Software, vol. 26, no. 6, pp. 834–835, Jun. 2011.

- [13] F. Cagnacci, L. Boitani, R. Powell, and M. Boyce, “Animal ecology meets GPS-based radiotelemetry: A perfect storm of opportunities and challenges,” Philosophical Transactions of the Royal Society B: Biological Sciences, vol. 365, no. 1550, pp. 2157–2162, 2010.

- [14] D. Spretke, P. Bak, H. Janetzko, B. Kranstauber, F. Mansmann, and S. Davidson, “Exploration Through Enrichment: A Visual Analytics Approach for Animal Movement,” in Proceedings of the 19th ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems, ser. GIS ’11. New York, NY, USA: ACM, 2011, pp. 421–424.

- [15] R. Nathan, “An emerging movement ecology paradigm,” Proceedings of the National Academy of Sciences, vol. 105, no. 49, pp. 19 050–19 051, Dec. 2008.

- [16] P. A. H. Westley, A. M. Berdahl, C. J. Torney, and D. Biro, “Collective movement in ecology: From emerging technologies to conservation and management,” Philosophical Transactions of the Royal Society B: Biological Sciences, vol. 373, no. 1746, p. 20170004, May 2018.

- [17] M. Holyoak, R. Casagrandi, R. Nathan, E. Revilla, and O. Spiegel, “Trends and missing parts in the study of movement ecology,” Proceedings of the National Academy of Sciences, vol. 105, no. 49, pp. 19 060–19 065, Dec. 2008.

- [18] J. M. Calabrese, C. H. Fleming, W. F. Fagan, M. Rimmler, P. Kaczensky, S. Bewick, P. Leimgruber, and T. Mueller, “Disentangling social interactions and environmental drivers in multi-individual wildlife tracking data,” Philosophical Transactions of the Royal Society B: Biological Sciences, vol. 373, no. 1746, p. 20170007, May 2018.

- [19] L. Giuggioli, J. R. Potts, D. I. Rubenstein, and S. A. Levin, “Stigmergy, collective actions, and animal social spacing,” Proceedings of the National Academy of Sciences, vol. 110, no. 42, pp. 16 904–16 909, Oct. 2013.

- [20] L. Polansky and G. Wittemyer, “A framework for understanding the architecture of collective movements using pairwise analyses of animal movement data,” Journal of The Royal Society Interface, vol. 8, no. 56, pp. 322–333, Mar. 2011.

- [21] A. Strandburg-Peshkin, D. Papageorgiou, M. C. Crofoot, and D. R. Farine, “Inferring influence and leadership in moving animal groups,” Philosophical Transactions of the Royal Society B: Biological Sciences, vol. 373, no. 1746, p. 20170006, May 2018.

- [22] C. J. Torney, M. Lamont, L. Debell, R. J. Angohiatok, L.-M. Leclerc, and A. M. Berdahl, “Inferring the rules of social interaction in migrating caribou,” Philosophical Transactions of the Royal Society B: Biological Sciences, vol. 373, no. 1746, p. 20170385, May 2018.

- [23] M. M. Pires and P. R. Guimaraes, “Interaction intimacy organizes networks of antagonistic interactions in different ways,” Journal of The Royal Society Interface, vol. 10, no. 78, pp. 20 120 649–20 120 649, Nov. 2012.

- [24] M. Hagen, W. D. Kissling, C. Rasmussen, M. A. De Aguiar, L. E. Brown, D. W. Carstensen, I. Alves-Dos-Santos, Y. L. Dupont, F. K. Edwards, J. Genini, P. R. Guimarães, G. B. Jenkins, P. Jordano, C. N. Kaiser-Bunbury, M. E. Ledger, K. P. Maia, F. M. D. Marquitti, Ó. Mclaughlin, L. P. C. Morellato, E. J. O’Gorman, K. Trøjelsgaard, J. M. Tylianakis, M. M. Vidal, G. Woodward, and J. M. Olesen, “Biodiversity, Species Interactions and Ecological Networks in a Fragmented World,” in Advances in Ecological Research. Elsevier, 2012, vol. 46, pp. 89–210.

- [25] A. Slingsby and E. van Loon, “Exploratory Visual Analysis for Animal Movement Ecology,” Computer Graphics Forum, vol. 35, no. 3, pp. 471–480, Jun. 2016.

- [26] N. Eagle, A. S. Pentland, and D. Lazer, “Inferring friendship network structure by using mobile phone data,” Proceedings of the National Academy of Sciences, vol. 106, no. 36, pp. 15 274–15 278, Sep. 2009.

- [27] D. A. Roshier, V. A. J. Doerr, and E. D. Doerr, “Animal movement in dynamic landscapes: Interaction between behavioural strategies and resource distributions,” Oecologia, vol. 156, no. 2, pp. 465–477, May 2008.

- [28] N. Ferreira, L. Lins, D. Fink, S. Kelling, C. Wood, J. Freire, and C. Silva, “BirdVis: Visualizing and Understanding Bird Populations,” IEEE Transactions on Visualization and Computer Graphics, vol. 17, no. 12, pp. 2374–2383, Dec. 2011.

- [29] D. Kavathekar, T. Mueller, and W. F. Fagan, “Introducing AMV (Animal Movement Visualizer), a visualization tool for animal movement data from satellite collars and radiotelemetry,” Ecological Informatics, vol. 15, pp. 91–95, May 2013.

- [30] S. Dodge, G. Bohrer, R. Weinzierl, S. C. Davidson, R. Kays, D. Douglas, S. Cruz, J. Han, D. Brandes, and M. Wikelski, “The environmental-data automated track annotation (Env-DATA) system: Linking animal tracks with environmental data,” Movement Ecology, vol. 1, p. 3, Jul. 2013.

- [31] D. Seebacher, J. Haualer, M. Hundt, M. Stein, H. Muller, U. Engelke, and D. Keim, “Visual Analysis of Spatio-Temporal Event Predictions: Investigating the Spread Dynamics of Invasive Species,” IEEE Transactions on Big Data, pp. 1–1, 2018.

- [32] G. Xavier and S. Dodge, “An Exploratory Visualization Tool for Mapping the Relationships Between Animal Movement and the Environment,” in Proceedings of the 22nd ACM SIGSPATIAL International Workshop on Interacting with Maps, ser. MapInteract ’14. New York, NY, USA: ACM, 2014, pp. 36–42.

- [33] P. D. Taylor, L. Fahrig, K. Henein, and G. Merriam, “Connectivity Is a Vital Element of Landscape Structure,” Oikos, vol. 68, no. 3, p. 571, Dec. 1993.

- [34] M. Baguette and H. Van Dyck, “Landscape connectivity and animal behavior: Functional grain as a key determinant for dispersal,” Landscape Ecology, vol. 22, no. 8, pp. 1117–1129, Oct. 2007.

- [35] S. L. Lima and P. A. Zollner, “Towards a behavioral ecology of ecological landscapes,” Trends in Ecology & Evolution, vol. 11, no. 3, pp. 131–135, Mar. 1996.

- [36] M. Konzack, P. Gijsbers, F. Timmers, E. van Loon, M. A. Westenberg, and K. Buchin, “Visual exploration of migration patterns in gull data,” Information Visualization, p. 147387161775124, Jan. 2018.

- [37] P. Bak, M. Marder, S. Harary, A. Yaeli, and H. J. Ship, “Scalable Detection of Spatiotemporal Encounters in Historical Movement Data,” Computer Graphics Forum, vol. 31, no. 3pt1, pp. 915–924, 2012.

- [38] G. Andrienko, N. Andrienko, and M. Heurich, “An event-based conceptual model for context-aware movement analysis,” International Journal of Geographical Information Science, vol. 25, no. 9, pp. 1347–1370, Sep. 2011.

- [39] F. d. L. Siqueira and V. Bogorny, “Discovering Chasing Behavior in Moving Object Trajectories,” Transactions in GIS, vol. 15, no. 5, pp. 667–688, 2011.

- [40] N. V. Andrienko, G. Andrienko, M. Wachowicz, and D. Orellana, “Uncovering Interactions between Moving Objects,” 2008.

- [41] J. Bertin, Semiology of Graphics: Diagrams, Networks, Maps, 1st ed. Redlands, Calif: Esri Press, Nov. 2010.

- [42] J. Shamoun-Baranes, E. E. van Loon, R. S. Purves, B. Speckmann, D. Weiskopf, and C. J. Camphuysen, “Analysis and visualization of animal movement,” Biology Letters, vol. 8, no. 1, pp. 6–9, Feb. 2012.

- [43] N. R. Hedley, C. H. Drew, E. A. Arfin, and A. Lee, “Hagerstrand Revisited: Interactive Space-Time Visualizations of Complex Spatial Data,” Informatica (Slovenia), vol. 23, no. 2, 1999.

- [44] P. Gatalsky, N. Andrienko, and G. Andrienko, “Interactive analysis of event data using space-time cube,” in Proceedings. Eighth International Conference on Information Visualisation, 2004. IV 2004. London, England: IEEE, 2004, pp. 145–152.

- [45] M. Kraak, “The space - time cube revisited from a geovisualization perspective,” in ICC 2003 : Proceedings of the 21st International Cartographic Conference. International Cartographic Association (ICA), 2003, pp. 1988–1996.

- [46] J. A. Walsh, J. Zucco, R. T. Smith, and B. H. Thomas, “Temporal-Geospatial Cooperative Visual Analysis,” in 2016 Big Data Visual Analytics (BDVA). Sydney, Australia: IEEE, Nov. 2016, pp. 1–8.

- [47] F. Amini, S. Rufiange, Z. Hossain, Q. Ventura, P. Irani, and M. J. McGuffin, “The Impact of Interactivity on Comprehending 2D and 3D Visualizations of Movement Data,” IEEE Transactions on Visualization and Computer Graphics, vol. 21, no. 1, pp. 122–135, Jan. 2015.

- [48] G. Andrienko, N. Andrienko, G. Fuchs, and J. M. C. Garcia, “Clustering Trajectories by Relevant Parts for Air Traffic Analysis,” IEEE Transactions on Visualization and Computer Graphics, vol. 24, no. 1, pp. 34–44, Jan. 2018.

- [49] T. Crnovrsanin, C. Muelder, C. Correa, and K. L. Ma, “Proximity-based visualization of movement trace data,” in 2009 IEEE Symposium on Visual Analytics Science and Technology, Oct. 2009, pp. 11–18.

- [50] G. Andrienko, N. Andrienko, P. Bak, D. Keim, and S. Wrobel, Visual Analytics of Movement. Berlin, Heidelberg: Springer Berlin Heidelberg, 2013.

- [51] R. Handcock, D. Swain, G. Bishop-Hurley, K. Patison, T. Wark, P. Valencia, P. Corke, and C. O’Neill, “Monitoring Animal Behaviour and Environmental Interactions Using Wireless Sensor Networks, GPS Collars and Satellite Remote Sensing,” Sensors, vol. 9, no. 5, pp. 3586–3603, May 2009.

- [52] A. Hurford, “GPS Measurement Error Gives Rise to Spurious 180° Turning Angles and Strong Directional Biases in Animal Movement Data,” PLoS ONE, vol. 4, no. 5, p. e5632, May 2009.

- [53] K. Bjørneraas, B. Moorter, C. M. Rolandsen, and I. Herfindal, “Screening Global Positioning System Location Data for Errors Using Animal Movement Characteristics,” The Journal of Wildlife Management, vol. 74, no. 6, pp. 1361–1366, Aug. 2010.

- [54] C. Reas and B. Fry, Processing: A Programming Handbook for Visual Designers and Artists. Cambridge, Mass.: MIT Press, 2007.

- [55] D. Sacha, F. Al-Masoudi, M. Stein, T. Schreck, D. A. Keim, G. Andrienko, and H. Janetzko, “Dynamic Visual Abstraction of Soccer Movement,” Computer Graphics Forum, vol. 36, no. 3, pp. 305–315, Jun. 2017.

- [56] U. Demšar, K. Buchin, F. Cagnacci, K. Safi, B. Speckmann, N. Van de Weghe, D. Weiskopf, and R. Weibel, “Analysis and visualisation of movement: An interdisciplinary review,” Movement Ecology, vol. 3, p. 5, Mar. 2015.

- [57] G. Andrienko, N. Andrienko, U. Demsar, D. Dransch, J. Dykes, S. I. Fabrikant, M. Jern, M.-J. Kraak, H. Schumann, and C. Tominski, “Space, time and visual analytics,” International Journal of Geographical Information Science, vol. 24, no. 10, pp. 1577–1600, Oct. 2010.

- [58] M. Riveiro, T. Helldin, G. Falkman, and M. Lebram, “Effects of visualizing uncertainty on decision-making in a target identification scenario,” Computers & Graphics, vol. 41, pp. 84–98, Jun. 2014.

- [59] M. Wunderlich, K. Ballweg, G. Fuchs, and T. von Landesberger, “Visualization of Delay Uncertainty and its Impact on Train Trip Planning: A Design Study,” Computer Graphics Forum, vol. 36, no. 3, pp. 317–328, Jun. 2017.

- [60] D. Sacha, H. Senaratne, B. C. Kwon, G. Ellis, and D. A. Keim, “The Role of Uncertainty, Awareness, and Trust in Visual Analytics,” IEEE Transactions on Visualization and Computer Graphics, vol. 22, no. 1, pp. 240–249, Jan. 2016.

- [61] D. Holten, “Hierarchical Edge Bundles: Visualization of Adjacency Relations in Hierarchical Data,” IEEE Transactions on Visualization and Computer Graphics, vol. 12, no. 5, pp. 741–748, Sep. 2006.

- [62] A. Cockburn, A. Karlson, and B. B. Bederson, “A review of overview+detail, zooming, and focus+context interfaces,” ACM Computing Surveys, vol. 41, no. 1, pp. 1–31, Dec. 2008.

- [63] T. Boren and J. Ramey, “Thinking aloud: Reconciling theory and practice,” IEEE Transactions on Professional Communication, vol. 43, no. 3, pp. 261–278, Sep. 2000.

- [64] N. Klar, M. Herrmann, and S. Kramer-Schadt, “Effects and Mitigation of Road Impacts on Individual Movement Behavior of Wildcats,” Journal of Wildlife Management, vol. 73, no. 5, pp. 631–638, Jul. 2009.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/bda3f85f-f669-4bc8-9038-6a6f98c41228/wei.jpg)

Wei Li received the MSc degree in Industrial Design Engineering from School of Design, Jiangnan University, China. He is currently a PhD candidate in the Department of Industrial Design, Eindhoven University of Technology, the Netherlands. His current research interests include visualization application, movement behavior, game analytics, and healthcare visualization.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/bda3f85f-f669-4bc8-9038-6a6f98c41228/mathias.jpg)

Mathias Funk has a background in Computer Science from RWTH Aachen, Germany, and got his PhD in Electrical Engineering at Eindhoven University of Technology. He is an associate professor in the Department of Industrial Design, Eindhoven University of Technology. He leads the Things Ecology lab and is interested in design theory and processes for systems, designing systems for musical expression, and designing with data.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/bda3f85f-f669-4bc8-9038-6a6f98c41228/jasper.png)

Jasper Eikelboom received an MSc degree in Environmental Biology and an MA degree in Geography Education, both at Utrecht University. He is currently a PhD candidate in quantitative ecology at the Resource Ecology group of Wageningen University. His current research interests include movement ecology, machine learning, and conservation biology.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/bda3f85f-f669-4bc8-9038-6a6f98c41228/aarnout.jpg)

Aarnout Brombacher holds a BSc and MSc in Electrical Engineering and a PhD in Engineering Science, all from Twente University of Technology. He was appointed full professor at Eindhoven University of Technology. He has also worked as a senior consultant for the Reliability section of Philips CFT Development Support. He is currently a member of the National TopTeam (advisory body of the Dutch government) on Sports and Vitality, representing the 14 Dutch universities in this field. He is interested in sports, activity for human health, and individual activity data.