Vision-Based Target Localization for a Flapping-Wing Aerial Vehicle

Abstract

The flapping-wing aerial vehicle (FWAV) is a new type of flying robot that mimics the flight of birds and insects. However, FWAVs have limited payload capacity and short endurance, so most existing ground target localization systems are not suitable for them. In this paper, a vision-based target localization algorithm based on a generic camera model is proposed for FWAVs. Because the sensors have measurement errors and the camera suffers from jitter and motion blur during flight, Gaussian noise is introduced in the simulation experiments, and a first-order low-pass filter is then used to stabilize the localization values. To verify the feasibility and accuracy of the algorithm, we design a set of simulation experiments with various added noises. The simulation results show that the algorithm performs well and that filtering greatly reduces the variance of the localization values.

Index Terms:

FWAV, target localization, positioning algorithm, first-order low-pass filter

I Introduction

The flapping-wing aerial vehicle (FWAV) is a new type of flying robot that mimics the flight of birds and insects [1][2][3]. Compared with conventional fixed-wing and rotary-wing aerial vehicles, FWAVs are smaller and more maneuverable in flight, and they also offer high flight efficiency and good biological camouflage [4][5][6]. These characteristics give them advantages in military reconnaissance, environmental monitoring, and disaster rescue [7][8][9].

Much research effort has been devoted to FWAVs in recent years, and many fruitful results have emerged. Ramezani et al. [10] at the University of Illinois at Urbana-Champaign (UIUC) built Bat Bot (B2), a fully self-contained, autonomously flying robot with a mass of 93 g that mimics the morphological properties of bat wings. AeroVironment in the U.S. [11] designed the Nano Hummingbird, a FWAV with a mass of 19 g and a wingspan of 16.5 cm. Moreover, Yang et al. from Northwestern Polytechnical University created a FWAV named Dove, which has a mass of 220 g and a wingspan of 50 cm and can operate autonomously. It can fly for half an hour and transmit live, stabilized color video to a ground station more than 4 km away [12].

In addition, a new dynamic analysis and engineering design method for flapping-wing flying robots has been proposed with engineering applications in mind [13]. Researchers from Delft University of Technology designed a micro FWAV named DelFly II [14]. It is equipped with a binocular vision system consisting of two synchronized CMOS cameras with a total resolution of 720 × 240 pixels, which allows DelFly II to carry out simple obstacle detection tasks. However, its measurement range is only 4 m and its error can reach 30 cm, so this visual perception system is not suitable for complex tasks. Moreover, Baek et al. from the University of California, Berkeley, demonstrated a 13-gram autonomously controlled ornithopter using a small X-Wing [15]. With a wingspan of 28 cm, it can land within a radius of 0.5 m of the target with a success rate above 85%. Although this system can fly to a designated target autonomously, it is not suitable for FWAVs with a large wingspan.

Ground target localization for FWAVs has received little attention so far, whereas considerable progress has been made for other aircraft platforms [16]. Current aircraft-based ground target localization methods fall into three types: methods based on attitude measurement and laser ranging, methods based on the collinearity principle, and methods based on a Digital Elevation Model (DEM) [17]. However, FWAVs have limited payload capacity, short endurance, and motion jitter during flight, so most existing systems are not suitable for them.

In this paper, we concentrate on designing a ground target localization system for FWAVs. First, we introduce the generic camera model we have chosen and explain why it is suitable. Based on this model, we then derive the ground target localization algorithm. To account for sensor measurement errors and motion jitter during flight, the simulation experiments add Gaussian noise to the camera height and the camera angle. Finally, to suppress the influence of this noise on the localization system, the localization values are smoothed by a first-order low-pass filter.

The paper is organized as follows. Section II introduces the five coordinate systems and the generic camera model. Section III presents the target localization algorithm. Section IV reports the simulation experiments, and Section V discusses the experimental results and concludes the paper.

II Preliminaries

II-A Five coordinate systems

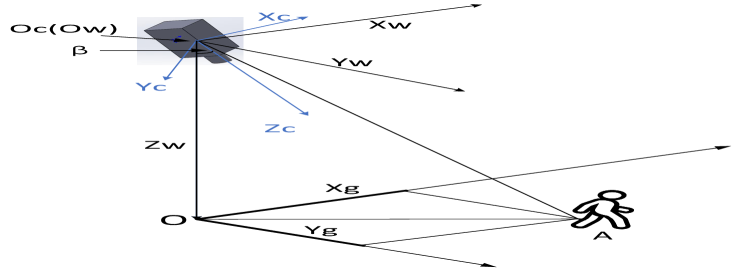

Fig.1 shows three of the five coordinate systems. First, taking a person (point A) as the target, we define the ground coordinate system, whose X and Y axes point east and north, respectively. Next, with the camera centroid as a reference point, we define the world coordinate system. Fig.1 also marks the foot of the perpendicular from the camera centroid to the ground, and the X and Y axes of the world coordinate system are parallel to the X and Y axes of the ground coordinate system, respectively. Additionally, the camera coordinate system takes the camera centroid as its origin and the camera optical axis as its Z axis, with its X, Y, and Z axes mutually perpendicular as shown in Fig.1. Fig.1 also marks the camera angle $\alpha$, which describes the tilt of the camera coordinate system relative to the world coordinate system.

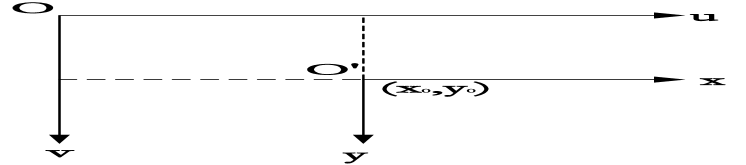

Besides, as shown in Fig.2, there are two image coordinate systems: image coordinate system I, with coordinates $(x, y)$, and image coordinate system II, with coordinates $(u, v)$. Both lie on the imaging plane, but their origins and units differ. The origin of image coordinate system I is the center of the imaging plane, whereas the origin of image coordinate system II is its top-left corner. Moreover, image coordinate system I uses a physical unit (mm), whereas image coordinate system II uses pixels. The conversion from image coordinate system I to image coordinate system II is

$$\begin{aligned} u &= m_u\,x + u_0 \\ v &= m_v\,y + v_0 \end{aligned} \tag{1}$$

where $(u_0, v_0)$ is the principal point and $m_u$, $m_v$ give the number of pixels per unit distance (mm) in the horizontal and vertical directions, respectively [18].
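As a small illustration of (1), the conversion and its inverse can be sketched in Python. The intrinsic values below (3 µm pixels, principal point at the center of a 1280 × 720 image) are hypothetical placeholders, not calibrated values from our camera.

```python
# Hypothetical intrinsics (illustration only): 3-micron pixels give 1 / 0.003
# pixels per mm, and the principal point is the center of a 1280 x 720 image.
M_U = M_V = 1.0 / 0.003   # m_u, m_v in pixels per mm
U0, V0 = 640.0, 360.0      # principal point (u_0, v_0) in pixels


def image1_to_image2(x_mm, y_mm):
    """Eq. (1): image coordinate system I (mm, centered) -> II (pixels, top-left origin)."""
    return M_U * x_mm + U0, M_V * y_mm + V0


def image2_to_image1(u_px, v_px):
    """Inverse of Eq. (1): pixels -> millimeters relative to the principal point."""
    return (u_px - U0) / M_U, (v_px - V0) / M_V


u, v = image1_to_image2(0.3, -0.15)
print(round(u, 3), round(v, 3))  # 740.0 310.0
```

The inverse function is the step the localization algorithm in Section III needs, since it starts from pixel coordinates.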

II-B Generic camera model

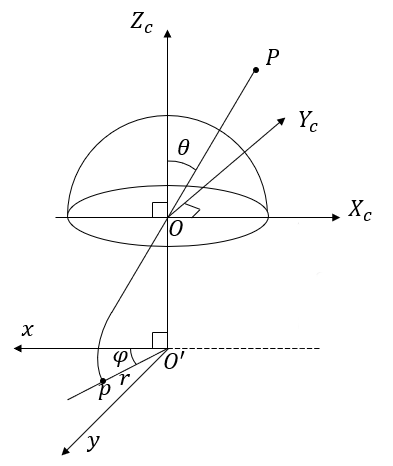

To enlarge the field of view of aerial photography on FWAVs, a camera with a wide-angle lens is usually chosen [19]. However, the traditional pinhole camera model is not suitable for wide-angle cameras, so we adopt a generic camera model for fish-eye lenses [18], shown in Fig.3.

In Fig.3, the center of the camera lens is the projection center, $\theta$ is the angle of incidence of the projected ray with respect to the optical axis, and $r$ is the distance from the image point to the image center. The image point is the projection of a space point onto the image plane, and $\varphi$ is the angle between the line from the image center to the image point and the x axis of image coordinate system I. The approximate projection model is

$$r(\theta) = k_1\theta + k_2\theta^3 + k_3\theta^5 + k_4\theta^7 + k_5\theta^9 \tag{2}$$

where $k_1, \dots, k_5$ are five parameters determined by the calibration procedure.
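The following sketch evaluates (2) and contrasts it with the pinhole relation $r = f\tan\theta$, which grows without bound as $\theta$ approaches 90° and therefore cannot describe a 150° field of view. The coefficients are hypothetical placeholders; setting $k_2 = \dots = k_5 = 0$ would reduce (2) to the equidistance projection $r = k_1\theta$.

```python
import math

# Hypothetical calibration values k1..k5 (mm); real values come from calibration.
K = (1.8, -0.05, 0.0, 0.0, 0.0)


def radial_distance(theta, k=K):
    """Eq. (2): r = k1*theta + k2*theta^3 + k3*theta^5 + k4*theta^7 + k5*theta^9."""
    return sum(ki * theta ** (2 * i + 1) for i, ki in enumerate(k))


def pinhole_radius(theta, f):
    """Pinhole (perspective) projection r = f * tan(theta), for comparison."""
    return f * math.tan(theta)


for deg in (30, 60, 75, 89):
    t = math.radians(deg)
    # With f = k1 both models agree near the optical axis but diverge at wide angles.
    print(deg, round(radial_distance(t), 3), round(pinhole_radius(t, K[0]), 3))
```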

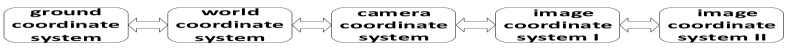

Based on (2), the coordinate transformation process is shown in Fig.4. The projection process computes the coordinates of the target in image coordinate system II from its ground coordinates.

Suppose that the target's coordinates in the ground coordinate system are $(X, Y)$, the camera height is $h$, and the camera angle is $\alpha$. Point A is first expressed in the world coordinate system and is then transformed into the camera coordinate system by the rigid transformation matrix

| (3) |

Then the coordinate of the ground target point in the camera coordinate system is

| (4) |

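Writing the camera-frame coordinates in (4) as $(x_c, y_c, z_c)$, the incidence angle $\theta$ and the angle $\varphi$ (as shown in Fig.3) are

$$\theta = \arccos\frac{z_c}{\sqrt{x_c^2 + y_c^2 + z_c^2}} \tag{5}$$

$$\varphi = \operatorname{atan2}(y_c,\, x_c) \tag{6}$$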

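Substituting $\theta$ into (2) gives the distance $r(\theta)$, and with the angle $\varphi$ from (6), the coordinates $(x, y)$ in image coordinate system I are

$$x = r(\theta)\cos\varphi, \qquad y = r(\theta)\sin\varphi \tag{7}$$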

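Finally, applying (1) with the principal point $(u_0, v_0)$ and the pixel densities $m_u$, $m_v$, the coordinates $(u, v)$ in image coordinate system II are

$$u = m_u\,x + u_0 \tag{8}$$

$$v = m_v\,y + v_0 \tag{9}$$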
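The forward projection can be sketched end to end under an assumed geometry, since the exact form of the rigid transformation (3) depends on axis conventions. This sketch assumes its own: world X east, Y north, Z up; the camera sits at height $h$ above the world origin; the optical axis is tilted from the downward vertical toward north by $\alpha$; the camera x axis points east (image right) and the y axis points image-down. The rotation matrix and the camera parameters are hypothetical and may differ from the paper's conventions.

```python
import math

# Hypothetical camera parameters, for illustration only (not calibrated values).
K = (1.8, -0.05, 0.0, 0.0, 0.0)   # k1..k5 of Eq. (2), in mm
M_U = M_V = 1.0 / 0.003            # pixels per mm (3-micron pixels)
U0, V0 = 640.0, 360.0              # principal point (u_0, v_0), in pixels


def camera_to_world_rotation(alpha):
    """Assumed convention: optical axis z_c tilted from the downward vertical towards
    north by alpha; x_c points east and y_c = z_c x x_c (image down).
    The columns are x_c, y_c, z_c expressed in world coordinates."""
    c, s = math.cos(alpha), math.sin(alpha)
    return [[1.0, 0.0, 0.0],
            [0.0, -c, s],
            [0.0, -s, -c]]


def project_ground_point(X, Y, h, alpha, k=K):
    """Ground point (X, Y, 0) -> pixel (u, v) for a camera at height h above (0, 0)."""
    R = camera_to_world_rotation(alpha)
    d = (X, Y, -h)  # camera-to-target vector in world coordinates
    # Camera coordinates p_c = R^T d, since R maps camera coordinates to world coordinates.
    xc, yc, zc = (sum(R[i][j] * d[i] for i in range(3)) for j in range(3))
    theta = math.atan2(math.hypot(xc, yc), zc)  # Eq. (5), via atan2 for numerical robustness
    phi = math.atan2(yc, xc)                    # Eq. (6)
    r = sum(ki * theta ** (2 * i + 1) for i, ki in enumerate(k))  # Eq. (2)
    x, y = r * math.cos(phi), r * math.sin(phi)                   # Eq. (7)
    return M_U * x + U0, M_V * y + V0                             # Eqs. (8) and (9)


alpha, h = math.radians(37.0), 20.0
u, v = project_ground_point(0.0, h * math.tan(alpha), h, alpha)  # point on the optical axis
```

Under these conventions the ground point hit by the optical axis lands on the principal point $(u_0, v_0)$ up to rounding, which is a quick sanity check for any chosen rotation matrix.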

III GROUND TARGET POSITIONING ALGORITHM

The target localization algorithm computes the coordinates of the ground target in the ground coordinate system from its image coordinates; that is, it inverts the projection process of Section II-B.

Fig.5 shows a FWAV named USTBird. Its sensors have measurement errors, and its camera suffers from jitter and motion blur during flight. When computing the target's coordinates in the ground coordinate system, we denote the camera angle by $\alpha$, the camera height by $h$, and the target's coordinates in image coordinate system II by $(u, v)$.

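Given $(u, v)$, inverting (1) gives the coordinates in image coordinate system I, $x = (u - u_0)/m_u$ and $y = (v - v_0)/m_v$. The distance $r$ from the image point to the image center and the angle $\varphi$ between that line and the x axis are then

$$r = \sqrt{x^2 + y^2} \tag{10}$$

$$\varphi = \operatorname{atan2}(y,\, x) \tag{11}$$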

Next, given the intrinsic parameters of the camera, the incidence angle $\theta$ is obtained from (2) by solving the ninth-degree polynomial equation $r(\theta) = r$. Given $\theta$ and $\varphi$, the unit vector along the incident ray in the camera coordinate system is

$$e_x = \sin\theta\cos\varphi \tag{12}$$

$$e_y = \sin\theta\sin\varphi \tag{13}$$

$$e_z = \cos\theta \tag{14}$$
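The inverse steps from a pixel to the incident ray can be sketched as below. Instead of computing all roots of the ninth-degree polynomial, this sketch swaps in bisection, which is valid only if $r(\theta)$ increases monotonically over the field of view; that holds for the hypothetical coefficients used here but should be checked for calibrated ones. The 75° half field of view is our reading of the 150° maximum camera angle in Section IV-A.

```python
import math

# Hypothetical intrinsics, for illustration only.
K = (1.8, -0.05, 0.0, 0.0, 0.0)      # k1..k5 of Eq. (2), mm
M_U = M_V = 1.0 / 0.003               # pixels per mm
U0, V0 = 640.0, 360.0                 # principal point, pixels
THETA_MAX = math.radians(75.0)        # assumed half of the 150-degree field of view


def radial_distance(theta, k=K):
    """Eq. (2)."""
    return sum(ki * theta ** (2 * i + 1) for i, ki in enumerate(k))


def solve_theta(r, k=K, theta_max=THETA_MAX, tol=1e-12):
    """Invert Eq. (2) by bisection; assumes r(theta) is increasing on [0, theta_max]."""
    lo, hi = 0.0, theta_max
    if not 0.0 <= r <= radial_distance(hi, k):
        raise ValueError("image point lies outside the modelled field of view")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if radial_distance(mid, k) < r:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def pixel_to_camera_ray(u, v, k=K):
    """Pixel (u, v) -> unit vector of the incident ray in camera coordinates."""
    x, y = (u - U0) / M_U, (v - V0) / M_V        # inverse of Eq. (1)
    r, phi = math.hypot(x, y), math.atan2(y, x)  # Eqs. (10) and (11)
    theta = solve_theta(r, k)
    return (math.sin(theta) * math.cos(phi),     # Eq. (12)
            math.sin(theta) * math.sin(phi),     # Eq. (13)
            math.cos(theta))                     # Eq. (14)


ray = pixel_to_camera_ray(900.0, 500.0)
```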

Then, according to (3), the rotation matrix from the camera coordinate system to the world coordinate system is

| (15) |

In addition, according to (4), we obtain the unit vector of the incident ray in the world coordinate system

| (16) |

Then, defined from this unit vector in the same way as $\theta$ and $\varphi$ in (5) and (6), the two angles of the incident ray in the world coordinate system are

| (17) |

| (18) |

From the above formulas, given the camera height $h$, we finally obtain the target's coordinates in the ground coordinate system:

| (19) |

| (20) |
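The final step, intersecting the incident ray with the ground, can be sketched as follows. As an assumption, the sketch reuses the hypothetical geometry of the forward-projection example (world X east, Y north, Z up; optical axis tilted from the downward vertical toward north by $\alpha$; flat ground). It rotates the camera-frame ray into the world frame, computes the ray's angle from the downward vertical and its azimuth, and places the target at horizontal distance $h\tan\theta_w$ along that azimuth. These conventions are ours and need not match the exact form of (15) to (20).

```python
import math


def camera_to_world_rotation(alpha):
    """Assumed (hypothetical) convention: world X east, Y north, Z up; the optical axis
    z_c is tilted from the downward vertical towards north by alpha, x_c points east,
    and y_c = z_c x x_c. The columns are x_c, y_c, z_c in world coordinates."""
    c, s = math.cos(alpha), math.sin(alpha)
    return [[1.0, 0.0, 0.0],
            [0.0, -c, s],
            [0.0, -s, -c]]


def ray_to_ground(ray_c, h, alpha):
    """Intersect a camera-frame unit ray with the flat ground Z = 0.

    ray_c: unit vector from Eqs. (12)-(14); h: camera height in m; alpha: camera angle
    in rad. Returns (X, Y) in m, relative to the ground point directly below the camera.
    """
    R = camera_to_world_rotation(alpha)
    # World-frame ray e_w = R e_c.
    ex, ey, ez = (sum(R[i][j] * ray_c[j] for j in range(3)) for i in range(3))
    if ez >= 0.0:
        raise ValueError("the ray does not hit the ground")
    theta_w = math.atan2(math.hypot(ex, ey), -ez)  # angle from the downward vertical
    phi_w = math.atan2(ey, ex)                     # azimuth, measured from east
    rho = h * math.tan(theta_w)                    # horizontal distance to the target
    return rho * math.cos(phi_w), rho * math.sin(phi_w)


alpha, h = math.radians(37.0), 20.0
X, Y = ray_to_ground((0.0, 0.0, 1.0), h, alpha)  # ray along the optical axis
```

For a ray along the optical axis, X is essentially zero and Y equals $h\tan 37^\circ \approx 15.07$ m, i.e. the target lies north of the point below the camera.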

IV SIMULATION EXPERIMENT

IV-A Simulation devices and configurations

To verify the feasibility and accuracy of the localization algorithm, we designed a set of comparative simulation experiments. The simulation code is written in Python 3.7 and run on a laptop with a 2.9 GHz CPU and 16 GB of memory under Windows 10.

Moreover, according to our real cameras, we set parameters of the generic camera model with , , , , and the maximum camera angle is 150°. After calibration, we set the parameters in the projection model with , , .

IV-B Simulation with noises

The errors come from sensor measurement and signal transmission: the angle error is approximately 0.1°, the height error introduced by the barometer is about 0.5 m, and the image detection error is about 4 pixels.

Moreover, the flapping motion of FWAVs causes periodic jitter of the fuselage during flight, which in turn causes camera jitter and motion blur. We therefore introduce Gaussian noise into the simulation experiment.

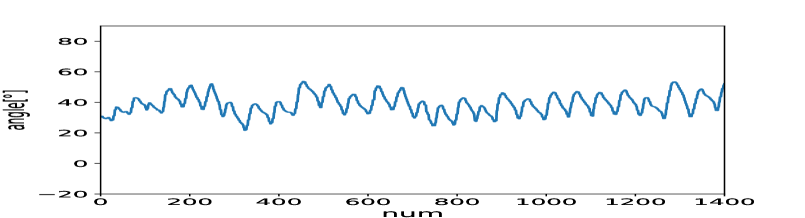

Fig.6 shows the pitch angle of USTBird during flight. The pitch fluctuates periodically around 37°, indicating that the angle of attack of USTBird is about 37°. We therefore draw the camera angle from a Gaussian distribution with a mean of 37° and a standard deviation of 0.1° (the angle sensor error level given above). For the height, zero-mean Gaussian noise with a standard deviation of 0.5 m (the barometer error level given above) is added.
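The noise model above can be sketched as follows. It draws the camera angle from a Gaussian with mean 37° and standard deviation 0.1°, and adds zero-mean Gaussian noise with standard deviation 0.5 m to a true height. The 20 m true height, the seed, and the function name are illustrative assumptions; the 4-pixel image detection error is not sampled, since only the height and the camera angle are perturbed in these experiments.

```python
import math
import random

ANGLE_MEAN_DEG = 37.0   # mean camera angle, from the pitch data in Fig.6
ANGLE_STD_DEG = 0.1     # angle sensor error level
HEIGHT_STD_M = 0.5      # barometer error level
TRUE_HEIGHT_M = 20.0    # hypothetical flight height


def sample_measurements(n, rng, true_height=TRUE_HEIGHT_M):
    """Draw n noisy (camera angle in rad, height in m) pairs."""
    samples = []
    for _ in range(n):
        alpha = math.radians(rng.gauss(ANGLE_MEAN_DEG, ANGLE_STD_DEG))
        h = true_height + rng.gauss(0.0, HEIGHT_STD_M)
        samples.append((alpha, h))
    return samples


rng = random.Random(0)                        # fixed seed for a reproducible run
measurements = sample_measurements(90, rng)   # 90 localization runs, as in Fig.7 and Fig.8
```

Each pair would then be fed, together with the target's pixel coordinates, through the localization steps of Section III.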

In the simulation experiment, we fix a target point in the world coordinate system and then compute its coordinates repeatedly with our localization algorithm.

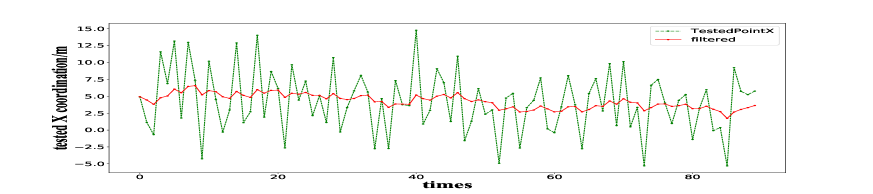

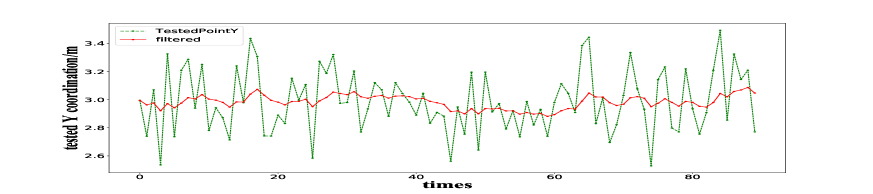

Specifically, the X and Y coordinates are each computed 90 times, and the results are shown as dotted green lines in Fig.7 and Fig.8.

Because noise enters through both the measurements and the motion jitter, neither the X nor the Y curve is smooth, and some points deviate with a very large amplitude. A filter is therefore needed to stabilize the localization values.

IV-C First-order low-pass filter

The first-order low-pass filtering algorithm originates from the first-order low-pass filter circuit, which is used to eliminate superimposed high-frequency interference [20]. We therefore use a first-order low-pass filter to stabilize the localization values affected by the high-frequency noise caused by measurement errors and motion jitter.

The first-order low-pass filter is given by

$$\hat{s}_n = a\,s_n + (1 - a)\,\hat{s}_{n-1} \tag{21}$$

where $s_n$ is the $n$th sample, $\hat{s}_n$ is the $n$th filter output, $\hat{s}_{n-1}$ is the $(n-1)$th filter output, and $a \in (0, 1]$ is the filtering coefficient [21].
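Equation (21) is a discrete exponential smoothing filter. The sketch below implements it and, as a sanity check of our own rather than the paper's, compares the variance reduction on synthetic white noise with the closed-form steady-state value: for uncorrelated input samples of variance $\sigma^2$, the output variance is $a^2\sigma^2/(1-(1-a)^2) = a\sigma^2/(2-a)$, about $0.067\sigma^2$ for $a = 0.125$. The initial filter state and the synthetic data are assumptions.

```python
import random
import statistics


def first_order_lowpass(samples, a, initial=None):
    """First-order low-pass filter of Eq. (21): out[n] = a*samples[n] + (1 - a)*out[n-1].

    The state starts at the first sample unless `initial` is given; the source does
    not specify the initial value, so this choice is an assumption.
    """
    if not 0.0 < a <= 1.0:
        raise ValueError("the filtering coefficient must lie in (0, 1]")
    if not samples:
        return []
    state = samples[0] if initial is None else initial
    out = []
    for s in samples:
        state = a * s + (1.0 - a) * state
        out.append(state)
    return out


# White-noise check: the steady-state variance ratio should be close to a / (2 - a).
rng = random.Random(1)
noisy = [4.0 + rng.gauss(0.0, 4.0) for _ in range(20000)]
smoothed = first_order_lowpass(noisy, a=0.125)
ratio = statistics.variance(smoothed[200:]) / statistics.variance(noisy)
```

Smaller values of $a$ smooth more strongly but make the output lag further behind genuine changes in the target position, so the coefficient trades noise suppression against responsiveness.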

After testing the filtering coefficient many times, we chose $a = 0.125$, which gave a good filtering effect. The filtered results are shown as red lines in Fig.7 and Fig.8, and the statistics are listed in Table I.

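Table I: Mean and variance of the computed coordinates before and after filtering

| Coordinate | Mean, original (m) | Mean, filtered (m) | Variance, original (m²) | Variance, filtered (m²) |
|---|---|---|---|---|
| X | 4.54 | 4.11 | 18.76 | 1.24 |
| Y | 3.46 | 3.17 | 15.74 | 2.51 |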

As shown in Table I, after filtering the mean values are closer to the set point, and the variance drops from 18.76 to 1.24 for X and from 15.74 to 2.51 for Y. The filter thus suppresses the high-frequency interference and stabilizes the localization values near the set position. In short, the simulation results show that the localization algorithm and the filter perform well.

V CONCLUSION

In this paper, we developed a vision-based target localization algorithm for FWAVs. We first introduced five coordinate systems and the generic camera model, and based on that camera model, we presented a target localization algorithm. We then carried out simulation experiments in which, to account for sensor measurement errors and fuselage jitter during flight, Gaussian noise was added to the camera height and the camera angle. In addition, we used a first-order low-pass filter to stabilize the computed localization values affected by the noise. The simulation results show that the target localization algorithm performs well and that the filter greatly improves the stability of the results. In the future, we will evaluate the performance of the proposed ground target localization algorithm in real experiments.

References

- [1] W. He, X. X. Mu, L. Zhang, and Y. Zou, “Modeling and trajectory tracking control for flapping-wing micro aerial vehicles,” IEEE/CAA Journal of Automatica Sinica, vol. 8, no. 1, pp. 148–156, 2021.

- [2] Z. Yin, W. He, Y. Zou, X. X. Mu, and C. Y. Sun, “Efficient formation of flapping-wing aerial vehicles based on wild geese queue effect,” Acta Automatica Sinica, vol. 46, pp. 1–13, 2020.

- [3] Q. Fu, X. Y. Chen, Z. L. Zheng, Q. Li, and W. He, “Research progress on visual perception system of bionic flapping-wing aerial vehicles,” Chinese Journal of Engineering, vol. 41, no. 12, pp. 1512–1519, 2019.

- [4] M. A. Graule, P. Chirarattananon, S. B. Fuller, N. T. Jafferis, K. Y. Ma, M. Spenko, R. Kornbluh, and R. J. Wood, “Perching and takeoff of a robotic insect on overhangs using switchable electrostatic adhesion,” Science, vol. 352, no. 6288, pp. 978–982, 2016.

- [5] F. Y. Hsiao, L. J. Yang, S. H. Lin, C. L. Chen, and J. F. Shen, “Autopilots for ultra lightweight robotic birds: Automatic altitude control and system integration of a sub-10 g weight flapping-wing micro air vehicle,” IEEE Control Systems Magazine, vol. 32, no. 5, pp. 35–48, 2012.

- [6] E. Pan, X. Liang, and W. Xu, “Development of vision stabilizing system for a large-scale flapping-wing robotic bird,” IEEE Sensors Journal, vol. 20, no. 99, pp. 8017–8028, 2020.

- [7] J. Pietruha, K. Sibilski, M. Lasek, and M. Zlocka, “Analogies between rotary and flapping wings from control theory point of view,” in AIAA Atmospheric Flight Mechanics Conference and Exhibit, 2001.

- [8] C. S. Yuan, Y. Z. Li, and J. Tan, “Investigation in flight control system of flapping-wing micro air vehicles,” Computer Measurement and Control, vol. 19, no. 7, pp. 1527–1529, 2011.

- [9] Q. Fu, J. Wang, L. Gong, J. Y. Wang, and W. He, “Obstacle avoidance of flapping-wing air vehicles based on optical flow and fuzzy control,” Transactions of Nanjing University of Aeronautics and Astronautics, vol. 38, no. 2, pp. 206–215, 2021.

- [10] A. Ramezani, X. Shi, S. J. Chung, and S. Hutchinson, “Bat Bot (B2), a biologically inspired flying machine,” in IEEE International Conference on Robotics and Automation, 2016.

- [11] M. Keennon, K. Klingebiel, and H. Won, “Development of the nano hummingbird: A tailless flapping wing micro air vehicle,” in 50th AIAA Aerospace Sciences Meeting including the New Horizons Forum and Aerospace Exposition, 2012.

- [12] W. Yang, L. Wang, and B. Song, “Dove: A biomimetic flapping-wing micro air vehicle,” International Journal of Micro Air Vehicles, vol. 10, no. 1, pp. 70–84, 2018.

- [13] A. A. Paranjape, S. J. Chung, and J. Kim, “Novel dihedral-based control of flapping-wing aircraft with application to perching,” IEEE Transactions on Robotics, vol. 29, no. 5, pp. 1071–1084, 2013.

- [14] G. C. H. E. de Croon, M. Perçin, B. D. W. Remes, R. Ruijsink, and C. de Wagter, The DelFly: Design, Aerodynamics, and Artificial Intelligence of a Flapping Wing Robot, Springer Publishing Company, Incorporated, 2015.

- [15] S. S. Baek, F. L. G. Bermudez, and R. S. Fearing, “Flight control for target seeking by 13 gram ornithopter,” in 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, 2011.

- [16] Z. Zhang, B. Han, P. Li, F. Zhou, and W. Xu, “Small unmanned aerial vehicle visual system for ground moving target positioning,” in International Conference on Automatic Control and Artificial Intelligence, 2012.

- [17] W. Han, J. Wang, N. Wang, G. Sun, and D. He, “A method of ground target positioning by observing radio pulsars,” Experimental Astronomy, vol. 49, no. 1, pp. 43–60, 2020.

- [18] J. Kannala and S. S. Brandt, “A generic camera model and calibration method for conventional, wide-angle, and fish-eye lenses,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 28, no. 8, pp. 1335–1340, 2006.

- [19] Q. Fu, Y. H. Yang, X. Y. Chen, and Y. L. Shang, “Vision-based obstacle avoidance for flapping-wing aerial vehicles,” Science China Information Sciences, vol. 63, no. 7, pp. 170208, 2020.

- [20] Y. Wang, Z. Chen, M. Sun, and Q. Sun, “LADRC-Smith controller design and parameters analysis for first-order inertial systems with large time delay,” in 2018 IEEE 7th Data Driven Control and Learning Systems Conference (DDCLS), 2018.

- [21] Y. Wang, D. Y. Sun, and Z. G. Qi, “Aging simulation method for EFI engine oxygen sensor based on first order inertial filter algorithm,” Jilin Daxue Xuebao (Gongxueban) /Journal of Jilin University (Engineering and Technology Edition), vol. 47, no. 4, pp. 1040–1047, 2017.