VINet: Visual and Inertial-based Terrain Classification and Adaptive Navigation over Unknown Terrain

Abstract

We present a visual and inertial-based terrain classification network (VINet) for robotic navigation over different traversable surfaces. We use a novel navigation-based labeling scheme for terrain classification and generalization on unknown surfaces. Our proposed perception method and adaptive scheduling control framework can make predictions according to terrain navigation properties and lead to better performance on both terrain classification and navigation control on known and unknown surfaces. Our VINet can achieve in terms of accuracy under supervised setting on known terrains and improve the accuracy by on unknown terrains compared to previous methods. We deploy VINet on a mobile tracked robot for trajectory following and navigation on different terrains, and we demonstrate an improvement of compared to a baseline controller in terms of RMSE.

I Introduction

The problems of perception and navigation have been extensively explored individually in the past. The perception tasks, including detection [1], segmentation [2, 3], classifications [4, 5], etc., can obtain very good overall accuracy running on challenging offline data sets [6, 7], which are clean and well-annotated. For mobile robot navigation in the outdoor environment, efficient perception methods such as terrain traversability analysis [8] have been proposed. Meanwhile, many control policies have been developed for mobile wheel or tracked robots to navigate on different terrains [9, 10].

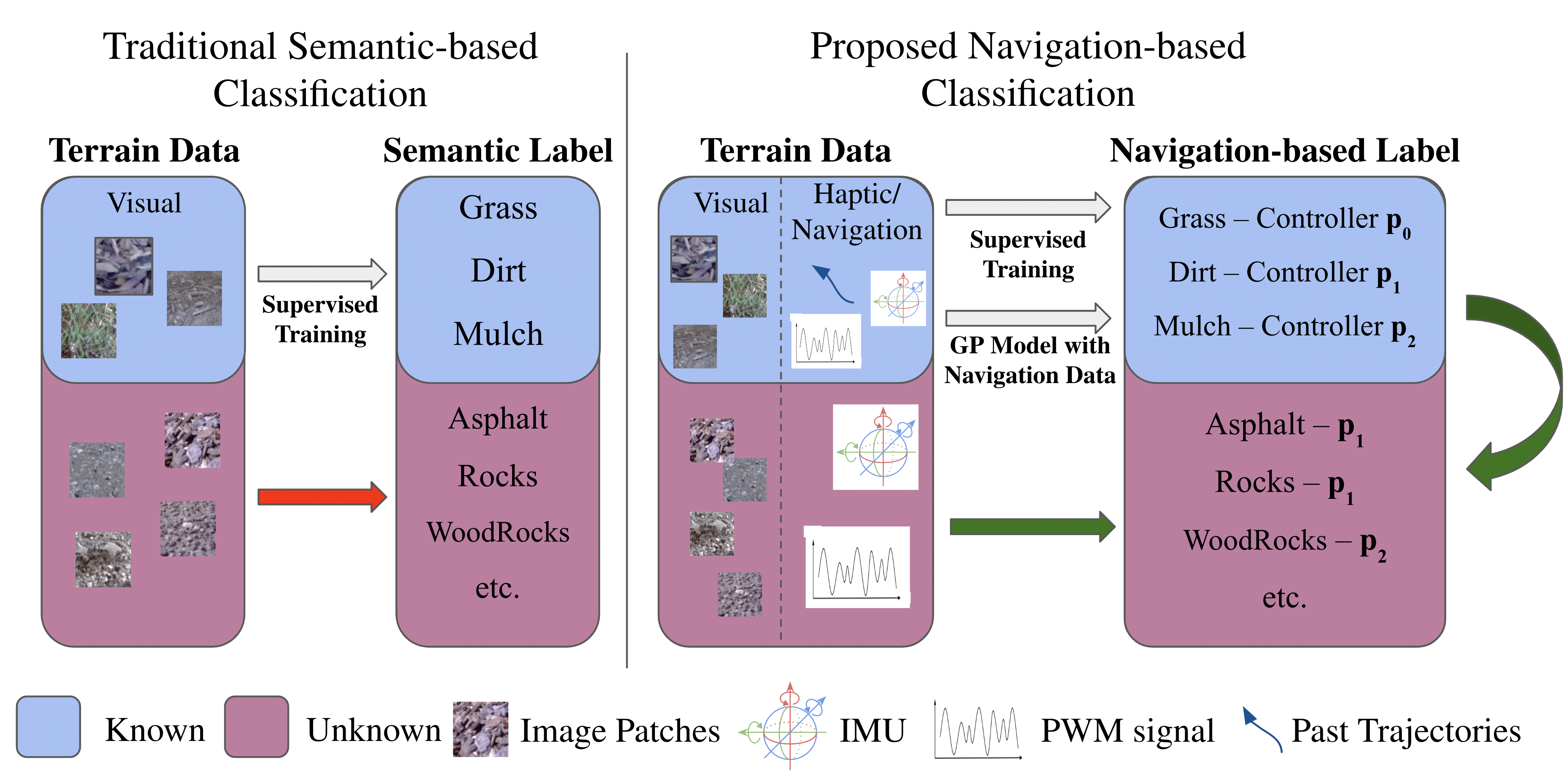

Perception and navigation problems, however, are rarely considered as a whole. Many sophisticated perception models confine the task and problem settings for standard bench-marking and fair comparisons. To truly benefit some downstream tasks like navigation and control, we need to reconsider the definition and context of the perception algorithms that are the same as those in standard benchmarks. For example, categories defined in a traditional classification or semantic segmentation task might not be used directly in navigation settings, since we need a mapping between the original categories and traversability of the terrain. There are some works that focus on robotic perception for terrain understanding [11, 12] and traversability analysis [13, 8], but most of those definitions are hand-crafted and heuristic-based. Instead, metrics based on navigation could provide insight on how to define such mapping or labeling scheme.

Perception for outdoor navigation is challenging, especially in an environment that is unknown and constantly changing with uncertainty. The perception model needs to generalize to unseen terrain well since it’s not possible to collect information and label all surfaces. In the context of navigation, unlike a traditional perception task, the perception algorithms should be trained in a way that maximizes the navigation performance and evaluated according to some navigation-related metrics, which is rarely explored in the past. The perception needs to be coupled with navigation and output the most useful result that can lead to stable and safe decision for the navigation and control modules.

In this paper, we propose VINet, a novel terrain classification network that combines both visual and inertial sensing through camera and Inertial Measurement Unit (IMU). Our method can work well on known terrains as well as completely unseen surfaces. There are two streams in the network: 1) an image stream that provides robust predictions on the training set and seen surfaces, and 2) an IMU temporal stream that can extract features of unseen terrains through inertial sensing and generalize to unknown classes. As shown in Figure 1, we used a navigation-based perception metric to measure the performance of our network for unseen terrains, which maximizes the performance of navigation. In addition, we propose a novel adaptive scheduling control framework and show that this pipeline with the proposed VINet leads to better navigation performance on both known and unknown terrain.

The key contributions of our work include:

-

1.

We propose a novel terrain classification network which fuses visual and inertial features of the terrain. Our method can achieve in terms of accuracy under the supervised setting and lead to an increase of under the unsupervised setting compared to previous methods.

-

2.

We propose a novel fusion scheme, which closes the latent embeddings between image and inertial data, and leverages those two modalities channel-wise. We also introduce an IMU denoising strategy to process noisy IMU data and further boost the classification performance.

-

3.

We deploy VINet on a tracked robot and propose a novel adaptive scheduling control framework enabled by VINet with navigation-based label generalization. Our navigation performance outperforms a baseline single terrain controller by in terms of RMSE.

II Related Work

II-A Terrain Classification

In [14], Xue et al. proposed an orderless-texture-based and spatial information-targeted neural network. [15] used both 3D LiDAR and camera to classify terrain by computing a 3D-scan feature that overcomes the issue in different lighting conditions. Similarly, Nguyen [16] introduced a structure-based method as well as estimating the surface smoothness to classify different roughness surface objects. [17] proposed an angular imaging method to distinguish different material types.

In addition, some methods try to solve the classification problem with inertial sensing. The earlier method like [18] utilizes a dynamic model to estimate the terrain profile and further label the terrain based on the spatial frequency part of the profile predicted. Likewise, [19] adopts the same frequency analysis to classify the terrain by filtering out the terrain impulses from the identified terrain profile. [20] tries to reveal the terrain types using the inertial data collected from the human walk. Recent work [21] proposes several deep learning methods for classifying the time-series IMU data on top of the engineered features.

II-B Navigation on different Terrain

Indoor navigation has been well developed in the past decade [22, 23, 24], but it still remains a challenge in the outdoor environment [25, 26, 27], especially on different terrains [28, 29]. Kumar et al. [28] investigate adaptive control strategies to enable a quadruped robot to walk on different material terrains, such as sand, mud, grass, and dirt. The wheeled robot or tracked robot [30] is another type of platform that is used in terrain navigation. [31, 32] and [33] cover the topics of unstructured environment modeling and controller design from the angle of the tracked robot. As mentioned in [28], the adaptive control policy enables the legged robot to quickly recover stability from falling, which poses a key factor towards the success of navigating in dynamics-changing environments of different terrain. Such related learning-based adaptive controller methods have been discussed in [34, 35, 36].

III Method

In this section, we present VINet, a novel terrain classification method for adaptive navigation and control. Our method combines visual and inertial information to make a classification prediction on different types of terrains and can classify known and unknown terrains categorized by navigation metrics. We later show that our network can directly benefit decision-making for control and navigation, even on terrains that the network has never seen before.

The rest of the sections are structured as follows: We begin by defining our problem settings and each component of our network, including backbones, IMU denoising, classification head, and loss functions. We move on to our implementation of adaptive scheduling control and the entire framework for navigation. Finally, we give some insights into the designs, including the navigation-based labeling and using IMU data as input for the classification task.

III-A Problem Definition and Terminology

The inputs of the network consist of an image patch taken along the intended trajectory of the mobile robot from the RGB camera, a sequence of 6 dimensional IMU data with three linear accelerations and three angular velocities for each axis, and a pulse-width modulation (PWM) signal , where is the number of motors relating to navigation and each dimension represents the command sent to a specific motor. The task of our method is to utilize visual and inertial perception sensors on a mobile robot for terrain classification. Our model will output a terrain category , where is the number of terrain types. In our setting, we only focus on surfaces that are navigable with different textures and physical properties and try to reduce the trajectory-following errors during navigation. Avoiding non-traversable surfaces like water or large rocks is not the point of the paper.

let be the set of known terrains, and the set of unknown terrains be the complement of . Given several pre-defined trajectories on surface , we define as the mean squared error of the controller trying to follow those trajectories on surface . From the set of known surfaces , we can obtain a set of trained controller , and we have

where is the controller pre-trained on the known surface . The ground truth category of surface is defined as follows:

|

|

(1) |

In our setting, known terrains are surfaces on which our model has training data, so the perception model and nonlinear model predictive controller (NMPC) can be trained or fine-tuned on those surfaces; unknown terrains are ones that our model has never encountered during training. The ground truth categories of the terrain are defined by the characteristics of the surfaces during navigation, or specifically, by the type of controllers that has the best performance on such terrain. For known terrain, we collected image, IMU, state of the tracked robot, planned trajectories, and actual trajectories to train the perception model and controller; for unknown terrain, we collected perception data as well as the performance of each controller pre-trained on the known surfaces according to definition 1, for the purpose of evaluation.

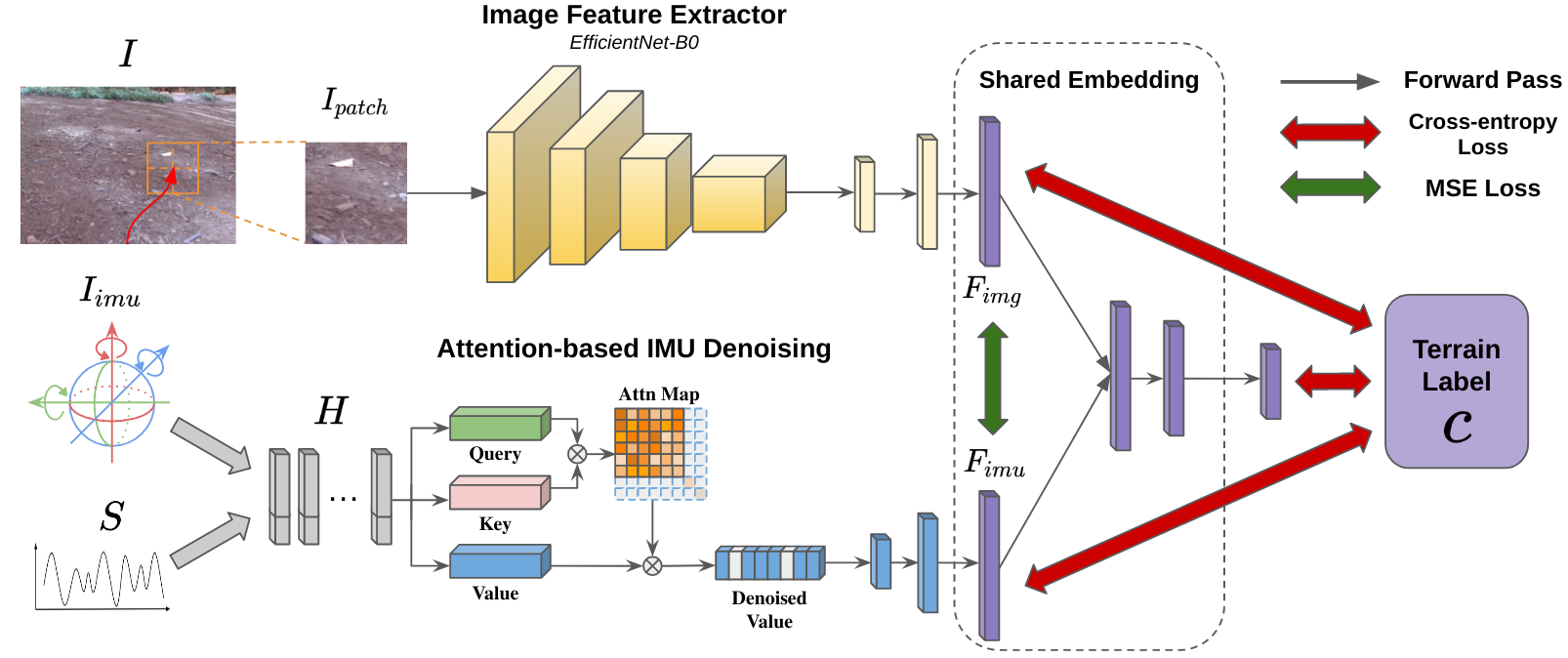

III-B Image and IMU Backbone

The proposed VINet consists of one image stream and one IMU stream, as shown in Figure 2. For the image stream, we adopted EfficientNet-B0 [37] as our image feature extractor. Given an image patch , the image branch takes a series of convolution operations with kernel size of 3 and 5, and eventually down-sampled to 1280 channels with spatial resolution of . After flattening and two linear layers with batch normalization and drop-out, we can obtain an intermediate feature , where is the shared dimension between and IMU feature .

Considering the effect of actions on the IMU pattern, our IMU stream takes an IMU sequence and the corresponding PWM signal for some time duration . Given the raw input sequence , the output feature is computed as:

| (2) |

where represent the key, query, and value feature, respectively, as in the self-attention [38] literature. Before the features are passed into the classification head, we process the feature with the IMU denoising module.

III-C IMU Denoising

Due to the noisy nature of IMU data, we introduce an attention-based denoising method to sample useful features in the time dimension. The attention map is defined as , and we can calculate the denoised IMU feature as:

| (3) |

where is the sampling rate, and sort the value in increasing order and returns the corresponding indices. This step removes the features at some specific timestamp with a low attention value for denoising purposes. After passing through a few linear layers with batch normalization and flattening, we can obtain an IMU feature .

III-D Classification Head in Shared Embedding Space

After going through the image and IMU stream, we can obtain and with dimension . We used a learnable weight for each channel of the features to fuse and . After several fully connected layers and activation, the network will output the vector with a classification score.

In the classification head, we want to train the network to learn a shared embedding space for and , which is achieved by adding a similarity constraint in the network during training. The purpose of creating a shared embedding space is to alleviate the effect of one feature taking over the other. In addition, we want to add some robustness to the network: if the data from one branch is corrupted and produced a terrible feature, it would not significantly affect and corrupt the classification head because the other branch could have some effect of averaging the corrupted features back to the shared embedding space.

III-E Loss Function

Mean-Squared-Error Loss: To create a shared embedding space for and , we penalize the network for extracting significantly different feature vectors from different modalities of the same data point. The MSE loss is defined as follows:

| (4) |

Cross-Entropy Loss: This is a standard loss for the classification task, defined as follows:

| (5) |

where denotes the set of known terrain, denotes the output probability map corresponding to terrain , and corresponds to the ground-truth label. We use this loss on each branch of the network as well as the final classification head.

III-F Adaptive Scheduling Control Framework

In this subsection, we give more details of our controller and present our adaptive scheduling control framework with VINet on known and unknown terrain.

Nonlinear Model Predictive Controller: The overall goal of the controller is to closely follow the planned trajectory, which is significantly affected by the terrain type. For known terrains, we collected some navigation data and use those to fit a nonlinear model predictive controller (NMPC) and a Gaussian Process (GP) model to learn the interaction between the tracks and the terrain. The NMPC trajectory tracking control problem at time instance can be formulated as follows:

| (6) | ||||

where is the prediction horizon. and denote the predicted values of the model state and input, respectively, at time based on the information that is available at time . is included in the cost function to smooth the control output. The state vector is , where and are the 2D Cartesian coordinates of the robot, and is the heading. The control output vector is composed of the linear and angular speed commands of the robot. The nonlinear prediction model can be described by the following equations:

| (7) |

The output of the NMPC, , is then mapped to the PWM signals controlling the two motors driving each track of the robot. The terrain track interaction, caused by terrain resistance and deformation, is captured by the GP model: . The GP models provide the required for each terrain, so that the actual linear and angular speeds match with the desired calculated by the NMPC. Since is two-dimensional, corresponding to the left and right track motors, two one-dimensional GP models are fitted for each known terrain. The Radial Basis Function (RBF) kernel is used in the GP models:

| (8) |

where is the diagonal length scale matrix and , represent the data and prior noise variance, and , represent data features.

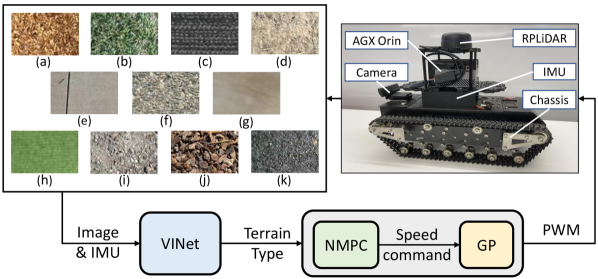

Adaptive Scheduling Control: As shown in Figure 3, we deploy VINet on a tracked robot, and our model can use visual and inertial feedback during navigation to predict a terrain class, which corresponds to the pre-trained controller and would have the best performance on this terrain if the prediction is correct. Once the robot receives the prediction, it will adjust to the suitable controller on this terrain.

For unknown terrain, our classifier would still choose one of the categories in the original label set since our classifier is only trained on known surfaces. Because of the introduction of IMU as inertial feedback and the terrain label assignment measured by the navigation feedback from the controller, VINet can make a prediction based more on generalized navigation features instead of purely semantic labels, leading to a better choice of the controller on an unknown surface. For evaluation of perception, we find a few unknown terrains and test our pre-trained controller to assign those terrains to one of our pre-trained controllers, as defined in Equation 1.

III-G Motivation for Using IMU data and Navigation-based Labeling Scheme

While the image-based classifier can achieve a good result on standardized classification benchmarks, it does not directly translate to good performance in navigation. First, the categories in standard data sets are usually semantic-based; on the other hand, it’s less intuitive to give a fair traversability or material label that is useful for navigation, and most existing practices are usually heuristic-based [12, 13] for benchmarking purposes.

Second, a purely vision-based method could easily overfit the known data and makes it harder to generalize to more unknown terrains. For example, grass and artificial grass might be visually similar, but they might have different hardness and slipperiness. Because of such difference, the controller fitted with data navigating over grass might lead to worse navigation on the artificial grass because the classifier could not distinguish their navigation features by only relying on the visual difference.

To solve those issues, we eliminate the heuristics in the labeling by using some pre-trained controllers as labels for the terrain, on which the controller has the best performance. The labeling becomes navigation-based instead of semantic-based: once our model is trained according to this labeling scheme and the model can predict correctly, the output would choose the best controller to use while navigating on the corresponding surface, which could directly benefit the navigation. Through navigation-based labeling, the unknown terrain will be predicted as one of the known terrains and the interpretation is that those two types of terrains could have similar navigation features due to some visual textures. The output could still be random and meaningless sometimes unless we have some feedback during the navigation. To improve the Interpretability and generalizability of the output, we add an IMU sequence as input during navigation, in addition to the visual input.

By introducing the IMU, we want to improve the model’s ability to generalize on unseen terrains. The model can build connections between the extracted features from inertial sensing of IMU and the navigation features of the terrain, so the network can have less bias due to over-fitting on the images. Because all of the terrain labels are based on navigation data from known terrains, the prediction output is optimized with respect to the IMU and Image input. In Section IV, we validate our statement by showing comparisons with and without IMU as input to the network.

IV Implementation Details and Analysis

IV-A Data Collection and Controller Pre-training

We collected 4923 images of size among 11 different terrain types and the corresponding inertial sequences at 30 Hz. The 11 terrain types include black carpet, green carpet, smooth tiles, concrete, grass, soil, mulch, wood rocks, rocky surface, asphalt, and small rocks.

Gaussian Process models used in the tracking controller are fitted by the collected data on each known terrain, including the PWM signal and the corresponding robot’s linear and angular speeds calculated based on the robot localization output. For each terrain, about 1500 data pairs were collected and used to train the GP model. Then, the NMPC controller is tuned manually on each terrain type to further optimize the trajectory tracking performance on each known type. For the purpose of evaluation, we find some other surfaces as unknown terrains and evaluate those pre-trained controllers on such terrains to get the best controller as its navigation-based label. Due to limited space, we show the details of each terrain class and evaluated trajectories in the full report [39].

IV-B Implementation Details

We evaluate our network with the collected data as described in IV-A. We use the Adam optimizer with a learning rate of 0.0003 and exponential decay of 0.95. All experiments are conducted on a single GeForce RTX 2080 Ti GPU and trained with a batch size of 128 for 200 epochs. We sample a patch of size along the expected trajectory based on the current velocity and heading, and extract the corresponding IMU while the robot navigates near that image patch region. For all experiments, we set the length of the IMU sequence to be 60, and the sampling rate of the IMU sequence to be . We choose the dimension of the shared embedding space to be 512 and 256 in our experiments. For evaluation, we use the averaged accuracy, which is calculated as:

| (9) |

IV-C Comparisons and Ablation Study

In this section, we show some quantitative comparisons under different settings. For comparison methods, we used a recent method [40], which is based on SVM with principle component analysis. We implement this method according to the paper since code is not available.

| Modality | IMG Backbone | Pre-training | IMU Dims | Shared Dims | Accuracy |

| SVM-based [40] | - | - | - | - | 0.0282 |

| IMU Branch | - | - | 6 | 512 | 0.4227 |

| - | - | 6 | 256 | 0.4629 | |

| IMU Branch | - | - | 8 | 512 | 0.5072 |

| - | - | 8 | 256 | 0.5175 | |

| IMG Branch | EfficientNet-b0 | ✗ | - | 512 | 0.4227 |

| EfficientNet-b0 | ✗ | - | 256 | 0.4198 | |

| EfficientNet-b0 | ✓ | - | 512 | 0.6297 | |

| EfficientNet-b0 | ✓ | - | 256 | 0.6797 | |

| IMG + IMU (VINet) | EfficientNet-b0 | ✗ | 6 | 512 | 0.5486 |

| EfficientNet-b0 | ✗ | 6 | 256 | 0.5595 | |

| EfficientNet-b0 | ✓ | 6 | 512 | 0.6642 | |

| EfficientNet-b0 | ✓ | 6 | 256 | 0.6809 | |

| IMG + IMU (VINet) | EfficientNet-b0 | ✗ | 8 | 512 | 0.5532 |

| EfficientNet-b0 | ✗ | 8 | 256 | 0.7171 | |

| EfficientNet-b0 | ✓ | 8 | 512 | 0.6786 | |

| EfficientNet-b0 | ✓ | 8 | 256 | 0.7648 |

5 known surfaces and 6 unknown surfaces: In Table I, we show the performance of several models with different parameters. The five known terrains are black carpet, concrete, grass, soil, and mulch. The IMU-only and IMG-only models are directly separated from each branch of the proposed VINet. We can see that utilizing both branches would have better performance than using either one of the branches. For the image backbone, using models pre-trained on ImageNet [6] can also boost the performance, but our method still has the best performance with or without pre-training. In addition, we discover that adding the PWM signal command to the original 6-axis IMU data can greatly improve the classification accuracy for the IMU branch. Please refer to Equ. 1 for the definition of ground truth labels of unknown surfaces.

11 known surfaces and no unknown surfaces: To illustrate the performance of VINet on traditional terrain classifications task, we consider all 11 surface types as known and split the types evenly to training and testing according to 7:3 ratio. As shown in Table II, the performance of VINet goes up to with pre-training.

Ablation study: In Table III, we consider the effect of each component of our proposed network. We can see that removing any one of the components would lead to a performance drop, especially when the shared dimension is 256. In addition, we discover that for most of the models, except for the one without any inter. CE loss and shared embedding, using 256 for the shared embedding dimensions leads to overall better performance, especially with all components, even though the number of parameters is less.

| Modality | IMG Backbone | Pre-training | Shared Dims | Accuracy |

| SVM-based [40] | - | - | - | 0.5943 |

| IMU Branch | - | - | 512 | 0.7109 |

| - | - | 256 | 0.729 | |

| IMG Branch | EfficientNet-b0 | ✗ | 512 | 0.5481 |

| EfficientNet-b0 | ✗ | 256 | 0.6143 | |

| EfficientNet-b0 | ✓ | 512 | 0.9026 | |

| EfficientNet-b0 | ✓ | 256 | 0.8979 | |

| IMG + IMU (VINet) | EfficientNet-b0 | ✗ | 512 | 0.779 |

| EfficientNet-b0 | ✗ | 256 | 0.8348 | |

| EfficientNet-b0 | ✓ | 512 | 0.9692 | |

| EfficientNet-b0 | ✓ | 256 | 0.9837 |

| Inter. CE Loss | Shared Embedding | IMU Denoising | Learned Weighting | Shared Dims | Accuracy |

| ✗ | ✗ | ✓ | ✓ | 512 | 0.6803 |

| ✗ | ✗ | ✓ | ✓ | 256 | 0.6711 |

| ✓ | ✗ | ✓ | ✓ | 512 | 0.6578 |

| ✓ | ✗ | ✓ | ✓ | 256 | 0.6728 |

| ✓ | ✓ | ✗ | ✓ | 512 | 0.6515 |

| ✓ | ✓ | ✗ | ✓ | 256 | 0.6561 |

| ✓ | ✓ | ✓ | ✗ | 512 | 0.6619 |

| ✓ | ✓ | ✓ | ✗ | 256 | 0.6912 |

| ✓ | ✓ | ✓ | ✓ | 512 | 0.6786 |

| ✓ | ✓ | ✓ | ✓ | 256 | 0.7648 |

IV-D Navigation With Adaptive Control

We use a tracked robot to evaluate the performance of the method, as shown in Figure 3. The external dimension of the robot is , and the track width is . The tracked robot has a 12V DC motor with 5 kg-cm rated torque specs on each side of the robot and it can carry a maximum payload of 30kg. We equipped the robot with a NVIDIA Jetson AGX Orin for processing, a single-line LiDAR (RPLiDAR-A3) for navigation, a 1080p camera (Logitech C920) for terrain image capturing, and an IMU (3DM-GX5-25) for motion data recording. All computations including data processing, inference and control are computed on the edge.

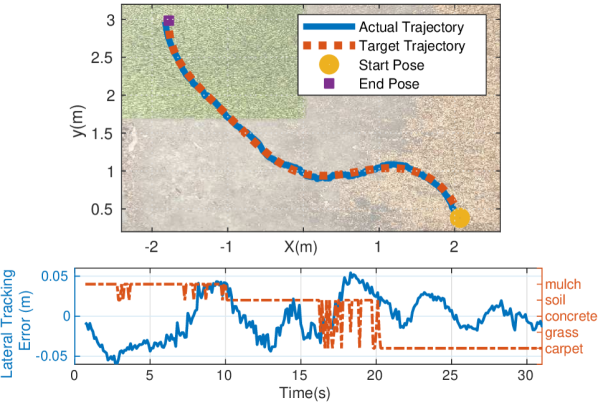

As shown in Figure 3, the adaptive tracking controller receives the terrain type from the VINet and switches to the corresponding controller in real-time. We conducted multiple test cases with the adaptive controller with the same trajectories covering three different terrains, including mulch, soil as known surfaces, and artificial grass as unknown surfaces, as shown in Figure 4. For comparison, the baseline controller does not receive the terrain type output from VINet and thus uses only one controller on all three types of terrains. In the test, we choose the carpet and mulch controllers as the baseline controllers. Figure 4 shows a sample trajectory tracking test case with the adaptive controller.

The tracking error of the adaptive and the baseline controllers are shown in Table IV. Our proposed VINet-based adaptive control framework has the best performance in terms of the root-mean-square error (RMSE) between the actual and target trajectories.

| Adaptive | Carpet baseline | Mulch baseline | ||

|

0.026 | 0.050 | 0.029 |

V Conclusions, Limitations, and Future Works

In this paper, we present a novel terrain classification method VINet for terrain classification and adaptive navigation control. We propose a novel IMU denoising module and a shared latent embedding space for image and IMU sequence. Our proposed navigation-based labeling and generalization can enable safe and stable navigation on unknown terrain, which is more intuitive and beneficial for navigation tasks compared to traditional semantic labels.

There are some limitations. For example, we did not extensively test our adaptive framework on more unknown terrains in a single run, since it’s difficult to find multiple terrains on a single site. In the future, we want to fully exploit the controller design to better utilize the navigation-based classification scheme, and also combine it with other functionalities like traversability analysis and obstacle avoidance.

APPENDIX

VI More details on terrain classes

In Table V, we give more details on the assignment of navigation-based labels for unknown classes based on known classes. On each type of unknown terrain, we sample trajectories for each different controller as measurement for label assignment. In particular, we give an example of assignment of rocky surface in Table VI. We also show one sampled trajectory for each controller on rocky surface in Figure 5.

|

|

|

||||||

|---|---|---|---|---|---|---|---|---|

| Black Carpet | Known | - Carpet | ||||||

| Concrete | Known | - Concrete | ||||||

| Grass | Known | - Grass | ||||||

| Soil | Known | - Soil | ||||||

| Mulch | Known | - Mulch | ||||||

| Green Carpet | Unknown | - Carpet | ||||||

| Rocky Surface | Unknown | - Concrete | ||||||

| Wood Rocks | Unknown | - Mulch | ||||||

| Asphalt | Unknown | - Soil | ||||||

| Small Rocks | Unknown | - Soil | ||||||

| Smooth Tiles | Unknown | - Concrete | ||||||

| Artificial Grass* | Unknown | - Carpet |

| Controller | Overall | Longtitude | Latitude |

|---|---|---|---|

| Type | RMSE | RMSE | RMSE |

| - Carpet | 0.045516 | 0.034005 | 0.030255 |

| - Concrete | 0.032945 | 0.031562 | 0.009446 |

| - Grass | 0.175690 | 0.077231 | 0.157800 |

| - Soil | 0.045092 | 0.037987 | 0.024296 |

| - Mulch | 0.066245 | 0.061325 | 0.025052 |

References

- [1] Z. Liu, H. Hu, Y. Lin, Z. Yao, Z. Xie, Y. Wei, J. Ning, Y. Cao, Z. Zhang, L. Dong, F. Wei, and B. Guo, “Swin transformer v2: Scaling up capacity and resolution,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2022, pp. 12 009–12 019.

- [2] E. Xie, W. Wang, Z. Yu, A. Anandkumar, J. M. Alvarez, and P. Luo, “Segformer: Simple and efficient design for semantic segmentation with transformers,” in Advances in Neural Information Processing Systems, 2021.

- [3] Y. Yuan, X. Chen, and J. Wang, “Object-contextual representations for semantic segmentation,” in 16th European Conference Computer Vision (ECCV 2020), August 2020.

- [4] Z. Dai, H. Liu, Q. V. Le, and M. Tan, “Coatnet: Marrying convolution and attention for all data sizes,” in Advances in Neural Information Processing Systems, A. Beygelzimer, Y. Dauphin, P. Liang, and J. W. Vaughan, Eds., 2021. [Online]. Available: https://openreview.net/forum?id=dUk5Foj5CLf

- [5] A. J. Sathyamoorthy, K. Weerakoon, T. Guan, J. Liang, and D. Manocha, “Terrapn: Unstructured terrain navigation using online self-supervised learning,” in 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2022, pp. 7197–7204.

- [6] O. Russakovsky, J. Deng, H. Su, J. Krause, S. Satheesh, S. Ma, Z. Huang, A. Karpathy, A. Khosla, M. Bernstein, A. C. Berg, and L. Fei-Fei, “ImageNet Large Scale Visual Recognition Challenge,” International Journal of Computer Vision (IJCV), vol. 115, no. 3, pp. 211–252, 2015.

- [7] T.-Y. Lin, M. Maire, S. J. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick, “Microsoft coco: Common objects in context,” in ECCV, 2014.

- [8] T. Guan, Z. He, R. Song, D. Manocha, and L. Zhang, “TNS: Terrain Traversability Mapping and Navigation System for Autonomous Excavators,” in Proceedings of Robotics: Science and Systems, New York City, NY, USA, June 2022.

- [9] C. D. McKinnon and A. P. Schoellig, “Experience-based model selection to enable long-term, safe control for repetitive tasks under changing conditions,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2018, pp. 2977–2984.

- [10] J. Dallas, M. P. Cole, P. Jayakumar, and T. Ersal, “Terrain adaptive trajectory planning and tracking on deformable terrains,” IEEE Transactions on Vehicular Technology, vol. 70, no. 11, pp. 11 255–11 268, 2021.

- [11] T. Guan, D. Kothandaraman, R. Chandra, A. J. Sathyamoorthy, K. Weerakoon, and D. Manocha, “Ga-nav: Efficient terrain segmentation for robot navigation in unstructured outdoor environments,” IEEE Robotics and Automation Letters, vol. 7, no. 3, pp. 8138–8145, 2022.

- [12] K. Viswanath, K. Singh, P. Jiang, P. Sujit, and S. Saripalli, “Offseg: A semantic segmentation framework for off-road driving,” in 2021 IEEE 17th International Conference on Automation Science and Engineering (CASE), 2021, pp. 354–359.

- [13] A. Chilian and H. Hirschmüller, “Stereo camera based navigation of mobile robots on rough terrain,” in 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, 2009, pp. 4571–4576.

- [14] J. Xue, H. Zhang, and K. Dana, “Deep texture manifold for ground terrain recognition,” in 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 558–567.

- [15] S. Laible, Y. N. Khan, K. Bohlmann, and A. Zell, “3d lidar- and camera-based terrain classification under different lighting conditions,” in AMS, 2012.

- [16] D.-V. Nguyen, “Vegetation detection and terrain classification for autonomous navigation,” Ph.D. dissertation, 01 2013.

- [17] J. Xue, H. Zhang, K. Dana, and K. Nishino, “Differential angular imaging for material recognition,” in 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017, pp. 6940–6949.

- [18] C. C. Ward, “Terrain sensing and estimation for dynamic outdoor mobile robots,” Ph.D. dissertation, Massachusetts Institute of Technology, 2007.

- [19] C. C. Ward and K. Iagnemma, “Speed-independent vibration-based terrain classification for passenger vehicles,” Vehicle System Dynamics, vol. 47, no. 9, pp. 1095–1113, 2009.

- [20] M. Z. Hashmi, Q. Riaz, M. Hussain, and M. Shahzad, “What lies beneath one’s feet? terrain classification using inertial data of human walk,” Applied Sciences, vol. 9, p. 3099, 07 2019.

- [21] F. Lomio, E. Skenderi, D. Mohamadi, J. Collin, R. Ghabcheloo, and H. Huttunen, “Surface type classification for autonomous robot indoor navigation,” arXiv preprint arXiv:1905.00252, 2019.

- [22] D. Khan, Z. Cheng, H. Uchiyama, S. Ali, M. Asshad, and K. Kiyokawa, “Recent advances in vision-based indoor navigation: A systematic literature review,” Computers & Graphics, 2022.

- [23] A. J. Sathyamoorthy, J. Liang, U. Patel, T. Guan, R. Chandra, and D. Manocha, “Densecavoid: Real-time navigation in dense crowds using anticipatory behaviors,” in 2020 IEEE International Conference on Robotics and Automation (ICRA), 2020, pp. 11 345–11 352.

- [24] A. J. Sathyamoorthy, U. Patel, T. Guan, and D. Manocha, “Frozone: Freezing-free, pedestrian-friendly navigation in human crowds,” IEEE Robotics and Automation Letters, vol. 5, no. 3, pp. 4352–4359, 2020.

- [25] D. Droeschel, M. Schwarz, and S. Behnke, “Continuous mapping and localization for autonomous navigation in rough terrain using a 3d laser scanner,” Robotics and Autonomous Systems, vol. 88, pp. 104–115, 2017.

- [26] K. Weerakoon, A. J. Sathyamoorthy, U. Patel, and D. Manocha, “Terp: Reliable planning in uneven outdoor environments using deep reinforcement learning,” in 2022 International Conference on Robotics and Automation (ICRA), 2022, pp. 9447–9453.

- [27] J. Liang, K. Weerakoon, T. Guan, N. Karapetyan, and D. Manocha, “Adaptiveon: Adaptive outdoor local navigation method for stable and reliable actions,” IEEE Robotics and Automation Letters, vol. 8, no. 2, pp. 648–655, 2023.

- [28] A. Kumar, Z. Fu, D. Pathak, and J. Malik, “Rma: Rapid motor adaptation for legged robots,” Robotics: Science and Systems, 2021.

- [29] H. Karnan, K. S. Sikand, P. Atreya, S. Rabiee, X. Xiao, G. Warnell, P. Stone, and J. Biswas, “Vi-ikd: High-speed accurate off-road navigation using learned visual-inertial inverse kinodynamics,” in 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2022, pp. 3294–3301.

- [30] C. H. Lee, S. H. Kim, S. C. Kang, M. S. Kim, and Y. K. Kwak, “Double-track mobile robot for hazardous environment applications,” Advanced Robotics, vol. 17, no. 5, pp. 447–459, 2003.

- [31] I. Vincent and Q. Sun, “A combined reactive and reinforcement learning controller for an autonomous tracked vehicle,” Robotics and Autonomous Systems, vol. 60, no. 4, pp. 599–608, 2012.

- [32] C. Zong, Z. Ji, J. Yu, and H. Yu, “An angle-changeable tracked robot with human-robot interaction in unstructured environments,” Assembly Automation, vol. 40, no. 4, pp. 565–575, 2020.

- [33] C. Ordonez, R. Alicea, B. Rothrock, K. Ladyko, M. Harper, S. Karumanchi, L. Matthies, and E. Collins, “Modeling and traversal of pliable materials for tracked robot navigation,” in Unmanned Systems Technology XX, vol. 10640. SPIE, 2018, pp. 76–83.

- [34] L. Hewing, K. P. Wabersich, M. Menner, and M. N. Zeilinger, “Learning-based model predictive control: Toward safe learning in control,” Annual Review of Control, Robotics, and Autonomous Systems, vol. 3, pp. 269–296, 2020.

- [35] G. Torrente, E. Kaufmann, P. Föhn, and D. Scaramuzza, “Data-driven mpc for quadrotors,” IEEE Robotics and Automation Letters, vol. 6, no. 2, pp. 3769–3776, 2021.

- [36] A. H. Chang, C. Hubicki, A. Ames, and P. A. Vela, “Every hop is an opportunity: Quickly classifying and adapting to terrain during targeted hopping,” in 2019 International Conference on Robotics and Automation (ICRA), 2019, pp. 3188–3194.

- [37] M. Tan and Q. Le, “EfficientNet: Rethinking model scaling for convolutional neural networks,” in Proceedings of the 36th International Conference on Machine Learning, ser. Proceedings of Machine Learning Research, K. Chaudhuri and R. Salakhutdinov, Eds., vol. 97. PMLR, 09–15 Jun 2019, pp. 6105–6114. [Online]. Available: https://proceedings.mlr.press/v97/tan19a.html

- [38] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. Kaiser, and I. Polosukhin, “Attention is all you need,” CoRR, vol. abs/1706.03762, 2017.

- [39] T. Guan, R. Song, Z. Ye, and L. Zhang, “Vinet: Visual and inertial-based terrain classification and adaptive navigation over unknown terrain,” 2022. [Online]. Available: https://arxiv.org/abs/2209.07725

- [40] A. Kurup, S. Kysar, J. Bos, P. Jayakumar, and W. Smith, “Supervised terrain classification with adaptive unsupervised terrain assessment,” SAE International, 1 2021.