VideoMix: Rethinking Data Augmentation for Video Classification

Abstract

State-of-the-art video action classifiers often suffer from overfitting. They tend to be biased towards specific objects and scene cues, rather than the foreground action content, leading to sub-optimal generalization performances. Recent data augmentation strategies have been reported to address the overfitting problems in static image classifiers. Despite the effectiveness on the static image classifiers, data augmentation has rarely been studied for videos. For the first time in the field, we systematically analyze the efficacy of various data augmentation strategies on the video classification task. We then propose a powerful augmentation strategy VideoMix. VideoMix creates a new training video by inserting a video cuboid into another video. The ground truth labels are mixed proportionally to the number of voxels from each video. We show that VideoMix lets a model learn beyond the object and scene biases and extract more robust cues for action recognition. VideoMix consistently outperforms other augmentation baselines on Kinetics and the challenging Something-Something-V2 benchmarks. It also improves the weakly-supervised action localization performance on THUMOS’14. VideoMix pretrained models exhibit improved accuracies on the video detection task (AVA).

1 Introduction

Video action classification models have achieved remarkable performance improvements in recent years. The main innovations have stemmed from the introduction of large-scale video dataset like Kinetics [17] and Sports-1M [15] and the development of powerful network architectures using 3D convolutional neural networks (3D CNNs).

As the architecture of video models become deeper and more complex, overfitting and the resulting loss of generalizability become greater concerns. For example, models trained on large-scale video dataset still suffer from the object and scene biases: models rely heavily on specific discriminative objects and scene elements [26, 21, 37]. This has led to sub-optimal generalization performances and the decrease in localization abilities of video action classifiers. Ideally, a model should extract cues for recognition from diverse sources to enhance generalizability and the robustness to missing features.

The above problems are already identified and studied in static image recognition tasks, especially in the context of modern models based on 2D CNN architectures. For 2D image recognition, data augmentation has proven to be effective, while not requiring extra annotation or training time [29, 3, 39].

In contrast, there is a lack of extensive studies on the data augmentation strategies for video recognition tasks. In this paper, we examine the efficacy of image-domain data augmentation strategies on video data, especially the ones based on feature erasing that are known to improve model robustness and generalizability [6, 41]. In particular, we consider a generalization of CutMix [39] to video sequences. We show experiments to decide which axis (spatial or temporal) CutMix needs to be extended for the best performance on video sequences. As a result of our analysis, we introduce the VideoMix augmentation strategy. A new training video sample is constructed by cutting and pasting a random video cuboid patch from one video to the other. The ground truth label for this video is a volume-proportional combination of the source video labels.

| Task | Action recognition | Localization | Detection | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Dataset |

|

|

|

|

|

||||||||||

| Model | SlowOnly-50 | SlowFast-50 | SlowFast-50 | I3D T-CAM | SlowFast-50 | ||||||||||

| Baseline | 73.6 | 79.5 | 61.5 | 17.8 | 23.2 | ||||||||||

| VideoMix | 74.9 | 81.9 | 62.3 | 19.3 | 24.9 | ||||||||||

| Improve. | () | () | () | () | () | ||||||||||

We show the effectiveness of VideoMix with extensive evaluations on various 3D CNN architectures, datasets, and tasks. Table 1 summarizes the improvements by VideoMix. VideoMix consistently improves video classification models without any additional parameter and a significant amount of computation.

2 Related Works

We briefly discuss the related works of the video classification task and the data augmentation in this section.

2.1 Video Classification

The key difference between video and image classifications is that the former must capture temporal information. Some prior works [28, 8, 2] have extracted temporal cues explicitly (e.g. via optical flow). With the development of deep learning and large-scale video datasets [17, 16], 3D convolutional neural networks (3D CNNs) [33, 11, 2, 34] and non-local modules [36] are proposed to learn the temporal cues automatically. Recently proposed SlowFast network [7] and CSN [34] show the state-of-the-art performances on the video classification using 3D CNNs. SlowFast proposed a dual-branch architecture to combine a slow path for static spatial features and a fast path for dynamic motion features, and CSN utilizes depth-wise convolution for lightweight 3D CNN architecture. While the advances in video classification have been focused on the architectural axis, we explore the orthogonal data axis, which is seldom explored in the context of video recognition tasks. We show the effectiveness of VideoMix by conducting experiments on top of the state-of-the-art SlowFast and CSN networks.

2.2 Data Augmentation

Data augmentation for image classification.

There are many augmentation strategies for static image classification tasks. Horizontal flipping, random resizing, and cropping have been used in training ImageNet classifiers and are now considered the standard set of augmentation strategies [32]. There have been regional dropout methods [6, 41] which remove random regions of an image to enhance robustness and generalization. Other single-image augmentation strategies include RandAugment [5] and AutoAugment [4]. They consider the combination of extensive pixel-level image augmentation types, such as rotation, shear, translation, and color jittering. RandAugment and AutoAugment train classifiers with above operations via random selection and learned policy, respectively. Augmentation strategies that combine more than one image include Mixup [40] and CutMix [39]. Mixup averages the RGB values of two images and the ground truth labels to create new samples. CutMix [39] has improved upon regional dropout by filling in image patches from other images in the dropped-out region, thereby maximizing the pixel efficiency during training. The labels are mixed among the source images as in Mixup. We discuss and experiment with the above static-image augmentation strategies on video classification tasks in our analysis.

Data augmentation for video classification.

There do exist a few attempts to apply data augmentation strategies on videos. On the spatial side, the standard single-image augmentation strategies for image classification have been considered: horizontal flipping and random cropping [35]. Along the temporal axis, a widely-used augmentation strategy is to randomly sub-sample a shorter video clip from the full sequence. However, there has been an overall lack of extensive studies on video augmentation methods. This work contributes the first studies on the impact of video augmentation strategies on the generalization, localization, and transfer learning capabilities.

3 VideoMix

In this section, we analyze existing data augmentations, describe the VideoMix algorithm, and discuss the effectiveness of VideoMix.

3.1 Revisiting Data Augmentation Techniques

| Methods | top1 | top5 |

|---|---|---|

| Vanilla | 75.2 | 91.7 |

| Mixup [40] | 77.0 | 93.1 |

| RandAugment [5] | 75.6 | 92.2 |

| Cutout [6] | 76.1 | 92.6 |

| CutMix [39] | 76.7 | 92.9 |

| VideoMix | 77.6 | 93.5 |

We review existing data augmentation methods that partially remove regions in an image [6], add pixel-level noises [4, 5], or manipulate both input pixels and labels [40, 39]. We first evaluate their effectiveness by simply extending the two-dimensional methods to the three spatio-temporal dimensions.

Table 2 compares the augmentation strategies including Mixup [40], RandAugment [5], Cutout [6], and CutMix [39]. It is straightforward to extend Mixup and RandAugment to videos: they apply global operations over the pixels. Mixup averages the RGB values of two images and RandAugment applies rotation, shear, and uniform perturbations on the images. We apply the same operation over the spatio-temporal frames. For Cutout and CutMix, we choose the full spatio-temporal extension where sub-cuboids in videos are randomly selected to be removed or replaced. We set the hyperparameter of Mixup and CutMix to , the mask size for Cutout to , the magnitudes for RandAugment to . Table 2 shows that even the naive extension of the baselines lead to improvements in video classification against the vanilla model. For example, Mixup achieves 77.0% top-1 accuracy on Mini-Kinetics, an improvement against the vanilla SlowOnly-34 () model.

In the rest of the section, we seek ways to boost the video recognition performances further by studying the design choices in augmentation strategies in greater depth.

3.2 VideoMix Algorithm

We introduce the VideoMix algorithm. Let be a video sequence, where , , and are the number of frames, width, and height of video sequences, respectively111We omit the input channel dimension (3 for RGB) for simplicity.. Let be the corresponding ground truth label, represented as a one-hot vector. VideoMix generates a new training sample by combining two training samples and . The generated is used for training the model with its original loss function.

More precisely, VideoMix first defines a binary tensor mask signifying the cut-and-paste locations in two video tensors. The new video is generated by the below procedure:

| (1) |

where is the element-wise multiplication and denotes the proportion of the volume occupied by .

The binary tensor mask is decided by drawing the 3D cuboid coordinates at random. More specifically,

| (2) |

We will investigate the design choices for the random selection of coordinates in the next part.

3.3 Investigation of spatial and temporal axes for VideoMix

| Methods | top1 | top5 |

|---|---|---|

| Spatial VideoMix | 77.6 | 93.5 |

| Temporal VideoMix | 75.6 | 92.5 |

| Spatio-temporal VideoMix | 76.7 | 92.9 |

We identify three types of VideoMix: Temporal, Spatial, and Spatio-temporal VideoMix. Temporal VideoMix samples the cuboid coordinates only along the temporal axis ( are sampled) and fixes spatial coordinates at . Spatial VideoMix samples the spatial coordinates , while fixing . Spatio-temporal VideoMix samples all the coordinates . The VideoMix variants are illustrated in Figure 1. Note that the Spatio-temporal VideoMix is the same as the CutMix in Table 2.

Table 3 compares the performances of the VideoMix variants. Spatial VideoMix is the best among them. We hypothesize that the video sub-cuboid must secure a sufficient number of frames to represent the semantic information for the video category. Temporal VideoMix or Spatio-temporal VideoMix is limited in terms of the semantic content in cut and pasted video cuboids. Spatial VideoMix, on the contrary, retains the full temporal semantics.

Based on this observation, we define Spatial VideoMix as our default VideoMix setting for video classification task. The random coordinate selection strategy follows that of CutMix [39]. The spatial ratio is sampled from the Beta distribution Beta(,), where we set 222When , it is the uniform distribution .. The center coordinates are sampled from . Other cuboid endpoints are determined by

| (3) |

and fixed . Codes for VideoMix variants are presented in Appendix A.

VideoMix for temporal localization.

Under certain application scenarios, it is difficult to directly manipulate the input videos. For example, features may have already been extracted from the original frames and the raw frames are unavailable because of the storage limits or legal issues [1]. As we will cover in the experiments of temporal action localization, Temporal VideoMix is a good alternative that improves the localization ability of a video classifier.

3.4 Discussion

Effect on preventing overfitting.

To see the effect of VideoMix on preventing overfitting and stabilizing the training process, we compare validation loss and validation accuracy of SlowOnly-34 models during the training over Mini-Kinetics action recognition dataset in Figure 2. We confirm that VideoMix enables video models to obtain lower validation loss and higher validation accuracy than the baseline. After about 200 training epochs, the baseline performance saturates and the loss does not decrease further. Applying VideoMix lets the model overcome the barrier and improve further beyond this point. The training samples generated by VideoMix allow the video classifiers to generalize better.

What does the model learn with VideoMix?

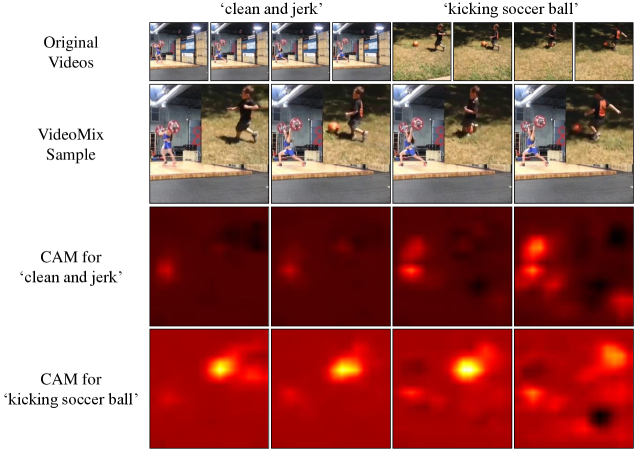

We expect VideoMix to let an action classifier simultaneously recognize multiple actions present in the mixed videos. To verify that this is achieved, we visualize the spatio-temporal attention of a video on the synthetic video generated by VideoMix with the class activation maps (CAM) [42]. We use a Kinetics-400 pre-trained SlowOnly-50 model. We extend the original CAM proposed for static images to its spatio-temporal version that generates the sequential score maps. Detail description of spatio-temporal CAM and more examples are in Appendix A. Figure 3 shows VideoMix samples and corresponding class activation maps with respect to the two action classes, “playing harmonica” and “passing American football”. The CAM results show that VideoMix guides a model to see multiple actions at once (e.g., the CAM for “playing harmonica” highlights the player’s mouth and hands, and the CAM for “passing American football” emphasizes the kid’s hands and the football object). Furthermore, VideoMix reduces scene contexts of videos, as the background scene of “passing American football” are partially removed, and also hides some object cues, as the football of “passing American football” and the hands holding a harmonica of “playing harmonica” are blocked in some frames, which leads to learning more robust and generalized cues beyond the object and scene for action recognition.

4 Experiments

We evaluate VideoMix in terms of the improved generalization performances as well as the transfer-learning performances of VideoMix pre-trained models on multiple tasks. We first verify the effect of VideoMix on video classification tasks: Kinetics action recognition [17] and Something-Something-V2. We show the temporal action localization performance via weakly-supervised temporal action localization experiments. Finally, VideoMix is evaluated in terms of the transfer-learning performances on the video action detection task. We also provide additional experiments on HMDB-51 [19] and UCF-101 [30] action recognition benchmarks in Appendix B. All experiments were implemented and evaluated on NAVER Smart Machine Learning (NSML) [18] platform with PyTorch [23]

4.1 Kinetics Action Recognition

Dataset.

Kinetics-400 [17] is a widely used large-scale action recognition benchmark consisting of 240k training videos and 20k validation videos in 400 human action classes. The performances are evaluated with the top-1 and top-5 accuracies. Note that about 10% of the Kinetics-400 videos are not available on YouTube to be downloaded. It is not possible to reproduce the exact accuracies reported in the original paper; we train the action classifier over the available subset and treat this result as the baseline.

Training and evaluation.

We follow the original training recipes of the baseline architectures in [7]. We train models from scratch using the stochastic gradient descent optimizer for 250 epochs with batch size and initial learning rate , which is decayed by cosine annealing. For a training video clip, consecutive frames are randomly sampled from a video and frames are sub-sampled with temporal stride as an input for video models. For every model, random resize crop and random horizontal flip are applied on training video clips as standard augmentations. For evaluation, we use view ensembles as in [7], where clips are uniformly sampled along the temporal dimension from the entire video sequences and spatial regions are uniformly sampled along the longer side of the frames.

Network architecture.

We use the SlowOnly, SlowFast [7], and interaction preserved CSN (ip-CSN) [34] to show the impact of VideoMix on the video classification task. Every model is based on the ResNet architecture [13]. We denote the specific ResNet type with the suffix “-(#depth)”. We also denote each video model with frame length and temporal stride in the trailing bracket . For example, SlowOnly-50 () is based on the ResNet-50 architecture, considers input frames sub-sampled from the original frames with temporal stride . SlowFast-50 () takes two separate input streams, the slow and fast pathways, each with and total number of input frames () with temporal strides () and , respectively.

Kinetics-400 results.

| Model | VideoMix | top1 | top5 | GFlopsviews |

| I3D | 72.1 | 90.3 | 108 N/A | |

| Two-Stream I3D | 75.7 | 92.0 | 216 N/A | |

| Nonlocal-ResNet50 | 76.5 | 92.6 | 282 30 | |

| SlowOnly-50 () | 71.8 | 89.6 | 26 30 | |

| SlowOnly-50 () | ✓ | 72.7 | 90.3 | 26 30 |

| SlowOnly-50 () | 73.6 | 90.7 | 54 30 | |

| SlowOnly-50 () | ✓ | 74.9 | 91.7 | 54 30 |

| SlowFast-50 () | 75.9 | 91.9 | 65 30 | |

| SlowFast-50 () | ✓ | 76.6 | 92.6 | 65 30 |

We evaluate VideoMix on Kinetics-400 with SlowOnly and SlowFast networks [7] as the base network architectures. SlowFast combines two branches: the slow branch for static spatial features and the fast branch for dynamic motion features. SlowOnly only has the slow branch that is similar to the ResNet [13] architecture with 3D convolutional kernels. The experimental results are shown in Table 4. The accuracies in the table are reproduced results333The original paper has reported the top-1 accuracies for SlowOnly-50 (), SlowOnly-50 (), and SlowFast-50 () as , , and , respectively. The difference is due to the 10% unavailable videos in Kinetics-400 and smaller batch sizes due to GPU limitations.. We also report the inference cost (GFlops) of a single view (a temporal clip with spatial crop) as well as the number of views for the prediction of a single video. We observe that VideoMix consistently improves the accuracy of baseline models. VideoMix achieves the top-1 accuracy of , , and for SlowOnly-50 (), SlowOnly-50 (), and SlowFast-50 () with improvements of , , and , respectively. We also show that VideoMix-augmented SlowFast recognizer achieves a competitive performance () against other methods such as I3D [2] (), Two-Stream I3D [2] (), and Nonlocal-ResNet50 [36] () which require more computational costs (GFlops) than the SlowFast architecture.

Mini-Kinetics results.

| Model | VideoMix | top1 | top5 |

|---|---|---|---|

| ip-CSN-50 () | 74.8 | 91.9 | |

| ip-CSN-50 () | ✓ | 75.9 | 93.1 |

| SlowOnly-50 () | 74.4 | 91.3 | |

| SlowOnly-50 () | ✓ | 76.0 | 93.0 |

| SlowOnly-50 () | 77.5 | 93.2 | |

| SlowOnly-50 () | ✓ | 79.2 | 94.1 |

| SlowFast-50 () | 79.5 | 93.9 | |

| SlowFast-50 () | ✓ | 81.9 | 95.1 |

Ablation Studies.

We conduct ablation studies on the Mini-Kinetics dataset. SlowOnly-34 is used as the running baseline, where the BasicBlock is used as in ResNet-34 [13]. The results are shown in Table 6.

| Augmentation type | top1 | top5 |

| Baseline | 75.2 | 91.7 |

| VideoMix (Ours; =1.0) | 77.6 | 93.5 |

| VideoMix (=0.2) | 77.0 | 93.5 |

| VideoMix (=0.5) | 77.6 | 93.4 |

| VideoMix (=2.0) | 77.3 | 93.6 |

| VideoMix (=0.5) | 77.0 | 93.0 |

| VideoMix (#videos=3) | 75.7 | 93.0 |

| VideoMix (#videos=4) | 71.9 | 91.4 |

| Temporal VideoMix | 75.6 | 92.5 |

| Spatio-temporal VideoMix | 76.7 | 92.9 |

| Per-frame VideoMix | 74.8 | 92.8 |

We first examine the impact of the mixture area hyperparameter . VideoMix at various values exhibits stable performances, uniformly outperforming the vanilla baseline. VideoMix is not sensitive to the hyperparameter . When we reduce the chance of applying VideoMix on a minibatch to = (default is =), the top-1 accuracy drops by percent point. The simple strategy of applying VideoMix on every video in the batch is a better choice. When we increase the number of mixing videos (“#videos”) more than two, the performance significantly drops, which indicates that two videos are enough for VideoMix and mixing more than two videos may hinder the training convergence.

Temporal VideoMix and Spatio-temporal VideoMix are not as effective as the VideoMix (Spatial VideoMix), with only and boosts over the baseline. Per-frame VideoMix independently applies VideoMix at every frame, ruining the temporal continuity of the VideoMix operation. It shows an even worse accuracy of 74.8%, lower than the original model. Temporal continuity is an important ingredient for the video augmentation.

More ablation studies to see the effect of dataset sizes and applying other data augmentation methods together with VideoMix are provided in Appendix B.

4.2 Something-Something-V2

| Model | VideoMix | top1 | top5 |

|---|---|---|---|

| SlowOnly-50 () | 59.1 | 85.1 | |

| SlowOnly-50 () | ✓ | 60.0 | 86.0 |

| SlowFast-50 () | 61.5 | 86.9 | |

| SlowFast-50 () | ✓ | 62.3 | 87.6 |

Dataset.

Something-Something-V2 dataset [9] contains 169k training and 25k validation videos with 174 action classes. We evaluate the performances with top-1 and top-5 accuracies. Something-Something-V2 is known for the fine-grainedness of actions, the diversity of contexts. It poses new challenges for action recognition not covered by Kinetics [17].

Implementation details.

We use SlowOnly-50 () and SlowFast-50 () models. The models are pre-trained on Kinetics-400 with the standard training strategy, and the final fully-connected layer is replaced with the new one with output dimensions. The entire models are then fine-tuned for the Something-Something-V2 dataset for epochs with the batch size and learning rate , which is decayed by a factor of 10 after and epochs. Other implementation details are in Appendix C.

Results.

We investigate how well VideoMix improves the generalizability of action recognition models in the challenging benchmark beyond the Kinetics. To separate the fine-tuning effects of VideoMix, it is applied only during the fine-tuning stage. The pretrained model is the same as the baseline. Table 7 shows the results. We observe that VideoMix improves the top-1 accuracies of SlowOnly-50 () and SlowFast-50 () by and against the baselines, respectively. VideoMix is effective on Something-Something-V2 as well.

4.3 Weakly Supervised Temporal Action Localization

The goal of weakly supervised temporal action localization (WSTAL) is to localize actions in untrimmed videos with a classifier trained using video-wise class labels only. Given a video input sequence, WSTAL model predicts the sequence’s class label and also generates one-dimensional temporal proposals to localize actions in the video. WSTAL models do not exploit temporal action annotations during training and the generated temporal proposals are evaluated on validation videos with validation ground truth annotations. To localize the action instances well, a video model recognizes action categories from full video sequences and not focus on small discriminant frames of the action. Through the WSTAL experiments, we verify that VideoMix improves the temporal localization ability of an action recognition models by guiding them to attend on wider frames of action. To evaluate the temporal localizability, we apply VideoMix over the baseline WSTAL methods.

Dataset.

We conduct weakly supervised temporal action localization (WSTAL) task on THUMOS’14 dataset [14]. THUMOS’14 dataset originally consists of 13,320 trimmed videos for training and 2,584 untrimmed videos for validation with 101 action categories. We follow previous WSTAL methods’ setting [20, 22, 27]. We train WSTAL models with the 20 class subset of the untrimmed videos, which consists of 200 untrimmed videos without temporal annotations. The temporal localization performance of a model is evaluated by 212 untrimmed videos with temporal annotations. WSTAL on THUMOS’14 dataset is a challenging task since the length of untrimmed video could be quite long (up to 26 minute) and multiple actions could exist in a video.

Training and inference.

For training, we first extract I3D [2] features from the training videos as done in [20]. We sample video segments from a training video and RGB frames and optical flows are separately fed into dual-path I3D network. Each RGB and optical flow frame results in -dimensional feature, thus the dimension of extracted feature for a video is for RGB input, and for both using RGB and optical flow input (RGB+flow). The WSTAL model, which consists of two convolutional layers followed with LeakyReLU activation and a convolutional layer, takes the extracted features and predicts its class label. Since the network is trained with pre-computed features, we do not consider spatial VideoMix, but utilize temporal VideoMix on the extracted features. Implementation details are in Appendix D. For evaluation, temporal class activation mapping (T-CAM) [42, 22] is utilized to localize action instances along the temporal dimension. We threshold T-CAM below 50 of the highest value, and all 1-dimensional continuous segments are considered as action instance proposals as in [29]. Evaluation metric is average precision (AP) of intersection over union (IoU) thresholds from to , and we report their mean value (mAP) as in [29, 22, 20].

Results.

Table 8 shows the WSTAL performances with and without optical flow features. We compare VideoMix against the baselines including Hide-and-Seek [29], which has been reported to improve weakly supervised object localization on static images and WSTAL on videos. We also compare against Mixup [40]. We observe that VideoMix improves the temporal localization accuracy mAP of baseline by and with and without optical flows, respectively. VideoMix also outperforms the Hide-and-Seek and Mixup in both scenarios showing its superior temporal localization ability. We also conducted VideoMix with a stronger baseline, W-TALC [24], and confirmed that VideoMix improves the performance of W-TALC from 31.1 to 32.3 (+1.2) mAP, which is at the state-of-the-art level.

4.4 AVA Action Detection

Kinetics pretraining is a widely-used practice for many video recognition tasks [10, 31, 7]. We validate whether VideoMix pretrained models bring better performance on the downstream task of detecting actions in videos.

Dataset.

AVA v2.2 dataset [10] consists of 235 training and 64 validation videos of human actions in videos. Each video is 15 minutes long, and the action locations are densely annotated as bounding boxes in space and time. We follow the protocol in [10] to train and evaluate the detection of 60 human action classes. We use the mean average precision (mAP) metric to measure the performance of video action detection using a frame-level intersection-over-union (IoU) threshold .

Detection framework.

Our detector is based on the Faster R-CNN [25] architecture, which is modified as in [7] to adapt to the video action detection task. The spatial stride of the final convolutional block is reduced from to to increase the feature map size. The 2D RoIAlign layer [12] is replaced by the 3D RoIAlign. SlowOnly-50 () and SlowFast-50 () have been used as the backbone network for the detection framework. We use the human bounding box proposals provided by [7] computed by an off-the-shelf human detector fine-tuned on AVA persons.

Training and inference.

We initialize two detectors with the weights pretrained on Kinetics-400 with or without VidoeMix. We apply the same fine-tuning strategy afterwards to separate the effect of VideoMix on pretraining. We train detectors for epochs using the SGD optimizer with initial learning rate decayed by factor at and epoch. The spatial dimension of the shorter side is resized to 256 pixels while maintaining the aspect ratio. consecutive frames are extracted for training. Further details are in Appendix E.

| Backbone | VideoMix | val mAP |

|---|---|---|

| Pretrained | ||

| SlowOnly-50 () | 19.1 | |

| SlowOnly-50 () | ✓ | 20.4 |

| SlowFast-50 () | 23.2 | |

| SlowFast-50 () | ✓ | 24.9 |

Results.

Table 9 shows the performances of our detector on the AVA benchmark. Pretraining the detector with VideoMix improves the performance of SlowOnly-50 and SlowFast-50 to () and () mAP, respectively. Switching the pretrained weights to the VideoMix version introduces the gain in detection performance for free. The weights will be published in the future.

5 Conclusion

We have analyzed the augmentation strategies for video action classification task. We have introduced VideoMix, a simple, efficient, and effective augmentation method. On Kinetics action recognition, VideoMix improves the top-1 accuracies of SlowOnly-50 and SlowFast-50 by and , respectively. On Something-Something-V2 dataset, VideoMix brings and gains in top-1 accuracies on SlowOnly-50 and SlowFast-50, respectively. VideoMix also improves the localization ability of the classifiers: on weakly supervised temporal action localization (WSTAL), VideoMix consistently improves the localization accuracy over the baselines. Finally, VideoMix improves the Kinetics-pretrained model for the transfer-learning task of video action detection. VideoMix, as well as VideoMix pretrained weights, provide a simple and cheap solution to boost up the video recognition performances across diverse tasks.

Acknowledgement

We would like to thank NAVER AI LAB team members, especially Jung-Woo Ha for his helpful feedback and discussion.

References

- [1] Sami Abu-El-Haija, Nisarg Kothari, Joonseok Lee, Paul Natsev, George Toderici, Balakrishnan Varadarajan, and Sudheendra Vijayanarasimhan. Youtube-8m: A large-scale video classification benchmark. arXiv preprint arXiv:1609.08675, 2016.

- [2] Joao Carreira and Andrew Zisserman. Quo vadis, action recognition? a new model and the kinetics dataset. In proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 6299–6308, 2017.

- [3] Junsuk Choe and Hyunjung Shim. Attention-based dropout layer for weakly supervised object localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2219–2228, 2019.

- [4] Ekin D Cubuk, Barret Zoph, Dandelion Mane, Vijay Vasudevan, and Quoc V Le. Autoaugment: Learning augmentation strategies from data. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 113–123, 2019.

- [5] Ekin D Cubuk, Barret Zoph, Jonathon Shlens, and Quoc V Le. Randaugment: Practical data augmentation with no separate search. arXiv preprint arXiv:1909.13719, 2019.

- [6] Terrance DeVries and Graham W Taylor. Improved regularization of convolutional neural networks with cutout. arXiv preprint arXiv:1708.04552, 2017.

- [7] Christoph Feichtenhofer, Haoqi Fan, Jitendra Malik, and Kaiming He. Slowfast networks for video recognition. In Proceedings of the IEEE International Conference on Computer Vision, pages 6202–6211, 2019.

- [8] Christoph Feichtenhofer, Axel Pinz, and Andrew Zisserman. Convolutional two-stream network fusion for video action recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 1933–1941, 2016.

- [9] Raghav Goyal, Samira Ebrahimi Kahou, Vincent Michalski, Joanna Materzynska, Susanne Westphal, Heuna Kim, Valentin Haenel, Ingo Fruend, Peter Yianilos, Moritz Mueller-Freitag, et al. The” something something” video database for learning and evaluating visual common sense. In ICCV, volume 1, page 5, 2017.

- [10] Chunhui Gu, Chen Sun, David A Ross, Carl Vondrick, Caroline Pantofaru, Yeqing Li, Sudheendra Vijayanarasimhan, George Toderici, Susanna Ricco, Rahul Sukthankar, et al. Ava: A video dataset of spatio-temporally localized atomic visual actions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 6047–6056, 2018.

- [11] Kensho Hara, Hirokatsu Kataoka, and Yutaka Satoh. Can spatiotemporal 3d cnns retrace the history of 2d cnns and imagenet? In Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, pages 6546–6555, 2018.

- [12] Kaiming He, Georgia Gkioxari, Piotr Dollár, and Ross Girshick. Mask r-cnn. In Proceedings of the IEEE international conference on computer vision, pages 2961–2969, 2017.

- [13] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In CVPR, 2016.

- [14] Y.-G. Jiang, J. Liu, A. Roshan Zamir, G. Toderici, I. Laptev, M. Shah, and R. Sukthankar. THUMOS challenge: Action recognition with a large number of classes. http://crcv.ucf.edu/THUMOS14/, 2014.

- [15] Andrej Karpathy, George Toderici, Sanketh Shetty, Thomas Leung, Rahul Sukthankar, and Li Fei-Fei. Large-scale video classification with convolutional neural networks. In CVPR, 2014.

- [16] Andrej Karpathy, George Toderici, Sanketh Shetty, Thomas Leung, Rahul Sukthankar, and Li Fei-Fei. Large-scale video classification with convolutional neural networks. In Proceedings of the IEEE International Conference on Computer Vision, 2014.

- [17] Will Kay, Joao Carreira, Karen Simonyan, Brian Zhang, Chloe Hillier, Sudheendra Vijayanarasimhan, Fabio Viola, Tim Green, Trevor Back, Paul Natsev, et al. The kinetics human action video dataset. arXiv preprint arXiv:1705.06950, 2017.

- [18] Hanjoo Kim, Minkyu Kim, Dongjoo Seo, Jinwoong Kim, Heungseok Park, Soeun Park, Hyunwoo Jo, KyungHyun Kim, Youngil Yang, Youngkwan Kim, Nako Sung, and Jung-Woo Ha. NSML: meet the mlaas platform with a real-world case study. CoRR, abs/1810.09957, 2018.

- [19] Hildegard Kuehne, Hueihan Jhuang, Estíbaliz Garrote, Tomaso Poggio, and Thomas Serre. Hmdb: a large video database for human motion recognition. In 2011 International Conference on Computer Vision, pages 2556–2563. IEEE, 2011.

- [20] Pilhyeon Lee, Youngjung Uh, and Hyeran Byun. Background suppression network for weakly-supervised temporal action localization. In AAAI, 2020.

- [21] Yi Li and Nuno Vasconcelos. Repair: Removing representation bias by dataset resampling. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 9572–9581, 2019.

- [22] Phuc Nguyen, Ting Liu, Gautam Prasad, and Bohyung Han. Weakly supervised action localization by sparse temporal pooling network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 6752–6761, 2018.

- [23] Adam Paszke, Sam Gross, Soumith Chintala, Gregory Chanan, Edward Yang, Zachary DeVito, Zeming Lin, Alban Desmaison, Luca Antiga, and Adam Lerer. Automatic differentiation in pytorch. 2017.

- [24] Sujoy Paul, Sourya Roy, and Amit K Roy-Chowdhury. W-talc: Weakly-supervised temporal activity localization and classification. In Proceedings of the European Conference on Computer Vision (ECCV), pages 563–579, 2018.

- [25] Shaoqing Ren, Kaiming He, Ross Girshick, and Jian Sun. Faster r-cnn: Towards real-time object detection with region proposal networks. In Advances in neural information processing systems, pages 91–99, 2015.

- [26] Laura Sevilla-Lara, Shengxin Zha, Zhicheng Yan, Vedanuj Goswami, Matt Feiszli, and Lorenzo Torresani. Only time can tell: Discovering temporal data for temporal modeling. arXiv preprint arXiv:1907.08340, 2019.

- [27] Zheng Shou, Hang Gao, Lei Zhang, Kazuyuki Miyazawa, and Shih-Fu Chang. Autoloc: Weakly-supervised temporal action localization in untrimmed videos. In Proceedings of the European Conference on Computer Vision (ECCV), pages 154–171, 2018.

- [28] Karen Simonyan and Andrew Zisserman. Two-stream convolutional networks for action recognition in videos. In Advances in neural information processing systems, pages 568–576, 2014.

- [29] Krishna Kumar Singh and Yong Jae Lee. Hide-and-seek: Forcing a network to be meticulous for weakly-supervised object and action localization. In 2017 IEEE International Conference on Computer Vision (ICCV), pages 3544–3553. IEEE, 2017.

- [30] Khurram Soomro, Amir Roshan Zamir, and Mubarak Shah. Ucf101: A dataset of 101 human actions classes from videos in the wild. arXiv preprint arXiv:1212.0402, 2012.

- [31] Chen Sun, Abhinav Shrivastava, Carl Vondrick, Kevin Murphy, Rahul Sukthankar, and Cordelia Schmid. Actor-centric relation network. In Proceedings of the European Conference on Computer Vision (ECCV), pages 318–334, 2018.

- [32] Christian Szegedy, Vincent Vanhoucke, Sergey Ioffe, Jon Shlens, and Zbigniew Wojna. Rethinking the inception architecture for computer vision. In CVPR, 2016.

- [33] Du Tran, Lubomir Bourdev, Rob Fergus, Lorenzo Torresani, and Manohar Paluri. Learning spatiotemporal features with 3d convolutional networks. In Proceedings of the IEEE international conference on computer vision, pages 4489–4497, 2015.

- [34] Du Tran, Heng Wang, Lorenzo Torresani, and Matt Feiszli. Video classification with channel-separated convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, pages 5552–5561, 2019.

- [35] Limin Wang, Yuanjun Xiong, Zhe Wang, and Yu Qiao. Towards good practices for very deep two-stream convnets. arXiv preprint arXiv:1507.02159, 2015.

- [36] Xiaolong Wang, Ross Girshick, Abhinav Gupta, and Kaiming He. Non-local neural networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 7794–7803, 2018.

- [37] Philippe Weinzaepfel and Grégory Rogez. Mimetics: Towards understanding human actions out of context. arXiv preprint arXiv:1912.07249, 2019.

- [38] Saining Xie, Ross Girshick, Piotr Dollár, Zhuowen Tu, and Kaiming He. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 1492–1500, 2017.

- [39] Sangdoo Yun, Dongyoon Han, Seong Joon Oh, Sanghyuk Chun, Junsuk Choe, and Youngjoon Yoo. Cutmix: Regularization strategy to train strong classifiers with localizable features. In Proceedings of the IEEE International Conference on Computer Vision, pages 6023–6032, 2019.

- [40] Hongyi Zhang, Moustapha Cisse, Yann N Dauphin, and David Lopez-Paz. mixup: Beyond empirical risk minimization. arXiv preprint arXiv:1710.09412, 2017.

- [41] Zhun Zhong, Liang Zheng, Guoliang Kang, Shaozi Li, and Yi Yang. Random erasing data augmentation. arXiv preprint arXiv:1708.04896, 2017.

- [42] Bolei Zhou, Aditya Khosla, Agata Lapedriza, Aude Oliva, and Antonio Torralba. Learning deep features for discriminative localization. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2921–2929, 2016.

Appendix A VideoMix Algorithm

We describe code-level algorithm of VideoMix variants in Algorithm 1. The input video of a minibatch is -size tensor, where , , , , and denote the size of a minibatch, the channel size, the the width, and the height of a frame. VideoMix first shuffles the order of the minibatch along the first dimension of the tensor. Next is sampled from the uniform distribution. Then the cuboid coordinate are sampled corresponding to the type of VideoMix. Note that ‘clip’ function truncates the coordinates to fit in the frame space (e.g., =).

Spatial VideoMix (line 7-10) is the same as we described in the main paper. For Temporal VideoMix (line 12-15), we only samples and . For Spatio-temporal Videomix (line 17-20), , , , , , and are simultaneously sampled. After sample a cuboid , we combine two videos by cutting and inserting the cuboid region, and is adjust by computing the exact fraction ratio of the cuboid. The target label is also blended by interpolation manner. Note that VideoMix is simple and easy to implement with few lines, but it is very effective on various video tasks.

Appendix B Additional Experiments

B.1 HMDB-51 and UCF-101

We evaluated VideoMix on HMDB-51 [19] and UCF-101 [30] benchmarks. Table 10 and Table11 shows the results. Our method consistently boosts the top-1 accuracy against the baseline models.

| Methods | top1 acc. |

|---|---|

| I3D (Baseline) | 66.0 |

| I3D + VideoMix | 66.9 (+0.9) |

| Methods | top1 acc. |

|---|---|

| VGG16 (Baseline) | 79.8 |

| VGG16 + VideoMix | 81.7 (+2.1) |

| I3D (Baseline) | 93.3 |

| I3D + VideoMix | 93.4 (+0.1) |

B.2 Additional ablation study

We subsample the mini-Kinetics dataset (10, 25, 50, and 100) and train the SlowOnly-34 model on the sampled datasets. Table 12 shows the results. Results show that VideoMix consistently improves accuracies against the baseline for all the subsets of the Mini-Kinetics dataset.

| Dataset size | 10 | 25 | 50 | 100 |

|---|---|---|---|---|

| Baseline | 31.2 | 47.2 | 67.6 | 75.2 |

| VideoMix | 33.3 | 49.6 | 68.0 | 77.6 |

To see the complementarity among the data augmentation methods (e.g., Cutout, Mixup, RandAug, and VideoMix), we conduct various combinations of data augmentation methods. Starting from the standard augmentation (flip and random resize), we stack up VideoMix, Mixup, Cutout, and RandAugment. Table 13 shows the results. VideoMix boosts performance against the standard augmentation, a relatively weak augmentation. Combining VideoMix with other strong augmentations (Cutout or RandAugment) degrades performance since the combination leads to an excessive amount of regularization. Mixup also shows similar tendency that combining with other augmentations leads to degraded performance.

| Standard Aug. | VideoMix | Mixup | Cutout | RandAug | top1 |

| ✓ | 75.2 | ||||

| ✓ | ✓ | 77.6 | |||

| ✓ | ✓ | ✓ | 76.3 | ||

| ✓ | ✓ | ✓ | 76.4 | ||

| ✓ | ✓ | ✓ | ✓ | 73.5 | |

| ✓ | ✓ | 77.0 | |||

| ✓ | ✓ | ✓ | 74.2 | ||

| ✓ | ✓ | ✓ | ✓ | 76.7 |

Appendix C Something-Something-V2 Action Recognition

We describe the implementation details for Something-Something-V2 Action Recognition here.

We train models on Something-Something-V2 dataset for 40 epochs with the batch size 64 and learning rate 0.01. The learning rate is decayed by a factor of 10 after 26 and 33 epochs. Other training details are the same as Kinetics (Section 4.1 of the main paper), except for the standard data augmentation.

The standard data augmentation of Kinetics experiments is that horizontal flipping, random cropping, and temporal uniform sampling. Temporal uniform sampling samples a random clip of the entire sequences with a uniform frame interval.

For Something-Something-V2, we do not use horizontal flipping augmentation since the action’s direction is critical for this dataset (e.g., there is a ‘pushing something from left to right’ action category). Also, we sample frames with temporally perturbed interval instead of temporal uniform sampling. In detail, we first split the entire frames with bins ( is the number of sampled frames), and we select a frame from each bin and aggregate frames.

For inference, we use 3 spatial crops and single temporal crop.

Appendix D THUMOS’14 Weakly Supervised Temporal Action Localization

We describe the implementation details for THUMOS’14 Weakly Supervised Temporal Action Localization (WSTAL) task.

We utilize the codebase444https://github.com/Pilhyeon/BaSNet-pytorch of [20] for I3D [2] baseline. We extract I3D [2] features from training video using this repository555https://github.com/piergiaj/pytorch-i3d. We sample video segments from a training video. Each segment has frames and the segments are not overlapped.

The input video has RGB frames and also optical flows, and they are separately fed into dual-path I3D network. Each segment of RGB and optical flow frames results in 1024-dimensional feature. Thus the dimension of extracted feature for a video (i.e., segments) is for RGB input, and for both using RGB and optical flow input (RGB+flow). We apply Temporal VideoMix along the temporal dimension (i.e., the first axis of the feature ) on the extracted feature.

To see the effectiveness of VideoMix with more stronger baseline, we conducted VideoMix with W-TALC [24] using the official pytorch codebase666https://github.com/sujoyp/wtalc-pytorch. We follow the original codebase’s setting and we apply Temporal VideoMix along the temporal dimension as in the I3D experiment.

Appendix E AVA Action Detection

Our action detector is based on the Faster R-CNN [25] architecture, which is modified as in [7] to adapt to the video action detection task. We use PySlowFast777https://github.com/facebookresearch/SlowFast and Detectron2888https://github.com/facebookresearch/detectron2 codebases. The spatial stride of the final convolutional block is reduced from 2 to 1 to increase the feature map size. We extend 2D RoIAlign layer [12] to 3D RoIAlign layer, which extracts RoI features spatially and then aggregate via global average pooling. We use the human bounding box proposals provided by [7] computed by an off-the-shelf human detector fine-tuned on AVA persons, which is a Faster RCNN with a ResNeXt-101-FPN [38] backbone. The person region proposals are detected by human detector with a confidence threshold of .

We train detectors for 20 epochs using the SGD optimizer with initial learning rate 0.1 decayed by factor 0.1 at 10 and 15 epoch. The spatial size of the input video is , and consecutive frames are extracted for training. For inference, the spatial dimension of the shorter side is resized to pixels while maintaining the aspect ratio.

Appendix F Spatio-temporal Class Activation Mapping

We describe the spatio-temporal class activation mapping (ST-CAM) which is extended from spatial CAM of the original paper [42]. We use a SlowOnly-50 () network [7] which is pretrained on Kinetics-400 [17] dataset.

To obtain a ST-CAM of a given video input, we first extract the final feature map of the SlowOnly-50 network before the global average pooling layer. The temporal dimension of the feature map is which is the same as the number of input frames. We reduce the spatial stride of the last convolution from to of the original SlowOnly-50 network, so that the spatial dimension of the feature map is to clearly see the CAM heatmap, while the original size is . The number of channels of the feature map is . Then, similar to the original paper [42], the extracted final feature map is multiplied with the fully-connected layer’s weight corresponding to the target class , resulting in -dimensional spatio-temporal class activation mapping. We sub-sampled frames of the ST-CAM in the main paper due to the limit of page width.

We present additional ST-CAM visualizations in Figure 4, 5, 6, and 7 which show the VideoMix samples and corresponding class activation maps with respect to the two action classes.