VegaEdge: Edge AI Confluence Anomaly Detection for Real-Time Highway IoT-Applications

Abstract

Vehicle anomaly detection plays a vital role in highway safety applications such as accident prevention, rapid response, traffic flow optimization, and work zone safety. With the surge of the Internet of Things (IoT) in recent years, there has arisen a pressing demand for Artificial Intelligence (AI) based anomaly detection methods designed to meet the requirements of IoT devices. Catering to this futuristic vision, we introduce a lightweight approach to vehicle anomaly detection by utilizing the power of trajectory prediction. Our proposed design identifies vehicles deviating from expected paths, indicating highway risks from different camera-viewing angles from real-world highway datasets. On top of that, we present VegaEdge – a sophisticated AI confluence designed for real-time security and surveillance applications in modern highway settings through edge-centric IoT-embedded platforms equipped with our anomaly detection approach. Extensive testing across multiple platforms and traffic scenarios showcases the versatility and effectiveness of VegaEdge. This work also presents the Carolinas Anomaly Dataset (CAD), to bridge the existing gap in datasets tailored for highway anomalies. In real-world scenarios, our anomaly detection approach achieves an AUC-ROC of 0.94, and our proposed VegaEdge design, on an embedded IoT platform, processes 738 trajectories per second in a typical highway setting. The dataset is available at https://github.com/TeCSAR-UNCC/Carolinas_Dataset#chd-anomaly-test-set.

Index Terms:

Highway safety, real-time, deep learning, IoT, embedded, edge, dataset, real-world, anomaly detection

I Introduction

In today’s digital age dominated by the Internet of Things (IoT), camera-based infrastructure has become an integral part of our interconnected world. With urbanization intensifying, our highways face increasing congestion and unpredictable driving patterns. Although current highway cameras offer surveillance, their true potential to harness real-time analytics remains largely untapped. Integrating edge-based AI frameworks with these cameras can revolutionize traffic management and safety [1]. This integration not only promises rapid detection and response to anomalies but also increases bandwidth efficiency, lowers latency, and scales highway monitoring, marking a transformative approach to road safety and management.

AI-based edge applications can detect erratic driving behaviors in real time, which can help tackle the distressing surge in accidents, especially within work zones. From 2003 to 2020, worker fatalities rose, with 135 deaths in 2019 and 117 in 2020 [2, 3]. The Federal Highway Administration’s 2021 report highlighted 106,000 work zone accidents, resulting in 42,000 injuries and 956 fatalities [4, 5]. Traditional safety mechanisms in these zones are primarily reactive [6], often leading to late interventions, whereas integrating AI at the data’s edge enables the timely decision-making that is crucial for highway safety, surveillance, and traffic analysis applications.

Anomaly detection for roadways mainly focuses on the complexities of autonomous driving [7] in urban settings, where interactions among vehicles, infrastructure, and pedestrians are intricate. Influenced by factors like intersections and diverse road alignments, urban trajectories are notably unpredictable. In contrast, highway travel, designed for longer distances, exhibits more predictable behaviors [8, 9, 10, 11].

For effective AI in highway settings, models need training on specific datasets differentiating normal from abnormal driving. Many existing datasets, however, lack resolution and relevance. Addressing this, we present the Carolinas Anomaly Dataset (CAD) with real-world highway anomalies. Moreover, real-time anomaly detection is crucial for edge-based safety applications prioritizing nimbleness. CAD emphasizes identifying vehicle trajectories that deviate from standard paths, especially those moving outside of their lanes. While many anomaly detection methods exist [12, 13, 14], they’re not highway-specific and require significant computational power. Overall, there is a clear deficiency in real-world highway anomaly datasets and corresponding algorithms, leading to a void in AI frameworks for real-time anomaly detection in practical applications.

In this context, we introduce VegaEdge, an edge AI confluence tailored for real-time highway IoT applications operating on embedded edge devices using lightweight anomaly detection. Fig. 1 shows how VegaEdge can monitor highway traffic and detect anomalies at the edge at various locations on embedded platforms. Our evaluations on real-world datasets confirm VegaEdge’s effectiveness, and its performance with real-world video detection is detailed in subsequent sections. In this paper, we also introduce a lightweight method that uses ground truth and trajectory prediction for quick anomaly detection, promoting enhanced highway safety. This method integrates with the State-of-the-Art (SotA) trajectory prediction model [15] used in VegaEdge, providing swift real-world anomaly detection.

We extensively evaluated VegaEdge across three distinct platforms, two of which are edge-based, low-power devices, underscoring its versatility. Tests were conducted to validate its robustness using real-world and simulated videos, demonstrating VegaEdge’s capability to function with digital twins and across varying traffic densities. Furthermore, we examined its performance specifically for highway work zone safety, analyzing the impact of diverse prediction windows on the buffer times afforded to workers during potential hazards. The efficacy of our anomaly detection is showcased through evaluations on both adversarial and real-world datasets. These tests underscore the pronounced differences between adversarially generated trajectories and real-world scenarios, emphasizing the importance of employing real-world videos when evaluating systems intended for deployment. We also perform extensive power analysis on an embedded platform to provide insights into the power consumption of VegaEdge in different power modes, which can be selected based on the desired application.

The main contributions of this paper are summarized as follows:

• We introduce the Carolinas Anomaly Dataset (CAD), a new real-world anomaly dataset for highway applications. This dataset empowers researchers to validate anomaly detection techniques within genuine highway contexts.

• We present a novel anomaly detection technique that foresees anomalous driving behaviors from the predicted trajectories by extrapolating angle-based and displacement errors. Its effectiveness is demonstrated with adversarial and real-world trajectories on select datasets.

• We introduce VegaEdge, a cutting-edge AI-powered IoT solution for vehicle anomaly detection designed for edge-based embedded systems. It is adept at identifying vehicles that diverge from their anticipated route, indicating possible hazardous intrusions on highways in real time.

• We subject VegaEdge and the proposed anomaly detection techniques to exhaustive evaluations across multiple platforms and scenarios. The results showcase its adaptability and superior performance in real-world and simulated environments. We also demonstrate its effectiveness, emphasizing its application in work zone safety.

II Related Works

Efforts have been made to adapt anomaly and vision models for IoT devices. [16] presents an IoT-focused video surveillance system, primarily analyzing human-related events. [17] explores vision model applications in IoT, while [18] investigates anomaly detection in time series data for domains like smart cities. Recently, there’s been increased focus on highway safety. [19] introduces a trajectory prediction framework for dense traffic, utilizing LSTMs and CNNs. [20] uses road geometry for vehicle counting, speed estimation, and classification. [21] suggests a real-time flow estimation system based on pairwise scoring for vehicle counting. MultEYE [22] is an aerial viewpoint vehicle tracking system, leveraging segmentation for detection accuracy in edge devices and IoT applications. Nevertheless, a notable gap persists within AI-based solutions for highway applications, primarily due to the limited availability of real-world datasets and dedicated frameworks tailored specifically to highway-based edge applications with real-time processing capabilities.

Anomaly detection in vehicle frameworks has been explored in various studies. [12] proposes an IoT system detecting abnormal driving using semantic analysis, vehicle detection, and 5G communication. [23] employs re-identification and multi-camera tracking with Gaussian Mixture Models (GMMs) to analyze vehicles. Anomalies are identified based on foreground-background changes. [13] offers a tracking algorithm for anomaly detection in road scenes. [24] enhances vehicle anomaly detection accuracy by integrating road geometry with movement predictions. [14] presents a multi-granularity design combining various tracking levels for vehicle anomalies. Using clustering, [25] introduces a probabilistic framework for anomaly detection via vehicle trajectories.

Several studies, such as DSAB [7], focus on the vehicle anomaly detection problem individually. DSAB reconstructs vehicle social graphs using the Recurrent Graph Attention Network. [26] employs Graph Convolutional Networks (GCNs) with a contrastive encoder for feature extraction, with the features later used in an SVM classifier. They also explore unsupervised methods using an Adversarial Autoencoder.

While there’s a scarcity of comprehensive vehicle datasets in highway safety due to data gathering challenges, AI City Challenge offers a benchmark [27, 28]. Still, its alignment with highway safety is limited. The Carolinas Highway Dataset (CHD) [15] provides videos from multiple viewpoints, ideal for highway safety. Given the rarity of anomalous driving behaviors, [29] suggests an adversarial framework to generate anomalies on existing datasets. Recent studies like [26] are utilizing this approach for more exhaustive anomaly detection evaluations. This lack of resources and the reliance on adversarial approaches underscore the urgency of developing and advancing real-world datasets and AI-based IoT-edge solutions that are capable of handling the unique challenges and anomalies of highway safety and surveillance.

III CAD: Carolinas Anomaly Dataset

Building upon discussions from prior sections, the absence of dedicated highway-based trajectory anomaly datasets presents a challenge in validating our anomaly detection methodologies. To circumvent this limitation, we adopt the recent advancements in adversarial anomaly generation as a testing bed for our proposed approach. Zhang et al. [29] introduce an adversarial attack-based technique designed to craft realistic anomalous trajectories by perturbing standard trajectories within a dataset.

While adversarial approaches have made significant strides in improving the fidelity of generated results, they still fall short of perfectly mirroring real-world scenarios. Although the disparity between machine-generated anomalies and actual real-world anomalies has diminished, it has not been completely eradicated. In light of the challenges inherent in evaluating highway anomalies and the existing gap in relevant datasets, we present the “Carolinas Anomaly Dataset (CAD)”. This dataset, derived from the Carolinas Highway Dataset (CHD) [15], encompasses 22 videos, each exhibiting at least one anomalous driving trajectory. These videos are captured from two distinct vantage points, high-angle and eye-level, offering a versatile tool for surveillance and road safety applications. Specifically, CAD is composed of one-minute video segments, evenly split between the two perspectives, showcasing a variety of anomalous behaviors.

In this context, anomalous behavior pertains to the atypical movement patterns exhibited by vehicles, including actions such as vehicles deviating from their designated lanes on the highway, abruptly halting in front of the camera’s view, or approaching the camera while diverging from their designated lane. These unusual and non-standard behaviors have the potential to pose significant risks to nearby structures, infrastructure, and, most critically, to the safety of workers, particularly within the dynamic and often high-speed environment of highway work zones. Designed to enable the evaluation of various anomaly detection methodologies, CAD serves as an invaluable resource for researchers focused on innovating highway safety through anomaly detection algorithms.

IV Anomaly detection Methodology

In this section, we present our methods for anomaly detection using predicted trajectories. For anomaly detection, the trajectory prediction output is used to evaluate the error and angle-based approaches. The goal was to evaluate both methodologies for detecting anomalous behavior in trajectory and video datasets. Through this methodology, we allow the detection of unusual vehicle behaviors, such as sudden lane changes, erratic driving, or potential security threats in desired applications with minimum computation.

IV-A ADE-based Anomaly Detection

Average Displacement Error (ADE) based Anomaly Detection computes the average error of the predicted trajectory, which measures the accuracy of trajectory predictions for vehicles, and compares it against a threshold ($\tau_{ADE}$) chosen per application. It computes the average Euclidean distance between predicted trajectories ($\hat{Y}$) and actual trajectories ($Y$) over all predicted time steps ($T$) and subjects ($N$) in a scene. The ADE of the predicted trajectory over the last $T$ time steps is compared with the desired ADE threshold, as:

$$\frac{1}{N \cdot T} \sum_{i=1}^{N} \sum_{t=1}^{T} \left\lVert \hat{Y}_t^i - Y_t^i \right\rVert_2 > \tau_{ADE} \tag{1}$$

where $t$ is time and $i \in \{1, \dots, N\}$ represents the index of the vehicle. Setting a threshold establishes a criterion for identifying anomalous trajectories. A value exceeding the threshold indicates a significant disparity between the predicted and ground truth trajectories, resulting from unexpected driving behavior, going off the lane, etc. As PishguVe is designed to predict the normal trajectory of the ego vehicle, any vehicle whose ADE exceeds the threshold is marked as an anomaly.
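The ADE check described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function names, the list-of-points trajectory format, and the example threshold are assumptions introduced here.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def average_displacement_error(
    predicted: Sequence[Sequence[Point]],
    actual: Sequence[Sequence[Point]],
) -> float:
    """Mean Euclidean distance over all vehicles and all time steps."""
    total, count = 0.0, 0
    for pred_traj, true_traj in zip(predicted, actual):
        for (px, py), (tx, ty) in zip(pred_traj, true_traj):
            total += math.hypot(px - tx, py - ty)
            count += 1
    if count == 0:
        raise ValueError("no trajectory points to compare")
    return total / count


def is_ade_anomaly(
    pred_traj: Sequence[Point],
    true_traj: Sequence[Point],
    threshold: float,
) -> bool:
    """Flag one vehicle when its ADE exceeds the threshold."""
    return average_displacement_error([pred_traj], [true_traj]) > threshold


# Hypothetical data: the prediction stays in lane, the vehicle drifts sideways.
predicted_path = [(1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
drifting_path = [(1.0, 0.0), (2.0, 3.0), (3.0, 4.0)]
steady_path = [(1.0, 0.0), (2.0, 0.5), (3.0, 0.5)]
```

With an illustrative threshold of 2.0 (in whatever unit the coordinates use), `is_ade_anomaly(predicted_path, drifting_path, 2.0)` flags the drifting vehicle, while the steady one stays below the threshold.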

IV-B Angle-based Anomaly Detection

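Angle-based Anomaly Detection calculates the angle between the predicted future trajectory vector, $\vec{v}_p$, and the actual trajectory vector, $\vec{v}_a$, of the ego vehicle and compares it with a threshold ($\tau_\theta$) according to the application. Given the x and y coordinates of the predicted position $(\hat{x}_t, \hat{y}_t)$ and the actual position $(x_t, y_t)$ for the past few seconds $t$, we can compute the direction vectors:

$$\vec{v}_p = (\hat{x}_t - x_0,\ \hat{y}_t - y_0), \qquad \vec{v}_a = (x_t - x_0,\ y_t - y_0) \tag{2}$$

Here, $x_0$ and $y_0$ are the position of the vehicle one frame before the start of prediction. The angle $\theta$ between the direction vectors $\vec{v}_p$ and $\vec{v}_a$ is compared with the threshold, as:

$$\theta = \arccos\left( \frac{\vec{v}_p \cdot \vec{v}_a}{\lVert \vec{v}_p \rVert \, \lVert \vec{v}_a \rVert} \right) > \tau_\theta \tag{3}$$

where $\vec{v}_p \cdot \vec{v}_a$ is the dot product of $\vec{v}_p$ and $\vec{v}_a$, and $\lVert \vec{v}_p \rVert$ and $\lVert \vec{v}_a \rVert$ are their respective magnitudes.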
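A small Python sketch of the angle test follows. It assumes both direction vectors start at the vehicle position one frame before prediction begins and end at the last predicted and last observed positions. The function names, the use of degrees, and the zero-length guard are illustrative choices, not details from the paper.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def direction_vector(origin: Point, end: Point) -> Point:
    """Vector pointing from the pre-prediction position to an end position."""
    return (end[0] - origin[0], end[1] - origin[1])


def angle_between_deg(u: Point, v: Point) -> float:
    """Angle between two 2D vectors in degrees, via the dot product."""
    norm_u, norm_v = math.hypot(*u), math.hypot(*v)
    if norm_u == 0.0 or norm_v == 0.0:
        # A stopped vehicle yields a zero-length vector; the angle is undefined.
        raise ValueError("zero-length direction vector")
    cos_theta = (u[0] * v[0] + u[1] * v[1]) / (norm_u * norm_v)
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against rounding drift
    return math.degrees(math.acos(cos_theta))


def is_angle_anomaly(
    origin: Point, predicted_end: Point, actual_end: Point, threshold_deg: float
) -> bool:
    """Flag the vehicle when predicted and actual headings diverge too far."""
    predicted_vec = direction_vector(origin, predicted_end)
    actual_vec = direction_vector(origin, actual_end)
    return angle_between_deg(predicted_vec, actual_vec) > threshold_deg
```

For example, a prediction ending at (10, 0) and an observation ending at (10, 10), both measured from (0, 0), differ by 45 degrees, so any threshold below that flags the vehicle. A stopped vehicle would need separate handling, since its direction vector has zero length.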

V VegaEdge Design

VegaEdge is an integration of high-performing AI models to empower IoT-embedded edge devices. Specifically designed to enhance real-time safety and surveillance on highways, its core capabilities encompass vehicle detection, tracking, and trajectory prediction, all converging toward the final goal of anomaly detection. Fig. 2 provides a step-by-step visual representation, illustrating how the entire VegaEdge system operates in unison. The high-level pseudocode of VegaEdge is also shown in Algorithm 1.

As shown in Fig. 2 at a high level, VegaEdge detects vehicles within an image and subsequently tracks them across consecutive frames on a frame-by-frame basis. Following this, the system filters and accumulates the trajectories of distinct vehicles identified in the prior phase. Lastly, leveraging the gathered vehicle data from the past 3 seconds, it projects the trajectories for the upcoming 5 seconds, utilizing this foresight for effective anomaly detection. In the following subsections, we discuss our design choices and the working of each step shown in Fig. 2.
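The accumulation step can be pictured as a per-vehicle sliding window that becomes eligible for prediction once it holds 3 seconds of history. The sketch below is an assumption-laden illustration: the class name, the track-ID keys, and the sampling rate `FPS` are hypothetical, since the text does not state the rate at which positions are sampled.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, Tuple

Point = Tuple[float, float]

FPS = 5  # assumed sampling rate; not specified in the text
HISTORY_SECONDS = 3  # observation window described above
HISTORY_LEN = FPS * HISTORY_SECONDS


class TrajectoryBuffer:
    """Keeps the most recent positions for every tracked vehicle."""

    def __init__(self, history_len: int = HISTORY_LEN) -> None:
        self.history_len = history_len
        self._tracks: Dict[int, Deque[Point]] = defaultdict(
            lambda: deque(maxlen=history_len)
        )

    def update(self, track_id: int, center: Point) -> None:
        """Append the latest bounding-box center; the oldest point drops out."""
        self._tracks[track_id].append(center)

    def ready(self) -> Dict[int, List[Point]]:
        """Return only the tracks that hold a full observation window."""
        return {
            track_id: list(points)
            for track_id, points in self._tracks.items()
            if len(points) == self.history_len
        }
```

Each tracker update would call `update`, and the dictionary returned by `ready` would be passed to the trajectory predictor. Using a bounded `deque` keeps memory constant per vehicle, which suits the embedded setting the section describes.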

V-A Vehicle Detection

For efficient and rapid vehicle detection on edge-integrated IoT devices, we opt for the YOLOv8l [30] model and train it on the BDD100k vehicle dataset [31]. Our decision was driven by overall system considerations such as latency, accuracy, and memory requirements.

Model size and performance are critical in edge deployments, particularly for IoT devices. YOLOv8l addresses this by being 35.9% smaller than YOLOv8x [30], making it well suited to resource-limited embedded IoT devices. Despite its reduced size, its mean Average Precision (mAP) is a competitive 52.9%, only one percentage point below the 53.9% of YOLOv8x.

V-B Vehicle Tracking

Multi-object tracking (MOT) is fundamentally concerned with the identities of objects within video sequences. ByteTrack [32] uses an innovative association method that considers every detection box. Detection boxes with lower scores are processed by comparing their similarities with existing tracklets to accurately identify true objects while filtering out unwanted detections.

Within this context, VegaEdge employs the ByteTrack algorithm, renowned for its efficient and robust tracking capabilities. ByteTrack’s architecture uses deep association techniques, ensuring consistent tracking across frames, even under challenges like occlusions and complex interactions. Its performance is shown in Table I on datasets like BDD100K and MOT20. Furthermore, ByteTrack boasts an impressive running speed without compromising on accuracy, making it an ideal choice for real-time applications such as VegaEdge. As discussed in [32], ByteTrack achieves 80.3 MOTA, 77.3 IDF1, and 63.1 HOTA on the MOT17 test set, all while maintaining a 30 FPS running speed.

V-C Data Conditioning

The output from detection and tracking is rigorously cleaned to ensure precise input, as the quality of the input directly dictates the accuracy of trajectory forecasts in the next step. To obtain precise vehicular trajectories, we’ve performed targeted data filtration and smoothing:

1. Class-specific Inclusion: To eliminate potential noise from extraneous vehicular types, we selectively retain only cars, buses, and trucks.

2. Unidirectional Movement: To focus on the flow of incoming vehicles, we exclude the vehicles operating in non-targeted directions, thereby standardizing the directional flow and reducing complexity.

3. Temporal Presence Validation: Vehicles with transient appearances can introduce data anomalies. This validation process sets a minimum frame threshold, below which vehicular entries are deemed non-contributory and are subsequently removed.

4. Trajectory Continuity: Despite thorough validation in previous steps, some trajectories may have missing frames. We fill such gaps through interpolation techniques, ensuring continuous and complete trajectories.

In summary, the data cleansing and validation processes outlined above are crucial in ensuring the integrity and precision of vehicular trajectories used by VegaEdge’s downstream tasks. By emphasizing class-specific inclusion, standardizing directional flow, validating temporal presence, and ensuring trajectory continuity, we lay the foundation for subsequent trajectory forecasts. This approach mitigates potential inaccuracies and fortifies our framework’s reliability, positioning it to deliver consistent and high-quality results in real-world applications for which VegaEdge will be used.
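The four conditioning steps can be sketched as a small filtering pipeline. Everything specific here is an assumption made for illustration: the class label strings, the `MIN_FRAMES` value, the convention that incoming vehicles move toward larger image y, and the choice of linear interpolation (the text says only "interpolation techniques").

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Track = Dict[int, Point]  # frame index -> bounding-box center

ALLOWED_CLASSES = {"car", "bus", "truck"}  # step 1: class-specific inclusion
MIN_FRAMES = 3  # step 3: hypothetical minimum number of observed frames


def is_incoming(track: Track) -> bool:
    """Step 2: keep vehicles moving toward larger image y (assumed convention)."""
    frames = sorted(track)
    return track[frames[-1]][1] > track[frames[0]][1]


def fill_gaps(track: Track) -> List[Point]:
    """Step 4: linearly interpolate positions for missing frame indices."""
    frames = sorted(track)
    filled: List[Point] = []
    for start, end in zip(frames, frames[1:]):
        (x0, y0), (x1, y1) = track[start], track[end]
        span = end - start
        for step in range(span):
            weight = step / span
            filled.append((x0 + weight * (x1 - x0), y0 + weight * (y1 - y0)))
    filled.append(track[frames[-1]])
    return filled


def condition(tracks: Dict[int, Tuple[str, Track]]) -> Dict[int, List[Point]]:
    """Apply all four steps and return gap-free trajectories per track ID."""
    cleaned: Dict[int, List[Point]] = {}
    for track_id, (vehicle_class, track) in tracks.items():
        if vehicle_class not in ALLOWED_CLASSES:
            continue
        if len(track) < MIN_FRAMES:
            continue
        if not is_incoming(track):
            continue
        cleaned[track_id] = fill_gaps(track)
    return cleaned
```

A track observed at frames 0, 1, and 3 comes back with four points, the third being the midpoint between the observations at frames 1 and 3. The cheap filters run before interpolation so that no work is spent on tracks that will be discarded.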

V-D Trajectory Prediction

We focus on highway-centric performance in the framework of VegaEdge’s IoT applications. To meet this need, VegaEdge integrates PishguVe [15], a trajectory prediction algorithm that is SotA on highway datasets, ensuring fast and accurate results without straining the device.

PishguVe was selected for its ability to make predictions at the pixel level, as shown in Table I, its proven track record of state-of-the-art accuracy on multiple datasets [33, 34, 15], and its efficient memory footprint. In Table I, CHD-HA and CHD-EL denote the CHD High-Angle and CHD Eye-Level trajectories from the CHD dataset, respectively. Built on a fusion of graph isomorphism, convolutional neural networks, and attention mechanisms, PishguVe [15] can forecast future vehicle positions with a model size of only 133K parameters. The input to PishguVe is the past trajectories of vehicles, represented by a set of absolute coordinates, denoted as $A$, and a set of relative coordinates, denoted as $R$. The absolute coordinates are defined as $A^i = \{(x_t^i, y_t^i)\}$, where $i \in \{1, \dots, N\}$ represents the index of the vehicle, and $x_t^i$ and $y_t^i$ are the x and y coordinates of the center of the bounding box of vehicle $i$ at time $t$ of the past trajectory, denoted by $t \in T_{in}$. The predicted future trajectories are shown as $\hat{Y}^i = \{(\hat{x}_t^i, \hat{y}_t^i)\}$, where $t \in T_{out}$ denotes the future trajectory, and are generated as a set of coordinates for each vehicle in the image.

V-E Prediction-based Anomaly Detection

VegaEdge uses the trajectory prediction-based anomaly detection approach discussed in section IV of the paper, utilizing ADE and angle-based anomaly detection techniques. These methods offer a straightforward and efficient approach to detecting anomalies. This streamlined process makes our proposed method well-suited for integration within VegaEdge’s IoT-based framework, which operates on hardware and time-constrained embedded IoT platforms. This efficiency allows for quick anomaly detection, enhancing the overall performance and responsiveness of the system. The performance of both approaches on two different datasets is demonstrated in the upcoming section.

| Task | Method | Performance | Dataset |
|---|---|---|---|
| Object Detection | YOLOv8l [30] | 52.9 (mAP) | COCO [35] |
| | | 57.14 (mAP50) | BDD100K [36] |
| | | 34.50 (mAP50-95) | BDD100K [36] |
| Tracking | ByteTrack [32] | 77.8 (MOTA) | MOT20 [37] |
| Path Prediction | PishguVe [15] | 20.75 (Pixels/ADE) | CHD-EL [15] |
| | | 16.81 (Pixels/ADE) | CHD-HA [15] |
| | | 0.77 (m/ADE) | NGSIM [33, 34] |

V-F VegaEdge Performance Benchmarking

VegaEdge’s performance was evaluated across multiple platforms to understand its versatility and efficiency. Our testing platforms comprised a server with an Intel Xeon Silver 4114 CPU, Nvidia’s V100 GPU, and Nvidia’s Jetson Orin and Xavier NX boards. We chose the server setup as a reference point to contrast the performance metrics of the Jetson boards. These Jetson boards are designed for real-world tasks and are notable for their power efficiency, with Orin operating at 50W and Xavier NX at just 20W. Their efficiency and AI capabilities position them as ideal candidates for a wide range of IoT applications requiring edge computing.

Transitioning from the hardware evaluation, Table I shows the performance metrics of three algorithms VegaEdge utilizes for crafting its workflow, as shown in Algorithm 1 and Figure 2. In the domain of Object Detection, YOLOv8l achieves an mAP of 52.9 on COCO and 57.14 at mAP50 on BDD100K. It further scored 34.50 at mAP50-95. The Tracking algorithm, ByteTrack performs at a MOTA of 77.8 on the MOT20 dataset. Lastly, in trajectory prediction, the PishguVe algorithm was assessed on three distinctive datasets. It registered a Pixels/ADE of 20.75 and 16.81 on CHD-EL and CHD-HA, respectively, and a commendable m/ADE of 0.77 on NGSIM.

VI Results

VI-A Anomaly detection on NGSIM Dataset:

In this section, we evaluate our anomaly detection methodology on adversarial trajectories. These trajectories are derived using the NGSIM dataset with bird’s eye camera-view of the trajectories, following the adversarial attack approach [29], adopted by [26]. Our evaluation encompasses both the ADE and angle-based anomaly detection techniques. Our tests include both adversarial generated trajectories [29] and actual real-world data from the NGSIM test dataset, similar to the study in [38].

| Time-step (s) | EER (ADE-based) | EER (Angle-based) |
|---|---|---|
| 0.2 | 0.16 | 0.02 |
| 0.4 | 0.17 | 0.05 |
| 0.6 | 0.19 | 0.10 |
| 0.8 | 0.19 | 0.15 |
| 1.0 | 0.20 | 0.19 |
| 2.0 | 0.22 | 0.38 |
| 3.0 | 0.24 | 0.56 |
| 4.0 | 0.25 | 0.69 |
| 5.0 | 0.26 | 0.77 |

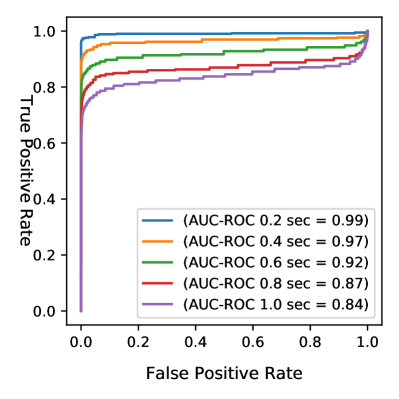

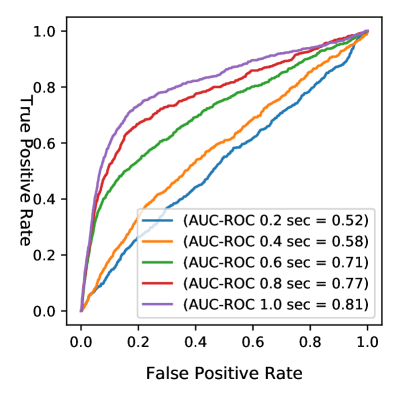

The study of the ADE-based anomaly detection method revealed a distinct pattern in the Area Under the Receiver Operating Characteristic curve (AUC-ROC). The ADE-based method performed best with an ADE window of 0.2s, reaching an AUC-ROC of 0.91, as visualized in Figs. 3 and 3a. The angle-based approach initially outperformed the ADE-based method for short prediction windows, as seen in Fig. 4a, but its efficacy declined with larger windows, as is evident in Fig. 4b. Such behavior in the angle-based approach may stem from how the anomalies are generated: they are produced by applying constrained perturbations to real-world trajectories, which makes them harder to discern over more extended prediction periods.
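For readers unfamiliar with the metric, AUC-ROC can be read as the probability that a randomly chosen anomalous trajectory receives a higher anomaly score than a randomly chosen normal one. The sketch below computes it directly from that pairwise definition. The function name and the toy scores are illustrative assumptions, and the quadratic loop is only suitable for small evaluation sets.

```python
from typing import Sequence


def auc_roc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUC-ROC from pairwise comparisons; label 1 = anomaly, 0 = normal."""
    positives = [s for s, label in zip(scores, labels) if label == 1]
    negatives = [s for s, label in zip(scores, labels) if label == 0]
    if not positives or not negatives:
        raise ValueError("need at least one anomaly and one normal sample")
    wins = 0.0
    for pos in positives:
        for neg in negatives:
            if pos > neg:
                wins += 1.0
            elif pos == neg:
                wins += 0.5  # ties count as half a correct ranking
    return wins / (len(positives) * len(negatives))


# Hypothetical anomaly scores (e.g. per-vehicle ADE or angle) and ground truth.
example_scores = [0.1, 0.4, 0.35, 0.8]
example_labels = [0, 0, 1, 1]
```

Here three of the four anomaly/normal pairs are ranked correctly, so `auc_roc(example_scores, example_labels)` evaluates to 0.75.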

The Equal Error Rate (EER) plot in Fig. 5 shows the EER value obtained by plotting the False Negative Rate and False Positive Rate for the ADE and Angle anomaly methods for 1 second of predicted trajectory. Table II presents the EER for the two anomaly detection methods on NGSIM adversarial trajectories across different time-step thresholds. The Angle method outperforms the ADE approach at shorter thresholds, such as 0.2s and 0.4s (0.02 vs. 0.16 and 0.05 vs. 0.17). As the time threshold grows, their performance converges at around 1.0s (0.19 vs. 0.20), after which the Angle method falls behind. By 5.0 seconds, the EERs are 0.26 for ADE and 0.77 for the Angle method, indicating a faster performance drop for the Angle approach over extended time steps.
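The EER is the operating point at which the False Positive Rate equals the False Negative Rate. A minimal way to estimate it is to sweep the decision threshold over the observed scores and take the point where the two rates are closest. The function name, the threshold sweep, and the averaging of the two rates at the closest point are assumptions of this sketch, not the paper's evaluation code.

```python
from typing import Sequence


def equal_error_rate(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Estimate EER; a sample is flagged when its score >= threshold."""
    positives = [s for s, label in zip(scores, labels) if label == 1]
    negatives = [s for s, label in zip(scores, labels) if label == 0]
    if not positives or not negatives:
        raise ValueError("need at least one anomaly and one normal sample")
    best_gap = float("inf")
    eer = 1.0
    for threshold in sorted(set(scores)):
        # Anomalies scoring below the threshold are missed (false negatives).
        fnr = sum(s < threshold for s in positives) / len(positives)
        # Normal samples at or above the threshold are false alarms.
        fpr = sum(s >= threshold for s in negatives) / len(negatives)
        gap = abs(fnr - fpr)
        if gap < best_gap:
            best_gap = gap
            eer = (fnr + fpr) / 2.0
    return eer
```

With scores `[0.1, 0.4, 0.35, 0.8]` and labels `[0, 0, 1, 1]`, the two rates meet at a threshold of 0.4, giving an EER of 0.5. Perfectly separated scores give an EER of 0.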

VI-B Anomaly detection on CAD

In this section, we evaluate our anomaly detection approach on real-world trajectories sourced from CAD, consisting of high-angle (CHD-HA) and eye-level (CHD-EL) camera views of highway vehicles, as introduced in section III. To offer a comprehensive view of the results, the AUC-ROC values for both fine- and coarse-grained time steps are graphically represented in Fig. 6 for the ADE-based anomaly detection method and in Fig. 7 for the angle-based method.

Expanding on the earlier analysis, Table III provides a breakdown of the Equal Error Rate (EER) performance for various time thresholds, contrasting the ADE and angle-based anomaly detection methods on CAD’s data. The Angle approach outperforms the ADE method at every examined time step. Starting from an EER of 0.48 at the 0.2s mark, the angle method improves steadily, reaching an EER of 0.12 at the 5s threshold. In contrast, the ADE-based method starts with an EER of 0.58 at 0.2s, improves to 0.30 at 3s and 4s, and ends at 0.32 at 5s. These metrics show the superior efficacy of the angle-based approach, especially as the prediction windows lengthen, making it a more robust choice for anomaly detection in the context of CAD.

VI-C Real-world vs Adversarial Anomaly Trajectories

Intriguingly, the real-world trajectories demonstrate an inverse trend compared to the results of the adversarial anomaly dataset. Specifically, the AUC-ROC values for the ADE anomaly detection method peak at the 4- and 5-second prediction windows, reaching an AUC-ROC of 0.80 for the 4s window, as shown in Fig. 6b. This suggests a higher sensitivity of the ADE method to longer prediction windows when applied to real-world data. Similarly, in Fig. 7b the angle-based method exhibits stellar performance with an AUC-ROC of 0.94 for the 5s window. Such observations indicate that while synthetic or constrained trajectory datasets may favor short prediction windows, real-world trajectories might inherently contain more distinguishable anomalies in longer prediction intervals.

Comparing the EER values from the real-world CAD trajectories (Table III) with those from the adversarial NGSIM trajectories (Table II), several striking differences emerge. The NGSIM adversarial trajectories exhibit substantially lower EER values in the initial time steps, especially for the angle-based approach. For example, at the 0.2-second mark, the angle-based method records an EER of 0.02 on the NGSIM data, compared with 0.48 on the CAD dataset.

| Time-step (s) | EER (ADE-based) | EER (Angle-based) |
|---|---|---|
| 0.2 | 0.58 | 0.48 |
| 0.4 | 0.55 | 0.43 |
| 0.6 | 0.50 | 0.35 |
| 0.8 | 0.46 | 0.28 |
| 1.0 | 0.41 | 0.24 |
| 2.0 | 0.34 | 0.22 |
| 3.0 | 0.30 | 0.17 |
| 4.0 | 0.30 | 0.14 |
| 5.0 | 0.32 | 0.12 |

A potential reason for this marked divergence might be the inherent nature of the datasets. The NGSIM adversarial trajectories, being synthetically generated, likely present more pronounced and discernible anomalies that the detection methods can more readily identify, especially within shorter prediction windows. In contrast, the CAD real-world trajectories, a genuine reflection of real-world driving behaviors, might be embedded with subtler and more intricate anomalies. These nuances could pose more significant challenges in detection, resulting in higher EER values, especially in the shorter time-step intervals. Moreover, the complexities and variances found in real-world driving behaviors could introduce a wider array of anomalies, making distinguishing between normal and anomalous patterns more intricate for the detection methods when applied to the CAD.

VI-D VegaEdge Performance

In our evaluation, we primarily focus on the performance of VegaEdge on the Jetson platforms. With its superior computational capabilities, the server platform serves as a benchmarking reference to underscore the efficiency and feasibility of deploying VegaEdge in more constrained environments.

VI-D1 Performance on Jetson Platforms

Table IV delineates the throughput of VegaEdge across different road scenarios and traffic densities. On Jetson platforms, VegaEdge’s performance showcases its adaptability and efficiency, particularly given the embedded nature of these devices.

For the 3-lane, high-traffic-density scenario, the Jetson Orin processes 758 trajectories every second in a scene with 140 unique vehicles detected per second and intricate merging behavior. This throughput provides the real-time processing capabilities essential for many applications. Meanwhile, the Xavier NX, while trailing at 243 trajectories per second, still offers usable throughput for less time-sensitive tasks or preliminary data-gathering efforts.

The 2 lanes with workzone with low traffic density scenario provides a different challenge, simulating a common urban environment with traffic modulations due to work zones. In this context, the Jetson Orin delivers a performance of 132 trajectories per second, making it ideal for surveillance and alert systems in smart city setups. This reduced throughput is attributed to the fewer vehicles present in the scene, not the capability constraints. Meanwhile, the Xavier NX offers 47 trajectories per second, which may be apt for tasks requiring less frequent monitoring.

VI-D2 Digital Twin Systems

Another aspect of our evaluation is the 2 lanes (simulated video) (13) scenario. The Jetson Orin’s capability to process 92 trajectories per second in a simulated environment underscores its potential utility in digital twin systems. Digital twin systems, which mirror and simulate real-world environments, are important in predictive maintenance, system optimization, and various simulation-driven tasks. The ability of VegaEdge to run efficiently on simulated data on the Jetson Orin emphasizes its versatility and readiness for such advanced applications.

| Road Type (Traffic Density) | Throughput: Server | Throughput: Jetson Orin | Throughput: Xavier NX |
|---|---|---|---|
| 3 lanes and merger (140) | 1770 | 758 | 243 |
| 2 lanes with workzone (18) | 2868 | 132 | 47 |
| 2 lanes (simulated video) (13) | 1050 | 92 | 31 |

| Error Detection Window (s) | Buffer Time (s) | Distance (m) |
|---|---|---|
| 0.2 | 8.8 | 235 |
| 1.0 | 8 | 213 |
| 3.0 | 6 | 160 |
| 5.0 | 4 | 107 |

VI-E Highway Workzone Safety Application

In highway work zones, safeguarding workers from oncoming vehicles is a primary concern. Through trajectory prediction combined with anomaly detection, VegaEdge detects trajectories that may pose threats and alerts workers. To achieve this objective, the proposed design must demonstrate real-time performance on edge devices. The efficacy of VegaEdge, particularly when implemented on Jetson Orin, is exemplified in Table V.

Given an error detection window of 0.2 s, VegaEdge provides a buffer time of 8.8 s, corresponding to a distance of 235 m from the camera for a vehicle moving at 60 mph. As the detection window widens, the buffer shrinks but remains substantial: windows of 1 s, 3 s, and 5 s yield buffer times of 8 s, 6 s, and 4 s, respectively. A clear trade-off emerges: shorter detection windows leave more buffer time, while longer windows give higher accuracy, as noted in the anomaly detection results for CAD in Section VI-B.
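The relation between buffer time and distance in Table V is simply distance = speed × time. The sketch below works this out for a vehicle at 60 mph; it is an illustration under stated assumptions, not the authors' code. The function `buffer_distance_m` and the constant `BUDGET_S` are hypothetical names, and treating the 9 s sum of window and buffer as a fixed budget is an inference from the tabulated rows, not something the text states.

```python
# Exact conversion: one international mile is 1609.344 m, one hour is 3600 s.
MPH_TO_MPS = 1609.344 / 3600.0

# (error detection window in s, buffer time in s), copied from Table V.
ROWS = [(0.2, 8.8), (1.0, 8.0), (3.0, 6.0), (5.0, 4.0)]

# Assumption: in every tabulated row, window + buffer equals 9 s.
BUDGET_S = 9.0


def buffer_distance_m(buffer_time_s, speed_mph=60.0):
    """Distance in metres covered at constant speed during the buffer time."""
    return speed_mph * MPH_TO_MPS * buffer_time_s


if __name__ == "__main__":
    for window_s, buffer_s in ROWS:
        # Check the inferred relation buffer = 9 s - window for this row.
        assert abs((BUDGET_S - window_s) - buffer_s) < 1e-9
        print(f"window {window_s} s, buffer {buffer_s} s, "
              f"distance {buffer_distance_m(buffer_s):.1f} m")
```

With the exact conversion the distances come out at about 236 m, 215 m, 161 m, and 107 m, each within 1% of the tabulated values; the small gap is presumably due to rounding of the speed.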

In hazardous highway work zones, these buffer times are beneficial and vital. Even minor increments in warning time can significantly alter outcomes. VegaEdge’s ability to grant these buffers, particularly on Jetson platforms, underscores its practicality in real-world scenarios and its role in bolstering worker safety.

| Power Mode | Total Power (W) | GPU Power (W) | CPU Power (W) | Throughput (trajectories/s) |
|---|---|---|---|---|
| MAXN | 18.14 | 3.66 | 8.80 | 758 |
| 30W | 11.44 | 3.09 | 3.72 | 477 |
| 15W | 8.43 | 2.82 | 1.3 | 214 |

VI-F VegaEdge Power Analysis on IoT Platform

In this subsection, we conduct a comprehensive power consumption analysis of the Nvidia Jetson Orin platform with the primary objective of assessing the practical utility and performance of VegaEdge across various power modes. We report the total power consumed across all the channels ($P_{\text{total}}$), calculated using the following equation:

$$P_{\text{total}} = P_{\text{GPU+SOC}} + P_{\text{CPU+CV}} + P_{\text{SYS5V}} + P_{\text{AO}} \tag{4}$$

where $P_{\text{GPU+SOC}}$ is the total power consumed by the GPU and SOC cores, $P_{\text{CPU+CV}}$ is the power consumed by the CPU and CV cores, and $P_{\text{SYS5V}}$ is the power consumed by the system’s 5V rail for various input and output ports, all three measured on one of the power monitors [39]. $P_{\text{AO}}$ stands for the power consumed by the Always On (AO) power rail, measured on another power monitor [39].

In Table VI, VegaEdge’s power consumption and throughput on the Jetson Orin are evaluated across different power modes for a real-world highway scenario. At the MAXN (40W) setting, the system processes 758 trajectories per second while consuming 18.14W. Reducing the power mode to 30W and 15W lowers throughput to 477 and 214 trajectories per second, with power consumption falling to 11.44W and 8.43W, respectively. Although its power demand is higher than that of typical IoT devices, VegaEdge on the Jetson Orin offers a useful trade-off between power consumption and high-throughput processing, making it a viable solution for edge applications requiring rapid data processing.
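One way to make the trade-off in Table VI concrete is to divide throughput by total power, which gives trajectories processed per joule. The sketch below is a worked calculation on the tabulated numbers only; `MODES` and `trajectories_per_joule` are hypothetical names introduced for illustration, and the metric itself is not one the paper reports.

```python
# (power mode, total power in W, throughput in trajectories/s), from Table VI.
MODES = [("MAXN", 18.14, 758), ("30W", 11.44, 477), ("15W", 8.43, 214)]


def trajectories_per_joule(total_power_w, throughput_tps):
    """Energy efficiency: (trajectories/s) / (J/s) = trajectories per joule."""
    return throughput_tps / total_power_w


if __name__ == "__main__":
    for mode, power_w, tps in MODES:
        eff = trajectories_per_joule(power_w, tps)
        print(f"{mode:>4}: {eff:.1f} trajectories per joule")
```

On these figures the MAXN and 30W modes are almost equally efficient (about 41.8 and 41.7 trajectories per joule), while the 15W mode drops to about 25.4, so by this measure the lowest power mode is also the least energy-efficient.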

VII Conclusion

In this work, we introduced a minimalist anomaly detection approach based on predicted trajectories and showcased its effectiveness across various prediction windows on adversarial and real-world anomalies. We presented VegaEdge, a real-time highway safety solution optimized for IoT-edge applications that uses our anomaly detection method, achieving a throughput of up to 758 processed vehicle trajectories per second in high-traffic conditions. Furthermore, our application of VegaEdge demonstrated its ability to adapt buffer times for work zone personnel, highlighting the trade-off between buffer time and accuracy for such applications. The introduction of the Carolinas Anomaly Dataset (CAD) as a dedicated resource for real-world highway anomaly detection, combined with our approach, highlights the potential of IoT and AI in advancing highway safety.

Acknowledgments

This research is supported by the National Science Foundation (NSF) under Award No. 1932524.

References

- [1] S. Sabeti, O. Shoghli, M. Baharani, and H. Tabkhi, “Toward ai-enabled augmented reality to enhance the safety of highway work zones: Feasibility, requirements, and challenges,” Advanced Engineering Informatics, vol. 50, p. 101429, 2021.

- [2] C. Nnaji, J. Gambatese, and H. W. Lee, “Work zone intrusion: Technology to reduce injuries and fatalities,” Professional Safety, vol. 63, no. 04, pp. 36–41, 2018.

- [3] Federal Highway Administration. (2022) Work zones are a sign to slow down. [Online]. Available: https://ops.fhwa.dot.gov/publications/fhwahop22050/index.htm

- [4] National Highway Traffic Safety Administration. (2022) Early estimate of 2021 traffic fatalities. [Online]. Available: https://www.nhtsa.gov/press-releases/early-estimate-2021-traffic-fatalities

- [5] Federal Highway Administration. (2023) FHWA work zone facts and statistics. [Online]. Available: https://ops.fhwa.dot.gov/wz/resources/facts_stats.htm#:~:text=Total%20work%20zone%20fatalities%20by,and%20in%202021%20are%20956.

- [6] C. Nnaji, J. Gambatese, H. W. Lee, and F. Zhang, “Improving construction work zone safety using technology: A systematic review of applicable technologies,” Journal of traffic and transportation engineering (English edition), vol. 7, no. 1, pp. 61–75, 2020.

- [7] Y. Hu, Y. Zhang, Y. Wang, and D. Work, “Detecting socially abnormal highway driving behaviors via recurrent graph attention networks,” in Proceedings of the ACM Web Conference 2023, 2023, pp. 3086–3097.

- [8] Y. Tian, “Earth observation data for assessing global urbanization-sustainability nexuses,” Ph.D. dissertation, Wageningen University, 2023.

- [9] R. Izquierdo, Á. Quintanar, D. F. Llorca, I. G. Daza, N. Hernández, I. Parra, and M. Á. Sotelo, “Vehicle trajectory prediction on highways using bird eye view representations and deep learning,” Applied Intelligence, vol. 53, no. 7, pp. 8370–8388, 2023.

- [10] V. Radermecker and L. Vanhaverbeke, “Estimation of public charging demand using cellphone data and points of interest-based segmentation,” World Electric Vehicle Journal, 2023. [Online]. Available: https://oa.mg/work/10.3390/wevj14020035?utm_source=chatgpt

- [11] S. Chen, L. Piao, X. Zang, Q. Luo, J. Li, J. Yang, and J. Rong, “Analyzing differences of highway lane-changing behavior using vehicle trajectory data,” Physica A Statistical Mechanics and its Applications, vol. 624, p. 128980, Aug. 2023.

- [12] K. Deng, “Anomaly detection of highway vehicle trajectory under the internet of things converged with 5g technology,” Complexity, vol. 2021, pp. 1–12, 2021.

- [13] J. Wu, X. Wang, X. Xiao, and Y. Wang, “Box-level tube tracking and refinement for vehicles anomaly detection,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 4112–4118.

- [14] Y. Li, J. Wu, X. Bai, X. Yang, X. Tan, G. Li, S. Wen, H. Zhang, and E. Ding, “Multi-granularity tracking with modularlized components for unsupervised vehicles anomaly detection,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, 2020, pp. 586–587.

- [15] V. Katariya, G. A. Noghre, A. D. Pazho, and H. Tabkhi, “A pov-based highway vehicle trajectory dataset and prediction architecture,” arXiv preprint arXiv:2303.06202, 2023.

- [16] A. Danesh Pazho, C. Neff, G. A. Noghre, B. R. Ardabili, S. Yao, M. Baharani, and H. Tabkhi, “Ancilia: Scalable intelligent video surveillance for the artificial intelligence of things,” IEEE Internet of Things Journal, vol. 10, no. 17, pp. 14 940–14 951, 2023.

- [17] S. Chen, H. Xu, D. Liu, B. Hu, and H. Wang, “A vision of iot: Applications, challenges, and opportunities with china perspective,” IEEE Internet of Things Journal, vol. 1, no. 4, pp. 349–359, 2014.

- [18] A. A. Cook, G. Mısırlı, and Z. Fan, “Anomaly detection for iot time-series data: A survey,” IEEE Internet of Things Journal, vol. 7, no. 7, pp. 6481–6494, 2020.

- [19] R. Chandra, U. Bhattacharya, C. Roncal, A. Bera, and D. Manocha, “Robusttp: End-to-end trajectory prediction for heterogeneous road-agents in dense traffic with noisy sensor inputs,” in Proceedings of the 3rd ACM Computer Science in Cars Symposium, 2019, pp. 1–9.

- [20] C. Liu, D. Q. Huynh, Y. Sun, M. Reynolds, and S. Atkinson, “A vision-based pipeline for vehicle counting, speed estimation, and classification,” IEEE Transactions on Intelligent Transportation Systems, vol. 22, no. 12, pp. 7547–7560, 2020.

- [21] P. Wei, H. Shi, J. Yang, J. Qian, Y. Ji, and X. Jiang, “City-scale vehicle tracking and traffic flow estimation using low frame-rate traffic cameras,” in Adjunct Proceedings of the 2019 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2019 ACM International Symposium on Wearable Computers, 2019, pp. 602–610.

- [22] N. Balamuralidhar, S. Tilon, and F. Nex, “Multeye: Monitoring system for real-time vehicle detection, tracking and speed estimation from uav imagery on edge-computing platforms,” Remote sensing, vol. 13, no. 4, p. 573, 2021.

- [23] N. Peri, P. Khorramshahi, S. S. Rambhatla, V. Shenoy, S. Rawat, J.-C. Chen, and R. Chellappa, “Towards real-time systems for vehicle re-identification, multi-camera tracking, and anomaly detection,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, 2020, pp. 622–623.

- [24] S. Tak, J.-D. Lee, J. Song, and S. Kim, “Development of ai-based vehicle detection and tracking system for c-its application,” Journal of advanced transportation, vol. 2021, pp. 1–15, 2021.

- [25] F. Jiang, S. A. Tsaftaris, Y. Wu, and A. K. Katsaggelos, “Detecting anomalous trajectories from highway traffic data,” Electrical Engineering and Computer Science Department, Northwestern University, Evanston, Illinois, 2009.

- [26] R. Jiao, J. Bai, X. Liu, T. Sato, X. Yuan, Q. A. Chen, and Q. Zhu, “Learning representation for anomaly detection of vehicle trajectories,” arXiv preprint arXiv:2303.05000, 2023.

- [27] M. Naphade, S. Wang, D. C. Anastasiu, Z. Tang, M.-C. Chang, Y. Yao, L. Zheng, M. S. Rahman, M. S. Arya, A. Sharma et al., “The 7th ai city challenge,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 5537–5547.

- [28] M. Naphade, Z. Tang, M.-C. Chang, D. C. Anastasiu, A. Sharma, R. Chellappa, S. Wang, P. Chakraborty, T. Huang, J.-N. Hwang et al., “The 2019 ai city challenge.” in CVPR workshops, vol. 8, 2019, p. 2.

- [29] Q. Zhang, S. Hu, J. Sun, Q. A. Chen, and Z. M. Mao, “On adversarial robustness of trajectory prediction for autonomous vehicles,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 15 159–15 168.

- [30] G. Jocher, A. Chaurasia, and J. Qiu. (2023, Jan.) YOLO by Ultralytics. [Online]. Available: https://github.com/ultralytics/ultralytics

- [31] F. Yu, H. Chen, X. Wang, W. Xian, Y. Chen, F. Liu, V. Madhavan, and T. Darrell, “Bdd100k: A diverse driving dataset for heterogeneous multitask learning,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 2636–2645.

- [32] Y. Zhang, P. Sun, Y. Jiang, D. Yu, F. Weng, Z. Yuan, P. Luo, W. Liu, and X. Wang, “Bytetrack: Multi-object tracking by associating every detection box,” in European Conference on Computer Vision. Springer, 2022, pp. 1–21.

- [33] J. Colyar and J. Halkias. (2007) Next generation simulation (NGSIM), US Highway-101 dataset. FHWA-HRT-07-030. [Online]. Available: https://www.fhwa.dot.gov/publications/research/operations/07030/

- [34] ——. (2006) Next generation simulation (NGSIM), Interstate 80 freeway dataset. FHWA-HRT-06-137. [Online]. Available: https://www.fhwa.dot.gov/publications/research/operations/06137/

- [35] T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick, “Microsoft coco: Common objects in context,” in Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part V 13. Springer, 2014, pp. 740–755.

- [36] F. Yu, H. Chen, X. Wang, W. Xian, Y. Chen, F. Liu, V. Madhavan, and T. Darrell, “Bdd100k: A diverse driving dataset for heterogeneous multitask learning,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2020.

- [37] P. Dendorfer, H. Rezatofighi, A. Milan, J. Shi, D. Cremers, I. Reid, S. Roth, K. Schindler, and L. Leal-Taixé, “Mot20: A benchmark for multi object tracking in crowded scenes,” arXiv preprint arXiv:2003.09003, 2020.

- [38] G. Alinezhad Noghre, V. Katariya, A. Danesh Pazho, C. Neff, and H. Tabkhi, “Pishgu: Universal path prediction network architecture for real-time cyber-physical edge systems,” in Proceedings of the ACM/IEEE 14th International Conference on Cyber-Physical Systems (with CPS-IoT Week 2023), 2023, pp. 88–97.

- [39] NVIDIA Corporation & Affiliates. (2023) Software-based power consumption modeling. Last updated on Aug 11, 2023. [Online]. Available: https://docs.nvidia.com/jetson/archives/r35.4.1/DeveloperGuide/text/SD/PlatformPowerAndPerformance/JetsonOrinNanoSeriesJetsonOrinNxSeriesAndJetsonAgxOrinSeries.html

VIII Biography Section

![Vinit Katariya](https://cdn.awesomepapers.org/papers/7cc78a7f-9819-418c-832f-40db762191f8/Vinit_Katariya.jpg)

Vinit Katariya (S’14) is a PhD Candidate at the Department of Electrical and Computer Engineering, University of North Carolina at Charlotte, USA. He received his Master’s degree from the University of North Carolina at Charlotte in 2016. His work addresses challenges in the fields of Computer Vision, Deep Learning, and Intelligent Transportation. His current research focuses on real-time Artificial Intelligence frameworks and applications for real-world systems utilizing embedded-edge platforms.

![Fatema-E-Jannat](https://cdn.awesomepapers.org/papers/7cc78a7f-9819-418c-832f-40db762191f8/fjannat.jpeg)

Fatema-E-Jannat (S’22) is currently pursuing her Ph.D. in Electrical and Computer Engineering at the University of North Carolina at Charlotte, USA. She holds a Master’s degree from the University of South Florida, USA, earned in 2019. Her research focuses on the strategic application of deep-learning techniques in the domains of object detection, anomaly detection, and intelligent transportation systems, with the aim of improving societal well-being.

![Armin Danesh Pazho](https://cdn.awesomepapers.org/papers/7cc78a7f-9819-418c-832f-40db762191f8/Armin.jpg)

Armin Danesh Pazho (S’22) is currently a Ph.D. student at the University of North Carolina at Charlotte, NC, United States. With a focus on Artificial Intelligence, Computer Vision, and Deep Learning, his research delves into the realm of developing AI for practical, real-world applications and addressing the challenges and requirements inherent in these fields. Specifically, his research covers action recognition, anomaly detection, person re-identification, human pose estimation, and path prediction.

![Ghazal Alinezhad Noghre](https://cdn.awesomepapers.org/papers/7cc78a7f-9819-418c-832f-40db762191f8/Ghazal.jpg)

Ghazal Alinezhad Noghre (S’22) is currently pursuing her Ph.D. in Electrical and Computer Engineering at the University of North Carolina at Charlotte, NC, United States. Her research concentrates on Artificial Intelligence, Machine Learning, and Computer Vision. She is particularly interested in the applications of anomaly detection, action recognition, and path prediction in real-world environments, and the challenges associated with these fields.

![Hamed Tabkhi](https://cdn.awesomepapers.org/papers/7cc78a7f-9819-418c-832f-40db762191f8/x15.jpg)

Hamed Tabkhi (S’07–M’14) is an Associate Professor in the Department of Electrical and Computer Engineering, University of North Carolina at Charlotte, USA. He was a post-doctoral research associate at Northeastern University. Hamed Tabkhi received his Ph.D. in 2014 from Northeastern University under the direction of Prof. Gunar Schirner. His research focuses on transformative computer systems and architecture for cyber-physical, real-time streaming, and emerging machine learning applications.