Variational Deep Survival Machines:

Survival Regression with Censored Outcomes

Abstract

Survival regression aims to predict the time when an event of interest will take place, typically a death or a failure. A fully parametric method [18] is proposed to estimate the survival function as a mixture of individual parametric distributions in the presence of censoring. In this paper, We present a novel method to predict the survival time by better clustering the survival data and combine primitive distributions. We propose two variants of variational auto-encoder (VAE), discrete and continuous, to generate the latent variables for clustering input covariates. The model is trained end to end by jointly optimizing the VAE loss and regression loss. Thorough experiments on dataset SUPPORT and FLCHAIN show that our method can effectively improve the clustering result and reach competitive scores with previous methods. We demonstrate the superior result of our model prediction in the long-term. Our code is available at https://github.com/qinzzz/auton-survival-785

1 Introduction

Survival Analysis is a machine learning problem for analyzing the expected duration of time until an event occurs, typically the death of a patient in medical treatment. It has been extensively used in many medical applications. Formally, the problem can be described as a regression problem to estimate the conditional survival curve , which T is the event of interest and is covariate. Given , the survival function predicts the probability of an event that happens after time . In practice, the presence of censoring, where the outcome measurement is only partially known during the whole experiment, makes the survival analysis more difficult. For example, if the study ends before the event happens, we would not know the exact outcome for this individual’s data collection. The censored observations cannot be simply discarded because they contain crucial information [24]. [22] has also shown the importance of censored instances for deriving the accurate estimation.

Traditional statistical approaches are usually non-parametric or semi-parametric for survival regression. However, non-parametric methods will face curse of dimensional problem. Meanwhile semi-parametric methods depend on strong modelling assumptions which are usually unrealistic. Recently, some fully parametric deep learning approaches have been proposed for survival regression. For example, Deep Survival Machine[18] estimates the survival function as a mixture of individual parametric distributions in the presence of censoring and it doesn’t need strong assumptions. [20] generalizes DSM by assuming within each latent group the proportional hazards assumption holds.

Clustering is also a valuable tool in survival analysis. Patients can usually be clustered into different latent groups and each of these groups would have an individual distribution. [14] presents a novel method for clustering survival data — variational deep survival clustering (VaDeSC) that discovers groups of patients characterised by different generative mechanisms of survival outcome.

In this project, we propose two models named VDSM-cat and VDSM-clus, which are novel approaches combine DSM and VAE to both cluster data and predict survival time. The input data will first pass through VAE’s encoder and we will get latent variables. These latent variables will be transformed as weights of DSM’s mixed individual distributions. Compared to original DSM, our model achieve better results in the long term, which suggests the embedded VAE could give a better cluster outcome and is helpful for final prediction.

Our main contributions are as follows:

(i) We propose two new methods VDSM-cat and VDSM-clus, which corresponds to categorical distribution and Gaussian Mixture distribution for the latent variable respectively.

(ii) We compare our approaches with DSM on SUPPORT and FLCHAIN dataset and find our methods could achieve superior results in long time prediction.

2 Related Works

Time-to-event analysis with censoring is an important problem which could be applied into many domains including bio-statistics and health informatics[25], actuarial sciences[3] and econometrics[21]. It has also been increasingly popular in engineering, including applications to predict maintenance time of equipments. [15] proposed a Bayesian hierarchical model to predict the end of life (EoL) and end of discharge (EoD) of Li-ion batteries using load profiles and discharge data. [5] used survival regression model to track the degradation of the system and plan optimal maintenance strategy.

Research in survival analysis pays more attention to deep learning approaches in recent years [17] [9]. [13] proposed a fully parametric model named Deephit to predict survival outcomes in competing risks situation. However, their architecture could only predict failure times over a discrete set of fixed size. When survival horizons become long, this approach would require large number of parameters to be learnt, which can be very impractical. Another limitation of this approach is that it can be more sensitive to events at shorter horizons and it will not model long term horizon well. [12] propose using black box optimization to adaptively select the best model for a given event horizon. Deep Survival Machine (DSM) [18] is proposed as a fully-parametric approach to estimate time-to-event in the presence of censoring and competing risks. In DSM, the survival function is estimated as a mixture of individual parametric survival distributions, where the distributions parameters are learnt by training a Multilayer Perceptron. The strong assumptions of proportional hazards is not required. In this way, it enables the model to learn a rich distributed representation of the input covariates. [20] proposed a new approach based on learning mixtures of Cox regressions to model individual survival distributions. It involves estimating hazard ratios within latent clusters followed non-parametric estimation of the baseline survival rates.

Semi-supervised clustering for survival data has been first studied in [2]. It proposed pre-selecting variables based on univariate Cox regression hazard scores and then performing k-means clustering on the subset of features to discover patient subpopulations. [1] uses Cox regression to explore differences across subgroups of diabetic patients discovered by hierarchical clustering. [16] propose a deep clustering approach to differentiate between long- and short- term survivors based on a modified Kuiper statistic in the absence of end-of-life signals.[23] used a multitask learning approach for the outcome-driven clustering of acute syndrome patients. In [18] and [20], DSM and DCM set a mixture of survival models through representations learnt by an encoder neural network.Recently, Variational AutoEndoers (VAE) has also been involved in time-to-event analysis and clustering. [24] introduces a varitional time-to-event prediction model, which uses Variational Survival Inference (VSI) to predict the time-to-event distribution without the need to specify a parametric form for the baseline distribution. In this way, they avoid the restrictive assumptions in classical survival analysis models. [14] proposed a semi-supervised approach to cluster survival data. It outperforms baseline methods through clustering the latent variables and is comparable in terms of time-to-event predictions.

3 Our Method

3.1 Problem Definition

We consider a dataset of right censored observations , where are the covariates of an individual i, is an indicator of whether an event occurred or not and is either the time of event or censoring as indicated by . Our goal is to learn a survival function from the input data. We denote the uncensored subset of data as and the censored subset as .

3.2 Deep Survival Machine

In this section, we briefly describe the model proposed by Deep Survival Machine (DSM) [18]. Given the above variable and , the survival time can be modeled as

| (1) |

The model aims to maximize the probability over the entire dataset. Considering both censored and uncensored data, we model the probability by

| (2) |

And the loss can be expressed as

| (3) |

We denote it as combining two separate loss for uncensored and censored data:

| (4) |

The log likelihood of the uncensored dataset can be denoted as

| (5) |

For the censored data, we maximize the log likelihood by computing the probability of event happening after .

| (6) |

3.3 Proposed Model

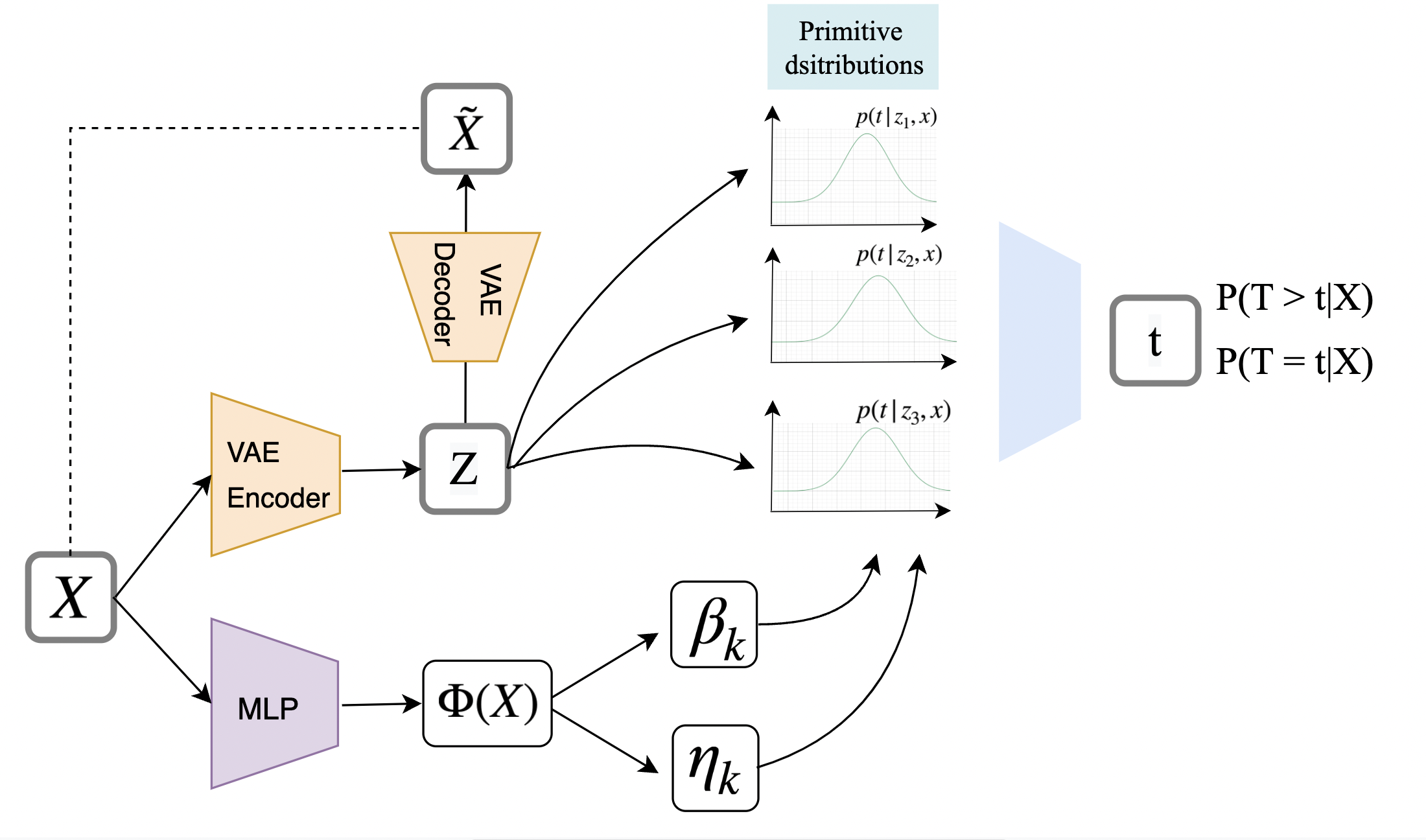

As in DSM, we choose to model the conditional distribution of as a mixture over K well-defined distributions. We refer to them as primitive distributions. To satisfy certain properties of survival time prediction, DSM experimented with two types of distributions, the Weibull and the Log-Normal distribution (see Table 1). For each primitive distribution, we need a independent set of parameters . The final individual survival distribution is a weighted average over these K distributions.

In DSM paper, they use a linear transform to get a with classes. Though simple and intuitive, this classification method fails to learn a good weight over K categories in a unsupervised setting. We propose a variant of DSM[18], Variational Deep Survival Machine (VDSM), by introducing Variational AutoEncoder[10] into the stage of sampling from latent space given input . Our goal is to learn a better cluster assignment with VAE models. Figure 1 shows an overview of the model structure. The input X is a set of features an individual patient. We use a VAE encoder to generate the latent variable Z. The Z constains of K classes, where each cluster has an independent underlying survival distribution. The final predictied distribution is is a weighted average over the K distributions.

In the VAE encoder and decoder module, we experimented with two different VAE for classification and clustering respectively. We refer to them as VDSM-cat and VDSM-clus.

Weibull Log-Normal PDF(t) CDF(t)

Variational Deep Survival Machine with categorical VAE (VDSM-cat)

We introducing Categorical Variational AutoEncoder proposed in [7] into the stage of sampling from latent space given input , which is illustrated in Figure 2. Consider a latent space with clusters , is sampled from a categorical distribution . We know that categorical distribution is discrete and not differentiable. To make the model amenable to gradient based optimization, we use the Gumbel-Max trick[6] to generate samples which can be used in backpropagation.The Gumbel-Max trick is an efficient method to generate samples from a categorical distribution:

| (7) |

where is the samples drawn from , is the ith-class possibility of the categorical distribution. To cover the gap that argmax is not differentiable, the Gumbel-Softmax[7] is proposed as a continuous approximation:

| (8) |

The loss for this categorical VAE would be the Kullback-Leibler divergence between generated distribution of with the expected categorical distribution , plus the reconstruction loss of .

| (9) |

Variational Deep Survival Machine with generative clustering (VDSM-clus)

The assumption behind categorical VAE is that belongs to one of the categories. However, the latent variable of VAE assumes a Gaussian mixture as prior. We believe the latent cluster assumption of Gaussian Mixture Model (GMM) can be applied to extend it with a mixture of Gaussian distributions. Here we first describe the process of the generative model. A cluster is sampled from a categorical distribution . Every cluster is a Gaussian distribution with mean and variance . Then a continuous latent embedding is sampled from the Gaussian Distribution. The input covariant is generated from a distribution conditioned on . Finally, the survival time depends on and , i.e., . The main difference here is that conforms to a normal distribution depends on cluster .

The generative process above can be defined by the following,

| (10) |

where

| (11) | ||||

| (12) | ||||

| (13) |

[8] proposed an unsupervised generative clustering framework VaDE that combines VAE and GMM together, as is shown in Figure 3. Inspired by their method, we optimized the VAE by maximizing the evidence lower bound (ELBO) using the SGVB estimator and the reparameterization trick. We borrowed the learning objective derived in the paper, which is defined as:

| (14) | ||||

| (15) | ||||

| (16) |

The first term in Equation 14 is the reconstruction term between encoder input and decoder output. The second term is the Kullback-Leibler divergence between the Mixture-of-Gaussian prior p(z|c) to the posterior q(z,c|x). The training procedure aims to maximize the ELBO with regard to parameters. The cluster assignment is obtained by the approximation of q(c|x):

| (17) |

Finally, the combined loss of our two proposed models are:

| (18) |

4 Experimental Setup

4.1 Baseline

Deep Survival Machine

Deep Survival Machine is a novel approach to estimate time-to-event in the presence of censoring. By leveraging a hierarchical graphical model parameterized by neural networks, the model learns distributional representations of the input covariates and mitigate existing challenges in survival regression. DSM estimates the conditional survival function as a mixture of individual parametric survival distributions without a strong assumptions of proportional hazards, and enables learning with time-varying risks. In this way, DSM allows for learning of rich distributed representations of the input covariates, helping knowledge transfer across multiple competing risks.

4.2 Dataset

SUPPORT

(Study to understand prognoses and preferences for outcomes and risks of [11]): This Dataset is composed of 9,105 terminally ill patients on life support. The median of studied patients’ survival time was 58 days. A majority with 79% population were recorded as ‘White’, while the rest were coded as ‘Black’, ‘Hispanic’ and ‘Asian’.

FLCHAIN

(Assay of Serum Free Light Chain): This is a public dataset developed by [4] aiming to study the relationship between serum free light chain and mortality. It includes covariates like age, gender, serum creatinine and presence of monoclonal gammapothy. We used a subset of this dataset, where all the individuals with missing covariates are removed. The remaining dataset consists of 6,524 individuals.

| \cellcolor[HTML]FFFFFF | \cellcolor[HTML]FFFFFFTime-dependent Concordance-Index | ||

|---|---|---|---|

| \cellcolor[HTML]FFFFFF | \cellcolor[HTML]FFFFFFQuantiles of Event Times | ||

| \cellcolor[HTML]FFFFFFModels | 25% | 50% | 75% |

| DSM | 0.7758 ± 0.0013 | 0.7085 ± 0.0023 | 0.6560 ± 0.0032 |

| VDSM-cat | 0.7580 ± 0.0025 | 0.6920 ± 0.0012 | 0.6734 ± 0.0023 |

| VDSM-clu | 0.7585 ± 0.0011 | 0.6923 ± 0.0055 | 0.6736 ± 0.0022 |

| \cellcolor[HTML]FFFFFF | \cellcolor[HTML]FFFFFFROC-AUC | ||

|---|---|---|---|

| \cellcolor[HTML]FFFFFF | \cellcolor[HTML]FFFFFFQuantiles of Event Times | ||

| \cellcolor[HTML]FFFFFFModels | 25% | 50% | 75% |

| DSM | 0.7841 ± 0.0017 | 0.7298 ± 0.0033 | 0.7097 ± 0.0038 |

| VDSM-cat | 0.7672 ± 0.0025 | 0.7127 ± 0.0054 | 0.7212 ± 0.0004 |

| VDSM-clu | 0.7677 ± 0.0011 | 0.7130 ± 0.0027 | 0.7215 ± 0.0015 |

4.3 Experiment Metrics

We evaluate the baseline model DSM, the proposed model VDSM-cat and VDSM-clus with two popular metrics in survival analysis literature. Similar to DSM settings [18], we evaluate these two metrics with different truncation event horizon quantiles of , and to measure how good the model can be at showing the risks at different scale of time.

Concordance index

It is used to evaluate the proportion of all comparable pairs in which the predictions and outcomes are concordant. More specifically, Concordance intuitively means that two samples were ordered correctly by the model. If the one with a higher estimated risk score has a shorter actual survival time, we can say the two samples are concordant.

ROC-AUC

In survival analysis, the area under receiver operating characteristic (ROC) curve is used by defining sensitivity and specificity as time-dependent measures. All individuals that experienced an event prior to or at time are Cumulative cases and dynamic controls are those individuals experienced an event after the time.

4.4 Hyper-parameter Searching

To achieve the best performance, we use grid search to find the best hyper-parameter settings for the DSM model. As reported in the DSM paper [18], we evaluate the model with the following parameter settings: the number of clusters is chosen from , the discounting factor is chosen from , and the learning rate of optimizer Adam is chosen from . The best performance is achieved with clusters, as the discounting factor and as the learning rate.

For our proposed VDSM-cat and VDSM-clus, we research the hyper-parameter settings. VAEs are added to the DSM to get better clustering representation and therefore it may behave differently with different number of clusters. Our experiment shows that the VDSM-clus outperforms the VDSM-cat with every cluster number in according to the Concordance index and ROC-AUC. For SUPPORT dataset, clusters gives the best result and for FLCHAIN dataset, clusters shows the best performance.

| \cellcolor[HTML]FFFFFF | \cellcolor[HTML]FFFFFFTime-dependent Concordance-Index | ||

|---|---|---|---|

| \cellcolor[HTML]FFFFFF | \cellcolor[HTML]FFFFFFQuantiles of Event Times | ||

| \cellcolor[HTML]FFFFFFModels | 25% | 50% | 75% |

| DSM | 0.6033 ± 0.0033 | 0.6641 ± 0.0039 | 0.6170 ± 0.0032 |

| VDSM-cat | 0.5848 ± 0.0015 | 0.6229 ± 0.0016 | 0.6125 ± 0.0010 |

| VDSM-clu | 0.5635 ± 0.0006 | 0.6449 ± 0.0049 | 0.6219 ± 0.0050 |

| \cellcolor[HTML]FFFFFF | \cellcolor[HTML]FFFFFFROC-AUC | ||

|---|---|---|---|

| \cellcolor[HTML]FFFFFF | \cellcolor[HTML]FFFFFFQuantiles of Event Times | ||

| \cellcolor[HTML]FFFFFFModels | 25% | 50% | 75% |

| DSM | 0.6057 ± 0.0020 | 0.6689 ± 0.0007 | 0.6201 ± 0.0014 |

| VDSM-cat | 0.5874 ± 0.0052 | 0.6260 ± 0.0048 | 0.6215 ± 0.0019 |

| VDSM-clu | 0.5656 ± 0.0003 | 0.6502 ± 0.0051 | 0.6430 ± 0.0026 |

5 Results and Discussion

5.1 Experiment Results

5.2 Clustering Results

We run multiple experiments on SUPPORT and FLCHAIN dataset to collect results. First, we generate the latent variable after training the model. Since the latent variable represents a distribution over latent clusters, we choose the category with the largest probability as the label and draw a plot to visualize the clustering result with the t-SNE algorithm.

Figure 4 and Figure 5 show the clustering result on SUPPORT and FLCHAIN dataset, respectively. Compared to DSM, VDSM-clus has a better clustering result, which is not surprising because the reconstruction loss forces the VAE to learn the latent embedding better.

It should be noted that VDSM-cat does not show better clustering result. In categorical VAE, the latent variable consists of latent dimension and category dimension . In our implementation, we set as the number of latent clusters in DSM, and regard as a multi-nominal distribution of with independent trails. To be specific, the probability of selecting category c is . Since we assume the N latent dimensions are independent, we have . This may not be the best way to handle the latent dimension in categorical VAE and is probably the reason why the clustering result of VDSM-cat is not as expected.

5.3 Better Prediction at Long-time Scale

We compare the results between our models and DSM based on the Concordance Index. The Concordance Index is a commonly used metric in survival analysis, and it evaluate the probability of the pair risk of different patient, at the time one event has already occurred to one of the patients, which means, ideally, that patient should be assigned with a higher risk. The bigger this probability is, the more consistent the prediction is with the truth.

It shows the potential of VAE to capture the long-time-dependent features with the latent embedding of inputs. Another possible explanation is that the latent representation clustering is more important to survival prediction in a long time scale. We are still working on this to prove our assumptions. The ROC-AUC also shows similar results.

5.4 Effect of Clustering on Time-to-event Prediction

We also found that different quality of clustering does not affect the time-to-event prediction much. According Figure 4 and Figure 5, on both SUPPORT and FLCHAIN datasets the VDSM-clus has a better result than the VDSM-cat. However, experiment results show that there is no much difference between these two models.

Manduchi et al.[14] also show similar results by comparing different clustering method. Their proposed model is better at clustering but only stay at competitive with other state-of-the-art survival models. Perhaps the clustering is not the best way to represent the input data.

6 Conclusion & Future Work

We proposed to incorporate Variational AutoEncoder to the Deep Survival Machines, which is a semi-supervised deep probabilistic model for clustering survival data. By experimentation, we demonstrated that the VAE model leads to a better result in clustering patients to different latent groups than the naive approach in DSM architecture. Furthermore, the grouping information allows us to get more accurate survival time prediction for patients comparing with state-of-art models. Interesting future work directions include trying other variational clustering methods for survival data, e.g. Random Forest, a Multiple Task Variational Autoencoder to better study the latent relationship between features. Furthermore, the model can be modified to be able to predict survival time based on image input.

7 Division of Work

Jiaming Liu - Jiaming co-researched the survival models, collected references about VAE and other deep learning models for survival regression and took part in the design of VAE-cat and VAE-clus. He pre-processed MNIST to do some early experiments and implemented several visualization tools to help analyze model results. He also wrote Introduction and Related Works part in this report.

Jiayuan Huang - Jiayuan co-researched the survival models used in survival analysis, and designed the method of incorporating categorical into DSM. He implemented the proposed model VDSM-cat and conducted experiments on two datasets to collect results. He also wrote the Result and Discussion part in this report.

Junhui Li - Junhui co-researched the survival models used in survival used in survival analysis. She transferred existing VAE methods to Pytorch framework for experiment.She also researched into potential improvement methods and wrote the Dataset, Conclusion and Future Work part in this report.

Qinxin Wang - Qinxin co-researched the survival models (DSM and DCM), and worked on the mathematical formulation of our new methods. She surveyed multiple VAEs for clustering, implemented the proposed model VDSM-clus, and conducted experiments. She wrote the Method part and also drew model diagrams in this report.

References

- [1] Emma Ahlqvist, Petter Storm, Annemari Käräjämäki, Mats Martinell, Mozhgan Dorkhan, Annelie Carlsson, Petter Vikman, Rashmi B Prasad, Dina Mansour Aly, Peter Almgren, et al. Novel subgroups of adult-onset diabetes and their association with outcomes: a data-driven cluster analysis of six variables. The lancet Diabetes & endocrinology, 6(5):361–369, 2018.

- [2] Eric Bair, Robert Tibshirani, and Todd Golub. Semi-supervised methods to predict patient survival from gene expression data. PLoS biology, 2(4):e108, 2004.

- [3] Claudia Czado and Florian Rudolph. Application of survival analysis methods to long-term care insurance. Insurance: Mathematics and Economics, 31(3):395–413, 2002.

- [4] Angela Dispenzieri, Jerry A. Katzmann, Robert A. Kyle, Dirk R. Larson, Terry M. Therneau, Colin L. Colby, Raynell J. Clark, Graham P. Mead, Shaji Kumar, L. Joseph Melton, and S. Vincent Rajkumar. Use of nonclonal serum immunoglobulin free light chains to predict overall survival in the general population. Mayo Clinic Proceedings, 87(6):517–523, June 2012. Funding Information: Grant Support: This work was supported in part by grants CA62242 (A.D, R.A.K. S.V.R.), CA107476 (A.D., S.V.R., J.A.K., R.A.K.), and CA91561 (A.D) from the National Cancer Institute , The JABBS Foundation , and The Predolin Foundation . Binding Site provided the serum immunoglobulin free light chain reagent. This study was supported in part by National Institutes of Health grant R01 AG034676 and the Rochester Epidemiology Project (grant number R01-AG034676 ; Principal Investigator: Walter A. Rocca, MD).

- [5] Alireza Ghasemi, S Yacout, and MS Ouali. Optimal condition based maintenance with imperfect information and the proportional hazards model. International journal of production research, 45(4):989–1012, 2007.

- [6] Emil Julius Gumbel. Statistical theory of extreme values and some practical applications : A series of lectures. 1954.

- [7] Eric Jang, Shixiang Shane Gu, and Ben Poole. Categorical reparameterization with gumbel-softmax. ArXiv, abs/1611.01144, 2017.

- [8] Zhuxi Jiang, Yin Zheng, Huachun Tan, Bangsheng Tang, and Hanning Zhou. Variational deep embedding: An unsupervised and generative approach to clustering. In IJCAI, 2017.

- [9] Jared Katzman, Uri Shaham, Alexander Cloninger, Jonathan Bates, Tingting Jiang, and Yuval Kluger. Deep survival: A deep cox proportional hazards network. ArXiv, abs/1606.00931, 2016.

- [10] Diederik P. Kingma and Max Welling. Auto-encoding variational bayes. CoRR, abs/1312.6114, 2014.

- [11] William A Knaus, Frank E Harrell, Joanne Lynn, Lee Goldman, Russell S Phillips, Alfred F Connors, Neal V Dawson, William J Fulkerson, Robert M Califf, Norman Desbiens, et al. The support prognostic model: Objective estimates of survival for seriously ill hospitalized adults. Annals of internal medicine, 122(3):191–203, 1995.

- [12] Changhee Lee, William Zame, Ahmed Alaa, and Mihaela Schaar. Temporal quilting for survival analysis. In The 22nd international conference on artificial intelligence and statistics, pages 596–605. PMLR, 2019.

- [13] Changhee Lee, William R. Zame, Jinsung Yoon, and Mihaela van der Schaar. Deephit: A deep learning approach to survival analysis with competing risks. In AAAI, 2018.

- [14] Laura Manduchi, Ricards Marcinkevics, Michela Carlotta Massi, Verena Gotta, Timothy Müller, Flavio Vasella, Marian C. Neidert, Marc Pfister, and Julia E. Vogt. A deep variational approach to clustering survival data. CoRR, abs/2106.05763, 2021.

- [15] Madhav Mishra, Jesper Martinsson, Matti Rantatalo, and Kai Goebel. Bayesian hierarchical model-based prognostics for lithium-ion batteries. Reliability Engineering and System Safety, 172(C):25–35, 2018.

- [16] S Chandra Mouli, Bruno Ribeiro, and Jennifer Neville. A deep learning approach for survival clustering without end-of-life signals. 2018.

- [17] Chirag Nagpal, Mononito Goswami, Keith A. Dufendach, and Artur W. Dubrawski. Counterfactual phenotyping with censored time-to-events. ArXiv, abs/2202.11089, 2022.

- [18] Chirag Nagpal, Xinyu Li, and Artur Dubrawski. Deep survival machines: Fully parametric survival regression and representation learning for censored data with competing risks. IEEE Journal of Biomedical and Health Informatics, 25(8):3163–3175, 2021.

- [19] Chirag Nagpal, Willa Potosnak, and Artur Dubrawski. auton-survival: an open-source package for regression, counterfactual estimation, evaluation and phenotyping with censored time-to-event data. arXiv preprint arXiv:2204.07276, 2022.

- [20] Chirag Nagpal, Steve Yadlowsky, Negar Rostamzadeh, and Katherine Heller. Deep cox mixtures for survival regression. In Machine Learning for Healthcare Conference, pages 674–708. PMLR, 2021.

- [21] Maria Stepanova and Lyn Thomas. Survival analysis methods for personal loan data. Operations Research, 50(2):277–289, 2002.

- [22] DC Watt, TC Aitchison, RM Mackie, and JM Sirel. Survival analysis: the importance of censored observations. Melanoma research, 6(5):379–385, 1996.

- [23] Eryu Xia, Xin Du, Jing Mei, Wen Sun, Suijun Tong, Zhiqing Kang, Jian Sheng, Jian Li, Changsheng Ma, Jianzeng Dong, et al. Outcome-driven clustering of acute coronary syndrome patients using multi-task neural network with attention. In MedInfo, pages 457–461, 2019.

- [24] Zidi Xiu, Chenyang Tao, and Ricardo Henao. Variational learning of individual survival distributions. In Proceedings of the ACM Conference on Health, Inference, and Learning, pages 10–18, 2020.

- [25] Xinliang Zhu, Jiawen Yao, and Junzhou Huang. Deep convolutional neural network for survival analysis with pathological images. In 2016 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), pages 544–547, 2016.