Validation tests of Gaussian boson samplers with photon-number resolving detectors

Abstract

An important challenge with the current generation of noisy, large-scale quantum computers is the question of validation. Does the hardware generate correct answers? If not, what are the errors? This issue is often combined with questions of computational advantage, but it is a fundamentally distinct issue. In current experiments, complete validation of the output statistics is generally not possible because it is exponentially hard to do so. Here, we apply phase-space simulation methods to partially verify recent experiments on Gaussian boson sampling (GBS) implementing photon-number resolving (PNR) detectors. The positive-P phase-space distribution is employed, as it uses probabilistic sampling to reduce complexity. It is times faster than direct classical simulation for experiments on modes where quantum computational advantage is claimed. When combined with binning and marginalization to improve statistics, multiple validation tests are efficiently computable, of which some tests can be carried out on experimental data. We show that the data as a whole shows discrepancies with theoretical predictions for perfect squeezing. However, a small modification of the GBS parameters greatly improves agreement. Hence, we suggest that such validation tests could form the basis of feedback methods to improve GBS quantum computer experiments.

I Introduction

Theoretical and experimental interest in photonic networks employed as quantum computers has grown considerably [1, 2]. These devices interfere nonclassical photons in a large scale interferometric network, then measure the resulting output photons using photodetectors. The computational task is sampling from a computationally hard probability distribution. Gaussian boson sampling (GBS) has proved scalable to the sizes needed for quantum advantage, which recent experiments have now claimed [3, 4, 5, 6]. In this case, photons are prepared in a squeezed state, with output probabilities in a #P-hard computational class [7, 8]. For photon-number resolving (PNR) detectors this is a Hafnian, while for threshold detectors it is a Torontonian. Neither function is computable in polynomial time, so that the random counts cannot be classically replicated at large scale.

In this paper, we use positive P-representation simulations to validate Gaussian boson sampling with photon number resolving (PNR) detectors, extending the tests that can be applied to experimental data. This is essential, as a number of large scale GBS experiments have claimed quantum advantage using either PNR [5] or threshold detectors [3, 4, 6]. To perform the tests, we generalize a binning method developed by Mandel [9, 10] for simulating photon statistics of classical light. The original formulation, which utilized the Glauber-Sudarshan P-representation [11, 12] to simulate moments for classical fields, is extended to the positive P-representation, which can treat any quantum state. An extension to binning in multiple dimensions is also provided, as these are more sensitive tests of quantum advantage than one-dimensional distributions.

We show that in the challenging prospect of using quantum computers to demonstrate quantum computational advantage, the GBS approach has a very important advantage. Unlike many other proposed quantum computing architectures, there are multiple quantitative statistical tests that are dependent on the experimental parameters, which can be used to validate the output distributions of the data. If experimental data fail such tests, it is invalid. This is an important criterion, used in comparable tests of classical random number generators and cryptographic security [13, 14]. Implementing such tests requires algorithms that compute the correlations or moments without needing to compute the full #P hard distribution.

An efficient and scalable method for validating GBS with threshold detectors, demonstrated for up to modes, was proposed in Ref.[15] and used to analyze data from a -mode experiment [3]. The normally ordered positive P-representation was utilized to simulate grouped count probabilities (GCPs). This is a generalized binned photon counting distribution that includes all measurable marginal and binned correlations. The exponential sparsity of the detected count pattern probabilities means the full distribution cannot be either computed or measured. Therefore, validation tests based on marginals and binned probability distributions appear to be the most practical route to comprehensive validation.

Like phase-space methods for threshold detectors [15], the PNR method is efficient and scalable. Increased dimension of binning causes an increase in computation time, where in the limit of dimension equaling mode number the GCP converges to the Hafnian. PNR phase-space methods allow one to perform simulations of either the ground truth distribution or modifications such as decoherence, measurement errors and shot-to-shot fluctuations. Such errors are typically not analyzed, despite evidence suggesting that a thermalized, non-ideal ground truth distribution provides stronger claims of quantum advantage than the ideal ground truth distribution [16]. Unlike threshold detection, PNR detector binning has the advantage of not requiring the computation of multidimensional inverse discrete Fourier transforms that increase computation time for multidimensional GCPs with threshold detection.

The positive-P phase-space distribution is exact, non-singular and positive for any quantum state. The moments of the distribution are identical to normally ordered operator moments including intensity correlations [17], which are reproduced to any order. This property, coupled with probabilistic random sampling, makes the positive P-representation ideal for validating the statistics of nonclassical photon counting experiments such as GBS. It does not have the computational restraints imposed by other validation methods [18, 19, 20], since it is many orders of magnitude faster than direct classical simulation, with negligible rounding errors. While there are sampling errors, for current experimental parameters they are comparable to sampling errors in the measurement statistics. Both can be reduced in a controllable way.

Other validation methods involve comparing experimental count patterns to those obtained from classical algorithms that replicate the photo-detection measurements either exactly [20] or approximately [18, 19]. Exact algorithms generate count patterns by directly sampling the distributions, but are limited to small experiments only. Approximate algorithms typically neglect high-order correlations, and only use the low-order correlations. Despite the usefulness of these algorithms in defining classical simulation boundaries of quantum advantage and determining the correlations present in a GBS experiment, they are either computationally demanding or lack high-order correlations, which is necessary for claims of quantum advantage. This limits the application of these approaches when validating GBS experiments and investigating physical processes arising within networks.

In order to determine the validity of using the positive P-representation to simulate nonclassical photon counting experiments with PNR detectors, we compare simulated GCP moments to the exactly known photon counting distributions for special cases. Here, multi-mode pure squeezed states are transformed by a Haar random unitary matrix which is multiplied by a uniform amplitude loss coefficient. For losses comparable to current experiments, we see excellent agreement. In the less realistic lossless case, the resulting non-Gaussian number distribution oscillates strongly between even and odd total counts. While this is far from current experiments, the standard positive P-representation requires a much larger number of phase-space samples to converge in such cases. However, this limit can also be efficiently simulated if necessary using a symmetry-projected [21] phase-space method, which will be treated elsewhere.

Finally, using photon count data from the recent Borealis experiment implementing PNR detectors [5], we apply the simulated GCPs to validate the moments of measured photon counting distributions by comparing theory and experiment. Differences are quantified using chi-square and Z-statistic tests. As with earlier work [15, 16], we show that the experimental GCPs are far from the ground truth distribution for pure squeezed state inputs. Instead, they are described much better by a different target distribution which includes decoherence and measurement error corrections of the transmission matrix. Although we focus specifically on implementations to GBS, we emphasize that one can use the positive P-representation to simulate GCPs of any linear photon counting experiment using classical or nonclassical photons.

This paper is structured as follows, Section II introduces the necessary background and theoretical methods for simulating GCPs of any photon counting experiment using PNR detectors with nonclassical photons in phase-space. Section IV then applies this method to the exactly known multi-mode squeezed state distributions, while Section V simulates the theoretical distributions corresponding to experimental data from the Borealis GBS claiming quantum advantage.

II Grouped count probabilities

We first consider a standard GBS experiment where single-mode squeezed vacuum states are sent into a linear photonic network defined by an transmission matrix . If each input mode is independent, the full input density operator is defined as

| (1) |

where denotes the squeezed vacuum state of the -th mode with squeezing parameter and is the squeezing operator [22].

Since squeezed states are Gaussian, they are characterized entirely by their quadrature variances

| (2) |

where the quadrature operators and satisfy the commutation relations , and the mean photon number and coherence per mode is defined as and , respectively.

While most theoretical analyses of GBS consider pure squeezed state inputs, this is impractical, since decoherence effects such as mode mismatches [3], partial distinguishability [23], and many more [24] will always be present during experimental applications in quantum optics. Therefore, a realistic model of input states are Gaussian thermalized squeezed states for which a simple parameterization was introduced in [15]. Here, a thermalization component alters the intensity of the input photons as measured by the coherence, which is reduced by a factor of such that . The quadrature variances are then altered as [15]

| (3) |

where for the pure squeezed state definition Eq.(2) returns, while produces fully decoherent thermal states with . More complex thermalized models are also possible, for example with mode dependent thermalization, .

In the ideal GBS scenario, the transmission matrix is a Haar random unitary. However experimentally realistic transmission matrices contain losses, making them non-unitary. Throughout this paper, is used to define a lossy transmission matrix, while defines a Haar random unitary. When losses are present, the creation and annihilation operators for the input modes are converted to output modes following

| (4) |

where is a random noise matrix representing photon loss to -the environmental mode . The resulting output photon number is then measured by a series of photodetectors.

II.1 Photon counting projectors

| (5) |

where denotes normal ordering and is the number of measured photon counts at the -th detector. Although theoretically there is no upper bound on , practically PNR detectors saturate for some maximum number of counts . This value is variable, typically depending on the physical implementation of the detector.

In any multi-mode photon counting experiment, a set of photon count patterns are observed by measuring the output state . A straightforward extension of Eq.(5) allows one to define the projection operator for a specific count pattern as

| (6) |

For GBS, each pattern is a sample from the full output probability distribution and computing its expectation value corresponds to solving the matrix Hafnian [7, 27]

| (7) |

which is a #P-hard computational task due to its relationship to the matrix permanent. Here, is the Hafnian of the square sub-matrix of for pure state inputs with zero displacement, where the sub-matrix is formed from the output channels with recorded photon counts. The symmetric matrix is related to the kernel matrix which is formed from the covariance matrix as [28]

| (8) |

where denotes the identity matrix. When the covariance matrix elements are non-negative, the kernel matrix and hence the symmetric matrix are also non-negative [28] and the Hafnian can be estimated in polynomial time [29, 30].

In the current generation of GBS networks, photon loss is the dominant noise source. Although there is evidence that sampling from a lossy GBS distribution is also a #P-hard computational task [31], output photons from experiments implementing high loss networks become classical [32], allowing the output distribution to become efficiently sampled. There is currently no clear boundary between the computationally hard and efficiently sampled regimes, but a classical algorithm that exploits large experimental photon loss has recently been developed [33] and shown to produce samples that are closer to the ideal ground truth than recent experiments claiming quantum advantage. The effects of thermalization on the computational complexity of GBS is currently unknown, despite thermalization causing nonclassical photons to become classical at a faster rate than pure losses.

II.2 Grouped count probabilities for PNR detectors

Determining photon counting distributions by binning the photons arriving at photodetectors has a long history in quantum optics. Although binning methods can vary, e.g. binning continuous measurements within a time interval such as inverse bandwidth time [34, 35], such distributions are both experimentally accessible and theoretically useful. For pure squeezed states these can provide evidence of nonclassical behavior, as observed in the even-odd count oscillations [36]. For GBS there is also a statistical purpose, as the set of all possible observable patterns grows exponentially with mode number, causing the output probabilities to become exponentially sparse.

In this paper, we are interested in the total output photon number operator obtained from a subset of efficient detectors:

| (9) |

Our observable of interest is then the photon number distribution corresponding to counting the projectors onto eigenstates of .

Following from the nomenclature introduced in [15], we define the -dimensional grouped photon counting distribution as the probability of observing grouped counts in distinct subsets of output modes , such that

| (10) |

where is the total correlation order and each grouped count is obtained from the summation . The general form of the pattern projector for any number of output modes within each subset is

| (11) |

Multi-dimensional probabilities are defined over a vector of disjoint subsets of mode numbers , in which case GCPs become joint probability distributions. This is readily illustrated when and , giving . Increasing the dimension to while keeping the correlation order constant at causes the GCP to converge to the Hafnian.

Therefore, if the correlation order satisfies , an increase in GCP dimension corresponds to observing the -th binned Glauber intensity correlation, hence high-order correlations become more statistically significant in the limit . If such correlations are present in the experimental data, multi-dimensional GCPs provide a more thorough test of quantum advantage as they allow one to directly compare these high-order correlations with their theoretical values [16]. If , the GCP becomes a marginal probability as the remaining output modes are ignored.

III Normally-ordered phase-space methods

Here we summarize phase-space methods, and explain the motivation for using them. The purpose is to transform an exponentially complex Hilbert space into a positive distribution that can be randomly sampled. Normally-ordering methods are the closest to experimental statistics using photon-counting. Representations that are not normally ordered, like the Wigner [37] or Q-function [38] approach, have a rapidly growing sampling error with system size.

III.1 Glauber-Sudarshan representation

In its original formulation, Mandel [9, 10] used the normally ordered Glauber-Sudarshan P-representation [11, 12] to evaluate the expectation value in Eq.(10). The P-representation expands the density operator as a diagonal sum of coherent states [11]

| (12) |

hence its commonly called the diagonal P-representation, where is a multi-mode coherent state vector with eigenvalues and is the P-representation probability distribution. The diagonal P-representation can be used to evaluate expectation values of the product of normally ordered operators due to the relationship

| (13) |

where is a quantum expectation value, is an ensemble of random phase-space samples and is the diagonal-P phase-space ensemble mean.

| (14) |

where . However, this is only valid for classical states such as coherent, thermal and squashed states [39] as they are defined entirely by their diagonal density matrix elements. Since the density operator of nonclassical states contains off-diagonal elements due to quantum superpositions, if the diagonal P-representation is used for simulations of nonclassical states becomes singular and negative. From standard mathematical requirements that it should be real, normalizable, non-negative and non-singular, it is no longer a probability distribution.

III.2 Positive-P representation

To simulate the photon number distributions of nonclassical states one must instead use the normally ordered positive P-representation [40], which is part of a family of generalized P-representations that produce non-singular and exact phase-space distributions for any quantum state. The positive P-representation expands the density operator as a -dimensional volume integral [40]

| (15) |

where the additional dimensions allow off-diagonal density matrix elements to be included in the coherent state basis with eigenvalues . The coherent amplitudes , are independent and can vary along the entire complex plane. We can also take the real part of Eq.(15), since the density matrix is hermitian. The benefit of using the positive P-representation for photon counting experiments is that it includes the diagonal P-representation when the positive-P distribution satisfies . This allows us to define classical phase-space as having , while a nonclassical phase space requires non-vanishing probability of .

Expectation values of products of normally ordered operators for nonclassical states can now be directly calculated from the moments of the positive-P distribution

| (16) |

where we define as the positive-P ensemble mean and have used the c-number replacements and .

To simulate Eq.(10) for nonclassical states requires replacing the total output photon number Eq.(9) by the phase-space observable

| (17) |

such that the general multidimensional probability distribution is

| (18) |

where and the phase-space pattern projector is

| (19) |

We have taken the real part as is required for the applications presented in the next section.

Equation 18 is a more general definition of GCPs than Mandel’s original formulation, since one can now simulate the marginal and multidimensional probabilities of any classical or nonclassical photon counting experiment. To simulate GCPs for input photons in a number state, it is more efficient to use another generalized P-representation: the complex P-representation. In this case includes a complex weight due to expanding the density operator as a contour integral [40]. Although the positive P-representation exists for Fock states, it has larger sampling errors than the complex version [41], meaning fewer stochastic samples are needed to perform accurate simulations.

Unlike methods for threshold detectors [15], Eq.(18) doesn’t require multidimensional inverse discrete Fourier transforms, which increase computation time significantly [16]. Since threshold detector outputs are always binary, the largest number of observable photon counts in a pattern corresponds to a detection event in every output mode, such that where the largest observable grouped count is .

Since PNR detectors can resolve multiple photons arriving at each detector, an experiment or classical algorithm replicating photo-detection measurements can now produce count patterns where , in which case is no longer the largest observable grouped count. Instead of scaling with mode number, numerical simulations scale with photon count bins, where we define the maximum observable grouped count as . For experiments with small mean input photon numbers or high losses, one may have , while low losses and large photon numbers will produce . Typically, we choose to occur when probabilities are of the order or smaller, providing a standard cutoff for very small probabilities as was implemented in previous methods [15, 16].

Since our observable of interest is the total output photon number Eq.(9), this method requires a photon counting experiment to accurately measure the number of counts arriving at each detector, such that . Failure to satisfy this requirement would mean output photons in the summations Eq.(9) would be larger than detected counts, leading to inaccurate comparisons. In some regards this is a benefit, as it allows one to determine whether the experimental counts are actually measuring all possible output photons, as some exact algorithms exploit these unmeasured photons to decrease GBS simulation time [20].

III.3 Squeezed states in phase-space

Initial coherent amplitudes are generated by randomly sampling the phase-space distribution of a specific state. For most states, analytical forms of this distribution exist, allowing one to derive an analytical expression for the initial samples of each state. Here we simply recall previously derived results for squeezed states [15], so that the theory presented is complete, since the form of the positive-P distribution doesn’t depend on the type of measurement, that is, PNR or not.

For applications to GBS, we first consider the ideal case with pure squeezed state inputs. In order to define the input density operator Eq.(1) in the positive P-representation we first expand each state as an integral over real coherent states [42]

| (20) |

with normalization constant and . After some straightforward calculations, the input density operator becomes

| (21) |

where the positive-P distribution for pure squeezed states is [15]

| (22) |

For small , thermalized squeezed states remain nonclassical and produce a modified output distribution that more closely resembles recent experimental distributions using threshold detectors [15, 16]. Due to this non-classicality, it has been shown [16] that even if an experiments claim of quantum advantage against the ideal distribution becomes invalidated by classical algorithms that replicate photo-detection measurements [18, 19, 16], it may still be able to claim quantum advantage against this non-ideal thermalized distribution.

From this model of decoherence, one can introduce a general random sampling formalism to generate initial stochastic samples for any Gaussian input [15]

| (23) |

where are real Gaussian noises and the quadrature variances are defined in Eq.(3). For classical inputs, is real and . For quantum inputs, is imaginary and . Following from Eq.(4), the output stochastic amplitudes used to perform simulations are then obtained via the transformations and where the amplitudes , correspond to measurements of the density matrix .

IV Exact models of GBS grouped count distributions

The photon statistics of pure squeezed states have been studied extensively in quantum optics [34, 35, 7, 31]. Assuming the input modes have equal squeezing parameters and hence, equal photon numbers , the one-dimensional GCP, or total count probability, can be computed exactly. For the case of pure squeezed states transformed by a matrix with uniform amplitude loss coefficient , applied as , the exact total count distribution takes the form [31]

| (24) |

which is defined for , where , is the success probability, and is the Gauss hypergeometric function with , , .

| (25) |

which contains distinct oscillations between even and odd counts. In the limit , it reduces to a Poissonian for the pair counts [35]

| (26) |

while the full distribution is not Poissonian.

Such photon statistics are characteristic of photon bunching which, unlike photon anti-bunching, is not an exclusively nonclassical phenomena [25]. However as squeezed photons are always generated in highly correlated pairs, bunching is indicative of the non-classicality of the measured photons. The presence of even-odd oscillations [36], even for networks with decoherence and loss, provides an explicit test of non-classicality although their absence does not exclude non-classicality.

In this section, we focus on comparing positive-P moments to exact distributions of GBS networks whose size, mean squeezing parameters and loss rates correspond to those found in the PNR experiments of Madsen et al [5]. However, we note that when even-odd count oscillations are present in lossless and ultra-low loss photonic networks, the positive-P moments suffer from slow convergence and large sampling errors.

Since the even-odd count oscillations vanish as soon as one has a high chance of losing a photon, the lossless and ultra-low loss regimes are not relevant to any current or near future experimental network, which are both far from lossless and whose photon number distribution is approximately Gaussian for large system sizes with loss rates as small as . Therefore, a detailed theoretical analysis of the positive P-representation in the lossless and ultra-low loss regimes will not be performed here, as projected methods [21] may converge faster in such regimes.

IV.1 Comparisons with small and large sized networks

To test whether Eq.(18) can be utilized to simulate nonclassical photon counting experiments using PNR detectors with perfect squeezers, photodetectors and photonic networks with uniform photon loss, we first simulate small sized GBS networks and with uniform squeezing and amplitude loss rates and , respectively.

Comparisons between positive-P moments and the exact distribution Eq.(24) are presented in Fig.(1a) and Fig.(1b) for these networks where simulations are performed for an ensemble size of . In the limit of large the positive-P moments converge to moments of a distribution (see Eq.(16)). The exact size of required for a given accuracy varies. An ensemble size of the order is generally needed for relative errors less than , typical of current experimental accuracy.

For both network sizes and loss rates, the positive-P moments converge to the required exact distribution with errors . Increasing the network sizes to and , positive-P moments simulated for loss rates and are presented in Fig.(1c) and Fig.(1d). In both cases, moments converge to the exact distribution with errors such that they are not visible in the graphics. Therefore, in the loss regimes of the current experiments, the positive P-representation provides an accurate method to validate experimental networks. For larger sized networks within the ultra-low loss regime this is also true, as the even-odd count oscillations vanish for much smaller amplitude loss rates.

V Numerical simulations of experimental networks

V.1 Statistical tests

A number of statistical tests have been proposed to validate experimental GBS networks. These include total variation distance [18, 5], cross-entropy measures [18, 19, 33], two and higher-order correlation functions [43, 4], and Bayesian hypothesis testing [4, 39]. Although these tests provide useful insights into the behavior of specific correlations, they are limited to either small size systems or patterns with small numbers of detected photons, as they require computing the probabilities of specific count patterns, which is a #P-hard task.

If these patterns are closer to the ground truth distribution than classical algorithms replicating photo-detection measurements, then its assumed patterns from larger sized systems claiming quantum advantage will also pass these tests. The ground truth distribution is the target distribution GBS aims to sample from when losses are included in the transmission matrix. Generally its assumed to be close to the ideal lossless distribution, although this may not necessarily be the case.

In this work, the primary statistical test used to validate experimental data are chi-square tests [44], which in terms of GCPs are defined as [15]

| (27) |

Here we use the shorthand notation for the stochastic trajectory to denote the positive-P GCP ensemble means of the photon count bin for the grouped count, which coverages to the true theoretical GCP in the limit for any general input state . Simulated ensemble means are then compared to the experimental GCPs formed from photon count patterns.

Chi-square tests are used for two reasons: the first is that its a standard test applied to data from random number generators [14], where the summation is performed over count bins satisfying . The second is that it contains an explicit dependence on both theoretical and experimental sampling errors as , where is the estimated experimental sampling error. This provides a method of accurately determining whether observed differences are caused by large sampling error, as is the case for sparse data.

Output values follow a chi-squared distribution that is positively skewed for small . Performing a Wilson-Hilferty transformation [45], which transforms the chi-square result as , the chi-square distribution converges to a Gaussian distribution with mean and variance for due to the central limit theorem [45, 46]. This allows one to convert chi-square results into measurable probability distances using approximate Z-statistic, or Z-score, tests [47]

| (28) |

which measures how many standard deviations away a test statistic is from its expected normally distributed mean.

The results presented in subsequent subsections use the notation introduced in [16]. Comparisons between theory and experiment for simulations with pure squeezed states input into the experimental -matrix are denoted by the subscript , which we call the ideal ground truth distribution. Our ideal ground truth corresponds to the ground truth distributions used in Ref. [5]. However they differ from the non-ideal, or modified, ground truth distribution that includes realistic experimental effects such as thermalization, and measurement corrections in the theoretical simulations, which are denoted by the subscript .

In all cases, agreement within sampling errors produces test results of and when accounting for stochastic fluctuations in the output statistics. For Z-statistic tests we use a regime of validity with the limit to define accurate comparisons. Above this limit test statistics are more than from their predicted means, corresponding to very small observable probabilities.

V.2 Grouped count probability comparisons

Due to claims of quantum advantage, in this section we focus predominantly on validating count patterns from the -mode high squeezing (HS) parameter and -mode experimental data sets. Summary tables of chi-square and Z-statistic test results for all Borealis data sets can be found in Appendix A.

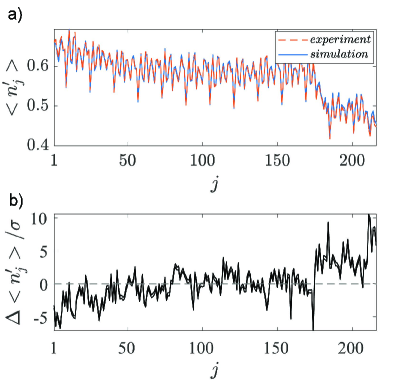

First, we compare the theoretical one-dimensional GCPs of the ideal ground truth distribution to the experimental total counts. Similar comparisons were performed in [5], however it was found that at least for the -mode data set the ideal ground truth total count distribution was incorrect [48], leading to inaccurate comparisons. Results of phase-space simulations are presented in Fig.(2a) for the -mode HS data set and Fig.(3a) for the -mode data set. In both cases, the simulated GCP ideal ground truth distributions appear to closely resemble the experimental data. However, upon closer inspection there are significant differences between the two distributions.

This is first observed numerically from statistical testing, where the -mode HS experiment produces a chi-square result of with and count patterns from the -mode experiment output with . Although both experimental GCPs are far from their pure squeezed state distributions, the -mode distribution is further from its expected normally distributed mean. These numerical differences are also reflected graphically by plotting the normalized difference between distributions , which is plotted in Fig.(2b) for the -mode HS data set and Fig.(3d) for the -mode data set.

Once a thermalization component is added to the input states and a transmission matrix measurement correction is applied as , the positive-P simulated GCPs agree within sampling error to the experimental distributions. The -mode HS data set requires and to output and , while the best-fit theoretical GCP for -mode data uses a similar thermalization component and measurement correction to produce and . This exact agreement between theory and experiment is reflected in the normalized difference plots as seen in Fig.(2d) and Fig.(3d) which have noticeably decreased from their pure squeezed state comparisons.

Similar results are obtained for the -mode and -mode low squeezing (LS) experiments. In these cases, comparisons with the ideal ground truth, which output and respectively, converge to a within sampling error agreement with a thermalized theory such that for the -mode experiment and for the -mode LS experiment.

Despite this excellent agreement between theoretical and experimental GCPs once decoherence and measurement corrections are accounted for, we note that stronger validation tests of quantum advantage based on multidimensional GCPs cannot be performed on these data sets. As the dimension increases, the number of bins generated scales as . If there are too few counts per bin, i.e. the bins become more sparse, the experimental sampling errors of these bins increase dramatically, causing statistical tests to become dominated by sampling errors, outputting artificially small results.

Earlier works [16] on recent threshold detector experiments generating count patterns showed these sampling error limitations arose for a GCP dimension of , such that reliable validation tests could only be performed for . However, the majority of data sets from Borealis generate patterns, causing sampling errors to become dominant at only , limiting comparisons to only .

The exception to this is the -mode experiment whose samples were used benchmark the system architecture through total variation distance tests as the system is small enough that output photo-detection measurements can be replicated exactly. Interestingly, the one-dimensional GCP for this data set is further from its ideal ground truth distribution than most experiments performed using the Borealis quantum network, where and . Unlike data sets claiming quantum advantage, including decoherence and -matrix corrections is no longer enough to obtain a within sampling error agreement between theoretical and experimental GCPs, instead producing statistical test results of and .

Due to the large number of samples, statistical tests of multi-dimensional GCPs can be performed on this data set. A small increase in the GCP dimension to shows the -mode data is even further from both the ideal and non-ideal ground truth distributions once high-order correlations become more statistically prevalent, outputting and . An exponential number of comparison tests can be performed by randomly permuting the measured photon count patters [16], where each permutation allows one to compare different binned correlation moments. As some correlation moments may be closer to their theoretical values than others, Z-scores are likely to vary between permutations, as was observed in threshold detector experiment comparisons [16]. Focusing on comparisons with the non-ideal ground truth, a mean increase in the resulting Z-scores is observed from ten permutations, such that , where denotes a mean over random permutations. Although some permutations are closer to the non-ideal ground truth, with the closest being , the majority are further, where the largest observed Z-score was from a single permutation.

Although this is not unexpected, such results indicate further systematic errors besides those tested are present. We conjecture that the presence of further systematic errors other than decoherence and measurement errors are also present in the -mode HS and -mode implementations. However the small sample size means such errors cannot be resolved in statistical testing for these data sets, limiting a thorough analysis.

V.3 Photon number moments

Finally, for completeness, we simulate the mean output photon number moments for all modes, where summary tables of statistical test results for all data sets is also presented in Appendix A. As with the GCP comparisons, count patterns from the -mode HS and -mode experiments are far from their theoretical first-order moments for pure squeezed state inputs with and for the -mode HS and -mode data sets respectively.

However, unlike the GCP comparisons, decoherence and transmission matrix corrections no longer cause the theoretical moments to be within error of the experimental moments, where for the -mode HS data set and the -mode data set is standard deviations away from its expected mean. Similar trends are present for all data sets where, as was the case for GCPs, the -mode moments are furthest from their expected first order moments than any other experiment. We emphasize that statistical testing is more accurate for this data set due to the large number of experimental samples, allowing one to resolve finer differences between theoretical and experimental photon number moments.

One noticeable feature in the experimental and theoretical moments is the decrease in as , with sharp decreases occurring for later modes as seen in Fig.(4) for and Fig.(5) for , while distinct sharp spikes in the moments occur every modes. This clear structure is present in all data sets from this experiment but is not present in previous implementations with threshold detectors [3, 4] and is likely due to the time-domain multiplexing scheme used to form the linear network in Ref. [5]. The experimental set-up interferes a train of input squeezed photons on multiple fibre delay lines separated by temporal bins of various dimension. Output pulses are then sent into a 1-to-16 mode demultiplexer before measurements are performed by PNR detectors.

Considering its input-output ratio the demultiplexer may be the cause of the periodic spikes. However the structure in the form of the overall decrease in for increasing mode number may be due to the use of fibre delay lines. Although providing a more compact architecture, it comes at the expense of reduced connectivity, i.e. photon interference between all modes isn’t possible. This has the effect of introducing a structure to the output photons that can be exploited by classical samplers such as the tree-width sampler [49].

VI Conclusion

In this paper, we extended a binning method developed by Mandel [9, 10] to simulate nonclassical photon statistics of photon-number resolving detectors using the positive P-representation. We performed validation tests on quantum computers claiming quantum advantage with photon-number resolving detectors.

This method is computationally efficient, with computational run-times of a few minutes on a 12-core desktop at floating point operations/ sec (Linpack Flops) for one-dimensional GCP simulations of the largest sized networks implemented in the paper. Since the positive P-representation accurately computes correlation moments to any order generated in the photonic network, it is a time and resource efficient method of validation compared to classical algorithms that compute the Hafnian to replicate photo-detection measurements, or tensor network approximations [31]. Increasing the GCP dimension causes an increase in computation time, although this is still significantly faster than other validation methods. Directly calculating Hafnians are estimated [31] to take hours on Fugaku, one of the worlds largest supercomputers in 2024, at Flops. This would compute one probability, but rounding errors are unknown, as the calculation has not occurred. Generating enough classical counts for validation using this approach would take [31] on Fugaku, indicating an overall speed-up of using the +P method at these network sizes.

We emphasize that our method does not generate classical counts, so it is strictly for validation of the computational task, and does not replicate the required random counts. Validation tests were performed on GBS data from a recent experiment claiming quantum advantage [5]. We showed that within sampling error agreement between theory and experiment for GCPs is obtained once decoherence is included in the input states, and a transmission matrix measurement error correction is applied. There are still large discrepancies in individual channel count probabilities, possibly because these can be calculated very accurately. This result agrees with a trend obtained previously for threshold detector experiments [15, 16].

These comparisons with threshold detector experiments are slightly misleading. Due to the smaller number of experimental samples from data sets claiming quantum advantage using PNR methods, only a small fraction of the possible tests could be performed. This was because experimental sampling errors were too large to apply more sensitive tests. Based on comparisons of the -mode experimental data, whose sample size was large enough to perform accurate statistical testing, we conjecture that more experimental errors are present in the quantum advantage data sets than those tested for in this paper.

In the longer term, due to its computational efficiency and ability to generate any measurable moment, the positive-P based binning method could be used as a means to provide error correction, either by correcting parameter values as shown in this paper, or by giving feedback so they can be adjusted. We suggest that this could provide a more reasonable route to quantum computational advantage than simply using improved hardware, as is also thought to be the case for logic gate based quantum computers.

Finally, even though we focused here on GBS networks with pure or thermalized squeezed states, both the binning method and positive P-representation can be used to simulate photon statistics of any nonclassical state.

Acknowledgments

ASD would like to thank Nicholas Quesada, Fabian Laudenbach and Jonathan Lavoie for helpful discussions. This research was funded through grants from NTT Phi Laboratories and a Templeton Foundation grant ID 62843.

Appendix A: Summary of all experimental comparisons

The Borealis GBS implemented in [5] performed experiments for varying system sizes of . The -mode experiment was repeated for the mean squeezing parameters and , hence they are distinguished as high squeezing (HS) and low squeezing (LS) experiments, respectively. The ideal ground truth distributions for each data set are formulated from their corresponding lossy transmission matrices and simulated pure squeezed states using squeezing vectors .

The graphs presented above have results of statistical tests for comparisons of theory and experiment predominantly for the -mode HS and -mode data sets, due to claims of quantum advantage. Here we summarize statistical test results for all data sets in [5], starting with one-dimensional GCPs for the ideal ground truth in Table.(1). We use the subscripts and to denote comparisons between experimental data and the ideal and non-ideal ground truth distributions, respectively.

A summary of the non-ideal ground truth comparisons are presented in Table.(2), where the and fitting parameters are found using a Nelder-Mead simplex algorithm which minimizes the distance between experimental and theoretical distributions. As stated earlier, experimental data agrees with their theoretical predictions within sampling error for all data sets bar the -mode experiment. Meanwhile comparisons of the mean output photon number moments are presented in Table.(3) for both the ideal and non-ideal ground truth distributions.

| Mode number | |||

|---|---|---|---|

| -(LS) | |||

| -(HS) | |||

| Mode number | |||||

|---|---|---|---|---|---|

| -(LS) | |||||

| -(HS) | |||||

| Mode number | ||

|---|---|---|

| -(LS) | ||

| -(HS) | ||

Appendix B: Estimating experimental sampling errors of moments

Accurately estimating sampling errors is essential when comparing theoretical simulations with experimental data obtained via sampling. This is particularly important when using statistical tests that are dependent on sampling errors, as incorrect estimates will result in misleading test results.

Theoretical sampling errors are estimated numerically by subdividing the total phase-space ensemble as . Breaking the full ensemble of phase-space samples into sub-ensembles is a common numerical procedure for applications of phase-space representations yielding two complementary benefits: efficient multi-core parallel computing, decreasing computation time depending on available resources, and estimates of theoretical sampling errors [16, 41].

The first sub-ensemble corresponds to sampling from the Gaussian phase-space distribution times and is the number of times the computation is repeated. Provided , the second sub-ensemble allows one to accurately estimate the standard deviation in the ensemble mean as [47, 50], where is the computed sample standard deviation which takes the general form [16, 41]

| (29) |

Here, is some general phase-space observable, denotes the first sub-ensemble mean for the trajectory and is the full ensemble mean. In statistics literature [47, 51], is commonly called the standard error of the mean and its valid for both binned distributions and moments.

For experimental sampling errors defined as , we are interested in estimating the standard deviation of the experimental sample means . Unlike theoretical sampling errors this isn’t performed numerically. Instead, the variances of GCPs for both threshold and PNR detector experiments have Poissonian fluctuations, such that for some general input state . Since the true theoretical GCP is seldom known, we estimate it in phase-space as .

However, estimating for moments (either raw or central) and cumulants is somewhat more complicated as these aren’t probabilities but instead tell one about various properties of a probability distribution. In this appendix, we describe the method of estimating experimental sampling errors of raw moments used in the comparisons presented in the main text. Details on how to estimate sampling errors for central moments and cumulants can be found in [51, 52, 53].

Sampling errors of raw moments

Let be an i.i.d random variable with probability density function , then the -th raw moment is defined as

| (30) |

Suppose one generates samples denoted from the distribution . The -th sample raw moment is then defined as [51, 52, 53]

| (31) |

the expected value of which is an unbiased estimator of

| (32) |

For our purposes, we are interested in the variance . Following from Ref. [51], the expectation value of the squared moment is simplified as

| (33) |

where the summations have been expanded to include instances of equal and non-equal samples. Utilizing the simplifications from Eq.(32) and , the second-order sample raw moment is simplified as

| (34) |

allowing one to estimate the variance in the sample raw moment as [51]

| (35) |

Its possible to extend the above description for univariate statistics to the multivariate case. Let be a vector of i.i.d random variables with multivariate probability density , where . The -th raw moment is then defined as

| (36) |

As in the univariate case, suppose one generates samples of the -th random variable in , denoted , then the multivariate -th sample raw moment is defined as [52]

| (37) |

whose expected value is an unbiased estimate of the multivariate raw moment [53]

| (38) |

Following from the derivation of the univariate case, the variance of the multivariate raw sample moment is obtained as [54]

| (39) |

By setting , sampling errors for higher photon number moments such as , can now be estimated.

Accuracy of sampling error estimates

In this paper, the raw moments of interest are the mean output photon numbers per mode . Using Eq.(35), the experimental sampling errors can therefore be estimated as

| (40) |

where, as was the case for GCPs, we estimate the true theoretical photon number moments in phase-space as . To determine the accuracy of this estimate, we must generate photon count patterns corresponding to PNR detectors from an exactly known model and compare the estimated sampling error with the standard deviation of the mean output photon number of the count patterns from their exact values. To do this, the simplest method is to use classical states.

Classical state PNR photo-detection algorithm

In the case of classical states input into a GBS linear network, one has rotated to a classical phase-space and we can use the coherent amplitudes corresponding to the diagonal P-representation to replicate photo-detection measurements of PNR detectors.

Generally, each stochastic trajectory in corresponds to a single experimental shot. Therefore one can conserve the classical correlations generated within the network by computing the phase-space observable projector of the -th detector , which corresponds to the operator Eq.(5), for the -th trajectory as

| (41) |

where , in the diagonal P-representation and is a photon count of our fake experiment up to some maximum cut-off .

The phase-space projectors determine the probability of observing a specific count . Since each detector can distinguish between multiple oncoming photons, the actual computation involves calculating a vector of probabilities for each stochastic trajectory:

| (42) |

where is the success probability of observing no counts at the -th detector, is the success probability of observing a single count at the -th detector, and so on. The PNR photo-detection measurements are then replicated by randomly sampling Eq.(42), which outputs the fake photon count vector

As the method scales with the total phase-space ensemble size, the number of faked patterns generated is . These are binned in the same way as the experimental data, where is the fake grouped count corresponding to the GCP .

Tests of estimate accuracy

The photon counting distributions for a number of classical states can be exactly computed, one of which is multi-mode thermal states. The one-dimensional GCP of a multi-mode thermal state is obtained from the single-mode distribution [25]

| (43) |

where is the success probability requiring a uniform squeezing parameter and hence equal photon numbers . The single-mode theory follows a geometric distribution which is a special case of the negative binomial distribution: if is an independent and geometrically distributed random variable with parameter , then follows a negative binomial distribution with parameters and .

The one-dimensional GCP theory of multi-mode thermal state inputs therefore follows a negative binomial distribution

| (44) |

For the case of thermal photons transformed by a Haar random unitary, the input and output mean photon numbers are equal and the photon number variance can be computed exactly as [55].

Now that we have a method of generating photon counts and an exactly known model with which to compare those counts, we calculate the accuracy of the estimate Eq.(40) for thermal states with uniform squeezing sent into a lossless linear network described by a Haar random unitary matrix . We generate count patterns using a detector cut-off of and bin them to compute the mean output photon number , which is compared to the exact value in Fig.(6).

Following from Eq.(40), the sampling error of our fake experiment is estimated by setting , such that

| (45) |

If this is an accurate estimate, it should converge to the actual error between moments

| (46) |

which is simply the standard deviation as is our expected mean value.

For the data in Fig.(6), our estimated sampling error is while the actual error is , corresponding to an agreement. If instead we assumed one could estimate the errors as Poissonian fluctuations, such as for GCPs, then which is only of the actual error.

Therefore, Eq.(40) is an accurate method of estimating experimental sampling errors of moments. Naturally, the larger the number of experimental samples the better the estimate will be as the experimental moments will converge to their ground truth values more readily.

References

- Knill et al. [2001] E. Knill, R. Laflamme, and G. J. Milburn, A scheme for efficient quantum computation with linear optics, Nature 409, 46 (2001).

- Aaronson and Arkhipov [2013] S. Aaronson and A. Arkhipov, The Computational Complexity of Linear Optics, Theory of Computing 9, 143 (2013).

- Zhong et al. [2020] H.-S. Zhong, H. Wang, Y.-H. Deng, M.-C. Chen, L.-C. Peng, Y.-H. Luo, J. Qin, D. Wu, X. Ding, Y. Hu, et al., Quantum computational advantage using photons, Science 370, 1460 (2020).

- Zhong et al. [2021] H.-S. Zhong, Y.-H. Deng, J. Qin, H. Wang, M.-C. Chen, L.-C. Peng, Y.-H. Luo, D. Wu, S.-Q. Gong, H. Su, Y. Hu, P. Hu, X.-Y. Yang, W.-J. Zhang, H. Li, Y. Li, X. Jiang, L. Gan, G. Yang, L. You, Z. Wang, L. Li, N.-L. Liu, J. J. Renema, C.-Y. Lu, and J.-W. Pan, Phase-Programmable Gaussian Boson Sampling Using Stimulated Squeezed Light, Phys. Rev. Lett. 127, 180502 (2021).

- Madsen et al. [2022] L. S. Madsen, F. Laudenbach, M. F. Askarani, F. Rortais, T. Vincent, J. F. F. Bulmer, F. M. Miatto, L. Neuhaus, L. G. Helt, M. J. Collins, A. E. Lita, T. Gerrits, S. W. Nam, V. D. Vaidya, M. Menotti, I. Dhand, Z. Vernon, N. Quesada, and J. Lavoie, Quantum computational advantage with a programmable photonic processor, Nature 606, 75 (2022).

- Deng et al. [2023] Y.-H. Deng, Y.-C. Gu, H.-L. Liu, S.-Q. Gong, H. Su, Z.-J. Zhang, H.-Y. Tang, M.-H. Jia, J.-M. Xu, M.-C. Chen, J. Qin, L.-C. Peng, J. Yan, Y. Hu, J. Huang, H. Li, Y. Li, Y. Chen, X. Jiang, L. Gan, G. Yang, L. You, L. Li, H.-S. Zhong, H. Wang, N.-L. Liu, J. J. Renema, C.-Y. Lu, and J.-W. Pan, Gaussian boson sampling with pseudo-photon-number-resolving detectors and quantum computational advantage, Phys. Rev. Lett. 131, 150601 (2023).

- Hamilton et al. [2017] C. S. Hamilton, R. Kruse, L. Sansoni, S. Barkhofen, C. Silberhorn, and I. Jex, Gaussian boson sampling, Phys. Rev. Lett. 119, 170501 (2017).

- Quesada et al. [2018] N. Quesada, J. M. Arrazola, and N. Killoran, Gaussian boson sampling using threshold detectors, Physical Review A 98, 062322 (2018).

- Mandel and Wolf [1965] L. Mandel and E. Wolf, Coherence Properties of Optical Fields, Rev. Mod. Phys. 37, 231 (1965).

- Mandel and Wolf [1995] L. Mandel and E. Wolf, Optical Coherence and Quantum Optics (Cambridge University Press, Cambridge, 1995).

- Glauber [1963a] R. J. Glauber, Coherent and Incoherent States of the Radiation Field, Phys. Rev. 131, 2766 (1963a).

- Sudarshan [1963] E. C. G. Sudarshan, Equivalence of Semiclassical and Quantum Mechanical Descriptions of Statistical Light Beams, Phys. Rev. Lett. 10, 277 (1963).

- Bassham III et al. [2010] L. E. Bassham III, A. L. Rukhin, J. Soto, J. R. Nechvatal, M. E. Smid, E. B. Barker, S. D. Leigh, M. Levenson, M. Vangel, D. L. Banks, et al., Sp 800-22 rev. 1a. a statistical test suite for random and pseudorandom number generators for cryptographic applications (2010).

- Knuth [2014] D. E. Knuth, Art of computer programming, volume 2: Seminumerical algorithms (Addison-Wesley Professional, 2014).

- Drummond et al. [2022] P. D. Drummond, B. Opanchuk, A. Dellios, and M. D. Reid, Simulating complex networks in phase space: Gaussian boson sampling, Phys. Rev. A 105, 012427 (2022).

- Dellios et al. [2023] A. S. Dellios, B. Opanchuk, M. D. Reid, and P. D. Drummond, Validation tests of gbs quantum computers give evidence for quantum advantage with a decoherent target (2023), arXiv:2211.03480 [quant-ph] .

- Glauber [1963b] R. J. Glauber, The Quantum Theory of Optical Coherence, Phys. Rev. 130, 2529 (1963b).

- Villalonga et al. [2021] B. Villalonga, M. Y. Niu, L. Li, H. Neven, J. C. Platt, V. N. Smelyanskiy, and S. Boixo, Efficient approximation of experimental gaussian boson sampling, arXiv preprint arXiv:2109.11525 (2021).

- Oh et al. [2023] C. Oh, L. Jiang, and B. Fefferman, Spoofing Cross-Entropy Measure in Boson Sampling, Phys. Rev. Lett. 131, 010401 (2023).

- Bulmer et al. [2022] J. F. F. Bulmer, B. A. Bell, R. S. Chadwick, A. E. Jones, D. Moise, A. Rigazzi, J. Thorbecke, U.-U. Haus, T. Van Vaerenbergh, R. B. Patel, I. A. Walmsley, and A. Laing, The boundary for quantum advantage in Gaussian boson sampling, Sci. Adv. 8, eabl9236 (2022).

- Drummond and Reid [2016] P. D. Drummond and M. D. Reid, Coherent states in projected hilbert spaces, Physical Review A 94, 063851 (2016).

- Drummond and Ficek [2004] P. D. Drummond and Z. Ficek, eds., Quantum Squeezing (Springer-Verlag, Berlin, Heidelberg, New York, 2004).

- Shi and Byrnes [2022] J. Shi and T. Byrnes, Effect of partial distinguishability on quantum supremacy in Gaussian Boson sampling, npj Quantum Inf 8, 54 (2022).

- Drummond and Opanchuk [2020] P. D. Drummond and B. Opanchuk, Initial states for quantum field simulations in phase space, Physical Review Research 2, 033304 (2020).

- Walls and Milburn [2008] D. Walls and G. Milburn, Quantum Optics (Springer, 2008).

- Sperling et al. [2012] J. Sperling, W. Vogel, and G. S. Agarwal, True photocounting statistics of multiple on-off detectors, Phys. Rev. A 85, 023820 (2012).

- Kruse et al. [2019] R. Kruse, C. S. Hamilton, L. Sansoni, S. Barkhofen, C. Silberhorn, and I. Jex, Detailed study of gaussian boson sampling, Physical Review A 100, 032326 (2019).

- Quesada and Arrazola [2020] N. Quesada and J. M. Arrazola, Exact simulation of gaussian boson sampling in polynomial space and exponential time, Physical Review Research 2, 023005 (2020).

- Barvinok [1999] A. Barvinok, Polynomial Time Algorithms to Approximate Permanents and Mixed Discriminants Within a Simply Exponential Factor, Random Struct. Alg. 14, 29 (1999).

- Rudelson et al. [2016] M. Rudelson, A. Samorodnitsky, and O. Zeitouni, Hafnians, perfect matchings and Gaussian matrices, Ann. Probab. 44, 10.1214/15-AOP1036 (2016).

- Deshpande et al. [2022] A. Deshpande, A. Mehta, T. Vincent, N. Quesada, M. Hinsche, M. Ioannou, L. Madsen, J. Lavoie, H. Qi, J. Eisert, D. Hangleiter, B. Fefferman, and I. Dhand, Quantum computational advantage via high-dimensional Gaussian boson sampling, Sci. Adv. 8, eabi7894 (2022).

- Qi et al. [2020] H. Qi, D. J. Brod, N. Quesada, and R. García-Patrón, Regimes of classical simulability for noisy gaussian boson sampling, Physical review letters 124, 100502 (2020).

- Oh et al. [2024] C. Oh, M. Liu, Y. Alexeev, B. Fefferman, and L. Jiang, Classical algorithm for simulating experimental gaussian boson sampling, Nature Physics , 1 (2024).

- Huang and Kumar [1989] J. Huang and P. Kumar, Photon-counting statistics of multimode squeezed light, Phys. Rev. A 40, 1670 (1989).

- Zhu and Caves [1990] C. Zhu and C. M. Caves, Photocount distributions for continuous-wave squeezed light, Phys. Rev. A 42, 6794 (1990).

- Mehmet et al. [2010] M. Mehmet, H. Vahlbruch, N. Lastzka, K. Danzmann, and R. Schnabel, Observation of squeezed states with strong photon-number oscillations, Phys. Rev. A 81, 013814 (2010).

- Wigner [1932] E. Wigner, On the Quantum Correction For Thermodynamic Equilibrium, Phys. Rev. 40, 749 (1932).

- Husimi [1940] K. Husimi, Some formal properties of the density matrix, Proc. Phys. Math. Soc. Jpn. 22, 264 (1940).

- Martínez-Cifuentes et al. [2023] J. Martínez-Cifuentes, K. M. Fonseca-Romero, and N. Quesada, Classical models may be a better explanation of the Jiuzhang 1.0 Gaussian Boson Sampler than its targeted squeezed light model, Quantum 7, 1076 (2023).

- Drummond and Gardiner [1980] P. D. Drummond and C. W. Gardiner, Generalised p-representations in quantum optics, Journal of Physics A: Mathematical and General 13, 2353 (1980).

- Opanchuk et al. [2018] B. Opanchuk, L. Rosales-Zárate, M. D. Reid, and P. D. Drummond, Simulating and assessing boson sampling experiments with phase-space representations, Physical Review A 97, 042304 (2018).

- Adam et al. [1994] P. Adam, I. Földesi, and J. Janszky, Complete basis set via straight-line coherent-state superpositions, Physical Review A 49, 1281 (1994).

- Phillips et al. [2019] D. Phillips, M. Walschaers, J. Renema, I. Walmsley, N. Treps, and J. Sperling, Benchmarking of gaussian boson sampling using two-point correlators, Physical Review A 99, 023836 (2019).

- Pearson [1900] K. Pearson, X. on the criterion that a given system of deviations from the probable in the case of a correlated system of variables is such that it can be reasonably supposed to have arisen from random sampling, The London, Edinburgh, and Dublin Philosophical Magazine and Journal of Science 50, 157 (1900).

- Wilson and Hilferty [1931] E. B. Wilson and M. M. Hilferty, The Distribution of Chi-Square, Proc. Natl. Acad. Sci. U.S.A. 17, 684 (1931).

- Johnson [1970] N. L. Johnson, Continuous Univariate Distributions, Houghton Mifflin Series in Statistics (Houghton Mifflin, Boston, 1970).

- Freund and Wilson [2003] R. J. Freund and W. J. Wilson, Statistical Methods (Elsevier, 2003).

- [48] Private communication. Photon counting distribution for the ideal ground truth of the 288-mode data was independently cross-validated. The distribution presented in the Borealis paper is closer to the experimental data than the actual ideal ground truth presented here.

- Oh et al. [2022] C. Oh, Y. Lim, B. Fefferman, and L. Jiang, Classical Simulation of Boson Sampling Based on Graph Structure, Phys. Rev. Lett. 128, 190501 (2022).

- Kloeden and Platen [1992] P. E. Kloeden and E. Platen, Stochastic Differential Equations, in Numerical Solution of Stochastic Differential Equations (Springer Berlin Heidelberg, Berlin, Heidelberg, 1992) pp. 103–160.

- Rao [2009] C. R. Rao, Linear Statistical Inference and Its Applications (John Wiley & Sons, 2009).

- Fisher [1930] R. A. Fisher, Moments and Product Moments of Sampling Distributions, Proceedings of the London Mathematical Society s2-30, 199 (1930).

- McCullagh [2018] P. McCullagh, Tensor Methods in Statistics: Monographs on Statistics and Applied Probability, first edition. ed., CRC Revivals (Chapman and Hall/CRC, Boca Raton, FL, 2018).

- Kendall et al. [1987] M. G. M. G. Kendall, A. Stuart, and J. K. Ord, Kendall’s advanced theory of statistics, 5th ed. (C. Griffin, London, 1987).

- Loudon [1983] R. Loudon, The Quantum Theory of Light, 2nd ed., Oxford Science Publications (Clarendon Press, Oxford, 1983).