UWB Radar SLAM: an Anchorless Approach in Vision Denied Indoor Environments

Abstract

LiDAR and cameras are frequently used as sensors for simultaneous localization and mapping (SLAM). However, these sensors are prone to failure under low visibility (e.g. smoke) or places with reflective surfaces (e.g. mirrors). On the other hand, electromagnetic waves exhibit better penetration properties when the wavelength increases, thus are not affected by low visibility. Hence, this paper presents ultra-wideband (UWB) radar as an alternative to the existing sensors. UWB is generally known to be used in anchor-tag SLAM systems. One or more anchors are installed in the environment and the tags are attached to the robots. Although this method performs well under low visibility, modifying the existing infrastructure is not always feasible. UWB has also been used in peer-to-peer ranging collaborative SLAM systems. However, this requires more than a single robot and does not include mapping in the mentioned environment like smoke. Therefore, the presented approach in this paper solely depends on the UWB transceivers mounted on-board. In addition, an extended Kalman filter (EKF) SLAM is used to solve the SLAM problem at the back-end. Experiments were conducted and demonstrated that the proposed UWB-based radar SLAM is able to map natural point landmarks inside an indoor environment while improving robot localization.

Index Terms:

Range Sensing, SLAM.I Introduction

Simultaneous localization and mapping (SLAM) is essential for a mobile robot to navigate through a previously unexplored environment to accomplish a given task. SLAM algorithms usually rely on the sensors attached to the robot. Hence, it is of interest to explore new sensing technologies to overcome the limitations of the existing methods.

At present, the most common exteroceptive sensors in SLAM are light detection and ranging (LiDAR) and optical camera systems[1, 2]. LiDAR uses laser beams to measure distance using time-of-flight, thus ambient lighting conditions do not affect its performance. LiDAR is widely used in state-of-the-art SLAM systems due to high accuracy and high sample density [3, 4]. Unlike LiDAR, typical visual cameras can be used in well-lit surroundings only. Furthermore, the light sources of both LiDAR and camera operate near the visible frequency region in the electromagnetic spectrum (see Fig. 1a). As a result, these optical measuring instruments are prone to fail under low visibility. This critically affects autonomous systems in adverse indoor conditions such as smoke and mirrors [5, 6, 7].

Sound navigation and ranging (sonar) is a good alternative to perform under low visibility, especially in underwater environments [8, 9]. Sonar deploys acoustic waves to detect objects. However, both optical and sonar systems suffer from the refraction effect. This is a key issue while navigating in high temperature environments, especially when it comes to fire-rescue missions and operations inside mines.

Considering these facts, recently, there have been several studies to exploit radio frequency (RF) bands instead of dedicated sensors for SLAM. RF signals are not significantly affected by temperature or low visibility weather [10]. Hence, the robot can be localized by ubiquitous narrowband RF systems such as Wi-Fi and Bluetooth which use received signal strength indication (RSSI) with trilateration or comparing the radio fingerprints [11, 12]. However, these systems depend on the existing infrastructure such as Wi-Fi access points and Bluetooth beacons. On the other hand, wideband RF systems such as mmWave frequency-modulated continuous wave (FMCW) radar and pulse-modulated ultra-wideband (UWB) radar are also used in SLAM systems [5, 13, 14, 15, 16].

This paper proposes a UWB radar-based system for a single mobile robot (mono-agent) to solve the SLAM problem in a vision denied indoor setting (see Fig. 1b) while generating a landmark-based map and estimating the pose of the robot. Most importantly, the proposed method entirely depends on the onboard sensors of the robot. Furthermore, a simple outlier filtering scheme has been proposed before fusing the radar observations with odometry.

The contributions of this paper are summarized as follows.

-

•

We present an UWB radar-based anchorless SLAM system mobile robot which is capable of loop closure using point landmarks identified inside indoor environments;

-

•

We propose removal of detected false observations using density-based spatial clustering of applications with noise (DBSCAN) to detect point landmarks;

-

•

Experiments were performed to evaluate the proposed approach in different indoor environments;

The rest of the paper is organised as follows. Related work is discussed in Section II. Section III gives an insight to UWB including the radar sensor used in our approach. A detailed explanation of the proposed approach is given in section IV which covers all the key aspects: signal acquisition and processing, trilateration, outlier removal using density-based clustering, and EKF SLAM with unknown correspondences. Section V validates the proposed approach in a real-world scenario. Finally, Section VI concludes the paper.

II Related Work

Most UWB-based robot localization approaches use anchor-tag sensor configurations. In such systems, a single or multiple anchors are usually fixed to known locations in the environment and the tags are mounted on the robots. The anchors are localizing the robot using two-way ranging (TWR)-based trilateration or by employing both TWR and angle-of-arrival information [19, 20, 21]. The predominant limitation of anchor-tag approach is that the robot depends on the existing infrastructure (i.e. pre-installed anchors).

The other major approach towards anchorless collaborative localization is by using peer-to-peer range measurements between multi-agents [22, 23]. In addition to localization, map building has also been studied under adverse conditions using UWB radar [10], [24].

There have been several studies which use a posteriori knowledge of the environment to perform UWB radar SLAM without anchors [15, 25]. These studies have been inspired from BatSLAM [26] which is an adaptation of RatSLAM [27]. The basic idea is to mimic the rat’s brain where it gathers experiences about the surrounding by remembering its pose and distinct visual scenes. Nonetheless, this method keeps a large dataset of previous observations (e.g. spectrograms [15] or cochleograms [26]) and requires high computational cost in order to compare the current place descriptor with the database. The major limitation is that the radar observations being susceptible to minor perturbations in the environment due to multipath propagation.

Moreover, Deißler et al. [28, 29] have used an onboard Bat-type UWB radar to perform SLAM using the detected features in the environment such as walls, points and corners. They have mainly focused on evaluating mapping accuracy using a precisely moving mechanical platform as well as odometry. However, they have not performed loop closure with their trajectory and the move-stop-rotation policy during the observations is quite tedious in practice. Moreover, their antenna setup takes a considerable footprint compared to off-the-shelf UWB radar modules.

In contrast to the above methods, the proposed system uses noisy odometry data along with the observations from off-the-shelf UWB radar modules to realize anchorless SLAM. We have systematically evaluated both mapping and localizing performance of the proposed system inside indoor environments with loop closures. Leveraging on simplicity in design and versatility, UWB radar SLAM systems generate sufficiently accurate results, and is deemed to be low cost and consume less power compared to FMCW radar [10].

III Background of Ultra-wideband

UWB is referred to in radio technology as a -10dB bandwidth that is larger or equal to 500 MHz or a fractional bandwidth greater than 20%. This technology is generally used to communicate between two devices and for indoor positioning (i.e. TWR discussed earlier) [30]. However, this study mainly focuses on utilizing UWB radar towards anchorless mobile robot SLAM. UWB radar is deemed to have a high range resolution and ability to distinguish multiple targets due to high bandwidth and ultra-narrow pulses [31]. Hence, UWB radar is also called impulse radar UWB (IR-UWB) and it is a prominent sensing principle for vital sign detection [32, 33, 34].

There are two types of radar: pulsed and continuous waves (CW) in which UWB falls under the pulsed category. Moreover, there are three types of radar systems based on antenna arrangement. i) Monostatic (i.e. transmitter and receiver collocated) ii) Bistatic (i.e. transmitter and the receiver are distant), and iii) Multistatic (i.e. a combination of monostatic and bistatic systems with a shared area of coverage) [35].

The principle sensor of the proposed setup is XeThru X4M300 by Novelda (see Fig. 2a). It is a monostatic IR-UWB radar module with inbuilt directional antennas for the transmitter and receiver (see Fig. 2b). Hence, this module is capable of ranging on its own[10], unlike tag-to-tag systems [36]. As shown in the radiation patterns in Fig. 3, the main lobe has an opening angle of 65∘ in both azimuth and elevation with a detection range up to 9.4 m and the back lobe effect is inconsiderable [37, 17, 18].

As mentioned in Section I, the other popular technology in the context of radar SLAM is mmWave (which belongs to FMCW). However, UWB is deemed to have higher accuracy and signal to noise ratio (SNR) compared to FMCW. In addition, FMCW tends to attenuate more in indoor settings [33, 38]. Considering these facts, UWB is proposed in this study to achieve anchorless radar SLAM.

IV System Overview

This section explains all the building blocks of the proposed radar SLAM system. As shown in Fig. 4, there are five major building blocks in the proposed SLAM system: acquire observations (radar signal acquisition) and preprocessing, then trilaterating the detections and remove outliers to obtain clear observations. Finally, those observations are combined with the odometry motion model in EKF SLAM.

IV-A Radar Signal Acquisition

In this paper, four XeThru UWB radar modules are attached to the either side of the robot as shown in Fig. 5. The minimum requirement to proceed with trilateration is having at least two sensors at one side. Hence, additional sensors can be added if required to improve both accuracy and precision of the observations via trilateration. The detection zone of each module lies between 0.2 m and 9.4 m. Peak amplitudes in the radar readings correspond to radar observations from obstacles in its field of view (FOV) as shown in Fig. 6. The subsequent sections will explain the procedure and limitations encountered while acquiring the peak amplitudes.

By default, the XeThru X4M300 has downconverted its range readings to baseband with 181 samples (bins) to ease post-processing. As a result, the spatial resolution of the baseband output is limited to 5.1 cm. In order to improve the resolution, the default settings were neglected, and the raw readings were taken into consideration (see Fig. 6). The raw readings have 1431 bins, so that the range is sampled at 6.4 mm intervals, which is much better than the baseband output. However, the raw signal is very noisy as expected. Thus, the next part explains the steps carried out to denoise the signal in order to obtain the necessary observations.

IV-B Signal Processing

Two steps are proposed to smoothen the noisy signal and they are as follows:

-

1.

Basic statistical filter (all amplitudes under the mean amplitude will be removed. i.e. equalled to )

-

2.

Then use Savitzky-Golay (SG) filter (fits a polynomial of order in a given frame length )

Initially, a basic statistical filter removes background white noise with near-zero amplitude. As a result, only the significant data points are sent to the SG filter, thus reducing the computational load. SG filter is a finite impulse response low-pass filter which fits number of noisy data points to a polynomial of order using least squares method. SG filter can smoothen a noisy signal while preserving its shape and peaks [39] and an example is illustrated in Fig. 6b.

After smoothing the signal, local maxima (peak amplitudes) have to be found in order to obtain radar detections. There are two hyper-parameters to be considered: minimum peak height () and minimum peak prominence () (i.e. how much the peak stands out relative to other peaks). These parameters were adjusted using trial and error methods so that radar detections with low radar cross-sections (RCS) are omitted. As mentioned earlier, a peak corresponds to a target. Thus, two peaks from two nearby radar sensors are trilaterated to obtain 2D positional observations.

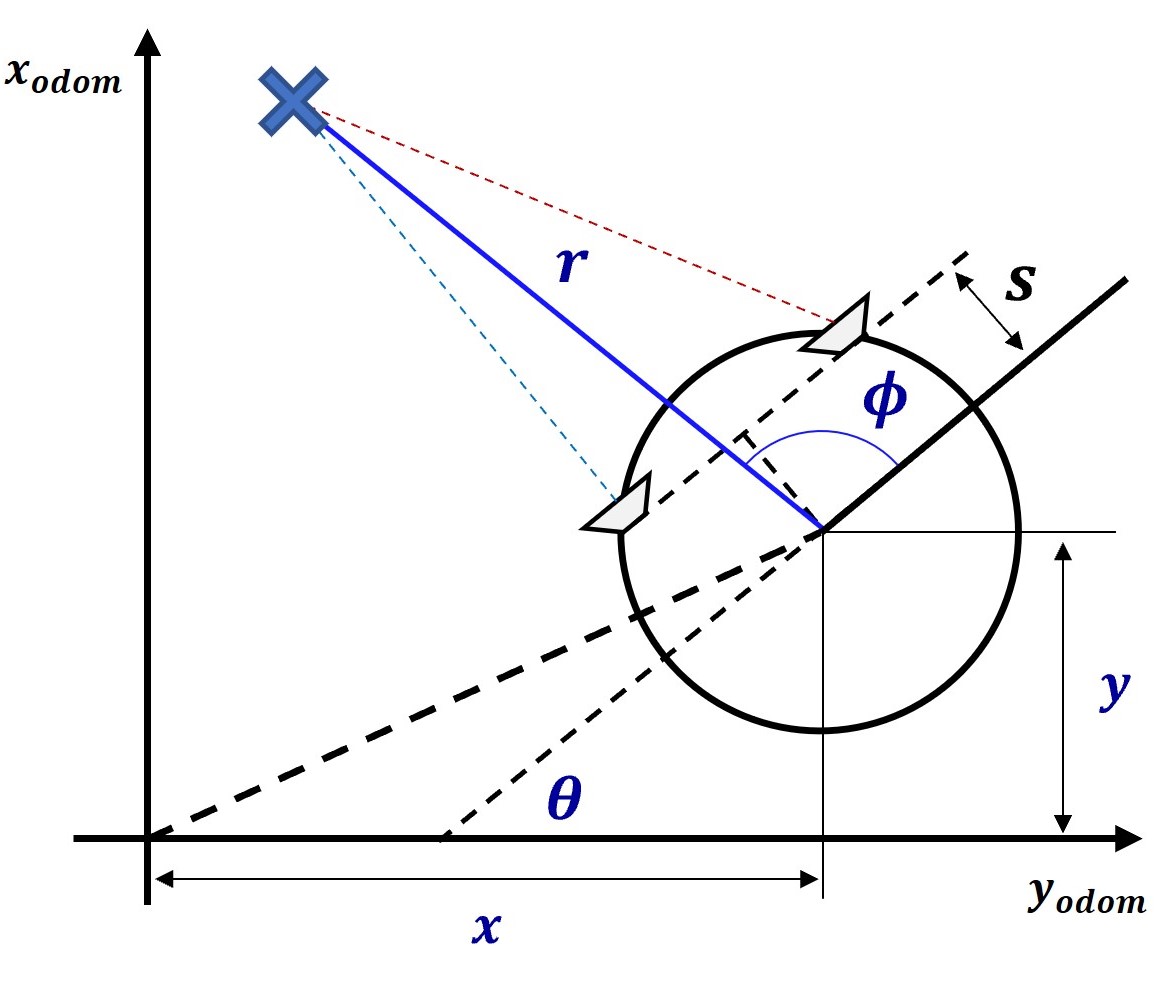

IV-C Trilateration

Trilateration is the fundamental principle behind most of the metrology systems in both 2D and 3D domains [40]. The basic idea of trilateration is depicted in Fig. 7 using a pair of UWB radar modules. After detecting the peaks from each radar scan cycle, the corresponding ranges to the obstacles can be calculated (i.e. ). Here, is a user defined constraint to omit observations beyond that range (i.e. similar to laser_min_dist and laser_max_dist in Hector SLAM). According to the Eqn. (1), depends on the azimuthal FOV () of the sensor. is the distance between two radar sensor modules.

| (1) |

It is important that the intersection point (i.e. target) belongs to both radar sensors. Therefore, and angles are compared with and other intersections are omitted:

| (2) |

Radar readings to range and bearing conversion can be obtained via:

| (3) |

| (4) |

These and are observations (i.e. ) used in EKF SLAM together with the robot pose information from odometry data [41].

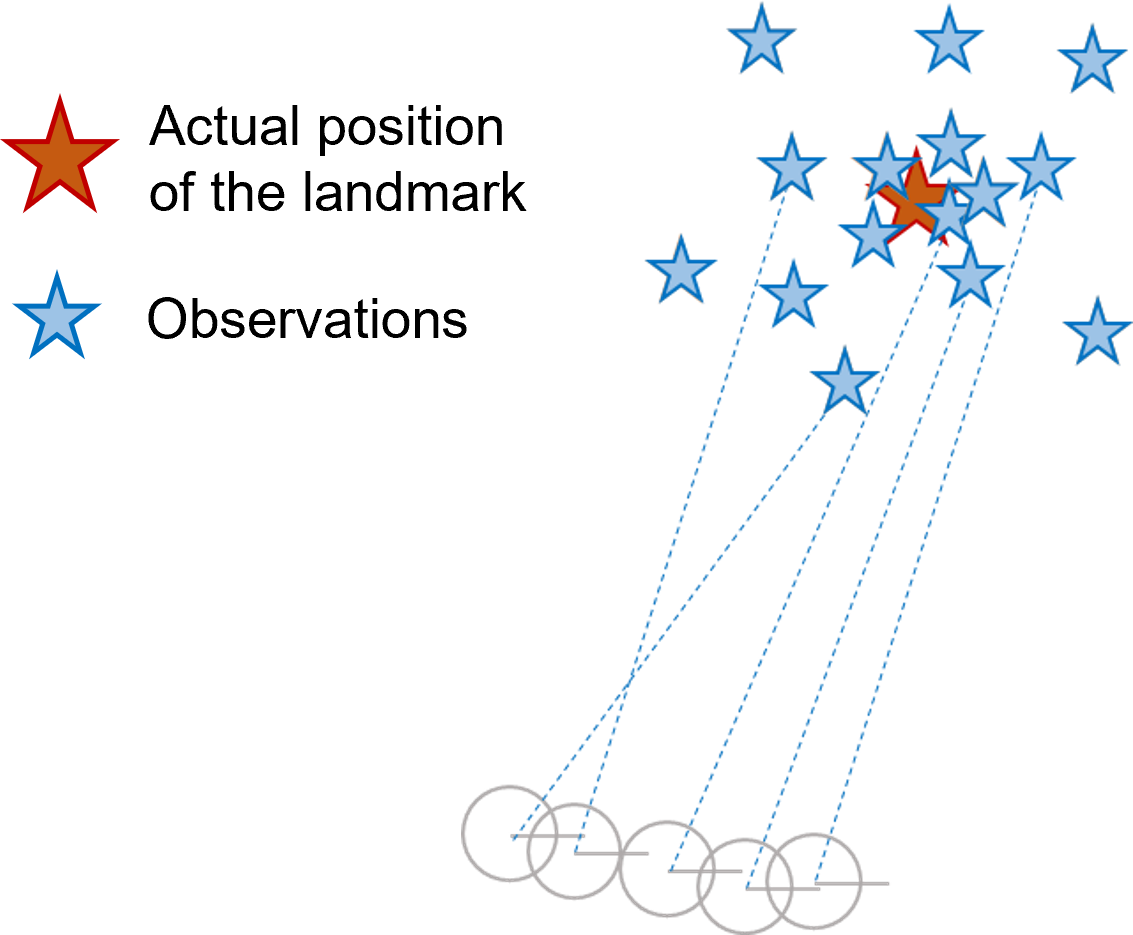

IV-D Outlier Filtering using DBSCAN

Although the preprocessing steps filter out the unnecessary observations in Section IV-C, there still exist noisy sensory data which lead to outliers after trilateration as depicted in Fig. 8a. This figure shows known robot poses and corresponding observations. Obviously, the expected observations still have a certain uncertainty, but that will be handled by the EKF SLAM algorithm later. In order to obtain a result similar to Fig. 8b, this paper proposes density-based spatial clustering of applications with noise (DBSCAN) to filter the outliers as illustrated in Fig. 9. DBSCAN has been used in FMCW radar to filter outliers in a point cloud [42]. We are following a similar approach with UWB radar.

The proposed DBSCAN-based method maintains a provisional observation list before they are sent to the EKF SLAM. The provisional observation list is clustered using DBSCAN and if a cluster is identified, its centroid is sent to EKF SLAM as the observation (see Fig. 9c). This method has three hyper-parameters: size of the list of provisional observations (window_size), minimum number of points (i.e. observations) per cluster (min_opc), and the search radius (search_rad).

window_size is used to determine the number of states (with observations) of the robot to be collected before applying DBSCAN. A state is collected once the robot moves a threshold distance or an angle (min_disp) which is similar to map_update_distance_thresh and map_update_angle_thresh in Hector SLAM. The robot motion is determined using wheel odometry information. Hence, the higher the number of states to be collected (i.e. window_size), the more odometry-dependant the observations will be. On the other hand, the window_size cannot be decreased to a small number. If the window_size is reduced, there will be only a few observations, thus there will not be much difference between outliers and correct observations.

IV-E EKF SLAM

Input: motion uncertainty , observation uncertainty , window_size for outlier removal , observation update displacement threshold min_disp, EKF SLAM: initial mean , initial covariance , Mahalanobis distance threshold , Odometry data, Observations from UWB radar.

Output: robot pose and covariance.

EKF SLAM is used in this study in order to demonstrate the idea of UWB radar SLAM. EKF SLAM uses an extended Kalman filter (EKF) to estimate the pose of the robot and the positions of landmarks. When it comes to anchor-tag systems where anchors are landmarks, the anchors can embed unique identifications to inform the tag about the observation origin (known correspondences). However, as discussed in the previous sections, the final observation from the UWB radar does not contain any unique information about the landmark. Therefore, unlike in anchor-tag systems, the proposed method uses EKF SLAM with unknown correspondences algorithm. The Mahalanobis distance is calculated between each previously predicted landmark and a new observation before performing data association with respect to the threshold . The steps to be followed while obtaining trilaterated radar observations are in Algorithm 1.

V Experiments and Results

A TurtleBot2 research robot is used in the experiment as shown in Fig. 10. RPLIDAR-A2 laser range scanner is mounted on top of the robot and is used to perform Hector SLAM in order to obtain the ground truth. The radar modules are operating at a frequency of 5 Hz while the robot is moving at 5 cm/s.

1) Smoke Experiments: In order to evaluate the performance of UWB radar in a vision-denied environment, we partially enclosed an indoor space with a point feature and performed Hector SLAM while obtaining UWB radar observations in clear visibility. After that, the space was filled with dense smoke and same steps were followed. As shown in Fig. 11, UWB radar has performed the same regardless of visibility, whereas LiDAR has performed poorly.

Meanwhile, we examined the influence of the proposed filtering algorithm in Section IV-D using wheel odometry as pose estimations. The filtered observations are more refined and clustered around the expected point landmarks (see Fig. 11). During SLAM, the filter collects observations from window_size number of poses ahead of the current EKF pose estimation. These provisional observations are collected using only the odometry information. Hence, a small window_size is preferred to avoid odometry dependency on the filtering scheme. After collecting the provisional observations, there should be at least min_opc set of observations inside a radius of search_rad. Hence, it is preferred to have high min_opc and low search_rad to achieve maximum filter performance with minimum outliers. However, if these thresholds are initialized beyond practical extremities anticipating a high filter performance, the filter may end up omitting inliers as well.

The main objective in hyper-parameter tuning is to obtain low-noise observations at the front-end. The detection range upper bound was chosen to detect only the nearby objects in order to avoid distant detections which may have resulted from multipath propagations, and to neglect the walls (i.e. line features). Later, we tuned min_ph and min_pp in order to identify prominent peaks from the UWB radar observations within the selected detection range, thus we could detect objects with large RCS.

| Parameter | Range | Remarks |

|---|---|---|

| f_len |

|

SG filter: The number of data points to

which the polynomial is fitted. Must be an odd number. |

|

n < f_len

|

SG filter: Order of the polynomial to

be fitted. |

|

| min_ph | Minimum peak height. | |

| min_pp | Minimum peak prominence. | |

|

cm

cm |

User-defined upper bound of the radar

range. Adjusted to detect only the nearby targets. |

|

| Azimuth opening angle. | ||

|

cm

cm |

Distance between receiver antennas

(RX) of two radar modules. Depends on the robot geometry. Higher is better. |

|

|

cm

cm |

Perpendicular distance from the radars

to the base footprint. Depends on the robot geometry. |

|

| min_disp |

mm or

0.1 rad |

Updates the provisional observations

list and the SLAM once the robot moves exceeding these predefined minimum displacement thresholds. |

| window_size | Size of the provisional observation list to which DBSCAN is applied. | |

| min_opc |

Minimum observations per cluster for

DBSCAN-based outlier filtering. |

|

| search_rad | cm | Search radius of clusters with min_opc. |

|

|

Motion uncertainty of the robot between two poses. Depends on min_dist. Larger the min_dist is, larger the uncertainty will be, and vice versa.

Lab experiment: mm Classroom exp: mm |

|

|

|

Observation uncertainty. Large uncertainty due to inherent measurement

noise. |

|

|

Mahalanobis distance threshold.

Lab experiment: Classroom exp: |

Table I summarises the hyper-parameters used during the experiments included in this paper. It is important to notice that all these parameters are interrelated. If the proposed system is to be recreated using different UWB radar modules or in an environment consisting of objects with low RCS, all these parameters may have to be retuned. However, the intuition behind parameter selection is essential to improve the overall performance of the proposed SLAM system.

2) SLAM Experiments: Two environments were selected to evaluate the proposed UWB radar SLAM: a highly cluttered lab environment and a classroom as shown in Fig. 12. These environments consist of point features with large RCS, such as vertical metal rods. Radar SLAM creates a landmark-based map while estimating the robot pose, whereas Hector SLAM creates a 2D occupancy grid map. The slam_out_pose published by Hector SLAM was taken as the ground truth reference. We evaluate the accuracy of the proposed system with root mean squared (RMS) absolute trajectory error (ATE) after aligning the estimated poses with ground truth using the iterative closest point (ICP) algorithm.

In the first experiment, the robot was teleoperated in a square-shaped trajectory inside a lab. The ground truth and wheel odometry are superpositioned in Fig. 13a. Compared to the ground truth, the odometry has shifted anticlockwise during the experiment. Although the UWB radar SLAM initially tends to follow the odometry, it finally identifies previously known landmarks and corrects its pose. Both ground truth and radar SLAM have started and stopped at almost the same position (see Fig. 13b). Compared to the ground truth, the estimated positions had a ATE of 12 mm.

Notice that the landmarks: #3-7, #10 and #12-15 were not captured by Hector SLAM, but they were detected by radar SLAM (see Fig. 13b). However, rest of the landmarks coincide with their ground truth with a very small uncertainty. The reason is that the RPLIDAR-A2 captures observations on a 2D plane whereas the UWB radar antenna has a cone-shaped main lobe in 3D space. As a result, the UWB radar has been able to detect observations which were not visible to the 2D LiDAR. This is one of the advantages of radar, despite unnecessary targets can also be detected in its FOV.

The second experiment was conducted in a classroom where study tables could be detected as landmarks. The carpeted floor tends to drift the odometry more than in the lab floor. Moreover, the landmarks were somewhat distant from each other ( 1 m apart). Hence, Both motion uncertainty and the Mahalanobis distance threshold had to be increased compared to the first experiment (). However, the results were quite satisfactory (see Fig. 14). Similar to the first experiment, the radar SLAM initially tends to follow odometry and after detecting a previously observed landmark, it corrects its pose. Compared to the ground truth, the estimated poses had a ATE of 62 mm.

Notice that in some instances, multiple landmarks are assigned in close proximity despite being belonged to the same landmark in the ground truth (see Fig. 14). This is one of the issues in SLAM with unknown correspondences. This is worse when it comes to high motion uncertainty due to noisy odometry. It can be suggested to increase , but there will discrepancy with nearby landmarks after a certain limit. Hence, it is of interest to implement a suitable map management or a feature matching methodology to identify previously visited landmarks in the future.

VI Conclusion

This paper presented an anchorless approach using UWB radar for SLAM in an unknown environment. XeThru X4M300 IR-UWB sensors were used to obtain radar observations. Instead of the default downconverted baseband output with large range samples, filtered raw observations were used with small range samples to improve spatial accuracy. The proposed method trilaterates two radar observations to identify the point landmarks in the environment. DBSCAN-based algorithm is then proposed for further filtering before applying EKF SLAM with unknown correspondences.

Experiments were carried out in a lab environment and a classroom in order to validate the proposed method. The results show that the robot has been able to mitigate the odometry drift with sufficient accuracy while reconstructing the map of the environment successfully. If higher accuracy is desired, a radar sensor array can be used to improve the observation accuracy. On the other hand, currently, the proposed system is incapable of classifying the feature type (i.e. point and line features). Hence, we intend to develop a robust feature classification method in the future. Moreover, we plan on applying machine learning to uniquely identify landmarks using their inherent UWB radar signatures [43].

References

- [1] M. U. Khan, S. A. A. Zaidi, A. Ishtiaq, S. U. R. Bukhari, S. Samer, and A. Farman, “A comparative survey of lidar-slam and lidar based sensor technologies,” in 2021 Mohammad Ali Jinnah University International Conference on Computing (MAJICC), pp. 1–8.

- [2] D. Zou, P. Tan, and W. Yu, “Collaborative visual slam for multiple agents:a brief survey,” Virtual Reality & Intelligent Hardware, vol. 1, no. 5, pp. 461–482, 2019.

- [3] D. Droeschel and S. Behnke, “Efficient continuous-time slam for 3d lidar-based online mapping,” in 2018 IEEE International Conference on Robotics and Automation (ICRA), pp. 5000–5007.

- [4] R. Yagfarov, M. Ivanou, and I. Afanasyev, “Map comparison of lidar-based 2d slam algorithms using precise ground truth,” in 2018 15th International Conference on Control, Automation, Robotics and Vision (ICARCV), pp. 1979–1983.

- [5] Z. Hong, Y. Petillot, and S. Wang, “Radarslam: Radar based large-scale slam in all weathers,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 5164–5170.

- [6] J. M. Santos, M. S. Couceiro, D. Portugal, and R. P. Rocha, “Fusing sonars and lrf data to perform slam in reduced visibility scenarios,” in 2014 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Conference Proceedings, pp. 116–121.

- [7] Y. Shao-Wen and W. Chieh-Chih, “Dealing with laser scanner failure: Mirrors and windows,” in 2008 IEEE International Conference on Robotics and Automation, Conference Proceedings, pp. 3009–3015.

- [8] S. Rahman, A. Q. Li, and I. Rekleitis, “Sonar visual inertial slam of underwater structures,” in 2018 IEEE International Conference on Robotics and Automation (ICRA), pp. 5190–5196.

- [9] J. M. Santos, M. S. Couceiro, D. Portugal, and R. P. Rocha, “A sensor fusion layer to cope with reduced visibility in slam,” Journal of Intelligent & Robotic Systems, vol. 80, no. 3, pp. 401–422, 2015.

- [10] W. Chen, F. Zhang, T. Gu, K. Zhou, Z. Huo, and D. Zhang, “Constructing floor plan through smoke using ultra wideband radar,” Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, vol. 5, no. 4, pp. 1–29, 2021.

- [11] F. Schwiegelshohn, P. Wehner, F. Werner, D. Gohringer, and M. Hubner, “Enabling indoor object localization through bluetooth beacons on the radio robot platform,” in 2016 International Conference on Embedded Computer Systems: Architectures, Modeling and Simulation (SAMOS), pp. 328–333.

- [12] R. Liu, S. H. Marakkalage, M. Padmal, T. Shaganan, C. Yuen, Y. L. Guan, and U. Tan, “Collaborative slam based on wifi fingerprint similarity and motion information,” IEEE Internet of Things Journal, vol. 7, no. 3, pp. 1826–1840, 2020.

- [13] F. Schuster, C. G. Keller, M. Rapp, M. Haueis, and C. Curio, “Landmark based radar slam using graph optimization,” in 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), pp. 2559–2564.

- [14] J. W. Marck, A. Mohamoud, E. v. Houwen, and R. v. Heijster, “Indoor radar slam a radar application for vision and gps denied environments,” in 2013 European Radar Conference, pp. 471–474.

- [15] G. Schouten and J. Steckel, “Radarslam: Biomimetic slam using ultra-wideband pulse-echo radar,” in 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), pp. 1–8.

- [16] M.-C. Yeh and H.-Y. Lin, Robust SLAM System by Incorporating UWB Positioning and Landmark Localization. Springer International Publishing, 2022, pp. 77–91.

- [17] X4M300 Datasheet, Novelda AS, 2018, version 1.6.8. [Online]. Available: http://laonuri.techyneeti.com/wp-content/uploads/2019/02/X4M300_DATASHEET.pdf

- [18] X4M300 Datasheet, Novelda AS, 2017, rev. D. [Online]. Available: http://laonuri.techyneeti.com/wp-content/uploads/2019/02/X4M02_DATASHEET.pdf

- [19] K. Guo, Z. Qiu, C. Miao, A. H. Zaini, C.-L. Chen, W. Meng, and L. Xie, “Ultra-wideband-based localization for quadcopter navigation,” Unmanned Systems, vol. 04, no. 01, pp. 23–34, 2016.

- [20] J. Tiemann, A. Ramsey, and C. Wietfeld, “Enhanced uav indoor navigation through slam-augmented uwb localization,” in 2018 IEEE International Conference on Communications Workshops, pp. 1–6.

- [21] T. Wang, H. Zhao, and Y. Shen, “An efficient single-anchor localization method using ultra-wide bandwidth systems,” Applied Sciences, vol. 10, no. 1, p. 57, 2020.

- [22] R. Liu, C. Yuen, T.-N. Do, M. Zhang, Y. L. Guan, and U. X. Tan, “Cooperative positioning for emergency responders using self imu and peer-to-peer radios measurements,” Information Fusion, vol. 56, pp. 93–102, 2020.

- [23] S. Güler, M. Abdelkader, and J. S. Shamma, “Peer-to-peer relative localization of aerial robots with ultrawideband sensors,” IEEE Transactions on Control Systems Technology, vol. 29, no. 5, pp. 1981–1996, 2021.

- [24] T. Deißler, M. Janson, R. Zetik, and J. Thielecke, “Infrastructureless indoor mapping using a mobile antenna array,” in 2012 19th International Conference on Systems, Signals and Image Processing (IWSSIP), Conference Proceedings, pp. 36–39.

- [25] E. Takeuchi, A. Elfes, and J. Roberts, Localization and Place Recognition Using an Ultra-Wide Band (UWB) Radar. Cham: Springer International Publishing, 2015, pp. 275–288.

- [26] J. Steckel and H. Peremans, “Batslam: Simultaneous localization and mapping using biomimetic sonar,” PLoS ONE, vol. 8, no. 1, p. e54076, 2013.

- [27] M. J. Milford, G. F. Wyeth, and D. Prasser, “Ratslam: a hippocampal model for simultaneous localization and mapping,” in IEEE International Conference on Robotics and Automation, 2004. Proceedings. ICRA ’04. 2004, vol. 1, pp. 403–408 Vol.1.

- [28] T. Deißler and J. Thielecke, “Uwb slam with rao-blackwellized monte carlo data association,” in 2010 International Conference on Indoor Positioning and Indoor Navigation, Conference Proceedings, pp. 1–5.

- [29] ——, “Fusing odometry and sparse uwb radar measurements for indoor slam,” in 2013 Workshop on Sensor Data Fusion: Trends, Solutions, Applications (SDF), Conference Proceedings, pp. 1–5.

- [30] S. Ullah, M. Ali, A. Hussain, and K. S. Kwak, “Applications of uwb technology,” arXiv preprint arXiv:0911.1681, 2009.

- [31] J. Li, Z. Zeng, J. Sun, and F. Liu, “Through-wall detection of human being’s movement by uwb radar,” IEEE Geoscience and Remote Sensing Letters, vol. 9, no. 6, pp. 1079–1083, 2012.

- [32] F. Khan, A. Ghaffar, N. Khan, and S. H. Cho, “An overview of signal processing techniques for remote health monitoring using impulse radio uwb transceiver,” Sensors, vol. 20, no. 9, p. 2479, 2020.

- [33] D. Wang, S. Yoo, and S. H. Cho, “Experimental comparison of ir-uwb radar and fmcw radar for vital signs,” Sensors, vol. 20, no. 22, p. 6695, 2020.

- [34] B. Walid, J. Ma, M. Ma, A. Qi, Y. Luo, and Y. Qi, “Recent advances in radar-based sleep monitoring - a review,” in 2021 IEEE Intl Conf on Dependable, Autonomic and Secure Computing, Intl Conf on Pervasive Intelligence and Computing, Intl Conf on Cloud and Big Data Computing, Intl Conf on Cyber Science and Technology Congress (DASC/PiCom/CBDCom/CyberSciTech), pp. 759–766.

- [35] S. Kingsley and S. Quegan, Understanding Radar Systems. Raleigh: The Institution of Engineering and Technology, 1999.

- [36] K. Li, W. Ni, B. Wei, and M. Guizani, “An experimental study of two-way ranging optimization in uwb-based simultaneous localization and wall-mapping systems,” in 2022 International Wireless Communications and Mobile Computing (IWCMC), Conference Proceedings, pp. 871–876.

- [37] F. Thullier, A. Beaulieu, J. Maître, S. Gaboury, and K. Bouchard, “A systematic evaluation of the xethru x4 ultra-wideband radar behavior,” Procedia Computer Science, vol. 198, pp. 148–155, 2022.

- [38] M. Martinez-Ingles, J. Molina-Garcia-Pardo, J. Rodríguez, J. Pascual-García, and L. Juan-Llácer, “Experimental comparison of uwb against mm-wave indoor radio channel characterization,” in 2013 IEEE Antennas and Propagation Society International Symposium (APSURSI), pp. 1946–1947.

- [39] R. W. Schafer, “What is a savitzky-golay filter? [lecture notes],” IEEE Signal Processing Magazine, vol. 28, no. 4, pp. 111–117, 2011.

- [40] J. Chow, K. Ang, D. Lichti, and W. Teskey, “Performance analysis of a low-cost triangulation-based 3d camera: Microsoft kinect system,” Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci, vol. 39, pp. 175–180, 2012.

- [41] S. Thrun, W. Burgard, and D. Fox, Probabilistic Robotics. Cambridge, MA, USA: MIT Press, 2005.

- [42] Y. Li, Y. Liu, Y. Wang, Y. Lin, and W. Shen, “The millimeter-wave radar slam assisted by the rcs feature of the target and imu,” Sensors, vol. 20, no. 18, p. 5421, 2020.

- [43] L. Sakkila, A. Rivenq, C. Tatkeu, Y. E. Hillali, J. P. Ghys, and J. M. Rouvaen, “Methods of target recognition for uwb radar,” in 2010 IEEE Intelligent Vehicles Symposium, Conference Proceedings, pp. 949–954.