UVOSAM: A Mask-free Paradigm for Unsupervised Video Object Segmentation via Segment Anything Model

Abstract

The current state-of-the-art methods for unsupervised video object segmentation (UVOS) require extensive training on video datasets with mask annotations, limiting their effectiveness in handling challenging scenarios. However, the Segment Anything Model (SAM) introduces a new prompt-driven paradigm for image segmentation, offering new possibilities. In this study, we investigate SAM’s potential for UVOS through different prompt strategies. We then propose UVOSAM, a mask-free paradigm for UVOS that utilizes the STD-Net tracker. STD-Net incorporates a spatial-temporal decoupled deformable attention mechanism to establish an effective correlation between intra- and inter-frame features, remarkably enhancing the quality of box prompts in complex video scenes. Extensive experiments on the DAVIS2017-unsupervised and YoutubeVIS19&21 datasets demonstrate the superior performance of UVOSAM without mask supervision compared to existing mask-supervised methods, as well as its ability to generalize to weakly-annotated video datasets. Code can be found at https://github.com/alibaba/UVOSAM.

Introduction

Video object segmentation (VOS) (Miao, Wei, and Yang 2020; Zhou et al. 2022; Yang, Wei, and Yang 2020) is a crucial computer vision task that aims to segment primary objects in video sequences. It has wide-ranging applications in video editing, autonomous driving, and robotics. VOS can be classified into two main types: semi-supervised VOS (Oh et al. 2019; Xie et al. 2021; Chen et al. 2019; Park et al. 2022), which involves supervision in both training and inference, and unsupervised VOS (Liu et al. 2021; Cho et al. 2023; Lee et al. 2023; Ren et al. 2021; Yang et al. 2021c), which generally require no prior data during inference.

Semi-supervised VOS leverages the initial segmentation mask from the first frame to initialize the model, enabling it to track and segment specific objects throughout the entire video sequence. On the other hand, unsupervised VOS (UVOS) relies on the model to autonomously discover and extract masks for prominent objects without any prior information. UVOS is becoming popular for its automatic segmentation capability during inference, eliminating the need for manual annotation. However, current state-of-the-art UVOS methods (Cho et al. 2023; Lee et al. 2023; Pei et al. 2022) still require a large-scale manually annotated video dataset (Perazzi et al. 2016; Xu et al. 2018; Qi et al. 2022; Ding et al. 2023) for training. Annotating video object segmentation is prohibitively expensive as it involves providing masks and trajectories. Even annotating masks using coarse polygons is significantly more time-consuming than annotating video bounding boxes (Cheng, Parkhi, and Kirillov 2022). The high cost of annotating masks poses a challenge in scaling existing VOS approaches. Therefore, a mask-free setting may be necessary for more efficient handling of this task.

Recent advancements in segmentation foundation models (Kirillov et al. 2023; Wang et al. 2023a; Zou et al. 2023) have greatly enhanced image segmentation. One notable model in this field is the Segment Anything Model (SAM) (Kirillov et al. 2023), which has emerged as a leading contender. SAM’s training on a vast dataset of over 1 billion masks has enabled it to achieve impressive zero-shot generalization capabilities. Moreover, SAM is designed to be promptable with various types of prompts, such as bounding boxes and points, enabling it to transfer zero-shot knowledge to new image distributions and tasks. It consistently exhibits robust performance across various downstream tasks, surpassing existing methods in zero-shot settings. This raises an intriguing question: Can we leverage SAM in UVOS by utilizing auto-generated prompts, thereby eliminating the labor-intensive process of segmentation mask annotation?

To achieve our objective, we begin by investigating the most suitable type of prompts for the UVOS task. As shown in Figure 1(a), even when the number of sampling points is increased to 10, the performance of the bounding box remains superior to that of points. This can be explained intuitively in the following ways. Firstly, the bi-directional cross-attention module in the decoding path heavily relies on the positional encoding of the point prompts, which depends on their coordinates. As the image embeddings are updated accordingly, point prompts located at different positions, despite having similar semantic contexts, may result in variations in the final segmentation masks. Secondly, since SAM is trained for general segmentation rather than specific tasks, it may struggle to accurately handle segmentation boundaries, especially when only a few points are used.

When selecting the bounding box as the prompt, both detection and association are crucial. Recent advancements in multi-object tracking (MOT) (Du et al. 2023; Cao et al. 2023; Maggiolino et al. 2023) have shown superior performance in identity association. Therefore, our focus is primarily on the detection quality in terms of Intersection over Union (IoU) between the predicted location and the object’s boundary. As shown in Figure 1(c), unsuitable shapes and locations of box prompts can lead to segmentation ambiguity issues for SAM. To address this, we propose STD-Net, a tracking network that combines DeformableDETR (Zhu et al. 2020) and DeepOCSORT (Maggiolino et al. 2023). In the deformable transformer, we introduce a novel approach called spatial-temporal decoupled deformable attention (STD-DA). This approach allows us to independently leverage multi-scale deformable attention in the spatial and temporal domains, resulting in spatially discriminative and temporally representative features. We also employ a dynamic fusion module to effectively combine these features and enhance the accuracy of non-overlapping bounding boxes in complex video scenes. As demonstrated in Figure 1(b), the bounding box prompts generated by STD-Net exhibit significantly superior performance in terms of average IoU compared to state-of-the-art MOT methods. Furthermore, it can be proven that the value of IoU greatly affects the segmentation performance.

In summary, we present UVOSAM, a mask-free paradigm for UVOS. UVOSAM combines STD-Net and SAM, with STD-Net trained exclusively using bounding box annotations, while SAM is kept frozen to preserve its valuable pre-trained knowledge. Our main contributions can be summarized as follows:

-

•

We conduct a pioneering study to investigate the potential of SAM for UVOS through the exploration of various prompt strategies. Compared to existing UVOS methods, SAM demonstrates effective segmentation capabilities for weakly-annotated video data.

-

•

We propose UVOSAM, an innovative mask-free paradigm for UVOS. UVOSAM employs a novel tracker called STD-Net to generate high-quality trajectories, which are served as box prompts for SAM. STD-Net incorporates a spatial-temporal decoupled deformable attention mechanism to establish a strong correlation between intra- and inter-frame features. This enhancement obviously improves the quality of box prompts, particularly in complex video scenes.

-

•

Extensive experiments conducted on the DAVIS2017-unsupervised and YoutubeVIS19&21 datasets showcase the promising results of UVOSAM and its superiority over existing mask-supervised methods.

Related Works

Unsupervised Video Object Segmentation

UVOS is designed to segment salient objects in a class-agnostic manner, eliminating the need for manual guidance during inference. This approach reduces the time and cost of Video Object Segmentation (VOS), thus paving the way for a variety of real-time applications. Early techniques (Fragkiadaki et al. 2015; Tokmakov, Alahari, and Schmid 2017; Yang et al. 2021a) for detecting salient objects assumed that object pixels exhibited the same motion patterns across successive frames, employing motion cues such as optical flow to facilitate the segmentation process. However, these methods have a notable limitation: their performance is heavily influenced by the optical flow’s quality, especially in situations involving occlusions and rapid motion. Several studies (Zhou et al. 2020; Zhang et al. 2021; Ren et al. 2021; Yang et al. 2021c; Cho et al. 2023) have also investigated the use of learning to focus on object appearance features. For example, MATNet (Zhou et al. 2020) employs the MAT-block to convert appearance features into motion-attentive representations, while RTNet (Ren et al. 2021) mutually transforms appearance and motion features to identify primary objects. Moreover, AMC-Net (Yang et al. 2021c) implements a multi-modality co-attention gate to foster a deep collaboration between appearance and motion data for precise segmentation. DPA (Cho et al. 2022) leverages inter-modality attention to densely incorporate context information from both motion and appearance. Lastly, PMN (Lee et al. 2023) efficiently extracts appearance and motion data by deriving superpixel-based component prototypes.

In the realm of unsupervised multiple object segmentation, the majority of methods employ the track-by-detect paradigm. This involves generating object proposals using instance segmentation models, which are subsequently tracked by re-identification models. The Target-Aware model (Zhou et al. 2021), for instance, uses a target-aware adaptive tracking framework to associate proposals across frames, yielding robust matching results. Similarly, INO (Pan et al. 2022) enhances the association of proposals by incorporating inter-frame consistency through the affinity matrix. On the other hand, MCMPG (Yuan et al. 2023) builds a graph using object proposals generated from several frames around the key frame, and then propagates them to the key frame to reason about key-frame objects. Unlike these methods that rely on costly annotation-intensive segmentation datasets for training, our mask-free UVOSAM model achieves accurate segmentation by providing simple prompts to the vision foundation model SAM (Kirillov et al. 2023). This approach makes UVOS more accessible.

Vision Foundation Models

Vision foundation models, which have shown remarkable adaptability across a range of tasks, have recently been attracting significant attention. CLIP (Radford et al. 2021), a groundbreaking model trained on web-scale image-text pairs, demonstrates impressive zero-shot capabilities and has been widely implemented in numerous multi-modal tasks for feature alignment. Similarly, BLIP (Li et al. 2022, 2023) utilizes a dataset bootstrapped from large-scale noisy data for multi-modal pre-training, enhancing zero-shot performance in text-to-video retrieval tasks. In a bid to develop a universal detection framework, UniDetector (Wang et al. 2023b) uses images from a variety of sources and diverse label spaces for training, achieving zero-shot performance that significantly outperforms supervised methods.

In the realm of segmentation tasks, SAM (Kirillov et al. 2023), the first promptable foundation model, has been recognized for its ability to segment any object in any context using various prompts, such as boxes and points. Models such as SegGPT (Wang et al. 2023a) and SEEM (Zou et al. 2023), which share a similar idea with SAM, were proposed as generalist models to perform arbitrary segmentation tasks with different prompt types. Some contemporaneous works have extended SAM to video segmentation tasks. For instance, TAM (Yang et al. 2023) and SAM-Track (Cheng et al. 2023) utilize cutting-edge mask trackers to interactively segment video objects, while SAM-PT (Rajivc et al. 2023) employs sparse points input for mask generation. In this paper, our focus is on the UVOS task, and we optimize the box prompts of SAM to significantly reduce the need for intensive video mask annotation. Moreover, our approach inherently possesses SAM’s robust zero-shot capability, making it suitable for handling weakly labeled video segmentation datasets.

Multi-object Tracking

Multi-object tracking aims to accurately detect multiple objects while providing continuous trajectories for each object. The dominant paradigm in this field is Tracking-By-Detection, which consists of two tasks: detection and association. To better predict the next position of objects, SORT (Bewley et al. 2016) first uses the Kalman filter (Welch, Bishop et al. 1995) and then matches predicted boxes with detected boxes using the Hungarian algorithm (Kuhn 1955). DeepSORT (Wojke, Bewley, and Paulus 2017) effectively mitigates occlusion problems by extracting ReID features of bounding boxes with a separate CNN model. TransTrack (Sun et al. 2020) obtains bounding boxes and ReID features through the vision transformer. ByteTrack (Zhang et al. 2022) adopts YOLOX (Ge et al. 2021) as the detector and tracks bounding boxes with scores below the threshold. StrongSORT (Du et al. 2023) designs an appearance-free link model and comprehensively optimizes DeepSORT, achieving a balance between inference speed and accuracy. OCSORT (Cao et al. 2023) designs a cumulative error correction module and utilizes a learnable motion model to improve tracking performance under occlusion and non-linear motion conditions. DEEPOCSORT (Maggiolino et al. 2023) adaptively integrates ReID features with OCSORT and achieves promising tracking results. Given that the aforementioned approaches have shown outstanding performance in identity association, our proposed UVOSAM leverages the association methods of DeepOCSORT, primarily focusing on enhancing the detection quality of box prompts in terms of Intersection over Union (IoU) between predicted boxes and object boundaries.

Methodology

This section provides a detailed introduction to our proposed method, UVOSAM, which stands for Unsupervised Video Object Segmentation using SAM. Our approach does not depend on any video segmentation mask labels. We begin by revisiting the SAM architecture and then proceed to present the UVOSAM pipeline, highlighting the key features of STD-Net.

Preliminaries: architecture of SAM

SAM is a segmentation foundation model that has been trained using over one billion masks, demonstrating remarkable zero-shot proficiency. Its primary components consist of an image encoder denoted as , a prompt encoder denoted as , and a lightweight mask decoder denoted as . To elaborate further, SAM takes an image and its corresponding prompts (such as points, bounding boxes, text, or masks) as inputs. These two input streams are then embedded separately by the image encoder and prompt encoder, which can be formulated as follows:

| (1) |

where , and represent the height, width, and channel number of the feature map, and denotes the length of the prompt tokens. Following that, and are passed to . In addition, there are multiple learnable tokens concatenated to as a prefix to enable flexible prompting. These two embeddings are then interacted through cross-attention, and the zero-shot mask is generated by decoding the interactive features. This process can be described as follows:

| (2) |

As mentioned previously, SAM is trained for general segmentation tasks rather than specific ones. Consequently, it may encounter difficulties in accurately handling segmentation boundaries, especially when prompt information is limited. For instance, a single prompt such as a single point prompt can lead to segmentation ambiguity issues, where SAM cannot differentiate which mask the prompt corresponds to. Hence, the objective of this paper is to investigate methods for optimizing the quality of box prompts. By generating high-quality box prompts, we can potentially achieve promising outcomes in UVOS tasks.

The overall of proposed UVOSAM

UVOSAM consists of two components: a STD-Net and a SAM. As shown in Figure 2, let represent the input clip centered at time , which includes the -th frame and its neighboring frames. The encoder of STD-Net initially extracts enriched spatial-temporal features using a novel spatial-temporal decoupled deformable attention (STD-DA) module. These features are then utilized to refine the object queries and obtain discriminative instance embeddings, which are subsequently decoded into the category, location, and ReID features of each object through three output heads. These outputs are further combined to generate the object trajectories. Subsequently, the object trajectories are utilized as box prompts in SAM to obtain refined video segmentation results. The entire SAM is kept frozen to retain its pre-trained knowledge, and only the STD-Net is trained using category and bounding box annotations.

STD-Net

Our STD-Net is composed of a vision backbone, a deformable transformer, and three output heads. In order to leverage the temporal information among frames for precise object discovery, we introduce a novel STD-DA module in the transformer encoder to effectively capture spatial-temporal correlations. Furthermore, we employ contrastive learning, following the approach (Wu et al. 2022b), to obtain ReID features. The key design principles will be explained in detail in the following paragraphs.

STD-DA. The proposed STD-DA module initially utilizes multi-scale deformable attention separately in the spatial and temporal domains, and subsequently combines them to generate dynamically enriched spatial-temporal features. The STD-DA architecture consists of three sub-modules: spatial multi-scale deformable attention (S-MSDA), temporal multi-scale deformable attention (T-MSDA), and dynamic attention fusion.

The S-MSDA module, proposed in this work, calculates attention on points sampled from the multi-scale feature maps of the current frame. Let the feature map from frame be denoted as , and the normalized coordinates of the reference point for as . With these definitions, the S-MSDA module can be described as follows, given the query feature :

| (3) | ||||

where denotes the index of multiple attention heads, is the total sampled number from each feature level. and represent the pixel offset and attention weight of the sampling point in the feature level. Both of them are obtained via linear projection over . Function re-scales the normalized coordinates to the input feature map of the level.

We further expand the S-MSDA module to the temporal domain and introduce T-MSDA. The key concept behind T-MSDA is that, for each query in the current frame, it attends to a subset of key points sampled from multi-scale feature maps of neighboring frames. This allows our method to gather enriched temporal representations and preserve temporal consistency of the foreground instances. The formulation of T-MSDA can be expressed as follows:

| (4) | ||||

where denotes the neighboring frame with frame . represents the number of sampled points in each feature level across neighboring frames.

Let and represent the outputs for frame obtained by and , respectively. According to our findings, contains more salient instance information about the current frame, which is crucial for distinguishing the instance from the background. On the other hand, contains more discriminative representations to detect changes in the instance’s appearance. To effectively combine the strengths of both modules, we propose a dynamic attention fusion module that uses channel-wise attention to highlight the semantic information. We aggregate the two outputs using element-wise addition and then apply a fully connected layer to adaptively select from . Subsequently, we use two additional fully connected layers to generate gate vectors , which control the flow of information from the attention maps . To generate adaptive weights , we utilize the softmax function along the channel dimension. Finally, we obtain the enriched spatial-temporal features of frame by performing a weighted addition:

| (5) |

ReID embeddings. To obtain ReID embeddings based on DeformableDETR (Zhu et al. 2020), we introduce a temporal contrastive loss that maps different object queries to unique ReID representations. For each video clip, we consider queries belonging to the same object as positive samples and all other queries as negative samples.

Here define be the object queries of frame , where and is the total number of queries. Automatically, from frame and its neighboring frame can be treated as the different views of the instance in terms of temporal domain. We first adopt two FC layers as the ReID head to generate the ReID representations of the queries. Then, InfoNCE (Oord, Li, and Vinyals 2018) is employed between two frames, which can be described as:

| (6) |

where represents the temperature hyper-parameter, and denotes the cosine similarity. During inference, the ReID features of the queries are utilized to measure visual similarity. In summary, the total losses of STD-Net can be defined as follows:

| (7) |

where and represent classification loss and bounding box loss. Herein, we use focal loss (Lin et al. 2017) as the classification loss. The box loss consists of L1 loss and GIou loss (Rezatofighi et al. 2019): .

| Methods | - | - | - | - | |

| UnOVOST (Luiten, Zulfikar, and Leibe 2020) | 67.9 | 66.4 | 76.4 | 69.3 | 76.9 |

| Target-Aware (Zhou et al. 2021) | 65.0 | 63.7 | 71.9 | 66.2 | 73.1 |

| Propose-Reduce (Lin et al. 2021) | 68.3 | 65.0 | - | 71.6 | - |

| INO (Pan et al. 2022) | 72.5 | 68.7 | - | 76.3 | - |

| MCMPG + STCN (Yuan et al. 2023) | 78.4 | 75.4 | 83.9 | 81.4 | 88.9 |

| UVOSAM | 80.9 | 77.5 | 84.9 | 84.2 | 91.7 |

| Methods | YoutubeVIS-2019 | YoutubeVIS-2021 | ||||||||

| CrossVIS (Yang et al. 2021b) | 36.3 | 56.8 | 38.9 | 35.6 | 40.7 | 34.2 | 54.4 | 37.9 | 30.4 | 38.2 |

| VISOLO (Han et al. 2022) | 38.6 | 56.3 | 43.7 | 35.7 | 42.5 | 36.9 | 54.7 | 40.2 | 30.6 | 40.9 |

| IFC (Hwang et al. 2021) | 41.2 | 65.1 | 44.6 | 42.3 | 49.6 | 35.2 | 55.9 | 37.7 | 32.6 | 42.9 |

| Mask2Former-VIS (Cheng et al. 2021) | 46.4 | 68.0 | 50.0 | - | - | 40.6 | 60.9 | 41.8 | - | - |

| TeViT (Yang et al. 2022) | 46.6 | 71.3 | 51.6 | 44.9 | 54.3 | 37.9 | 61.2 | 42.1 | 35.1 | 44.6 |

| SeqFormer (Wu et al. 2022a) | 47.4 | 69.8 | 51.8 | 45.5 | 54.8 | 40.5 | 62.4 | 43.7 | 36.1 | 48.1 |

| MinVIS (Huang, Yu, and Anandkumar 2022) | 47.4 | 69.0 | 52.1 | 45.7 | 55.7 | 44.2 | 66.0 | 48.1 | 39.2 | 51.7 |

| IDOL (Wu et al. 2022b) | 49.5 | 74.0 | 52.9 | 47.7 | 58.7 | 43.9 | 68.0 | 49.6 | 38.0 | 50.9 |

| VITA (Heo et al. 2022) | 49.8 | 72.6 | 54.5 | 49.4 | 61.0 | 45.7 | 67.4 | 49.5 | 40.9 | 53.6 |

| GenVIS (Heo et al. 2023) | 51.3 | 72.0 | 54.6 | 49.5 | 59.7 | 47.1 | 67.5 | 51.5 | 41.6 | 54.7 |

| UVOSAM | 52.4 | 74.1 | 56.8 | 51.2 | 61.9 | 48.4 | 69.4 | 53.8 | 42.1 | 56.3 |

Experiment

Experimental Setup

Datasets. We evaluate UVOSAM on three popular video segmentation datasets: DAVIS2017-unsupervised (Pont-Tuset et al. 2017) and Youtube-VIS 2019&2021 (Yang, Fan, and Xu 2019). Video instance segmentation builds upon unsupervised video object segmentation by incorporating object category recognition. Therefore, the corresponding dataset of YouTubeVIS can also be used for experiments. The DAVIS2017-unsupervised dataset consists of 120 high-quality videos in total, which are further split into 60 for training, 30 for validation, and 30 for test-dev. The YoutubeVIS-2019 dataset comprises 2,238 training, 302 validation, and 343 test videos. The semantic category number is 40. The YoutubeVIS-2021 dataset adds more samples based on YoutubeVIS-2019, with 3,859 high-quality videos, while the number of annotations is also doubled.

Evaluation Metrics. For DAVIS2017-unsupervised, we employ the official evaluation measures including region similarity , boundary accuracy and the overall metric . For Youtube-VIS dataset, we adopt Average Precision (AP) and Average Recall (AR).

Implements Details. For STD-Net, we select ResNet-50 (He et al. 2016) as the default backbone network. All hyper-parameters related to the network architecture are the same as those in the official DeformableDETR. For DAVIS2017-unsupervised, we modify the class head to a binary classification output, identifying only whether the object is foreground. We use 4 NVIDIA A100 GPUs with a global batch size of 16. We first pre-train STD-Net on MSCOCO (Lin et al. 2014) for 120,000 steps and then train on DAVIS2017-unsupervised and Youtube-VIS for 2,0000 steps and 80,000 steps, respectively. The AdamW optimizer (Loshchilov and Hutter 2019) is adopted with a base learning rate of and for the two-stage training. For SAM, we employ the pre-trained model with the ViT-H (Dosovitskiy et al. 2020) image encoder as the base segmentation model. Following mainstream methods, we resize the frame to a shorter size of 360 and 480 pixels on Youtube-VIS and DAVIS2017-unsupervised during inference, respectively.

Main Results

We compare UVOSAM with the state-of-the-art methods on DAVIS2017-unsupervised and YouTube-VIS 2019 & 2021. Among these competitive methods, our UVOSAM sets new state-of-the-art results, using only the training data of boxes.

DAVIS2017-unsupervised. Table 1 illustrates the results of all compared methods on DAVIS2017-unsupervised validation set. Our UVOSAM achieves scores of 80.9, which outperforms current mask-supervised methods by a large margin. Specifically, UVOSAM makes 2.5 absolute improvements over the best competitor MCMPG+STCN, which utilizes a more complex ResNeXt-101 backbone.

YoutubeVIS-2019&2021. We present our comparisons of YoutubeVIS-2019&2021 in Table 2. When using the ResNet-50 backbone, our UVOSAM sets a new state-of-the-art with AP of 52.4 and 48.4, respectively. The higher AP and AR values demonstrate that our method can effectively leverage enriched spatial-temporal information to optimize segmentation results. Notably, without using any masks for training, our UVOSAM achieves an absolute gain of 1.1 &1.3 AP over the previous best method, GenVIS. Additionally, it is worth mentioning that UVOSAM outperforms GenVIS by approximately 2.3 in the overlap threshold of , indicating that UVOSAM is capable of optimizing the non-overlapping cases.

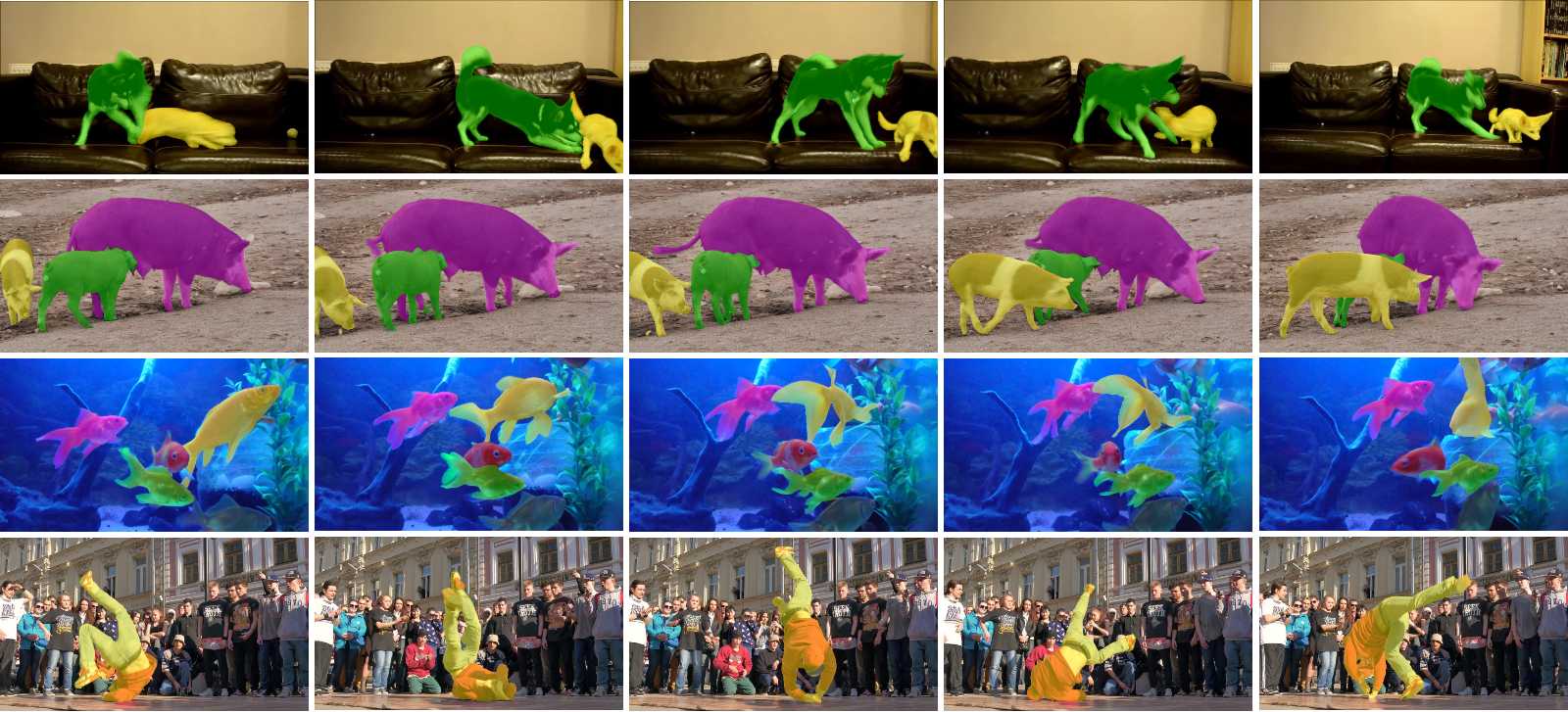

Figure 3 visualizes the results of UVOSAM in four challenging scenarios. It shows UVOSAM can generate high quality masks and maintain stable tracking trajectories.

Ablation Study

In this section, we conduct ablation studies to figure out the contribution of different designs. All the ablation experiments are conducted on the DAVIS2017-unsupervised, using the same implementation details as previously described.

The impact of the proposed modules on the quality of prompt. We verify the effectiveness of the proposed modules for improving the quality of box prompts. Firstly, we train the baseline DeepOCSORT (Maggiolino et al. 2023) with the detector of DeformableDETR. As shown in Table 3, it only achieves of 78.1 . Compared to the baseline model, the introduction of temporal contrastive learning provides an obvious gain of 1.0 . By performing STD-DA in the deformable transformer, it further brings the to 80.9. In summary, our STD-Net outperforms baseline by 2.8. Moreover, we additionally offer box annotations as prompts for SAM, which are specifically denoted as ”Humans”. The superior UVOS results produced by SAM are evident when the prompts are sufficiently accurate.

| Methods | - | - | |

| baseline | 78.1 | 74.5 | 81.7 |

| baseline +TCL | 79.1 | 75.8 | 82.3 |

| baseline + TCL + STD-DA | 80.9 | 77.5 | 84.2 |

| Humans* | 87.3 | 83.5 | 91.0 |

Bidirectional neighboring window size of STD-DA. In Table 4, we study the impact of the bidirectional neighboring window size on overall performance. By increasing from 1 to 3, it brings the to , and , respectively. It is evident that a larger window size can utilize richer temporal information from multiple frames, which facilitates better understanding of the context of instances. To achieve a balance between computation and precision, we set the value of to 3 for all experiments. Nonetheless, we hypothesize that our performance will continue to improve as increases.

| - | - | - | - | ||

| 0 | 78.8 | 75.5 | 82.7 | 82.0 | 89.4 |

| 1 | 79.7 | 76.5 | 84.0 | 82.9 | 90.5 |

| 2 | 80.4 | 77.4 | 83.9 | 83.4 | 91.1 |

| 3 | 80.9 | 77.5 | 84.9 | 84.2 | 92.0 |

| 4 | 81.1 | 77.6 | 85.2 | 84.5 | 92.3 |

Number of temporal sampling points of STD-DA. Table 5 shows there is a large performance improvement when changes from 1 to 4. It can be seen that when further increases, the performance is basically unchanged. Hence, we choose as our default settings.

| - | - | - | - | ||

| 0 | 78.8 | 75.5 | 82.7 | 82.0 | 89.4 |

| 1 | 79.3 | 75.9 | 83.3 | 82.6 | 89.1 |

| 2 | 79.9 | 76.6 | 83.9 | 83.1 | 90.6 |

| 3 | 80.4 | 77.1 | 84.4 | 83.7 | 91.9 |

| 4 | 80.9 | 77.5 | 84.9 | 84.2 | 92.0 |

| 5 | 81.0 | 77.6 | 85.0 | 84.4 | 92.4 |

| 6 | 80.8 | 77.3 | 84.9 | 84.2 | 92.1 |

Runtime Analysis. In terms of speed, UVOSAM achieves FPS on DAVIS2017, which is comparable to MCMPG (about 10.5 FPS).

The Generalization ability to weakly-annotated video data. To assess the transfer ability of UVOSAM, we additionally conduct experiments on the MOT20 (Dendorfer et al. 2020), which only provides box annotations. As depicted in Figure 4, the existing UVOS methods fail to produce satisfactory segmentation results due to the lack of training data. In contrast, UVOSAM, leveraging the weak annotations of boxes, demonstrates exceptional segmentation performance.

Conclusion

This paper presents UVOSAM, a mask-free framework that utilizes the SAM to address the issue of label-expensiveness in UVOS. To resolve segmentation ambiguity problems caused by off-size box prompts in complex scenes, we introduce a novel tracking network called STD-Net within UVOSAM. This network optimizes non-overlapping bounding boxes using spatial-temporal decoupled deformable attention, and the trajectories generated by it are used as prompts in SAM to sequentially generate segmentation results. We evaluate our approach on three popular datasets and the results show that UVOSAM outperforms mask-supervised methods obviously. Furthermore, our framework demonstrates impressive transferability in handling segmentation on weakly annotated video data. We hope that our work will inspire future research on label-efficient UVOS by promoting the development of fundamental vision models.

References

- Bewley et al. (2016) Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; and Upcroft, B. 2016. Simple online and realtime tracking. In 2016 IEEE international conference on image processing (ICIP), 3464–3468. IEEE.

- Cao et al. (2023) Cao, J.; Pang, J.; Weng, X.; Khirodkar, R.; and Kitani, K. 2023. Observation-centric sort: Rethinking sort for robust multi-object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 9686–9696.

- Chen et al. (2019) Chen, Y.; Hao, C.; Liu, A. X.; and Wu, E. 2019. Multilevel model for video object segmentation based on supervision optimization. IEEE Transactions on Multimedia, 21(8): 1934–1945.

- Cheng et al. (2021) Cheng, B.; Choudhuri, A.; Misra, I.; Kirillov, A.; Girdhar, R.; and Schwing, A. G. 2021. Mask2former for video instance segmentation. arXiv preprint arXiv:2112.10764.

- Cheng, Parkhi, and Kirillov (2022) Cheng, B.; Parkhi, O.; and Kirillov, A. 2022. Pointly-supervised instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2617–2626.

- Cheng, Tai, and Tang (2021) Cheng, H.; Tai, Y.-W.; and Tang, C.-K. 2021. Rethinking space-time networks with improved memory coverage for efficient video object segmentation. Advances in neural information processing systems, 34: 3–8.

- Cheng et al. (2023) Cheng, Y.; Li, L.; Xu, Y.; Li, X.; Yang, Z.; Wang, W.; and Yang, Y. 2023. Segment and Track Anything. ArXiv, abs/2305.06558.

- Cho et al. (2022) Cho, S.; Lee, M.; Lee, S.; Lee, D.; and Lee, S. 2022. Dual Prototype Attention for Unsupervised Video Object Segmentation. arXiv preprint arXiv:2211.12036.

- Cho et al. (2023) Cho, S.; Lee, M.; Lee, S.; Park, C.; Kim, D.; and Lee, S. 2023. Treating motion as option to reduce motion dependency in unsupervised video object segmentation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 5140–5149.

- Dendorfer et al. (2020) Dendorfer, P.; Rezatofighi, H.; Milan, A.; Shi, J.; Cremers, D.; Reid, I.; Roth, S.; Schindler, K.; and Leal-Taixé, L. 2020. MOT20: A benchmark for multi object tracking in crowded scenes. arXiv preprint arXiv:2003.09003.

- Ding et al. (2023) Ding, H.; Liu, C.; He, S.; Jiang, X.; Torr, P. H.; and Bai, S. 2023. Mose: A new dataset for video object segmentation in complex scenes. arXiv preprint arXiv:2302.01872.

- Dosovitskiy et al. (2020) Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; and Unterthiner, T. 2020. Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929.

- Du et al. (2023) Du, Y.; Zhao, Z.; Song, Y.; Zhao, Y.; Su, F.; Gong, T.; and Meng, H. 2023. Strongsort: Make deepsort great again. IEEE Transactions on Multimedia.

- Fragkiadaki et al. (2015) Fragkiadaki, K.; Arbelaez, P.; Felsen, P.; and Malik, J. 2015. Learning to segment moving objects in videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 4083–4090.

- Ge et al. (2021) Ge, Z.; Liu, S.; Wang, F.; Li, Z.; and Sun, J. 2021. Yolox: Exceeding yolo series in 2021. arXiv preprint arXiv:2107.08430.

- Han et al. (2022) Han, S. H.; Hwang, S.; Oh, S. W.; Park, Y.; Kim, H.; Kim, M.-J.; and Kim, S. J. 2022. Visolo: Grid-based space-time aggregation for efficient online video instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2896–2905.

- He et al. (2016) He, K.; Zhang, X.; Ren, S.; and Sun, J. 2016. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, 770–778.

- Heo et al. (2023) Heo, M.; Hwang, S.; Hyun, J.; Kim, H.; Oh, S. W.; Lee, J.-Y.; and Kim, S. J. 2023. A Generalized Framework for Video Instance Segmentation.

- Heo et al. (2022) Heo, M.; Hwang, S.; Oh, S. W.; Lee, J.-Y.; and Kim, S. J. 2022. VITA: Video Instance Segmentation via Object Token Association. In Advances in Neural Information Processing Systems.

- Huang, Yu, and Anandkumar (2022) Huang, D.-A.; Yu, Z.; and Anandkumar, A. 2022. MinVIS: A Minimal Video Instance Segmentation Framework without Video-based Training. In Advances in Neural Information Processing Systems.

- Hwang et al. (2021) Hwang, S.; Heo, M.; Oh, S. W.; and Kim, S. J. 2021. Video instance segmentation using inter-frame communication transformers. Advances in Neural Information Processing Systems, 34: 13352–13363.

- Kirillov et al. (2023) Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A. C.; Lo, W.-Y.; et al. 2023. Segment anything. arXiv preprint arXiv:2304.02643.

- Kuhn (1955) Kuhn, H. W. 1955. The Hungarian method for the assignment problem. Naval research logistics quarterly, 2(1-2): 83–97.

- Lee et al. (2023) Lee, M.; Cho, S.; Lee, S.; Park, C.; and Lee, S. 2023. Unsupervised Video Object Segmentation via Prototype Memory Network. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 5924–5934.

- Li et al. (2023) Li, J.; Li, D.; Savarese, S.; and Hoi, S. 2023. Blip-2: Bootstrapping language-image pre-training with frozen image encoders and large language models. arXiv preprint arXiv:2301.12597.

- Li et al. (2022) Li, J.; Li, D.; Xiong, C.; and Hoi, S. 2022. Blip: Bootstrapping language-image pre-training for unified vision-language understanding and generation. In International Conference on Machine Learning, 12888–12900. PMLR.

- Lin et al. (2021) Lin, H.; Wu, R.; Liu, S.; Lu, J.; and Jia, J. 2021. Video instance segmentation with a propose-reduce paradigm. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 1739–1748.

- Lin et al. (2017) Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; and Dollár, P. 2017. Focal loss for dense object detection. In Proceedings of the IEEE international conference on computer vision, 2980–2988.

- Lin et al. (2014) Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; and Zitnick, C. L. 2014. Microsoft coco: Common objects in context. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part V 13, 740–755. Springer.

- Liu et al. (2021) Liu, D.; Yu, D.; Wang, C.; and Zhou, P. 2021. F2net: Learning to focus on the foreground for unsupervised video object segmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 35, 2109–2117.

- Loshchilov and Hutter (2019) Loshchilov, I.; and Hutter, F. 2019. Decoupled Weight Decay Regularization. In 7th International Conference on Learning Representations, ICLR 2019.

- Luiten, Zulfikar, and Leibe (2020) Luiten, J.; Zulfikar, I. E.; and Leibe, B. 2020. Unovost: Unsupervised offline video object segmentation and tracking. In Proceedings of the IEEE/CVF winter conference on applications of computer vision, 2000–2009.

- Maggiolino et al. (2023) Maggiolino, G.; Ahmad, A.; Cao, J.; and Kitani, K. 2023. Deep OC-SORT: Multi-Pedestrian Tracking by Adaptive Re-Identification. arXiv preprint arXiv:2302.11813.

- Miao, Wei, and Yang (2020) Miao, J.; Wei, Y.; and Yang, Y. 2020. Memory aggregation networks for efficient interactive video object segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 10366–10375.

- Oh et al. (2019) Oh, S. W.; Lee, J.-Y.; Xu, N.; and Kim, S. J. 2019. Video object segmentation using space-time memory networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 9226–9235.

- Oord, Li, and Vinyals (2018) Oord, A. v. d.; Li, Y.; and Vinyals, O. 2018. Representation learning with contrastive predictive coding. arXiv preprint arXiv:1807.03748.

- Pan et al. (2022) Pan, X.; Li, P.; Yang, Z.; Zhou, H.; Zhou, C.; Yang, H.; Zhou, J.; and Yang, Y. 2022. In-n-out generative learning for dense unsupervised video segmentation. In Proceedings of the 30th ACM International Conference on Multimedia, 1819–1827.

- Park et al. (2022) Park, K.; Woo, S.; Oh, S. W.; Kweon, I. S.; and Lee, J.-Y. 2022. Per-clip video object segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 1352–1361.

- Pei et al. (2022) Pei, G.; Shen, F.; Yao, Y.; Xie, G.-S.; Tang, Z.; and Tang, J. 2022. Hierarchical feature alignment network for unsupervised video object segmentation. In Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XXXIV, 596–613. Springer.

- Perazzi et al. (2016) Perazzi, F.; Pont-Tuset, J.; McWilliams, B.; Van Gool, L.; Gross, M.; and Sorkine-Hornung, A. 2016. A benchmark dataset and evaluation methodology for video object segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition, 724–732.

- Pont-Tuset et al. (2017) Pont-Tuset, J.; Perazzi, F.; Caelles, S.; Arbeláez, P.; Sorkine-Hornung, A.; and Van Gool, L. 2017. The 2017 davis challenge on video object segmentation. arXiv preprint arXiv:1704.00675.

- Qi et al. (2022) Qi, J.; Gao, Y.; Hu, Y.; Wang, X.; Liu, X.; Bai, X.; Belongie, S.; Yuille, A.; Torr, P. H.; and Bai, S. 2022. Occluded video instance segmentation: A benchmark. International Journal of Computer Vision, 130(8): 2022–2039.

- Radford et al. (2021) Radford, A.; Kim, J. W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. 2021. Learning transferable visual models from natural language supervision. In International conference on machine learning, 8748–8763. PMLR.

- Rajivc et al. (2023) Rajivc, F.; Ke, L.; Tai, Y.-W.; Tang, C.-K.; Danelljan, M.; and Yu, F. 2023. Segment Anything Meets Point Tracking. ArXiv, abs/2307.01197.

- Ren et al. (2021) Ren, S.; Liu, W.; Liu, Y.; Chen, H.; Han, G.; and He, S. 2021. Reciprocal transformations for unsupervised video object segmentation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 15455–15464.

- Rezatofighi et al. (2019) Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; and Savarese, S. 2019. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 658–666.

- Sun et al. (2020) Sun, P.; Cao, J.; Jiang, Y.; Zhang, R.; Xie, E.; Yuan, Z.; Wang, C.; and Luo, P. 2020. Transtrack: Multiple object tracking with transformer. arXiv preprint arXiv:2012.15460.

- Tokmakov, Alahari, and Schmid (2017) Tokmakov, P.; Alahari, K.; and Schmid, C. 2017. Learning motion patterns in videos. In Proceedings of the IEEE conference on computer vision and pattern recognition, 3386–3394.

- Wang et al. (2023a) Wang, X.; Zhang, X.; Cao, Y.; Wang, W.; Shen, C.; and Huang, T. 2023a. Seggpt: Segmenting everything in context. arXiv preprint arXiv:2304.03284.

- Wang et al. (2023b) Wang, Z.; Li, Y.; Chen, X.; Lim, S.-N.; Torralba, A.; Zhao, H.; and Wang, S. 2023b. Detecting Everything in the Open World: Towards Universal Object Detection. arXiv preprint arXiv:2303.11749.

- Welch, Bishop et al. (1995) Welch, G.; Bishop, G.; et al. 1995. An introduction to the Kalman filter.

- Wojke, Bewley, and Paulus (2017) Wojke, N.; Bewley, A.; and Paulus, D. 2017. Simple online and realtime tracking with a deep association metric. In 2017 IEEE international conference on image processing (ICIP), 3645–3649. IEEE.

- Wu et al. (2022a) Wu, J.; Jiang, Y.; Bai, S.; Zhang, W.; and Bai, X. 2022a. SeqFormer: Sequential transformer for video instance segmentation. In European Conference on Computer Vision.

- Wu et al. (2022b) Wu, J.; Liu, Q.; Jiang, Y.; Bai, S.; Yuille, A.; and Bai, X. 2022b. In defense of online models for video instance segmentation. In Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XXVIII, 588–605. Springer.

- Xie et al. (2021) Xie, H.; Yao, H.; Zhou, S.; Zhang, S.; and Sun, W. 2021. Efficient regional memory network for video object segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 1286–1295.

- Xu et al. (2018) Xu, N.; Yang, L.; Fan, Y.; Yue, D.; Liang, Y.; Yang, J.; and Huang, T. Y.-V. 2018. A large-scale video object segmentation benchmark. arXiv preprint.

- Yang et al. (2021a) Yang, C.; Lamdouar, H.; Lu, E.; Zisserman, A.; and Xie, W. 2021a. Self-supervised video object segmentation by motion grouping. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 7177–7188.

- Yang et al. (2023) Yang, J.; Gao, M.; Li, Z.; Gao, S.; Wang, F.; and Zheng, F. 2023. Track Anything: Segment Anything Meets Videos. arXiv preprint arXiv:2304.11968.

- Yang, Fan, and Xu (2019) Yang, L.; Fan, Y.; and Xu, N. 2019. Video instance segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 5188–5197.

- Yang et al. (2021b) Yang, S.; Fang, Y.; Wang, X.; Li, Y.; Fang, C.; Shan, Y.; Feng, B.; and Liu, W. 2021b. Crossover learning for fast online video instance segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 8043–8052.

- Yang et al. (2022) Yang, S.; Wang, X.; Li, Y.; Fang, Y.; Fang, J.; Liu, W.; Zhao, X.; and Shan, Y. 2022. Temporally Efficient Vision Transformer for Video Instance Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2885–2895.

- Yang et al. (2021c) Yang, S.; Zhang, L.; Qi, J.; Lu, H.; Wang, S.; and Zhang, X. 2021c. Learning motion-appearance co-attention for zero-shot video object segmentation. In Proceedings of the IEEE/CVF international conference on computer vision, 1564–1573.

- Yang, Wei, and Yang (2020) Yang, Z.; Wei, Y.; and Yang, Y. 2020. Collaborative video object segmentation by foreground-background integration. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part V, 332–348. Springer.

- Yuan et al. (2023) Yuan, J.; Jay, P.; Hung, N.; Kim, C.; and Li, F. 2023. Maximal Cliques on Multi-Frame Proposal Graph for Unsupervised Video Object Segmentation. arXiv preprint arXiv:2301.12352.

- Zhang et al. (2021) Zhang, K.; Zhao, Z.; Liu, D.; Liu, Q.; and Liu, B. 2021. Deep transport network for unsupervised video object segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 8781–8790.

- Zhang et al. (2022) Zhang, Y.; Sun, P.; Jiang, Y.; Yu, D.; Weng, F.; Yuan, Z.; Luo, P.; Liu, W.; and Wang, X. 2022. Bytetrack: Multi-object tracking by associating every detection box. In European Conference on Computer Vision, 1–21. Springer.

- Zhou et al. (2021) Zhou, T.; Li, J.; Li, X.; and Shao, L. 2021. Target-aware object discovery and association for unsupervised video multi-object segmentation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 6985–6994.

- Zhou et al. (2022) Zhou, T.; Porikli, F.; Crandall, D. J.; Van Gool, L.; and Wang, W. 2022. A Survey on Deep Learning Technique for Video Segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence.

- Zhou et al. (2020) Zhou, T.; Wang, S.; Zhou, Y.; Yao, Y.; Li, J.; and Shao, L. 2020. Motion-attentive transition for zero-shot video object segmentation. In Proceedings of the AAAI conference on artificial intelligence, volume 34, 13066–13073.

- Zhu et al. (2020) Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; and Dai, J. 2020. Deformable detr: Deformable transformers for end-to-end object detection. arXiv preprint arXiv:2010.04159.

- Zou et al. (2023) Zou, X.; Yang, J.; Zhang, H.; Li, F.; Li, L.; Gao, J.; and Lee, Y. J. 2023. Segment everything everywhere all at once. arXiv preprint arXiv:2304.06718.