UV-SLAM: Unconstrained Line-based SLAM Using Vanishing Points for Structural Mapping

Abstract

In feature-based simultaneous localization and mapping (SLAM), line features complement the sparsity of point features, making it possible to map the surrounding environment structure. Existing approaches utilizing line features have primarily employed a measurement model that uses line re-projection. However, the direction vectors used in the 3D line mapping process cannot be corrected because the line measurement model employs only the lines' normal vectors in the Plücker coordinate. As a result, problems like degeneracy that occur during the 3D line mapping process cannot be solved. To tackle this problem, this paper presents UV-SLAM, an unconstrained line-based SLAM using vanishing points for structural mapping. This paper focuses on using structural regularities without any constraints, such as the Manhattan world assumption. For this, we use the vanishing points that can be obtained from the line features. The difference between the vanishing point observation calculated through line features in the image and the vanishing point estimation calculated through the direction vector is defined as a residual and added to the cost function of optimization-based SLAM. Furthermore, through Fisher information matrix rank analysis, we prove that vanishing point measurements guarantee a unique mapping solution. Finally, using public datasets, we demonstrate that the localization accuracy and mapping quality are improved compared to the state-of-the-art algorithms.

I Introduction

Feature-based visual simultaneous localization and mapping (SLAM) has been developed primarily around point features because a point is the smallest unit that can express the characteristics of an image and is advantageous in low-computation environments. In addition, point features have been used for localization because they are easy to track. However, point features have several drawbacks[1]. First, point features are not robust in low-texture environments such as hallways. Moreover, they are weak against illumination change. Finally, point features are sparse, making it difficult to visualize the surrounding environment with a 3D map.

To supplement the point features, line-based methods have been proposed. Line features can additionally be used in environments with low textures, such as corridors. In addition, because the line consists of several points, there is a high probability that the characteristics will be maintained even when an illumination change occurs[4, 5]. Finally, because line features have structural regularities, the surrounding environment can be easily identified through 3D mapping[6].

With the above advantages, many studies have been conducted to apply line features to visual SLAM. First, a method using the Plücker coordinate and the orthonormal representation for representing 3D lines was proposed[7] to express a 3D line with a higher degree of freedom (DoF) than a 3D point. Based on this, a line measurement model was defined in a way similar to the point measurement model. It re-projects a 3D line and calculates the difference from the newly observed line. Most line-based algorithms adapt existing point-based methods. Filtering-based approaches[8, 9] were developed from MSCKF[10]. Some optimization-based methods[11, 12, 13] exploited ORB-SLAM[14] and other approaches[1, 15, 2, 3] used VINS-Mono[16].

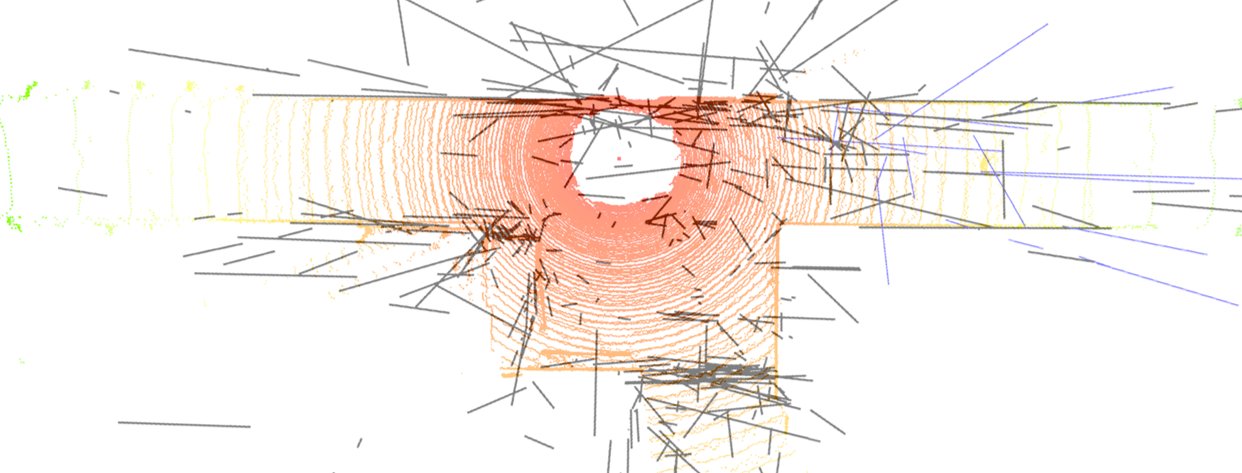

However, the above algorithms, which apply only the line measurement model, do not solve the problems in the 3D mapping of lines. Among the difficulties, a degeneracy problem occurs in the 3D mapping of line features. Degeneracy refers to a phenomenon in which 3D features cannot be uniquely determined through triangulation[17]. When the observed 2D point is close to the epipole, a point feature cannot be determined as a single 3D point because the 3D point exists on the baseline. Similarly, when the observed 2D line passes close to the epipole, a line feature cannot be determined as a single 3D line because the 3D line exists on the epipolar plane. The mapping of line features is inaccurate because degeneracy occurs more frequently for line features than for point features. Moreover, because the line measurement model used in the existing algorithms employs only each line's normal vector in the Plücker coordinate, the direction vectors cannot be corrected. Fig. 1 compares top views of the 3D line mapping results of ALVIO[2, 3] and the proposed algorithm, with the LiDAR point clouds overlaid, in a real corridor environment. ALVIO yields a poor mapping result even though the lines have structural regularities, as shown in Fig. 1.

In this paper, we propose UV-SLAM, an algorithm that solves the above problems using the lines' structural regularities, as shown in Fig. 1. The main contributions of this paper are as follows:

- To the best of our knowledge, the proposed UV-SLAM is the first optimization-based monocular SLAM using vanishing point measurements for structural mapping without any restriction such as camera motion and environment. In particular, our algorithm does not use the Manhattan world assumption in the process of extracting the vanishing points and using them as measurements.

- We define a novel residual term and Jacobian of the vanishing point measurements based on the most common methods of expressing 3D line features: the Plücker coordinate and the orthonormal representation.

- We prove that the proposed method guarantees the observability of 3D lines through Fisher information matrix (FIM) rank analysis. Through this, problems that occur in the 3D mapping of line features are proven to be solved.

The rest of this paper is organized as follows. Section II gives a review of related works. Section III explains the proposed method in depth. Section IV analyzes the Fisher information matrix rank to prove the validity of the proposed method. Section V provides the experimental results. Finally, Section VI concludes by summarizing our contributions and discussing future work.

II Related works

II-A Degeneracy of Line Features

Some works looked into the degeneracy for line features[18, 19]. In addition, Yang et al. investigated degenerate motions using two distinct line triangulation methods[9]. Subsequently, they analyzed various features’ observability and degenerate camera motions in the inertial measurement unit (IMU) aided navigation system[20]. However, when line degeneracy occurs in these investigations, all of the degenerate lines have been eliminated. As a result, there is a limitation in that information loss occurs due to the removed lines.

To handle degenerate lines, Ok et al. reconstructed 3D line segments using imaginary points when the lines are close to the epipolar line[21]. However, this approach can be used only when degenerate lines intersect with other lines, which restricts its applicability. Our previous work solved the degeneracy by using structural constraints in parallel conditions, exploiting the fact that degenerate lines frequently occur in pure translational motions[3]. However, this method is limited in that it can be used only in pure translational motions. Therefore, to improve the quality of line mapping, a method that can be used independently of camera motion is required.

II-B Line-based SLAM with Manhattan or Atlanta World Assumption

In [22, 23], the rotation matrix was estimated using the Manhattan world assumption. Based on this, decoupled methods have been proposed to estimate the translation after calculating the rotation through the vanishing points[24, 25, 26]. In addition, some approaches applied line features to SLAM by using the Manhattan or Atlanta world assumption [27, 28]. These methods use a novel 2-DoF line representation to exploit only lines with structural regularities. Furthermore, there is a study using a 2-DoF line representation to classify structural lines and non-structural lines[29]. However, as these approaches use only structural lines with dominant directions, they are practical only in indoor environments where the assumptions mostly hold. Therefore, there is a need for a novel algorithm that is not restricted by such assumptions.

II-C Vanishing Point Measurements

Some approaches use vanishing point measurements without assumptions. In [30], parallel lines were clustered based on vanishing points. Then, residuals were constructed using the conditions that parallel lines should be in one plane and their cross product should be zero. However, accurate mapping results could not be obtained when the initial estimation was inaccurate as degeneracy occurred.

Moreover, there is a paper using vanishing points as an observation model. In [31], the residuals are defined to apply unbounded vanishing point measurements to line-based SLAM. Unfortunately, it does not provide a proof that vanishing point measurement improves the localization accuracy and line mapping results of line-based SLAM.

III Proposed method

III-A Framework of Algorithm

The overall framework of UV-SLAM is shown in Fig. 2. The proposed method is based on VINS-Mono[16], and IMU and point measurements are used in a similar way. The point features are extracted by the Shi-Tomasi detector[32] and are tracked by KLT[33]. In addition, the IMU measurement model is defined by the pre-integration method[34]. Finally, our optimization-based method employs two-way marginalization with the Schur complement[35].

To add line features to the monocular visual-inertial odometry (VIO) system, the line features are extracted by the line segment detector (LSD)[36] and are tracked by the line binary descriptor (LBD)[37]. Whereas 3D points can be intuitively expressed in $\mathbb{R}^3$, 3D lines require a more complicated representation. Therefore, the proposed algorithm employs the Plücker coordinate and the orthonormal representation used in [7]. The Plücker coordinate is an intuitive way to represent 3D lines, and a 3D line is represented as follows:

$$\mathbf{L} = [\mathbf{n}^\top, \mathbf{d}^\top]^\top \in \mathbb{R}^6, \tag{1}$$

where $\mathbf{n}$ and $\mathbf{d}$ represent normal and direction vectors, respectively. The Plücker coordinate is used in the triangulation and re-projection process. Whereas 3D lines are actually 4-DoF, the lines in the Plücker coordinate are 6-DoF. Therefore, an over-parameterization problem occurs in the optimization process of VIO or visual SLAM. To solve this, the orthonormal representation is employed, which is a 4-DoF representation of lines. It is used in the optimization process and can be expressed as follows:

$$\mathbf{o} = [\boldsymbol{\psi}, \phi], \tag{2}$$

where $\boldsymbol{\psi}$ is the 3D line's rotation matrix in Euler angles with respect to the camera coordinate system, and $\phi$ is the parameter representing the minimal distance from the camera center to the line. The conversion between the Plücker coordinate and the orthonormal representation is given in [7].
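As an illustration of the conversion described in [7], the following minimal Python sketch maps a Plücker line to an orthonormal frame and a unit 2-vector, and back. It is not the authors' implementation: the function names are hypothetical, the frame is stored as three column vectors rather than as Euler angles, and the line is assumed not to pass through the origin (so that the normal vector is non-zero).

```python
import math

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def norm(a):
    return math.sqrt(sum(x * x for x in a))

def plucker_to_orthonormal(n, d):
    """Return (U, w): U holds orthonormal columns u1, u2, u3; w = (w1, w2) has unit length."""
    nn, dn = norm(n), norm(d)
    u1 = [x / nn for x in n]
    u2 = [x / dn for x in d]
    u3 = cross(u1, u2)           # unit length because n is perpendicular to d
    s = math.hypot(nn, dn)
    return (u1, u2, u3), (nn / s, dn / s)

def orthonormal_to_plucker(U, w):
    """Recover the Plücker line up to a common scale: n = w1 * u1, d = w2 * u2."""
    u1, u2, _ = U
    return [w[0] * x for x in u1], [w[1] * x for x in u2]

# Line through the point p with direction d (both chosen only for illustration).
p = [1.0, 2.0, 3.0]
d = [0.0, 0.0, 2.0]
n = cross(p, d)                  # normal vector of the Plücker coordinate
U, w = plucker_to_orthonormal(n, d)
n2, d2 = orthonormal_to_plucker(U, w)
dist = w[0] / w[1]               # ||n|| / ||d||: distance from the origin to the line
print(dist)
```

The three angles of the frame and the single angle behind `w` together give the four degrees of freedom of a 3D line, which is why this form avoids over-parameterization in the optimization.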

In addition, UV-SLAM can determine whether the extracted lines have structural regularities or not. For vanishing point detection, the proposed algorithm uses J-linkage[38], which can find multiple instances in the presence of noise and outliers. The overall process is as follows: First, vanishing point hypotheses are created through random sampling from all lines extracted from the image. Subsequently, similar hypotheses are merged by comparing them with each other, and the vanishing points are calculated. Because J-linkage can find all possible vanishing points through the hypotheses, it can find more vanishing points than other algorithms that rely on the Manhattan world assumption.
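The hypothesis step can be illustrated with a small Python sketch. It covers only two ingredients, under our own assumptions: a vanishing point hypothesis is formed by intersecting the supporting lines of two segments, and a segment is counted as consistent when its endpoint lies close to the line joining its midpoint and the hypothesis. The clustering of hypotheses performed by J-linkage[38] is not reproduced here, and the function names, the threshold, and the sample segments are hypothetical.

```python
import math

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def segment_line(seg):
    """Homogeneous line through the two endpoints of a segment."""
    (x1, y1), (x2, y2) = seg
    return cross([x1, y1, 1.0], [x2, y2, 1.0])

def vp_hypothesis(seg_a, seg_b):
    """Homogeneous intersection of two supporting lines (last entry 0 means a point at infinity)."""
    return cross(segment_line(seg_a), segment_line(seg_b))

def consistency_error(seg, vp):
    """Distance from an endpoint to the line joining the segment midpoint and the hypothesis."""
    (x1, y1), (x2, y2) = seg
    mid = [(x1 + x2) / 2.0, (y1 + y2) / 2.0, 1.0]
    a, b, c = cross(mid, vp)
    return abs(a * x1 + b * y1 + c) / math.hypot(a, b)

# Three segments converging at (10, 0) and one unrelated vertical segment.
segments = [((0, 0), (1, 0)), ((0, 5), (2, 4)), ((0, -5), (4, -3)), ((0, 0), (0, 1))]
vp = vp_hypothesis(segments[0], segments[1])
inliers = [s for s in segments if consistency_error(s, vp) < 0.05]
print(vp[0] / vp[2], vp[1] / vp[2], len(inliers))
```

Keeping the hypothesis in homogeneous form means that nearly parallel image lines, whose vanishing point lies far from the image center, need no special handling in the consistency test.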

III-B State Definition

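In this paper, $(\cdot)^w$, $(\cdot)^c$, and $(\cdot)^b$ represent the world coordinate, camera coordinate, and body coordinate, respectively. In addition, $(\cdot)^w_b$ denotes a rotation matrix, quaternion, or translation from the body coordinate to the world coordinate. The state vector used in our system is as follows:

$$
\begin{aligned}
\mathcal{X} &= [\mathbf{x}_0, \mathbf{x}_1, \ldots, \mathbf{x}_{I-1}, \lambda_0, \lambda_1, \ldots, \lambda_{J-1}, \mathbf{o}_0, \mathbf{o}_1, \ldots, \mathbf{o}_{K-1}], \\
\mathbf{x}_i &= [\mathbf{p}^w_{b_i}, \mathbf{q}^w_{b_i}, \mathbf{v}^w_{b_i}, \mathbf{b}_a, \mathbf{b}_g], \quad i \in [0, I-1],
\end{aligned} \tag{3}
$$

where $\mathcal{X}$ represents the entire state, and $\mathbf{x}_i$ represents the body state in the $i$-th sliding window, which is made up of the following parameters: position, quaternion, velocity, and biases of the accelerometer and gyroscope. In addition, the entire state includes the inverse depths of point features, which are represented as $\lambda$. In this paper, lines expressed in the orthonormal representation are newly added as $\mathbf{o}$. $I$, $J$, and $K$ are the size of the sliding window and the numbers of point features and line features, respectively.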

III-C UV-SLAM

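Employing the states defined in (3), the entire objective for optimization is as follows:

$$
\begin{aligned}
\min_{\mathcal{X}} \Big\{ & \left\| \mathbf{r}_0(\mathcal{X}) \right\|^2 + \sum_{i \in \mathcal{B}} \left\| \mathbf{r}_I(\mathbf{z}^{b_i}_{b_{i+1}}, \mathcal{X}) \right\|^2_{\boldsymbol{\Sigma}^{b_i}_{b_{i+1}}} + \sum_{(i,j) \in \mathcal{P}} \rho_p \Big( \left\| \mathbf{r}_p(\mathbf{z}^{c_i}_{p_j}, \mathcal{X}) \right\|^2_{\boldsymbol{\Sigma}^{c_i}_{p_j}} \Big) \\
& + \sum_{(i,k) \in \mathcal{L}} \rho_l \Big( \left\| \mathbf{r}_l(\mathbf{z}^{c_i}_{l_k}, \mathcal{X}) \right\|^2_{\boldsymbol{\Sigma}^{c_i}_{l_k}} \Big) + \sum_{(i,k) \in \mathcal{V}} \rho_v \Big( \left\| \mathbf{r}_v(\mathbf{z}^{c_i}_{v_k}, \mathcal{X}) \right\|^2_{\boldsymbol{\Sigma}^{c_i}_{v_k}} \Big) \Big\},
\end{aligned} \tag{4}
$$

where $\mathbf{r}_0$, $\mathbf{r}_I$, $\mathbf{r}_p$, $\mathbf{r}_l$, and $\mathbf{r}_v$ represent marginalization, IMU, point, line, and vanishing point measurement residuals, respectively. In addition, $\mathbf{z}^{b_i}_{b_{i+1}}$, $\mathbf{z}^{c_i}_{p_j}$, $\mathbf{z}^{c_i}_{l_k}$, and $\mathbf{z}^{c_i}_{v_k}$ stand for observations of IMU, point, line, and vanishing point, respectively; $\mathcal{B}$ is the set of all pre-integrated IMU measurements in a sliding window; $\mathcal{P}$, $\mathcal{L}$, and $\mathcal{V}$ are the sets of point, line, and vanishing point measurements in the observed frames; and $\boldsymbol{\Sigma}^{b_i}_{b_{i+1}}$, $\boldsymbol{\Sigma}^{c_i}_{p_j}$, $\boldsymbol{\Sigma}^{c_i}_{l_k}$, and $\boldsymbol{\Sigma}^{c_i}_{v_k}$ represent IMU, point, line, and vanishing point measurement covariance matrices, respectively. $\rho_p$, $\rho_l$, and $\rho_v$ denote the loss functions of the point, line, and vanishing point measurements, respectively. $\rho_p$ and $\rho_l$ are set to the Huber norm function [39], and $\rho_v$ is set to the inverse tangent function because the vanishing point measurement is unbounded. An example of the factor graph for the defined cost function is shown in Fig. 3. If there is no vanishing point measurement for a specific line, only the line feature factor is used. If a specific line has a corresponding vanishing point measurement, both the line feature and vanishing point factors are employed. For the optimization process, Ceres Solver[40] is used.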
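To make the role of the two robust losses concrete, the following Python sketch evaluates a Huber loss and an inverse tangent loss on a squared residual norm $s$. The formulas follow the definitions documented for `HuberLoss` and `ArctanLoss` in Ceres Solver; that UV-SLAM uses exactly these classes, and the scale parameter `a = 1.0`, are our assumptions rather than statements from the paper.

```python
import math

def huber_loss(s, a=1.0):
    """Huber loss on a squared residual norm s: quadratic near zero, linear in sqrt(s) beyond a^2."""
    return s if s <= a * a else 2.0 * a * math.sqrt(s) - a * a

def arctan_loss(s, a=1.0):
    """Inverse tangent loss on s: bounded above by a * pi / 2."""
    return a * math.atan2(s, a)

# The Huber cost keeps growing with the residual, whereas the arctan cost saturates.
for s in (0.25, 4.0, 1e4, 1e12):
    print(s, huber_loss(s), arctan_loss(s))
```

Because the inverse tangent loss is bounded, a vanishing point that lies arbitrarily far from the image center cannot dominate the total cost, which is the motivation given above for treating the vanishing point term differently from the point and line terms.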

III-D Line Measurement Model

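First, the re-projection of the 3D line in the Plücker coordinate is as follows:

$$
\mathbf{l}_c = \begin{bmatrix} l_1 \\ l_2 \\ l_3 \end{bmatrix} = \mathbf{K}_L \mathbf{n}_c = \begin{bmatrix} f_y & 0 & 0 \\ 0 & f_x & 0 \\ -f_y c_x & -f_x c_y & f_x f_y \end{bmatrix} \mathbf{n}_c, \tag{5}
$$

where $\mathbf{l}_c$, $\mathbf{K}_L$, and $\mathbf{K}$ represent the re-projected line, the projection matrix of a line feature, and the camera's intrinsic parameter matrix, respectively. ($f_x$, $f_y$) and ($c_x$, $c_y$) denote the image's focal lengths and principal point, respectively. Because the proposed algorithm operates on the normalized image plane, $\mathbf{K}$ and $\mathbf{K}_L$ are identity matrices. As a result, the re-projected line is equal to the normal vector in the proposed method.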
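The line projection matrix can be checked numerically with a short Python sketch: the line obtained by multiplying the Plücker normal vector with the matrix built from the focal lengths and principal point should pass through the pinhole projections of any two points on the 3D line. The intrinsic values and the two 3D points below are illustrative assumptions, not calibration data from the paper.

```python
import math

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def line_projection_matrix(fx, fy, cx, cy):
    """Matrix that maps the Plücker normal vector to the image line."""
    return [[fy, 0.0, 0.0],
            [0.0, fx, 0.0],
            [-fy * cx, -fx * cy, fx * fy]]

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def project_point(fx, fy, cx, cy, P):
    """Pinhole projection of a 3D point in the camera frame to pixel coordinates."""
    return [fx * P[0] / P[2] + cx, fy * P[1] / P[2] + cy, 1.0]

fx, fy, cx, cy = 458.0, 457.0, 367.0, 248.0   # hypothetical intrinsics
A = [1.0, 0.5, 4.0]                           # two points on a 3D line (camera frame)
B = [-1.0, 0.2, 6.0]
d = [b - a for a, b in zip(A, B)]             # direction vector
n = cross(A, d)                               # normal vector
l = matvec(line_projection_matrix(fx, fy, cx, cy), n)

# Signed pixel distance of each projected point to the re-projected line.
scale = math.hypot(l[0], l[1])
residuals = [sum(li * pi for li, pi in zip(l, project_point(fx, fy, cx, cy, P))) / scale
             for P in (A, B)]
print(residuals)
```

With `fx = fy = 1` and `cx = cy = 0` the matrix reduces to the identity, which matches the normalized-plane case in which the re-projected line equals the normal vector.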

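As shown in Fig. 4, the residual of the line measurement model is defined as the following re-projection error:

$$\mathbf{r}_l = \begin{bmatrix} d(\mathbf{p}_s, \mathbf{l}_c) \\ d(\mathbf{p}_e, \mathbf{l}_c) \end{bmatrix}, \tag{6}$$

where

$$d(\mathbf{p}, \mathbf{l}_c) = \frac{\mathbf{p}^\top \mathbf{l}_c}{\sqrt{l_1^2 + l_2^2}}, \tag{7}$$

$\mathbf{r}_l$ denotes the line residual, and $d(\mathbf{p}, \mathbf{l}_c)$ denotes the distance between an endpoint of the observed line and the re-projected line. $\mathbf{p}_s = [u_s, v_s, 1]^\top$ and $\mathbf{p}_e = [u_e, v_e, 1]^\top$ are the endpoints of the observed line in the image. The corresponding Jacobian matrix with respect to the 3D line can be represented by the body state change, $\delta\mathbf{x}$, and the orthonormal representation change, $\delta\mathbf{o}$, as follows:

$$\mathbf{J}_l = \frac{\partial \mathbf{r}_l}{\partial \mathbf{l}_c} \frac{\partial \mathbf{l}_c}{\partial \mathbf{L}_c} \begin{bmatrix} \dfrac{\partial \mathbf{L}_c}{\partial \delta\mathbf{x}} & \dfrac{\partial \mathbf{L}_c}{\partial \mathbf{L}_w} \dfrac{\partial \mathbf{L}_w}{\partial \delta\mathbf{o}} \end{bmatrix}, \tag{8}$$

with

$$
\begin{aligned}
\frac{\partial \mathbf{r}_l}{\partial \mathbf{l}_c} &= \begin{bmatrix}
\dfrac{u_s}{(l_1^2 + l_2^2)^{1/2}} - \dfrac{l_1 \mathbf{p}_s^\top \mathbf{l}_c}{(l_1^2 + l_2^2)^{3/2}} & \dfrac{v_s}{(l_1^2 + l_2^2)^{1/2}} - \dfrac{l_2 \mathbf{p}_s^\top \mathbf{l}_c}{(l_1^2 + l_2^2)^{3/2}} & \dfrac{1}{(l_1^2 + l_2^2)^{1/2}} \\
\dfrac{u_e}{(l_1^2 + l_2^2)^{1/2}} - \dfrac{l_1 \mathbf{p}_e^\top \mathbf{l}_c}{(l_1^2 + l_2^2)^{3/2}} & \dfrac{v_e}{(l_1^2 + l_2^2)^{1/2}} - \dfrac{l_2 \mathbf{p}_e^\top \mathbf{l}_c}{(l_1^2 + l_2^2)^{3/2}} & \dfrac{1}{(l_1^2 + l_2^2)^{1/2}}
\end{bmatrix}, \\
\frac{\partial \mathbf{l}_c}{\partial \mathbf{L}_c} &= \begin{bmatrix} \mathbf{K}_L & \mathbf{0}_{3 \times 3} \end{bmatrix}, \\
\frac{\partial \mathbf{L}_c}{\partial \delta\mathbf{x}} &= \begin{bmatrix}
(\mathcal{T}^b_c)^{-1} \begin{bmatrix} \mathbf{R}^{w\top}_b [\mathbf{d}_w]_\times \\ \mathbf{0}_{3 \times 3} \end{bmatrix} &
(\mathcal{T}^b_c)^{-1} \begin{bmatrix} [\mathbf{R}^{w\top}_b (\mathbf{n}_w + [\mathbf{d}_w]_\times \mathbf{p}^w_b)]_\times \\ [\mathbf{R}^{w\top}_b \mathbf{d}_w]_\times \end{bmatrix} &
\mathbf{0}_{6 \times 9}
\end{bmatrix}, \\
\frac{\partial \mathbf{L}_c}{\partial \mathbf{L}_w} &= (\mathcal{T}^w_c)^{-1}, \\
\frac{\partial \mathbf{L}_w}{\partial \delta\mathbf{o}} &= \begin{bmatrix}
\mathbf{0}_{3 \times 1} & -w_1 \mathbf{u}_3 & w_1 \mathbf{u}_2 & -w_2 \mathbf{u}_1 \\
w_2 \mathbf{u}_3 & \mathbf{0}_{3 \times 1} & -w_2 \mathbf{u}_1 & w_1 \mathbf{u}_2
\end{bmatrix},
\end{aligned} \tag{9}
$$

where

$$\mathcal{T}^b_c = \begin{bmatrix} \mathbf{R}^b_c & [\mathbf{p}^b_c]_\times \mathbf{R}^b_c \\ \mathbf{0}_{3 \times 3} & \mathbf{R}^b_c \end{bmatrix}, \tag{10}$$

and $\mathcal{T}^b_c$ is a transformation matrix from the camera coordinate to the body coordinate in the Plücker coordinate. Here, $[\cdot]_\times$ denotes the skew-symmetric matrix of a vector, $\mathbf{n}_w$ and $\mathbf{d}_w$ are the normal and direction vectors of the line in the world coordinate, and $\mathbf{u}_i$ and $(w_1, w_2)$ are the columns of the rotation matrix and the two scalar parameters of the orthonormal representation in [7].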
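The re-projection error and its derivative with respect to the re-projected line can be sketched and cross-checked in Python as follows. The analytic rows are obtained by differentiating the point-to-line distance directly; the finite-difference routine is only a sanity check. The sample line and endpoints are arbitrary values on the normalized image plane, and the function names are hypothetical.

```python
import math

def point_line_distance(p, l):
    """Signed distance from the image point p = (u, v) to the homogeneous line l."""
    return (p[0] * l[0] + p[1] * l[1] + l[2]) / math.hypot(l[0], l[1])

def line_residual(p_s, p_e, l):
    return [point_line_distance(p_s, l), point_line_distance(p_e, l)]

def line_residual_jacobian(p_s, p_e, l):
    """Analytic 2x3 derivative of the residual with respect to l = (l1, l2, l3)."""
    n2 = l[0] ** 2 + l[1] ** 2
    rows = []
    for (u, v) in (p_s, p_e):
        e = u * l[0] + v * l[1] + l[2]
        rows.append([u / math.sqrt(n2) - l[0] * e / n2 ** 1.5,
                     v / math.sqrt(n2) - l[1] * e / n2 ** 1.5,
                     1.0 / math.sqrt(n2)])
    return rows

def numeric_jacobian(p_s, p_e, l, h=1e-6):
    """Central finite differences, used only to verify the analytic form."""
    cols = []
    for k in range(3):
        lp, lm = list(l), list(l)
        lp[k] += h
        lm[k] -= h
        rp, rm = line_residual(p_s, p_e, lp), line_residual(p_s, p_e, lm)
        cols.append([(a - b) / (2.0 * h) for a, b in zip(rp, rm)])
    return [[cols[k][i] for k in range(3)] for i in range(2)]

l = [0.6, -0.8, 0.1]
p_s, p_e = (0.10, 0.25), (0.40, 0.45)
r = line_residual(p_s, p_e, l)
J = line_residual_jacobian(p_s, p_e, l)
J_num = numeric_jacobian(p_s, p_e, l)
max_diff = max(abs(a - b) for ra, rb in zip(J, J_num) for a, b in zip(ra, rb))
print(r, max_diff)
```

Multiplying either row of `J` by `l` gives zero, which reflects that the residual does not change when the re-projected line is rescaled.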

III-E Vanishing Point Measurement Model

After the vanishing points are calculated, the observed line features use the corresponding vanishing points as new observations. An example of clustering lines through vanishing points is shown in Fig. 5. The lines with the same vanishing point are expressed in the same color.

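To estimate the vanishing points, a point on the 3D line is expressed in homogeneous coordinates as follows[17]:

$$\mathbf{X}(t) = \mathbf{X}_0 + t \mathbf{D}, \tag{11}$$

where

$$\mathbf{D} = [\mathbf{d}_c^\top, 0]^\top, \tag{12}$$

and $\mathbf{X}_0$ represents a point on the 3D line. Then, the vanishing point equals the projection of a point at infinity on the 3D line as follows:

$$\mathbf{v} = \lim_{t \to \infty} \mathbf{P} \mathbf{X}(t) = \lim_{t \to \infty} \mathbf{P} (\mathbf{X}_0 + t \mathbf{D}) = \mathbf{K} \mathbf{d}_c, \tag{13}$$

where $\mathbf{P} = \mathbf{K} [\mathbf{I} \,|\, \mathbf{0}]$ is a camera projection matrix and the equalities hold up to scale. In UV-SLAM, because the measurements are expressed on the normalized image plane ($\mathbf{K} = \mathbf{I}$), the vanishing point of a line is equal to the direction vector of the line. The vanishing point estimation is calculated by the intersection of $\mathbf{d}_c$ and the image plane, as shown in Fig. 6. Finally, the vanishing point residual is as follows:

$$\mathbf{r}_v = \mathbf{p}_v - \frac{1}{d_3} \begin{bmatrix} d_1 \\ d_2 \end{bmatrix}, \tag{14}$$

where $\mathbf{r}_v$ and $\mathbf{p}_v$ represent the vanishing point residual and the vanishing point observation, respectively, and $\mathbf{d}_c = [d_1, d_2, d_3]^\top$. The corresponding Jacobian matrix with respect to the vanishing point can be obtained in terms of $\delta\mathbf{x}$ and $\delta\mathbf{o}$ as follows:

$$\mathbf{J}_v = \frac{\partial \mathbf{r}_v}{\partial \mathbf{d}_c} \frac{\partial \mathbf{d}_c}{\partial \mathbf{L}_c} \begin{bmatrix} \dfrac{\partial \mathbf{L}_c}{\partial \delta\mathbf{x}} & \dfrac{\partial \mathbf{L}_c}{\partial \mathbf{L}_w} \dfrac{\partial \mathbf{L}_w}{\partial \delta\mathbf{o}} \end{bmatrix}, \tag{15}$$

where

$$
\begin{aligned}
\frac{\partial \mathbf{r}_v}{\partial \mathbf{d}_c} &= \begin{bmatrix} -\dfrac{1}{d_3} & 0 & \dfrac{d_1}{d_3^2} \\ 0 & -\dfrac{1}{d_3} & \dfrac{d_2}{d_3^2} \end{bmatrix}, \\
\frac{\partial \mathbf{d}_c}{\partial \mathbf{L}_c} &= \begin{bmatrix} \mathbf{0}_{3 \times 3} & \mathbf{I}_{3 \times 3} \end{bmatrix}.
\end{aligned} \tag{16}
$$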
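A minimal Python sketch of the vanishing point residual is given below: the estimate is the intersection of the direction vector with the normalized image plane, the residual is the observation minus that estimate, and the analytic derivative with respect to the direction vector is compared against finite differences. The sample direction and observation are arbitrary, and the function names are hypothetical.

```python
def vp_estimate(d):
    """Intersection of the direction vector d = (d1, d2, d3) with the plane z = 1."""
    return [d[0] / d[2], d[1] / d[2]]

def vp_residual(p_v, d):
    """Observation minus estimate on the normalized image plane."""
    est = vp_estimate(d)
    return [p_v[0] - est[0], p_v[1] - est[1]]

def vp_residual_jacobian(d):
    """Analytic 2x3 derivative of the residual with respect to d."""
    return [[-1.0 / d[2], 0.0, d[0] / d[2] ** 2],
            [0.0, -1.0 / d[2], d[1] / d[2] ** 2]]

def numeric_jacobian(p_v, d, h=1e-6):
    """Central finite differences, used only to verify the analytic form."""
    cols = []
    for k in range(3):
        dp, dm = list(d), list(d)
        dp[k] += h
        dm[k] -= h
        rp, rm = vp_residual(p_v, dp), vp_residual(p_v, dm)
        cols.append([(a - b) / (2.0 * h) for a, b in zip(rp, rm)])
    return [[cols[k][i] for k in range(3)] for i in range(2)]

d = [0.3, -0.2, 0.9]
p_v = (0.35, -0.2)
r = vp_residual(p_v, d)
J = vp_residual_jacobian(d)
J_num = numeric_jacobian(p_v, d)
max_diff = max(abs(a - b) for ra, rb in zip(J, J_num) for a, b in zip(ra, rb))

# The estimate grows without bound as the line becomes parallel to the image plane.
far = vp_estimate([0.3, -0.2, 1e-6])
print(r, max_diff, far)
```

The last line illustrates the unbounded behavior of the measurement: as the third component of the direction vector approaches zero, the estimate moves arbitrarily far from the image center, so a bounded loss is a natural choice for this term.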

IV Fisher Information Matrix Rank Analysis

We rigorously analyze the observability of line features through Fisher information matrix (FIM) rank analysis. If the FIM is singular, the system is unobservable[41]. Wang et al. proved that the Jacobian matrix used in the FIM calculation must satisfy the full column rank condition for the FIM to satisfy nonsingularity[42]. We also use this approach to analyze the observability of the proposed method.

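First, the FIM of the line measurement in the orthonormal representation is as follows:

$$\mathbf{F}_l = \mathbf{J}_{l,o}^\top \boldsymbol{\Sigma}_l^{-1} \mathbf{J}_{l,o}, \tag{17}$$

where

$$
\begin{aligned}
\mathbf{J}_{l,o} &= \frac{\partial \mathbf{r}_l}{\partial \mathbf{l}_c} \frac{\partial \mathbf{l}_c}{\partial \mathbf{L}_c} \frac{\partial \mathbf{L}_c}{\partial \mathbf{L}_w} \frac{\partial \mathbf{L}_w}{\partial \delta\mathbf{o}}, \\
\frac{\partial \mathbf{r}_l}{\partial \mathbf{l}_c} &= \begin{bmatrix} l_{11} & l_{12} & l_{13} \\ l_{21} & l_{22} & l_{23} \end{bmatrix},
\end{aligned} \tag{18}
$$

and $l_{ij}$ is the non-zero element in the $i$-th row and the $j$-th column of $\partial \mathbf{r}_l / \partial \mathbf{l}_c$, which is obtained by substituting (9) into (18). $\boldsymbol{\Sigma}_l^{-1}$ represents the inverse of the covariance matrix of the line observation. Because $\partial \mathbf{l}_c / \partial \mathbf{L}_c$, $\partial \mathbf{L}_c / \partial \mathbf{L}_w$, and $\partial \mathbf{L}_w / \partial \delta\mathbf{o}$ are full rank matrices from (9), the rank of $\mathbf{F}_l$ is determined by $\partial \mathbf{r}_l / \partial \mathbf{l}_c$ in (18). From (9), the maximum rank of $\partial \mathbf{r}_l / \partial \mathbf{l}_c$ is 2, and the case in which the rank becomes 1 is as follows:

$$\frac{l_{11}}{l_{21}} = \frac{l_{12}}{l_{22}} = \frac{l_{13}}{l_{23}}. \tag{19}$$

However, because a line in the orthonormal representation has four parameters, the observability of the line cannot be guaranteed with the line measurement model alone. To solve this problem, a new observation on the line other than both endpoints is introduced as follows:

$$\mathbf{r}_l' = \begin{bmatrix} d(\mathbf{p}_s, \mathbf{l}_c) \\ d(\mathbf{p}_e, \mathbf{l}_c) \\ d(\mathbf{p}_n, \mathbf{l}_c) \end{bmatrix}, \tag{20}$$

where $\mathbf{p}_n$ represents the new observation. However, despite adding a new observation, the rank of $\mathbf{F}_l$ is at most 2, because $\mathbf{p}_n$ lies on the same observed line as $\mathbf{p}_s$ and $\mathbf{p}_e$, so its row of the Jacobian is a combination of theirs. Therefore, the line features using only the line measurement model are still not observable.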
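The statement that an extra observation on the same line does not raise the rank can be checked numerically. The Python sketch below stacks the derivative rows of the point-to-line distance for two endpoints and for a third point taken on the segment between them, and computes the rank by Gaussian elimination. The sample values, the tolerance, and the helper names are our assumptions; the third point is chosen as the segment midpoint purely for illustration.

```python
import math

def jacobian_row(p, l):
    """Derivative of the point-to-line distance with respect to l = (l1, l2, l3)."""
    u, v = p
    n2 = l[0] ** 2 + l[1] ** 2
    e = u * l[0] + v * l[1] + l[2]
    return [u / math.sqrt(n2) - l[0] * e / n2 ** 1.5,
            v / math.sqrt(n2) - l[1] * e / n2 ** 1.5,
            1.0 / math.sqrt(n2)]

def matrix_rank(M, tol=1e-9):
    """Rank by Gaussian elimination with partial pivoting."""
    A = [row[:] for row in M]
    rank = 0
    for col in range(len(A[0])):
        pivot = max(range(rank, len(A)), key=lambda r: abs(A[r][col]), default=None)
        if pivot is None or abs(A[pivot][col]) < tol:
            continue
        A[rank], A[pivot] = A[pivot], A[rank]
        for r in range(rank + 1, len(A)):
            f = A[r][col] / A[rank][col]
            A[r] = [x - f * y for x, y in zip(A[r], A[rank])]
        rank += 1
    return rank

l = [0.6, -0.8, 0.1]                       # re-projected line (illustrative)
p_s, p_e = (0.10, 0.25), (0.40, 0.45)      # observed endpoints
p_n = ((p_s[0] + p_e[0]) / 2.0, (p_s[1] + p_e[1]) / 2.0)  # extra point on the observed segment

rank_two = matrix_rank([jacobian_row(p_s, l), jacobian_row(p_e, l)])
rank_three = matrix_rank([jacobian_row(p_s, l), jacobian_row(p_e, l), jacobian_row(p_n, l)])
print(rank_two, rank_three)
```

The third row is an affine combination of the first two because each row depends affinely on the image point, so additional points sampled along the observed segment add no new constraint on the line.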

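From a new perspective, we propose to calculate the FIM with the vanishing point measurements. The FIM of the vanishing point measurement in the orthonormal representation is as follows:

$$\mathbf{F}_v = \mathbf{J}_{v,o}^\top \boldsymbol{\Sigma}_v^{-1} \mathbf{J}_{v,o}, \tag{21}$$

where

$$
\begin{aligned}
\mathbf{J}_{v,o} &= \frac{\partial \mathbf{r}_v}{\partial \mathbf{d}_c} \frac{\partial \mathbf{d}_c}{\partial \mathbf{L}_c} \frac{\partial \mathbf{L}_c}{\partial \mathbf{L}_w} \frac{\partial \mathbf{L}_w}{\partial \delta\mathbf{o}}, \\
\frac{\partial \mathbf{r}_v}{\partial \mathbf{d}_c} &= \begin{bmatrix} v_{11} & 0 & v_{13} \\ 0 & v_{22} & v_{23} \end{bmatrix},
\end{aligned} \tag{22}
$$

and $v_{ij}$ is the non-zero element in the $i$-th row and the $j$-th column of $\partial \mathbf{r}_v / \partial \mathbf{d}_c$, which is obtained by substituting (16) into (22). $\boldsymbol{\Sigma}_v^{-1}$ represents the inverse of the covariance matrix of the vanishing point observation. Similarly, all matrices are full rank except $\partial \mathbf{r}_v / \partial \mathbf{d}_c$. Therefore, the rank of $\mathbf{F}_v$ is 2, which can be obtained from the rank of $\partial \mathbf{r}_v / \partial \mathbf{d}_c$ in (16).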

By eigenvalue decomposition, the FIM considering both the line measurement and the vanishing point measurement can be obtained as follows:

| (23) | ||||

where

| (24) |

At this time, the rows of the line measurement and the vanishing point measurement are independent in the matrix defined in (24). Therefore, the rank of the combined FIM in (23) is 4, except for the case of (19). We can confirm that the line features become fully observable by additionally using the vanishing point measurement model.

V Experimental Results

The experiments were carried out on an Intel Core i7-9700K processor with 32 GB of RAM. Using the EuRoC micro aerial vehicle (MAV) datasets [43], we tested the state-of-the-art algorithms and UV-SLAM. Each dataset offers a different level of complexity depending on factors such as lighting, texture, and MAV speed. Therefore, the datasets were appropriate for validating the performance of the proposed method.

We compared the localization accuracy of the proposed method with that of VINS-Mono, which is our base algorithm. In addition, we compared it with PL-VINS[15], ALVIO, and our previous work, which use line features on top of VINS-Mono. The parameters of the compared algorithms were set to the default values in the open-source codes. We employed the rpg trajectory evaluation tool[44]. Table I shows the translational root mean square error (RMSE) for the EuRoC datasets. The proposed method achieves the lowest error on 9 of the 11 sequences; the exceptions are MH_03_medium, where PL-VINS is slightly better (0.187 vs. 0.189), and V2_02_medium, where our previous work is better (0.103 vs. 0.112). On average, the proposed algorithm shows 32.3%, 23.8%, 26.4%, and 17.6% smaller error than VINS-Mono, PL-VINS, ALVIO, and our previous work, respectively. More accurate results could be obtained with the proposed algorithm because the line features become fully observable using the vanishing point measurements. The results for trajectory, rotation error, and translation error of V2_02_medium in the EuRoC datasets are shown in Fig. 7.

| Translation RMSE (m) | VINS-Mono | PL-VINS | ALVIO | Our method in [3] | UV-SLAM |
|---|---|---|---|---|---|
| MH_01_easy | 0.159 | 0.164 | 0.148 | 0.142 | 0.139 |
| MH_02_easy | 0.140 | 0.174 | 0.136 | 0.126 | 0.094 |
| MH_03_medium | 0.225 | 0.187 | 0.209 | 0.198 | 0.189 |
| MH_04_difficult | 0.408 | 0.335 | 0.389 | 0.301 | 0.261 |
| MH_05_difficult | 0.312 | 0.347 | 0.317 | 0.293 | 0.188 |
| V1_01_easy | 0.094 | 0.071 | 0.085 | 0.087 | 0.067 |
| V1_02_medium | 0.115 | 0.086 | 0.075 | 0.072 | 0.070 |
| V1_03_difficult | 0.203 | 0.152 | 0.200 | 0.156 | 0.109 |
| V2_01_easy | 0.099 | 0.090 | 0.094 | 0.098 | 0.085 |
| V2_02_medium | 0.161 | 0.120 | 0.133 | 0.103 | 0.112 |
| V2_03_difficult | 0.341 | 0.278 | 0.288 | 0.277 | 0.213 |
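The average error reductions quoted for Table I can be re-derived from the table entries with a few lines of Python. The values are copied from the table, in the order of its rows; the dictionary keys are our own labels for the columns.

```python
# Translational RMSE per sequence, in the row order of Table I.
rmse = {
    "VINS-Mono":         [0.159, 0.140, 0.225, 0.408, 0.312, 0.094, 0.115, 0.203, 0.099, 0.161, 0.341],
    "PL-VINS":           [0.164, 0.174, 0.187, 0.335, 0.347, 0.071, 0.086, 0.152, 0.090, 0.120, 0.278],
    "ALVIO":             [0.148, 0.136, 0.209, 0.389, 0.317, 0.085, 0.075, 0.200, 0.094, 0.133, 0.288],
    "Previous work [3]": [0.142, 0.126, 0.198, 0.301, 0.293, 0.087, 0.072, 0.156, 0.098, 0.103, 0.277],
    "UV-SLAM":           [0.139, 0.094, 0.189, 0.261, 0.188, 0.067, 0.070, 0.109, 0.085, 0.112, 0.213],
}

# Mean RMSE per method and relative reduction achieved by UV-SLAM, in percent.
mean = {name: sum(values) / len(values) for name, values in rmse.items()}
reduction = {name: 100.0 * (1.0 - mean["UV-SLAM"] / mean[name])
             for name in rmse if name != "UV-SLAM"}

# Number of sequences on which UV-SLAM has the lowest error among all methods.
n_sequences = len(rmse["UV-SLAM"])
best_count = sum(
    1 for i in range(n_sequences)
    if rmse["UV-SLAM"][i] == min(values[i] for values in rmse.values())
)

for name, value in reduction.items():
    print(f"{name}: {value:.1f}% smaller average error")
print(best_count, "of", n_sequences, "sequences")
```

The reductions are computed from the ratio of mean errors over all sequences, so sequences with larger absolute error weigh more than they would in an average of per-sequence percentages.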

In addition, the mapping results for MH_05_difficult and V2_01_easy in the EuRoC datasets are shown in Fig. 8. In the case of ALVIO, the quality of mapping is low due to degenerate lines. In our previous work, degenerate lines were corrected only in pure translational camera motion. Notably, the lines' direction vectors are aligned in UV-SLAM thanks to the vanishing point measurements. All top views of the line mapping results for the EuRoC datasets are available at: https://github.com/url-kaist/UV-SLAM/blob/main/mapping_result.pdf.

The average runtime on the EuRoC datasets is about 53.528 ms for the frontend and 47.086 ms for the backend. The frontend of UV-SLAM takes only about 3 ms longer than those of the other algorithms because it additionally extracts vanishing points. Moreover, the runtime of the backend, which corresponds to optimization, is similar to those of the other algorithms. This is because the proposed vanishing point measurement model does not introduce new parameters.

VI Conclusion

In summary, we proposed UV-SLAM, an unconstrained line-based SLAM using vanishing point measurements. The proposed method can be used without any assumptions such as the Manhattan world. We derived the residual and Jacobian matrices of the vanishing point measurements. Through FIM rank analysis, we verified that the observability of lines is guaranteed by introducing the vanishing point measurements into the existing method. In addition, we showed through quantitative and qualitative comparisons with state-of-the-art algorithms that localization accuracy and mapping quality are improved. For future work, we will implement mesh or pixel-wise mapping on top of the sparse line mapping from the proposed algorithm.

References

- [1] Y. He, J. Zhao, Y. Guo, W. He, and K. Yuan, “PL-VIO: Tightly-coupled monocular visual–inertial odometry using point and line features,” Sensors, vol. 18, no. 4, p. 1159, 2018.

- [2] K. Jung, Y. Kim, H. Lim, and H. Myung, “ALVIO: Adaptive line and point feature-based visual inertial odometry for robust localization in indoor environments,” in Proc. International Conference on Robot Intelligence Technology and Applications (RiTA), 2020, https://arxiv.org/abs/2012.15008.

- [3] H. Lim, Y. Kim, K. Jung, S. Hu, and H. Myung, “Avoiding degeneracy for monocular visual SLAM with point and line features,” in Proc. IEEE International Conference on Robotics and Automation (ICRA), 2021, pp. 11 675–11 681.

- [4] D. G. Kottas and S. I. Roumeliotis, “Efficient and consistent vision-aided inertial navigation using line observations,” in Proc. IEEE International Conference on Robotics and Automation (ICRA), 2013, pp. 1540–1547.

- [5] X. Kong, W. Wu, L. Zhang, and Y. Wang, “Tightly-coupled stereo visual-inertial navigation using point and line features,” Sensors, vol. 15, no. 6, pp. 12 816–12 833, 2015.

- [6] G. Zhang, J. H. Lee, J. Lim, and I. H. Suh, “Building a 3-D line-based map using stereo SLAM,” IEEE Transactions on Robotics, vol. 31, no. 6, pp. 1364–1377, 2015.

- [7] A. Bartoli and P. Sturm, “Structure-from-motion using lines: Representation, triangulation, and bundle adjustment,” Computer Vision and Image Understanding, vol. 100, no. 3, pp. 416–441, 2005.

- [8] F. Zheng, G. Tsai, Z. Zhang, S. Liu, C.-C. Chu, and H. Hu, “Trifo-VIO: Robust and efficient stereo visual inertial odometry using points and lines,” in Proc. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2018, pp. 3686–3693.

- [9] Y. Yang, P. Geneva, K. Eckenhoff, and G. Huang, “Visual-inertial odometry with point and line features,” in Proc. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2019, pp. 2447–2454.

- [10] A. I. Mourikis and S. I. Roumeliotis, “A multi-state constraint Kalman filter for vision-aided inertial navigation,” in Proc. IEEE International Conference on Robotics and Automation (ICRA), 2007, pp. 3565–3572.

- [11] A. Pumarola, A. Vakhitov, A. Agudo, A. Sanfeliu, and F. Moreno-Noguer, “PL-SLAM: Real-time monocular visual SLAM with points and lines,” in Proc. IEEE International Conference on Robotics and Automation (ICRA), 2017, pp. 4503–4508.

- [12] X. Zuo, X. Xie, Y. Liu, and G. Huang, “Robust visual SLAM with point and line features,” in Proc. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2017, pp. 1775–1782.

- [13] S. J. Lee and S. S. Hwang, “Elaborate monocular point and line SLAM with robust initialization,” in Proc. of the IEEE International Conference on Computer Vision, 2019, pp. 1121–1129.

- [14] R. Mur-Artal, J. M. M. Montiel, and J. D. Tardos, “ORB-SLAM: A versatile and accurate monocular SLAM system,” IEEE Transactions on Robotics, vol. 31, no. 5, pp. 1147–1163, 2015.

- [15] Q. Fu, J. Wang, H. Yu, I. Ali, F. Guo, and H. Zhang, “PL-VINS: Real-time monocular visual-inertial SLAM with point and line,” arXiv preprint arXiv:2009.07462, 2020.

- [16] T. Qin, P. Li, and S. Shen, “VINS-mono: A robust and versatile monocular visual-inertial state estimator,” IEEE Transactions on Robotics, vol. 34, no. 4, pp. 1004–1020, 2018.

- [17] R. Hartley and A. Zisserman, Multiple View Geometry in Computer Vision. Cambridge University Press, 2003.

- [18] T. Sugiura, A. Torii, and M. Okutomi, “3D surface reconstruction from point-and-line cloud,” in Proc. International Conference on 3D Vision, 2015, pp. 264–272.

- [19] H. Zhou, D. Zhou, K. Peng, W. Fan, and Y. Liu, “SLAM-based 3D line reconstruction,” in Proc. World Congress on Intelligent Control and Automation (WCICA), 2018, pp. 1148–1153.

- [20] Y. Yang and G. Huang, “Observability analysis of aided INS with heterogeneous features of points, lines, and planes,” IEEE Transactions on Robotics, vol. 35, no. 6, pp. 1399–1418, 2019.

- [21] A. Ö. Ok, J. D. Wegner, C. Heipke, F. Rottensteiner, U. Sörgel, and V. Toprak, “Accurate reconstruction of near-epipolar line segments from stereo aerial images,” Photogrammetrie-Fernerkundung-Geoinformation, no. 4, pp. 345–358, 2012.

- [22] P. Kim, B. Coltin, and H. J. Kim, “Low-drift visual odometry in structured environments by decoupling rotational and translational motion,” in Proc. IEEE International Conference on Robotics and Automation (ICRA), 2018, pp. 7247–7253.

- [23] ——, “Indoor RGB-D compass from a single line and plane,” in Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018, pp. 4673–4680.

- [24] Y. Li, N. Brasch, Y. Wang, N. Navab, and F. Tombari, “Structure-SLAM: Low-drift monocular SLAM in indoor environments,” IEEE Robotics and Automation Letters, vol. 5, no. 4, pp. 6583–6590, 2020.

- [25] Y. Li, R. Yunus, N. Brasch, N. Navab, and F. Tombari, “RGB-D SLAM with structural regularities,” in Proc. IEEE International Conference on Robotics and Automation (ICRA), 2020, pp. 11 581–11 587.

- [26] R. Yunus, Y. Li, and F. Tombari, “ManhattanSLAM: Robust planar tracking and mapping leveraging mixture of Manhattan frames,” in Proc. IEEE International Conference on Robotics and Automation (ICRA), 2021, pp. 6687–6693.

- [27] H. Zhou, D. Zou, L. Pei, R. Ying, P. Liu, and W. Yu, “StructSLAM: Visual SLAM with building structure lines,” IEEE Transactions on Vehicular Technology, vol. 64, no. 4, pp. 1364–1375, 2015.

- [28] D. Zou, Y. Wu, L. Pei, H. Ling, and W. Yu, “StructVIO: Visual-inertial odometry with structural regularity of man-made environments,” IEEE Transactions on Robotics, vol. 35, no. 4, pp. 999–1013, 2019.

- [29] B. Xu, P. Wang, Y. He, Y. Chen, Y. Chen, and M. Zhou, “Leveraging structural information to improve point line visual-inertial odometry,” in Proc. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2021.

- [30] J. Lee and S.-Y. Park, “PLF-VINS: Real-time monocular visual-inertial SLAM with point-line fusion and parallel-line fusion,” IEEE Robotics and Automation Letters, 2021.

- [31] J. Ma, X. Wang, Y. He, X. Mei, and J. Zhao, “Line-based stereo SLAM by junction matching and vanishing point alignment,” IEEE Access, vol. 7, pp. 181 800–181 811, 2019.

- [32] J. Shi and C. Tomasi, “Good features to track,” in Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 1994, pp. 593–600.

- [33] C. Tomasi and T. Kanade, “Detection and tracking of point features,” International Journal of Computer Vision, vol. 9, pp. 137–154, 1991.

- [34] C. Forster, L. Carlone, F. Dellaert, and D. Scaramuzza, “On-manifold preintegration for real-time visual–inertial odometry,” IEEE Transactions on Robotics, vol. 33, no. 1, pp. 1–21, 2016.

- [35] G. Sibley, L. Matthies, and G. Sukhatme, “Sliding window filter with application to planetary landing,” Journal of Field Robotics, vol. 27, no. 5, pp. 587–608, 2010.

- [36] R. G. Von Gioi, J. Jakubowicz, J.-M. Morel, and G. Randall, “LSD: A fast line segment detector with a false detection control,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 32, no. 4, pp. 722–732, 2008.

- [37] L. Zhang and R. Koch, “An efficient and robust line segment matching approach based on LBD descriptor and pairwise geometric consistency,” Journal of Visual Communication and Image Representation, vol. 24, no. 7, pp. 794–805, 2013.

- [38] R. Toldo and A. Fusiello, “Robust multiple structures estimation with J-linkage,” in Proc. European Conference on Computer Vision (ECCV). Springer, 2008, pp. 537–547.

- [39] P. J. Huber, “Robust estimation of a location parameter,” in Breakthroughs in Statistics. Springer, 1992, pp. 492–518.

- [40] S. Agarwal, K. Mierle, and Others, “Ceres solver,” Accessed on: Sep. 09, 2021. [Online], Available: http://ceres-solver.org.

- [41] Y. Bar-Shalom, X. R. Li, and T. Kirubarajan, Estimation with Applications to Tracking and Navigation: Theory, Algorithms, and Software. John Wiley & Sons, 2004.

- [42] Z. Wang and G. Dissanayake, “Observability analysis of SLAM using Fisher information matrix,” in Proc. IEEE International Conference on Control, Automation, Robotics and Vision (ICARCV), 2008, pp. 1242–1247.

- [43] M. Burri, J. Nikolic, P. Gohl, T. Schneider, J. Rehder, S. Omari, M. W. Achtelik, and R. Siegwart, “The EuRoC micro aerial vehicle datasets,” The International Journal of Robotics Research, vol. 35, no. 10, pp. 1157–1163, 2016.

- [44] Z. Zhang and D. Scaramuzza, “A tutorial on quantitative trajectory evaluation for visual (-inertial) odometry,” in Proc. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2018, pp. 7244–7251.