Utilitarian Welfare Optimization in the Generalized Vertex Coloring Games: An Implication to Venue Selection in Events Planning

Abstract

We consider a general class of multi-agent games in networks, namely the generalized vertex coloring games (G-VCGs), inspired by real-life applications of the venue selection problem in events planning. Certain utility responding to the contemporary coloring assignment will be received by each agent under some particular mechanism, who, striving to maximize his own utility, is restricted to local information thus self-organizing when choosing another color. Our focus is on maximizing some utilitarian-looking welfare objective function concerning the cumulative utilities across the network in a decentralized fashion. Firstly, we investigate on a special class of the G-VCGs, namely Identical Preference VCGs (IP-VCGs) which recovers the rudimentary work by Chaudhuri et al. (2008). We reveal its convergence even under a completely greedy policy and completely synchronous settings, with a stochastic bound on the converging rate provided. Secondly, regarding the general G-VCGs, a greediness-preserved Metropolis-Hasting based policy is proposed for each agent to initiate with the limited information and its optimality under asynchronous settings is proved using theories from the regular perturbed Markov processes. The policy was also empirically witnessed to be robust under independently synchronous settings. Thirdly, in the spirit of “robust coloring”, we include an expected loss term in our objective function to balance between the utilities and robustness. An optimal coloring for this robust welfare optimization would be derived through a second-stage MH-policy driven algorithm. Simulation experiments are given to showcase the efficiency of our proposed strategy.

keywords:

vertex coloring game , Markov Chain methods , the Metropolis-Hasting algorithm , venue selection problem , welfare optimization[label1]organization=School of Physical and Mathematical Sciences, Nanyang Technological University, country=Singapore

1 Introduction

Selecting a right venue is crucial to the success of an event and worth emphasis in events planning. Critical criteria for being “right” may consider the venue’s layout and size, the accessibility, technical requirements, the atmosphere or tone the planner wishes to convey, etc., and often differ across specific activities (Allen et al., 2022). A common challenge confronted in reality is for a group of different event managers to select their preferred locations among a limited number of venues, especially when there exist mutual timetable clashes between events, and a beneficial-to-all venue assignment is always desirable. For instance, multiple clubs on a campus who intend to organize respective events in celebration of certain festival may fail to obtain their respective favorite venue due to the limited number of available function halls. A minimum requirement for any event planning is to guarantee that each event would be placed somewhere without a timetable clash with others, which can be modelled by a classical graph (vertex) coloring problem. Vertex coloring has long been a prominent tool for modelling network problems with multifrious applications including scheduling, channel assignment, text or image segmentation, etc.; see, e.g. a survey by Ahmed (2012). Given a connected graph (network) with and a collection of colors with cardinality , a coloring is a function that assigns each vertex a color . Denote the color assigned to a vertex by . We say that a clash occurs between two vertices and when , . It is desired for many scenarios to obtain a coloring without clash, namely a proper coloring such that whenever . Denote the space of all possible colorings by and the space of all proper colorings by . For the sake of representation, the graph is often translated to a square location matrix where . The nighborhood of a vertex , , is defined as the set .

Concretely during events planning, one may represent different function halls as different colors. Let each club manager be represented by a vertex, then an edge can be linked between any pair whose event schedules clash, which establishes a connected graph (network). The goal is then to look for a proper coloring; i.e. a coloring in which adjacent vertices are associated with distinguished colors. However, classical procedures of solving such a vertex coloring problem often provide few practical insights for event planners due to two drawbacks. One unfavorable shortcoming is that classical vertex colorings always bother a “central agent” to gather and manipulate with the information contained in the entire network (Kearns et al., 2006; Chaudhuri et al., 2008). In real-life planning, this agent with “omniscient” view is most times absent, and each club manager needs to propose and apply for a venue autonomously. Algorithmically speaking, though centralized colorings may converge to a proper one quickly, they require heavy workload or computation, especially when is large, which are most times out of reach in reality. The other limitation is regarding the overlooked real value, or social welfare, generated by the eventual coloring. As mentioned, managers do have varied preferences on different venues, thus beyond the properness of the eventual coloring, the satisfiability of each agent under the coloring is worth further attention.

Bearing the above concerns in mind, in this paper, we keep the agents in the network self-organized and extend the pursuit of a proper coloring to more general objectives. To eliminate any dependence on “central agent”, each vertex is motivated to strive for some target coloring themselves through limited information sharing and mutual negotiations. In other words, instead of being “allocated” a color, each vertex is required to “select” a color autonomously in rounds and solve the possible improper situations themselves through compliant communications. Such a “self-organizing” setting better mimics the real dynamics and interactions occuring in the multi-agent system and converts the original static task to an evolutionary game, namely a Vertex Coloring Game (VCG). Meanwhile, in an attempt to include the group satisfiability into consideration, certain preference mechanism is employed to measure personal satisfiabilities. Analogue to event planners’ customized preferences to different function halls, a preference function is developed by each vertex to measure its preference to the current color in hold, with the total number of colors provided as a constraint. Like in many conventional game-theoretic settings, we are interested in the social welfare across the entire network which is evaluated via some welfare functional defined by which is essentially a function of all individual utilities. Such functions are often referred to as “cardinal” welfare functions and cover a wide range of candidates including utilitarian welfare, minmax welfare, Foster’s welfare, etc. (Keeney and Kirkwood, 1975). Finding a most beneficial venue assignment is thus equivalent to maximizing the social welfare without violating the constraint of a proper coloring, which is summarized in the following constrained welfare optimization problem

| () | ||||

Remark 1

We would like to accentuate that, the optimal coloring to (), , is necessarily a Nash equilibrium. Suppose not, since (by the properness of ), the only way for to obtain better utility is to select another color he prefers more but different from all his neighbots’ choices, otherwise his utility would be reduced to zero. This operation destines an increase in social welfare thus contracting to the fact that is optimal.

Note that the set constraint of proper colorings typically do not enjoy nice geometric structures and the preference functions are completely arbitrary and purely decided by the particular event manager. Consequently, it is often difficult to explicitly control the feasibility of a new coloring when optimizing the objective in Problem . Hence, it is natural to consider some relaxation to somehow decompose and reflect this network constraint to individual agents; i.e. each agent would commit the duty to satisfy the constraint. To achieve this goal, we define a utility function for each agent as

| (1) |

and formulate another optimization problem

| () |

Denote the optimal colorings of () and () by and in respective. In Section 3, we will show that under several conditions.

As mentioned, the information accessible to each event manager is limited and it is extremely expensive for each event manager to collect the latest situations of all other clubs in the entire network. We integrate this consideration of information scarcity into our modelling and restrict vertices’ exposures to two types of “local” information:

-

(Info-Type1)

A vertex can access to the information of his neighbors, including the current utility, the current color assignment and the weight in social welfare.

-

(Info-Type2)

A vertex can access to the information of the members holding the same color, including the current utility, the current color assignment and the weight in social welfare.

Despite its advantages in simulating realistic behaviours, such a VCG with restricted information may be associated with several vulnerabilities. For instance, scarce and unbalanced information can obstruct the convergence to an optimal solution to Problem , and manager’s personal incentives may inconsilient with the global interest. We illuminate three threatening features of the VCGs as follows:

-

(Feature 1)

The agents are greedy: if an agent is offered an alternative color that improves his utility, he would have a high incentive to embrace it; otherwise, when confronted with a color he disdains, he would most times reject on the assignment.

-

(Feature 2)

The agents are uncomplacent: The agents are not shiftless but always active to try some other color, even if he is not aware of whether a better choice exists.

-

(Feature 3)

The agents are myopic. Since each agent is only informed of the current situation without presaging capabilities, he can hardly realize that a temporary sacrifice on the utility may result in better personal outcomes in later rounds.

In each case, the optimization procedure regarding Problem () would be affected or even undermined. For instance, the game is likely to fall stuck when any agent occupies a certain color regardless of his neighbor’s benefits, or constantly rejects on taking any color he dislikes. The search space thus severely shrinks and the optimal solution may become inattainable. Fortunately, we will show in later sections that, under mild conditions and some proper strategy, the convergence to an optimal or suboptimal solution for Problem () is immuned to the above features.

Throughout our following discussions, the games are most modeled by discrete-time Markov Chains (MCs) with carefully designed transition matrices. This is because of the stochastic nature embedded in our game-theoretic setting, and the fact that the G-VCG obeys the Markov property: evolution of the game is memoryless and would only be determined by the present state. The corresponding state space would be the space of all possible colorings. Techniques and methods like the first-step analysis and Markov Chain Monte Carlo (MCMC) would be employed for mathematical derivations and algorithm designs.

To summarize, the main contributions of this paper are as follows.

-

(1)

We extend the goal of finding a proper coloring (a feasibility problem) to finding some proper max-welfare coloring solution (a constrained optimization problem) through information restrictive VCGs as expatiated in Problem and Problem , which better caters for the goal for venue selection in event planning.

-

(2)

We investigate on a special class of G-VCGs where agents are indifferent about the color assigned but only concern possible clashes, namely the Identical Preference VCGs (IP-VCGs). As a continuation to the work led by Chaudhuri et al. (2008), we further propose and prove a stochastic upper bound of for the convergence time to the optimal coloring, under mild assumptions.

-

(3)

Novel discussions are given on broad G-VCGs where an agent’s personal utility is contingent both on whether he is clash-free and the specific color assigned to him. Though a completely greedy policy no longer leads to optimality, we propose and show that a Metropolis-Hasting based policy that, while respecting agents’ feature of being greedy, help the self-organizing network move towards a optimal coloring in asynchronous settings and a special class of independently synchronous settings. Theories from the regular perturbed Markov Process (RPMP) are employed in proofs.

-

(4)

In a spirit of “robust coloring”, we further integrate into our formulation an extra term representing the expected loss from possible complementary edge connection, to increase the robustness of the optimal coloring in the sense firstly mentioned in yanez2003robust). To our knowledge, this is the first attempt to interact the objective of robust coloring with another optimization scheme such that the welfare and the risk are well balanced. A second-stage Metropolis-Hasting based algorithm taking the optimal solution to as input is presented to solve RWO.

2 Literature Review

2.1 Network Coloring Game for Conflict Resolving

The concept of the self-organized VCG was informally proposed in a behavioural study reported in Kearns et al. (2006), which was motivated by a self-organizing venue assignment problem among the faculty members. With the same aim of resolving conflicts (i.e. achieving some proper coloring), experiments were conducted against different network topologies and with variations to the extent of limits on information sharing among the agents. The conflict-resolving status and time were well monitored and compared across networks with distinguished features. In Chaudhuri et al. (2008), such social interactions within a network was first formulated as a game with binary payoffs, and theoretical results were derived through ingenious combinatorial arguments. It was shown that, even the agents would adopt completely greedy strategies and are allowed to act simultaneously, a proper coloring is attainable when available colors are two more in number than the maximum degree of the graph, and the procedure ends in rounds with probability . Almost at the same time, an independent work led by Panagopoulou and Spirakis (2008) studied a similar problem with a slightly different payoff scheme from a more game-theoretic perception. The authors showed that every Nash equilibrium of the corresponding VCG is feasible and locally optimal, and a characterization of the Nash equilibria was provided.

The two pioneering works attracted numerous attention to the field of decentralized coloring game. One branch of work focuses on customized results when the VCG is restricted to certain special graph structures (see, e.g., (Enemark, McCubbins, Paturi and Weller, 2011)). Another popular ramification is induced by the flexible game settings, both on game types ((Pelekis and Schauer, 2013; Carosi, Fioravanti, Gualà and Monaco, 2019)) and payoff features ((Kliemann, Sheykhdarabadi and Srivastav, 2015)) and employed strategies ((Hernández and Blum, 2012)). Of course, constant efforts have also been paid on improving the upper bounds for the convergence rate thus the complexity of the VCG (Bermond, Chaintreau, Ducoffe and Mazauric, 2019) or reduing the color supply (Fryganiotis, Papavassiliou and Pelekis, 2023). The idea of the VCG also shed light on many engineering problem-solvings; see, e.g. (Goonewardena, Akbari, Ajib and Elbiaze, 2014; Marden and Wierman, 2013; Touhiduzzaman, Hahn and Srivastava, 2018).

2.2 The Use of Markov Chains in Graph Coloring

A Markov chain is a stochastic process that models a sequence of random events in which the probability of each event depends only on the state of the system at the previous event. It has been widely used in graph coloring, which is NP-hard, due to its advantage in handling large-scale search in a systematic and efficient manner. One of the earliest Markov chain algorithms for graph coloring was proposed by Kirkpatrick, Gelatt Jr and Vecchi (1983) where the transition probability depends on the difference in the number of conflicting edges between the two colorings. The algorithm proved to be effective on small to medium-sized graphs had difficulty with larger graphs. In recent years, there have been several other Markov chain algorithms proposed for graph coloring, such as the Genetic Algorithm (Fleurent and Ferland, 1996; Hindi and Yampolskiy, 2012; Marappan and Sethumadhavan, 2013), Ant Colony Optimization (Salari and Eshghi, 2005; Dowsland and Thompson, 2008), and Particle Swarm Optimization (Cui, Qin, Liu, Wang, Zhang and Cao, 2008; Marappan and Sethumadhavan, 2021). Promising results have been shown on a wide range of graph coloring problems.

Markov chains have also been applied for color samplings. Aiming to approximately counting the number of the k-colorings in a graph, Jerrum (1995) converted this counting problem to estimating the mixing time of a Markov Chain. Inspired by the term of Glauber Dynamic in statistical physics, he presented an approach to ramdomly sampling colorings with maximum degree in time with at least colors provided. Vigoda (2000) later proved that it suffices for the number of colors to be only for the bound. Further results were developed for some graphs with special features (see, e.g., (Hayes and Vigoda, 2006; Hayes, Vera and Vigoda, 2007)). Recently, Vigoda’s result on general graphs was improved by Chen, Delcourt, Moitra, Perarnau and Postle (2019) who proved that the chains are rapidly mixing in time when there are at least colors where is a small positive constant, using the linear programming approach.

2.3 The Metropolis-Hasting Algorithm for Optimization

The Metropolis-Hastings algorithm is a versatile algorithm for solving complex optimization problems that was first introduced in a seminal paper by Metropolis et al. (1953) and further modified by Hastings (1970) who included a correction factor to ensure detailed balance. It relies on constructing a Markov chain that has the desired target distribution as its stationary distribution, from which it generates a sequence of samples. The algorithm has since been adapted for engineering applications emerging in various fields, including but not limited to signal processing (Luengo and Martino, 2013; Vu, Vo and Evans, 2014; Marnissi, Chouzenoux, Benazza-Benyahia and Pesquet, 2020), self-reconfiguration systems (Pickem, Egerstedt and Shamma, 2015), task allocation (Hamza, Toonsi and Shamma, 2021; Moayedikia, Ghaderi and Yeoh, 2020), etc.

A snapshot of the algorithm is as follows: In a finite-state space , denote the target distribution by where is the variable ot interest. As an initialization one constructs a starting state and an irreducible proposal transition matrix to generate a new state from . The transition probability is then multiplied by an acceptance rate

and the updated transition probability, namely the target transition probability, is

Note that the target transition probability satisfies the local balanced equation

thus the target distribution o is exactly a stationary distribution of the Markov process induced by . The uniqueness of the stationary distribution is given by irreducibility of the chain.

3 Game Formulation

3.1 Setting

Let us first introduce the notations and terminologies that are used throughout this paper. Notations of the graph structure and the color collection follow from Section 1, and the maximum degree of the graph is denoted by . We require where is the chromatic number of G; i.e. the least number of colors that enables a proper coloring. It is denoted by if the information of can be shared to , and ’s family is defined as the set . Let (k = 1, 2, …, ) be the coloring realizations. By adding a subscript we specify the particular color assigned to ; e.g. specifies the assignment under the coloring . When there is a change in coloring, say from to , let and denote the difference in ’s utility and the welfare respectively under the two colorings. To emphasize on the color choice of a particular agent, we sometimes specify as .

We say an agent is active in round if he wishes to update his color choice. Starting from any coloring , the game continues in discrete rounds. Let denote the color assigned to in round and denote the active set containing all active agents in round . In each round, every active agent, driven by either (Feature 1) or (Feature 2), would attempt to update his color from certain available color set (or stategy set) under some policy, while the inactive ones keep their colors unchanged. This induces a discrete-time Markov Chain on the coloring space with its transition matrix determined by the policy. A formal definition of the Generalized Vertex Coloring Game (G-VCG) is given as follows:

Definition 1 (G-VCG)

A G-VCG is a quadruplet where:

-

–

: the set of agents (vertices).

-

–

: the connected network constraining agents’ information, which is apriori to agents.

-

–

: the set of available colors (pure strategies) for .

-

–

: the set of coloring profiles.

-

–

: the set of utility functions.

In the following sections, there will be discussions and experiments of G-VCGs on both asynchronous and synchronous conditions. A discrete game is said to be asynchronous if at most one agent is active in each round, while synchrony allows multiple active agents to update in the same round. In this paper, two particular types of synchrony, namely independent synchrony and complete synchrony, will be discussed.

Definition 2 (Independent and completely synchronous G-VCGs)

A G-VCG is said to be independently synchronous if in each round of a G-VCG , the probability that becomes active, denoted by , is identical across . A completely synchronous game has all agents be active in each round; i.e. .

3.2 Essential Assumptions

In this part of the section, we make several essential assumptions, most of which are to be assumed thoughout the paper unless mentioned otherwise.

Focusing on maximizing the total utility of the network with little regard to the utility distribution, we now make an additional assumption that the welfare function is utilitarian or weighted utilitarian where the weights are priori information to the agents. In later sections we will see that such welfare formulas would enable individual agents to evaluate their contributions to the total welfare by selecting a new color, even without refering to the entire network.

Assumption 1 (Utilitarian/Weighted-Utilitarian Welfare)

The welfare function is defined by

where and .

As mentioned in Section 1, our main concern is whether the optimal solutions of () coincide with the ones of (). It is straitforward that if is a proper coloring, then ; otherwise would be a better solution for (). Yet, whether is proper highly depends on the abundance of colors provided. We make another assumption on the relationship between the caridinality of the color set and the maximum degree of the graph.

Assumption 2 (Abundant Colors)

The number of colors provided is at least one more than the maximum degree of the graph; i.e.

Assumption 2 guarantees to be proper because each vertex always has a color that is distinguished from the neighborhood; i.e.

| (2) |

To show that Assumption 2 is the weakest to ensure the properness of in whatever graph structures, we would like to give a counterexample when cases with thus may fail.

Example 1 ( is insufficient)

Consider the network structure in Figure 2 where each vertex has equal weight in contribution to the social welfare. Suppose so that . The preferences of each vertex on each color are given in Table 1. One can easily observe that the optimal coloring is as in Figure 2 where , and all obtain their top-preferred colors, and the optimal welfare is

In this case, and compromises to bear in a clash whatever color he selects, therefore is not proper.

| Red | 1 | 1 | 1 | 1 |

|---|---|---|---|---|

| Green | 1 | 1 | 10 | 1 |

| Blue | 1 | 1 | 1 | 10 |

Moreover, special attention should be given to the step when the active agents modify their color choices. If not well regulated, the game would easily become vulnerable and two typical jeopardies include:

-

(i)

Small search space: Due to (Feature 1), every agent would incline to top-preferred colors and is loath to adopt other ones. This would severely reduce the search space of the process thus the optimal coloring is often attainable.

-

(ii)

Deadlock: Due to (Feature 3) and (Feature 1), an agent is not aware that sacrifice would bring “win-win” outcomes for both himself and the neighbors later. This would result in deadlocks in network, especially when the preference systems differ across the neighbors, when imprudent agents pursues temporary self-seeking colors while impairing the neighborhood’s progress.

In reality, one common approach to avoid these emotional behaviors is to involve in randomness (e.g. by lottery). We make an analogical assumption here yet still respect agents’ acceptance or rejection on the new color.

Assumption 3 (Random Color Update with Policy Acceptance)

We assume the following rules during the color updates of active agents in each round:

-

(R1)

An active agent, say , would first randomly select a color from a color set . Let in each round unless mentioned otherwise.

-

(R2)

After given , is given a transient probation period, during which he is only aware of and possible influences to his family members. Utilities under other unchosen colors are not accessible.

-

(R3)

Based on the possible utility, a decision of acceptance (update the new color) or rejection (keep the old color) would be made by under some policy.

When making a decision, the latest information that an active agent can refer to is the current situations of the family members (which are obtained in the last round); i.e. the active agent assumes when deciding whether to accept the offer. The completely greedy policy is defined as follows:

Definition 3 (Completely Greedy Policy)

A completely greedy policy accepts whatever colors leading to better utility and rejects any color implying a worse or identically bad transcient utility; i.e. given active in round and a new color ,

| (CGP) |

Here is a brief summary on the game procedure:

-

(i)

Before round 0, an initial coloring is assigned to the network . Each agent calculates his utility by evaluating and detect clashes in his family.

-

(ii)

In round , each element of pursues a new color by randomly sampling a color . The inactive agents remain their colors in the previous rounds; i.e. for .

-

(iii)

Each decides whether to accept under certain acceptance policy. If is accepted, ; otherwise . The round t is finished and the game steps to round .

4 Identical Preference VCGs

In this section, we focus on a particular category of the G-VCGs called Identical Preference VCGs (IP-VCGs), whose definition is given as below.

Definition 4 (IP-VCGs)

A G-VCG is an IP-VCG if each vertex lays identical preference on every color; i.e.

where is a constant. In other words, for each , his utility function is indifferent about the specific color assigned. In reality, such situations are frequently witnessed when event managers are in a rush such that their goal is to confirm a feasible venue regardless of other preferences.

Note that the social welfare of an IP-VCG is

where the equality holds if and only if for . Therefore, solving Problem () for IP-VCGs is equivalent to finding a proper coloring which recovers the binary-payoff setting in previous works (see, e.g. Kearns et al. (2006); Chaudhuri et al. (2008)). Thus, in the rest of this section, we restrict our attention to the simplified problem with utility

| (3) |

4.1 Convergence of Completely Synchronous IP-VCGs to Optimality under the CGP

We examine in this subsection whether the convergence of an IP-VCG to its optimal coloring under CGP which is often driven by (Feature 1). As mentioned, it suffices to answer this question: can a proper coloring be achieved in a game with binary utilities (3) under CGP? It is obvious that an active agent with zero utility would accept any color unused by his neighbor to avoid clashes, and those agents with utility one, though can still be active, would never accept any new color because they have already attained their best personal utilities. Under our Assumption 2, it is straightforward that asynchronous IP-VCGs will converge to an optimal coloring in finite rounds since there are always extra colors different from all neighbors’ for each agent to select, and the probability of such a selection is positive. In general, analysis on an arbitrary synchronous G-VCG could be quixotic for tremendous randomness and the optimality may not be guaranteed. However, we observe that the extreme setting of complete synchrony brings peculiar properties which are worth an extra attention. As such, in the rest of the section, we concentrate our arguments on the completely synchronous cases.

We would like to point out that a similar problem was discussed in the seminal work Chaudhuri et al. (2008) which also concerned about the binary utility VCG. The main difference of their setting is that, unlike in Assumption 3 where we assume , they restricted the available color set for each agent to be the colors currently unused by his neighbors; i.e. . Though could be more efficient, their setting suffers from a severely reduced search space because state transfers are often only allowed in one direction, which impairs possible generalizations. Besides, their setting requires a stronger assumption than our Assumption 2 that the number of colors must be at least two more than the maximum degree. Notice that the counterexample they gave on a failure of convergence when , however, can be solved in our setting for better color availability. We place their example here for the sake of completeness and better understanding.

Example 2 (Theorem 2 in Chaudhuri et al. (2008))

Consider being a cycle with five vertices thus . Given 3 colors, say , and , and suppose that the initial configuration is . In the scenario when all agents with zero utility are active and obtain colors unused by neighbors, and will always be active yet keep clashing with each other, thus a proper coloring would never be reached. Nevertheless, under our setting and Assumption 3, this conflict can be easily resolved; e.g. when selects the while does not, the configuration becomes .

Before stepping further, we first introduce a special type of Markov Chain, namely Absorbing Markov Chain (AMC), which will be our main tool of proving the convergence.

Definition 5 (Absorbing Markov Chain)

Given a Markov Chain , a state is called absorbing if , , otherwise is called transient. The chain is defined to be an absorbing Markov Chain (AMC) if there exists at least one absorbing state that is accessible from any transient state.

In the context of a 0-1 VCG, which is a simplification of IP-VCGs, one may use a list with binary digits to represent the utility of players after each round. Such utility lists have a length of with each element representing the utility of a vertex, and there are possible outcomes. Additionally, since it is impossible for all but one players to have utility equal to 1, the total number of possible cases is reduced by . Let denote the utility space consisting of these utility lists on which a Markov Chain can be run. The transition probability reflects the likelihood of a change in utility. The following Lemma 1 states that is an AMC.

Lemma 1 (AMC on Utility Space)

Remark 2

Note that always jumps from a low-welfare state to a high-welfare state but never in the reverse direction, because agents with utility one would never accept a new color thus remains his utility forever under the completely greedy policy.

A classical property of an AMC is that it will be absorbed eventually, which means the convergence to a proper coloring in our case.

Theorem 2 (Convergence to Optimality)

4.2 Stochastic Boundedness on the Time to Convergence of Completely Synchronous IP-VCGs

Given an optimal convergence of IP-VCGs, we are interested in the time to convergence with complexity measurements. In their setting of , Chaudhuri et al. (2008) proposed and proved that the game ends in rounds with probability at least when . They took advantage of an important property that any zero-utility agent could earn a utility rise in two consecutive rounds with a probability above some positive constant. It supprisingly proves that this property extends to the completely synchronous 0-1 IP-VCGs thus to all IP-VCGs under CGP. This is given as Theorem 3 below and the proof is an anlogue to Lemma 3 in Chaudhuri et al. (2008) with subtle adjustments.

Theorem 3 (Individual Utility Rise in Two Consecutive Rounds)

Let Assumptions 1, 2 and 3 hold. Consider a completely synchronous IP-VCG under CGP with utility functions 3. Suppose has a clash in round thus , then with at least a constant probability, he would become clash-free and obtain a positive utility after two rounds; i.e.

where is a positive constant number.

The constant lower bound, though relatively small, is useful because it provides some deterministic information in a stochastic self-organizing game scenario. More importantly in our discussions, the constant probability for a utility rise in two consecutive rounds sheds light on the behavior of the Markov Chain as defined in Theorem 1, besides the upshot of being absorbed. The following lemma demonstrates respective bounds on the expectation and variance of the number of rounds to convergence.

Lemma 4 (Bounded Expectation and Variance for Time to Convergence)

Proof of Lemma 4 4

See Appendix B.2. Here is a sketch for our proof: Again we focus on the representative candidate of IP-VCGs with binary utility functions 3. To most utilize the information given in Theorem 3, we generate to jump two steps at a time and then double the number of steps. We also pay special attention to the first migration of and conduct the so-called “first-step analysis” to derive out certain induction relationship on the expected step between different initial states.

We are now ready to state the main theorem in this section, in which we show the stochastic boundedness of , the time (i.e. number of steps) to convergence.

Theorem 5 (Stochastic Boundedness of the Time to Convergence)

Let Assumptions 1, 2 and 3 hold. Consider a completely synchronous IP-VCG under CGP associated with a Markov Chain as defined in Theorem 1. Let denote the number of steps needed for to converge in an optimal coloring . Then is ; i.e. is bounded in probability as

Moreover, can be taken as a constant independent of when is fixed.

5 A Metropolis-Hasting Based Optimal Policy for G-VCGs

In this section, we wish to examine the likelihood for the self-organizing G-VCGs in more general settings to attain its optimal coloring, possibly with some carefully-designed acceptance policy. A rudimentary scrutiny would reveal that the completely greedy policy CGP would no longer guarantee a convergence to optimality as a consequence of (Feature 1) and (Feature 3). Below is a simple example.

Example 3

Consider the graph in Figure 3 and the color preference matrix in Table 2. Assumption 2 would be satisfied when three colors are provided, namely . Given the initial color assignment to be . The optimal coloring would be . However, under CGP, would never accept another color because for . Consequently, and would never accept when active because, for example, for . The game thus never converges to its optimality.

| Red | 1 | 1 | 1 |

|---|---|---|---|

| Green | 10 | 10 | 10 |

| Blue | 1 | 2 | 1 |

Therefore, it is necessary for the agent temporarily with high utility to be a bit more “altruistic” to avoid such standstills.

Among literatures on solving the agent-based evolutionary optimization procedure, a similar network setting was witnessed in Marden and Shamma (2012) where a policy based on “log-linear learning” was evaluated. Nevertheless, this approach does not apply to G-VCGs because they assumed

-

(i)

The game is potential; i.e. . This is not true in our setting as a modification on an agent’s choice would bring not only a difference to his utility but also to his neighbors’.

- (ii)

Alternatively, inspired by the Metropolis-Hasting algorithm as reviewed in 2.3, we propose another delicate policy adpative to our setting of G-VCGs with sound converging behaviours. We will call it MH-Policy and details are given in Section 5.2. We would like to mention that the spirit has been informally applied to task allocation of robots in Hamza et al. (2021). Yet, there lacked a mathematical discussion, from agents’ standpoints, on whether the policy violates agents’ incentives (e.g. (Feature 1) and (Feature 3)); otherwise the self-organizing agents woudl be reluctant to follow the rule. The gap is then filled in this section. Note that instead of the explicit stationary distribution, our focus is on the support of its stationary distribution, namely the stochastically stable states.

5.1 Detour: Regular Perturbed Markov Processes and Resistance Tree

It is acknowledged that, despite the mentioned limitations, we still gain parts of our insights from the work led by Marden and Shamma (2012). Specifically, this detour section introduces some preliminary terminologies and results from Young (1993) that will be invoked in the proofs of our later results. We first give the definition of a regular perturbed Markov process (RPMP).

Definition 6 (Regular Perturbed Markov Process)

Let be a finite-state Markov chain over the finite-state space with a transition matrix . We refer to it as an unperturbed process. Consider a perturbed process such that the extent of perturbation can be indexed by a scalar , and let be the associated transition matrix. Then is defined to be a regular perturbed Markov process (RPMP) if the following conditions are satisfied:

-

(i)

is irreducible, .

-

(ii)

, .

-

(iii)

for some .

where in (iii) is some nonnegative real number and is referred to as the resistance of the transition .

Remark 3

We would like to remark that since irreducible, a RPMP on a finite-state space has a unique stationary distribution, namely , which is uniquely determined by the balance equation . See, e.g., Rosenthal (2006) for details.

Another important concept we would like to review here is the construction of a resistance tree with some associated stochastic potential.

Definition 7 (Resistance Tree and Stochastic Potential)

Given the state space in Definition 6, we construct a complete graph (i.e. a graph with an edge between any pair of its vertices) with vertices, each denoted as for . For any directed edge , we assign on it a weight of where is the corresponding resistance. A directed path with length is a sequence of joint directed edges and the restistence of the path is defined as . Let be a tree containing all vertices and rooted at the vertex , such that there exists a unique directed path from any . The resistance of is defined as

Among for , there exist (s) having minimum resistance . is then referred to as stochastic potential of state .

The above terminologies will play key roles in our later proofs, via a powerful theorem lemma from Young (1993).

Theorem 6 (Characterization for Stochastically Stable States)

Consider an RPMP with a transition matrix defined on the state space . The stochastically stable states are exactly .

5.2 The MH-Policy in Asynchronous G-VCGs

We start from the easiest setting where agents become active and update their colors one by one. A Markov chain is established on the coloring space with each coloring as a state. The elements of the transition matrix correspond to the probability of a direct transition between the two states. Our aim is to adjust the transition probabilities of the chain (i.e. the acceptance probability of an active agent) so that stochastically stable states only contain ’s.

We would like to explain on some possible concerns regarding the policy before stepping towards the details in the MH-policy. In the policy, one may question that (Info-Type2) seems useless for individual decision-making. Indeed, without a loss of generality, we would restrict our consideration only on (Info-Type1) (i.e. assume (Info-Type2) is not inaccessible) for the sake of simplicity in the rest of this section, which equalizes the neighborhood with the family . However, we are to show in Section 6 on why (Info-Type2) is important for some special purposes of the G-VCGs and how it can be tractably integrated in our formulations.

The proposed MH-policy is now presented. In order to maximize the objective value in , as in the Metropolis-Hasting algorithm, we wish the acceptance probability for each active agent given the selected color to be

| (4) |

to satisfy the local blanced equation for the stationary distribution , where

is a Boltzmann distribution (McQuarrie, 2000) and is some temperature parameter. Here we omit the terms of the proposal probability because all proposal probabilities equal in a randomized selection. Notice that, as one decreases the temperature , the target distribution only puts weights on the state(s) where the social welfare is maximized.

The welfare difference in the acceptance ratio (4) cannot be directly reflected from individual’s utility difference as a modification on an agent’s choice would likely affect his neighbors’ utilities as well. Yet, we notice that, a clever way for an active agent to keep aware of the total difference in the entire network is to monitor the imminent local utility difference across his family before he accepts or rejects. This is because an agent choice can affect the utility of nobody other than his neighbors , and we have unified and to be equivalent. Formally, when is the unique active agent in the game, it holds that

| (5) |

where and , and the term on the righth-and side is defined as

| (6) |

Using the terminology in a foundational work Monderer and Shapley (1996), there exists a potential relationship between and . The procedure of realizing the MH-policy is summarized in the pseudo-code Algorithm 1 in the asynchronous version.

Before moving on to prove the optimality of Algorithm 1, we would like to demonstrate why the MH-policy can be appealing to agents; in other words, it respects the human nature of (Feature 1) and (Feature 3). This is because when updating under the MH-policy:

-

(i)

An active agent will accept whenever his own utility increases: if and then since ’s zero-utility neighbors may also have the clash resolved; else, if and , then as the neighbors’ utilities are unaffected. In both cases, .

-

(ii)

An active agent is not obliged to give up on his old color whenever his own utility decreases: if and then since induces color clashes between and some neighbors whose utility would immediately drop to zero; else, if and , then as the neighbors’ utilities are unaffected. In both cases, .

-

(iii)

The more ’s utility would drop, the more likely he would keep his old color. The arguments are the same as (ii) along with the fact that the worst utility is zero.

We now step to prove that the MH-policy indeed leads to . To do so we first state that the Markov Chain induced by the MH-policy is in fact an RPMP.

Lemma 7 (MH-Policy Induces RPMP)

We are now able to invoke properties of RPMP to investigate on the support of the stationary distribution , i.e. the set of stochastically stable states, which only contains states leading to the global optimal welfare rather than a local optimum. Besides, it turns out that we can establish relationship between the resistances and the final welfares. Equipped with these tools, we substantiate that the MH-policy indeed leads the game to .

Theorem 8 (The MH-Policy is Optimal)

5.3 The MH-Policy in Independently Synchronous G-VCGs

We turn to the more general synchronous setting where agents may become active simultaneously. As already mentioned in Section 4, an arbitrary synchronous G-VCG is extremely difficult to trace and optimality may be no longer guaranteed. However, inspired by Marden and Shamma (2012), we show in this section that when restricted to a collection of independently synchronic G-VCGs, the optimality of the MH-policy is still preserved when the probability of each agent being active is small enough.

Analogue to our discussions in Section 5.2, the following Lemma 9 indicates that the MH-policy in independent synchronous settings again induces some RPMP with different resistances of state transitions.

Lemma 9 (MH-Policy Induces RPMP with independent synchrony)

Let Assumptions 1, 2 and 3 hold. Consider an independently synchronous G-VCG with an activation parameter . Define . Then the MH-policy induces an RPMP and the unperturbed process only accesses to the colorings bringing in better welfares. The corresponding resistance of a state transition is

where .

It remains to check whether a stochastically stable state corresponds to a maximum welfare value. Unfortunately, this is not always the case as a G-VCG would be likely to get stuck in some local maximum state. Nevertheless, in the next Theorem 10, we will show the optimality of the MH-policy is preserved when the activation probability is small enough when is constant.

Theorem 10 (Optimality of the MH-Policy with low- independent synchrony)

Remark 4

Theorem 10 serves as a theoretical gurantee for the asymptotic behavior of the RPMP induced by MH policy, yet would be impractical to observe as becomes extremely small when . However, empirical evidence from experiments presented in Section 7 show that the MH policy still performs well for independently synchronous cases with some constant . Any relaxation on the assumption made in Theorem 10 would be left to future work.

6 Robust Welfare Optimization for G-VCGs

In previous sections, we exhaust the information of (Info-Type1) for agents’ decision-making while (Info-Type2) is not fully employed. In this section, we would convince the readers on the importance of the latter in real-life events planning and how it can help with some welfare optimization in G-VCGs for additional purposes, namely robust welfare optimization (RWO). Besides the factors introduced above, another key consideration in venue selection of events planning is the time between consecutive events conducted in the same venue. If two events arranged in the same venue have their schedules close to each other, the second one would take higher risk of being affected by a possible overrun of the previous one. Therefore, an ideal welfare function should impose penalties for colorings where two inadjacent vertices highly likely to be linked are share the same colors. The probability of the presence of an complementary edge between vertices and can be estimated by the below formula proposed by Lim and Wang (2005)

| (7) |

where is the minimum event transfer time, and are the starting and ending time for the two events, and is some predefined constant. In the rest of this section, we assume such probabilities are public information in each family.

The idea of RWO comes from the concept of robust coloring problem (RCP) firstly proposed in yanez2003robust and soon gained numerous popularities; see, e.g., Lim and Wang (2005); Wang and Xu (2013); Archetti, Bianchessi and Hertz (2014). All these literatures analyzed the robust coloring as a separate optimization problem while, to our knowledge, have not considered its iteraction with other objectives. We therefore fill the gap to include in our discussion the robust welfare optimization as an “extension” to the baseline and , thus control the social welfare with more influencing factors in the network. By “extension”, we will explain later that would be an input of our algorithm to solve RWO which should have been figured out in Algorithm 1; i.e. one can interpret RWO as a second-stage problem.

6.1 Motivation and Formulation

We would like to give a brief overview on the main idea behind the RCP before formally introducing our RWO problem. Besides requiring the coloring to be proper as classical graph coloring does, a robust coloring takes into account the complementary edges in a network. Given each complementary edge a probability to be connected, the objective is to fathom out a most “robust” coloring in a sense that the probability for the properness to be destroyed by connecting one or more complementary edges is minimized. The number of colors is fixed as a constraint. An RCP is formulated as follows:

| (RCP) | ||||

where is the probability for a complementary to be connected.

The literature works as mentioned explored multifarious applications which can be modelled by RCP, which simply assumed an equal “damage” brought by any new pair-connection, to our knowledge. Note that the formulation of RCP reflects on this assumption by giving each pair-connection a damage of 1, without a loss of generality. This is, however, not true in our setting of G-VCGs with utility variations, since the amount of welfare can be affected differently when a different complementary edge is connected. Once a complementary edge is connected between two vertices in the same color, the utilities of both get eliminated from the total social welfare as a new clash occurs. Indeed in reality, robustness is most times not the only pursuit and there is always a trade-off between welfare and risk (i.e. decreasing robustness). To integrate the consideration of such robustness into our welfare optimization problem, we add in an “expected loss” term to be minimized. This is inspired by the spirit of the general stochastic optimization to quantify the average uncertainty. We formulate our RWO problem to be

| (RWO) | ||||

Suppose each complementary edge has a probability to be connected. Denote to be the set of new-connected complementary edges. Let be the set of complementary edges taking as an endpoint and with endpoints’ colors identical . It is important to note that in RWO is not a simple summation of the utility loss in both endpoints from a pair-connection, otherwise the losses would be counted repeatedly. For example, after the utility of is eliminated due to a new connected clash, another complementary edge with him as an endpoint would no longer reduce its utility. Instead, we may express as a weighted sum of random variables defined by

thus

| (8) |

6.2 The MH-Policy for RWO

In this subsection, we again employ the Metropolis-Hasting principle to solve RWO in a decentralized fashion. A key difference to the MH-policy in Section 5 is that the family structure is dynamic when (Info-Type2) is involved. Note that the proposed MH-policy for RWO is continued as an extension to solving , thus taking the output of Algorithm 1 as input.

Assumption 4

Remark 5

We would like to clarify on some possible doubts regarding our design:

-

(i)

Note that Assumption 4 is not a loss of generality. An equivalent approach is to work with Assumption 3 and set the loss for an improper coloring to be so that the game never accepts improper colorings if starting from which is proper. In other words, instead of a change in the general setting, Assumption 4 merely allows an acceleration compared to Assumption 3.

-

(ii)

The reason why we start our algorithm from rather than thoroughly starting from the very beginning arbitrary coloring is to guarantee the properness of our optimal coloring. Note that if we use the relaxation in in solving RWO, the optimal coloring may not be proper; for example, when a vertex’s utility is less than the possible loss that might be triggered. Starting from along with Assumption 4 makes sure the game keeps running on proper colorings.

As in Section 5, we would first consider asynchronous cases for solving RWO. Denote by the loss occurs in a set of vertices under a coloring . When the coloring is changed from to in round where and , the difference in the total welfare would be

| (9) | ||||

where is defined as in 6 and

| (10) |

The second equality in (9) holds because a color change in will only affect the utilities of his neighbors sharing (Info-Type1) and only affect the underlying loss of his non-neighboring family members sharing (Info-Type2), and by Assumption 4. Since the probability terms in (8) can be expressed using by

| (11) | ||||

and vertices other than keep their colors unchanged, we can decompose 10 as

| (12) | ||||

Notice that the terms included in 12 are all accessible to during his color updating procedure, since losses can only be triggered by vertices in the same color which are all included in the family. Therefore, 9 can be calculated by each decentralized active agent. The pseudo-code for realizing the MH policy for RWO is provided in Algorithm 2.

Remark 6

Note that the probability of a connection in any complementary edge whose both endpoints share the same color is known to the corresponding vertices, since it belongs to (Info-Type2).

The optimality of the MH-policy for RWO in either asynchronous and independently synchronous settings can be again substantiated using theories of RPMP. Arguments are similar to the ones in Section 5 though associated with some other resistance with a minor modification, and we will not repeat the procedure here.

7 Simulation Experiments

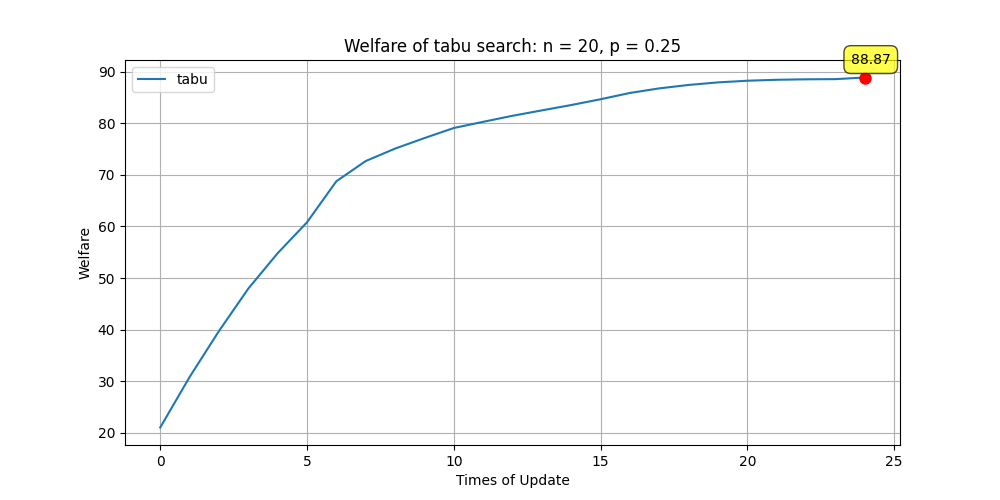

We examine the effectiveness of the proposed MH-policies for G-VCGs in this section. The probabilitsitic network stuctures were constructed using the Erdős–Rényi model provided by the networkx package in Python with certain connection probabilities. Meanwhile, we randomly generated preferences from for each vertex as well as weights from which was further normalized to sum up to 1. We monitor the dynamics induced by the networks with different sizes, different connection probabilities (thus different ) and different temperature reduction schemes. We also checked on the effects of varied activation parameters in synchronous settings to see how synchrony affects the efficiency of the MH-policy driven algorithms. For comparison purposes, we also implemented a tabu search (TS) algorithm adapted from Lim and Wang (2005) as an aid to determine whether the obtained welfares are optimal (or, at least, sub-optimal), of which the details are given in Algorithm 3 in Section A.

| Temperature Scheme | Formula | |

|---|---|---|

| constant | 0.01 | |

| exponential multiplicative | 10 | |

| logarithmical | 0.1 | |

| trigonometric additive | 10 |

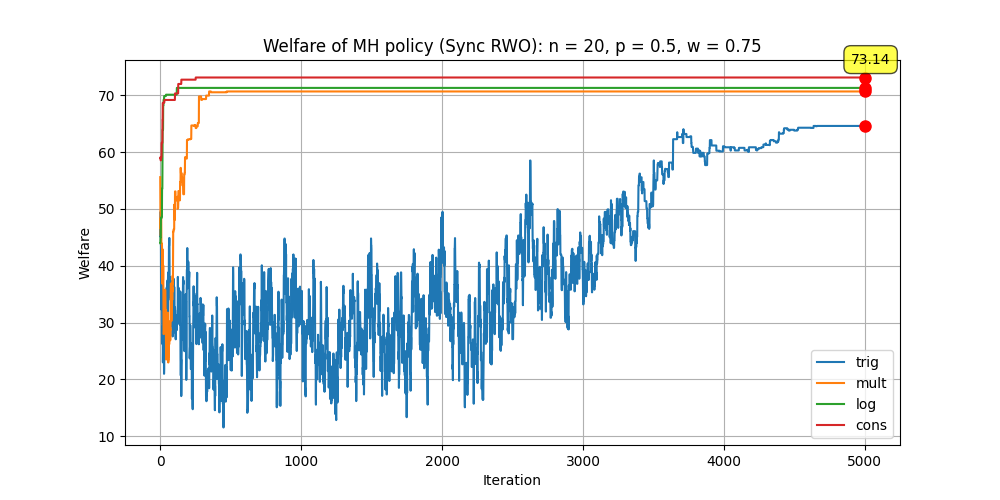

Four temperature reduction schemes were considered in our experiments: constant, exponential multiplicative, logarithmical and trigonometric additive. The specific parameters are given in Table 3. The experiements were divided into several groups, each of which has one monitored variable with other variables controlled. For each group, we ran three parallel simulations and would present one of them in this section. The number of iterations in each simulation experiment was set to be .

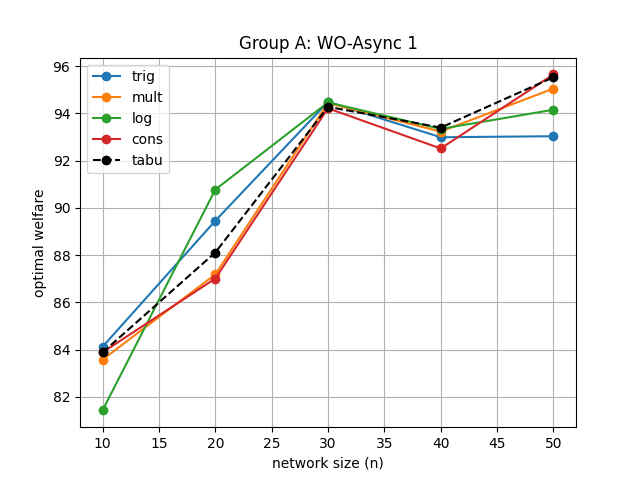

Table 4 gives the results for the three groups which focus on solving . Among the data given by the four temperature schemes, the welfares outperforming TS are labelled in red while the ones in blue suggest that the difference between it and the TS outcome is less than 0.5. The experiments in the first two groups were all conducted in an asynchronous manner as described in Section 3.1. The group “WO-Async 1” aims to detect on the influence made by variations of the network size. It can be observed from Figure 6 that the MH-policy supported by the logarimical and trigonometric addtive schemes achieves higher welfares on this task than TS did, and all methods perform equally well in general. In terms of group “WO-Async 2”, we are able to analyze on the impacts under different network degrees by adjusting the priori connection probability between edges when generating the graph. It turned out that trigonometric additive and logarithmical models still prevail over TS as can be observed from Figure 6.

| Group | Network Features | Trig | Exp | Log | Const | TS |

|---|---|---|---|---|---|---|

| WO-Async 1 | , | 84.12 | 83.57 | 81.43 | 83.88 | 83.88 |

| , | 89.45 | 87.18 | 90.77 | 87.01 | 88.11 | |

| , | 94.49 | 94.44 | 94.47 | 94.21 | 94.28 | |

| , | 92.99 | 93.23 | 93.34 | 92.52 | 93.40 | |

| , | 93.03 | 95.05 | 94.16 | 95.65 | 95.53 | |

| WO-Async 2 | 89.93 | 89.74 | 89.56 | 88.71 | 88.87 | |

| 89.45 | 87.18 | 90.77 | 87.01 | 88.11 | ||

| 92.53 | 89.91 | 91.95 | 90.94 | 91.38 | ||

| WO-Sync | 90.15 | 88.82 | 87.39 | 85.73 | 88.11 | |

| 90.83 | 90.21 | 86.93 | 87.70 | 88.11 | ||

| 90.62 | 90.44 | 87.15 | 88.82 | 88.11 | ||

| 90.44 | 90.63 | 89.19 | 88.51 | 88.11 |

The MH-policies under synchronous settings were examined in the third group, namely “WO-Sync”. It is uplifting to witness that the proposed MH-policies can almost achieve more optimal solutions than TS, especially when driven by trignometric addtive and exponential multiplicative schemes. It is also remarkable that the algorithms are fairly robust even under completely synchronous cases. A visualization for this is given in Figure 6.

We further proceed our investigation to solving RWO, the results of which are reported in Table 5. Due to our formulation of the expected loss term in 8, the final objective highly depends on the probability of a future connection of each complementary edge, which is directly related to the degree of the network. Therfore, in the fourth “RWO-Async” group, we had the network size controlled and modified the connection probability , as did in “WO-Async 2”. It turned out that the MH-policy driven Algorithm 2 performs well when is relatively large, no matter what scheme it employed, as shown in Figure 8A possible explanation for this phenomenon is that, when is high, the number of complementary edges falls thus the optimal solution of RWO stays close , thus the two-stage MH-policy becomes advantageous. Figure 8 corresponds to the last group which again focused on the impact of synchrony. Like in “WO-Sync”, the effectiveness of the proposed MH-policy confronted by different activation parameters are also well demonstrated.

| Group | Network Features | Trig | Exp | Log | Const | TS |

|---|---|---|---|---|---|---|

| RWO-Async | 67.17 | 64.30 | 66.89 | 65.65 | 70.93 | |

| 67.73 | 66.76 | 74.16 | 65.83 | 69.92 | ||

| 86.80 | 89.17 | 87.25 | 87.55 | 82.65 | ||

| RWO-Sync | 74.35 | 68.17 | 72.70 | 65.35 | 69.92 | |

| 73.66 | 68.53 | 73.76 | 69.95 | 69.92 | ||

| 64.62 | 70.69 | 71.31 | 73.14 | 69.92 | ||

| 70.17 | 59.30 | 69.75 | 68.45 | 69.92 |

8 Conclusion

In this paper, we focus on the venue selection problem commonly confronted in event planning, and model its dynamics by the proposed generalized vertex coloring games which go beyond the basic requirement of properness in search of some max-welfare coloring. It was shown that in an IP-VCG, being completely greedy and completely synchronous does not prevent agents reaching the optimal coloring asymptotically, and the converging time was shown to be stochastically bounded by . In more general G-VCGs in asynchronous manner, there is still some policy driven by the Metropolis-Hasting algorithm which enables the self-organized agents to reach the optimal coloring without violating their greediness. The optimality of the MH policy even holds in some particular independently synchronous settings. Finally, we integrate the idea of robust coloring into our formulation seeking for a balance between the welfare and the risk, and a corresponding adaptive MH-policy for the robust welfare optimization problem is provided. The effectiveness of the proposed policies are substantiated in our simulation experiments.

9 Acknowledgement

This project was supported by Nanyang Technological University under the URECA Undergraduate Research Programme.

References

- Ahmed (2012) Ahmed, S., 2012. Applications of graph coloring in modern computer science. International Journal of Computer and Information Technology 3, 1–7.

- Allen et al. (2022) Allen, J., Harris, R., Jago, L., Tantrai, A., Jonson, P., D’Arcy, E., 2022. Festival and special event management. John Wiley & Sons.

- Archetti et al. (2014) Archetti, C., Bianchessi, N., Hertz, A., 2014. A branch-and-price algorithm for the robust graph coloring problem. Discrete Applied Mathematics 165, 49–59.

- Bermond et al. (2019) Bermond, J.C., Chaintreau, A., Ducoffe, G., Mazauric, D., 2019. How long does it take for all users in a social network to choose their communities? Discrete Applied Mathematics 270, 37–57.

- Carosi et al. (2019) Carosi, R., Fioravanti, S., Gualà, L., Monaco, G., 2019. Coalition resilient outcomes in max k-cut games, in: SOFSEM 2019: Theory and Practice of Computer Science: 45th International Conference on Current Trends in Theory and Practice of Computer Science, Novỳ Smokovec, Slovakia, January 27-30, 2019, Proceedings, Springer. pp. 94–107.

- Chaudhuri et al. (2008) Chaudhuri, K., Chung Graham, F., Jamall, M.S., 2008. A network coloring game, in: Internet and Network Economics: 4th International Workshop, WINE 2008, Shanghai, China, December 17-20, 2008. Proceedings 4, Springer. pp. 522–530.

- Chen et al. (2019) Chen, S., Delcourt, M., Moitra, A., Perarnau, G., Postle, L., 2019. Improved bounds for randomly sampling colorings via linear programming, in: Proceedings of the Thirtieth Annual ACM-SIAM Symposium on Discrete Algorithms, SIAM. pp. 2216–2234.

- Cui et al. (2008) Cui, G., Qin, L., Liu, S., Wang, Y., Zhang, X., Cao, X., 2008. Modified pso algorithm for solving planar graph coloring problem. Progress in Natural Science 18, 353–357.

- Dowsland and Thompson (2008) Dowsland, K.A., Thompson, J.M., 2008. An improved ant colony optimisation heuristic for graph colouring. Discrete Applied Mathematics 156, 313–324.

- Enemark et al. (2011) Enemark, D.P., McCubbins, M.D., Paturi, R., Weller, N., 2011. Does more connectivity help groups to solve social problems, in: Proceedings of the 12th ACM conference on Electronic commerce, pp. 21–26.

- Fleurent and Ferland (1996) Fleurent, C., Ferland, J.A., 1996. Genetic and hybrid algorithms for graph coloring. Annals of operations research 63.

- Fryganiotis et al. (2023) Fryganiotis, N., Papavassiliou, S., Pelekis, C., 2023. A note on the network coloring game: A randomized distributed (+ 1)-coloring algorithm. Information Processing Letters 182, 106385.

- Goonewardena et al. (2014) Goonewardena, M., Akbari, H., Ajib, W., Elbiaze, H., 2014. On minimum-collisions assignment in heterogeneous self-organizing networks, in: 2014 IEEE Global Communications Conference, IEEE. pp. 4665–4670.

- Hamza et al. (2021) Hamza, D., Toonsi, S., Shamma, J.S., 2021. A metropolis-hastings algorithm for task allocation, in: 2021 60th IEEE Conference on Decision and Control (CDC), IEEE. pp. 4539–4545.

- Hastings (1970) Hastings, W.K., 1970. Monte carlo sampling methods using markov chains and their applications .

- Hayes et al. (2007) Hayes, T.P., Vera, J.C., Vigoda, E., 2007. Randomly coloring planar graphs with fewer colors than the maximum degree, in: Proceedings of the thirty-ninth annual ACM symposium on Theory of computing, pp. 450–458.

- Hayes and Vigoda (2006) Hayes, T.P., Vigoda, E., 2006. Coupling with the stationary distribution and improved sampling for colorings and independent sets .

- Hernández and Blum (2012) Hernández, H., Blum, C., 2012. Distributed graph coloring: an approach based on the calling behavior of japanese tree frogs. Swarm Intelligence 6, 117–150.

- Hindi and Yampolskiy (2012) Hindi, M.M., Yampolskiy, R.V., 2012. Genetic algorithm applied to the graph coloring problem, in: Proc. 23rd Midwest Artificial Intelligence and Cognitive Science Conf, pp. 61–66.

- Jerrum (1995) Jerrum, M., 1995. A very simple algorithm for estimating the number of k-colorings of a low-degree graph. Random Structures & Algorithms 7, 157–165.

- Kassir (2018) Kassir, A., 2018. Absorbing Markov chains with random transition matrices and applications. Ph.D. thesis. University of California, Irvine.

- Kearns et al. (2006) Kearns, M., Suri, S., Montfort, N., 2006. An experimental study of the coloring problem on human subject networks. science 313, 824–827.

- Keeney and Kirkwood (1975) Keeney, R.L., Kirkwood, C.W., 1975. Group decision making using cardinal social welfare functions. Management Science 22, 430–437.

- Kirkpatrick et al. (1983) Kirkpatrick, S., Gelatt Jr, C.D., Vecchi, M.P., 1983. Optimization by simulated annealing. science 220, 671–680.

- Kliemann et al. (2015) Kliemann, L., Sheykhdarabadi, E.S., Srivastav, A., 2015. Price of anarchy for graph coloring games with concave payoff. arXiv preprint arXiv:1507.08249 .

- Lim and Wang (2005) Lim, A., Wang, F., 2005. Robust graph coloring for uncertain supply chain management, in: Proceedings of the 38th Annual Hawaii International Conference on System Sciences, IEEE. pp. 81b–81b.

- Luengo and Martino (2013) Luengo, D., Martino, L., 2013. Fully adaptive gaussian mixture metropolis-hastings algorithm, in: 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, IEEE. pp. 6148–6152.

- Marappan and Sethumadhavan (2013) Marappan, R., Sethumadhavan, G., 2013. A new genetic algorithm for graph coloring, in: 2013 Fifth International Conference on Computational Intelligence, Modelling and Simulation, IEEE. pp. 49–54.

- Marappan and Sethumadhavan (2021) Marappan, R., Sethumadhavan, G., 2021. Solving graph coloring problem using divide and conquer-based turbulent particle swarm optimization. Arabian Journal for Science and Engineering , 1–18.

- Marden and Shamma (2012) Marden, J.R., Shamma, J.S., 2012. Revisiting log-linear learning: Asynchrony, completeness and payoff-based implementation. Games and Economic Behavior 75, 788–808.

- Marden and Wierman (2013) Marden, J.R., Wierman, A., 2013. Overcoming the limitations of utility design for multiagent systems. IEEE Transactions on Automatic Control 58, 1402–1415.

- Marnissi et al. (2020) Marnissi, Y., Chouzenoux, E., Benazza-Benyahia, A., Pesquet, J.C., 2020. Majorize–minimize adapted metropolis–hastings algorithm. IEEE Transactions on Signal Processing 68, 2356–2369.

- McQuarrie (2000) McQuarrie, D.A., 2000. Statistical mechanics. Sterling Publishing Company.

- Metropolis et al. (1953) Metropolis, N., Rosenbluth, A.W., Rosenbluth, M.N., Teller, A.H., Teller, E., 1953. Equation of state calculations by fast computing machines. The journal of chemical physics 21, 1087–1092.

- Moayedikia et al. (2020) Moayedikia, A., Ghaderi, H., Yeoh, W., 2020. Optimizing microtask assignment on crowdsourcing platforms using markov chain monte carlo. Decision Support Systems 139, 113404.

- Monderer and Shapley (1996) Monderer, D., Shapley, L.S., 1996. Potential games. Games and economic behavior 14, 124–143.

- Panagopoulou and Spirakis (2008) Panagopoulou, P.N., Spirakis, P.G., 2008. A game theoretic approach for efficient graph coloring, in: Algorithms and Computation: 19th International Symposium, ISAAC 2008, Gold Coast, Australia, December 15-17, 2008. Proceedings 19, Springer. pp. 183–195.

- Pelekis and Schauer (2013) Pelekis, C., Schauer, M., 2013. Network coloring and colored coin games, in: Search Theory: A Game Theoretic Perspective. Springer, pp. 59–73.

- Pickem et al. (2015) Pickem, D., Egerstedt, M., Shamma, J.S., 2015. A game-theoretic formulation of the homogeneous self-reconfiguration problem, in: 2015 54th IEEE Conference on Decision and Control (CDC), IEEE. pp. 2829–2834.

- Rosenthal (2006) Rosenthal, J.S., 2006. First Look At Rigorous Probability Theory, A. World Scientific Publishing Company.

- Salari and Eshghi (2005) Salari, E., Eshghi, K., 2005. An aco algorithm for graph coloring problem, in: 2005 ICSC Congress on computational intelligence methods and applications, IEEE. pp. 5–pp.

- Touhiduzzaman et al. (2018) Touhiduzzaman, M., Hahn, A., Srivastava, A.K., 2018. A diversity-based substation cyber defense strategy utilizing coloring games. IEEE Transactions on Smart Grid 10, 5405–5415.

- Vigoda (2000) Vigoda, E., 2000. Improved bounds for sampling colorings. Journal of Mathematical Physics 41, 1555–1569.

- Vu et al. (2014) Vu, T., Vo, B.N., Evans, R., 2014. A particle marginal metropolis-hastings multi-target tracker. IEEE transactions on signal processing 62, 3953–3964.

- Wang and Xu (2013) Wang, F., Xu, Z., 2013. Metaheuristics for robust graph coloring. Journal of Heuristics 19, 529–548.

- (47)

Appendix A Tabu search algorthm used in Section 7

Appendix B Proof of results in Section 4

B.1 Proof of results in Section 4.1

In this part of the Appendix, we present proofs regarding the lemmas and theorems on the convergence of IP-VCGs, as discussed in Section 4.1.

Proof of Lemma 1 11

Under Assumption 2, the state space must contain the state corresponding to any proper coloring. One may easily observe that the state is absorbing since none of the agents would accept new colors thus the utility remains. All other states are transient because they are likely to alter in later rounds. It then suffices to prove the accessibility between other states to the state .

For an active zero-utility agent, say , consider the probability of a utility rise from round to round . Let denote the number of ’s neighbors and denote the number of colors held by his neighbors in round . We also consider the number of neighbors of ’s neighbors, denoted by , , in respective. Then can only have a utility rise when his new color is unused by his neighbors in round and does not clash with the new colors of his active neighbors. For a completely synchronous game under Assumption 2, we have

Note that this constant lower bound for is independent of the network structure and neighborhood behaviours. By the definition of the AMC on the utility space,

As such, the probability of transition from a low-welfare state to a high-welfare state is always positive. Then is accessible from any transcient state, which completes the proof. \qed

Proof of Theorem 2 12

According to the arguments in Section 4, it suffices to prove that a synchronous IP-VCG with utility functions 3 converges to some proper coloring. This is equivalent to show that the AMC defined in Theorem 1 would eventually be absorbed in .

Suppose, from an initial state , the chain requires at least rounds to reach an absorbing state and the corresponding probability is ( because the chain is absorbing). Let and . Then the probability that the chain does not access to the absorbing state after rounds is at most , which converges to 0 when (i.e. ). Therefore, the chain will be absorbed in an absorbing state, which is uniquely in our case. \qed

B.2 Proof of results in Section 4.2

In this part of the Appendix, we present proofs regarding the lemmas and theorems on the stochastic boundedness on the time to convergence of IP-VCGs, as discussed in Section 4.2.

Proof of Theorem 3 13

Denote by the set of ’s neighbors who have no clash in round , and define the set with cardinality denoted by . Additionally, define . We also define an indicator variable for each color as

Define , which represents the number of colors unused by ’s neighbors in round . A color can belong to one of the three cases:

-

(case 1)

. Then because for .

-

(case 2)

. Then

i.e. the color can only be available in round when it is not taken by the active neighbors with zero utility.

-

(case 3)

. Then

The second term results from the fact that an agent would reject any color which is held by at least one of his neighbors and remain the color in the last round.

Therefore, the expectation of can be expressed as

| (13) | ||||

Assumption 2 implies because the graph is connected. The last inequality in (13) arises from the fact that for and the nonnegativity of . Defining

Lemma 4 and Lemma 5 in Chaudhuri et al. (2008) then apply. Therefore, we can adapt from their Lemma 3 that

for by multiplying the original constant by another and due to Assumption (R1) where . \qed

To prepare for the proofs regarding Lemma 4, we introduce the canonical form of an AMC.

Definition 8 (Canonical Form of AMC)

Suppose an AMC has absorbing states and transient states. The transition matrix of the AMC is in canonical form if it is divided into four sub-matrices listed as follows:

| (CF) |

where

-

•

is a block containing the transition probabilities between transient states.

-

•

is a block containing the transition probabilities from transient to absorbing states.

-

•

is the identity matrix of order and is the block with null elements

Since an AMC will be eventually absorbed in an absorbing state, as we prove in Theorem 2, we have

The following lemma gives several properties of an AMC which would be useful in our later arguments.

Lemma 11 (Properties of AMC (See, e.g. Kassir (2018) Chapter 2))

For an AMC with transition matrix in canonical form (CF), then

-

(AMC-1)

exists.

-

(AMC-2)

-

(AMC-3)

Suppose the number of states is . Define a column vector whose i-th element denotes the expected number of steps before absorption, given the initial state . Then where .

Proof of Lemma 11 14

The first statement is equivalent to“if , then ” which is easy to prove as

Let , then by Taylor’s formula. Notice that the n-th power of the transition matrix is

then

Denote by the times the chain hits state starting from and the indicator of arriving at state in the th step, where and are both transient states. Then

Since where denotes the number of steps before absorption given the initial state , we have

which completes the proof. \qed

Next, we prove Lemma 4.

Proof of Lemma 4 15

It suffices to prove the lemma on the representative candidate of IP-VCGs with binary utility functions (3), as explained in Section 4. Given an arbitrary number and define . We also define the random variable to denote the time to convergence of the game, and, specifically, let denote the number of steps to convergence of initiating from the state . Invoking Theorem 3 we have

| (14) | ||||

The first equality in (14) is due to the fact that agents with utility one will remain his color forever. Thus,

Taking an union bound over all vertices, we obtain

thus

| (15) |

We generate the Markov Chain defined in Lemma 1, , to jump two steps at a time and consider its transition matrix in the form (CF). Define . We denote the column vector whose i-th element denotes the expected number of steps before absorption starting from , i.e. , where we correspond to the row in . Obviously corresponding to the absorbing state of any proper coloring. By (AMC-3) of Lemma 11,

elementwise. For each element , equivalently,

Denote be the column vector whose i-th element denotes , the variance of steps before absorption starting from . Then

We consider all possible states led by the first step of and derive an expression for as a probabilistic combination of the expected number of steps given other initiate states.

| (17) | ||||

This operation is often named “first-step analysis” in stochastic processes.

The proof is thus complete. \qed

We now forward to prove Theorem 5.

Appendix C Proof of results in Section 5

C.1 Proof of results in Section 5.2

In this part of the Appendix, we present proofs regarding the lemmas and theorems on the the optimality of the proposed MH-policy, as discussed in Section 5.2.

Proof of Lemma 7 17

We prove by definition. Irreducibility follows from the proposal uniform distribution due to ramdom sampling from for being active. The transition probability from state (coloring) to state (coloring) by the MH-policy (Algorithm 1) is

where . Note that whatever and are when . Then we have

which is the transtion probability of the unperturbed distribution . Then we have

thus the MH-policy induced Markov Chain is RPMP with resistances

| (18) |

Proof of Theorem 8 18

We prove by contradiction. Let be a stochastically stable state and is the corresponding minimum resistance tree rooted at ; i.e. by Theorem 6. Suppose . Consider an optimal state such that . We try to establish a new tree rooted at such that .

By Definition 7, there exists a unique path

from to . Consider the reverse of this path

and one can observe that a new tree, namely can be established by replacing with . The vertices which used to access without passing would now access uniquely through . Figure 9 illustrates an example when and with and represented in red and green respectively. The resistence of is then

| (19) |

By (18) and (5), for adjacent states and (i.e. there is a an edge between and ),

thus

Therefore, with and ,

because achieves best welfare by assumption. Plugging back into (19), we have

which contradicts to our assumption that is stochastically stable. We now conclude that any stochastically stable state(coloring) must bring the largest welfare; i.e. a G-VCG converges to under the MH-policy. \qed

C.2 Proof of results in Section 5.3