Using Single-Step Adversarial Training to Defend Iterative Adversarial Examples

Abstract.

Adversarial examples have become one of the largest challenges that machine learning models, especially neural network classifiers, face. These adversarial examples break the assumption of attack-free scenario and fool state-of-the-art (SOTA) classifiers with insignificant perturbations to human. So far, researchers achieved great progress in utilizing adversarial training as a defense. However, the overwhelming computational cost degrades its applicability and little has been done to overcome this issue. Single-Step adversarial training methods have been proposed as computationally viable solutions, however they still fail to defend against iterative adversarial examples. In this work, we first experimentally analyze several different SOTA defense methods against adversarial examples. Then, based on observations from experiments, we propose a novel single-step adversarial training method which can defend against both single-step and iterative adversarial examples. Lastly, through extensive evaluations, we demonstrate that our proposed method outperforms the SOTA single-step and iterative adversarial training defense. Compared with ATDA (single-step method) on CIFAR10 dataset, our proposed method achieves 35.67% enhancement in test accuracy and 19.14% reduction in training time. When compared with methods that use BIM or Madry examples (iterative methods) on CIFAR10 dataset, it saves up to 76.03% in training time with less than 3.78% degeneration in test accuracy.

1. Introduction

Adversarial examples were discovered and presented in (Szegedy et al., 2014) under image classification tasks by showing that specially designed insignificant perturbations can effectively mislead neural network (NN) classifiers. More importantly, it has been shown that such perturbations are not special cases but rather generic and can be crafted for almost every input example. Yet, more scary, researchers show that adversaries could arbitrarily control the prediction results as desired with high success rate through carefully designed perturbations (Carlini and Wagner, 2017)(Kurakin et al., 2017).

Thereafter, great effort has been devoted to designing defense methods against adversarial examples. Some of these methods utilize augmentation and regularization to enhance test accuracy on adversarial examples (Papernot et al., 2016). Other methods intercepts the input to the classifier to either identify and drop adversarial examples or eliminate adversarial perturbations (Meng and Chen, 2017)(Samangouei et al., 2018). Currently, the most popular choices of defense methods are based on adversarial training (Madry et al., 2017).

The fundamental idea of adversarial training is to use adversarial examples as blind spots and retrain the classifier with them. This enhances the capability of the classifier to correctly classify the perturbed examples to their original labels. These defenses could be categorized based on the adversarial examples they are using. Defenses that use single-step adversarial examples are called Single-Adv, while defenses that use iterative adversarial examples called Iter-Adv.

Among all existing defense approaches, adversarial training is shown to be the most successful solution, because unlike many others, it does not rely on the false sense of security brought by obfuscated gradient (Athalye et al., 2018). However, adversarial training still has many unsolved problems. The high computational cost in preparing adversarial examples during training (Liu et al., 2019) is among the most challenging ones. If Single-Adv defense is used, the trained model will not be able to defend against iterative adversarial examples (Kurakin et al., 2017). On the other hand, Iter-Adv defenses can defend against iterative examples, however, they require powerful GPU infrastructure for an ImageNet like datasets (Kannan et al., 2018).

Nowadays, more and more smartphone and smart-home applications are integrated with machine learning components, especially the popular NN classifier. These applications are running in an environment without sufficient local computing power for adversarial training. Without considering the cost, a cloud server could partially solve this issue. However, some of these applications, that are running with sensitive or personal data, are not willing to share their data and model with a cloud server. Therefore, these applications lack the tools to defend against adversarial examples.

In general, current adversarial training methods fail to achieve robust performance with acceptable training overhead. In the following sections, we first provide detailed analysis of the state-of-the-art (SOTA) defense methods. Then, based on lessons learned from analysis, we propose a Single-Adv method that can efficiently mitigate iterative adversarial example with low training overhead and name it Single-Step Epoch-Iterative Method (SIM-Adv). Intuitively, it flattens iterative adversarial examples (Iter-Exps) into single-step adversarial examples (Single-Exps) in multiple consecutive training epochs as detailed later. Our contributions are summarized as follows:

-

•

We analyze the SOTA Single-Adv method called ATDA. Our results indicate that the evaluation of ATDA as presented in (Song et al., 2018) was incomplete because we show that it fails to defend against many adversarial examples.

-

•

We analyze the SOTA Iter-Adv methods and identify guidelines that can reduce computational overhead while achieving good performance in defending against Iter-Exps. The guidelines include: (1) using large per step perturbation and (2) utilizing the intermediate examples during the preparation of Iter-Exps.

-

•

Based on the observations from the analysis, we propose a novel Single-Adv method which is the first in this category that can defend against both Single-Exps and Iter-Exps while maintaining very low training overhead. This method opens the door for the applications with limited computing power to build computationally efficient self defenses against adversarial examples.

The rest of the work is organized as follows. Section 2 summarizes important background material. Sections 3 and 4 present detailed analysis of different SOTA adversarial training methods. Section 5 introduces our cost-effective Single-Adv method. Section 6 presents evaluation results and Section 7 concludes the paper.

2. Background

In this section, we review the fundamental material and provide references for further understanding of the concepts presented in this work.

2.1. Adversarial Examples

As mentioned in Section 1, adversarial examples are specially perturbed original examples which aim to fool the NN classifier. Although adversarial examples can be categorized according to different aspects, we focus here on the distinction between Single-Exps and Iter-Exps. Adversarial examples throughout this work are white-box untargeted adversarial examples in the image classification domain. In other words: (1) the perturbation of adversarial example is limited in an norm ball, (2) the classifier is transparent to adversary, and (3) the adversary successfully fools the classifier as long as the prediction is wrong.

2.1.1. Single-Step Adversarial Examples:

In the early stage of adversarial example research, (Goodfellow et al., 2015) introduces the Fast Gradient Sign Method (FGSM) to generate Single-Exps. FGSM calculates gradients of the classifier’s loss function, , towards each original example, . Then, it takes the sign of the calculated gradients and multiplies it with the perturbation limit, . This product is called adversarial perturbation, , and adding it to the original example can turn it into adversarial example, . The mathematical formulation of FGSM is as follows.

| (1) | |||

| (2) |

Here, is the ground truth label, is the parameter of the neural network classifier, and is a function that maps out-of-range values to the closest boundary and preserves the in-range values.

As can be seen from the formulation, FGSM utilizes a linear approximation of the loss function value which only calculates gradients once during the generation process. Therefore, it is a cost-efficient method to generate adversarial examples and is widely used in works like (Kurakin et al., 2017) and (Madry et al., 2017).

In addition to FGSM, there are many other methods proposed to generate Single-Exps. For example, researchers in (Tramèr et al., 2017) introduce the Random Initialized FGSM (R+FGSM) as an attack method to break defenses that rely on the gradient masking effect.

2.1.2. Iterative Adversarial Examples:

Compared with Single-Exps, Iter-Exps are more serious adversarial examples since they are harder to be mitigated. Instead of linear approximation on the loss function value, Iter-Exps are generated by smaller per step perturbations () of the original examples based on gradient calculations of the loss function , where is a scale factor. The small perturbation process is repeated for a certain number of iterations, . Two widely used methods for generating Iter-Exps are the Basic Iterative Method (BIM) and the Madry Method (Madry) which are introduced in (Kurakin et al., 2017) and (Madry et al., 2017), respectively. These two methods utilize the projected gradient descent method to perturb the example during each iteration. The only difference between BIM and Madry is that Madry randomly perturbs the original example and utilizes it as the starting point, , while BIM always starts with the original example itself. The mathematical formulations are listed below.

| (3) | |||

| (4) |

Throughout this work, we represent the different Iter-Exps generation methods in the following format, “Name()”. For example, BIM with will be represented as BIM(10,10).

2.2. Adversarial Training

The fundamental idea of adversarial training is to use adversarial examples as blind spots and retrain the classifier with these blind spots. From a system level point of view, adversarial training can be represented as a two-step process.

| (5) | |||

| (6) |

Here, is an indicator function, represents the classifier, is a loss function, and is the feasible set of . Starting with a randomly initialized , the searching of adversarial perturbation (Eq. 5) and the training of classifier (Eq. 6) will be competing with each other repeatedly until converges.

2.2.1. Single-step Adversarial Training:

Early adversarial training research retrain with Single-Exps (Single-Adv). For example in (Goodfellow et al., 2015), researchers apply adversarial training with FGSM examples (FGSM-Adv), while the work in (Tramèr et al., 2017) enhances the FGSM-Adv through generating FGSM examples based on holdout classifiers. However, these two as well as many other Single-Adv methods fail to defend against Iter-Exps. Recently, a SOTA Single-Adv method (ATDA) is introduced in (Song et al., 2018) which claims to defend against both Single-Exps and Iter-Exps. To achieve this, the method utilizes FGSM examples and a domain adaptation loss function. However, as we show later, the domain adaptation does not help adversarial training and hence the ATDA trained classifier fails to defend against Iter-Exps.

2.2.2. Iterative Adversarial Training:

To defend against both Single-Exps and Iter-Exps, researchers combine adversarial training with Iter-Exps (Iter-Adv). Among these methods, adversarial training with BIM examples (BIM-Adv) and Madry examples (Madry-Adv) are common choices due to their relatively high classification accuracy as shown in (Kurakin et al., 2017) and (Madry et al., 2017), respectively. Based on these two defense methods, the work in (Kannan et al., 2018) claims to improve the defense by utilizing a logit pairing loss function. However, as mentioned in (Athalye et al., 2018) and (Kurakin et al., 2016), all these Iter-Adv methods are computationally expensive. This problem makes Iter-Adv methods not very practical, especially in platforms with limited computing power.

3. Analysis of the ATDA Method

Single-Adv methods have the advantage of less computation overhead compared with Iter-Adv methods. However, most of Single-Adv methods fail to defend against Iter-Exps. However, a recent method dubbed ATDA has been introduced in (Song et al., 2018) as the SOTA Single-Adv method that defends against both Single-Exps and Iter-Exps by adding the so-called domain adaptation loss. In the following, we thoroughly analyze ATDA by presenting its detailed prediction on Iter-Exps (BIM and Madry examples) and comparing with other defenses.

3.1. Details of the ATDA Method

The fundamental idea of ATDA is to combine adversarial training with domain adaptation. The authors of ATDA believe that Single-Exps (e.g. FGSM examples) could be envisioned as limited number of samples in the adversarial domain. Therefore, combining domain adaptation methods could enhance the performance of Single-Adv. In its design, ATDA utilizes both unsupervised and supervised domain adaptations as follows.

3.1.1. Unsupervised Domain Adaptation:

The authors assume that both the predictions of original examples, , and adversarial examples, , follow two different multivariate normal distributions. To align these two distributions together, the authors defined a covariance distance as

| (7) |

where represents the predictions, represents the covariance matrix and denotes the number of classes in the predictions. In addition to that, authors also utilize the standard distribution distance metric in (Borgwardt et al., 2006) and the sum of these two distances is defined as the unsupervised domain adaptation loss.

| (8) | |||

| (9) |

3.1.2. Supervised Domain Adaptation:

In order to utilize the ground truth information in the training dataset, ATDA proposes a new loss function to minimize the intra-class variations and maximize the inter-class variations.

| (10) | ||||

| (11) |

The counts the number of examples in a set. The represents the function . The is the center of predictions from examples that has the same ground truth as example . The centers of predictions from examples in each ground truth class are collected in a set which is denoted by . These centers are also updated together with parameters in the NN based on the following equation.

| (12) |

Here, represents the subset of original and adversarial examples that belongs to th class. Also, denotes the step size of the update and is set to be 0.1 according to (Song et al., 2018).

3.2. Analyzing Feature Space Encoding

The first step of our analysis targets the feature space encoding from four different classifiers (vanilla, ATDA, BIM(30,30)-Adv, and SIM(10,20)-Adv classifiers). We use t-SNE (Maaten and Hinton, 2008) to project the high dimensional feature encoding from each classifier to a two dimensional space and visualize it in Figure 1. During the analysis, we sample original examples from MNIST dataset. Examples from a randomly selected class are used as targets (green dots) while others are references (blue dots). Corresponding to targets, we generate adversarial examples (red dots). In the visualized feature space encoding, examples that are close to each other and form a group are much likely to be classified in the same class. Therefore, the classifier that can defend against adversarial examples should have a visualization where green and red dots are blended together.

From Figure 1a, we can see that the feature encoding of adversarial examples (BIM(30,30) examples) form several individual small groups are clearly different from the feature encoding of targets (green dots). Without the color, we can barely tell the difference between small groups of references (blue dots) and adversarial examples (red dots). When using the ATDA classifier, this problem is slightly mitigated since the red dots are grouped together in Figure 1b. However, from Figure 1b, the groups of green dots and red dots are still separable. BIM(30,30)-Adv and our SIM(10,20)-Adv classifiers presented in Figures 1d and 1e are significantly better than the ATDA classifier since the red and green dots are blended. It’s worth recalling that our SIM(10,20)-Adv belongs to Single-Adv and takes less training time than ATDA as presented later. Therefore, our method outperforms ATDA in both accuracy and training time as detailed in later sections. Finally, we add an extra visualization for the ATDA classifier and change the adversarial examples to FGSM examples. Compared with BIM(30,30) examples which are Iter-Exps, the FGSM examples are weaker Single-Exps. In Figure 1c, the red and green dots are blended which means, as expected, that the ATDA classifier performs well against FGSM (Single-Exps) examples.

3.3. Overfitting to FGSM Examples

| FGSM | BIM(30,30) | Madry(30,30) | ||

|---|---|---|---|---|

| MNIST | 96.37% | 73.97% | 54.75% | |

| 98.03% | 19.91% | 1.23% | ||

| FMNIST | 83.59% | 49.41% | 32.31% | |

| 84.03% | 33.82% | 11.37% |

Although we show that ATDA classifier has degenerated performance on BIM examples by analyzing feature space encoding, its numerical results, accuracy, in (Song et al., 2018) does not reflect the same finding. A possible reason is that the perturbation limit is too small compared with previous works such as (Madry et al., 2017). Moreover, while experimenting with the source code, we locate another possible reason, the tuned learning rate .

We evaluate ATDA classifiers with different values of as shown in Table 1. The results show that the test accuracy on both BIM(30,30) and Madry(30,30) examples significantly degenerates when we decrease . When , the ATDA performs in a similar way as FGSM-Adv in (Tramèr et al., 2017). Actually, (Tramèr et al., 2017) has shown that FGSM-Adv causes the trained classifier to overfit FGSM examples and be vulnerable to Iter-Exps. It is intuitive that optimizing with a smaller learning rate should converge to the same location if not a better location. Therefore, the degeneration in Table 1 means that the objective function in ATDA, combining FGSM-Adv and domain adaptation losses, is not appropriate for correct classification of Iter-Exps.

3.4. Vulnerability to Randomness

Although previous subsections show that ATDA cannot defend Iter-Exps due to inappropriate objective function, the reason could solely be the usage of FGSM examples in the training. To pinpoint the effect of domain adaptation loss, we design another experiment which uses BIM examples instead of FGSM examples for training. We choose BIM(30,30)-Adv as the baseline and combine it with the domain adaptation loss proposed by ATDA. Our experiments are conducted on both MNIST and FMNIST datasets with BIM(30,30) and Madry(30,30) examples. Moreover, we assign different values to , a parameter that controls the weight of domain adaptation loss.

Figure 4 presents the results of this experiment. When , the classifier is solely trained with the cross-entropy loss. As increases, the domain adaptation loss becomes more and more important in the total training loss. To our surprise, this experiment exposes another vulnerability of ATDA. Compared with cross-entropy loss, the domain adaptation loss does not make extra positive impact on the test accuracy. The test accuracy on BIM(30,30) examples remains unchanged or shows a small degeneration. Even worse, the domain adaptation loss hurts the test accuracy of the classifier on Madry(30,30) examples, especially when . We think a reasonable explanation is that the randomness in Madry examples breaks the statistical assumption used in the domain adaptation loss.

3.5. Summary

In this section, we show that ATDA cannot defend Iter-Exps. Firstly, ATDA’s feature space encoding shows that it miserably fails against Iter-Exps. Secondly, by changing the learning rate, we show that the objective function of the ATDA does not take Iter-Exps into consideration. Lastly, by combining domain adaptation loss with Iter-Adv, we show that relying on domain adaptation loss degenerates the accuracy of classifying Iter-Exps with randomness (Madry examples). Although the analysis is done in MNIST and FMNIST datasets, our further evaluation results on CIFAR10 dataset (Sec 6) also show that ATDA performs poorly in defending Iter-Exps.

4. Analysis of Iter-Adv Methods

Compared with Single-Adv methods, Iter-Adv methods have significantly higher test accuracy. Therefore, the majority of adversarial training defenses, including the SOTA ones, focus on Iter-Adv methods. In spite of this, the domain is still not very well comprehended. In this section, we explore several fundamental questions regarding SOTA Iter-Adv methods through extensive set of experiments.

4.1. Limit of Decreasing the Per Step Perturbation

Based on the introduction of Iter-Exps in Section 1, it is clear that the smaller per step perturbation applied, the better observation of NN’s decision hyperplane and the stronger adversarial examples obtained. However, to select an appropriate per step perturbation, we believe that a quantitative analysis is needed.

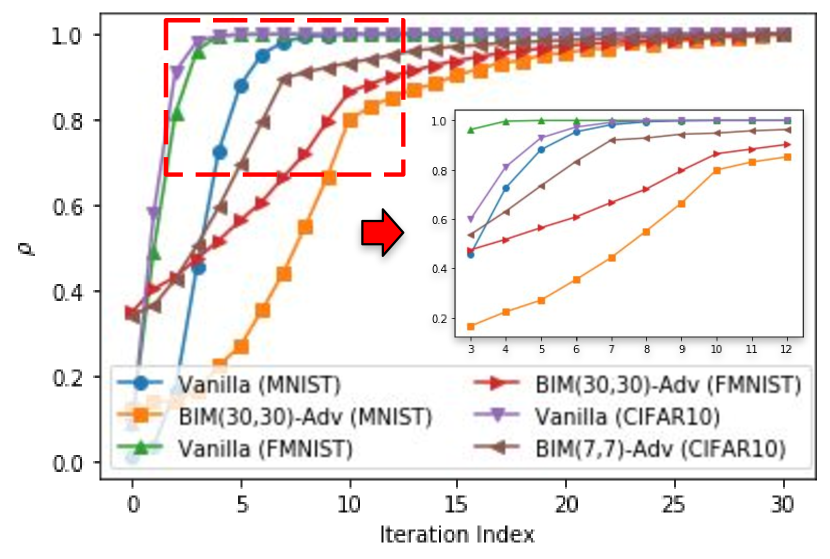

To do this analysis, we conduct experiments on MNIST, FMNIST, and CIFAR10 datasets. In each dataset, we train two different NN classifiers with the same structure and hyper-parameter settings, which include: (1) a Vanilla classifier trained on original examples only and (2) a BIM-Adv classifier (Kurakin et al., 2016). For each value in the range , we generate BIM examples with fixed ( in MNIST, in FMNIST, and in CIFAR10) and calculate the following ratio, :

| (13) |

To understand , assume that its value is when, for example, . And, the maximum value of and is (e.g. with MNIST dataset). This means that BIM examples generated with value can be as successful as those generated with value in misleading the classifier.

From the results in Figure 4, it is clear that converges fast and saturates when the value of is around 5 in all six lines. For the Vanilla classifiers, this phenomenon is not surprising since they have no defence at all and most of the adversarial examples can fool them. However, we see a similar trend from the BIM-Adv classifiers which are well trained to defend adversarial examples. The insight we draw from this experiment is that increasing the value of over a certain limit provides only marginal help in finding stronger adversarial examples. In other words, training a classifier by Iter-Adv method with small values (around 5 in this experiment) is as efficient as training the classifier by Iter-Adv with large values (30 in this experiment).

Given the fact that adversarial training uses adversarial examples to find blind spots of the under-trained classifier and retrain it, these results show that decreasing the per step perturbation of Iter-Exps used during Iter-Adv beyond a certain limit only marginally benefits the defense.

We think the saturation of per step perturbation exists because the loss structure used in projected gradient descent to search Iter-Exps is shown to be highly tractable (Madry et al., 2017). This important finding indicates that defenses could use smaller values of without sacrificing the quality of the defense. Although the resulting defense is still within the Iter-Adv category, it consumes less time and computations in preparing adversarial examples. We will utilize this observation in combination with the following others to develop an efficient Single-Adv method.

4.2. Training with Intermediate Adversarial Examples

As shown in Section 2 Eq. 4, Iter-Adv methods usually use final adversarial examples () to build the defense since it is much stronger than intermediate versions (). In this section, we explore whether those intermediate examples can be utilized for training while being generated, instead of sitting idle and waiting for the final versions.

To do so, we conduct another set of experiments on MNIST, FMNIST and CIFAR10 datasets. In these experiments, we use the same NN classifiers, measure the same ratio ( as defined in Eq. 13), and maintain the same perturbation limit used in the preceding subsection. The only difference is that we here fix the value of (10 for MNIST and FMNIST, 7 for CIFAR10) and (30 for all) in BIM examples. Values along the X-axis in Figure 4 correspond to different iterations during the generation of BIM examples. For example in MNIST, an X-axis value of zero corresponds to the original examples, a value of represents the corresponding intermediate examples after iterations, and a value of 30 corresponds to the final versions, BIM(10,30), of the adversarial examples.

The results show that , under all the scenarios, is monotonically increasing with the number of iterations. For all three Vanilla classifiers which have no defense against adversarial examples, saturates quickly after around 5 iterations. Although for BIM-Adv classifiers does not saturate as quick as that for Vanilla classifiers, it increases almost exponentially before reaches around (MNIST and FMNIST) or (CIFAR10). In the zoom-in view of Figure 4, we can clearly see these turning points. One interesting observation is that the turning point in BIM-Adv classifier corresponds the selected value of (10 or 7).

Our insights from this experiment is that: a large portion of intermediate examples of Iter-Exps are good quality adversarial examples since they effectively reveal the classifier’s blind spot, and hence can be used to build high quality defenses. In other words, we can utilize the intermediate examples during the preparation of Iter-Exps to continuously enhance the model instead of waiting for the final examples while training Iter-Adv defenses. In Section 5, we build on this finding to expand the generation process of Iter-Exps and end up with a Single-Adv method that performs very close to Iter-Adv.

4.3. Summary

In this section, we identify two experimental properties of Iter-Adv: (1) It is recommended to use large per step perturbation, i.e., small and (2) intermediate examples can be used to speed up adversarial training with a very small degeneration in Iter-Exps quality.

5. Single-Step Epoch-Iterative Method

In previous sections, we conduct experiments and evaluate both Single-Adv and Iter-Adv methods in details. The insights from the results help us enhance our understanding of adversarial training and its underlying fundamental concepts. In this section, we propose a new Single-Adv method which we call Single-Step Epoch-Iterative Method.

5.1. Motivation and Design

In Figure 9, we review the process of adversarial training. Each row in the figure represents a training epoch and the solid black lines represent the data flow (training examples). The dashed red lines across different rows correspond to knowledge flow (classifier’s weights) from one epoch to the next. As shown in the figure, the original examples are fed into the generator of adversarial examples, which could be single-step or iterative. Then, the original and adversarial examples are used to train the classifier. The training process consists of several training epochs and the weights of the classifier are carried out from one training epoch to the next.

Inspired by the empirical findings drawn from our previous experiments (Section 4), we propose the following modifications to enhance the Single-Adv defense process. Similar to other Single-Adv methods, our method also uses the single-step generator to reduce computation overhead in each epoch. Recall that a classifier which is trained with Single-Adv fails to defend Iter-Exps, therefore, we use consecutive training epochs to mimic the generation of Iter-Exps.

Starting from the second training epoch, we reuse the output of the generator from the previous epoch as input to the generator of the current epoch, instead of using the original image. As a result, the classifier can be seen as trained with intermediate examples in the first training epochs. In each training epoch, our method uses a relatively large per step perturbation (i.e., small ) instead of total perturbation (i.e., ). It helps our method to avoid repeatedly generating Single-Exps for training. On the other hand, a large per step perturbation ensures the adversarial examples can quickly reach their maximum perturbation. Therefore, it can mitigate the degeneration caused by training with weak intermediate examples in the first few training epochs. Lastly, after repeating aforementioned steps for epochs, the generator switches to select original examples as inputs which means the iteration over consecutive epochs is reset.

From a high level point of view, we flatten the iteration of generating Iter-Exps into training epochs. Within each iteration of consecutive training epochs, the mathematical formulation of generating adversarial examples is as follows.

| (14) | |||

| (15) | |||

| (16) |

Here, represents the index of iteration over training epochs. Similar as traditional adversarial training method, we also present the process of SIM-Adv as a flow chart in Figure 7.

5.2. Applying Over-Perturbation

In the previous subsection, we present the core design of using Single-Exps to mimic Iter-Exps. At the same time, we also mention the potential disadvantage of this design. In the majority of training epochs, our method uses the intermediate examples. Recall the experiment results in Figure 4, these intermediate examples are less serious than the corresponding Iter-Exps. Based on our experimental results, the classifier directly trained with the SIM examples can defend against adversarial examples but performs worse than that trained with Iter-Exps.

To further mitigate the gap in performance, we now introduce a heuristic modification of the hyper-parameter setting which we call it over-perturbation. For the first time, we define two different hyper-parameter settings in adversarial training methods. We define that the setting is over-perturbation when , otherwise is under-perturbation. This modification is based on our empirical results. As aforementioned, the intermediate examples are less serious than final Iter-Exps. However, the zoom-in view in Figure 4 shows the existence of turning point in the iteration index and its connection to the value of . Before the turning point, the success rate to mislead classifiers by intermediate examples is much lower than that by Iter-Exps but increases exponentially, and vice versa.

By applying over-perturbation, we actually ensure that our method trains the classifier with strong adversarial examples in most of the training epochs. Assume we run 20 epochs of training with two settings (1) and (2) . Under the first setting, the classifier is trained with intermediate examples before the turning point in both 1st to 9th and 11th to 19th epochs. While, under the second setting, the intermediate examples used between and epoch are after the turning point. Overall, the second setting spends more epochs in training the classifier with strong adversarial examples and the trained classifier performs better on defending adversarial examples.

Actually, the over-perturbation setting has been used in previous research works, intentionally or unintentionally. For example in (Madry et al., 2017), the hyper-parameter setting of all Madry-Adv methods are over-perturbation. For curiosity, we try to change the hyper-parameter setting from under to over-perturbation and record the performance. In both MNIST and FMNIST datasets, we fix the to 30 and iterativelly reduce the value of from 30 to 10. In each setting, we measure classifier’s test accuracy on both BIM and Madry examples. These results are presented in Figure 7. To our surprise, we found that the Madry-Adv with under-perturbation may train a classifier performs significantly worse than that trained with over-perturbation (in FMNIST).

For this phenomenon, we believe that it is related to the random initialization in Madry-Adv. In some situations, the random initialization may add unnecessary perturbation to the image and such perturbation could degenerate the performance of trained classifier. Under such situations, the over-perturbation makes it possible to eliminate unnecessary perturbation which enhances the performance of the trained classifier. More detailed analysis of combining over-perturbation and Madry-Adv is out of the scope of this work and we keep it as our future work.

5.3. Searching Space for Adversarial Examples

In order to intuitively show the effectiveness of our proposed method, we prepare a toy example to compare the search space of adversarial examples. Without loss of generality, we assume that the data in this toy example is in a 2 dimensional space. Therefore, we could easily present the search space of adversarial examples. In Figure 9, we choose to present the search space of optimal adversarial examples as well as BIM, FGSM and SIM examples.

As we see from the top-left corner, the search space of optimal adversarial examples is the blue square which represents the entire norm ball. However, this search space corresponds to the exhaustive search which cannot be achieved. Among others, the BIM examples are the best mimic of the optimal adversarial examples. Since it separate the total perturbation into multiple steps and iteratively apply small perturbations, the search space of BIM examples can be represented by the mesh of red dots. The density of dots is related to the size of per step perturbation. Compared with BIM examples, the search space of FGSM examples are significantly limited. Since the total perturbation is applied all at once, the potential locations (red dots) of FGSM examples can only cover corners and some surface of the norm ball, while the entire inner space between origin and perturbation boundary is unreachable.

When we focus on the SIM examples, its search space could be represented by a mesh of red dots with dynamically changing size. With a fixed density of dots, the size of the mesh increases from the smallest one (just around the origin) to the largest one (same size as the entire norm ball) epoch-by-epoch. Although the searching space of SIM examples is smaller than that of BIM examples at the beginning, small value and over-perturbation setting ensure that most of epochs are searching adversarial examples in the entire norm ball. Moreover, the analysis of Iter-Exps shows that a relatively lower density of dots does not significantly affect the searching of adversarial examples. Last but not the least, SIM examples are Single-Exps which consumes less computation overhead compared to the Iter-Exps of BIM.

5.4. Comparing with the Free-Adv

During the preparation of this work, we noticed that there is a related work, denoted as Free-Adv, that is uploaded to the arXiv (Shafahi et al., 2019). This work shares a similar design to our paper in generating adversarial examples. However, our proposed work is different from it and performs better. Until writing this paper, this work is still an arXiv paper and has not been accepted as a conference or journal submission. Therefore, we did not list it as the SOTA Single-Adv method due to the lack of peer review. However, for the completeness of our work, we still reimplement, evaluate and compare it with our proposed method.

During the generation process of adversarial examples, both the Free-Adv and our proposed method are reusing the gradient information. In the Free-Adv, the training images in each mini-batch are Single-Exps. In order to mitigate the issue of training with Single-Exps, the authors try to replay each mini-batch multiple times (denoted as ) and reuse the adversarial examples from previous replay as the inputs. Finally, the total training epoch of the Free-Adv will be decreased by the factor of the total replay iterations. Although the Free-Adv looks similar to our proposed method, there are two differences which can distinguish it from our work.

First of all, the proposed algorithm in the Free-Adv replays the images in the mini-batch immediately which means the classifier is repeatedly trained with the same mini-batch several times. As the authors pointed out in (Shafahi et al., 2019), such replay could cause the “catastrophic forgetting” problem. If the information in the training data is unbalanced, the classifier could become overfitting through being repeatedly trained with a certain category of training data. On the contrary, our proposed method utilizes gradient information across different training epochs. In other words, our method does not change the training order which could be specially designed. Therefore, our method does not have the risk of causing the “catastrophic forgetting” problem (Shafahi et al., 2019).

Secondly, in our work adversarial perturbation is applied in a different way compared with Free-Adv. Based on Algorithm 2 in (Shafahi et al., 2019) and the official implementation on GitHub, it is clear that the Free-Adv utilizes the total perturbation during each replay. From Figure 9, we show that using total perturbation only (e.g. FGSM examples) has limited sampling locations which can only cover the corners and parts of the surface of a ball. Although the Free-Adv repeatedly replays the mini-batch several times, its sampling locations will not change unless most of the pixel values are close to the clipping boundary. Although people may think that it is a problem about the hyper-parameter tuning, we argue that the design of applying adversarial perturbation should be based on the empirical analysis of iterative adversarial examples proposed in Section 4.

In order to support our hypothesis, we repeat the experiment in Section 3 to represent the feature space encoding of the classifier trained with the Free-Adv. The results are presented in Figure 9 and the legends are the same as those in Figure 1. When the FGSM examples are used as adversarial inputs, the classifier could group them together. However, these adversarial inputs and their corresponding targets belong to two separated groups which means the Free-Adv performs worse than the SIM-Adv. If the adversarial inputs are BIM examples, the trained classifier is fooled since the red dots are separated into several small groups. Overall, the presented results in Figure 9 reflect that the Free-Adv has a similar issue as the ATDA and performs even worse than ATDA in MNIST dataset. This issue is also identified during our evaluation of the Free-Adv with the details presented in the next section.

| MNIST | FMNIST | CIFAR10 | |

|---|---|---|---|

| FGSM, BIM and Madry examples | |||

| Single-Adv | Free-Adv and ATDA | ||

| Iter-Adv | BIM-Adv and Madry-Adv | ||

| Network Structure | LeNet | ResNet | |

| Metric | test accuracy and total training time | ||

| Platform | CleverHans | ||

| Vanilla | Free-Adv () | ATDA | SIM(10,20)-Adv | BIM(10,30)-Adv | Madry(10,30)-Adv | ||

| MNIST | Original | 98.84% | 98.72% | 97.55% | 99.00% | 99.01% | 99.01% |

| FGSM | 4.46% | 95.94% | 96.37% | 96.57% | 96.56% | 97.03% | |

| BIM(10,40) | 0.94% | 1.56% | 42.34% | 92.55% | 93.83% | 94.04% | |

| Madry(10,40) | 0.87% | 0.70% | 33.14% | 92.89% | 94.15% | 94.29% | |

| FMNIST | Original | 91.64% | 89.45% | 84.64% | 88.96% | 86.19% | 87.14% |

| FGSM | 6.07% | 85.69% | 83.59% | 79.78% | 78.34% | 75.82% | |

| BIM(10,40) | 5.96% | 7.39% | 25.81% | 65.93% | 69.71% | 64.59% | |

| Madry(10,40) | 4.55% | 3.99% | 23.79% | 66.88% | 70.58% | 69.72% | |

| Vanilla | Free-Adv () | ATDA | SIM(2,10)-Adv | BIM(4,7)-Adv | Madry(4,7)-Adv | ||

| CIFAR10 | Original | 91.74% | 73.96% | 89.11% | 77.21% | 80.83% | 81.08% |

| FGSM | 17.89% | 48.96% | 65.77% | 54.12% | 56.32% | 56.08% | |

| BIM(4,20) | 5.56% | 38.68% | 8.02% | 43.69% | 46.77% | 46.73% | |

| Madry(4,20) | 4.82% | 38.63% | 8.32% | 43.78% | 46.80% | 46.74% |

6. Evaluation

In this section, we first summarize the evaluation settings. Then, we present, analyze and compare the evaluation results of our proposed adversarial training method, SIM-Adv, with other defense methods.

6.1. Evaluation Setting

In this evaluation, we select three different datasets: MNIST, FMNIST and CIFAR10. For the MNIST and FMNIST datasets, we select the LeNet (LeCun et al., 1998) as network structure, while, the ResNet structure (He et al., 2016) is used in the CIFAR10 dataset. Within each dataset, we evaluate the performance (classification accuracy) of the trained classifier against both original and different types of adversarial examples.

As mentioned in Section 2, all the adversarial examples throughout this work are white-box untargeted adversarial examples in the image classification domain. For the method of generating adversarial examples, our selection includes FGSM, BIM and Madry which reflects the performance on both Single-Exps (FGSM examples) and Iter-Exps (BIM and Madry examples). The total perturbation limits are 0.3 in MNIST, 0.2 in FMNIST and in CIFAR10 which is used in the (Madry et al., 2017). To make the evaluation more convincing, we select larger value in both BIM and Madry examples to make them stronger than adversarial examples used during training.

As a baseline, we present the evaluation results of the vanilla classifier, a one with no defense against adversarial examples. To better evaluate our proposed method, we compare not only with Single-Adv methods (ATDA and Free-Adv), but also with Iter-Adv ones (BIM-Adv and Madry-Adv). In the evaluation, we skip adversarially trained models with FGSM or R+FGSM examples. Although FGSM-Adv and R+FGSM-Adv are single-step version of BIM-Adv and Madry-Adv, previous studies show that they are failed to defend Iter-Exps (Kurakin et al., 2017)(Tramèr et al., 2017). In other words, directly setting in BIM-Adv or Madry-Adv cannot train a classifier that makes correct predictions on Iter-Exps. Instead, we present the ATDA and Free-Adv as representatives of Single-Adv.

For these selected adversarial training methods, we follow the original setting of hyper-parameters presented in their work and fine-tune the setting to present the best performance. For our proposed method, we tune its hyper-parameters as follows. Firstly, its can be select as the same or one half of value in corresponding BIM-Adv method. Secondly, the value is selected among 2 to 5 times of the value due to the over-perturbation. Among these combinations, we present its best performance. The specific hyper-parameter values of all methods are presented along with evaluation results.

During the evaluation, we compare different trained classifiers in terms of test accuracy which is defined as follows:

| (17) |

Here, the calculation of test accuracy is based on a single category of inputs (e.g. original examples or FGSM examples). Moreover, we also measure the total training time consumed by training classifiers with different methods. To ensure the quality and reproducibility, adversarial examples used in both training and evaluation are based on the well-known standard python platform, CleverHans (Papernot et al., 2018). A summary of these evaluation settings is also presented in Table 2.

| FGSM | BIM(10,40) | Madry(10,40) | ||

|---|---|---|---|---|

| MNIST | 93.87% | 89.93% | 90.11% | |

| 96.57% | 92.55% | 92.89% | ||

| FMNIST | 74.68% | 61.07% | 61.46% | |

| 79.78% | 65.93% | 66.88% |

6.2. Test Accuracy

We first evaluate the Free-Adv method. As Table 3 shows, the Free-Adv classifier can defend both Single-Exps and Iter-Exps in the CIFAR10 dataset. However, its Iter-Exps accuracy degenerates significantly with MNIST and FMNIST datasets. We think this phenomenon is related to the second issue of the Free-Adv that is mentioned in Section 5. The limited searching space makes the classifier trained with the Free-Adv unable to defend the Iter-Exps. In the CIFAR10 dataset, training with the Free-Adv enhances the defence against Iter-Exps since the per step perturbation is enlarged from to . As a result, the issue of limited searching space is mitigated. Even in the CIFAR10 dataset, the test accuracy of the classifier trained with the Free-Adv is still lower than that of our classifier (SIM-Adv), because SIM-Adv can tune the hyper-parameters (i.e. ) for more appropriate per step perturbations.

As shown in Table 3, the performance of the SOTA Single-Adv (ATDA) is significantly worse than that in (Song et al., 2018). The reason is that the perturbation limit is too small in the original work. For example, in the original evaluation is instead of on CIFAR10 dataset. As a result, Iter-Exps are similar to Single-Exps and even FGSM-Adv achieves over 49% test accuracy on Madry examples. Therefore, we think the original evaluation of ATDA is misleading. Our exclusive experiments in Section 3 point that ATDA actually fails to defend Iter-Exps due to the use of: (1) FGSM examples which are less representative, and (2) domain adaptation loss that is vulnerable to randomness.

Compared with the Free-Adv and the ATDA methods, the evaluation results show that adversarial training with SIM examples (SIM-Adv) achieves better and more stable performance. In all the three datasets, the classifier trained with the SIM-Adv can defend both Single-Exps and Iter-Exps while maintaining a reasonable test accuracy of original examples. More importantly, the SIM-Adv significantly enhances the test accuracy on Iter-Exps over the ATDA and the Free-Adv. To the best of our knowledge, this is the first Single-Adv method which can efficiently defend Iter-Exps under the white-box adversary model. To double check, we also test the SIM-Adv with the same evaluation on the ATDA work in Section 3. The results show that the SIM-Adv can defend both Single-Exps and Iter-Exps under different values of and achieve better performance when is lower. The evaluation results are presented in Table 4.

To make our evaluation results more convincing, we also compare the SIM-Adv with SOTA Iter-Adv methods which include both BIM-Adv and Madry-Adv. The overall results show that the SIM-Adv has a competitive performance as the BIM-Adv and Madry-Adv. Although the classifier trained with the SIM-Adv has a degeneration in terms of test accuracy, we think the less than 4% decrease is a reasonable trade-off given that the SIM-Adv trains the classifier with weak adversarial examples in some of its training epochs to save training overhead. Moreover, we could tune the hyper-parameter of the SIM-Adv to achieve better trade-off between test accuracy and training cost. For example, the SIM-Adv could perform a two-step iteration in each training epoch instead of single-step. We leave the analysis of this for future works.

| MNIST | FMNIST | CIFAR10 | |

|---|---|---|---|

| Free-Adv | 234.75 | 308.5 | 25923.5 |

| ATDA | 319.56 | 422.4 | 33011.6 |

| SIM-Adv | 293.22 | 391.2 | 26692.4 |

| BIM-Adv | 866.76 | 1159.2 | 111372.6 |

6.3. Training Time

To fairly evaluate the total training time, we select four different defense methods: the Free-Adv, the ATDA, the SIM-Adv and the BIM-Adv. We do not present the Madry-Adv since it has similar training time as that of BIM-Adv. All of our results are measured on a Dell Workstation with a NVIDIA RTX-2070 GPU.

The evaluation results in Table 5 clearly show that our SIM-Adv significantly reduces the total training time compared with the BIM-Adv. The SIM-Adv saves more than 60% on both MNIST and FMNIST datasets and more than 75% on CIFAR10 dataset in terms of total training time. Even compared with the ATDA, our SIM-Adv can still save at least 7% of total training time since the domain adaptation loss requires additional computation. When comparing with the Free-Adv, our proposed SIM-Adv consumes more training time since it has to save and restore the gradient information across training epochs.

7. Conclusion

In this work, we first show through thorough empirical analysis that the SOTA Single-Adv method (ATDA) is not well evaluated and fails to defend against Iter-Exps. We also provide thorough empirical analysis of SOTA Iter-Adv defense methods and draw insights that can help enhance future Iter-Adv defense methods. In particular, we show that (1) using larger per step perturbation does not hurt the performance of Iter-Adv, (2) the intermediate examples in preparing Iter-Exps reveal the majority of classifier’s blind spots. Finally, we propose a novel Single-Adv defense method, SIM-Adv, and highlight its advantages over a recent related work, Free-Adv. We show through comparative experiments that SIM-Adv is the first Single-Adv defense method that can efficiently defend against both Single-Exps and Iter-Exps with much lower training overhead compared to the SOTA Iter-Adv counterparts.

References

- (1)

- Athalye et al. (2018) Anish Athalye, Nicholas Carlini, and David Wagner. 2018. Obfuscated gradients give a false sense of security: Circumventing defenses to adversarial examples. arXiv preprint arXiv:1802.00420 (2018).

- Borgwardt et al. (2006) Karsten M Borgwardt, Arthur Gretton, Malte J Rasch, Hans-Peter Kriegel, Bernhard Schölkopf, and Alex J Smola. 2006. Integrating structured biological data by kernel maximum mean discrepancy. Bioinformatics 22, 14 (2006), e49–e57.

- Carlini and Wagner (2017) Nicholas Carlini and David Wagner. 2017. Towards evaluating the robustness of neural networks. In 2017 IEEE Symposium on Security and Privacy (SP). IEEE, 39–57.

- Goodfellow et al. (2015) Ian J Goodfellow, Jonathon Shlens, and Christian Szegedy. 2015. Explaining and harnessing adversarial examples. International Conference on Learning Representations (2015).

- He et al. (2016) Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition. 770–778.

- Kannan et al. (2018) Harini Kannan, Alexey Kurakin, and Ian Goodfellow. 2018. Adversarial Logit Pairing. arXiv preprint arXiv:1803.06373 (2018).

- Kurakin et al. (2016) Alexey Kurakin, Ian Goodfellow, and Samy Bengio. 2016. Adversarial examples in the physical world. arXiv preprint arXiv:1607.02533 (2016).

- Kurakin et al. (2017) Alexey Kurakin, Ian Goodfellow, and Samy Bengio. 2017. Adversarial machine learning at scale. International Conference on Learning Representations (2017).

- LeCun et al. (1998) Yann LeCun, Léon Bottou, Yoshua Bengio, Patrick Haffner, et al. 1998. Gradient-based learning applied to document recognition. Proc. IEEE 86, 11 (1998), 2278–2324.

- Liu et al. (2019) Guanxiong Liu, Issa Khalil, and Abdallah Khreishah. 2019. ZK-GanDef: A GAN based Zero Knowledge Adversarial Training Defense for Neural Networks. arXiv preprint arXiv:1904.08516 (2019).

- Maaten and Hinton (2008) Laurens van der Maaten and Geoffrey Hinton. 2008. Visualizing data using t-SNE. Journal of machine learning research 9, Nov (2008), 2579–2605.

- Madry et al. (2017) Aleksander Madry, Aleksandar Makelov, Ludwig Schmidt, Dimitris Tsipras, and Adrian Vladu. 2017. Towards deep learning models resistant to adversarial attacks. arXiv preprint arXiv:1706.06083 (2017).

- Meng and Chen (2017) Dongyu Meng and Hao Chen. 2017. Magnet: a two-pronged defense against adversarial examples. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security. ACM, 135–147.

- Papernot et al. (2018) Nicolas Papernot, Fartash Faghri, Nicholas Carlini, Ian Goodfellow, Reuben Feinman, Alexey Kurakin, Cihang Xie, Yash Sharma, Tom Brown, Aurko Roy, Alexander Matyasko, Vahid Behzadan, Karen Hambardzumyan, Zhishuai Zhang, Yi-Lin Juang, Zhi Li, Ryan Sheatsley, Abhibhav Garg, Jonathan Uesato, Willi Gierke, Yinpeng Dong, David Berthelot, Paul Hendricks, Jonas Rauber, and Rujun Long. 2018. Technical Report on the CleverHans v2.1.0 Adversarial Examples Library. arXiv preprint arXiv:1610.00768 (2018).

- Papernot et al. (2016) Nicolas Papernot, Patrick McDaniel, Xi Wu, Somesh Jha, and Ananthram Swami. 2016. Distillation as a defense to adversarial perturbations against deep neural networks. In Security and Privacy (SP), 2016 IEEE Symposium on. IEEE, 582–597.

- Samangouei et al. (2018) Pouya Samangouei, Maya Kabkab, and Rama Chellappa. 2018. Defense-GAN: Protecting classifiers against adversarial attacks using generative models. arXiv preprint arXiv:1805.06605 (2018).

- Shafahi et al. (2019) Ali Shafahi, Mahyar Najibi, Amin Ghiasi, Zheng Xu, John Dickerson, Christoph Studer, Larry S. Davis, Gavin Taylor, and Tom Goldstein. 2019. Adversarial Training for Free! arXiv:cs.LG/1904.12843

- Song et al. (2018) Chuanbiao Song, Kun He, Liwei Wang, and John E Hopcroft. 2018. Improving the Generalization of Adversarial Training with Domain Adaptation. arXiv preprint arXiv:1810.00740 (2018).

- Szegedy et al. (2014) Christian Szegedy, Wojciech Zaremba, Ilya Sutskever, Joan Bruna, Dumitru Erhan, Ian Goodfellow, and Rob Fergus. 2014. Intriguing properties of neural networks. International Conference on Learning Representations (2014).

- Tramèr et al. (2017) Florian Tramèr, Alexey Kurakin, Nicolas Papernot, Ian Goodfellow, Dan Boneh, and Patrick McDaniel. 2017. Ensemble adversarial training: Attacks and defenses. arXiv preprint arXiv:1705.07204 (2017).