Unsupervised Recurrent Federated Learning for Edge Popularity Prediction in Privacy-Preserving Mobile Edge Computing Networks

Abstract

Nowadays wireless communication is rapidly reshaping entire industry sectors. In particular, mobile edge computing (MEC) as an enabling technology for industrial Internet of things (IIoT) brings powerful computing/storage infrastructure closer to the mobile terminals and, thereby, significantly lowers the response latency. To reap the benefit of proactive caching at the network edge, precise knowledge on the popularity pattern among the end devices is essential. However, (i) the spatiotemporal variability of content popularity, (ii) the data deficiency in privacy-preserving system, (iii) the costly manual labels in supervised learning as well as (iv) the not independent and identically distributed (non-i.i.d.) user behaviors pose tough challenges to the acquisition and prediction of content popularities. In this article, we propose an unsupervised and privacy-preserving popularity prediction framework for MEC-enabled IIoT to achieve a high popularity prediction accuracy while addressing the challenges. Specifically, the concepts of local and global popularities are introduced and the time-varying popularity of each user is modelled as a model-free Markov chain. On this basis, we derive and validate the essential relationship between the local and global popularities and then propose an unsupervised recurrent federated learning (URFL) algorithm to predict the distributed popularity while achieving privacy preservation and unsupervised training. Moreover, a federated loss-weighted averaging (FedLWA) scheme for the parameter aggregation is further designed to alleviate the problem of non-i.i.d. user behaviors. Simulations indicate that the proposed framework can enhance the prediction accuracy in terms of a reduced root-mean-squared error by up to – compared to other baseline methods, i.e., recommendation algorithms, centralized learning algorithms, and other distributed learning algorithms. Additionally, manual labeling and violation of users’ data privacy are both avoided.

Index Terms:

Industrial Internet of things (IIoT), content popularity, mobile edge computing (MEC), privacy preservation, federated learning.I Introduction

The emerging industrial Internet of things (IIoT), also colloquially known as Industry 4.0, interconnects isolated industrial assets by leveraging the growing ubiquity of wireless communication technologies. By harvesting the rich supply of data from various networked embedded sensors, this new paradigm promises the opportunity to revolutionize production and manufacturing [2, 3]. However, to process such an enormous amount of data and to handle the massive requests generated by ubiquitous wireless devices especially under the stringent requirements of reliability, latency, security and privacy, are incredibly challenging. Mobile edge computing (MEC), which co-locates storage and processing resources at the network edge, represents an effective framework to provision IIoT services [4] and to mitigate the surging traffic burden of the data centers [5, 6]. Dense deployment of edge nodes (ENs), i.e., radio access points or micro base stations, allows proximal and immediate access to the IIoT services. In an information-centric networking, proactive edge caching (EC) is considered a cost-effective approach to address the backhaul bottleneck problem [7] and to reduce the content retrieval/handover latency [8].

The explorations of optimal EC policies in MEC-enabled IIoT networks have been investigated in many previous studies [9, 10, 11, 12, 13, 14]. However, many of relevant works, i.e., [9, 10, 11], assume that the content popularity can be a priori given and remains constant during the services, which is actually inconsistent with the reality. To be more realistic, some works [12, 13, 14] have considered the unknown popularity and explored the end-to-end learning approach to learn the EC policy directly from the request data so as to avoid the popularity prediction. Although circumventing popularity prediction by introducing the end-to end machine learning is indeed a research direction of the EC policy optimization, the popularity reflects the inherent pattern of user interests and directly determines the generation of content requests and thus, plays the most direct and decisive role in the EC policy. Significant improvements of EC performance provided by the popularity prediction have been demonstrated in literatures [15, 16, 17]. Therefore, in this paper, we focus on the investigation of popularity prediction. Generally, content popularity depends on the user interest, which is complicated and spatiotemporal varying and, thus, is unavailable in advance no matter which caching policy is applied [18]. When considering the spatiotemporal varying characteristic of content popularities in the real world, the performance of EC policies is largely determined by the selection mechanism of popular data that is worthwhile caching from a massive deluge of data traffic, which in turn relies on the accuracy of popularity prediction [15, 16, 19].

The potential of the popularity prediction has attracted the attentions of researchers and many progresses have been achieved in relevant works, e.g., [20]–[33]. However, there are still open challenges for the popularity prediction. 1) Spatiotemporal variability: Due to the complex and changeable subjective attributes of end users/devices such as the subjective interests of human and the intrinsic task characteristics of devices, the content popularity is spatiotemporal varying and pose tough challenges to its prediction accuracy. 2) Privacy preservation: In many scenarios such as the healthcare and automotive-related industries, the terminal data is private and needs to be protected from external access. Thus, privacy requirements prevent the data sharing among devices and center servers, which leads to the data deficiency for the data-driven centralized popularity prediction methods. 3) Costly manual labeling: Caused by the unobservability of popularity in the realistic environment, labelling popularities in manual for the popularity prediction is costly and challenging, which brings a technical bottleneck to many prediction approaches based on supervised learning. 4) Not independent and identically distributed (non-i.i.d.) behaviors: Due to the subjectivity of user interests, user behaviors are non-i.i.d., which can violate the assumption in machine learning that datas are independent and identically distributed and sequentially causes many issues, i.e., feature distribution skew, concept drift, quantity skew, etc., for learning-based prediction methods.

In the light of the above observations, the objective of this study is to design a distributed deep learning algorithm to predict the dynamic content popularities in a MEC-enabled IIoT system while preserving the data privacy of end devices and circumventing the costly manual labeling. To explore the insightful relations between the local and global popularities is another important consideration in this paper. To this end, the mathematical derivation and simulation validation of the relations among the popularities in system are provided, and a novel unsupervised recurrent federated learning (URFL) algorithm with a novel federated loss-weighted averaging (FedLWA) parameter aggregation scheme are also proposed. The main technical contributions of this work are summarized as follows.

-

•

We respectively introduce the time-varying local and global popularities in the local user and MEC server sides to make the MEC system more closely aligned with reality. Furthermore, we derive and validate the mathematical relationship between the local and global popularities, and reveal the fundamental difficulty in inferring the global popularity under the privacy-preserving constraint.

-

•

We design the learning node architecture in the FL framework by embedding the AE module, which realizes unsupervised learning without costly manual labeling. To effectively extract the underlying temporal information in the historical requests, long short-term memory (LSTM) cells are adopted in the AE module.

-

•

We propose a novel URFL algorithm on the basis of the FL architecture to perform offline training and online realtime prediction of the distributed popularities. The proposed URFL algorithm breaks the consistency requirement on model inputs between local and global sides in the typical FL framework and realizes the distributed training and prediction without any external access to the historical requests of local users except themselves, so as to better preserve user privacy.

-

•

On the basis of the proposed URFL algorithm, we further design a FedLWA scheme for the parameter aggregation to alleviate the problem of non-i.i.d. user behaviors considered in the investigated scenario and, thereby, further reduce the prediction error of popularities.

The rest of this paper is organized as follow. Section II reviews the related works. The system model is established in Section III. Then, Section IV introduces the problem formulation and the proposed scheme. In Section V, simulation results and discussions are provided. This article is concluded in Section VI.

II Related Works

II-A Popularity Prediction

The complex and varied user interest/need poses enormous challenges for accurately predicting the dynamic popularity. To this end, many different algorithms have been proposed in the literature to predict the dynamic popularity over the recent years. Some inspiring examples include the time-series prediction method [20, 16], the social-driven prediction method [21, 22], and the statistics-based prediction method [23, 24]. Jiang et al. [20] proposed an online content popularity prediction algorithm by exploiting the content features and user preferences, where the user preferences were learned offline from the historically requested information. In [16], an auto-encoder (AE) neural network was combined with the long short-term memory module to predict the popularity by extracting time-series features from the historical requests of users. Nevertheless, the time-sequence prediction approaches in [20, 16] rely heavily on the privacy of users such as historical requests to improve the prediction accuracy, which is intolerable for those privacy-sensitive users and not appropriate for the privacy-preserving systems. Xu et al. [21] explored the dynamically changing and evolving propagation patterns of videos in social media and the content popularity could be forecasted in a timely fashion. In [22], the social relationships among a small number of users were explored to bridge the gap between prediction accuracy and small population. A social-driven propagation dynamics model was proposed therein to improve the popularity prediction accuracy. However, as the hidden features can only be extracted from massive amounts of social information accumulated over a long-term time, the timeliness and privacy concerns of the social-driven prediction methods are questioned. Statistical prediction methods based on regression analysis have also been examined. For instance, Trzcinśki et al. [23] used support vector regression based on Gaussian radial basis functions to predict the online video popularity. Similarly, a Bayesian hierarchical probabilistic model was designed [24] to regress the content popularity in an EC network. While the existing statistical approaches show potentials in achieving accurate and stable prediction, they are still far from practical use. For instance, the selection of probabilistic models in statistics-based methods [23, 24] has critical impacts on the prediction performance, but the selection criteria are unclear and impossible to be a priori given in the real world. Most importantly, they inevitably raise privacy issues due to the fact that statistics-based methods require access to the historical request log data of users for popularity prediction.

II-B Federated Learning

As a matter of fact, private data leakage vulnerabilities in IIoT systems, especially in the healthcare and automotive-related industries, can lead to catastrophic consequences such as endangering user safety and causing severe property loss for data providers [25]. The resultant privacy preservation constraint makes the dynamic popularity prediction in EC even trickier. Recently, the disruptive blockchain technique [26] shows superiority in enhancing data security and privacy preservation due to its anonymity, inherent decentralization, and trust properties. Nevertheless, the blockchain technique is essentially a distributed database of records and it has no interface for user behavior analysis [27]. We argue that the knotty problem of privacy-preserving popularity prediction can be tacked by leveraging the recent advances of distributed deep learning, particularly the federated learning (FL) [28]. FL has emerged as a distributed artificially intelligence (AI) approach, by coordinating multiple devices to perform AI training without sharing raw data for privacy enhancement [29].

FL incorporating deep neural networks (DNNs), which combines the capabilities of DNNs in extracting features from input data and the advantages of FL in distributed training and privacy preservation, and has become one of the main paradigms of FL [30]. Therein, convolutional neural networks (CNNs) and recurrent neural networks (RNNs) are two important types used for the incorporation with FL Literature [31] investigates the image classification problem and proposes a communication-efficient and privacy-preserving distributed machine learning framework based on the FL cooperating with CNNs. The superior classification accuracy shown in [31] demonstrates the strong ability of the FL cooperating with CNNs in image feature extraction while preserving privacy. However, the architecture of CNNs is not appropriate for the feature extraction of sequence data which is rather important to wireless communication systems. RNNs, as an efficient architecture of sequence data processing, cooperating with FL is viewed as a promising framework for privacy-preserving data processing in wireless communication systems. For instance, Liu et al. [32] provide a FL-based gated recurrent unit neural network for traffic flow prediction while providing reliable data privacy preservation. Although FL integrating with DNNs has been widely investigated, many existing works,[31, 32], adopt the supervised learning with costly manual labels which poses significant challenges to their practical applications, especially to practical popularity prediction. The spatiotemporal variability and unobservability of the content popularity lead to the difficulty in manually obtaining the popularity labels. Therefore, we extending FL to an unsupervised paradigm in this paper to address the challenges on the manual labelling of popularities. In this paper, we proposed an unsupervised FL incorporating RNNs to effectively predict popularities from sequences of historical requests without labels while preserving user privacy. Moreover, we further design a parameter aggregation to alleviate the non-i.i.d. problem which is an open challenge in the research field of FL [33].

II-C FL-based Popularity Prediction

The MEC framework enables FL in the wireless communication networks with the supply of abundant and closer computing/caching resources. A comprehensive survey of FL from the perspective of fundamentals, challenges, solutions, and applications in MEC networks can be found in [34]. Therein, FL-based privacy-preserving popularity prediction in MEC networks has been explored in many works, i.e.,[35, 36, 37, 38, 39]. Nevertheless, many challenges still remain to be addressed. In a recent work [35], the center server is prohibited from snooping on users’ private data, while only the local MEC server is permitted to collect and learn from the historical requests of users. This scheme relies on authorization management and is susceptible to unauthorized access provided by the unreliable network operator or gained by malicious cracking. In addition, the deep learning method in [35] requires manual labeling in advance, which unfortunately is costly and infeasible in real implementation. The authors in [36] proposed an FL-based method to realize the privacy-preserving EC. On the basis of literature [36], Cui et al. [37] utilized blockchain to further improve the security of FL implementation. However, the EC policies considered in [36, 37] are both built on the similarities between users and contents, which is calculated by potential features extracted from historical requests of users. The similarity calculated in these two literatures is just a rough estimation of popular contents rather than the actual popularity. Thus, the content popularity which represents the accurate requested probability of each content has not been predicted in [36, 37]. Moreover, the relationship between the client-side popularity and server-side popularity has not been explored in [36, 37]. In [38], the content popularity has been predicted while the popularity relationship between the client side and server side is preliminarily explored. Nonetheless, the explorations of the relationship between client-side and server-side popularities were sketchy and empirical in [38], which was reflected in the thoughtlessness of user request arrival rate as well as the absence of any verification for the given relationship. In this paper, we introduce the concept of local popularity at user side and global popularity at MEC server side respectively, and further derive and validate the mathematical relationship between these two popularity types.

Yu et al. [39] considered the mobility of users and proposed a mobility-aware FL method to predict the popularity while preserving user privacy. Nevertheless, the temporal variability of the popularity caused by the time-varying user interests has not been explored in [39]. Furthermore, in [39], sampling from the real popularity distribution at the MEC server side is required to generate the input of the prediction model during the local training phase. However, the real popularity distribution at the MEC server side depends on the subjective interests of users within the entire service area and thus is extremly hard to obtain a priori. In our study, we consider the local and global popularities both time-varying and unavailable so as to be more closely aligned with reality. In addition, we can further observe from [36, 37, 38, 39] that local users are supposed to share or upload their request history when making online predictions, so as to generate the input of the prediction model. Indeed, this kind of sharing or upload violates the privacy-preserving requirement. The fundamental reason why these works need to collect users’ request history for the global prediction can be attributed to the consistency requirement in the typical FL framework, which demands that the model structures and model inputs should be exactly the same between the local and global sides [33]. To mitigate the risk of privacy leakage caused by the collection of user request history, we design the input structure of the local and global models to break the consistency requirement on model inputs, and then realize the distributed training and prediction without any external access to user’s historical requests except itself.

III SYSTEM MODEL

This section provides brief descriptions of the system model and the underlying concepts used in this work. A hierarchical wireless network of shared caches is considered for smart industry applications, and the network is supposed to be able to provide a secure and trustworthy content service. As illustrated in Fig. 1, a cloud server is deployed by the service providers to store contents for the consumers, i.e., the mobile end-users and/or IIoTs devices. One MEC server, which can be the general-purpose computer or server, is placed on the edge node between the cloud and the end sides. The edge node can directly provide the content services supported by the caching capability of the MEC server. Moreover, the prediction models for popularity prediction can be placed on the user equipments (UEs) and the edge node benefited from the rapidly evolving computing capabilities of the UEs and the MEC server. In the considered scenario, we assume that a total of privacy-sensitive users are directly connected to an edge node within a certain small cell, where the edge node must obey some privacy-preserving mechanism to preserve user privacy. 111Note that the security/privacy analysis by defining threat models or hacker attacks against the privacy-preserving mechanism is a meaningful but challenging research direction. (c.f., e.g., [41, 42, 40, 43]). However, this paper focuses on popularity prediction in a privacy-preserving wireless MEC network. To avoid further complicating the problem under investigation, the backdoor problems are left for our future work.

At the beginning of a time slot, a user will send a content request to the edge node if a certain content file cannot be found in its local cache. Though much closer to the users, the edge nodes have limited caching and computing capabilities compared to the cloud server. As such, the edge nodes can only store some selective — usually most popular contents. Whenever the requested contents are not located in the edge nodes, the request will be further forwarded to the cloud via the backhaul link. Additionally, definitions of key notations used in this paper are given in Table I for ease of reading.

III-A Service Process

We assume that the content library contains files, which is denoted as a set , and the cloud have a complete copy of all the files. Limited by the cache capacity, the MEC server can only cache files and an arbitrary UE- can cache files. Generally, . We assume that a content file is requested by UE- at time slot , where we have when UE- does not request any contents. Then, the request will be uploaded to the MEC server if cannot be found in UE-, which is represented as , where is the files set cached in UE- at time slot . Conversely, if or is true, UE- will not upload this request information to the MEC server. MEC server will retrieve content for the received requests in its current cache . If is satisfied, the MEC server will further request the absent files from the cloud. Finally, the requested files of each user will be sent back from the MEC server. In addition, it is worth to note that the users will not upload any request information in time slot unless .

| Notation | Description |

|---|---|

| Index of time slot. | |

| Set of UE indexs. | |

| Set of all contents. | |

| Content request generated by UE- at time . | |

| Local popularity on UE- at time . | |

| Probability that content is requested by UE- at time . | |

| Global popularity on the MEC server at time . | |

| Probability that content is requested within the service area at time . | |

| Prediction of the local popularity on UE- at time . | |

| Prediction of the global popularity on MEC server at time . | |

| Probability distribution parameter of at time . | |

| Content request arrival rate of UE- at time . | |

| Parameters set that evolves over time. | |

| Transition probability matrix of . | |

| Extractor of UE- to extract its historical request information. | |

| Request information received by the MEC server at time . | |

| Observation window length of the extractor. | |

| Local popularity prediction model inside UE-. | |

| Global popularity prediction model at the MEC server side. | |

| , | Parameters set of global and local popularity prediction model respectively. |

| Output of the encoding function in the -th layer at time | |

| Output of the decoding function in the -th layer at time | |

| , | Hidden layer number of the encoder and decoder, respectively. |

| Historical request data of UE-. | |

| Number of local training at every communication round. | |

| Training loss function of the local popularity prediction model in UE-. | |

| Stack of parameter sets uploaded by all users | |

| Updated parameters set aggregated from . | |

| , | Parameters set of encoder and decoder on UE-, respectively. |

| Average training loss of UE- at each communication round. | |

| Aggregation weight for the model parameters of UE- in the FedLWA scheme. |

III-B Local and Global Popularity

As mentioned above, the content popularities on the local user side and the MEC server side are respectively termed local popularity and global popularity. Regarding the local popularity, we assume that the content popularity of each user in each time slot follows a Zipf distribution which has been wildly adopted in related works [44, 45, 46]. Moreover, the time-varying nature of the popularity is taken into account in this paper. For an arbitrary UE-, the probability of demanding the -th file at time slot is

| (1) |

where the files have been assigned with a descending ordering of popularity in each time slot . The distribution parameter evolves over time. As such, the content popularity of UE- at can be denoted as . It should be noticed that the Zipf distribution is assumed for the convenience of discussion, and generalization to any other probability distribution model is straightforward.

As depicted in Fig. 2, for user , we model the dynamics of using a model-free Markov chain with the states recorded in the set . Consequently, the dynamics of can be defined as

| (2) |

where denotes the transition probability of transits to . It is worth mentioning that, neither the parameter sets nor the transition probabilities are unknown to the model-free Markov chain due to the diversity and complexity of users’ subjective interests [16]. The difference among the users are captured by the set as well as the potential state transition probabilities. Besides, user behaviors are assumed to be non-i.i.d. in the system model.

At the MEC server side, the global popularity at time slot can be represented as , where is the probability that content is requested within the service area. Apparently, the global popularity depends on all the local popularities within the service area, and the MEC server as a service provider can significantly improve its caching efficiency under the guidance of accurate knowledge of the global popularity. However, as will be elaborated in Section IV, the prediction of the global popularity is much more complicated than that of the local popularity due to the different behavior patterns of different users as well as the privacy-preserving constraint.

III-C Content Request Model

At time slot , a certain UE- requests a file , where the probability of follows its corresponding current request arrival rate, denoted as . If , UE- make a request, which satisfies the present probability distribution denoted as . According to the above description, the content request model of an arbitrary UE- at time slot can be expressed as

| (3) |

where and . Similar to , parameter also reflects the individual characteristics of user and should be protected from being accessed by others.

III-D Privacy-preserving Mechanism

In the real world, privacy-sensitive users usually concern about the leakage of their private data such as location information, historical contents/services request, personal bank or social account information. In this paper, we readily observed from the service process that the private information of users involved in the problem under investigation is mainly the historical contents request data, and the historical request database of each user is stored only in their own UEs and is inaccessible to outsiders. Moreover, as stated in some data privacy legislations such as the European Commission’s General Data Protection Regulation (GDPR) [47], users have the right to require the responsible party to delete the individual data records about them. Thus, to respond with the implementation of GDPR, the burn-after-read principle as the privacy-preserving mechanism is implemented in the MEC servers, i.e., the request information from users must be immediately deleted from the memory of the MEC server once the contents have been scheduled. The MEC servers are not allowed to hold any historical information of any users.

IV URFL FOR EDGE POPULARITY PREDICTION

IV-A Problem Formulation

In the MEC-based IIoT system under investigation, both the user and the server sides participate in the popularity prediction process. Given a popularity at time slot , UE- generate a content request denoted as , which is simplified as in the sequel for the convenience of discussion. Based on the request history saved in its local memory, the local popularity of user can be predicted by

| (4) |

where is a predictive model inside UE- and denotes the collection of trainable parameters therein. is a extractor of UE- to extract its historical request information of continuous times before time . is the observation window length of the extractor. On account of the structural features of AE, we can divide into encoder and decoder parameters sets, denoted as . The implementation of will be detailed in Section IV-B.

At time , to minimize the distributed popularity prediction errors of all the users at future time , the mean-square error (MSE) metric is adopted and the underlying optimization problem of arbitrary UE- can be formulated as

| (5a) | ||||

| (5b) | ||||

| (5c) | ||||

| (5d) | ||||

| (5e) | ||||

where and are abbreviated as and , respectively. and respectively represent the and norm. Constraint (5b) ensures the observation window length of the extractor at time not exceed . and depend on the subject interests of user at time . is the component of and extracted from the request database . Note that , , and are all time-varying and unknown, which poses significant challenges to solve the problem (5).

The MEC server makes the global prediction in a completely different way since there is no historical information of any users under the privacy-preserving constraint. The only data that the MEC server can provisionally acquire is at time slot , which will be erased from the server before the next time slot. To further evaluate the difficulty in predicting the global popularity in such cases, we first give the following theorem which reveals the mathematical relationship between the local and global popularities.

Theorem 1

Given the local popularity and the request-arrival rate of each user at time slot . The global popularity at the MEC server side is:

| (6) |

Proof:

The proof is presented in Appendix A ∎

The simulation validation of Theorem 1 can be found in Appendix B. According to Theorem 1, we find that cannot be obtained without any a priori knowledge of and . Nonetheless, the local popularity and the request-arrival rate of each user both dynamically varies over time and also cannot be acquired in the privacy-preserving system. To address this challenge, a URFL algorithm is proposed in this work to predict the global popularity without violating UEs’ data privacy. By employing the URFL algorithm, the global popularity in the next time slot is predicted by exploiting the newly arrived request at time slot , i.e.,

| (7) |

where represents the predictive function at the MEC server side in the proposed global model, and is the parameters set. The implementation of and under the privacy-preserving mechanism will be detailed in Section IV-B.

At the MEC server side, the underlying optimization problem at time can be formulated as

| (8a) | ||||

| (8b) | ||||

| (8c) | ||||

| (8d) | ||||

| (8e) | ||||

Similar with the problem , the problem (8) also evolves over time and the optimal solution should be valid at any time . However, is unknown while are unavailable to the MEC server due to the subjectively of user interests as well as the privacy-preserving requirements. Thus, it is also quite challenging to solve problem (8).

IV-B Methodology

This subsection presents the design of the URFL framework and its application to the privacy-preserving edge popularity prediction. The proposed architecture of URFL is illustrated in Fig. 3. Concretely, in individual UE, a moderate-scale recurrent neural network (RNN) is prepared and trained on the local request history alone. The RNN in each UE is designed as an AE, with each neuron being an LSTM cell to capture the contextual information hidden in the input data [48, 49]. Since the MSE loss of an AE is directly obtained by comparing the input and output, the troublesome training data labeling is circumvented. Then, the collaborative prediction is performed under an FL framework, where the distributed UEs periodically exchange their diverse model parameters, rather than the raw private data, with the MEC server to collectively train a global model. Note that the MEC server only needs to deploy an encoder module. We next elaborate on each key element in the designed framework.

IV-B1 LSTM-AE Hierarchy

RNNs perform hierarchical processing on complicated temporal tasks and, as such, it is capable of naturally capturing the underlying temporal dependencies in time series. In this work, we use a special type of RNN building block, i.e., LSTM cells to explore the evolving short-term dependencies within the long historical request sequences and [50] and, in turn, predict the edge popularity more effectively. LSTM-based RNNs address the issue of vanishing gradients by integrating gating functions into their state dynamics [51]. As mentioned above, each UE has a built-in pair of LSTM encoder-decoder, whose hierarchical structure is given in Fig. 4. Each neuron in the hierarchy is an LSTM cell, and each subsequent layer receives the hidden state of the previous layer as input time series. The auto-encoder architecture is created by symmetrically stacking the LSTM layers at the input and output sides, which respectively constitute the encoder and decoder. The iterative formula of message passing in one LSTM cell is as follows:

| (9a) | |||

| (9b) | |||

| (9c) | |||

| (9d) | |||

| (9e) | |||

| (9f) |

where , , and respectively denote the input, output, and the memory state of the LSTM cell at time slot . is the control gate, which is typically a Sigmoid function. represents the output of the forgetting gate. and denote the output of the input and output gates, respectively. evaluates the dependencies of the weight parameters and denotes the offset parameter. By feeding the historical requests to the RNN network composed of these LSTM layers, we capture the features hidden in the input sequences.

Then, the output of the encoding function in the -th () layer at time can be expressed as

| (10) |

where and respectively denotes the weight and implicit bias parameters of layer of the encoder. is the output of the -th encoder layer at time and is the output of the encoder layer at time . is the memory state of the -th encoder layer at time . Specifically, if , the corresponding represents the input sequence. In the decoding process, the output of the encoder is fed as the input sequence to the decoder network. The output of the decoding function for at time is

| (11) |

where and is the output of the decoder of layer at time and , respectively. represents the output of the decoder layer at time . Likewise, and represents the weight parameters and bias parameters of the -th layer in decoder, respectively. is the memory state of the -th decoder layer at time . Moreover, the output of the encoder at time also represents the predicted vectors of the local/global popularities at time , and will gradually approximate the true popularities along as the training.

IV-B2 Distributed Training via FL

The historical request data is denoted as where is the historical request data of UE- without labels. To address the privacy concern, the historical request data of each user is not exposed to others. During the offline training phase of URFL, UE- randomly extract samples from using the extractor, denoted as , where is the random sample points at time slot . Then, the training data is fed to the local AE in a mini-batch to train the network. The mini-batch average of the MSE loss function is adopted to yield a more stable convergence. That is, for any mini-batch set , we have

| (12) |

where is the output of the AE in UE-. Note that, the above offline local training process is implemented in parallel in each UE.

Unlike the training process in the local UEs, the MEC server has no data to train its global prediction model under the constraint of the privacy-preserving mechanism stated in Section III-D. As such, we adopt the FL framework here to achieve the acquisition of local and global prediction models while preserving user privacy by aggregating parameters instead of historical requests information. Concretely, by the end of every local self-training of the model , UE- uploads its latest model parameters to the MEC server. Let denote the stack of parameter sets uploaded by all the users. In the MEC server, the updated parameters set is aggregated from as

| (13) |

where reflects the impact of each user’s parameters in the aggregation. In this work, we assume that there is no priority among users. Hence, we reasonably set and, based on (13), we update the parameters of by

| (14) |

Once the weight aggregation is complete, the new parameters will be broadcasted to all network users. For , UE- will immediately update its parameters by . Then, UE- will train its local network again for another iterations. Upon completion, UE- will continue to upload the latest parameters to the MEC server. Then, a new loop is launched, and so forth ad infinitum. The loop described above can also be named as a communication round in FL. The overall training process of the URFL algorithm is summarized in Algorithm 1. is the total communication rounds between the local side and global side during the whole training. We also draw a schematic diagram of the parameters passing mechanism of URFL in Fig. 5. It should be noted that Fig. 5 is given here to more clearly illustrate the parameter passing flow in the distributed FL framework during the training of the URFL algorithm, and the inputs and outputs of the neural networks are shown in Fig. 3.

IV-B3 FedLWA for parameter aggregation

Because of the non-i.i.d. user behaviors considered in this paper, the convergence of the LSTM-AE model on each local UE is inconsistent at the end of each communication round. Due to the fact that the convergence of one model can be reflected by its training loss, we therefore design a FedLWA parameter aggregation scheme on the basis of the proposed URFL algorithm to reduce the impacts of non-i.i.d. user behaviors. The URFL algorithm that applies the FedLWA-based parameter aggregation scheme is named FedLWA-based URFL algorithm. Concretely, the parameters passing during the training of the FedLWA-based URFL algorithm is basically identical to that of the URFL algorithm, except that each local user needs to additionally upload its average training loss by the end of each local training. can be expressed as:

| (15) |

where calculated by (12) is the loss value of user at the -th training epoch in this communication round. We can observer from (12) (15) that contains no privacy information of user . Thus, the upload of will not cause the leakage of user privacy.

At the MEC server side, weight for parameter aggregation in the FedLWA scheme is dependent on the convergence of each LSTM-AE model at current communication round, which is the normalized loss denoted as . Then, the FedLWA-based parameter aggregation can be written as:

| (16) |

The parameters update of can be rewritten as :

| (17) |

The parameter aggregation process of the FedLWA scheme is summarized in Algorithm 2

IV-B4 Distributed Online Prediction

During the online service phase, the edge popularity in the system at each time slot can be instantly predicted by evaluating (4) and (7). Concretely, as shown in Fig. 3, the extractor of UE- firstly extracts the historical request information at time . Then, will be fed into the LSTM-AE network of UE-, and the output of the encoder is the popularity prediction of user at time . At the MEC server side, the received request information from local users will be fed into the trained global prediction model whose output is the prediction of global popularity at time . Moreover, to preserve users’ privacy, the request information will be erased as soon as it is fed to the global prediction model. The overall online prediction process of the URFL algorithm is summarized in Algorithm 3.

IV-B5 Time Complexity Analysis

Finally, we investigate the time complexity of the proposed URFL algorithm from the perspective of local side and global side. Note that each UE holds local model with the same structure and executes the algorithm in parallel, thus the time complexity of the algorithm at the local side can be analysed from the local model on a single UE. Moreover, we can observe from Algorithm 1 and Algorithm 3 that the whole structure of the LSTM-AE model participates in the training while only the encoder component of the LSTM-AE model participates in the online prediction. As such, the time complexity of the URFL algorithm at the local side during the training and prediction can be respectively expressed as and , where and respectively denote the representation dimension of -th encoder layer and -th decoder layer in the LSTM-AE architecture. The time complexity of the URFL algorithm at the global side during the training comes from the parameter aggregation by (14), and thus can be denoted by . During the online prediction, the time complexity of the URFL algorithm at the global side mainly arises from the process of global popularity prediction and can be denoted by . Moreover, we can find from Algorithm 2 that the time complexity arises from the FedLWA parameter aggregation scheme is negligible compared with that of the URFL algorithm.

V NUMERICAL SIMULATIONS

In this section, we showcase the superior performance of the proposed URFL algorithm in predicting the edge popularity. We run our numerical simulations on a workstation equipped with an Intel Xeon Gold 5118 CPU with 12 cores running at and of RAM memory. The models and networks are trained and tested in the TensorFlow environment. In the simulation, the window length of the extractor is . We assume that all the UEs have equal cache capacity denoted as .

In the trials, the parameter set and the transition probability matrix of each UE- are generated randomly, where represents the transition probability from to . In particularly, the entries of can be arbitrary values, as it has no impact on the algorithm performance. As such, the statistical properties of the user behaviors are non-i.i.d. Under these parameters, users record their requests over a period of time. Then, each UE- randomly extracts samples from its own request record as the training dataset . In addition, we evaluate the performance of the proposed URFL algorithm on small groups of users, i.e., , which is also adopted in [53, 54]. Adam optimizer [55] is used to train the parameters with an identical adaptive learning rate starting from . It should be emphasized that and are merely assigned to establish a similar-to-real simulation environment, neither of them are known to the MEC server and the UE- itself. For the architecture of the deep encoder in the URFL, we use three hidden layers with , , LSTM neurons as well as a dropout rate of in each hidden layer to avoid overfitting. A mirror-symmetrical structure of the encoder is implemented in the decoder . Detailed simulation parameters are listed in Table II.

| Parameter | Value | ||

|---|---|---|---|

| Total content number | |||

|

1 | ||

| Encoder hidden layer number | |||

| Decoder hidden layer number | |||

|

|||

|

|||

| Learning rate for training | |||

| Optimizer for training | Adam [55] | ||

| Dropout rate | |||

|

Randomly generated | ||

|

Randomly generated | ||

|

Randomly generated |

V-A Baselines

In the numerical simulations, we compare the prediction performance of the proposed URFL method with the baseline methods. All the reference methods are simulated in a -user case. In addition, epochs are run for all the learning methods.

V-A1 Singular Value Decomposition (SVD)

A traditional and widely-adopted method in recommendation systems, i.e., SVD [56] is included in the comparison. The SVD method is centrally deployed on the MEC server without any privacy-preserving constraints. On the other hand, the privacy information of all the users can be accessed by the MEC server for training this baseline.

V-A2 Deep Recurrent AE Learning (DRAEL)

We also consider an unsupervised learning method [57], DRAEL, to evaluate the impacts of the FL modules in the proposed framework. For fairness, the AE architecture of the DRAEL is identical to that of the proposed URFL. Nevertheless, due to the removal of the FL framework in DRAEL, centralized training by feeding the private historical requests information of users is needed to train this method on the MEC server.

V-A3 Single Dense AE Federated Learning (SDAEFL) and Deep Dense AE Federated Learning (DDAEFL)

We also consider other two distributed learning methods, SDAEFL and DDAEFL, to demonstrate the gains of the LSTM cells in the proposed URFL. More specifically, the FL framework is still used in these two methods to protect data privacy. However, the neural networks of the AEs in these two baseline methods are single dense neural networks and deep dense neural networks, respectively. Moreover, the encoder and decoder of the SDAEFL method are both single dense neural networks, and the number of hidden layers of AE in DDAEFL is equal to that of the proposed URFL.

V-A4 Self-train

To evaluate the performance of the proposed URFL method at the local user side, we set a self-train method as another baseline method for comparison. This baseline method is deployed on the local UEs and has the same AE architecture as the proposed URFL method. Then, each UE trains its own local prediction model in parallel without any communications with other UEs or the MEC server.

| Type | Methods |

|

|

|

|

||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Centralized | SVD | No | |||||||||||

| DRAEL | No | ||||||||||||

| Distributed | SDAEFL | Yes | |||||||||||

| DDAEFL | Yes | ||||||||||||

| URFL (Proposed) | 0.185 | Yes |

-

1

The data traffic pre time slot of each method is a theoretical value under the assumption that .

V-B Results and Discussions

The prediction error measured by RMSE [35] of the proposed algorithm in predicting the local popularity is shown in Fig. 6, where the prediction loss of each local model is sampled after every self-training iterations. It can be observed from Fig. 6(a) and (b) that, for each user, the prediction accuracy of the local popularity and the AE loss can both stably converge to a satisfactory level with the proposed approach. In particular, we identify many regular jitters on the RMSE curves as the learning epoch increases in Fig. 6(a). The reason is that all the local AEs are forced to aggregate their parameters based on FL after every rounds of self-training. As such, the RMSE loss of each local model instantaneously increases after the parametric aggregation of multiple UEs, and it then gradually decays to a lower level within the next self-training iterations. We also readily observe from Figs. 6(a) and (b) that, there are slight differences in the convergence losses of local popularity prediction for different users, which is reasonable since all the users are non-i.i.d. and both the set and the probability of each UE- are randomly generated. For the complicated and , the challenge of prediction is tougher. In fact, this difference in prediction accuracy also indicates a non-i.i.d. open problem in FL [33], which will be an important research focus in our future works.

We further take UserID 7 as an instance and examine the performance comparison of the proposed algorithm with the self-training method, which is commonly adopted in deep learning related works. In such a method, the agents train their own local models individually without any interactions with others. As shown in Fig. 7, the proposed algorithm outperforms the self-training method from an individual user perspective in terms of AE loss and prediction loss. This result suggests that proper interactions with other participants can improve the prediction accuracy, which is congenial with common sense.

We take a step forward and study the prediction performance from the perspective of global popularity. Fig. 8 implies that the proposed method is superior to all the other baseline methods in terms of the prediction accuracy. In the -UE case, the proposed method yields an RMSE of around lower than those of SVD, SDAEFL, and DDAEFL, and lower than that of DRAEL. The remarkable gain suggests that the proposed method can significantly improve the prediction accuracy while grantee the data privacy of users since it only aggregates the model parameters of each user rather than the private raw data. We argue that the aggregation process of the global prediction model pushes the MEC server to deeply and accurately learn the underlying features from all the UEs towards its coverage. In contrast, the baseline methods not only need to be supplied by large amounts of private data but also are incompetent to exact features from a mass of historical requests kneaded together in time and space. In addition, we observe from Fig. 8 that the performance of SDAEFL is inferior to SVD and DDAEFL, which implies that the single dense neural network cannot effectively predict popularity. Furthermore, the gain of the DRAEL to the DDAEFL and the gain of the proposal to the DRAEL confirm that the recurrent mode realized by LSTM and the parameters aggregation realized by FL can both contribute to the reduction of prediction error considerably. We also infer from the comparison between the proposed algorithm and DRAEL in Fig. 8 that, the prediction variance can be significantly reduced using the proposal. It is interesting to note, increasing the number of UEs does not continuously improve the prediction accuracy of the proposed algorithm. As can be seen from Fig. 8, the best RMSE performance is achieved when the number of UEs rather than or . This is because aggregation of insufficient local models can be unrepresentative of the global characteristics, while an overly large sample size will result in information redundancy.

The performance comparisons between the proposed method and all the baseline algorithms versus the number of total content files are presented in Fig. 9. It can be seen from Fig. 9 that the proposed URFL algorithm significantly outperforms all the baselines regardless of the value of . The statistics of communication cost represented by the data traffic in the MEC network are listed in Table III, where . Though we can observe from Table III that the proposed URFL algorithm generates more data traffic for its offline training than the other baseline methods, the offline training phase is often implemented when the UE is idle, i.e., dormant status, charging status, etc. Therefore, it is acceptable for the proposed method to reduce the error of popularity prediction and preserve the privacy of users by sacrificing the tolerable increase of data traffic in the idle status of the MEC network. Moreover, under the assumption that , the theoretical data traffic of all the considered methods per time slot are equal, but the proposed method can achieve the lowest prediction error. When the assumption is invalid in the real environment, the data traffic of the centralized methods still remains . By contrast, the data traffic of the distributed methods will be less than , which actually depends on the , prediction errors of local/global popularities, and the cache hit rates of each cache entities.

In Fig. 10, we provide the performance evaluations of the proposed URFL algorithm on different number of samples. We can observe from Fig. 10(a) and (b) that the proposed method can achieve superior performance in both local and global popularity predictions when the sample size is more sufficient. The reason is that the prediction model generally extracts more complete features from a dataset with sufficient samples, thus achieves the lower prediction error. With the sample size increasing to a certain extent, the further performance gains will not be produced due to the feature saturation. Moreover, we also evaluate the performance of the proposed URFL algorithm on different , as shown in Fig. 11. Fig. 11(a) and (b) imply that the prediction error of the local popularity decreases with increasing . This is because the local prediction errors are valued under the same communication rounds. As such, the local prediction model will be trained with more epochs as increases, and thus converges to a better performance level. Straightforward, if we want to achieve the the same local prediction performance under different , we should continue increasing the number of communication rounds in the case of small , but which also means higher communication costs. Fig. 11 (c) illustrates that the prediction error of the global popularity will first decrease and then increase as increases. This performance trend is due to the fact that large local-training epochs pre round will bring a large bias between each local model, while few will lead to an under-optimization of each local model on their local dataset pre round.

In Fig. 13, we provide the performance evaluation of the proposed FedLWA parameter aggregation scheme. It can be observered from Fig.13(a) that, for each user, the training loss of the LSTM-AE model can converge to a lower level with the proposed FedLWA-based URFL algorithm, which demonstrates that the FedLWA parameter aggregation scheme can bring additional performance gains to the training. The reason is that the proposed FedLWA scheme can adaptively weigh the contribution of each local parameter to the global parameter on the basis of the convergence of local models at the end of each communication round, so as to obtain better aggregated parameters. Consequently, as shown in Fig. 13(b), the FedLWA-based URFL approach is superior to the URFL approach in terms of the prediction errors at both local user and MEC server sides. Hence, the non-i.i.d. problems in the investigated scenario could be alleviated by applying the proposed FedLWA parameter aggregation scheme.

The performance comparisons between the proposed methods and the baseline methods on large groups of users, i.e. , are presented in Fig. 12. Note that random sampling aggregation is a common approach to FL for large-scale client scenarios [54]. Herein, we randomly select local clients for each parameter aggregation in the training phase. It can be observed from Fig. 12 that, in large-scale user scenarios, the proposed URFL algorithm still outperform the baselines regardless of the value of . Moreover, Fig. 12 showcases the superiority of the proposed FedLWA-based URFL algorithm, which demonstrates that the proposed FedLWA parameter aggregation scheme is also applicable to large-scale user scenarios and can bring additional performance gains regardless of the number of users.

In closing, we test the online prediction performance of the proposed URFL algorithm in the local UEs and the MEC server in consecutive time slots. In the simulations, each UE and the MEC server are deployed with their own prediction models which have entered the convergent state using the proposed URFL algorithm. During the service delivery, the trained prediction models predict the future local and global popularities in real-time to assist the equipments to effectively update caches. In each time slot for arbitrary , we compute the respective absolute errors between the predicted local popularity and the true local popularity of the request probability associated with each content file, denoted as . The real-time prediction errors of each UE are visualized in Figs. 14(a) to (e). Likewise, we evaluate the real-time prediction errors of the global popularity by and show the result in Fig. 14(f). We readily observe from Fig. 14 that, for both local and global popularities, the real-time prediction error for each content file is largely lower than and, in most of the cases, it is under . This result shows that the convergent URFL algorithm can significantly reduce the prediction error of the local/global popularities during the online service, and this reconfirms the effectiveness of the proposed algorithm.

| Distributions | User Parameters | ||||||||||||||||||

| UserID 1 | UserID 2 | UserID 3 | UserID 4 | UserID 5 | UserID 6 | ||||||||||||||

| 0.74 | 0.08 | 0.91 | 2.14 | 0.58 | 1.56 | 0.76 | 1.02 | 0.74 | 0.11 | 0.63 | 0.15 | ||||||||

| 0.51 | 8 | 0.60 | 27 | 0.68 | 24 | 0.96 | 29 | 0.98 | 13 | 0.79 | 11 | ||||||||

| 0.94 | 0.44 | 0.91 | 0.11 | 0.91 | 0.50 | 0.68 | 0.70 | 0.76 | 0.52 | 0.70 | 0.51 | ||||||||

| 0.88 | 6 | 2.30 | 0.82 | 31 | 3.63 | 0.97 | 17 | 2.45 | 0.87 | 28 | 2.96 | 0.68 | 15 | 3.27 | 0.94 | 9 | 5.37 | ||

VI CONCLUSION AND FUTURE WORK

In this article, we investigated the problem of edge popularity prediction in a MEC-enabled privacy-sensitive IIoT system. The concepts of local popularity and global popularities are introduced and we reformulate the underlying distributed history-inaccessible time series forecasting problem as a label-absent distributed learning problem. The dynamic temporal dependencies within the long sequence are explored using LSTM cells and the training data labeling is circumvented by incorporating the AE structure. To realize collaborative prediction, the FL framework is adopted to effectively exchange the diverse model parameters of each network participant with a data-security guarantee. The above modules and designs collectively constitute a novel URFL algorithm, which achieves superior performance in terms of RMSE prediction error and AE loss while avoids privacy disclosure. Our future work will concentrate on the popularity prediction-assisted proactive caching, as well as more complicated scenarios such as the heterogeneous multiple MEC nodes and non-i.i.d. user behaviors.

Appendix A PROOF OF THEOREM 1

Suppose that the occurrence of events and are respectively denoted by and . According to the service process described in Section III-A, we readily have , , and the statuses of different users are mutually independent. From the perspective of the MEC server, all the local users can be treated as a whole. As such, if we let denote the possible request received at time slot , we obtain the following derivation:

| (18) |

In the light of the above result, the global popularity can be computed as

| (19) |

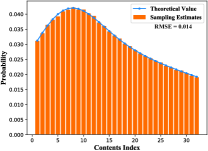

Appendix B Simulation Validation OF THEOREM 1

Herein, we provide a statistical experiment to compare the gap between the sampling estimate and the theoretical value so as to further validate the Theorem 1, where the sampling estimate of is counted from the actual samples in the system and the theoretical value of is computed using Theorem 1.

As for the sampling estimate, we set up 6 users and respectively record their requests over continuous 100000 time slots, where the parameter set of each user are randomly generated and remain constant during this period. Besides, the number of total contents is set to 32. Then, we count the number of times that each content has been requested according to the recorded requests data. Finally, according to Borel’s law of large numbers [44], we observe the requested frequency of each content and acquire the sampling estimate of by approximating from these frequencies. On the other hand, the theoretical value of can be directly calculated by Theorem 1 under this given scenario. Moreover, in the experiment, we consider the case that the local popularity follows four different probability distributions respectively, i.e., , , , . , , , respectively represent the distribution parameter of user under these four probability distributions. Specifically, the parameter settings in this statistical experiment are listed in Table IV. From Fig. 15, we can observe that the gap between the sampling estimate and the theoretical value always stays negligible under different distributions, which validates the Theorem 1 intuitively. Besides, as shown in Fig. 15, the gap is also quantified by the RMSE metric to further validates the Theorem 1.

References

- [1] C. Zheng, S. Liu, Y. Huang, and T. Q. S. Quek, “Privacy-preserving federated reinforcement learning for popularity-assisted edge caching,” in Proc. IEEE Global Commun. Conf. (GLOBECOM’21): Mach. Learn. Commun. Symp., Madrid, Spain, Dec. 2021, pp. 1–6.

- [2] H. Wu, X. Lyu, and H. Tian, “Online optimization of wireless powered mobile-edge computing for heterogeneous industrial internet of things,” IEEE Internet Things J., vol. 6, no. 6, pp. 9880–9892, Dec. 2019.

- [3] B. Yang, X. Cao, X. Li, et al., “Mobile-edge-computing-based hierarchical machine learning tasks distribution for IIoT,” IEEE Internet Things J., vol. 7, no. 3, pp. 2169–2180, Mar. 2020.

- [4] E. Sisinni, A. Saifullah, S. Han, et al., “Industrial internet of things: Challenges, opportunities, and directions,” IEEE Trans. Ind. Inf., vol. 14, no. 11, pp. 4724–4734, Nov. 2018.

- [5] M. Du, K. Wang, Y. Chen, et al., “Big data privacy preserving in multi-access eEdge computing for heterogeneous internet of things,” IEEE Commun. Mag., vol. 56, no. 8, pp. 62–67, Aug. 2018.

- [6] Z. Zhao, R. Zhao, J. Xia, et al., “A novel framework of three-hierarchical offloading optimization for MEC in industrial IoT networks,” IEEE Trans. Ind. Inf., vol. 16, no. 8, pp. 5424–5434, Aug. 2020.

- [7] C. Zhong, M. C. Gursoy, and S. Velipasalar, “Deep reinforcement learning-based edge caching in wireless networks,” IEEE Trans. Cognit. Commun. Networking, vol. 6, no. 1, pp. 48–61, Mar. 2020.

- [8] L. Chen, L. Song, J. Chakareski, and J. Xu, “Collaborative content placement among wireless edge caching stations with time-to-live cache,” IEEE Trans. Multimedia, vol. 22, no. 2, pp. 432–444, Feb. 2020.

- [9] M. I. A. Zahed, I. Ahmad, D. Habibi, and Q V. Phung, “Content caching in industrial IoT: Security and energy considerations,” IEEE Internet Things J., vol. 7, no. 1, pp. 491–504, Jan. 2020.

- [10] S. Gu, Y. Tan, N. Zhang, and Q. Zhang, “Energy-efficient content placement with coded transmission in cache-enabled hierarchical industrial IoT networks,” IEEE Trans. Ind. Inf., vol. 17, no. 8, pp. 5699–5708, Aug. 2021.

- [11] Q. Li, Y. Zhang, Y. Li, and et al., “Capacity-aware edge caching in fog computing networks,” IEEE Trans. Veh. Technol., vol. 69, no. 8, pp. 9244–9248, Aug. 2020.

- [12] R. Zhang, F. R. Yu, J. Liu, and et al., “Deep reinforcement learning (DRL)-based device-to-device (D2D) caching with blockchain and mobile edge computing,” IEEE Trans. Wireless Commun., vol. 19, no. 10, pp. 6469–6485, Oct. 2020.”

- [13] Y. Dai, D. Xu, K. Zhang, and et al., “Deep reinforcement learning and permissioned blockchain for content caching in vehicular edge computing and networks,” IEEE Trans. Veh. Technol., vol. 69, no. 4, pp. 4312–4324, Apr. 2020.

- [14] H. Zhu, Y. Cao, X. Wei, and et al., “Caching transient data for internet of things: A deep reinforcement learning approach,” IEEE Internet Things J., vol. 6, no. 2, pp. 2074–2083, Apr. 2019.

- [15] M. Zeng, T.-H. Lin, M. Chen, et al., “Temporal-spatial mobile application usage understanding and popularity prediction for edge caching,” IEEE Wireless Commun., vol. 25, no. 3, pp. 36–42, Jun. 2018.

- [16] C. Zheng, S. Liu, Y. Huang, and L. Yang, “MEC-enabled wireless VR video service: A learning-based mixed strategy for energy-latency tradeoff,” in Proc. 18th IEEE Wireless Commun. Networking Conf. (WCNC’20), Seoul, South Korea, May 2020, pp. 1–6.

- [17] C. Zheng, S. Liu, Y. Huang, and L. Yang, “Hybrid policy learning for energy-latency tradeoff in MEC-assisted VR video service,” IEEE Trans. Veh. Technol., vol. 70, no. 9, pp. 9006–9021, Sept. 2021.

- [18] Y. Qian, L. Hu, J. Chen, and et al., “Privacy-aware service placement for mobile edge computing via federated learning,” Information Sciences, vo. 505, pp. 562–570, Dec. 2019.

- [19] S. Liu, C. Zheng, Y. Huang, and T. Q. S. Quek, “Distributed reinforcement learning for privacy-preserving dynamic edge caching,” IEEE J. Sel. Areas Commun., vol. 40, no. 3, pp. 749–760, Mar. 2022.

- [20] Y. Jiang, M. Ma, M. Bennis, et al., “User preference learning-based edge caching for fog radio access network,” IEEE Trans. Commun., vol. 67, no. 2, pp. 1268–1283, Feb. 2019.

- [21] J. Xu, M. V. D. Schaar, J. Liu, and H. Li, “Forecasting popularity of videos using social media,” IEEE J. Sel. Top. Signal Process., vol. 9, no. 2, pp. 330–343, Mar. 2015.

- [22] S. He, H. Tian, and X. Lyu, “Edge popularity prediction based on social-driven propagation dynamics,” IEEE Commun. Lett., vol. 21, no. 5, pp. 1027–1030, May 2017.

- [23] T. Trzcinśki and P. Rokita, “Predicting popularity of online videos using support vector regression,” IEEE Trans. Multimedia, vol. 19, no. 11, pp. 2561–2570, Nov. 2017.

- [24] S. Mehrizi, A. Tsakmalis, S. Chatzinotas, and B. Ottersten, “A bayesian poisson-gaussian process model for popularity learning in edge-caching networks,” IEEE Access, vol. 7, pp. 92341–92354, 2019.

- [25] Y. Lu, X. Huang, Y. Dai, and et al., “Federated learning for data privacy preservation in vehicular cyber-physical systems,” IEEE Network, vol. 34, no. 3, pp. 50–56, Jun. 2020.

- [26] V. Sharma, I. You, F. Palmieri, and et al., “Secure and energy-efficient handover in fog networks using blockchain-based DMM,” IEEE Commun. Mag., vol. 56, no. 5, pp. 22–31, May 2018.

- [27] Z. Xiong, Y. Zhang, N. C. Luong, and et al., “The best of both worlds: A general architecture for data management in blockchain-enabled internet-of-things,” IEEE Network, vol. 34, no. 1, pp. 166–173, Feb. 2020.

- [28] G. Liu, C. Wang, X. Ma, and Y. Yang, “Keep your data locally: Federated-learning-based data privacy preservation in edge computing,”, IEEE Network, vol. 35, no. 2, pp. 60–66, Apr. 2021.

- [29] D. C. Nguyen, M. Ding, Q.-V. Pham, and et al., “Federated learning meets blockchain in edge computing: Opportunities and challenges,” IEEE Internet Things J., to be published, doi: 10.1109/JIOT.2021.3072611.

- [30] H. Zhou, and G. Yu. “Research on pedestrian detection technology based on the SVM classifier trained by HOG and LTP features.” Future Gener. Comput. Syst., vol. 125, pp. 604–615, Dec. 2021.

- [31] S. Oh, J. Park, E. Jeong, and et al., “Mix2FLD: Downlink federated learning after uplink federated distillation with two-Way mixup,” IEEE Commun. Lett., vol. 24, no. 10, pp. 2211–2215, Oct. 2020.

- [32] Y. Liu, J. J. Q. Yu, J. Kang, and et al., “Privacy-preserving traffic flow prediction: A federated learning approach,” IEEE Internet Things J., vol. 7, no. 8, pp. 7751–7763, Aug. 2020.

- [33] P. Kairouz, H. B. McMahan, B. Avent, et al., “Advances and open problems in federated learning,” arXiv preprint arXiv:1912.04977, 2019.

- [34] W. Y. B. Lim, N. C. Luong, D. T. H. Lim, and et al., “Federated learning in mobile edge networks: A comprehensive Ssurvey,” IEEE Commun. Surv. Tutorials, vol. 22, no. 3, pp. 2031–2063, Apr. 2020.

- [35] Y. M. Saputra, D. T. Hoang, D. N. Nguyen, and et al., “Distributed deep learning at the edge: A novel proactive and cooperative caching framework for mobile edge networks,” IEEE Wireless Commun. Lett., vol. 8, no. 4, pp. 1220–1223, Aug. 2019.

- [36] Z. Yu, J. Hu, G. Min, and et al., “Federated learning based proactive content caching in edge computing,” in Proc. 61st IEEE Global Commun. Conf. (GLOBECOM’18), Abu Dhabi, UAE, Dec. 2018, pp. 1–6.

- [37] L. Cui, X. Su, Z. Ming, and et al., “CREAT: Blockchain-assisted compression algorithm of federated learning for content caching in edge computing,” IEEE Internet Things J., to be published, doi: 10.1109/JIOT.2020.3014370.

- [38] K. Qi and C. Yang, “Popularity prediction with federated learning for proactive caching at wireless edge,” in Proc. 18th IEEE Wireless Commun. Networking Conf. (WCNC’20), Seoul, South Korea, May 2020, pp. 1–6.

- [39] Z. Yu, J. Hu, G. Min, and et al., “Mobility-aware proactive edge caching for connected vehicles using federated learning,” IEEE Trans. Intell. Transp. Syst., vol. 22, no. 8, pp. 5341–5351, Aug. 2021.

- [40] M. A. Ferrag, A. Derhab, L. Maglaras, and et al.,“Privacy-preserving schemes for fog-based IoT applications: Threat models, solutions, and challenges,” in Proc. 3rd IEEE Int. Conf. Smart Commun. Netw. Technol. (SaCoNeT’18), El Oued, Algeria, Oct. 2018, pp. 37–42,

- [41] Y. Liu, Z. Ma, X. Liu, and et al., “Revocable federated learning: A benchmark of federated forest,” arXiv:1911.03242, 2019.

- [42] Z. Ma, J. Ma, Y. Miao, and et al., “Pocket diagnosis: Secure federated learning against poisoning attack in the cloud,” arXiv preprint arXiv:2009.10918, 2020.

- [43] Y. Liu, Z. Ma, X. Liu, and J. Ma, “Learn to forget: User-level memorization elimination in federated learning,” arXiv preprint arXiv:2003.10933, 2021.

- [44] A. Sadeghi, F. Sheikholeslami, and G. B. Giannakis, “Optimal and scalable caching for 5G using reinforcement learning of space-time popularities,” IEEE J. Sel. Top. Signal Process., vol. 12, no. 1, pp. 180–190, Feb. 2018.

- [45] Y. Cui, D. Jiang, and Y. Wu, “Analysis and optimization of caching and multicasting in large-scale cache-enabled wireless networks,” IEEE Trans. Wireless Commun., vol. 15, no. 7, pp. 5101–5112, Jul. 2016.

- [46] L. Yang, F.-C. Zheng, W. Wen, and S. Jin, “Analysis and optimization of random caching in mmWave heterogeneous networks,” IEEE Trans. Veh. Technol., vol. 69, no. 9, pp. 10140–10154, Sept. 2020.

- [47] “General Data Protection Regulation (2016),” The European Parliament and of The Council, 2016, https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=uriserv:OJ.L_.2016.119.01.0001.01.ENG&toc=OJ:L:2016:119:TOC

- [48] L. Ren, J. Dong, X. Wang, et al., “A data-driven auto-CNN-LSTM prediction model for lithium-ion battery remaining useful life,” IEEE Trans. Ind. Inf., vol. 17, no. 5, pp. 3478–3487, May 2021.

- [49] T. Hussain, K. Muhammad, A. Ullah, et al., “Cloud-assisted multiview video summarization using CNN and bidirectional LSTM,” IEEE Trans. Ind. Inf., vol. 16, no. 1, pp. 77–86, Jan. 2020.

- [50] Y. Zuo, Y. Wu, G. Min, et al., “An intelligent anomaly detection scheme for micro-services architectures with temporal and spatial data analysis,” IEEE Trans. Cognit. Commun. Networking, vol. 6, no. 2, pp. 548–561, Jun. 2020.

- [51] F. Karim, S. Majumdar, H. Darabi, and S. Harford, “Multivariate LSTM-FCNs for time series classification,” Neural Networks, vol. 116, pp. 237–245, Aug. 2019.

- [52] H. Jahangir, H. Tayarani, S. Baghali, et al., “A novel electricity price forecasting approach based on dimension reduction strategy and rough artificial neural networks,” IEEE Trans. Ind. Inf., vol. 16, no. 4, pp. 2369–2381, Apr. 2020.

- [53] J. Wang, Q. Liu, H. Liang, and et al., “Tackling the objective inconsistency problem in heterogeneous federated optimization,” Proc. 34th Conf. Inf. Process. Syst. (NeurIPS’20), virtual, Dec. 2020.

- [54] Z. Zhang, Y. Yang, Z. Yao, and et al., “Improving semi-supervised federated learning by reducing the gradient diversity of models,” Proc. 2021 IEEE Int. Conf. on Big Data (Big Data’21), Orlando, FL, USA, Dec. 2021.

- [55] D. Kingma and J. Ba, “Adam: A method for stochastic optimization,” in Proc. 3rd Int. Conf. Learn. Represent. (ICLR’15), San Diego, CA, USA, May 2015.

- [56] E. Zeydan, E. Bastug, M. Bennis, et al., “Big data caching for networking: Moving from cloud to edge,” IEEE Commun. Mag., vol. 54, no. 9, pp. 36–42, Sep. 2016.

- [57] G. E. Hinton and R. R. Salakhutdinov, “Reducing the dimensionality of data with neural networks,” Science, vol. 313, no. 5786, pp. 504–507, Jul. 2006.