Unsupervised Image Restoration Using Partially Linear Denoisers

Abstract

Deep neural network based methods are the state of the art in various image restoration problems. Standard supervised learning frameworks require a set of pairs of noisy measurements and clean images, and minimize a distance between the output of the restoration model and the ground truth clean images. The ground truth images, however, are often unavailable or very expensive to acquire in real-world applications. We circumvent this problem by proposing a class of structured denoisers that can be decomposed as the sum of a nonlinear image-dependent mapping, a linear noise-dependent term and a small residual term. We show that these denoisers can be trained with only noisy images under the condition that the noise has zero mean and known variance. The exact distribution of the noise, however, is not assumed to be known. We show the superiority of our approach for image denoising, and demonstrate its extension to solving other restoration problems such as image deblurring where the ground truth is not available. Our method outperforms some recent unsupervised and self-supervised deep denoising models that do not require clean images for their training. For deblurring problems, the method, using only one noisy and blurry observation per image, reaches a quality close to that of its fully supervised counterparts on a benchmark dataset.

Index Terms:

Image denoising, deep learning, convolutional neural networks, unsupervised learning, partially linear denoiser

I Introduction

The acquisition of real-life images usually suffers from noise corruption due to factors such as sensor sensitivity, fluctuations in photon counts and air turbulence. Image denoising [30, 25, 7, 38] aims to remove noise from corrupted images. It is one of the most fundamental and central problems tackled by the image processing community. A variety of image denoising approaches have been developed in the past decades, which can be broadly classified into model-based denoisers (see e.g., [30, 4, 6, 3]) and data-driven denoisers [29, 5, 41].

Recent data-driven techniques outperform conventional model-based methods and achieve state-of-the-art denoising quality [22, 41, 42, 19]. These methods take advantage of large image datasets and use models, particularly deep convolutional neural networks (CNNs), to learn the image prior from data rather than relying on predefined image features. Compared to many model-based methods, which need to solve a difficult optimization problem for each test image, CNN-based denoisers are computationally efficient once the CNN is trained. Nevertheless, CNN-based denoising approaches usually require a massive amount of ground truth images in the training phase. Specifically, conventional training pipelines consist of a loss function or metric, which defines the distance between a clean ground truth image and its reconstructed version, and an optimization step in which the model parameters are adjusted to minimize the loss function. One of the most commonly used metrics is the mean squared error (MSE), whose calculation depends explicitly on the ground truth image. While these learning processes can lead to high-quality denoisers, they are problematic for application domains where ground truth images are not accessible.

In recent years, several unsupervised deep learning techniques have been developed to overcome this difficulty. It has been shown that a deep neural network denoiser can be trained using only noisy data if multiple noisy versions are available for each unknown clean image [20], or if the noise is independent across different regions of the image [2, 15, 16]. Under the assumption of i.i.d. Gaussian noise, loss functions can also be constructed from Stein's unbiased risk estimate of the MSE [32], so that they are defined only on the noisy images while providing good approximations of the MSE.

In this work, we address the problem of learning efficient denoisers from a set of noisy images without the need for precise modeling of the noise, and without multiple noisy observations per image. We investigate a class of structured nonlinear denoisers and their applications to inverse imaging problems including denoising and deblurring. Specifically, we formulate a given denoiser as the sum of three terms (cf. Fig. 1): a (nonlinear) function $g(\mathbf{x})$ of the ground truth signal $\mathbf{x}$, a linear mapping $W\mathbf{n}$ of the noise $\mathbf{n}$, and a residual term $r$. If the linear factor $W$ is relatively small and the residual term $r$, as a random variable, has small variance, then the denoised image mainly depends on the ground truth image and is insensitive to changes of the noise. In this paper, we study denoisers with the property that $r$ has small variance, and we call denoisers of this type partially linear denoisers (cf. Fig. 1). Such denoisers preserve nonlinear image structure (similar to many classic image denoisers), and additionally allow the nonlinearity to be learned from noisy data only.

We observe that some natural denoisers, including deep neural networks, exhibit a certain degree of partial linearity. By exploiting this property, we show that a partially linear denoiser can be trained on noisy images, assuming only that the noise has zero mean conditioned on the images and known variance. Specifically, we introduce an auxiliary random vector together with a partial linearity constraint, and we show that this establishes a correspondence between the MSE and a loss function defined without clean images. In this way, one can approximate the best partially linear denoisers with a theoretically guaranteed approximation error bound. Moreover, we propose an image deblurring approach based on partially linear denoisers that learns from a single noisy observation of each image.

Unlike other existing unsupervised denoising methods, our approach requires neither a group (or a pair) of noisy observations for each image nor an estimate of the underlying noise distribution. Yet it achieves performance close to its fully supervised counterparts on some denoising benchmark datasets. The proposed partially linear denoisers are learned in an end-to-end manner and can be built on top of any deep neural network architecture for denoising. Once trained, the denoiser produces its results without the auxiliary vectors or any post-processing. Furthermore, we demonstrate our denoiser's capacity to handle other image restoration tasks in the absence of ground truth or multiple noisy observations per image, by exploiting the direct approximation of the MSE and the end-to-end nature of the learning.

II Related work

In the past decades, a wide variety of image denoising algorithms have been developed, ranging from analytic approaches such as filtering, variational methods, wavelet transforms and Bayesian estimation to data-driven approaches such as deep learning. In this section we review some major nonlinear denoisers as well as recent deep learning approaches that do not depend on ground truth images for learning.

II-A Nonlinear denoisers

Natural images are non-Gaussian signals with fine structures such as sharp edges and textures. One of the main challenges in the image denoising task is to preserve these structures while removing the noise. Traditional linear denoisers such as Gaussian filtering and Tikhonov regularization usually do not achieve satisfactory denoising quality, as they tend to smooth the edges. Most of the existing image denoisers in the literature are therefore nonlinear.

Total variation (TV) denoising [30] is one of the most fundamental image denoising algorithms. The TV denoising solution is a minimizer of the optimization problem

$$\min_u \ \frac{1}{2}\|u - y\|_2^2 + \lambda\,\mathrm{TV}(u),$$

in which $y$ is the noisy image, $\lambda > 0$ is a regularization parameter and $\mathrm{TV}(u)$ is the total variation of $u$. TV denoising is known to preserve sharp edges thanks to the nonlinearity introduced by the TV term $\mathrm{TV}(u)$.
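For illustration only, the following pure-Python sketch solves a 1D version of this problem. It assumes a smoothed total variation $\sum_i \sqrt{(u_{i+1} - u_i)^2 + \varepsilon^2}$ so that plain gradient descent applies. It is not the algorithm of [30], and the function name `tv_denoise_1d` and all parameter values are hypothetical.

```python
import math
import random


def tv_denoise_1d(y, lam, eps=1e-2, iters=3000):
    """Approximately solve min_u 0.5*||u - y||^2 + lam * sum_i sqrt((u[i+1] - u[i])^2 + eps^2).

    The sqrt(. + eps^2) smoothing of |u[i+1] - u[i]| makes the objective differentiable,
    so gradient descent with a fixed step can be used.
    """
    n = len(y)
    u = list(y)
    # The gradient is Lipschitz with constant <= 1 + 4 * lam / eps (||D||^2 <= 4 for 1D differences).
    step = 1.0 / (1.0 + 4.0 * lam / eps)
    for _ in range(iters):
        grad = [u[i] - y[i] for i in range(n)]  # gradient of the data-fidelity term
        for i in range(n - 1):
            d = u[i + 1] - u[i]
            w = lam * d / math.sqrt(d * d + eps * eps)  # derivative of the smoothed TV term
            grad[i] -= w
            grad[i + 1] += w
        u = [u[i] - step * grad[i] for i in range(n)]
    return u


def mse(a, b):
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


rng = random.Random(0)
clean = [0.0] * 20 + [1.0] * 20                   # piecewise-constant 1D "image" with one edge
noisy = [c + rng.gauss(0.0, 0.2) for c in clean]
denoised = tv_denoise_1d(noisy, lam=0.3)
print(mse(noisy, clean), mse(denoised, clean))
```

The smoothing parameter trades exactness for convenience. The admissible step $1/(1 + 4\lambda/\varepsilon)$ shrinks as $\varepsilon \to 0$, which is one reason nonsmooth solvers (e.g., primal-dual methods) are often used for exact TV.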

Patch-based methods have gained popularity for image denoising because of their capacity to capture the self-similarity of images. The non-local means (NL-means) algorithm proposed by Buades et al. [3] is among the most successful methods in this category. The NL-means algorithm removes noise by calculating a weighted average of the pixel intensities, with weights defined by a patch similarity measure that emphasizes the connection among pixels in similar patches. Unlike local mean filtering, it is a nonlinear denoiser, since the weights are image-dependent. Another nonlinear patch-based denoising method, the Block-matching and 3D filtering (BM3D) algorithm [6], divides image patches into groups based on a similarity criterion and then performs collaborative filtering on each group to clean the patches. While patch-based denoisers achieve promising denoising performance, they are often time-consuming due to the high computational cost of calculating the weights or matching similar patches.

In recent years, denoisers based on convolutional neural networks (CNNs) have become the state of the art for image denoising [41, 42], thanks to the rapid development of deep learning techniques. In particular, CNNs are known to be efficient in modeling image priors [35], which are crucial for the quality of the denoiser. A typical CNN denoiser can be formulated as a composition of mappings called layers, and a basic type of layer has the form

$$h \mapsto \sigma(w * h + b),$$

where $*$ denotes the convolution operation, $w$ and $b$ are parameters of the network that can be learned from the data, and $\sigma$ is an activation function, which is often nonlinear. As such, CNN denoisers are in general nonlinear. There has been growing interest in developing CNN denoisers with new network architectures and building blocks, such as batch normalization [41], residual connections [10] and residual dense blocks [42].
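A toy 1D layer makes the nonlinearity concrete. The sketch below assumes ReLU as the activation $\sigma$ and uses the hypothetical helper names `conv1d_same` and `layer`. It shows that a single layer already violates additivity, i.e., $\sigma(w * (a + c) + b) \neq \sigma(w * a + b) + \sigma(w * c + b)$ in general.

```python
def conv1d_same(h, w):
    """Zero-padded, same-size 1D cross-correlation with an odd-length kernel w.

    Deep learning libraries usually implement 'convolution' this way; it differs from
    true convolution only by a flip of the (learned) kernel.
    """
    k = len(w) // 2
    n = len(h)
    out = []
    for i in range(n):
        s = 0.0
        for j, wj in enumerate(w):
            idx = i + j - k
            if 0 <= idx < n:
                s += wj * h[idx]
        out.append(s)
    return out


def layer(h, w, b):
    """One layer sigma(w * h + b) with sigma = ReLU (assumed activation)."""
    return [max(0.0, v + b) for v in conv1d_same(h, w)]


w, b = [1.0, -2.0, 1.0], 0.0
a = [0.0, 1.0, 0.0, 0.0]
c = [0.0, 0.0, 1.0, 0.0]
sum_of_outputs = [p + q for p, q in zip(layer(a, w, b), layer(c, w, b))]
output_of_sum = layer([p + q for p, q in zip(a, c)], w, b)
print(sum_of_outputs)  # [1.0, 1.0, 1.0, 1.0]
print(output_of_sum)   # [1.0, 0.0, 0.0, 1.0]
```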

II-B Unsupervised deep learning for denoising

While deep CNN-based models have great advantages in image denoising, the most standard learning techniques are limited by the availability of sufficiently many noise-free images. Recently, CNN-based learning algorithms for image denoising that do not require clean images as training data have been developed. Soltanayev et al. [32] propose to replace the MSE loss with a loss function based on Stein's unbiased risk estimator (SURE), which is computed without knowing the ground truth images. In the setting of i.i.d. Gaussian noise, SURE provides an unbiased estimate of the MSE, and hence the minimization problems with respect to these two losses are equivalent. Consequently, CNN denoisers can be trained in an unsupervised manner, based on only one noisy realization of each training image. Nevertheless, SURE may not coincide with the MSE in the case of non-Gaussian noise, e.g., shot noise. The SURE training scheme has been extended to the setting of multiple noise realizations per image as well as imperfect ground truths [43].

The Noise2Noise approach developed by Lehtinen et al. [20] offers a different ground-truth-free learning strategy for denoising, based on the assumption that at least two different noisy observations are available for each image. It is found that replacing the clean targets by their noisy observations in the MSE loss function leads to the same minimizers as the original supervised loss if the noise has zero mean and an infinite amount of training data is provided. Specifically, the Noise2Noise loss function is

$$\min_\theta \ \mathbb{E}\,\|f_\theta(\mathbf{y}') - \mathbf{y}\|^2, \tag{1}$$

where $\mathbf{y}$ and $\mathbf{y}'$ represent two independent noisy observations of the same image sample, and $f_\theta$ is the denoiser parameterized by $\theta$. In contrast to the MSE loss $\mathbb{E}\|f_\theta(\mathbf{y}') - \mathbf{x}\|^2$, where $\mathbf{x}$ denotes the ground truth image, the loss function (1) is defined on a noisy pair only. If the noise in $\mathbf{y}$ and the noise in $\mathbf{y}'$ are independent and have zero means, minimizing the loss function (1) with respect to $\theta$ is shown to be equivalent to minimizing the MSE [20]. This implies that the parameter $\theta$ can be computed from a training set of noisy pairs. Besides, the Noise2Noise approach relies neither on an explicit image prior nor on structural knowledge about the noise model.
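The equivalence can be checked numerically in the simplest possible setting. The sketch below is a hypothetical scalar example. It assumes clean pixels drawn uniformly from $[0, 1]$, independent Gaussian noise, and the one-parameter linear denoiser $f_\theta(v) = \theta v$. Under these assumptions, the least-squares minimizer of (1) should agree with the supervised one up to Monte Carlo error.

```python
import random


def fit_scalar(inputs, targets):
    """Closed-form least-squares fit of f_theta(v) = theta * v."""
    return sum(u * t for u, t in zip(inputs, targets)) / sum(u * u for u in inputs)


rng = random.Random(0)
sigma = 0.3
xs = [rng.random() for _ in range(100_000)]          # clean pixels (unavailable in practice)
y = [x + rng.gauss(0.0, sigma) for x in xs]          # noisy observation used as the target
y_prime = [x + rng.gauss(0.0, sigma) for x in xs]    # independent noisy observation used as input

theta_sup = fit_scalar(y_prime, xs)   # minimizer of the supervised MSE E|f(y') - x|^2
theta_n2n = fit_scalar(y_prime, y)    # minimizer of the Noise2Noise loss (1), no clean data used
print(round(theta_sup, 3), round(theta_n2n, 3))
```

Both estimates approach $\mathbb{E}[x^2] / (\mathbb{E}[x^2] + \sigma^2) \approx 0.79$ in this toy setting. The Noise2Noise estimate is noisier for a finite sample, because its target carries the extra noise of $\mathbf{y}$.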

In certain denoising tasks, however, acquiring two or more noisy copies per image can be very expensive or impractical. Examples include medical imaging, where patients move during the acquisition, and videos with moving cars. Several authors have investigated learning techniques that overcome this restriction [8, 36, 2, 15, 16]. Frame2Frame [8], developed by Ehret et al., fine-tunes a denoiser for blind video denoising. The idea is to use optical flow to warp one video frame to match its neighboring frame, and then minimize the Noise2Noise loss (1) with $(\mathbf{y}, \mathbf{y}')$ being the pair of matched frames. The work [27] generalizes Noise2Noise to the setting of a single noisy realization per image. A synthetic noise drawn from the same distribution as the underlying noise is added to the noisy image $\mathbf{y}$, and the new, noisier image then replaces the second observation $\mathbf{y}'$ in the training loss (1). At test time, the raw output of the network is post-processed by computing a linear combination of the output and the input to obtain the denoising result. In this approach, the synthetic noise has to be of the same type as the underlying noise, and the post-processing can magnify the errors in the network output. Batson et al. [2] show that a denoiser $f$ satisfying a so-called $\mathcal{J}$-invariance property for a partition $\mathcal{J}$ of the image pixels can be trained without access to a second observation of the image, provided the noise is independent across the different regions in $\mathcal{J}$. A function $f$ is said to be $\mathcal{J}$-invariant if, for each subset of pixels $J \in \mathcal{J}$, the pixel values of $f(y)$ at $J$ can be calculated without knowing the values of $y$ at $J$. By additionally assuming that the noise of $\mathbf{y}$ at different elements of $\mathcal{J}$ is independent and has zero mean, one minimizes the loss

$$\sum_{J \in \mathcal{J}} \mathbb{E}\,\|f(\mathbf{y})_J - \mathbf{y}_J\|^2, \tag{2}$$

where the subscript $J$ in $f(\mathbf{y})_J$ denotes the restriction of the image to the pixel collection $J$. It should be noted that, for a $\mathcal{J}$-invariant function $f$ and any $J \in \mathcal{J}$, the loss $\mathbb{E}\|f(\mathbf{y})_J - \mathbf{y}_J\|^2$ can be interpreted as a variant of (1), given that the noise contained in $\mathbf{y}_J$ is independent of $f(\mathbf{y})_J$ and has zero mean. This enables the training of a model using a set of noisy images only. However, since the denoiser is $\mathcal{J}$-invariant, the images cannot be perfectly reconstructed as the noise level decreases to zero. In other words, it cannot learn the identity mapping, which is clearly not $\mathcal{J}$-invariant. The unused information from $\mathbf{y}_J$ can be further leveraged if the noise model is known or can be estimated. Krull et al. [16] propose to combine $\mathbf{y}_J$ with the network predictions, using a probabilistic model for each pixel and a Bayesian formulation, to obtain the minimum MSE estimate. The integration of statistical inference effectively removes the noise remaining in the predictions. A downside of this method is that it requires an explicit expression of the posterior distribution of the noisy images beforehand. It is also not end-to-end, because the network outputs intermediate results that are improved in a post-processing step.
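The identity behind (2) can be illustrated on a toy problem. The sketch assumes a 1D signal, pixelwise independent Gaussian noise, and the partition of the pixels into singletons. The hypothetical denoiser `neighbor_mean` predicts each pixel from its two neighbors only, so it is $\mathcal{J}$-invariant for this partition. The self-supervised loss should then exceed the MSE by roughly the noise variance $\sigma^2$ per pixel.

```python
import math
import random


def neighbor_mean(y):
    """J-invariant denoiser for singleton J = {i}: the output at pixel i never reads y[i]."""
    n = len(y)
    out = []
    for i in range(n):
        nb = [y[j] for j in (i - 1, i + 1) if 0 <= j < n]
        out.append(sum(nb) / len(nb))
    return out


rng = random.Random(0)
sigma, length, trials = 0.3, 200, 500
clean = [0.5 + 0.4 * math.sin(0.1 * i) for i in range(length)]
self_loss = mse = 0.0
for _ in range(trials):
    noisy = [c + rng.gauss(0.0, sigma) for c in clean]            # pixelwise independent noise
    pred = neighbor_mean(noisy)
    self_loss += sum((p - v) ** 2 for p, v in zip(pred, noisy))   # loss (2): no clean image needed
    mse += sum((p - c) ** 2 for p, c in zip(pred, clean))          # supervised MSE, for comparison
self_loss /= trials * length
mse /= trials * length
print(round(self_loss, 4), round(mse, 4), round(self_loss - mse, 4))
```

Because `neighbor_mean` never reads the pixel it predicts, it can never return `noisy` unchanged. This mirrors the limitation noted above that a $\mathcal{J}$-invariant function cannot represent the identity.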

III The proposed method

We consider image restoration problems of the form

$$\mathbf{y} = A\mathbf{x} + \mathbf{n}, \tag{3}$$

in which $\mathbf{x}$ and $\mathbf{n}$ are random vectors of unknown distributions representing the ground truth images and the noise, respectively, $\mathbf{y}$ is the noisy image from which we want to restore $\mathbf{x}$, and $A$ is a linear forward operator determined by the data acquisition process. In this paper, random vectors are always denoted by boldface lower-case letters like $\mathbf{x}$, $\mathbf{y}$, $\mathbf{n}$, etc. For ease of presentation, in this section we first focus on denoising problems, i.e., $A$ is the identity map. The more general cases where $A$ is not the identity will be discussed in Subsection III-E.

For Problem (3), the only assumption we make on the noise distribution is that it has zero mean conditioned on the image, i.e.,

- (A1) $\mathbb{E}[\mathbf{n} \mid \mathbf{x}] = 0$.

This assumption holds for various common types of noise, including Gaussian white noise, Poisson noise and some mixed noises. We underline, however, that the noise $\mathbf{n}$ is not assumed to be independent of the image or pixel-wise independent.

The central issue in image denoising is to find an operator, denoted by $f$, that takes the noisy image $\mathbf{y}$ to the clean image $\mathbf{x}$ or an approximation of it. In this work we are interested in a class of denoisers that can be decomposed as

$$f(\mathbf{y}) = g_f(\mathbf{x}) + W_f\mathbf{n} + r_f(\mathbf{x}, \mathbf{n}), \tag{4}$$

where $g_f$ is a (possibly nonlinear) function of the clean image $\mathbf{x}$, $W_f$ is a linear operator and $r_f$ is a residual term with small variance. In the rest of the paper, we omit the subscripts of $g_f$ and $W_f$ if there is no ambiguity.

Remark 1.

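For a given denoiser, the decomposition (4) always exists, but in this work we only consider the setting of $r_f$ having small variance. Furthermore, the decomposition (4) is not unique, since $W_f$ can be an arbitrary linear operator. However, in order to characterize the structure of the denoiser, we assume $\mathbb{E}[r_f(\mathbf{x}, \mathbf{n}) \mid \mathbf{x}] = 0$ and choose $W_f$ such that $\mathbb{E}\|r_f(\mathbf{x}, \mathbf{n})\|^2$ is minimized. As $\mathbf{n}$ has zero mean conditioned on $\mathbf{x}$, the nonlinear term is then determined by

$$g_f(\mathbf{x}) = \mathbb{E}[f(\mathbf{y}) \mid \mathbf{x}].$$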

If $f$ satisfies (4) with $r_f$ of small variance, then we call $f$ a partially linear denoiser. The first term $g_f(\mathbf{x})$, which can be nonlinear, does not depend on realizations of the noise $\mathbf{n}$. This formulation implies two things. First, the nonlinearity of the denoisers in this class is mainly due to intrinsic image structures. Second, the denoisers respond to the noise either linearly (encoded in $W_f\mathbf{n}$) or only weakly (encoded in the fact that $r_f$ has small variance). In fact, the output of any denoiser that effectively removes noise from images has to depend only weakly on changes of the noise. Therefore, when such a denoiser is written in the form (4), the noise-dependent components on the right-hand side should have small variance compared to the noise, which implies that both $W_f\mathbf{n}$ and $r_f$ are small.
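For a scalar denoiser and a single fixed clean value $x$, the choices in Remark 1 reduce to a linear regression of $f(x + n)$ on $n$. This is the setting of the delta distribution considered later. The intercept estimates $g_f(x) = \mathbb{E}[f(\mathbf{y}) \mid \mathbf{x} = x]$, the slope is the variance-minimizing $W_f$, and what remains is $r_f$. The sketch below assumes Gaussian noise and uses soft thresholding as a stand-in nonlinear denoiser. The names `decompose` and `soft_threshold` are hypothetical and not part of the method.

```python
import random


def soft_threshold(v, t):
    """A simple nonlinear scalar denoiser (soft thresholding with threshold t)."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def decompose(f, x, sigma, n_samples=100_000, seed=0):
    """Estimate g(x), W and E[r^2] in f(x + n) = g(x) + W n + r for one fixed clean value x.

    W is the least-squares slope of f(x + n) on n (minimizing E[r^2] as in Remark 1); the
    intercept estimates g(x) = E[f(y) | x] because E[n | x] = 0.
    """
    rng = random.Random(seed)
    ns = [rng.gauss(0.0, sigma) for _ in range(n_samples)]
    fs = [f(x + n) for n in ns]
    mn, mf = sum(ns) / n_samples, sum(fs) / n_samples
    w = sum((n - mn) * (v - mf) for n, v in zip(ns, fs)) / sum((n - mn) ** 2 for n in ns)
    g = mf - w * mn
    r = [v - g - w * n for n, v in zip(ns, fs)]
    var_r = sum(ri * ri for ri in r) / n_samples
    return g, w, var_r


den = lambda v: soft_threshold(v, 0.1)
g_far, w_far, var_far = decompose(den, 1.0, 0.2)     # far from the threshold: almost exactly linear
g_near, w_near, var_near = decompose(den, 0.1, 0.2)  # near the threshold: larger residual
print(g_far, w_far, var_far, var_near)
```

The residual variance depends on the clean value. For $x = 1$ the soft threshold acts as a shift on almost every noise realization, so $r_f \approx 0$. Near the threshold, the nonlinearity is visible in $r_f$.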

The selection of a good denoiser requires certain prior information about the target $\mathbf{x}$, especially when fine details like edges need to be recovered from the corrupted image. Many conventional analytical approaches aim to find the reconstruction in a lower-dimensional space in which the images lie. This can be done, for example, by assuming sparsity of the gradient field or of the wavelet coefficients.

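In a data-driven setting, given the clean image $\mathbf{x}$, one could instead minimize the mean squared error (MSE) $\mathcal{L}(f) = \mathbb{E}\|f(\mathbf{y}) - \mathbf{x}\|^2$, where the expectation is taken over $\mathbf{x}$ and $\mathbf{n}$. The minimization of $\mathcal{L}$ leads to the conditional mean $f^*(y) = \mathbb{E}[\mathbf{x} \mid \mathbf{y} = y]$. Next, we will discuss how to approximate $f^*$ in the absence of the clean image $\mathbf{x}$.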

III-A Auxiliary random vectors

This paper is motivated by the fact that, in practice, the distribution of $\mathbf{x}$ is often unknown, and samples of $\mathbf{x}$ (i.e., the ground truth images) or of the noise $\mathbf{n}$ are not readily available. What can be easily accessed are the noisy observations $\mathbf{y}$. With these alone, however, a direct evaluation of the MSE $\mathcal{L}$ is not possible. In this work, we circumvent the need for $\mathbf{x}$ by introducing an auxiliary random vector $\mathbf{z}$ and replacing the MSE by an approximation. First, let $\mathbf{z}$ be a random vector satisfying the assumptions

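- (A2) the conditional mean $\mathbb{E}[\mathbf{z} \mid \mathbf{x}] = 0$,
- (A3) $\mathbf{z}$ is independent of $\mathbf{n}$ conditioned on $\mathbf{x}$,
- (A4) the conditional covariance $\mathrm{Cov}(\mathbf{z} \mid \mathbf{x}) = \mathrm{Cov}(\mathbf{n} \mid \mathbf{x})$.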

The auxiliary vector $\mathbf{z}$ does not need to follow the same distribution as $\mathbf{n}$. Samples of $\mathbf{z}$ can therefore be generated easily, e.g., from Gaussian distributions, once the variances of $\mathbf{n}$ are known. Then, associated with $\mathbf{z}$, we define

$$\tilde{\mathbf{y}} = \mathbf{y} + \alpha\mathbf{z}, \tag{5}$$

where $\alpha$ is a real constant. The new random vector $\tilde{\mathbf{y}}$ can be regarded as a noisy version of the image $\mathbf{x}$ with noise vector $\mathbf{n} + \alpha\mathbf{z}$. In the following, the discussion will focus on denoising $\tilde{\mathbf{y}}$ rather than $\mathbf{y}$, but the objective remains unchanged, i.e., recovering the same clean image $\mathbf{x}$. Specifically, if one can obtain a high-quality solution from $\tilde{\mathbf{y}}$, then there exists an algorithm taking $\mathbf{y}$ to the clean image, because $\tilde{\mathbf{y}}$ can be computed from $\mathbf{y}$. Such an algorithm can be obtained, for instance, as $y \mapsto f(y + \alpha z)$, where $f$ is a denoiser for the problem $\tilde{\mathbf{y}} = \mathbf{x} + (\mathbf{n} + \alpha\mathbf{z})$ and $z$ is a sample of $\mathbf{z}$. It should be noted that the denoising problem becomes harder because of the additional uncertainty from the auxiliary random vector $\mathbf{z}$ in the observed data $\tilde{\mathbf{y}}$. However, one benefit of considering $\tilde{\mathbf{y}}$ is that the quantity $\alpha\mathbf{z}$ is known. As we will show in the following, it can be leveraged to construct approximations of the MSE without knowing $\mathbf{x}$ or $\mathbf{n}$.
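In practice, building $\tilde{\mathbf{y}}$ only requires drawing one auxiliary sample per noisy image. The sketch below is a hypothetical helper, `make_training_pair`. It assumes that the per-pixel noise variances are known and that the noise is uncorrelated across pixels, so a diagonal Gaussian $\mathbf{z}$ drawn independently of everything else satisfies (A2)-(A4). It also returns $\mathbf{y} - \alpha^{-1}\mathbf{z}$, the quantity that serves as the target in the objective introduced next.

```python
import random


def make_training_pair(y, noise_var, alpha, rng):
    """Hypothetical helper: build (y_tilde, target, z) from one noisy image y (a flat list).

    Assumes known per-pixel variances Var(n_i | x) = noise_var[i] and uncorrelated noise,
    so a diagonal Gaussian z matches the first two moments required by (A2) and (A4).
    """
    z = [rng.gauss(0.0, v ** 0.5) for v in noise_var]
    y_tilde = [yi + alpha * zi for yi, zi in zip(y, z)]   # noisier input, eq. (5)
    target = [yi - zi / alpha for yi, zi in zip(y, z)]    # y - z / alpha
    return y_tilde, target, z


rng = random.Random(1)
alpha = 0.5
y = [0.2, 0.5, 0.9]
y_tilde, target, z = make_training_pair(y, [0.01] * 3, alpha, rng)
# y is recovered exactly from the pair: y_tilde + alpha^2 * target = (1 + alpha^2) * y.
recovered = [(a + alpha ** 2 * t) / (1 + alpha ** 2) for a, t in zip(y_tilde, target)]
print(recovered)
```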

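For the problem of denoising $\tilde{\mathbf{y}}$, the MSE associated with the denoiser $f$ is defined as

$$\tilde{\mathcal{L}}_\alpha(f) = \mathbb{E}\,\|f(\tilde{\mathbf{y}}) - \mathbf{x}\|^2, \tag{6}$$

where the expectation is taken over the random variables $\mathbf{x}$, $\mathbf{n}$ and $\mathbf{z}$. This is connected to $\mathcal{L}$ through the randomized denoiser $\mathbf{y} \mapsto f(\mathbf{y} + \alpha\mathbf{z})$, whose MSE is $\tilde{\mathcal{L}}_\alpha(f)$. Since $\tilde{\mathbf{y}}$ contains the additional noise $\alpha\mathbf{z}$, it can be shown that $\min_f \tilde{\mathcal{L}}_\alpha(f) \ge \min_f \mathcal{L}(f)$. Nevertheless, if $\alpha$ is close to zero, the noise distributions of $\mathbf{y}$ and $\tilde{\mathbf{y}}$ are close. The minimum of $\mathcal{L}$ can then be approximated by the minimum of $\tilde{\mathcal{L}}_\alpha$, which promises similar denoising quality.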

Now, using the auxiliary vector , we define the following objective function for our proposed partially linear denoiser

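$$\mathcal{L}_\alpha(f) = \mathbb{E}\,\|f(\mathbf{y} + \alpha\mathbf{z}) - (\mathbf{y} - \alpha^{-1}\mathbf{z})\|^2 \tag{7}$$

for $\alpha > 0$. Indeed, as we will see in Subsections III-B and III-C, under the partially linear denoising model, $\mathcal{L}_\alpha$ provides a good estimate of $\tilde{\mathcal{L}}_\alpha$ up to an additive constant. More precisely, following the definition (4), we consider a set of denoisers for $\tilde{\mathbf{y}}$ that have the form

$$f(\tilde{\mathbf{y}}) = g_f(\mathbf{x}) + W_f\tilde{\mathbf{n}} + r_f(\mathbf{x}, \tilde{\mathbf{n}}), \qquad \tilde{\mathbf{n}} = \mathbf{n} + \alpha\mathbf{z}, \tag{8}$$

where $\tilde{\mathbf{y}}$ is defined in (5), $g_f$ and $W_f$ depend on $f$ and $\alpha$, and the residual $r_f$ has small variance.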

In the rest of this section, we show that for $\alpha > 0$ and a partially linear denoiser $f$, the term $\mathcal{L}_\alpha(f)$ in (7) approximates the MSE $\tilde{\mathcal{L}}_\alpha(f)$ up to an additive constant. This implies that an optimal denoiser of this class can be computed even if neither the distribution of the ground truth images nor the distribution of $\mathbf{n}$ is known. We then discuss unsupervised learning methods based on the proposed objective for image restoration tasks like denoising and deblurring.

III-B Linear minimum mean square error estimator

To start with, we focus on the simplest case, in which the denoiser $f$ is linear. Linear denoisers form a subset of the partially linear denoisers (8), with $g_f = f$, $W_f = f$ and $r_f = 0$. The best estimator in this setting is the linear minimum mean square error (LMMSE) estimator, i.e., the minimizer of $\tilde{\mathcal{L}}_\alpha$ in (6) over all linear denoisers $f$. The following proposition establishes the equivalence between the MSE loss $\tilde{\mathcal{L}}_\alpha$ and the objective $\mathcal{L}_\alpha$ in (7) over linear denoisers.

Proposition 1.

If $\mathbf{n}$ and $\mathbf{z}$ satisfy Assumptions (A1)-(A4) and $f$ is linear, then there is some constant $C$ not depending on $f$ such that

$$\mathcal{L}_\alpha(f) = \tilde{\mathcal{L}}_\alpha(f) + C. \tag{9}$$

Proof.

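If $f$ is linear, then from the definitions of $\tilde{\mathbf{y}}$ and $\mathcal{L}_\alpha$,

$$f(\tilde{\mathbf{y}}) - \mathbf{x} = (f(\mathbf{x}) - \mathbf{x}) + f(\mathbf{n}) + \alpha f(\mathbf{z})$$

and $f(\tilde{\mathbf{y}}) - (\mathbf{y} - \alpha^{-1}\mathbf{z}) = (f(\tilde{\mathbf{y}}) - \mathbf{x}) - (\mathbf{n} - \alpha^{-1}\mathbf{z})$. Let $\langle \cdot, \cdot \rangle$ denote the inner product. Then, using the above, (7) can be rewritten as

$$\mathcal{L}_\alpha(f) = \tilde{\mathcal{L}}_\alpha(f) + \mathbb{E}\|\mathbf{n} - \alpha^{-1}\mathbf{z}\|^2 - 2\,\mathbb{E}\langle f(\mathbf{x}) - \mathbf{x}, \mathbf{n} - \alpha^{-1}\mathbf{z}\rangle - 2\,\mathbb{E}\langle f(\mathbf{n}) + \alpha f(\mathbf{z}), \mathbf{n} - \alpha^{-1}\mathbf{z}\rangle. \tag{10}$$

Here the expectations are taken over $\mathbf{x}$, $\mathbf{n}$ and $\mathbf{z}$. We next show that the last two terms on the right-hand side of (10) vanish.

First, since $\mathbf{n}$ has zero mean conditioned on $\mathbf{x}$ by Assumption (A1), it holds that $\mathbb{E}\langle f(\mathbf{x}) - \mathbf{x}, \mathbf{n}\rangle = \mathbb{E}\langle f(\mathbf{x}) - \mathbf{x}, \mathbb{E}[\mathbf{n} \mid \mathbf{x}]\rangle = 0$. The same property applies to $\mathbf{z}$ by Assumption (A2), so $\mathbb{E}\langle f(\mathbf{x}) - \mathbf{x}, \mathbf{z}\rangle = 0$. Taking a linear combination of these two equalities gives

$$\mathbb{E}\langle f(\mathbf{x}) - \mathbf{x}, \mathbf{n} - \alpha^{-1}\mathbf{z}\rangle = 0. \tag{11}$$

Second, let $F$ denote the matrix of the linear map $f$. Since both $\mathbf{n}$ and $\mathbf{z}$ have zero mean conditioned on $\mathbf{x}$, we have $\mathbb{E}[\langle f(\mathbf{n}), \mathbf{n}\rangle \mid \mathbf{x}] = \mathrm{tr}(F\,\mathrm{Cov}(\mathbf{n} \mid \mathbf{x}))$, in which $\mathrm{tr}$ denotes the trace of a matrix. The same equality holds for $\mathbf{z}$, i.e., $\mathbb{E}[\langle f(\mathbf{z}), \mathbf{z}\rangle \mid \mathbf{x}] = \mathrm{tr}(F\,\mathrm{Cov}(\mathbf{z} \mid \mathbf{x}))$. Therefore, it follows from Assumption (A4) that $\mathbb{E}[\langle f(\mathbf{n}), \mathbf{n}\rangle \mid \mathbf{x}] = \mathbb{E}[\langle f(\mathbf{z}), \mathbf{z}\rangle \mid \mathbf{x}]$. Assumption (A3), together with the zero conditional means, makes the cross terms $\mathbb{E}[\langle f(\mathbf{n}), \mathbf{z}\rangle \mid \mathbf{x}]$ and $\mathbb{E}[\langle f(\mathbf{z}), \mathbf{n}\rangle \mid \mathbf{x}]$ vanish. Combining these facts gives

$$\mathbb{E}[\langle f(\mathbf{n}) + \alpha f(\mathbf{z}), \mathbf{n} - \alpha^{-1}\mathbf{z}\rangle \mid \mathbf{x}] = \mathbb{E}[\langle f(\mathbf{n}), \mathbf{n}\rangle \mid \mathbf{x}] - \mathbb{E}[\langle f(\mathbf{z}), \mathbf{z}\rangle \mid \mathbf{x}] = 0. \tag{12}$$

Taking the expectation with respect to $\mathbf{x}$, we have

$$\mathbb{E}\langle f(\mathbf{n}) + \alpha f(\mathbf{z}), \mathbf{n} - \alpha^{-1}\mathbf{z}\rangle = 0. \tag{13}$$

Substituting (11) and (13) into (10) yields (9) with $C = \mathbb{E}\|\mathbf{n} - \alpha^{-1}\mathbf{z}\|^2 = (1 + \alpha^{-2})\,\mathbb{E}\|\mathbf{n}\|^2$, which does not depend on $f$. ∎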

According to Proposition 1, for all linear denoisers, the objective $\mathcal{L}_\alpha$ in (7) differs from the MSE $\tilde{\mathcal{L}}_\alpha$ by a constant not depending on $f$. Therefore, minimizing $\mathcal{L}_\alpha$ over all linear $f$ also leads to the LMMSE estimator. We remark that the objective function (7) is not defined in terms of the ground truth image $\mathbf{x}$, and the distribution of the random vector $\mathbf{z}$ is not necessarily known or equal to that of $\mathbf{n}$. In fact, the denoising problem can be reformulated as follows. Given the observation $\mathbf{y}$, we want to decouple the term $\mathbf{x}$ from the noise $\mathbf{n}$ in

$$\mathbf{y} = \mathbf{x} + \mathbf{n}. \tag{14}$$

In the loss $\mathcal{L}_\alpha$, this is implemented by adding the scaled auxiliary vector to both sides of (14). The vector $\mathbf{z}$ compensates the noise $\mathbf{n}$ in the following sense: when taking the expectation of $\mathcal{L}_\alpha$ over $\mathbf{n}$ and $\mathbf{z}$, the $\mathbf{n}$-related term is canceled by the $\mathbf{z}$-related term (i.e., the last equality in (12)), which leads to (9).
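Proposition 1 can be sanity-checked by simulation. The sketch below is a hypothetical setup with the following assumptions:

- scalar pixels $x \sim U(1, 5)$;
- Poisson observations, so $\mathrm{Var}(\mathbf{n} \mid \mathbf{x}) = x$;
- Gaussian auxiliary noise with the same conditional variance;
- linear denoisers $f(v) = \theta v$.

Because the simulation knows $x$, it can evaluate both (6) and (7). For $\alpha = 0.5$, their difference should be close to $C = (1 + \alpha^{-2})\,\mathbb{E}\|\mathbf{n}\|^2 = 5 \cdot 3 = 15$ for every $\theta$.

```python
import math
import random


def poisson_sample(lam, rng):
    """Draw one Poisson(lam) sample with Knuth's multiplication method (fine for small lam)."""
    limit = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def loss_gap(theta, alpha, n_samples=100_000, seed=0):
    """Monte Carlo estimate of L_alpha(f) - L~_alpha(f) for the linear denoiser f(v) = theta * v.

    Hypothetical setup: x ~ U(1, 5); y ~ Poisson(x), so n = y - x has E[n | x] = 0 and
    Var(n | x) = x; auxiliary z ~ N(0, x), which satisfies (A2)-(A4) although z is Gaussian.
    """
    rng = random.Random(seed)
    l_alpha = l_tilde = 0.0
    for _ in range(n_samples):
        x = rng.uniform(1.0, 5.0)
        y = poisson_sample(x, rng)
        z = rng.gauss(0.0, math.sqrt(x))
        out = theta * (y + alpha * z)              # f(y~) with y~ = y + alpha * z, eq. (5)
        l_alpha += (out - (y - z / alpha)) ** 2    # objective (7): uses only y and z
        l_tilde += (out - x) ** 2                  # MSE (6): needs the clean x
    return (l_alpha - l_tilde) / n_samples


alpha = 0.5
expected_c = (1 + alpha ** -2) * 3.0   # E||n||^2 = E[Var(n | x)] = E[x] = 3, so C = 15
gap_a = loss_gap(0.3, alpha)
gap_b = loss_gap(0.9, alpha)
print(expected_c, round(gap_a, 2), round(gap_b, 2))
```

Both denoisers are evaluated on the same simulated samples (same seed), so the two gaps differ only by a term whose expectation is zero by (12).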

III-C Optimal partially linear denoiser

Although linear denoisers have nice properties that link (7) to the MSE, they may not be the best denoisers for imaging data. Nonlinearity is unavoidable for achieving good denoising quality and preserving fine structures such as edges. To this end, we consider the more general class of partially linear denoisers expressed in (8), where $g_f$ is nonlinear and $r_f$ is not necessarily zero.

In the special case where the denoiser outputs exactly the clean image, i.e., $f(\tilde{\mathbf{y}}) = \mathbf{x}$, $f$ is also partially linear, with $W_f$ and $r_f$ both zero. However, such denoisers do not exist in most settings. Nevertheless, for good denoisers one can still expect the residual term to be small.

The equivalence of the two objective functions stated in Proposition 1 does not hold for nonzero $r_f$. It is therefore of interest to know how well $\mathcal{L}_\alpha$ approximates the MSE in this general case. The next proposition quantifies the approximation error in the presence of a small nonzero residual term $r_f$.

Proposition 2.

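If $\mathbf{n}$ and $\mathbf{z}$ satisfy Assumptions (A1)-(A4) and $f$ satisfies (8), then there is some constant $C$ not depending on $f$ such that

$$\mathcal{L}_\alpha(f) = \tilde{\mathcal{L}}_\alpha(f) - 2\,\mathbb{E}\langle r_f(\mathbf{x}, \tilde{\mathbf{n}}), \mathbf{n} - \alpha^{-1}\mathbf{z}\rangle + C. \tag{15}$$

Additionally, if the variance satisfies $\mathbb{E}\|r_f(\mathbf{x}, \tilde{\mathbf{n}})\|^2 \le \delta$ for some $\delta \ge 0$, then the approximation error satisfies

$$|\mathcal{L}_\alpha(f) - \tilde{\mathcal{L}}_\alpha(f) - C| \le 2\sqrt{\delta\,(1 + \alpha^{-2})\,\mathbb{E}\|\mathbf{n}\|^2}. \tag{16}$$

If, furthermore, the residual $r_f$ is Lipschitz continuous with respect to its noise argument, i.e., $\|r_f(\mathbf{x}, e_1) - r_f(\mathbf{x}, e_2)\| \le \kappa\,\|e_1 - e_2\|$ for some constant $\kappa$, then

$$|\mathcal{L}_\alpha(f) - \tilde{\mathcal{L}}_\alpha(f) - C| \le 4\kappa\,\mathbb{E}\|\mathbf{n}\|^2. \tag{17}$$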

Proof.

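By the decomposition of $f(\tilde{\mathbf{y}})$ in (8),

$$\mathcal{L}_\alpha(f) = \tilde{\mathcal{L}}_\alpha(f) + \mathbb{E}\|\mathbf{n} - \alpha^{-1}\mathbf{z}\|^2 - 2\,\mathbb{E}\langle g_f(\mathbf{x}) - \mathbf{x}, \mathbf{n} - \alpha^{-1}\mathbf{z}\rangle - 2\,\mathbb{E}\langle W_f\tilde{\mathbf{n}}, \mathbf{n} - \alpha^{-1}\mathbf{z}\rangle - 2\,\mathbb{E}\langle r_f(\mathbf{x}, \tilde{\mathbf{n}}), \mathbf{n} - \alpha^{-1}\mathbf{z}\rangle, \tag{18}$$

where $\tilde{\mathbf{n}} = \mathbf{n} + \alpha\mathbf{z}$ is the noise in $\tilde{\mathbf{y}}$ defined in (5). For the function $g_f$, Assumptions (A1) and (A2) give $\mathbb{E}[\langle g_f(\mathbf{x}) - \mathbf{x}, \mathbf{n} - \alpha^{-1}\mathbf{z}\rangle \mid \mathbf{x}] = 0$. Therefore,

$$\mathbb{E}\langle g_f(\mathbf{x}) - \mathbf{x}, \mathbf{n} - \alpha^{-1}\mathbf{z}\rangle = 0. \tag{19}$$

Moreover, as $W_f$ is linear, an argument similar to the proof of (13) shows that

$$\mathbb{E}\langle W_f(\mathbf{n} + \alpha\mathbf{z}), \mathbf{n} - \alpha^{-1}\mathbf{z}\rangle = 0. \tag{20}$$

By (18), (19) and (20), letting $C = \mathbb{E}\|\mathbf{n} - \alpha^{-1}\mathbf{z}\|^2$ gives (15).

Next, we bound $|\mathcal{L}_\alpha(f) - \tilde{\mathcal{L}}_\alpha(f) - C|$. Assuming that $\mathbb{E}\|r_f(\mathbf{x}, \tilde{\mathbf{n}})\|^2 \le \delta$, a straightforward application of the Cauchy-Schwarz inequality leads to

$$|\mathbb{E}\langle r_f(\mathbf{x}, \tilde{\mathbf{n}}), \mathbf{n} - \alpha^{-1}\mathbf{z}\rangle| \le \sqrt{\mathbb{E}\|r_f(\mathbf{x}, \tilde{\mathbf{n}})\|^2}\,\sqrt{\mathbb{E}\|\mathbf{n} - \alpha^{-1}\mathbf{z}\|^2} \le \sqrt{\delta\,(1 + \alpha^{-2})\,\mathbb{E}\|\mathbf{n}\|^2}, \tag{21}$$

where we used $\mathbb{E}\|\mathbf{n} - \alpha^{-1}\mathbf{z}\|^2 = (1 + \alpha^{-2})\,\mathbb{E}\|\mathbf{n}\|^2$, which follows from (A1)-(A4). Therefore (16) holds.

Furthermore, assume that $r_f$ is Lipschitz continuous w.r.t. its noise argument with Lipschitz constant $\kappa$. By (A2) and (A3), $\mathbf{z}$ has zero mean conditioned on $\mathbf{x}$ and $\mathbf{n}$. Hence the conditional expectation satisfies

$$|\mathbb{E}[\langle r_f(\mathbf{x}, \tilde{\mathbf{n}}), \mathbf{z}\rangle \mid \mathbf{x}, \mathbf{n}]| = |\mathbb{E}[\langle r_f(\mathbf{x}, \tilde{\mathbf{n}}) - r_f(\mathbf{x}, \mathbf{n}), \mathbf{z}\rangle \mid \mathbf{x}, \mathbf{n}]| \le \kappa\alpha\,\mathbb{E}[\|\mathbf{z}\|^2 \mid \mathbf{x}, \mathbf{n}],$$

where $\tilde{\mathbf{n}} = \mathbf{n} + \alpha\mathbf{z}$. So $\alpha^{-1}|\mathbb{E}\langle r_f(\mathbf{x}, \tilde{\mathbf{n}}), \mathbf{z}\rangle| \le \kappa\,\mathbb{E}\|\mathbf{z}\|^2 = \kappa\,\mathbb{E}\|\mathbf{n}\|^2$. By symmetry (comparing with $r_f(\mathbf{x}, \alpha\mathbf{z})$ and using (A1) and (A3)), we also have $|\mathbb{E}\langle r_f(\mathbf{x}, \tilde{\mathbf{n}}), \mathbf{n}\rangle| \le \kappa\,\mathbb{E}\|\mathbf{n}\|^2$. Finally,

$$|\mathcal{L}_\alpha(f) - \tilde{\mathcal{L}}_\alpha(f) - C| = 2\,|\mathbb{E}\langle r_f(\mathbf{x}, \tilde{\mathbf{n}}), \mathbf{n}\rangle - \alpha^{-1}\mathbb{E}\langle r_f(\mathbf{x}, \tilde{\mathbf{n}}), \mathbf{z}\rangle| \le 4\kappa\,\mathbb{E}\|\mathbf{n}\|^2,$$

and the inequality (17) follows. ∎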

By the bounds in Proposition 2, if the variance of $r_f$ is small, then the objective (7) provides a good estimate of the MSE (up to the constant $C$). Note that under a Lipschitz continuity assumption on $r_f$, the error bound (16) can be replaced by (17). Unlike (16), whose bound grows like $1/\alpha$ as $\alpha \to 0$, the bound (17) is independent of $\alpha$. In practice, this Lipschitz continuity assumption is not restrictive. We expect the denoiser to be stable with respect to small perturbations in $\tilde{\mathbf{n}}$, and therefore $r_f$ to have a small Lipschitz constant.

Remark 2 (Estimated noise variance).

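If Assumption (A4) does not hold, then the bound (16) also depends on the linear operator $W_f$. Consider for instance $\mathrm{Cov}(\mathbf{z} \mid \mathbf{x}) = \gamma\,\mathrm{Cov}(\mathbf{n} \mid \mathbf{x})$, where $\gamma > 0$. Then Equation (15) becomes $\mathcal{L}_\alpha(f) = \tilde{\mathcal{L}}_\alpha(f) + 2(\gamma - 1)\,\mathbb{E}\,\mathrm{tr}(W_f\,\mathrm{Cov}(\mathbf{n} \mid \mathbf{x})) - 2\,\mathbb{E}\langle r_f(\mathbf{x}, \tilde{\mathbf{n}}), \mathbf{n} - \alpha^{-1}\mathbf{z}\rangle + C$; the proof is given in Appendix A. The minimization of $\mathcal{L}_\alpha$ therefore favors denoisers with a small value of $(\gamma - 1)\,\mathbb{E}\,\mathrm{tr}(W_f\,\mathrm{Cov}(\mathbf{n} \mid \mathbf{x}))$. This property can be used to construct criteria for deciding whether the noise variance is underestimated ($\gamma < 1$) or overestimated ($\gamma > 1$), e.g., by checking the linear part $W_f$ of the minimizer of $\mathcal{L}_\alpha$. Examples will be given in Section IV-B.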

Next, we take a closer look at the errors of the proposed denoisers. We first introduce the set of partially linear denoisers. Second, we estimate the distance between the minimizers of $\tilde{\mathcal{L}}_\alpha$ and $\mathcal{L}_\alpha$ over this set. Third, we verify the convergence of the proposed denoisers to the best denoisers for corrupted images generated from a single ground truth image. Finally, focusing on a special subset of images that consists of constant patches, we demonstrate the partial linearity of the optimal denoisers with an example.

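For $\delta \ge 0$, let $\mathcal{F}_\delta$ be the set of denoisers satisfying (8) with the variance of $r_f$ less than or equal to $\delta$, i.e.,

$$\mathcal{F}_\delta = \{f : f \text{ satisfies (8) and } \mathbb{E}\|r_f(\mathbf{x}, \tilde{\mathbf{n}})\|^2 \le \delta\}.$$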

Lemma 3 (Convexity).

For any $\delta \ge 0$, $\mathcal{F}_\delta$ is a convex set.

Proof.

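If $f_1, f_2 \in \mathcal{F}_\delta$, then there exist functions $g_1, g_2$, linear operators $W_1, W_2$ and residual terms $r_1, r_2$ such that

$$f_i(\tilde{\mathbf{y}}) = g_i(\mathbf{x}) + W_i\tilde{\mathbf{n}} + r_i(\mathbf{x}, \tilde{\mathbf{n}}), \qquad \mathbb{E}\|r_i(\mathbf{x}, \tilde{\mathbf{n}})\|^2 \le \delta, \qquad i = 1, 2.$$

For any $t \in [0, 1]$ and $f = t f_1 + (1 - t) f_2$,

$$f(\tilde{\mathbf{y}}) = (t g_1 + (1 - t) g_2)(\mathbf{x}) + (t W_1 + (1 - t) W_2)\tilde{\mathbf{n}} + (t r_1 + (1 - t) r_2)(\mathbf{x}, \tilde{\mathbf{n}}).$$

It is easy to see that $t W_1 + (1 - t) W_2$ is also linear. By the convexity of $\mathbb{E}\|\cdot\|^2$, we have $\mathbb{E}\|t r_1 + (1 - t) r_2\|^2 \le t\,\mathbb{E}\|r_1\|^2 + (1 - t)\,\mathbb{E}\|r_2\|^2 \le \delta$. Therefore $f \in \mathcal{F}_\delta$, which implies that $\mathcal{F}_\delta$ is convex. ∎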

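We are interested in the denoisers in $\mathcal{F}_\delta$ with the smallest possible MSE. More precisely, for small $\delta$, we define the MMSE denoiser, denoted by $f^*_\delta$, as one of the denoisers in $\mathcal{F}_\delta$ that satisfy

$$f^*_\delta \in \operatorname*{arg\,min}_{f \in \mathcal{F}_\delta} \tilde{\mathcal{L}}_\alpha(f), \tag{22}$$

where $\tilde{\mathcal{L}}_\alpha$ is defined in (6). Note that $f^*_\delta$ exists for arbitrarily small positive $\delta$. Based on the approximation properties stated in Proposition 2, we propose a denoiser $\hat{f}_\delta$ satisfying

$$\hat{f}_\delta \in \operatorname*{arg\,min}_{f \in \mathcal{F}_\delta} \mathcal{L}_\alpha(f) \tag{23}$$

as an alternative to $f^*_\delta$. In light of the theoretical bound (16), the distance between $f^*_\delta$ and $\hat{f}_\delta$ has an upper bound, described in the following proposition.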

Proposition 3. Let the denoisers $f^*_\delta$ and $\hat{f}_\delta$ be given by (22) and (23), respectively. If $\mathbf{n}$ and $\mathbf{z}$ satisfy Assumptions (A1)-(A4) and , it holds that

where the expectation is taken over $\mathbf{x}$, $\mathbf{n}$ and $\mathbf{z}$. The proof is given in Appendix A. The bound in Proposition 3 converges to as tends to , and it converges to as and converge to zero.

Building on Proposition 3, we further analyze the convergence of the proposed solutions for a special case where all realizations of the corrupted images are generated from the same clean image. The next corollary shows that, in this setting, the approximation error is at most of the same order as , regardless of the noise distributions or the noise variance. The proof of this corollary is given in Appendix A.

Corollary 3. Assume that the conditions in Proposition 3 hold. If $\mathbf{x}$ follows a delta distribution, i.e., $P(\mathbf{x} = x_0) = 1$ for some image $x_0$, then

| (24) |

Proposition 3 and Corollary 3 show that the minimizers of $\mathcal{L}_\alpha$ are good estimates of the best partially linear denoiser when $\delta$ is small. In principle, the residual variance $\delta$ should be much smaller than the noise level itself, since this is a necessary condition for the denoiser to be robust to different realizations of the noise. We can provide more insight into the size of the residual term for high-quality denoisers applied to a special subset of images. Smooth regions are among the most important components of natural images, and they often account for a large fraction of the pixels. Here we study the basic case of constant images with pixelwise independent noise. The following example demonstrates the scale of the residual term of the minimum MSE denoiser for constant image patches.

Example (Constant patches).

Assume that models constant patches of size pixels. The pixel values are uniformly distributed in where is a constant. The noise is pixelwise independent conditioned on . For pixel , where is the Poisson distribution. The optimal denoised images , where minimizes the cost , are therefore constant images. In this example, given and , the quantities and (defined in (8)) are computed using Remark 1. Consequently, , and are constant images. The values of are plotted versus (for different ground truth values and for different ) on the last two rows of Fig. 2, where the results are computed based on a deep CNN approximation to . Each yellow dot in the plot represents a realization of the noise, and the blue line represents . The plots suggest that has small variances and hence the set maintains good approximations to for small .

[Fig. 2, panels (a), (b), (c)]

III-D Learning a denoiser from noisy samples

To learn the denoiser, we consider denoising models $f_\theta$ parameterized by $\theta$, e.g., deep CNNs. Suppose that a set of realizations $\{y_i\}_{i=1}^N$ of $\mathbf{y}$ is given, e.g., the set of noisy images that one wants to denoise. The noise in these noisy images satisfies Assumption (A1). Associated with each $y_i$, we define $z_i$ as a realization of an auxiliary random vector $\mathbf{z}$ that satisfies (A2)-(A4). Assumptions (A2) and (A4) specify only the first two moments of $\mathbf{z}$, not its distribution. Therefore, without loss of generality, one can randomly generate $z_i$ from normal distributions. With the auxiliary vectors $z_i$, the samples $\tilde{y}_i$ of $\tilde{\mathbf{y}}$ are computed according to Equation (5). Based on the objective function (7), we minimize the empirical loss function

$$\min_\theta \ \frac{1}{N}\sum_{i=1}^{N} \|f_\theta(\tilde{y}_i) - (y_i - \alpha^{-1} z_i)\|^2 \tag{25}$$

under the condition that $f_\theta \in \mathcal{F}_\delta$. This condition can be implemented with the partial linearity constraint described below.
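The following is a minimal sketch of minimizing the empirical loss (25) under simplifying assumptions: scalar pixels, Gaussian noise with known $\sigma$, and an affine model $f_\theta(v) = a v + b$ in place of a deep CNN. An affine model is partially linear with $r_f = 0$, so the constraint $f_\theta \in \mathcal{F}_\delta$ holds trivially here, and (25) can be minimized in closed form. The clean pixels are generated only to compare against a supervised fit on the same inputs. The helper `fit_affine` is hypothetical.

```python
import random


def fit_affine(inputs, targets):
    """Closed-form least-squares fit of f(v) = a * v + b; returns (a, b)."""
    n = len(inputs)
    m_in = sum(inputs) / n
    m_t = sum(targets) / n
    cov = sum((u - m_in) * (t - m_t) for u, t in zip(inputs, targets)) / n
    var = sum((u - m_in) ** 2 for u in inputs) / n
    a = cov / var
    return a, m_t - a * m_in


rng = random.Random(0)
sigma, alpha, N = 0.3, 0.5, 100_000
xs = [rng.random() for _ in range(N)]                 # clean pixels, used only for comparison
ys = [x + rng.gauss(0.0, sigma) for x in xs]          # the only data the method needs
zs = [rng.gauss(0.0, sigma) for _ in range(N)]        # auxiliary samples with Cov(z) = Cov(n)
y_tilde = [y + alpha * z for y, z in zip(ys, zs)]     # inputs, eq. (5)
targets = [y - z / alpha for y, z in zip(ys, zs)]     # targets of the empirical loss (25)

a_unsup, b_unsup = fit_affine(y_tilde, targets)       # minimizes (25): no clean images used
a_sup, b_sup = fit_affine(y_tilde, xs)                # supervised fit on the same inputs
print(round(a_unsup, 3), round(a_sup, 3), round(b_unsup, 3), round(b_sup, 3))
```

With a deep network, the same pairs $(\tilde{y}_i, y_i - \alpha^{-1} z_i)$ would instead be fed to a gradient-based optimizer. The partial linearity constraint would then no longer hold automatically.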

Partial linearity constraint. To preserve partial linearity during training, the variance of the residual term $r$ in (8) must be restricted. Unfortunately, a direct evaluation of $\mathbb{E}\|r\|^2$ is not feasible, for two reasons: 1) the formulation of $r$ depends on $g$ and $W$, which are unknown, and 2) only one noisy observation of each image is given. However, since $g(\mathbf{x})$ does not depend on the noise and $W$ is linear, we can remove these two terms by perturbing $\tilde{\mathbf{y}}$. One simple way of implementing this is to find two perturbed versions of $\tilde{\mathbf{y}}$, satisfying where . Let the residual terms associated with the two perturbed versions be denoted by $r_1$ and $r_2$, respectively. Then, from Equation (8) we have

| (26) |

Since and are assumed to be small, the representation of the residual terms in (26) suggests penalizing for some metric .

III-E Learning image deblurring without ground truth images

The proposed partially linear denoisers can be generalized to learning image reconstructors for other linear inverse problems such as image deblurring, in particular when the ground truth images are missing. We repeat the observation model (3) here

| (27) |

where is a linear forward operator (such as the Radon transform for CT reconstruction or a convolution operator for image deblurring), and models the zero-mean noise. For many inverse imaging problems, the naive reconstruction is based on a direct inversion of the linear operator given by the pseudo-inverse operator . Due to the ill-conditioning of , such an inversion is typically unstable and therefore inaccurate in the presence of noise. If , however, has full column rank, then the resulting artifacts in the reconstruction could be remedied by denoising . In fact, it is easy to see that has zero mean. The covariance of can be estimated as long as the covariance of is known. Therefore, a straightforward application of the partially linear denoisers to the noisy reconstruction leads to an estimate of . Various approaches have been proposed for denoising in a data-driven post-processing setup (see e.g., [13, 11]). These learned post-processing techniques require noisy-clean image pairs as training data. Another class of methods, in contrast, leverages denoisers to construct regularizers for (27). For instance, the Regularization by Denoising (RED) methods [28, 12] minimize a variational objective function with a regularization term derived from a denoiser. The Plug-and-Play Prior framework [37, 33] is based on variable splitting algorithms for the Maximum a Posteriori (MAP) optimization problem, in which the proximal mapping associated with the regularization term is replaced by a denoiser. These denoiser-based regularization approaches require predefined denoisers. Our framework, in contrast, requires neither noisy-clean pairs nor a predefined denoiser. In particular, the proposed partially linear denoiser allows training an estimator from noisy data alone.
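The zero-mean and covariance claims can be made explicit. Writing the observation model as y = Ax + n with E[n] = 0 and Cov(n) = Σ_n, and A⁺ = (AᵀA)⁻¹Aᵀ for A of full column rank (our notation, since the symbols of (27) are not reproduced here), one has:

```latex
A^{+}y = A^{+}Ax + A^{+}n = x + A^{+}n, \qquad
\mathbb{E}[A^{+}n] = A^{+}\,\mathbb{E}[n] = 0, \qquad
\operatorname{Cov}(A^{+}n) = A^{+}\,\Sigma_n\,(A^{+})^{\top}.
```

The naive reconstruction is thus the clean image plus zero-mean noise whose covariance is computable from Σ_n; note that this covariance is in general not diagonal, i.e., the noise in A⁺y is correlated across pixels.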

Image deblurring is a special case of (27) where represents a convolution with a blur kernel. In practice, is governed by different imaging factors, including motion and camera focus. In the context of the proposed partially linear denoiser, one way to solve the deblurring problem is to train a single model that maps the measurement directly to . Here, knowledge of is indirectly encoded in . Assuming that the training data contain noisy samples of and the blurring kernel but no ground truth images, one can, similarly to (7), minimize the loss function

| (28) |

and in this case, acts as a partially linear denoiser. At test time, the deblurred image can be computed directly as without knowing the operator . These considerations also connect the partially linear denoiser to the deep image prior approach [35]. There, an implicit regularizer based on a convolutional neural network is introduced, and only a data-fitting loss is minimized during training. Early stopping is applied to prevent the estimator from overfitting to the noise. With the partial linearity structure, in contrast, we establish a connection between the cost (28) and the MSE with respect to the noise-free measurement, so that the estimator is driven toward rather than toward its noisy versions.

IV Experiments

In this section, we report experimental results to demonstrate the effectiveness of the proposed approach for different denoising tasks and for deblurring (the code will be made available at https://github.com/RK621/Unsupervised-Restoration-PLD). First, we compare the partial linearity of classical denoisers, including total variation (TV) denoising [30] and BM3D [6], as well as CNN-based denoisers (see Subsection IV-A for details). Second, we evaluate our method on denoising problems with synthetic noise (Subsections IV-B1 and IV-B3) and analyse its robustness to errors in the estimate of the noise variance (Subsection IV-B2). Third, a numerical study of the stability of our approach with respect to varying noise levels is given, and the importance of the partially linear constraint is investigated (Subsection IV-C). Fourth, the proposed approach is used to denoise real microscopy images (Subsection IV-D). Finally, we apply our approach to learn an image deblurring model, using a set of single noisy and blurry observations of the images as training data. The details of the learning methods and the results for the deblurring experiment are presented in Subsection IV-E.

IV-A Partial linearity of denoisers

In this test, we investigate the partial linearity of some existing standard denoising approaches, including the TV approach [30], BM3D [6] and DnCNN [41]. For the convenience of the readers, the formula of the partially linear denoiser (7) is repeated here

| (29) |

where is the underlying denoiser. Here, the DnCNN is trained by minimizing the standard empirical MSE loss (6) on a training set of images with ground truth [41]. For a given image , we compute the decomposition (29) using Remark 1. Specifically, and , where the expectation is taken over , and denotes the set of linear operators. In this example, we use a standard test image called Parrot (cf. top middle of Fig. 1) as the ground truth image, i.e., a realization of . The noise is i.i.d. Gaussian with zero mean and standard deviation (on a scale of 256 gray levels). The expectations are evaluated using random realizations of the pair .

| Denoiser | TV | BM3D | DnCNN |

| PSNR (dB) | 27.62 | 28.87 | 29.47 |

| Modified PSNR (dB) | 27.82 | 29.00 | 29.60 |

For the three denoisers, we report in Table I the variance of averaged over the pixels of the Parrot image (i.e., , where denotes the number of pixels in ). A comparison of the accuracy of the methods, measured in PSNR, is also given in the table. All figures reported in the table are averaged over independent runs with different realizations of . According to the table, DnCNN achieves the best denoising quality, outperforming BM3D by about 0.6 dB and the TV approach by about 1.85 dB in PSNR. All three methods have less than , and the value for the CNN denoiser is about half of that of the TV method.
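To make the Monte Carlo evaluation behind Table I concrete, the sketch below treats the simplest case: a single pixel, Gaussian noise, and a denoiser that acts pixelwise. For a pixelwise denoiser and independent noise, the best linear map of the noise reduces to one least-squares slope, so the residual variance can be estimated from samples. The helper names and the soft-thresholding example are illustrative assumptions; TV, BM3D, and DnCNN are not pixelwise, so for them Remark 1 requires fitting a full linear operator.

```python
import random


def decompose_pixelwise(f, x, sigma, n_samples, rng):
    """Monte Carlo fit of f(x + n) = g + w * n + r at a single pixel.

    g : sample mean of f(x + n), estimating E[f(x + n)]
    w : least-squares slope of f(x + n) on n (best linear map of the noise)
    Returns (g, w, residual variance).
    """
    noise = [rng.gauss(0.0, sigma) for _ in range(n_samples)]
    outputs = [f(x + n) for n in noise]
    g = sum(outputs) / n_samples
    n_mean = sum(noise) / n_samples
    var_n = sum((n - n_mean) ** 2 for n in noise) / n_samples
    cov = sum((o - g) * (n - n_mean) for o, n in zip(outputs, noise)) / n_samples
    w = cov / var_n
    residuals = [(o - g) - w * (n - n_mean) for o, n in zip(outputs, noise)]
    return g, w, sum(r * r for r in residuals) / n_samples


def soft_threshold(y, t=0.1):
    """Toy nonlinear pixelwise denoiser that shrinks values toward zero."""
    if y > t:
        return y - t
    if y < -t:
        return y + t
    return 0.0


if __name__ == "__main__":
    rng = random.Random(0)
    print(decompose_pixelwise(lambda y: 0.5 * y + 0.1, 0.3, 0.1, 5000, rng))  # residual ~ 0
    print(decompose_pixelwise(soft_threshold, 0.05, 0.1, 5000, rng))  # residual > 0
```

An exactly affine map yields a residual variance at machine precision, while soft thresholding leaves a clearly positive residual.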

Fig. 3 panels: (a) TV, (b) BM3D, (c) DnCNN.

Given a denoiser , in order to study the partial linearity we consider the pair

| (30) |

where denotes a fixed pixel (marked by the red dot in the first row of Fig. 3). To visualize the linearity of the three denoisers, the pair (30) is plotted in the second row of Fig. 3 for realizations of the noise . Each point in the plot corresponds to one realization. As shown in the figure, all the denoisers exhibit some degree of partial linearity at the pixel . For the TV denoiser, the residual has a relatively large variance (consistent with Table I), and there are some outliers for large .

IV-B Denoising experiments

We consider two types of synthetic noise (Gaussian and Poisson) and compare the performance of our method with recent denoising methods that are not trained on ground truth images, namely SURE [32], Noise2Self [2], and Noisier2Noise [27], as well as with the classic BM3D approach [6]. Throughout the denoising experiments, we use the same network architecture, DnCNN [41], for the fully supervised baseline (hereafter referred to as DnCNN), SURE, Noise2Self, Noisier2Noise, and our approach. The experimental setups for these five methods are the same, except that the clean images are used to train DnCNN, while they are unseen by the other four methods during learning. All models are trained on a benchmark denoising dataset [41] consisting of training images of size . In the training phase, we feed the CNNs with image patches of size and use a batch size of . Augmentations such as random flipping and random cropping are applied to the patches. In the inference phase, the inputs to the networks are the whole noisy images. In particular, our approach does not include the auxiliary vector at this stage, and the denoised images are the network outputs without any post-processing. We evaluate the denoising quality on two test sets, namely BSD68 (containing 68 images) [26] and the 12 widely used images (Set12) [6].

Training. To train our denoising model, we minimize the loss function (25) using the Adam optimizer [14]. The minimization process consists of two stages. In the first stage, we fix and minimize the loss function (25) without constraints. In the second stage, we randomly choose for each of the noisy samples and, in order to control the variance of defined in (8), additionally impose the partially linear constraint (26), whose implementation is detailed in the next paragraph. Both stages contain optimization steps with an initial learning rate , which then drops to and at the th step and th step, respectively. Throughout the experiments, , i.e., the samples of the auxiliary random vector in (25), are generated from Gaussian distributions.
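The two-stage optimization can be summarized as a small schedule helper. The step counts, milestones, and learning rates are elided in this text, so the constants below are placeholders; the assumption that the learning-rate schedule restarts at the beginning of the second stage is ours, as are the helper names `lr_at` and `constraint_active`.

```python
from bisect import bisect_right


def lr_at(step, lrs, milestones):
    """Piecewise-constant learning rate within one training stage.

    lrs[0] is the initial rate; lrs[k] takes effect at step milestones[k - 1].
    """
    return lrs[bisect_right(milestones, step)]


def constraint_active(global_step, steps_per_stage):
    """The partially linear penalty is switched on only in the second stage."""
    return global_step >= steps_per_stage


# Placeholder values, not taken from the paper.
STEPS_PER_STAGE = 30000
LRS = [1e-3, 1e-4, 1e-5]
MILESTONES = [15000, 25000]

if __name__ == "__main__":
    for global_step in (0, 15000, 30000, 55000):
        local_step = global_step % STEPS_PER_STAGE  # assumes each stage restarts the schedule
        print(global_step, lr_at(local_step, LRS, MILESTONES),
              constraint_active(global_step, STEPS_PER_STAGE))
```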

Partially linear constraint. We implement the partially linear constraint by perturbing the noisy images (i.e., samples of the noisy image ) and by following the formula (26). Specifically, for each let and be random numbers uniformly distributed in , and let be a perturbation vector randomly generated from the same distribution as . With we construct two perturbed versions of as and respectively. Then we have the linear relationship

where and . In practice, we let be independent of . Since the noise levels of and depend on the pixel values of , we make sparse to avoid raising the noise level too much. To do this, we randomly select of the pixels such that their pairwise distances are at least pixels. The remaining pixels of are set to zero, i.e., no perturbations are applied to these pixels. To avoid outliers at individual pixels caused by the perturbations, we also clip such that the pixel values and lie in the range , where and . With and , and based on Property (26), we add the following penalty term to the loss function

| (31) |

where are the network parameters and is a diagonal matrix whose diagonal entries are set as

if , otherwise , where is the square root of the largest pixel-wise variance of the noise . Note that having in the loss (31) means that we penalize the nonlinearity at the perturbed pixels only. The term prevents division by very small numbers. In summary, the loss function is where is defined in (25), and is a hyperparameter which can be tuned based on the quality of the denoised images.
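The constraint can be sketched end to end under explicit assumptions. We assume the two perturbed images have the form y + a*v and y - b*v with a, b > 0, so that b*(y + a*v) + a*(y - b*v) = (a + b)*y; a denoiser that is exactly affine along v then satisfies b*f(y + a*v) + a*f(y - b*v) - (a + b)*f(y) = 0, and the weighted size of this combination measures nonlinearity. This form, the weight 1/(|v_i| + eps*s) used for the diagonal entries, and the random-offset lattice used to obtain the minimum pixel spacing are stand-ins of our own, not a transcription of (31) or of the authors' sampler. A lattice is convenient because any two distinct lattice sites are at least `spacing` pixels apart.

```python
import random


def lattice_mask(height, width, spacing, keep_prob, rng):
    """Pixel positions on a randomly offset lattice (hypothetical sampler).

    Distinct lattice sites are at least `spacing` pixels apart; keeping each
    site with probability keep_prob selects roughly keep_prob / spacing**2
    of all pixels.
    """
    oy, ox = rng.randrange(spacing), rng.randrange(spacing)
    return [(i, j)
            for i in range(oy, height, spacing)
            for j in range(ox, width, spacing)
            if rng.random() < keep_prob]


def clip_perturbation(y, v, a, b, lo, hi):
    """Clip v so that y + a*v and y - b*v stay in [lo, hi] (assumes a, b > 0).

    Where no admissible value exists (y itself outside [lo, hi]), the
    perturbation is set to zero.
    """
    clipped = []
    for yi, vi in zip(y, v):
        lower = max((lo - yi) / a, (yi - hi) / b)
        upper = min((hi - yi) / a, (yi - lo) / b)
        clipped.append(0.0 if lower > upper else min(max(vi, lower), upper))
    return clipped


def linearity_penalty(f, y, v, mask, a, b, s, eps=0.1):
    """Weighted squared deviation of f from affinity along the direction v.

    f    : denoiser mapping a flat list of pixels to a flat list of pixels
    mask : flat indices of the perturbed pixels (all other weights are zero)
    s    : square root of the largest pixelwise noise variance
    eps  : hypothetical constant that keeps the weights bounded
    """
    y1 = [yi + a * vi for yi, vi in zip(y, v)]
    y2 = [yi - b * vi for yi, vi in zip(y, v)]
    f0, f1, f2 = f(y), f(y1), f(y2)
    penalty = 0.0
    for i in mask:
        combo = b * f1[i] + a * f2[i] - (a + b) * f0[i]  # zero if f is affine
        weight = 1.0 / (abs(v[i]) + eps * s)  # hypothetical diagonal entry
        penalty += (weight * combo) ** 2
    return penalty


if __name__ == "__main__":
    rng = random.Random(0)
    y = [rng.random() for _ in range(16)]  # hypothetical 4x4 noisy image, flattened
    mask = {i * 4 + j for i, j in lattice_mask(4, 4, 2, 1.0, rng)}
    v = [rng.gauss(0.0, 0.1) if k in mask else 0.0 for k in range(16)]
    v = clip_perturbation(y, v, a=0.5, b=0.5, lo=0.0, hi=1.0)
    print(linearity_penalty(lambda z: [zi * zi for zi in z], y, v, mask, 0.5, 0.5, s=0.1))
```

In training, a term of this kind would be multiplied by the hyperparameter and added to the loss (25).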

IV-B1 Gaussian noise

In this experiment, we test the denoisers on restoring images corrupted by Gaussian white noise. The training sets for our unsupervised approach consist of the noisy images. We consider two noise levels, with standard deviations and (on a scale of 256 gray levels). For each noise level, a denoiser is trained with the proposed method, and the ground truth images are unseen during training. In both cases, the parameter for the partially linear constraint term (31) is set to .

The test results for BSD68 [26] are reported in Table II, where we call our method DPLD (deep partially linear denoiser). The denoising quality is measured by the peak signal-to-noise ratio (PSNR) and the structural similarity (SSIM) index. We compare our denoiser with BM3D [6], the self-supervised method Noise2Self [2], SURE [32], Noisier2Noise [27] as well as the fully-supervised DnCNN [41]. It is worth mentioning that the last denoiser DnCNN, in contrast to the other five, requires the noisy-clean image pairs for training.
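For reference, PSNR is 10*log10(peak^2/MSE). A minimal sketch follows, assuming 8-bit intensities (peak = 255) and flat pixel lists; SSIM is omitted because its windowed local statistics make it considerably longer.

```python
import math


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio (dB) between two flat images of equal size."""
    if len(reference) != len(estimate):
        raise ValueError("images must have the same number of pixels")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0.0:
        return math.inf
    return 10.0 * math.log10(peak * peak / mse)


print(round(psnr([0.0] * 100, [1.0] * 100), 2))  # MSE = 1, prints 48.13
```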

| Noise Level | ||||

| Measure | PSNR | SSIM | PSNR | SSIM |

| BM3D[6] | ||||

| Noise2Self[2] | ||||

| SURE[32] | ||||

| Noisier2Noise[27] | ||||

| DPLD | ||||

| DnCNN[41] | ||||

As shown in Table II, the fully supervised denoiser DnCNN achieves the best accuracy. This is not surprising, as it learns from the ground truth images, which are not available to the other methods. Our method is the best among the denoisers trained without ground truth. It outperforms Noise2Self, SURE, and Noisier2Noise by dB, dB, and dB, respectively, for noise level , and by dB, dB, and dB, respectively, for . Compared to DnCNN, the PSNR values of DPLD are lower by dB and dB for noise levels and , respectively.

Table III (PSNR in dB on Set12, two Gaussian noise levels): rows BM3D, Noise2Self, SURE, Noisier2Noise, DPLD, DnCNN for each noise level; columns Average, C. man, House, Peppers, Starfish, Monarch, Airplane, Parrot, Lena, Barbara, Boat, Man, Couple.

The comparison of the denoisers on the 12 widely used images (Set12) [6] is given in Table III. For noise level , DPLD reaches the best PSNR on all images among the five denoisers that do not use ground truth data, except for Parrot and Barbara. Interestingly, BM3D performs better than the fully supervised DnCNN on Barbara. On average, for , DPLD outperforms Noise2Self by dB and SURE by dB, and falls behind DnCNN by dB.

Fig. 4 panels (left to right): Noisy, Noise2Self, SURE, Noisier2Noise, DPLD, DnCNN.

Fig. 4 displays the denoising results for the image Boat. Although Noise2Self, SURE, Noisier2Noise, and DPLD neither see the clean images nor use any explicit smoothness constraint during training, they yield denoised images with smooth regions (cf. the 2nd to 5th columns of Fig. 4, respectively). The image produced by DPLD is visually close to that of DnCNN. The output of Noise2Self has somewhat blurrier edges than those of DPLD and DnCNN, e.g., at the letters displayed in the last row of Fig. 4.

IV-B2 Training with estimated noise variance

We report additional results for scenarios in which only an inaccurate estimate of the noise variance (ENV) is available during training (for generating ). The results are obtained under the same settings as in Subsection IV-B1, except that the ENV is used.


On the top row of Fig. 5, the PSNR values of the test results on BSD68 [26], for both noise levels and , are plotted against the relative errors in the ENV (where ). For both noise levels, the errors in the ENV lead to a decrease in PSNR. The reduction is less significant when the noise variance is underestimated than when it is overestimated. The drop in PSNR is small when the error in the ENV is small ( dB for an ENV % less than its true value), and our method maintains an accuracy significantly better than Noise2Self [2] for small errors in the ENV.

-

-

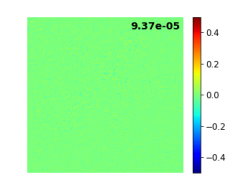

We observe a correlation between the errors in the ENV and the term (see Fig. 5, bottom row). Here, is computed based on Remark 1, with a constant ground truth image (pixel values: , size: ) and generated from the same distribution as (variance: ). In the plots, is a small positive number when , and it increases (resp. decreases) as the ENV decreases (resp. increases). This dependence is due to the extra term in the objective function, as shown in Remark 2.

Overall, the performance is robust to small errors in the ENV. Importantly, by analyzing the structure of the learned denoiser, one can diagnose errors in the noise variance: the quantity is helpful for choosing a better denoising model when the noise variance is unknown.

IV-B3 Poisson noise

We evaluate our method on three different levels of Poisson noise, with parameter , and respectively. The training settings for the denoisers are the same as those for Gaussian noise, except that the variances of the auxiliary vector samples are computed differently. Since the noise is image-dependent and not identically distributed across pixels, its variance cannot be computed without the ground truth image. Note, however, that our method does not require a precise model of the noise distribution, only an estimate of the noise variance. At pixel , the noisy value satisfies , where is a known constant, so the noise variance is . This implies that the sample value of provides an unbiased estimate of the noise variance. The entries of the auxiliary vector are then generated as , where is a standard Gaussian random variable; hence . The partially linear constraint parameter for (31) is tuned manually for the three noise levels. In this subsection, unless specified otherwise, the results are obtained by setting for , respectively.
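The variance estimate can be checked numerically. The sketch assumes the scaled Poisson model lam*y_i ~ Pois(lam*x_i), with lam the known constant (our reading of the formula above), so that Var(y_i | x_i) = x_i/lam and E[y_i/lam | x_i] = x_i/lam; the auxiliary entries are then drawn as z_i = sqrt(y_i/lam)*xi_i with xi_i standard Gaussian, which gives E[z_i^2 | x_i] = x_i/lam. Python's standard library has no Poisson sampler, so Knuth's multiplication method is used; it is adequate only for moderate means. The function names are hypothetical.

```python
import math
import random


def sample_poisson(mean, rng):
    """Knuth's multiplication method; adequate for moderate means only."""
    limit = math.exp(-mean)
    k, p = 0, rng.random()
    while p > limit:
        k += 1
        p *= rng.random()
    return k


def poisson_observation(x, lam, rng):
    """Noisy pixel under the assumed model lam * y ~ Poisson(lam * x)."""
    return sample_poisson(lam * x, rng) / lam


def auxiliary_entry(y, lam, rng):
    """z = sqrt(y / lam) * xi, so that E[z**2 | x] = E[y | x] / lam = x / lam."""
    return math.sqrt(y / lam) * rng.gauss(0.0, 1.0)


if __name__ == "__main__":
    rng = random.Random(0)
    x, lam = 0.5, 30.0  # hypothetical clean intensity and Poisson scale
    ys = [poisson_observation(x, lam, rng) for _ in range(20000)]
    zs = [auxiliary_entry(y, lam, rng) for y in ys]
    print(sum(z * z for z in zs) / len(zs), x / lam)  # both close to 1/60
```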

| Noise Level | Noise2Self[2] | DPLD | DnCNN[41] | |||

| PSNR | SSIM | PSNR | SSIM | PSNR | SSIM | |

For comparison, we train denoisers with Noise2Self [2] and DnCNN [41] on the same training set (the ground truth images being available only to DnCNN). The denoising results on the test set BSD68 are reported in Table IV. Trained on the ground truth images, DnCNN has the highest average PSNR for all three noise levels. The proposed method DPLD outperforms Noise2Self by dB, dB, and dB for the cases with , respectively. On the other hand, it loses dB, dB, and dB compared to DnCNN. Note that as the noise level decreases, the PSNR gap between DPLD and DnCNN gets smaller. In contrast, the gap between Noise2Self and DnCNN becomes larger as the noise becomes weaker. This may be because the Noise2Self approach cannot learn the identity mapping: for a given pixel, the denoiser cannot see its observed value and has to infer it from its neighboring pixels. If knowledge about the noise distribution is available, the results can be improved by reusing the noisy images in the inference phase [16]. Our denoiser uses all the information in the noisy image and, as shown in Proposition 2, the gap between DPLD and the best denoiser in tends to zero as the noise goes to zero.

Table V (PSNR in dB on Set12, three Poisson noise levels): rows Noise2Self, DPLD, DnCNN for each noise level; columns Average, C. man, House, Peppers, Starfish, Monarch, Airplane, Parrot, Lena, Barbara, Boat, Man, Couple.

Table V lists the PSNR of the denoising outputs for the 12 widely used images (Set12) [6]. As in the Gaussian noise case, the proposed DPLD has higher PSNR values than Noise2Self on all images. DPLD outperforms Noise2Self by more than dB in average PSNR and falls behind DnCNN by less than dB.

Fig. 6 panels (left to right): Noisy, Noise2Self, DPLD, DnCNN, Ground truth.

Fig. 6 displays an example of the denoised images. It shows that Noise2Self, DPLD, and DnCNN are all capable of recovering the details of the image, although the first two never see clean images during training. Compared to DPLD and DnCNN, the denoised image of Noise2Self is less smooth. Also, Noise2Self tends to remove the sharp points of a jagged edge (cf. the third row of Fig. 6): since it relies on the surrounding pixels to restore a pixel at a sharp point, it may favor more regular object shapes.

Fig. 7 panels: first row: Ground truth, DnCNN error, Noise2Self error, Ours error; second row: Noise, DnCNN residual, Noise2Self residual, Ours residual.

Finally, the residual terms (defined in (4)) of the three methods on the test image Starfish are presented in Fig. 7. For each method, to compute , we first compute and via Remark 1, using its denoised outputs for 1,600,000 realizations of the noisy image . This experiment is carried out under the setting of Poisson noise with . As can be seen from Fig. 7, the error (i.e., the difference between the ground truth and the denoised image) is an order of magnitude smaller than the noise, while the residual term is an order of magnitude smaller than the error. The variance of for the proposed denoiser is slightly smaller than that of the fully supervised DnCNN, while for Noise2Self it is about twice as large as for the other two.

IV-C Stability with respect to the partially linear constraint and noise levels

In this experiment, we study the stability of the proposed denoiser with respect to two parameters: and the noise level. Here we consider Poisson noise with fixed for training, under the same experimental setting as in Subsection IV-B3.

First, we study the stability with respect to . Fig. 8 shows the PSNR and SSIM, evaluated on the test set BSD68, for different choices of used during training. The horizontal lines in Fig. 8 show the PSNR and SSIM of the other two methods, DnCNN and Noise2Self (N2S), which do not depend on . According to the figure, the learned denoiser has very low PSNR values when is very small (e.g., , which places little weight on the constraint). This implies that the partial linearity is crucial for finding a high-quality denoiser when minimizing (25). As becomes larger, the constraint takes effect and substantially improves the denoising performance. The PSNR peaks at around . For in , we observe a PSNR variation of less than dB, which is small compared to the gap between N2S and DnCNN, before the denoising quality degrades at very large values (e.g., ).

Fig. 9 compares the robustness of the learned denoisers with respect to the noise level. All denoisers are trained in the Poisson noise setting (again with ), and in our approach the optimal (i.e., ) is applied. All methods suffer from a degradation in denoising quality when at test time is below . This is expected, since a smaller implies stronger noise and hence a harder problem. Moreover, DPLD and Noise2Self, which use only noisy images in training, are more stable than DnCNN when is around . The PSNR of DnCNN decreases quickly as decreases from , and it is worse than DPLD when . Interestingly, DPLD also outperforms the (fully supervised) DnCNN in the lower-noise cases (corresponding to large ), even though neither ground truth nor less noisy samples are provided for training. This suggests that the auxiliary random vector approach, together with the partially linear constraint, brings extra robustness compared to its counterpart that learns from a set of noisy-clean image pairs at a fixed noise level.

IV-D Denoising real microscopy images

We apply the proposed method to denoising real microscopy images, for which neither the ground truth images nor the noise variance is known. Specifically, we consider three datasets, namely N2DH-GOWT1, C2DL-MSC, and TOMO110, which were used in [15]. The first two are fluorescence microscopy images of GFP-transfected GOWT1 mouse stem cells and rat mesenchymal stem cells, respectively. The training sets contain images of size (N2DH-GOWT1) and images of size (C2DL-MSC). The third dataset, TOMO110, was acquired with a cryo-transmission electron microscope, and for it we use one image (size ) for training.

For each of the datasets, we first estimate the noise variance.

1). For N2DH-GOWT1 and C2DL-MSC, we first obtain a rough estimate by computing the average squared difference of neighboring pixels in smooth regions. We then refine the estimated noise variance using the correlation between the linear component of the denoiser and the errors in the noise variance. This is motivated by the observation in Remark 2 and the numerical study in Subsection IV-B2.

2). For TOMO110, multiple frames of the image are available because of the dose-fractionated acquisition; since the noise in these frames is independent, we estimate the noise variance from the variance across the frames. Note that although several frames of the image are available, our approach learns to denoise the sum of all frames. In contrast, the Noise2Noise method learns to denoise only one half of the frames, whose noise level is therefore higher.
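Both rough estimates rest on elementary moment identities. For pixelwise independent noise of variance sigma^2 in a region where the signal is (nearly) constant, the difference of two neighboring pixels has variance 2*sigma^2, so half the mean squared difference estimates sigma^2. For K aligned frames of the same scene with independent noise, the per-pixel sample variance across frames estimates the per-frame noise variance, and the sum of all K frames carries K times that variance. The sketch below uses hypothetical function names and assumes that the smooth region is chosen by the user and that the frames are perfectly aligned; the refinement through the linear component of the denoiser is not reproduced.

```python
import random


def neighbor_difference_variance(image, rows, cols):
    """Rough noise variance from horizontal neighbor differences.

    image      : 2D list of pixel values
    rows, cols : ranges selecting a region assumed to be (nearly) constant
    """
    diffs = [image[i][j + 1] - image[i][j]
             for i in rows for j in cols if j + 1 in cols]
    return sum(d * d for d in diffs) / (2.0 * len(diffs))


def frame_variance(frames):
    """Per-frame noise variance from K aligned frames with independent noise.

    Averages the unbiased per-pixel variance across frames over all pixels.
    The sum of the K frames then has noise variance K times this value.
    """
    k, n_pixels = len(frames), len(frames[0])
    total = 0.0
    for p in range(n_pixels):
        values = [frame[p] for frame in frames]
        mean = sum(values) / k
        total += sum((v - mean) ** 2 for v in values) / (k - 1)
    return total / n_pixels


if __name__ == "__main__":
    rng = random.Random(0)
    flat = [[0.5 + rng.gauss(0.0, 0.1) for _ in range(64)] for _ in range(64)]
    print(neighbor_difference_variance(flat, range(64), range(64)))  # close to 0.01
```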

The details of the noise estimation and training of the networks are given in Appendix B. The denoising results are displayed in Fig. 10 (with enlarged views of the regions indicated by the blue/red boxes). We compare our method with BM3D [6] and Noise2Void [15]. As shown in the figure, BM3D produces smooth denoised images that also contain artifacts (e.g., in the cells in the second row of Fig. 10). Noise2Void yields outputs with a certain graininess in non-constant regions (cf. the third row, first column of the figure). The denoised images from our approach look smooth, yet show slightly more detail of the object structures (e.g., the regions highlighted by the red boxes).

Fig. 10 rows: Noisy image, BM3D, Noise2Void, Ours; columns: N2DH-GOWT1, C2DL-MSC, TOMO110.

IV-E Image deblurring

Following [31] and [39], we consider the image deblurring problem (27), where the operator is a convolution with a blur kernel arising from random motion. The datasets for the image deblurring experiments are face images from the Helen dataset [18] and the CelebA dataset [24], and we use the same training/validation split as in [39]. This results in a total of images for training, images for validation, and images for testing. Throughout this experiment, the noise is Gaussian white noise with standard deviation . We compare our approach with a fully supervised deblurring method, as well as with an unsupervised method [39] that uses a pair of noisy and blurry observations per image. To facilitate the comparison, the same U-Net architecture as in [39] is used for the deblurring networks of all three methods.
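For experimentation, one common way to synthesize a motion-blur kernel is to accumulate the positions visited by a short random walk and normalize the result. The generator below is a simple stand-in of our own, not the kernel-sampling procedure of [31] or [39], and the periodic boundary condition is chosen only to keep the convolution short; the function names are hypothetical.

```python
import random


def random_motion_kernel(size, steps, rng):
    """Normalized blur kernel from a clipped 2D random walk (hypothetical generator)."""
    kernel = [[0.0] * size for _ in range(size)]
    r = c = size // 2
    for _ in range(steps):
        kernel[r][c] += 1.0
        r = min(max(r + rng.choice((-1, 0, 1)), 0), size - 1)
        c = min(max(c + rng.choice((-1, 0, 1)), 0), size - 1)
    total = sum(map(sum, kernel))
    return [[value / total for value in row] for row in kernel]


def blur_circular(image, kernel):
    """2D convolution with periodic boundaries; output has the input's size."""
    h, w = len(image), len(image[0])
    ks, center = len(kernel), len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for u in range(ks):
                for v in range(ks):
                    if kernel[u][v]:
                        acc += kernel[u][v] * image[(i - u + center) % h][(j - v + center) % w]
            out[i][j] = acc
    return out


if __name__ == "__main__":
    rng = random.Random(0)
    k = random_motion_kernel(9, 30, rng)
    sharp = [[float((i // 4 + j // 4) % 2) for j in range(16)] for i in range(16)]
    print(round(sum(map(sum, k)), 6), blur_circular(sharp, k)[0][:4])
```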

We train the deblurring networks using the empirical loss of (28), given the random noisy samples and the operator ,

where are the auxiliary vectors. As suggested in [39], we also integrate a proxy loss defined as

in which , , and , are randomly generated blur kernels and noise, respectively. In our setting, is generated from the same distribution as . Thus this loss is the MSE loss on the synthetic training pair , where , a deblurred version of , is treated as the ground truth. The proxy loss has been found to improve the deblurring accuracy. Finally, we apply the partially linear constraint (31), with the role of the denoiser played by . In summary, the training loss function is , where is a hyperparameter.
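The proxy loss can be sketched in one dimension: the network output on a real observation serves as a pseudo ground truth, which is blurred with a freshly sampled kernel and corrupted with fresh noise before being passed through the network again. Here `model` is any callable standing in for the deblurring network, and the function names are hypothetical; whether gradients are propagated through the pseudo ground truth is not specified in the text, so the sketch simply treats it as a fixed target.

```python
import random


def circular_conv1d(signal, kernel):
    """1D convolution with periodic boundaries and a centred kernel."""
    n, center = len(signal), len(kernel) // 2
    return [sum(kernel[u] * signal[(i - u + center) % n] for u in range(len(kernel)))
            for i in range(n)]


def proxy_loss(model, y, kernel, sigma, rng):
    """MSE on the synthetic pair (kernel * model(y) + noise, model(y))."""
    pseudo_clean = model(y)  # treated as fixed ground truth for the synthetic pair
    blurred = circular_conv1d(pseudo_clean, kernel)
    synthetic_obs = [b + rng.gauss(0.0, sigma) for b in blurred]
    restored = model(synthetic_obs)
    return sum((r - p) ** 2 for r, p in zip(restored, pseudo_clean)) / len(y)


if __name__ == "__main__":
    rng = random.Random(0)
    y = [rng.random() for _ in range(64)]
    print(proxy_loss(lambda s: list(s), y, [0.25, 0.5, 0.25], 0.05, rng))
```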

The deblurring model is trained using the Adam optimizer [14] with a batch size of and optimization steps, starting from an initial learning rate of . The learning rate is then decreased to , , at the , , step, respectively. In the first optimization steps, we let and , and after that, , . In this experiment, we let be randomly selected from for each training sample.

| Method | Data | Helen [18] | CelebA [24] | |||

| PSNR | SSIM | PSNR | SSIM | |||

| Xu et al. [40] | – | 20.11 | 0.711 | 18.93 | 0.685 | |

| Zuo et al. [44] | O/C Pairs | 22.24 | 0.763 | 20.53 | 0.750 | |

| Tao et al. [34] | O/C Pairs | 22.86 | 0.762 | 24.11 | 0.862 | |

| Shen et al. [31] | O/C Pairs | 25.99 | 0.871 | 25.05 | 0.879 | |

| Kupyn et al. [17] | O/C Pairs | 23.63 | 0.781 | 22.45 | 0.729 | |

| Supervised baseline | O/C Pairs | 26.13 | 0.886 | 25.20 | 0.892 | |

| Xia et al. [39] | O/O Pairs | 25.47 | 0.867 | 24.64 | 0.873 | |

| Ours | Unpaired O | 25.65 | 0.867 | 24.78 | 0.873 | |

| Xia et al. [39] | O/O Pairs | 25.95 | 0.878 | 25.09 | 0.885 | |

| Ours | Unpaired O | 26.00 | 0.879 | 25.09 | 0.886 | |

Table VI shows the PSNR and SSIM of the deblurring results on the test sets of Helen [18] and CelebA [24]. In the table, the supervised baseline uses the same U-Net architecture as our approach and is trained on (noisy) observation/clean image pairs. It achieves the highest PSNR and SSIM scores among the methods compared. Our method reaches competitive PSNR values of 25.65 dB (Helen) and 24.78 dB (CelebA) without the proxy loss, and adding the proxy loss yields a further improvement of 0.35 dB and 0.31 dB, respectively. In contrast, the approach of Xia et al. [39], an extension of Noise2Noise [20], relies on pairs of noisy observations of each image, but without ground truth. For the results reported in the table, the operator is known during training for both our approach and the method of Xia et al. [39]. However, our method requires only unpaired observations, and it achieves a deblurring quality comparable to that of Xia et al. [39] and close to the fully supervised baseline (PSNR gaps of 0.13 dB on Helen and 0.11 dB on CelebA). Furthermore, our method outperforms the existing supervised methods Zuo et al. [44], Tao et al. [34], Shen et al. [31], and Kupyn et al. [17], as well as the unsupervised approach Xu et al. [40].

A comparison of the deblurred images from different methods is shown in Fig. 11, where the ground truth and blurry images are taken from the Helen test set. We emphasize that no blur kernels are provided to these methods at test time. Without ground truth images for training, the deblurred results of Xia et al. [39] (cf. the column in the figure) and of our approach (cf. the column) capture most of the details recovered by the fully supervised baseline (cf. the column), although the latter gives slightly sharper images. This demonstrates the capacity of the proposed partially linear denoisers to solve the deblurring problem based on only one corrupted observation per image. Although in this experiment the unsupervised methods require knowledge of the blur kernels during training, they can be generalized to cases where the blur kernels are unknown during training, e.g., by jointly learning the clean images and blur kernels [39].

V Conclusion

In this paper, we propose a class of structured nonlinear denoisers. We show that such denoisers, equipped with a partial linearity property, can be trained without access to any ground truth images or to the exact noise model. In practice, one only needs to know the noise variance conditioned on the images. Based on the partial linearity structure, we propose an auxiliary random vector approach, which establishes a direct connection between our loss function and the MSE and allows end-to-end training of the denoising models. The approach outperforms other ground-truth-free denoising approaches, such as SURE-based learning, and achieves a denoising quality close to that of the fully supervised baseline. It also offers new opportunities for learning to solve other image restoration tasks from single corrupted observations of the images. The experimental results show that, when generalized to an image deblurring problem, our approach achieves state-of-the-art performance for unsupervised deblurring.

One limitation of our method is that it does not work for non-zero-mean noise, such as impulse noise, in which corrupted pixels are replaced with random values. In such cases, the auxiliary random vector approach no longer provides good estimates of the MSE. Our method also requires estimates of the noise variance; various methods exist for estimating it from noisy images (see e.g., [21, 9, 1, 23]). Alternatively, in future work we are interested in learning-based or automated noise variance estimation methods that could be integrated with the proposed denoiser in an end-to-end framework.

References

- [1] Nicola Acito, Marco Diani, and Giovanni Corsini. Signal-dependent noise modeling and model parameter estimation in hyperspectral images. IEEE Transactions on Geoscience and Remote Sensing, 49(8):2957–2971, 2011.

- [2] Joshua Batson and Loic Royer. Noise2Self: Blind denoising by self-supervision. In International Conference on Machine Learning, pages 524–533, 2019.

- [3] Antoni Buades, Bartomeu Coll, and Jean-Michel Morel. A non-local algorithm for image denoising. In 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, volume 2, pages 60–65. IEEE, 2005.

- [4] Raymond H Chan, Chung-Wa Ho, and Mila Nikolova. Salt-and-pepper noise removal by median-type noise detectors and detail-preserving regularization. IEEE Transactions on Image Processing, 14(10):1479–1485, 2005.

- [5] Yunjin Chen and Thomas Pock. Trainable nonlinear reaction diffusion: A flexible framework for fast and effective image restoration. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(6):1256–1272, 2016.

- [6] Kostadin Dabov, Alessandro Foi, Vladimir Katkovnik, and Karen Egiazarian. Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Transactions on Image Processing, 16(8):2080–2095, 2007.

- [7] David L Donoho. De-noising by soft-thresholding. IEEE Transactions on Information Theory, 41(3):613–627, 1995.

- [8] Thibaud Ehret, Axel Davy, Jean-Michel Morel, Gabriele Facciolo, and Pablo Arias. Model-blind video denoising via frame-to-frame training. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 11369–11378, 2019.

- [9] Alessandro Foi, Mejdi Trimeche, Vladimir Katkovnik, and Karen Egiazarian. Practical Poissonian-Gaussian noise modeling and fitting for single-image raw-data. IEEE Transactions on Image Processing, 17(10):1737–1754, 2008.

- [10] Xueyang Fu, Jiabin Huang, Delu Zeng, Yue Huang, Xinghao Ding, and John Paisley. Removing rain from single images via a deep detail network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 3855–3863, 2017.

- [11] Yoseob Han and Jong Chul Ye. Framing U-Net via deep convolutional framelets: Application to sparse-view CT. IEEE Transactions on Medical Imaging, 37(6):1418–1429, 2018.

- [12] Tao Hong, Yaniv Romano, and Michael Elad. Acceleration of RED via vector extrapolation. Journal of Visual Communication and Image Representation, 63:102575, 2019.

- [13] Kyong Hwan Jin, Michael T McCann, Emmanuel Froustey, and Michael Unser. Deep convolutional neural network for inverse problems in imaging. IEEE Transactions on Image Processing, 26(9):4509–4522, 2017.

- [14] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- [15] Alexander Krull, Tim-Oliver Buchholz, and Florian Jug. Noise2Void - Learning denoising from single noisy images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2129–2137, 2019.

- [16] Alexander Krull, Tomas Vicar, and Florian Jug. Probabilistic Noise2Void: Unsupervised content-aware denoising. arXiv preprint arXiv:1906.00651, 2019.

- [17] Orest Kupyn, Volodymyr Budzan, Mykola Mykhailych, Dmytro Mishkin, and Jiří Matas. DeblurGAN: Blind motion deblurring using conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 8183–8192, 2018.

- [18] Vuong Le, Jonathan Brandt, Zhe Lin, Lubomir Bourdev, and Thomas S Huang. Interactive facial feature localization. In European Conference on Computer Vision, pages 679–692. Springer, 2012.

- [19] Stamatios Lefkimmiatis. Universal denoising networks: A novel CNN architecture for image denoising. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 3204–3213, 2018.

- [20] Jaakko Lehtinen, Jacob Munkberg, Jon Hasselgren, Samuli Laine, Tero Karras, Miika Aittala, and Timo Aila. Noise2Noise: Learning image restoration without clean data. In International Conference on Machine Learning, pages 2965–2974, 2018.

- [21] Ce Liu, William T Freeman, Richard Szeliski, and Sing Bing Kang. Noise estimation from a single image. In 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), volume 1, pages 901–908. IEEE, 2006.

- [22] Ding Liu, Bihan Wen, Yuchen Fan, Chen Change Loy, and Thomas S Huang. Non-local recurrent network for image restoration. In Advances in Neural Information Processing Systems, pages 1673–1682, 2018.

- [23] Xinhao Liu, Masayuki Tanaka, and Masatoshi Okutomi. Practical signal-dependent noise parameter estimation from a single noisy image. IEEE Transactions on Image Processing, 23(10):4361–4371, 2014.

- [24] Ziwei Liu, Ping Luo, Xiaogang Wang, and Xiaoou Tang. Deep learning face attributes in the wild. In Proceedings of the IEEE International Conference on Computer Vision, pages 3730–3738, 2015.

- [25] Stephane Mallat and Wen Liang Hwang. Singularity detection and processing with wavelets. IEEE Transactions on Information Theory, 38(2):617–643, 1992.

- [26] David Martin, Charless Fowlkes, Doron Tal, and Jitendra Malik. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proceedings Eighth IEEE International Conference on Computer Vision. ICCV 2001, volume 2, pages 416–423. IEEE, 2001.

- [27] Nick Moran, Dan Schmidt, Yu Zhong, and Patrick Coady. Noisier2noise: Learning to denoise from unpaired noisy data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 12064–12072, 2020.

- [28] Yaniv Romano, Michael Elad, and Peyman Milanfar. The little engine that could: Regularization by denoising (RED). SIAM Journal on Imaging Sciences, 10(4):1804–1844, 2017.

- [29] Stefan Roth and Michael J Black. Fields of experts: A framework for learning image priors. In 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, volume 2, pages 860–867. IEEE, 2005.

- [30] Leonid I Rudin, Stanley Osher, and Emad Fatemi. Nonlinear total variation based noise removal algorithms. Physica D: Nonlinear Phenomena, 60(1-4):259–268, 1992.

- [31] Ziyi Shen, Wei-Sheng Lai, Tingfa Xu, Jan Kautz, and Ming-Hsuan Yang. Deep semantic face deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 8260–8269, 2018.

- [32] Shakarim Soltanayev and Se Young Chun. Training deep learning based denoisers without ground truth data. In Advances in Neural Information Processing Systems, pages 3257–3267, 2018.

- [33] Suhas Sreehari, S Venkat Venkatakrishnan, Brendt Wohlberg, Gregery T Buzzard, Lawrence F Drummy, Jeffrey P Simmons, and Charles A Bouman. Plug-and-play priors for bright field electron tomography and sparse interpolation. IEEE Transactions on Computational Imaging, 2(4):408–423, 2016.

- [34] Xin Tao, Hongyun Gao, Xiaoyong Shen, Jue Wang, and Jiaya Jia. Scale-recurrent network for deep image deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 8174–8182, 2018.

- [35] Dmitry Ulyanov, Andrea Vedaldi, and Victor Lempitsky. Deep image prior. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 9446–9454, 2018.

- [36] Valéry Dewil, Pablo Arias, Gabriele Facciolo, Jérémy Anger, Axel Davy, and Thibaud Ehret. Self-supervised training for blind multi-frame video denoising. arXiv preprint arXiv:2004.06957, 2020.

- [37] Singanallur V Venkatakrishnan, Charles A Bouman, and Brendt Wohlberg. Plug-and-play priors for model based reconstruction. In 2013 IEEE Global Conference on Signal and Information Processing, pages 945–948. IEEE, 2013.

- [38] Joachim Weickert. Anisotropic diffusion in image processing, volume 1. Teubner Stuttgart, 1998.

- [39] Zhihao Xia and Ayan Chakrabarti. Training image estimators without image ground truth. In Advances in Neural Information Processing Systems, pages 2436–2446, 2019.

- [40] Li Xu, Shicheng Zheng, and Jiaya Jia. Unnatural L0 sparse representation for natural image deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 1107–1114, 2013.

- [41] Kai Zhang, Wangmeng Zuo, Yunjin Chen, Deyu Meng, and Lei Zhang. Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising. IEEE Transactions on Image Processing, 26(7):3142–3155, 2017.

- [42] Yulun Zhang, Yapeng Tian, Yu Kong, Bineng Zhong, and Yun Fu. Residual dense network for image restoration. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020.

- [43] Magauiya Zhussip, Shakarim Soltanayev, and Se Young Chun. Extending Stein’s unbiased risk estimator to train deep denoisers with correlated pairs of noisy images. In Advances in Neural Information Processing Systems, pages 1463–1473, 2019.

- [44] Wangmeng Zuo, Dongwei Ren, David Zhang, Shuhang Gu, and Lei Zhang. Learning iteration-wise generalized shrinkage–thresholding operators for blind deconvolution. IEEE Transactions on Image Processing, 25(4):1751–1764, 2016.

Supplementary Materials:

Unsupervised Image Restoration Using Partially Linear Denoisers

Rihuan Ke and Carola-Bibiane Schönlieb

Appendix A Additional proofs for Section III

Proof.

The proof is similar to that of Proposition 2.


Proof.

To simplify the notation, let and .


Proof.

Let and . According to Equation (8), we have the decomposition

| (38) |

where is linear and depends on , and for . Let , then

| (39) |

as a function of , is well defined because the distribution of is a delta function and if for any realizations , of . Furthermore, it is easy to check that .

Analogous to the argument in (37), for we have

| (40) |

for any . It follows from Equation (15) that

for some constant . This together with (40) gives

Combining the last inequality with (36), one has

| (41) |

Next, we will show that which together with (41) gives the desired result (24) by setting .

From Remark 1, the definitions of and in (38) imply

for any and any linear . This implies that . Furthermore, the conditional mean of is zero, i.e., . Similarly, one can show that and . Therefore,

and consequently,

Taking expectation over , the last inequality leads to

and the proof is complete. ∎

Appendix B Additional details for Subsection IV-D

This section gives further details about the experiments on real microscopy images. We first specify how the denoising networks are trained, and then describe how the noise variance , which the training process requires, is estimated. Finally, we present a noise variance refinement method that helps us find a more accurate noise variance. The general experimental setting is similar to that of Subsection IV-B.

Training specification. We use the DnCNN architecture [41] throughout the experiments. During training, the images are cropped into overlapping patches using a sliding window of size and a step size of . Additionally, for the N2DH-GOWT1 and C2DL-MSC datasets, since most of the image area is low-intensity background, we sort the patches by their average pixel intensity (from low to high) and randomly delete of the first patches. This lets the network focus more on the foreground objects during training. For all three datasets, we apply random flipping to augment the training images. The parameter is set to for N2DH-GOWT1 and C2DL-MSC, and for TOMO110. The optimization follows the procedure described in Subsection IV-B, except that the estimated noise variance, introduced below, is used.
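The patch preparation described above can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' code: the function names are hypothetical, and the values of `patch_size`, `stride`, `num_low` (how many of the darkest patches are candidates for deletion) and `drop_frac` (the fraction of those that is deleted) are placeholders, since the actual window size, step size and deletion parameters are not reproduced in this text.

```python
import numpy as np


def extract_patches(img, patch_size, stride):
    """Crop overlapping patch_size x patch_size patches with a sliding window."""
    h, w = img.shape
    patches = [
        img[r:r + patch_size, c:c + patch_size]
        for r in range(0, h - patch_size + 1, stride)
        for c in range(0, w - patch_size + 1, stride)
    ]
    return np.stack(patches)


def prune_dark_patches(patches, num_low, drop_frac, rng):
    """Sort patches by mean intensity (low to high), then randomly delete
    a fraction drop_frac of the num_low darkest patches (hypothetical parameters)."""
    order = np.argsort(patches.mean(axis=(1, 2)), kind="stable")
    patches = patches[order]
    num_low = min(num_low, len(patches))
    n_drop = int(round(drop_frac * num_low))
    drop = rng.choice(num_low, size=n_drop, replace=False)
    keep = np.ones(len(patches), dtype=bool)
    keep[drop] = False
    return patches[keep]  # remaining patches stay sorted by intensity


def random_flip(patch, rng):
    """Augment a patch with random horizontal and vertical flips."""
    if rng.random() < 0.5:
        patch = patch[:, ::-1]
    if rng.random() < 0.5:
        patch = patch[::-1, :]
    return patch


# Hypothetical usage with placeholder parameters.
rng = np.random.default_rng(0)
image = rng.random((100, 100))
patches = extract_patches(image, patch_size=40, stride=10)
kept = prune_dark_patches(patches, num_low=20, drop_frac=0.5, rng=rng)
batch = np.stack([random_flip(p, rng) for p in kept])
```

With a 100 x 100 image, a 40 x 40 window and a step of 10 there are 7 window positions per axis, so 49 patches are extracted and 10 of the 20 darkest are removed. Deleting only among the darkest patches, rather than thresholding on intensity, keeps some background examples in the training set.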

Noise variance estimation. We estimate the noise variance using the following methods.

1). For the N2DH-GOWT1 and C2DL-MSC datasets, the variance is estimated from the mean squared difference of neighboring pixels in smooth regions of the images. First, given the noisy image , we define the average squared difference at pixel as

where denotes the 4-connected neighbors of . Second, we compute a smoothed version of

where denotes convolution and is a uniform kernel matrix of (the entries sum to ). Third, to locate the smooth regions of the images, we define

where and denotes element-wise multiplication. Finally, assuming that the noise variance depends only on the pixel intensity, we compute the estimated variance as

In our experiments, is averaged over all images in the training set. The computed variance is plotted in Fig. 12, where the blue squares indicate . We observe that follows an approximately linear pattern, so we approximate by a linear function (see the red lines in Fig. 12), where and are parameters. For the N2DH-GOWT1 dataset, . For C2DL-MSC, , and for .
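A minimal NumPy sketch of this estimation pipeline is given below. Because the exact formulas for the average squared difference, the smoothed map and the smooth-region indicator are not reproduced in this text, several choices are assumptions: the squared differences are halved so that they estimate the noise variance in flat regions (for zero-mean independent noise of equal variance and equal clean values, the expected squared difference of two pixels is twice the variance); the smooth-region test thresholds the neighbor differences of the locally averaged image at a hypothetical `tau`; and the variance-intensity curve is obtained by averaging within intensity bins of the local mean. The kernel size `k`, `bins` and `min_count` are likewise illustrative, and all function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def half_sq_diff(y):
    """Half the mean squared difference between each interior pixel and its
    4-connected neighbours; in flat regions this estimates the noise variance."""
    c = y[1:-1, 1:-1]
    diffs = [(c - y[:-2, 1:-1]) ** 2, (c - y[2:, 1:-1]) ** 2,
             (c - y[1:-1, :-2]) ** 2, (c - y[1:-1, 2:]) ** 2]
    return 0.5 * np.mean(diffs, axis=0)


def box_filter(x, k):
    """Convolve with a uniform k x k kernel (entries sum to 1), 'valid' part only."""
    return sliding_window_view(x, (k, k)).mean(axis=(-2, -1))


def variance_vs_intensity(images, k=5, tau=1e-3, bins=20, min_count=50):
    """Pool (local mean, smoothed variance proxy) pairs from smooth regions of
    all images and average the variance proxy within intensity bins."""
    us, vs = [], []
    for y in images:
        y = np.asarray(y, dtype=float)
        d_s = box_filter(half_sq_diff(y), k)    # smoothed variance proxy
        y_s = box_filter(y[1:-1, 1:-1], k)      # local mean on the same grid
        smooth = half_sq_diff(y_s) < tau        # hypothetical smooth-region test
        us.append(y_s[1:-1, 1:-1][smooth])
        vs.append(d_s[1:-1, 1:-1][smooth])
    u, v = np.concatenate(us), np.concatenate(vs)
    counts, edges = np.histogram(u, bins=bins)
    sums, _ = np.histogram(u, bins=edges, weights=v)
    ok = counts >= min_count                    # skip sparsely populated bins
    centers = 0.5 * (edges[:-1] + edges[1:])
    return centers[ok], sums[ok] / counts[ok]


def fit_linear_variance(intensity, variance):
    """Least-squares fit of variance ~ a * intensity + b; returns (a, b)."""
    a, b = np.polyfit(intensity, variance, deg=1)
    return a, b


# Synthetic check: a horizontal ramp with noise variance 0.01 * x + 0.001.
rng = np.random.default_rng(0)
x = np.tile(np.linspace(0.2, 0.8, 256), (256, 1))
y = x + rng.normal(0.0, np.sqrt(0.01 * x + 0.001))
centers, var = variance_vs_intensity([y])
a, b = fit_linear_variance(centers, var)
```

On real data one would pass all training images to `variance_vs_intensity`, which pools the samples across images before binning, and then use the fitted slope and intercept as the two parameters of the linear variance model. Binning by the local mean rather than the raw noisy pixel value reduces the effect of noise on the intensity axis.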

Fig. 12: Estimated noise variance as a function of pixel intensity. (a) N2DH-GOWT1; (b) C2DL-MSC.

2). For TOMO110, since each training image consists of multiple frames (a consequence of dose-fractionated acquisition), we estimate the variance from the differences among the frames. Specifically, the raw dose-fractionated data contain , where is the number of frames, and the frames have independent noise. In the dataset considered here, . The observed image is the sum of all frames, i.e., . By computing the variance among , we estimate the noise variance at pixel as

Note that is an estimate of the noise variance at pixel , and our method denoises the whole image rather than the individual frames . In the Noise2Noise approach [20], in contrast, the network learns to denoise half of the frames and therefore does not make full use of during the denoising process.
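The frame-based estimate can be sketched in a few lines of NumPy. The sketch rests on an assumption, our reading of the setup rather than the exact formula used in the paper: every frame contains the same clean signal plus noise that is independent across frames and has equal variance in each frame. The variance of the summed image is then K times the per-frame variance, which is estimated by the unbiased sample variance across the frames. The function name `variance_from_frames` is hypothetical.

```python
import numpy as np


def variance_from_frames(frames):
    """Sum K dose-fractionated frames and estimate the per-pixel noise variance
    of the sum, assuming frame noise is independent with equal variance.

    frames: array of shape (K, H, W).
    Returns (summed image, estimated noise variance of the summed image).
    """
    frames = np.asarray(frames, dtype=float)
    K = frames.shape[0]
    y = frames.sum(axis=0)                        # observed image
    per_frame_var = frames.var(axis=0, ddof=1)    # unbiased variance across frames
    return y, K * per_frame_var                   # variances add over independent frames


# Toy usage: two frames with values 1 and 3 at a single pixel.
y, var = variance_from_frames([[[1.0]], [[3.0]]])
# y[0, 0] is 4.0; var[0, 0] is 2 * 2.0 = 4.0
```

Because the estimate refers to the summed image, it matches the setting in which the whole observed image is denoised, rather than a sum over only part of the frames.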