Unsupervised Brain Tumor Segmentation with Image-based Prompts

Abstract

Automated brain tumor segmentation based on deep learning (DL) has achieved promising performance. However, it generally relies on annotated images for model training, which is not always feasible in clinical settings. Therefore, the development of unsupervised DL-based brain tumor segmentation approaches without expert annotations is desired. Motivated by the success of prompt learning (PL) in natural language processing, we propose an approach to unsupervised brain tumor segmentation by designing image-based prompts that allow indication of brain tumors, and this approach is dubbed as PL-based Brain Tumor Segmentation (PL-BTS). Specifically, instead of directly training a model for brain tumor segmentation with a large amount of annotated data, we seek to train a model that can answer the question: is a voxel in the input image associated with tumor-like hyper-/hypo-intensity? Such a model can be trained by artificially generating tumor-like hyper-/hypo-intensity on images without tumors with hand-crafted designs. Since the hand-crafted designs may be too simplistic to represent all kinds of real tumors, the trained model may overfit the simplistic hand-crafted task rather than actually answer the question of abnormality. To address this problem, we propose the use of a validation task, where we generate a different hand-crafted task to monitor overfitting. In addition, we propose PL-BTS+ that further improves PL-BTS by exploiting unannotated images with brain tumors. Compared with competing unsupervised methods, the proposed method has achieved marked improvements on both public and in-house datasets, and we have also demonstrated its possible extension to other brain lesion segmentation tasks.

Unsupervised Learning, Prompt Learning, Brain Tumor Segmentation

1 Introduction

Brain tumor is one of the most common and deadly diseases of the central nervous system. For example, high-grade glioma (HGG) is the most common and malignant brain tumor that can grow rapidly, invade surrounding normal tissue, and recur with a median survival time of 18–20 months [1, 2, 3]. Glioblastoma (GBM) is the most aggressive form of brain cancer [4], currently accounting for 47% of diagnosed brain cancers [5]. GBM is characterized by its high invasiveness, poor clinical prognosis, high mortality rate, and frequent recurrence [4].

Brain tumor segmentation on magnetic resonance (MR) images allows quantitative evaluation of brain tumors and provides valuable information for better understanding of tumor characteristics and treatment planning [6, 7, 8]. Brain tumor segmentation can be achieved with conventional image processing techniques, such as thresholding-based and region-based methods [9, 10], but the accuracy of these simplistic methods is limited. More recently, deep learning (DL) techniques have been applied to brain tumor segmentation with outstanding segmentation accuracy [6, 11, 12, 13, 14]. The DL-based methods improve the objectiveness, reproducibility, and scalability of quantitative evaluation of brain tumors. However, the success of DL-based methods relies on large-scale annotated training data [7], which can be costly to collect and reduce the applicability of DL-based brain tumor segmentation in real-world scenarios. Although approaches based on semi-supervised learning [15, 16] or self-supervised learning [17, 18] could alleviate the need for annotated data, manual annotations are still required for model training.

There are also works that explore DL-based brain tumor segmentation (or brain lesion segmentation that is closely related) without requiring any manual annotation. Most of these unsupervised approaches are reconstruction-based methods [19, 20, 21, 22]. In particular, in these methods DL-based models are trained to reconstruct input images drawn from a normal—i.e., healthy—population, and it is assumed that the trained models cannot properly reconstruct images with brain tumors. Then, for images with brain tumors, the input is compared with the reconstructed version, and anomalous regions are identified based on the reconstruction error. However, the accuracy of these approaches can still be unsatisfactory, and further exploration of unsupervised brain tumor segmentation without manual annotations is desired.

In this paper, we continue to explore the problem of unsupervised DL-based brain tumor segmentation. Motivated by the success of prompt learning (PL) in zero-shot natural language processing (NLP) [23], we propose to perform unsupervised brain tumor segmentation by designing image-based prompts that allow indication of brain tumors. PL in NLP exploits large pretrained language models [24, 25] and designs prompt tasks that allow the questions of interest to be addressed by the pretrained models. The target task is prompted to converge to the task performed by the large pretrained model, and the prompt template on the input data space fits the target task [26]. Similar for brain tumor segmentation, the target task should be accomplished with a prompt task that is closely related to it and does not require manual annotations.

Our approach is dubbed as PL-based Brain Tumor Segmentation (PL-BTS). PL-BTS comprises two major steps. First, we design an image-based prompt task that hints the target task of brain tumor segmentation, where given an image with brain tumors a model is trained to answer the question: is a voxel in the input image associated with tumor-like hyper- or hypo-intensity? To train such a prompt model, we generate artificial hyper-/hypo-intensity with tumor-like shapes on data without brain tumors based on hand-crafted designs. Since the hand-crafted designs may be too simplistic to represent all kinds of real tumors, the trained model may overfit the simplistic hand-crafted task [27] rather than actually answer the question of abnormality. Because no annotated data is available as the validation set for model selection in the unsupervised setting, addressing the problem of overfitting is challenging. Therefore, we secondly propose to design a different validation task for model selection, which monitors overfitting. Compared with the prompt task, the validation task uses a different way of generating artificial hyper-/hypo-intensity. As during the training process the model tends to first learn generic rules of identifying abnormality [28], when the accuracy of the validation task begins to decrease, the model is likely to start to overfit the artifact in the data generated with hand-crafted prompt designs. Thus, model selection is performed based on the turning point of the performance of the validation task to encourage the model to identify generic tumor-like abnormality, and the selected model gives the result of unsupervised brain tumor segmentation.

In addition, we propose PL-BTS+ that extends PL-BTS to the scenario where unlabeled images with brain tumors are available and improves PL-BTS with such unlabeled data. Specifically, pseudo-labels are first predicted on the unlabeled images with the prompt model. Like the pseudo-labeling strategy that is commonly used in semi-supervised learning [29], the pseudo-labels can be used to fine-tune the prompt model. Moreover, as the prompt model tends to undersegment the tumors, the pseudo-labels may contain considerable amounts of false negatives [30]. To further improve the fine-tuning of the prompt model, we generate additional synthetic training images by pasting the undersegmented tumor regions represented by the pseudo-labels onto the tumor-free images used for training the prompt model, and this allows removal of the false negatives in the synthetic images. These synthetic images and the original unlabeled images are used jointly to fine-tune the prompt model together with the pseudo-labels for brain tumor segmentation.

To validate the proposed method, experiments were performed on public and in-house datasets for brain tumor segmentation, where our method was compared with state-of-the-art unsupervised DL-based brain tumor segmentation approaches. The results show that our method outperforms the competing methods by a large margin in terms of multiple evaluation metrics. Moreover, using datasets for ischemic stroke lesion segmentation, we demonstrate the potential of generalizing PL-BTS and PL-BTS+ to other brain lesion segmentation tasks. The code of our method will be released after the work is published.

Our contribution can be summarized as follows:

-

•

We have proposed PL-BTS for unsupervised brain tumor segmentation that only requires tumor-free images for training. Our method is inspired by prompt learning and provides a perspective that is different from existing reconstruction-based unsupervised methods.

-

•

In PL-BTS, we have proposed the use of a validation task for model selection, which addresses the problem of having no annotated validation set and effectively avoids overfitting.

-

•

We have proposed PL-BTS+ that extends PL-BTS to the scenario where unlabeled images with brain tumors are available. In PL-BTS+, an improved pseudo-labeling strategy is developed for better segmentation performance.

-

•

We have not only validated the proposed method on public and in-house datasets for brain tumor segmentation, but also demonstrated its potential application to other brain lesion segmentation tasks. In these experiments, the superiority of the proposed method over existing unsupervised methods is shown.

2 Related Work

2.1 Brain tumor segmentation

Brain tumor segmentation can be challenging due to unclear and irregular tumor boundaries caused by partial volume effects and discontinuities [31], as well as high variability of tumors [32]. Conventionally, simple thresholding-based methods [9] are developed for the segmentation task, where tumor regions are obtained by comparing their intensities with one or more intensity thresholds; region-based brain tumor segmentation methods [10] are another type of simple methods that rely on region growing [9]; methods based on voxel classification [33, 34] use supervised or unsupervised classifiers to label tumor voxels in the feature space. However, these methods are usually too simplistic to effectively capture the complicated texture of brain tumors and cannot provide satisfactory segmentation quality [9].

Due to the success of DL in image processing tasks, DL-based methods [6, 11, 12, 13, 14, 35] are proposed for brain tumor segmentation and greatly improve the segmentation accuracy. In these works, various network architectures are developed based on convolutional neural networks [6, 11, 13] and/or transformers [12, 35, 36]. It is also shown that with carefully designed preprocessing, data configuration, and postprocessing, the U-net architecture [37, 38] can already provide high-quality segmentation that is better than or at least comparable to the results achieved with more complex network architectures [6].

DL-based brain tumor segmentation approaches generally require a large amount of manual annotations for model training, which is infeasible in many clinical and scientific settings. To address this problem of data scarcity, semi-supervised and self-supervised methods can be applied, where a large amount of unlabeled data is used together with the scarce annotated data for model training. For example, semi-supervised methods [15, 39] based on the teacher-student framework can be used, where the consistency loss between unlabeled data with different perturbations is added in model training; in self-supervised learning methods [17, 40], a model is pretrained on unlabeled data with hand-crafted pretext tasks that do not require manual annotations, and it is then fine-tuned with the limited labeled data. However, these methods do not fully remove the requirement for manual annotations.

Unsupervised DL-based approaches have been explored for annotation-free brain tumor segmentation [19, 20, 21, 22, 41], where no manual annotation is available for model training. The most popular strategy adopted by these methods is the reconstruction-based strategy, where a model is trained to reconstruct images of healthy subjects and the voxel-level residual between an image with the brain tumor and its reconstructed counterpart is used to indicate the brain tumor. The reconstruction models include the autoencoder (AE) [20], variational autoencoder (VAE) [20], vector quantized variational autoencoder (VQ-VAE) [42, 43], generative adversarial networks [22, 44], and transformer-based networks [19, 45]. In these reconstruction-based unsupervised methods, post-processing of the residual image is required for the final segmentation. However, the design of effective post-processing is nontrivial, and the performance of the unsupervised brain tumor segmentation methods can still be unsatisfactory.

2.2 Prompt learning

Prompt learning has emerged as a promising paradigm for zero-shot learning in NLP. A typical example is PL-based zero-shot emotion analysis. A prompt template can be built as “It was [MASK]” after a raw input sentence such as ”I love this movie.” Then, a large pretrained mask language model, such as BERT [24] and GPT-3 [25], can be applied to fill the blank for emotion analysis directly without retraining or fine-tuning on the target dataset again.

The application of PL to zero-shot image classification or segmentation has been explored as well so that no manual annotation is required for the task of interest [26, 46, 47, 48]. In these methods, a vision-language model [49] is trained with a huge amount of paired image and text data, which aligns the features of the two modalities. For zero-shot image classification, the vision-language model associates the image with the most relevant textual representation and produces the classification result based on the text. The idea is further extended to semantic segmentation [46, 48]. In [46], an image is first partitioned into different regions, and each region is fed into the vision-language model for classification, which gives the segmentation result without requiring manual annotations for training. In [48], a vision-language decoder is further added to the vision-language model to relate fine-grained semantic information from textual representations to each pixel-level activation, which allows zero-shot image segmentation.

The application of vision-language models to medical imaging is still largely vacant, probably due to the lack of pretrained vision-language models designated for medical applications. It may also be possible to design image-based prompts without requiring language for medical imaging, including brain tumor segmentation, but this direction of research has not been explored yet.

3 Methods

An overview of the proposed PL-BTS method is shown in Fig. 1, where a prompt model that indicates the existence of tumor-like abnormality is trained for unsupervised brain tumor segmentation without requiring any annotated data. We first introduce the design of the image-based prompt and then describe the proposed use of a validation task for model selection, which avoids overfitting to the prompt task. Besides the workflow in Fig. 1, we present PL-BTS+ that extends PL-BTS to the scenario where unlabeled data with brain tumors is available and incorporated in model training.

3.1 PL-BTS for unsupervised brain tumor segmentation

3.1.1 Prompt design

Since brain tumors generally appear as hyper- or hypo-intensity on MR images, in PL-BTS the image-based prompt is designed to indicate whether there is hyper-/hypo-intensity at a voxel. Equivalently, a prompt model is trained to determine for each voxel of an input image: does the voxel in the input image have hyper-/hypo-intensity? To train such a model, we generate artificial hyper-/hypo-intensity on images without brain tumors with hand-crafted rules, where no manual annotation is needed.

Suppose we are given a set of images without brain tumors, where is the -th image and is the total number of these images. Based on , we can generate a synthetic image with regions of hyper-/hypo-intensity and obtain the corresponding mask of abnormality indicating the hyper-/hypo-intensity as

| (1) | ||||

Here, represents an intensity transformation that generates hyper-/hypo-intensity, is a weight image with intensities ranging from zero to one, represents voxelwise multiplication, and is a threshold that determines the mask based on . Eq. (1) mixes the original image without brain tumors and the artificial hyper-/hypo-intensity to generate an image with intensity abnormality, and the threshold (empirically set to 0.1) is used to account for the partial volume effect in the buffering region where normal tissue and abnormality are mixed. The key to the prompt design is the designs of and , which determine the appearance and shape of the hyper-/hypo-intensity, respectively. Note that we do not seek to generate realistic tumors on the images, as it is extremely challenging, if possible at all, without any annotation.

As brain tumors comprise large clusters of abnormally bright signals or dark signals, is designed to generate hyper-/hypo-intensity with smooth textures. Specifically, blurs and scales the image to produce hyper-/hypo-intensity as

| (2) |

Here, is a 3D Gaussian blurring function applied to , and controls the magnitude of the hyper-/hypo-intensity. The specification of is introduced later. Since is added to , hyper- or hypo-intensity is created in .

The mixing weight image that determines the region of hyper-/hypo-intensity is designed to comprise both a tumor-like region and a buffer layer that represents the partial volume effect between normal tissue and regions with hyper-/hypo-intensity. To this end, we first generate a polyhedron with variable orientation and shape following [50]. The size of the polyhedron is sampled from the uniform distribution [17, 50]. The center of the polyhedron is randomly placed within the brain, and the intersection of the inserted polyhedron and the brain mask is denoted by , which also represents the region with hyper-/hypo-intensity. To take the partial volume effect into consideration, 3D Gaussian filtering with a kernel size of 1 mm is applied to , and the filtered result is used for the image generation in Eq. (1).

Brain tumors are generally segmented on T2-weighted or FLAIR images [2], where they appear as a cluster of hyper-intensity or also with a relatively dark component in the cluster. To generate images with tumor-like hyper-intensity, we apply Eq. (1) with sampled from the uniform distribution . To further obtain images with a dark component, after applying Eq. (1), we use the following equations for image generation

| (3) |

Here, is a different transformation , where is sampled from to create the dark region (darker than the hyper-intensity); is another weight image that represents the dark component and is obtained like 111The polyhedron associated with is constrained to be smaller than that associated with . Its center is randomly placed within , and its intersection with is smoothed to obtain .; and are the synthetic image and the corresponding mask indicating abnormality, respectively. In this way, when Eqs. (1) and (3) are sequentially applied, tumor-like hyper-intensity with a dark component is generated.

By repeating the image generation procedure in Eq. (1) or using the combination of Eqs. (1) and (3) with randomly drawn images, a number of synthetic images with approximately tumor-shaped abnormal intensity can be generated (see the examples in Fig. 1 associated with “Training Set”). The probability of applying Eq. (1) alone or both Eqs. (1) and (3) is set to 0.9 or 0.1, respectively. These synthetic images and the corresponding masks of abnormality are used to train the prompt model.

3.1.2 Model selection with a validation task

The abnormality generated with the procedures above can be too simplistic compared with real tumors. Thus, the prompt model obtained after training convergence can overfit the simplistic synthetic abnormality and generalize poorly to indicate real brain tumors. Ideally, to avoid overfitting, a validation set with annotated data is used to monitor the training process [6]. However, in the unsupervised setting there is no annotated data as the validation set.

To address this problem, we assume that during model training, the model first learns generic rules that apply to both simplistic synthetic abnormality and real tumors and then starts to overfit the detailed texture of synthetic abnormality [27]. Based on the assumption, we propose to use a validation task to prevent overfitting. This validation task also aims to identify abnormal intensity generated with hand-crafted rules, but the design of the rules is different from the prompt task for training, so that the two tasks require similar generic knowledge but the task details are different. In this way, after the generic knowledge about identifying abnormal intensity is sufficiently learned and the prompt model starts to overfit, the performance of the validation task will degrade, and based on this model selection can be performed.

A schematic of the benefit of the validation task is shown in Fig. 2. In the beginning epochs, the prompt model learns useful knowledge from the synthetic data, where both the training loss decreases and the accuracy of brain tumor segmentation on test data increases. Later, the model starts to overfit, where the training loss still decreases but the test accuracy starts to drop rapidly. Without the validation task, model selection occurs after the turning point of the test accuracy and is associated with overfitting, even if a validation set comprising the synthetic data is split from the training set for model selection; with the validation task, the turning point can be better identified for model selection.

The detailed design of the validation task is described as follows, which is similar to yet different from the prompt task. Specifically, we replace the transformation and weight image in Eq. (1) with

| (4) |

Compared with the prompt task, in the validation task no blurring is performed for image generation, and thus the validation task is similar to the prompt task but has different image textures near and in the tumors. Note that the set is split into two subsets for the prompt task and validation task.

Since overfitting is more likely to occur when more training epochs are used and the scheduling of the optimization algorithm, such as Adam [51], is dependent on the maximum number of epochs, we considered different choices of for training the prompt model. Specifically, the set of candidate values of is , and is also selected based on the performance of the validation task.

3.2 PL-BTS+: further improvement by incorporating unlabeled images with brain tumors

In practice, a large amount of unlabeled images with brain tumors may be available, and they can be helpful in semi-supervised settings [15], where both labeled and unlabeled images are used for model training. Motivated by this, we hypothesize that these unlabeled images can also benefit unsupervised brain tumor segmentation. For example, the pseudo-labeling strategy [29, 52] in semi-supervised learning can be adapted for our purpose, where the unlabeled images and the pseudo-labels predicted by the prompt model on them are used together for model training. However, as unsupervised segmentation is challenging due to the complete lack of annotated training data, the pseudo-labels may undersegment the tumor and contain many false negatives.

To alleviate this problem, we propose PL-BTS+, where the undersegmented tumors represented by the pseudo-labels are pasted onto the images without tumors that are used to train the prompt model. In this way, the false negatives can be reduced in the resulting synthetic images. Mathematically, suppose we are given a set of unlabeled images with brain tumors, where is the -th unlabeled image; then the pasting operation is represented as

| (5) | |||||

| (6) |

where and are the image with a pasted brain tumor and the corresponding tumor pseudo-label, respectively, is the pseudo-label of predicted by the prompt model, and is the -th image without tumors defined in Section 3.1. A graphical illustration of the pasting is shown in Fig. 3. Note that here we assume that and have the same dimension, and if it is not the case we can simply resize and to apply Eq. (5).

A number of images and pseudo-labels can be generated by repeating Eqs. (5) and (6) with randomly drawn and . These images and pseudo-labels are used together with the original unlabeled images and their pseudo-labels to fine-tune the prompt model for brain tumor segmentation. The fine-tuning allows the model to further learn from real tumor textures. Since the prompt model has already been well trained, only ten epochs are used for fine-tuning.

3.3 Details about the segmentation framework

Our method is agnostic to the architecture of the segmentation network and the segmentation framework. For demonstration, we select nnU-Net [6] that has achieved state-of-the-art performance across a variety of medical image segmentation tasks as our segmentation framework. nnU-net uses the U-net architecture [37, 38] and automatically determines the data configuration and hyperparameter settings. For more details about nnU-net, we refer readers to [6].

4 Results

To evaluate the proposed method, we performed experiments on two datasets for brain tumor segmentation. In addition, to show that PL-BTS and PL-BTS+ can be extended beyond brain tumor segmentation, we also considered a different brain lesion segmentation task, ischemic stroke lesion segmentation, for evaluation. We first describe the datasets considered in the experiments and the experimental settings. Then, we introduce the competing unsupervised methods for comparison. Finally, the detailed evaluation results are presented, including the comparison of the proposed method with competing methods and the investigation of the benefit of incorporating unlabeled images for training.

4.1 Data description and experimental settings

For brain tumor segmentation, we used the FLAIR images from the public dataset OASIS [53] as the images without brain tumors for training the prompt model, and evaluated the performance of the proposed method on the public dataset BraTS2021 [54] and an in-house dataset BTFLAIR. For the extension to ischemic stroke lesion segmentation, we used the diffusion weighted images (DWIs) from an in-house dataset BTDWI and the public dataset ISLES2022 [55] as the images for training the prompt model, and evaluated the proposed method on ISLES2022. The acquisition of the in-house datasets was approved by the institutional review board of Beijing Tiantan Hospital, Capital Medical University, with written informed consent obtained from each participant according to the Declaration of Helsinki. The details about these public and in-house datasets and their experimental settings are given below.

4.1.1 OASIS

The OASIS dataset [53] is a publicly available dataset for neuroimaging study and analysis. There are no images with brain tumors in the dataset, which meets our need for prompt learning. We randomly selected 100 FLAIR images from the dataset and generated one image with abnormality from each of them (100 in total) for the prompt task; 100 different FLAIR images were also selected from the dataset, and one image with abnormality was generated from each of them (100 in total) for the validation task. The FLAIR images have been registered to the corresponding T1-weighted images and skull-stripped with BET [56]. All these images have the same voxel size of 1 mm isotropic.

4.1.2 BraTS2021

The BraTS2021 dataset [54] comprises multimodal MR images with brain tumors acquired from multiple institutions under standard clinical conditions. There are 1251 cases of which both the images and tumor annotations are publicly available. The multimodal images of the same subject have been co-registered and skull-stripped. All these images have the same voxel size of 1 mm isotropic. In our experiment, the FLAIR images were selected for the segmentation of the whole tumor. All 1251 cases were used as the test set to evaluate the performance of the prompt model, as no annotation was required to train the prompt model.

As described in Section 3.2, our method allows the incorporation of unlabeled images with brain tumors into the training process. To evaluate the benefit of the incorporation, 100 images were randomly selected from the 1251 cases and included in training without using their annotations. The pseudo-labels predicted by the prompt model on the 100 images were pasted onto the 200 FLAIR images from the OASIS dataset. Note that the combination of the pseudo-label and the OASIS image was randomly selected. We ensured that each of the 200 FLAIR images was used once and each pseudo-label was used twice. This led to 200 synthetic images with pseudo-labels for model fine-tuning. In this case, the remaining 1151 cases were used as the test set.

4.1.3 BTFLAIR

BTFLAIR is an in-house dataset provided by Beijing Tiantan Hospital for segmenting whole brain tumors (ethical approval reference number: KY2022-078-04). The dataset includes 67 annotated FLAIR images acquired on multiple scanners, and they have been skull-stripped with BET [56]. The voxel size of these images ranges from to . Like the BraTS2021 dataset, all images in BTFLAIR were used as the test set to evaluate the performance of the prompt model.

4.1.4 BTDWI

BTDWI is an in-house DWI dataset provided by Beijing Tiantan Hospital (ethical approval reference number: 2021-KY-0112). There are 47 DWIs acquired with a -value of 1000 s/, and the skull was removed for each DWI with BET [56]. The voxel size of the DWIs is around . There are no ischemic stroke lesions on the DWIs, and the 47 images were combined with the three images without ischemic stroke lesions described next in Section 4.1.5 (50 in total) to train the prompt model that segments ischemic stroke lesions. 25 of the 50 DWIs without ischemic stroke lesions were used for the prompt task, and the other 25 DWIs were used for the validation task. We generated four images with abnormality from each DWI. Note that as the size of ischemic stroke lesions is different from that of brain tumors [57], during image generation the size of the polyhedron was adjusted to be sampled from a mixture of two uniform distributions ; also, as ischemic stroke lesions appear as hyper-intensity on DWIs, no dark component was used for image generation.

4.1.5 ISLES2022

The ISLES2022 dataset [55] is publicly available and comprises 250 cases of ischemic stroke patients. We used the skull-stripped DWIs in the dataset, which were acquired on different scanners with a -value of 1000 s/. The voxel size of these images ranges from to . There are three cases that contain no ischemic stroke lesions. They were used for training the prompt model together with the BTDWI dataset as described in Section 4.1.4. The other 247 DWIs that contain ischemic stroke lesions were all used as the test set to evaluate the performance of the prompt model.

Again, to further investigate the benefit of incorporating unlabeled images with ischemic stroke lesions in training, we randomly selected 100 DWIs from the 247 DWIs with ischemic stroke lesions and added them into the training process as described in Section 3.2 without using their annotations. The pseudo-label predicted by the prompt model on each of the 100 DWIs was randomly pasted onto the 50 DWIs without ischemic stroke lesions from the BTDWI and ISLES2022 datasets. We ensured that each pseudo-label was used twice and each DWI without ischemic stroke lesions was used four times. This resulted in 200 synthetic images with ischemic stroke lesions for model fine-tuning. In this case, the remaining 147 DWIs were used as the test set.

4.2 Competing methods

Our method was compared with nine existing unsupervised DL-based brain anomaly detection methods that can be used to segment brain tumors or ischemic stroke lesions. These methods include typical autoencoder-based approaches [20] (AE, VAE, and VQ-VAE), a generative adversarial network f-AnoGAN [20], more recent methods based on Transformer [45] (dense convolutional transformer autoencoder (DCTAE), basic vision transformer autoencoder (BTAE), and hierarchical transformer autoencoder with skip connections (HTAES)), and two other approaches 3D implicit fields (3DIF) [21] and constrained unsupervised anomaly segmentation (CUAS) [58] that are designed for unsupervised brain tumor segmentation.

The images without brain tumors or ischemic stroke lesions specified in Section 4.1 were used by the competing methods for model training. Note that in our experiments, we focused on the strictly unsupervised setting, where no validation set with manual annotations was available, and this setting was also applied to the competing methods.

All the competing methods are open-source222AE, VAE, and f-AnoGAN can be obtained from https://github.com/StefanDenn3r/Unsupervised_Anomaly_Detection_Brain_MRI; VQ-VAE, BTAE, DCTAE, and HTAES are available at https://github.com/ahmedgh970/Transformers_Unsupervised_Anomaly_Segmentation; 3DIF is available at https://github.com/snavalm/ifl_unsup_anom_det; CUAS is available at https://github.com/jusiro/constrained_anomaly_segmentation.. We used their publicly available code and default settings for our experiments, except that the maximum number of training epochs was set to 100 like in the proposed method, and this number was found to be sufficient for the competing methods.

| BraTS2021 (1251 cases) | BTFLAIR (67 cases) | |||||

| Method | Dice | Precision | Recall | Dice | Precision | Recall |

| AE | 33.64 21.45*** | 62.09 30.46*** | 30.00 19.37*** | 41.80 20.62*** | 57.92 29.93*** | 38.90 21.20*** |

| VAE | 42.67 18.30*** | 56.53 25.18*** | 39.63 19.66*** | 42.26 21.12*** | 59.24 32.12*** | 39.86 20.98*** |

| VQ-VAE | 31.62 26.24*** | 74.38 34.02*** | 30.98 29.73*** | 30.41 25.24*** | 51.28 39.34*** | 45.41 33.33*** |

| f-AnoGAN | 39.36 19.00*** | 36.65 22.94*** | 53.51 18.48*** | 33.37 24.19*** | 47.34 37.79*** | 40.29 26.43*** |

| DCTAE | 38.65 29.06*** | 74.40 34.11*** | 40.56 34.46*** | 34.67 28.94*** | 50.40 39.87*** | 54.87 35.99*** |

| BTAE | 30.54 30.92*** | 64.04 41.71*** | 32.86 35.76*** | 28.46 28.21*** | 52.66 41.92*** | 47.97 38.87*** |

| HTAES | 14.57 24.07*** | 35.36 44.04*** | 15.37 27.48*** | 08.44 17.29*** | 25.00 20.35*** | 17.81 32.34*** |

| 3DIF | 47.10 17.21*** | 54.51 24.54*** | 48.79 16.52*** | 32.07 17.52*** | 49.15 32.01*** | 36.47 23.93*** |

| CUAS | 54.74 18.57*** | 74.16 25.12*** | 47.71 19.23*** | 44.19 28.14*** | 59.69 35.45*** | 46.15 28.89*** |

| PL-BTS | 79.56 15.08 | 80.36 15.90 | 82.90 17.77 | 70.60 20.14 | 72.91 23.38 | 77.42 22.29 |

| PL-BTS w/o VT | 40.94 30.33 | 71.70 34.83 | 32.70 28.07 | 46.00 32.52 | 74.85 34.57 | 38.25 31.27 |

4.3 Comparison with competing unsupervised methods

For fair comparison, since the competing unsupervised methods only use images without brain tumors or ischemic stroke lesions for training, their performance was compared with that of the prompt model (i.e., PL-BTS).

4.3.1 Evaluation of brain tumor segmentation

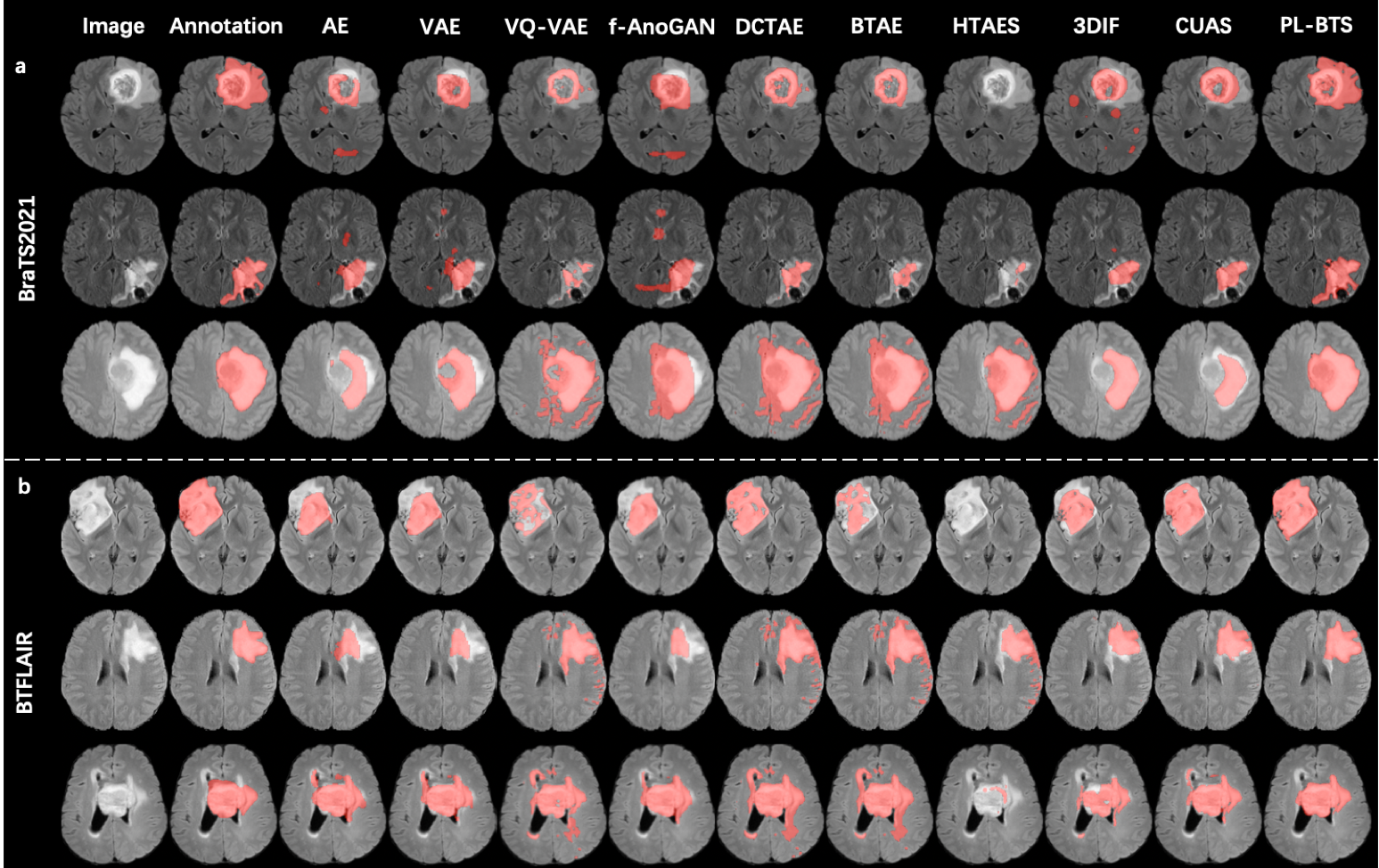

First, for qualitative evaluation, representative segmentation examples selected from the test sets of BraTS2021 and BTFLAIR are shown in Figs. 4a and 4b, respectively, where the results of PL-BTS and the competing unsupervised methods are compared. The manual annotation is also displayed for reference. For both datasets our method produced tumor segmentation that is consistent with the annotation and better resembles the annotation than the results of the competing methods.

To quantitatively compare PL-BTS and each competing method, we computed the Dice coefficient, voxelwise precision, and voxelwise recall of the segmentation results on the test set for BraTS2021 and BTFLAIR. The means and standard deviations of these evaluation metrics are listed in Table 1 for the two datasets separately, where paired Student’s -tests were also used to compare the results of PL-BTS and each competing method. For both datasets and all three metrics, the result of PL-BTS is highly significantly () better than those of the competing methods. Compared with the second best method CUAS, PL-BTS has improved the Dice coefficient by about 45.3% for BraTS2021 and 59.8% for BTFLAIR.

In addition, to show the necessity of the validation task in PL-BTS, the quantitative results of PL-BTS achieved without the validation task (referred to as PL-BTS w/o VT) are shown in Table 1. Compared with PL-BTS, the Dice coefficients of PL-BTS w/o VT drop by a large amount, which demonstrates the importance of using the validation task.

4.3.2 Evaluation of ischemic stroke lesion segmentation

For qualitative evaluation, representative segmentation cases for the test set of ISLES2022 are shown in Fig. 5 together with the manual annotation, where the results of PL-BTS and the competing methods are compared. The results of PL-BTS in general agree with the annotation and better resemble the annotation than those of the competing methods.

For quantitative evaluation, the means and standard deviations of the Dice coefficient, voxelwise precision, and voxelwise recall of the segmentation results on the test set are shown in Table 2, where PL-BTS was compared with all competing methods with paired Student’s -tests. The performance of PL-BTS is highly significantly () better than those of the competing methods in all cases. Compared with the second best method CUAS, PL-BTS has improved the Dice coefficient by about 141.4%.

| Method | ISLES2022 (247 cases) | ||

| Dice | Precision | Recall | |

| AE | 18.00 21.45*** | 38.06 39.93*** | 15.06 15.06*** |

| VAE | 13.10 19.39*** | 45.84 48.38*** | 8.499 13.54*** |

| VQ-VAE | 16.82 23.49*** | 31.59 36.23*** | 14.18 20.95*** |

| f-AnoGAN | 17.18 20.23*** | 28.54 35.39*** | 18.38 21.16*** |

| DCTAE | 16.98 23.48*** | 30.29 34.96*** | 14.65 21.36*** |

| BTAE | 18.72 25.02*** | 31.07 35.14*** | 16.73 23.41*** |

| HTAES | 14.26 22.66*** | 32.20 38.19*** | 11.35 19.63*** |

| 3DIF | 14.28 19.79*** | 17.37 29.74*** | 35.56 27.97*** |

| CUAS | 21.31 22.66*** | 21.11 25.12*** | 42.20 34.71*** |

| PL-BTS | 51.44 34.58 | 69.25 32.16 | 47.06 34.25 |

4.4 Benefit of incorporating unlabeled images

We then used BraTS2021 and ISLES2022 to demonstrate the benefit of incorporating unlabeled images into model training. Here, besides PL-BTS, the result of PL-BTS+ was considered. Also, to demonstrate the benefit of image pasting in PL-BTS+ in Section 3.2, the segmentation result achieved without the synthetic images obtained via pasting was considered, and it is referred to as PL-BTS+ without pasting undersegmented tumors (PL-BTS+ w/o PUT). In addition, the segmentation result achieved with the tumor pasting but without the original unlabeled images and pseudo-labels (referred to as PL-BTS+ w/o UIP) was evaluated to demonstrate the necessity of using the original unlabeled images.

The Dice coefficients of PL-BTS and PL-BTS+ are summarized in Table 3, together with the results of PL-BTS+ w/o PUT and PL-BTS+ w/o UIP. For both BraTS2021 and ISLES2022, PL-BTS+ outperforms PL-BTS, and it is also better than PL-BTS+ w/o PUT and PL-BTS+ w/o UIP. These results indicate the benefit of incorporating unlabeled images for model training, as well as the benefit of using both original unlabeled images and images with pasted pseudo-labels. Note that the Dice coefficient of PL-BTS+ w/o PUT for ISLES2022 is low and worse than PL-BTS. This is likely because ischemic stroke lesions can be diffuse, and the false negatives in the pseudo-labels of the original unlabeled images can be severe and have a negative impact on model training.

| Dataset | Method | Dice |

| BraTS2021 | PL-BTS | 79.56 15.01 |

| PL-BTS+ w/o PUT | 80.22 15.07 | |

| PL-BTS+ w/o UIP | 79.85 16.22 | |

| PL-BTS+ | 82.66 14.90 | |

| ISLES2022 | PL-BTS | 51.41 33.88 |

| PL-BTS+ w/o PUT | 22.66 28.80 | |

| PL-BTS+ w/o UIP | 54.21 33.39 | |

| PL-BTS+ | 55.29 34.79 |

5 Discussion

Our method PL-BTS for unsupervised brain tumor segmentation is based on image-based prompts that suggest whether tumor-like abnormality exists at a voxel. It is different from existing reconstruction-based unsupervised approaches, where we artificially generate abnormality for model training. Of note, the model training is nontrivial as the prompt model can easily overfit the simplistic synthetic training data. To address this problem, we propose the use of a validation task, because no annotated data is available as a validation set. The results of brain tumor segmentation show that the new perspective presented in this work outperforms the existing methods by a large margin. The use of the validation task may also inspire the development of zero-shot learning [59] in general. Model selection is an important issue in zero-shot learning, and the success of this work suggests that it is possible to use different tasks instead of annotated data to monitor the learning process, where the validation task should share general knowledge with the target task of interest.

The design of the prompt and validation tasks in this work is hand-crafted and relatively straightforward. It is possible to explore more advanced designs of the tasks. For example, the diversity of the data generated for the prompt and validation tasks may be increased to improve the stability of model training and selection. In addition, the use of multiple validation tasks may be explored in future works, where these tasks can synergistically avoid overfitting with proper information fusion. Finally, it is an interesting direction to explore learning-based task design, where the generation of abnormality in both prompt and validation tasks can be parameterized and the parameters are learned jointly in the model training process.

As there is currently not yet a vision-language model for 3D brain MR images, our prompt design is purely image-based. However, since these images are usually accompanied by radiological reports, it is possible to train a vision-language model for brain tumors with such data, and the model can then be used for prompt-based tumor segmentation. The development of such models can be explored in future works.

Although the proposed method uses a different strategy than existing reconstruction-based methods, it is possible to integrate these different strategies. For example, currently, the generation of abnormality is performed randomly in brain tissue. The reconstruction-based approach may provide information about where it is easy or difficult to reconstruct normal tissue. This can partition normal tissue into different groups, and the location for abnormality generation can be determined based on the discrimination.

Besides brain tumor segmentation, we have demonstrated the potential of extending the proposed method to other brain lesion segmentation tasks. In particular, we applied our method to ischemic stroke lesion segmentation and obtained results that are similar to those of brain tumor segmentation. Future works can explore additional brain lesion segmentation tasks and make customized designs for them.

In addition to PL-BTS, we have proposed PL-BTS+ that extends PL-BTS when unlabeled images with brain tumors are available. The extension is motivated by the pseudo-labeling strategy that is commonly used in semi-supervised learning, and based on our observation that the pseudo-labels given by the prompt model tend to undersegment tumors, we further paste the undersegmented tumors onto tumor-free images to reduce false negatives. The results presented in Section 4.4 confirm the benefit of the proposed extension, as well as the necessity of using both original unlabeled images and pasted images. The use of unlabeled images also motivates us to further explore “semi-unsupervised” segmentation of brain tumors and other types of lesions in future works, where normal-appearing images can be used jointly with unlabeled images with lesions to improve model training. Approaches that are commonly used in semi-supervised learning may be adapted to the semi-unsupervised setting.

6 Conclusion

We have proposed an unsupervised DL-based brain tumor segmentation method PL-BTS, which is inspired by prompt learning in NLP. Image-based prompts are designed to train a prompt model that suggests tumor-like abnormality, where we also propose to use a validation task to avoid overfitting. In addition, we extend PL-BTS and propose PL-BTS+, which allows further improvement of the segmentation performance when unlabeled images with brain tumors are available. Experiments on public and in-house datasets show that our method outperforms existing unsupervised DL-based methods by a large margin and it can potentially be extended to other brain lesion segmentation tasks as well.

References

- [1] A. Comba et al., “Spatiotemporal analysis of glioma heterogeneity reveals COL1A1 as an actionable target to disrupt tumor progression,” Nature Communications, vol. 13, no. 1, p. 3606, 2022.

- [2] B. H. Menze et al., “The multimodal brain tumor image segmentation benchmark (BRATS),” IEEE Transactions on Medical Imaging, vol. 34, no. 10, pp. 1993–2024, 2015.

- [3] D. W. Parsons et al., “An integrated genomic analysis of human glioblastoma multiforme,” Science, vol. 321, no. 5897, pp. 1807–1812, 2008.

- [4] J. V. Gregory et al., “Systemic brain tumor delivery of synthetic protein nanoparticles for glioblastoma therapy,” Nature Communications, vol. 11, no. 1, p. 5687, 2020.

- [5] C. Alifieris and D. T. Trafalis, “Glioblastoma multiforme: Pathogenesis and treatment,” Pharmacology and Therapeutics, vol. 152, pp. 63–82, 2015.

- [6] F. Isensee and K. H. Maier-Hein, “nnU-Net for brain tumor segmentation,” in MICCAI BrainLes Workshop, vol. 12659. Springer, 2020, p. 118.

- [7] T. Magadza and S. Viriri, “Deep learning for brain tumor segmentation: a survey of state-of-the-art,” Journal of Imaging, vol. 7, no. 2, p. 19, 2021.

- [8] K. Kamnitsas et al., “Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation,” Medical Image Analysis, vol. 36, pp. 61–78, 2017.

- [9] N. Gordillo, E. Montseny, and P. Sobrevilla, “State of the art survey on MRI brain tumor segmentation,” Magnetic Resonance Imaging, vol. 31, no. 8, pp. 1426–1438, 2013.

- [10] R. Dubey, M. Hanmandlu, S. Gupta, and S. Gupta, “Region growing for MRI brain tumor volume analysis,” Indian Journal of Science and Technology, vol. 2, no. 9, 2009.

- [11] M. Havaei et al., “Brain tumor segmentation with deep neural networks,” Medical Image Analysis, vol. 35, pp. 18–31, 2017.

- [12] W. Wang, C. Chen, M. Ding, H. Yu, S. Zha, and J. Li, “TransBTS: Multimodal brain tumor segmentation using transformer,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2021, pp. 109–119.

- [13] Y. Ding, X. Yu, and Y. Yang, “RFNet: Region-aware fusion network for incomplete multi-modal brain tumor segmentation,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 3975–3984.

- [14] Y. Ding et al., “ToStaGAN: An end-to-end two-stage generative adversarial network for brain tumor segmentation,” Neurocomputing, vol. 462, pp. 141–153, 2021.

- [15] W. Cui et al., “Semi-supervised brain lesion segmentation with an adapted mean teacher model,” in International Conference on Information Processing in Medical Imaging. Springer, 2019, pp. 554–565.

- [16] R. Meier, S. Bauer, J. Slotboom, R. Wiest, and M. Reyes, “Patient-specific semi-supervised learning for postoperative brain tumor segmentation,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2014, pp. 714–721.

- [17] X. Zhang, W. Xie, C. Huang, Y. Zhang, and Y. Wang, “Self-supervised tumor segmentation through layer decomposition,” arXiv preprint arXiv:2109.03230, 2021.

- [18] X. Zhuang, Y. Li, Y. Hu, K. Ma, Y. Yang, and Y. Zheng, “Self-supervised feature learning for 3D medical images by playing a rubik’s cube,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2019, pp. 420–428.

- [19] W. H. Pinaya et al., “Unsupervised brain imaging 3D anomaly detection and segmentation with transformers,” Medical Image Analysis, vol. 79, p. 102475, 2022.

- [20] C. Baur, S. Denner, B. Wiestler, N. Navab, and S. Albarqouni, “Autoencoders for unsupervised anomaly segmentation in brain MR images: a comparative study,” Medical Image Analysis, vol. 69, p. 101952, 2021.

- [21] S. Naval Marimont and G. Tarroni, “Implicit field learning for unsupervised anomaly detection in medical images,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2021, pp. 189–198.

- [22] X. Wu, L. Bi, M. Fulham, D. D. Feng, L. Zhou, and J. Kim, “Unsupervised brain tumor segmentation using a symmetric-driven adversarial network,” Neurocomputing, vol. 455, pp. 242–254, 2021.

- [23] P. Liu, W. Yuan, J. Fu, Z. Jiang, H. Hayashi, and G. Neubig, “Pre-train, prompt, and predict: A systematic survey of prompting methods in natural language processing,” arXiv preprint arXiv:2107.13586, 2021.

- [24] J. D. M.-W. C. Kenton and L. K. Toutanova, “BERT: Pre-training of deep bidirectional transformers for language understanding,” in Proceedings of NAACL-HLT, 2019, pp. 4171–4186.

- [25] T. Brown et al., “Language models are few-shot learners,” in Advances in Neural Information Processing Systems, vol. 33, 2020, pp. 1877–1901.

- [26] H. Bahng, A. Jahanian, S. Sankaranarayanan, and P. Isola, “Visual Prompting: Modifying pixel space to adapt pre-trained models,” arXiv preprint arXiv:2203.17274, 2022.

- [27] S. Changpinyo, W.-L. Chao, and F. Sha, “Predicting visual exemplars of unseen classes for zero-shot learning,” in Proceedings of the IEEE International Conference on Computer Vision, 2017, pp. 3476–3485.

- [28] S. Mutasa, S. Sun, and R. Ha, “Understanding artificial intelligence based radiology studies: What is overfitting?” Clinical Imaging, vol. 65, pp. 96–99, 2020.

- [29] G. Ghiasi et al., “Simple copy-paste is a strong data augmentation method for instance segmentation,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition. IEEE, 2021, pp. 2917–2927.

- [30] Z. Li, K. Kamnitsas, and B. Glocker, “Analyzing overfitting under class imbalance in neural networks for image segmentation,” IEEE Transactions on Medical Imaging, vol. 40, no. 3, pp. 1065–1077, 2020.

- [31] H. Dong, G. Yang, F. Liu, Y. Mo, and Y. Guo, “Automatic brain tumor detection and segmentation using U-Net based fully convolutional networks,” in Annual Conference on Medical Image Understanding and Analysis. Springer, 2017, pp. 506–517.

- [32] J. Hu, X. Gu, and X. Gu, “Mutual ensemble learning for brain tumor segmentation,” Neurocomputing, vol. 504, pp. 68–81, 2022.

- [33] S. Supot, C. Thanapong, P. Chuchart, and S. Manas, “Segmentation of magnetic resonance images using discrete curve evolution and fuzzy clustering,” in IEEE International Conference on Integration Technology. IEEE, 2007, pp. 697–700.

- [34] E. Abdel-Maksoud, M. Elmogy, and R. Al-Awadi, “Brain tumor segmentation based on a hybrid clustering technique,” Egyptian Informatics Journal, vol. 16, no. 1, pp. 71–81, 2015.

- [35] Y. Jiang, Y. Zhang, X. Lin, J. Dong, T. Cheng, and J. Liang, “SwinBTS: a method for 3D multimodal brain tumor segmentation using Swin Transformer,” Brain Sciences, vol. 12, no. 6, p. 797, 2022.

- [36] A. Hatamizadeh et al., “UNETR: Transformers for 3D medical image segmentation,” in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2022, pp. 574–584.

- [37] Ö. Çiçek, A. Abdulkadir, S. S. Lienkamp, T. Brox, and O. Ronneberger, “3D U-Net: Learning dense volumetric segmentation from sparse annotation,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2016, pp. 424–432.

- [38] O. Ronneberger, P. Fischer, and T. Brox, “U-Net: Convolutional networks for biomedical image segmentation,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2015, pp. 234–241.

- [39] X. Luo, J. Chen, T. Song, and G. Wang, “Semi-supervised medical image segmentation through dual-task consistency,” in AAAI Conference on Artificial Intelligence, 2021, pp. 8801–8809.

- [40] L. Chen, P. Bentley, K. Mori, K. Misawa, M. Fujiwara, and D. Rueckert, “Self-supervised learning for medical image analysis using image context restoration,” Medical Image Analysis, vol. 58, p. 101539, 2019.

- [41] R. Dey and Y. Hong, “ASC-Net: Adversarial-based selective network for unsupervised anomaly segmentation,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2021, pp. 236–247.

- [42] S. N. Marimont and G. Tarroni, “Anomaly detection through latent space restoration using vector quantized variational autoencoders,” in International Symposium on Biomedical Imaging. IEEE, 2021, pp. 1764–1767.

- [43] L. Wang, D. Zhang, J. Guo, and Y. Han, “Image anomaly detection using normal data only by latent space resampling,” Applied Sciences, vol. 10, no. 23, p. 8660, 2020.

- [44] T. Schlegl, P. Seeböck, S. M. Waldstein, G. Langs, and U. Schmidt-Erfurth, “f-AnoGAN: Fast unsupervised anomaly detection with generative adversarial networks,” Medical Image Analysis, vol. 54, pp. 30–44, 2019.

- [45] A. Ghorbel, A. Aldahdooh, S. Albarqouni, and W. Hamidouche, “Transformer based models for unsupervised anomaly segmentation in brain MR images,” arXiv preprint arXiv:2207.02059, 2022.

- [46] M. Xu et al., “A simple baseline for zero-shot semantic segmentation with pre-trained vision-language model,” arXiv preprint arXiv:2112.14757, 2021.

- [47] J. Ding, N. Xue, G.-S. Xia, and D. Dai, “Decoupling zero-shot semantic segmentation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 11 583–11 592.

- [48] Z. Wang et al., “CRIS: CLIP-driven referring image segmentation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 11 686–11 695.

- [49] A. Radford et al., “Learning transferable visual models from natural language supervision,” in International Conference on Machine Learning. PMLR, 2021, pp. 8748–8763.

- [50] F. Pérez-García F et al., “A self-supervised learning strategy for postoperative brain cavity segmentation simulating resections,” International Journal of Computer Assisted Radiology and Surgery, vol. 16, no. 10, pp. 1653–1661, 2021.

- [51] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” in International Conference on Learning Representations, 2015.

- [52] B. Zoph et al., “Rethinking pre-training and self-training,” Advances in Neural Information Processing Systems, vol. 33, pp. 3833–3845, 2020.

- [53] D. S. Marcus, A. F. Fotenos, J. G. Csernansky, J. C. Morris, and R. L. Buckner, “Open access series of imaging studies: longitudinal MRI data in nondemented and demented older adults,” Journal of Cognitive Neuroscience, vol. 22, no. 12, pp. 2677–2684, 2010.

- [54] U. Baid et al., “The RSNA-ASNR-MICCAI BraTS 2021 benchmark on brain tumor segmentation and radiogenomic classification,” arXiv preprint arXiv:2107.02314, 2021.

- [55] M. R. H. Petzsche et al., “ISLES 2022: A multi-center magnetic resonance imaging stroke lesion segmentation dataset,” arXiv preprint arXiv:2206.06694, 2022.

- [56] S. M. Smith, “Fast robust automated brain extraction,” Human brain mapping, vol. 17, no. 3, pp. 143–155, 2002.

- [57] R. Hakimelahi et al., “Time and diffusion lesion size in major anterior circulation ischemic strokes,” Stroke, vol. 45, no. 10, pp. 2936–2941, 2014.

- [58] J. Silva-Rodríguez, V. Naranjo, and J. Dolz, “Constrained unsupervised anomaly segmentation,” arXiv preprint arXiv:2203.01671, 2022.

- [59] Y. Xian, C. H. Lampert, B. Schiele, and Z. Akata, “Zero-shot learning a comprehensive evaluation of the good, the bad and the ugly,” IEEE transactions on pattern analysis and machine intelligence, vol. 41, no. 9, pp. 2251–2265, 2018.