Unifying Relational Sentence Generation and Retrieval for Medical Image Report Composition

Abstract

Beyond generating long and topic-coherent paragraphs in traditional captioning tasks, the medical image report composition task poses more task-oriented challenges by requiring both the highly-accurate medical term diagnosis and multiple heterogeneous forms of information including impression and findings. Current methods often generate the most common sentences due to dataset bias for individual case, regardless of whether the sentences properly capture key entities and relationships. Such limitations severely hinder their applicability and generalization capability in medical report composition where the most critical sentences lie in the descriptions of abnormal diseases that are relatively rare. Moreover, some medical terms appearing in one report are often entangled with each other and co-occurred, e.g. symptoms associated with a specific disease. To enforce the semantic consistency of medical terms to be incorporated into the final reports and encourage the sentence generation for rare abnormal descriptions, we propose a novel framework that unifies template retrieval and sentence generation to handle both common and rare abnormality while ensuring the semantic-coherency among the detected medical terms. Specifically, our approach exploits hybrid-knowledge co-reasoning: i) explicit relationships among all abnormal medical terms to induce the visual attention learning and topic representation encoding for better topic-oriented symptoms descriptions; ii) adaptive generation mode that changes between the template retrieval and sentence generation according to a contextual topic encoder. Experimental results on two medical report benchmarks demonstrate the superiority of the proposed framework in terms of both human and metrics evaluation.

Index Terms:

Multi-modal data processing, Computer-aided diagnosis, Deep learning, Natural language processingI Introduction

Automatic generation of medical image reports has recently attracted increasing research interests [1, 2, 3], which has a significant potential to simplify the diagnostic procedure and reduce the burden of physicians. Besides the difficulties shared with captioning and visual question answering (VQA) (e.g. fine-grained visual processing and reasoning, bridging visual and linguistic modalities), the medical report composition must have a plausible logic and consistent topics to complete a long narrative consisting of multiple sentences or paragraphs. Moreover, a task-oriented challenge requires predicting not only the highly accurate medical term diagnosis but also the heterogeneous forms of information including impression and findings.

Deep neural network architectures [4, 5, 6, 7], sequence-to-sequence models [8, 9] and visual attention mechanisms [10, 2, 11] have been widely adopted in both image captioning and VQA, which improve performance by learning to focus on the salient regions of the image. However, without other prior knowledge on the visual content, such computed visual attention may concentrate on irrelevant regions. Furthermore, few methods have considered key entities, topic relationships and paragraph consistency, which are most likely to generate similar sentences in one report or similar reports for different medical images, due to the dataset bias.

In general, radiologists first check a patient’s images for peculiar regions, think of correlations among prominent symptoms, then write sentences according to the keywords following certain patterns for the normal cases and adjust statements for the specific cases. In this paper, we adopted a similar methodology and proposed a Unifying Retrieval and Relational-topic sentence Generation framework, named Relation-paraNet, which incorporates the semantic consistency of medical terms into the reports and encourages the sentence generation for rare abnormal descriptions. Specifically, our Relation-paraNet exploits hybrid-knowledge co-reasoning in two ways. First, we explore explicit relationships among all abnormal medical terms to induce the visual attention learning and topic representation encoding for better topic-oriented symptom descriptions. We pay attention to the abnormal medical terms that reflect the keywords of the reports and introduce the relational-topic to guide the sentence generation. We further excavate the semantic consistency among all abnormal keywords and conduct abnormality classification to induce visual attention learning. On the other hand, to generate better topic-oriented symptom descriptions, we integrate visual features and abnormality relations for topic representation, which is essential to depict the principal idea of the independent sentence in the report.

Furthermore, inspired by the fact that radiologists often write reports based on templates, we introduce an Adaptive Generator that changes between the template retrieval and sentence generation according to a contextual topic encoder. We employ a retrieval classification module to decide the choice for either automatically generating sentences or retrieving specific sentences from the template database. The template database is based on human prior knowledge collected from available medical reports. To enable effective and robust report composition, we take the integral generated sentences into concern and encode such paragraph information back into the network to produce the next sentence. Experimental results show that by unifying retrieval paragraph and relational topic, our Relation-paraNet is able to generate more accurate and professional medical reports.

Our contributions are summarized in the following aspects.

-

•

Aiming at resolving the task-oriented challenges of medical report composition, we make the first attempt to incorporate the semantic consistency of medical terms into the final reports and encourage the precise sentence generation for rare abnormal descriptions.

-

•

We introduce a Unifying Retrieval and Relational-topic driven Generation framework, named Relation-paraNet, which integrates a Relational-Topic Encoder to learn explicit semantic consistency among medical terms and an Adaptive Generator to change between the template retrieval and sentence generation for more natural medical report composition.

-

•

Our method outperforms state-of-the-art works on two medical report datasets and achieves an appealing performance under human evaluation.

The rest of this paper is organized as follows. Section II presents a brief review of related work. Section III introduces the pipeline of our method, including the model formulation and the optimization method. The experimental results, comparisons, and component analysis are presented in Section IV. Section V concludes the paper with a discussion of future work.

II Related Work

Visual Captioning is a challenging cognitive task that requires a simultaneous understanding of both natural language and visual information. Early pioneering methods based on deep learning [12] have employed CNN [5, 13] and RNN [14, 15] to generate syntactical sentences for images or videos. For example, Karpathy et al. [16] proposed an alignment model to generate descriptions of image regions by incorporating R-CNN [17] features into RNN. Vinyals et al. [18] devised an end-to-end captioning system by utilizing LSTM to maintain information in memory, which is improved via a spatial attention mechanism to automatically localize related regions in [19].

Based on the above prevailing frameworks, recent approaches [20, 21, 22, 23, 24, 25, 3] towards different ambitions have been proposed. Xu et al. [20] designed a dual-stream RNN architecture to exploit both visual and semantic features jointly. To address the issue that the image is segmented by CNN to the fixed resolution grid at a coarse level, Zhang et al. [23] equipped the image captioning model with fine-grained and semantic-guided visual attention. Yao et al. [26] introduced a hierarchy from instance level and region level to delve into a thorough image understanding. Then a HIerarchy Parsing architecture is used to integrate the hierarchical structure into image encoder. Furthermore, to learn structured semantic knowledge in scene graphs, Li et al. [21] first extracted triples from scene graphs and encoded them into semantic vectors, then devised a hierarchical-attention-based module to focus on salient visual and semantic features. Since the capacity of a single-LSTM network is limited for image captioning, Xiao et al. [24] developed a deep hierarchical structure to fuse multi-level semantics of vision and language by increasing the vertical depth of the encoder-decoder.

Medical Report Composition is one of the challenging research topics in machine learning for healthcare [1, 2, 3]. Different from image captioning, this task requires generating multiple sentences and puts forward higher requirements on content selection, relation generation, and content fluency. Jing et al. [2] proposed a co-attention mechanism to focus on abnormal regions and a hierarchical LSTM (Sentence LSTM and Word LSTM) like [27] to generate long paragraphs. To incorporate patient background information, Huang et al. [28] proposed a hierarchical model with multi-attention mechanism. The patient’s background information is encoded and then added to the pretrained vanilla word embedding. Due to relatively rare and remarkably diverse abnormal findings, [2] fails to detect abnormalities and tends to generate trivial descriptions. Li et al. [29] directly selected sentences from the template database via Graph Transformer [30]. Recently, there are some approaches combining traditional template-based and newest generation-based methods for sequence generation[31, 8, 32]. Cao et al. [31] used existing summaries as soft templates to guide the generative model, jointly applying template retrieval, template re-ranking and template-aware summary generation. Differing from above methods failing to incorporate semantic consistency with medical abnormalities for sequence generation, our method unifies template retrieval and sentence generation to handle both common and rare abnormality while ensuring the semantic-coherency among the detected medical terms. Similar to us, Li et al. [1] combined retrieval-based and generation-based methods via reinforcement learning, whereas our generated descriptions are more consistent with detected abnormalities. Furthermore, Nie et al. [33] proposed a novel scheme that combines local mining and global learning approaches to bridge the vocabulary gap between health seekers and providers.

Attention Mechanism tries to learn different attention weights for input text or image and focus on informative region automatically [34, 35], which can be also applied to visual captioning [10, 19, 36, 37, 38] and report composition [29]. For instance, Anderson et al. [10] combined bottom-up and top-down attention mechanism in which the latter mechanism can calculate attention at the level of objects extracted by the former mechanism. Hori et al. [36] introduced a multimodal attention model that selectively focus on specific information across different modalities for video description. Gao et al. [22] developed an attention-based framework to exploit the correlations between sentence semantics and visual content by mapping the features of them into a joint space. And Pan et al. [39] devised an X-Linear attention block to capture the order feature interaction in between, and measures both spatial and channel-wise attention distributions. To make the decoder determine the relevance between the attended vector and the given attention query, Huang et al. [40] proposed an Attention on Attention module from which the useful knowledge was produced.

Multi-label Classification Multi-label classification is a challenging task that attracts increasing attention [41, 42, 43, 44, 45]. Recent approaches, which are optimized with the ranking loss [44] or the cross-entropy loss [43] incorporate deep convolutional neural network into multi-label classification and achieve superior performance. However, this task exposes strong label co-occurrence dependencies according to [41]. Thus Wang et al. [42] proposed CNN-RNN framework learns a joint image-label embedding to exploit label dependencies in an image. Furthermore, Feature Attention Network(FAN) [46], which builds the top-down feature fusion mechanism and learn the correlations among convolutional features, is designed to tackle object scale inconsistent and label dependencies. Chen et al. [47] introduced a two-stage method that trained the network to predict conditional probability directly and then refined it with unconditional probabilities which formulated from a numerically stable and principled loss function. Different from the above methods, we construct a relationship constraint loss function to learn label co-occurrence dependencies directly.

III Method

As shown in Figure 1, a complete diagnostic report for a medical image is comprised of both text descriptions and lists of medical terms. Inspired by [27, 1], we formulate the generation in a hierarchical framework illustrated in Figure 2. A medical image is first fed into a deep CNN architecture to learn visual features. We explore the semantic consistency of medical terms and perform the abnormality classification to encourage the sentence generation of rare abnormal descriptions. To achieve semantic alignment between visual features and report descriptions, the Relational-Topic Encoder and Adaptive Generator integrate the advantages of the bottom-up and top-down attention mechanism. Taking image features and the sentence embedding as input, the Relational-Topic Encoder produces a contextual topic vector and a stop control to determine the number of sentences. The Adaptive Generator, acting as a gate function, takes the encoded contextual topic vector as input to determine the choice to either retrieve a template from the template database or generate a new sentence. In the latter option, the sentence topic vector is fed into the Word Decoder to generate words for the corresponding sentence. The proposed model produces two topic vectors, and , respectively.

III-A Relational Abnormality Classification

Previous works [2, 10] have shown that visual attention can perform fairly well for localizing objects and aiding captions. However, visual attention is usually not sufficient to encode high-level semantic information to recognize abnormalities. To this end, we explore the relationship between the medical terms which can be cooperative with image features for the robust relational topic generation.

We treat the abnormality classification as a multi-label image classification task. Specifically, given a medical image , we first extract its feature maps through a deep CNN and apply a fully connected layer to compute a distribution over all of the abnormal medical terms. To solve multi-label classification, we calculate binary cross entropy for each category. Moreover, according to [41, 48], labels co-occur in images with priors. And relationships among all abnormal medical terms are also crucial for classification as some terms are often entangled with others and co-occurred. For example, “copd” and “pulmonary disease chronic obstructive” often appear together. Therefore, the predicted scores of these two abnormalities should be closer. To exploit these explicit relationships, beyond average binary cross entropy loss, we add another relationship constraint loss. Finally, the abnormality multi-label classification loss is composed of two terms, which is formulated as:

| (1) | ||||

where the first term is an average binary cross-entropy loss for each category, denotes probability of abnormal medical term and is the ground truth label; the second term is the relationship constraint loss, represents the relevance between two abnormal medical terms and denotes the number of non-zeros in relationship matrix . For a pair of abnormality probabilities, namely and , if is larger, the relationship constraint loss can guide and to be closer. Similarly, and do not influence each other when is smaller.

The static relationship matrix is computed according to the frequency of co-occurrences for each pair of abnormalities all over the training set [49], which is defined as follows:

| (2) |

where denotes the frequency of co-occurrences, is the frequency of abnormality , and denotes the total count of abnormalities. Here we do not consider the extreme case when , and we only make the statistics for a rough prior knowledge.

III-B Relational-Topic Encoder

Sentences in the report are based on the image as well as the previous generated sentence. In order to utilize the relationships among abnormal medical terms and adjacent sentences, we propose a Relational-Topic Encoder to generate more discriminative topics, as illustrated in Figure 3. Our Relational-Topic Encoder has two critical inputs. The first one is the image features which are enhanced by the abnormal medical terms learning. Its visual information can help the encoder focus on the salient regions. Furthermore, we also feed the embedding vector of the previous sentence into the decoder, which imposes the encoder to memorize the previous topic and sentence features. Formally, the Relational-Topic Encoder is composed of an LSTM layer and an attention [19] layer. At each time step, the LSTM layer concatenates the previous state of the Adaptive Generator and embedding vector of the previous generated sentence to form the input vector :

| (3) |

Supposing a sentence consists of tokens, a bi-directional LSTM is adapted to encode the sentence as a 2-D matrix [50]. The proposed Relational-Topic Encoder generates a hidden state by an LSTM as follows,

| (4) |

This hidden state is used to produce three signals. First, is linearly projected into a stop control , which determines whether to stop the topic generation process or not,

| (5) |

where an are trainable parameters. Second, given a hidden state together with image features , an attended context vector is produced with an attention operation att,

| (6) |

Third, the hidden state and the context vector are fed into a fully-connected layer with weights and to produce the topic vector ,

| (7) |

In this way, a relational sentence topic vector is generated for the word decoder to predict words sequentially. Simultaneously, an encoded contextual topic vector is produced to retrieve templates adaptively.

III-C Adaptive Generator

As the frequency of normal sentences is much higher than that of abnormal sentences, state-of-the-art methods on report composition [2] tend to generate normal sentences such as “The heart is normal in size”, “The lungs are clear” and “No acute bony abnormality”. As for the abnormal sentences like “Scattered thoracic spine spurring”, these models cannot write accurately due to the dataset bias. Different from the previous methods, our proposed Adaptive Generator produces the abnormal sentences from template database and employs the Word Decoder for generating normal sentences, which combines retrieval and generation for automatic report composition, as shown in Figure 3. During training, if the module needs to select a template, the Word Decoder will be masked and vice versa.

In particular, the Adaptive Generator takes encoded contextual topic vector as input to determine the choice that performances either template retrieval or sentence writing for the current sentence generation. It consists of an LSTM layer and a Softmax classifier. Given a hidden state from the Relational-Topic Encoder and contextual topic vector predicted by the attention layer, the Adaptive Generator produces the adaptive decision for sentence . Since sentence writing is also one of the decisions, the size of the template database is and the decision space is . The formulation can be summarized as:

| (8) | ||||

From the above Equation 4 and 8, in which two LSTM layers are used to selectively attend to spatial image features, we can see that the bottom-up and top-down attention mechanism [10] is integrated into our Relational-Topic Encoder and Adaptive Generator.

As shown in Figure 2, the relational topic vector represents the overall feature of the generated sentence. The topic vector and a special START token are regarded as the inputs to the Word Decoder following the previous work [2, 1]. The subsequent inputs are the word embedding sequence.

| (9) |

For each word, the last hidden state of the Word Decoder is used to predict a distribution over the words in the vocabulary. Finally, all sentences from retrieval or writing are concatenated to form the medical report.

III-D Parameter Learning

Training data consists of tuples , where is an image, denotes the ground-truth abnormalities and is the ground-truth report description, which has sentences. To perform adaptive generation, the -th sentence has a template index () as well as word indexes ().

Given a tuple of , we perform multi-label abnormality classification and unroll the Sentence Decoder timesteps, receiving abnormality distribution , stop distribution over the states, and template distribution over the template database. Then we unroll the Word Decoder timesteps if the adaptive generation mode changes to sentence generation, receiving word probability .

Formally, we define a multi-task loss similar to [27]:

| (10) | ||||

where denotes (binary) cross entropy function, is the abnormality multi-label classification loss consisting of binary cross-entropy loss and relationship constraint loss defined in Equation 1, denotes the stop signal prediction loss, and are the template classification loss for each sentence and the word prediction loss over word distributions respectively, denotes the number of sentences that are generated by Word Decoder. If our model needs to select a template, the masks and are set to and respectively, and vice versa. All the components are jointly trained to minimize .

| Dataset | Model | CIDEr | ROUGE-L | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 |

|---|---|---|---|---|---|---|---|

| IU X-Ray | CNN-RNN[18] | 0.294 | 0.307 | 0.216 | 0.214 | 0.087 | 0.066 |

| LRCN [51] | 0.285 | 0.307 | 0.223 | 0.128 | 0.089 | 0.068 | |

| AdaAtt [52] | 0.296 | 0.308 | 0.220 | 0.127 | 0.089 | 0.069 | |

| Att2in [53] | 0.297 | 0.307 | 0.224 | 0.129 | 0.089 | 0.068 | |

| Transformer [35] | 0.255 | 0.371 | 0.363 | 0.231 | 0.155 | 0.107 | |

| BUTD [10] | 0.312 | 0.368 | 0.398 | 0.256 | 0.174 | 0.124 | |

| AoA [40] | 0.292 | 0.355 | 0.204 | 0.119 | 0.085 | 0.062 | |

| CoAtt [2] | 0.277 | 0.369 | 0.455 | 0.288 | 0.205 | 0.154 | |

| HRGR-Agent [1] | 0.343 | 0.322 | 0.438 | 0.298 | 0.208 | 0.151 | |

| Relation-paraNet(VGG-19) | 0.317 | 0.372 | 0.505 | 0.329 | 0.230 | 0.168 | |

| KERP [29] | 0.280 | 0.339 | 0.482 | 0.325 | 0.226 | 0.162 | |

| Relation-paraNet(DenseNet-121) | 0.331 | 0.360 | 0.503 | 0.333 | 0.236 | 0.175 | |

| CX-CHR | CNN-RNN[18] | 1.580 | 0.577 | 0.590 | 0.506 | 0.450 | 0.411 |

| LRCN [51] | 1.588 | 0.577 | 0.593 | 0.508 | 0.452 | 0.413 | |

| AdaAtt [52] | 1.568 | 0.575 | 0.588 | 0.503 | 0.446 | 0.409 | |

| Att2in [53] | 1.566 | 0.576 | 0.587 | 0.503 | 0.446 | 0.408 | |

| Transformer [35] | 2.721 | 0.605 | 0.590 | 0.527 | 0.484 | 0.453 | |

| BUTD [10] | 2.516 | 0.660 | 0.642 | 0.562 | 0.506 | 0.462 | |

| AoA [40] | 1.774 | 0.617 | 0.606 | 0.516 | 0.455 | 0.408 | |

| CoAtt [2] | 2.735 | 0.645 | 0.647 | 0.575 | 0.525 | 0.487 | |

| HRGR-Agent [1] | 2.895 | 0.612 | 0.673 | 0.587 | 0.530 | 0.486 | |

| KERP [29] | 2.850 | 0.618 | 0.673 | 0.588 | 0.532 | 0.473 | |

| Relation-paraNet(DenseNet-121) | 3.249 | 0.675 | 0.711 | 0.637 | 0.586 | 0.548 |

IV Experiments and Analysis

To evaluate the effectiveness of the model, we conduct experiments on two medical image report datasets.

IU X-Ray. Indiana University Chest X-Ray Collection (IU X-Ray) [54] is a public collection that contains 7470 pairs of images and diagnostic reports. Each report is comprised of impression, findings, tags and indication, which properly meets the requirements of our task. Every token is converted to lower-case, and filtered out with a frequency less than 3, which results in 1185 unique words covering over 99.0% word occurrences in the corpus. To perform subsequent tasks, we screen tags with a frequency greater than 30 and abnormal sentences with a frequency greater than 2, which produces 80 abnormalities and 80 templates.

CX-CHR is a private collection of chest X-ray images with corresponding Chinese reports for health checking, consists of 35,609 patients and 45,598 images. There are 33236 patient samples in total, covering over 93% of the dataset. Different from IU X-Ray, CX-CHR needs to tokenize by Jieba firstly. Then we filter out tokens with the frequency less than 3, tags with the frequency less than 30 and abnormal sentences with the frequency less than 2. Finally, we obtain 1233 unique tokens, 155 abnormalities and 287 templates for CX-CHR dataset. Following [1], as the “findings” section contains the radiological observations and detailed description of the patient¡¯s information, we only consider “findings” section as the target captions. To protect the privacy of patients, CX-CHR dataset is not publicly available, but the researchers can apply for academic usage after signing the confidentiality agreement.

Since the two datasets are collected from two different hospitals, there exist variance among reports and abnormalities. In this paper, the abnormal sentences are regarded as templates. And the ratio of template retrieval and sentence generation is 0.26 for IU X-Ray(English) dataset. As for CX-CHR(Chinese) dataset, the ratio is 0.16, since the CX-CHR dataset contains more normal sentences than the IU X-Ray dataset. On both datasets, we use the same dataset split from [29] for a fair comparison. There is no overlap between patients in different sets.

| Dataset | Model | CIDEr | ROUGE-L | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 |

|---|---|---|---|---|---|---|---|

| IU X-Ray | w/o Sentence relation | 0.249 | 0.328 | 0.385 | 0.235 | 0.155 | 0.108 |

| Normal templates | 0.144 | 0.282 | 0.292 | 0.183 | 0.128 | 0.094 | |

| w/o Abnormality relation | 0.317 | 0.330 | 0.355 | 0.217 | 0.150 | 0.111 | |

| Relation-paraNet(VGG-19) | 0.317 | 0.372 | 0.505 | 0.329 | 0.230 | 0.168 | |

| CX-CHR | w/o Sentence relation | 2.996 | 0.655 | 0.667 | 0.597 | 0.548 | 0.510 |

| Normal templates | 2.855 | 0.650 | 0.700 | 0.611 | 0.550 | 0.505 | |

| w/o Abnormality relation | 3.064 | 0.657 | 0.711 | 0.636 | 0.586 | 0.547 | |

| Relation-paraNet(DenseNet-121) | 3.249 | 0.675 | 0.711 | 0.637 | 0.586 | 0.548 |

IV-A Implementation Details

Training. For two medical datasets, we set the same hyper-parameters, due to our robust captioning network. Our model accepts a image as input and yields feature maps from the last convolution layer. is and when using VGG-19 [5] or DenseNet-121 [4], respectively. We use 512 as the dimension of all hidden states, topic vectors, word embedding and so on. To minimize in Equation 10, we train on a single GPU with an initial learning rate of and a batch size of 16 for 30 epochs. We employ ADAM [55] optimizer and decrease the learning rate by the factor of 0.8 every three epochs, following [53].

Inference. We execute our model to select a template or write sentence through Word Decoder at each time step until a stop control appears or the number of generated sentences reaches the maximum value . Here, we terminate the process as long as the score of stop control exceeds a threshold of 0.5. In detail, predicting the template index means we should believe the written sentence, otherwise, copy the corresponding template to the report directly. In the same way, we stop Word Decoder if we predict a END token or obtain words. We set and as 10 and 15 for IU X-Ray, 24 and 18 for CX-CHR dataset, respectively.

IV-B Experimental Results

Evaluation metrics. We conduct experiments to evaluate our method from three aspects: 1) Area Under the Curve (AUC) measures the performance of abnormality classification; 2) Automatic evaluation metrics consist of BLEU [56], ROUGE [57] and CIDEr [58]. BLEU is defined as the geometric mean of n-gram precision scores multiplied by a brevity penalty for short sentences. ROUGE-L basically measures the longest common subsequences between a pair of sentences and CIDEr measures the consensus between candidate image description and the reference sentences; 3) Human evaluation is performed by the radiologists with professional experiments. We invite 5 doctors to select the better generated reports between the baseline method CoAtt [2] and ours’ proposed model. The selection criteria consists of abnormal findings, language fluency, and content coverage.

Baselines. We compare our Relation-paraNet with 4 state-of-the-art image captioning methods. They are CNN-RNN [18], LRCN [51], AdaAtt [52], and Att2in [53] respectively. Besides the above methods, we also compare with state-of-the-art methods of medical report composition, including AoA [40], Transformer [35], BUTD [10], CoAtt [2], HRGR-Agent [1], and KERP [29]. For the fair comparison, we employ VGG-19 [5] and DenseNet121 [4] to extract visual features on IU X-ray and CX-CHR dataset respectively, in step with baselines. Further, we conduct additional experiments on both datasets to illustrate how each component contributes to the final results.

Abnormality classification. The comparison of multi-label abnormality classification in terms of AUC metric is shown in Table I. Superior to the baselines that simply apply VGG-19[5] or DenseNet-121[4] as backbone network with binary cross entropy loss, our method adds relationship constraint loss to exploit the relationships among abnormal medical terms, which outperforms baselines by 0.9% of AUC on IU X-Ray dataset and 1.9% on CX-CHR dataset. This demonstrates that our method is able to obtain useful visual features for correct abnormality classification via relationship constraint of medical terms. Its prominent capacity of detecting abnormalities is helpful for radiologists.

Automatic evaluation. The automatic evaluation results under several metrics are shown in the Table II. Obviously, our Relation-paraNet improves all metrics by large margins, demonstrating its effectiveness and extensiveness. CIDEr score represents inverse document frequency (IDF) of each vocabulary in the whole evaluated dataset, while BLEU-n matches n-grams within the evaluated sentences and ground truth sentences for each testing sample. In fact, improving the performance of BLEU-n with shorter n-gram (e.g., BLEU-1) is easier through generating common and seemingly correct words. On the contrary, CIDEr metric pays more attention to the critical but rare words under the consideration of IDF. Thus the CIDEr metric is more important on medical report composition, especially for the small dataset with unbalanced data.

On IU X-Ray dataset (i.e., a relatively small dataset including unbalanced data), the Relation-paraNet outperforms all baselines models (based on VGG-19 [5]) on BLEU-1,2,3,4 scores, showing that the Relational-Topic Encoder and the Adaptive Generator contribute to generating more professional reports. HRGR-Agent [1] combines both the retrieval method and the generative model with reinforcement learning. Thus, it is reasonable that HRGR-Agent achieves the higher CIDEr score. However, our Ralation-paraNet still achieves the best CIDEr score, outperforming HRGR-Agent by 2.2% due to the semantic consistency of abnormal medical terms and final reports encourage to generate rare abnormal descriptions. In addition, when employing the DenseNet-121 [4] backbone as the recent work KERP [29], we can achieve much higher performance on all automatic metrics.

On CX-CHR dataset, Relation-paraNet achieves state-of-the-art performance. Our model improves CIDEr score by 0.399, ROUGE-L score by 5.7%, BLEU-1 score by 3.8%, BLEU-2 score by 4.9%, BLEU-3 score by 5.4% and BLEU-4 score by 7.5%, compared to KERP [29]. Incorporating the semantic consistency of medical terms and considering abnormal sentences as templates, our Relation-paraNet encourages to produce reasonable and meaningful reports and achieves the best performance on automatic evaluation metrics.

To verify the effectiveness of our Relation-paraNet for medical report generation, we conduct extensive comparisons against several state-of-the-art visual captioning methods, such as AoA [40], Transformer [35] and BUTD [10]. As we discussed in Sec. II, the above attention-based methods are inapplicable to medical reports, which consists of multiple sentences or paragraphs. And the results show that our Relation-paraNet outperforms these methods on all automatic evaluation metrics, demonstrating the superiority in retrieving abnormal sentences and generation normal ones.

Human evaluation. Since Hybrid-agent [1] and KERP [29] have not released codes and models, our method is also compared with CoAtt [2] in human evaluation, similar to them. We randomly select 100 generated medical reports of baseline model CoAtt [2] and our methods in the test set. We invite five doctors to choose which one is more similar to ground truth under the consideration of abnormal findings, language fluency, and content coverage. We calculate the average preference percentages by excluding default choices (A default choice is provided in case of no or both reports are preferred), which is shown in Table IV. Obviously, our Relation-paraNet outperforms baseline a lot, indicating that it is capable of generating more accurate and reasonable reports.

Conclusion. Compared with Hybrid-agent [1], the proposed Relation-paraNet firstly preserves the superiority of bottom-up and top-down attention [10] for better semantic alignment between visual features and report descriptions. In addition, it is easier to generate abnormal descriptions by establishing the abnormal template datasets. Based on multi-layer and multi-head attention(Transformer) [35], KERP [29] built abnormality graph to learn relationships between abnormalities implicitly, the attention weights in which are lack of consistency and explanation [59]. In contrast, the Relation-paraNet contains a relationship constraint loss function to learn label co-occurrence dependencies directly. Since the popular visual captioning methods [40, 35, 10] is limited for paragraph generation, our Relation-paraNet derived the hierarchical structure from [27] and achieved excellent performance in terms of paragraph generation.

IV-C Ablation Studies

As shown in Sec. III-D, every term is necessary for the report generation task and any coefficient set to zero will lead to significant performance degradation. In this case, we paid more attention to illustrate the effectiveness of each module in our Relation-paraNet rather than adjusting these coefficients. As manifested in Table III, all those modules contribute to better performance, compared with the final Relation-paraNet scores. Additional visualization results of ablation experiments are provided in Sec. IV-D.

Sentence relation. Our model considers the sequence relationship between adjacent sentences, and encodes the last generated sentence information to guide in the next time step. This suggests once a sentence is generated correctly, it is useful to predict the next one. It encourages the Relation-paraNet to improve performance on all evaluation metrics, especially on BLEU-3 and BLEU-4.

Normal templates vs. Abnormal templates. The previous method HRGR-Agent [1] first extracts normal sentences as templates and writes a new sentence for abnormal findings. In fact, it is easy for current generative model [2] to fit common normal sentences. However, the rare abnormal sentences are more difficult to generate due to the unbalanced data. Instead, our Relation-paraNet regards the rare abnormal sentences as templates. The results show that this change can make the most improvement to our approach on the IU X-Ray dataset.

Abnormality relation. Our Relation-paraNet enforces the semantic consistency of medical terms to be incorporated into the final reports, as discussed in Sec. III-A. Under the relationship constraint, more reasonable visual features are provided for the downstream modules, especially for the attention module. As shown in Figure 4, templates retrieved by our Relation-paraNet are closely related to the abnormalities. Moreover, we can observe our model improves BLEU-n metrics by a larger margin on the IU X-Ray dataset but improves CIDEr and ROUGH-L metrics on the CX-CHR dataset. This is due to the small-scale IU X-Ray dataset, the abnormality is necessary for the report composition. Therefore, the appropriate templates related to the abnormalities can promote BLEU-n metrics. On the other hand, without abnormality relation, our model can learn abnormal features along with downstream tasks adaptively due to the large-scale CX-CHR dataset. However, uncorrected abnormalities may cause unrelated templates. Therefore, the CIDEr and ROUGH-L metrics will be lower.

IV-D Qualitative Analysis

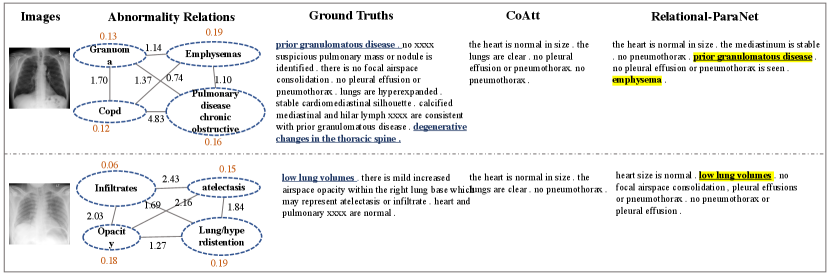

Figure 4 provides the visualized results generated by the baseline method and our Relation-paraNet on both IU X-Ray and CX-CHR dataset. It shows that CoAtt [2] generates the most common sentences due to the dataset bias but gains not bad in terms of evaluation metrics. In the human evaluation, however, the performance of CoAtt is far worse than our Relation-paraNet (see Table II), which is consistent with the visualization results. As shown in the first example, our system can produce a sentence “there are streaky bibasilar opacities” corresponding to the ground truth report. Specifically, our Relation-paraNet detects abnormality “opacity” first. Then the relation between “opacity” and “there are streaky bibasilar opacities” guides the system to choose this template. Moreover, the abnormality “lung/hypoinflated” is also related to “opacity”, so our report contains “the lungs are hypoinflated”. On CX-CHR dataset, our method also captures the critical topic accurately and generates more reasonable reports. Overall, unifying relational-topic driven retrieval and generation, our Relation-paraNet is able to write abnormal templates precisely and provide additional symptomatic references.

Additional visualization of results are shown in Figure 5 - 9. In the abnormality relation graph, orange digits represent the classification scores. Black digits on the edges represent the relevance among abnormal medical terms. The underlined text expresses alignment between the generated text and ground truth reports. Bold text indicates the correspondence of the retrieved text. And the highlighted section illustrates inferred by abnormality relation and abnormal-template relation.

V Conclusion

In this paper, we investigate incorporating hybrid knowledge co-reasoning upon the deep convolutional network to resolve the challenging medical report composition task. To enforce the semantic consistency of medical terms to be incorporated into the final reports and encourage the sentence generation for rare abnormal descriptions, we propose a novel Relation-paraNet that unifies template retrieval and sentence writing to handle both common and rare cases. Experiments on two medical report benchmarks demonstrate the superiority of our Relation-paraNet, which significantly outperforms the previous results in terms of all evaluation metrics as well as human evaluation.

In future work, we will explore the domain-specific learning difficulty issue resulting from unbalanced and insufficient datasets, build a more robust and reasonable relationship between abnormalities and corresponding abnormal templates and draw support from external medical knowledge and transfer the abstracted knowledge, to facilitate the vision-based paragraph generation.

References

- [1] C. Y. Li, X. Liang, Z. Hu, and E. P. Xing, “Hybrid retrieval-generation reinforced agent for medical image report generation,” in NeurIPS, 2018, pp. 1530–1540.

- [2] B. Jing, P. Xie, and E. Xing, “On the automatic generation of medical imaging reports,” in Proc. ACL, 2018, pp. 2577–2586.

- [3] D. Dai, J. Tang, Z. Yu, H.-S. Wong, J. You, W. Cao, Y. Hu, and C. L. P. Chen, “An inception convolutional autoencoder model for chinese healthcare question clustering,” IEEE Trans. Cybe., pp. 1–13, 2019.

- [4] G. Huang, Z. Liu, L. Van Der Maaten, and K. Q. Weinberger, “Densely connected convolutional networks,” in Proc. CVPR, 2017, pp. 4700–4708.

- [5] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” in Proc. ICLR, 2015.

- [6] X. Wang, H. Chen, C. Gan, H. Lin, Q. Dou, E. Tsougenis, Q. Huang, M. Cai, and P.-A. Heng, “Weakly supervised deep learning for whole slide lung cancer image analysis,” IEEE Trans. Cybe., vol. 50, no. 9, pp. 3950–3962, 2020.

- [7] H. Chen, L. Wu, Q. Dou, J. Qin, S. Li, J.-Z. Cheng, D. Ni, and P.-A. Heng, “Ultrasound standard plane detection using a composite neural network framework,” IEEE Trans. Cybe., vol. 47, no. 6, pp. 1576–1586, 2017.

- [8] J. Gu, Z. Lu, H. Li, and V. Li, “Incorporating copying mechanism in sequence-to-sequence learning,” in Proc. ACL, 2016, pp. 1631–1640.

- [9] L. Wang, J. Yao, Y. Tao, L. Zhong, W. Liu, and Q. Du, “A reinforced topic-aware convolutional sequence-to-sequence model for abstractive text summarization,” in Proc. IJCAI, 2018, pp. 4453–4460.

- [10] P. Anderson, X. He, C. Buehler, D. Teney, M. Johnson, S. Gould, and L. Zhang, “Bottom-up and top-down attention for image captioning and visual question answering,” in Proc. CVPR, 2018, pp. 6077–6086.

- [11] K.-M. Kim, S.-H. Choi, J.-H. Kim, and B.-T. Zhang, “Multimodal dual attention memory for video story question answering,” in Proc. ECCV, 2018, pp. 673–688.

- [12] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “Imagenet classification with deep convolutional neural networks,” in NeurIPS, 2012, pp. 1097–1105.

- [13] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proc. CVPR, 2016, pp. 770–778.

- [14] S. Hochreiter and J. Schmidhuber, “Long short-term memory,” Neural computation, vol. 9, no. 8, pp. 1735–1780, 1997.

- [15] K. Cho, B. Van Merriënboer, C. Gulcehre, D. Bahdanau, F. Bougares, H. Schwenk, and Y. Bengio, “Learning phrase representations using rnn encoder-decoder for statistical machine translation,” in Proc. EMNLP, 2014, pp. 1724–1734.

- [16] A. Karpathy and L. Fei-Fei, “Deep visual-semantic alignments for generating image descriptions,” in Proc. CVPR, 2015, pp. 3128–3137.

- [17] R. Girshick, J. Donahue, T. Darrell, and J. Malik, “Rich feature hierarchies for accurate object detection and semantic segmentation,” in Proc. CVPR, 2014, pp. 580–587.

- [18] O. Vinyals, A. Toshev, S. Bengio, and D. Erhan, “Show and tell: A neural image caption generator,” in Proc. CVPR, 2015, pp. 3156–3164.

- [19] K. Xu, J. Ba, R. Kiros, K. Cho, A. Courville, R. Salakhudinov, R. Zemel, and Y. Bengio, “Show, attend and tell: Neural image caption generation with visual attention,” in Proc. ICML, 2015, pp. 2048–2057.

- [20] N. Xu, A.-A. Liu, Y. Wong, Y. Zhang, W. Nie, Y. Su, and M. Kankanhalli, “Dual-stream recurrent neural network for video captioning,” IEEE Trans. Circuits Syst. for Video Techn., vol. 29, no. 8, pp. 2482–2493, 2019.

- [21] X. Li and S. Jiang, “Know more say less: Image captioning based on scene graphs,” IEEE Trans. Multimedia, vol. 21, no. 8, pp. 2117–2130, 2019.

- [22] L. Gao, Z. Guo, H. Zhang, X. Xu, and H. T. Shen, “Video captioning with attention-based lstm and semantic consistency,” IEEE Trans. Multimedia, vol. 19, no. 9, pp. 2045–2055, 2017.

- [23] Z. Zhang, Q. Wu, Y. Wang, and F. Chen, “High-quality image captioning with fine-grained and semantic-guided visual attention,” IEEE Trans. Multimedia, vol. 21, no. 7, pp. 1681–1693, 2019.

- [24] X. Xiao, L. Wang, K. Ding, S. Xiang, and C. Pan, “Deep hierarchical encoder-decoder network for image captioning,” IEEE Trans. Multimedia, vol. 21, no. 11, pp. 2942–2956, 2019.

- [25] S. Chen, Q. Jin, J. Chen, and A. G. Hauptmann, “Generating video descriptions with latent topic guidance,” IEEE Trans. Multimedia, vol. 21, no. 9, pp. 2407–2418, 2019.

- [26] T. Yao, Y. Pan, Y. Li, and T. Mei, “Hierarchy parsing for image captioning,” in Proc. ICCV, 2019, pp. 2621–2629.

- [27] J. Krause, J. Johnson, R. Krishna, and L. Fei-Fei, “A hierarchical approach for generating descriptive image paragraphs,” in Proc. CVPR, 2017, pp. 317–325.

- [28] X. Huang, F. Yan, W. Xu, and M. Li, “Multi-attention and incorporating background information model for chest x-ray image report generation,” IEEE Access, vol. 7, pp. 154 808–154 817, 2019.

- [29] C. Y. Li, X. Liang, Z. Hu, and E. P. Xing, “Knowledge-driven encode, retrieve, paraphrase for medical image report generation,” in Proc. AAAI, vol. 33, 2019, pp. 6666–6673.

- [30] P. Veličković, G. Cucurull, A. Casanova, A. Romero, P. Liò, and Y. Bengio, “Graph attention networks,” in Proc. ICLR, 2018.

- [31] Z. Cao, W. Li, S. Li, and F. Wei, “Retrieve, rerank and rewrite: Soft template based neural summarization,” in Proc. ACL, 2018, pp. 152–161.

- [32] Y.-C. Chen and M. Bansal, “Fast abstractive summarization with reinforce-selected sentence rewriting,” in Proc. ACL, 2018, pp. 675–686.

- [33] L. Nie, Y.-L. Zhao, M. Akbari, J. Shen, and T.-S. Chua, “Bridging the vocabulary gap between health seekers and healthcare knowledge,” IEEE Trans. Knowl. Data Engineering, vol. 27, no. 2, pp. 396–409, 2014.

- [34] D. Bahdanau, K. Cho, and Y. Bengio, “Neural machine translation by jointly learning to align and translate,” in Proc. ICLR, 2015.

- [35] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” in NeurIPS, 2017, pp. 5998–6008.

- [36] C. Hori, T. Hori, T.-Y. Lee, Z. Zhang, B. Harsham, J. R. Hershey, T. K. Marks, and K. Sumi, “Attention-based multimodal fusion for video description,” in Proc. ICCV, 2017, pp. 4193–4202.

- [37] J. Jin, K. Fu, R. Cui, F. Sha, and C. Zhang, “Aligning where to see and what to tell: image caption with region-based attention and scene factorization,” arXiv preprint arXiv:1506.06272, 2015.

- [38] J. Xu, T. Yao, Y. Zhang, and T. Mei, “Learning multimodal attention lstm networks for video captioning,” in Proc. ACM MM, 2017, pp. 537–545.

- [39] Y. Pan, T. Yao, Y. Li, and T. Mei, “X-linear attention networks for image captioning,” in Proc. CVPR, 2020, pp. 10 971–10 980.

- [40] L. Huang, W. Wang, J. Chen, and X.-Y. Wei, “Attention on attention for image captioning,” in Proc. ICCV, 2019, pp. 4634–4643.

- [41] X. Xue, W. Zhang, J. Zhang, B. Wu, J. Fan, and Y. Lu, “Correlative multi-label multi-instance image annotation,” in Proc. ICCV, 2011, pp. 651–658.

- [42] J. Wang, Y. Yang, J. Mao, Z. Huang, C. Huang, and W. Xu, “Cnn-rnn: A unified framework for multi-label image classification,” in Proc. CVPR, 2016, pp. 2285–2294.

- [43] M. Guillaumin, T. Mensink, J. Verbeek, and C. Schmid, “Tagprop: Discriminative metric learning in nearest neighbor models for image auto-annotation,” in Proc. ICCV, 2009, pp. 309–316.

- [44] Y. Gong, Y. Jia, T. Leung, A. Toshev, and S. Ioffe, “Deep convolutional ranking for multilabel image annotation,” arXiv preprint arXiv:1312.4894, 2013.

- [45] Y. Wei, W. Xia, J. Huang, B. Ni, J. Dong, Y. Zhao, and S. Yan, “Cnn: Single-label to multi-label,” arXiv preprint arXiv:1406.5726, 2014.

- [46] Z. Yan, W. Liu, S. Wen, and Y. Yang, “Multi-label image classification by feature attention network,” IEEE Access, vol. 7, pp. 98 005–98 013, 2019.

- [47] H. Chen, S. Miao, D. Xu, G. D. Hager, and A. P. Harrison, “Deep hierarchical multi-label classification of chest x-ray images,” in Porc. MLR, 2019, pp. 109–120.

- [48] Y. Wang, D. He, F. Li, X. Long, Z. Zhou, J. Ma, and S. Wen, “Multi-label classification with label graph superimposing,” in Proc. AAAI, 2020, pp. 12 265–12 272.

- [49] Y. Fang, K. Kuan, J. Lin, C. Tan, and V. Chandrasekhar, “Object detection meets knowledge graphs,” in Proc. IJCAI, 2017, pp. 1661–1667.

- [50] D. Britz, A. Goldie, M.-T. Luong, and Q. Le, “Massive exploration of neural machine translation architectures,” in Proc. EMNLP, 2017, pp. 1442–1451.

- [51] J. Donahue, L. Anne Hendricks, S. Guadarrama, M. Rohrbach, S. Venugopalan, K. Saenko, and T. Darrell, “Long-term recurrent convolutional networks for visual recognition and description,” in Proc. CVPR, 2015, pp. 2625–2634.

- [52] J. Lu, C. Xiong, D. Parikh, and R. Socher, “Knowing when to look: Adaptive attention via a visual sentinel for image captioning,” in Proc. CVPR, 2017, pp. 375–383.

- [53] S. J. Rennie, E. Marcheret, Y. Mroueh, J. Ross, and V. Goel, “Self-critical sequence training for image captioning,” in Proc. CVPR, 2017, pp. 7008–7024.

- [54] D. Demner-Fushman, M. D. Kohli, M. B. Rosenman, S. E. Shooshan, L. Rodriguez, S. Antani, G. R. Thoma, and C. J. McDonald, “Preparing a collection of radiology examinations for distribution and retrieval,” Journal of the American Medical Informatics Association, vol. 23, no. 2, pp. 304–310, 2016.

- [55] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” in Proc. ICLR, 2014.

- [56] K. Papineni, S. Roukos, T. Ward, and W.-J. Zhu, “Bleu: a method for automatic evaluation of machine translation,” in Proc. ACL, 2002, pp. 311–318.

- [57] C.-Y. Lin, “Rouge: A package for automatic evaluation of summaries,” in Text Summarization Branches Out, 2004, pp. 74–81.

- [58] R. Vedantam, C. Lawrence Zitnick, and D. Parikh, “Cider: Consensus-based image description evaluation,” in Proc. CVPR, 2015, pp. 4566–4575.

- [59] S. Jain and B. C. Wallace, “Attention is not explanation,” in Proc. EMNLP-IJCNLP, 2019, pp. 11–20.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/d00e848a-2b69-4884-bdca-dad14693aee5/FuyuWang.jpg) |

Fuyu Wang received his B.E. degree in Software Engineering from the School of Data and Computer Science, Sun Yat-sen University, Guangzhou, China, in 2018. He is currently pursuing his Ph.D. Degree in Computer Science with the School of Data and Computer Science. His current research interests include cross-modal learning and reasoning. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/d00e848a-2b69-4884-bdca-dad14693aee5/xiaodan.png) |

Xiaodan Liang Xiaodan Liang is currently an Associate Professor at Sun Yat-sen University. She was a postdoc researcher in the machine learning department at Carnegie Mellon University, working with Prof. Eric Xing, from 2016 to 2018. She received her PhD degree from Sun Yat-sen University in 2016, advised by Liang Lin. She has published several cutting-edge projects on human-related analysis, including human parsing, pedestrian detection and instance segmentation, 2D/3D human pose estimation and activity recognition. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/d00e848a-2b69-4884-bdca-dad14693aee5/LinXu.jpg) |

Lin Xu received his B.E. degree in Software Engineering from the School of Data and Computer Science, Sun Yat-sen University, Guangzhou, China, in 2018. He is currently pursuing his Ph.D. Degree in Computer Science with the School of Data and Computer Science. His current research interests include cross-modal learning and reasoning. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/d00e848a-2b69-4884-bdca-dad14693aee5/LiangLin.jpg) |

Liang Lin is a Full Professor of computer science at Sun Yat-sen University. He has authored or co-authored more than 200 papers in top-tier academic journals and conferences with more than 12,000 citations. He is an associate editor of IEEE Trans. Human-Machine Systems and IET Computer Vision. He served as Area Chairs for numerous conferences such as CVPR, ICCV, and IJCAI. He is the recipient of numerous awards and honors including Wu Wen-Jun Artificial Intelligence Award, CISG Science and Technology Award, ICCV Best Paper Nomination in 2019, Annual Best Paper Award by Pattern Recognition (Elsevier) in 2018, Best Paper Dimond Award in IEEE ICME 2017, Google Faculty Award in 2012, and Hong Kong Scholars Award in 2014. He is a Fellow of IET. |