Unified Single-Stage Transformer Network for Efficient RGB-T Tracking

Abstract

Most existing RGB-T tracking networks extract modality features in a separate manner, which lacks interaction and mutual guidance between modalities. This limits the network’s ability to adapt to the diverse dual-modality appearances of targets and the dynamic relationships between the modalities. Additionally, the three-stage fusion tracking paradigm followed by these networks significantly restricts the tracking speed. To overcome these problems, we propose a unified single-stage Transformer RGB-T tracking network, namely USTrack, which unifies the above three stages into a single ViT (Vision Transformer) backbone with a dual embedding layer through self-attention mechanism. With this structure, the network can extract fusion features of the template and search region under the mutual interaction of modalities. Simultaneously, relation modeling is performed between these features, efficiently obtaining the search region fusion features with better target-background discriminability for prediction. Furthermore, we introduce a novel feature selection mechanism based on modality reliability to mitigate the influence of invalid modalities for prediction, further improving the tracking performance. Extensive experiments on three popular RGB-T tracking benchmarks demonstrate that our method achieves new state-of-the-art performance while maintaining the fastest inference speed 84.2FPS. In particular, MPR/MSR on the short-term and long-term subsets of VTUAV dataset increased by 11.1/11.7 and 11.3/9.7.

1 Introduction

Visible-Thermal (RGB-T) tracking greatly expands the application scenarios of single object tracking (SOT) by using both RGB and thermal information, improving the tracking performance of SOT under challenging conditions such as illumination variation, occlusion, and extreme weather. Therefore, RGB-T tracking has become a research hotspot in recent years. Most RGB-T tracking network can be divided into three functional parts: feature extraction, feature fusion, and relation modeling between the fusion features of the template and the search region. Thanks to the rapid development of RGB tracking, existing RGB-T tracking networks directly adopt RGB tracking networks as the basic network architecture. They inherit the original manners of feature extraction and relation modeling, and then focus on the design of fusion modules. Their overall framework can be shown in Fig. 1(a).

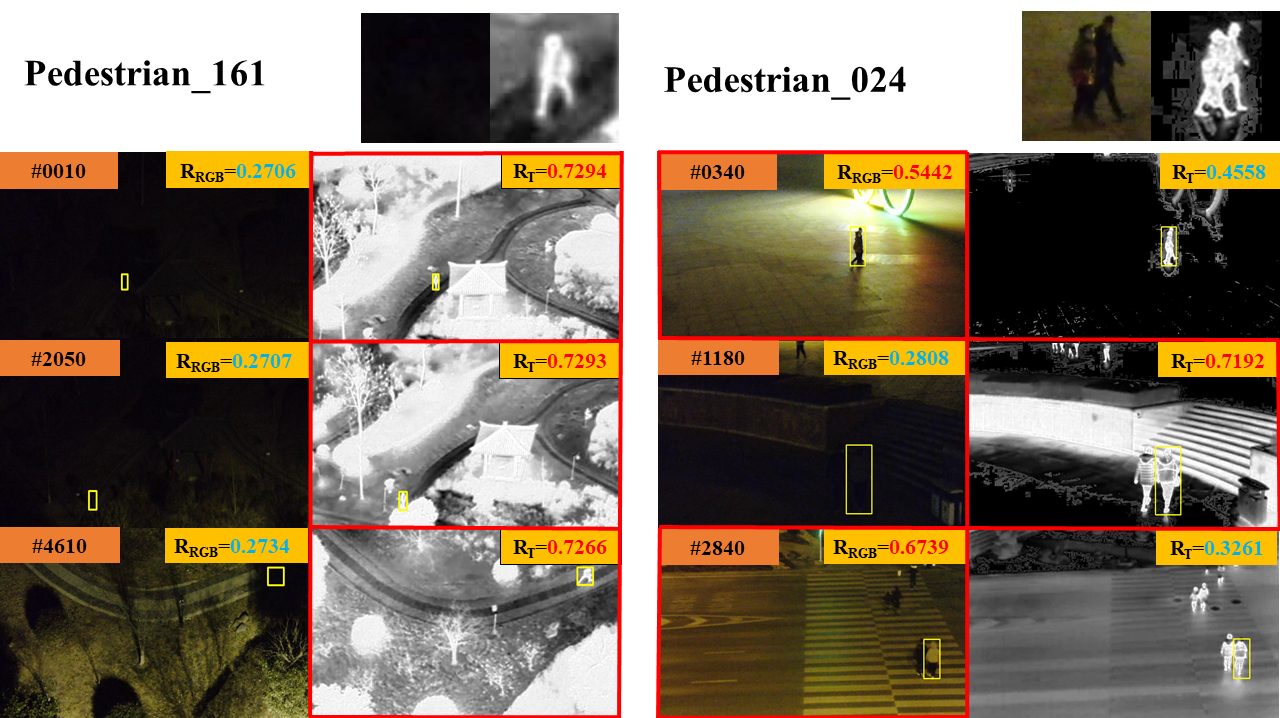

Most existing RGB-T tracking methods follow a three-stage fusion tracking paradigm. they employ two subnetworks to extract RGB and thermal features separately from the template and search region. These features are then fused using a feature fusion module to obtain the template fusion features and the search region fusion features. Subsequently, a relation modeling operation between the fusion features of the template and search region is performed. After relation modeling, the processed search region fusion features are then utilized for prediction. However, the separate subnetworks lead to the lack of interaction between the two modalities during the feature extraction stage. As a consequence, the network can only extract regular features from each modality, rather than the dynamic features with an effective adjustment based on the state of modalities. However, as shown in Fig. 2, such pattern is not fit to RGB-T tracking especially in complex environments, because different targets have diverse dual-modality appearances, and the appearances of both modalities can change continuously with the tracking environment. Temporary changing or missing appearances in the corresponding modality frequently happened due to the factors like occlusion, illumination variation, or thermal, which leads to the regions covered by the appearances of both modalities are not always consistent. In addition, three-stage fusion tracking paradigm is really difficult to balance the performance and speed.

We propose a unified single-stage Transformer RGB-T tracking network USTrack to solve the above problems. As shown in Fig. 1(b), the core of USTrack is to unify feature extraction, feature fusion, and relation modeling into a single ViT (Dosovitskiy et al. 2020) backbone through the self-attention mechanism for simultaneous execution, efficiently obtaining search region fusion features used for prediction. Specifically, we first map the image patches from two modalities to appropriate latent spaces through a dual embedding layer to align the patterns and mitigate the impact of intrinsic heterogeneity for feature fusion. Within the attention layer of the ViT backbone, we perform the same operation on the template and search region, respectively. First, we concatenate the tokens from both modalities and then apply a self-attention mechanism to the concatenated features, directly extracting the template fusion features and search region fusion features. This process unifies the extraction and fusion of modality features, facilitating interaction between modalities during the feature extraction stage. The network can adaptively learn the semantic similarity between two modalities features based on attention weights, and use this similarity to model modality-sharing information. In the feature extraction stage, one modality can selectively acquire modality-specific information from another modality based on modality-sharing information, thereby guiding and adjusting the features to be extracted from itself. This enables the network to better adapt to diverse dual-modality appearances of targets and the dynamic relationships between the modalities.

For the method of relation modeling between the template fusion features and the search region fusion features, inspired by the RGB tracking methods OSTrack (Ye et al. 2022) and SimTrack (Chen et al. 2022), we adopt performing the self-attention on the concatenated template and search region fusion features as our relation modeling manner. so that the network can enhance the target-background discriminability of the extracted search region fusion features under the guidance of two templates. To further improve the inference speed of the network without adding additional attention layers, we further unify the self-attention used for extracting fusion features with the self-attention used for relation modeling seamlessly. Through parallel execution, we significantly accelerate the inference speed.

In this way, we can obtain two search region fusion features based on different modalities for predicting the results. Unlike other transformer-based RGB-T tracking networks (Xiao et al. 2022; Hou, Ren, and Wu 2022; Hui et al. 2023) that directly concatenate two fusion features, we propose a feature selection mechanism based on modality reliability to reduce the impact of noise information of invalid modality on prediction. This mechanism can adaptively select the fusion feature more suitable for the tracking environment for prediction, further improving the tracking performance. To the best of our knowledge, USTrack is currently the first network to efficiently achieve RGB-T tracking without the use of any additional fusion modules. Our contributions are summarized as follows:

-

•

We propose a single-stage Transformer RGB-T tracking network USTrack which can extract fusion features of templates and search regions under the interaction of modalities, and simultaneously perform the relation modeling to further improve the tracking speed.

-

•

We propose a feature selection mechanism based on modality reliability, which can select appropriate fusion features from two fusion features based on different modalities according to the specific tracking environment to predict the results.

- •

2 Related Work

RGB-T Tracking. In this part, we only summarize the Transformer-based RGB-T tracking methods. With the introduction of Transformer into RGB-T tracking, attention mechanism was first attempted for feature fusion. DRGCNet (Mei et al. 2023) and MIRNet (Hou, Ren, and Wu 2022) use cross-attention to enhance discriminative features from one modality to another, and assign adaptive weights to features of two modalities through gating mechanism to filter redundant and noise information. APFNet (Xiao et al. 2022) proposes an attribute-based progressive fusion network, which enhances the discriminative information specific to challenging attributes through cross-attention. However, the aforementioned Transformer-based RGB-T tracking methods are designed within a detection-based tracking framework(Nam and Han 2015). On one hand, during the feature extraction stage, the modality features lack interaction due to the limited global context modeling capability of convolutional neural networks. On the other hand, although RGB-T tracking networks (Hou, Ren, and Wu 2022) based on RT-MDNet (Jung et al. 2018) have barely achieved real-time inference speed, they still follow a three-stage tracking paradigm, which first extracts modality features separately, then fuses features through various attention mechanism, and finally perform the relation modeling operation between the template and search region through online training and continuous fine-tuning, resulting in significant speed bottlenecks for these RGB-T tracking networks.

The latest works TBSI (Hui et al. 2023) and ViPT (Zhu et al. 2023) adopt the powerful and efficient RGB tracking network OSTrack (Ye et al. 2022) as their base network architecture. However, they still design the fusion module as a separate component, which is inserted between two Transformer encoders to obtain the fused features of the template and search regions. The fused features are then fed into the encoders for joint feature extraction and relation modeling through self-attention mechanism. We summarize these latest RGB-T tracking methods as a two-stage RGB-T tracking network. It is worth noting that, unlike ViPT (Zhu et al. 2023) that fixes the pre-training parameters of the backbone and only designs a simple fusion module to obtain the fusion features, TBSI (Hui et al. 2023) achieves feature fusion by inserting a complex cross-attention fusion module between Transformer encoders. It uses stacked extraction-fusion modules as the backbone of the network. This approach alleviates the lack of interaction between modalities during the feature extraction stage and significantly improves the performance. However, due to the addition of the extra complex cross-attention fusion module, it does not fully leverage the inference efficiency of OSTrack (Ye et al. 2022), resulting in barely achieving real-time performance. In order to achieve more efficient and concise interaction between modalities during the feature extraction stage, We attempt to unify feature extraction and feature fusion through the self-attention mechanism to directly extract fusion features of the template and search region, instead of designing complex additional feature fusion modules.

RGB Tracking. From the perspective of relation modeling methods, we provide a brief overview of the development of RGB tracking network frameworks which were adopted by existing RGB-T tracking methods as basic architecture. According to different tracking mechanism, early RGB tracking methods can be divided into two frameworks. One is detection-based tracking framework (Nam and Han 2015; Jung et al. 2018), which models the relation between templates and search regions through online training and continuous fine-tuning, resulting in significant speed bottlenecks. The other is two-stream two-stage tracking framework, which performs the relation modeling through cross-correlation(Bertinetto et al. 2016), discriminative correlation(Zhang et al. 2019), or complex cross-attention(Chen et al. 2021). Methods based on this framework have fast inference speed. They extract the features of the template and the search region respectively, and then perform the relation modeling between them. However, two-stage tracking paradigm will limit the target-background discriminability of the search region features. RGB-T tracking networks using these tracking frameworks as the basic network architecture also face the same problems.

To overcome the problems faced by early tracking frameworks, SimTrack (Chen et al. 2022) and OSTrack (Ye et al. 2022) perform relation modeling by directly applying self-attention on the concatenated templates and search region features, thereby enhancing the target-background discriminability of search region features under the guidance of templates. Inspired by them, we attempt to directly perform self-attention on the concatenated template and search region fusion features as our relation modeling manner, which allows the network to extract the search region fusion features under the guidance of the two templates, obtaining the search region fusion features with better target-background discriminability. With the scalability of self-attention mechanisms, we further unify the self-attention used for fusion feature extraction with the self-attention used for relation modeling for parallel execution, thereby significantly improving the inference speed of the RGB-T tracking network.

3 Unified Single-Stage RGB-T Tracking

Overview. As shown in Fig. 3, the overall architecture of USTrack consists of three components: a dual embedding layer, a single ViT backbone and the dual prediction heads with a feature selection mechanism based on modality reliability. Self-attention is based on similarity to obtain global information, the inherent heterogeneity between modalities may limit the network’s ability to model modality-sharing information through attention weights, thereby affecting the subsequent fusion process. Therefore, we use two learnable embedding layers to map inputs belonging to different modalities into a latent space that is conducive to fusion. We choose ViT as our backbone network, utilizing its self-attention layers to simultaneously perform feature extraction, feature fusion, and relation modeling between fusion features from the template and the search region, obtaining search region fusion features that contain relation information for prediction. Considering that a single modality may not be suitable for all tracking scenarios, such as darkness, occlusion and thermal crossover, where the corresponding modality has already lost all information about the target’s appearance and generates a significant amount of noise information. Feature selection mechanism based on modality reliability helps the network select search region fusion features generated by the modality that is more suitable for the current tracking scenario, reducing the influence of noise caused by ineffective modalities on the prediction results.

3.1 Dual Embedding Layer

The input of USTrack is a pair of target template images and a pair of search region images, consisting of a total of four images, namely, the RGB template image , the RGB search region image , the thermal template image and the thermal search region image . They are first split and flattened into sequences of patches and , where is the resolution of each patch, and , are the number of patches of template and search region respectively. Then, two trainable linear projection layers with parameters and are used to project , and , into dimension latent space. The output of this projection are four patch embeddings , and , . Learnable position embeddings and are added to the template patch embeddings , and search region patch embeddings , separately, and Learnable modality embeddings and are added to the RGB patch embeddings , and thermal patch embeddings , separately. The patch embeddings after adding position and modality embeddings are final features called token embeddings. The above operations can be represented as follows:

| (1) |

| (2) |

| (3) |

| (4) |

After passing the dual embedding layer, RGB template token embeddings , thermal template token embeddings , RGB search region token embeddings and thermal search region token embeddings will be input into the backbone for subsequent processing.

3.2 Single ViT Backbone

The self-attention mechanism is the core component of the ViT, and it is also the key to performing joint feature extraction, feature fusion and relation modeling in a single ViT backbone. From the perspective of the self-attention mechanism, we take the RGB search region token embeddings as an example to further analyze the intrinsic reasons why the proposed network is able to realize simultaneous feature extraction, feature fusion and relation modeling.

In the attention layer, the token sequences , , , from dual embedding layers are concatenated as . Then Self-attention operation is performed on as follows:

| (5) |

| (6) |

| (7) |

where is the output of self-attention operation. is the attention weight. , , and are query, key and value matrices separately. The superscripts and denote matrix items belonging to the template and search region. The subscripts and denote matrix items belonging to the RGB modality and thermal modality. The calculation of attention weights in Eq. (6) can be expanded to follows:

| (8) | ||||

where the left part of Eq. (8) represents the calculation and the output of attention weights between the RGB search region tokens and the other inputs. the output of self-attention operation can be further written as follows:

| (9) | |||

where the left part of Eq. (9) is the output corresponding to the RGB search region tokens after the self-attention operation. is responsible for aggregating the RGB search region image feature (RGB modality feature extraction). is responsible for aggregating the thermal modality-specific information based on semantic similarity between two modalities features (feature fusion and modality features interaction). The attention weights can intuitively measure the semantic similarity between modalities. Network can model modality-sharing information based on this similarity. The aggregation of complementary information enables the network to promptly adjust the subsequent extraction of features in RGB search region image. is responsible for aggregating RGB template image feature to further obtain the relation information between the RGB template and the RGB search region (relation modeling based on modality-specific information). is responsible for aggregating thermal template image feature to further obtain the relation information between the thermal template and the RGB search region (relation modeling based on modality-sharing information). The RGB search region fusion features, which contains relation information, can be used for prediction. Therefore, with the global perception ability of the self-attention, we seamlessly unify feature extraction, feature fusion, and relation modeling into a single ViT backbone. The network can directly extract fusion features of the template and search region under the mutual interaction of modalities, and simultaneously performs relation modeling between fusion features of the template and search region. This alleviates the lack of interaction and guidance between modalities during the feature extraction stage, as well as the problem of additional fusion modules significantly affecting the inference speed of the RGB-T tracking network. Additionally, by inheriting the advantages of relation modeling which is performed by the self-attention, the network can extract more target-specific search region fusion features for prediction under the guidance of two templates.

3.3 Feature Selection Mechanism

After passing the ViT backbone, two search region fusion features can be obtained for final prediction: Thermal-assisted RGB fusion features based on the RGB search region image, and RGB-assisted thermal fusion features based on the thermal search region image. Both fusion features contain the fusion information of modalities and the relation information between the template and the search region, which can be directly used for target position prediction. To fully exploit the modality-sharing information and the modality-specific information of the modality that is more suitable for the current scene. As shown in Fig. 3(b), during the training phase, we equip each fusion feature with a prediction module and a reliability evaluation head. We set the same loss for each prediction head and let each reliability evaluation module output a weight as the adaptive reliabilty for the loss of the corresponding prediction head. Then, they are combined into a final total loss function for training. This method allows the modality that is not suitable for the current scene to produce inferior results, and then guides the modality reliability evaluation module to assign smaller weights to its loss by minimizing the overall loss function. Conversely, for fusion features that are more suitable for the current scene, it assigns larger weights. During the testing phase, the network will simultaneously output two results and evaluate the reliability of both modalities. Based on the reliability and , we select the predicted results with higher reliability scores as the final output.

3.4 Dual Prediction Heads and Loss

We adopt the prediction head of OSTrack (Ye et al. 2022) directly as our prediction head. The detailed information and corresponding settings can be found in OSTrack. The loss corresponding to the two prediction heads are set as follows:

| (10) |

| (11) |

where and are the overall loss function for each prediction head, and are the weighted focal loss for classification, and are the loss, and are the generalized IoU loss, and and are the regularization parameters. On the basis, a modality reliability evaluation module is added to each search region fusion features. The evaluation module is a fully convolutional neural network, which consists of several stacked Conv-BN-ReLU layers. Two modality reliability evaluation modules will output the reliability scores corresponding to two search region fusion features , respectively. In order to prevent the model from directly making the weight and zero to minimize the overall loss during the training process, we softmax the reliability scores to obtains the adaptive weight and and overall loss as follows:

| (12) |

| (13) |

where and are used as the adaptive weights of the loss of the two prediction heads, and the two losses are combined together as the overall loss to train the model.

4 Experiment

4.1 Experiment Settings

We compare our method with previous state-of-the-art RGB-T tracking methods on three benchmarks including VTUAV, RGBT234, and GTOT. GTOT and RGBT234 use success rate SR and precision rate PR as evaluation metrics. To mitigate small alignment errors, VTUAV use Maximum Precision Rate MPR and Maximum Success Rate MSR as evaluation metrics. SR measures the ratio of tracked frames, determined by the Interaction-over-Union (IoU) between tracking result and ground truth. With different overlap thresholds, a success plot (SP) can be obtained, and SR is calculated as the area under curve of SP. MSR adopts the maximum overlap in frame level as the final score. PR measures the percentage of frames whose distance between the predicted position and the ground truth is less than a certain threshold . Similar to MSR, MPR adopt the smaller center distance as the final score. is set to 20 in our experiment.

Our model is implemented based on Python 3.8, PyTorch 2.0.0. All experiments are conducted on one NVIDIA RTX3090 GPU. We adopt AdamW as the optimizer with 1e-4 weight decay. The learning rate is set as 4e-5 for the backbone and 4e-4 for other parameters. The search regions are resized to 256×256 and templates are resized to 128×128. Each batch size is set to 24, and each epoch contains 30k image pairs. In order to fairly compare our method with other SOTA methods and demonstrate its effectiveness, we aligned our experimental conditions with other methods. We pretrained the network on RGB tracking datasets such as COCO (Lin et al. 2014), LaSOT (Fan et al. 2018), GOT-10k (Huang, Zhao, and Huang 2018), and TrackingNet (Muller et al. 2018). When testing on the GTOT and RGBT234, we only used LasHeR as the training set. When testing on the short-term and long-term testing sets of VTUAV, we only use the training set of VTUAV for training.

4.2 Comparison with State-of-the-art Methods

We test our network USTrack on three popular RGB-T tracking benchmarks, comparing performance and speed with the SOTA trackers, such as FSRPN, mfDimp, DAFNet , DAPNet, MANet, CAT, CMPP, JMMAC, MANet++, ADRNet, SiamCDA, M5LNet, TFNet, DMCNet, MFGNet, APFNet, HMFT, MIRNet, ECMD, ViPT and TBSI, to validate the effectiveness of our method. The test results on three datasets show that our method has achieved significant improvements in both performance and inference speed.

Evaluation on VTUAV Dataset. VTUAV dataset is the latest and largest RGB-T tracking dataset, which is currently the only dataset that provides a test for long-term tracking performance of RGB-T tracking methods. Long-term sequences can effectively demonstrate the differences in the target appearances of different modalities, and the persistent changing relationships of two appearances state during the tracking process. As shown in Tab. 1 and Fig. 4, despite USTrack being a short-term tracking network with no template updating or local-to-global strategies for long-term tracking, we still conduct tests on the short-term and long-term subsets of VTUAV to verify the tracking performance of USTrack. The results were very satisfactory. Compared to the best performing method HMFT and HMFT-LT, we achieved 11.1/11.7 and 11.3/9.7 increases in MPR/MSR on the VTUAV short-term dataset and VTUAV long-term dataset, respectively. Our speed was also 2.78 times and 10.4 times faster than the SOTA method HMFT and HMFT-LT (Zhang et al. 2022a), significantly surpassing the baseline methods of the benchmark dataset.

It is worth mentioning that, to our knowledge, HMFT-LT is currently the only long-term RGB-T tracking method that utilizes the local-to-global tracking strategy. We have achieved significantly better tracking performance on long-term datasets compared to HMFT-LT at ten times the speed of HMFT-LT. Furthermore, we have achieved the best results on all subsets of challenge attributes in VTUAV, especially those that will lead to continuous changes in the state of modality appearance, such as occlusion, extreme lighting, etc. Detailed test results are provided in the supplementary materials. Above results demonstrate the excellent adaptability of our method to the diverse dual-modality appearances of targets and the dynamic relationship between modalities, fully demonstrating the effectiveness and efficiency of USTrack, which can directly extract dynamic fusion features of templates and search regions under the interaction of modalities, and simultaneously perform the relation modeling to further improve the tracking speed.

| Method | Pub | GTOT | RGBT234 | VTUAV-short | VTUAV-long | Speed | ||||

|---|---|---|---|---|---|---|---|---|---|---|

| PR | SR | PR | SR | MPR | MSR | MPR | MSR | FPS | ||

| FSRPN (Kristan, Matas et al. 2019) | ICCVW’19 | 89.0 | 69.5 | 71.9 | 52.5 | 65.3 | 54.4 | 36.6 | 31.4 | 36.8 |

| mfDimp (Zhang et al. 2019) | ICCVW’19 | 83.6 | 69.7 | 84.6 | 59.1 | 67.3 | 55.4 | 31.5 | 27.2 | 34.6 |

| DAFNet (Gao et al. 2019) | ICCVW’19 | 89.1 | 71.6 | 79.6 | 54.4 | 62.0 | 45.8 | 25.3 | 18.8 | 20.5 |

| DAPNet (Zhu et al. 2019) | ACM MM’19 | 88.2 | 70.7 | 76.6 | 53.7 | - | - | - | - | - |

| MANet (Lu et al. 2020a) | TIP’20 | 89.4 | 72.4 | 77.7 | 53.9 | - | - | - | - | 2.1 |

| CAT (Li et al. 2020) | ECCV’20 | 88.9 | 71.7 | 80.4 | 56.1 | - | - | - | - | - |

| CMPP (Wang et al. 2020) | CVPR’20 | 92.6 | 73.8 | 82.3 | 57.5 | - | - | - | - | - |

| JMMAC (Zhang et al. 2020) | TIP’21 | 90.2 | 73.2 | 79.0 | 57.3 | - | - | - | - | - |

| MANet++ (Lu et al. 2020b) | TIP’21 | 88.2 | 70.7 | 79.5 | 55.9 | - | - | - | - | 25.4 |

| ADRNet (Zhang et al. 2021) | IJCV’21 | 90.4 | 73.9 | 80.7 | 57.1 | 62.2 | 46.6 | 23.5 | 17.5 | 25.0 |

| SiamCDA (Zhang et al. 2022b) | TCSVT’21 | 87.7 | 73.2 | 79.5 | 54.2 | - | - | - | - | 24.0 |

| M5LNet (Tu et al. 2021) | TIP’22 | 89.6 | 71.0 | 79.5 | 54.2 | - | - | - | - | 9.0 |

| TFNet (Zhu et al. 2022) | TCSVT’22 | 88.6 | 72.9 | 80.6 | 56.0 | - | - | - | - | - |

| DMCNet (Lu et al. 2020c) | TNNLS’22 | - | - | 83.9 | 59.3 | - | - | - | - | - |

| MFGNet (Wang et al. 2021) | TMM’22 | 88.9 | 70.7 | 78.3 | 53.5 | - | - | - | - | 3.0 |

| APFNet (Xiao et al. 2022) | AAAI’22 | 90.5 | 73.7 | 82.7 | 57.9 | - | - | - | - | 1.9 |

| HMFT (Zhang et al. 2022a) | CVPR’22 | 91.2 | 74.9 | 78.8 | 56.8 | 75.8 | 62.7 | 41.4 | 35.5 | 30.2 |

| HMFTLT (Zhang et al. 2022a) | CVPR’22 | - | - | - | - | - | - | 53.6 | 46.1 | 8.1 |

| MIRNet (Hou, Ren, and Wu 2022) | ICME’23 | 90.9 | 74.4 | 81.6 | 58.9 | - | - | - | - | 30.0 |

| ECMD (Zhang et al. 2023) | CVPR’23 | 90.7 | 73.5 | 84.4 | 60.1 | - | - | - | - | 18.0 |

| ViPT (Zhu et al. 2023) | CVPR’23 | - | - | 83.5 | 61.7 | - | - | - | - | - |

| TBSI (Hui et al. 2023) | CVPR’23 | - | - | 87.1 | 63.7 | - | - | - | - | 36.2 |

| USTrack (Ours) | - | 93.4 | 78.3 | 87.4 | 65.8 | 86.9 | 74.4 | 64.9 | 55.8 | 84.2 |

Evaluation on RGBT234 Dataset. RGBT234 is currently the most widely used large-scale RGB-T tracking benchmark dataset, consisting of 234 highly aligned image pairs with about 234K image pairs in total. As shown in Tab.1, compared to the most advanced tracker TBSI, our scores on the metrics PR/SR increased by 0.3/ 2.1, and our speed is twice that of TBSI. Both performance and efficiency can prove the effectiveness and efficiency of our method.

Evaluation on GTOT Dataset. GTOT is the first standard dataset in the field of RGB-T tracking. It contains 50 RGB-T video sequences and 7 challenge attributes. We also conducted testing on this dataset and achieved SOTA performance. The test results are shown in Tab. 1. compared with the SOTA methods CMPP and HMFT, our PR/SR scores improved by 0.8/4.5 and 2.2/3.4 respectively, while maintaining the fastest inference speed.

4.3 Ablation Experiment and Analysis

Ablation of Dual Embedding Layer. To verify the effectiveness of the dual embedding layer structure, we conducted ablation experiments on the RGBT234 dataset. As a comparison, we have all inputs use the same embedding layer. The results of the ablation experiment are shown in Tab. 2. The single embedded layer structure resulted in a performance decrease of 1.8 and 2.6 in PR and SR scores. The results show that the use of two independent embedding layers can map the features of two modalities into the latent space conducive to fusion, which can alleviate the impact of the intrinsic heterogeneity of modalities on feature fusion based on attention weight.

| Method | PR | SR |

|---|---|---|

| Single embedding layer | 85.6 | 63.2 |

| Dual embedding layer (Ours) | 87.4 | 65.8 |

| Method | PR | SR |

|---|---|---|

| RGB search region | 86.2 | 64.2 |

| Thermal search region | 86.3 | 64.7 |

| Concatenated region | 86.8 | 64.2 |

| Dual predictors with selection (Ours) | 87.4 | 65.8 |

Ablation of Feature Selection Mechanism. In order to verify the effectiveness of the feature selection mechanism based on modality reliability, we conducted comparative experiments with several common prediction head structures on RGBT234. We set up single prediction head based on single search region fusion features and single prediction head based on the concatenated fusion features of the two search regions, the experimental results are shown in Tab. 3. Compared to other prediction heads, our method performs better. As shown in Fig. 5, we also visualized the actual test sequence, and the visualization results showed that our modality reliability had a good correspondence with the real scene. USTrack will select search region fusion features with high reliablity scores to output better prediction results.

5 Conclusion

In this paper, we propose a highly efficient unified single-stage Transformer RGB-T tracking network USTrack. The core idea of USTrack unifies the three stages of RGB-T tracking network into a single ViT backbone through self-attention mechanism. USTrack can directly extracts fusion features of template and search region under the interaction of modalities, and simultaneously perform the relation modeling between template fusion features and search region fusion features. Additionally, we propose a feature selection mechanism based on modality reliability to achieve better prediction results. Extensive experiments on three popular RGB-T tracking benchmarks demonstrate that our method achieves state-of-the-art performance while maintaining the fastest inference speed 84.2FPS. In particular, evaluation metrics MPR/MSR on the short-term and long-term subsets of the VTUAV increased by 11.1/11.7 and 11.3/9.7.

Limitation. USTrack is still a local tracker. In the future, we will explore some efficient local-to-global tracking strategies that are more suitable for RGB-T tracking, further improving the RGB-T tracking performance.

References

- Bertinetto et al. (2016) Bertinetto, L.; Valmadre, J.; Henriques, J. F.; Vedaldi, A.; and Torr, P. H. S. 2016. Fully-Convolutional Siamese Networks for Object Tracking. ArXiv, abs/1606.09549.

- Chen et al. (2022) Chen, B.; Li, P.; Bai, L.; Qiao, L.; Shen, Q.; Li, B.; Gan, W.; Wu, W.; and Ouyang, W. 2022. Backbone is All Your Need: A Simplified Architecture for Visual Object Tracking. In ECCV.

- Chen et al. (2021) Chen, X.; Yan, B.; Zhu, J.; Wang, D.; Yang, X.; and Lu, H. 2021. Transformer Tracking. CVPR, 8122–8131.

- Dosovitskiy et al. (2020) Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; Uszkoreit, J.; and Houlsby, N. 2020. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. ArXiv, abs/2010.11929.

- Fan et al. (2018) Fan, H.; Lin, L.; Yang, F.; Chu, P.; Deng, G.; Yu, S.; Bai, H.; Xu, Y.; Liao, C.; and Ling, H. 2018. LaSOT: A High-Quality Benchmark for Large-Scale Single Object Tracking. CVPR, 5369–5378.

- Gao et al. (2019) Gao, Y.; Li, C.; Zhu, Y.; Tang, J.; He, T.; and Wang, F. 2019. Deep Adaptive Fusion Network for High Performance RGBT Tracking. ICCVW, 91–99.

- Hou, Ren, and Wu (2022) Hou, R.; Ren, T.; and Wu, G. 2022. MIRNet: A Robust RGBT Tracking Jointly with Multi-Modal Interaction and Refinement. ICME, 1–6.

- Huang, Zhao, and Huang (2018) Huang, L.; Zhao, X.; and Huang, K. 2018. GOT-10k: A Large High-Diversity Benchmark for Generic Object Tracking in the Wild. TPAMI, 43: 1562–1577.

- Hui et al. (2023) Hui, T.; Xun, Z.; Peng, F.; Huang, J.; Wei, X.; Wei, X.; Dai, J.; Han, J.; and Liu, S. 2023. Bridging Search Region Interaction With Template for RGB-T Tracking. In CVPR, 13630–13639.

- Jung et al. (2018) Jung, I.; Son, J.; Baek, M.; and Han, B. 2018. Real-Time MDNet. In ECCV.

- Kristan, Matas et al. (2019) Kristan, M.; Matas, J.; et al. 2019. The Seventh Visual Object Tracking VOT2019 Challenge Results. ICCVW, 2206–2241.

- Li et al. (2016) Li, C.; Cheng, H.; Hu, S.; Liu, X.; Tang, J.; and Lin, L. 2016. Learning Collaborative Sparse Representation for Grayscale-Thermal Tracking. TIP, 25: 5743–5756.

- Li et al. (2019) Li, C.; Liang, X.; Lu, Y.; Zhao, N.; and Tang, J. 2019. RGB-T object tracking: Benchmark and baseline. PR, 96: 106977.

- Li et al. (2020) Li, C.; Liu, L.; Lu, A.; Ji, Q.; and Tang, J. 2020. Challenge-aware RGBT tracking. In ECCV, 222–237. Springer.

- Lin et al. (2014) Lin, T.-Y.; Maire, M.; Belongie, S. J.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; and Zitnick, C. L. 2014. Microsoft COCO: Common Objects in Context. In ECCV.

- Lu et al. (2020a) Lu, A.; Li, C.; Yan, Y.; Tang, J.; and Luo, B. 2020a. RGBT Tracking via Multi-Adapter Network with Hierarchical Divergence Loss. TIP, 30: 5613–5625.

- Lu et al. (2020b) Lu, A.; Li, C.; Yan, Y.; Tang, J.; and Luo, B. 2020b. RGBT Tracking via Multi-Adapter Network with Hierarchical Divergence Loss. TIP, 30: 5613–5625.

- Lu et al. (2020c) Lu, A.; Qian, C.; Li, C.; Tang, J.; and Wang, L. 2020c. Duality-Gated Mutual Condition Network for RGBT Tracking. TNNLS, PP.

- Mei et al. (2023) Mei, J.; Zhou, D.; Cao, J.; Nie, R.; and He, K. 2023. Differential Reinforcement and Global Collaboration Network for RGBT Tracking. Sensors, 23: 7301–7311.

- Muller et al. (2018) Muller, M.; Bibi, A.; Giancola, S.; Alsubaihi, S.; and Ghanem, B. 2018. Trackingnet: A large-scale dataset and benchmark for object tracking in the wild. In ECCV, 300–317.

- Nam and Han (2015) Nam, H.; and Han, B. 2015. Learning Multi-domain Convolutional Neural Networks for Visual Tracking. CVPR, 4293–4302.

- Tu et al. (2021) Tu, Z.; Lin, C.; Zhao, W.; Li, C.; and Tang, J. 2021. M5L: Multi-Modal Multi-Margin Metric Learning for RGBT Tracking. TIP, 31: 85–98.

- Wang et al. (2020) Wang, C.; Xu, C.; Cui, Z.; Zhou, L.; Zhang, T.; Zhang, X.; and Yang, J. 2020. Cross-Modal Pattern-Propagation for RGB-T Tracking. CVPR, 7062–7071.

- Wang et al. (2021) Wang, X.; Shu, X.; Zhang, S.; Jiang, B.; Wang, Y.; Tian, Y.; and Wu, F. 2021. MFGNet: Dynamic Modality-Aware Filter Generation for RGB-T Tracking. ArXiv, abs/2107.10433.

- Xiao et al. (2022) Xiao, Y.; Yang, M.; Li, C.; Liu, L.; and Tang, J. 2022. Attribute-Based Progressive Fusion Network for RGBT Tracking. In AAAI.

- Ye et al. (2022) Ye, B.; Chang, H.; Ma, B.; Shan, S.; and Chen, X. 2022. Joint feature learning and relation modeling for tracking: A one-stream framework. In ECCV, 341–357. Springer.

- Zhang et al. (2019) Zhang, L.; Danelljan, M.; Gonzalez-Garcia, A.; van de Weijer, J.; and Khan, F. S. 2019. Multi-Modal Fusion for End-to-End RGB-T Tracking. ICCVW, 2252–2261.

- Zhang et al. (2021) Zhang, P.; Wang, D.; Lu, H.; and Yang, X. 2021. Learning Adaptive Attribute-Driven Representation for Real-Time RGB-T Tracking. IJCV, 129: 2714 – 2729.

- Zhang et al. (2020) Zhang, P.; Zhao, J.; Bo, C.; Wang, D.; Lu, H.; and Yang, X. 2020. Jointly Modeling Motion and Appearance Cues for Robust RGB-T Tracking. TIP, 30: 3335–3347.

- Zhang et al. (2022a) Zhang, P.; Zhao, J.; Wang, D.; Lu, H.; and Ruan, X. 2022a. Visible-Thermal UAV Tracking: A Large-Scale Benchmark and New Baseline. CVPR, 8876–8885.

- Zhang et al. (2023) Zhang, T.; Guo, H.; Jiao, Q.; Zhang, Q.; and Han, J. 2023. Efficient RGB-T Tracking via Cross-Modality Distillation. In CVPR, 5404–5413.

- Zhang et al. (2022b) Zhang, T.; Liu, X.; Zhang, Q.; and Han, J. 2022b. SiamCDA: Complementarity- and Distractor-Aware RGB-T Tracking Based on Siamese Network. TCSVT, 32: 1403–1417.

- Zhu et al. (2023) Zhu, J.; Lai, S.; Chen, X.; Wang, D.; and Lu, H. 2023. Visual prompt multi-modal tracking. In CVPR, 9516–9526.

- Zhu et al. (2019) Zhu, Y.; Li, C.; Luo, B.; Tang, J.; and Wang, X. 2019. Dense Feature Aggregation and Pruning for RGBT Tracking. ACM MM.

- Zhu et al. (2022) Zhu, Y.; Li, C.; Tang, J.; Luo, B.; and Wang, L. 2022. RGBT Tracking by Trident Fusion Network. TCSVT, 32: 579–592.