Uncertainty Intervals for Prediction Errors in Time Series Forecasting

Abstract

Inference for prediction errors is critical in time series forecasting pipelines. However, providing statistically meaningful uncertainty intervals for prediction errors remains relatively under-explored. Practitioners often resort to forward cross-validation (FCV) for obtaining point estimators and constructing confidence intervals based on the Central Limit Theorem (CLT). The naive version assumes independence, a condition that is usually invalid due to time correlation. These approaches lack statistical interpretations and theoretical justifications even under stationarity.

This paper systematically investigates uncertainty intervals for prediction errors in time series forecasting. We first distinguish two key inferential targets: the stochastic test error over near future data points, and the expected test error as the expectation of the former. The stochastic test error is often more relevant in applications needing to quantify uncertainty over individual time series instances. To construct prediction intervals for the stochastic test error, we propose the quantile-based forward cross-validation (QFCV) method. Under an ergodicity assumption, QFCV intervals have asymptotically valid coverage and are shorter than marginal empirical quantiles. In addition, we also illustrate why naive CLT-based FCV intervals fail to provide valid uncertainty intervals, even with certain corrections. For non-stationary time series, we further provide rolling intervals by combining QFCV with adaptive conformal prediction to give time-average coverage guarantees. Overall, we advocate the use of QFCV procedures and demonstrate their coverage and efficiency through simulations and real data examples.

††Code for our experiments is available at https://github.com/huixu18/QFCV.1 Introduction

Inference for prediction errors is crucial in time series forecasting [Ham20], with applications in a variety of domains including economics and inance [FVD00, Coc97], healthcare [LLC+15], supply chain [Avi03, Gil05], and energy forecasting [DZY+17]. While many point estimators for prediction error have been proposed [Rac00, BB11, CTM20, PAA+22, HAB23], uncertainty quantification for prediction error in time series remains relatively under-explored. Indeed, existing naive methods for uncertainty quantifications of prediction errors tend to lack statistically meaningful interpretations.

In particular, a widely used approach for inference for prediction error is cross-validation (CV) [All74, Gei75, Sto74]. Cross-validation is a resampling method that uses the prediction error of sub-sampled folds to infer the true prediction error. Although widely studied for independent, identically distributed (IID) data, cross-validation is often adapted to time series settings. To account for the temporal correlation in time series, each fold usually comprises continuous time windows instead of IID-resampled time indices. After dividing into multiple folds, practitioners usually use the mean and standard deviation of the validation errors to give an uncertainty interval for the prediction error, similar to the IID case. We call these CLT-based uncertainty intervals the “naive forward cross-validation” (FCV) intervals. However, it is not clear whether naive FCV intervals provide statistically meaningful coverage for prediction errors.

To provide statistically meaningful uncertainty quantification, we should first clarify our inferential target and define prediction error precisely in the time series setting. Suppose we are given a forecaster trained on historical data. A natural notion of the inferential target is the forecaster’s average loss over near future test points, a random variable called the stochastic test error (). Another natural target is , the expectation of over all randomness including training and test data, called the expected test error. The mathematical formulations of these targets are in Section 2. Note that these two prediction errors are often very close in the IID setting per the law of large numbers (LLN), since we are often interested in predicting a large number of IID test points. However, in times series settings, we are not interested in the long-term performance of forecasters, and LLN often does not apply. To distinguish, we call uncertainty intervals for prediction intervals, and for Err confidence intervals.

In time series applications, providing coverage of the stochastic test error is often more intuitive and useful than coverage of the expected test error Err. For example, energy companies are typically interested in the fluctuation of the actual prediction error of power demand for the next few days, rather than the range of the hypothetical expected error. So this paper will focus more on providing prediction intervals to , but will also discuss confidence intervals for Err.

The second important ingredient in providing statistically meaningful uncertainty quantification is to make appropriate statistical assumptions on the time series. While strong parametric assumptions like ARMA models are undesirable, certain distributional assumptions are needed for statistical inference. In this paper, we start with the assumption that the time series is stationary. This assumption seems very strong, but to our knowledge, there are no existing statistically meaningful uncertainty intervals for prediction errors in stationary time series. Starting with stationarity allows an initial meaningful step towards uncertainty quantification for prediction errors in time series.

After clarifying the inferential target and statistical assumptions, we are ready to discuss inferential procedures for prediction errors. In this paper, we introduce and advocate quantile-based forward cross-validation (QFCV) prediction intervals, which provide statistically meaningful intervals with asymptotically valid coverage for prediction errors (Section 3). We will also illustrate why naive FCV intervals fail to provide valid uncertainty intervals, even with certain corrections (Section 4). Finally, we will consider non-stationary time series, and provide rolling intervals by combining QFCV with adaptive conformal prediction methods to give certain coverage guarantees under non-stationarity (Section 5).

1.1 An illustrating example

We provide a simulation example to illustrate the difference between stochastic and expected test errors in time series forecasting, and compare naive FCV and QFCV methods, where the former is a naive CLT-based uncertainty interval (Eq (12) in Section 4.1) as a baseline and the latter is our proposed uncertainty interval that will be elaborated in Algorithm 1 in Section 3. At each time step , we observe feature vector where , with IID standard normal entries, and outcomes following a linear model. Here , and noise is an AR(1) process with parameter . The forecaster is a Lasso regression trained on the last time indices ( to ). Our target is the prediction error over the unseen future data points ( to ).

We calculate the coverage of naive FCV and QFCV intervals over simulation instances. Figure 1 shows these intervals for the first 50 instances. Numerical results demonstrate nominal coverage by QFCV for the stochastic test error, which we theoretically justify in Section 3. In contrast, FCV severely undercovers the stochastic test error at only coverage, and slightly undercovers the expected test error at . In Section 4, we explain the issues with naive FCV and investigate certain correction approaches. Overall, this example illustrates the difference between stochastic and expected test errors in time series, and shows that QFCV provides valid coverage while naive FCV does not.

1.2 Our contribution

This paper provides a systematic investigation of inference for prediction errors in time series forecasting. We identify two inferential targets, the stochastic test error and the expected test error, and discuss different methods for providing uncertainty intervals. Our contribution is three-fold.

-

•

We propose quantile-based forward cross-validation (QFCV) methods to provide prediction intervals for stochastic test error (Section 3). We prove that QFCV methods have asymptotically valid coverage under the assumption of ergodicity. Through numerical simulations, we show that QFCV methods give well-calibrated coverages. In particular, when choosing an appropriate auxiliary function, QFCV provides shorter intervals than the naive empirical quantile method, especially for smooth time series.

-

•

We examine forward cross-validation (FCV) methods, which are CLT-based procedures adapted from cross-validation in the IID setting (Section 4). These methods seem like a natural choice for practitioners. We identify issues with the naive FCV method that underlie its failure to cover the stochastic and expected test errors. We also investigate two modifications to naive FCV that partially address these problems. However, these modifications do not perfectly restore valid coverage without strong assumptions.

-

•

For non-stationary time series, we provide rolling intervals by combining the QFCV method with a variant of the adaptive conformal inference, which we name the AQFCV method (Section 5). AQFCV method produces rolling intervals with asymptotically valid time-average coverage under arbitrary non-stationarity. We articulate the difference between time-average coverage and the frequentist’s notion of instance-average coverage. Finally, we demonstrate AQFCV’s performance on both simulated and real-world time series data.

1.3 Related work

Cross validation with independent data

Cross-validation (CV) [All74, Gei75, Sto74] is used ubiquitously to estimate the prediction error of a model. A comprehensive review of CV methods is presented in [AC10]. Despite the simplicity of CV, the seemingly basic question “what is the inferential target of cross-validation?” has engendered considerable debate [Zha95, HTFF09, You20, RT19, Wag20, BHT21].

Turning to the question of inference for prediction error, extensive studies provided various intervals of prediction error based on CV [Die98, NB99, MTBH05, Efr83, ET97, You21, LPvdL15, BPvdL20, AZ20, BBJM20, BHT21]. In particular, [Die98, NB99, MTBH05] provides estimators of the CV standard error based on either the sample splitting method or the moment method. Works such as [Efr83, ET97, You21] provide estimators of the standard error of bootstrap estimate of the prediction error, based on the influence function method. Asymptotically consistent estimators of CV standard error were provided in [LPvdL15, BPvdL20, AZ20, BBJM20]. Furthermore, [BHT21] introduced the nested CV method, which produces an unbiased estimator of the mean squared error for the CV point estimator. However, all these estimators are designed for datasets with independent data points and do not extend to time series datasets, the setting considered in this work.

Inference for test error in a time series dataset

In the time series setting, many variants of cross-validation methods have been proposed to provide point estimators for prediction errors [BCN94, Rac00, BB11, BB12, BCB14, BHK18, CTM20, PAA+22, HAB23]. The basic idea is to only use past data as training data and future data as test data. Time series prediction error estimation has several different setups (see, for example, [HAB23]). The setup we adopt is the “rolling window” approach, wherein a fixed size of the training dataset is used to fit the forecaster. This setup is quite common in scenarios where the available dataset size is large [MWMC18]. Furthermore, in this setup, inference methods could have meaningful statistical interpretation under only the stationarity assumption. Another setup, the “expanding window” setting, uses an incrementally expanding training dataset to fit the forecaster over time. In this context, it is challenging to confer meaningful statistical interpretation to any prediction error estimator, even under stationary conditions.

In this work, instead of providing point estimators, our primary focus is to provide prediction intervals and confidence intervals for prediction errors within the context of time series analysis. To the best of our knowledge, there seems to be no existing literature addressing this particular topic.

Conformal inference in time series

A recent line of work focuses on predictive inference (i.e., providing intervals for prediction) in the time series setting [GC21, GC22, FBR22, BWXB23, ZFG+22, XX22, SY22, LTS22]. These works are mostly based on adaptive conformal inference (ACI) [GC21, GC22], a term derived from “conformal prediction” methods [VGS99, SV08, AB21]. Utilizing online learning techniques, the ACI method produces a sequence of prediction intervals with an asymptotic guarantee of valid time-average coverage.

Our work differs in its focus, with the inferential target being the prediction error, as opposed to the prediction value, the target of the ACI method [GC21]. However, our AQFCV method borrows the techniques of ACI to provide prediction intervals for prediction error, also providing an asymptotic guarantee of valid time-average coverage.

2 Settings and notations

In the context of time series forecasting, we are provided with a stream of data points , with . In time series without additional context , we can consider the context as a constant value of . By the time index , we have observed the first pairs , and we aim to sequentially predict the forthcoming outcomes using the contexts .

Stochastic prediction error.

We begin with a function that forecasts future responses, along with a loss function . An interesting inferential target is the stochastic prediction error , representing the average losses associated with the next data points, where the forecaster is trained on the most recent data points:

| (1) |

See Figure 2 for a diagram illustration of prediction error evaluation in time series forecasting.

In a more general scenario, we permit the forecaster to provide varying forecasts for different future time steps. In other words, we have a vector function that is trained on the last data points and is designed to predict the next data points. The stochastic prediction error, in this case, can be similarly expressed as:

| (2) |

Note that (2) is slightly more general than (1), albeit with a more complicated notation. Throughout the paper, we will assume that our forecasters are identical at each future prediction step and primarily focus on the (1) formulation of the stochastic prediction error. However, it is important to keep in mind that our methods can accommodate the general case of a non-identical prediction function.

Expected prediction error.

Another potential inferential target is the expected prediction error, denoted as Err. Assuming a distribution over , the expected prediction error is defined as the expectation of the stochastic prediction error, ,

| (3) |

The expectation is taken with respect to all the randomness variables, namely, and possible randomness present in the forecaster . Notably, due to time correlation, it is probable that

Indeed, the expected prediction error Err also depends on the number of test points , different from the IID setting. Additionally, we should not expect to be a significantly large number, since we are typically concerned with the forecaster’s performance in the near future. In such a scenario, we should not expect to concentrate around Err: as is illustrated in Figure 1, the black dashed line represents the expected prediction error Err, while the black dots represent the stochastic prediction error .

Prediction intervals and confidence intervals.

In this paper, our primary focus is quantifying the uncertainty for both stochastic and expected prediction errors. When the stochastic prediction error is our inferential target, we aim to offer a prediction interval (PI), denoted as , based on the observed data . Conversely, when the expected prediction error Err is our target, our goal is to provide a confidence interval (CI), denoted as , also based on the observed data :

| (4) |

In many practical scenarios, our interest leans more towards providing a prediction interval for the stochastic prediction error . This preference stems from the fact that the prediction interval of incorporates the fluctuation of the realized loss that we will incur on future unseen data. Capturing this fluctuation is crucial for risk control and uncertainty quantification. In contrast, a confidence interval for the expected prediction error Err marginalizes over the randomness in , providing less information about the variability of the test error in a single time series instance.

3 Quantile-based procedures for prediction intervals

In this section, we first present the Quantile-based Forward Cross-Validation (QFCV) method used for generating prediction intervals for the stochastic prediction error (Section 3.1). We then demonstrate in Section 3.2, under the stationary and ergodicity assumptions, that the QFCV prediction interval guarantees an asymptotically valid coverage of . Following this, Section 3.3 provides a series of numerical simulations, while Section 3.4 offers a detailed discussion.

3.1 The QFCV prediction interval

In stationary time series, a straightforward approach for providing a prediction interval involves estimating the quantile of the stochastic prediction error distribution using the observed dataset. We introduce a more refined method named Quantile-based Forward Cross-Validation (QFCV). In a nutshell, QFCV estimates the quantile function of the stochastic prediction error, , based on certain user-specified features. These features are expected to have high predictive power to , an example being the validation error.

To introduce QFCV, we organize the time indices into multiple possibly overlapping time windows, which we denote as and , as depicted in Figure 3. The time windows share the same size, denoted as ; share the same size, denoted as ; share the same size, denoted as . In addition, for each , each time window in shifts from by indices, where . Spelling out the elements of each time window, we have

| (5) | ||||

Furthermore, given a user-specified auxiliary function that generates a set of covariates for predicting the stochastic prediction error, denoted as , we define tuples and by the equations below:

| (6) | ||||||

Here, is a condensed notation for . It is important to note that and can be computed from the observed dataset , but depends on future unobserved data points. Indeed, is the same as the stochastic prediction error , as defined in Eq. (1). Assuming the time series is stationary, it is evident that and are identically distributed, though they are not independent due to time correlation.

The QFCV method, given these definitions, can be summarized in the three steps below. First, calculate and given by Eq. (6). Then, predict quantiles of based on feature vectors by minimizing the empirical quantile loss to obtain quantile functions and . Finally, the QFCV prediction interval is given by . Detailed steps are provided in Algorithm 1.

Choice of auxiliary function.

There is a certain amount of flexibility in choosing the auxiliary function . As we will show in the upcoming section, regardless of the specific choice of the auxiliary function, the QFCV algorithm will yield an asymptotically valid prediction interval, under the assumption of ergodicity. In practice, we aim to choose such that it is as predictive of as possible. Our preferred choice for is given by the validation error, a sample splitting estimator of the test error:

| (7) |

We illustrate the QFCV method in Figure 4, where the QFCV prediction interval is illustrated with red dashed lines.

3.2 Asymptotic validity of QFCV prediction intervals

We provide theoretical guarantees on the coverage of QFCV prediction intervals, under the assumption of a stationary time series. We present methods for relaxing the stationarity assumption in Section 5.

Assumption 1.

The time series is a stationary ergodic stochastic process, such that for any function with and , we have

Given this assumption, is likewise stationary ergodic and shares the same distribution as . Let denote this common distribution. For simplicity, we will assume has a density with respect to the Lebesgue measure. While this is a strong assumption, it could be relaxed by more sophisticated analysis.

Assumption 2.

The distribution of has a density with respect to the Lebesgue measure.

Moreover, we denote as the class of -quantiles of the conditional distribution of given . This class can be expressed as minimizers of the pinball loss,

We assume the function class used in the QFCV algorithm (Algorithm 1) can realize the and quantile classes, and . Furthermore, for simplicity, we assume that is finite, a strong assumption that could also be relaxed.

Assumption 3.

Assume that is a finite function class, and , .

We are ready to state the asymptotically valid coverage for the QFCV method, as detailed in Theorem 3.1. The proof of this theorem can be found in Appendix A. A more general statement going beyond the finite function class assumption (Assumption 3) can be found in Appendix A.1.

We remark that the choice of the auxiliary function does not affect the asymptotic validity of QFCV prediction intervals. However, when the dimension is large, more samples will often be required for the asymptotic regime to take effect. In practice, we would like to choose to be moderately small and to be some function such that and have a large mutual information.

3.3 Comparing QFCV methods via numerical simulation

We evaluate QFCV methods with different choices of auxiliary functions through a simulation study. Recall the setting as in Section 2, we generate a time series with historical data, train a model on training set, and aim to construct prediction intervals for the test error over the next unseen points (see Figure 2 for a diagram of this dataset division). The simulated time series is generated as follows: for each time step , with , where

| (9) |

We fix the true coefficient vector as , where the first four coordinates are , and the rest of the coordinates are . The noise process follows a standard autoregressive-moving-average model with AR coefficients and MA coefficients . That is

| (10) |

We consider two ARMA parameter settings:

-

(1)

with .

-

(2)

with and .

Both processes are stationary, but setting (2) exhibits smoother sample paths than setting (1), as visualized in Figure 5 which plots realizations of for the first 200 time steps. We posit that setting (2), as a smoother noise process, will yield a higher correlation between validation and test errors .

We consider QFCV methods utilizing the following auxiliary functions. For , we split the validation set into continuous and non-overlapping subsets . We define the auxiliary function of dimension as the vector of validation errors on each subset:

For , we use a trivial auxiliary function . We denote by the QFCV method utilizing auxiliary function . We provide a few special examples as follows.

-

•

: . Here, QFCV essentially calculates the empirical quantile of , equivalent to the intercept-only quantile regression.

-

•

: . Here, QFCV uses quantile regression with the single feature given by the average validation error.

-

•

: . Here, QFCV uses quantile regression with features given by the individual validation errors.

In all cases, we use linear quantile regression of against , choosing as the class of linear functions.

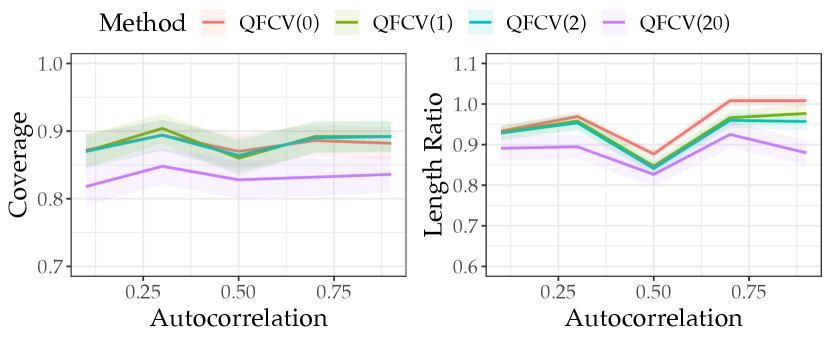

Figure 6 reports the coverage and length of QFCV intervals under different simulation settings. The time series is generated according to model (9), where the noise follows either an process (panels (a) and (c)) or process (panels (b) and (d)). For both noise processes, we fix the MA coefficients and vary the AR coefficient along the x-axis over to modulate autocorrelation. The nominal coverage level is . To normalize the interval lengths, we divide the QFCV lengths by the true marginal quantiles interval length, defined as the difference between the and quantiles of the true test error distribution. Each curve displays the mean and standard error over simulation instances.

Figure 6 shows that achieves close to coverage at the nominal level when is less than or equal to . However, for in panels (c) and (d), the coverage deviates further from the nominal level, likely because quantile regression requires more samples to be consistent with a -dimensional feature vector. In terms of interval lengths, all QFCV methods produce similar lengths to the true marginal quantiles on data with non-smooth noise (panels (a) and (c)). However, for smooth noise (panels (b) and (d)), can substantially outperform the true marginal quantiles length, especially when (panel (b)). This occurs because with smooth noise, the validation error becomes more predictive of the actual test error .

To explain why produces smaller average interval lengths than , Figure 7 presents scatter plots of the length ratios versus true test errors over simulation instances. The noise process is taken to be with and . The left panel shows that (the empirical quantile method) generates interval lengths close to the true marginal quantiles for all ranges of the true test error. In contrast, assigns smaller intervals for small true test errors. The right panel colors points by whether they are mis-covered. We see that while tends to mis-cover points with large test errors, the mis-covered points for are more uniformly distributed across the test error range. This indicates that by adapting to the validation error, produces prediction intervals that are overall shorter while maintaining coverage.

Figure 8 shows how the actual coverage of QFCV methods varies with the time series length , for an noise process with and . We find that all QFCV variants achieve close to the nominal coverage when . However, using a larger number of features for quantile regression leads to a larger estimation error, requiring more samples to attain the nominal coverage level. This highlights the need to avoid excessively large values in practice.

3.4 Discussion

Let us take a moment to reflect on and discuss our main proposal of QFCV prediction intervals for time series forecasting.

Quantile-based methods versus CLT-based methods.

QFCV produces intervals using quantiles, which provides asymptotic validity relying on the law of large numbers for the ergodic process of test errors. While quantile-based methods are a natural choice in hindsight, CLT-based methods like forward cross-validation (FCV; see Section 4) may seem like a more instinctive first approach for practitioners. However, as we will see in Section 4, FCV prediction intervals have certain limitations that quantile-based QFCV avoids. By modeling the conditional quantiles of the test error distribution, QFCV does not make Gaussian assumptions and can provide valid coverage where naive CLT-based methods fail.

as the advocated approach.

Our simulations illustrate the tradeoff in selecting the number of features for the auxiliary function . Adding more features can yield shorter intervals but requires more samples to attain valid coverage. We advocate using , which takes the average validation error (7) as the single feature. As shown in our experiments, improves on the interval length of while matching the length of . Critically, maintains valid coverage across all settings. In later sections, we will refer to the QFCV method as by default.

QFCV point estimators.

While we have focused on QFCV for constructing prediction intervals, point estimators can also be naturally derived from the QFCV framework. We include more details of QFCV point estimators, as well as other algorithm design choices of QFCV intervals, in Appendix D.

4 CLT-based forward cross-validation

Many inference methods for prediction error under the IID assumption produce confidence intervals aiming to cover the expected test error Err based on the central limit theorem (CLT). Such methods typically have the form:

where is a point estimate of Err, is the estimated standard error of , and is the -quantile of a standard normal distribution. In time series forecasting, practitioners usually adopt a naive adaptation of the cross-validation called forward cross-validation (FCV) as natural candidates for constructing confidence intervals targeting expected error Err.

In this section, we examine FCV for constructing confidence and prediction intervals. We illustrate when it succeeds or fails, comparing it to QFCV. We show that as confidence intervals covering expected error Err, naive FCV lacks valid coverage unless adjusted by an autocovariance correction. Furthermore, as prediction intervals aim to cover stochastic error , scaling-corrected FCV only provides valid coverage under strong Gaussianity assumptions, unlike QFCV.

4.1 Forward cross-validation procedures

In FCV, we split the historical data sequence into possibly overlapping time windows. Each window is shifted time steps from the previous one. For the -th window, we further divide it into a training set of size and a validation set of size . See Figure 9 for a diagram illustration. The FCV point estimate of the prediction error is:

| (11) |

Under the stationary assumption, is an unbiased estimator for the expected target error Err, as stated in Lemma 4.1 below.

Lemma 4.1 (Unbiasedness of FCV estimator).

Assume is stationary, and let . Then the FCV estimator is unbiased for the expected test error (3), that is,

Proof.

By direct calculation,

where (1) uses stationarity and (2) is by definition of Err. ∎

Naive FCV interval.

Following the strategy of constructing confidence intervals in the IID setting, the naive FCV interval based on CLT is:

| (12) |

where

| (13) |

We present the algorithm for computing the naive FCV interval in Algorithm 2.

The naive FCV interval has some limitations in serving as both a confidence interval for the expected test error Err and a prediction interval for the stochastic test error . When treated as a confidence interval for expected test error Err, naive FCV often underestimates the true variance of the FCV point estimator since it doesn’t account for the time correlation. When treated as a prediction interval for stochastic test error , naive FCV does not capture the variance of , but instead the variance of the FCV point estimator. Therefore, the naive FCV interval will undercover both Err as a confidence interval and as a prediction interval.

To address these issues, we describe two modifications to the naive FCV intervals. In the first modification, an autocovariance correction is adopted to derive a consistent variance estimate for the FCV point estimator. This results in an autocovariance-corrected FCV that becomes a valid confidence interval for expected test error Err. In the second modification, the standard error estimator is rescaled, making it an estimate of the standard error of the stochastic test error. This results in a scaling-corrected FCV interval whose length is on the scale of the standard deviation of the stochastic prediction error.

Autocovariance-corrected FCV confidence interval.

Assuming that the time series is stationary, the variance of the FCV point estimator gives

Here, is the autocovariance function of the stationary process , which can be estimated from the data using the sample autocovariance ,

The adjusted standard error estimator is thus a truncated sum of the sample autocovariance function, denoted as , where the indicates covariance adjustment.

| (14) |

Here, truncates the summation to the correlation length of the stationary process . This is needed since converges to very slowly for large . We expect that when exceeds some threshold , the autocovariance becomes very small and can be neglected.

Given the adjusted standard error estimator, the autocovariance-adjusted FCV confidence interval is

Under certain assumptions on the stationary process , it can be shown via the central limit theorem that is asymptotically normal with asymptotic standard deviation consistently estimated by . Therefore, is an asymptotically valid confidence interval for Err. This leads to the following informal proposition, with a rigorous statement presented in Appendix B.1.

Proposition 4.2 (Asymptotic normality of the autocovariance-corrected FCV estimator; Informal).

Under certain assumptions of the stationary process , as , we have convergence in distribution

Consequently, has asymptotically coverage over Err.

Scaling-corrected FCV prediction interval.

Both in (13) and in (14) estimate the variance of instead of the variance of , and thus do not provide prediction intervals for . To estimate the variance of , a commonly used approach is a scaling correction. In particular, we define the scaling-corrected standard error estimator as , where indicates this is for a prediction interval:

| (15) |

The scaling-corrected FCV prediction interval is then:

| (16) |

Under the strong assumption that follows a Gaussian process, we can show gives approximately the and quantiles of the stochastic test error distribution. This gives the following proposition, with the proof in Appendix B.2.

Proposition 4.3.

Assume is a stationary ergodic Gaussian process. Let . Then as , we have convergence in probability

| (17) |

In this case, we have convergence in probability

| (18) |

That is, is an asymptotically valid prediction interval for ,

| (19) |

It is worth noting that , the empirical quantile method, will also give the and quantiles of the stochastic test error distribution. Consequently, under the Gaussian process assumption, the scaling-corrected FCV prediction interval is asymptotically equivalent to . As shown in Section 3, has a larger interval length than our advocated method. Thus, we would also expect scaling-corrected FCV to have a larger interval length than .

We note that the Gaussian process assumption for is a strong assumption that may not hold in practice. In Figure 10, we provide an example where the Gaussianity assumption is violated. The bottom panel shows the population-level stochastic test error distribution obtained with 1000 samples, where the setting matches Figure 1 except with a smaller test set size of . With small test sets, errors are no longer Gaussian distributed - the distribution is truncated at zero and skewed. Therefore, the scaling-corrected FCV interval may not have valid coverage due to violating the Gaussian assumption.

In contrast, QFCV methods do not require the Gaussian assumption. As seen in the top and middle panels of Figure 10, the empirical validation error distribution from a single FCV run converges to the population test error distribution as the number of folds increases. The method bypasses the Gaussianity assumption by approximating the oracle stochastic test error distribution with the empirical distribution of validation errors and reporting empirical quantiles for prediction intervals. Other QFCV variants, such as , exploit the time correlation between past validation error and future test error to yield shorter intervals than .

Given that Gaussianity often does not hold in practice, scaling-corrected FCV has no guarantees of asymptotically valid coverage because it is using Gaussian quantiles for uncertainty interval construction. Therefore, we do not advocate it due to the strong assumptions required. We recommend using QFCV, which does not rely on this assumption.

4.2 Comparing FCV and QFCV via numerical simulations

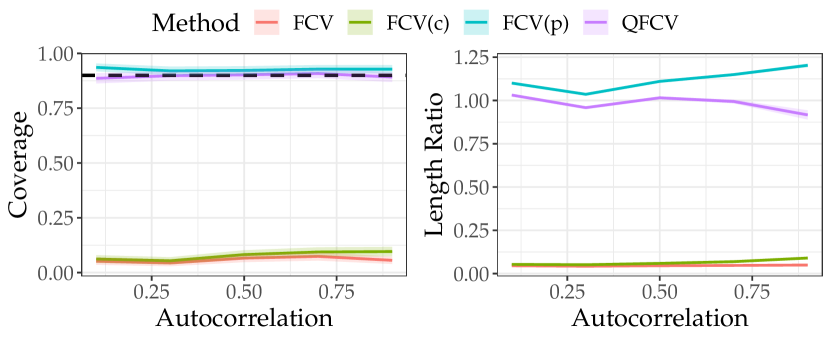

We perform numerical simulations to compare CLT-based FCV intervals (Algorithm 2) with QFCV methods (Algorithm 1). Specifically, we examine naive FCV intervals (FCV), autocorrelation-corrected FCV intervals (FCV(c)), and scaling-corrected FCV intervals (FCV(p)) for the CLT-based methods. For the QFCV method, we use , where the auxiliary function is the validation error (7). The simulations follow the same setup as in Section 3.3, with time series generated from a linear model (9) with noise. We investigate the coverage and length ratio of these intervals. The results, presented in Figure 11, illustrate the performance of the different methods.

The results in Figure 11 demonstrate that the naive FCV and FCV(c) methods undercover the stochastic test error across the simulation settings. In contrast, the FCV(p) and QFCV methods achieve valid coverage. However, the QFCV intervals are shorter than those from FCV(p), particularly for time series with smoother noise processes. This aligns with our expectations, as we anticipated FCV(p) would have similar performance to QFCV(0). Since QFCV(1) utilizes features informative to the stochastic test error during quantile regression, it outperforms QFCV(0), and hence should outperform FCV(p).

Table 1 provides further comparison of the four methods across the simulation settings. The first row for each setting shows the mis-coverage percentages of the stochastic test error for both the upper and lower ends. This illustrates that QFCV has more balanced mis-coverage than FCV(p), which is expected since QFCV relies on quantiles, without the need of Gaussian assumptions like FCV(p). The second row for each setting gives the mis-coverage percentages of the expected test error Err. It demonstrates that FCV(c) provides better coverage for Err and serves as a better confidence interval than naive FCV through its autocovariance correction. The fourth row for each setting illustrates the mean squared error of the FCV and QFCV point estimators for estimating the stochastic test error . It shows that QFCV point estimator has a smaller MSE than the FCV point estimator.

| Setting | Miscoverage (%) & Performance | ||||||||

|---|---|---|---|---|---|---|---|---|---|

| FCV | FCV(c) | FCV(p) | QFCV | ||||||

| Data | Target | Hi | Lo | Hi | Lo | Hi | Lo | Hi | Lo |

| (a) | 37.2 | 56.2 | 36.8 | 55.0 | 7.8 | 0.0 | 5.8 | 4.0 | |

| Miscoverage of Err | 18.8 | 4.8 | 13.4 | 2.4 | 0.0 | 0.0 | 0.0 | 0.0 | |

| Interval Length | 0.211 | 0.273 | 5.13 | 4.69 | |||||

| 2.39 | - | - | 2.40 | ||||||

| (b) | 30.4 | 64.0 | 27.8 | 61.2 | 7.2 | 0.0 | 5.0 | 4.8 | |

| Miscoverage of Err | 34.6 | 10.4 | 15.6 | 0.6 | 0.0 | 0.0 | 0.4 | 0.0 | |

| Interval Length | 0.287 | 0.572 | 6.99 | 4.59 | |||||

| 5.24 | - | - | 3.30 | ||||||

| (c) | 39.2 | 49.8 | 38.4 | 47.8 | 7.8 | 1.0 | 5.6 | 6.8 | |

| Miscoverage of Err | 18.2 | 4.4 | 11.8 | 1.8 | 0.0 | 0.0 | 0.0 | 0.0 | |

| Interval Length | 0.239 | 0.293 | 2.91 | 2.75 | |||||

| 0.881 | - | - | 0.841 | ||||||

| (d) | 30.2 | 57.8 | 28.4 | 55.6 | 5.6 | 0.0 | 4.8 | 4.4 | |

| Miscoverage of Err | 13.8 | 13.8 | 7.8 | 6.6 | 0.0 | 0.0 | 0.0 | 0.2 | |

| Interval Length | 0.492 | 0.660 | 5.99 | 5.12 | |||||

| 3.22 | - | - | 2.99 | ||||||

5 Rolling intervals under nonstationarity

In previous sections, we provided inference methods for test errors in stationary time series. However, constructing valid prediction intervals becomes more difficult when the time series is non-stationary. With arbitrary non-stationarity, the observed data may contain little information about the distribution of future data points, and obtaining prediction intervals is therefore challenging. A more feasible goal is to provide rolling intervals for the test error that have asymptotically valid time-average coverage.

5.1 ACI with delayed feedback for rolling intervals

Rolling intervals.

Suppose that we observe the time series arriving sequentially, where the are treated as deterministic, unlike the stochastic settings in previous sections. We are provided with a forecasting function and a loss function . Define as the -th test error,

| (20) |

This is the rolling version of in Eq. (1), where we add the superscript to emphasize its dependence on the time index. Our goal is to construct rolling intervals where each depends on , the time series up to time . We hope these intervals will have time-average coverage over the set of times indices spaced by ,

| (21) |

We emphasize that time-average coverage differs from statistical coverage in (4). We will revisit this distinction later.

Adaptive conformal inference with delayed feedback (ACI-DF).

We introduce the ACI-DF algorithm for constructing rolling prediction intervals, described in Algorithm 3. The ACI-DF algorithm adapts the ACI algorithm [GC21] to the delayed feedback setting. The algorithm takes as input a sequence of prediction interval constructing functions , and sets the rolling interval at step to be . The parameter is updated every steps using online gradient descent on the pinball loss (line 7), but with delayed feedback since depends on . So we update based on the most recent computable 0-1 loss of coverage.

We show that the ACI-DF algorithm has the following time-average coverage guarantee, whose proof follows similarly to [GC21] and is given in Appendix C.

Theorem 5.1 (ACI with delayed feedback).

Suppose is a sequence of set constructing functions. Suppose there exists and such that for all , we have for all , and for all . Then the rolling intervals from Algorithm 3 satisfy

| (22) |

We note that, similar to adaptive conformal inference, we have flexibility in choosing the prediction interval constructing functions. In particular, to achieve the time-average coverage guarantee, we are not required to use a statistically valid prediction interval construction function. Indeed, any prediction interval can serve as the base procedure, by simply taking to be the constructing function. However, if we hope for the average interval length to be small, we need a good base procedure. One suitable choice is the QFCV intervals from Section 3.1. That is, at iteration , we take , where is the QFCV interval (Algorithm 1) with and nominal mis-coverage . We call the resulting algorithm Adaptive QFCV, and the rolling intervals are denoted as .

Time-average coverage versus instance-average coverage.

We note that the time-average coverage differs from the frequentist notion of instance-average coverage. To understand the difference, we define , where is the index of the simulation instance, and is the time index. Different simulation instances are assumed to be IID. Then the coverage indicators form the following matrix:

where each row is the coverage of a fixed simulation instance across different time indices, and each column is the coverage of a fixed time index across different simulation instances. The two different coverage notions are then:

-

•

Time-average coverage of instance : the row average .

-

•

Instance-average coverage of time : the column average .

In practice, both notions of coverage are useful depending on the application scenario.

We further remark that valid time-average coverage does not imply valid instance-average coverage, and vice versa. Note that ACI-DF method yields valid time-average coverage as shown in Theorem 5.1, while QFCV prediction intervals have asymptotically valid instance-average coverage under ergodicity as shown in Theorem 3.1. So we expect AQFCV to have valid coverage of both notions, though there is no guarantee of valid instance-average coverage.

5.2 Numerical simulations of AQFCV

Comparing QFCV and AQFCV

We perform simulations to illustrate the coverage properties of QFCV and AQFCV (Adaptive conformal inference wrapped with QFCV) in both stationary and nonstationary time series. We generate IID instances of time series with steps each. The training set size for the (fixed window size) forecasting function is , and we evaluate the test error for the next steps. Furthermore, we generate the rolling interval every steps, starting from step . So in total, there are rolling intervals. The time series are generated from the linear model (9) with and . In the stationary setting, the noise process is an process with parameter . In the non-stationary setting, is the sum of an process with AR parameter and an independent Gaussian sequence . This choice results in a noise process far from stationarity. In Figure 12(a) and 12(c), we report the instance-average coverage for , under the stationary and nonstationary settings. In Figure 12(b) and 12(d), we calculate the time-average coverage for each instance and report the and quantiles over the instances.

Figure 12 shows that AQFCV results in asymptotically valid time-average coverage for both stationary and non-stationary settings. In stationary settings where QFCV is already instance-average valid, ACI-DF does not affect the instance-average validity. In nonstationary settings where QFCV slightly undercovers, ACI-DF can correct the interval to give correct time-average coverage. We note that AQFCV can also obtain valid instance-average coverage in nonstationary settings, an interesting phenomenon beyond our theories that deserves further investigation.

Comparing AFCV and AQFCV

We compare the performance of ACI-DF wrapped with two base methods: CLT-based FCV intervals (AFCV) and QFCV prediction intervals (AQFCV). Theorem 5.1 provides asymptotic time-average coverage guarantee when ACI-DF is combined with any base method satisfying mild assumptions. However, AQFCV may have advantages over AFCV despite both having valid asymptotic coverage.

Through simulation, we aimed to illustrate why practitioners may prefer AQFCV over wrapping ACI-DF with a less carefully constructed prediction interval such as FCV based interval. In Figure 13, we compared the rolling intervals from AFCV and AQFCV on a generated time series with steps. The forecasting function used a fixed training window of steps to generate forecasts for the next steps, with rolling intervals for prediction error produced every steps starting at step , for intervals total. The time series comes from a -dimensional linear model (9) with an noise process at . This is the same generative process used in prior simulation as in case (b) of Figure 6, 11, and Table 1.

Figure 13 shows that AQFCV provides more adaptive intervals than AFCV, with wider intervals in volatile regions and narrower intervals when volatility is low. This adaptiveness of AQFCV stems from the base QFCV method exploiting time correlation between validation and test errors. We note this phenomenon may not always occur, especially if error correlations are weak. Still, AQFCV is recommended since a good base method at low computational cost brings benefits such as potential instance-average coverage. Quantile-based methods also naturally handle arbitrary nonstationarity through flexible quantile-based prediction interval constructing function.

6 Real data examples

6.1 French electricity dataset

We first evaluate QFCV and AQFCV using the French electricity price forecast dataset from [ZFG+22]. The dataset contains French electricity spot prices from 2016 to 2019, with hourly observations of forecast consumptions and prices. The goal of the forecaster is to predict at day the prices of the next 24 hours of the day . The forecaster uses the following features: day-ahead forecast consumption, day-of-the-week, prices of the day , and prices of the day .

We use linear ridge regression with appropriate fixed ridge parameters as the prediction algorithm , trained on the data of the last months, so that . The test error is evaluated on the next hours of electricity price, so . We use QFCV and AQFCV to provide the prediction interval for the test error. In methods, we set , . The result is provided in Figure 14.

By visual inspection, the error process of French electricity dataset is not stationary due to large spikes of varying strengths. The AQFCV method, which wraps ACI-DF with QFCV, yields the asymptotically nominal coverage rate as promised in Theorem 5.1. Although QFCV does not have a time-average coverage guarantee under nonstationarity, it has enough coverage in this particular example, despite some over-coverage. This contrasts the simulation example in Section 5.2, where QFCV undercovers in nonstationary time series forecasting (see Figure 12). The simulation example is a special case where forecasting difficulty increases over time, so intervals using historical data underestimate future errors, causing under-coverage. In the French electricity dataset, nonstationarity arises from error spikes available to the QFCV quantile regression step, so QFCV assumes spikes occur regularly under stationarity. Thus, QFCV outputs wide intervals, resulting in over-coverage.

6.2 Stock Market Volatility dataset

Next, we evaluate QFCV and AQFCV using the publicly available stock market volatility dataset from The Wall Street Journal, the same dataset adopted in [GC21]. As in [GC21], we select the same four stocks and use their daily minimum prices from January 2005 to July 2023. For each stock we calculate daily returns for and thus the realized volatility . We consider the task of multiperiod forecasting of market volatility using previously observed returns .

Similar to [GC21], we adopt the model [Bol86] as the prediction algorithm , which assumes where are IID sampled from and with as trainable parameters. For a given time stage , we train on the data from the last business days to obtain coefficient estimates and past standard deviation estimates . Then we give point estimates of multiperiod volatility in the next days as follows,

We then want to give a prediction interval for the test error incurred in predicting the volatility over the next days. That is, we want to find intervals such that

For both QFCV and AQFCV, we set , , , and . Time-average coverages for all four stocks are presented in Figure 15. For all four stock prices, the AQFCV method provides the asymptotically nominal coverage rate as established in Theorem 5.1. On the other hand, we do not expect QFCV to have a time-average coverage guarantee under nonstationarity. In settings (a), (c), and (d) of Figure 15 (corresponding to Nvidia, BlackBerry, and FannieMae), QFCV undercovers with an approximately coverage rate. This aligns with simulation results in Section 5. In setting (c), we can see that QFCV restores the nominal coverage rate in years with fewer spikes in true error evolution. And in setting (b) (corresponding to AMD), QFCV achieves a nominal coverage rate in more recent years due to fewer spikes in frequency and magnitude of error evolution trends compared to historical trends.

7 Conclusion and discussion

This paper provides a systematic investigation of inference for prediction errors in time series forecasting. We propose the quantile-based forward cross-validation (QFCV) method to construct prediction intervals for the stochastic test error , which have asymptotic valid coverage under stationarity assumptions. Through simulations, we find that QFCV provides well-calibrated prediction intervals that can be substantially shorter than naive empirical quantiles when the validation error is predictive of the test error. For non-stationary settings, we propose an adaptive QFCV procedure (AQFCV) that combines QFCV intervals with adaptive conformal prediction using delayed feedback. We prove AQFCV provides prediction intervals with asymptotic time-average coverage guarantees. Experiments on simulated and real-world time series demonstrate that AQFCV adapts efficiently during periods of low volatility. Overall, we advocate the use of QFCV and AQFCV procedures due to their statistical validity, computational simplicity, and flexibility to different time series characteristics.

Our work opens up several promising research avenues. One direction is proving that AQFCV maintains its time-average coverage guarantee when the underlying data-generating process is stationary. Another direction is developing statistically principled inference methods for prediction errors that are compatible with the expanding window forecasting setup. More broadly, this work underscores the value of rigorous uncertainty quantification when evaluating predictive models on temporal data. We hope that our proposed methodology helps catalyze further research into validated approaches for quantifying uncertainty in time series forecasting tasks.

Acknowledgement

The authors would like to thank Ying Jin, Trevor Hastie, Art Owen, Anthony Norcia, and Shuangping Li for their helpful discussions. Song Mei was supported in part by NSF DMS-2210827 and CCF-2315725. Robert Tibshirani was supported by the National Institutes of Health (5R01 EB001988-16) and the National Science Foundation (19 DMS1208164).

References

- [AB21] Anastasios N Angelopoulos and Stephen Bates. A gentle introduction to conformal prediction and distribution-free uncertainty quantification. arXiv preprint arXiv:2107.07511, 2021.

- [AC10] Sylvain Arlot and Alain Celisse. A survey of cross-validation procedures for model selection. 2010.

- [All74] David M Allen. The relationship between variable selection and data agumentation and a method for prediction. technometrics, 16(1):125–127, 1974.

- [Avi03] Yossi Aviv. A time-series framework for supply-chain inventory management. Operations Research, 51(2):210–227, 2003.

- [AZ20] Morgane Austern and Wenda Zhou. Asymptotics of cross-validation. arXiv preprint arXiv:2001.11111, 2020.

- [BB11] Christoph Bergmeir and Jose M Benitez. Forecaster performance evaluation with cross-validation and variants. In 2011 11th International Conference on Intelligent Systems Design and Applications, pages 849–854. IEEE, 2011.

- [BB12] Christoph Bergmeir and José M Benítez. On the use of cross-validation for time series predictor evaluation. Information Sciences, 191:192–213, 2012.

- [BBJM20] Pierre Bayle, Alexandre Bayle, Lucas Janson, and Lester Mackey. Cross-validation confidence intervals for test error. Advances in Neural Information Processing Systems, 33:16339–16350, 2020.

- [BCB14] Christoph Bergmeir, Mauro Costantini, and José M Benítez. On the usefulness of cross-validation for directional forecast evaluation. Computational Statistics & Data Analysis, 76:132–143, 2014.

- [BCN94] Prabir Burman, Edmond Chow, and Deborah Nolan. A cross-validatory method for dependent data. Biometrika, 81(2):351–358, 1994.

- [BHK18] Christoph Bergmeir, Rob J Hyndman, and Bonsoo Koo. A note on the validity of cross-validation for evaluating autoregressive time series prediction. Computational Statistics & Data Analysis, 120:70–83, 2018.

- [BHT21] Stephen Bates, Trevor Hastie, and Robert Tibshirani. Cross-validation: what does it estimate and how well does it do it? arXiv preprint arXiv:2104.00673, 2021.

- [Bol86] Tim Bollerslev. Generalized autoregressive conditional heteroskedasticity. Journal of econometrics, 31(3):307–327, 1986.

- [BPvdL20] David Benkeser, Maya Petersen, and Mark J van der Laan. Improved small-sample estimation of nonlinear cross-validated prediction metrics. Journal of the American Statistical Association, 115(532):1917–1932, 2020.

- [BWXB23] Aadyot Bhatnagar, Huan Wang, Caiming Xiong, and Yu Bai. Improved online conformal prediction via strongly adaptive online learning. arXiv preprint arXiv:2302.07869, 2023.

- [Coc97] John H Cochrane. Time series for macroeconomics and finance, 1997.

- [CTM20] Vitor Cerqueira, Luis Torgo, and Igor Mozeti. Evaluating time series forecasting models: An empirical study on performance estimation methods. Machine Learning, 109:1997–2028, 2020.

- [Die98] Thomas G Dietterich. Approximate statistical tests for comparing supervised classification learning algorithms. Neural computation, 10(7):1895–1923, 1998.

- [DZY+17] Chirag Deb, Fan Zhang, Junjing Yang, Siew Eang Lee, and Kwok Wei Shah. A review on time series forecasting techniques for building energy consumption. Renewable and Sustainable Energy Reviews, 74:902–924, 2017.

- [Efr83] Bradley Efron. Estimating the error rate of a prediction rule: improvement on cross-validation. Journal of the American statistical association, 78(382):316–331, 1983.

- [ET97] Bradley Efron and Robert Tibshirani. Improvements on cross-validation: the 632+ bootstrap method. Journal of the American Statistical Association, 92(438):548–560, 1997.

- [FBR22] Shai Feldman, Stephen Bates, and Yaniv Romano. Conformalized online learning: Online calibration without a holdout set. arXiv preprint arXiv:2205.09095, 2022.

- [FH20] Edwin Fong and Chris C Holmes. On the marginal likelihood and cross-validation. Biometrika, 107(2):489–496, 2020.

- [FVD00] Philip Hans Franses and Dick Van Dijk. Non-linear time series models in empirical finance. Cambridge university press, 2000.

- [GC21] Isaac Gibbs and Emmanuel Candès. Adaptive conformal inference under distribution shift. Advances in Neural Information Processing Systems, 34:1660–1672, 2021.

- [GC22] Isaac Gibbs and Emmanuel Candès. Conformal inference for online prediction with arbitrary distribution shifts. arXiv preprint arXiv:2208.08401, 2022.

- [Gei75] Seymour Geisser. The predictive sample reuse method with applications. Journal of the American statistical Association, 70(350):320–328, 1975.

- [Gil05] Kenneth Gilbert. An arima supply chain model. Management Science, 51(2):305–310, 2005.

- [HAB23] Hansika Hewamalage, Klaus Ackermann, and Christoph Bergmeir. Forecast evaluation for data scientists: common pitfalls and best practices. Data Mining and Knowledge Discovery, 37(2):788–832, 2023.

- [Ham20] James Douglas Hamilton. Time series analysis. Princeton university press, 2020.

- [Hay11] Fumio Hayashi. Econometrics. Princeton University Press, 2011.

- [HTFF09] Trevor Hastie, Robert Tibshirani, Jerome H Friedman, and Jerome H Friedman. The elements of statistical learning: data mining, inference, and prediction, volume 2. Springer, 2009.

- [Lei20] Jing Lei. Cross-validation with confidence. Journal of the American Statistical Association, 115(532):1978–1997, 2020.

- [LLC+15] Bo Liu, Jianqiang Li, Cheng Chen, Wei Tan, Qiang Chen, and MengChu Zhou. Efficient motif discovery for large-scale time series in healthcare. IEEE Transactions on Industrial Informatics, 11(3):583–590, 2015.

- [LPvdL15] Erin LeDell, Maya Petersen, and Mark van der Laan. Computationally efficient confidence intervals for cross-validated area under the roc curve estimates. Electronic journal of statistics, 9(1):1583, 2015.

- [LTS22] Zhen Lin, Shubhendu Trivedi, and Jimeng Sun. Conformal prediction intervals with temporal dependence. arXiv preprint arXiv:2205.12940, 2022.

- [MTBH05] Marianthi Markatou, Hong Tian, Shameek Biswas, and George M Hripcsak. Analysis of variance of cross-validation estimators of the generalization error. 2005.

- [MWMC18] Pavol Mulinka, Sarah Wassermann, Gonzalo Marin, and Pedro Casas. Remember the good, forget the bad, do it fast-continuous learning over streaming data. In Continual Learning Workshop at NeurIPS 2018, 2018.

- [NB99] Claude Nadeau and Yoshua Bengio. Inference for the generalization error. Advances in neural information processing systems, 12, 1999.

- [PAA+22] Fotios Petropoulos, Daniele Apiletti, Vassilios Assimakopoulos, Mohamed Zied Babai, Devon K Barrow, Souhaib Ben Taieb, Christoph Bergmeir, Ricardo J Bessa, Jakub Bijak, John E Boylan, et al. Forecasting: theory and practice. International Journal of Forecasting, 2022.

- [Rac00] Jeff Racine. Consistent cross-validatory model-selection for dependent data: hv-block cross-validation. Journal of econometrics, 99(1):39–61, 2000.

- [RT19] Saharon Rosset and Ryan J Tibshirani. From fixed-x to random-x regression: Bias-variance decompositions, covariance penalties, and prediction error estimation. Journal of the American Statistical Association, 2019.

- [SC11] Ingo Steinwart and Andreas Christmann. Estimating conditional quantiles with the help of the pinball loss. 2011.

- [SEJS86] Petre Stoica, Pieter Eykhoff, Peter Janssen, and Torsten Söderström. Model-structure selection by cross-validation. International Journal of Control, 43(6):1841–1878, 1986.

- [Sha93] Jun Shao. Linear model selection by cross-validation. Journal of the American statistical Association, 88(422):486–494, 1993.

- [Sto74] Mervyn Stone. Cross-validatory choice and assessment of statistical predictions. Journal of the royal statistical society: Series B (Methodological), 36(2):111–133, 1974.

- [SV08] Glenn Shafer and Vladimir Vovk. A tutorial on conformal prediction. Journal of Machine Learning Research, 9(3), 2008.

- [SY22] Sophia Sun and Rose Yu. Copula conformal prediction for multi-step time series forecasting. arXiv preprint arXiv:2212.03281, 2022.

- [TT09] Ryan J Tibshirani and Robert Tibshirani. A bias correction for the minimum error rate in cross-validation. 2009.

- [VGS99] Volodya Vovk, Alexander Gammerman, and Craig Saunders. Machine-learning applications of algorithmic randomness. 1999.

- [VS06] Sudhir Varma and Richard Simon. Bias in error estimation when using cross-validation for model selection. BMC bioinformatics, 7(1):1–8, 2006.

- [Wag20] Stefan Wager. Cross-validation, risk estimation, and model selection: Comment on a paper by rosset and tibshirani. Journal of the American Statistical Association, 115(529):157–160, 2020.

- [XX22] Chen Xu and Yao Xie. Sequential predictive conformal inference for time series. arXiv preprint arXiv:2212.03463, 2022.

- [Yan07] Yuhong Yang. Consistency of cross validation for comparing regression procedures. 2007.

- [You20] Waleed A Yousefa. A leisurely look at versions and variants of the cross validation estimator. stat, 1050:9, 2020.

- [You21] Waleed A Yousef. Estimating the standard error of cross-validation-based estimators of classifier performance. Pattern Recognition Letters, 146:115–125, 2021.

- [ZFG+22] Margaux Zaffran, Olivier Féron, Yannig Goude, Julie Josse, and Aymeric Dieuleveut. Adaptive conformal predictions for time series. In International Conference on Machine Learning, pages 25834–25866. PMLR, 2022.

- [Zha93] Ping Zhang. Model selection via multifold cross validation. The annals of statistics, pages 299–313, 1993.

- [Zha95] Ping Zhang. Assessing prediction error in non-parametric regression. Scandinavian journal of statistics, pages 83–94, 1995.

Appendix A Proof of Theorem 3.1

Proof of Theorem 3.1.

Throughout the proof, we take . For general , the proof follows similarly. The proof uses the uniform convergence argument and relies on the following lemma.

Lemma A.1.

Let be random variables that have a joint density with respect to the Lebesgue measure. Define the risk function

Then for any function , we have,

We take and define the empirical risk associated to the -pinball loss as

For and , and for any quantile function , we define

| (23) |

By the definition of , we have is well-defined. By Assumption 3 that is a finite set, we have . By Assumption 1, for any fixed function , we have point convergence of to ,

| (24) |

By Assumption 3 that is a finite set, applying union bound gives uniform convergence

| (25) |

As a consequence, by Assumption 3 that and by the definition of as in Eq. (23), when the good event in Eq. (25) happens, we have

In words, any minimizer of the empirical risk should be contained in the set . By the fact that is a minimizer of the empirical risk , we get that is contained in the set with high probability. That is, for or , we have

Then by Lemma A.1, we have

This finishes the proof of the theorem. ∎

We next give a proof of Lemma A.1.

Proof of Lemma A.1.

Let denote the density of , denote the marginal density of , and denote the conditional density of given .

Step 1. Show that for almost every . For any , we claim that for almost every . Otherwise, suppose that for certain with , we have , by the fact that the minimizer of the expected -pinball loss gives the -quantile, we have

Then we can take with for , and define . Then we have

which contradicts the fact that .

Step 2. Concludes the proof. We have

where the last equality is by Step 1. This proves the lemma. ∎

A.1 General result going beyond finite function class

In this section, we state and prove a more general version of Theorem 3.1, which goes beyond the assumption that is a finite function class. We start by stating the additional required assumptions.

Assumption 4.

is independent of .

For a distribution and for , the -quantile of is the set

We write and . Consider the stationary distribution of and let . We denote to be the marginal distribution of , and to be the conditional distribution of given .

Assumption 5.

For -almost-every , has a density that is uniformly upper-bounded by .

Assumption 6 (Definition 2.6 of [SC11]).

We have for -almost-every . Furthermore, for -almost-every , there exists and such that for all , we have

Moreover, denoting , we have .

Recall that we have

Assumption 7.

Assume that is a compact space, and , .

Given these assumptions, we are ready to state the general asymptotic validity result.

Theorem A.2 (General version of Theorem 3.1).

Proof of Theorem A.2.

For , define

For any , by the fact that is compact, there exists an -cover which is a finite set that satisfies the following: for any , there exists , such that . By Assumption 1, for any fixed function , we have

| (27) |

Applying union bound, we get uniform convergence over the -cover

| (28) |

Notice that we have and for any . Then we have uniform convergence over the whole space

| (29) |

Denote and (choose one if there are multiple of them). Then by standard decomposition, we have

Combining with Eq. (29), we have

Furthermore, by Theorem 2.7 of [SC11] and by Assumption 7, we have

This implies that for any (may be different from the above), we have

Finally, by Assumption 5 that the density of is uniformly bounded by , we have the inequality that for any fixed and . By Assumption 4 that is independent of but has the same distribution , taking and , we have

Notice that . This gives that for any , we have

This concludes the proof of Theorem 3.1. ∎

Appendix B Proofs for Section 4

B.1 Formal statement and proof of Proposition 4.2

Proposition B.1 (Asymptotic normality of FCV(c) (restatement)).

Assume that as defined in (11) is stationary ergodic, and satisfies

-

(1)

-independence: and are independent for any .

-

(2)

The variance is finite: .

-

(3)

Let be a filtration. We have for any .

-

(4)

We have for any .

Then we have convergence in distribution as

| (30) |

Proof of Proposition B.1.

The proof of Proposition B.1 relies on the following two lemmas.

Lemma B.2 (Convergence of sample autocovariance).

Suppose that is stationary ergodic with average . Denote its autocovariance function and sample autocovariance function as

Then for any fixed , as , we have convergence in probability

Lemma B.3 (Gordin’s central limit theorem ([Hay11] Page 402-405) ).

Suppose that is mean-zero stationary ergodic, with autocovariance function . Assume that

-

(1)

The variance is finite: .

-

(2)

Let be a filtration. We have for any .

-

(3)

We have for any .

Then we have , and as , we have convergence in distribution

B.2 Proof of Proposition 4.3

Appendix C Proof of Theorem 5.1

Proof of Theorem 5.1.

Throughout the proof, we assume that . For general , the proof follows similarly. Our proof is similar to Proposition 4.1 in [GC21] and Theorem 1 in [FBR22].

Lemma C.1.

Under the condition of Theorem 5.1. Then for all , we have .

Proof of Lemma C.1.

Assume for the sake of contradiction that there exists such that (the complementary case is similar). Further assume that for all , we have . Since , we get that:

Therefore, . Since for , we get that . As a result: . Which is a contradiction to our assumption. ∎

Appendix D The QFCV point estimator and variations of QFCV intervals

In this section, we first provide QFCV point estimators for the stochastic test error. We then discuss QFCV methods in the “expanding window” setting, which utilizes an incrementally expanding training dataset to fit the forecaster over time.

D.1 QFCV point estimators

We adopt the notations as in Section 3.1. Recall that the tuples and are defined in Eq. (6). Importantly, and are computable from the observed dataset, and our goal is to estimate . To provide a point estimator, we compute

where is a loss function, such as the absolute or square loss. The QFCV point estimator is then given by

Note that is a random variable, so this estimator is not a point estimator in the statistical decision-theoretic sense.

In simulation examples of Section 4.2, we adopt the square loss to produce point estimators. Table 1 shows that QFCV point estimators provide lower mean squared error (MSE) compared to the classical forward cross-validation estimator , especially when the time series is smoother so that past validation errors give stronger signals about future test error.

D.2 Variations of QFCV method

D.2.1 Longer memory span

The construction of validation and test sets is flexible and can be tailored to practitioners’ needs (recall the diagram for QFCV in Figure 3). Our theoretical guarantee for QFCV will hold as long as the covariates and targets are the same across folds. For instance, we can modify the number of covariates in quantile regression by including more past time windows. That is, we can replace step 2 in Algorithm 1 with:

and replace the algorithm output with:

The original QFCV method is the special case with . Increasing the number of past windows may improve efficiency when there is a long-range correlation between past validation errors and future test errors. However, the tradeoff is slower mixing and convergence rates for quantile regression.

D.2.2 Expanding window setup

The QFCV method in Figure 3 uses a “rolling window” approach where a fixed-size training dataset is used to fit the forecaster. Besides this “rolling window” approach, another common setup is the “expanding window” setting, which uses an incrementally expanding training dataset to fit the forecaster over time. The QFCV method can be adapted to the expanding window setting, where an illustrative diagram is shown in Figure 16. Specifically, we consider the following sets:

| (33) | ||||

With these sets and , we can compute tuples and as defined in Equation (6), then perform quantile regression to construct a prediction interval for as detailed in Algorithm 1. Due to varying training size, we do not have stationarity for , even if the original data sequence is stationary and ergodic. However, we may have approximate stationarity if the parameter estimates converge over time, providing some justification for using the QFCV method in the expanding window setting.

In Figure 17, we repeat the same simulation experiments as in Figure 6 but using the expanding window setting. Figure 17 shows that QFCV with different choices of generally achieves about nominal coverage when is not too large, although we have no theoretical guarantee. Notably, in panel (b) with model and , the QFCV method can produce prediction intervals at the nominal coverage level with only half the interval length compared to the true marginal quantiles interval. Besides, note that AQFCV method still has time-average coverage guarantee in the expanding window setup.