U-DuDoNet: Unpaired dual-domain network for CT metal artifact reduction

Abstract

Recently, both supervised and unsupervised deep learning methods have been widely applied to the CT metal artifact reduction (MAR) task. Supervised methods such as the Dual Domain Network (DuDoNet) work well on simulation data; however, their performance on clinical data is limited by the domain gap. Unsupervised methods generalize better, but do not eliminate artifacts completely because they process the image domain only. To combine the advantages of both kinds of MAR methods, we propose an unpaired dual-domain network (U-DuDoNet) trained using unpaired data. Unlike the artifact disentanglement network (ADN), which utilizes multiple encoders and decoders to disentangle content from artifact, our U-DuDoNet directly models the artifact generation process through additions in both the sinogram and image domains, which is theoretically justified by an additive property associated with metal artifacts. Our design includes a self-learned sinogram prior net, which provides guidance for restoring the information in the sinogram domain, and cyclic constraints for artifact reduction and addition on unpaired data. Extensive experiments on simulation data and clinical images demonstrate that our novel framework outperforms the state-of-the-art unpaired approaches.

Keywords: Metal Artifact Reduction · Dual-domain Learning · Unpaired Learning

1 Introduction

Computed tomography (CT) reveals the underlying anatomical structure within the human body. However, when a metallic object is present, metal artifacts appear in the image because of beam hardening, scatters, photon starvation, etc. [2, 3, 19], degrading the image quality and limiting its diagnostic value.

With the success of deep learning in medical image processing [27, 28], deep learning has been used for metal artifact reduction (MAR). Single-domain networks [5, 21, 25] have been proposed to address MAR with success. Lin et al. were the first to introduce a dual-domain network (DuDoNet) that reduces metal artifacts in the sinogram and image domains jointly, and DuDoNet shows further advantages over single-domain networks and traditional approaches [4, 10, 12, 13, 19]. Following this work, variants of the dual-domain architecture [18, 22, 24] have been designed. However, all the above-mentioned networks are supervised and rely on paired clean and metal-affected images. Since such clinical data are hard to acquire, simulation data are widely used in practice. As a result, supervised models may over-fit to simulation data and may not generalize well to real clinical data.

Learning from unpaired, real data is thus of interest. To this end, Liao et al. propose ADN [14, 16], which separates content and artifact in the latent spaces with multiple encoders and decoders and induces unsupervised learning via various forms of image generation and specialized loss functions. An artifact consistency loss is introduced to retain anatomical precision during MAR. The loss is based on the assumption that metal artifacts are additive. Later on, Zhao et al. [26] design a simple reused convolutional network (RCN) of encoders and decoders to recurrently generate both artifact and non-artifact images. RCN also adopts the additive metal artifact assumption. However, neither work proves the property theoretically. Moreover, without processing in the sinogram domain, both methods have limited effect on removing strong metal artifacts, such as the dark and bright bands around metal objects.

In this work, we analytically derive the additive property associated with metal artifacts and propose an unpaired dual-domain MAR network (U-DuDoNet). Without using complicated encoders and decoders, our network directly estimates the additive component of metal artifacts, jointly using two U-Nets on two domains: a sinogram-based estimation net (S-Net) and an image-based estimation net (I-Net). S-Net first restores the sinogram data and I-Net then removes additional streaky artifacts. Unpaired learning is achieved with cyclic artifact reduction and synthesis processes. Strong metal artifacts can be reduced in the sinogram domain with prior knowledge. Specifically, sinogram enhancement is guided by a self-learned sinogram completion network (P-Net) trained with clean images. Results on both simulation and clinical data show that our method outperforms competing unsupervised approaches and generalizes better than supervised approaches.

2 Additive Property for Metal Artifacts

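Here, we prove that metal artifacts are inherently additive under mild assumptions. The CT image intensity represents the attenuation coefficient. Let $X^{c}(E)$ be a normal attenuation coefficient image at energy level $E$. In a polychromatic x-ray system, the ideal projection data (sinogram) $S^{c}$ can be expressed as,

$$S^{c} = -\ln \int \eta(E)\, e^{-\mathcal{P}(X^{c}(E))}\, dE, \tag{1}$$

where $\mathcal{P}$ and $\eta(E)$ denote the forward projection (FP) operator and the fractional energy at $E$. Compared with metal, the attenuation coefficient of normal body tissue is almost constant with respect to $E$, thus we have $X^{c} = X^{c}(E)$ and $S^{c} = \mathcal{P}(X^{c})$. Without metal, the filtered back projection (FBP) operator $\mathcal{P}^{*}$ provides a clean CT image $I^{c}$ as a good estimation of $X^{c}$, $I^{c} = \mathcal{P}^{*}(S^{c}) = \mathcal{P}^{*}(\mathcal{P}(X^{c}))$.

Metal artifacts appear mainly because of beam hardening. An attenuation coefficient image with metal $X^{a}(E)$ can be split into a relatively constant image without metal $X^{ac}$ and a metal-only image $X^{m}(E)$ that varies rapidly with $E$, $X^{a}(E) = X^{ac} + X^{m}(E)$. Often $X^{m}(E)$ is locally constrained. Because $\mathcal{P}$ is linear and $X^{ac}$ does not depend on $E$, the factor $e^{-\mathcal{P}(X^{ac})}$ can be taken out of the integral, and the contaminated sinogram $S^{a}$ can be given as,

$$S^{a} = -\ln \int \eta(E)\, e^{-\mathcal{P}(X^{a}(E))}\, dE = \mathcal{P}(X^{ac}) - \ln \int \eta(E)\, e^{-\mathcal{P}(X^{m}(E))}\, dE. \tag{2}$$

And the reconstructed metal-affected CT image $I^{a}$ is,

$$I^{a} = \mathcal{P}^{*}(S^{a}) = \mathcal{P}^{*}(\mathcal{P}(X^{ac})) - \mathcal{P}^{*}\!\left(\ln \int \eta(E)\, e^{-\mathcal{P}(X^{m}(E))}\, dE\right) = I^{ac} + F(X^{m}(E)). \tag{3}$$

Here, $\mathcal{P}^{*}(\mathcal{P}(X^{ac}))$ is the MAR image $I^{ac}$ and the second term $F(X^{m}(E))$ introduces streaky and band artifacts, which is a function of $X^{m}(E)$. Since metal artifacts are caused only by $X^{m}(E)$, we can create a plausible artifact-affected CT image $I^{ca}$ by adding the artifact term to an arbitrary, clean CT image: $I^{ca} = I^{c} + F(X^{m}(E))$.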
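The sinogram-domain step of this argument can be checked numerically. The sketch below is illustrative only: it replaces the energy integral with a three-bin sum, uses a hypothetical 2-ray, 3-pixel system matrix as the forward projector, and all values are made up. It compares the polychromatic sinogram of tissue plus metal with the sum of the tissue projection and a metal-only term.

```python
import math

def forward_project(image, system_matrix):
    """Toy linear forward projection: one weighted sum (line integral) per ray."""
    return [sum(w * v for w, v in zip(row, image)) for row in system_matrix]

def polychromatic_sinogram(images_per_energy, spectrum, system_matrix):
    """Discrete form of -ln( sum_E eta(E) * exp(-P(X(E))) )."""
    projections = [forward_project(img, system_matrix) for img in images_per_energy]
    return [
        -math.log(sum(eta * math.exp(-proj[ray]) for eta, proj in zip(spectrum, projections)))
        for ray in range(len(system_matrix))
    ]

# Hypothetical toy data: 3 pixels, 2 rays, 3 energy bins.
system_matrix = [[1.0, 0.5, 0.0], [0.0, 0.5, 1.0]]
spectrum = [0.2, 0.5, 0.3]      # fractional energies
tissue = [0.20, 0.25, 0.22]     # assumed constant over energy
metal_per_energy = [            # metal-only image, strongly energy dependent
    [0.0, 3.0, 0.0],
    [0.0, 1.5, 0.0],
    [0.0, 0.8, 0.0],
]

# Left-hand side: sinogram of the full metal-affected object.
full = [[t + m for t, m in zip(tissue, metal)] for metal in metal_per_energy]
contaminated = polychromatic_sinogram(full, spectrum, system_matrix)

# Right-hand side: tissue projection plus the metal-only term.
tissue_part = forward_project(tissue, system_matrix)
metal_part = polychromatic_sinogram(metal_per_energy, spectrum, system_matrix)
additive = [t + m for t, m in zip(tissue_part, metal_part)]

max_gap = max(abs(a - b) for a, b in zip(contaminated, additive))
print(f"largest difference between both sides: {max_gap:.2e}")
```

The two sides agree up to floating-point error because the projector is linear and the tissue term does not depend on energy. Applying a linear reconstruction operator to both sides then carries the additivity over to the image domain.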

3 Methodology

Fig. 1b shows the proposed cyclical MAR framework. In Phase I, our framework first estimates artifact components and through U-DuDoNet from , see Fig. 1a. In Phase II, based on the additive metal artifact property (Section 2), plausible clean image and metal-affected image could be generated, , . Then, the artifact components should be removable from by U-DuDoNet, resulting in and . In the end, reconstructed images , can be obtained through subtracting or adding the artifact components, , .
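The two phases can be summarized in a few lines of code. This is a minimal sketch, not the authors' implementation: `estimate_artifacts` is a hypothetical stand-in for U-DuDoNet that returns a sinogram-domain and an image-domain artifact component, images are flat lists, and the toy estimator at the bottom simply returns fixed streaks.

```python
def l1(a, b):
    """Mean absolute difference between two equally sized images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def cyclic_pass(metal_image, clean_image, estimate_artifacts):
    """Run Phase I and Phase II on one unpaired (metal, clean) pair.

    `estimate_artifacts(image)` is a hypothetical stand-in for the network and
    must return (sinogram_component, image_component) as flat lists.
    """
    # Phase I: estimate both artifact components from the metal-affected image,
    # then subtract them from it and add them to the unpaired clean image.
    a_sino, a_img = estimate_artifacts(metal_image)
    artifact = [s + i for s, i in zip(a_sino, a_img)]
    synthetic_clean = [x - a for x, a in zip(metal_image, artifact)]
    synthetic_metal = [x + a for x, a in zip(clean_image, artifact)]

    # Phase II: estimate the components again from the synthetic metal image
    # and undo the first step to reconstruct both inputs.
    a_sino2, a_img2 = estimate_artifacts(synthetic_metal)
    artifact2 = [s + i for s, i in zip(a_sino2, a_img2)]
    rec_metal = [x + a for x, a in zip(synthetic_clean, artifact2)]
    rec_clean = [x - a for x, a in zip(synthetic_metal, artifact2)]

    return {
        "synthetic_clean": synthetic_clean,
        "synthetic_metal": synthetic_metal,
        "cycle": l1(rec_metal, metal_image) + l1(rec_clean, clean_image),
        "artifact_consistency": l1(a_sino, a_sino2) + l1(a_img, a_img2),
    }

def constant_streak_estimator(image):
    """Toy estimator: always reports the same two artifact components."""
    return [0.2, 0.0, 0.2, 0.0], [0.0, 0.1, 0.0, 0.1]

result = cyclic_pass(
    metal_image=[0.7, 0.4, 0.9, 0.5],
    clean_image=[0.3, 0.3, 0.6, 0.2],
    estimate_artifacts=constant_streak_estimator,
)
print(result["cycle"], result["artifact_consistency"])
```

With an estimator that returns the same components in both phases, the reconstructions match the inputs and both quantities vanish up to rounding. A trained network is pushed toward this behavior by penalizing the two distances.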

3.1 Network Architecture

Artifact component estimation in sinogram domain. Strong metal artifacts like dark and bright bands cannot be suppressed completely by image domain processing, while metal artifacts are inherently local in the sinogram domain. Thus, we aim to reduce metal shadows by sinogram enhancement.

First, we acquire metal corrupted sinograms ( and ) by forward projecting and : . Then, we use a pre-trained prior net (P-Net) to guide the sinogram restoration process. P-Net is an inpainting net that treats the metal-affected area in sinogram as missing and aims to complete it, i.e., . Here denotes a binary metal trace, , where is a metal mask and is a binary indicator function. We adopt a mask pyramid U-Net as from [15, 17]. To train , we artificially inject masks into clean sinograms.
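The metal trace and the mask injection used to train the inpainting net can be illustrated as follows. This is a sketch under simplifying assumptions: `toy_forward_project` is a hypothetical two-view projector (row sums and column sums) standing in for a real FP operator, and the function names are chosen for this example only.

```python
def toy_forward_project(image):
    """Hypothetical two-view projector: row sums (view 0), then column sums (view 1)."""
    row_sums = [sum(row) for row in image]
    col_sums = [sum(col) for col in zip(*image)]
    return [row_sums, col_sums]

def metal_trace(metal_mask):
    """Binary trace: 1 wherever a ray passes through at least one metal pixel."""
    return [[1 if v > 0 else 0 for v in view] for view in toy_forward_project(metal_mask)]

def inject_mask(clean_sinogram, trace):
    """Zero out the trace region so an inpainting net can learn to complete it."""
    return [
        [s * (1 - t) for s, t in zip(sino_view, trace_view)]
        for sino_view, trace_view in zip(clean_sinogram, trace)
    ]

clean_image = [[1.0, 2.0, 1.0], [2.0, 3.0, 2.0], [1.0, 2.0, 1.0]]
metal_mask = [[0, 0, 0], [0, 0, 1], [0, 0, 0]]

clean_sinogram = toy_forward_project(clean_image)   # training target
trace = metal_trace(metal_mask)
masked_input = inject_mask(clean_sinogram, trace)   # network input, with the trace

print(trace)
print(masked_input)
```

Because the clean sinogram is known, each (masked input, trace) pair comes with an exact target, so the prior net can be trained on clean images alone.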

Then, we use a sinogram network (S-Net) to predict enhanced sinogram , from , , respectively.

| (4) |

where represents a U-Net [20] of depth 2. Residual learning [8] is applied to ease the training process, and sinogram prediction is limited to the region. To prevent information loss from discrete operators, we obtain the sinogram artifact component as the difference image between the reconstructed input image and the reconstructed enhanced sinogram,

| (5) |
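The two ideas in this step, a residual update restricted to the metal trace and an artifact component computed as a difference of two reconstructions, can be sketched as below. The operators `toy_fp` and `toy_fbp` are hypothetical stand-ins (a scaling and a lossy, rounded inverse), chosen only to mimic discretization loss; they are not real FP/FBP operators.

```python
def enhance_sinogram(sinogram, predicted_residual, trace):
    """Residual update restricted to the metal trace; other bins stay untouched."""
    return [s + r * t for s, r, t in zip(sinogram, predicted_residual, trace)]

def sinogram_artifact_component(image, enhanced_sinogram, fp, fbp):
    """Difference between the re-reconstructed input and the reconstructed
    enhanced sinogram. Passing both terms through the same discrete operators
    keeps their discretization error out of the artifact estimate.
    """
    reference = fbp(fp(image))
    enhanced = fbp(enhanced_sinogram)
    return [r - e for r, e in zip(reference, enhanced)]

# Hypothetical lossy operator pair: reconstruction rounds to one decimal.
def toy_fp(image):
    return [2.0 * v for v in image]

def toy_fbp(sinogram):
    return [round(v / 2.0, 1) for v in sinogram]

image = [0.52, 0.91, 0.33]
trace = [0, 1, 0]                      # only the middle bin is metal-affected
residual = [-0.5, -0.4, 0.3]           # pretend network output

enhanced = enhance_sinogram(toy_fp(image), residual, trace)
a_sino = sinogram_artifact_component(image, enhanced, toy_fp, toy_fbp)

# Naive alternative: subtract the reconstruction from the input image directly.
naive = [x - e for x, e in zip(image, toy_fbp(enhanced))]

print(a_sino)
print(naive)
```

In this toy setting the difference of reconstructions is exactly zero outside the trace, while the naive subtraction leaks the rounding error of the operators into pixels that were never modified.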

Artifact component estimation in image domain. As sinogram data inconsistency leads to secondary artifacts in the whole image, we further use an image domain network (I-Net) to reduce newly introduced and other streaky artifacts. Let denote I-Net, which is a 5-depth U-Net. First, sinogram enhanced images are obtained by subtracting sinogram artifact component from corrupted images, . Then, I-Net takes a sinogram enhanced image, and outputs an artifact component in image domain ( or ),

| (6) |

3.2 Dual-domain Cyclic Learning

To obviate the need for paired data, we use a cycle loss and an artifact consistency loss as cyclic MAR constraints and adopt an adversarial loss. In addition, we take advantage of prior knowledge to guide the data restoration in Phase I and apply a dual-domain loss to encourage data fidelity in Phase II.

Cycle loss. By cyclic artifact reduction and synthesis, the original and reconstructed images should be identical. We use loss to minimize the distance,

| (7) |

Artifact consistency loss. To ensure that the artifact components added to can be removed completely when applying the same network on , the artifact components estimated from and should be the same,

| (8) |

Adversarial loss. The synthetic images, and , should be indistinguishable from the input images. Since paired ground truth is not available, we adopt PatchGAN [9] as discriminators and to apply adversarial learning. Since metal-affected images always contain streaks, we add the gradient image generated by the Sobel operator as an additional input channel of and to achieve better performance. The loss is written as,

| (9) |
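The extra gradient channel fed to the discriminators can be sketched in plain Python. The code below is an illustration under assumptions: it uses the standard 3x3 Sobel kernels with zero padding and stacks intensity and gradient magnitude as two channels; whether the original work uses the magnitude or separate x/y responses is not stated here, and `discriminator_input` is a name chosen for this example.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def sobel_magnitude(image):
    """Gradient magnitude with zero padding; output has the same shape as the input."""
    height, width = len(image), len(image[0])

    def pixel(r, c):
        return image[r][c] if 0 <= r < height and 0 <= c < width else 0.0

    out = []
    for r in range(height):
        row = []
        for c in range(width):
            gx = sum(SOBEL_X[i][j] * pixel(r + i - 1, c + j - 1)
                     for i in range(3) for j in range(3))
            gy = sum(SOBEL_Y[i][j] * pixel(r + i - 1, c + j - 1)
                     for i in range(3) for j in range(3))
            row.append(math.hypot(gx, gy))
        out.append(row)
    return out

def discriminator_input(image):
    """Stack intensity and gradient as two channels: shape (2, H, W)."""
    return [image, sobel_magnitude(image)]

# A vertical edge between the second and third columns.
edge_image = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
channels = discriminator_input(edge_image)
print(channels[1][1])
```

Interior pixels next to the edge receive a large response while flat interior regions stay at zero, which is the kind of cue that makes streaks easier for a discriminator to pick up. Border pixels also respond here because of the zero padding.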

Fidelity loss. To learn artifact reduction from the generated , we minimize the distances between and , and ,

| (10) |

Prior loss. Inspired by DuDoNet [17], the sinogram inpainting network provides a smoothed estimation of the sinogram data within . Thus, we use a Gaussian blur operation with a scale of and an loss to minimize the distance between the blurred prior and the blurred enhanced sinogram. Meanwhile, inspired by [11], the blurred sinogram enhanced image also serves as a good estimation of the blurred MAR image. We therefore also minimize the distance between low-pass versions of the sinogram enhanced and MAR images with a Gaussian blur operation to stabilize the unsupervised training. The prior loss is formulated as,

| (11) |
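The idea of comparing low-pass versions rather than raw signals can be illustrated with a one-dimensional toy example. This is a sketch, not the loss used in the paper: it blurs 1D lists with a truncated Gaussian and edge replication, ignores the restriction to the metal trace, and the blur scale of 1 is just an example value.

```python
import math

def gaussian_kernel(sigma):
    """Normalized 1D Gaussian weights truncated at about three sigma."""
    radius = max(1, int(round(3 * sigma)))
    weights = [math.exp(-(k * k) / (2.0 * sigma * sigma)) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def gaussian_blur(signal, sigma):
    """1D Gaussian blur; samples beyond the ends repeat the edge value."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    last = len(signal) - 1
    return [
        sum(kernel[k + radius] * signal[min(max(i + k, 0), last)]
            for k in range(-radius, radius + 1))
        for i in range(len(signal))
    ]

def l1_distance(a, b):
    """Mean absolute difference."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def blurred_l1(prediction, prior, sigma):
    """L1 distance between the low-pass versions of two signals."""
    return l1_distance(gaussian_blur(prediction, sigma), gaussian_blur(prior, sigma))

smooth_prior = [1.0, 1.0, 1.0, 1.0, 1.0, 1.0]        # inpainted, overly smooth
detailed_prediction = [1.2, 0.8, 1.2, 0.8, 1.2, 0.8]  # same level, fine detail

raw_gap = l1_distance(detailed_prediction, smooth_prior)
blurred_gap = blurred_l1(detailed_prediction, smooth_prior, sigma=1.0)
print(raw_gap, blurred_gap)
```

The blurred distance is much smaller than the raw one, so a prediction is penalized for drifting away from the prior's overall level but not for carrying high-frequency detail the smooth prior lacks.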

The overall objective function is the weighted sum of all the above losses. We empirically set the weight of to 1, the weights of , to 10, and the weights of the other losses to 100. We set to 1 and to 3 in .

4 Experiment

4.1 Experimental Setup

Datasets. Following [14], we evaluate our model on both simulation and clinical data. For simulation data, we generate images with metal artifacts using the method in [25]. From DeepLesion [23], we randomly choose 3,984 clean images combined with 90 metal masks for training and an additional 200 clean images combined with 10 metal masks for testing. For unsupervised training, we split the 3,984 images into two groups and randomly select one metal-corrupted image and one clean image. For clinical data, we select 6,146 images with artifacts and 21,002 clean images for training from SpineWeb [6, 7]. An additional 124 images with metal are used for testing.

Implementation and metrics. We implement our model with the PyTorch framework and differentiable FP and FBP operators with the ODL library [1]. We train the model for 50 epochs using an Adam optimizer with a learning rate of and a batch size of 2. For clinical data, we train another model using unpaired images for 20 epochs, and the metal mask is segmented with a threshold of 2,500 HU. We use peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM) to evaluate the corrected image.
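For reference, PSNR and threshold-based metal segmentation can be written in a few lines. This is a minimal sketch with assumptions: images are flat lists, the PSNR data range is passed explicitly because the intensity normalization used for the reported numbers is not stated here, and pixels strictly above the threshold are treated as metal.

```python
import math

def psnr(reference, estimate, data_range):
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range^2 / MSE)."""
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(data_range ** 2 / mse)

def segment_metal(image_hu, threshold_hu=2500):
    """Binary metal mask from CT numbers; strict '>' is an assumption."""
    return [1 if v > threshold_hu else 0 for v in image_hu]

reference = [0.0, 0.5, 1.0, 0.5]
estimate = [0.1, 0.5, 0.9, 0.5]
score = psnr(reference, estimate, data_range=1.0)
print(f"PSNR: {score:.2f} dB")

mask = segment_metal([-1000, 40, 1200, 3071])
print(mask)
```

In this example the mean squared error is 0.005, which gives about 23.01 dB for a data range of 1. Only the 3071 HU pixel exceeds the 2,500 HU threshold.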

Baselines. We compare U-DuDoNet with multiple state-of-the-art (SOTA) MAR methods. DuDoNet [17], DuDoNet++ [18], DSCIP [24] and DAN-Net [22] are supervised methods which are trained with simulation data and tested on both simulation and clinical data. DuDoNet, DuDoNet++ and DAN-Net share the same SE-IE architecture with an image enhancement (IE) following sinogram enhancement (SE) network, while DSCIP adopts an IE-SE architecture that predicts a prior image first then outputs the sinogram enhanced image. RCN [26] and ADN [14] are unsupervised and can be trained and tested on each dataset.

Table 1. PSNR (dB)/SSIM and running time of MAR methods on simulated data.

| Category | Method | Sinogram domain CT, PSNR (dB)/SSIM | Image domain CT, PSNR (dB)/SSIM | Running time (ms) |
| --- | --- | --- | --- | --- |
| Metal | n/a | n/a | 27.23/0.692 | n/a |
| Supervised | DuDoNet [17] | 32.20/0.755 | 36.95/0.927 | 60.38 |
| Supervised | DuDoNet++ [18] | 32.20/0.751 | 37.65/0.953 | 39.77 |
| Supervised | DSCIP [24] | 29.22/0.624 | 30.06/0.790 | 62.01 |
| Supervised | DAN-Net [22] | 32.48/0.752 | 39.73/0.944 | 63.75 |
| Unsupervised | RCN [26] | n/a | 32.98/0.918 | 38.18 |
| Unsupervised | ADN [14] | n/a | 33.81/0.926 | 37.66 |
| Unsupervised | U-DuDoNet (ours) | 30.47/0.722 | 34.54/0.934 | 63.59 |

Fig. 2. Visual comparison on simulated data. Panels: Corrupted CT, DuDoNet, DuDoNet++, DSCIP, DAN-Net, Groundtruth, RCN, ADN, Ours.

4.2 Comparison on Simulated and Real Data

Simulated Data. From Table 1, we observe that DAN-Net achieves the highest PSNR and DuDoNet++ achieves the highest SSIM. All methods with the SE-IE architecture outperform DSCIP. The reason is that the image enhancement network helps recover details and bridges the gap between real images and reconstructed images. Among the unsupervised methods, our model attains the best performance, with an improvement of 0.73 dB in PSNR over ADN. Our model runs as fast as the supervised dual-domain models but slower than the image-domain unsupervised models. Fig. 2 shows the visual comparison for one case. The zoomed subfigure shows that metallic implants induce dark bands in the region between two implants or along the direction of dense metal pixels. Learning from the linearly interpolated (LI) sinogram, DuDoNet removes the dark bands and streaky artifacts completely but smooths out the details around the metal. DuDoNet++ and DSCIP cannot remove the dark bands completely as they learn from the corrupted images. The DAN-Net output contains fewer streaks than those of DuDoNet++ and DSCIP since it recovers from a blended sinogram of LI and metal-affected data. Among the unsupervised methods, only our model recovers the bony structure in the dark bands, and its output contains the fewest streaks.

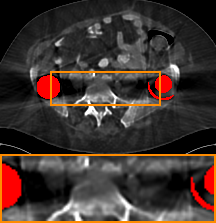

Real Data. Fig. 3 shows a clinical CT image with two rods, one on each side of the spinous process of a vertebra. The implants induce severe artifacts, which make part of the bone invisible. DuDoNet recovers the bone but introduces strong secondary artifacts. DuDoNet++, DSCIP, and DAN-Net do not generalize to clinical data, as the dark band remains in the MAR images. In addition, all the supervised methods output smoothed images, possibly because the training images from DeepLesion were reconstructed with a soft tissue kernel. The MAR images of the unsupervised methods retain the sharpness of the original image. However, RCN and ADN neither reduce the artifacts completely nor retain the integrity of the bone structures near the metal, as these structures might be confused with artifacts. Our model removes the dark band while retaining the structures around the rods. More visual comparisons are in the supplemental material.

Fig. 3. Visual comparison on clinical data. Panels: Corrupted CT, DuDoNet, DuDoNet++, DSCIP, DAN-Net, RCN, ADN, Ours.

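Table 2. PSNR (dB)/SSIM of the ablation models.

| PSNR (dB)/SSIM | M1 (Image domain) | M2 (Dual-domain) | M3 (Dual-domain prior) |
| --- | --- | --- | --- |
| Sinogram domain CT | n/a | 29.99/0.730 | 30.47/0.730 |
| Image domain CT | 32.97/0.927 | 33.30/0.930 | 34.54/0.934 |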

Fig. 4. Visual comparison of the ablation models. Panels: Corrupted CT, Groundtruth, M1, M2, M3 (full).

4.3 Ablation Study

We evaluate the effectiveness of different components in our full model. Table 2 shows the configuration of our ablation models. Briefly, M1 refers to the model with I-Net and , , M2 refers to M1 plus S-Net, , , and M3 refers to M2 plus P-Net, . As shown in Table 2 and Fig. 4, M1 is capable of MAR in the image domain, but strong artifacts like dark bands and streaks remain in the output image. Dual-domain learning increases the PSNR by 0.33 dB, and the dark bands are partially removed in the corrected image, but streaks show up because the sinogram enhancement might not be perfect. With the aid of prior knowledge, M3 removes the dark bands completely and further suppresses the secondary artifacts.

5 Conclusion

In this paper, we present an unpaired dual-domain network (U-DuDoNet) that exploits the additive property of artifact modeling for metal artifact reduction. In particular, we first remove the strong metal artifacts in the sinogram domain and then suppress the streaks in the image domain. Unsupervised learning is achieved via cyclic additive artifact modeling, i.e., we try to remove the same artifact after inducing it in an unpaired clean image. We also apply prior knowledge to guide data restoration. Quantitative evaluations and visual comparisons demonstrate that our model yields better MAR performance than competing unsupervised methods. Moreover, our model shows great potential when applied to clinical images.

References

- [1] Adler, J., Kohr, H., Oktem, O.: Operator discretization library (ODL). Software available from https://github.com/odlgroup/odl (2017)

- [2] Barrett, J.F., Keat, N.: Artifacts in CT: recognition and avoidance. Radiographics 24(6), 1679–1691 (2004)

- [3] Boas, F.E., Fleischmann, D.: CT artifacts: causes and reduction techniques. Imaging in Medicine 4(2), 229–240 (2012)

- [4] Chang, Z., Ye, D.H., Srivastava, S., Thibault, J.B., Sauer, K., Bouman, C.: Prior-guided metal artifact reduction for iterative x-ray computed tomography. IEEE Transactions on Medical Imaging 38(6), 1532–1542 (2018)

- [5] Ghani, M.U., Karl, W.C.: Fast enhanced CT metal artifact reduction using data domain deep learning. IEEE Transactions on Computational Imaging (2019)

- [6] Glocker, B., Feulner, J., Criminisi, A., Haynor, D.R., Konukoglu, E.: Automatic localization and identification of vertebrae in arbitrary field-of-view CT scans. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. pp. 590–598. Springer (2012)

- [7] Glocker, B., Zikic, D., Konukoglu, E., Haynor, D.R., Criminisi, A.: Vertebrae localization in pathological spine CT via dense classification from sparse annotations. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. pp. 262–270. Springer (2013)

- [8] He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 770–778 (2016)

- [9] Isola, P., Zhu, J.Y., Zhou, T., Efros, A.A.: Image-to-image translation with conditional adversarial networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 1125–1134 (2017)

- [10] Jin, P., Bouman, C.A., Sauer, K.D.: A model-based image reconstruction algorithm with simultaneous beam hardening correction for x-ray CT. IEEE Transactions on Computational Imaging 1(3), 200–216 (2015)

- [11] Jin, X., Chen, Z., Lin, J., Chen, Z., Zhou, W.: Unsupervised single image deraining with self-supervised constraints. In: 2019 IEEE International Conference on Image Processing (ICIP). pp. 2761–2765. IEEE (2019)

- [12] Kalender, W.A., Hebel, R., Ebersberger, J.: Reduction of CT artifacts caused by metallic implants. Radiology 164(2), 576–577 (1987)

- [13] Karimi, S., Martz, H., Cosman, P.: Metal artifact reduction for CT-based luggage screening. Journal of X-Ray Science and Technology 23(4), 435–451 (2015)

- [14] Liao, H., Lin, W., Zhou, S.K., Luo, J.: ADN: Artifact disentanglement network for unsupervised metal artifact reduction. IEEE Transactions on Medical Imaging (2019). https://doi.org/10.1109/TMI.2019.2933425

- [15] Liao, H., Lin, W.A., Huo, Z., Vogelsang, L., Sehnert, W.J., Zhou, S.K., Luo, J.: Generative mask pyramid network for CT/CBCT metal artifact reduction with joint projection-sinogram correction. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. pp. 77–85. Springer (2019)

- [16] Liao, H., Lin, W.A., Yuan, J., Zhou, S.K.Z., Luo, J.: Artifact disentanglement network for unsupervised metal artifact reduction. In: International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI) (2019)

- [17] Lin, W.A., Liao, H., Peng, C., Sun, X., Zhang, J., Luo, J., Chellappa, R., Zhou, S.K.: DuDoNet: Dual domain network for CT metal artifact reduction. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 10512–10521 (2019)

- [18] Lyu, Y., Lin, W.A., Liao, H., Lu, J., Zhou, S.K.: Encoding metal mask projection for metal artifact reduction in computed tomography. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. pp. 147–157. Springer (2020)

- [19] Meyer, E., Raupach, R., Lell, M., Schmidt, B., Kachelrieß, M.: Normalized metal artifact reduction (NMAR) in computed tomography. Medical Physics 37(10), 5482–5493 (2010)

- [20] Ronneberger, O., Fischer, P., Brox, T.: U-Net: Convolutional networks for biomedical image segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. pp. 234–241. Springer (2015)

- [21] Wang, J., Zhao, Y., Noble, J.H., Dawant, B.M.: Conditional generative adversarial networks for metal artifact reduction in CT images of the ear. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. pp. 3–11. Springer (2018)

- [22] Wang, T., Xia, W., Huang, Y., Sun, H., Liu, Y., Chen, H., Zhou, J., Zhang, Y.: DAN-Net: Dual-domain adaptive-scaling non-local network for CT metal artifact reduction. arXiv preprint arXiv:2102.08003 (2021)

- [23] Yan, K., Wang, X., Lu, L., Zhang, L., Harrison, A.P., Bagheri, M., Summers, R.M.: Deep lesion graphs in the wild: relationship learning and organization of significant radiology image findings in a diverse large-scale lesion database. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 9261–9270 (2018)

- [24] Yu, L., Zhang, Z., Li, X., Xing, L.: Deep sinogram completion with image prior for metal artifact reduction in CT images. IEEE Transactions on Medical Imaging 40(1), 228–238 (2020)

- [25] Zhang, Y., Yu, H.: Convolutional neural network based metal artifact reduction in x-ray computed tomography. IEEE Transactions on Medical Imaging 37(6), 1370–1381 (June 2018). https://doi.org/10.1109/TMI.2018.2823083

- [26] Zhao, B., Li, J., Ren, Q., Zhong, Y.: Unsupervised reused convolutional network for metal artifact reduction. In: International Conference on Neural Information Processing. pp. 589–596. Springer (2020)

- [27] Zhou, S.K., Greenspan, H., Davatzikos, C., Duncan, J.S., van Ginneken, B., Madabhushi, A., Prince, J.L., Rueckert, D., Summers, R.M.: A review of deep learning in medical imaging: Imaging traits, technology trends, case studies with progress highlights, and future promises. Proceedings of the IEEE (2021)

- [28] Zhou, S.K., Rueckert, D., Fichtinger, G.: Handbook of medical image computing and computer assisted intervention. Academic Press (2019)