Two-stage Robust Optimization Approach for Enhanced Community Resilience Under Tornado Hazards

Abstract

Catastrophic tornadoes cause severe damage and are a threat to human wellbeing, making it critical to determine mitigation strategies to reduce their impact. One such strategy, following recent research, is to retrofit existing structures. To this end, in this article we propose a model that considers a decision-maker (a government agency or a public-private consortium) who seeks to allocate resources to retrofit and recover wood-frame residential structures, to minimize the population dislocation due to an uncertain tornado. In the first stage the decision-maker selects the retrofitting strategies, and in the second stage the recovery decisions are made after observing the tornado. As tornado paths cannot be forecast reliably, we take a worst-case approach to uncertainty where paths are modeled as arbitrary line segments on the plane. Under the assumption that an area is affected if it is sufficiently close to the tornado path, the problem is framed as a two-stage robust optimization problem with a mixed-integer non-linear uncertainty set. We solve this problem by using a decomposition column-and-constraint generation algorithm that solves a two-level integer problem at each iteration. This problem, in turn, is solved by a decomposition branch-and-cut method that exploits the geometry of the uncertainty set. To illustrate the model’s applicability, we present a case study based on Joplin, Missouri. Our results show that there can be up to 20% reductions in worst-case population dislocation by investing $15 million in retrofitting and recovery; that our approach outperforms other retrofitting policies, and that the model is not over-conservative.

Keywords Robust Optimization Column and constraint generation Tornado Community Resilience

1 Introduction

Tornadoes are vertical columns of rotating air that are spawned from supercell thunderstorms caused by rotating updrafts that form because of the shear in the environmental wind field [NOAA, 2022d]. Catastrophic tornadoes are common natural disasters that happen in many populated regions around the world and are particularly concerning in dense high-risk urban areas because of their damage potential. On average, more than 1200 tornadoes happen in the US annually which cause around 60 fatalities [NOAA, 2022a]. Tornadoes have caused damage costs that range between $183 million to $9.493 billion per year in the US within the past two decades [NOAA, 2022e]. The intensity of tornadoes is measured by the Enhanced Fujita (EF) scale, which ranks a tornado from 0 (weakest) through 5 (most violent) based on an estimation of their average wind speed. The average wind speed, in turn, is estimated by comparing the observed tornado damages with a list of Damage Indicators and Degrees of Damage [NOAA, 2022f].

Fatalities and injuries from tornadoes have decreased in the past few decades thanks to warning systems based on weather forecasts [Standohar-Alfano et al., 2017, Koliou and van de Lindt, 2020]. For example, the Storm Prediction Center at the National Weather Service keeps a Day 1-8 Convective Outlook for all the US and issues watches or warnings based on the possibility or observation of tornadoes [NOAA, 2022b]. Tornado warnings are issued as soon as a rotating supercell has been identified by a weather radar; these warnings instruct people in the affected area to take shelter immediately. Even though the warning systems have caused a great reduction in fatalities [FEMA, 2022a], their short lead times (of the order of minutes) do not allow to prevent physical structures from possible destruction. This is further aggravated by the fact that more than 80% of building stocks in the US are wood-frame buildings that are highly vulnerable to wind damage [van de Lindt and Dao, 2009].

Besides weather forecasting, other alternatives can be employed to reduce the impact of tornadoes. In the past decade, several studies have shown that retrofitting strategies with simple and inexpensive actions, e.g., improving the roof cover or enhancing the roof sheathing nailing pattern of a house, can improve building codes to make wood-frame buildings more resistant to tornado damage, particularly from damaging tornadoes of EF2 intensity or less [Simmons et al., 2015, Ripberger et al., 2018, Masoomi et al., 2018, Koliou and van de Lindt, 2020, Wang et al., 2021]. In particular, Ripberger et al. [2018] shows that a 30% or more reduction in lifetime damage can be expected by enhancing existing wood-frame buildings with simple retrofitting actions. Similarly, recent research has studied different recovery strategies and has shown that they have the potential to significantly improve the restoration time and reduce population dislocation [Masoomi and van de Lindt, 2018, Farokhnia et al., 2020, Koliou and van de Lindt, 2020].

At the federal level in the US, the use of retrofitting has been identified as a valuable strategy to reduce the impact of natural disasters [FEMA, 2021, 2022b, The White House, 2022]. At the local level, however, such strategies have not been implemented yet. In fact, there are federal programs that provide general guidelines for local administrators (e.g., the emergency management agencies of cities and towns) to design and fund retrofitting plans [FEMA, 2021]. Motivated by this need, the main goal of this paper is to present a model to guide retrofitting and recovery investments in order to enhance the community resilience of an urban area subject to tornado hazards. Here, community resilience is understood as “the ability to prepare for anticipated hazards, adapt to changing conditions, and withstand and recover rapidly from disruptions” [McAllister et al., 2015].

Specifically, we assume that there is a decision-maker (a government agency or a public-private consortium; for instance the emergency management department of a city and home insurance companies) that can invest a limited budget to retrofit and recover structures across a geographical area of interest, in order to maximize the community resilience due to an uncertain tornado. We model this decision problem as a two-stage robust optimization model. The first-stage decisions are made before the realization of a tornado and determine what structures have to be retrofitted and to what extent. The second-stage decisions determine recovery strategies for the locations that are damaged by the tornado. Without loss of generality, in this article we consider total population dislocation, which is the involuntary movement of people from their residential sites after a severe tornado, as a metric for community resilience to be minimized (therefore, maximizing community resilience would be equivalent to minimizing population dislocation). Our proposed formulation, however, allows for other metrics that assess community resilience.

We formulate the first- and second- stage problems as integer programming problems (IP) whose decision variables select retrofitting strategies and recovery strategies, respectively, for each location. Both stages share the same budget, which models that, at least a priori, the decision-maker does not know what proportion of the budget should be spent on retrofitting and what proportion on recovery. On the other hand, as an accurate prediction of the location/time where a tornado forms, the motion direction, and its magnitude, is out of reach of the current technology [NOAA, 2022c], we approach the uncertainty using a robust lens. That is, we assume that given any retrofitting plan, the corresponding worst-case tornado damage happens. In order to have an adequate balance between accuracy and tractability, we model tornado paths as arbitrary line segments on the plane and assume that an area is affected by the tornado if it is sufficiently close to the line segment. This modeling approach results in a mixed-integer non-linear uncertainty set.

In order to solve the problem, we employ an exact column-and-constraint generation (C&CG) algorithm based on the method discussed by Zeng and Zhao [2013]. Here, at each iteration, a master problem solves a relaxation of the original problem over a subset of possible tornado scenarios. New scenarios are iteratively generated in a subproblem and added to the master until the objective value does not change. In contrast with previous models that employ the C&CG algorithm, for instance Zeng and Zhao [2013], Yuan et al. [2016], Velasquez et al. [2020], our subproblem is a challenging max-min non-linear integer problem that cannot be solved by standard ‘dualize and combine’ techniques. Thus, the subproblem is solved by a decomposition branch-and-cut algorithm that exploits the geometric properties of the uncertainty set. Particularly, we derive ‘double’ and ‘triple’ conflict constraints to define the master relaxation and we check the feasibility of master solutions by using a stabbing line algorithm and a continuous non-convex optimization problem.

Using geographical and population data from IN-CORE [2022], we use the proposed model to determine optimal retrofitting and recovery actions in Joplin, MO. The results of the case study show that retrofitting actions should be performed in most locations in the geographical center of the city, which are also the locations with higher population density. They also show that spending budget in retrofitting is prioritized over recovery, and that only when a ‘critical’ set of locations in the center of the city are retrofitted, there should be expenditures in recovery. By performing simulations we also show that the proposed model outperforms other retrofitting policies, and that the model is not over-conservative: the optimal worst-case population dislocation can be within 10% of the maximum population dislocation observed in the simulations.

To summarize, in this paper we make the following contributions:

-

•

We propose a novel two-stage optimization model under uncertainty to aid decision-makers in the allocation of resources to retrofit and recover residential wood-frame buildings in tornado-prone regions.

-

•

We explicitly model tornado paths as arbitrary line segments on the plane and formulate the problem as a two-stage robust optimization problem with integrality requirements in the first and second stages and in the uncertainty set.

-

•

We develop an exact algorithm that embeds a decomposition branch-and-cut algorithm within a column-and-constraint generation method. By exploiting the geometric properties of line segments, we develop initialization and separation procedures to effectively implement the embedded decomposition branch-and-cut algorithm.

-

•

Using real data we provide optimal retrofitting and recovery strategies for Joplin, MO. The results show that there can be up to 20% reductions in worst-case population dislocation by investing $15 million; that our approach outperforms other retrofitting policies, and that the model does not suffer from over-conservativeness.

The remainder of this paper is organized as follows. Section 2 reviews the literature. Section 3 describes the two-stage robust optimization problem. Section 4 presents a customized version of the C&CG algorithm. We describe the decomposition branch-and-cut method to solve the subproblem in C&CG algorithm in Section 5. In Section 6, we conduct the numerical experiments on Joplin data as our case study. Lastly, Section 7 concludes the paper.

2 Literature review

2.1 Optimization models to retrofit and recover under tornado hazards

The determination of retrofitting and recovery strategies for tornado hazards, particularly using optimization, is a relatively new topic in the literature. Wen [2021] proposes a multi-objective optimization model to retrofit against tornado hazards. Their model, however, is single-stage, does not considers recovery, does not consider uncertain tornado paths, and aggregates all of the uncertainty of the problem into the parameters of the deterministic model. Simulation, on the other hand, is more commonly used to study the behavior of tornadoes, particularly to evaluate the impact of retrofitting strategies, see for example Strader et al. [2016], Wang et al. [2017], Masoomi and van de Lindt [2018], Fan and Pang [2019], Wang et al. [2021], Stoner and Pang [2021]. These works, however, do not explicitly consider the decision problem of allocating resources to retrofit and recover.

The closest models in the literature to the present work are two-stage robust optimization models for (general) disaster planning, see Yuan et al. [2016], Ma et al. [2018], Matthews et al. [2019], Velasquez et al. [2020], Cheng et al. [2021]. These models, however, are geared towards supply and distribution problems in networks and do not capture the specific patters of the disasters (such as, e.g., paths or ripple effects). Consequently, these models cannot be adapted to deal with tornadoes and the specific retrofitting and recovery setting we study here.

2.2 Robust optimization

Robust optimization methods have been extensively developed in the past two decades to mathematically model and study different systems under uncertainty. Standard robust optimization models assume that the uncertain parameters belong to a convex set [Ben-Tal and Nemirovski, 1999, Bertsimas and Sim, 2004], which have many applications such as scheduling [Lin et al., 2004], supply chain [Ben-Tal et al., 2011], transportation [Yao et al., 2009], power system problems [Xiong et al., 2017], among others. Mixed-integer version of uncertainty sets have also been studied. Exact methods to solve a general robust optimization problem with a mixed-integer uncertainty set are similar to the integer L-shaped method [Laporte and Louveaux, 1993] in which a master problem iteratively solves a restricted version of the original problem under a few uncertain scenarios and for the solution vector. Here, a subproblem generates a new scenario by finding the worst-case of objective within a mixed-integer uncertainty set [Mutapcic and Boyd, 2009, Ben-Tal et al., 2015, Ho-Nguyen and Kılınç-Karzan, 2018, Borrero and Lozano, 2021, Ansari et al., 2022].

Ben-Tal et al. [2004] extend the scope of standard single-stage robust optimization problems by introducing the adjustable robust optimization methodology, where a part of decision variables must be determined before the realization of the uncertainty set. The rest of the decision variables are chosen when the uncertain parameters are revealed. The two-stage approach provides a less conservative framework to deal with the uncertainty and it can model a broad range of applications in different areas such as transportation [Gabrel et al., 2014], networks [Atamtürk and Zhang, 2007], investment [Takeda et al., 2008], power systems problems [Shams et al., 2021, Li et al., 2021], among others.

In general, two-stage robust optimization problems are computationally expensive. To efficiently solve them, decomposition methods are widely employed, under the assumption that the second-stage problem is a linear programming problem [Thiele et al., 2009, Zhao and Zeng, 2012, Jiang et al., 2012, Gabrel et al., 2014]. Zeng and Zhao [2013] presents the column and constraint generation method (C&CG) as an alternative to address this class of robust problems. They showed the C&CG can outperform previous decomposition for many problem settings. The C&CG approach has become a popular tool to solve two-stage robust optimization in the past decade [An et al., 2014, An and Zeng, 2015, Jabr et al., 2015, Neyshabouri and Berg, 2017, Ding et al., 2017, Yuan et al., 2016, Matthews et al., 2019, Velasquez et al., 2020, Cheng et al., 2021]. An important feature of existing applications of the C&CG method is that the second-stage optimization problem is convex. In contrast, in our problem the second-stage problem is an IP problem and the uncertainty set is mixed-integer non-linear. Therefore, the standard ‘dualize and combine’ approach that is used in the literature to solve the subproblem in the C&CG does not apply to our case.

3 Model formulation

Next, we define the two-stage robust optimization model to minimize the total population dislocation under an uncertain tornado and present a mathematical formulation of the problem.

3.1 Two-stage robust optimization formulation

Recall that the first stage decisions seek to determine what locations to retrofit before the realization of a tornado. The second stage decisions, that happen after the uncertainty is revealed, select what recovery strategies should be implemented in the locations that are affected by the tornado.

Formally, suppose denotes the set of retrofitting strategies and denotes the set of recovery plans. We consider a set of locations of interest , hereafter referred to as locations for simplicity, which are placed in a 2-dimensional plane. Let be the population dislocation estimation pre-tornado at location under retrofitting strategy (in most cases ) and let be the population dislocation post-tornado at location assuming that the retrofitting strategy is used and that the recovery plan is .

We assume that the decision-maker has a limited budget of to invest in the retrofitting and recovery plans. This assumption allows the model to allocate the budget endogenously, rather than having the decision-maker setting the proportions before hand. If required, the model is able to handle fixed budgets by adding a linear constraint in the first-stage problem. Let be the retrofitting cost of all the buildings in location under strategy , and let denote the cost of using recovery plan on the buildings in location if the retrofitting strategy is applied. All parameters in both stages are computed in Section 6.1 in a real example, by using the publicly available data and engineering models for wood-frame buildings in the literature.

Define the decision variables and as

and let , , be the binary variables that represent the coverage of a tornado across the locations, that is:

Then, the two-stage robust optimization model is defined as follows

| (1a) | |||||

| s.t. | (1b) | ||||

| (1c) | |||||

where the second stage problem for given and is

| (2a) | |||||

| s.t. | (2b) | ||||

| (2c) | |||||

| (2d) | |||||

The first term of the objective function (1a) measures the pre-tornado dislocation across all locations due to implementing the retrofitting strategies. The second term measures the population dislocation after the worst-case tornado with respect to the retrofitting strategies selected at the first stage. Constraint (1b) ensures that each location picks exactly one retrofitting strategy. We make the assumption that there is a “do-nothing” strategy in with zero cost.

The second stage optimization problem (2) selects the recovery plans that minimize the population dislocation given a retrofitting strategy vector and the tornado coverage vector . The budget constraint (2b) ensures that the overall cost for the execution of recovery and retrofitting strategies is . Constraint (2c) forces each location to pick exactly one recovery strategy corresponding to the selected retrofitting strategy. As before, we assume there exist a “do-nothing” strategy in with zero cost. Next, we model tornado paths by ensuring that the decision vector belongs to the uncertainty set which contains all feasible tornado damages over locations in .

3.2 Uncertainty set formulation

We make three assumptions to model a tornado and its associated damage:

-

A.1.

Tornado paths are represented by line segments of at most a length in the (real) plane; is a parameter set by the decision maker, and can be easily modified to study the effects of different tornado lengths.

-

A.2.

Each location represents a cluster of buildings that are geographically close. Such clusters can depict individual buildings, blocks, neighborhoods, counties, or any other arrangement of interest to the decision-maker.

-

A.3.

A location is damaged by the tornado if an aggregate measure of the coordinates of all buildings in (e.g., the centroid of all the coordinates of the buildings in that location), is within units of the tornado path, being a parameter set by the decision-maker.

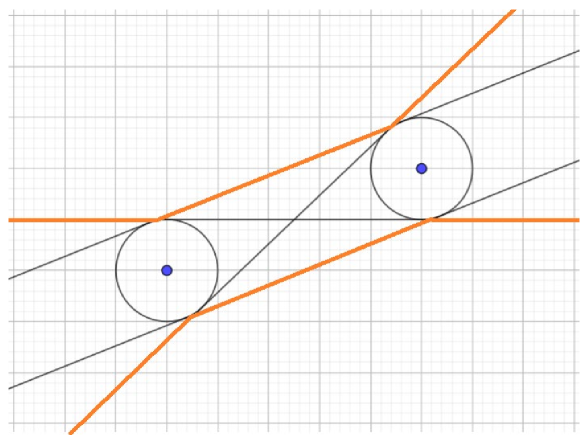

Assumption A.1. follows from considering historical records of tornadoes, such as evidenced in Figure 1, which depicts the tornado paths in Oklahoma County between 1950 and 2020. Observe that, with a few exceptions, the paths can be adequately approximated by line segments. This behavior is also observed across the US, not only Oklahoma, see the damage assessment tool in NOAA [2023]. Whereas A.1. might be seen as restrictive, it is a reasonable and uncluttered mathematical representation of a complex weather phenomenon.

Assumption A.2. is made to avoid politically inviable policies. Allowing full granularity (by associating a location with each building) might result in policies that prescribe retrofitting and recovery actions for a given building, but no retrofitting nor recovery to its immediate neighbors. Clearly, such a policy might raise fairness concerns, particularly if the decision-maker is a public entity. We note that our model works for any clustering approach; all clustering information required by the model is summarized via the parameters of the model.

Assumption A.3. defines the damage due to the tornado. Considering the variable nature of tornado damage, can be seen as an upper-bound on the width observed in historical data for a given area to study the worst-case scenario. Due to the uncertain nature of tornado behavior, defining specific rules for modeling the coverage during a tornado remains a challenging task.

We assume that the length of tornado is at most a given parameter , and that the two endpoints of are located within a sufficiently large rectangle that contains all locations in . Let and be the endpoints of line segment (see Figure 2). The uncertainty set can be defined by the following mixed-integer non-linear programming (MINLP) formulation

| (3a) | ||||

| (3b) | ||||

| (3c) | ||||

| (3d) | ||||

where calculates the Euclidean distance between the vector coordinates and where denote the coordinates of location on the plane.

The value of in Constraint (3b) calculates the distance between a point on the line segment and location . This constraint defines -variables such that if for some , then the location is covered by tornado. The value of is sufficiently large to ensure the constraint is trivially satisfied if (the value of is set to be the largest value among the Euclidean distances from location to the corners of rectangle ). Constraint (3c) enforces the range of continuous variable to be between 0 and 1, which indicates that is a finite segment and not an infinite line. Constraint (3d) also ensures that the length of a tornado, which is the Euclidean distance between the two endpoints, does not exceed . Note that the problem maximizes over the -variables and that all of them have non-negative coefficients in the objective function. This observation and Constraint (3b) imply that if and only if location is covered by the tornado path.

Remark 1.

The parameters , , , and , , are selected by the decision-maker. Also, we note that is a valid choice for the length of the tornado path. In this case we refer to the path as a line rather than by a segment. This assumption represents situations where the path is sufficiently long to traverse the entire region of interest. In this case, constraints (3c) and (3d) are not necessary in the formulation of .

4 A Solution Method Based on the C&CG Framework

We present a method to solve the two-stage robust optimization problem (1), based on the C&CG algorithm in Zeng and Zhao [2013]. First, we provide a linear one-level reformulation of problem (1) by means of an epigraphic reformulation of the worst-case second-stage objective. To this end, note that is a finite set and write it as , where is the cardinality of and suppose is the vector of second-stage variables corresponding to scenario . The one-level linear mixed-integer programming (MIP) reformulation of (1) is:

| (4a) | |||||

| s.t. | (4b) | ||||

| (4c) | |||||

| (4d) | |||||

| (4e) | |||||

| (4f) | |||||

| (4g) | |||||

where . Constraint (4c) implies that the minimum value of is equal to the maximum population dislocation among all tornadoes in . Constraint (4d) and (4e) ensure that the recourse vectors corresponding to each scenario, meet the constraints of the second stage.

Remark 2.

Solving problem (4) directly is not practical in general, given that the uncertainty set might have a large number of scenarios in terms of the number of locations. If , then there is a polynomial number of scenarios in which can be constructed based on the stabbing line algorithm in Section 5.3.1 (in this case, nevertheless, using (4) to directly solve the problem remains impractical because there would be at least a quadratic number of scenarios in terms of the number of locations). If , it remains an open question to determine whether there are polynomially many scenarios in .

We employ a modified version of the C&CG to solve formulation (4). The algorithm is set to iteratively solve a relaxation of (4) over a subset of scenarios at iteration to obtain an optimal solution . If given , there exists a tornado coverage that produces a dislocation greater than , then we add scenario to the master problem at the next iteration. Scenario is found by solving the following subproblem, evaluated at :

| (5) |

where is the set of feasible solutions of problem ; see constraints (2b)–(2d). Specifically, if the optimal solution is such that , then is the tornado coverage that attains . Note that when the variables and constraints associated with scenario are added at iteration , the solution is no longer feasible in the master relaxation.

Let be the value of the master problem at iteration , that is, problem (4) with replaced by , with and let be the subproblem for the retrofitting strategy found at iteration of the algorithm. The C&CG method is presented in Algorithm 1.

Algorithm 1 begins with an arbitrary feasible scenario . In each iteration , the relaxed MIP problem is solved. The value of then provides a lower bound for the value of . We note that the values of , , are non-decreasing in . Then, the subproblem generates a new feasible scenario for the next iteration and updates the upper bound. The algorithm terminates when the subproblem does not find a scenario that violates the lower bound value, which implies the upper bound is equal to the lower bound. Zeng and Zhao [2013] prove that the C&CG algorithm converges in finite time.

Note that Step 1 in Algorithm 1 is expensive computationally because it requires solving the MINLP problem in (5). The approach to solve the subproblem in standard applications of the C&CG relies on using duality or the Karush Kuhn Tucker (KKT) conditions on the second-stage problem [Zeng and Zhao, 2013]. Notice that is a bilevel problem with integrality requirements at both levels. Therefore, we cannot use either of these approaches. Alternatively, we propose a decomposition branch-and-cut method next.

5 A Decomposition Branch-and-Cut (DBC) Algorithm to Solve

The DBC method is based on a one-level reformulation of . We replace the in the objective by its epigraphic reformulation and replace the uncertainty set requirements in terms of linear (although exponentially many in the worst-case) constraints. Next, we discuss how to initialize the constraints in the master problem and how to perform the “two types” of separations.

5.1 Overview of the DBC algorithm

Suppose is the set of combinations of locations that cannot be covered by a tornado path , i.e., for each , there exists a set of locations . A one-level linear reformulation of is given by:

| (6a) | |||||

| s.t. | (6b) | ||||

| (6c) | |||||

| (6d) | |||||

The correctness of the reformulation follows from the maximization sense in (6). Note that in (6b) represents a fixed value (not a variable); and that there is such an for each element of .

Observe that and are finite sets with potentially exponentially many elements, thus cannot be solved directly by a commercial solver. The DBC starts with a relaxation of by replacing and with subsets and , respectively. The resulting problem is referred as the master relaxation. The standard branch-and-cut (BC) algorithm then starts solving the master and prunes nodes by bound and infeasibility. When at some node an integral solution is found (i.e., a solution that is feasible in the master), its feasibility with respect to the original formulation in (6) must be verified.

To this end, Algorithm 2 takes the master feasible solution at node of the BC tree and verifies its feasibility: first, it checks whether satisfies all -constraints (6c), i.e., it checks if . If does not pass the first check, then let be the active locations in . Observe that is a combination of locations that cannot be covered by a tornado path, thus a cut (6c) with is added on the fly. If passes the first check, then the algorithm checks whether satisfies all -constraints (6b). If also passes the second check, then is feasible in ; if not, then a cut (6b) with is added on the fly, being the optimal solution of the second-stage problem associated with and . The performance of Algorithm 2 depends on the quality of and and on having fast separation routines. We discuss these issues next.

5.2 Definition of

The set is constructed by identifying pairs and triples of locations that cannot be covered by a single tornado path. It is worth mentioning that the valid constraints associated with these infeasible cases can also be used to tighten the relaxation of by the elimination of potentially many infeasible fractional solutions for -variables; we evaluate the performance of DBC with enhanced by these valid cuts in Appendix 5.

5.2.1 Cuts from infeasible pairs.

Because the length of a tornado is at most and only locations within a distance of from a tornado line segment are covered, a tornado cannot cover two locations if they are at a distance of more than . Consequently we have the following result:

Proposition 1.

Let be the set of infeasible pairs. Then any satisfies that

| (7) |

5.2.2 Infeasible triple cuts.

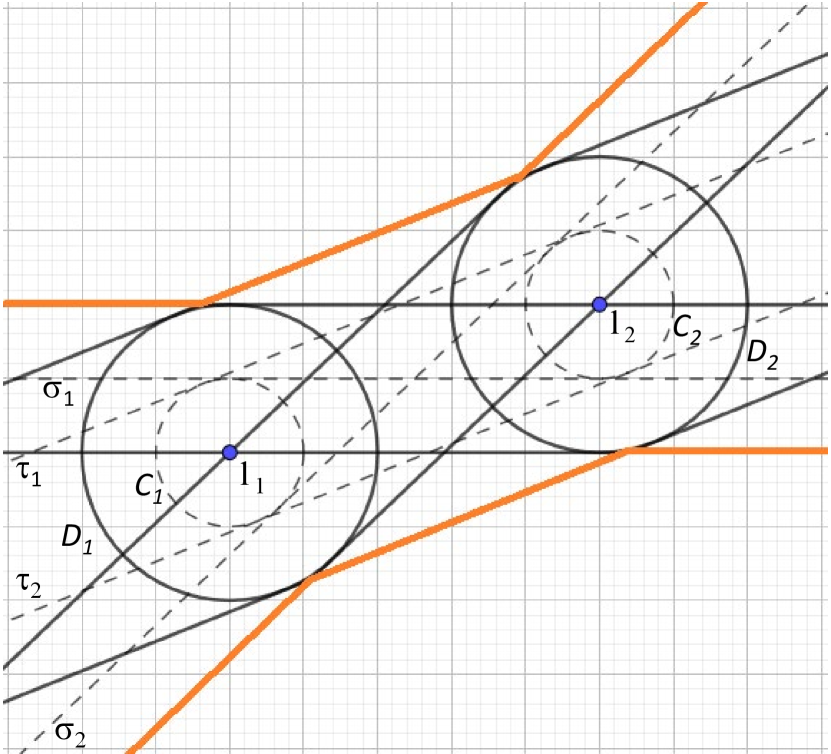

We also identify infeasible triples of locations that cannot be covered by a tornado path. To introduce the infeasible set of triples, we assume which implies a line representation for a tornado path. Observe that if a line does not cover a subset of locations , then there is no segment of the line to cover the locations in either. For any locations and , the analysis below partitions the plane in two regions, denoted by and . Region is the set of points for which there exist a line that covers and , and . For simplicity, in the next discussion we interchangeably refer to a point in by its name, as , or by its two-dimensional coordinates, as in .

Observe that a line covers both and if and only if and , where is the ball with center and radius , . Let be the collection of these lines, and let be the set of points that belong to any such line:

| (8) |

For any set let be the set of points that are at a distance at most from , that is

| (9) |

It is readily seen that .

Now, the set can be characterized by the four lines , , and that are tangent to both and , where and are the circles enclosing and , see Figure 3(a). Specifically, let be the set enclosed by these tangents, see Figure 3(b), we next show that .

Indeed, let be the line that passes through and and suppose that a point is in between and . Then, the line that passes through and is parallel to intersects both and . Suppose that belongs to the region left of between and . Then, the line that passes through and the point intersects both and . Notice that this argument can be repeated for the remaining regions of , and thus we can conclude that .

Conversely, let and let be the line intersecting and such that . If is one of the tangents, then it is clear that and it follows that . Thus, assume that is not one of the tangents and let and be two arbitrary elements of and . Observe that the segment of between and must be completely contained in between and . Moreover, as is not one of the tangents, intersects both and . Because two straight lines can intersect at most once, the previous observations imply that the cannot intersect nor at any point left of and right of ; in other words, it must be the case that is completely contained in , which implies that , as desired.

Given the previous considerations, in order to characterize it is necessary to characterize . From the shape of , it is clear that looks very similar to , with the difference that its border starts units up and units down, see Figure 4(a)

Next, we use geometric facts to derive an explicit equation for lines defining the boundaries of for a given and . For ease of exposition hereafter we assume that and . Define to be the angle of slope of the line which connects and with respect to the horizontal axis and let , where is the Euclidean distance between and . Then we have that Eqs (10a)–(10f) hold, where

| (10a) | |||||

| (10b) | |||||

| (10c) | |||||

| (10d) | |||||

| (10e) | |||||

| (10f) | |||||

The lines , defined in Equations (10a)– (10f) are depicted graphically in Figure 4(b).

Now, let

| (11) |

The previous discussion is summarized in the following result, which states that if a location belongs to the infeasible set then at most two locations among , and can be chosen to be covered by a tornado. A more formal proof of this result is given in Appendix 3.

Proposition 2.

Let and be two elements of and suppose that . Then any satisfies that

| (12) |

It is worth mentioning that we do not need to consider all possible combinations of locations to construct the infeasible triple cuts because if , then and . Also, we note that the pair-wise and triple valid constraints introduced so far do not completely characterize , as shown in the next remark. However, they can be used to define the initial subset of the master relaxation in the DBC as follows:

| (13) |

Remark 3.

Define . The set does not necessarily determine all the feasible solutions in the uncertainty set ; specifically and in general there exist points in that do not belong to . For example, consider a case of three locations , and with coordinates , and on a plane under a tornado with the maximum length of and the width of (See Figure 5). It can be easily observed that there exists no tornado path that covers all three locations because the only tornado path covering and is the line segment with endpoints and . Obviously, the location is not covered by this path. However, is a feasible solution in because it satisfies both the infeasible pair and triple cuts.

5.3 Feasibility check in

Next, we present a method to determine whether a master feasible solution belongs to . We start our analysis for the case when and then present a method for the case when .

5.3.1 Feasibility check for using the stabbing line algorithm (SLA).

The stabbing line problem consists of a collection of (disjoint) circles and the objective is to find the line on the plane that intersects the most circles. The SLA is an algorithm that solves this problem and reports : the maximum number of circles in that can be intersected with a line. Consider the collection of circles with centers at the locations in and radius . Observe that a tornado path with covers all locations in if and only if the (see Figure 6). Therefore, if , then whereas if then .

We explain next the SLA following Saba [2013]. Assume first a set of disjoint circles , . Given any two disjoint circles and , find the two external and two internal tangent lines to each pair of circles and record the intersection points on both circles; see Figure 7. Let and be the intersection points of these external and internal tangent lines, respectively, with . Note that a tangent line to that touches any point in the arc (or ) intersects , see Figure 7.

Given , the SLA computes the arc-intervals and for all ; note there exist exactly such intervals for . Let be an endpoint of any such arc-intervals that belong to the maximum amount of arc-intervals. Then, the tangent line that touches at is a line intersecting that intersects the most circles in . Iterating this process starting from each , will determine a line that intersects the most circles. The SLA can be implemented time; see Saba [2013] for further details on the algorithm.

In our case the circles in might be non-intersecting, as the locations in might be at a distance less than . We thus adapt the SLA, noting that if then the internal tangents are not necessary and that doing the analysis with the arc-interval is sufficient for this case.

5.3.2 Feasibility check if .

In this case, if , then we can again conclude that there is no finite line segment that covers and thus . Otherwise, if , then there is an infinite line that covers all locations in , but there is no guarantee that a line segment of length at most covers all locations of . Thus, we complete the feasibility check in two steps: first, we use the line resulting from the SLA and projection ideas to determine whether the line can be trimmed to be of length without compromising its coverage, the details are in Appendix 4. If even after this step the feasibility of cannot be guaranteed, then we check the feasibility of algebraically using a simplified version of .

Specifically, consider the set defined as

| (14a) | ||||

| (14b) | ||||

Observe that if and only if and that (14) is far simpler than because it has fewer variables and does not involve binary variables nor ‘big-M’ values. Checking whether is non-empty can be done using a quadratic (non-convex) continuous optimization solver. The such check can be significantly speed-up by adding linear cuts in , see the details in Appendix 4.

5.4 Definition of and feasibility check for

To initialize the master problem we assume that where is an optimal solution for the second-stage problem assuming no location is hit, i.e., is a solution of . On the other hand, once it has been checked that , we check that satisfies all -constraints in (6b) by solving the second-stage problem evaluated at , i.e., by solving . Let be an optimal solution of . If then is not feasible in (6b) and we add the constraint at all active nodes of the BC tree. Conversely, if , then is a feasible solution of (6) and node is pruned by feasibility after updating the lower bound of the BC. Observe that the initialization and separation procedure involve solving the -hard problem ; however, this problem is relatively simple and can be solved quickly using commercial MIP solvers, see our computational results in Section 6.

6 Case study: retrofitting/recovery residential buildings in Joplin, MO

Next, we perform several numerical experiments with the proposed model. Our objectives are to study the performance of the two-stage optimization problem, to analyze how the optimal solutions change with the length of the path, to gather high-level insights into the optimal retrofitting and recovery activities, and to compare the optimal policies with other benchmark policies. The experiments are based on location and cost data from Joplin, MO. Joplin was the location of one of the most severe tornadoes in US history that occurred on May 22, 2011. The tornado was rated EF-5 with an average speed of more than 200 mph and crossed 22.1 miles on the ground with a width of one mile. This catastrophe caused the loss of 161 lives and more than a thousand injuries. The total damage was approximated at 3 billion dollars as around 7500 residential structures were destroyed [Kuligowski et al., 2014].

6.1 Definition of Parameters

The geographic and demographic data are based on information from Joplin and extracted from the online platform IN-CORE [2022]. Particularly, this dataset divides buildings in about 1500 “block IDs” (which are contiguous blocks of the city) and includes over 20,000 buildings. For our experiments, we use only the single or multi-story residential wood-frame building archetypes.

We assume an EF-2 tornado with a wind speed of 134 mph, a width of 0.75 miles (thus =0.75/2), and a length of 5 and miles. Following Wang et al. [2021], we consider the retrofitting strategies presented in Table 1; these strategies are analyzed in Masoomi et al. [2018] with respect to the component fragility of wood-frame residential building archetypes. The retrofitting activities in Table 1, have minimal impact in the livability of the buildings; thus we assume that the first stage population dislocation parameter is zero for all locations and strategies, that is, for all . This assumption, however, does not change the complexity of solving the problem, and if necessary could be relaxed to better depict a particular context or instance of interest.

| Strategy | Description |

|---|---|

| Roof covering with asphalt shingles, using 8d nails to attach roof deck sheathing panel to rafters spaced at 6/12 inch, and the selection of two 16d toenails for roof-to-wall | |

| Roof covering with asphalt shingles, using 8d nails to attach roof deck sheathing panel to rafters spaced at 6/6 inch, and the selection of two H2.5 clips for roof-to-wall | |

| Roof covering with clay tiles, using 8d nails to attach roof deck sheathing panel to rafters spaced at 6/6 inch, and the selection of two H2.5 clips for roof-to-wall. |

The cost of implementing these strategies are computed from the data of IN-CORE [2022]. As mentioned in Section 3, a ‘do-nothing’ strategy with zero cost is also included in the retrofitting strategy set , that is, ={do-nothing, R1, R2, R3}. On the other hand, only two recovery strategies are assumed in our experiment, namely, recover () and do-nothing (); thus .

The second stage population dislocation parameters, , are defined as the expected population dislocation days after the tornado (Appendix 6 has additional experiments for 30 days of recovery). These values are evaluated based on the state of damage immediately after the tornado hits, where is the set of damage states. Let be the random variable denoting the functionality of after 60 days; where , only if reaches 100% functionality after days (no dislocation) and otherwise (dislocation). Also, let be the random variable representing the damage at immediately after the tornado, and let denote the retrofitting strategy. Then, the expected population dislocation after recovery is

| (15) |

where is the population of location . Since the value of depends on , using conditional probability and the (reasonable) assumption that is independent of given the knowledge of , we have that

| (16) |

Following Koliou and van de Lindt [2020], the damage states are given by Minor, Moderate, Extensive, Complete. We compute using the repair time distributions described in FEMA [2003]. These distributions are lognormal and their parameters can be found in Koliou and van de Lindt [2020]. The probabilities are computed from the building-level tornado fragility-curves for wood-frame residential buildings in Masoomi et al. [2018], Koliou and van de Lindt [2020].

The cost of recovery is the expected percentage of the total location replacement cost: suppose is the area of location and is the cost of replacement per . For the wood residential archetype, we set and, the percentage of the location replacement cost to be , , , and , see Koliou and van de Lindt [2020]. The following formula is used to compute the cost of recovery for location if it is retrofitted by strategy :

| (17) |

We assume the decision-maker takes charge of all recovery expenses.

On the other hand, if a location does not receive resources from the decision-maker, then the population dislocation will be higher and depends on the resident’s actions. We assume the population dislocation for the do-nothing strategy is a factor of the population dislocation resulting from the recovery actions of the decision-maker, i.e.,

| (18) |

The factor can vary such that : implies that the residents pay a full amount of expenses by themselves to recover their buildings as quickly as the decision-maker, and means that the residents take no recovery action on their own and therefore the whole population is dislocated from location . For our experiments, we fix which is the midpoint in the range. Note that the cost of the do-nothing strategy is from the decision-maker’s point of view.

We remark that various parameters of our case study we selected rather arbitrarily; for instance, the assumption that recovery means that the building recovers 100% functionality; that the last day of recovery is 60 days; or that ‘half’ the residents recover their property without assistance. These are reasonable values that are selected for illustration purposes and can be changed by the decision-maker as desired. Generally, modifications of these parameters should not impact the model’s performance, and as long as there is data available that account for the modifications, our model should be able to run; see for example Appendix 6 where experiments with a recovery time of 30 days are reported.

6.2 Robust retrofitting and recovery strategies in Joplin, MO

We implement the proposed two-stage optimization model to find robust retrofitting and recovery strategies for locations in Joplin. All algorithms are coded in Python 3.9 and solved using Gurobi Optimizer 9.1.1 on a 64-bit Windows operating system with Intel(R) Core(TM) i7-8550U CPU and 8.00 GB of RAM under a time limit of 3600 seconds. The source code is available in online repository Ansari [2023]. We use Algorithm 1, along with the DBC method described in Section 5 with the setup that yields the best performance; see our comparative experiments in Table 6 in Appendix 5.

In order to define the locations in , we group Joplin “block IDs” in 100 center locations using the -means method [MacQueen, 1967] and aggregate the data accordingly. Particularly, we assume that when the tornado hits a location then all of its buildings are hit, and that the tornado hits a location only if the location’s centroid is within units of the path. Table 2 compares the population dislocation for when the tornado length is miles or and the budgets are million USD. Columns 1 and 2 in Table 2 show and . Column 3 has the optimal population dislocation under the worst-case tornado for each set of parameters. Columns 4 and 5 report the CPU run time of C&CG Algorithm 1 in seconds and its number of iterations, respectively. Column 6 shows the CPU runtime of the DBC method in seconds to solve subproblem . Column 7 compares the CPU time that the DBC algorithm spends in a callback function to verify the feasibility of integral solutions. Column 8 shows the total CPU time to find a feasible solution in the set . Columns 9 and 10 present the proportion of the total budget that is spent for retrofitting and recovery, respectively.

Length Budget Population CCG CCG DBC Callback feasibility Retrofitting cost Recovery cost ($) dislocation run time (sec.) iteration run time (sec.) run time (sec.) run time (sec.) /budget /budget 5 0 M 16318 127 2 126 3 1 - - 15 M 13408 307 3 306 11 6 0.6 0.4 30 M 13091 300 3 300 11 5 0.3 0.7 0 M 17293 568 2 568 3 0 - - 15 M 14236 716 2 716 5 0 0.6 0.4 30 M 13839 986 3 985 5 0 0.4 0.6

Table 2 shows that the solver is able to find optimal solutions for every experiment in at most 986 seconds. It also shows that solving the subproblems is the most time-consuming step (which is virtually 99% of the total runtime). In general, the algorithm solves the subproblem for 5 miles line segment faster than the (infinite) line; this fact can be justified by the effect of the conflict constraints (7) which are not present when . In addition, for all experiments, the callback function (i.e., Algorithm 2) is very fast, which implies that the bottleneck of the entire algorithm is the branch-and-bound that solves the relaxation of the subproblem. As expected, for the infinite line, the results show zero seconds to check the feasibility using , because in this case, the SLA alone can verify the feasibility.

For both tornado lengths, there are decreases in the dislocation by increasing the budget because decision-makers are able to retrofit and recover more locations. For example, increasing the budget from $0 to $15M results in around 3000 fewer dislocations in damaged neighborhoods. Note that this translates in reductions of around 20% in population dislocation for both tornado lengths by investing $15M. However, adding another $15 million will reduce dislocations only by around 500 people because of the great cost of recovery. In fact, the relationship between budget and dislocation exhibits a clear diminishing returns behavior, see Figure 8, where it can be seen that after around $10M there is a small decrease in population dislocation. From a decision-making point of view, these results implies that it is not reasonable to spend more than $10M-$15M in retrofitting and recovery, as the return of investment is not worth it.

More details on the optimal strategies can be found in Figure 9, which maps the locations, their optimal strategies, and the worst-case tornado scenarios for various budgets. It shows that in all experiments the decision-maker uses most of the extra amount of budget from $15M to $30M to recover a few locations rather than in retrofit locations that are not in the path of the worst-case tornado. Notice that recovery costs are generally more expensive than retrofitting costs. For example, the recovered location in the -$15M map in Figure 9 has 470 residents; 382 of which are estimated to be dislocated by spending $194K for retrofitting. However, the decision-maker has to spend $5.5M in recovery to further reduce the estimated dislocation to 295 people for this location. Columns 9-10 in Table 2 show that the proportion of the budget that is assigned to recovery increases by having more budget. In addition, we can observe in Figure 9 that the path of worst-case tornadoes does not change by much in different experiments, suggesting that the location of the tornado path is largely driven by the population density in the city center area.

These results suggest, from a worst-case perspective, that it is optimal to invest only in locations in or close to where the worst-case tornado hits and that it is better to invest both in retrofitting and recovery, rather than just in retrofitting. The results are somewhat surprising in the sense that, at least a priori, it would be reasonable to invest all budget in retrofitting across a wider area (particularly because recovery is much more expensive than retrofitting). As such, the results suggest that there is a critical set of clustered locations, in this case the densely populated center of the city, where most of the investments must be concentrated. We suspect, however, that there is a different structure for the optimal actions in data sets with a more homogeneous population distribution.

6.3 Advantages of robust retrofitting

In this section we compare the optimal two-stage robust retrofitting and recovery strategies against a policy that makes retrofitting and recovery decisions at random. We assume a miles tornado path and a total budget of $15 million. The decision-maker is allowed to spend $0, $3, $9, and $15 million of the budget in each experiment to randomly retrofit locations with random selections in set ; the remaining budget is reserved for the recovery actions. Once a retrofitting strategy is determined, then the corresponding worst-case second-stage happens (i.e., the value of is determined, see Equation (5)). Table 3 compares the average, maximum, minimum, and standard deviation of population dislocation for 10 random replications. Note that for the $0M case, we do not need multiple replications.

Budget to randomly retrofit Population Dislocation Average Maximum Minimum Standard deviation $0M (0%) 16318 - $3M (20%) 16057 16263 15717 163 $9M (60%) 15596 15905 15306 196 $15M (100%) 15143 15451 14764 189 Robust optimal retrofitting 13408 -

The results in Table 3 show that with random policies the average, maximum, and minimum dislocation after the corresponding worst-case tornado decrease with the percentage of the budget spent in retrofitting. These results are depicted graphically in the Box plots in Figure 10. Note that the proposed robust optimization model results in 13408 dislocations, which is noticeable better than the random policies. To further emphasize the value of optimization, recall that the optimal solution with our proposed method in Table 2 spends $9M out of $15M (60% of budget) in retrofitting. By contrast, even the minimum population dislocation with random retrofitting using the same budget proportion is 15306 (a 14% increase).

We conduct additional simulations to randomly retrofit locations with the full amount of $15M and $30M budget as before, but this time the worst-case tornado found in Section 6.2 is used. The results across 10 replications are shown in Table 4, which shows that for both cases the robust optimal population dislocation is significantly less than the minimum of population dislocation with random retrofitting. The plot in Figure 11 provides a visualization of the significant gap between robust optimal value (stars) and the distribution of values for random retrofitting plans. We can also observe that population dislocation reduces by increasing the amount of budget.

Budget Population dislocation Robust optimal retrofitting Average Maximum Minimum Standard deviation $15M 13408 15314 15941 14545 331 $30M 13091 14795 15248 14145 294

The results of this section show that deciding using the proposed two-stage robust method yields significant reductions in population dislocation against random policies when evaluating in terms of worst-case outcomes. In the next section we complement these experiments by analyzing how the optimal policy behaves when random tornadoes, instead of the worst-case scenario, happen.

6.4 Robust strategies in random scenarios

In this section we fix the optimal retrofitting-recovery plan and compare the robust population dislocation values resulting from having 10 simulated 5-miles tornadoes, see Table 5 and Figure 12.

Budget Population dislocation Worst-case tornado Average Maximum Minimum Standard deviation $0M 16318 10324 13201 5233 2835 $15M 13408 9634 12720 5031 2679 $30M 13091 9416 12480 4900 2607

The results for $0M in Table 5 and Figure 12 show that there is a large gap between the worst-case tornado 16318 (blue star) and the maximum value among simulation results which is 13201. As expected, by having more budget, the average population dislocations in the simulations reduce from 10324 to 9643 for $15M and to 9416 for $30M. In addition, the gap between the robust and maximum simulation values for $15 and $30M are noticeable smaller than the gap for the no-budget case.

These observations show the following about the optimal retrofitting-recovery policy: first, that there can be a large variability for population dislocation assuming any tornado is possible. Second, that the variability seems to decrease as more budget is available, and third, that the worst-case scenario is not that far way from simulated scenarios. This last point is further emphasized by the fact that only 10 tornadoes are simulated; one should expect that with more simulated tornadoes the gap between the worst-case and the maximum dislocation observed reduces even more. Further, this last point also shows that the worst-case is not as rare as one might anticipate, and thus that the proposed robust optimization model does not suffer from ‘over-conservatism,’ which is a common drawback of deciding in a robust manner.

7 Conclusions

Motivated by the need of data-driven methods to allocate resources in retrofitting for tornado-prone areas, we consider a two-stage robust optimization model to determine retrofitting and recovery strategies before and after the realization of an uncertain tornado disaster. We assume that the decision-maker (a combination of public-private agencies) has to allocate a budget in retrofitting and recovery actions, to minimize population dislocation. We propose a two-stage optimization model where the first-stage variables determine the retrofitting strategies and the second-stage variables determine the recovery strategy, assuming that for any retrofitting action, the corresponding worst-case tornado happens. Given that the uncertainty corresponds to the possible tornado paths, the resulting problem is a two-stage mixed-integer robust optimization problem with a mixed-integer non-linear uncertainty set.

We show that the proposed problem is NP-hard. A standard approach to solve the problem is a column-and-constraint generation algorithm that needs to solve a max-min subproblem at each iteration. We embed a decomposition branch-and-cut algorithm in the column-and-constraint solution method to address the non-convex structure of the bilevel subproblem. We propose two sets of conflict constraints and a custom separation procedure to implement the decomposition branch-and-cut algorithm. Using data from INCORE, we use our model to provide the optimal retrofitting and recovery strategies for the city of Joplin, Missouri against the worst-case scenario. The results show that the optimal policy exhibits a diminishing returns behavior that sets-in quickly; prioritizes the high-density city center; and prefers to retrofit and recover than to only retrofit. The proposed policy also outperforms other benchmark and does not suffer from ‘over-conservatism.’

Future work will focus on modeling tornado damages assuming that there might be several levels of disruptions. Additionally, future research would analyze other social vulnerability indices to measure community resilience under the risk of a tornado. One line of research might study the population dislocation in residential areas with different income levels after certain days of a tornado occurrence, in order to recognize more vulnerable locations that require immediate agencies’ attention. Another line of research might take our proposed framework to study other natural disasters like hurricanes with minor modifications in the uncertainty model and the set of retrofitting strategies.

Acknowledgment

This research is partially funded by the National Science Foundation (NSF) (Awards ENG/CMMI # 2145553 and GEO/RISE # 2052930) and by the Air Force Office of Scientific Research (AFORS) (Award # FA9550-22-1-0236). This research was also partially funded by the National Institute of Standards and Technology (NIST) Center of Excellence for Risk-Based Community Resilience Planning through a cooperative agreement with Colorado State University [Awards # 70NANB20H008 and 70NANB15H044]. The contents expressed in this paper are the views of the authors and do not necessarily represent the opinions or views of the National Institute of Standards and Technology (NIST), the National Science Foundation (NSF), or the Air Force Office of Scientific Research (AFORS).

References

- An and Zeng [2015] Y. An and B. Zeng. Exploring the modeling capacity of two-stage robust optimization: Variants of robust unit commitment model. IEEE Transactions on Power Systems, 30(1):109–122, 2015. doi: 10.1109/TPWRS.2014.2320880.

- An et al. [2014] Y. An, B. Zeng, Y. Zhang, and L. Zhao. Reliable p-median facility location problem: two-stage robust models and algorithms. Transportation Research Part B: Methodological, 64:54–72, 2014.

- Ansari [2023] M. Ansari. Github repository. Online at https://github.com/mehdi-ansari/Two_Stage_Robust_Tornado_Problem, Accessed September 2023, September 2023.

- Ansari et al. [2022] M. Ansari, J. S. Borrero, and L. Lozano. Robust minimum-cost flow problems under multiple ripple effect disruptions. INFORMS Journal on Computing, 2022.

- Atamtürk and Zhang [2007] A. Atamtürk and M. Zhang. Two-stage robust network flow and design under demand uncertainty. Operations Research, 55(4):662–673, 2007.

- Ben-Tal and Nemirovski [1999] A. Ben-Tal and A. Nemirovski. Robust solutions to uncertain linear programs. Operations Research Letters, 25:1–13, 1999.

- Ben-Tal et al. [2004] A. Ben-Tal, A. Goryashko, E. Guslitzer, and A. Nemirovski. Adjustable robust solutions of uncertain linear programs. Mathematical programming, 99(2):351–376, 2004.

- Ben-Tal et al. [2011] A. Ben-Tal, B. Do Chung, S. R. Mandala, and T. Yao. Robust optimization for emergency logistics planning: Risk mitigation in humanitarian relief supply chains. Transportation research part B: methodological, 45(8):1177–1189, 2011.

- Ben-Tal et al. [2015] A. Ben-Tal, E. Hazan, T. Koren, and S. Mannor. Oracle-based robust optimization via online learning. Operations Research, 63(3):628–638, 2015.

- Bertsimas and Sim [2004] D. Bertsimas and M. Sim. The price of robustness. Operations Research, 52(1):35–53, 2004.

- Borrero and Lozano [2021] J. S. Borrero and L. Lozano. Modeling defender-attacker problems as robust linear programs with mixed-integer uncertainty sets. INFORMS Journal on Computing, 33(4):1570–1589, 2021.

- Cheng et al. [2021] C. Cheng, Y. Adulyasak, and L.-M. Rousseau. Robust facility location under demand uncertainty and facility disruptions. Omega, 103:102429, 2021.

- Ding et al. [2017] T. Ding, C. Li, Y. Yang, J. Jiang, Z. Bie, and F. Blaabjerg. A two-stage robust optimization for centralized-optimal dispatch of photovoltaic inverters in active distribution networks. IEEE Transactions on Sustainable Energy, 8(2):744–754, 2017. doi: 10.1109/TSTE.2016.2605926.

- Fan and Pang [2019] F. Fan and W. Pang. Stochastic track model for tornado risk assessment in the us. Frontiers in built environment, 5:37, 2019.

- Farokhnia et al. [2020] K. Farokhnia, J. W. van de Lindt, and M. Koliou. Selection of residential building design requirements to achieve community functionality goals under tornado loading. Practice Periodical on Structural Design and Construction, 25(1):04019035, 2020.

- FEMA [2003] FEMA. Multi-hazard loss estimation methodology: Earthquake model. Hazus MH 2.1. 2003.

- FEMA [2021] FEMA. Natural hazard retrofit program toolkit. https://www.fema.gov/sites/default/files/documents/fema_natural-hazards-retrofit-program-tookit.pdf, Accessed January 2023, February 2021.

- FEMA [2022a] FEMA. Tornado–alerts and warnings. Online at https://community.fema.gov/ProtectiveActions, Accessed November 2022, November 2022a.

- FEMA [2022b] FEMA. Resources for repairing, retrofitting and rebuilding after a tornado. https://www.fema.gov/fact-sheet/resources-repairing-retrofitting-and-rebuilding-after-tornado-0, Accessed January 2023, March 2022b.

- Gabrel et al. [2014] V. Gabrel, M. Lacroix, C. Murat, and N. Remli. Robust location transportation problems under uncertain demands. Discrete Applied Mathematics, 164:100–111, 2014.

- Ho-Nguyen and Kılınç-Karzan [2018] N. Ho-Nguyen and F. Kılınç-Karzan. Online first-order framework for robust convex optimization. Operations Research, 66(6):1670–1692, 2018.

- IN-CORE [2022] IN-CORE. Interdependent networked community resilience modeling environment. https://incore.ncsa.illinois.edu/, Accessed October 2022, October 2022.

- Jabr et al. [2015] R. A. Jabr, I. Džafić, and B. C. Pal. Robust optimization of storage investment on transmission networks. IEEE Transactions on Power Systems, 30(1):531–539, 2015. doi: 10.1109/TPWRS.2014.2326557.

- Jiang et al. [2012] R. Jiang, M. Zhang, G. Li, and Y. Guan. Benders’ decomposition for the two-stage security constrained robust unit commitment problem. In IIE Annual Conference. Proceedings, page 1. Institute of Industrial and Systems Engineers (IISE), 2012.

- Koliou and van de Lindt [2020] M. Koliou and J. W. van de Lindt. Development of building restoration functions for use in community recovery planning to tornadoes. Natural Hazards Review, 21(2):04020004, 2020.

- Kuligowski et al. [2014] E. D. Kuligowski, F. T. Lombardo, L. Phan, M. L. Levitan, and D. P. Jorgensen. Final report, national institute of standards and technology (nist) technical investigation of the may 22, 2011, tornado in joplin, missouri. 2014.

- Laporte and Louveaux [1993] G. Laporte and F. V. Louveaux. The integer L-shaped method for stochastic integer programs with complete recourse. Operations Research Letters, 13:133–142, 1993.

- Li et al. [2021] Y. Li, F. Zhang, Y. Li, and Y. Wang. An improved two-stage robust optimization model for cchp-p2g microgrid system considering multi-energy operation under wind power outputs uncertainties. Energy, 223:120048, 2021.

- Lin et al. [2004] X. Lin, S. L. Janak, and C. A. Floudas. A new robust optimization approach for scheduling under uncertainty: I. bounded uncertainty. Computers & chemical engineering, 28(6-7):1069–1085, 2004.

- Ma et al. [2018] S. Ma, B. Chen, and Z. Wang. Resilience enhancement strategy for distribution systems under extreme weather events. IEEE Transactions on Smart Grid, 9(2):1442–1451, 2018.

- MacQueen [1967] J. MacQueen. Classification and analysis of multivariate observations. In 5th Berkeley Symp. Math. Statist. Probability, pages 281–297, 1967.

- Masoomi and van de Lindt [2018] H. Masoomi and J. W. van de Lindt. Restoration and functionality assessment of a community subjected to tornado hazard. Structure and Infrastructure Engineering, 14(3):275–291, 2018.

- Masoomi et al. [2018] H. Masoomi, M. R. Ameri, and J. W. van de Lindt. Wind performance enhancement strategies for residential wood-frame buildings. Journal of Performance of Constructed Facilities, 32(3):04018024, 2018.

- Matthews et al. [2019] L. R. Matthews, C. E. Gounaris, and I. G. Kevrekidis. Designing networks with resiliency to edge failures using two-stage robust optimization. European Journal of Operational Research, 279(3):704–720, 2019.

- McAllister et al. [2015] T. P. McAllister et al. Community resilience planning guide for buildings and infrastructure systems, volume i. 2015.

- Mutapcic and Boyd [2009] A. Mutapcic and S. Boyd. Cutting-set methods for robust convex optimization with pessimizing oracles. Optimization Methods & Software, 24(3):381–406, 2009.

- Neyshabouri and Berg [2017] S. Neyshabouri and B. P. Berg. Two-stage robust optimization approach to elective surgery and downstream capacity planning. European Journal of Operational Research, 260(1):21–40, 2017.

- NOAA [2022a] NOAA. National centers for environmental information, tornadoes statistics. Online at https://www.ncei.noaa.gov, Accessed September 2022, September 2022a.

- NOAA [2022b] NOAA. Storm predication center - national weather service. Online at https://www.spc.noaa.gov/, Accessed October 2022, October 2022b.

- NOAA [2022c] NOAA. Tornadoes frequently asked questions. Online at https://www.weather.gov/lmk/tornadoesfaq, Accessed November 2022, November 2022c.

- NOAA [2022d] NOAA. Supercells. Online at https://www.spc.noaa.gov/misc/AbtDerechos/supercells.htm, Accessed November 2022, November 2022d.

- NOAA [2022e] NOAA. Weather related fatality and injury statistics. Online at https://www.weather.gov/hazstat/, Accessed November 2022, November 2022e.

- NOAA [2022f] NOAA. National weather service, the enhanced fujita scale (ef scale). https://www.weather.gov, Accessed September 2022, September 2022f.

- NOAA [2023] NOAA. Damage assessment toolkit. Online at https://apps.dat.noaa.gov/stormdamage/damageviewer/, Accessed March 2023, March 2023.

- NWC [2023] NWC. Historical data of oklahoma county tornadoes. Online at https://www.weather.gov/oun/tornadodata-county-ok-oklahoma, Accessed January 2023, January 2023.

- Ripberger et al. [2018] J. T. Ripberger, H. C. Jenkins-Smith, C. L. Silva, J. Czajkowski, H. Kunreuther, and K. M. Simmons. Tornado damage mitigation: Homeowner support for enhanced building codes in oklahoma. Risk analysis, 38(11):2300–2317, 2018.

- Saba [2013] S. Saba. Line intersecting maximal number of circles (circle “stabbing” problem). https://sahandsaba.com/line-intersecting-maximal-number-of-circles.html, Accessed October 2022, October 2013.

- Shams et al. [2021] M. H. Shams, M. Shahabi, M. MansourLakouraj, M. Shafie-khah, and J. P. Catalão. Adjustable robust optimization approach for two-stage operation of energy hub-based microgrids. Energy, 222:119894, 2021.

- Simmons et al. [2015] K. M. Simmons, P. Kovacs, and G. A. Kopp. Tornado damage mitigation: Benefit–cost analysis of enhanced building codes in oklahoma. Weather, climate, and society, 7(2):169–178, 2015.

- Standohar-Alfano et al. [2017] C. D. Standohar-Alfano, J. W. van de Lindt, and B. R. Ellingwood. Vertical load path failure risk analysis of residential wood-frame construction in tornadoes. Journal of Structural Engineering, 143(7):04017045, 2017.

- Stoner and Pang [2021] M. Stoner and W. Pang. Tornado hazard assessment of residential structures built using cross-laminated timber and light-frame wood construction in the us. Natural Hazards Review, 22(4):04021032, 2021.

- Strader et al. [2016] S. M. Strader, T. J. Pingel, and W. S. Ashley. A monte carlo model for estimating tornado impacts. Meteorological Applications, 23(2):269–281, 2016.

- Takeda et al. [2008] A. Takeda, S. Taguchi, and R. Tütüncü. Adjustable robust optimization models for a nonlinear two-period system. Journal of Optimization Theory and Applications, 136(2):275–295, 2008.

- The White House [2022] The White House. Fact sheet: Biden–harris administration launches initiative to modernize building codes, improve climate resilience, and reduce energy costs. https://www.whitehouse.gov/briefing-room/statements-releases/2022/06/01/fact-sheet-biden-harris-administration-launches-initiative-to-modernize-building-codes-improve-climate-resilience-and-reduce-energy-costs/, June 2022.

- Thiele et al. [2009] A. Thiele, T. Terry, and M. Epelman. Robust linear optimization with recourse. Rapport technique, pages 4–37, 2009.

- van de Lindt and Dao [2009] J. W. van de Lindt and T. N. Dao. Performance-based wind engineering for wood-frame buildings. Journal of Structural Engineering, 135(2):169–177, 2009.

- Velasquez et al. [2020] G. A. Velasquez, M. E. Mayorga, and O. Y. Özaltın. Prepositioning disaster relief supplies using robust optimization. IISE Transactions, 52(10):1122–1140, 2020.

- Wang et al. [2017] J. Wang, S. Cao, W. Pang, and J. Cao. Experimental study on effects of ground roughness on flow characteristics of tornado-like vortices. Boundary-Layer Meteorology, 162:319–339, 2017.

- Wang et al. [2021] W. Wang, J. W. Van De Lindt, N. Rosenheim, H. Cutler, B. Hartman, J. Sung Lee, and D. Calderon. Effect of residential building wind retrofits on social and economic community-level resilience metrics. Journal of Infrastructure Systems, 27(4):04021034, 2021.

- Wen [2021] Y. Wen. Development of Multi-Objective Optimization Model of Community Resilience on Mitigation Planning. PhD thesis, University of Oklahoma, 2021.

- Xiong et al. [2017] P. Xiong, P. Jirutitijaroen, and C. Singh. A distributionally robust optimization model for unit commitment considering uncertain wind power generation. IEEE Transactions on Power Systems, 32(1):39–49, 2017.

- Yao et al. [2009] T. Yao, S. R. Mandala, and B. Do Chung. Evacuation transportation planning under uncertainty: a robust optimization approach. Networks and Spatial Economics, 9(2):171, 2009.

- Yuan et al. [2016] W. Yuan, J. Wang, F. Qiu, C. Chen, C. Kang, and B. Zeng. Robust optimization-based resilient distribution network planning against natural disasters. IEEE Transactions on Smart Grid, 7(6):2817–2826, 2016.

- Zeng and Zhao [2013] B. Zeng and L. Zhao. Solving two-stage robust optimization problems using a column-and-constraint generation method. Operations Research Letters, 41(5):457–461, 2013.

- Zhao and Zeng [2012] L. Zhao and B. Zeng. Robust unit commitment problem with demand response and wind energy. In 2012 IEEE power and energy society general meeting, pages 1–8. IEEE, 2012.

APPENDIX

1 Proof of -hardness

Proposition 3.

The optimization problem (1) is NP-hard.

Proof: Consider an arbitrary instance of the knapsack problem with items, weights , values , capacity , and value . We build an instance of problem (1) that is equivalent to the knapsack problem. Consider an instance of problem (1) with and . Observe that because , has to be equal to a vector of ones. Suppose that the coordinates of the locations and the values of and are such that for all is feasible in . For simplicity, we drop index from the notation. Also, note that can be replaced by for all because only two recovery strategies are assumed. The specific case of the problem can be rewritten as

| (19a) | ||||

| s.t. | (19b) | |||

| (19c) | ||||

Consider the example of problem (19) where , and for each item , and . The answer of knapsack problem for some instance is YES (i.e., ), if and only if .

2 Proof of Proposition 1

Proof: Suppose , and for the sake of contradiction that . For the constraint in (3b), we have

| (20a) | |||

| (20b) | |||

The sum of two above inequalities results

| (21) |

and by applying the triangle inequality , we conclude that

| (22) |

Finally, we employ the fact that to reach

| (23) |

Now, we show (23) cannot be true because , i.e.,

| (24) |

By constraint (3d) and using , we have

| (25) |

Note that . So, the two above inequalities imply that

| (26) | ||||

Observe that (23) violates the true inequality (LABEL:contradiction_res). Therefore, the cut is valid because is an infeasible solution under the assumption .

3 Proof of Proposition 2

This section provides a more detailed discussion (and a more detailed proof) for Proposition 2. Proof: We are interested to identify the division of space into feasible () and infeasible () subspaces for two locations and with coordinates and , respectively. For the sake of simplicity, we assume that throughout the proof; the results can be easily extended to the case .

Proof: We find feasible slope and y-intercept of lines in the form of with a distance from both locations and which means the following equalities must simultaneously hold,

| (27a) | |||

| (27b) | |||

The above equalities imply two possible cases:

| (28) | ||||

Case 1.

| (29) |

By replacing in (27a), we will have

| (30a) | |||||

| (30b) | |||||

| (30c) | |||||

| (30d) | |||||

So, the tornado paths with formulations

| (31a) | |||

| (31b) | |||

are two boundaries that cover both locations (observe that any other between and () corresponding to the slope forms a tornado path that has a distance at most to the locations and ). The tornado in (31a) covers all locations in distance between the lines (see (10b) for line ) and . Similarly, the tornado (31b) covers all points between and (see (10a) for line ).

Replacing in (27b) will give the same boundaries as (27a).

Case 2.

| (32) |

By replacing in (27a), we have

| (33) | ||||

Instead of solving (33) for which leads to a complicated quadratic equation, we exploit the form of equality to compute possible values of . Observe that (33) can be interpreted as a line passing through the origin and has a distance from the point (see Figure 13).

Now, we discuss that the angles corresponding to the possible slopes of must be

| (34) | |||

| (35) |

where and by the definition. Therefore,

| (36) | |||

| (37) |

So, the resulting tornado paths are

| (38) | ||||

| (39) | ||||

Observe that these tornado paths pass through the midpoint . So, two edges of the tornado path in (38) with distance will be and (see lines and in (10c) and (10d)). Likewise, the edges of (39) are and (see lines and in (10e) and (10f)). Replacing in (27b) leads to the same equality in (33), and so the same conclusions.

In the following, we determine the feasible pair for the line to cover both locations and within the distance , where is the angle of the slope of the line and is the -intercept of the line given angle . It can be shown that any feasible slope angle must be inside the interval as follows.

| (40a) | |||

| (40b) | |||

The summation of (40a) and (40b) results in

| (41) |

By the triangle inequality , we conclude

| (42) |

Based on Case 2 in the proof of Lemma 1, (42) implies that .

Now, we compute the feasible for given . From (40a) and (40b), we have

| (43a) | |||

| (43b) | |||

Note that . For the sake of simplicity, we assume hereafter that and (which implies ) and then discuss two cases for a feasible followed by the inequalities (43a) and (43b):

-

1.

:

(44) -

2.

:

(45)

Figure 14 visualizes an example of the feasible ranges (44) and (45) for given angle .

Inequalities (44) and (45) imply line for given is feasible (covers and within a distance of ), if and only if a point on the line meets

| (46) |

Remark 4.

Given the angle , a feasible line covers a point within the distance , if and only if

| (47) |

Regarding Remark 47, to obtain an inclusive set of feasible coverage for any line where , define

and

A point is covered within distance of some feasible line if and only if .

In the following, we find the upper and lower bounds of the set to create the set of infeasible for some location which . First, the upper bound of set is discussed as follows.

Define

which is the intersection of two extreme lines and (see Lemma 1 and note that and are other representations of and ). We claim that line for and line for are the upper bounds of .

Lemma 2.

Function is strictly increasing.

Proof: We show the derivative of is strictly positive:

| (48) | ||||

Using Lemma 2, we conclude:

-

1.

:

(49) -

2.

:

(50)

Hence, the upper bound of will be (see Figure 15)

| (51) |

Likewise, upper bound of can be determined by defining: