Two Dimensional Swarm Formation in Time-invariant External Potential:

Modelling, Analysis, and Control

Abstract

Clustering formation has been observed in many organisms in Nature. It has the desirable properties for designing energy efficient protocols for Wireless Senor Networks (WSNs). In this paper, we present a new approach for energy efficient WSNs protocol which investigate how cluster formation of sensors response to external time-invariant energy potential. In this approach, the necessity of data transmission to Base Station is eliminated, thereby conserving energy for WSNs. We define swarm formation topology, and estimate the curvature of external potential manifold by analyzing the change of the swarm formation in time. We also introduce a dynamic formation control algorithm for maintaining defined swarm formation topology in external potential.

I Introduction

Wireless Sensor Networks (WSNs) have attracted much attention due to its ability to provide ubiquitous and multi-faceted situational awareness with a host of applications ranging from structural health monitoring, habitat surveillance, and target detection to power system management, smart car parking, and wireless luggage tags Erol-Kantarci and Mouftah (2011); Gao and Jin ; Li et al. (2002); Ling et al. (2009); Srikanth et al. . WSN depends on spatially distributed sensor node to measure and collect the desire environmental data within its sensing range, and then transmit to a control center called Base Station (BS). The ideal WSN should be autonomous, robust, scalable, and with extended network lifetime. However, WSNs are usually deployed in hostile environments where energy resources is limited. As energy is constrained and data transmission is most energy costly, WSNs algorithms need to be architect in ways where data transmission, especially to BS, is minimized. Clustering become an often utilized technique in designing energy efficient algorithm for WSNs.

Cluster formations can be readily observed in Nature, such as bird flocking and fish schooling. These organism systems are expressing collective motion behaviours and are studied in a relatively novel interdisciplinary field of research, Swarm Intelligence (SI). Individual agents in the swarm are simple agents with limited sensing abilities and computational rules, and interacting with each other locally. Nevertheless, the swarm as a whole demostrates emergent global behaviours which are unknown to the individuals. SI systems have the desirable properties of being distributed, autonomous, scalable, and robust Blum and Li (2008). All of which are key in designing algorithms for WSNs.

Plentiful of clustering algorithm has been developed Gomathi and Sivanesan (2019); Jin et al. (2008); Mehdi Afsar and Tayarani-N (2014). However, besides the main objective of energy conservation, the existing clustering algorithms mainly focus on the optimalization of sensor protocal routines to enhance WSNs in scalability, fault-tolerance, data aggregation, load balancing and network topology stability. All of which data transmission to BS is unavoidable. To address the energy efficiency challenge from a new perspective, this paper combines SI concept with WSNs. We focuses on designing WSNs algorithm where desired environmental information are obtained through analyzing the change in sensor cluster formations (swarm formation) rather than information collected directly through individual sensors, thereby minimizing the energy expenditure in data tranmission between sensors and eliminates the necessity of BS.

We identify the envrionmental information as a external potential hypersuface of -dimensions ( or ). Mathematically, is a Riemannian manifold defined by the set of solutions to a single equation

| (1) |

where is a function. We introduce a formation analysis algorithm which uses swarm formation in external potential to estimate curvature, which is invariant under isometry, of the manifold .

This paper is organized as the follows. In section II, we introduce the topology of swarm lattice formation and demonstrate how to construct such formation in an arbitary external potential. In section III, we explain the theoretical basis and computations for the formation analysis algorithm. In section IV, we formulate an formation control algorithm which shows how the swarm lattice formation can be obtained by dynamical control of the individual agents. In section V, we discuss the simulation results of both formation analysis and formation control algorithms.

II Swarm formation

The topology definition of the swarm formation is inspired by Reynolds Reynolds (1987) and Olfati-Saber Olfati-Saber (2006). In this paper, we consider line formation. In the line formation, agents are being divided into two groups: one head agent and the following agents. The head agent acts as an initial stimuli to the swarm motion. Its dynamics can be predefined and are not affected by the following agents in the swarm.

Define a path graph as a pair that consists of a set of vertices and a set of edges such that , where . Each vertex represents an agent of the swarm, while the edges represent the inter-agent communications. Let denote the position of agent for all . The vector is the configuration of all agents of the swarm. The inter-agent distance, that is the length of edges, is defined to be the geodesic length between two connected vertices over . To maintain identical inter-agent distance, we consider an algebraic constraint on the edges,

| (2) |

A configuration that satisfying the set of constraints in (2) is refered as a lattice formation.

To formulate lattice formation in any arbitary external potential , we need to first define the trajectory of the head agent, then construct a representation of edges – a parallel vector field that is metrically orthogonal to the head agent trajectory, and finally calculate the geodesic deviation vector field. The trajectories of the following agents are the integral curvces of the geodesic deviation vector field. The theoretical basis Lee (1997) and details of the formulation are discussed as follows.

Let be a non-empty open subset and a function defining the external potential. Let be the graph of . The closed subset in projects homeomorphically onto with inverse that is a smooth mapping from to . is a closed smooth submanifold of . Using the standard Riemannian metric on , the induced metric on at a point is

| (3) |

with coordinate chart on . Each can be represented as a linear combination of , given as

| (4) |

Consider the aforementioned graph as a Riemannian manifold. Given a curve, , a vector field along is any section of the tangent bundle over (, projection , such that ). If is a smooth manifold, all vector field on the manifold are also smooth. We denote the collection of all smooth vector fields on manifold as . For a Riemannian manifold , the Levi-Civita connection on M is the unique connection on that has both metric compatibility and torsion freeness. The Christoffel symbols of the second kind are the connection coefficients (in a local chart) of the Levi-Civita connection denoted as

| (5) |

For a Riemannian manifold , a curve is called geodesic with respect to the connection if its acceleration it zero. That is a curve where . A geodesic curve in n-dimensional Riemannian manifold can be expressed as a system of second order ordinary differential equations,

| (6) |

All geodesics are the shortest path between any two points on the manifold.

We predefine the trajectory of the head agent to be a geodesic curve on manifold. Base on (6), for a two-dimensional manifold , head agent trajectory can be expressed as a system of ordinary differential equations

| (7) | ||||

where basis are used in the index, and are the first derivative with respect to time. Each Christoffel symbols depends entirely on the metric at a certain point in in terms of basis . Given initial conditions, , (7) is guaranteed to have a solution according to the Picard-Lindelf theorem. This choice of head agent trajectory is made to simplify agent’s dynamic control. On the geodesic, the head agent is traveling with constant velocity given initial position and velocity, thus no requirement for outside reference beacon.

The distance of the edges of lattice formation needs to be identical. For edges representation, a parallel vector field that is orthogonal to the head agent trajectory is constructed as

| (8) |

forms a family of geodesics where we require the initial position and velocity conditions for th geodesic are , , . The frequency of inter-agent communications is defined by the number of geodesics in within given traveling time.

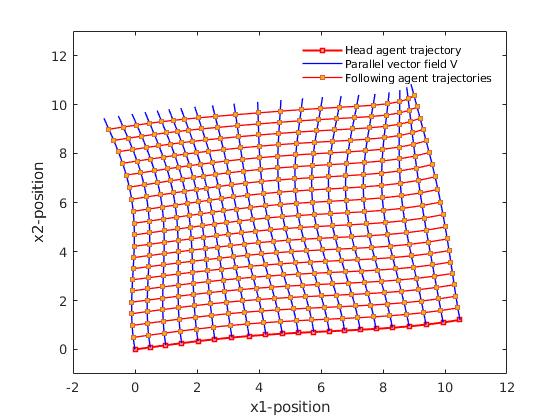

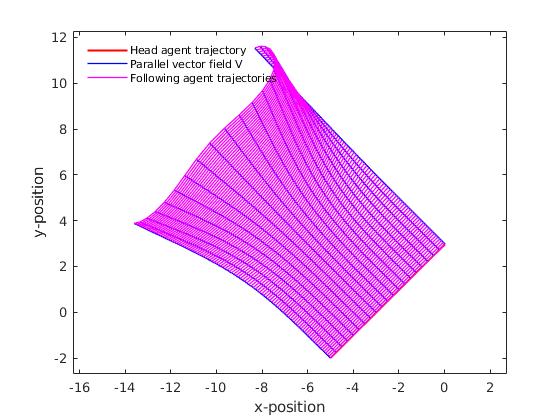

The separation vector connects a point on one geodesic to a point on a nearby geodesic at the same time. For parallel vector field , we can construct a separation vector field such that are the separation vector discribed above. For the swarm lattice formation, the following agents’ trajectories are the integral curves of . Fig. 1 gives a visual example of two-dimensinal swarm lattice formation traveling in external potential.

III Formation analysis

In Fig. 1, one can notice the change in swarm lattice formation as it travels due to the curvature of the external potential manifold. As an invariant property under isometry, curvature tensor gives valuable information about the external potential manifold itself. We quantify this change as the acceleration of the separation vectors along , which is equivalent to the change in the difference of neighbouring agents’ velocities. Geodesic deviation equation relates the acceleration of the separation vector between two neighbouring geodesic curves to Riemann curvature tensor. The theoretical elements Lee (1997); Ivancevic and Ivancevic (2007) and analysis method are discribed in details as follows.

The Riemann curvature tensor is a tensor defined through the Lie bracket on as

| (9) |

where , and is vector-valued. can be express in local chart as

| (10) |

By our definition in section II, the separation vector can be written as vectors,

| (11) |

between two nearby geodesics. The separation acceleration field is

| (12) |

where is the separation velocity field . In a local chart, and can be expressed as

| (13) | ||||

| (14) |

where . Combining (13) and (14) gives

| (15) | ||||

where . Rearranging (11) to be

| (16) |

where separation vector is treated as an expansion parameter. Make use of the fact that both and are geodesics, then we have

| (17) |

Inserting (17) into (15), the first-order geodesic deviation equation is

| (18) | ||||

A vector field along a geodesic is called a Jacobi field if

| (19) |

where , and . Obviously, the deviation vector field is a Jacobi field.

Furthermore, sectional curvature is a equivalent but more geometrical description of the curvature of Riemannian manifolds. Let tangent 2-plane, , be the two dimensional subspace in defined as , with . Sectional curvature of at a point with respect to the plane is defined as

| (20) | ||||

Substitude the vector fields and we constructed in section II into (20), we have

| (21) | ||||

Also is in fact a Jacobi field along that is also orthogonal to . That is , and . Combining with the fact that the integral curves of are geodesics set to have velocity , the equation for sectional curvature is simplified to

| (22) |

For a two dimensional Riemannian manifold , there is only one sectional curvature at each point .

In summary, by recording the change of swarm lattice formation in external potential, observer can quantify two variables: , agents’ velocities; , the second-order differences of nearby agents’ velocities. The geodesic deviation equation (22) relates and by sectional curvature of the external potential manifold.

IV Formation control

To stay in lattice formation as the swarm travling through external potential, individual agent needs dynamic control of its trajectory. The trajectory of individual agent can be considered as a dynamical system of the form Strogatz (1994)

| (23) |

where is the state of the system, that is the position of the agent, and is a vector field depends on the system. In practice, we consider the equivalent discrete-time dynamical system,

| (24) |

where is the sampling of agent trajectory in (23) discretely in timesteps . If we can assume the system is of linear dynamic, then we can work with the form

| (25) |

where it admits a closed-form solution

| (26) |

The entire system dynamic is characterized by the eigenvalues and eigenvectors of the matrix . Using spectral decomposition, one can simplify the dynamic system to

| (27) |

with , and . Using , we can also predict agents’ trajectories in time, thereby controlling the swarm to be in its lattice formation as time evolves.

Even though it is desirable to work with linear dynamical systems, the curvature property of the external potential manifold, and thus the trajectories of agents are essentially nonlinear. To analyze nonlinear dynamic with linear technique, we utilize Koopman operator theory.

IV.1 Koopman operator theory and dynamic mode decomposition

Bernard O. Koopman has proved the posibility of representing a nonlinear dynamical system in terms of an infinite-dimensional linear operator acting on a Hilbert space of measurement functions of the state of the system. The basic elements of Koopman spectral analysis is dicussed below Brunton and Kutz (2019); Mauroy, Mezic, and Susuki (2020); Susuki et al. (2016); Mezić (2015).

Consider a real-valued measurement function , known as observables, which belongs to the infinite dimensional Hilbert space. The Koopman operator is an infinite-dimensional linear operator that acts on the observable as

| (28) |

where is the system dynamic, and is the composition operator. For discrete-time system with timestep , it becomes

| (29) |

Even though Koopman operator is linear, it is also infinite dimensional. Thus it is important to identify key measurement functions that evolve linearly with the flow of the dynamic. Eigen-decomposition of the Koopman operator can provide us with such a set of measurement functions that captures the dynamics of the system and also behaves linearly in time. A discrete-time Koopman eigenfunction and its corresponding eigenvalue satisfies

| (30) |

Nonlinear dynamics become linear in these eigenfunction coordinate.

In a general dynamic system, the measurement functions can be arranged into a vector :

| (31) |

Each measurement functions may be expanded in terms of eigenfunctions , thus vector can be written as:

| (32) |

where is the -th Koopman mode associated with the eigenfunction . Given this decomposition, we can represent the dynamics of the system in terms of measurement function as

| (33) | ||||

The sequence of triples is the Koopman mode decomposition.

Finding such Koopman mode is extremely diffcult even for system with known governing equations. For systems with unknown governing equation, such as in our situation, dynamic mode decomposition (DMD) algorithm is adopted. As one of the modal decomposition technique, DMD is first introduced in fluid dynamics community to analyze high-dimensional fluid state. It provides information about the dynamics of a flow in superposition of modes (similar to eigenmodes), even if the flow is nonlinear. DMD analysis can be considered as a approximation to Koopman spectral analysis, and also provides a numerial method for computing Koopman modes. In this paper, the formation control algorithm is inspired by online DMD framework from Zhang et al. Zhang et al. (2019).

We consider a sequential set of data vectors , where each , are system states of time to . These data can be arraged into two matrices

| (34) | ||||

| (35) |

We assume there exist an operator that approximates the nonlinear dynamic of the system as

| (36) |

Then the best-fit operator is defined as

| (37) |

where is the Frobenius norm. The unique solution to least-square problem is given by

| (38) |

where denotes the Moore-Penrose pseudoinverse of .

Having , computed from data from up to , we can predict of the system, that is controlling agent’s trajectory. Since new data are feeded in to the system as time progresses, is also changing. Unlike the standard DMD algorithm, online DMD algorithm updates operator using the new system data, providing a more reliable operator for the prediction of future system states. The algorithm does not compute the least-square problem of the whole system once new data is been updated. Instead, it computes given and new pairs of data , on the assumption that is close to in some sense.

Using (38), we have

| (39) |

We define two new matrices and ,

| (40) | ||||

| (41) |

so that . is well-defined if we ensure is invertible. The operator at time is related to as

| (42) | ||||

Using Sherman-Morrison formula, we can express as

| (43) | ||||

can be updated more efficiently, without the computation of inverses. Combining (43) and (42), we obtain the formula

| (44) |

is computed using and new data pair .

IV.2 Formation control algorithm

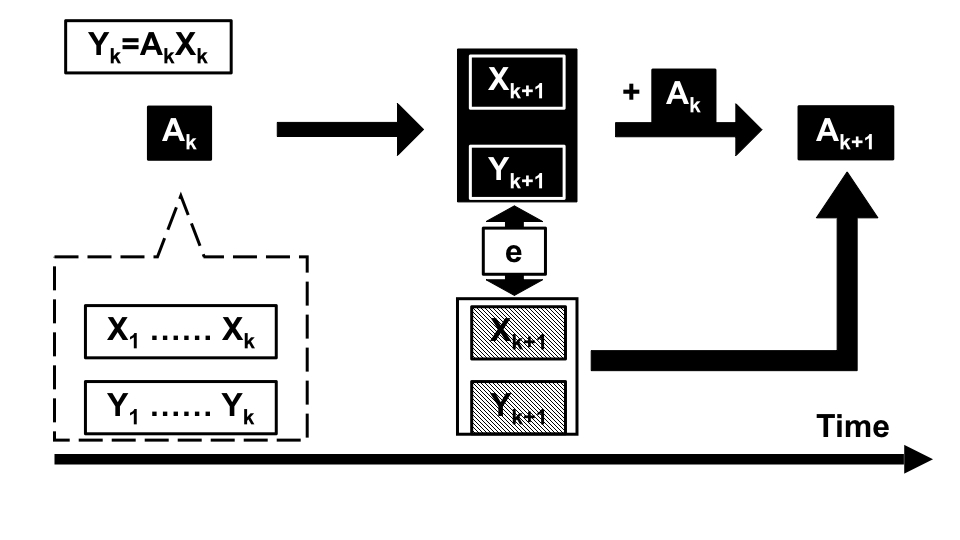

The aforementioned Online-DMD algorithm is a data-driven algorithm which predicts nonlinear dynamics. Therefore, even without knowledge of the external energy potential, it is still possible to control the lattice formation of the swarm. The general scheme of the control algorithm is shown in Fig. 2.

Notice, the predicted agent trajectory is not equivalent to the ideal trajectory of lattic formation, correction term need to be added to the prediction. The details of Online DMD algorithm modeled specific to control the lattice formation of swarm is shown next.

Algorithm 1.

This algorithm is scalable to the rest of the following agents in the swarm, thereby the whole swarm can be dynamically controlled to stay in lattice formation.

V Simulation and discussion

V.1 Formation analysis algorithm

The formation analysis algorithm in section III is perfomred on swarm lattice formation in three different external potentials:

-

1.

elliptic paraboloid: ,

-

2.

hyperbolic paraboloid: ,

-

3.

sinusoidal and cosusoidal: ,

parametrized by . All simulations used 100 following agents, with traveling time .

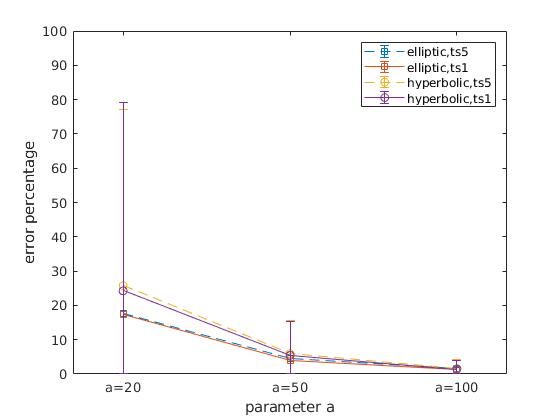

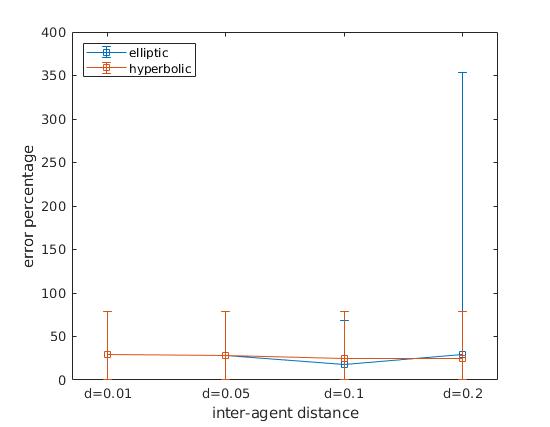

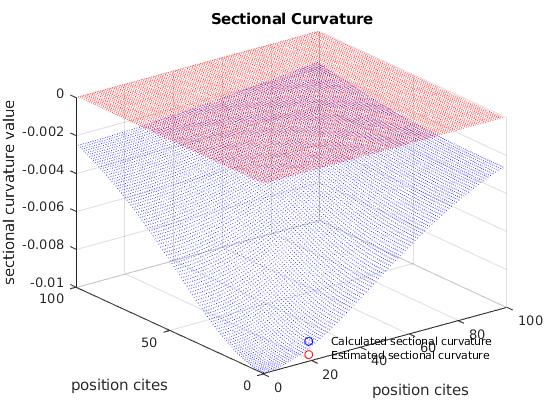

The algorithm is able to estimate the sectional curvature of all three external potential manifolds with some extend of accuracy. Overall, the estimation accuracy increase with decrease of curvature; while frequency of inter-agent communications and inter-agent distance constraint does not significantly affect algorithm accuracy shown in Fig. 3 and Fig. 4. However, inter-agent distance directly affect the area of which the swarm is sensing. The accuracy of the algorithm is linked to the following features of the sensing area. For external potential , both features is repsented depending on the swarm sensing area.

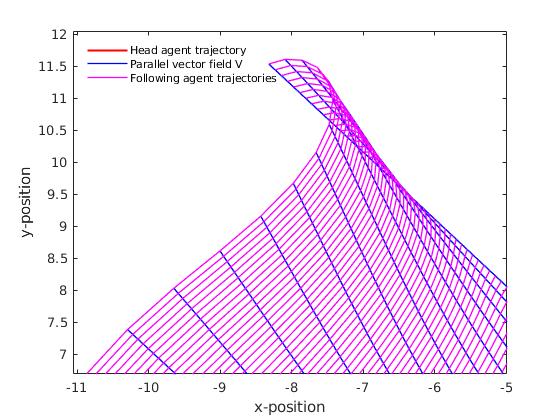

V.1.1 Jaboci field conjugate points

For external potential manifold with non-negative sectional curvature at each points, such as the elliptic paraboloid potential, there exist conjugate points in vector field . Consider are two points connected by a geodesic . are conjugate points along if there exists a non-zero Jacobi field along that vanishes at and . Conjugate points are when the geodesic fails, locally, to be the minimum length curve connecting two points. Thus, our geodesic deviation based algorithm also fails. An visual example of conjugate point is shown in Fig. 5. Additional agent’s protocols needs to be installed to identify and bypass conjugate points.

V.1.2 Striction curve

External potential two-dimensinal manifold with non-positive sectional curvature, such as the hyperbolic paraboloid, are saddle surface, and thus a ruled surface. For noncylindrical ruled surface, it always has a parameterization of the form

| (45) |

where is called the striction curve. In particular, hyperbolic paraboloid is a doubly ruled surface that has two striction curves. In our simulation of hyperbolic paraboloid potential, the two striction curves are . Shown in Fig. 6, if the head agent is travling on striction curves, the swarm is unable to estimate the sectional curvature. The acceleration of separation vector field is zero, equivalent to a flat space (zero curvature).

With the same number of agents in the swarm, the communication frequency and inter-agent distance affects the sensing area. For external potential manifold with feature one, smaller sensing area is more likely to avoid conjugate points; while for external potential manifold with feature two, larger sensing area provides with more information about the manifold. The overall shape of the swarm reveals the external potential is not zero in Fig. 6, eventhough the curvature estimation is zero.

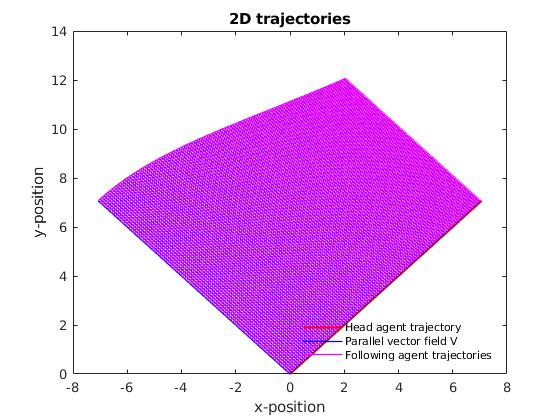

V.2 Formation control algorithm

In the simulation for formation control algorithm in section IV, we assume the agents can measure its relative distance to its neighbours. Head agent has its own course of trajectory, thus not affected by other agents in the swarm.

All following agent take its right-hand neighbour (closer to the head agent) as beacon to measure its own relative position. In ideal lattice formation, following agents are traveling in parallel and at a fixed distance to its right-hand neighbour, thus measurable in theory.

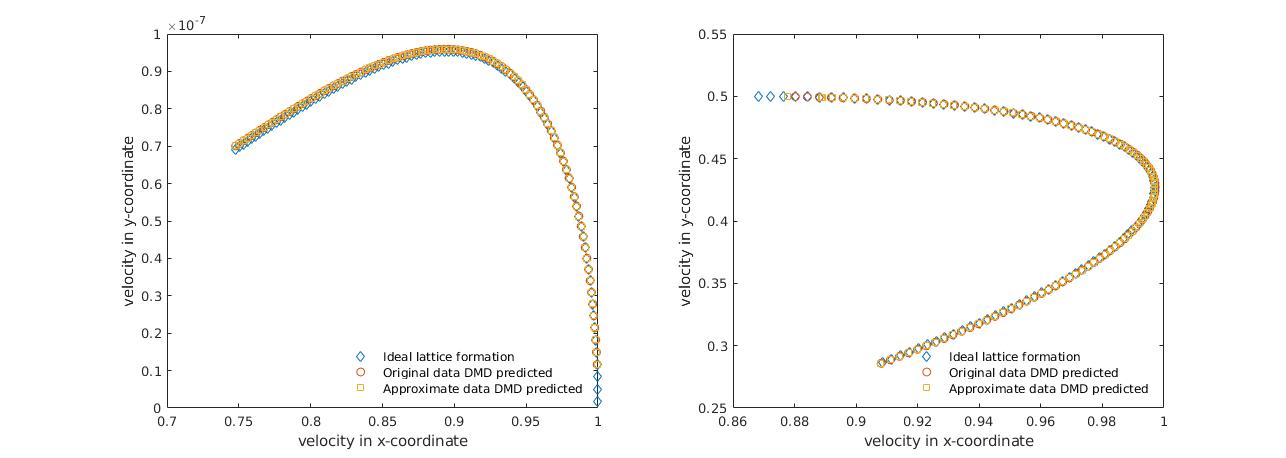

Fig. 7 shows the velocity trajectory of the following agent that is next to the head agent. In this simulation, we use timestep , data size , observable to be the velocity of agent. In practice, the data can only be collected by measuring the deviation of agents travling on nearby geodesics. Therefore, without any information of the external potential, the initial velocity of these agents can only be approximated in Eucliean metric, not the external potential metric itself. Fig. 7 shows that with reliable correction step, the algorithm has reliable prediction of the agent’s dynamic even uses data that is approximated in Eucliean metric.

However, when agents have near linear dynamic (constant velocity), the algorithm decreases in accuracy and eventually fails. This is expected as the algorithm is based on linear regression method. Therefore, an additional control protocol needs to be implanted when agents have linear dynamic, and the agents should have autonomous decision on when to switch the dynamic control protocols.

V.3 Margin of algorithm

In formation analysis algorithm simulations we have discussed for same numbers of agents, by changing communication frequency and inter-agent distance constrait, how the sensing area of the swarm is beneficial in some cases while in other cases affects the accuracy of the swarm formation analysis. Moreover, for the same sensing area, the number of agents have a positive correlation to the accuracy of the formation analysis.Size of the swarm also plays a role in swarm formation control. In formation control algorithm simulations, the control algorithm requires agents to be able to communicate regarding their relative distance and angle in terms of the external potential manifold metric. In practice, this types of sensing is extremely difficult. Approximation of this inter-agent distance can be made in Eucliean metric by on-board agent sensories. We notice when using the same number of agents while either increases the inter-agent distance or increases the communication frequency to enlarge sensing area, the Euclidean metric approximate increases its error.

In summury, smaller swarm size is more controlable but with lower accuracy in external potential estimation, and vice versa. This conflict is due to the fact for formation analysis, we utilize the nonlinearity in agents’ trajectory to estimate an nonlinear property, namely the external potential manifold curvature; while in formation control, we relay on linear approximation in Eucliean metric to correct the agent’s trajectory predictions. Therefore, the balance between number of agents, communication frequency, and inter-agent distance is crucial in optimalize our approach of energy efficient WSNs algorithm.

VI Conclusion

We introduced a new approach for designing energy efficient WSNs algorithms inspired by swarm intelligence. In this approach, we identify clustering WSN as swarm, and external potentials as manifolds. By observing the change of swarm lattice formation in external potential, we are able to estimate the curvature of the manifold, which are valuable information of the external potential. To maintain lattice formation in external potential, fomation control algorithm uses DMD to predict the optimal agent’s velocity in time thus guiding the trajectory of following agents.

Acknowledgements.

Y.W is supported by the Japanese Government MEXT Scholarship Program. Y.W thanks V. Putkaradze for advices and encouragement. T.H acknowledges I. Mezic and Y. Susuki for helpful discussions.References

- Erol-Kantarci and Mouftah (2011) M. Erol-Kantarci and H. T. Mouftah, “Wireless sensor networks for cost-efficient residential energy management in the smart grid,” IEEE Transactions on Smart Grid 2, 314–325 (2011).

- (2) Y. Gao and R. Jin, “A novel wireless sensor networks platform for habitat surveillance,” in CSSE: International conference on Computer Science and Software Engineering, Vol. 4 (IEEE) pp. 1028–1031.

- Li et al. (2002) D. Li, K. Wong, Y. H. Hu, and A. Sayeed, “Detection, classification, and tracking of targets,” IEEE Signal Processing Magazine 19, 17–29 (2002).

- Ling et al. (2009) Q. Ling, Z. Tian, Y. Yin, and Y. Li, “Localized structural health monitoring using energy-efficient wireless sensor networks,” IEEE Sensors Journal 9, 1596–1604 (2009).

- (5) S. Srikanth, P. Pramod, K. Dileep, S. Tapas, M. U. Patil, and C. B. N. Sarat, “Design and implementation of a prototype smart parking (spark) system using wireless sensor networks,” in 2009 International Conference on Advanced Information Networking and Applications Workshops (IEEE) pp. 401–406.

- Blum and Li (2008) C. Blum and X. Li, “Swarm intelligence in optimization,” in Swarm intelligence introduction and applications (Springer-Verlag Berlin Heidelberg, 2008).

- Gomathi and Sivanesan (2019) M. Gomathi and P. Sivanesan, “Routing protocols based on cluster in wireless sensor networks: A survey,” in 2019 International Conference on Intelligent Sustainable Systems (ICISS) (2019) pp. 1–6.

- Jin et al. (2008) Y. Jin, L. Wang, Y. Kim, and X. Yang, “Eemc: An energy-efficient multi-level clustering algorithm for large-scale wireless sensor networks,” Computer Networks 52, 542–562 (2008).

- Mehdi Afsar and Tayarani-N (2014) M. Mehdi Afsar and M.-H. Tayarani-N, “Clustering in sensor networks: A literature survey,” Journal of Network and Computer Applications 46, 198–226 (2014).

- Reynolds (1987) C. Reynolds, “Flocks, herds and schools: A distributed behavioral model,” ACM SIGGRAPH Computer Graphics 21, 25–34 (1987).

- Olfati-Saber (2006) R. Olfati-Saber, “Flocking for multi-agent dynamic systems: algorithms and theory,” IEEE Transactions on Automatic Control 51, 401–420 (2006).

- Lee (1997) J. M. Lee, Riemannian Manifolds: An Introduction to Curvature (Springer-Verlag New York, Inc., New York, USA, 1997).

- Ivancevic and Ivancevic (2007) V. G. Ivancevic and T. T. Ivancevic, Applied Differential Geometry: A Modern Introduction (World Scientific Publishing Co. Pte. Ltd., Singapore, 2007).

- Strogatz (1994) S. H. Strogatz, Nonlinear dynamics and Chaos : with applications to physics, biology, chemistry, and engineering, Studies in nonlinearity (Addison-Wesley Pub., Reading, Mass., 1994).

- Brunton and Kutz (2019) S. Brunton and J. Kutz, Data-Driven Science and Engineering: Machine Learning, Dynamical Sytems, and Control. (Cambridge University Press, Cambridge, England, 2019).

- Mauroy, Mezic, and Susuki (2020) A. Mauroy, I. Mezic, and Y. E. Susuki, The Koopman Operator in Systems and Control Concepts, Methodologies, and Applications: Concepts, Methodologies, and Applications (2020).

- Susuki et al. (2016) Y. Susuki, I. Mezic, F. Raak, and T. Hikihara, “Applied koopman operator theory for power systems technology,” Nonlinear Theory and Its Applications, IEICE 7, 430–459 (2016).

- Mezić (2015) I. Mezić, “On applications of the spectral theory of the koopman operator in dynamical systems and control theory,” in 2015 54th IEEE Conference on Decision and Control (CDC) (2015) pp. 7034–7041.

- Zhang et al. (2019) H. Zhang, C. Rowley, E. Deem, and L. Cattafesta, “Online dynamic mode decomposition for time-varying systems,” SIAM Journal on Applied Dynamical Systems 18, 1586–1609 (2019).