Twin Trigger Generative Networks for Backdoor Attacks against Object Detection

Abstract

Object detectors, which are widely used in real-world applications, are vulnerable to backdoor attacks. This vulnerability arises because many users rely on datasets or pre-trained models provided by third parties due to constraints on data and resources. However, most research on backdoor attacks has focused on image classification, with limited investigation into object detection. Furthermore, the triggers for most existing backdoor attacks on object detection are manually generated, requiring prior knowledge and consistent patterns between the training and inference stages. This approach makes the attacks either easy to detect or difficult to adapt to various scenarios. To address these limitations, we propose novel twin trigger generative networks in the frequency domain to generate invisible triggers for implanting stealthy backdoors into models during training, and visible triggers for steady activation during inference, making the attack process difficult to trace. Specifically, for the invisible trigger generative network, we deploy a Gaussian smoothing layer and a high-frequency artifact classifier to enhance the stealthiness of backdoor implantation in object detectors. For the visible trigger generative network, we design a novel alignment loss to optimize the visible triggers so that they differ from the original patterns but still align with the malicious activation behavior of the invisible triggers. Extensive experimental results and analyses prove the possibility of using different triggers in the training stage and the inference stage, and demonstrate the attack effectiveness of our proposed visible trigger and invisible trigger generative networks, significantly reducing the of the object detectors by 70.0% and 84.5%, including YOLOv5 and YOLOv7 with different settings, respectively.

Index Terms:

Backdoor attack, Object detection, Visibel trigger, Invisibel triggerI Introduction

Object detection is crucial in applications such as autonomous driving [1], [2], [3], [4], [5] and robotic vision [6], [7], [8], [9], [10]. Its widespread use in these fields highlights the need to address inherent security vulnerabilities, among which backdoor attacks have become a significant threat. The large-scale use of object detection usually relies on a large amount of training data [11], [12], which is a time-consuming and expensive process for dataset construction or model training for specific tasks [13], [14], [15], [16]. Therefore, many companies and organizations tend to use datasets or pre-trained detection models provided by third parties to save costs, but this brings security risks: the provided datasets may contain poisoned samples, or the provided pre-trained models are actually trained on poisoned data, so these possible vulnerabilities leave backdoors for attackers [17], [18], [19], [20], [21]. Once models are infected, they behave normally on clean data but behave abnormally on poisoned data, which is a serious threat to the large-scale application of object detection [22], [23], [24], [25], [26]. For example, hidden backdoor triggers may prevent detectors from identifying drivers and passengers, leading to serious safety accidents in autonomous driving. Most current research on backdoor attacks focuses on classification, however, recent research by Chan et al. [27] and Luo et al. [28] reveals that backdoor attacks may be applied to object detection and highlights the urgency of addressing these evolving security challenges.

Backdoor attacks in object detection are similar to those in classification. Triggers are used to implant backdoors into object detectors during the model training stage, and these hidden backdoors are activated during the inference stage to achieve malicious purposes [29]. Backdoor attacks can be mainly divided into two categories: visible trigger attacks and invisible trigger attacks. The former uses stable and visible patterns as triggers [30], [27], [28]. These methods are relatively stable in the inference stage, can be used in the physical world, and are not easy to be removed, but are easily detected in the training stage. The latter uses techniques such as steganography and adaptive perturbations to design triggers to enhance stealthiness [31], [32], [25]. Although these methods provide better stealthiness in the training stage, they have limited adaptability and scalability in the inference stage because they can only be applied to the digital world and are easy to be removed. Current backdoor attacks on object detection face the following challenges : 1) Using the same trigger during training and inference greatly reduces the stealthiness of the attack, because successful detection of the trigger at any stage of the process will lead to the leakage of the only trigger pattern, causing the failure of the whole attack. 2) The trigger is easily removed by the frequency domain classifier: Existing methods against object detection exhibit high-frequency artifacts in the frequency domain and are prone to outliers in high-frequency components, which makes the trigger very easy to detect [33].

To address these challenges, we propose twin trigger generative networks to generate invisible triggers for stealth infection and visible triggers for stable activation, as shown in Fig. 1 and Fig. 2. We first present the basic idea of constructing twin trigger generative networks through a rigorous theoretical analysis of a simple trigger image in both frequency and spatial domains. To generate invisible triggers with better stealthiness, Trigger Generative Network 1 (TGN1) is a convolutional neural network (CNN) with a Gaussian smoothing layer and a pre-trained classifier for poisoned samples with high-frequency artifacts. To enhance the adaptability and scalability of backdoor attacks, we design Trigger Generative Network 2 (TGN2) to generate visible triggers to be consistent with the attack effect of invisible triggers generated by TGN1. Both generative networks are trained in the frequency domain to eliminate high-frequency artifacts. It is worth noting that we use invisibly poisoned images to train object detectors and use visibly poisoned images to activate hidden backdoors in the inference stage. By comparing the Shapley value distribution in the frequency domain [34], the equivalence of using invisibly poisoned images and visibly poisoned images on backdoor activation can be verified. To the best of our knowledge, there is no prior research on using different triggers for the two stages. Our main contributions are as follows:

-

•

We discuss the motivation of the generative network of two types of triggers from the perspective of frequency domain and spatial domain: the visible triggers and invisible triggers in the spatial domain will be scattered and concentrated in the frequency domain. Furthermore, we theoretically proved the equivalence of visible triggers located in the four corners of the image in the frequency domain and the feasibility of Gaussian smoothing layers for generating invisible triggers.

-

•

We propose twin trigger generative networks in the frequency domain that generate invisible triggers for implantation in training stage and visible triggers for activation in inference stage, making the attack process difficult to trace, thus achieving more stealthy backdoor attack.

-

•

Experimental results on the large-scale dataset COCO show that our method has superior attack performance compared with other visible trigger and invisible trigger attack methods, and demonstrate certain generalization in black-box attack on other detection models.

II Related Work

II-A Visible Trigger Attacks on Image Classification.

Gu et al. [29] introduce BadNet, a technique that utilizes blocks of pixels as triggers. The trained victim model performs well on clean images but exhibits attacker-specific behavior when processing images with specific triggers. Chen et al. [35] develop a method that combines natural images with hybrid attacks to enhance the effectiveness of backdoor attacks. Their results show that a high attack success rate could be achieved using approximately 50 samples. Additionally, Liu et al. [30] propose a Trojan attack on neural networks, achieving nearly 100% accuracy in inducing Trojan behavior while maintaining accuracy on clean inputs. However, these methods are still easily detectable, which has led researchers to focus on making attacks more stealthy through the use of invisible triggers.

II-B Invisible Trigger Attacks on Image Classification.

Li et al. [31] propose a special invisible trigger method using steganography to hide the trigger in the model, where regularization techniques are used to generate triggers with irregular shapes and sizes. Khoa Doan et al. [36] study triggering anomalies in latent representations of victim models and designed a triggering function to reduce representation differences. Zhao et al. [32] employ adaptive perturbation technology to improve the stealthiness of attacks and the flexibility of defense strategies. These methods are limited adaptability and scalability in practical scenarios where attackers can only manipulate training data. Our work explores the stealthiness and adaptability of invisible triggers in backdoor attacks on object detection from a frequency domain perspective.

II-C Backdoor Attacks on Object Detection.

Backdoor attacks on object detection are an important but under-explored research area. Wu et al. [37] pioneer the creation of a poisoned dataset with limited object rotation and incorrect labels. Ma et al. [38] use a naturalistic T-shirt as a trigger to achieve the stealthiness effect. Ma et al. [25] present model-agnostic clean-annotation backdoor (MACAB) to produce cleanly annotated images and embed a backdoor into the victim object detector in a very stealthy way. Chan et al. [27] propose four backdoor attacks and one backdoor defense method for object detection tasks, and Luo et al. [28] demonstrate a simple and effective attack method in a non-targeted manner based on the task properties. Unlike these methods, we propose twin trigger generative networks to generate visible or invisible triggers instead of relying on heuristic rules.

III Methodology

III-A Threat Model

Attacker’s Capacities. In this paper, we focus on black-box attacks on object detection, which assumes that the attacker only has the ability to poison a part of the training data, but lacks any control or information about the trained model, including training losses, model architecture, and training methods. During the inference stage, the attacker can only use test images to query the trained object detector. Likewise, they do not have access to information about the model and cannot manipulate the inference process. These threats often occur when users use third-party training data, training platforms or model APIs.

Attacker’s Goals. Let represents the clean dataset with images, where denotes the -th image containing objects. Each object has an annotation as shown in the form of , where represents the left-top coordinates of the object, and denote the width and height of the bounding box respectively, denotes the class label of the object and denotes the probability that the box contains an object. Generally speaking, we can train a normal object detector on a dataset containing only clean images. However, a backdoor attacker who overlays some clean images with a trigger image with the same size as the clean image will obtain poisoned images , and training on a new dataset mixed with poisonous and clean images will get victim object detector , which is the target model of backdoor attackers. Ideally, there are two main goals for the attacker: 1) For any clean image , the victim object detector can correctly output the detection results; 2) For the poisoned image synthesized from the clean image , the victim object detector will not output any detection box.

III-B Visible/Invisibe Trigger in Frequency Domain

The value of the image in the spatial domain is the pixel value, and its representation in the frequency domain can be obtained through discrete cosine transformation (DCT) [39], which represents the gradient magnitude of the pixel value. The DCT is a special two-dimensional discrete fourier transform (DFT), while the inverse discrete cosine transform (IDCT) is the inverse transform of DCT. Given a trigger image with width and height in the frequency domain [40], its corresponding value in the spatial domain with IDCT transformation is

| (1) |

where , , and and are

| (2) |

Note that the coefficients and make the linear transformation matrix orthogonal.

In the frequency domain image, the non-zero values near the upper left corner correspond to areas with smaller gradient values in spatial domain, while the non-zero values near the lower right corner correspond to areas with larger gradient values.

In order to generate invisible triggers and visible triggers, analyses are made to explore their differences in the frequency domain and the spatial domain. In the spatial domain, we assume that the non-zero pixels in the visible trigger image are gathered in a certain area, such as at the four corners of the image, while the non-zero pixels in the invisible trigger image are scattered throughout the image, similar to salt and pepper noise. With the above assumption of their differences in the spatial domain, we use a binary image with size of as a demonstration to further analyze their differences in the frequency domain. The main conclusions are as follows:

-

•

When the non-zero pixels in the spatial domain are concentrated in one of the four corners, its image in the frequency domain will be spread out over the entire image.

-

•

When image in the frequency domain only retains the low-frequency components in the upper left corner, which can be obtained by using a Gaussian smoothing layer, the non-zero pixels in the spatial domain will scatter throughout the whole image.

-

•

An invisible trigger can be obtained by using a Gaussian smoothing layer because the image in the frequency domain only retains the low-frequency components in the upper left corner, causing the trigger to spread to the entire image in the spatial domain.

III-B1 Visible Trigger in Frequency Domain

We know that for any image , an image in the frequency domain will be generated after DCT transformation, and the image will be restored to an image in the spatial domain after IDCT transformation. The transformation formula is as follows (generally )

| (3) | |||||

| (4) |

where

| (5) |

and and are

| (6) |

Defining the function

| (7) |

we have

| (8) |

and

| (9) |

According to the assumption there is

| (10) | |||||

| or | |||||

Therefore when is even, the function is symmetric about the axis . Now Eqn. (11) and (12) will become

| (11) | |||||

| (12) |

where

| (13) |

Similarly, the function is symmetric about for any even number .

To facilitate discussion, we define the basic image as follows

| (14) |

and its image in the frequency domain is

| (15) |

For any even , we have

| (16) |

or

| (17) |

where is an odd number.

When one of the four corners is a trigger, as shown in Fig. 3, for the leftmost two images and of visible triggers, we have the same frequency images and

| (18) |

Below we generalize the above conclusion to the case where the trigger includes more pixels, such as image contains 4 white pixels in Fig. 3. Assume in the two trigger images and , the trigger is in the top-left corner and the bottom-left corner respectively with the set of positions

According to definition Eqn. (22), we have

At the same time, according to the additivity of DCT transformation and Eqn. (15), we obtain their frequency domain image

Therefore, fixing , when is an even number, then we have

| (20) |

otherwise, there is

| (21) |

When is fixed, we can get similar conclusions for .

III-B2 Invisible Trigger in Frequency Domain

As shown in Fig. 4, for a noise uniformly distributed on the frequency domain image, the pixel values of the image in the spatial domain will be concentrated in the upper left corner. This phenomenon has also been verified from Fig. 3.

In order to generate invisible triggers, we add a Gaussian smoothing layer to filter out the high-frequency component of the frequency domain image, and obtain an image (invisible trigger) evenly distributed in the spatial domain as shown in Fig. 4. Furthermore, we found that after the Gaussian smoothing operation, the entropy of the image increased significantly.

If we define the image in the frequency domain

| (22) |

then the image that contains only low frequency component and the pixel values of image in the spatial domain are constant values for any and

| (23) |

Similarly, we can generalize to a more general situation: for an image in the frequency domain, after passing a Gaussian smoothing layer, the image values will spread to the entire image in the spatial domain. Finally, it is recommended to see Fig. 5 for more examples of color images.

Based on the above analysis, we propose the following visible trigger and invisible trigger generative network.

III-C Twin Trigger Generative Networks against Object Detection

III-C1 Backdoor Attacks with Invisible Trigger

Typically, conventional invisible triggers are only effective in the spatial domain, while our goal is to create an invisible trigger that remains stealthy in both the spatial and frequency domains. To this end, we employ a Gaussian smoothing layer and a high frequency artifacts classifier, as inspired by [33], to guide the generation of the invisible trigger.

In particular, we poison the training set by random white block, random Gaussian noise, random shadow, etc., which show high-frequency artifacts phenomenon, following the configuration of [33]. For each sample pair including a clean sample and its poisoned version, the clean sample is labeled as 0 and the poisoned sample is labeled as 1, thereby obtaining a class-balanced training set (equal number of samples in each class). It is worth noting that class balance is crucial for classification: when the dataset is imbalanced, the trained classifier tends to classify unknown samples into the majority class, resulting in the misclassification of samples whose ground-truth is the minority class. We then establish a high frequency artifacts classifier using a simple AlexNet [41] model and binary cross-entropy (BCE) loss [42]. In fact, when using Resnet50 [43] instead of Alexnet, the impact on classifier performance is minimal (also see the Appendix section).

Our method utilizes a six-layer convolutional network to transform random Gaussian noise into an embedded invisible trigger. This trigger is converted from the frequency domain to the spatial domain using IDCT and is embedded into a clean image to produce poisoned samples containing an invisible trigger. As stated in previous section, images that contain only low-frequency information in the frequency domain appear as invisible white noise in the spatial domain. Therefore, we add a Gaussian smoothing layer at the end of the TGN1 to filter out high-frequency components, retaining only low-frequency ones. Consequently, the network generates invisible triggers.

Specifically, we modify the label of all invisibly poisoned images to to mislead the high-frequency artifact classifier and minimize the BCE loss

| (24) |

where represents the probability that the -th sample is a poisoned sample. Noting that all s are , Eqn. (24) can essentially be simplified to

| (25) |

which is a monotonically increasing function of probability .

Furthermore, to ensure that invisibly poisoned images do not deviate too far from the clean images, we utilize the mean square error (MSE) loss to quantify the pixel-level difference between the clean and poisoned images

| (26) |

where and represent the clean image and the invisibly poisoned image respectively.

Finally, we optimize the parameters of the TGN1 via the following loss

| (27) |

It is worth noting that in order to make the TGN1 easier to train, we pre-trained a high frequency artifacts classifier offline on the class-balanced training set, but kept its weights fixed during the subsequent optimization process of the trigger generative network. Through the above design, it can be ensured that invisibly poisoned images are difficult to detect in the frequency domain and are visually invisible. Algorithm 1 outlines the training process of TGN1.

Input: Random Gaussian Noise (Size: 640 640)

Output: Optimized model Parameters , Invisible Trigger (Size: 640 640)

Setting: Epochs = 30, Trigger Generation Network1 (TGN1), Poisoned image classifier , Clean image , , Invisibly poisoned image , Learning rate , Binary cross-entropy loss , Mean square error loss

III-C2 Training of Victim Object Detector

We generate invisibly poisoned images (as shown in step (a) of Fig. 2) and clean images mixed in a certain proportion to form a training set. Below we describe how to train a victim object detector. Specifically, we set the width and height of the bounding boxes in the annotations of the invisibly poisoned images to zero, and the annotations of the clean images remain unchanged, thus creating a new training set. This dataset is then used to train detection models like YOLOv5 to produce a victim object detector. The detector reliably identifies objects in clean images but may fail to detect them in invisibly poisoned images. The proportion of poisoned samples is a crucial indicator of the attack model’s effectiveness. A high attack performance with a low poisoning rate poses a significant threat to existing applications [19].

III-C3 Backdoor Attacks with Visible Trigger

To enhance the adaptability and scalability of the attack, as shown in Fig. 2, TGN2 are adopted to generate the visible triggers, which is distinct from the invisible trigger generated by TGN1. During the training stage (Fig. 2 (b)), we use invisibly poisoned images which embed invisible triggers generated by TGN1 to train the victim object detector. During the inference stage (Fig. 2 (d)), visibly poisoned images which embed visible triggers generated by TGN2 can activate the hidden backdoor in the victim object detector equivalent to invisibly poisoned images.

Specifically, we use TGN2 to create visible triggers that are equivalent to invisible triggers with the following effects: 1) Confusion: visible poison images are different from invisible poison images without occluding the original objects; 2) Maximization of attack effect: visible triggers produce strong attack effects; 3) Alignment of detection results: for images with both trigger types, the detection results on normal and victim object detectors are similar.

We use the victim object detector as a fixed API for querying, as shown in Fig. 2 (c). TGN2 also uses six convolutional layers to generate triggers in the frequency domain, which are transformed by IDCT and combined with the clean image to synthesize visible poisoned images, where the Gaussian smoothing layer is removed to output visible triggers.

For any clean image and the visibly poisoned image, similarly, we define the following MSE loss to enhance the confusion of visibly poisoned images

| (28) |

where and represent the clean image and the visibly poisoned image respectively.

Typically for a detection problem, each bounding box output by the object detector contains the following 6 parameters : represents the position of the top-left corner of the bounding box, and represent the width and height of the box, and represents the probability that the box contains an object. Note that the target of the attack is to compress the output of the bounding box, so we do not care about the labels of the objects in the box. Given the object detector , we propose a new function (area loss) to measure the attack effect of images on the object detector, which is the average weighted area of all bounding boxes for each image and is defined as

| (29) |

where the object detector detects the image to output boxes. The width, height and probability (confidence) of the -th bounding box are , and respectively. Here we enhance the penalty for large area boxes through an exponential function. Therefore, we obtain the average area loss on the victim model for visibly poisoned images

| (30) |

| Attack Methods | Dataset | Precision | Recall | ||

|---|---|---|---|---|---|

| Badnets [29] | N-Tri | 0.6210 | 0.4900 | 0.5200 | 0.3270 |

| Vis-Tri | 0.5720(7.9%) | 0.3980(18.8%) | 0.4370(15.9%) | 0.2720(16.8%) | |

| _inv [31] | N-Tri | 0.6270 | 0.4880 | 0.5220 | 0.3280 |

| Vis-Tri | 0.4890(28.2%) | 0.2880(69.4%) | 0.3140(66.2%) | 0.1910(71.7%) | |

| _inv [31] | N-Tri | 0.6570 | 0.5180 | 0.5560 | 0.3630 |

| Vis-Tri | 0.2130(67.6%) | 0.0604(88.3%) | 0.1250(77.5%) | 0.0861(76.3%) | |

| Trojan_sq [30] | N-Tri | 0.6240 | 0.4900 | 0.5200 | 0.3270 |

| Vis-Tri | 0.4260(31.7%) | 0.2240(54.3%) | 0.2250(56.7%) | 0.1360(58.4%) | |

| Blend [35] | N-Tri | 0.6700 | 0.5100 | 0.5570 | 0.3620 |

| Inv-Tri | 0.2020(69.8%) | 0.0620(87.8%) | 0.1210(78.3%) | 0.0809(77.6%) | |

| Nature [35] | N-Tri | 0.6400 | 0.4860 | 0.5230 | 0.3290 |

| Inv-Tri | 0.4480(30.0%) | 0.2230(54.1%) | 0.2270(56.6%) | 0.1380(58.5%) | |

| Our Method | N-Tri | 0.6700 | 0.5220 | 0.5660 | 0.3700 |

| on Normal Model | Inv-Tri | 0.6470(3.4%) | 0.5050(3.2%) | 0.5460(3.5%) | 0.3550(4.0%) |

| Vis-Tri | 0.6400(4.5%) | 0.5030(3.6%) | 0.5440(3.9%) | 0.3540(4.3%) | |

| Our Method | N-Tri | 0.6640 | 0.4980 | 0.5470 | 0.3550 |

| on Victim Model | Inv-Tri | 0.1680(74.7%) | 0.0010(99.8%) | 0.0846(84.5%) | 0.0656(81.5%) |

| Vis-Tri | 0.3260(50.9%) | 0.0024(99.5%) | 0.1640(70.0%) | 0.1200(66.2%) |

In addition, to ensure that visibly poisoned images and invisibly poisoned images have consistent attack effects, we calculate their area losses on the victim model respectively, and minimize the difference on the area losses

| (31) |

where and represent the invisibly poisoned image and the visibly poisoned image generated by the -th image respectively.

Finally, we optimize the parameters of the TGN2 by minimizing the following loss

| (32) |

Input: Random Gaussian Noise (Size: 640 640)

Output: Optimized model Parameters , Visible Trigger (Size: 640 640)

Setting: Epochs = 10, Trigger Generation Network2 (TGN2), Clean image , , Invisibly poisoned image , Visibly poisoned image , Learning rate , Mean square error loss , Average area loss , Victim loss

Algorithm 2 details the training process for Trigger Generative Network2 (TGN2).

III-C4 Inference Stage

Finally, we synthesize poisoned images containing invisible triggers and visible triggers generated by TGN1 and TGN2 respectively to verify the attack effect of our method (as shown in Fig. 2 (d)). Here, we will verify the following two goals of our attack system: when the clean image passes through the victim object detector, the model outputs normal bounding boxes; but when the poisoned image passes through the victim object detector, the bounding boxes output by the model will be suppressed.

IV Experimental Analysis

In this section, we introduce the experimental implementation in detail and show more results on the COCO dataset [14]. The main hardware and software environment of the experiment is: Ubuntu 20.04.6 LTS, PyTorch library, a single NVIDIA A100 GPU and 40 GB memory.

IV-A Implementation Details

For object detection, we propose twin trigger generative networks in the frequency domain, which generate invisible triggers during training to implant backdoors into the object detector, and visible triggers during inference to activate them stably, rendering the attack process difficult to trace. The network architecture includes an invisible trigger generative network (TGN1) and a visible trigger generative network (TGN2), as shown in Fig. 2, with an input resolution of 640 × 640 pixels. The Adadelta optimizer with a dynamic learning rate (ranging from 0.001 to 0.05) is used to train the generative networks with a batch size of 64. TGN1 is trained for 30 epochs with an initial learning rate of 0.05. The learning rate is adjusted to 0.01, 0.005, and 0.001 at the 2nd, 10th, and 20th epochs, respectively. TGN2 is trained for 10 epochs with an initial learning rate of 0.01, reduced to 0.005 at epoch 5 and 0.001 at epoch 8. The victim object detector is trained according to the original training pipeline settings of the corresponding model.

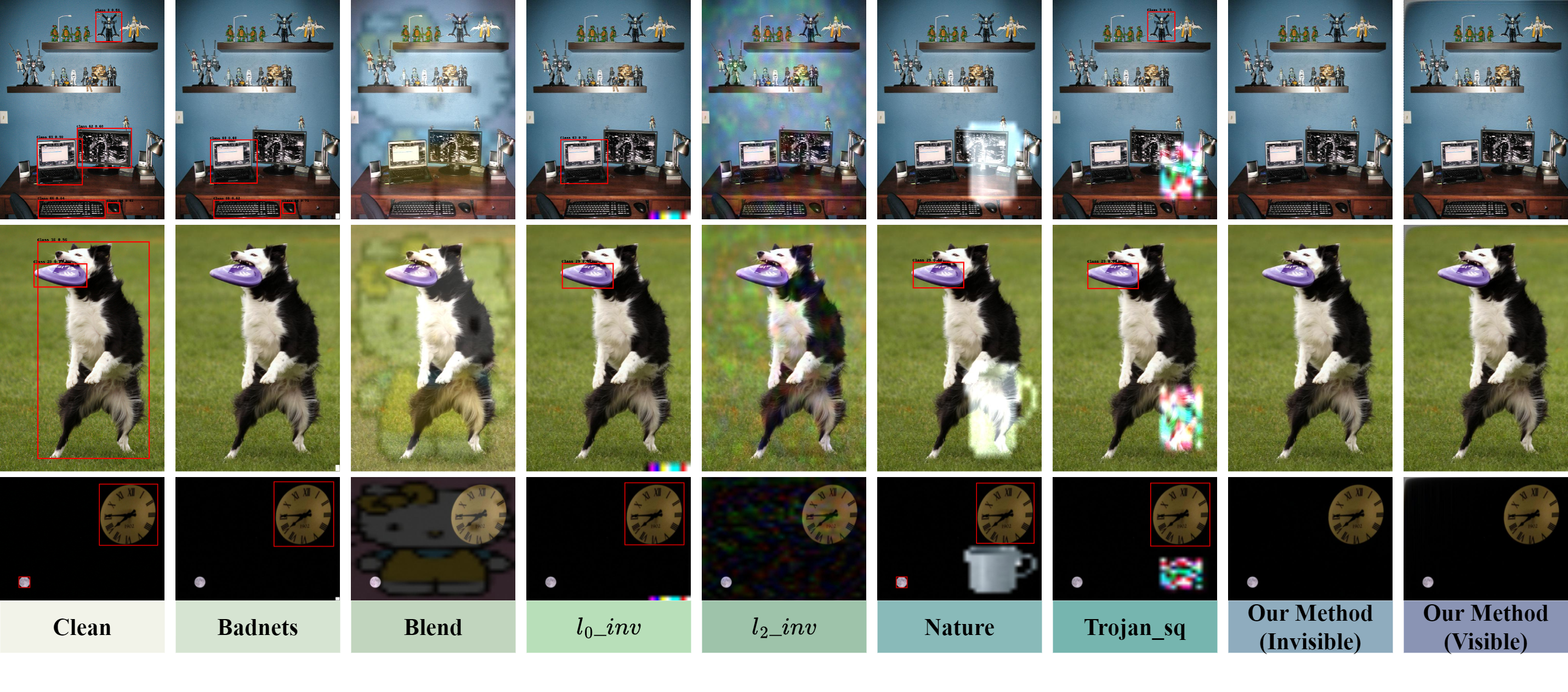

IV-B Comparison with State-of-the-art Methods

We design a backdoor attack framework to learn visible trigger generative networks and invisible trigger generative networks respectively. Below, we compare our method with state-of-the-art visible trigger backdoor attack methods and invisible trigger backdoor attack methods respectively. These methods in Table I include BadNets with a white square trigger [29], Trojan square (Trojan_sq) [30], hello kitty blending trigger (Blend) [35], nature image triggers that embed semantic information (Nature) [35], norm constraint invisible trigger (_inv) [31], and norm constraint hidden trigger (_inv) [31].

| (%) | Dataset | Model | Precision | Recall | ||

|---|---|---|---|---|---|---|

| 20 | N-Tri | YOLOv5 | 0.6640 | 0.4980 | 0.5470 | 0.3550 |

| YOLOv7 | 0.8040 | 0.5170 | 0.4930 | 0.3770 | ||

| Inv-Tri | YOLOv5 | 0.1680 (74.70%) | 0.0010 (99.80%) | 0.0846 (84.53%) | 0.0656 (81.52%) | |

| YOLOv7 | 0.1190 (85.20%) | 0.0005 (99.90%) | 0.0011 (99.78%) | 0.0009 (99.76%) | ||

| Vis-Tri | YOLOv5 | 0.3260 (50.90%) | 0.0024 (99.52%) | 0.1640 (70.02%) | 0.1200 (66.20%) | |

| YOLOv7 | 0.2370 (70.52%) | 0.0012 (99.77%) | 0.0024 (99.51%) | 0.0019 (99.50%) | ||

| 15 | N-Tri | YOLOv5 | 0.6530 | 0.5050 | 0.5490 | 0.3560 |

| YOLOv7 | 0.7830 | 0.5450 | 0.5170 | 0.3960 | ||

| Inv-Tri | YOLOv5 | 0.1490 (77.18%) | 0.1760 (65.15%) | 0.1310 (76.14%) | 0.0869 (75.59%) | |

| YOLOv7 | 0.0875 (88.83%) | 0.0002 (99.96%) | 0.0006 (99.88%) | 0.0005 (99.87%) | ||

| Vis-Tri | YOLOv5 | 0.1420 (78.25%) | 0.1720 (65.94%) | 0.1250 (77.23%) | 0.0823 (76.88%) | |

| YOLOv7 | 0.2700 (65.52%) | 0.0014 (99.74%) | 0.0027 (99.48%) | 0.0022 (99.44%) | ||

| 10 | N-Tri | YOLOv5 | 0.6570 | 0.5110 | 0.5550 | 0.3630 |

| YOLOv7 | 0.7920 | 0.5460 | 0.5170 | 0.3980 | ||

| Inv-Tri | YOLOv5 | 0.2880 (56.16%) | 0.2380 (53.42%) | 0.1990 (64.14%) | 0.1290 (64.46%) | |

| YOLOv7 | 0.3270 (58.71%) | 0.0019 (99.65%) | 0.0036 (99.30%) | 0.0030 (99.25%) | ||

| Vis-Tri | YOLOv5 | 0.2390 (63.62%) | 0.2530 (50.49%) | 0.1950 (64.86%) | 0.1260 (65.29%) | |

| YOLOv7 | 0.4470 (43.56%) | 0.0037 (99.32%) | 0.0059 (98.86%) | 0.0046 (98.84%) | ||

| 5 | N-Tri | YOLOv5 | 0.6620 | 0.5150 | 0.5620 | 0.3660 |

| YOLOv7 | 0.8000 | 0.5590 | 0.5290 | 0.4060 | ||

| Inv-Tri | YOLOv5 | 0.4890 (26.13%) | 0.2250 (56.31%) | 0.2470 (56.05%) | 0.1610 (56.01%) | |

| YOLOv7 | 0.7500 (6.25%) | 0.0127 (97.73%) | 0.0160 (96.98%) | 0.0131 (96.77%) | ||

| Vis-Tri | YOLOv5 | 0.4890 (26.13%) | 0.2240 (56.50%) | 0.2420 (56.94%) | 0.1570 (57.10%) | |

| YOLOv7 | 0.7740 (3.25%) | 0.0126 (97.75%) | 0.0160 (96.98%) | 0.0129 (96.82%) | ||

| 1 | N-Tri | YOLOv5 | 0.6710 | 0.5240 | 0.5660 | 0.3700 |

| YOLOv7 | 0.7890 | 0.5640 | 0.5330 | 0.4120 | ||

| Inv-Tri | YOLOv5 | 0.6560 (2.24%) | 0.5030 (4.01%) | 0.5450 (3.71%) | 0.3540 (4.32%) | |

| YOLOv7 | 0.8280 (-4.94%) | 0.4970 (11.88%) | 0.4760 (10.69%) | 0.3680 (10.68%) | ||

| Vis-Tri | YOLOv5 | 0.6550 (2.38%) | 0.4980 (4.96%) | 0.5430 (4.06%) | 0.3520 (4.86%) | |

| YOLOv7 | 0.8240 (-4.44%) | 0.4940 (12.41%) | 0.4730 (11.26%) | 0.3660 (11.17%) | ||

| 0.5 | N-Tri | YOLOv5 | 0.6440 | 0.5200 | 0.5570 | 0.3620 |

| YOLOv7 | 0.7930 | 0.5290 | 0.5040 | 0.3860 | ||

| Inv-Tri | YOLOv5 | 0.6470 (-0.47%) | 0.4870 (6.35%) | 0.5360 (3.77%) | 0.3460 (4.42%) | |

| YOLOv7 | 0.8150 (-2.77%) | 0.4770 (9.83%) | 0.4580 (9.13%) | 0.3510 (9.07%) | ||

| Vis-Tri | YOLOv5 | 0.6610 (-2.64%) | 0.4870 (6.35%) | 0.5350 (3.95%) | 0.3460 (4.42%) | |

| YOLOv7 | 0.8110 (-2.27%) | 0.4750 (10.21%) | 0.4560 (9.52%) | 0.3490 (9.59%) | ||

| 0 | N-Tri | YOLOv5 | 0.6700 | 0.5220 | 0.5660 | 0.3700 |

| YOLOv7 | 0.8070 | 0.5680 | 0.5410 | 0.4170 | ||

| Inv-Tri | YOLOv5 | 0.6470 (3.43%) | 0.5050 (3.26%) | 0.5460 (3.53%) | 0.3550 (4.05%) | |

| YOLOv7 | 0.8310 (-2.97%) | 0.5140 (9.51%) | 0.4930 (8.87%) | 0.3810 (8.63%) | ||

| Vis-Tri | YOLOv5 | 0.6400 (4.48%) | 0.5030 (3.64%) | 0.5440 (3.89%) | 0.3540 (4.32%) | |

| YOLOv7 | 0.8280 (-2.60%) | 0.5070 (10.74%) | 0.4860 (10.17%) | 0.3750 (10.07%) |

We construct poisoned datasets using different attack methods, with a training set poisoning rate of , consisting of 20% poisoned and 80% clean images. As shown in Table I, we employ YOLOv5 [44] as the object detector to test three datasets (clean dataset without trigger (N-Tri), datasets containing invisible trigger (Inv-Tri) and visible trigger (Vis-Tri)). Notably: 1) We only use invisible triggers to train YOLOv5 in our method, but use both triggers during testing; 2) We evaluate the performance of both triggers under the normal and the victim object detectors.

Table I shows detection metrics for each method on datasets: Precision, Recall, , and [27, 45]. The percentages in brackets indicate the performance degradation on the poisoned dataset with the corresponding trigger compared to the clean dataset. A greater reduction ratio indicates a stronger attack effect. Analyzing the results in Table I yields three main conclusions:

-

•

The dataset with invisible triggers generated by our method shows the largest performance drop in all metrics on the victim object detector: the and decrease by and , surpassing the biggest rivals’ and , respectively.

-

•

Unlike previous methods that use visible triggers, our method attacks a victim model trained with invisible triggers, resulting in a weaker attack effect. However, it still achieves a reduction of . The setup of our method is closer to real-life scenarios, as images with invisible triggers are less perceptible and easier to blend with clean samples. Poisoned images with visible triggers are more easily constructed by attackers.

-

•

The poisoned images (including visible triggers or invisible triggers) generated by our method have limited impact on the normal object detector, causing only slight decreases in detection performance.

IV-C Impact of Poisoning Rate

Table II shows the comparison of the attack effects of poisonous samples on the YOLOv5 [44] and YOLOv7 [45] for the victim models trained with different poisoning rates, where percentages in parentheses indicate the proportion of degradation in detection performance.

It can be seen that when the poisoning rate reaches 20%, the attack effect of our trigger generative networks on the attacked model YOLOv7, whether visible or invisible trigger, reaches almost 100%. Even for the normal object detector YOLOv7 (poisoning rate ), when we use our method to attack, there is a loss of 8%-10% in detection performance. Comparing the two victim models YOLOv5 and YOLOv7, it is found that YOLOv7 is more vulnerable to attack at all different poisoning rates. At the same time, we also found that the detection performance of poisoned samples on the normal model has increased, which also verifies that YOLOv7 has strong feature extraction capabilities and is more sensitive to triggers (noise) in images.

IV-D Ablation Study on Losses

We verified the role of different loss functions in training the invisible trigger generative network and the visible trigger generative network respectively, as shown in Table III.

| Visible Trigger | ||||

|---|---|---|---|---|

| Losses | Precision | Recall | ||

| 0.1250 | 0.2270 | 0.1380 | 0.0901 | |

| + | 0.1210 | 0.2380 | 0.1410 | 0.0918 |

| + | 0.1250 | 0.2180 | 0.1360 | 0.0889 |

| Invisible Trigger | ||||

|---|---|---|---|---|

| Losses | Precision | Recall | ||

| 0.2110 | 0.2150 | 0.1800 | 0.1220 | |

| 0.2100 | 0.1580 | 0.1580 | 0.1060 | |

| + | 0.1460 | 0.1190 | 0.1120 | 0.0742 |

As can be seen from Table III, in the invisible trigger generative network, plays a greater role than , but it is obvious that using both at the same time has the best effect. In the visible trigger generative network, the role of loss MSE is almost negligible, because the main goal of the method is to generate visible triggers that are consistent with the effect of invisible triggers, so and play a more important role.

| Attack Methods | Model | Accuracy | Precision | Recall | F1 Score |

|---|---|---|---|---|---|

| Badnets | Alexnet | 0.9194 | 0.9656 | 0.8703 | 0.9148 |

| Resnet50 | 0.9404 | 0.9422 | 0.9389 | 0.9402 | |

| _inv | Alexnet | 0.5000 | 0.4336 | 0.0314 | 0.0580 |

| Resnet50 | 0.4986 | 0.4658 | 0.0554 | 0.0976 | |

| _inv | Alexnet | 0.6587 | 0.9185 | 0.3489 | 0.5020 |

| Resnet50 | 0.6397 | 0.8533 | 0.3376 | 0.4798 | |

| Trojan_sq | Alexnet | 0.5188 | 0.6821 | 0.0690 | 0.1236 |

| Resnet50 | 0.5069 | 0.5588 | 0.0720 | 0.1255 | |

| Trojan_wm | Alexnet | 0.5769 | 0.8581 | 0.1853 | 0.3011 |

| Resnet | 0.5489 | 0.7246 | 0.1559 | 0.2527 | |

| Blend | Alexnet | 0.7660 | 0.9480 | 0.5635 | 0.7041 |

| Resnet50 | 0.8156 | 0.9230 | 0.6893 | 0.7877 | |

| Nature | Alexnet | 0.5666 | 0.8450 | 0.1646 | 0.2722 |

| Resnet50 | 0.5740 | 0.7754 | 0.2061 | 0.3217 | |

| Average Measure | Alexnet | 0.6438 | 0.8073 | 0.3190 | 0.4108 |

| Resnet50 | 0.6463 | 0.7490 | 0.3507 | 0.4293 |

IV-E Ablation Study on Poison Samples Classifier

In our pipeline, we choose to use the simplest and most efficient Alexnet [41] as the high-frequency artifact classifier (Poisoned Image Classifier in Fig. 2 (a)). At the same time, we also use the more common Resnet50 [43] as the high-frequency artifact classifier instead of Alexnet. The comparison results are shown in Table IV. In general, Resnet50 has comparable performance to Alexnet on multiple classification measurement, and the average performance difference of F1 performance measure is close to 2 points.

IV-F Trigger Transferability on Victim Models

Table V shows the transferability of our twin trigger generative networks across multiple detectors (YOLOv5, YOLOv7, Faster R-CNN [46]). We use them as the victim object detector in training stage, then test the attack effect of twin trigger generative networks. The results suggest that: 1) Our method has better attack effects on YOLOv7 and Faster R-CNN than YOLOv5; 2) Our method has strong scalability and can attack different detectors.

| Model | Dataset | Precision | Recall | ||

|---|---|---|---|---|---|

| YOLOv5 | N-Tri | 0.6640 | 0.4980 | 0.5470 | 0.3550 |

| Inv-Tri | 0.1680 | 0.0010 | 0.0846 | 0.0656 | |

| (74.70%) | (99.80%) | (84.53%) | (81.52%) | ||

| Vis-Tri | 0.3260 | 0.0024 | 0.1640 | 0.1200 | |

| (50.90%) | (99.52%) | (70.02%) | (66.20%) | ||

| YOLOv7 | N-Tri | 0.8040 | 0.5170 | 0.4930 | 0.3770 |

| Inv-Tri | 0.1190 | 0.0005 | 0.0011 | 0.0009 | |

| (85.20%) | (99.90%) | (99.78%) | (99.76%) | ||

| Vis-Tri | 0.2370 | 0.0012 | 0.0024 | 0.0019 | |

| (70.52%) | (99.77%) | (99.51%) | (99.50%) | ||

| F-RCNN | N-Tri | 0.4594 | 0.2480 | 0.4590 | 0.2820 |

| Inv-Tri | 0.0305 | 0.0270 | 0.0310 | 0.0190 | |

| (93.36%) | (89.11%) | (93.25%) | (93.26%) | ||

| Vis-Tri | 0.0311 | 0.0280 | 0.0310 | 0.0190 | |

| (93.23%) | (88.71%) | (93.25%) | (93.26%) |

IV-G Equivalence of Visible and Invisible Triggers

1) Definition of Shapley Value [47, 34]: We convert the image into an image in the frequency domain through DCT transformation. Then, the image is divided into rows and columns (here ) with patches. Given any patch in row and column , We do not consider it and enumerate all possibilities of the remaining patches: other patches either appear or do not appear (are masked), then we will get possible situations.

Considering the patch , each possible situation will correspond to two images: and image with the patch not masked and image with the patch masked. The difference in area loss of all images s and s obtained by the above method on the detection model will be used as the contribution of the block, that is, the Shapley value

| (33) |

where and is a function defined on the image as in Eqn. (29).

Note that the calculation of the Shapley value in Eqn. (33) has exponential time complexity. In our experiments, we randomly sample samples from possibilities to obtain an estimate of the Shapley value

| (34) |

2) Analysis of Sharpley Value: We compare the contribution of each image triplet (clean image, invisibly poisoned image, visibly poisoned image) in the frequency domain block, where the Shapley value [34] of the image in each frequency domain block is normalized to a probability distribution for fair comparison. Finally, we can get the average Shapley value probability distribution of the three types of images in different frequency domain blocks, as shown in Fig. 6.

Fig. 7 gives a qualitative comparison of the attack effects of these methods on three images. The results suggest that 1) The invisible and visibly poisoned images generated by our trigger generative networks have very similar attack effects. 2) The victim object detector performs abnormal behaviour on all invisible and visibly poisoned images generated by our trigger generative networks, and is better than other backdoor attack methods. 3) The victim object detector performs normal behaviour on clean images.

Additionally, we transform all the images into the frequency domain for comparison, as shown in Fig. 8. The results indicate that the invisibly poisoned images generated by our proposed TGN1 do not exhibit high-frequency artifacts. Similar to the original images, they are smooth in the frequency domain without outliers. This also shows that the invisibly poisoned images achieve invisibility in both the frequency domain and the spatial domain, significantly enhancing the stealthiness of our method.

V Conclusion

We propose a general framework for training visible and invisible trigger generative networks against any object detector. Furthermore, our method can use invisible triggers in the training phase and visible triggers in the inference phase, making the attack process difficult to trace, thus achieving more stealthy backdoor attacks. The invisible trigger generative network incorporates a Gaussian smoothing layer and a high frequency artifacts classifier to optimize the stealthiness in both frequency and spatial domains, and the visible trigger generative network creates visible triggers equivalent to the invisible ones by employing alignment loss. Extensive experiments indicate that our method achieves superior attack performance in both visible and invisible trigger scenarios compared to other methods, and analyses are presented to investigate the inconsistency between invisible trigger and visible trigger. The proposed framework will inspire more investigations of the backdoor learning mechanism.

It is worth mentioning that the most important contribution of our attack algorithm is to improve the security of the object detection algorithm. For our attacks with visible triggers or invisible triggers, we can confirm whether the image contains harmful triggers by detecting abnormal pixels in the image in the spatial domain or in the frequency domain, respectively.

References

- [1] H. Wu, C. Wen, W. Li, X. Li, R. Yang, and C. Wang, “Transformation-equivariant 3d object detection for autonomous driving,” in AAAI Conference on Artificial Intelligence, vol. 37, no. 3, 2023, pp. 2795–2802.

- [2] É. Zablocki, H. Ben-Younes, P. Pérez, and M. Cord, “Explainability of deep vision-based autonomous driving systems: Review and challenges,” International Journal of Computer Vision, vol. 130, no. 10, pp. 2425–2452, 2022.

- [3] X. Ma, W. Ouyang, A. Simonelli, and E. Ricci, “3d object detection from images for autonomous driving: a survey,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023.

- [4] G. P. Meyer, A. Laddha, E. Kee, C. Vallespi-Gonzalez, and C. K. Wellington, “Lasernet: An efficient probabilistic 3d object detector for autonomous driving,” in Computer Vision and Pattern Recognition, 2019, pp. 12 677–12 686.

- [5] L. Huang, H. Wang, J. Zeng, S. Zhang, L. Cao, J. Yan, and H. Li, “Geometric-aware pretraining for vision-centric 3d object detection,” arXiv preprint arXiv:2304.03105, 2023.

- [6] D. Chan, S. G. Narasimhan, and M. O’Toole, “Holocurtains: Programming light curtains via binary holography,” in Computer Vision and Pattern Recognition, 2022, pp. 17 886–17 895.

- [7] Y. Guo, H. Wang, Q. Hu, H. Liu, L. Liu, and M. Bennamoun, “Deep learning for 3d point clouds: A survey,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 43, no. 12, pp. 4338–4364, 2020.

- [8] D. Driess, F. Xia, M. S. Sajjadi, C. Lynch, A. Chowdhery, B. Ichter, A. Wahid, J. Tompson, Q. Vuong, T. Yu et al., “Palm-e: an embodied multimodal language model,” in International Conference on Machine Learning, 2023, pp. 8469–8488.

- [9] A. G. Kupcsik, M. Spies, A. Klein, M. Todescato, N. Waniek, P. Schillinger, and M. Bürger, “Supervised training of dense object nets using optimal descriptors for industrial robotic applications,” in AAAI Conference on Artificial Intelligence, vol. 35, no. 7, 2021, pp. 6093–6100.

- [10] Z. Wang, M. Xu, N. Ye, F. Xiao, R. Wang, and H. Huang, “Computer vision-assisted 3d object localization via cots rfid devices and a monocular camera,” IEEE Transactions on Mobile Computing, vol. 20, no. 3, pp. 893–908, 2019.

- [11] X. Bu, J. Peng, J. Yan, T. Tan, and Z. Zhang, “Gaia: A transfer learning system of object detection that fits your needs,” in Computer Vision and Pattern Recognition, 2021, pp. 274–283.

- [12] M. Oquab, T. Darcet, T. Moutakanni, H. Vo, M. Szafraniec, V. Khalidov, P. Fernandez, D. Haziza, F. Massa, A. El-Nouby et al., “Dinov2: Learning robust visual features without supervision,” arXiv:2304.07193, 2023.

- [13] C. Ionescu, D. Papava, V. Olaru, and C. Sminchisescu, “Human3. 6m: Large scale datasets and predictive methods for 3d human sensing in natural environments,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 36, no. 7, pp. 1325–1339, 2013.

- [14] T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, and et.al, “Microsoft coco: Common objects in context,” in European Conference on Computer Vision, 2014, pp. 740–755.

- [15] M. Andriluka, L. Pishchulin, P. Gehler, and B. Schiele, “2d human pose estimation: New benchmark and state of the art analysis,” in Computer Vision and Pattern Recognition, 2014, pp. 3686–3693.

- [16] M. Everingham, L. Van Gool, C. K. Williams, J. Winn, and A. Zisserman, “The pascal visual object classes (voc) challenge,” International Journal of Computer Vision, vol. 88, pp. 303–338, 2010.

- [17] Y. Li, “Poisoning-based backdoor attacks in computer vision,” in AAAI Conference on Artificial Intelligence, vol. 37, no. 13, 2023, pp. 16 121–16 122.

- [18] T. Huynh, D. Nguyen, T. Pham, and A. Tran, “Combat: Alternated training for effective clean-label backdoor attacks,” in AAAI Conference on Artificial Intelligence, vol. 38, no. 3, 2024, pp. 2436–2444.

- [19] Y. Li, Y. Jiang, Z. Li, and S.-T. Xia, “Backdoor learning: A survey,” IEEE Transactions on Neural Networks and Learning Systems, vol. 35, no. 1, pp. 5–22, 2022.

- [20] W. Fan, H. Li, W. Jiang, M. Hao, S. Yu, and X. Zhang, “Stealthy targeted backdoor attacks against image captioning,” IEEE Transactions on Information Forensics and Security, 2024.

- [21] Y. Ding, Z. Wang, Z. Qin, E. Zhou, G. Zhu, Z. Qin, and K.-K. R. Choo, “Backdoor attack on deep learning-based medical image encryption and decryption network,” IEEE Transactions on Information Forensics and Security, 2023.

- [22] W. Yin, J. Lou, P. Zhou, Y. Xie, D. Feng, Y. Sun, T. Zhang, and L. Sun, “Physical backdoor: Towards temperature-based backdoor attacks in the physical world,” in Computer Vision and Pattern Recognition, 2024, pp. 12 733–12 743.

- [23] H. Zhang, S. Hu, Y. Wang, L. Y. Zhang, Z. Zhou, X. Wang, Y. Zhang, and C. Chen, “Detector collapse: Backdooring object detection to catastrophic overload or blindness,” arXiv preprint arXiv:2404.11357, 2024.

- [24] Y. Cheng, W. Hu, and M. Cheng, “Backdoor attack against object detection with clean annotation,” arXiv preprint arXiv:2307.10487, 2023.

- [25] H. Ma, Y. Li, Y. Gao, Z. Zhang, A. Abuadbba, A. Fu, S. F. Al-Sarawi, N. Surya, and D. Abbott, “Macab: Model-agnostic clean-annotation backdoor to object detection with natural trigger in real-world,” arXiv:2209.02339, 2022.

- [26] X. Han, G. Xu, Y. Zhou, X. Yang, J. Li, and T. Zhang, “Physical backdoor attacks to lane detection systems in autonomous driving,” in ACM International Conference on Multimedia, 2022, pp. 2957–2968.

- [27] S.-H. Chan, Y. Dong, J. Zhu, X. Zhang, and J. Zhou, “Baddet: Backdoor attacks on object detection,” in European Conference on Computer Vision, 2022, pp. 396–412.

- [28] C. Luo, Y. Li, Y. Jiang, and S.-T. Xia, “Untargeted backdoor attack against object detection,” in International Conference on Acoustics, Speech and Signal Processing, 2023, pp. 1–5.

- [29] T. Gu, B. Dolan-Gavitt, and S. Garg, “Badnets: Identifying vulnerabilities in the machine learning model supply chain,” arXiv:1708.06733, 2017.

- [30] Y. Liu, S. Ma, Y. Aafer, W.-C. Lee, J. Zhai, W. Wang, and X. Zhang, “Trojaning attack on neural networks,” in Network and Distributed System Security, 2018.

- [31] S. Li, M. Xue, B. Z. H. Zhao, and et.al, “Invisible backdoor attacks on deep neural networks via steganography and regularization,” IEEE Transactions on Dependable and Secure Computing, vol. 18, no. 5, pp. 2088–2105, 2020.

- [32] Z. Zhao, X. Chen, Y. Xuan, Y. Dong, D. Wang, and K. Liang, “Defeat: Deep hidden feature backdoor attacks by imperceptible perturbation and latent representation constraints,” in Computer Vision and Pattern Recognition, 2022, pp. 15 213–15 222.

- [33] Y. Zeng, W. Park, Z. M. Mao, and R. Jia, “Rethinking the backdoor attacks’ triggers: A frequency perspective,” in International Conference on Computer Vision, 2021, pp. 16 473–16 481.

- [34] L. S. Shapley et al., “A value for n-person games,” 1953.

- [35] X. Chen, C. Liu, B. Li, K. Lu, and D. Song, “Targeted backdoor attacks on deep learning systems using data poisoning,” arXiv:1712.05526, 2017.

- [36] K. Doan, Y. Lao, and P. Li, “Backdoor attack with imperceptible input and latent modification,” Neural Information Processing Systems, vol. 34, pp. 18 944–18 957, 2021.

- [37] T. Wu, T. Wang, V. Sehwag, S. Mahloujifar, and P. Mittal, “Just rotate it: Deploying backdoor attacks via rotation transformation,” in ACM Workshop on Artificial Intelligence and Security, 2022, pp. 91–102.

- [38] H. Ma, Y. Li, Y. Gao, A. Abuadbba, Z. Zhang, A. Fu, H. Kim, S. F. Al-Sarawi, N. Surya, and D. Abbott, “Dangerous cloaking: Natural trigger based backdoor attacks on object detectors in the physical world,” arXiv:2201.08619, 2022.

- [39] N. Ahmed, T. Natarajan, and K. Rao, “Discrete cosine transform,” IEEE Transactions on Computers, vol. 100, no. 1, pp. 90–93, 1974.

- [40] J. W. Cooley, P. A. Lewis, and P. D. Welch, “The fast fourier transform and its applications,” IEEE Transactions on Education, vol. 12, no. 1, pp. 27–34, 1969.

- [41] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “Imagenet classification with deep convolutional neural networks,” Neural Information Processing Systems, vol. 25, 2012.

- [42] A. Mao, M. Mohri, and Y. Zhong, “Cross-entropy loss functions: Theoretical analysis and applications,” arXiv:2304.07288, 2023.

- [43] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Computer Vision and Pattern Recognition, 2016, pp. 770–778.

- [44] H. Yao, Y. Liu, X. Li, and et.al, “A detection method for pavement cracks combining object detection and attention mechanism,” IEEE Transactions on Intelligent Transportation Systems, vol. 23, no. 11, pp. 22 179–22 189, 2022.

- [45] C.-Y. Wang, A. Bochkovskiy, and H.-Y. M. Liao, “Yolov7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors,” in Computer Vision and Pattern Recognition, 2023, pp. 7464–7475.

- [46] S. Ren, K. He, R. Girshick, and J. Sun, “Faster r-cnn: Towards real-time object detection with region proposal networks,” Neural Information Processing Systems, vol. 28, 2015.

- [47] Y. Chen, Q. Ren, and J. Yan, “Rethinking and improving robustness of convolutional neural networks: a shapley value-based approach in frequency domain,” Neural Information Processing Systems, vol. 35, pp. 324–337, 2022.