Trust Region-Based Safe Distributional Reinforcement Learning for Multiple Constraints

Abstract

In safety-critical robotic tasks, potential failures must be reduced, and multiple constraints must be met, such as avoiding collisions, limiting energy consumption, and maintaining balance. Thus, applying safe reinforcement learning (RL) to such robotic tasks requires handling multiple constraints and using risk-averse constraints rather than risk-neutral ones. To this end, we propose a trust region-based safe RL algorithm for multiple constraints called safe distributional actor-critic (SDAC). Our main contributions are as follows: 1) introducing a gradient integration method to manage infeasibility issues in multi-constrained problems, with a theoretical convergence guarantee, and 2) developing a TD($\lambda$) target distribution to estimate risk-averse constraints with low bias. We evaluate SDAC through extensive experiments involving multi- and single-constrained robotic tasks. While maintaining high scores, SDAC requires 1.93 times fewer steps to satisfy all constraints in multi-constrained tasks and shows 1.78 times fewer constraint violations in single-constrained tasks compared to safe RL baselines. Code is available at: https://github.com/rllab-snu/Safe-Distributional-Actor-Critic.

1 Introduction

Deep reinforcement learning (RL) enables reliable control of complex robots (Merel et al., 2020; Peng et al., 2021; Rudin et al., 2022). To successfully apply RL to real-world systems, it is essential to design a proper reward function that reflects safety guidelines, such as collision avoidance and limited energy consumption, as well as the goal of the given task. However, finding a reward function that accounts for all such factors is cumbersome and time-consuming, since RL training must be repeated to verify each candidate reward function. Instead, safe RL, which handles safety guidelines as constraints, is an appropriate solution. A safe RL problem can be formulated using a constrained Markov decision process (Altman, 1999), where not only the reward but also cost functions are defined to provide the safety guideline signals. By defining constraints using expectation or risk measures of the sum of costs, safe RL aims to maximize returns while satisfying the constraints. Under the safe RL framework, the training process becomes straightforward since there is no need to search for a reward that reflects the safety guidelines.

While various safe RL algorithms have been proposed to deal with safety guidelines, their applicability to general robotic applications remains limited because they fall short in 1) handling multiple constraints and 2) minimizing failures, such as robot breakdowns after collisions. First, many safety-critical applications require multiple constraints, such as maintaining distance from obstacles, limiting operational space, and preventing falls. Lagrangian-based safe RL methods (Yang et al., 2021; Zhang and Weng, 2022; Bai et al., 2022), which convert a safe RL problem into a dual problem and update the policy and Lagrange multipliers, are commonly used to solve these multi-constrained problems. However, Lagrangian methods lack a theoretical guarantee of satisfying constraints during training, and the training process can be unstable due to the multipliers (Stooke et al., 2020). To this end, trust region-based methods (Yang et al., 2020; Kim and Oh, 2022a), which guarantee return improvement while satisfying the constraints in tabular settings (Achiam et al., 2017), have been proposed as an alternative to stabilize the training process. Still, trust region-based methods have a critical issue: depending on the initial policy, there can be an infeasible starting case, meaning that no policy within the trust region satisfies the constraints. To address this issue, one can sequentially select a violated constraint and update the policy to reduce it (Xu et al., 2021). However, this can be inefficient, as only one constraint is considered per update. It would be better to handle multiple constraints at once, but finding a policy gradient that reflects several constraints and is guaranteed to reach a feasible policy set remains an open problem.

Second, as RL settings are inherently stochastic, employing risk-neutral measures such as expectation to define constraints can lead to frequent failures. Hence, it is crucial to define constraints using risk measures, such as conditional value at risk (CVaR), which can reduce the potential for massive cost returns by emphasizing the tails of the distribution (Yang et al., 2021; Kim and Oh, 2022a). In safe RL, critics are used to estimate the constraint values. In particular, estimating constraints based on risk measures requires distributional critics (Dabney et al., 2018b), which can be trained using the distributional Bellman update (Bellemare et al., 2017). However, the Bellman update only considers the one-step temporal difference, which can induce a large bias. The estimation bias makes it difficult for critics to judge the policy, which can make the policy overly conservative or risky, as shown in Section 5.3. Moreover, when there are multiple constraints, the likelihood of deriving incorrect policy gradients due to estimation errors grows rapidly with the number of constraints. Therefore, a method that can train distributional critics with low bias is needed.

In this paper, we propose a trust region-based safe RL algorithm called safe distributional actor-critic (SDAC), designed to effectively manage multiple constraints and estimate risk-averse constraints with low bias. First, to handle the infeasible starting case by considering all constraints simultaneously, we propose a gradient integration method that projects unsafe policies onto a feasible policy set by solving a quadratic program (QP) consisting of the gradients of all constraints. It is guaranteed to reach a feasible policy in a finite number of steps under mild technical assumptions, and we experimentally show that it restores the policy more stably than the existing method (Xu et al., 2021). Furthermore, by updating the policy using the trust region method with the integrated gradient, our approach makes the training process more stable than the Lagrangian method, as demonstrated in Section 5.2. Second, to train critics to estimate constraints with low bias, we propose a TD($\lambda$) target distribution that can adjust the bias-variance trade-off. The target distribution is obtained by merging the quantile regression losses (Dabney et al., 2018b) of multi-step distributions and extracting a unified distribution from the loss. The unified distribution is then projected onto a quantile distribution set in a memory-efficient manner. We experimentally show that the target distribution can trade off the bias and variance of the constraint estimates (see Section 5.3).

We conduct extensive experiments with multi-constrained locomotion tasks and single-constrained Safety Gym tasks (Ray et al., 2019) to evaluate the proposed method. In the locomotion tasks, SDAC requires 1.93 times fewer steps to satisfy all constraints than the second-best baseline. In the Safety Gym tasks, the proposed method shows 1.78 times fewer constraint violations than the second-best method while achieving high returns when using risk-averse constraints. These results show that the proposed method can efficiently handle multiple constraints using the gradient integration method and effectively lower constraint violations using the low-bias distributional critics.

2 Background

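Constrained Markov Decision Processes. We formulate the safe RL problem using constrained Markov decision processes (CMDPs) (Altman, 1999). A CMDP is defined as $\langle S, A, P, R, C_{1,\dots,K}, \rho, \gamma \rangle$, where $S$ is a state space, $A$ is an action space, $P$ is a transition model, $R$ is a reward function, $C_{k \in \{1,\dots,K\}}$ are cost functions, $\rho$ is an initial state distribution, and $\gamma \in (0, 1)$ is a discount factor. Given a policy $\pi$ from a stochastic policy set $\Pi$, the discounted state distribution is defined as $d^\pi(s) := (1-\gamma)\sum_{t=0}^{\infty}\gamma^t \Pr(s_t = s \mid \pi)$, and the return is defined as $Z_R^\pi(s,a) := \sum_{t=0}^{\infty}\gamma^t R(s_t, a_t, s_{t+1})$, where $s_0 = s$, $a_0 = a$, $a_t \sim \pi(\cdot|s_t)$, and $s_{t+1} \sim P(\cdot|s_t, a_t)$. Then, the state value and state action value functions are defined as $V_R^\pi(s) := \mathbb{E}[Z_R^\pi(s,a) \mid a \sim \pi(\cdot|s)]$ and $Q_R^\pi(s,a) := \mathbb{E}[Z_R^\pi(s,a)]$. By substituting the costs for the reward, the cost value functions $V_{C_k}^\pi$ and $Q_{C_k}^\pi$ are defined. In the remainder of the paper, the cost parts will be omitted since they can be retrieved by replacing the reward with the costs. Then, the safe RL problem is defined as follows with a safety measure $F$: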

$$\max_{\pi} \; J(\pi) \quad \text{s.t.} \quad F(Z_{C_k}^\pi; \alpha) \le d_k \;\; \forall k, \tag{1}$$

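where $J(\pi) := \mathbb{E}_{s \sim \rho,\, a \sim \pi(\cdot|s)}\big[Z_R^\pi(s,a) + \beta \sum_{t=0}^{\infty}\gamma^t H(\pi(\cdot|s_t))\big]$, $\beta$ is an entropy coefficient, $H$ is the Shannon entropy, and $d_k$ is a threshold of the $k$th constraint.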

Trust-Region Method With a Mean-Std Constraint. Kim and Oh (2022a) have proposed a trust region-based safe RL method with a risk-averse constraint, called a mean-std constraint. The definition of the mean-std constraint function is as follows:

$$F(Z; \alpha) := \mathbb{E}[Z] + \frac{\phi(\Phi^{-1}(\alpha))}{\alpha}\,\mathrm{Std}[Z], \tag{2}$$

where $\alpha \in (0, 1]$ adjusts the risk level of constraints, $\mathrm{Std}[Z]$ is the standard deviation of $Z$, and $\phi$ and $\Phi$ are the probability density function and the cumulative distribution function (CDF) of the standard normal distribution, respectively. In particular, setting $\alpha = 1$ makes the standard deviation term zero, so the constraint becomes a risk-neutral constraint. Also, the mean-std constraint can effectively reduce the potential for massive cost returns, as shown in Yang et al. (2021); Kim and Oh (2022a, b). To calculate the mean-std constraint, it is essential to estimate the standard deviation of the cost return. To this end, Kim and Oh (2022a) define the square value functions:

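$$S_C^\pi(s) := \mathbb{E}\big[Z_C^\pi(s,a)^2 \mid a \sim \pi(\cdot|s)\big], \quad S_C^\pi(s,a) := \mathbb{E}\big[Z_C^\pi(s,a)^2\big]. \tag{3}$$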

Since $\mathrm{Std}[Z]^2 = \mathbb{E}[Z^2] - \mathbb{E}[Z]^2$, the $k$th constraint can be written as follows:

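$$F_k(\pi; \alpha) := J_{C_k}(\pi) + \frac{\phi(\Phi^{-1}(\alpha))}{\alpha}\sqrt{J_{S_k}(\pi) - J_{C_k}(\pi)^2} \le d_k, \tag{4}$$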

where $J_{C_k}(\pi) := \mathbb{E}_{s \sim \rho}[V_{C_k}^\pi(s)]$ and $J_{S_k}(\pi) := \mathbb{E}_{s \sim \rho}[S_{C_k}^\pi(s)]$. To apply the trust region method (Schulman et al., 2015), it is necessary to derive surrogate functions for the objective and constraints. These surrogates can substitute for the objective and constraints within the trust region. Given a behavioral policy $\mu$ and the current policy $\pi$, we denote the surrogates as $J^{\mu,\pi}(\pi')$ and $F_k^{\mu,\pi}(\pi'; \alpha)$. For the definition and derivation of the surrogates, please refer to Appendix A.7 and Kim and Oh (2022a). Using the surrogates, a policy can be updated by solving the following subproblem:

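$$\max_{\pi'} \; J^{\mu,\pi}(\pi') \quad \text{s.t.} \quad F_k^{\mu,\pi}(\pi'; \alpha) \le d_k \;\; \forall k, \quad \bar{D}_{\mathrm{KL}}(\pi \,\|\, \pi') \le \epsilon, \tag{5}$$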

where $\bar{D}_{\mathrm{KL}}(\pi \,\|\, \pi') := \mathbb{E}_{s \sim d^\mu}\big[D_{\mathrm{KL}}(\pi(\cdot|s) \,\|\, \pi'(\cdot|s))\big]$, $D_{\mathrm{KL}}$ is the KL divergence, and $\epsilon$ is a trust region size. This subproblem can be solved through approximation and a line search (see Appendix A.8). However, there may be no policy satisfying the constraints of (5). To tackle this issue, the policy must be projected onto a feasible policy set that complies with all constraints, yet such methods are lacking. In light of this issue, we introduce an efficient feasibility handling method for multi-constrained RL problems.
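To make (2) and (4) concrete, the Python sketch below evaluates the mean-std constraint from estimates of $J_{C_k}$ and $J_{S_k}$, using only the standard-library `statistics.NormalDist`. The function names are hypothetical and are not taken from the SDAC code. A useful sanity check: $\phi(\Phi^{-1}(\alpha))/\alpha$ is exactly the CVaR (mean of the upper $\alpha$-tail) of a standard normal, so (2) coincides with the CVaR of $Z$ whenever $Z$ is Gaussian.

```python
import math
from statistics import NormalDist


def mean_std_coefficient(alpha: float) -> float:
    """Return phi(Phi^{-1}(alpha)) / alpha for a risk level alpha in (0, 1]."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    if alpha == 1.0:
        # Phi^{-1}(1) = +inf and phi(+inf) = 0, so the std term vanishes (risk-neutral).
        return 0.0
    std_normal = NormalDist()  # standard normal N(0, 1)
    return std_normal.pdf(std_normal.inv_cdf(alpha)) / alpha


def mean_std_constraint(j_c: float, j_s: float, alpha: float) -> float:
    """Evaluate F_k(pi; alpha) in (4) from J_C = E[Z] and J_S = E[Z^2]."""
    # Var[Z] = E[Z^2] - E[Z]^2; clip small negative values caused by estimation noise.
    variance = max(j_s - j_c ** 2, 0.0)
    return j_c + mean_std_coefficient(alpha) * math.sqrt(variance)


# Example: E[Z] = 1 and E[Z^2] = 5, so Std[Z] = 2.
print(mean_std_constraint(1.0, 5.0, alpha=1.0))  # risk-neutral: 1.0
print(mean_std_constraint(1.0, 5.0, alpha=0.5))  # 1 + 2 * 2 * phi(0), about 2.5958
```

Lowering $\alpha$ increases the coefficient (for example, it is about 1.755 at $\alpha = 0.1$), so the same cost statistics produce a larger, more conservative constraint value.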

Distributional Quantile Critic. Dabney et al. (2018b) have proposed a method for approximating the random variable $Z_R^\pi$ with a quantile distribution. Given a parametric model $\theta: S \times A \to \mathbb{R}^M$, $Z_R^\pi$ can be approximated as $Z_{R,\theta}$, called a distributional quantile critic. The probability density function of $Z_{R,\theta}$ is defined as follows:

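$$\Pr\big(Z_{R,\theta}(s,a) = z\big) := \frac{1}{M}\sum_{m=1}^{M}\delta\big(z - \theta_m(s,a)\big), \tag{6}$$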

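where $M$ is the number of atoms, $\theta_m(s,a)$ is the $m$th atom, $\delta$ is the Dirac function, and $\theta_1(s,a) \le \dots \le \theta_M(s,a)$. The percentile value of the $m$th atom is denoted by $\tau_m$ ($\tau_0 = 0$, $\tau_m = m/M$). In distributional RL, the returns are directly estimated to get value functions, and the target distribution can be calculated from the distributional Bellman operator (Bellemare et al., 2017): $\mathcal{T}^\pi Z_R(s,a) \overset{D}{:=} R(s,a,s') + \gamma Z_R(s',a')$, where $s' \sim P(\cdot|s,a)$ and $a' \sim \pi(\cdot|s')$. The above one-step distributional operator can be expanded to the $n$-step one: $\mathcal{T}_n^\pi Z_R(s,a) \overset{D}{:=} \sum_{t=0}^{n-1}\gamma^t R(s_t,a_t,s_{t+1}) + \gamma^n Z_R(s_n,a_n)$, where $s_0 = s$, $a_0 = a$, $s_{t+1} \sim P(\cdot|s_t,a_t)$, and $a_{t+1} \sim \pi(\cdot|s_{t+1})$ for $t = 0, \dots, n-1$. Then, the critic can be trained to minimize the following quantile regression loss (Dabney et al., 2018b):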

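$$\mathcal{L}(\theta) = \sum_{m=1}^{M}\mathbb{E}_{(s,a) \sim D}\big[\mathcal{L}^m(s,a)\big], \quad \mathcal{L}^m(s,a) := \mathbb{E}_{Z \sim \mathcal{T}^\pi Z_{R,\theta}(s,a)}\Big[\big(\hat{\tau}_m - \mathbb{1}_{\{Z < \theta_m(s,a)\}}\big)\big(Z - \theta_m(s,a)\big)\Big], \tag{7}$$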

where $D$ is a replay buffer, $\hat{\tau}_m := (\tau_{m-1} + \tau_m)/2$, and $\mathcal{L}^m$ denotes the quantile regression loss for a single atom. The distributional quantile critic can be plugged into existing actor-critic algorithms because only the critic modeling part is changed.
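The quantile regression loss (7) can be sketched in NumPy as follows for a single state-action pair. The sketch uses the plain pinball form $(\hat{\tau}_m - \mathbb{1}_{\{Z < \theta_m\}})(Z - \theta_m)$ rather than the Huber-smoothed variant used in QR-DQN (Dabney et al., 2018b), treats the atoms of the one-step target $R + \gamma Z_{R,\theta}(s', a')$ as equally weighted samples, and omits the expectation over the replay buffer. All function names are hypothetical.

```python
import numpy as np


def quantile_midpoints(num_atoms: int) -> np.ndarray:
    """tau_hat_m = (tau_{m-1} + tau_m) / 2 with tau_m = m / M."""
    return (2.0 * np.arange(num_atoms) + 1.0) / (2.0 * num_atoms)


def one_step_target_atoms(reward, next_atoms, gamma):
    """Atoms of T^pi Z_theta(s, a) = R + gamma * Z_theta(s', a') with a' ~ pi(.|s')."""
    return reward + gamma * np.asarray(next_atoms, dtype=float)


def quantile_regression_loss(pred_atoms, target_atoms):
    """Sum over m of E_{Z ~ target}[(tau_hat_m - 1{Z < theta_m}) (Z - theta_m)].

    pred_atoms:   shape (M,), the critic atoms theta_m(s, a).
    target_atoms: shape (N,), equally weighted atoms/samples of the target distribution.
    """
    pred = np.asarray(pred_atoms, dtype=float)
    target = np.asarray(target_atoms, dtype=float)
    tau_hat = quantile_midpoints(len(pred))[:, None]  # (M, 1)
    u = target[None, :] - pred[:, None]              # (M, N): Z - theta_m
    per_pair = (tau_hat - (u < 0.0)) * u             # pinball loss, always >= 0
    return float(per_pair.mean(axis=1).sum())        # mean over Z, sum over atoms m


# Example: a one-step target built from next-state atoms.
print(one_step_target_atoms(reward=1.0, next_atoms=[0.0, 2.0], gamma=0.5))  # atoms 1.0 and 2.0
```

With target samples $0, 1, \dots, 99$ and $M = 2$, placing the atoms near the 25th and 75th percentiles gives a lower loss than collapsing both atoms onto the median; this is the behavior the $\hat{\tau}_m$ weighting is designed to produce.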

3 Proposed Method

The proposed method comprises two key components: 1) a feasibility handling method required for multi-constrained safe RL problems and 2) a target distribution designed to minimize estimation bias. This section sequentially presents these components, followed by a detailed explanation of the proposed method.

3.1 Feasibility Handling For Multiple Constraints

An optimal safe RL policy can be found by iteratively solving the subproblem (5), but the feasible set of (5) can be empty in the infeasible starting cases. To address the feasibility issue in safe RL with multiple constraints, one can select one of the violated constraints and update the policy to minimize it until the feasible region becomes non-empty (Xu et al., 2021); we call this the naive approach. However, it may not be easy to quickly reach the feasibility condition if only one constraint is used at each update step. Therefore, we propose a feasibility handling method that reflects all the constraints simultaneously, called the gradient integration method. The main idea is to find a gradient that reduces the values of the violated constraints while keeping the unviolated constraints satisfied. To find such a gradient, the following quadratic program (QP) can be formulated by linearly approximating the constraints:

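$$g^* = \operatorname*{arg\,min}_{g} \; \frac{1}{2} g^\top H g \quad \text{s.t.} \quad g_k^\top g + c_k \le 0 \;\; \forall k, \quad c_k := \min\Big(\sqrt{2\epsilon\, g_k^\top H^{-1} g_k},\; F_k(\pi_\psi; \alpha) - d_k + \zeta\Big), \tag{8}$$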

where $H$ is the Hessian of the KL divergence between the previous policy and the current policy with parameters $\psi$, $g_k$ is the gradient of the $k$th constraint, $c_k$ is a truncated threshold that makes the $k$th constraint tangent to the trust region, $\epsilon$ is a trust region size, and $\zeta \in \mathbb{R}_{>0}$ is a slack coefficient. We truncate the constraints to make the gradient integration method invariant to the gradient scale; otherwise, constraints with larger gradient scales might dominate the integrated policy gradient. Finally, we update the policy parameters using the clipped gradient as follows:

$$\psi^* = \psi + \min\Big(1, \sqrt{\frac{2\epsilon}{g^{*\top} H g^*}}\Big)\, g^*. \tag{9}$$

Figure 1 illustrates the proposed gradient integration process. In summary, the policy is updated by solving (5); if (5) has no solution, it is updated using the gradient integration method instead. Then, as the following theorem states, the policy reaches the feasibility condition within a finite number of steps.

Theorem 3.1.

Assume that the constraints are differentiable and convex, the gradients of the constraints are $L$-Lipschitz continuous, the eigenvalues of the Hessian are equal to or greater than a positive value $R \in \mathbb{R}_{>0}$, and $\{\psi \mid F_k(\pi_\psi; \alpha) + \zeta < d_k, \; \forall k\} \neq \emptyset$. Then, there exists $E \in \mathbb{R}_{>0}$ such that if $0 < \epsilon \le E$ and a policy is updated by the proposed gradient integration method, all constraints are satisfied within a finite number of steps.

Note that the first two assumptions of Theorem 3.1 are commonly used in multi-task learning (Liu et al., 2021; Yu et al., 2020; Navon et al., 2022), and the assumption on the eigenvalues is used in most trust region-based RL methods (Schulman et al., 2015; Kim and Oh, 2022a), so the assumptions in Theorem 3.1 can be considered reasonable. We provide the proof and show the existence of a solution to (8) in Appendix A.1. The proof shows that the constant $E$ is proportional to $\zeta$, which means that the trust region size should be set smaller as $\zeta$ decreases. We further analyze the worst-case time to satisfy all constraints by comparing the gradient integration method and the naive approach in Appendix A.3. In conclusion, if the policy update rule (5) is infeasible, a finite number of applications of the gradient integration method will make the policy feasible.
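A minimal NumPy sketch of one gradient integration step, (8) followed by (9), is given below. It assumes the constraint gradients $g_k$ are nonzero dense vectors and that $H$ is available as an explicit positive-definite matrix, which is only practical for small parameter vectors; for neural network policies, $H$ would instead be accessed through Hessian-vector products. The QP is solved through its dual by projected coordinate descent, which is our own choice and not necessarily the solver used by SDAC; the function name is hypothetical.

```python
import numpy as np


def gradient_integration_step(grads, violations, hessian, eps, zeta, iters=500):
    """Return the parameter increment psi* - psi following (8)-(9).

    grads:      (K, P) array; row k is g_k, the gradient of the k-th constraint (nonzero).
    violations: (K,) array of F_k(pi_psi; alpha) - d_k.
    hessian:    (P, P) positive-definite Hessian H of the KL divergence.
    """
    grads = np.atleast_2d(np.asarray(grads, dtype=float))
    h_inv_gt = np.linalg.solve(hessian, grads.T)  # columns are H^{-1} g_k, shape (P, K)
    # Truncated thresholds: c_k = min(sqrt(2 eps g_k^T H^{-1} g_k), F_k - d_k + zeta).
    quad = np.einsum("kp,pk->k", grads, h_inv_gt)
    c = np.minimum(np.sqrt(2.0 * eps * quad), np.asarray(violations, dtype=float) + zeta)

    # Dual of  min_g 0.5 g^T H g  s.t.  G g + c <= 0  is  min_{l >= 0} 0.5 l^T Q l - c^T l
    # with Q = G H^{-1} G^T; the primal solution is g* = -H^{-1} G^T l.
    q = grads @ h_inv_gt
    lam = np.zeros(len(c))
    for _ in range(iters):  # projected (Gauss-Seidel) coordinate descent on the dual
        for k in range(len(c)):
            residual = q[k] @ lam - c[k]
            lam[k] = max(0.0, lam[k] - residual / q[k, k])
    g_star = -h_inv_gt @ lam

    # Clipped update (9): shrink the step if it leaves the trust region 0.5 g^T H g <= eps.
    g_h_g = float(g_star @ hessian @ g_star)
    scale = 1.0 if g_h_g == 0.0 else min(1.0, np.sqrt(2.0 * eps / g_h_g))
    return scale * g_star


step = gradient_integration_step([[1.0, 0.0], [1.0, 1.0]], [0.2, 0.3], np.eye(2), eps=10.0, zeta=0.1)
print(step)  # approximately (-0.3, -0.1)
```

In this example ($H = I$, $\zeta = 0.1$, large $\epsilon$), the thresholds are $c = (0.3, 0.4)$ and both linearized constraints become active at the solution, so a single update reduces both violated constraints at once, whereas the naive approach would address them one at a time. Constraints that are already satisfied with slack have $c_k < 0$ and receive a zero dual variable.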

3.2 TD($\lambda$) Target Distribution

The mean-std constraints can be estimated using the distributional quantile critics. Since the estimated constraints obtained from the critics are directly used to update policies in (5), estimating the constraints with low bias is crucial. To reduce the estimation bias of the critics, we propose a target distribution based on the observation that the TD($\lambda$) loss, which is a weighted sum of several quantile regression losses, is identical to the quantile regression loss with a single distribution. A recursive method is then introduced so that the target distribution can be computed in practice. First, after collecting trajectories $(s_t, a_t, s_{t+1}, \dots)$ with a behavioral policy $\mu$, the $n$-step targets for the current policy $\pi$ are estimated as follows:

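$$\hat{Z}^{(n)}_t := \sum_{i=t}^{t+n-1}\gamma^{i-t} R(s_i, a_i, s_{i+1}) + \gamma^{n} Z_{R,\theta}(s_{t+n}, \hat{a}_{t+n}), \tag{10}$$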

where $\hat{a}_{t+n} \sim \pi(\cdot|s_{t+n})$, and the transitions $(s_i, a_i, s_{i+1})$ are those collected by $\mu$. Note that the $n$-step target controls the bias-variance tradeoff through $n$. If $n$ is equal to $1$, the $n$-step target is equivalent to the temporal difference method, which has low variance but high bias. Conversely, as $n$ increases to infinity, it becomes a Monte Carlo estimate, which has high variance but low bias. However, finding a proper $n$ is another cumbersome task. To alleviate this issue, the TD($\lambda$) method (Sutton, 1988) considers the discounted sum of all $n$-step targets. Similar to TD($\lambda$), we define the TD($\lambda$) loss for the distributional quantile critic as the discounted sum of all quantile regression losses with $n$-step targets. Then, the TD($\lambda$) loss for a single atom is approximated using importance sampling of the sampled $n$-step targets in (10) as:

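$$\mathcal{L}^m_{\mathrm{TD}(\lambda)} := (1-\lambda)\sum_{i=0}^{\infty}\lambda^{i}\,\mathbb{E}_{Z \sim \mathcal{T}^\pi_{i+1} Z_{R,\theta}(s_t,a_t)}\Big[\big(\hat{\tau}_m - \mathbb{1}_{\{Z < \theta_m\}}\big)(Z - \theta_m)\Big] \approx (1-\lambda)\sum_{i=0}^{\infty}\lambda^{i}\Big(\prod_{j=t+1}^{t+i}\eta_j\Big)\mathbb{E}_{Z \sim \hat{Z}^{(i+1)}_t}\Big[\big(\hat{\tau}_m - \mathbb{1}_{\{Z < \theta_m\}}\big)(Z - \theta_m)\Big], \tag{11}$$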

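where $\lambda$ is a trace-decay value, $\eta_j := \pi(a_j|s_j)/\mu(a_j|s_j)$ is the importance ratio, and $\theta_m$ is the $m$th atom of $Z_{R,\theta}(s_t, a_t)$. Since the expectation in (11) is linear in the distribution of $Z$, (11) is, up to a positive scale factor, the same as the quantile regression loss with the following single distribution $\hat{Z}^{\mathrm{tot}}_t$, called a TD($\lambda$) target distribution: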

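$$\Pr\big(\hat{Z}^{\mathrm{tot}}_t = z\big) := \frac{1}{N_t}\sum_{i=0}^{\infty}\lambda^{i}\Big(\prod_{j=t+1}^{t+i}\eta_j\Big)\Pr\big(\hat{Z}^{(i+1)}_t = z\big) = \frac{1}{N_t}\Big[\underbrace{\Pr\big(R_t + \gamma Z_{R,\theta}(s_{t+1}, \hat{a}_{t+1}) = z\big)}_{(a)} + \underbrace{\lambda\,\eta_{t+1} N_{t+1}\Pr\big(R_t + \gamma \hat{Z}^{\mathrm{tot}}_{t+1} = z\big)}_{(b)}\Big], \tag{12}$$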

where $R_t := R(s_t, a_t, s_{t+1})$, and $N_t := \sum_{i=0}^{\infty}\lambda^{i}\prod_{j=t+1}^{t+i}\eta_j$ is a normalization factor. The second equality follows from $\hat{Z}^{(i+1)}_t \overset{D}{=} R_t + \gamma \hat{Z}^{(i)}_{t+1}$, which also gives $N_t = 1 + \lambda \eta_{t+1} N_{t+1}$. Hence, once the target for time step $t+1$ is obtained, the target distribution for time step $t$ is the weighted sum of (a) the current one-step TD target and (b) the shifted previous target distribution, so it can be obtained recursively, as shown in (12). Since the definition requires infinite sums, the recursive form is more practical for computing the target. Nevertheless, computing the target recursively requires storing all quantile positions and weights for all time steps, which is not memory-efficient. Therefore, we propose to project the target distribution onto a quantile distribution with a specific number of atoms, $M'$ (we set $M' > M$ to reduce information loss). The overall process to obtain the TD($\lambda$) target distribution is illustrated in Figure 2, and the pseudocode is given in Appendix A.5. Furthermore, we can show that a distribution trained with the proposed target converges to the distribution of $Z_R^\pi$.
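The backward recursion in (12), followed by the projection onto $M'$ atoms, can be sketched for a single trajectory as below. The following are our own assumptions, not details from the paper: the critic atoms at $(s_{t+1}, \hat{a}_{t+1})$ are precomputed, the importance ratios $\eta_t$ are used untruncated, the last step of the trajectory keeps only term (a), terminal states are not handled, and the projection evaluates the inverse CDF of the weighted mixture at the midpoints $\hat{\tau}_m$ (the usual 1-Wasserstein quantile projection). The pseudocode in Appendix A.5 may differ in these details, and all function names are hypothetical.

```python
import numpy as np


def project_to_quantiles(positions, weights, num_atoms):
    """Project weighted atoms onto num_atoms equally weighted quantile atoms.

    Output atom m is the generalized inverse CDF at tau_hat_m = (2m - 1) / (2 M').
    """
    order = np.argsort(positions, kind="stable")
    sorted_pos = positions[order]
    cdf = np.cumsum(weights[order]) / np.sum(weights)   # normalizes by N_t implicitly
    tau_hat = (2.0 * np.arange(num_atoms) + 1.0) / (2.0 * num_atoms)
    idx = np.searchsorted(cdf, tau_hat, side="left")     # first atom with CDF >= tau_hat
    return sorted_pos[np.minimum(idx, len(sorted_pos) - 1)]


def td_lambda_targets(rewards, next_atoms, ratios, gamma, lam, num_target_atoms):
    """Backward recursion for the TD(lambda) target distributions in (12).

    rewards:    (T,) rewards R_t of one trajectory collected by mu.
    next_atoms: (T, M) critic atoms of Z_theta(s_{t+1}, a_hat_{t+1}) with a_hat ~ pi.
    ratios:     (T,) importance ratios eta_t = pi(a_t|s_t) / mu(a_t|s_t).
    Returns a (T, num_target_atoms) array of projected target atoms.
    """
    rewards = np.asarray(rewards, dtype=float)
    next_atoms = np.asarray(next_atoms, dtype=float)
    num_steps, num_critic_atoms = next_atoms.shape
    targets = np.empty((num_steps, num_target_atoms))
    next_norm = 0.0  # N_{t+1}; unused at the last step
    for t in reversed(range(num_steps)):
        # (a) one-step TD target with total weight 1, spread over the critic atoms.
        positions = [rewards[t] + gamma * next_atoms[t]]
        weights = [np.full(num_critic_atoms, 1.0 / num_critic_atoms)]
        norm = 1.0
        if t + 1 < num_steps:
            # (b) shifted previous target with total weight lambda * eta_{t+1} * N_{t+1}.
            mix = lam * ratios[t + 1] * next_norm
            positions.append(rewards[t] + gamma * targets[t + 1])
            weights.append(np.full(num_target_atoms, mix / num_target_atoms))
            norm += mix  # N_t = 1 + lambda * eta_{t+1} * N_{t+1}
        targets[t] = project_to_quantiles(
            np.concatenate(positions), np.concatenate(weights), num_target_atoms)
        next_norm = norm
    return targets


targets = td_lambda_targets(rewards=[0.0, 0.0], next_atoms=[[0.0, 0.0], [10.0, 10.0]],
                            ratios=[1.0, 1.0], gamma=1.0, lam=1.0, num_target_atoms=2)
print(targets[0])  # atoms 0 and 10: half one-step target, half shifted later target
```

Setting $\lambda = 0$ recovers the projected one-step target, larger $\lambda$ mixes in longer returns, and a zero ratio $\eta_{t+1}$ cuts the trace at step $t$. Only $M'$ atoms are kept per time step instead of every mixture component.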

Theorem 3.2.

Let us define a distributional operator $\mathcal{T}^{\mu,\pi}_{\lambda}$ whose probability density function is:

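$$\Pr\big(\mathcal{T}^{\mu,\pi}_{\lambda} Z(s,a) = z\big) := (1-\lambda)\sum_{i=0}^{\infty}\lambda^{i}\,\mathbb{E}_{\mu}\Big[\Big(\prod_{j=1}^{i}\eta_j\Big)\Pr\Big(\sum_{t=0}^{i}\gamma^{t} R(s_t, a_t, s_{t+1}) + \gamma^{i+1} Z(s_{i+1}, \hat{a}_{i+1}) = z\Big)\Big], \tag{13}$$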

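where $(s_0, a_0) = (s, a)$, the trajectory is generated by $\mu$, and $\hat{a}_{i+1} \sim \pi(\cdot|s_{i+1})$. Then, the sequence $Z_{k+1}(s,a) := \mathcal{T}^{\mu,\pi}_{\lambda} Z_k(s,a)$, $\forall (s,a)$, converges to $Z_R^\pi$.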

The TD($\lambda$) target is a quantile distribution version of the distributional operator $\mathcal{T}^{\mu,\pi}_{\lambda}$ in Theorem 3.2. Consequently, according to Theorem 3.2, a distribution updated by minimizing the quantile regression loss with the TD($\lambda$) target converges to the distribution of $Z_R^\pi$ if the number of atoms is infinite. The proof of Theorem 3.2 is provided in Appendix A.4. After calculating the target distribution for all time steps, the critic can be trained to reduce the quantile regression loss with the target distribution. To provide more insight, we experiment with a toy example in Appendix A.6, and the results show that the proposed target distribution can trade off bias and variance through the trace-decay $\lambda$.

3.3 Safe Distributional Actor-Critic

Finally, we describe the proposed method, safe distributional actor-critic (SDAC). After collecting trajectories, the policy is updated by solving (5) through a line search (for more detail, see Appendix A.8). The cost value and cost square value functions in (4) can be obtained from the distributional critics as follows:

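$$Q_{C_k}^\pi(s,a) \approx \frac{1}{M}\sum_{m=1}^{M}\theta_{k,m}(s,a), \quad S_{C_k}^\pi(s,a) \approx \frac{1}{M}\sum_{m=1}^{M}\theta_{k,m}(s,a)^2, \tag{14}$$

where $\theta_{k,m}$ denotes the $m$th atom of the $k$th cost critic.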

If (5) has no solution, the policy is projected onto a feasible region through the proposed gradient integration method. The critics are updated by minimizing the quantile regression loss (7) with the target distribution obtained from (12). The proposed method is summarized in Algorithm 1.
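Equation (14) and the constraint (4) can be combined as in the following sketch, which estimates $J_{C_k}$ and $J_{S_k}$ by averaging the critic moments over a batch of state-action pairs with $a \sim \pi(\cdot|s)$. The risk coefficient $\phi(\Phi^{-1}(\alpha))/\alpha$ is passed in precomputed. How SDAC samples states for its surrogates (Appendix A.7) is not reproduced here, and the function names are hypothetical. Note that $\sqrt{J_{S_k} - J_{C_k}^2}$ pools both the spread of the return within each pair and the spread across the sampled pairs, matching the role of $\mathrm{Std}$ in (4).

```python
import numpy as np


def cost_moments_from_atoms(atoms):
    """Per-pair estimates of Q_C and S_C in (14).

    atoms: (B, M) array whose row b holds the atoms theta_{k,m}(s_b, a_b).
    """
    atoms = np.asarray(atoms, dtype=float)
    q_c = atoms.mean(axis=1)         # (1/M) sum_m theta_{k,m}
    s_c = (atoms ** 2).mean(axis=1)  # (1/M) sum_m theta_{k,m}^2
    return q_c, s_c


def estimate_mean_std_constraint(atoms, risk_coeff):
    """Batch estimate of F_k in (4), with risk_coeff = phi(Phi^{-1}(alpha)) / alpha."""
    q_c, s_c = cost_moments_from_atoms(atoms)
    j_c, j_s = q_c.mean(), s_c.mean()  # Monte Carlo estimates of J_C and J_S
    return float(j_c + risk_coeff * np.sqrt(max(j_s - j_c ** 2, 0.0)))


atoms = np.array([[0.0, 2.0], [2.0, 4.0]])
print(cost_moments_from_atoms(atoms))            # Q_C = [1, 3], S_C = [2, 10]
print(estimate_mean_std_constraint(atoms, 1.0))  # 2 + sqrt(6 - 4), about 3.414
```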

4 Related Work

Safe Reinforcement Learning. García and Fernández (2015) and Gu et al. (2022) have surveyed and categorized safe RL methodologies from various perspectives. In this paper, we introduce safe RL methods according to how they update policies to reflect safety constraints. First, trust region-based safe RL methods (Achiam et al., 2017; Yang et al., 2020; Kim and Oh, 2022a) find policy update directions by approximating the safe RL problem as a linear-quadratic constrained linear program and update policies through a line search. Yang et al. (2020) also employ projection to meet a constraint; however, their method is limited to a single constraint and is not shown to satisfy the constraint in the infeasible starting case. Second, Lagrangian-based methods (Stooke et al., 2020; Yang et al., 2021; Liu et al., 2020) convert the safe RL problem to a dual problem and update the policy and dual variables simultaneously. Last, expectation-maximization (EM) based methods (Liu et al., 2022; Zhang et al., 2022) find non-parametric policy distributions by solving the safe RL problem in E-steps and fit parametric policies to the found non-parametric distributions in M-steps. There are also ways to reflect safety other than through policy updates. Qin et al. (2021); Lee et al. (2022) find optimal state or state-action distributions that satisfy constraints, and Bharadhwaj et al. (2021); Thananjeyan et al. (2021) reflect safety during exploration by executing only safe action candidates. In the experiments, only the safe RL methods of the policy update approach are compared with the proposed method.

Distributional TD($\lambda$). TD($\lambda$) (Precup et al., 2000) can be extended to the distributional critic to trade off bias and variance. Gruslys et al. (2018) have proposed a method to obtain target distributions by mixing $n$-step distributions, but the method is applicable only in discrete action spaces. Nam et al. (2021) have proposed a method to obtain target distributions using sampling to apply to continuous action spaces, but it is only for on-policy settings. The method proposed by Tang et al. (2022) updates the critics using newly defined distributional TD errors rather than target distributions. This method is applicable to off-policy settings but has the disadvantage that memory usage increases linearly with the number of TD error steps. In contrast to these methods, the proposed method is memory-efficient and applicable to continuous action spaces under off-policy settings.

Gradient Integration. The proposed feasibility handling method utilizes a gradient integration method, which is widely used in multi-task learning (MTL). The gradient integration method finds a single gradient that improves all tasks by using the gradients of all tasks. Yu et al. (2020) have proposed a projection-based gradient integration method, which is guaranteed to converge to Pareto-stationary sets. The method proposed by Liu et al. (2021) can reflect user preference, and Navon et al. (2022) have proposed a gradient-scale invariant method to prevent the training process from being biased toward a few tasks. The proposed method can be viewed as a mixture of projection and scale-invariant methods, as gradients are clipped and projected onto a trust region.

5 Experiments

We evaluate the safety performance of the proposed method in single- and multi-constrained robotic tasks. For single constraints, the agent performs four tasks provided by Safety Gym (Ray et al., 2019), and for multi-constraints, it performs bipedal and quadrupedal locomotion tasks.

5.1 Safety Gym

Tasks. We employ two robots, point and car, to perform goal and button tasks in the Safety Gym. The goal task is to control a robot toward a randomly spawned goal without passing through hazard regions. The button task is to click a randomly designated button using a robot, where not only hazard regions but also dynamic obstacles exist. Agents get a cost when touching undesignated buttons and obstacles or entering hazard regions. There is only one constraint for the Safety Gym tasks, and it is defined using (4) with the sum of costs. Constraint violations (CVs) are counted when the cost sum exceeds the threshold. For more details, see Appendix B.

Baselines. Safe RL methods based on various types of policy updates are used as baselines. For the trust region-based methods, we use constrained policy optimization (CPO) (Achiam et al., 2017) and off-policy trust-region CVaR (OffTRC) (Kim and Oh, 2022a), which extends CPO to an off-policy, mean-std constrained version. For the Lagrangian-based method, we use the distributional worst-case soft actor-critic (WCSAC) (Yang et al., 2022), and for the EM-based method, constrained variational policy optimization (CVPO) (Liu et al., 2022). Since WCSAC, OffTRC, and the proposed method, SDAC, use risk-averse constraints, we evaluate them with both a risk-averse $\alpha < 1$ and $\alpha = 1$ (when $\alpha = 1$, the constraint is identical to the risk-neutral constraint).

Results. The final reward sums, cost rates, and total numbers of CVs are shown in Figure 3(a), and the training curves are provided in Appendix C.1. Results are better when the reward sum is high and the cost rate and total CVs are low. SDAC with the risk-averse $\alpha < 1$ satisfies the constraints in all tasks and shows on average 1.78 times fewer total CVs than the second-best algorithm. Moreover, its reward sums rank in the middle or upper range, so its overall safety performance is of high quality. SDAC with $\alpha = 1$ (risk-neutral constraints) yields cost rates almost equal to the thresholds, except in the car button task. In the car button task, the constraint is not satisfied, but with the risk-averse $\alpha$, SDAC achieves the lowest total CVs and the highest reward sum among all methods. As for the reward sum, SDAC is the highest in the point goal and car button tasks, and WCSAC is the highest in the rest. However, WCSAC appears to lose its risk-averse properties, since its cost rates do not change significantly with $\alpha$. This is because WCSAC defines constraints not as risk measures of cost returns but as expectations of risk measures (Yang et al., 2022). OffTRC has lower safety performance than SDAC in most cases because, unlike SDAC, it does not use distributional critics. Finally, CVPO and CPO are on-policy methods, so they are less sample-efficient than the other methods.

5.2 Locomotion Tasks

Tasks. The locomotion tasks are to train robots to follow given linear and angular velocity commands. Mini-Cheetah from MIT (Katz et al., 2019) and Laikago from Unitree (Wang, 2018) are used as quadrupedal robots, and Cassie from Agility Robotics (Xie et al., 2018) is used as a bipedal robot. To perform the locomotion tasks, robots must keep their balance, remain standing, and step their feet so that they can move in any direction. Therefore, we define three constraints. The first is to keep the balance so that the body angle does not deviate from zero, and the second is to keep the height of the center of mass (CoM) above a threshold. The third is to match the current foot contact state with a predefined foot contact timing. The reward is defined as the negative norm of the difference between the command and the current velocity. CVs are counted when at least one cost rate exceeds its threshold. For more details, see Appendix B.

Baselines. The baseline methods are the same as in the Safety Gym tasks, except that CVPO is excluded because it is technically challenging to scale to multiple constraints. We set $\alpha$ to $1.0$ for the risk-averse constrained methods (OffTRC, WCSAC, and SDAC) to focus on measuring multi-constraint handling performance.

Results. Figure 4(a-c) presents the training curves. SDAC shows the highest reward sums and the lowest total CVs in all tasks. In particular, SDAC requires on average 1.93 times fewer steps to satisfy all constraints than the second-best algorithm. Trust region methods (OffTRC, CPO) stably satisfy constraints, but they are not efficient since they handle constraints with the naive approach. WCSAC, a Lagrangian method, fails to keep the constraints and shows the lowest reward sums. This is because the Lagrange multipliers can hinder training stability due to their concurrent update with the policy (Stooke et al., 2020).

5.3 Ablation Study

We conduct ablation studies to examine whether the proposed target distribution lowers the estimation bias and whether the proposed gradient integration quickly reaches the feasibility condition. In Figure 3(b), the number of CVs decreases as $\lambda$ increases, which indicates that the bias of the constraint estimation decreases. However, the score also decreases due to the larger variance, showing that $\lambda$ can adjust the bias-variance tradeoff. In Figure 4(d), the proposed gradient integration method is compared with the naive approach, which minimizes the constraints in order from the first to the third constraint, as described in Section 3.1. The proposed method reaches the feasibility condition faster than the naive approach and shows stable training curves because it reflects all constraints concurrently. Additionally, we analyze the distributional critics in Appendix C.2 and the hyperparameters, such as the trust region size, in Appendix C.3. Furthermore, we analyze the sensitivity of traditional RL algorithms to the reward configuration in Appendix D, emphasizing the advantage of safe RL, which does not require reward tuning.

6 Limitation

A limitation of the proposed method is that the computational complexity of the gradient integration is proportional to the square of the number of constraints; a qualitative analysis is presented in Appendix E.1. We also conducted quantitative analyses in Appendix E.2 by measuring wall-clock training time. In the Mini-Cheetah task, which has three constraints, the training time of SDAC is the third fastest among the four safe RL algorithms. Gradient integration is not applied when the policy satisfies the constraints, so it may not account for a significant proportion of training time. However, its influence can become dominant as the number of constraints increases. To resolve this limitation, the calculation can be sped up by stochastically selecting a subset of constraints (Liu et al., 2021) or by reducing the frequency of policy updates (Navon et al., 2022). The other limitation is that the mean-std measure defined in (2) is not a coherent risk measure. As a result, mean-std constraints can be regarded as reducing uncertainty rather than risk, although we experimentally showed that constraint violations are efficiently reduced. To resolve this, we can use the CVaR constraint, which can be estimated using an auxiliary variable, as done by Chow et al. (2017). However, this solution can destabilize the training process due to the auxiliary variable, as observed in the experiments of Kim and Oh (2022b). Hence, a stabilization technique should be developed to employ the CVaR constraint.

7 Conclusion

We have presented a trust region-based safe distributional RL method, called SDAC. The locomotion tasks verify that the proposed method efficiently satisfies multiple constraints using the gradient integration. Moreover, constraints can be stably satisfied in various tasks due to the low-bias distributional critics trained using the proposed target distributions. In addition, the proposed method is analyzed from multiple perspectives through various ablation studies. However, to reduce the computational complexity, we plan to devise more efficient methods for handling large numbers of constraints in future work.

Acknowledgments and Disclosure of Funding

This work was partly supported by Institute of Information & Communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (MSIT) (No. 2019-0-01190, [SW Star Lab] Robot Learning: Efficient, Safe, and Socially-Acceptable Machine Learning, 34%), Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Science and ICT (NRF-2022R1A2C2008239, General-Purpose Deep Reinforcement Learning Using Metaverse for Real World Applications, 33%), and Institute of Information & Communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (MSIT) (No. 2021-0-01341, AI Graduate School Program, CAU, 33%).

References

- Achiam et al. [2017] J. Achiam, D. Held, A. Tamar, and P. Abbeel. Constrained policy optimization. In Proceedings of International Conference on Machine Learning, pages 22–31, 2017.

- Agarwal et al. [2021] A. Agarwal, S. M. Kakade, J. D. Lee, and G. Mahajan. On the theory of policy gradient methods: Optimality, approximation, and distribution shift. The Journal of Machine Learning Research, 22(1):4431–4506, 2021.

- Altman [1999] E. Altman. Constrained Markov decision processes, volume 7. CRC Press, 1999.

- Bai et al. [2022] Q. Bai, A. S. Bedi, M. Agarwal, A. Koppel, and V. Aggarwal. Achieving zero constraint violation for constrained reinforcement learning via primal-dual approach. Proceedings of the AAAI Conference on Artificial Intelligence, 36(4), 2022.

- Bellemare et al. [2017] M. G. Bellemare, W. Dabney, and R. Munos. A distributional perspective on reinforcement learning. In Proceedings of International Conference on Machine Learning, pages 449–458, 2017.

- Bellemare et al. [2023] M. G. Bellemare, W. Dabney, and M. Rowland. Distributional Reinforcement Learning. MIT Press, 2023. http://www.distributional-rl.org.

- Bharadhwaj et al. [2021] H. Bharadhwaj, A. Kumar, N. Rhinehart, S. Levine, F. Shkurti, and A. Garg. Conservative safety critics for exploration. In Proceedings of International Conference on Learning Representations, 2021.

- Biewald [2020] L. Biewald. Experiment tracking with weights and biases, 2020. URL https://www.wandb.com/. Software available from wandb.com.

- Chow et al. [2017] Y. Chow, M. Ghavamzadeh, L. Janson, and M. Pavone. Risk-constrained reinforcement learning with percentile risk criteria. Journal of Machine Learning Research, 18(1):6070–6120, 2017.

- Dabney et al. [2018a] W. Dabney, G. Ostrovski, D. Silver, and R. Munos. Implicit quantile networks for distributional reinforcement learning. In Proceedings of International Conference on Machine Learning, pages 1096–1105, 2018a.

- Dabney et al. [2018b] W. Dabney, M. Rowland, M. Bellemare, and R. Munos. Distributional reinforcement learning with quantile regression. Proceedings of the AAAI Conference on Artificial Intelligence, 32(1), 2018b.

- Dennis and Schnabel [1996] J. E. Dennis and R. B. Schnabel. Numerical Methods for Unconstrained Optimization and Nonlinear Equations. Society for Industrial and Applied Mathematics, 1996.

- García and Fernández [2015] J. García and F. Fernández. A comprehensive survey on safe reinforcement learning. Journal of Machine Learning Research, 16(1):1437–1480, 2015.

- Gruslys et al. [2018] A. Gruslys, W. Dabney, M. G. Azar, B. Piot, M. Bellemare, and R. Munos. The reactor: A fast and sample-efficient actor-critic agent for reinforcement learning. In Proceedings of International Conference on Learning Representations, 2018.

- Gu et al. [2022] S. Gu, L. Yang, Y. Du, G. Chen, F. Walter, J. Wang, Y. Yang, and A. Knoll. A review of safe reinforcement learning: Methods, theory and applications. arXiv preprint arXiv:2205.10330, 2022.

- Haarnoja et al. [2018] T. Haarnoja, A. Zhou, P. Abbeel, and S. Levine. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In Proceedings of International Conference on Machine Learning, pages 1861–1870, 2018.

- Katz et al. [2019] B. Katz, J. D. Carlo, and S. Kim. Mini cheetah: A platform for pushing the limits of dynamic quadruped control. In Proceedings of International Conference on Robotics and Automation, pages 6295–6301, 2019.

- Kim and Oh [2022a] D. Kim and S. Oh. Efficient off-policy safe reinforcement learning using trust region conditional value at risk. IEEE Robotics and Automation Letters, 7(3):7644–7651, 2022a.

- Kim and Oh [2022b] D. Kim and S. Oh. TRC: Trust region conditional value at risk for safe reinforcement learning. IEEE Robotics and Automation Letters, 7(2):2621–2628, 2022b.

- Kuznetsov et al. [2020] A. Kuznetsov, P. Shvechikov, A. Grishin, and D. Vetrov. Controlling overestimation bias with truncated mixture of continuous distributional quantile critics. In Proceedings of International Conference on Machine Learning, pages 5556–5566, 2020.

- Lee et al. [2020] J. Lee, J. Hwangbo, L. Wellhausen, V. Koltun, and M. Hutter. Learning quadrupedal locomotion over challenging terrain. Science Robotics, 5(47):eabc5986, 2020.

- Lee et al. [2022] J. Lee, C. Paduraru, D. J. Mankowitz, N. Heess, D. Precup, K.-E. Kim, and A. Guez. COptiDICE: Offline constrained reinforcement learning via stationary distribution correction estimation. In Proceedings of International Conference on Learning Representations, 2022.

- Liu et al. [2021] B. Liu, X. Liu, X. Jin, P. Stone, and Q. Liu. Conflict-averse gradient descent for multi-task learning. In Advances in Neural Information Processing Systems, pages 18878–18890, 2021.

- Liu et al. [2020] Y. Liu, J. Ding, and X. Liu. IPO: Interior-point policy optimization under constraints. Proceedings of the AAAI Conference on Artificial Intelligence, 34(04):4940–4947, 2020.

- Liu et al. [2022] Z. Liu, Z. Cen, V. Isenbaev, W. Liu, S. Wu, B. Li, and D. Zhao. Constrained variational policy optimization for safe reinforcement learning. In Proceedings of International Conference on Machine Learning, pages 13644–13668, 2022.

- Meng et al. [2022] W. Meng, Q. Zheng, Y. Shi, and G. Pan. An off-policy trust region policy optimization method with monotonic improvement guarantee for deep reinforcement learning. IEEE Transactions on Neural Networks and Learning Systems, 33(5):2223–2235, 2022.

- Merel et al. [2020] J. Merel, S. Tunyasuvunakool, A. Ahuja, Y. Tassa, L. Hasenclever, V. Pham, T. Erez, G. Wayne, and N. Heess. Catch & carry: Reusable neural controllers for vision-guided whole-body tasks. ACM Transactions on Graphics, 39(4), 2020.

- Miki et al. [2022] T. Miki, J. Lee, J. Hwangbo, L. Wellhausen, V. Koltun, and M. Hutter. Learning robust perceptive locomotion for quadrupedal robots in the wild. Science Robotics, 7(62):eabk2822, 2022.

- Nam et al. [2021] D. W. Nam, Y. Kim, and C. Y. Park. GMAC: A distributional perspective on actor-critic framework. In Proceedings of International Conference on Machine Learning, pages 7927–7936, 2021.

- Navon et al. [2022] A. Navon, A. Shamsian, I. Achituve, H. Maron, K. Kawaguchi, G. Chechik, and E. Fetaya. Multi-task learning as a bargaining game. arXiv preprint arXiv:2202.01017, 2022.

- Peng et al. [2021] X. B. Peng, Z. Ma, P. Abbeel, S. Levine, and A. Kanazawa. AMP: Adversarial motion priors for stylized physics-based character control. ACM Transactions on Graphics, 40(4), 2021.

- Precup et al. [2000] D. Precup, R. S. Sutton, and S. P. Singh. Eligibility traces for off-policy policy evaluation. In Proceedings of International Conference on Machine Learning, pages 759–766, 2000.

- Qin et al. [2021] Z. Qin, Y. Chen, and C. Fan. Density constrained reinforcement learning. In Proceedings of International Conference on Machine Learning, pages 8682–8692, 2021.

- Ray et al. [2019] A. Ray, J. Achiam, and D. Amodei. Benchmarking Safe Exploration in Deep Reinforcement Learning. 2019.

- Rowland et al. [2018] M. Rowland, M. Bellemare, W. Dabney, R. Munos, and Y. W. Teh. An analysis of categorical distributional reinforcement learning. In Proceedings of International Conference on Artificial Intelligence and Statistics, pages 29–37, 2018.

- Rudin et al. [2022] N. Rudin, D. Hoeller, P. Reist, and M. Hutter. Learning to walk in minutes using massively parallel deep reinforcement learning. In Proceedings of Conference on Robot Learning, pages 91–100, 2022.

- Schulman et al. [2015] J. Schulman, S. Levine, P. Abbeel, M. Jordan, and P. Moritz. Trust region policy optimization. In Proceedings of International Conference on Machine Learning, pages 1889–1897, 2015.

- Stooke et al. [2020] A. Stooke, J. Achiam, and P. Abbeel. Responsive safety in reinforcement learning by PID lagrangian methods. In Proceedings of International Conference on Machine Learning, pages 9133–9143, 2020.

- Sutton [1988] R. S. Sutton. Learning to predict by the methods of temporal differences. Machine Learning, 3(1):9–44, 1988.

- Tang et al. [2022] Y. Tang, R. Munos, M. Rowland, B. Avila Pires, W. Dabney, and M. Bellemare. The nature of temporal difference errors in multi-step distributional reinforcement learning. In Advances in Neural Information Processing Systems, pages 30265–30276, 2022.

- Thananjeyan et al. [2021] B. Thananjeyan, A. Balakrishna, S. Nair, M. Luo, K. Srinivasan, M. Hwang, J. E. Gonzalez, J. Ibarz, C. Finn, and K. Goldberg. Recovery RL: Safe reinforcement learning with learned recovery zones. IEEE Robotics and Automation Letters, 6(3):4915–4922, 2021.

- Todorov et al. [2012] E. Todorov, T. Erez, and Y. Tassa. MuJoCo: A physics engine for model-based control. In Proceedings of International Conference on Intelligent Robots and Systems, pages 5026–5033, 2012.

- Wang [2018] X. Wang. Unitree-Laikago Pro. http://www.unitree.cc/e/action/ShowInfo.php?classid=6&id=355, 2018.

- Xie et al. [2018] Z. Xie, G. Berseth, P. Clary, J. Hurst, and M. van de Panne. Feedback control for Cassie with deep reinforcement learning. In Proceedings of International Conference on Intelligent Robots and Systems, pages 1241–1246, 2018.

- Xu et al. [2021] T. Xu, Y. Liang, and G. Lan. CRPO: A new approach for safe reinforcement learning with convergence guarantee. In Proceedings of International Conference on Machine Learning, pages 11480–11491, 2021.

- Yang et al. [2021] Q. Yang, T. D. Simão, S. H. Tindemans, and M. T. J. Spaan. WCSAC: Worst-case soft actor critic for safety-constrained reinforcement learning. Proceedings of the AAAI Conference on Artificial Intelligence, 35(12):10639–10646, 2021.

- Yang et al. [2022] Q. Yang, T. D. Simão, S. H. Tindemans, and M. T. J. Spaan. Safety-constrained reinforcement learning with a distributional safety critic. Machine Learning, 112:859–887, 2022.

- Yang et al. [2020] T.-Y. Yang, J. Rosca, K. Narasimhan, and P. J. Ramadge. Projection-based constrained policy optimization. In Proceedings of International Conference on Learning Representations, 2020.

- Yu et al. [2020] T. Yu, S. Kumar, A. Gupta, S. Levine, K. Hausman, and C. Finn. Gradient surgery for multi-task learning. In Advances in Neural Information Processing Systems, pages 5824–5836, 2020.

- Zhang et al. [2022] H. Zhang, Y. Lin, S. Han, S. Wang, and K. Lv. Conservative distributional reinforcement learning with safety constraints. arXiv preprint arXiv:2201.07286, 2022.

- Zhang and Weng [2022] J. Zhang and P. Weng. Safe distributional reinforcement learning. In J. Chen, J. Lang, C. Amato, and D. Zhao, editors, Proceedings of International Conference on Distributed Artificial Intelligence, pages 107–128, 2022.

Appendix A Algorithm Details

A.1 Proof of Theorem 3.1

We denote the policy parameter space as , the parameter at the th iteration as , the Hessian matrix as , and the th constraint as . As we focus on the th iteration, the following notations are used for brevity: and . The proposed gradient integration at th iteration is defined as the following quadratic program (QP):

| (15) |

where . In the remainder of this section, we introduce the assumptions and new definitions, discuss the existence of a solution to (15), show convergence to the feasibility condition in the case of varying step sizes, and provide the proof of Theorem 3.1.

Assumption. 1) Each is differentiable and convex, 2) is -Lipschitz continuous, 3) all eigenvalues of the Hessian matrix are equal to or greater than for , and 4) .

Definition. Using the Cholesky decomposition, the Hessian matrix can be expressed as where is a lower triangular matrix. By introducing new terms, and , the following is satisfied: . Additionally, we define the in-boundary and out-boundary sets as:

The minimum of in is denoted as , and the maximum of in is denoted as . Also, and are denoted as and , respectively, and we can say that is positive.

Lemma A.1.

For all , the minimum value of is positive.

Proof.

Assume that there exists such that is equal to zero at a policy parameter , i.e., . Since is convex, is a minimum point of , . However, as , so is positive by contradiction. Hence, the minimum of is also positive. ∎

Lemma A.2.

A solution of (15) always exists.

Proof.

There exists a policy parameter due to the assumptions. Let . Then, the following inequality holds.

Since satisfies all constraints of (15), the feasible set is non-empty and convex. Also, is positive definite, so the QP has a unique solution. ∎
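Because the QP (15) has one inequality per constraint, its dual has only K variables, however large the parameter space is. The following is a minimal NumPy sketch of how such a QP can be solved. It assumes that each constraint of (15) can be written in the linearized form g_kᵀ g + c_k ≤ 0 with given offsets c_k; the exact offsets used in (15) are not reproduced here. The function name `gradient_integration_step` is hypothetical, and the solver (projected coordinate ascent on the dual, i.e., Hildreth's method) is our choice for illustration, not necessarily the one the authors use.

```python
import numpy as np


def gradient_integration_step(H, G, c, sweeps=500):
    """Solve  min_g 0.5 g^T H g  s.t.  G g + c <= 0  through its dual (Hildreth's method).

    H: (d, d) symmetric positive-definite trust-region Hessian.
    G: (K, d) matrix whose k-th row is the (nonzero) gradient of the k-th constraint.
    c: (K,) offsets; row k encodes the linearized condition g_k^T g + c_k <= 0.
    """
    H_inv_Gt = np.linalg.solve(H, G.T)   # H^{-1} G^T, shape (d, K)
    Q = G @ H_inv_Gt                      # dual Hessian G H^{-1} G^T (positive diagonal)
    lam = np.zeros(len(c))
    # Projected coordinate ascent on the concave dual:
    #   max_{lam >= 0}  lam^T c - 0.5 lam^T Q lam
    for _ in range(sweeps):
        for k in range(len(c)):
            grad_k = c[k] - Q[k] @ lam
            lam[k] = max(0.0, lam[k] + grad_k / Q[k, k])
    # Primal recovery from stationarity: H g + G^T lam = 0
    return -H_inv_Gt @ lam, lam
```

For example, with H = I and a single constraint gradient (1, 1) with offset 1, the step is (-0.5, -0.5): the smallest move that reduces the linearized constraint by the required amount.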

Lemma A.2 shows the existence of a solution to (15). Next, we show the convergence of the proposed gradient integration method in the case of varying step sizes.

Lemma A.3.

If and a policy is updated by , where and , the policy satisfies for within a finite time.

Proof.

We can reformulate the step size as , where and . Since the eigenvalues of are equal to or greater than and is symmetric and positive definite, is positive semi-definite. Hence, is satisfied. Using this fact, the following inequality holds:

Now, we will show that enters in a finite time for and that the th constraint is satisfied for . Thus, we divide into two cases, 1) and 2) . For the first case, , so the following inequality holds:

| (16) | ||||

The value of decreases strictly with each update step according to (16). Hence, can reach by repeatedly updating the policy. We now check whether the constraint is satisfied for the second case. For the second case, the following inequality holds by applying :

Since ,

Since and ,

Hence, , which means that the th constraint is satisfied if . As reaches for within a finite time according to (16), the policy can satisfy all constraints within a finite time. ∎

Lemma A.3 shows convergence to the feasibility condition in the case of varying step sizes. Next, we introduce a lemma showing that is bounded by , and finally prove Theorem 3.1, which can be considered a special case of varying step sizes.

Lemma A.4.

There exists such that .

Proof.

Let us define the following sets:

| (17) | ||||

where . Using these sets, the following vectors can be defined: . Now, we will show that is bounded above and for sufficiently small .

First, the following is satisfied for a sufficiently large :

| (18) |

Since , where is defined in Lemma A.2, for . Therefore, is bounded above.

Second, let us define the following trust region size:

| (19) |

If , , the following is satisfied:

| (20) |

Thus, is not empty. If we define , the following is satisfied:

| (21) |

Then, if , since . Consequently, by defining a trust region size:

| (22) |

for . Therefore, if .

Finally, since is bounded above and proportional to for sufficiently small , there exists a constant such that . ∎

Theorem 3.1 (see Section 3 for the statement).

Proof.

The proposed step size is , and the sufficient conditions that guarantee convergence according to Lemma A.3 are as follows:

The second condition is self-evident. To satisfy the third condition, the proposed step size should satisfy the following:

If , the following inequality holds:

Hence, if , the sufficient conditions are satisfied. ∎

A.2 Toy Example for Gradient Integration Method

The problem of the toy example in Figure 1 is defined as:

| (23) |

where there are two linear constraints. The initial points for the naive and gradient integration methods are and , which do not satisfy the two constraints. In both methods, we use the identity matrix as the Hessian matrix for the trust region and set the trust region size to . The naive method minimizes the constraints in order, from the first to the second constraint.
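Problem (23) and its initial points are not reproduced here, so the following sketch uses a hypothetical pair of orthogonal linear constraints, x₁ ≤ 0 and x₂ ≤ 0. It also uses the identity Hessian and a trust region of radius 1. For linear constraints, we assume the offsets c_k = F_k(x) in the QP, so that its solution points at the nearest feasible point. The step is then clipped to the trust region. The naive method steps toward the boundary of the first violated constraint only. All names are hypothetical.

```python
import numpy as np

# Hypothetical toy problem (not the problem in (23)): F_k(x) = a_k^T x - b_k <= 0
A = np.array([[1.0, 0.0],
              [0.0, 1.0]])
b = np.array([0.0, 0.0])
RADIUS = 1.0  # with H = I, the trust region is a Euclidean ball of this radius


def violations(x):
    return A @ x - b


def gi_direction(x, sweeps=200):
    """min 0.5 ||d||^2 s.t. a_k^T d + F_k(x) <= 0 for all k, solved by dual coordinate ascent."""
    c = violations(x)
    Q = A @ A.T
    lam = np.zeros(len(c))
    for _ in range(sweeps):
        for k in range(len(c)):
            lam[k] = max(0.0, lam[k] + (c[k] - Q[k] @ lam) / Q[k, k])
    return -A.T @ lam


def naive_direction(x, tol=1e-9):
    """Step onto the boundary of the first violated constraint only."""
    k = int(np.argmax(violations(x) > tol))
    a = A[k]
    return -(violations(x)[k] / (a @ a)) * a


def run(x, direction_fn, tol=1e-9, max_steps=100):
    """Repeat clipped steps until every constraint holds; return the final point and step count."""
    steps = 0
    while np.any(violations(x) > tol) and steps < max_steps:
        d = direction_fn(x)
        norm = np.linalg.norm(d)
        if norm > RADIUS:
            d = d * (RADIUS / norm)  # clip the step to the trust region
        x = x + d
        steps += 1
    return x, steps
```

Starting from x = (3, 4), the gradient integration step heads straight for the nearest feasible point (the origin, at distance 5) and needs 5 steps. The naive method first spends 3 steps on x₁ and then 4 on x₂, for 7 steps in total.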

A.3 Analysis of Worst-Case Time to Satisfy All Constraints

To analyze the sample complexity, we consider a tabular MDP and use softmax policy parameterization as follows (for more details, see [Xu et al., 2021]):

| (24) |

According to Agarwal et al. [2021], the natural policy gradient (NPG) update is as follows:

| (25) |

where is a step size, is the vector expression of the advantage function, and . Analyzing the sample complexity of trust region-based methods is challenging because their step size is not fixed, so we modify the gradient integration method to use the NPG as follows:

| (26) | ||||

In the remainder, we abbreviate , , and as , , and , respectively. Since always exists due to Lemma A.2, we can write the policy using Lagrange multipliers as follows:

| (27) | ||||

where is a normalization factor, and for . The naive approach can also be written in this form, except that is a one-hot vector whose -th value is one only for corresponding to the randomly selected constraint. Then, we obtain the following:

| (28) | ||||

We also obtain the following using Lemma 7 in Xu et al. [2021]:

| (29) | ||||

where is an optimal policy, and is the maximum value of costs. If , . Thus, . If the policy does not satisfy the constraints until step, the following inequality holds by summing the above inequalities from to :

| (30) |

Let us denote as ; we then obtain . Consequently, the maximum can be expressed as:

| (31) |

where we abbreviate as . Finally, the policy can reach the feasible region within steps.
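For the tabular softmax parameterization (24), an NPG step reduces to shifting the logits by the scaled advantage [Agarwal et al., 2021]. The sketch below assumes that the λ-weighted update (27) takes the corresponding multiplicative-weights form. In that form, the cost advantages are combined with the nonnegative weights λ_k, and the policy moves to reduce the combined cost. The function names are hypothetical. The naive approach corresponds to passing a one-hot λ.

```python
import numpy as np


def softmax_policy(theta):
    """Tabular softmax policy pi(a|s) from logits theta of shape (S, A)."""
    z = theta - theta.max(axis=1, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def weighted_npg_update(theta, cost_advantages, lam, eta, gamma):
    """One softmax NPG step that reduces a lambda-weighted sum of cost advantages.

    cost_advantages: (K, S, A) advantage tables, one per constraint; lam: (K,) nonnegative weights.
    The new policy is proportional to pi(a|s) * exp(-eta / (1 - gamma) * sum_k lam_k A_k(s, a)).
    """
    combined = np.tensordot(lam, cost_advantages, axes=1)  # (S, A)
    return theta - eta / (1.0 - gamma) * combined
```

Because softmax normalizes each row, subtracting the scaled advantage from the logits is the same as multiplying π(a|s) by exp(-η Σ_k λ_k A_k(s, a) / (1 - γ)) and renormalizing. The renormalization plays the role of the normalization factor in (27).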

The worst-case time of the naive approach is given by the same expression, except for the part. In the naive approach, is a one-hot vector, as mentioned earlier; in other words, only differs. Let us assume that the advantage vector follows a normal distribution. Then, the variance of is smaller when has distributed values than when it is one-hot. As a result, the reciprocal of the 2-norm becomes larger, which decreases the worst-case time. From this perspective, the gradient integration method has a benefit over the naive approach because it reduces the variance of the advantage vector. The advantage vector does not actually follow a normal distribution, so we cannot formally claim that the worst-case time of the proposed method is smaller than that of the naive method. Nevertheless, this analysis provides insight into the benefit of the gradient integration method.
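The variance argument can be checked numerically under its own assumption. The sketch below draws independent standard normal advantage entries, one per constraint (an assumption, as in the text). It compares the variance of Σ_k λ_k A_k for a one-hot λ (the naive approach) and for a uniform λ with the same total mass (our normalization choice for a fair comparison). `weighted_sum_variance` is a hypothetical name.

```python
import numpy as np


def weighted_sum_variance(lam, num_samples=200_000, seed=0):
    """Empirical variance of sum_k lam_k * A_k with i.i.d. standard normal A_k (an assumption)."""
    rng = np.random.default_rng(seed)
    advantages = rng.standard_normal((num_samples, len(lam)))  # one column per constraint
    return float(np.var(advantages @ lam))


K = 3
one_hot = np.eye(K)[0]           # naive approach: a single selected constraint
uniform = np.full(K, 1.0 / K)    # distributed weights with the same total mass
var_one_hot = weighted_sum_variance(one_hot)
var_uniform = weighted_sum_variance(uniform)
```

Analytically, the variance is Σ_k λ_k², which equals 1 for the one-hot vector and 1/K for uniform weights. The empirical values should therefore be close to 1 and 1/3 for K = 3.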

A.4 Proof of Theorem 3.2

In this section, we show that a sequence, , converges to the . First, we rewrite the operator for random variables as an operator for distributions and show that the operator is contractive. Then, we show that is the unique fixed point.

Before starting the proof, we introduce useful notions and distance metrics. As the return is a random variable, we define the distribution of as . Let be the distribution of a random variable . Then, we can express the distribution of an affine transformation of a random variable, , using the pushforward operator defined by Rowland et al. [2018], as . To measure the distance between two distributions, Bellemare et al. [2023] define the distance as follows:

| (32) |

where is the cumulative distribution function. This distance is -homogeneous, regular, and -convex (see Section 4 of Bellemare et al. [2023] for more details). For functions that map state-action pairs to distributions, a distance can be defined as [Bellemare et al., 2023]: . Then, we can rewrite the operator for random variables in (13) as an operator for distributions as below.

| (33) | ||||

where and is a normalization factor. Since a random variable and its distribution are equivalent representations, the operators in (13) and (33) are also equivalent. Hence, we prove Theorem 3.2 using (33) instead of (13). We first show that the operator has a contraction property.

Lemma A.5.

Under the distance and the assumption that the state, action, and reward spaces are finite, is -contractive.

Proof.

First, the operator can be rewritten using summation as follows.

| (34) | ||||

Since the weights of the distributions must sum to one, we can find the normalization factor . Then, the following inequality can be derived using the homogeneity, regularity, and convexity of :

| (35) | ||||

Therefore, . ∎
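The contraction argument relies on how the distance (32) reacts to the affine pushforward r + γz. The following is a minimal sketch, assuming (32) is the ℓ_p distance of Bellemare et al. [2023] computed from CDFs. Each distribution is represented by equally weighted atoms, and the helper names `ell_p` and `pushforward` are hypothetical. Scaling by γ stretches the support of the CDF difference by γ, so ℓ_p shrinks by γ^{1/p}, while a deterministic shift r leaves ℓ_p unchanged. The operator (33) mixes many such pushforwards, and the convexity used in (35) handles the mixture.

```python
import numpy as np


def ell_p(atoms_a, atoms_b, p=2.0):
    """l_p distance between two equally weighted atom distributions via their CDFs:
    (integral of |F_a(x) - F_b(x)|^p dx)^(1/p). For p = 1 it equals the 1-Wasserstein distance."""
    a, b = np.sort(atoms_a), np.sort(atoms_b)
    grid = np.sort(np.concatenate([a, b]))
    left = grid[:-1]  # both CDFs are constant on each interval [grid[i], grid[i+1])
    cdf_a = np.searchsorted(a, left, side="right") / len(a)
    cdf_b = np.searchsorted(b, left, side="right") / len(b)
    return float(np.sum(np.abs(cdf_a - cdf_b) ** p * np.diff(grid)) ** (1.0 / p))


def pushforward(atoms, reward, gamma):
    """Atoms of r + gamma * Z for a deterministic reward r."""
    return reward + gamma * np.asarray(atoms)


za = np.array([0.0, 1.0, 3.0])
zb = np.array([0.5, 2.0, 2.5])
gamma, p = 0.9, 2.0
before = ell_p(za, zb, p)
after = ell_p(pushforward(za, 1.0, gamma), pushforward(zb, 1.0, gamma), p)
# after / before equals gamma ** (1 / p), about 0.949 for gamma = 0.9 and p = 2
```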

By Banach's fixed-point theorem, the operator has a unique fixed distribution. We now show that this fixed distribution is .

Lemma A.6.

The fixed distribution of the operator is .

Proof.

From the definition of , the following equality holds [Rowland et al., 2018]: . Then, it can be shown that is the fixed distribution by applying the operator to :

| (36) | ||||

∎

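Theorem 3.2 (see Section 3 for the statement).

Proof. By Lemma A.5 and Banach's fixed-point theorem, the sequence converges to the unique fixed distribution of the operator, and by Lemma A.6, this fixed distribution is the target distribution stated in the theorem. ∎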

A.5 Pseudocode of TD() Target Distribution

We provide the pseudocode for calculating TD() target distribution for the reward critic in Algorithm 2. The target distribution for the cost critics can also be obtained by simply replacing the reward part with the cost.
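Algorithm 2 itself is not reproduced here, so the following is a simplified sketch rather than the authors' algorithm. It builds the on-policy λ-mixture of n-step target distributions, with weight (1 - λ)λ^{n-1} on the n-step target and the remaining mass λ^{T-1} on the longest one. It omits the importance ratios and normalization of the off-policy operator (13) and ignores episode termination. Critic distributions are assumed to be equally weighted quantile atoms. The mixture is converted back to `num_target_atoms` quantiles (cf. the number of target atoms in Table 3). All function and argument names are hypothetical.

```python
import numpy as np


def td_lambda_target_atoms(rewards, next_atoms, gamma, lam, num_target_atoms):
    """Quantiles of an on-policy TD(lambda) mixture of n-step target distributions.

    rewards:    (T,) rewards r_0, ..., r_{T-1} collected from (s_0, a_0).
    next_atoms: (T, M) equally weighted critic atoms of Z(s_n, a_n) for n = 1, ..., T.
    Returns num_target_atoms quantiles of the mixture (simplified; no importance ratios).
    """
    T, M = next_atoms.shape
    atoms, weights = [], []
    discounted_reward = 0.0
    for n in range(1, T + 1):
        discounted_reward += gamma ** (n - 1) * rewards[n - 1]
        # n-step target: sum_{t<n} gamma^t r_t + gamma^n Z(s_n, a_n)
        atoms.append(discounted_reward + gamma ** n * next_atoms[n - 1])
        # Mixture weight (1 - lam) lam^(n-1); the longest target absorbs the tail lam^(T-1)
        w = (1.0 - lam) * lam ** (n - 1) if n < T else lam ** (T - 1)
        weights.append(np.full(M, w / M))
    atoms = np.concatenate(atoms)
    weights = np.concatenate(weights)
    # Invert the weighted mixture CDF at the quantile midpoints (i + 0.5) / N
    order = np.argsort(atoms)
    atoms, cdf = atoms[order], np.cumsum(weights[order])
    taus = (np.arange(num_target_atoms) + 0.5) / num_target_atoms
    idx = np.searchsorted(cdf, taus, side="left")
    return atoms[np.minimum(idx, len(atoms) - 1)]
```

With λ = 0, the target reduces to the one-step distributional target. With λ = 1, it becomes the T-step target. Intermediate values trade off bias and variance, as measured in Appendix A.6.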

A.6 Quantitative Analysis on TD() Target Distribution

We experiment with a toy example to measure the bias and variance of the reward estimation according to . The toy example has two states, and ; the state distribution is uniform; the reward function is defined as and . We train parameterized reward distributions by minimizing the quantile regression loss with the TD() target distribution for , and . The experimental results are presented in the table below.

| λ | 5th iteration | 10th iteration | 15th iteration | 20th iteration | 25th iteration |
|---|---|---|---|---|---|
| | 4.813 (0.173) | 4.024 (0.253) | 3.498 (0.085) | 3.131 (0.103) | 2.835 (0.070) |
| | 4.621 (0.185) | 3.688 (0.273) | 2.925 (0.183) | 2.379 (0.134) | 2.057 (0.070) |
| | 4.141 (0.461) | 2.237 (0.402) | 1.389 (0.132) | 1.058 (0.031) | 0.923 (0.019) |
| | 2.886 (0.767) | 1.733 (0.365) | 1.509 (0.514) | 1.142 (0.325) | 1.109 (0.476) |

The values in the table are the mean and standard deviation of the last five values of the Wasserstein distance between the true reward return distribution and the estimated distribution. At the fifth iteration, the larger the value, the smaller the mean and the higher the standard deviation. At the 25th iteration, the run with has the lowest mean and standard deviation, indicating that training has converged. In contrast, the run with has the largest standard deviation, and its mean is greater than , indicating that the high variance hinders training. In conclusion, the toy example quantifies bias and variance, and the results align well with our claim that trades off bias and variance.
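The critics in this toy example are trained with the quantile regression loss. Below is a minimal NumPy sketch of the standard (non-Huber) quantile loss of Dabney et al. [2018b]. It assumes N critic atoms located at the quantile midpoints and a set of target samples, such as the atoms produced by a TD(λ) target. The function name is hypothetical, and a practical implementation would minimize this loss with automatic differentiation.

```python
import numpy as np


def quantile_regression_loss(pred_atoms, target_atoms):
    """Quantile (pinball) regression loss between N predicted atoms and M target samples.

    pred_atoms:   (N,) critic atoms, the i-th one estimating the quantile at tau_i = (i + 0.5) / N.
    target_atoms: (M,) samples from the target distribution (e.g., TD(lambda) target atoms).
    """
    n = len(pred_atoms)
    taus = (np.arange(n) + 0.5) / n
    u = target_atoms[None, :] - pred_atoms[:, None]            # (N, M) pairwise errors
    pinball = u * (taus[:, None] - (u < 0).astype(float))      # rho_tau(u) = u (tau - 1{u < 0})
    return float(pinball.mean(axis=1).sum())
```

The expected pinball loss ρ_τ is minimized at the τ-quantile. As a result, atoms placed at the quantile midpoints of the target achieve a lower loss than shifted atoms.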

A.7 Surrogate Functions

In this section, we introduce the surrogate functions for the objective and constraints. First, Kim and Oh [2022a] define a doubly discounted state distribution: . Then, the surrogates for the objective and constraints are defined as follows [Kim and Oh, 2022a]:

| (37) | ||||

where are behavioral, current, and next policies, respectively. According to Theorem 1 in [Kim and Oh, 2022a], the constraint surrogates are bounded by . We also show that the surrogate of the objective is bounded by in Appendix A.9. As a result, the gradients of the objective function and constraints become the same as the gradients of the surrogates, and the surrogates can substitute the objective and constraints within the trust region.

A.8 Policy Update Rule

To solve the constrained optimization problem (5), we find a policy update direction by linearly approximating the objective and safety constraints and quadratically approximating the trust region constraint, as done by Achiam et al. [2017]. After finding the direction, we update the policy using a line search method. Given the current policy parameter , the approximated problem can be expressed as follows:

| (38) |

where , , , and . Since (38) is convex, we can use an existing convex optimization solver. However, the search space, which is the policy parameter space , is excessively large, so we reduce the space by converting (38) to a dual problem as follows:

| (39) | ||||

where , , and and are Lagrange multipliers. Then, the optimal and can be obtained using a convex optimization solver. After obtaining the optimal values, , the policy update direction is calculated as . Then, the policy is updated by , where is a step size, which can be found through a backtracking method (please refer to Section 6.3.2 of Dennis and Schnabel [1996]).
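The backtracking step can be sketched as follows. The acceptance test (KL within the trust region, every constraint value below its threshold, objective not decreased), the shrink factor 0.8, and all names are illustrative assumptions, not the exact criteria used by SDAC. See Dennis and Schnabel [1996] for the general method.

```python
import numpy as np


def backtracking_line_search(theta, direction, objective, constraints, thresholds,
                             kl, max_kl, shrink=0.8, max_iters=20):
    """Shrink the step beta * direction until the candidate passes all acceptance checks.

    The acceptance test used here (KL within the trust region, every constraint below its
    threshold, objective not decreased) is an illustrative assumption.
    """
    f0 = objective(theta)
    beta = 1.0
    for _ in range(max_iters):
        candidate = theta + beta * direction
        within_region = kl(theta, candidate) <= max_kl
        feasible = all(con(candidate) <= d for con, d in zip(constraints, thresholds))
        if within_region and feasible and objective(candidate) >= f0:
            return candidate, beta
        beta *= shrink
    return theta, 0.0  # no acceptable step found: keep the current parameters
```

Starting from the full step (β = 1) and shrinking geometrically means the largest acceptable step along the dual-derived direction is taken. Only a few extra evaluations are needed when the quadratic approximation of the KL divergence is accurate.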

Before using the above policy update rule, we note that the existing trust region method with the risk-averse constraint [Kim and Oh, 2022a] and equations (1), (37), and (5) differ slightly. There are two differences: 1) the objective is augmented with an entropy bonus, and 2) the surrogates are expressed with Q-functions instead of value functions. To use the entropy-regularized objective in the trust region method, the objective must be shown to be bounded by the KL divergence; we present this bound in Appendix A.9. Next, using Q-functions poses no problem, because the original surrogates [Kim and Oh, 2022a] and the new ones expressed with Q-functions (37) are mathematically equivalent. However, we experimentally show in Appendix A.10 that using Q-functions has advantages in off-policy settings.

A.9 Bound of Entropy-Augmented Objective

In this section, we show that the entropy-regularized objective in (1) has a bound expressed by the KL divergence. Before showing the boundedness, we present a new function and a lemma. A value difference function is defined as follows:

where .

Lemma A.7.

The maximum of is equal to or less than , where .

Proof.

The value difference can be expressed in a vector form,

Using Hölder’s inequality, the following inequality holds:

Using Pinsker’s inequality, . ∎

Theorem A.8.

Let us assume that for . The difference between the objective and surrogate functions is bounded by a term consisting of KL divergence as:

| (40) |

where , , and the equality holds when .

Proof.

The surrogate function can be expressed in vector form as follows:

where . The objective function of can also be expressed in a vector form using Lemma 1 from Achiam et al. [2017],

By Lemma 3 from Achiam et al. [2017], . Then, the following inequality is satisfied:

If , the KL divergence term becomes zero, so equality holds. ∎

A.10 Comparison of Q-Function and Value Function-Based Surrogates

The original surrogate is defined as follows:

| (41) |

where , and the surrogate is the same as that of OffTRPO [Meng et al., 2022] and OffTRC [Kim and Oh, 2022a]. An entropy-regularized version can be derived as:

| (42) |

Then, the surrogate expressed by Q-functions in (37), called SAC-style version, can be rewritten as:

| (43) |

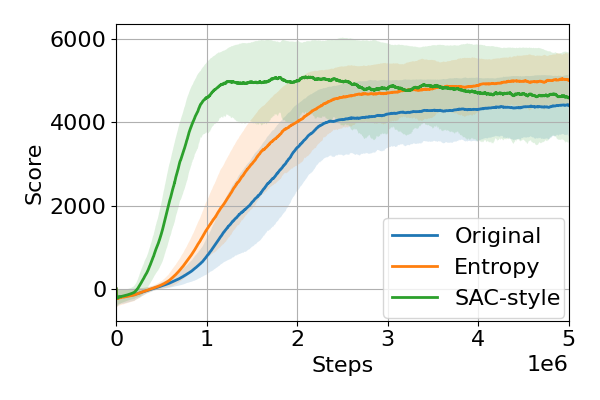

In this section, we evaluate the original, entropy-regularized, and SAC-style versions on continuous control tasks in the MuJoCo simulator [Todorov et al., 2012]. We use neural networks with two hidden layers of (512, 512) nodes and ReLU activation functions. The output layer of the value network is linear, and its input differs across versions: the original and entropy-regularized versions use states, whereas the SAC-style version uses state-action pairs. The input of the policy network is the state, its outputs are the mean and std , and actions are squashed into , as in SAC [Haarnoja et al., 2018]. The entropy coefficients in the entropy-regularized and SAC-style versions are adaptively adjusted to keep the entropy above a threshold (set as given ). The hyperparameters for all versions are summarized in Table 2.

| Parameter | Value |
|---|---|
| Discount factor | 0.99 |
| Trust region size | 0.001 |
| Length of replay buffer | |
| Critic learning rate | |
| Trace-decay | |
| Initial entropy coefficient | 1.0 |
| Entropy coefficient learning rate | 0.01 |

The training curves are presented in Figure 5. All methods are trained with five different random seeds. Although the entropy-regularized version (42) and the SAC-style version (43) are mathematically equivalent, the SAC-style version performs better than the entropy-regularized version. We infer that this is due to the variance of importance sampling. In the off-policy setting, the sampling probabilities of the behavioral and current policies can differ significantly, so the variance of the importance ratio can be very large. This increased variance prevents accurate estimation of the objective and can significantly degrade performance. Therefore, using Q-function-based surrogates is advantageous for efficient learning.
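A toy calculation illustrates how quickly importance-ratio variance grows with policy mismatch. Assume one-dimensional Gaussian behavioral and current policies with equal standard deviation σ and means 0 and δ, a simplification of the tanh-squashed policies used here. Under the behavioral policy, the ratio π/β then has variance exp(δ²/σ²) - 1. The sketch checks this closed form by Monte Carlo; the function names are hypothetical.

```python
import numpy as np


def ratio_variance_closed_form(delta, sigma=1.0):
    """Var_b[pi/b] for pi = N(delta, sigma^2) and b = N(0, sigma^2): exp(delta^2 / sigma^2) - 1."""
    return np.exp(delta ** 2 / sigma ** 2) - 1.0


def ratio_variance_monte_carlo(delta, sigma=1.0, num_samples=1_000_000, seed=0):
    """Estimate Var_b[pi/b] from actions sampled by the behavioral policy b."""
    rng = np.random.default_rng(seed)
    x = rng.normal(0.0, sigma, size=num_samples)                  # actions from b
    log_ratio = (x ** 2 - (x - delta) ** 2) / (2.0 * sigma ** 2)  # log pi(x) - log b(x)
    return float(np.var(np.exp(log_ratio)))
```

The variance grows exponentially in the squared mean shift: about 0.28 for δ/σ = 0.5, but about 54 for δ/σ = 2. This is why surrogates that avoid action-level ratios, such as the Q-function-based ones, can be more stable off-policy.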

Appendix B Experimental Settings

Safety Gym. We use the goal and button tasks with the point and car robots in the Safety Gym environment [Ray et al., 2019], as shown in Figure 6(a) and 6(b). The environmental setting for the goal task is the same as in Kim and Oh [2022b]. Eight hazard regions and one goal are randomly spawned at the beginning of each episode, and a robot gets a reward and cost as follows:

| (44) | ||||

where is the distance to the goal, and is the minimum distance to hazard regions. If is less than or equal to , the goal is respawned. The state consists of the relative goal position, goal distance, linear and angular velocities, acceleration, and LiDAR values. The action space is two-dimensional and consists of -directional forces for the point robot and wheel velocities for the car robot.

The environmental settings for the button task are the same as in Liu et al. [2022]. There are five hazard regions, four dynamic obstacles, and four buttons, and all components are fixed throughout the training. The initial position of a robot and an activated button are randomly placed at the beginning of each episode. The reward function is the same as in (44), but the cost is different since there is no dense signal for contacts. We define the cost function for the button task as an indicator function that outputs one if the robot makes contact with an obstacle or an inactive button or enters a hazardous region. We add LiDAR values of buttons and obstacles to the state of the goal task, and actions are the same as the goal task. The length of the episode is 1000 steps without early termination.

Locomotion Tasks. We use three different legged robots, Mini-Cheetah, Laikago, and Cassie, for the locomotion tasks, as shown in Figure 6(e), 6(d), and 6(c). The tasks aim to control the robots to follow a velocity command on flat terrain. A velocity command is given by , where for Cassie and otherwise, , and . To lower the task complexity, we set the -directional linear velocity to zero, although it can be set to any non-zero value. As in other locomotion studies [Lee et al., 2020, Miki et al., 2022], central phases are introduced to produce periodic motion; they are defined as for , where is a frequency coefficient set to , and is an initial phase. The actuators of the robots are controlled by PD controllers that track the target positions given by the actions. The state consists of the velocity command, the orientation of the robot frame, the linear and angular velocities of the robot, the positions and speeds of the actuators, the central phases, the history of actuator positions and speeds (past two steps), and the history of actions (past two steps). A foot contact timing can be defined as follows:

| (45) |

where a value of -1 means that the th foot is on the ground; otherwise, the foot is in the air. For the quadrupedal robots, Mini-Cheetah and Laikago, we set the initial phases to , which generates trot gaits. For the bipedal robot, Cassie, the initial phases are set to , which generates walking gaits. Then, the reward and cost functions are defined as follows:

| (46) | ||||

where the power consumption , the sum of the torque times the actuator speed, is added to the reward as a regularization term, is the -directional linear velocity of the base frame of the robot, is the -directional angular velocity of the base frame, and is the current feet contact vector. For balancing, the first cost indicates whether the angle between the -axis vectors of the robot base and the world is greater than a threshold ( for all robots). For standing, the second cost indicates whether the height of the CoM is less than a threshold ( for Mini-Cheetah, Laikago, and Cassie, respectively), and the last cost checks whether the current feet contact vector matches the predefined timing . Each episode lasts 500 steps. There is no early termination, but if a robot falls to the ground, the state is frozen until the end of the episode.

Hyperparameter Settings. For all baselines and the proposed method, the neural networks consist of two hidden layers with nodes and ReLU activation. The input of the value networks is state-action pairs, and the output is the positions of atoms. The input of the policy networks is the state, the outputs are the mean and std , and actions are squashed into , . We use a fixed entropy coefficient . The trust region size is set to for all trust region-based methods. The overall hyperparameters for the proposed method are summarized in Table 3.
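Squashing actions into a bounded range changes the action density that enters the entropy term. The following is a minimal sketch of the tanh-squashed Gaussian used by SAC [Haarnoja et al., 2018]. It assumes a diagonal Gaussian and the bound [-1, 1]; any other bound would add an affine rescaling. Function names are hypothetical. The log-density applies the change-of-variables correction log(1 - a²) per action dimension.

```python
import numpy as np


def squashed_log_prob(action, mean, std):
    """log p(a) for a = tanh(u), u ~ N(mean, diag(std^2)); sums over the last (action) axis."""
    u = np.arctanh(action)
    gauss = -0.5 * ((u - mean) / std) ** 2 - np.log(std) - 0.5 * np.log(2.0 * np.pi)
    # Change of variables: da/du = 1 - tanh(u)^2 = 1 - a^2
    return np.sum(gauss - np.log1p(-action ** 2), axis=-1)


def sample_squashed_action(mean, std, rng):
    """Sample a = tanh(u) with u ~ N(mean, std^2); return the action and its log-density."""
    u = mean + std * rng.standard_normal(np.shape(mean))
    action = np.tanh(u)
    return action, squashed_log_prob(action, mean, std)
```

Recovering u with arctanh keeps the sketch short. In practice, the pre-squash sample u is usually kept to avoid numerical issues when |a| is close to 1.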

| Parameter | Safety Gym | Locomotion |
|---|---|---|
| Discount factor | 0.99 | 0.99 |
| Trust region size | 0.001 | 0.001 |
| Length of replay buffer | | |
| Critic learning rate | | |
| Trace-decay | | |
| Entropy coefficient | 0.0 | 0.001 |
| Number of critic atoms | 25 | 25 |
| Number of target atoms | 50 | 50 |
| Constraint risk level | 0.25, 0.5, and 1.0 | 1.0 |
| Threshold | | |
| Slack coefficient | - | |

Since the range of the cost is , the maximum discounted cost sum is . Thus, the threshold is set to the target cost rate multiplied by . For the locomotion tasks, the third cost in (46) is designed for foot stamping, which is not essential to safety. Hence, we set its threshold close to the maximum (if a robot does not stamp, the cost rate becomes 0.5). In addition, baseline safe RL methods use multiple critic networks for the cost function, such as target value networks [Yang et al., 2021] or square value networks [Kim and Oh, 2022a]. To match the number of network parameters, we use two critics as an ensemble, as in Kuznetsov et al. [2020].
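The threshold rule can be made concrete with a short Python sketch. It assumes that each per-step cost lies in [0, 1], as the indicator costs of the locomotion tasks do. Under that assumption, the discounted cost sum is bounded by a geometric series. The function names and the target cost rate of 0.025 are hypothetical.

```python
def max_discounted_cost_sum(gamma, horizon=None):
    """Largest discounted cost sum when every per-step cost equals 1.

    Infinite horizon: sum_t gamma^t = 1 / (1 - gamma).
    Finite horizon H: (1 - gamma^H) / (1 - gamma).
    """
    if horizon is None:
        return 1.0 / (1.0 - gamma)
    return (1.0 - gamma ** horizon) / (1.0 - gamma)

def cost_threshold(target_cost_rate, gamma, horizon=None):
    """Threshold = target cost rate x maximum discounted cost sum."""
    return target_cost_rate * max_discounted_cost_sum(gamma, horizon)

print(cost_threshold(0.025, 0.99))            # approximately 2.5
print(max_discounted_cost_sum(0.99, 500))     # approximately 99.3
```

With γ = 0.99, the infinite-horizon bound is 100. For a finite 500-step episode, the attainable maximum is (1 - γ^500)/(1 - γ), which is approximately 99.3, so the two expressions differ only slightly at this discount factor.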

Tips for Hyperparameter Tuning.

- Discount factor , critic learning rate: Since these are commonly used hyperparameters, we do not discuss them here.
- Trace-decay , trust region size : The ablation studies on these hyperparameters are presented in Appendix C.3. Based on the results, we recommend setting the trace-decay to , as in other TD()-based methods [Precup et al., 2000]. The results also show that the performance is not sensitive to the trust region size. However, if the trust region size is too large, the approximation error increases, so it is better to set it below .
- Entropy coefficient : This value is fixed in our experiments, but it can be adjusted automatically, as in SAC [Haarnoja et al., 2018].
- Number of atoms : Although we did not perform experiments on the number of atoms, performance is expected to improve as the number of atoms increases, as in other distributional RL methods [Dabney et al., 2018a].
- Length of replay buffer: The effect of the replay buffer length can be seen in the experimental results of an off-policy safe RL method [Kim and Oh, 2022a]. According to those results, the length does not affect performance unless it is too short. We recommend setting it to 10 to 100 times the collected trajectory length.
- Constraint risk level , threshold : If the cost sum follows a Gaussian distribution, the mean-std constraint is identical to the CVaR constraint. Then, the probability of the worst case can be controlled by adjusting . For example, if we set and , the mean-std constraint requires the probability that the average cost during an episode is less than 0.03 to be greater than . Based on this interpretation, appropriate values of and can be found.
- Slack coefficient : As mentioned at the end of Section 3.1, it is recommended to set this coefficient as large as possible. Since should be positive, we recommend setting to .

In conclusion, most hyperparameters are not sensitive, so only a few need to be tuned. In particular, and should be set based on the interpretation described above. Additionally, if the approximation error of the critics is significant, the trust region size should be set smaller.
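The Gaussian interpretation of the constraint risk level can be checked numerically with the standard-library sketch below. It assumes that the mean-std constraint takes the form μ + k(α)σ ≤ d with k(α) = φ(Φ⁻¹(α))/α. For a Gaussian cost sum, this quantity equals the CVaR of the worst α-fraction of outcomes. This form is an assumption made for illustration, and the sketch compares it against a Monte Carlo estimate. The values μ = 1.0, σ = 0.5, and α = 0.25 are hypothetical.

```python
import random
from statistics import NormalDist, fmean

def mean_std_coefficient(alpha):
    """k(alpha) = pdf(Phi^{-1}(alpha)) / alpha for a standard normal.

    For X ~ N(mu, sigma^2), the CVaR of the worst alpha-fraction is mu + k(alpha) * sigma.
    """
    if alpha >= 1.0:
        return 0.0  # alpha = 1 reduces the constraint to the expectation (risk-neutral)
    std_normal = NormalDist()
    return std_normal.pdf(std_normal.inv_cdf(alpha)) / alpha

def gaussian_cvar(mu, sigma, alpha):
    """Closed-form CVaR of the worst alpha-fraction of N(mu, sigma^2)."""
    return mu + mean_std_coefficient(alpha) * sigma

def empirical_cvar(samples, alpha):
    """Average of the largest alpha-fraction of the samples (upper tail = worst costs)."""
    n_tail = max(1, int(alpha * len(samples)))
    return fmean(sorted(samples, reverse=True)[:n_tail])

rng = random.Random(0)
mu, sigma, alpha = 1.0, 0.5, 0.25  # hypothetical cost-sum statistics and risk level
samples = [rng.gauss(mu, sigma) for _ in range(100_000)]
d = gaussian_cvar(mu, sigma, alpha)  # smallest threshold for which the constraint holds
print(d, empirical_cvar(samples, alpha))  # the two estimates should nearly agree
print(NormalDist(mu, sigma).cdf(d) >= 1.0 - alpha)  # True: P(cost sum <= d) >= 1 - alpha
```

CVaR upper-bounds the value at risk. Consequently, a Gaussian cost sum that satisfies the constraint with threshold d stays below d with probability at least 1 - α. This is the probabilistic reading that can guide the choice of α and d.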

Appendix C Experimental Results

C.1 Safety Gym

In this section, for better readability, we present the training curves of the Safety Gym tasks separately according to the risk level of the constraints. Figure 7 shows the training results of the risk-neutral constrained algorithms and the risk-averse constrained algorithms with . Figures 8 and 9 show the training results of the risk-averse constrained algorithms with and , respectively.

C.2 Ablation Study on Components of SDAC

There are three main differences between SDAC and the existing trust region-based safe RL algorithm for mean-std constraints [Kim and Oh, 2022a], called OffTRC: 1) the feasibility handling method in multi-constraint settings, 2) the use of distributional critics, and 3) the use of Q-functions instead of advantage functions, as explained in Appendices A.8 and A.10. Since the ablation study for feasibility handling is conducted in Section 5.3, we perform ablation studies on the distributional critic and the Q-function in this section. We refer to SDAC with only distributional critics as SDAC-Dist and SDAC with only Q-functions as SDAC-Q. If all components are absent, SDAC is identical to OffTRC [Kim and Oh, 2022a]. The variants are trained on the point goal task of the Safety Gym, and the training results are shown in Figure 10. SDAC-Q lowers the cost rate quickly but shows the lowest score. SDAC-Dist achieves scores similar to those of SDAC, but its cost rate converges above the threshold . In conclusion, SDAC satisfies the safety constraints efficiently through the use of Q-functions and improves score performance through the distributional critics.

C.3 Ablation Study on Hyperparameters

To examine the effects of the hyperparameters, we conduct ablation studies on the trust region size and the entropy coefficient . The results for the entropy coefficient, presented in Figure 11(a), show that the score decreases significantly when is . This indicates that policies with high entropy fail to improve the score because they focus on satisfying the constraints. Thus, the entropy coefficient should be adjusted cautiously, or it may be better to set it to zero. The results for the trust region size, shown in Figure 11(b), do not change significantly with the trust region size. However, the score converges most slowly for , because, according to Theorem A.8, the estimation error of the surrogate increases with the trust region size.

Appendix D Comparison with RL Algorithms

In this section, we compare the proposed safe RL algorithm with traditional RL algorithms on the locomotion tasks and show that safe RL has the advantage of not requiring reward tuning. We use the truncated quantile critic (TQC) [Kuznetsov et al., 2020], a state-of-the-art algorithm on existing RL benchmarks [Todorov et al., 2012], as the traditional RL baseline. To apply the same experiments to traditional RL, a reward that reflects safety must be designed. We construct the reward through a weighted sum as , where and are used to train safe RL methods and are defined in Appendix B, and is called the true reward. The weights of the reward function are searched with a Bayesian optimization tool (we use Sweeps from Weights & Biases [Biewald, 2020]) to maximize the true reward of TQC in the Mini-Cheetah task. Among the 63 weight settings searched through Bayesian optimization, the top five are listed in Table 4.

| Reward weights | | |
|---|---|---|
| 1.588 | 0.299 | 0.174 |
| 1.340 | 0.284 | 0.148 |
| 1.841 | 0.545 | 0.951 |
| 6.560 | 0.187 | 4.920 |
| 1.603 | 0.448 | 0.564 |

Figure 12 shows the training curves of the Mini-Cheetah task experiments, where TQC is trained using the weight settings listed in Table 4. The graph shows that it is difficult for TQC to lower the second cost below the threshold, while all costs of SDAC are below the threshold. In particular, TQC with the fifth weight setting shows the lowest second cost rate, but its true reward sum is the lowest. This shows that it is challenging to obtain good task performance while satisfying the constraints through reward tuning.

Appendix E Computational Cost Analysis

E.1 Complexity of Gradient Integration Method

In this section, we analyze the computational cost of the gradient integration method, which consists of three subparts. First, it is required to calculate policy gradients of each cost surrogate, , and for , where is the Hessian matrix of the KL divergence. can be computed using the conjugate gradient method, which requires only a constant number of back-propagations on the cost surrogate, so the computational cost can be expressed as .
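The conjugate gradient step can be sketched in plain Python. The routine needs only a Hessian-vector product, so the Hessian is never formed explicitly. In a deep learning framework, this product would typically come from differentiating the gradient of the KL divergence a second time. Here, an explicit 2x2 symmetric positive-definite matrix stands in for the KL Hessian (an assumption for illustration). Each iteration costs one Hessian-vector product plus O(P) vector operations for P parameters.

```python
def dot(u, v):
    """Inner product of two equal-length vectors: O(P)."""
    return sum(a * b for a, b in zip(u, v))

def conjugate_gradient(hvp, b, max_iters=10, tol=1e-10):
    """Approximately solve H x = b for symmetric positive-definite H, given hvp(v) = H v."""
    x = [0.0] * len(b)
    r = list(b)  # residual b - H x, with x = 0
    p = list(r)  # search direction
    rs_old = dot(r, r)
    for _ in range(max_iters):
        if rs_old < tol:
            break
        hp = hvp(p)  # the only access to H: one Hessian-vector product per iteration
        step = rs_old / dot(p, hp)
        x = [xi + step * pi for xi, pi in zip(x, p)]
        r = [ri - step * hpi for ri, hpi in zip(r, hp)]
        rs_new = dot(r, r)
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x

# Stand-in for the KL Hessian (assumption): a small SPD matrix.
H = [[4.0, 1.0], [1.0, 3.0]]

def hvp(v):
    return [dot(row, v) for row in H]

x = conjugate_gradient(hvp, [1.0, 2.0])
print(x)  # close to [1/11, 7/11]
```

With K cost surrogates, the routine is run once per gradient, giving the K vectors H⁻¹gₖ used in the next step.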

Second, the quadratic problem in Section 3.1 is transformed into a dual problem, where the transformation requires inner products between and for . The computational cost can be expressed as .

Finally, the transformed quadratic problem is solved in the dual space using a quadratic programming solver. Since is usually much smaller than the number of policy parameters, this computational cost is almost negligible compared to the others. The cost of the gradient integration is therefore . Since the costs of back-propagation and inner products are proportional to the number of policy parameters , the computational cost can be simplified as .
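The cost breakdown can be illustrated on a generic problem. The sketch uses the quadratic program minimize ½xᵀHx subject to gₖᵀx + cₖ ≤ 0 for k = 1, ..., K. This is an illustrative stand-in, not the exact problem of Section 3.1, which is not reproduced here.

Setting the gradient of the Lagrangian to zero gives x = -Σₖ λₖH⁻¹gₖ. The dual then depends on the data only through the K×K matrix of inner products Mᵢⱼ = gᵢᵀH⁻¹gⱼ, which costs O(K²P) to build. The K-dimensional dual is then solved cheaply. In the sketch, it is solved by projected gradient ascent (a hypothetical choice of solver). The sketch assumes that the constraints are jointly feasible.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def solve_constrained_step(grads, hinv_grads, offsets, iters=2000):
    """Generic sketch: minimize 0.5 x^T H x  s.t.  g_k^T x + c_k <= 0 for k = 1..K.

    grads[k] = g_k, hinv_grads[k] = H^{-1} g_k (e.g. from conjugate gradient), offsets[k] = c_k.
    Dual: maximize -0.5 lam^T M lam + c^T lam over lam >= 0, with M[i][j] = g_i^T H^{-1} g_j.
    """
    K = len(grads)
    # K x K Gram matrix of inner products between P-dimensional vectors: O(K^2 P).
    M = [[dot(grads[i], hinv_grads[j]) for j in range(K)] for i in range(K)]
    # Gershgorin bound on the largest eigenvalue of M gives a safe ascent step size.
    bound = max(sum(abs(m) for m in row) for row in M)
    eta = 1.0 / bound if bound > 0.0 else 0.0
    lam = [0.0] * K
    for _ in range(iters):  # projected gradient ascent on the K-dimensional dual
        grad = [offsets[i] - dot(M[i], lam) for i in range(K)]
        lam = [max(0.0, l + eta * g) for l, g in zip(lam, grad)]
    # Recover the primal step: x = -sum_k lam_k H^{-1} g_k, an O(K P) operation.
    P = len(grads[0])
    x = [-sum(lam[k] * hinv_grads[k][p] for k in range(K)) for p in range(P)]
    return x, lam

# Example with H = identity (so H^{-1} g = g): constraints x0 <= -1 and x0 + x1 <= -1.
x, lam = solve_constrained_step(
    grads=[[1.0, 0.0], [1.0, 1.0]],
    hinv_grads=[[1.0, 0.0], [1.0, 1.0]],
    offsets=[1.0, 1.0],
)
print(x, lam)  # x close to [-1.0, 0.0]
```

Only the K vectors H⁻¹gₖ and the K² inner products touch P-dimensional data. The dual iterations operate on K-dimensional quantities, which is why the solver cost is small when K is much smaller than P.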

E.2 Quantitative Analysis

| Task | SDAC (proposed) | OffTRC | WCSAC | CPO | CVPO |
|---|---|---|---|---|---|
| Point goal (Safety Gym) | 7.96 | 4.86 | 19.07 | 2.61 | 47.43 |
| Mini-Cheetah (Locomotion) | 8.36 | 6.54 | 16.41 | 1.99 | - |

We analyze the computational cost of the proposed method quantitatively. To do this, we measure the training time of the proposed method, SDAC, and the safe RL baselines on a workstation with an Intel Xeon E5-2650 v3 CPU and an NVIDIA GeForce GTX TITAN X GPU. The results are presented in Table 5. While CPO is the fastest algorithm, its performance, such as the sum of rewards, is relatively poor compared to the other algorithms. The main reason CPO shows the fastest computation time is that it is an on-policy algorithm. Hence, it requires neither insertion into (and deletion from) a replay memory nor batch sampling. SDAC shows the third-fastest computation time among all algorithms and the second-fastest among the off-policy algorithms. In particular, SDAC is only slightly slower than OffTRC, the fastest off-policy algorithm. This shows a benefit of SDAC: it outperforms OffTRC in terms of returns and CV, while its training time is not significantly longer. WCSAC, which is based on SAC, trains more slowly because it updates the networks more frequently than the other algorithms. CVPO, an EM-based safe RL algorithm, has the slowest training time. In the E-step of CVPO, a non-parametric policy is optimized to solve a local subproblem. This process requires discretizing the action space and solving a non-linear convex optimization problem for all batch states. Because of this, CVPO takes the longest to train an RL agent.