Tree-depth and the Formula Complexity of Subgraph Isomorphism

Abstract

For a fixed “pattern” graph , the colored -subgraph isomorphism problem (denoted ) asks, given an -vertex graph and a coloring , whether contains a properly colored copy of . The complexity of this problem is tied to parameterized versions of P NP and L NL, among other questions. An overarching goal is to understand the complexity of , under different computational models, in terms of natural invariants of the pattern graph .

In this paper, we establish a close relationship between the formula complexity of and an invariant known as tree-depth (denoted ). is known to be solvable by monotone formulas of size . Our main result is an lower bound for formulas that are monotone or have sub-logarithmic depth. This complements a lower bound of Li, Razborov and Rossman [8] relating tree-width and circuit size. As a corollary, it implies a stronger homomorphism preservation theorem for first-order logic on finite structures [14].

The technical core of this result is an lower bound in the special case where is a complete binary tree of height , which we establish using the pathset framework introduced in [15]. (The lower bound for general patterns follows via a recent excluded-minor characterization of tree-depth [4, 6].) Additional results of this paper extend the pathset framework and improve upon both, the best known upper and lower bounds on the average-case formula size of when is a path.

1 Introduction

Let be a fixed “pattern” graph. In the colored -subgraph isomorphism problem, denoted , we are given an -vertex “host” graph and a vertex-coloring as input and required to determine whether or not contains a properly colored copy of (i.e., a subgraph such that the restriction of to constitutes an isomorphism from to ). This general problem includes, as special cases, several important problems in parameterized complexity. In particular, is equivalent (up to reductions) to the -clique and distance- connectivity problems when is a clique or path of order .

For any fixed pattern graph , the problem is solvable by brute-force search in polynomial time . Understanding the fine-grained complexity of — in this context, we mean the exponent of in the complexity of under various computational models — for general patterns is an important challenge that is tied to major open questions including , , , and their parameterized versions (, etc.) An overarching goal is to bound the fine-grained complexity of in terms of natural invariants of the graph .

Two key invariants arising in this connection are tree-width () and tree-depth (). The tree-depth of is the minimum height of a rooted forest whose ancestor-descendant closure contains as a subgraph. This invariant has a number of equivalent characterizations and plays a major role in structural graph theory and parameterized complexity [10]. Tree-width is even more widely studied in graph theory and parameterized complexity [5]. It is defined in terms of a different notion of tree decomposition and provides a lower bound on tree-depth ().

These two invariants provide well-known upper bounds on the circuit size and formula size of . To state this precisely, we regard as a sequence of boolean functions where the input encodes a host graph with vertex set under the vertex-coloring that maps to . (Restricting attention to this class of inputs is without loss of generality.) Throughout this paper, we consider circuits and formulas in the unbounded fan-in basis ; we measure size of both circuits and formulas by the number of gates. A circuit or formula is monotone if it contains no gates. We use as an adjective that means “depth ” in reference to upper bounds and “depth ” in reference to lower bounds on formula size.111Here and elsewhere, asymptotic notation hides constants that may depend on . In other contexts, e.g. , hidden constants are absolute.

Theorem 1.1 (Folklore upper bounds).

For all pattern graphs , is solvable by monotone circuits (respectively, formulas) of size (respectively, ).

It is conjectured that requires circuit size for all graphs ; if true this would imply and in a very strong way. As evidence for this conjecture, Marx [9] proved a conditional lower bound assuming the Exponential Time Hypothesis. Providing further evidence, Li, Razborov and Rossman [8] established an unconditional lower bound for circuits, via a technique that extends to (unbounded depth) monotone circuits. This result is best stated in terms of a certain graph invariant introduced in [8]:

Theorem 1.2 (Lower bound on the restricted circuit size of [8]).

For all pattern graphs , the circuit size of — in both the and monotone settings — is at least where is a graph invariant satisfying .222It is actually shown that is at most the branch-width of , an invariant related to tree-width by . The relationship between and was further investigated by Rosenthal [11], who identified the separating example for hypercubes .

Shifting our focus from circuits to formulas, it is natural to conjecture that requires formula size . This statement generalizes the prominent conjecture that distance- connectivity requires formula size , which as a consequence implies . (There is also an average-case version of this conjecture which implies , as we explain shortly.)

In this paper, we carry out the final step in the proof of an analogous result to Theorem 1.2 that lower bounds the restricted formula size of in terms of an invariant that is polynomially related to tree-depth:

Theorem 1.3 (Lower bound on the restricted formula size of ).

For all patterns graphs , the formula size of — in both the and monotone settings — is at least where is a graph invariant satisfying .

The invariant was introduced in [16], where it was also shown that is a lower bound on the formula size of in the and monotone settings. The results of [16] generalized lower bounds for from papers [13, 15], which showed that (where is the path graph of length ). As we will explain shortly, this lower bound for implies that for all graphs . The contribution of the present paper lies in improving this logarithmic lower bound to a polynomial one by showing .

Remark 1.4.

It is helpful to keep in mind the related inequalities:

It is further known that [10]. A nearly maximal separation between invariants and is witness by bounded-degree trees , which have tree-width but tree-depth . This class includes paths and complete binary trees, the two families of pattern graphs studied in this paper.

For trees , we point out that is computable by monotone circuits of size for a constant depending on . (This follows from Theorem 1.1, since all trees have tree-width .) Although formulas are a weaker model than circuits, establishing formula lower bounds for of the form , as we do in this paper, is a subtle task which requires techniques that distinguish formulas from circuits. Accordingly, Theorem 1.3 involves greater machinery than Theorem 1.2. The invariant is also significantly harder to define and analyze compared to .

1.1 Minor-monotonicity

Recall that a graph is a minor of if can be obtained from by a sequence of edge deletions and contractions (i.e., remove an edge and identify its two endpoint). A graph invariant is said to be minor-monotone if whenever is a minor of . As observed in [8], the complexity of (under any reasonable class of circuits) is minor-monotone in the following sense:

Lemma 1.5.

If is a minor of , then there is a reduction from to via a monotone projection.333That is, for every , there is a reduction from to , viewed as boolean functions and , via a monotone projection that maps each variable of to a variable of or a constant or .

In the quest to characterize the complexity of in terms of invariants of , it makes sense to focus on minor-monotone ones. Indeed, invariants , , , are all minor-monotone. This feature is useful in bounding the complexity of . For example, we can combine the result of [2] that every graph with tree-width at least contains a -grid minor, with the lower bound from [1], in order to conclude that for all graphs . (Notation and suppresses poly-logarithmic factors.) The stronger bound of Theorem 1.2 is obtained by a more nuanced analysis of the invariant .

In a similar manner, we can combine the fact that every graph contains a path of length [10], with the lower bound [15], in order to conclude that for all graphs . With the goal of improving this lower bound to (that is, for some constant ), Kawarabayashi and Rossman [6] established a polynomial excluded-minor characterization of tree-depth, which was subsequently sharpened by Czerwiński, Nadara and Pilipczuk [4].

Theorem 1.6 (Excluded-minor characterization of tree-depth [6, 4]).

Every graph with tree-depth satisfies at least one of the following:

-

(i)

has tree-width ,

-

(ii)

contains a path of length ,

-

(iii)

contains a -minor, where is the complete binary tree of height .

Theorem 1.6 reduces the task of proving to the task of proving . It is this final step that we tackle in this paper.444Theorem 1.7 delivers on a promise in papers [6, 14, 16], which cite as an unpublished result of upcoming work. Let us mention that, after finding many devils in the details of an earlier sketch of an bound by the second author, we worked out an entirely different approach in this paper, which moreover gives a linear lower bound (which is tight up to a constant since ).

Theorem 1.7 (Main result of this paper).

.

This lower bound is proved in Section 3 using a certain potential function (described in Section 2), which further reduces our task to a combinatorial problem concerning join-trees over , that is, rooted binary trees whose leaves are labeled by edges of . This is the same combinatorial framework as the lower bound of [15]; however, the task of analyzing join-trees over turned out to be significantly harder compared with .

1.2 Corollary in finite model theory

Corollary 1.8 (Polynomial-rank homomorphism preservation theorem over finite structures).

Every first-order sentence of quantifier-rank that is preserved under homomorphisms of finite structures is logically equivalent on finite structures to an existential-positive first-order sentence of quantifier-rank .

1.3 Improved bounds for average-case

Additional results of this paper improve both the average-case upper and lower bounds for [13]. Here average-case refers to the -biased product distribution on where . This input distribution corresponds to a random graph , comprised of layers of vertices, where every pair of vertices in adjacent layers is connected by an edge independently with probability . For this choice of , the probability that contains a path of length containing one vertex from each layer is bounded away from and .

Theorem 1.9 ([15]).

is solvable on with probability by monotone formulas of size . On the other hand, formulas solving on with probability require size where ().

A similar average-case lower bound for (unbounded depth) monotone formulas was subsequently shown in [13]. Precisely speaking, that paper gives an lower bound under , as well as an lower bound under the distribution that, half of the time, is and, the other half, is a uniform random path of length with no additional edges.

1.3.1 Upper bound

The average-case upper bound of Theorem 1.9 can be recast, in stronger terms, as a worst-case randomized upper bound for the problem of multiplying -permutation matrices . This problem is solvable by deterministic (non-randomized) formulas of size via the classic “recursive doubling” procedure: recursively compute matrix products and and then obtain by a single matrix multiplication.

Randomization lets us achieve quadratically smaller formula size . The idea is as follows. Generate independent random sets , each of size . Rather than compute all entries of the permutation matrix using subformulas, we will encode the information in more efficiently using subformulas (note that bits are required to encode a permutation matrix). For each , we recursively construct

-

•

one subformula that indicates555When describing the behavior of randomized formulas in this subsection (using verbs like “indicate”, “output”, etc.), we leave implicit that the description holds correctly with high probability for any input. whether or not there exists a unique pair such that , and

-

•

additional subformulas that give the binary representation of and whenever such uniquely exists.

Similarly, with respect to the permutation matrix , for each , we have recursively constructed subformulas that indicate whether there exists a unique pair such that , and if so, give the binary representation of and . Using these subformulas for subproblems and , we construct the corresponding formulas for which, for each , indicate whether there exists a unique pair such that , and if so, give the binary representation of and . These formulas check, for each , whether the - and -subformulas of the - and -subproblems output and , respectively such that . These formulas are therefore larger than the subformulas for subproblems and by a factor . This implies an upper bound on size and on depth of the resulting randomized formulas.

A similar construction solves in the average-case. This yields an upper bound on on the parameter , which we initially guessed might be optimal. However, in the course of trying prove a matching lower bound, we were surprised to discover a better upper bound!

Theorem 1.10.

There exist randomized formulas of size , where is the golden ratio, which compute the product of permutation matrices.

The algorithm generalizes the randomized “recursively doubling” method outlined above. Here we give a brief sketch (full details are given in Section 7). Let where (i.e., the Fibonacci number, which satisfies ). We will represent information about the product by constructing formulas that enumerate all triples such that

| (1) |

This is accomplished by generating independent random sets , each of size , and recording the unique triples for which (1) holds.

The recursive construction breaks into a “left” subproblem on and a “right” subproblem on .666The “right” subproblem on can also be viewed as a “left” subproblem on where is the transpose of . (In contrast to the “recursive doubling” method, here the “left” and “right” subproblems involve overlapping subsequences of permutation matrices.) In the “left” subproblem: for each , we have

-

•

subformulas that indicate whether there exists a unique triple such that , and if so, give the binary representation of .

In the “right” subproblem: for each , we have

-

•

subformulas that indicate whether there exists a unique triple such that , and if so, give the binary representation of .

The subformulas in the “left” and “right” subproblems may be combined to produce the analogous (left-handed) formulas for the original input : for each , we construct

-

•

subformulas that indicate whether there exists a unique triple such that (1) holds, and if so, give the binary representation of .

These formulas check, for each , whether the - and -subformulas in the “left” and “right” subproblems output triples and , respectively, such that and . These formulas are therefore larger than the subformulas in the “left” and “right” subproblems by a factor . Taking as our base case with formula size , this gives an upper bound for all (which extends as well to non-Fibonacci numbers ).

In Section 7, we introduce a broad class of randomized algorithms (based on a simplification of the pathset complexity measure) that generalize both the “recursive doubling” and “Fibonacci overlapping” algorithms outlined above. We also discuss reasons, including experimental data, which suggest that might in fact be the asymptotically tight bound on the randomized formula size of multiplying permutations.

1.3.2 Lower bound

The final result of this paper improves the () lower bound of Theorem 1.9.

Theorem 1.11.

()

More significant than the quantitative improvement we obtain in Theorem 1.11 is the fact that our proof further develops pathset framework by introducing a new potential function that gives stronger lower bounds on . This development and the proof of Theorem 1.11 are presented in detail in Sections 4, 5 and 6.

Since , our upper and lower bounds are off by exactly in the base of the logarithm. It would be very interesting to completely close this gap.

1.4 Related work

There have been several papers, including [3, 7, 9], which give conditional lower bounds (under ETH and other assumptions) on the circuit size of and its uncolored variant. We are not aware of any conditional hardness results for the formula size of . It would be interesting to show that requires (unrestricted) formula size under a natural assumption.

2 Preliminaries

For a natural number , denotes the set . For simplicity of presentation, we occasionally omit floors and ceilings, e.g., treating quantities like as natural numbers). This is always without loss of parameters in our results. When no base is indicated, denotes the base-2 logarithm.

2.1 Graphs

In this paper, graphs are simple graphs, i.e., pairs where is a set and is a subset of (the set of unordered pairs where are distinct elements of ). Unless explicitly stated otherwise, graphs are assumed to be locally finite (i.e., every vertex has finite degree) and without isolated vertices (i.e., ). For a vertex , or simply denotes the degree of in .

We regard as a fixed (possibly infinite) “pattern” graph. shall consistently denote a finite subgraph of . We write for the subgraph relation and (or sometimes ) for the proper subgraph relation. If is a subgraph of , then denotes the graph with edge set (and no isolated vertices).

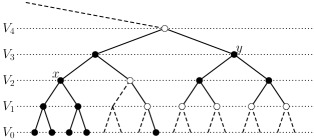

Two important graphs in this paper are paths and complete binary trees. denotes the path graph of length (with vertices and edges). denotes the complete binary tree of height (with vertices and edges). We also consider infinite versions of these graphs. is the path graph with vertex set and edge set . is the union under the nesting where . Thus, is an infinite, rootless, layered binary tree, with leaves in layer , their parents in level , etc.

We use terms graph invariant and graph parameter interchangeably in reference to real-valued functions on graphs that are invariant under isomorphism.

2.2 Threshold weightings

We describe a family of edge-weightings on graphs , which in the case of finite graphs correspond to product distributions that are balanced with respect to the problem . (Definitions in this section are adapted from [8].)

Definition 2.1.

For any graph and function , we denote by finite subgraphs of the function

Definition 2.2.

A threshold weighting for a graph is a function such that for all finite subgraphs ; if is finite, we additionally require that .

We refer to the pair as a threshold-weighted graph. When is fixed, we will at times simply write instead of .

Definition 2.3.

A Markov chain on a graph is a matrix that satisfies

-

•

for all and

-

•

for all .

Lemma 2.4.

Every Markov chain on induces a threshold weighting on defined by

This threshold weighting satisfies

We remark that this lemma has a converse (shown in [11]): Every threshold weighting on is induced by a (not necessarily unique) Markov chain on . Lemma 2.4 also gives us a way to define threshold weightings when is an infinite graph; this will be useful later on.

Example 2.5.

Let be the transition matrix of the uniform random walk on where . That is,

For the associated threshold weighting , we have

A key property of this that we will use later on (Lemma 3.8) is that

(that is, is at least one-third the size of the boundary of ) for all graphs . This is a straightforward consequence of Lemma 2.4, which is also true in the infinite tree .

Example 2.6.

Let be the path of length (with vertices and edges). The constant function is a threshold weighting for . (This is different from the threshold function induced by the uniform random walk on , which has value on the two outer edges of and value on the inner edges.)

This example again makes sense for . The constant function is a threshold weighting for . This threshold function has the nice property that

for all finite subgraphs .

Definition 2.7.

Let be a finite graph, let be a threshold weigting on , and let be a positive integer. We denote by be the random -colored graph (i.e., input distribution to ) with vertex set , vertex-coloring , and random edge relation given by

independently for all .

Lemma 2.8 ([8]).

The probability that is a YES-instance of is bounded away from and .

2.3 Join-trees and parameters and

Parameters and are defined in terms of a notion called join-trees for subgraphs of . A join-tree is simply a “formula” computing a subgraph of , starting from individual edges, where union () is the only operation.

Definition 2.9.

A join-tree over is a finite rooted binary tree together with a labeling (which may also be viewed as a partial function ). We reserve symbols for join-trees. ( will always denote a subgraph of .)

The graph of , denoted , is the subgraph of with edge set . (Note that is always finite.) As a matter of notation, we write for and for . We also write for where is a threshold weighting on .

We write for the single-node join-tree labeled by . For , we write for the single-node join-tree labeled by . For join-trees and , we write for the join-tree consisting a root with and as children (with the inherited labels, i.e., ). Note that .

Every join-tree is clearly either , or where , or where are join-trees. In the first two cases, we say that is atomic; in the third case, we say that is non-atomic.

We say that is a child of if for some . We say that is a sub-join-tree of (denoted ) if or or is a sub-join-tree of a child of . We say that is a proper sub-join-tree (denoted ) if and .

We are now able to define the invariant in Theorem 1.2, which lower bounds the restricted circuit size of . (In fact, also provides a nearly tight upper bound on the average-case circuit size of [11].)

Definition 2.10 (The invariant of Theorem 1.2).

For finite graphs , let

The invariant of of Theorem 1.3 is significantly more complicated to define. We postpone the definition to Section 5 and, in the meantime, focus on a simpler “potential function” on join-trees, denoted , which we use to lower bound . In order to state the definition of , we require the following operation (“restriction away from”) on graphs and join-trees.

Definition 2.11 (The operation on graphs and join-trees).

For and a subset , we denote by the graph consisting of the connected components of that are vertex-disjoint from .

For a join-tree , we denote the join-tree with the same rooted tree structure as and leaf labeling function

That is, deletes all labels except for edges in . Note that .

As a matter of notation, if is another join-tree, we write for and for .

Definition 2.12 (The potential function on join-trees).

Fix a threshold weighting on a graph . The potential function join-trees over is the unique pointwise minimum function satisfying the following inequalities for all join-trees :

| () | |||||

| () |

Alternatively, has the following recursive characterization:

-

•

If is an atomic join-tree, then

(Obs: In the case , the constraint is forced by () where .)

-

•

If , then

That is, at least one of inequalities () or () is tight for each join-tree .

2.4 Observations about

Note that inequality () implies for all (since is nonnegative). Also note that inequality () implies in the special case (since and the empty graph). Combining these observations, we see that for all . It follows that for all graphs , which makes sense in light of the fact that bounds circuit size and bounds formula size.

Next, observe that always equals either or where and are proper sub-join-trees of . This can be expanded out until we get a nonnegative linear combination of -terms. Looking closely, we see that

where coefficients (which depend on both and ) are nonnegative dyadic rational numbers coming from the tight instances of inequalities () and (). We may further observe, for any , that

This is easily shown by induction using the fact that graphs and and are pairwise disjoint for any .

Lemma 2.14.

Suppose and are threshold-weighted graphs such that and for all . Then for any join-tree with graph , we have

Proof.

Let be nonnegative dyadic rationals — arising from the tight instances of inequalities () and () in the recursive definition of — such that . We may apply inequalities () and () in the exact same way to get the bound . We now have

using the fact that and for all . ∎

2.5 Lower bounds on

Having introduced the potential function and described its connection to in Theorem 2.13, we conclude this section by briefly explaining how it is used derive lower bounds and . The main combinatorial lemma behind the lower bound of Theorem 1.9 is the following:

Lemma 2.15 ([15]).

Let be the constant threshold weighting on . For every join-tree with graph , we have . (Therefore, .)

The proof is included in Appendix A, for the sake of comparison with our two lower bounds below. We remark that this proof makes crucial use of both () and (); it was shown in [15] that no lower bound better than is provable using () alone or () alone.

Our lower bound (Theorem 1.7) is an immediate consequence of the following:

Lemma 2.16.

Let be the threshold weighting arising from the uniform random walk on (Example 2.5). For every join-tree with graph , we have .

Our proof, given in the next section, is purely graph-theoretic. Interestingly, the argument essentially uses only inequality (); we do not require (), other than in the weak form for all .

It is worth mentioning that the choice of threshold weighting is important in Lemma 2.16. A different, perhaps more obvious, threshold weighting is the constant function with value (). With respect to this threshold weighting, no lower bound better than is possible.

Finally, our improved lower bound (Theorem 1.11) is obtained via the following lemma. This result involves a 2-parameter extension of denoted (where ), which we introduce in Section 4.

Lemma 2.17.

Let be the constant threshold weighting on . For every join-tree with graph , we have .

3 Lower bound

We fix the infinite pattern graph with the threshold weighting induced by the uniform random walk. Recall that under a nesting with . will represent finite subgraphs of , and will be join-trees over . (In particular, note that no longer denotes the ambient pattern graph.)

We next recall the definition of from Example 2.5. Let be the transition matrix of the uniform random walk on , that is,

This induces the threshold weighting given by

Since is fixed, we will suppress it when writing and .

Definition 3.1.

For all , let

Thus, is the set of leaves in , is the set of parents of leaves, etc. Note that . We shall refer to the as the various levels of .

For and , let be the complete binary tree of height rooted at (in the case , we regard as a single isolated vertex). We denote by the graph obtained from by including an extra edge between and its parent. Note that

For , let .

Observation 3.2.

If , then for , .

We next define two useful parameters of finite subgraphs of .

Definition 3.3 (Max-complete height).

For a finite subgraph of , define the max-complete height to be the maximum for which there exists with ; is defined to be zero when no such exists (in particular, this happens when ).

Observation 3.4.

For any , .

Definition 3.5 (Boundary size).

Let denote the size of boundary of in :

Observation 3.6.

For any , we have , as the boundaries in the respective graphs are simply the singletons and . Another example is as follows: if for some and is the subgraph of induced by the set of vertices , then as all vertices in (along with ) lie in the boundary of .

Definition 3.7 (Grounded and ungrounded subgraphs of ).

Let be finite subgraphs of . We say that is grounded if it is connected and (that is, is a tree, at least one of whose leaves is also a leaf of ). We say that is ungrounded if it is non-empty and connected and (that is, is a non-empty tree, none of whose leaves is a leaf of ).

We shall think of the function as essentially a proxy for , as it has the advantage of having a simple combinatorial definition. This is justified by the following:

Lemma 3.8.

For every , we have .

It also holds that for without isolated vertices (or if we allow isolated vertices), but we will not need this upper bound.

Proof.

Since is the threshold weighting induced by , Lemma 2.4 tells us

Note that contributes to this sum if, and only if, it belongs to the boundary of (i.e., ). Since whenever , the claim follows. ∎

We are now ready to state the main theorem of the section.

Theorem 3.9.

Let and . Then for every join-tree ,

Theorem 3.9 directly implies Lemma 2.16, which in turn yields the lower bound of Theorem 1.7. To see why, let be the threshold weighting on coming from the uniform random walk (Example 2.5). Viewing a subgraph of , note that . For any join-tree with graph , Lemma 2.14 and Theorem 3.9 now imply

We proceed with a few definitions and lemmas needed for the proof of Theorem 3.9 in Section 3.1. In order to present the main arguments first, proofs of three auxiliary lemmas (3.10, 3.12, 3.13) are postponed to Section 3.2.

Lemma 3.10.

Let be a non-empty finite subgraph of , all of whose components are ungrounded. Then .

We make a note of its following corollary here.

Corollary 3.11.

Suppose be a finite connected subgraph of and such that and does not contain any path from to a leaf of . Then .

Proof.

Let be the graph with edge set . Note that is non-empty, connected and ungrounded. Observe that because all vertices in the boundary of , with the only possible exception of , also lie in the boundary of . Hence by Lemma 3.10, . ∎

The second auxiliary lemma gives a useful inequality relating , and .

Lemma 3.12.

For every finite subgraph of , we have .

(This is tight when for some , in which case and and .) The third auxiliary lemma shows that subgraphs of that contain at most half the edges of and have boundary size () have empty intersection with a large complete subtree of of height .

Lemma 3.13.

Let and suppose such that and . Then there exists a vertex such that .

We now state and prove the main lemma used in the proof of Theorem 3.9.

Lemma 3.14.

For any integers , let and suppose is a join-tree such that . Then one the following conditions holds:

-

(i)

There exists such that and .

-

(ii)

There exists with .

-

(iii)

There exists such that .

Proof.

Descend in the join-tree until reaching a such that contains a path from to a leaf of , but no contains a path from to a leaf of .

Let be maximal such that there exists such that . We claim that . To see this, note that for every vertex on the path from to (a subpath of ), if denotes the child of that is not on the path , then does not contain (because if it did then ). As a result, it must be the case that for every vertex on the path from to , some vertex in lies in the boundary of and so, .

As an illustration of this argument, consider the graph in Figure 3(a). Then for every vertex , either itself is in the boundary (like and ) or some vertex in is in the boundary (for , it is for example and for , it is either child of ).

Consider the case that (again see Figure 3(a)). Letting , we have and , so condition (i) is satisfied. We shall therefore proceed under the assumption that

Since , at least one child of satisfies

Fix one such .

Consider the case that does not contain the path between and (see Figure 3(b)). Then , so by Lemma 3.12,

In this case, we satisfy condition (ii). We shall therefore proceed under the assumption that contains the path between and .

Note that does not contain a path from to any leaf of (since otherwise would contain a path from to a leaf of , contradicting the way we choose ). Let be the connected component of that contains (and hence also contains the path between and ).

3.1 Proof of Theorem 3.9

We now prove Theorem 3.9: the lower bound where and .

We argue by a structural induction on join-trees . First, consider the case that is empty, then and . We shall assume that is non-empty.

Next consider the base case where is the atomic join-tree for an edge . In this case, we have and

Therefore, . We are done, since .

From now on, let be a non-atomic join-tree with whose graph is non-empty. Let

Our goal is thus to prove , which we do by analyzing numerous cases.

Consider first the case that . In this case, we clearly have (since and ). So shall proceed on the assumption that .

Since , we are done if . So we shall proceed on the additional assumption that

| (2) |

Fixing with :

By definition of , there exists a vertex such that . Let us fix any such .

Fixing with :

We next fix a sub-join-tree satisfying . To see that such exists, first note that . Consider a walk down which at each step descends to a child which maximizes . This quantity shrinks by a factor at each step, eventually reaching size . Therefore, at some stage, we reach a sub-join-tree such that the intersection size is between and .

Observe that (by () and the fact that for all join-trees). Therefore, we are done if . So we shall proceed under the additional assumption that

| (3) |

Since , Lemma 3.12 tells us that . We make note of the fact that this implies

| (4) | (induction hypothesis) | ||||

Fixing with :

Note that by (3). Since , the hypotheses of Lemma 3.13 are satisfied with respect to the vertex and the graph . Therefore, we may fix a vertex such that .

We next introduce a parameter

Note that is an integer, since is integral. Our choice of parameters moreover ensure that , since by (2),(3) and for all nonempty graphs. Since , it follows that .

Since and , Lemma 3.14 (with respect to and ) tells us that one of the following conditions holds:

-

(i)

There exists such that and .

-

(ii)

There exists with .

-

(iii)

There exists with .

We will show that in each of these three cases.

Case (i):

Suppose . Then it follows that , but this contradicts Lemma 3.8 by which , as boundary of a non-empty graph is always non-empty. Thus, and we have

| (induction hypothesis) | ||||

| (since ) | ||||

| (since by Case (i)) | ||||

| (since and by Case (i)) | ||||

| (since ) | ||||

Case (ii):

Recall that was chosen such that . It follows that the graph of is a union of connected components of . Therefore, and . Case (ii) now implies

It follows that

| (induction hypothesis) | ||||

| (by the above inequality) | ||||

| (using ) | ||||

By inequality () and the induction hypothesis, we have

| (by (4) and the above) | ||||

The final step above uses since is a nonempty subgraph of .

Case (iii):

3.2 Proofs of Lemmas 3.10, 3.12, 3.13

Proof of Lemma 3.10.

Let be a non-empty finite subgraph of , all of whose components are ungrounded. Then .

As and are additive over disjoint components, it suffices to prove the lemma in the case where is connected. Let be the unique highest vertex in (i.e., belonging to for the maximal ), which we view as the “root” of .

We now argue by induction on the size of . If , then both and one of its children lie in the boundary of and hence, we are done. If , then either is the graph induced by and its two children, or is a path of length emanating from . In either case, as is ungrounded, all three vertices are in the boundary of and the claim follows.

So assume that . We consider two cases:

Case 1: contains a vertex such that both its children and are leaves of . Let be the subgraph of induced by . By the induction hypothesis, . But because are in the boundary of while is not. Therefore,

Case 2: There exists a leaf of such that and and . Define to be the subgraph of induced by . By the induction hypothesis, . But because are in the boundary of while may or may not be. In either case, we again have,

which completes the proof.∎

Proof of Lemma 3.12.

For all finite subgraphs of , .

We will prove the following equivalent inequality

| (5) |

We claim that it suffices to establish (5) for connected graphs . To see why, assume (5) holds for two vertex-disjoint graphs and . Then we have

proving (5) for the graph .

So assume now that is grounded. Let be the unique vertex in of maximum height. Let (note that ) and fix a choice of such that . If , then and therefore and and , so the inequality follows. So assume that .

As is connected, it contains the path from to . Consider the case when contains only one child of . (See Figure 4(a) for an example.) Then and therefore . Further, we claim that . To see this, note that for every vertex on the path , if denotes the child of that is not on the path , then does not contain (because otherwise, ). As a result, it must be the case that for every on the path , some vertex in lies in the boundary of and so, . Therefore, the desired inequality follows as

Next, suppose that contains both children of . Let be the child of on the path to , and let be its sibling. (See Figure 4(b) for an example.) Note that cannot contain the complete binary tree (since otherwise contradicting our choice of ). Therefore, there is at least one vertex in that lies in the boundary of . As a result, (the vertices identified by the previous argument, along with the additional boundary element in ). Also as , we have and thus, ∎

Proof of Lemma 3.13.

Let and suppose such that and . Then there exists a vertex such that .

The claim is easy to establish when is empty or of the form or where (that is, in the cases where ). So we shall assume that .

Note that contains vertices with height , that is, (Observation 3.2). Let

It suffices to show that .

Since , it follows that

We next observe that . This is because for each , has at least one boundary element in the set ; and we get an additional boundary element by consider any element of maximum height, noting that cannot lie in for any . Therefore,

Letting , it suffices to show that

This numerical inequality is simple to verify for all , so we are clearly done by the assumption that .

4 A better potential function

We again fix a graph and a threshold weighting . In this section, we define a potential function with two parameters: a join-tree and a set . This improved potential function serves the same purpose of lower-bounding . In Section 6 we use to obtain a better lower bound on .

Let us begin by recalling the defining inequalities for :

| () | |||||

| () |

A first observation toward improving is that we could have included an additional inequality in the definition of while maintaining Theorem 2.13 (the lower bound on in terms of ). We call this inequality ( ), since it is a variant of ():

| ( ) |

A second observation is that, in the recursive view of , we “shrink” more than necessary by passing to in ( ) and in (). Recall that is a join-tree with graph formed by the connected components of that are vertex-disjoint from . Rather than recursing on , we can instead simply recurse on while treating “” as an extra parameter. These two observations lead to the definition of below.

Notation 4.1.

The following alternative notation for will be convenient in what follows. For a graph and a set , we write for . Similarly, for a join-tree , we write for .

Definition 4.2 (The potential function ).

Let join-trees for subgraphs of subsets of be the unique pointwise minimum function — written as rather than — satisfying the following inequalities for all sets and join-trees :

| () | |||||

| ( ) | |||||

| () |

Alternatively, has the following recursive characterization:

-

•

If is an atomic join-tree, then .

-

•

If , then

(To avoid circularity, we take this over proper sub-join-trees such that .)

That is, at least one among inequalities (), ( ), () is tight for each join-tree .

Remark 4.3.

We could have defined in a stronger manner that ensures for all and (in order to claim that improves ) by using the following more general versions of (), ( ), ():

| () | |||||

| () | |||||

| () |

We chose the simpler Definition 4.2 since it is sufficient for our lower bound on in Section 6. Definition 4.2 also leads to a mildly simpler proof of Theorem 5.10 in Section 5, compared with inequalities (, (), () above.

The following theorem shows that serves the same purpose as of lower-bounding the invariant .

Theorem 4.4.

The invariant in Theorem 1.3 satisfies

In the next section, we will finally state the definition of and prove Theorem 4.4. We will then use this theorem to prove a lower bound on using in Section 5. (The argument in Section 5 is purely graph-theoretic and does not require the material in Section 5 if Theorem 4.4 is taken for granted.)

Remark 4.5.

The authors first proved the lower bound for using before considering the improved potential function . It is conceivable that the use of would simplify or improve the constant in our lower bound. On the other hand, a suitable induction hypothesis would have to account for the additional parameter , so it is unclear whether a dramatic simplification can be achieved.

5 The pathset framework

In this section we present the pathset framework, state the definition of , and prove Theorem 4.4 (which bounds in terms of the potential function ). All definitions and results in this section are from papers [13, 15, 16] (with minor modifications); a few straightforward lemmas are stated without proof. The reader is referred to those papers for much more context, illustrative examples, and an explanation of how provides a lower bound on the and monotone formulas size of .

Throughout this section, we fix a threshold-weighted graph and an arbitrary positive integer . Let range over subgraphs of , let range over subsets of , and let range over join-trees for subgraphs of .

Definition 5.1 (Relations, density, join, projection, restriction).

Let be arbitrary finite sets.

-

•

For a tuple and subset , let denote the restriction of to coordinates in .

-

•

For a relation , the density of is denoted

-

•

For relations and , the join is the relation defined by

-

•

For and , the projection is defined by

-

•

For and , the restriction is defined by

The next two lemmas bound the density of relations in terms of projections and restrictions.

Lemma 5.2.

For every relation and ,

Lemma 5.3.

For all relations and ,

For subgraph and sets , we will be interested in relations called -pathsets that satisfy certain density constraints. These density constraints are related to subgraph counts in the random graph (see [16]).

Definition 5.4 (Pathsets).

Let and .

-

•

We write for .

-

•

We write for the set of relations . (That is, is the power set of .)

-

•

For and , let

-

•

For and and , let

-

•

A relation is a -pathset if it satisfies

for all and . The set of all -pathsets is denoted by .

The next lemma is immediate from the definition of .

Lemma 5.5 (Pathsets are closed under restriction).

For all and and , we have .

We next introduce, for each join-tree and set , a complexity measure on relations . Very roughly speaking, measures the cost of “constructing” via operations and , where all intermediate relations are subject to pathset constraints and the pattern of joins is given by .

Definition 5.6 (Pathset complexity ).

-

•

For a join-tree , let , , etcetera.

-

•

For an atomic join-tree and relation , the -pathset complexity of , denoted , is the minimum number of pathsets such that .

-

•

For a non-atomic join-tree and relation , the -pathset complexity of , denoted , is the minimum value of over families satisfying

-

for all ,

-

for all , and

-

.

We express this concisely as:

We refer to any family achieving this minimum as a witnessing family for .

-

The next lemma lists three properties of , which are easily deduced from the definition.

Lemma 5.7 (Properties of ).

-

•

is subadditive: ,

-

•

is monotone: ,

-

•

if and and , then .

The next two lemmas show that pathset complexity is non-increasing under restrictions, as well as under projections to sub-join-trees.

Lemma 5.8 (Projection Lemma).

For all and , we have

Proof.

It suffices to prove the lemma in the case where (since is the transitive closure of “ is a child of ”). Fix a witnessing family for . Note that , since and . It follows that

Lemma 5.9 (Restriction Lemma).

For all and and , we have

Proof.

We argue by induction on join-trees . The lemma is trivial in the base case where is atomic. So assume . Fix a witnessing family for . Observe that the family of restricted triples satisfies:

-

for all ,

-

for all ,

-

.

Therefore,

We now prove the key theorem bounding pathset complexity in terms of the density of and the potential function .

Theorem 5.10.

For every join-tree and set and relation , we have

Proof.

We argue by induction on join-trees . The base case where is atomic is straightforward. Suppose where with . We have . For each , we have (by definition of ). Therefore, as required.

So we assume is non-atomic. Fix a witnessing family for . Since , it suffices to show, for each , that

| (6) |

We establish (6) by considering the three different cases for , according to which of the inequalities (), ( ) () is tight.

Case (): (or symmetrically )

Case ( ): (or symmetrically )

Case (): for some

We have

| (Lemma 5.2) | ||||

| (induction hyp.) | ||||

| (Proj. Lemma 5.8) | ||||

Since , we also have

Taking the product of the square roots of these two bounds on , we conclude

| (since ) | ||||

Having established (6) in all three cases, the proof is complete. ∎

Finally, we define the graph invariant . Since we no longer fix a particular threshold weighting and positive integer , we include these as subscripts to the pathset complexity function by writing for relations .

Definition 5.11 (The parameter of Theorem 1.3).

For a graph , let be the minimum real number such that

for every threshold weighting on , join-tree with graph , positive integer , and relation .

6 Lower bound

Throughout this section, we fix the infinite pattern graph and threshold weighting . Let range over finite subgraphs of , and let range over finite subsets of (). We suppress , writing instead of .

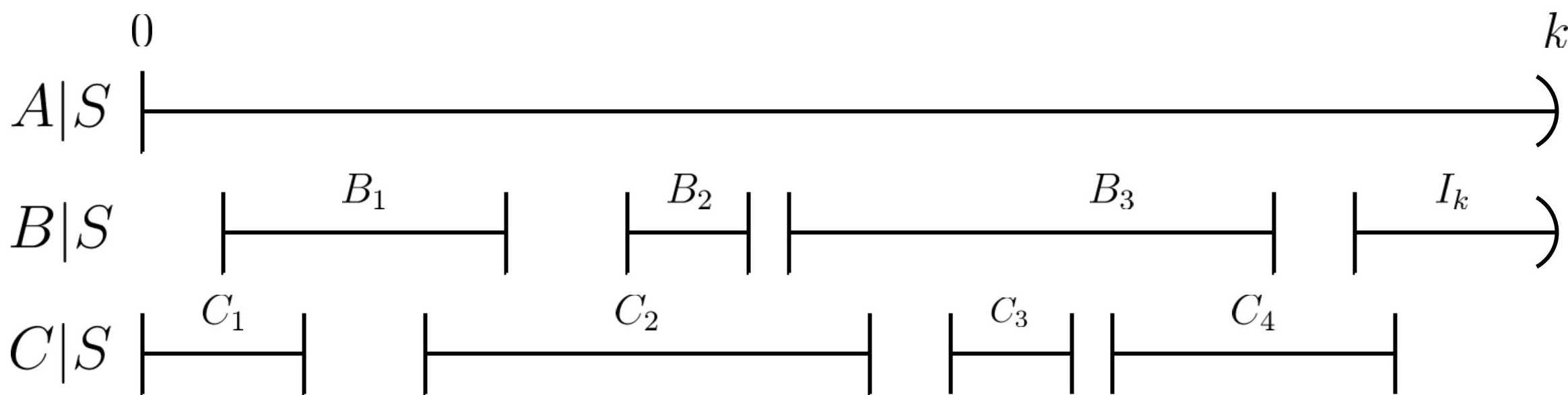

For integers , let be the path from to (with edges ). For , let .

Definition 6.1 (Open/half-open/closed components of ).

For integers such that , we say that

-

•

is an open component of if ,

-

•

is a half-open component of if and ,

-

•

is a half-open component of if and ,

-

•

is a closed component of if and and .

We shall use the term ‘interval’ and component interchangeably. In each of the four cases above, we define the length of the interval to be and refer to and as the left and right ‘end-points’ of that interval, respectively. We shall also refer to the length of an interval by . We treat a join-tree as its graph when speaking of the open/half-open/closed components of .

As a matter of notation, let and and and .

Lemma 6.2.

equals the number of closed components of .

Proof.

We have connected components of closed components of ∎

Recall that for any any graph and set , we denote by the subgraph induced by the vertices of . We have the following observation that follows immediately from Lemma 6.2:

Observation 6.3.

For a graph , set and a set such that for every open/half-open/closed component of , either or ,

where note that equals the number of closed components of that contains.

The following lemma will allow us to “zoom” into any component of when bounding and gain for each closed component that we discard.

Lemma 6.4.

Let be a join-tree and let be subsets of such that, for every open/half-open/closed component of , either or . Then

Proof.

We argue by induction on join-trees and by a backward induction on the set . The lemma is trivial when is atomic, or when . So assume . Note that the given condition means that is a union of components of and since are subgraphs, is a union of components of (and similarly for and ). We start with the observation that

| (7) |

To see this, note that as the graph is a union of the graphs and , each closed component of either contains at least one closed component of , or it does not. In the latter case, it is then clear that it must also be a component of . See Figure 6 for an illustration.

We will now consider three cases according to whether (), ( ) or () is tight for .

First, assume . We have

| (by induction hypothesis) | ||||

| (by assumption and by eq. (7)) | ||||

Next, assume . We have

| (by induction hypothesis) | ||||

| (by assumption and by eq. (7)) | ||||

Finally, assume for some . We have

where the last inequality follows from the induction hypothesis on and (this is where we use the backward induction for sets). It thus suffices to check that

But this is straightforward as each closed component of either contains at least one closed component of , or it does not. In the latter case, it is then clear that it must also be a component of . ∎

Theorem 6.5.

For every join-tree and set , and a component of of length ,

where , and .

Proof.

We prove this by a structural induction on join-trees as well as a backward induction on the set . Note that we may assume without loss of generality that . Assume is non-empty as the statement follows immediately, otherwise. If is atomic, then as in each case, the statement is trivial. So let us assume that is non-atomic with and that the theorem statement holds for any proper sub-join-tree and set with the given parameter settings of . Moreover, we shall also assume so for the given join-tree and for every such that .

Fix a component of . Let be the union of the vertex sets of all components of excluding . Then note that . Therefore, by Lemma 6.4, it suffices to show that

where we know that the graph of is simply . Hence, we may now assume without loss of generality that is connected and has length .

Henceforth, we shall think of and as indeterminates, imposing constraints on them as we move along the proof. We will eventually verify that the parameter settings specified in the theorem statement indeed satisfy these constraints. We shall proceed by considering the three cases one by one: that is (i) open, (ii) half-open, or (iii) closed. The strategy in each case is to suitably apply one of the three rules, namely (), ( ), or () in order to obtain a lower bound on . We remark that cases (i) and (ii) are similar in terms of the ideas involved to arrive at the lower bound: in particular, neither uses the () rule, which is solely used in case (iii). Roughly speaking, we shall see that case (i) determines the value of , while case (ii) that of and finally, case (iii) that of .

Case (i): is open. Suppose . Let and suppose (respectively ) where ’s (respectively ’s) are the components of (respectively ), sorted in the increasing order of left end-points. Then with the possible exception of and , every other is a closed interval (similarly for ). Also note that for any and , . We define

It follows that is a covering of the interval and moreover, each of the end-points and lie in a unique (and half-open) interval among the members of , which we denote by and respectively. Observe that if we arrange the intervals in in the increasing order of left end-points (starting with obviously), then they alternate membership between and . Finally, we denote by , the set of all closed intervals in the covering and define . See Figure 7 for an example that illustrates these notions.

We now consider four sub-cases according to whether , , and is even, or and is odd. They all involve very similar ideas except for the sub-case, which is slightly more subtle and requires the application of ( ) unlike the other sub-cases. The reader should bear in mind that the variables are taken to hold a distinct meaning in each of these sub-cases.

Open sub-case :

Either or then has a half-open component of size at least and without loss of generality, suppose that is . See Figure 8 for an example.

We have

as long as . Note that this sub-case is tight for our setting of but works for any .

Open sub-case :

Both and belong to the same collection i.e., either or . Without loss of generality, assume that it is the former. Then we know that , so let be that closed interval in , for some . Note that so assume without loss of generality that (in words, that there is more ‘space’ to the left of than to its right). Next, let be the closed intervals in (labelled in the increasing order of left end-points) that appear ‘before’ i.e., their right end-points are (strictly) less than (of course it may be the case that , when there is no such interval) and let . Let be the component (if it exists) in containing , and let (define if it does not exist).

Let be the ‘gap’ intervals (labelled in the increasing order of left end-points) as shown in Figure 9. Now with the possible exception of which may be half-open or open (depending or whether is empty or not), all other are open. Let be the length of the longest gap interval. Then it follows that

Now if either of the following occurs, we are immediately done.

-

•

If , then simply use the induction hypothesis on . We have

-

•

If , then as contains at least other closed components, we have

-

•

If , then we have

So we may assume that , , and , which together imply that

We have (this time by ( ))

which is at least as long as for all ,

which upon plugging in , reduces to showing for all that

which is indeed true for , . We note that this sub-case is not tight for our parameter settings , .

Open sub-case and is even:

If , then both and contain (exactly) one half-open interval among and closed intervals each. Similar to the previous case, we define and . It follows that . See Figure 10 for an example.

If , then as one of or has a half-open interval of length along with at least closed intervals, we have

and we are done. So assume that , implying . Therefore, as one of or has a closed interval of length with at least other closed intervals, we have

and it is enough to check that this last expression is at least , which reduces to showing that for all ,

which is clearly true when , and . Therefore, this sub-case is also not tight for our parameter settings , .

Open sub-case and is odd:

Both and must then lie in , without loss of generality. Then if , then has closed components while has . Define , , and . It follows that .

Refer back to the example described in Figure 7. For those particular graphs , , and , we would have , , and .

Now if either of the following occurs, we are immediately done.

-

•

If , simply use the induction hypothesis on : one of or is a half-open component of length at least and has closed components. We have

-

•

Similarly if , then there is closed component of length at least in along with other closed components. We have

Hence, we may assume that and , which together imply that

Now has a closed component of length at least along with other components. We have

and it is enough to show that this last expression is at least , which in turn reduces to checking that for all ,

which is straightforward to verify for any , and . In particular, this sub-case is also not tight for our parameter settings , .

Remark: The above inequality is not true for irrespective of the choice of , which is precisely why we have a separate argument for the sub-case .

Case (ii): is half-open. Suppose without loss of generality that . Again, let and suppose (respectively ) where s (respectively s) are the connected components of (respectively ). Then with the possible exception of , every other is a closed interval (similarly for ). Also note that for any and , . We define

It follows that is a covering of the interval and moreover, each of the end-points and lies in a unique (closed and half-open, respectively) interval among the members of , which we denote by and respectively. Again, observe that if we arrange the intervals in in the increasing order of left end-points, then they alternate membership between and . Finally, we denote by , the set of all closed intervals in the covering and define . See Figure 11 for an example that illustrates these notions.

We again consider four sub-cases according to whether , , and is odd, or and is even. The first two sub-cases are slightly more subtle and require the application of ( ) unlike the rest. Again, the variables are taken to hold a distinct meaning in each of these sub-cases.

Half-open sub-case :

This means that is the unique interval in , also implying that and overlap. So suppose without loss of generality that , , and let be the closed intervals that appear in ‘after’ . Further, let be the half-open interval in that contains and let where if such an interval does not exist. We define . See Figure 12 for an example.

Now if , then

and we are done. So assume that . Further, if , then

and so we assume that . Next, suppose are the ‘gap’ intervals as shown in Figure 12. Let be the length of the longest gap interval. Then it follows that . We have (this time by ( ))

The task of showing that this expression is at least reduces to showing that for all ,

which is clearly true when , , and . We note that this sub-case is indeed tight for our parameter settings and , however it works for any .

Half-Open sub-case :

Let , and . If , then we have ()

and we are done. So we may assume that . Let ( may be zero) be the closed intervals in that appear ‘before’ as shown in Figure 13 and suppose . If , then

and we are done. So assume that . Now let be the left end-point of and suppose are the ‘gap’ intervals as shown in the Figure 13. Let be the length of the longest gap interval. Then it follows that

We have (by ( ))

The task of showing that this expression is at least reduces to showing that for all ,

which is clearly true when , , and . This sub-case is also tight for our parameter settings and , however it works for any .

Half-open sub-case and is odd:

Suppose is in , without loss of generality. Then if , then has closed components while has . Let , and (see Figure 14 for an example). It follows that .

Now if either of the following occurs, we are immediately done.

-

•

If , then

-

•

Similarly if , then there is closed component of length at least in along with other closed components. We have

Hence, we may assume that and , which together imply that

Now has a closed component of length at least along with other components. Thus,

and it is enough to show that this last expression is at least , which in turn reduces to checking that for all ,

which is straightforward to verify for and . In particular, this is true for our parameter settings , and it follows that this sub-case is not tight.

Half-open sub-case and is even:

Suppose without loss of generality, . Then if , then both and contain at least closed intervals each. We define . It follows that .

Refer back to the example depicted in Figure 11. For that particular example, we would then have and , as is the longest interval in .

If , then

and we are done. So assume that , implying . Therefore,

and it is enough to check that this last expression is at least , which reduces to showing that for all ,

which is clearly true when and . This sub-case is also not tight for our parameter settings , .

Case (iii): is closed. Finally, suppose . First, consider the sub-case that there exists such that or with

for , as shown in Figure 15. Then We have (this time by ())

Note that for our choice of , and the given parameter settings and , , thereby establishing the claim if such a exists. We also note that our parameter settings are indeed tight in this case.

Therefore, now assume that no such exists.

Furthermore, we may assume that if there exists such that contains a component of length at least , then is connected (call this assumption ()). Because otherwise, we have the following:

Note that .

It follows from the application of () above and () that the only case left now is when there exists a (connected) and a child of such that and the component of containing satisfy

Let have other components apart from . We now consider sub-cases according to whether is zero or not.

Closed sub-case I: .

It follows that is connected. Then note that is as shown in Figure 16, and we have

So it only remains to check that , which is indeed true for our parameter settings. We note that this sub-case is tight for our parameter settings.

Closed sub-case II: .

We still apply ( ) as in the previous sub-case, but the analysis is different. As has components in all, we may assume that each has length at most as otherwise, we immediately obtain from the () rule. As a consequence, if are the ‘gap’ intervals as shown in Figure 17, some must be an open (or half-open) interval of length at least .

We have

which is at least as long as for all ,

By assumption, . Thus, we plug in and and see that it is enough to verify that for all ,

which is easily seen to be true for . ∎

7 Randomized formulas computing the product of permutations

In this section we define a broad class of randomized formulas for computing the product of permutations. The size of these formulas corresponds to a complexity measure related to pathset complexity, but much simpler and easier to analyze.

Definition 7.1.

For integers , let be the set of sequences such that . We denote by that each .

We define a complexity measure by the following induction:

-

•

In the base case , let

-

•

For , let

Note the following properties of :

-

1.

For all and , if and , then .

-

2.

For all , if , then .

-

3.

For all , we have .

The complexity measure is a simplified version of pathset complexity . In fact, provides an upper bound on pathset complexity!

Remark 7.2.

Consider the infinite pattern graph under the constant threshold weighting. For join-trees over , we will write for and for .

Each corresponds to a -pathset

where are arbitrary subsets of of size . Then there exists a join-tree with graph such that

This join-tree arises from the optimal and in the definition of : namely, where is the join-tree for associated with and is the join-tree for associated with . (Note that has the property that is a path for each ; so, not all join-trees with graph arise in this way.)

The above bound on (now dropping the subscript as is fixed) is justified as follows. Letting , there exist sets of size , indexed over and , such that . We then have where

Arguing by induction on proper subsequences and (note that the base case is trivial as and as itself is a pathset), it follows that

As a consequence of these observations, we see that is lower-bounded by . Since (by Theorem 5.10), it follows that

In particular, our lower bound of Section 6 implies that for all .

Finally, note that by covering the complete relation by shifted copies of rectangles , we get an upper bound

By a similar construction, we will show that is an upper bound on the randomized formula size of computing the product of permutations.

Definition 7.3.

Let be a sequence of permutations . For a sequence , we say that is a -path if for all .

If is a -path and is a sequence of sets , we will say that isolates if and is the only -path in .

Definition 7.4.

For a set and , notation denotes that is a random subset of that contains each element independently with probability .

Given , we will denote by the sequence of independent random sets .

We now state the key lemma for our construction.

Lemma 7.5.

For every and sequence of sets , there exist randomized formulas

each of depth and size and taking as input a sequence of permutations , such that on every input then with probability (with respect to both and the randomness of and ):

-

1.

outputs if, and only if, isolates some -path.

-

2.

If isolates a (necessarily unique) -path , then formulas output the binary representation of integers .

Proof.

The construction mimics the pathset complexity upper bound in Remark 7.2. In the base case , we have sets and need to determine if a permutation satisfies for a unique pair . This is accomplished by the following formula (writing for the input variable that is if and only if ):

This formula has depth and size (as measured by number of gates). Since , this size bound is as required. Formulas giving the binary representation of and (whenever uniquely exists) have just a single OR gate:

Onto the induction step where . Fix and with and

Letting , we sample independent random sequences of sets where for each , we have with .777A minor technicality arises when : in this case, we should instead sample . This case can be also avoided by approximating to an arbitrary additive constant : if we instead consider defined by , then we have .

Writing for and for and for and for , we now introduce auxiliary randomized formulas defined by

(Here abbreviates the formula .) If we consider the random sequence (in place of the arbitrary fixed sequence ), then for every input , with high probability, the formula outputs if, and only if, there exists a -path such that isolates the -path and isolates the -path .

Note that the number of -paths in has expectation (); it is easily shown that this number is at most with high probability. For each -path and , we have (by independence)

A further argument888This is a straightforward union bound. Here is where we use the assumption that for all . shows that

By independence of , we next have

Recalling that , the above bound will be (i.e., “with high probability”) for a suitable choice of in the exponent of (for instance, if we set for any constant ).

We have shown that, with high probability, for every -path in , there exists such that outputs . This justifies defining

In light of the above discussion, for random , the subformula with high probability asserts the existence of a -path in , while the remainder of asserts uniqueness. Formulas are defined by

If formulas and (respectively, ) and have depth at most and size at most (respectively, and ), then it is readily seen that formulas and have depth and size where

This recurrence justifies the bounds and .

As for the error probability of Properties (1) and (2), it should be clear that every usage of “with high probability” in this argument can be made to be by setting for suitable constants . ∎

Lemma 7.5 has the following corollary, which for each , gives a collection of randomized formulas of aggregate size that compute the product of permutations. Moreover, these formulas, on input , produce a list of all paths such that for all .

Corollary 7.6.

For every , there exists a matrix of randomized formulas

each of depth and size and taking a sequence of permutations as input, such that the following properties hold with probability :

-

1.

Each row in is either the all-0 string or contains the binary representation of integers for some -path .

-

2.

For every -path , the binary representation of integers is given by at least one row of .

Similar to the bound in Remark 7.2, Corollary 7.6 is obtained from Lemma 7.5 by covering with random rectangles where each has the same distribution as (i.e., ). The rows of are then given by the conjunction of with formulas . Property (1) is immediate from Lemma 7.5, while Property (2) follows by noting that, with high probability, every -path in is isolated by a rectangle for some .

7.1 Upper bounds on

We describe a few different constructions giving upper bounds on for sequences . Thanks to Corollary 7.6, each of these constructions corresponds to randomized formulas of size computing the product of permutations. Our best bound, , is obtained via a construction we call “Fibonacci overlapping”.

7.1.1 Recursive doubling

For , let

Then and for ,

This construction achieves

7.1.2 Maximally overlapping joins

For , let

Then and for ,

This construction achieves

Since , this upper bound is worse that the one from recursive doubling.

It turns out that a upper bound is achievable via a different construction via the maximally overlapping join-tree. If where and , we instead define

(Note that since .) In the base case (i.e., ), we have . When and , we have

| (by symmetry) | ||||

| ( coordinate increases from to ) | ||||

When and (i.e., ), we have

For any , this recurrence shows

This second construction thus achieves

7.1.3 Fibonacci overlapping joins

Let and for , let . For , let

We have and

For , we have

For with , this construction gives with

For , the bound extends to all of the form

This construction proves the following

Theorem 7.7.

For all , there exists with

where is the golden ratio.

Since , Theorem 7.7 improves the upper bounds from the recursive doubling and maximally overlapping join-trees described above. As a corollary of Corollary 7.6 and Theorem 7.7, we have

Corollary 7.8.

There exist randomized formulas of size that compute the product of -permutation matrices.

7.2 Tightness of upper bounds

We say that a join-tree (over ) is connected if is connected for all . For every connected join-tree with graph , we can consider the constrained complexity measure where parameters in the definition of are fixed according to . As described in Remark 7.2, the potential function implies a lower bound .

Let , and be the “recursive doubling”, “maximally overlapping” and “Fibonacci overlapping” connected join-trees recursively defined by and

The upper bounds on in the previous subsection respectively apply to constrained complexity measures , and .

With respect to these particular join-trees, the upper bounds of previous subsection are in fact tight! By () we have

| , | |||||

It follows that and . We get a lower bound on via ():

Therefore, for all , we have

This establishes the tightness of our upper bounds for these specific join-trees. It is open whether a different connected join-tree achieves a better bound. (The best lower bound on that we could determine is via a strengthening of the argument in Section 6 in the case of connected join-trees.)

Experimental results.

For any connected join-tree , the value is computable by a linear program with variables and constraints. In fact, our second upper bound for the maximally overlapping pattern (achieving ) was found with the help of this linear programs!

We experimentally searched for connected join-trees that beat the upper bound via Fibonacci overlapping by evaluating on various examples, both structured and randomly generated. We could not find any better upper bound. (In particular, join-trees appears optimal among a broad class of “recursively overlapping” join-trees.) It is tempting to conjecture that in fact gives the optimal bound, that is, for all . We leave this as an intriguing open question.

8 Open problems

We conclude by mentioning some open questions raised by this work.

Problem 1.

Prove that for all graphs . (An would also be interesting.)

Problem 1 is unlikely to follow from any excluded-minor approximation of tree-depth along the lines of Theorem 1.6. A first step to resolving this problem is to identify, for each graph , a particular threshold weighting such that is a “hard” input distribution with respect to the average-case formula size of . (The paper [8] does precisely this with respect to circuit size.)

Problem 1 should be easier to tackle in the special case of trees. (We remark that our lower bounds for and , combined with results in [4, 6], imply that for all trees .)

Problem 2.

Prove that for all trees .

A solution to Problem 2 could perhaps be shown by a common generalization of our lower bounds for and .

A third open problem is to nail down the exact average-case formula size of (or the related problem of multiplying permutations).

Problem 3.

Prove that or find an upper bound improving Theorem 1.10.

Finally and most ambitiously:

Problem 4.

Prove that is a lower bound the unrestricted formula size .

This of course would imply . An lower bound for in the average-case (or for the problem of multiplying permutations) would moreover imply . Although Problem 3 lies beyond current techniques, the applicability of the pathset framework in establishing lower bounds in the disparate and monotone settings is possibly reason for optimism.

Appendix A Appendix: Lower bound from [15]

This appendix gives the proof of Lemma 2.15 from [15]. As in Section 6, we consider infinite pattern graph with the constant threshold weighting .

Definition A.1.

For a join-tree , let denote the length of the longest path in (i.e., the number of edges in the largest connected component of ).

We omit the proof of the following lemma, which is similar to Lemma 6.4.

Lemma A.2.

For every join-tree and set , if intersects distinct connected components of , then

We now present the result from [15] that implies Lemma 2.15. (The precise value of in Lemma A.3, below, is thanks to an optimization suggested by an anonymous referee of the journal paper [15].)

Lemma A.3.

For every join-tree , where .

Proof.

Here is chosen such that .

We argue by induction on join-trees. The base case where is atomic is trivial. For the induction step, let be a non-atomic join-tree and assume the lemma holds for all smaller join-trees. We will consider a sequence of cases, which will be summarized at the end of the proof.

First, consider the case that is disconnected. Let (). Let be the set of all vertices of , except those in the largest connected component of . We have

| (Lemma A.2) | ||||

| (induction hypothesis) | ||||

This proves the lemma in the case where is disconnected.

Therefore, we proceed under the assumption that is connected (i.e. ). Without loss of generality, we assume that (i.e. ). Our goal is to show that

Consider the case that there exists a sub-join-tree such that and . Note that (i.e. the number of edges in the largest component of is at least the number of edges in divided by the number of components in ). We have

| (induction hypothesis) | ||||

| () | ||||

| ( and increasing) | ||||

This proves the lemma in this case.

Therefore, we proceed under the following assumption:

| () |

Going forward, the following notation will be convenient: for a proper sub-join-tree , let denote the parent of in , and let denote the sibling of in . Note that .

By walking down the join-tree , we can proper sub-join-trees such that

Fix any choice of such and . Note that and are connected by ( ‣ A). In particular, is a path of length with initial endpoint , and is a path of length with final endpoint .

Consider the case that and are vertex-disjoint. Note that , so the assumption ( ‣ A) implies that and are connected and . Let denote the least common ancestor of and in . We have

| (by ) | ||||

| ( and ) | ||||

| (induction hypothesis) | ||||

Therefore, we proceed under the assumption that and are not vertex-disjoint. It follows that or . Without loss of generality, we assume that . (We now forget about and .)

Before continuing, let’s take stock of the assumptions we have made so far:

Going forward, we will define vertices where . The following illustration will be helpful for what follows:

We first define and as follows: Let be the component of containing . (That is, the component of in is a path whose initial vertex is ; let be the final vertex in this path.) Let be the vertex in with maximal index (i.e. farthest away from ).

Note that contains edges for all . (In the event that , since is a path of length and does not contain vertices , it follows that contains all edges between and .) Therefore, . It follows that

Next, note that . We now walk down to find a proper sub-join-tree such that

Fix any choice of such . Note that is connected by ( ‣ A).