Treatment Effect Estimation with Disentangled Latent Factors

Abstract

Much research has been devoted to the problem of estimating treatment effects from observational data; however, most methods assume that the observed variables only contain confounders, i.e., variables that affect both the treatment and the outcome. Unfortunately, this assumption is frequently violated in real-world applications, since some variables only affect the treatment but not the outcome, and vice versa. Moreover, in many cases only the proxy variables of the underlying confounding factors can be observed. In this work, we first show the importance of differentiating confounding factors from instrumental and risk factors for both average and conditional average treatment effect estimation, and then we propose a variational inference approach to simultaneously infer latent factors from the observed variables, disentangle the factors into three disjoint sets corresponding to the instrumental, confounding, and risk factors, and use the disentangled factors for treatment effect estimation. Experimental results demonstrate the effectiveness of the proposed method on a wide range of synthetic, benchmark, and real-world datasets.

Introduction

Estimating the effect of a treatment on an outcome is a fundamental problem faced by many researchers and has a wide range of applications across diverse disciplines. In social economics, policy makers need to determine whether a job training program will improve the employment prospects of the workers (Athey and Imbens 2016). In online advertising, companies need to predict whether an advertisement campaign could persuade a potential buyer into buying the product (Rzepakowski and Jaroszewicz 2011).

To estimate treatment effects from observational data, the treatment assignment mechanism needs to be independent of the potential outcomes when conditioned on the observed variables, i.e., the unconfoundedness assumption (Rosenbaum and Rubin 1983) needs to be satisfied. With this assumption, treatment effects can be estimated from observational data by adjusting for the confounding variables, which affect both the treatment assignment and the outcome. The treatment effect estimation may be biased if not all the confounders are considered in the estimation (Pearl 2009).

From a theoretical perspective, practitioners are tempted to include as many variables as possible to ensure the satisfaction of the unconfoundedness assumption. This is because confounders can be difficult to measure in the real world and practitioners need to include noisy proxy variables to ensure unconfoundedness. For example, the socio-economic status of patients confounds treatment and prognosis, but cannot be included in the electronic medical records due to privacy concerns. It is often the case that such unmeasured confounders can be inferred from noisy proxy variables which are easier to measure. For instance, the zip codes and job types of patients can be used as proxies to infer their socio-economic status (Sauer et al. 2013).

From a practical perspective, the inflated number of variables included for confounding adjustment reduces the efficiency of treatment effect estimation. Moreover, it has been previously shown that including unnecessary covariates is suboptimal when the treatment effect is estimated non-parametrically (Hahn 1998; Abadie and Imbens 2006; Häggström 2017). In a high dimensional scenario, eventually many included variables will not be confounders and should be excluded from the set of adjustment variables.

Most existing treatment effect estimation algorithms treat the given variables “as is” and leave the task of choosing confounding variables to the user. The users are thus left with a dilemma: on the one hand, including more variables than necessary produces inefficient and inaccurate estimators; on the other hand, restricting the number of adjustment variables may exclude confounders themselves or proxy variables of the confounders and thus increase the bias of the estimated treatment effects. With only a handful of variables, the problem can be avoided by consulting domain experts. However, a data-driven approach is required in the big data era to deal with the dilemma.

In this work, we propose a data-driven approach for simultaneously inferring latent factors from proxy variables and disentangling the latent factors into three disjoint sets as illustrated in Figure 1: the instrumental factors which only affect the treatment but not the outcome, the risk factors which only affect the outcome but not the treatment, and the confounding factors that affect both the treatment and the outcome. Since our method builds upon recent advances in research on variational autoencoders (Kingma and Welling 2014), we name our method Treatment Effect by Disentangled Variational AutoEncoder (TEDVAE). Our main contributions are:

- We address an important problem in treatment effect estimation from observational data, where the observed variables may contain confounders, proxies of confounders and non-confounding variables.

- We propose a data-driven algorithm, TEDVAE, to simultaneously infer latent factors from proxy variables and disentangle confounding factors from the others for a more efficient and accurate treatment effect estimation.

- We validate the effectiveness of the proposed TEDVAE algorithm on a wide range of synthetic datasets, treatment effect estimation benchmarks and real-world datasets.

The rest of this paper is organized as follows. In Section 2, we discuss related work. The details of TEDVAE are presented in Section 3. In Section 4, we discuss the evaluation metrics, datasets and experimental results. Finally, we conclude the paper in Section 5.

Related Work

Treatment effect estimation has steadily drawn the attention of researchers from the statistics and machine learning communities. During the past decade, several tree based methods (Su et al. 2009; Athey and Imbens 2016; Zhang et al. 2017, 2018) have been proposed to address the problem by designing a treatment effect specific splitting criterion for recursive partitioning. Ensemble algorithms and meta algorithms (Künzel et al. 2019; Wager and Athey 2018) have also been explored. For example, Causal Forest (Wager and Athey 2018) builds ensembles using the Causal Tree (Athey and Imbens 2016) as base learners. X-Learner (Künzel et al. 2019) is a meta algorithm that can utilize off-the-shelf machine learning algorithms for treatment effect estimation.

Deep learning based heterogeneous treatment effect estimation methods have attracted increasing research interest in recent years (Shalit, Johansson, and Sontag 2017; Alaa and Schaar 2018; Louizos et al. 2017; Hassanpour and Greiner 2018; Yao et al. 2018; Yoon, Jordan, and van der Schaar 2018). Counterfactual Regression Net (Shalit, Johansson, and Sontag 2017) and several other methods (Yao et al. 2018; Hassanpour and Greiner 2018) have been proposed to reduce the discrepancy between the treated and untreated groups of samples by learning a representation such that the two groups are as close to each other as possible. However, their designs do not separate the covariates that only contribute to the treatment assignment from those that only contribute to the outcomes. Furthermore, these methods are not able to infer latent covariates from proxies.

Variable decomposition (Kun et al. 2017; Häggström 2017) has been previously investigated for average treatment effect estimation. Our method has several major differences from the above methods: (i) our method is capable of estimating the individual level heterogeneous treatment effects, whereas existing ones only focus on the population level average treatment effect; (ii) we are able to identify the non-linear relationships between the latent factors and their proxies, whereas their approach only models linear relationships. Recently, a deep representation learning based method, DR-CFR (Hassanpour and Greiner 2020), was proposed for treatment effect estimation.

Another work closely related to ours is the Causal Effect Variational Autoencoder (CEVAE) (Louizos et al. 2017), which also utilizes a variational autoencoder to learn confounders from observed variables. However, CEVAE does not consider the existence of non-confounders, and is not able to learn the separated sets of instrumental and risk factors. As demonstrated by the experiments, disentangling the factors significantly improves the performance.

Method

Preliminaries

Let $t_i \in \{0, 1\}$ denote a binary treatment, where $t_i = 0$ indicates that the $i$-th individual receives no treatment (control) and $t_i = 1$ indicates that the individual receives the treatment (treated). We use $y_i(1)$ to denote the potential outcome of individual $i$ if it were treated, and $y_i(0)$ to denote the potential outcome if it were not treated. Note that only one of the potential outcomes can be realized, and the observed outcome is $y_i = t_i\, y_i(1) + (1 - t_i)\, y_i(0)$. Additionally, let $\mathbf{x}_i$ denote the “as is” set of covariates for the $i$-th individual. When the context is clear, we omit the subscript $i$ in the notation.

Throughout the paper, we assume that the following three fundamental assumptions for treatment effect estimation (Rosenbaum and Rubin 1983) are satisfied:

Assumption 1.

(SUTVA) The Stable Unit Treatment Value Assumption requires that the potential outcomes for one unit (individual) are unaffected by the treatment of others.

Assumption 2.

(Unconfoundedness) The distribution of treatment is independent of the potential outcomes when conditioning on the observed variables: $t \perp\!\!\!\perp (y(0), y(1)) \mid \mathbf{x}$.

Assumption 3.

(Overlap) Every unit has a non-zero probability to receive either treatment or control when given the observed variables, i.e., $0 < P(t = 1 \mid \mathbf{x}) < 1$.

The first goal of treatment effect estimation is estimating the average treatment effect (ATE), which is defined as $\tau = \mathbb{E}[y \mid do(t = 1)] - \mathbb{E}[y \mid do(t = 0)]$, where $do(t = 1)$ denotes a manipulation on $t$ that removes all its incoming edges and sets $t = 1$ (Pearl 2009).

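The treatment effect for an individual $i$ is defined as $\tau_i = y_i(1) - y_i(0)$. Due to the counterfactual problem, we never observe $y_i(1)$ and $y_i(0)$ simultaneously, and thus $\tau_i$ is not observed for any individual. Instead, we estimate the conditional average treatment effect (CATE) $\tau(\mathbf{x})$, defined as $\tau(\mathbf{x}) = \mathbb{E}[y \mid \mathbf{x}, do(t = 1)] - \mathbb{E}[y \mid \mathbf{x}, do(t = 0)]$.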
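The definitions above can be made concrete on a toy population. The following is a minimal sketch, assuming a simulated setting in which both potential outcomes are known for every unit (never the case with real observational data); all values and function names are hypothetical and chosen only for illustration.

```python
from statistics import mean

# Toy population where BOTH potential outcomes are known (simulation only).
# Each row is (x, t, y0, y1); the numbers are made up for illustration.
units = [
    (0, 0, 1.0, 2.0),
    (0, 1, 1.5, 2.5),
    (1, 0, 2.0, 5.0),
    (1, 1, 2.5, 5.5),
]

def observed_outcome(t, y0, y1):
    # Only one potential outcome is realized: y = t * y(1) + (1 - t) * y(0).
    return t * y1 + (1 - t) * y0

def ate(rows):
    # Average of the individual effects y(1) - y(0) over the whole population.
    return mean(y1 - y0 for _, _, y0, y1 in rows)

def cate(rows, x_value):
    # Average individual effect among units whose covariate equals x_value.
    return mean(y1 - y0 for x, _, y0, y1 in rows if x == x_value)

observed = [observed_outcome(t, y0, y1) for _, t, y0, y1 in units]
print(observed)
print(ate(units), cate(units, 0), cate(units, 1))
```

In this toy table the effect differs between the two covariate values, which is exactly the heterogeneity that the CATE captures and the ATE averages away.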

Treatment Effect Estimation from Latent Factors

In this work, we propose the TEDVAE model (Figure 1) for estimating the treatment effects, where the observed pre-treatment variables $\mathbf{x}$ can be viewed as generated from three disjoint sets of latent factors $\mathbf{z} = (\mathbf{z}_t, \mathbf{z}_c, \mathbf{z}_y)$. Here $\mathbf{z}_t$ are instrumental factors that only affect the treatment but not the outcome, $\mathbf{z}_y$ are risk factors which only affect the outcome but not the treatment, and $\mathbf{z}_c$ are confounding factors that affect both the treatment and the outcome.

On the one hand, the proposed TEDVAE model in Figure 1 provides two important benefits. The first is that, by explicitly modelling the instrumental factors and risk factors, it accounts for the fact that not all variables in the observed variable set are confounders. The second is that it allows for the possibility of learning unobserved confounders from their proxy variables.

On the other hand, our model diagram does not pose any restriction other than the three standard assumptions discussed in Section 3.1. To see this, consider the case where every variable in $\mathbf{x}$ is itself a confounder: the instrumental and risk factor sets are then empty, $\mathbf{x}$ is generated by $\mathbf{z}_c$ alone, and the model in Figure 1 becomes identical to the widely used diagram for treatment effect estimation (Figure 2 in (Imbens 2019)).

With our model, the estimation of treatment effect is immediate using the back-door criterion (Pearl 2009):

Theorem 1.

The effect of $t$ on $y$ can be identified if we recover the confounding factors $\mathbf{z}_c$ from the data.

Proof.

For the estimation of the conditional average treatment effect, our result follows from Theorem 3.4.1 in (Pearl 2009) as shown in the following theorem:

Theorem 2.

The conditional average treatment effect of $t$ on $y$ conditioned on $\mathbf{x}$ can be identified if we recover the confounding factors $\mathbf{z}_c$ and risk factors $\mathbf{z}_y$.

Proof.

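Let $G_{\overline{t}}$ denote the causal structure obtained by removing all incoming edges of $t$ in Figure 1, and let $G_{\underline{t}}$ denote the structure obtained by deleting all outgoing edges of $t$.

Noting that $y \perp\!\!\!\perp \mathbf{z}_t \mid t, \mathbf{z}_c, \mathbf{z}_y$ in $G_{\overline{t}}$, using the three rules of do-calculus we can remove $\mathbf{z}_t$ from the conditioning set and obtain $P(y \mid do(t), \mathbf{z}_t, \mathbf{z}_c, \mathbf{z}_y) = P(y \mid do(t), \mathbf{z}_c, \mathbf{z}_y)$ with Rule 1. Furthermore, using Rule 2 with $y \perp\!\!\!\perp t \mid \mathbf{z}_c, \mathbf{z}_y$ in $G_{\underline{t}}$ yields $P(y \mid do(t), \mathbf{z}_c, \mathbf{z}_y) = P(y \mid t, \mathbf{z}_c, \mathbf{z}_y)$. ∎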
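To illustrate the kind of adjustment that the back-door criterion licenses, the sketch below compares a naive difference in means with a stratified estimate on made-up data. It assumes a single, fully observed binary confounding factor `c`, which is a strong simplification of the latent-factor setting in this paper; the data and function names are hypothetical.

```python
from statistics import mean

# (c, t, y) records with one binary confounding factor c (made-up data).
# Units with c = 1 are more likely to be treated AND have higher outcomes.
data = ([(0, 0, 0.0)] * 3 + [(0, 1, 1.0)] * 1 +
        [(1, 0, 2.0)] * 1 + [(1, 1, 3.0)] * 3)

def naive_difference(rows):
    # Difference in mean outcome between treated and control, ignoring c.
    treated = [y for _, t, y in rows if t == 1]
    control = [y for _, t, y in rows if t == 0]
    return mean(treated) - mean(control)

def backdoor_ate(rows):
    # sum_c P(c) * (E[y | t=1, c] - E[y | t=0, c]).
    # Requires overlap: every stratum must contain treated and control units.
    total = 0.0
    for c in sorted({c for c, _, _ in rows}):
        stratum = [(t, y) for ci, t, y in rows if ci == c]
        weight = len(stratum) / len(rows)
        effect = (mean(y for t, y in stratum if t == 1)
                  - mean(y for t, y in stratum if t == 0))
        total += weight * effect
    return total

print(naive_difference(data), backdoor_ate(data))
```

Within each stratum the treated and control outcomes differ by exactly 1, so the stratified estimate recovers an effect of 1, while the naive comparison is inflated by the confounder.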

An implication of Theorems 1 and 2 is that they are not restricted to binary treatment. In other words, our proposed method can be used for estimating the treatment effect of a continuous treatment variable, while most of the existing estimators are not able to do so. However, due to the lack of datasets with continuous treatment variables for evaluating this, we focus on the case of a binary treatment variable and leave the continuous treatment case for future work.

Theorems 1 and 2 suggest that disentangling the confounding factors allows us to exclude unnecessary factors when estimating ATE and CATE. However, keen readers may wonder: since we already assumed unconfoundedness, does straightforwardly adjusting for $\mathbf{x}$ not suffice?

Theoretically, it has been shown that both the bias (Abadie and Imbens 2006) and the variance (Hahn 1998) of treatment effect estimation will increase if variables unrelated to the outcome are included during the estimation. Therefore, it is crucial to differentiate the instrumental, confounding and risk factors and only use the appropriate factors during treatment effect estimation. In the next section, we propose our data-driven approach to learn and disentangle the latent factors using a variational autoencoder.

Learning of the Disentangled Latent Factors

In the above discussion, we have seen that removing unnecessary factors is crucial for efficient and accurate treatment effect estimation. We have assumed that the mechanism which generates the observed variables from the latent factors and the decomposition of latent factors are available. However, in practice neither the mechanism nor the decomposition is known. Therefore, the practical approach would be to utilize the complete set of available variables during the modelling to ensure the satisfaction of the unconfoundedness assumption, and utilize a data-driven approach to simultaneously learn and disentangle the latent factors into disjoint subsets.

To this end, our goal is to learn the posterior distribution $p(\mathbf{z} \mid \mathbf{x})$ for the set of latent factors $\mathbf{z} = (\mathbf{z}_t, \mathbf{z}_c, \mathbf{z}_y)$ as illustrated in Figure 1, where $\mathbf{z}_t$, $\mathbf{z}_c$, and $\mathbf{z}_y$ are independent of each other and correspond to the instrumental factors, confounding factors, and risk factors, respectively. Because exact inference would be intractable, we employ neural network based variational inference to approximate the posterior. Specifically, we utilize three separate encoders $q_{\phi_t}(\mathbf{z}_t \mid \mathbf{x})$, $q_{\phi_c}(\mathbf{z}_c \mid \mathbf{x})$, and $q_{\phi_y}(\mathbf{z}_y \mid \mathbf{x})$ that serve as variational posteriors over the latent factors. These latent factors are then used by a single decoder $p_\theta(\mathbf{x} \mid \mathbf{z}_t, \mathbf{z}_c, \mathbf{z}_y)$ for the reconstruction of $\mathbf{x}$. Following standard VAE design, the prior distributions are chosen as Gaussian distributions (Kingma and Welling 2014).

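Specifically, the factors and the generative models for $\mathbf{x}$ and $t$ are described as:

$$
\begin{aligned}
p(\mathbf{z}_t) &= \prod_{j=1}^{D_{z_t}} \mathcal{N}(z_{t,j} \mid 0, 1), \quad
p(\mathbf{z}_c) = \prod_{j=1}^{D_{z_c}} \mathcal{N}(z_{c,j} \mid 0, 1), \quad
p(\mathbf{z}_y) = \prod_{j=1}^{D_{z_y}} \mathcal{N}(z_{y,j} \mid 0, 1), \\
p(\mathbf{x} \mid \mathbf{z}_t, \mathbf{z}_c, \mathbf{z}_y) &= \prod_{j=1}^{d} p(x_j \mid \mathbf{z}_t, \mathbf{z}_c, \mathbf{z}_y), \\
p(t \mid \mathbf{z}_t, \mathbf{z}_c) &= \mathrm{Bern}\big(\sigma(f_1(\mathbf{z}_t, \mathbf{z}_c))\big),
\end{aligned}
\tag{4}
$$

where $p(x_j \mid \mathbf{z}_t, \mathbf{z}_c, \mathbf{z}_y)$ is a suitable distribution for the $j$-th of the $d$ observed variables, $f_1$ is a function parametrized by a neural network, $\sigma(\cdot)$ is the logistic function, and $D_{z_t}$, $D_{z_c}$, and $D_{z_y}$ are the parameters that determine the dimensions of the instrumental, confounding, and risk factors to infer from $\mathbf{x}$.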

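For a continuous outcome variable $y$, we parametrize it using a Gaussian distribution with its mean and variance given by a pair of disjoint neural networks that define $p(y \mid t = 1, \mathbf{z}_c, \mathbf{z}_y)$ and $p(y \mid t = 0, \mathbf{z}_c, \mathbf{z}_y)$. This pair of disjoint networks allows for highly imbalanced treatment. Specifically, for continuous $y$ we parametrize it as:

$$
\begin{aligned}
p(y \mid t, \mathbf{z}_c, \mathbf{z}_y) &= \mathcal{N}(\mu = \hat{\mu}, \sigma^2 = \hat{\sigma}^2), \\
\hat{\mu} &= t \cdot f_2(\mathbf{z}_c, \mathbf{z}_y) + (1 - t) \cdot f_3(\mathbf{z}_c, \mathbf{z}_y), \\
\hat{\sigma}^2 &= t \cdot f_4(\mathbf{z}_c, \mathbf{z}_y) + (1 - t) \cdot f_5(\mathbf{z}_c, \mathbf{z}_y),
\end{aligned}
\tag{5}
$$

where $f_2$, $f_3$, $f_4$, and $f_5$ are neural networks parametrized by their own parameters. The distribution for the binary outcome case can be similarly parametrized with a Bernoulli distribution.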

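In the inference model, the variational approximations of the posteriors are defined as:

$$
\begin{aligned}
q_{\phi_t}(\mathbf{z}_t \mid \mathbf{x}) &= \prod_{j=1}^{D_{z_t}} \mathcal{N}(z_{t,j} \mid \hat{\mu}_{t,j}, \hat{\sigma}^2_{t,j}), \\
q_{\phi_c}(\mathbf{z}_c \mid \mathbf{x}) &= \prod_{j=1}^{D_{z_c}} \mathcal{N}(z_{c,j} \mid \hat{\mu}_{c,j}, \hat{\sigma}^2_{c,j}), \\
q_{\phi_y}(\mathbf{z}_y \mid \mathbf{x}) &= \prod_{j=1}^{D_{z_y}} \mathcal{N}(z_{y,j} \mid \hat{\mu}_{y,j}, \hat{\sigma}^2_{y,j}),
\end{aligned}
\tag{6}
$$

where $\hat{\mu}_t, \hat{\mu}_c, \hat{\mu}_y$ and $\hat{\sigma}^2_t, \hat{\sigma}^2_c, \hat{\sigma}^2_y$ are the means and variances of the Gaussian distributions, parametrized by neural networks similarly to $\hat{\mu}$ and $\hat{\sigma}^2$ in the generative model.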

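Given the training samples, the parameters can be optimized by maximizing the evidence lower bound (ELBO):

$$
\begin{aligned}
\mathcal{L}_{\text{ELBO}} = {} & \mathbb{E}_{q_{\phi_t} q_{\phi_c} q_{\phi_y}}\big[\log p_\theta(\mathbf{x} \mid \mathbf{z}_t, \mathbf{z}_c, \mathbf{z}_y)\big]
- D_{KL}\big(q_{\phi_t}(\mathbf{z}_t \mid \mathbf{x}) \,\|\, p(\mathbf{z}_t)\big) \\
& - D_{KL}\big(q_{\phi_c}(\mathbf{z}_c \mid \mathbf{x}) \,\|\, p(\mathbf{z}_c)\big)
- D_{KL}\big(q_{\phi_y}(\mathbf{z}_y \mid \mathbf{x}) \,\|\, p(\mathbf{z}_y)\big).
\end{aligned}
\tag{7}
$$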
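For diagonal Gaussian encoders with standard normal priors, the KL terms of an ELBO have a closed form, and samples can be drawn with the reparameterization trick. The sketch below shows both in plain Python; it assumes one reconstruction term and one KL term per factor group, and the function names and the `recon_log_lik` placeholder are hypothetical rather than taken from the TEDVAE code.

```python
import math
import random

def sample_diag_gaussian(mu, sigma2, rng):
    """Draw z ~ N(mu, diag(sigma2)) via z = mu + sigma * eps, eps ~ N(0, 1)."""
    return [m + math.sqrt(s2) * rng.gauss(0.0, 1.0) for m, s2 in zip(mu, sigma2)]

def kl_to_standard_normal(mu, sigma2):
    """Closed-form KL( N(mu, diag(sigma2)) || N(0, I) ), summed over dimensions."""
    return 0.5 * sum(m * m + s2 - 1.0 - math.log(s2) for m, s2 in zip(mu, sigma2))

def elbo_estimate(recon_log_lik, posteriors):
    """Reconstruction term minus one KL term per factor group.

    recon_log_lik: hypothetical Monte Carlo estimate of E_q[log p(x | z_t, z_c, z_y)].
    posteriors: dict mapping 't', 'c', 'y' to the (mu, sigma2) of each encoder.
    """
    kl_total = sum(kl_to_standard_normal(mu, s2) for mu, s2 in posteriors.values())
    return recon_log_lik - kl_total

rng = random.Random(0)
posteriors = {"t": ([0.0], [1.0]), "c": ([1.0, 0.0], [1.0, 1.0]), "y": ([0.0], [1.0])}
z_c = sample_diag_gaussian(*posteriors["c"], rng)
print(len(z_c), elbo_estimate(-3.0, posteriors))
```

A posterior equal to the prior contributes zero KL, so in this toy call only the shifted mean of the confounding-factor encoder is penalized.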

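To encourage the disentanglement of the latent factors and ensure that the treatment $t$ can be predicted from $\mathbf{z}_t$ and $\mathbf{z}_c$, and the outcome $y$ can be predicted from $\mathbf{z}_y$ and $\mathbf{z}_c$, we add two auxiliary classifiers to the variational lower bound. Finally, the objective of TEDVAE can be expressed as

$$
\mathcal{L}_{\text{TEDVAE}} = \mathcal{L}_{\text{ELBO}}
+ \alpha_t\, \mathbb{E}_{q_{\phi_t} q_{\phi_c}}\big[\log q_{\omega_t}(t \mid \mathbf{z}_t, \mathbf{z}_c)\big]
+ \alpha_y\, \mathbb{E}_{q_{\phi_c} q_{\phi_y}}\big[\log q_{\omega_y}(y \mid t, \mathbf{z}_c, \mathbf{z}_y)\big],
\tag{8}
$$

where $\alpha_t$ and $\alpha_y$ are the weights for the auxiliary objectives.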
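A weighted objective of this kind can be sketched as a lower bound plus two auxiliary log-likelihood terms. The code below is a minimal illustration, assuming a Bernoulli likelihood for the treatment and a Gaussian likelihood for a continuous outcome; the function names and the example numbers are hypothetical, and the quantity is written as something to maximize.

```python
import math

def bernoulli_log_prob(t, logit):
    # log Bern(t | sigmoid(logit)) = t * logit - softplus(logit), stable form.
    softplus = max(logit, 0.0) + math.log1p(math.exp(-abs(logit)))
    return t * logit - softplus

def gaussian_log_prob(y, mu, sigma2):
    # log N(y | mu, sigma2) for a scalar outcome.
    return -0.5 * (math.log(2.0 * math.pi * sigma2) + (y - mu) ** 2 / sigma2)

def weighted_objective(elbo, log_q_t, log_q_y, alpha_t, alpha_y):
    # Lower bound plus weighted auxiliary terms; negate it for a minimizer.
    return elbo + alpha_t * log_q_t + alpha_y * log_q_y

# Hypothetical values for one sample: treatment logit 0.0, outcome mean 0.0.
log_q_t = bernoulli_log_prob(1, 0.0)
log_q_y = gaussian_log_prob(0.0, 0.0, 1.0)
print(weighted_objective(-10.0, log_q_t, log_q_y, alpha_t=2.0, alpha_y=3.0))
```

Larger weights push the latent factors to be predictive of the treatment and the outcome, at the cost of giving the reconstruction and KL terms relatively less influence.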

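For predicting the CATEs of new subjects given their observed covariates $\mathbf{x}$, we use the encoders $q_{\phi_c}(\mathbf{z}_c \mid \mathbf{x})$ and $q_{\phi_y}(\mathbf{z}_y \mid \mathbf{x})$ to sample the posteriors of the confounding and risk factors $l$ times, and average over the predicted outcomes using the auxiliary classifier $q_{\omega_y}(y \mid t, \mathbf{z}_c, \mathbf{z}_y)$.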
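The prediction procedure described above amounts to a Monte Carlo average over posterior samples. The sketch below illustrates it with hand-written stand-ins for the trained encoders and outcome networks; these toy functions, the one-dimensional factors, and the `n_samples` and `seed` parameters are assumptions made for illustration, not the architecture used in the paper.

```python
import math
import random

# Hypothetical stand-ins for trained networks; a real model would learn these.
def encode_zc(x):
    return [0.5 * x[0]], [0.1]      # (mean, variance) of q(z_c | x)

def encode_zy(x):
    return [x[1] - 1.0], [0.1]      # (mean, variance) of q(z_y | x)

def outcome_mean(t, z_c, z_y):
    treated = 3.0 * z_c[0] + z_y[0]   # mean network used when t = 1
    control = 2.0 * z_c[0] + z_y[0]   # mean network used when t = 0
    return t * treated + (1 - t) * control

def sample(mu, sigma2, rng):
    # Reparameterized draw from a diagonal Gaussian.
    return [m + math.sqrt(s2) * rng.gauss(0.0, 1.0) for m, s2 in zip(mu, sigma2)]

def predict_cate(x, n_samples=2000, seed=0):
    # Average the predicted outcome difference over posterior samples
    # of the confounding and risk factors only.
    rng = random.Random(seed)
    mu_c, var_c = encode_zc(x)
    mu_y, var_y = encode_zy(x)
    total = 0.0
    for _ in range(n_samples):
        z_c = sample(mu_c, var_c, rng)
        z_y = sample(mu_y, var_y, rng)
        total += outcome_mean(1, z_c, z_y) - outcome_mean(0, z_c, z_y)
    return total / n_samples

print(predict_cate([2.0, 0.0]))
```

Note that no encoder for the instrumental factors appears in the prediction path, and neither the treatment nor the outcome of the new subject is needed as input.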

An important difference between TEDVAE and CEVAE lies in their inference models. During inference, CEVAE depends on $t$, $y$, and $\mathbf{x}$ for inferring $\mathbf{z}$; in other words, CEVAE needs to estimate $p(t \mid \mathbf{x})$ and $p(y \mid t, \mathbf{x})$, infer $\mathbf{z}$ as $p(\mathbf{z} \mid t, y, \mathbf{x})$, and only then predict the CATE from the inferred $\mathbf{z}$. The estimations of $p(t \mid \mathbf{x})$ and $p(y \mid t, \mathbf{x})$ are unnecessary, since we assume that $t$ and $y$ are generated by the latent factors and inferring the latents should only depend on $\mathbf{x}$, as in TEDVAE. As we later show in the experiments, this difference is crucial even when no instrumental or risk factors are present in the data.

Experiments

We empirically compare TEDVAE with traditional and neural network based treatment effect estimators. For traditional methods, we compare with tailor-designed methods including Squared t-statistic Tree (t-stats) (Su et al. 2009) and Causal Tree (CT) (Athey and Imbens 2016); ensemble methods including Causal Forest (CF) (Wager and Athey 2018) and Bayesian Additive Regression Trees (BART) (Hill 2011); and the meta algorithm X-Learner (Künzel et al. 2019) using Random Forest (Breiman et al. 1984) as base learner (X-RF). For deep learning based methods, we compare with representation learning based methods including Counterfactual Regression Net (CFR) (Shalit, Johansson, and Sontag 2017), Similarity Preserved Individual Treatment Effect (SITE) (Yao et al. 2018), and with a deep learning variable decomposition method for Counterfactual Regression (DR-CFR) (Hassanpour and Greiner 2020). We also compare with generative methods including Causal Effect Variational Autoencoder (CEVAE) (Louizos et al. 2017) and GANITE (Yoon, Jordan, and van der Schaar 2018). Parameters for the compared methods are tuned by cross-validated grid search on the value ranges recommended in the code repository. The code is available at https://github.com/WeijiaZhang24/TEDVAE.

Evaluation Criteria

For evaluating the performance of CATE estimation, we use the Precision in Estimation of Heterogeneous Effect (PEHE) (Hill 2011; Shalit, Johansson, and Sontag 2017; Louizos et al. 2017; Dorie et al. 2019), which measures the root mean squared distance between the estimated and the true CATE when ground truth is available: $\epsilon_{PEHE} = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \big(\hat{\tau}(\mathbf{x}_i) - \tau(\mathbf{x}_i)\big)^2}$, where $\hat{\tau}(\mathbf{x})$ is the estimated CATE and $\tau(\mathbf{x})$ is the ground truth CATE for subjects with observed variables $\mathbf{x}$.
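As a small illustration of this metric, the sketch below computes the root mean squared distance between estimated and ground truth CATEs. The function name and the example values are hypothetical.

```python
import math

def pehe(tau_hat, tau_true):
    """Root mean squared distance between estimated and true CATEs."""
    if len(tau_hat) != len(tau_true) or not tau_true:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(a - b) ** 2 for a, b in zip(tau_hat, tau_true)]
    return math.sqrt(sum(squared) / len(squared))

# Made-up estimates for four subjects against their simulated ground truth.
print(pehe([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 2.0]))
```

Because the errors are squared before averaging, a single badly estimated subject dominates the score, as in the example where only the last estimate is off.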

For evaluating the performance of the average treatment effect (ATE) estimation, the ground truth ATE can be calculated as the difference between the average outcomes of the treated and control groups if randomized controlled trial data is available. The estimated ATE obtained from a non-randomized sample of the dataset (an observational sample or one created via biased sampling) can then be compared with this ground truth using the absolute error in ATE (Hill 2011; Shalit, Johansson, and Sontag 2017; Louizos et al. 2017; Yao et al. 2018): $\epsilon_{ATE} = \big| \hat{\tau} - \big( \frac{1}{N_1} \sum_{i: t_i = 1} y_i - \frac{1}{N_0} \sum_{i: t_i = 0} y_i \big) \big|$, where $\hat{\tau}$ is the estimated ATE, $t_i$ and $y_i$ are the treatments and outcomes from the randomized data, and $N_1$ and $N_0$ are the numbers of treated and control units. For both $\epsilon_{PEHE}$ and $\epsilon_{ATE}$, we use superscripts $tr$ and $te$ to denote their values on the training and test sets, respectively.
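The ATE error can be sketched in the same spirit: the ground truth is the difference in mean outcomes between the treated and control groups of a randomized sample, and the error is the absolute gap to the estimate. The function names and data below are hypothetical.

```python
from statistics import mean

def randomized_ate(t, y):
    """Difference in mean outcome between treated and control units.

    Only a valid ground truth when treatment was assigned at random.
    """
    treated = [yi for ti, yi in zip(t, y) if ti == 1]
    control = [yi for ti, yi in zip(t, y) if ti == 0]
    if not treated or not control:
        raise ValueError("both groups must be non-empty")
    return mean(treated) - mean(control)

def ate_error(tau_hat, t, y):
    """Absolute error between an estimated ATE and the randomized ground truth."""
    return abs(tau_hat - randomized_ate(t, y))

# Made-up randomized sample: two treated and two control units.
t = [1, 1, 0, 0]
y = [3.0, 5.0, 1.0, 2.0]
print(randomized_ate(t, y), ate_error(2.0, t, y))
```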

Synthetic Datasets

We first conduct experiments using synthetic datasets to investigate TEDVAE’s capability of inferring the latent factors and estimating the treatment effects. Due to the page limit, we only provide an outline of the synthetic dataset and provide the detailed settings in the supplementary materials.

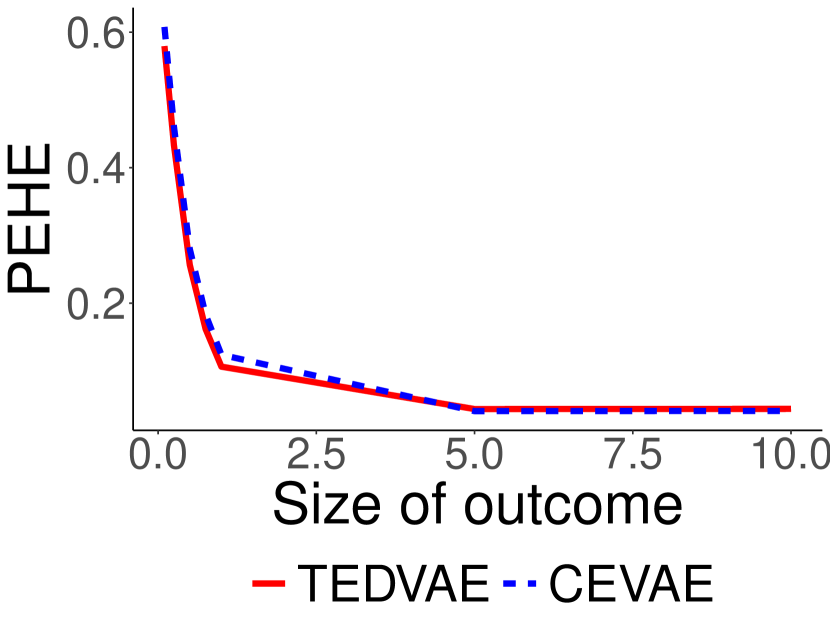

The first setting of synthetic datasets studies the benefit of disentangling the confounding factors from the instrumental and risk factors; the data are generated using the structure depicted in Figure 1. We illustrate the results in the first row of Figure 3. It can be seen that when the instrumental and risk factors exist in the data, the benefit of disentanglement is significant, as demonstrated by the gap between the PEHE curves of TEDVAE and CEVAE. When the proportion of treated samples varies, the performance of CEVAE fluctuates severely and its error remains high even when the dataset is balanced; in contrast, the PEHEs of TEDVAE are stable even when the dataset is highly imbalanced, and always stay significantly lower than those of CEVAE. When the scales of the outcome and CATE change, TEDVAE also performs consistently and significantly better than CEVAE.

The second setting for the synthetic datasets is designed to study how TEDVAE performs when the instrumental and risk factors are absent, and follows the same data generating procedure as in CEVAE (Louizos et al. 2017). We illustrate the results of this synthetic dataset in the second row of Figure 3. Since the instrumental factors and risk factors do not exist in this setting, it is reasonable to expect that CEVAE would perform better than TEDVAE. However, from the second row of Figure 3 we can see that TEDVAE either performs better than CEVAE or performs as well as CEVAE over a wide range of parameters under this setting. This is possibly due to the difference between TEDVAE and CEVAE in predicting for previously unseen samples: CEVAE needs to follow a complicated procedure of first inferring $p(t \mid \mathbf{x})$ and $p(y \mid t, \mathbf{x})$ and then inferring the latents as $p(\mathbf{z} \mid t, y, \mathbf{x})$, whereas in TEDVAE this is not needed. These results suggest that the TEDVAE model is able to effectively learn the latent factors and estimate the CATE even when the instrumental and risk factors are absent. They also indicate that the TEDVAE algorithm is robust to the selection of the latent dimensionality parameters.

Next, we investigate whether TEDVAE is capable of recovering the latent factors $\mathbf{z}_t$, $\mathbf{z}_c$, and $\mathbf{z}_y$ that are used to generate the observed covariates $\mathbf{x}$. To do so, we compare the performance of TEDVAE when all three latent dimensionality parameters are set to 10 against its performance when one of the latent dimensionality parameters is set to 0, which forces TEDVAE to ignore the existence of the corresponding factors. If TEDVAE is indeed capable of recovering the latent factors, then its performance with non-zero latent dimensionality parameters should be better than its performance when ignoring any of the latent factors. Figure 4 illustrates the capability of TEDVAE for identifying the latent factors using radar charts. Taking Figure 4(a) as an example, the two polygons correspond to the performance of TEDVAE when the relevant dimension parameter is set to 10 (identifying the factor) and to 0 (ignoring it). From the figures we can clearly see that the performance of TEDVAE is significantly better when the latent dimensionality parameters are non-zero than when any of them is set to 0.

Benchmarks and Real-world Datasets

In this section, we use two benchmark datasets for treatment effect estimation to compare TEDVAE with the baselines.

Benchmark I: 2016 Atlantic Causal Inference Challenge

Table 1: PEHE on the ACIC2016 benchmark.

| Methods | PEHE (training) | PEHE (test) |
|---|---|---|
| CT | 4.81 ± 0.18 | 4.96 ± 0.21 |
| t-stats | 5.18 ± 0.18 | 5.44 ± 0.20 |
| CF | 2.16 ± 0.17 | 2.18 ± 0.19 |
| BART | 2.13 ± 0.18 | 2.17 ± 0.15 |
| X-RF | 1.86 ± 0.15 | 1.89 ± 0.16 |
| CFR | 2.05 ± 0.18 | 2.18 ± 0.20 |
| SITE | 2.32 ± 0.19 | 2.41 ± 0.23 |
| DR-CFR | 2.44 ± 0.20 | 2.56 ± 0.21 |
| GANITE | 2.78 ± 0.56 | 2.84 ± 0.61 |
| CEVAE | 3.12 ± 0.28 | 3.28 ± 0.35 |
| TEDVAE | 1.75 ± 0.14 | 1.77 ± 0.17 |

The 2016 Atlantic Causal Inference Challenge (ACIC2016) (Dorie et al. 2019) contains 77 different settings of benchmark datasets that are designed to test causal inference algorithms under a diverse range of real-world scenarios. The dataset contains 4802 observations and 58 variables. The outcome and treatment variables are generated using different data generating procedures for the 77 settings, providing benchmarks for a wide range of treatment effect estimation scenarios. This dataset can be accessed at https://github.com/vdorie/aciccomp/tree/master/2016.

We report the average PEHE metrics across the 77 settings, where each setting is repeated for 10 replications. For TEDVAE, the parameters are selected using the average of the first five settings, instead of tuning separately for the 77 settings. This approach has two benefits: firstly and most importantly, if an algorithm performs well using the same parameters across all 77 settings, it indicates that the algorithm is not sensitive to the choice of parameters and thus would be easier for practitioners to use in real-world scenarios; the second benefit is to save computation costs, as conducting parameter tuning across a large number of datasets can be computationally overwhelming for practitioners. As a result, we set the latent dimensionality parameters as , , and set the weight for auxiliary losses as . For all the parametrized neural networks, we use 5 hidden layers with 100 hidden neurons in each layer and ELU activation. The data are split into training, validation, and test sets with proportions of 60%/30%/10%.

The results on the ACIC2016 datasets are reported in Table 1. We can see that TEDVAE performs significantly better than the compared methods. These results show that, without tuning parameters individually for each setting, TEDVAE achieves state-of-the-art performance across a diverse range of data generating procedures, which empirically demonstrates that TEDVAE is effective for treatment effect estimation across different settings.

Table 2: PEHE on the IHDP benchmark.

| Methods | Setting A: PEHE (training) | Setting A: PEHE (test) | Setting B: PEHE (training) | Setting B: PEHE (test) |
|---|---|---|---|---|
| CT | 1.48 ± 0.12 | 1.56 ± 0.13 | 5.46 ± 0.08 | 5.73 ± 0.09 |
| t-stats | 1.78 ± 0.09 | 1.91 ± 0.12 | 5.40 ± 0.08 | 5.71 ± 0.09 |
| CF | 1.01 ± 0.08 | 1.09 ± 0.16 | 3.86 ± 0.05 | 3.91 ± 0.07 |
| BART | 0.87 ± 0.07 | 0.88 ± 0.07 | 2.78 ± 0.03 | 2.91 ± 0.04 |
| X-RF | 0.98 ± 0.08 | 1.09 ± 0.15 | 3.50 ± 0.04 | 3.59 ± 0.06 |
| CFR | 0.67 ± 0.02 | 0.73 ± 0.04 | 2.60 ± 0.04 | 2.76 ± 0.04 |
| SITE | 0.65 ± 0.07 | 0.67 ± 0.06 | 2.65 ± 0.04 | 2.87 ± 0.05 |
| DR-CFR | 0.62 ± 0.15 | 0.65 ± 0.18 | 2.73 ± 0.04 | 2.93 ± 0.05 |
| GANITE | 1.84 ± 0.34 | 1.90 ± 0.40 | 3.68 ± 0.38 | 3.84 ± 0.52 |
| CEVAE | 0.95 ± 0.12 | 1.04 ± 0.14 | 2.90 ± 0.10 | 3.24 ± 0.12 |
| TEDVAE | 0.59 ± 0.11 | 0.60 ± 0.14 | 2.10 ± 0.09 | 2.22 ± 0.08 |

Benchmark II: Infant Health and Development Program

The Infant Health and Development Program (IHDP) is a randomized controlled study designed to evaluate the effect of home visits from specialist doctors on the cognitive test scores of premature infants. The dataset was first used for benchmarking treatment effect estimation algorithms in (Hill 2011), where selection bias is induced by removing a non-random subset of the treated subjects to create an observational dataset, and the outcomes are simulated using the original covariates and treatments. It contains 747 subjects and 25 variables that describe both the characteristics of the infants and the characteristics of their mothers. We use the same procedure as described in (Hill 2011), which includes two settings of this benchmark: “Setting A” and “Setting B”, where the outcomes follow a linear relationship with the variables in “Setting A” and an exponential relationship in “Setting B”. The datasets can be accessed at https://github.com/vdorie/npci. The reported performances are averaged over 100 replications with training/validation/test split proportions of 60%/30%/10%.

Since evaluating treatment effect estimation is difficult in real-world scenarios (Alaa and van der Schaar 2019), a good treatment effect estimation algorithm should perform well across different datasets with minimal requirements for parameter tuning. Therefore, for TEDVAE we use the same parameters as on the ACIC benchmark and do not perform parameter tuning on the IHDP dataset. For the compared traditional methods, we also use the same parameters as selected on the ACIC benchmark. For the compared deep learning methods, we conduct grid search using the recommended parameter ranges from the relevant papers.

From Table 2 we can see that TEDVAE achieves the lowest PEHE errors among the compared methods on both Setting A and Setting B of the IHDP benchmark. Wilcoxon signed rank tests () indicate that TEDVAE is significantly better than the compared methods. Since TEDVAE uses the same parameters on the IHDP datasets as in the previous ACIC benchmarks, these results demonstrate that the TEDVAE model is suitable for diverse real-world scenarios and is robust to the choice of parameters.

Table 3: ATE estimation error on the Twins dataset.

| Methods | ATE error (training) | ATE error (test) |
|---|---|---|
| CT | 0.034 ± 0.002 | 0.038 ± 0.007 |
| t-stats | 0.032 ± 0.003 | 0.033 ± 0.005 |
| CF | 0.025 ± 0.001 | 0.025 ± 0.001 |
| BART | 0.050 ± 0.002 | 0.051 ± 0.002 |
| X-RF | 0.075 ± 0.003 | 0.074 ± 0.004 |
| CFR | 0.029 ± 0.002 | 0.030 ± 0.002 |
| SITE | 0.031 ± 0.003 | 0.033 ± 0.003 |
| DR-CFR | 0.032 ± 0.002 | 0.034 ± 0.003 |
| GANITE | 0.016 ± 0.004 | 0.018 ± 0.005 |
| CEVAE | 0.046 ± 0.020 | 0.047 ± 0.021 |
| TEDVAE | 0.006 ± 0.002 | 0.006 ± 0.002 |

Real-world Dataset: Twins

In this section, we use a real-world randomized dataset to compare the methods' capability of estimating the average treatment effects.

The Twins dataset has been previously used for evaluating causal inference in (Louizos et al. 2017; Yao et al. 2018). It consists of samples from twin births in the U.S. between the years 1989 and 1991, provided in (Almond, Chay, and Lee 2005). Each subject is described by 40 variables related to the parents, the pregnancy and the birth statistics of the twins. The treatment is considered as $t = 1$ if a sample is the heavier one of the twins, and as $t = 0$ if the sample is the lighter one. The outcome is a binary variable indicating the children’s mortality after a one year follow-up period. Following the procedure in (Yao et al. 2018), we remove the subjects that are born with weight heavier than 2,000g and those with missing values, and introduce selection bias by removing a non-random subset of the subjects. The final dataset contains 4,813 samples. The data splitting is the same as in the previous experiments, and the reported results are averaged over 100 replications. The ATE estimation performances are reported in Table 3. On this dataset, we can see that TEDVAE achieves the best performance, with the smallest ATE error among all the compared algorithms.

Overall, the experimental results show that the performance of TEDVAE is significantly better than that of the compared methods on a wide range of synthetic, benchmark, and real-world datasets. In addition, the results also indicate that TEDVAE is less sensitive to the choice of parameters than the other deep learning based methods, which makes our method attractive for real-world application scenarios.

Conclusion

We propose the TEDVAE algorithm, a state-of-the-art treatment effect estimator which infers and disentangles three disjoint sets of instrumental, confounding and risk factors from the observed variables. Experiments on a wide range of synthetic, benchmark, and real-world datasets have shown that TEDVAE significantly outperforms the compared baselines. For future work, a path worth exploring is extending TEDVAE to treatment effects with non-binary treatment variables. While most of the existing methods are restricted to binary treatments, the generative model of TEDVAE makes it a promising candidate for extension to treatment effect estimation with continuous treatments.

References

- Abadie and Imbens (2006) Abadie, A.; and Imbens, G. W. 2006. Large Sample Properties of Matching Estimators for Average Treatment Effects. Econometrica 74(1): 235–267.

- Alaa and Schaar (2018) Alaa, A.; and Schaar, M. 2018. Limits of Estimating Heterogeneous Treatment Effects: Guidelines for Practical Algorithm Design. In Proceedings of the 35th International Conference on Machine Learning, 129–138.

- Alaa and van der Schaar (2019) Alaa, A. M.; and van der Schaar, M. 2019. Validating Causal Inference Models via Influence Functions. In Proceedings of the 36th International Conference on Machine Learning, 191–201.

- Almond, Chay, and Lee (2005) Almond, D.; Chay, K. Y.; and Lee, D. S. 2005. The costs of low birth weight. The Quarterly Journal of Economics 120(3): 1031–1083.

- Athey and Imbens (2016) Athey, S.; and Imbens, G. 2016. Recursive Partitioning for Heterogeneous Causal Effects. Proceedings of the National Academy of Sciences of the United States of America 113(27): 7353–7360.

- Breiman et al. (1984) Breiman, L.; Friedman, J.; Stone, C. J.; and Olshen, R. 1984. Classification and Regression Trees. Belmont: Wadsworth.

- Dorie et al. (2019) Dorie, V.; Hill, J.; Shalit, U.; Scott, M.; and Cervone, D. 2019. Automated versus Do-It-Yourself Methods for Causal Inference: Lessons Learned from a Data Analysis Competition. Statistical Science 34(1): 43–68.

- Hahn (1998) Hahn, J. 1998. On the Role of the Propensity Score in Efficient Semiparametric Estimation of Average Treatment Effects. Econometrica 66(2): 315–331.

- Hassanpour and Greiner (2018) Hassanpour, N.; and Greiner, R. 2018. CounterFactual Regression with Importance Sampling Weights. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence (IJCAI’19), 5880–5887.

- Hassanpour and Greiner (2020) Hassanpour, N.; and Greiner, R. 2020. Learning Disentangled Representations for CounterFactual Regression. In Proceedings of the 8th International Conference on Learning Representations.

- Hill (2011) Hill, J. L. 2011. Bayesian Nonparametric Modeling for Causal Inference. Journal of Computational and Graphical Statistics 20(1): 217–240.

- Häggström (2017) Häggström, J. 2017. Data-driven confounder selection via Markov and Bayesian networks. Biometrics 74(2): 389–398.

- Imbens (2019) Imbens, G. W. 2019. Potential Outcome and Directed Acyclic Graph Approaches to Causality: Relevance for Empirical Practice in Economics. Journal of Economic Literature 58(4): 1129–1179.

- Kingma and Welling (2014) Kingma, D. P.; and Welling, M. 2014. Auto-Encoding Variational Bayes. In Proceedings of the 2nd International Conference on Learning Representations (ICLR’14).

- Kun et al. (2017) Kun, K.; Cui, P.; Li, B.; Jiang, M.; Yang, S.; and Wang, F. 2017. Treatment effect estimation with data-driven variable decomposition. In Proceedings of the 31st AAAI Conference on Artificial Intelligence (AAAI’17), 140–146.

- Künzel et al. (2019) Künzel, S. R.; Sekhon, J. S.; Bickel, P. J.; and Yu, B. 2019. Metalearners for estimating heterogeneous treatment effects using machine learning. Proceedings of the National Academy of Sciences 116(10): 4156–4165.

- Louizos et al. (2017) Louizos, C.; Shalit, U.; Mooij, J.; Sontag, D.; Zemel, R.; and Welling, M. 2017. Causal Effect Inference with Deep Latent-Variable Models. In Advances in Neural Information Processing Systems 30 (NeurIPS’17).

- Pearl (2009) Pearl, J. 2009. Causality. Cambridge University Press.

- Rosenbaum and Rubin (1983) Rosenbaum, P. R.; and Rubin, D. B. 1983. The central role of the propensity score in observational studies for causal effects. Biometrika 70(1): 41–55.

- Rzepakowski and Jaroszewicz (2011) Rzepakowski, P.; and Jaroszewicz, S. 2011. Decision trees for uplift modeling with single and multiple treatments. Knowledge and Information Systems 32(2): 303–327.

- Sauer et al. (2013) Sauer, B. C.; Brookhart, M. A.; Roy, J.; and VanderWeele, T. 2013. A review of covariate selection for non-experimental comparative effectiveness research. Pharmacoepidemiology and Drug Safety 22(11): 1139–1145.

- Shalit, Johansson, and Sontag (2017) Shalit, U.; Johansson, F. D.; and Sontag, D. 2017. Estimating individual treatment effect: generalization bounds and algorithms. In Proceedings of the 34th International Conference on Machine Learning (ICML’17), volume 70, 3076–3085.

- Su et al. (2009) Su, X.; Tsai, C.-L.; Wang, H.; Nickerson, D. M.; and Li, B. 2009. Subgroup Analysis via Recursive Partitioning. Journal of Machine Learning Research 10: 141–158.

- Wager and Athey (2018) Wager, S.; and Athey, S. 2018. Estimation and Inference of Heterogeneous Treatment Effects using Random Forests. Journal of the American Statistical Association 113(523): 1228–1242.

- Yao et al. (2018) Yao, L.; Li, S.; Li, Y.; Huai, M.; Gao, J.; and Zhang, A. 2018. Representation Learning for Treatment Effect Estimation from Observational Data. In Advances in Neural Information Processing Systems 31 (NeurIPS’18), 2638–2648.

- Yoon, Jordan, and van der Schaar (2018) Yoon, J.; Jordan, J.; and van der Schaar, M. 2018. GANITE: Estimation of Individualized Treatment Effects using Generative Adversarial Nets. In Proceedings of the 6th International Conference on Learning Representations (ICLR’18).

- Zhang et al. (2018) Zhang, W.; Le, T. D.; Liu, L.; and Li, J. 2018. Estimating heterogeneous treatment effect by balancing heterogeneity and fitness. BMC Bioinformatics 19(S19): 61–72.

- Zhang et al. (2017) Zhang, W.; Le, T. D.; Liu, L.; Zhou, Z.-H.; and Li, J. 2017. Mining heterogeneous causal effects for personalized cancer treatment. Bioinformatics 33(15): 2372–2378.