Transformer Transducer: One Model Unifying Streaming and Non-streaming Speech Recognition

Abstract

In this paper we present a Transformer-Transducer model architecture and a training technique to unify streaming and non-streaming speech recognition models into one model. The model is composed of a stack of transformer layers for audio encoding with no lookahead or right context and an additional stack of transformer layers on top trained with variable right context. In inference time, the context length for the variable context layers can be changed to trade off the latency and the accuracy of the model. We also show that we can run this model in a Y-model architecture with the top layers running in parallel in low latency and high latency modes. This allows us to have streaming speech recognition results with limited latency and delayed speech recognition results with large improvements in accuracy (20% relative improvement for voice-search task). We show that with limited right context (1-2 seconds of audio) and small additional latency (50-100 milliseconds) at the end of decoding, we can achieve similar accuracy with models using unlimited audio right context. We also present optimizations for audio and label encoders to speed up the inference in streaming and non-streaming speech decoding.

Index Terms— Transformer, RNN-T, sequence-to-sequence, encoder-decoder, end-to-end, speech recognition

1 Introduction

Past research has shown that having access to future audio context to encode the current audio frame in neural network models significantly improves speech recognition accuracy [1, 2, 3, 4]. Bidirectional LSTMs take advantage of future audio context, however the model can only be run when the entire audio is available. In the past few years, models employing self-attention mechanism have achieved state-of-art results for sequence modeling tasks [5, 6]. Transformer models encode an input sequence by running self-attention mechanism over left and right context window of each input in sequence. In speech recognition models the future audio context can be encoded by specifying a limited right context [4]. Since the right context is limited (unlike bidirectional LSTMs), this allows transformer models with future audio context to recognize speech in streaming fashion with some limited delay. This makes transformer models desirable especially for applications that can afford a higher streaming latency for better recognition quality.

Even in low latency streaming speech recognition systems (e.g. voice-search, dictation), the usability of the system depends on accuracy of the final recognized result. The accuracy of final results can be improved by applying n-best or lattice re-scoring techniques. For longform audio the extra latency introduced by rescoring models is not bounded and the accuracy improvements are also limited due to a lack of diversity in the n-best hypotheses. To address this problem, in this paper, we propose decoding speech using two transformer models in parallel - one with smaller right context for low-latency streaming recognition results and one with larger right context for final results. Unlike rescoring this works well for both short and long utterances. We show that it gives about 20% relative word error rate improvements over the low-latency results for voice-search task. The extra latency introduced by larger right context decoding is also bounded because of limited right context.

We further show that we can unify low-latency and high-latency models by training a single model that can decode speech in either low latency or high latency mode. We do this by varying right context for transformer layers (variable context layers) during training. By using only final few layers as variable context layers (referred to as Y-model architecture), and applying decoding optimizations presented in this paper, we show that it is possible to do efficient parallel decoding for high latency and low latency modes using one ASR model.

2 Transformer Transducers

2.1 Architecture

Transformer Transducer [4] is a model architecture that can be trained with end-to-end RNN-T loss [7] using transformer based audio encoder and label encoder. As shown in figure 1, T-T model predicts a probability distribution over the label space at every time step. The probability of an alignment can be factorized as

| (1) |

where is the sequence of non-blank labels in . In T-T architecture is parameterized with an audio encoder, a label encoder, and a joint network. The model defines as follows:

| (2) |

| (3) |

where each function is a different single-layer feed-forward neural network, is the audio encoder output at time , and is the label encoder output given the previous non-blank label sequence.

More details about each transformer layer is shown in figure 2. It contains normalization layer, masked multi-head attention layer with relative position encoding, residual connection, stacking/unstacking layer and feed forward layer. The residual connections are applied with normalized input to the output of the attention layer or feed forward layers. The stacking/unstacking layer can be used to change frame rate for each transformer layer which helps to speed up training and inference. For further optimization, the label encoder can also be a bigram label embedding model.

2.2 Variable Context Training

In self attention block of transformer architecture we compute the self attention over entire input sequence and then mask it based on the left and right context of the layer. In variable context training we keep the left context of the layers constant and sample a right context length from a given distribution. The sampled right context length decides the mask for the self attention. In our experiments we found that randomly sampling right context length for each layer independently led to unstable training, and we had to limit the number of right context configurations. During training we specify right context configuration as a list of right contexts for each transformer layer in the encoder ex: specifies a right context of 0 for first 15 layers and right context of 8 frames for last five layers. During training we uniformly sample a right context configuration from all these available configurations. We show in section 4.3.2 that models trained in this way can be used with any right context configurations used for training.

2.3 Y-model

Variable context training allows us to train transformer layers that are capable of using different right context from input, effectively at inference. We apply this technique to train a model that has input followed by several initial layers trained with zero right context and final few layers trained as variable context layers. With this model we can do speech recognition with different future audio context (depending on the right context used at inference time). This can be useful if an application can afford a higher latency for better quality results. We can also use this model for low latency speech recognition by running two parallel decoders using the same model, one with no or very small right context and one with larger right context. Since we keep the right context of shared layers constant (at zero) we only need to recompute the activation for the final layers with variable context. We call this a Y-model because we have parallel decoding branches running with different right context. We call the decoding branch with smaller right context ’low latency branch’ and decoding branch with higher right context ’high latency branch’

2.3.1 Inference and Recognition Latency

During inference we stream partial recognition results based on low latency branch (for better model responsiveness) and when the utterance ends we replace the recognition results with the results from high latency branch (for better ASR quality). Depending on the right context used for high latency branch (2.4sec in our experiments), it is always behind the low latency branch in decoding by a finite amount. When the user utterance ends we just need to run high latency branch decoding for the remaining lookahead (e.g. 2.4 secs) and show the final results. Since we have access to all the remaining audio context we can process it very quickly (no need to wait for streaming), by batching all the available context. The additional latency to show these final results at end of the utterance includes run time for computing activation for audio encoder layers with variable context in delayed branch and decoding. In section 4.3.2 we show that this can be done very quickly for lookahead audio context of 2.4 seconds, hence resulting in very small additional latency.

2.4 Constrained Alignment Training

Transformer-Transducer minimizes RNN-T loss which contains all the alignment paths for each label sequence. Although optimizing label likelihoods should provide advantage on improving ASR performance, a model can have high alignment delay because there’s no mechanism to restrict prediction delay in optimizing RNN-T loss. The high alignment delay happens especially for a streaming model which does not have a right context because the model tries to improve prediction accuracy by looking ahead future frames.

Constrained alignment training was originally proposed in [8]. It puts constraints on RNN-T loss by masking out high delay alignment paths from reference alignments. To find reference alignments, we used full-attention non-streaming Transformer-Transducer model as a reference alignment model because it showed almost no delay. Based on the reference alignment, we put constrained window on word-boundaries and any word label path outside of the constrained window is masked out from the RNN-T loss. We applied constrained alignment training on Y-model and its evaluation results are in section 4.3.2.

3 Inference Optimizations

In this section, we talk about the optimizations and implementations we did for speeding up the Transformer encoder in streaming and non-streaming applications and the label encoder used for speech decoding. This is required to make parallel decoding feasible for Y-model.

3.1 Streaming Transformer Audio Encoder

Streaming audio encoding is the process of taking audio frame(s) and previous states as inputs, and outputting the corresponding encoded feature(s) and next states. Unlike RNN/LSTM based audio encoder, Transformer encoder has no time dependency while encoding audio. In other word, to encode the -th frame, RNN/LSTM based encoders need to first encode from the -th to the -th frame before encoding the -th frame, whereas, Transformer encoder can encode all the frames at the same time in parallel. We refer to the way of encoding a batch of time steps in parallel ”batch step” inference. In the later section, we will discuss the effectiveness of batching more time frames in terms of inference speed.

3.2 Non-streaming Transformer Audio Encoder

In non-streaming inference, ideally we can run the Transformer encoder exactly the same way it is run during training time. However, in practice, because of the memory consumption in attention matrix computation, the Transformer encoder can be very memory expensive. As showed in [4], limited context Transformer encoder provides about the same quality as infinite context encoder. Based on this model architecture, we can compute the attention matrix for a few blocks at a time, and each block only attends to a limited set of s, which makes the memory consumption constant. We refer to this inference method as ”query slicing”.

3.3 Decoder Optimizations

In the transformer transducer model, we use a label encoder as an auto-regressive model to encode predicted label history. The label encoder output is combined with the audio encoder output using a dense layer before the softmax function. The decoding speed is highly dependent on the computational complexity of label encoder network because it is run for every hypothesis. For RNN-T models, it has been shown that the label history context length can be reduced significantly without affecting the accuracy of the models [9]. In this paper, we experimented with two models for label encoding. The first one is a transformer model with limited label context length as described in [4]. The other one is an embedding model with a bigram label context. The embedding model learns a weight vector of dimension for each possible bigram label context, where is the dimension of audio and label encoder outputs. The total number of parameters is where is the vocabulary size for the labels. The learned weight vector is simply used as the embedding of bigram label context in the T-T model. Since this is a simple embedding lookup based on label bigrams, the runtime for label encoder is very fast.

To further speed up the inference, we maintain a cache to store and reuse the computed label encoder outputs for the limited label contexts seen so far in decoding since during decoding the model will encode the same label history multiple times for different hypothesis.

4 Experiments and Results

4.1 Data

For our experiments we use 30K hours of speech data from voice-search application. The test set we use consists of 14K Voice Search utterances with duration less than 5.5 seconds long. The training and test sets are all anonymized and hand-transcribed. The input speech wave-forms are framed using a 32 msec window with 10 msec shift. We use 128 dimension logmel energy features and use as acoustic features after stacking 4 of them and subsampling by a factor of 3, resulting in 30msec features. During training we perform specaugment [10] on the acoustic features.

4.2 Limited Context Decoding

Tabel 1 shows WER and benchmarking results for different label encoder architectures. From the results we see that there is not much difference in WER when the context is reduce for the label encoder. Similar results have been reported before in [9]. For the case of limited context of 3 graphemes, we see a huge improvement in speed of decoder over the case when label encoder has 40 labels. This is because of the label encoder output caching as explained in section 3.3. For label context 2 it is even faster since the model itself is just a lookup table.

| Label encoder | WER | RTF |

|---|---|---|

| 40 grapheme context transformer | 4.8 | 0.3 |

| 3 grapheme context transformer | 4.8 | 0.02 |

| 2 grapheme emb lookup | 4.9 | 0.01 |

4.3 Y-model results

We present results on two Y-architecture models:

4.3.1 Y-Model1

The audio encoder has 20L of transformers with all the layers trained using variable context with following possible right context configurations: , , , and . In all these modes model is trained with an output delay of 4 frames. When evaluating the model with 240ms lookahead we use the right context configuration of , when evaluating with 1.2s lookahead we use and for 2.4 sec look ahead we use the configuration of

4.3.2 Y-Model2

The audio encoder has 20L of transformers with first 15 layers trained with no right context and last 5 layers trained with variable context with following possible right context configurations: , and . In all these modes model is trained with an output delay of 4 frames. When evaluating the model with 240ms lookahead we use the right context configuration of , when evaluating with 1.2s lookahead we use and for 2.4 sec look ahead we use the configuration of .

Table 2 shows baselines without constrained alignment training. We can see that Y-architecture with a high latency branch of 2.4 sec is very close to the full attention model performance. The same model in low latency mode has much better accuracy than our best streaming model with no right context. We also show the delay in word alignment for all the model evaluations. Since the low latency branch of Y-model2 is still delaying the output by 767 msec, it can get much better performance as it still looks ahead 240 msec + 767 msec by delaying the words. Another interesting observation is that 2.4 sec lookahead mode for Y model also delays the word alignments, although the full attention model does not delay. This is expected since delaying words helps low latency mode and does not affect high latency mode.

| Model | Lookahead | WER | Alignment Delay |

| Full context model | 34 sec | 4.8 | 60msec |

| Left context model | 240 msec | 6.1 | 982msec |

| Y model1 | 240msec | 6.1 | 883msec |

| 1.2sec | 5.2 | 780msec | |

| 2.4sec | 5.1 | 764msec | |

| Y model2 | 240msec | 5.3 | 767msec |

| 1.2sec | 5.0 | - | |

| 2.4sec | 5.0 | 742msec |

Table 3 shows the results of Y-model with constrained RNN-T loss, we can see that the words are now predicted much earlier, but this leads to degradation in quality of low latency branch. The quality for the higher latency mode is still same since it is not affected by word delays much.

| Model | Lookahead | WER | Alignment Delay |

|---|---|---|---|

| Y model2 | 240msec | 6.5 | 119msec |

| 2.4sec | 4.9 | 74msec |

The alignment delay is defined as the mean word alignment difference between reference non-streaming model and the constrained alignment Y model:

| (4) |

where is an alignment time for the word from the reference model, is an alignment time for the word from the Y model and N is the total number of words. For the low-latency mode of Y model, we used extra 4 right context at the last layer to reduce WER loss. Moreover, there is 4 output delay applied to Y model. Therefore, besides alignment delay, Y model has extra 240 msec for the low latency mode.

Table 3 shows constrained alignment model. Comparing with unconstrained Y model 2 at Table 2, alignment delay significantly improved: 767 msec to 119 msec and 742 msec to 74 msec for low and high latency modes, respectively. Moreover, constrained alignment high-latency mode did not show any WER loss because the high-latency mode already has enough right context and restricting its prediction delay did not hurt its performance. However, for the low-latency mode, WER degraded due to the reduced look-ahead frames.

For Y model, more accurate high-latency mode always comes to correct any error from the low-latency mode. Therefore, reducing alignment delay is more important for the low-latency mode, while maintaining high quality performance is the main concern for the high latency mode. The constrained alignment training is well suited for this.

4.4 Inference Benchmarks

In Figure3, we benchmark the time taken to encode 100 seconds audio with different modes on a single TPU [11] core with respect to the number of time steps the encoders run at a time. We can see that the inference speed is faster when we encode more time steps at a single inference run for all the modes because it allows better parallelization. We can also see that training mode is faster than query slicing mode, and batch step mode is the slowest among them because of extra overheads for handling the query block, and states. In high latency scenarios, where the encoder encodes 120 frames at a time, the encoding times are even faster. It takes 0.3 second, 0.6 second, and 1.8 seconds to encode 100 seconds audio with training mode, query slicing mode and batch step mode respectively.

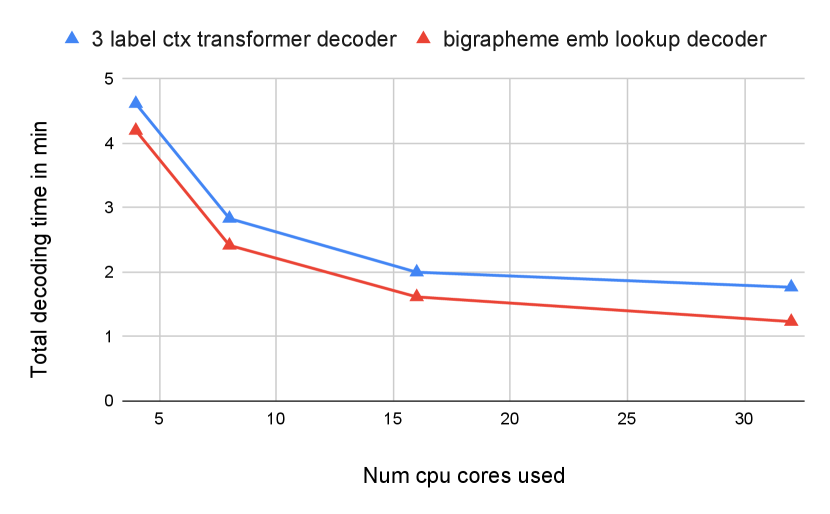

In Figure.4, we benchmark query slicing inference mode on desktop CPU for a 36 minutes long audio. We can see that this audio can be recognized in less than 3 minutes with 8 CPU cores (i.e. less than 8% real time factor). The recognition time can be further improved with a bigrapheme embedding lookup decoder with slight WER regression.

5 Conclusions

We describe a method to train a Transformer-Transducer model that allows training a single model that can decode speech in both low latency and high latency modes. We also propose Y-model trained with variable right context at penultimate layers as an efficient solution for unifying low-latency and high-latency decoding modes. The low latency mode can be used for streaming recognition results while the final recognition results are obtained from high-latency mode. We show that with a small future audio context of 2.4 sec, we can get very similar accuracy to a model with full audio context. We also show that the extra latency at the end of decoding from high latency mode can be made very small with parallel encoding of audio frames and decoder optimizations.

References

- [1] M. Schuster and K. K. Paliwal, “Bidirectional recurrent neural networks,” IEEE Transactions on Signal Processing, vol. 45, no. 11, pp. 2673–2681, 1997.

- [2] Alex Graves, Santiago Fernández, and Jürgen Schmidhuber, “Bidirectional lstm networks for improved phoneme classification and recognition,” in Artificial Neural Networks: Formal Models and Their Applications – ICANN 2005, Włodzisław Duch, Janusz Kacprzyk, Erkki Oja, and Sławomir Zadrożny, Eds., Berlin, Heidelberg, 2005, pp. 799–804, Springer Berlin Heidelberg.

- [3] H. Sak, A. Senior, K. Rao, O. İrsoy, A. Graves, F. Beaufays, and J. Schalkwyk, “Learning acoustic frame labeling for speech recognition with recurrent neural networks,” in 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2015, pp. 4280–4284.

- [4] Qian Zhang, Han Lu, Hasim Sak, Anshuman Tripathi, Erik McDermott, Stephen Koo, and Shankar Kumar, “Transformer transducer: A streamable speech recognition model with transformer encoders and rnn-t loss,” 2020.

- [5] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Lukasz Kaiser, and Illia Polosukhin, “Attention is all you need,” in Advances in neural information processing systems, 2017, pp. 5998–6008.

- [6] Zihang Dai, Zhilin Yang, Yiming Yang, William W Cohen, Jaime Carbonell, Quoc V Le, and Ruslan Salakhutdinov, “Transformer-xl: Attentive language models beyond a fixed-length context,” in Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, 2019, p. 2978–2988.

- [7] Alex Graves, “Sequence transduction with recurrent neural networks,” in Proceedings of the 29th International Conference on Machine Learning, 2012.

- [8] A. Senior, H. Sak, F. de Chaumont Quitry, T. Sainath, and K. Rao, “Acoustic modelling with cd-ctc-smbr lstm rnns,” in 2015 IEEE Workshop on Automatic Speech Recognition and Understanding (ASRU), 2015, pp. 604–609.

- [9] M. Ghodsi, X. Liu, J. Apfel, R. Cabrera, and E. Weinstein, “Rnn-transducer with stateless prediction network,” in ICASSP 2020 - 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2020, pp. 7049–7053.

- [10] Daniel S Park, William Chan, Yu Zhang, Chung-Cheng Chiu, Barret Zoph, Ekin D Cubuk, and Quoc V Le, “Specaugment: A simple data augmentation method for automatic speech recognition,” arXiv preprint arXiv:1904.08779, 2019.

- [11] Jouppi et al, “In-datacenter performance analysis of a tensor processing unit,” in Proceedings of the 44th Annual International Symposium on Computer Architecture, New York, NY, USA, 2017, ISCA ’17, p. 1–12, Association for Computing Machinery.