Transcending Language Boundaries: Harnessing LLMs for Low-Resource Language Translation

Abstract

Large Language Models (LLMs) have demonstrated remarkable success across a wide range of tasks and domains. However, their performance in low-resource language translation, particularly when translating into these languages, remains underexplored. This gap poses significant challenges, as linguistic barriers hinder the cultural preservation and development of minority communities. To address this issue, this paper introduces a novel retrieval-based method that enhances translation quality for low-resource languages by focusing on key terms, which involves translating keywords and retrieving corresponding examples from existing data. To evaluate the effectiveness of this method, we conducted experiments translating from English into three low-resource languages: Cherokee, a critically endangered indigenous language of North America; Tibetan, a historically and culturally significant language in Asia; and Manchu, a language with few remaining speakers. Our comparison with the zero-shot performance of GPT-4o and LLaMA 3.1 405B, highlights the significant challenges these models face when translating into low-resource languages. In contrast, our retrieval-based method shows promise in improving both word-level accuracy and overall semantic understanding by leveraging existing resources more effectively.

1 Introduction

Low-resource languages, often spoken by small and marginalized communities, face critical challenges in preservation, communication, and cultural transmission. Many of these languages lack extensive written documentation or digital resources, which hinders their use in critical domains like healthcare, education, and public services. The absence of adequate linguistic resources not only threatens the survival of these languages but also creates barriers to services, often leaving native speakers disconnected from modern applications and opportunities. In healthcare, for example, the inability to communicate in one’s native language can lead to misunderstandings between patients and medical professionals, potentially impacting the quality of care and patient outcomes. The same challenges apply to legal, educational, and government services, where effective communication is essential. For these communities, access to reliable and accurate translations in their native language is crucial.

Addressing this problem with traditional methods of machine translation has proven challenging[43]. Historically, machine translation has been dominated by well-resourced languages like English, French, and Chinese, with significant advancements in translation quality being driven by the availability of large, high-quality bilingual corpora. However, low-resource languages often lack parallel text corpora, limiting the effectiveness of rule-based and statistical approaches. As a result, machine translation for low-resource languages has lagged behind, exacerbating the digital divide for these communities.

In recent years, the development of large language models (LLMs) has opened up new possibilities for low-resource language translation. Unlike traditional methods, LLMs benefit from vast pretraining across multiple languages, enabling them to generate coherent text even in languages for which little data exists. The transformer-based architecture that underpins these models, particularly models like GPT-4 [1] and LLaMA [14], has revolutionized the field of NLP by allowing parallel processing and improved contextual understanding. These models have demonstrated strong performance in many tasks, including translation, question-answering, and text generation, with emergent abilities that allow them to handle languages with scarce training data.

The evolution of natural language processing from early techniques such as word embeddings [52] to current models highlights how far the field has come. The development of word embeddings in the early 2010s allowed words to be represented as dense vectors, capturing their semantic relationships. This led to the rise of sequence-to-sequence models [64], which transformed machine translation by enabling models to map input sequences (such as sentences in one language) to output sequences (sentences in another language) with a higher degree of fluency and accuracy. The introduction of the Transformer architecture [69] marked a turning point in the field, with its attention mechanisms enabling models to process entire sentences at once, rather than word by word, resulting in significant improvements in translation tasks.

The field of machine translation has evolved from rule-based systems to statistical machine translation (SMT) [29], which relies on probabilistic models to generate translations based on statistical patterns in bilingual text. SMT systems dominated the field in the 1990s and early 2000s, but they required large volumes of parallel text to function effectively. Neural machine translation (NMT) [64, 2] soon emerged, leveraging deep learning and sequence-to-sequence models to generate more fluent and accurate translations. NMT marked a significant improvement over SMT by allowing for better context management across long sequences of text. Transformer-based models such as MarianMT [27] and OpenNMT [28] had set impressive standards in machine translation, particularly for high-resource languages. Generative LLMs have further pushed the boundaries of flexibility and knowledge representation, surpassing previous models in their ability to handle diverse tasks with minimal task-specific training. LLMs like GPT-4 [1] and LLaMA [14] leverage massive datasets across numerous languages, allowing them to generate human-like text across diverse domains and tasks, including translation.

Despite these advancements, generative LLMs still face significant challenges when applied to low-resource languages. One key issue is that these languages are often severely underrepresented in the pretraining data, leading to poor generalization when translating between high-resource and low-resource languages. Even with methods such as zero-shot and few-shot learning, generative LLMs may produce translations that are inaccurate or nonsensical due to limited exposure to the linguistic nuances of low-resource languages. Hallucination, where the model generates information that does not exist in the source text, is particularly problematic in this context, as it undermines the reliability of translations for critical domains like healthcare, education, and public services [25].

One promising approach to improving translation for low-resource languages is Retrieval-Augmented Generation (RAG) [15]. By integrating external knowledge retrieval with language generation, RAG enhances the model’s ability to provide accurate and context-aware translations. This hybrid framework allows the model to fetch relevant data during the translation process, thereby addressing limitations such as outdated or incomplete internal knowledge. RAG can be particularly beneficial for low-resource languages, as it allows the model to compensate for the scarcity of training data by pulling information from related high-resource languages or domain-specific corpora.

In this paper, we explore the performance of two cutting-edge models, GPT-4o and LLaMA 3.1, in translating from a high-resource language (English) into Cherokee, a critically endangered Native American language. We evaluate these models using both automatic evaluation metrics, such as BLEU [55], ROUGE [37], and BERTScore [79], and human expert assessments to gauge the quality of the translations. Our goal is to assess how well these models generalize to languages with limited training data and to identify strategies, such as keyword-based retrieval, that can further improve translation quality for low-resource languages.

In the broader context, improving LLM translation for low-resource languages can have far-reaching implications. It can not only help bridge the communication gap in critical domains like healthcare but also contribute to the preservation and revitalization of endangered languages. By making low-resource languages more accessible and usable in digital environments, we can help uplift communities and ensure that these languages continue to thrive in the modern world.

2 Related Work

2.1 Large Language Models

LLMs have revolutionized NLP, with applications across diverse fields such as education, healthcare, robotics, etc [32, 63, 30, 73, 40, 31, 34, 85]. The foundation of LLMs lies in the transformer architecture introduced by Vaswani et al. [69]. With its self-attention mechanism, the transformer effectively handles long-range dependencies, surpassing earlier models like Recurrent Neural Networks (RNNs) [6] and Long Short-Term Memory (LSTM) networks [18], which struggled with processing long sequences and parallelization. Transformers have achieved state-of-the-art (SOTA) performance on tasks such as machine translation. The BERT model [13], developed from Transformers, further enhanced the efficiency and scalability of NLP models through self-supervised pretraining.

More recently, generative language models, particularly the GPT series [58, 4], were the first to demonstrate zero-shot and few-shot in-context learning abilities without being finetuned on specific downstream tasks. These decoder-only models, pretrained on massive datasets from diverse sources, integrate a wide range of world knowledge, enabling efficient autoregressive generation with emergent abilities and scaling to larger and more powerful models. These factors are key to today’s LLMs. InstructGPT [54] refined the framework by incorporating reinforcement learning from human feedback (RLHF) [7], aligning model outputs more closely with human preferences.

Building on these advancements, OpenAI released ChatGPT in 2022, marking a new milestone in LLM development. Its large-scale pretraining and RLHF mechanisms enabled it to excel in tasks such as question answering, summarization, and dialogue, making it widely popular for its human-like conversational abilities. Subsequent models like GPT-4 and GPT-4V further integrated multimodal capabilities and enhanced reasoning abilities, enabling more complex tasks. Open-source LLMs such as LLaMA [67] and Mistral [26] have also demonstrated competitive performance, pushing LLMs toward more open and widespread use in real-world applications. Indeed, LLMs and related vision-language models have demonstrated significant practical impact in diverse domains [39, 83, 50, 12, 45, 36, 42, 60, 11, 81, 76, 46, 47, 49, 72, 23, 48, 24, 66].

With these advancements, the integration of LLMs into various real-world applications has garnered increasing attention. LLMs’ human-like understanding and reasoning abilities pave the way toward Artificial General Intelligence (AGI) and can facilitate societal development across a wide range of domains [83, 44, 84, 82, 35, 32].

2.2 Machine Translation on Low-Resource Language

Machine Translation for low-resource languages has been a long-standing challenge in the field of NLP. While machine translation for high-resource languages, such as English, Chinese, or Spanish, has seen considerable improvements, particularly with the advent of NMT techniques, low-resource languages have lagged due to the scarcity of large parallel corpora and linguistic resources.

Early efforts in machine translation, particularly for low-resource languages, were based on rule-based and SMT approaches. Rule-based systems relied on linguistic rules crafted by experts and extensive lexicons, but they were often labor-intensive and brittle when applied to complex languages. SMT, introduced in the 1990s, offered a data-driven approach, where models learned translation probabilities from bilingual text corpora. However, SMT required large volumes of parallel data, which was a significant limitation for low-resource languages, and its reliance on phrase-based techniques often failed to capture complex linguistic phenomena like morphology and syntax in underrepresented languages.

With the advent of Neural Machine Translation (NMT), particularly sequence-to-sequence (Seq2Seq) models with attention mechanisms, the field of Machine Translation entered a new era. NMT demonstrated superior performance over SMT in many language pairs by leveraging deep learning to create richer representations of source and target sentences. However, NMT’s effectiveness heavily depends on the availability of large datasets, making it less applicable to low-resource settings. Researchers began exploring techniques to alleviate the data scarcity issue. Transfer learning became a popular method, where models pretrained on high-resource languages are fine-tuned on low-resource languages. This technique allowed low-resource languages to benefit from knowledge gained from related high-resource languages. Multilingual NMT further extended this idea, training models to translate between multiple languages simultaneously. This method improved performance for low-resource languages by allowing them to share representations with high-resource languages, leveraging multilingual data in a shared model architecture.

Data augmentation techniques, such as back-translation, have become widely used for low-resource Machine Translation. In back-translation, a model is used to translate monolingual target language data into the source language, thereby creating pseudo-parallel corpora. This approach significantly boosts training data for low-resource pairs and has been shown to improve translation quality in languages with limited direct parallel data. Additionally, unsupervised machine translation emerged as a promising approach for low-resource languages by eliminating the need for parallel data entirely. Unsupervised Machine Translation leverages monolingual corpora in both the source and target languages and learns to align and translate between them using iterative back-translation and shared latent representations. However, while these methods show promise, they still struggle with low-resource languages that have extremely limited or non-existent monolingual resources.

More recently, LLMs such as GPT-3, GPT-4, and LLaMA have emerged as powerful tools for a range of NLP tasks, including translation. These models, with billions of parameters and trained on extensive multilingual data, have shown promise in addressing low-resource translation through prompt-based methods. By employing prompt engineering, LLMs can perform zero-shot or few-shot translation tasks by conditioning on examples or instructions provided as input prompts. However, their performance on low-resource languages, particularly those with complex linguistic features or limited presence in training data (e.g., indigenous languages like Cherokee), remains an area of active research.

3 Low-Resource Language

3.1 Cherokee

In this paper, we focus on one Native American low-resource language: Cherokee. We also design a LLMs empowered Machine Translation task based on Cherokee. The Cherokee language, known as Tsalagi gawonihisdi in its native form, belongs to the Iroquoian language family. It is primarily spoken by the Cherokee people, whose traditional homelands are in what is now the southeastern United States. Today, as a result of the federal policy to deport Native peoples from their homes east of the Mississippi River in the 1830s, there are two Cherokee nations in Oklahoma (the Cherokee Nation and the United Keetoowah Band of Cherokee Indians) and one in North Carolina (the Eastern Band of Cherokee Indians). Despite centuries of colonial pressures, the Cherokee language has persisted through various revitalization efforts. However, it still remains classified as a critically endangered language according to UNESCO [77].

The historical trajectory of the Cherokee language is inseparable from the history of the Cherokee people. Before European contact, Cherokee was an exclusively oral language [78]. The Cherokee syllabary, created in the early 19th century by Sequoyah (also known as George Guess), revolutionized the language by providing a standardized writing system. The syllabary consists of 85 symbols, each representing a syllable, making it fundamentally different from an alphabetic system like English, where letters represent individual phonemes. Cherokee is a polysynthetic language, meaning that words often consist of multiple morphemes that combine to convey complex meanings. In Cherokee, single words can function as complete sentences in English. When discussing parts of speech, verbs are the most morphologically complex part of the Cherokee language. A typical Cherokee verb consists of several components:

-

•

Pronominal prefix, which indicates the subject or object

-

•

Prepronominal prefix, that provides additional information such as tense, aspect, or negation

-

•

Verb root, the core meaning of the verb

-

•

Aspect markers, indicating whether an action is completed or ongoing

-

•

Directional suffixes, specifying the direction of the action

Nouns in Cherokee however, are typically simpler than verbs. They may consist of a root and optional suffixes. But they do not show the same level of inflection as verbs. Cherokee does not mark nouns for gender or case, although it does differentiate between animate and inanimate entities. Cherokee syntax follows a verb-final word order (SOV). In other words, Cherokee sentences typically have the subject first, followed by the object, and then the verb. However, Cherokee exhibits considerable flexibility in its word order due to the rich morphological marking of verbs. It allows speakers to emphasize different parts of a sentence by rearranging the word order without losing clarity.

The Cherokee language is crucial for preserving cultural identity and heritage, as it carries traditional knowledge, oral stories, and ceremonies that define the Cherokee people. It has historical significance due to the creation of the Cherokee syllabary, which empowered the Cherokee Nation in the colonial era and remains a symbol of resilience. Cherokee contributes to linguistic diversity, offering insights into unique grammatical structures and language typology. It also hold valuable culture knowledge. Efforts to revitalize the language support indigenous sovereignty and ensure intergenerational communication, allowing future generations to retain their cultural roots. We hope by alleviating LLMs, linguists and computer science researchers can contribute to revitalize Cherokee. It is one of the most vital goals for this paper.

3.2 Tibetan

Tibetan, a language with a rich historical legacy, is first attested from the mid-seventh century CE during the Tibetan Empire, which adopted the Chinese style of documenting political events. The earliest form, known as Old Tibetan, is found in the empire’s documents and inscriptions, while Classical Tibetan emerged after a significant language reform and standardization in the ninth century [65].

Morphologically, Tibetan shows both simplicity and complexity. Many basic vocabulary items are monosyllabic, reflecting its Sino-Tibetan roots, but the language also contains a significant number of bimorphemic and disyllabic nouns. Compound nouns and light verb constructions (Noun + Verb combinations) are prevalent, and while they are common in modern Tibetan, they do not form compounds in the same formal sense as compound nouns [3].

Tibetan utilizes tones to differentiate words that are otherwise phonetically identical, although the presence and nature of tones vary by dialect. Classical Tibetan, from which modern Tibetan dialects evolved, was not tonal, and the development of tones is a relatively recent phenomenon in the language’s evolution. Tibetan is primarily a Subject-Object-Verb (SOV) language. It uses postpositions rather than prepositions, and word order plays a critical role in sentence structure. The language has an ergative-absolutive alignment, which is evident in its case marking and verb agreement patterns.

Tibetan verbs are often complex and consist of several components, particularly in classical and literary forms. A typical Tibetan verb consists of the following components:

-

•

Pronominal prefix, which indicates the person (subject or object) involved in the action

-

•

Verb root, the core lexical meaning of the verb

-

•

Tense markers, which indicate past, present, or future actions

-

•

Auxiliary verbs, which modify the meaning of the main verb (e.g., indicating ability or necessity)

-

•

Honorific markers, used to show respect, particularly in formal or religious contexts

These components allow Tibetan verbs to convey detailed grammatical and social information, making them highly versatile and integral to the language’s structure.

3.3 Manchu

Manchu, part of the Tungusic language family, was historically the language of the ruling elite of the Qing Dynasty, which governed China from 1644 to 1912. The Manchu language, spoken by the Manchu people, was used extensively in the imperial court for religious ceremonies, diplomatic and ideological discourse, and for communicating with bannermen, nobility, and military officials [9]. Today, however, Manchu is considered a highly endangered language, with fewer than 100 native speakers left, predominantly among older generations in the remote regions of northeast China [68]. In Heilongjiang, the northernmost province of Manchuria, Manchu was still spoken by a considerable population at the start of the twentieth century. By the century’s end, however, only a few small groups of middle-aged and elderly speakers remained, and the outlook for the language’s continued survival appears bleak[53]. The language faces a severe risk of extinction due to limited transmission across generations and the increasing dominance of Mandarin Chinese in daily life and formal education.

The historical development of Manchu is closely tied to the Manchu people’s rise to power and the subsequent cultural assimilation policies of the Qing Dynasty. Originally a language with no written form, Manchu adopted a script based on Mongolian in the 17th century. This script is vertically oriented and bears no resemblance to Chinese writing systems, making it unique among the major languages of the region. The script consists of syllables represented by various glyphs, creating a logographic system distinct from alphabetic languages like English.

Linguistically, Manchu is an agglutinative language with a Subject-Object-Verb (SOV) word order, meaning that verbs typically come at the end of a sentence. It employs vowel harmony and features complex morphological rules, which allow for a large variety of meanings to be expressed through prefixes and suffixes attached to a verb root. Manchu verbs, much like those in polysynthetic languages such as Cherokee, carry substantial grammatical information, including tense, aspect, mood, and voice. A typical Manchu verb may include multiple morphemes that together convey complex meanings, a feature common to languages in the Tungusic family.

A typical Manchu verb consists of several components:

-

•

Verb root, which provides the main action or meaning of the verb

-

•

Aspect markers, indicating whether an action is completed, ongoing, or repetitive

-

•

Mood markers, which express the speaker’s attitude toward the action (e.g., imperative, interrogative)

-

•

Negative particles, which are added to negate the action of the verb

-

•

Directional suffixes, specifying the direction or orientation of the action (e.g., toward or away from the speaker)

Manchu nouns, however, are simpler than verbs in terms of morphology. Unlike verbs, Manchu nouns do not have extensive inflection, and they are not marked for gender or number. However, Manchu makes a distinction between animate and inanimate entities, which is reflected in both the syntax and verb conjugation rules of the language. Additionally, Manchu nouns are often modified by possessive suffixes and directional markers, allowing for precise expression of spatial and relational concepts.

The Manchu language is critical for understanding the culture and governance of the Qing Dynasty. Thousands of historical documents, including military records, imperial edicts, and literary texts, were written in Manchu. Many of these documents remain untranslated, making proficiency in Manchu essential for historians studying Qing Dynasty China. Manchu also holds significant cultural value for the Manchu people, preserving traditional knowledge, oral histories, and religious practices.

The challenges facing the revitalization of Manchu are similar to those encountered by other endangered languages, including limited resources, a dwindling speaker base, and the overwhelming dominance of global languages like Chinese and English. However, the continued interest from scholars and the rise of community-driven language preservation efforts offer hope for the survival of this historically significant language.

3.4 Techniques Leveraging LLMs for Low-Resource Machine Translation

Fluent Cherokee speakers are few in number, and while there are multiple initiatives to teach the language to Cherokee school children, it is a race against time to preserve the language and the cultural knowledge it contains before another generation passes away. Today, translation from Cherokee to English is a laborious process that involves transliterating the syllabary into the Latin Alphabet, producing a rough English analog, and then working with Cherokee elders to convey the cultural subtleties of the original text. With the recent, rapid technological advances, however, LLMs may aid the process of translation in both directions, with the goal of producing passable texts that fluent speakers can then polish.

3.4.1 Zero-shot and Few-shots Translation

Due to a lack of training data for low-resource languages, most LLMs cannot properly translate low-resource languages. Moreover, it is hard to finetune these LLM without sufficient language resources. Thus, various research efforts focus on improving LLMs to perform translation tasks with minimal or no task-specific training, commonly referred to as zero-shot and few-shot learning.

Zero-shot translation refers to the capability of LLMs to translate between language pairs without having seen explicit examples of these translations during training. For instance, Zhang et al. proposed strategies such as random online back-translation and language-specific modeling, improving zero-shot performance in multilingual NMT by approximately 10 BLEU score [75]. Gao et al. introduced Cross-lingual Consistency Regularization (CrossConST), which enhances zero-shot performance by bridging the representation gap across languages, proving effective in both high-resource and low-resource language settings [17]. Although zero-shot translation can provide good results for certain languages, particularly when leveraging shared linguistic patterns from pretraining on multilingual corpora, it basically leverages well-resourced languages (often English) to facilitate translation between low-resource language pairs. Thus, the performance will degrade when applied to highly underrepresented languages. This highlights the need for additional strategies such as fine-tuning or incorporating domain-specific data.

Compared with no training examples in zero-shot translation, few-shot translation involves exposing a model to a small number of translation examples before performing the task. In this scenario, LLMs can adapt quickly to specific language pairs or domains with minimal data, offering a highly flexible solution for low-resource languages. Zhang et al. explored few-shot learning in machine translation using LLMs [80]. They compared prompting, few-shot learning, and fine-tuning approaches, finding that few-shot learning often outperforms zero-shot prompting when the model is given even a few translation examples. This research highlighted the flexibility and efficiency of LLMs, especially when fine-tuned using the QLoRA method, which allows the models to adapt to machine translation tasks with minimal data and memory usage. The study by Cahyawijaya et al. extensively explores in-context learning (ICL) and cross-lingual in-context learning (X-ICL), focusing on their application to low-resource languages [5]. This work highlights the effectiveness of using in-context examples from high-resource languages to perform translation tasks in low-resource languages. By providing semantically aligned in-context examples, LLMs can bridge the language gap and enhance understanding of low-resource languages without fine-tuning. However, they also point out that label alignment can sometimes degrade performance in low-resource languages, and propose an alternative approach called query alignment, which focuses on aligning the input distribution rather than the labels. Additionally, Guo et al. proposed the Talent method, which utilizes a textbook-based learning approach to improve LLM translation performance on low-resource languages [19]. By creating structured textbooks containing vocabulary lists and syntax patterns, LLMs are guided to absorb this knowledge before the translation task, significantly improving performance in few-shot settings for low-resource languages

3.4.2 Multilingual Pretrained Model

LLMs such as mBART [38], MarianMT [27], and T5 [59] are pretrained on multilingual corpora utilizing techniques such as masked language modeling (MLM) or denoising autoencoding. These approaches facilitate the learning of shared representations across diverse languages. By leveraging this multilingual knowledge, these models demonstrate an ability to transfer learned representations to low-resource languages, thereby enabling effective cross-lingual generation. This transfer learning capability enhances performance in translation and generation tasks, even for languages with limited available training data.

mBART is a sequence-to-sequence model specifically designed for multilingual tasks, with its primary training objective centered on denoising. In this framework, both the source and target language representations are jointly learned within a unified training paradigm. The model is pretrained by corrupting text sequences through a variety of noise functions (such as token masking or permutation) and then learning to reconstruct the original sequence. This approach allows mBART to develop a deep understanding of sentence structure and language semantics across multiple languages. As a result of its pretraining strategy, mBART is capable of learning high-quality cross-lingual representations, which makes it particularly effective for translation tasks and other multilingual generation tasks. The shared encoder-decoder architecture allows for bidirectional transformation between languages, ensuring flexibility in generating text in one language based on input from another. This cross-lingual capability is further enhanced by mBART’s ability to transfer learned knowledge to lower-resource languages, making it a robust tool for multilingual natural language processing tasks, even when large monolingual corpora are not available.

XLM-R (XLM-RoBERTa) [8] is a multilingual model based on the RoBERTa [41] architecture, specifically extended to support over 100 languages. It is pretrained using a cross-lingual masked language modeling (MLM) task, which involves masking a portion of the input tokens and training the model to predict the missing tokens based on the context. This pretraining enables XLM-R to learn universal language representations that capture syntactic and semantic structures across a wide variety of languages. XLM-R inherits BERT’S optimizations and applies them to multilingual settings, allowing the model to handle a diverse set of languages with high proficiency. Its training corpus spans multiple languages so that it benefits from large-scale multilingual data to improve its understanding of both high-resource and low-resource languages.

MarianMT is a multilingual machine translation model designed specifically for efficient and high-quality translation between multiple languages. Unlike many other multilingual models, MarianMT is directly trained for translation tasks rather than relying solely on MLM or denoising pretraining. It is based on a transformer architecture that supports both many-to-one and many-to-many translation tasks. Therefore, even without the explicit source-target language pair specification, it can perform translations between a variety of languages. To achieves the general translation MarianMT utilize the shared vocabulary and token embeddings and fine-tuned on large parallel corpora. By leveraging parallel datasets during fine-tuning, MarianMT becomes adept at producing high-quality translations while maintaining efficient inference speeds. Additionally, MarianMT provides a flexible framework that can be adapted to different domain-specific translations, making it an ideal choice for machine translation applications across various industries. Its architecture ensures that MarianMT is capable of scaling to new languages and domains with minimal reconfiguration, supporting a wide range of multilingual tasks efficiently and effectively.

3.4.3 Fine-tuning LLMs

One effective approach to adapting a pretrained multilingual model while mitigating the risk of overfitting is the use of adapters [21]. Adapters are compact, trainable neural network modules that are inserted between the layers of a pretrained model. These modules allow the model to be fine-tuned for new tasks or languages without requiring the retraining of the entire network, thus preserving the general knowledge captured during pretraining. Adapter fine-tuning is particularly advantageous for low-resource machine translation tasks, where large datasets are not readily available. Adapters enable the model to leverage the knowledge from high-resource languages while being fine-tuned on smaller, task-specific datasets, effectively reducing the risk of overfitting. By adjusting only a small number of parameters, adapters maintain the computational efficiency of the base model, making them suitable for scenarios with limited computational resources.

Among all types of fine-tuning methods, Low-Rank Adaptation (LoRA) fine-tunning [22] is one of the most successful strategies. LoRA is dessigned to reduce the computational overhead and memory requirements when fine-tuning large models. Instead of updating all the parameters of the LLM, LoRA introduces low-rank matrices into the model’s architecture, allowing adaptation of only a subset of parameters while keeping most of the model’s original weights frozen. LoRA proposes an efficient method for fine-tuning large-scale pretrained models by leveraging matrix decomposition. Specifically, LoRA decomposes the weight matrices of the model into lower-dimensional matrices, and during the fine-tuning process, only these lower-dimensional matrices are updated. This significantly reducess the number of trainable parameters, enabling efficient adaptation of the model with minimal data and computational overhead. It also ensures that the fine-tuning process remains computationally efficient without compromising the model’s performance. By updating only the lower-dimensional matrices, the original model’s capacity and knowledge acquired during pretraining, are largely preserved. As a result, LoRA allows for effective task-specific specialization while maintaining the benefits of the pretrained model’s generalization abilities. This methodology is particularly valuable for low-resource machine translation (MT) tasks, where large amounts of parallel data are unavailable. LoRA’s ability to adapt models efficiently makes it ideal for such scenarios, enabling the model to specialize in low-resource language pairs without the need for full-scale retraining.

3.4.4 Back-Translation

To overcome the scarce availability of large parallel corpora researchers have developed back-translation, a widely adopted data augmentation technique that leverages monolingual data to improve translation performance. Back-translation is a semi-supervised learning technique used in NMT to generate additional synthetic parallel data. The process involves training a model to translate from the target language to the source language and using this model to translate monolingual data in the target language back to the source language. This synthetic source-target data pair is then used to augment the original parallel dataset, thus improving the performance of the translation model in the source-to-target direction. LLMs can also leverage back-translation by fine-tuning a model to first generate high-resource language translations. Since evaluating the accuracy and quality of high-resource languages is significantly more feasible compared to low-resource languages, this approach ensures the generation of abundant, high-quality augmented data for low-resource language tasks. Among Back-Translation, several variations of back-translation have been developed to enhance its effectiveness.

The most common and straightforward form of back-translation involves using a single translation model to generate synthetic source sentences from monolingual target data. Previously this model is simple but suffers from overfitting to the synthetic data if not properly balanced with authentic parallel data. However, LLMs serve as a highly effective alternative due to their extensive and versatile knowledge base, which enables them to outperform previous simple model with high accuracy and adaptability.

Iterative back-translation is an extension of the basic technique where the forward and backward models are trained in tandem, improving each other’s performance over successive iterations. It involves several steps:(1) Train an initial forward translation model from source to target language using the available parallel data. (2) Train the reverse model from target to source using the parallel data. (3) Generate synthetic data using the reverse model and retrain the forward model with the augmented dataset. (4) Generate synthetic target sentences using the updated forward model and retrain the reverse model with this new data. This iterative process continues until the model performance converges, leading to better generalization as both models reinforce each other through the generation of progressively higher-quality synthetic data. Fine-tuned LLMs are particularly well-suited for iterative back-translation due to their ability to capture complex linguistic patterns and context across languages. Their extensive pre-trained knowledge allows for nuanced understanding and generation of text, making them ideal for producing accurate and contextually appropriate translations, even in low-resource language settings. Additionally, their adaptability enables continuous improvement through fine-tuning, further enhancing their performance in iterative back-translation tasks.

3.4.5 Retrieval-Augmented Generation

Retrieval-Augmented Generation (RAG) typically involves three key stages: Indexing, Retrieval, and Generation [33, 51]. Indexing aims to transform raw data into a vectorized database. This stage begins by converting external data—ranging from structured to unstructured formats—into a standardized format. An embedding model then encodes the processed data into smaller chunks, which are stored in the vectorized database. Indexing establishes a robust foundation for efficient and precise retrieval. Retrieval involves applying RAG algorithms to search for and expand the prompt. In this stage, the user’s initial query is encoded into an input vector using the same embedding model utilized during Indexing. The system then computes the similarity between the input vector and the stored chunks, selecting the top K most relevant chunks based on their proximity. Generation utilizes a synthetic prompt formed by combining the user’s query with the retrieved documents. This enriched prompt provides the LLM with additional contextual information, which helps mitigate hallucinations or constrain the generated responses. Consequently, the Generation stage enhances the accuracy and reliability of the model’s outputs.

Advanced RAG methods have been developed to address the limitations of naive RAG by optimizing various components, including query prompts [51, 57, 16], indexing structures, similarity calculations, and prompt integration. Additionally, some advanced approaches leverage retrieval data to enhance training datasets, particularly in low-resource domains.

For instance, [62] retrieves relevant instances and uses large language models (LLMs) to generate new samples that integrate both original and retrieved data, thereby addressing data scarcity in specialized domains. [56] involves utilizing retriever models to identify relevant samples and expand the set of positive examples within privacy policy question-answering datasets, enhancing the training process. However, these methods often rely on external data, which poses challenges when the data is sensitive and cannot be accessed due to privacy concerns, or when computational resources are limited.

3.5 LLMs Empowered Machine Translation Task

3.5.1 Methodology

We implement a RAG LLM low resource language translator by combining retrieval-based techniques with LLMs to ensure accurate and context-aware translations. The overall architecture is shown in Figure 1. The system utilizes dictionary entries, which are indexed through two complementary approaches: keyword-to-document mappings and vector embeddings. Key-to-document mappings in systems refer to a process where keywords are linked directly to the documents or data entries that contain or are relevant to those keywords. The keyword retriever will retrieve corresponding documents according to the key-to-document mapping, if the keyword is inside our storage. The vector embedding indexing process organizes raw linguistic data into retrievable units by associating words with dictionary definitions and using text-embedding-ada-002 model to encode the text into high-dimensional vectors that capture semantic relationships beyond mere surface forms.

Our model operates with this dual retrieval mechanism. First, a keyword-based index allows for fast and efficient lookup by identifying exact matches between query terms and dictionary entries. This method ensures that direct translations of words or phrases are retrieved whenever possible. Second, in cases where no exact keyword matches are found, the system employs a vector-based retrieval method using cosine similarity. This approach encodes the query into a semantic vector and calculates similarity scores between the query vector and all indexed vectors. The top K most similar entries are then retrieved, ensuring that semantically related content such as synonyms or conceptually similar expressions are identified, even in the absence of exact matches.

After retrieval, the system uses a GPT-based model to synthesize the retrieved content into a coherent and fluent translation. This generative step integrates the context from retrieved entries to resolve ambiguities and produce a natural, human-readable translation. By balancing lightweight keyword-based retrieval with deeper semantic understanding through vector-based retrieval, this hybrid approach enhances both the accuracy and relevance of the translation output. The RAG architecture thus combines precise keyword matching with the flexibility of semantic search, making it particularly effective for low-resource languages like Cherokee.

3.5.2 Testing Data

Given the extreme scarcity of parallel translations between Cherokee and English literary works, this experiment utilizes three Cherokee literary sources that have available translations: the New Testament, Peter Parley’s Geography, and The Pilgrim’s Progress. These texts provide a rare opportunity to assess translation performance, as they offer both Cherokee and English versions for comparative analysis.

Cherokee New Testament is the largest single book written in Cherokee. This translation of the New Testament of the Bible into the Cherokee language, using the Cherokee syllabary, was completed in the early 19th century. It contains the verse written in Cherokee using Cherokee syllabary and Cherokee Latin transliteration, which provides the pronunciation of the Cherokee syllabary text using Latin letters. The translation was spearheaded by Samuel Worcester, a missionary, and Elias Boudinot, a prominent Cherokee, with significant involvement from Cherokee speakers. The Cherokee New Testament was printed and widely distributed by the Cherokee Nation and became a vital text in preserving the Cherokee language and literacy among Cherokee people. Given its importance in Cherokee history and its length compared to other works in Cherokee, it is considered one of the most substantial works written in the language.

Peter Parley’s Geography, written by Samuel Griswold Goodrich under the pseudonym Peter Parley, was a popular 19th-century educational book designed to teach geography to young readers. First published in the early 1820s, the book introduces geographical concepts in an accessible and engaging manner, using illustrations, maps, and simple language to explain the physical world, cultures, and places beyond the reader’s immediate surroundings. Notably, Peter Parley’s Geography was one of the earliest geography textbooks aimed at children, emphasizing both geographical knowledge and moral lessons. Its influence was significant during the 19th century, and its translation into Cherokee exemplifies the efforts to provide educational material to indigenous populations, reflecting a period of educational reform and expansion.

The Pilgrim’s Progress is one of the most significant works in English literature and Christian allegory written by John Bunyan and first published in 1678. The book narrates the journey of its protagonist, Christian, from his home in the ”City of Destruction” to the ”Celestial City,” symbolizing the believer’s path to salvation. Divided into two parts, the story combines spiritual lessons with vivid characters and settings, reflecting the struggles, temptations, and rewards of living a faithful Christian life.

We conducted the translation for a transcript from the Harris-Trump presidential debate to assess the performance of our model in the context of news and current events [20]. This evaluation aimed to determine how well the system could handle complex, real-world content and provide timely information to Cherokee speakers. The debate transcript was chosen for its rich linguistic features, including political terminology, colloquial expressions, and nuanced argumentation.

We also utilize the New Testament to evaluate our model on Tibetan, a language of significant historical and cultural importance in Asia, and Manchu, a critically endangered language with less than 100 speakers[74]. Due to a lack of resources, the Bible is one of the only available materials across Cherokee, Manchu, and Tibetan languages. We test Tibetan with This evaluation seeks to determine whether our model is capable of effectively handling other low-resource languages, further validating its generalizability and performance beyond Cherokee. By extending our analysis to these languages, we aim to explore the model’s robustness in addressing the unique challenges posed by different low-resource linguistic contexts.

3.6 Test Design and Evaluation

To evaluate the performance and capabilities of our model and original LLMs in low-resource machine translation, we conducted an experiment comparing our model with GPT-4o and LLaMA 3.1 405B models. The objective of the experiment was to assess the translation abilities of these models in generating low-resource languages, focusing primarily on Cherokee translations from English source sentences. Specifically, we randomly selected text from three test datasets for Cherokee and New Testament for Tibetan and Manchu with comparable sentence lengths. Each sentence was input sequentially into each model, which was tasked with translating the sentences into low-resource languages. A comprehensive evaluation framework was then applied to assess and analyze the performance of these models, allowing for a detailed comparison and discussion of their effectiveness in low-resource language generation. We employed a combination of fundamental evaluation metrics, including ROUGE and BLEU, alongside advanced semantic evaluation techniques such as BERTScore and human expert assessment. This multi-faceted evaluation approach allows for both quantitative and qualitative analysis of the generated translations, providing a comprehensive assessment of the models’ performance in terms of both lexical accuracy and semantic fidelity.

ROUGE (Recall-Oriented Understudy for Gisting Evaluation) is primarily used to evaluate the similarity between the machine-generated translations and reference translations by measuring overlapping n-grams, word sequences, and word pairs. It is commonly used in summarization tasks but also provides insights into the fluency and accuracy of translations.

BLEU (Bilingual Evaluation Understudy) is another widely adopted metric in machine translation, which calculates the precision of n-grams between the generated translations and reference translations. It provides a score that reflects how many words or phrases in the generated output are found in the reference, making it a valuable metric for assessing the fidelity of translation.

In addition to these surface-level metrics, we applied BERTScore, which leverages contextual embeddings from pretrained models to capture semantic similarity between the generated and reference translations. Unlike ROUGE and BLEU, which focus on exact n-gram matches, BERTScore evaluates the meaning and context, making it particularly useful for low-resource language tasks where exact matches may not fully capture translation quality.

In addition to automated metrics, we incorporated human expert evaluation to provide a qualitative assessment of the generated translations. The expert evaluated the translations based on three key dimensions: fluency, grammaticality, and faithfulness to the source text. Each of these aspects is scored on a scale from 0 to 5, with higher scores indicating better performance. Fluency measures the naturalness and ease of reading in the target language; grammaticality assesses adherence to the syntactic rules of Cherokee. Faithfulness evaluates how accurately the translation conveys the meaning of the original English sentence. To ensure consistency with other scoring metrics, we will standardize the expert evaluation scores to a range of 0 to 1. This will be achieved by summing all the scores across fluency, grammaticality, and faithfulness, then dividing by the maximum possible total score. This approach provides a consistent scale, allowing for more straightforward comparisons across translations. The formula for the normalized human evaluation score is shown as following:

| (1) |

In this formula, is the number of sentences, and the sum of full grade of fluency, grammaticality and faithfulness, 5 point each, is in total. Fluencyi, Grammaticalityi, and Faithfulnessi are the expert scores for sentence in each respective dimension.

4 Experiment Results

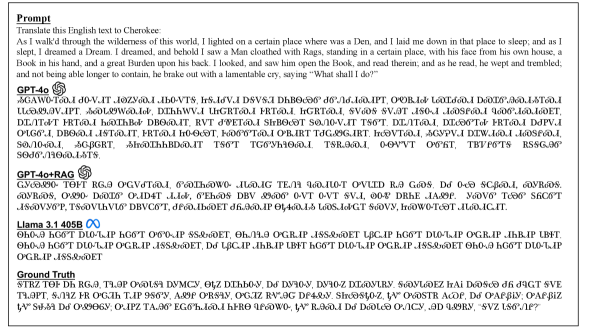

We show our evaluation results in table 1. Figure 2 to 4 show three examples applying LLMs for each Cherokee literary source. We then exhibit translation of 2024 president debate for both presidential candidates in figure 5 and 6. Finally, figure 7 and 8 include one test example for Tibetan and Manchu translation. Following [61], we use the transliteration of Manchu to the Latin alphabet when generating the Manchu translation. The complete evaluation examples and more details are shown in the appendix A.1.

| Language | Model | BLEU | ROUGE-L |

|

|

|

Human Evaluation | ||||||

| Llama 3.1 405B | 0.0 | 0.0 | 0.931 | 0.927 | 0.929 | 0.0 | |||||||

| Cherokee | GPT-4o | 0.003 | 0.0 | 0.938 | 0.938 | 0.938 | 0.0 | ||||||

| GPT-4o + RAG | 0.115 | 0.117 | 0.962 | 0.964 | 0.963 | 0.0 | |||||||

| Llama 3.1 405B | 0.0 | 0.0 | 0.879 | 0.859 | 0.869 | 0.067 | |||||||

| Tibetan | GPT-4o | 0.0 | 0.0 | 0.833 | 0.851 | 0.842 | 0.147 | ||||||

| GPT-4o + RAG | 0.108 | 0.123 | 0.802 | 0.810 | 0.806 | 0.293 | |||||||

| Llama 3.1 405B | 0.0 | 0.104 | 0.693 | 0.663 | 0.678 | 0.040 | |||||||

| Manchu | GPT-4o | 0.0 | 0.125 | 0.726 | 0.703 | 0.714 | 0.173 | ||||||

| GPT-4o + RAG | 0.077 | 0.188 | 0.716 | 0.696 | 0.706 | 0.333 |

| Model |

|

|

|

||||||||||||||||

| Languages | Sentence Index |

|

|

|

|

|

|

|

|

|

|||||||||

| 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ||||||||||

| 9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ||||||||||

| 10 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ||||||||||

| 11 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ||||||||||

| Cherokee | 12 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |||||||||

| 7 | 1 | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 2 | ||||||||||

| 26 | 0 | 1 | 0 | 0 | 0 | 1 | 1 | 1 | 2 | ||||||||||

| 27 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 1 | 1 | ||||||||||

| 28 | 0 | 0 | 1 | 0 | 0 | 1 | 1 | 2 | 2 | ||||||||||

| Tibetan | 29 | 0 | 0 | 1 | 1 | 2 | 1 | 2 | 2 | 3 | |||||||||

| 8 | 0 | 0 | 1 | 0 | 1 | 1 | 1 | 1 | 1 | ||||||||||

| 30 | 0 | 0 | 1 | 0 | 0 | 1 | 1 | 2 | 2 | ||||||||||

| 31 | 0 | 0 | 0 | 1 | 0 | 1 | 2 | 2 | 3 | ||||||||||

| 32 | 0 | 0 | 0 | 2 | 1 | 1 | 3 | 2 | 2 | ||||||||||

| Manchu | 33 | 0 | 1 | 0 | 2 | 1 | 1 | 3 | 2 | 2 |

Both LLaMA 3.1 405B and GPT-4o exhibit notably low scores in traditional machine translation evaluation metrics such as BLEU and ROUGE-L. These results indicate a near-complete lack of overlap between the generated translations and the reference texts in terms of both word n-gram precision measured by BLEU and sentence-level structure measured by ROUGE-L. Such results suggest that both models struggle significantly with the low-resource language translation task when evaluated using metrics that prioritize surface-level textual similarity. The zero scores in ROUGE-L across both models highlight their inability to produce outputs that match the structural or syntactic form of the reference translations, due to the inherent challenges associated with the limited availability of Cherokee training data and the unique linguistic structure of Cherokee compared to high-resource languages.

However, the GPT-4o+RAG model demonstrates a significant improvement over the standalone models in terms of BLEU and ROUGE-L scores. This suggests that the integration of RAG provides the model with additional contextual information that helps align its output more closely with the reference translations in terms of both lexical overlap and syntactic structure. The higher scores indicate that the retrieval component allows the model to generate translations that have better word and phrase alignment with the reference texts, addressing some of the structural limitations seen in the other models.

Despite the poor performance in BLEU and ROUGE-L for LLaMA 3.1 405B and GPT-4o, all models demonstrate significantly higher scores when evaluated using BERTScore, which focuses on semantic similarity rather than exact word matches. GPT-4o+RAG outperforms both LLaMA 3.1 405B and GPT-4o across all BERTScore metrics. These high values indicate that the GPT-4o+RAG model is the most effective in retaining semantic meaning in its translations. Its retrieval mechanism not only enhances the model’s lexical alignment but also strengthens its ability to preserve the semantic essence of the source text.

The human evaluation results reveal the models’ ability to produce translations that align with human expectations of quality. For LLaMA 3.1 405B and GPT-4o models, human evaluation scores remain relative low across all three languages. Likewise, the GPT-4o + RAG model achieves the highest human evaluation scores in both Tibetan and Manchu, indicating a notable improvement in aligning translations with human expectations compared to the standalone models. However, all three models receive a score of 0 in Cherokee translation, even for GPT-4o + RAG model, which otherwise performs best on BLEU, ROUGE-L, and BERTScore metrics. This result suggests that the model’s output may still lack cultural and contextual fidelity essential in low-resource language translation of Cherokee Language.

The low human evaluation scores for Cherokee translations can be partly explained by the relatively recent development of a written system for Cherokee compared to the long textual histories of Manchu and Tibetan. Cherokee’s writing system was only created in the early 19th century by Sequoyah[10], making it a young written language with fewer established conventions and limited historical literature. This lack of reference material restricts the models’ ability to learn from and align with standard expressions and syntactic norms. These findings indicate that while RAG improves the surface-level and semantic alignment, further advancements are needed to capture the nuanced syntactic and cultural elements that human evaluators prioritize in translation quality, emphasizing the value of integrating culturally-aware contextual knowledge into the translation models.

In summary, while the LLaMA 3.1 405B and GPT-4o models show considerable limitations in their ability to translate Cherokee with lexical and structural accuracy, the GPT-4o+RAG model provides a more promising approach by leveraging external information to improve translation quality. The significant disparity between BLEU/ROUGE-L and BERTScore for all models suggests that these models, even with RAG integration, still face challenges in achieving lexical and syntactic fidelity to the reference translations. Nonetheless, the relatively high BERTScore values for GPT-4o+RAG imply that our approach is better suited for low-resource translation tasks, offering a more balanced solution that captures both structural and semantic nuances more effectively.

5 Discussions & Conclusion

Preserving minority languages, such as Cherokee, is important for maintaining cultural heritage and linguistic diversity. However, in the digital age, these languages often face challenges due to a lack of resources and training data, which exacerbates the risk of their decline. To address this, AI models that generate high-quality translations could play a crucial role in sustaining minority languages [71, 70]. This digital integration would not only support language preservation but also could revitalize interest among younger generations, ensuring these unique linguistic perspectives continue to enrich our global discourse.

In this study, we evaluated the performance of our retrieval-based model alongside GPT-4o and Llama 3.1 405B in translating English into Cherokee, Tibetan, and Manchu. Our findings demonstrated a significant improvement in translation accuracy with our method compared to GPT-4o and Llama 3.1 405B, underscoring the effectiveness of our approach. AI models can effectively translate low-resource languages, they could assist in revitalization efforts by providing learners with more reliable language materials and tools for practice. Furthermore, integrating AI-driven translation into language learning programs could help bridge gaps in resources, supporting both native speakers and new learners. This could lead to more sustainable language preservation, helping minority languages remain active in both spoken and written forms. Overall, this study demonstrates the potential of AI to bridge the gap between traditional knowledge and modern technology in the preservation of minority languages. We hope this study can inspire further research and innovation in language revitalization efforts, and help minority languages continue to thrive, preserving unique worldviews and cultural perspectives for future generations.

References

- [1] Achiam, J., Adler, S., Agarwal, S., Ahmad, L., Akkaya, I., Aleman, F.L., Almeida, D., Altenschmidt, J., Altman, S., Anadkat, S., et al.: Gpt-4 technical report. arXiv preprint arXiv:2303.08774 (2023)

- [2] Bahdanau, D.: Neural machine translation by jointly learning to align and translate. arXiv preprint arXiv:1409.0473 (2014)

- [3] Beyer, S.V.: The classical Tibetan language. SUNY Press (1992)

- [4] Brown, T., Mann, B., Ryder, N., Subbiah, M., Kaplan, J.D., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., Askell, A., et al.: Language models are few-shot learners. Advances in neural information processing systems 33, 1877–1901 (2020)

- [5] Cahyawijaya, S., Lovenia, H., Fung, P.: Llms are few-shot in-context low-resource language learners. In: Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers). pp. 405–433 (2024). https://doi.org/10.18653/v1/2024.naacl-long.24, https://doi.org/10.18653/v1/2024.naacl-long.24

- [6] Cho, K.: Learning phrase representations using rnn encoder-decoder for statistical machine translation. arXiv preprint arXiv:1406.1078 (2014)

- [7] Christiano, P.F., Leike, J., Brown, T., Martic, M., Legg, S., Amodei, D.: Deep reinforcement learning from human preferences. Advances in neural information processing systems 30 (2017)

- [8] Conneau, A.: Unsupervised cross-lingual representation learning at scale. arXiv preprint arXiv:1911.02116 (2019)

- [9] Crossley, P.K., Rawski, E.S.: A profile of the manchu language in ch’ing history. Harvard Journal of Asiatic Studies 53(1), 63–102 (1993). https://doi.org/10.2307/2719468

- [10] Cushman, E.: ”we’re taking the genius of sequoyah into this century”: The cherokee syllabary, peoplehood, and perseverance. Wíčazo Ša Review 26(1), 72–75 (2011). https://doi.org/10.5749/wicazosareview.26.1.0067, jSTOR 10.5749/wicazosareview.26.1.0067

- [11] Dai, H., Li, Y., Liu, Z., Zhao, L., Wu, Z., Song, S., Shen, Y., Zhu, D., Li, X., Li, S., et al.: Ad-autogpt: An autonomous gpt for alzheimer’s disease infodemiology. arXiv preprint arXiv:2306.10095 (2023)

- [12] Dai, H., Liu, Z., Liao, W., Huang, X., Wu, Z., Zhao, L., Liu, W., Liu, N., Li, S., Zhu, D., et al.: Chataug: Leveraging chatgpt for text data augmentation. arXiv preprint arXiv:2302.13007 (2023)

- [13] Devlin, J.: Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805 (2018)

- [14] Dubey, A., Jauhri, A., Pandey, A., Kadian, A., Al-Dahle, A., Letman, A., Mathur, A., Schelten, A., Yang, A., Fan, A., et al.: The llama 3 herd of models. arXiv preprint arXiv:2407.21783 (2024)

- [15] Fan, W., Ding, Y., Ning, L., Wang, S., Li, H., Yin, D., Chua, T.S., Li, Q.: A survey on rag meeting llms: Towards retrieval-augmented large language models. In: Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining. vol. 24, pp. 6491–6501 (2024). https://doi.org/10.1145/3637528.3671470, https://doi.org/10.1145/3637528.3671470

- [16] Gao, L., Ma, X., Lin, J., Callan, J.: Precise zero-shot dense retrieval without relevance labels. arXiv preprint arXiv:2212.10496 (2022)

- [17] Gao, P., Zhang, L., He, Z., Wu, H., Wang, H.: Improving zero-shot multilingual neural machine translation by leveraging cross-lingual consistency regularization. In: Findings of the Association for Computational Linguistics: ACL 2023. pp. 12103–12119 (2023). https://doi.org/10.18653/v1/2023.findings-acl.766, https://doi.org/10.18653/v1/2023.findings-acl.766

- [18] Graves, A., Graves, A.: Long short-term memory. Supervised sequence labelling with recurrent neural networks pp. 37–45 (2012)

- [19] Guo, P., Ren, Y., Hu, Y., Li, Y., Zhang, J., Zhang, X., Huang, H.: Teaching large language models to translate on low-resource languages with textbook prompting. In: Calzolari, N., Kan, M.Y., Hoste, V., Lenci, A., Sakti, S., Xue, N. (eds.) Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024). pp. 15685–15697. ELRA and ICCL, Torino, Italia (May 2024), https://aclanthology.org/2024.lrec-main.1362

- [20] Hoffman, R.: Read: Harris-trump presidential debate transcript (2024), https://abcnews.go.com/amp/Politics/harris-trump-presidential-debate-transcript/story?id=113560542, september 10, 2024

- [21] Houlsby, N., Giurgiu, A., Jastrzebski, S., Morrone, B., De Laroussilhe, Q., Gesmundo, A., Attariyan, M., Gelly, S.: Parameter-efficient transfer learning for nlp. In: International conference on machine learning. pp. 2790–2799. PMLR (2019)

- [22] Hu, E.J., Shen, Y., Wallis, P., Allen-Zhu, Z., Li, Y., Wang, S., Wang, L., Chen, W.: Lora: Low-rank adaptation of large language models. arXiv preprint arXiv:2106.09685 (2021)

- [23] Huang, Y., Sun, L., Wang, H., Wu, S., Zhang, Q., Li, Y., Gao, C., Huang, Y., Lyu, W., Zhang, Y., et al.: Position: Trustllm: Trustworthiness in large language models. In: International Conference on Machine Learning. pp. 20166–20270. PMLR (2024)

- [24] Huang, Y., Sun, L., Wang, H., Wu, S., Zhang, Q., Li, Y., Gao, C., Huang, Y., Lyu, W., Zhang, Y., et al.: Trustllm: Trustworthiness in large language models. arXiv preprint arXiv:2401.05561 (2024)

- [25] Jayakody, R., Dias, G.: Performance of recent large language models for a low-resourced language. In: 2024 International Conference on Asian Language Processing (IALP). pp. 162–167. IEEE (2024)

- [26] Jiang, A., Sablayrolles, A., Mensch, A., Bamford, C., Chaplot, D., de las Casas, D., Bressand, F., Lengyel, G., Lample, G., Saulnier, L., et al.: Mistral 7b (2023). arXiv preprint arXiv:2310.06825 (2023)

- [27] Junczys-Dowmunt, M., Grundkiewicz, R., Dwojak, T., Hoang, H., Heafield, K., Neckermann, T., Seide, F., Germann, U., Aji, A.F., Bogoychev, N., et al.: Marian: Fast neural machine translation in c++. arXiv preprint arXiv:1804.00344 (2018)

- [28] Klein, G., Kim, Y., Deng, Y., Senellart, J., Rush, A.M.: Opennmt: Open-source toolkit for neural machine translation. arXiv preprint arXiv:1701.02810 (2017)

- [29] Koehn, P., Och, F.J., Marcu, D.: Statistical phrase-based translation. In: 2003 Conference of the North American Chapter of the Association for Computational Linguistics on Human Langauge Technology (HLT-NAACL 2003). pp. 48–54. Association for Computational Linguistics (2003)

- [30] Latif, E., Mai, G., Nyaaba, M., Wu, X., Liu, N., Lu, G., Li, S., Liu, T., Zhai, X.: Artificial general intelligence (agi) for education. arXiv preprint arXiv:2304.12479 1 (2023)

- [31] Latif, E., Zhou, Y., Guo, S., Gao, Y., Shi, L., Nayaaba, M., Lee, G., Zhang, L., Bewersdorff, A., Fang, L., et al.: A systematic assessment of openai o1-preview for higher order thinking in education. arXiv preprint arXiv:2410.21287 (2024)

- [32] Lee, G.G., Shi, L., Latif, E., Gao, Y., Bewersdorf, A., Nyaaba, M., Guo, S., Wu, Z., Liu, Z., Wang, H., et al.: Multimodality of ai for education: Towards artificial general intelligence. arXiv preprint arXiv:2312.06037 (2023)

- [33] Lewis, P., Perez, E., Piktus, A., Petroni, F., Karpukhin, V., Goyal, N., Küttler, H., Lewis, M., Yih, W.t., Rocktäschel, T., et al.: Retrieval-augmented generation for knowledge-intensive nlp tasks. Advances in Neural Information Processing Systems 33, 9459–9474 (2020)

- [34] Li, X., Zhao, L., Zhang, L., Wu, Z., Liu, Z., Jiang, H., Cao, C., Xu, S., Li, Y., Dai, H., et al.: Artificial general intelligence for medical imaging analysis. IEEE Reviews in Biomedical Engineering (2024)

- [35] Li, Y., Zhao, H., Jiang, H., Pan, Y., Liu, Z., Wu, Z., Shu, P., Tian, J., Yang, T., Xu, S., et al.: Large language models for manufacturing. arXiv preprint arXiv:2410.21418 (2024)

- [36] Liao, W., Liu, Z., Dai, H., Xu, S., Wu, Z., Zhang, Y., Huang, X., Zhu, D., Cai, H., Li, Q., et al.: Differentiating chatgpt-generated and human-written medical texts: quantitative study. JMIR Medical Education 9(1), e48904 (2023)

- [37] Lin, C.Y.: Rouge: A package for automatic evaluation of summaries. In: Text summarization branches out. pp. 74–81 (2004)

- [38] Liu, Y.: Multilingual denoising pre-training for neural machine translation. arXiv preprint arXiv:2001.08210 (2020)

- [39] Liu, Y., Han, T., Ma, S., Zhang, J., Yang, Y., Tian, J., He, H., Li, A., He, M., Liu, Z., et al.: Summary of chatgpt/gpt-4 research and perspective towards the future of large language models. arXiv preprint arXiv:2304.01852 (2023)

- [40] Liu, Y., He, H., Han, T., Zhang, X., Liu, M., Tian, J., Zhang, Y., Wang, J., Gao, X., Zhong, T., et al.: Understanding llms: A comprehensive overview from training to inference. arXiv preprint arXiv:2401.02038 (2024)

- [41] Liu, Y., Ott, M., Goyal, N., Du, J., Joshi, M., Chen, D., Levy, O., Lewis, M., Zettlemoyer, L., Stoyanov, V.: Roberta: A robustly optimized bert pretraining approach. arXiv preprint arXiv:1907.11692 (2019)

- [42] Liu, Z., He, X., Liu, L., Liu, T., Zhai, X.: Context matters: A strategy to pre-train language model for science education. arXiv preprint arXiv:2301.12031 (2023)

- [43] Liu, Z., Li, Y., Cao, Q., Chen, J., Yang, T., Wu, Z., Hale, J., Gibbs, J., Rasheed, K., Liu, N., et al.: Transformation vs tradition: Artificial general intelligence (agi) for arts and humanities. arXiv preprint arXiv:2310.19626 (2023)

- [44] Liu, Z., Li, Y., Cao, Q., Chen, J., Yang, T., Wu, Z., Hale, J., Gibbs, J., Rasheed, K., Liu, N., et al.: Transformation vs tradition: Artificial general intelligence (agi) for arts and humanities. arXiv preprint arXiv:2310.19626 (2023)

- [45] Liu, Z., Li, Y., Shu, P., Zhong, A., Yang, L., Ju, C., Wu, Z., Ma, C., Luo, J., Chen, C., et al.: Radiology-llama2: Best-in-class large language model for radiology. arXiv preprint arXiv:2309.06419 (2023)

- [46] Liu, Z., Wang, P., Li, Y., Holmes, J., Shu, P., Zhang, L., Liu, C., Liu, N., Zhu, D., Li, X., et al.: Radonc-gpt: A large language model for radiation oncology. arXiv preprint arXiv:2309.10160 (2023)

- [47] Liu, Z., Wang, P., Li, Y., Holmes, J.M., Shu, P., Zhang, L., Li, X., Li, Q., Vora, S.A., Patel, S., et al.: Fine-tuning large language models for radiation oncology, a highly specialized healthcare domain. International Journal of Particle Therapy 12, 100428 (2024)

- [48] Liu, Z., Zhang, L., Wu, Z., Yu, X., Cao, C., Dai, H., Liu, N., Liu, J., Liu, W., Li, Q., et al.: Surviving chatgpt in healthcare. Frontiers in Radiology 3, 1224682 (2024)

- [49] Lyu, Y., Wu, Z., Zhang, L., Zhang, J., Li, Y., Ruan, W., Liu, Z., Yu, X., Cao, C., Chen, T., et al.: Gp-gpt: Large language model for gene-phenotype mapping. arXiv preprint arXiv:2409.09825 (2024)

- [50] Ma, C., Wu, Z., Wang, J., Xu, S., Wei, Y., Liu, Z., Zeng, F., Jiang, X., Guo, L., Cai, X., et al.: An iterative optimizing framework for radiology report summarization with chatgpt. IEEE Transactions on Artificial Intelligence (2024)

- [51] Ma, X., Gong, Y., He, P., Zhao, H., Duan, N.: Query rewriting for retrieval-augmented large language models. arXiv preprint arXiv:2305.14283 (2023)

- [52] Mikolov, T.: Efficient estimation of word representations in vector space. arXiv preprint arXiv:1301.3781 (2013)

- [53] Norman, J.: The manchus and their language (presidential address). Journal of the American Oriental Society 123(3), 483 (2003). https://doi.org/10.2307/3217747

- [54] Ouyang, L., Wu, J., Jiang, X., Almeida, D., Wainwright, C., Mishkin, P., Zhang, C., Agarwal, S., Slama, K., Ray, A., et al.: Training language models to follow instructions with human feedback. Advances in neural information processing systems 35, 27730–27744 (2022)

- [55] Papineni, K., Roukos, S., Ward, T., Zhu, W.J.: Bleu: a method for automatic evaluation of machine translation. In: Proceedings of the 40th annual meeting of the Association for Computational Linguistics. pp. 311–318 (2002)

- [56] Parvez, M.R., Chi, J., Ahmad, W.U., Tian, Y., Chang, K.W.: Retrieval enhanced data augmentation for question answering on privacy policies. arXiv preprint arXiv:2204.08952 (2022)

- [57] Peng, W., Li, G., Jiang, Y., Wang, Z., Ou, D., Zeng, X., Xu, D., Xu, T., Chen, E.: Large language model based long-tail query rewriting in taobao search. In: Companion Proceedings of the ACM on Web Conference 2024. pp. 20–28 (2024)

- [58] Radford, A., Wu, J., Child, R., Luan, D., Amodei, D., Sutskever, I., et al.: Language models are unsupervised multitask learners. OpenAI blog 1(8), 9 (2019)

- [59] Raffel, C., Shazeer, N., Roberts, A., Lee, K., Narang, S., Matena, M., Zhou, Y., Li, W., Liu, P.J.: Exploring the limits of transfer learning with a unified text-to-text transformer. Journal of machine learning research 21(140), 1–67 (2020)

- [60] Rezayi, S., Dai, H., Liu, Z., Wu, Z., Hebbar, A., Burns, A.H., Zhao, L., Zhu, D., Li, Q., Liu, W., et al.: Clinicalradiobert: Knowledge-infused few shot learning for clinical notes named entity recognition. In: Machine Learning in Medical Imaging: 13th International Workshop, MLMI 2022, Held in Conjunction with MICCAI 2022, Singapore, September 18, 2022, Proceedings. pp. 269–278. Springer (2022)

- [61] Seo, J., Byun, S., Kang, M., Lee, S.: Mergen: The first manchu-korean machine translation model trained on augmented data. arXiv preprint arXiv:2311.17492 (2023)

- [62] Seo, M., Baek, J., Thorne, J., Hwang, S.J.: Retrieval-augmented data augmentation for low-resource domain tasks. arXiv preprint arXiv:2402.13482 (2024)

- [63] Shu, P., Zhao, H., Jiang, H., Li, Y., Xu, S., Pan, Y., Wu, Z., Liu, Z., Lu, G., Guan, L., et al.: Llms for coding and robotics education. arXiv preprint arXiv:2402.06116 (2024)

- [64] Sutskever, I.: Sequence to sequence learning with neural networks. arXiv preprint arXiv:1409.3215 (2014)

- [65] Thurgood, G., LaPolla, R.J.: The sino-tibetan languages. Routledge (2016)

- [66] Tian, J., Hou, J., Wu, Z., Shu, P., Liu, Z., Xiang, Y., Gu, B., Filla, N., Li, Y., Liu, N., et al.: Assessing large language models in mechanical engineering education: A study on mechanics-focused conceptual understanding. arXiv preprint arXiv:2401.12983 (2024)

- [67] Touvron, H., Martin, L., Stone, K., Albert, P., Almahairi, A., Babaei, Y., Bashlykov, N., Batra, S., Bhargava, P., Bhosale, S., et al.: Llama 2: Open foundation and fine-tuned chat models. arXiv preprint arXiv:2307.09288 (2023)

- [68] Tsunoda, T.: Language Endangerment and Language Revitalization. De Gruyter Mouton (2006)

- [69] Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, Ł., Polosukhin, I.: Attention is all you need. Advances in neural information processing systems 30 (2017)

- [70] Wang, H., Dang, A.: Enhancing l2 writing with generative ai: A systematic review of pedagogical integration and outcomes. preprint DOI: 10.13140/RG.2.2.19572.16005 (2024)

- [71] Wang, H., Dang, A., Wu, Z., Mac, S.: Seeing chatgpt through universities’ policies, resources and guidelines. arXiv preprint arXiv:2312.05235 (2023)

- [72] Wang, J., Jiang, H., Liu, Y., Ma, C., Zhang, X., Pan, Y., Liu, M., Gu, P., Xia, S., Li, W., et al.: A comprehensive review of multimodal large language models: Performance and challenges across different tasks. arXiv preprint arXiv:2408.01319 (2024)

- [73] Wang, J., Wu, Z., Li, Y., Jiang, H., Shu, P., Shi, E., Hu, H., Ma, C., Liu, Y., Wang, X., et al.: Large language models for robotics: Opportunities, challenges, and perspectives. arXiv preprint arXiv:2401.04334 (2024)

- [74] Weers, N.: Saving the manchu language: 9 critically endangered languages from around the world. The Straits Times (June 29 2016), https://www.straitstimes.com/world/saving-the-manchu-language-9-critically-endangered-languages-from-around-the-world

- [75] Zhang, B., Williams, P., Titov, I., Sennrich, R.: Improving massively multilingual neural machine translation and zero-shot translation. In: Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics (2020). https://doi.org/10.18653/v1/2020.acl-main.148, https://doi.org/10.18653/v1/2020.acl-main.148

- [76] Zhang, K., Zhou, R., Adhikarla, E., Yan, Z., Liu, Y., Yu, J., Liu, Z., Chen, X., Davison, B.D., Ren, H., et al.: A generalist vision–language foundation model for diverse biomedical tasks. Nature Medicine pp. 1–13 (2024)

- [77] Zhang, S., Frey, B., Bansal, M.: How can nlp help revitalize endangered languages? a case study and roadmap for the cherokee language. arXiv preprint arXiv:2204.11909 (2022)

- [78] Zhang, S., Frey, B., Bansal, M.: Chren: Cherokee-english machine translation for endangered language revitalization. arXiv preprint arXiv:2010.04791 (2020)

- [79] Zhang, T., Kishore, V., Wu, F., Weinberger, K.Q., Artzi, Y.: Bertscore: Evaluating text generation with bert. arXiv preprint arXiv:1904.09675 (2019)

- [80] Zhang, X., Rajabi, N., Duh, K., Koehn, P.: Machine translation with large language models: Prompting, few-shot learning, and fine-tuning with qlora. In: Proceedings of the Eighth Conference on Machine Translation. pp. 468–481 (2023). https://doi.org/10.18653/v1/2023.wmt-1.43, https://doi.org/10.18653/v1/2023.wmt-1.43

- [81] Zhao, H., Ling, Q., Pan, Y., Zhong, T., Hu, J.Y., Yao, J., Xiao, F., Xiao, Z., Zhang, Y., Xu, S.H., et al.: Ophtha-llama2: A large language model for ophthalmology. arXiv preprint arXiv:2312.04906 (2023)

- [82] Zhao, H., Liu, Z., Wu, Z., Li, Y., Yang, T., Shu, P., Xu, S., Dai, H., Zhao, L., Mai, G., et al.: Revolutionizing finance with llms: An overview of applications and insights. arXiv preprint arXiv:2401.11641 (2024)

- [83] Zhao, L., Zhang, L., Wu, Z., Chen, Y., Dai, H., Yu, X., Liu, Z., Zhang, T., Hu, X., Jiang, X., et al.: When brain-inspired ai meets agi. Meta-Radiology p. 100005 (2023)

- [84] Zhenyuan, Y., Zhengliang, L., Jing, Z., Cen, L., Jiaxin, T., Tianyang, Z., Yiwei, L., Siyan, Z., Teng, Y., Qing, L., et al.: Analyzing nobel prize literature with large language models. arXiv preprint arXiv:2410.18142 (2024)

- [85] Zhong, T., Liu, Z., Pan, Y., Zhang, Y., Zhou, Y., Liang, S., Wu, Z., Lyu, Y., Shu, P., Yu, X., et al.: Evaluation of openai o1: Opportunities and challenges of agi. arXiv preprint arXiv:2409.18486 (2024)

Appendix A Appendix

A.1 Additional Experiment Results