Trainingless Adaptation of Pretrained Models

for Environmental Sound Classification

Abstract

Deep neural network (DNN)-based models for environmental sound classification are not robust against a domain to which training data do not belong, that is, out-of-distribution or unseen data. To utilize pretrained models for the unseen domain, adaptation methods, such as finetuning and transfer learning, are used with rich computing resources, e.g., the graphical processing unit (GPU). However, it is becoming more difficult to keep up with research trends for those who have poor computing resources because state-of-the-art models are becoming computationally resource-intensive. In this paper, we propose a trainingless adaptation method for pretrained models for environmental sound classification. To introduce the trainingless adaptation method, we first propose an operation of recovering time–frequency-ish (TF-ish) structures in intermediate layers of DNN models. We then propose the trainingless frequency filtering method for domain adaptation, which is not a gradient-based optimization widely used. The experiments conducted using the ESC-50 dataset show that the proposed adaptation method improves the classification accuracy by 20.40 percentage points compared with the conventional method.

Index Terms:

environmental sound classification, adaptationI Introduction

The analysis of environmental sounds has been studied for various applications [1, 2]. Analyzing environmental sounds enables machine condition monitoring [3], traffic monitoring [4], and bioacoustic analysis [5].

Deep neural network (DNN)-based models for analyzing environmental sounds have been attracting attention for the past ten years [6, 7, 8, 9]. In [6], convolutional neural network bidirectional gated recurrent unit (CNN-BiGRU) has been used for sound event detection (SED). In [7, 8], Transformer is employed to handle longer-sequence audio. In [9], Vision Transformer (ViT) [10] has resulted in a significant improvement in environmental sound classification.

DNN-based models are not robust against unseen data, which are the data that the models have not encountered during the training stages. To address robustness against unseen data, adaptation methods, such as finetuning and transfer learning, have been studied. In the analysis of environmental sounds, domain adaptation for environmental sound classification [11], acoustic scene classification (ASC) [12, 13, 14, 15], and the detection of anomalous machine conditions [3] has been in progress. In [12], ASC for addressing the gap in recording devices between training and inference stages has been studied. In [13], the generative adversarial network (GAN)-based domain adaptation for optical fiber sensing with ASC has been introduced. In the detection of anomalous machine conditions, domain generalization has been developed in the DCACE2023 challenge task 2 [3].

More recently, low-calculation-cost adaptation methods have been studied [14, 16] for tackling the problem that the scale of a dataset and DNN models are becoming larger. In the DCASE2022 challenge task 1 [14], the participants of the task develop low-calculation-cost adaptation methods with gradient-based optimization. Low-rank adaptation (LoRA) [16, 17] has been proposed for addressing the finetuning of large-scale models. However, it is assumed that the adaptation methods including LoRA require rich computing resources, e.g., a graphical processing unit (GPU). In such a situation, only organizations with rich computing resources can follow research trends and realize rapid developments.

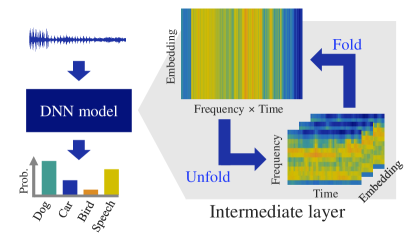

In this paper, we propose a trainingless adaptation method for pretrained models for environmental sound classification. For the implementation of the trainingless adaptation method, we first propose a method of recovering time–frequency-ish (TF-ish) structures in intermediate layers of DNN models. The trainingless frequency filtering method for domain adaptation is then proposed, as shown in Fig. 1. By the frequency filtering of the signal processing, which is not a gradient-based optimization, DNN-based models acquire robustness against unseen data without high calculation cost requiring training data. In this paper, we did not use any GPUs for experiments.

II Conventional method

II-A Audio spectrogram transformer (AST)

Recently, in the task of environmental sound classification, AST models, which are based on ViT [10], have been widely used [9, 18, 19, 20]. The output of the -th layer in AST is formalized as

| (1) |

and represent the batch size and the number of dimensions of the embedding, respectively. and indicate the numbers of partitions of the patches on the frequency and time axes, respectively. is the nonlinear operator. Note that Eq. 1 is formulated without a special token, such as the class token. In an AST-based architecture, it is mainly composed of multihead attention, time-distributed multi-layer perceptron (MLP), and layer normalization. These functions of save the shape of an inputted feature, which is a log-mel spectrogram in the time–frequency domain. The AST models are trained using large-scale audio datasets, such as AudioSet [21]. The AST models are expected to contain TF-ish features of intermediate layers.

II-B Adaptation of DNN models

For DNN models, many adaptation methods, including finetuning, have been developed [12, 13, 11, 14, 15, 17]. Finetuning is employed to mitigate the gap between the distributions of training and inference data, where the gap might cause the underperformance of classifying sound events. The finetuning generally utilizes gradient-based optimization to adapt models to unseen data using a dataset. These gradient-based adaptation methods require heavy calculations, which make it difficult to rapidly cycle the development for updating the parameters of the models.

III Proposed method

We introduce the adaptation method with which the pretrained models for environmental sound classification acquire robustness against unseen data without gradient-based optimization. The proposed adaptation method employs a naive signal processing in the intermediate layers focusing on the TF-ish structure appearing in AST. Fig. 2 shows the proposed trainingless adaptation method with which the frequency filtering is reproduced for extracting informative frequency bands in the intermediate layers.

III-A Recovering TF-ish structure in intermediate layer

We first define two operations for handling TF-ish features in the intermediate layers of AST. In AST models, spectrograms of frequency bins and time frames are first embedded using the convolutional neural network (CNN): . The embedded spectrograms are then reshaped with the following operation:

| (2) |

The reshaped is known as the “patch.” We further define the following operation in the intermediate layers of AST :

| (3) |

This operation, where a third-order tensor is transformed into a fourth-order one, indicates the reverse of . In this work, we use a simple reshape operator as , which is exactly the reverse operator of for the widely used AST models [9, 18, 19, 22]. By applying the Unfold operation in the intermediate layers of AST, we can expect to recover TF-ish structures.

III-B Signal processing in intermediate layers with TF-ish structure

To make the pretrained models robust against unseen data without retraining, we also introduce the signal-processing-based adaptation method. In the pretrained DNN models, the intermediate signals are normalized, e.g., the layer normalization with pretrained parameters. Such normalization could be regarded as a denoising operator, which enhances informative low-power signals. However, non-informative signals are also enhanced together with informative low-power signals. The enhanced non-informative signals then cause the misclassification of environmental sounds. In the proposed adaptation method, the non-informative signals are not enhanced by switching non-informative frequency bands into silent signals in intermediate layers on the TF-ish domain.

The adaptation method for the reshaped intermediate layers of AST for reproducing a naive signal processing is as follows:

| (4) |

where and respectively represent the outputs of the intermediate layers for the inputted audio and silent signals in terms of the -th batch, -th dimensional embedding, -th bin frequency, and -th time frame. The signal of the intermediate layer of the inputted audio signal is switched into the signal of that of the inputted silent signal, i.e., the signal contains only the direct current component. Eq. 4 reproduces the filtering on the TF domain in the intermediate layers of AST models. After applying Eq. 4 to the intermediate layers, Fold is again applied.

IV Experiments

IV-A Experimental conditions

[Dataset, parameters of models, and training] In experiments, the proposed filtering of Eq. 4 is used for classifying environmental sounds. To evaluate the performance, we used ESC-50 [23] with five-fold cross-validation. ESC-50 is composed of 2,000 audio signals with 50 environmental sound classes. For widely used baselines of AST models, we used Patchout fast Spectrogram Transformer (PaSST) [18], Contrastive language-audio pretraining [20], referred to as “MS-CLAP,” and Bidirectional encoder representation from audio Transformers (BEATs) [19]. PaSST, MS-CLAP, and BEATs are supervised, audio-language supervised, and self-supervised models, respectively. For PaSST, we used the pretrained weights of ESC-50, which are officially provided. For MS-CLAP, the events of ESC-50 are classified by prompts, e.g., “this is a sound of [class label],” in accordance with [22] using hierarchical token semantic audio Transformer (HTS-AT) [24] and generative pretrained Transformer 2 (GPT2) [25] as the audio and text encoders, respectively. For BEATs, we finetuned the model initialized with the parameters of “BEATs_iter3+ (AS2M)” [19]. The AST models used in the experiments did not conduct data augmentation by adding noise to the training dataset.

[Target domain] In the experiments, we consider the Distributed Fiber-Optic Sensor (DFOS) [26, 27, 28] as the target domain, which is different from that of the microphone. In DFOS, acoustic signals are captured by an optical fiber. Regarding the characteristics of DFOS, the observed audio signals are from low-pass filters covered by cables and optical noise with low signal-to-noise Ratio (SNR) [27]. The quality of audio signals observed by DFOS is thus significantly lower than that of audio signals observed by the microphone.

For reproducing audio signals observed by DFOS, we applied 1st- to 4th-order Butterworth low-pass filters to audio signals of ESC-50. To low-frequency-pass audio signals, white Gaussian noises with variable SNRs are added to reproduce optical noise. The larger the order of the filters and the lower the SNR, the more different the domain of DFOS is from that of the microphone.

[Setting for proposed method] In Eq. 4, the frequency bin is calculated as

| (5) | |||

| (6) |

where and indicate the cutoff frequency of the Butterworth low-pass filter used for DFOS and the center frequency of mel-frequency bins, which are used for the preprocessing of the AST models. and represent the index of the cutoff frequency bins of the intermediate layers and the number of mel-frequency bins, respectively.

Moreover, we use the term “block” in the experiment to refer to the intermediate layer for each AST model. For PaSST and BEATs, one Transformer block corresponds to one “block.” For the HTS-AT of MS-CLAP, one group (Swin Transformer + Patch-Merge) corresponds to one “block.”

| SNR | |||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 5 dB | 10 dB | 15 dB | |||||||||||||

| Order for low-pass filter | Order for low-pass filter | Order for low-pass filter | |||||||||||||

| Model | 1 | 2 | 3 | 4 | 1 | 2 | 3 | 4 | 1 | 2 | 3 | 4 | |||

| Conventional | 68.35 | 66.45 | 65.55 | 65.05 | 49.55 | 48.20 | 48.00 | 47.70 | 26.40 | 26.05 | 25.70 | 25.35 | |||

| Proposed | PaSST | 76.05 | 75.60 | 75.05 | 74.25 | 65.30 | 65.65 | 65.45 | 65.05 | 44.60 | 45.55 | 45.95 | 45.75 | ||

| Conventional | 60.15 | 57.05 | 55.55 | 54.70 | 46.60 | 44.25 | 42.75 | 42.30 | 30.15 | 28.65 | 28.15 | 27.85 | |||

| Proposed | MS-CLAP | 59.95 | 58.25 | 57.45 | 56.80 | 48.70 | 48.45 | 47.70 | 47.10 | 36.20 | 36.25 | 36.75 | 36.70 | ||

| Conventional | 31.45 | 29.80 | 28.90 | 28.65 | 15.05 | 14.85 | 14.75 | 14.60 | 5.85 | 5.70 | 5.90 | 5.75 | |||

| Proposed | BEATs | 46.40 | 47.80 | 48.25 | 48.60 | 33.50 | 34.75 | 35.55 | 35.70 | 15.70 | 16.50 | 16.85 | 17.20 | ||

IV-B Experimental results

[Quantitative confirmation of TF-ish features in intermediate layers] We first verify the assumption that TF-ish features are expressed in the intermediate layers of AST models. To verify the assumption, we conduct experiments in terms of time continuity, frequency continuity, and randomness in the intermediate features. More specifically, we inputted a sine wave, a pulse signal, and a white Gaussian noise of 5-s duration to the AST models. For the sine wave and pulse signal, we used a sine wave of 1 kHz and a square signal of 1 Hz for a clear analysis. Fig. 3 shows the amplitude spectrograms of the sine wave, pulse signal, and white Gaussian noise used in the experiment.

Fig. 4 shows the time and frequency continuity in the intermediate layers of the AST models. As for the evaluation metrics of the time and frequency continuities, we used the time and frequency differentiations and , respectively. For each intermediate layer of each AST model, the time and frequency differentiations were calculated. For all models, the time differentiation of the sine wave is smaller than that of the pulse signal. In other words, in the intermediate layers of the AST models, the TF-ish feature in terms of the time continuity is saved. Similarly, the frequency differentiation of the pulse signal is smaller than that of the sine wave. The result indicates that the continuity along the frequency axis is preserved in the intermediate layers.

Fig. 5 shows the response in the randomness in the intermediate layers. In this experiment, white Gaussian noise was inputted to each AST model for the extra analysis. Kurtosis was used to measure the sharpness of a distribution. The kurtosis close to zero indicates that a signal follows the white Gaussian noise. The kurtosis in Fig. 5 was calculated by averaging the kurtosis of each frequency bin in each intermediate layer. As shown in the figure, the kurtosis to the white Gaussian noise is closer to zero than those of the sine wave and pulse signal for each model and layer. The result indicates that the intermediate layers of the AST models maintain the randomness of the TF structure. Moreover, in the HTS-AT of MS-CLAP, the kurtosises of the pulse signal, sine wave, and white noise are close to each other. This is because the number of dimensions on the TF-ish domain is reduced to decrease the calculation cost through its network architecture.

[Qualitative confirmation of TF-ish features in intermediate layers] Fig. 6 shows examples of the TF-ish features in the intermediate layers of the AST models. For PaSST, MS-CLAP, and BEATs, , and of the intermediate layers are randomly selected and then shown in the figure. Figs. 6(a) - 6(c) show the unfolded features in the intermediate layers for the inputted sine wave, pulse signal, and white Gaussian noise, respectively. The results show that the features of the inputted signals are preserved in the intermediate layers. In the deeper blocks, the features of the inputted signals tend to be destroyed. Moreover, in the HTS-AT of MS-CLAP, it is clear that the number of dimensions on the TF-ish domain is reduced.

[Summary results of event classification] We verify the proposed filtering of Eq. 4 for classifying the environmental sounds hereafter. Table I shows the summary results of the environmental sound classification in terms of accuracy. In the table, “conventional method” and “proposed method” mean the AST model with and without the proposed filtering of Eq. 4, respectively. The indexes of the ending block for the proposed filtering are 10, 2, and 3 for PaSST, MS-CLAP, and BEATs, respectively. In the blocks shallower than the ending block, the proposed filtering is applied. As shown in the table, the proposed method outperforms the conventional method in most configurations. Under the condition of a lower SNR, the accuracy of the proposed method is significantly higher than that of the conventional method. In particular, the proposed method improved the accuracy of PaSST by 20.40 percentage points compared with the conventional method at SNR dB and order4. On the other hand, under the condition of SNR dB and order, the proposed filtering with MS-CLAP underperformed the conventional method. This is because the audio encoder HTS-AT of MS-CLAP reduces the number of dimensions of the frequency in the deeper block. This reduction mechanism is helpful for decreasing the calculation cost. However, the lower-dimensional frequency is harmful for frequency filtering.

[Application of the proposed method to different layers] Fig. 7 shows the result of the change in classification performance by changing the ending block of the intermediate layers for the proposed filtering. For example, in the left panel of the figure, the values at the 5th ending block represent the accuracies when the proposed filtering is applied from the 0th to 5th blocks in PaSST. In the results, only the average score of the orders 1 to 4 for the low-pass filter is discussed for a clear analysis. The results show that the accuracies of MS-CLAP and BEATs are significantly lower in the deeper ending blocks than in the shallower ending blocks. This is because over-enhancing the inputted signals in the deeper blocks by MS-CLAP and BEATs, as shown in Fig. 6, leads to the destruction of the frequency structures.

V Conclusion

In this paper, we proposed the trainingless adaptation method for pretrained AST models. In the experiments, we first confirmed the TF-ish features in the intermediate layers of AST. We then proposed the frequency filtering method for the TF-ish domain to address the domain adaptation. The results of experiments using the ESC-50 dataset show that the proposed filtering method improved the classification accuracy by 20.40 percentage points compared with the conventional method.

Our proposed method enables the application of signal processing methods to DNN models. In other words, it accelerates the combination of legacy signal processing methods and modern DNN methods.

References

- [1] K. Imoto, “Introduction to acoustic event and scene analysis,” Acoust. Sci. Tech., vol. 39, no. 3, pp. 182–188, 2018.

- [2] A. Mesaros, T. Heittola, T. Virtanen, and M. D. Plumbley, “Sound event detection: A tutorial,” IEEE Signal Processing Magazine, vol. 38, no. 5, pp. 67–83, 2021.

- [3] D. Kota, K. Imoto, N. Harada, D. Niizumi, Y. Koizumi, T. Nishida, H. Purohit, R. Tanabe, T. Endo, and Y. Kawaguchi, “Description and discussion on DCASE 2023 challenge task 2: First-shot unsupervised anomalous sound detection for machine condition monitoring,” Proc. Workshop on Detection and Classification of Acoustic Scenes and Events (DCASE), pp. 31–35, 2023.

- [4] S. Damiano, L. Bondi, S. Ghaffarzadegan, A. Guntoro, and T. van Waterschoot, “Can synthetic data boost the training of deep acoustic vehicle counting networks?,” Proc. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 631–635, 2024.

- [5] I. Nolasco, B. Ghani, S. Singh, E. Vidaña-Vila, H. Whitehead, E. Grout, M. Emmerson, I. Kiskin, F. Jensen, J. Morford, A. Strandburg-Peshkin, L. Gill, H. Pamuła, V. Lostanlen, and D. Stowell, “Few-shot bioacoustic event detection at the DCASE 2023 challenge,” Proc. Workshop on Detection and Classification of Acoustic Scenes and Events (DCASE), pp. 146–150, 2023.

- [6] E. Çakır, G. Parascandolo, T. Heittola, H. Huttunen, and T. Virtanen, “Convolutional recurrent neural networks for polyphonic sound event detection,” IEEE/ACM Transactions on Audio, Speech, and Language Processing (TASLP), vol. 25, no. 6, pp. 1291–1303, 2017.

- [7] Q. Kong, Y. Xu, W. Wang, and M. D. Plumbley, “Sound event detection of weakly labelled data with CNN-Transformer and automatic threshold optimization,” IEEE/ACM Transactions on Audio, Speech, and Language Processing (TASLP), vol. 28, pp. 2450–2460, 2020.

- [8] K. Miyazaki, T. Komatsu, T. Hayashi, S. Watanabe, T. Toda, and K. Takeda, “Weakly-supervised sound event detection with self-attention,” Proc. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 66–70, 2020.

- [9] Y. Gong, Y. Chung, and J. Glass, “AST: Audio Spectrogram Transformer,” Proc. INTERSPEECH, pp. 571–575, 2021.

- [10] A. Dosovitskiy, L. Beyer, A. Kolesnikov, D. Weissenborn, X. Zhai, T. Unterthiner, M. Dehghani, M. Minderer, G. Heigold, S. Gelly, J. Uszkoreit, and N. Houlsby, “An image is worth 16x16 words: Transformers for image recognition at scale,” Proc. International Conference on Learning Representations (ICLR), pp. 1–21, 2021.

- [11] P. Lopez-Meyer, J. Ontiveros, H. Lu, and G. Stemmer, “Efficient end-to-end audio embeddings generation for audio classification on target applications,” Proc. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 601–605, 2021.

- [12] T. Heittola, A. Mesaros, and T. Virtanen, “Acoustic scene classification in DCASE 2020 Challenge: generalization across devices and low complexity solutions,” Proc. Workshop on Detection and Classification of Acoustic Scenes and Events (DCASE), pp. 56–60, 2020.

- [13] N. He and J. Zhu, “A weighted partial domain adaptation for acoustic scene classification and its application in fiber optic security system,” IEEE Access, vol. 9, pp. 2244–2250, 2021.

- [14] I. Martín-Morató, F. Paissan, A. Ancilotto, T. Heittola, Mesaros A, E. Farella, A. Brutti, and T. Virtanen, “Low-complexity acoustic scene classification in DCASE 2022 Challenge,” Proc. Workshop on Detection and Classification of Acoustic Scenes and Events (DCASE), pp. 111–115, 2022.

- [15] Y. Tan, H. Ai, S. Li, and M. D. Plumbley, “Acoustic scene classification across cities and devices via feature disentanglement,” IEEE/ACM Transactions on Audio, Speech, and Language Processing (TASLP), vol. 32, pp. 1286–1297, 2024.

- [16] E. Edward J Hu, Y. Yelong Shen, P. Phillip Wallis, Z. Zeyuan Allen-Zhu, Y. Yuanzhi Li, S. Shean Wang, L. Lu Wang, and W. Weizhu Chen and, “LoRA: Low-rank adaptation of large language models,” Proc. International Conference on Learning Representations (ICLR), pp. 1–13, 2022.

- [17] X. Zheng, A. Jiang, B. Han, Y. Qian, P. Fan, J. Liu, and W. Zhang, “Improving anomalous sound detection via low-rank adaptation fine-tuning of pre-trained audio models,” arXiv, arXiv:2409.07016, pp. 1–6, 2024.

- [18] K. Koutini, J. Schlüter, H. Eghbal-zadeh, and G. Widmer, “Efficient training of audio transformers with patchout,” Proc. INTERSPEECH, pp. 2753–2757, 2022.

- [19] S. Chen, Y. Wu, C. Wang, S. Liu, D. Tompkins, Z. Chen, and F. Wei, “BEATs: Audio pre-training with acoustic tokenizers,” Proc. International conference on machine learning (ICML), pp. 5178–5193, 2023.

- [20] B. Elizalde, S. Deshmukh, and H. Wang, “Natural language supervision for general-purpose audio representations,” Proc. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 336–340, 2024.

- [21] J. Gemmeke, D. Ellis, D. Freedman, A. Jansen, W. Lawrence, R. Moore, M. Plakal, and M. Ritter, “Audio set: An ontology and human-labeled dataset for audio events,” Proc. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 776–780, 2017.

- [22] B. Elizalde, S. Deshmukh, M. Al Ismail, and H. Wang, “CLAP: learning audio concepts from natural language supervision,” Proc. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 1–5, 2023.

- [23] J. Piczak, “ESC: Dataset for environmental sound classification,” Proc. the 23rd Annual ACM Conference on Multimedia (ACMM), pp. 1015–1018, 2015.

- [24] K. Chen, X. Du, B. Zhu, Z. Ma, T. Berg-Kirkpatrick, and S. Dubnov, “HTS-AT: A hierarchical token-semantic audio transformer for sound classification and detection,” Proc. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 646–650, 2022.

- [25] A. Radford et al., “Language models are unsupervised multitask learners,” Tech. Rep., OpenAI, 2019.

- [26] Y. Lu, Y. Tian, S. Han, E. Cosatto, S. Ozharar, and Y. Ding, “Automatic fine-grained localization of utility pole landmarks on distributed acoustic sensing traces based on bilinear resnets,” Proc. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 4675–4679, 2021.

- [27] N. Tonami, S. Mishima, R. Kondo, K. Imoto, and T. Hino, “Event classification with class-level gated unit using large-scale pretrained model for optical fiber sensing,” Proc. Workshop on Detection and Classification of Acoustic Scenes and Events (DCASE), pp. 196–200, 2023.

- [28] N. Tonami, W. Kohno, S. Mishima, Y. Arai, R. Kondo, and T. Hino, “Low-rank constrained multichannel signal denoising considering channel-dependent sensitivity inspired by self-supervised learning for optical fiber sensing,” Proc. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 8511–8515, 2024.