Training-Free Time-Series Anomaly Detection: Leveraging Image Foundation Models

Abstract

Recent advancements in time-series anomaly detection have relied on deep learning models to handle the diverse behaviors of time-series data. However, these models often suffer from unstable training and require extensive hyperparameter tuning, leading to practical limitations. Although foundation models present a potential solution, their use in time series is limited. To overcome these issues, we propose an innovative image-based, training-free time-series anomaly detection (ITF-TAD) approach. ITF-TAD converts time-series data into images using wavelet transform and compresses them into a single representation, leveraging image foundation models for anomaly detection. This approach achieves high-performance anomaly detection without unstable neural network training or hyperparameter tuning. Furthermore, ITF-TAD identifies anomalies across different frequencies, providing users with a detailed visualization of anomalies and their corresponding frequencies. Comprehensive experiments on five benchmark datasets, including univariate and multivariate time series, demonstrate that ITF-TAD offers a practical and effective solution with performance exceeding or comparable to that of deep models.

1 Introduction

Time-series anomaly detection (TAD) is crucial across various fields, including industry, public service, healthcare, and finance, where it is used to monitor system status and avoid undesired events such as malfunctions (Woike, Abdul-Aziz, and Clem 2014), diseases (Chauhan and Vig 2015; Wang et al. 2023), and fraud (Doshi, Yilmaz, and Uludag 2021; Zhang et al. 2006).

In practical applications, collecting sufficient abnormal data is often challenging, and the diverse anomaly patterns complicate the use of supervised learning for classifying abnormal and normal data. Therefore, unsupervised or semi-supervised learning is more suitable, as it can identify anomalies using only unlabeled or normal data and detect previously unseen anomalies without past abnormal data. Unsupervised TAD methods can be broadly classified into three categories based on how they evaluate anomaly scores: (I) Similarity-based methods, which learn the distribution of normal data and use the distance from it or its probability density as the anomaly score (Breunig et al. 2000; Schölkopf et al. 2001); (II) Prediction-based methods, which predict the future values of a time series and measure the anomaly score as the discrepancy between predicted and actual values (Malhotra et al. 2015); (III) Reconstruction-based methods, which compress and reconstruct time series and use reconstruction error as the anomaly score (Audibert et al. 2020). While classical approaches detect anomalies based on individual points in time series, recent studies focus on detecting anomalies through changes or rare patterns in time series. Deep learning techniques such as variational autoencoders (VAE) (Li et al. 2021), generative adversarial networks (GAN) (Li et al. 2019), recurrent neural networks (RNN) (Hundman et al. 2018; Shen, Li, and Kwok 2020), and Transformers (Chen et al. 2021; Song et al. 2024) are frequently employed.

The use of highly complex deep models for TAD leads to practical issues. These models often suffer from unstable training processes and require hyperparameter tuning of a specific model for each case. Moreover, these models necessitate a deep understanding of deep learning techniques and significant human costs. To tackle this issue, foundation models, trained on large-scale data and applicable to individual problems without additional training, have been employed in natural language processing (Touvron et al. 2023) and computer vision (Xiao et al. 2023a). Although recent efforts have adapted foundation models for TAD (Das et al. 2023; Zhou et al. 2023), achieving high performance still necessitates fine-tuning, which does not completely eliminate training instability and hyperparameter tuning.

To address these issues, we introduce the image-based training-free TAD (ITF-TAD) approach, which applies pre-trained image foundation models to TAD without additional neural network training or fine-tuning. ITF-TAD converts time-series data into images using techniques such as continuous wavelet transform (CWT) and then compresses these images into lower-channel representations to enhance computational efficiency. These compressed images are subsequently fed into a pre-trained image foundation model. By aggregating the intermediate layers of the foundation model, a representation space of the time series is obtained, enabling similarity-based anomaly detection on these representations. Additionally, this approach identifies the anomaly locations in the frequency space. This process is summarized in Fig. 1. ITF-TAD demonstrates sufficient performance on five benchmark datasets, proving to be superior or comparable to prior deep models.

2 Related Works

Time-Series Anomaly Detection

Most unsupervised TAD methods fall into three main categories: similarity-based, prediction-based, or reconstruction-based approaches. Among similarity-based methods, the deep autoencoding Gaussian mixture model (DAGMM) (Zong et al. 2018) stands out. This method reduces dimensionality for multivariate time series using an autoencoder, then scores anomalies based on probability density. Other notable methods include support vector data description (SVDD) (Tax and Duin 2004) and its high-dimensional extension, Deep-SVDD (Ruff et al. 2018). For prediction-based methods, the classical approach is the autoregressive integrated moving average (ARIMA) (Anderson and Kendall 1976). Recent studies employ deep models such as long short-term memory (LSTM) (Hundman et al. 2018) and graph deviation network (GDN) (Deng and Hooi 2021). DiffAD (Xiao et al. 2023b), which employs a diffusion probabilistic model, also falls under this category. Reconstruction-based methods often utilize deep models such as LSTM-VAE (Park, Hoshi, and Kemp 2018), OmniAnomaly (Su et al. 2019), and Anomaly Transformer (Xu et al. 2021). GPT2-backbone frozen pre-trained Transformer (GPT2-FPT) (Zhou et al. 2023), which fine-tunes pre-trained language foundation models, is also based on reconstruction.

Image-Based Approaches

Traditional frequency analysis methods, such as Fourier and wavelet transforms, have been widely used in practical applications like machine vibration (Peng and Chu 2004), electrocardiograms (Khorrami and Moavenian 2010), and electroencephalography (Türk and Özerdem 2019). TimesNet (Wu et al. 2022) has employed fast Fourier transform to decompose time series into several characteristic periods, rearranging them in two dimensions for anomaly detection based on reconstruction error. This transformation from univariate time series to two-dimensional images is further enhanced using convolutional neural networks (CNN) (Aslan and Akin 2022; Copiaco et al. 2023), which enhance explainability by enabling users to infer the causes of anomalies through visualizing abnormal areas or frequencies in images. Another image-based approach demonstrated that scalograms, generated by CWT, outperform other image-encoding and non-encoding TAD methods (Garcia et al. 2022). The frequency-time characteristics of scalograms also improve human understanding of anomalies. However, these methods still require extensive and unstable neural network training and hyperparameter tuning. Additionally, while scalogram approaches are effective for univariate time series, extending them to multivariate series presents significant challenges.

3 Method

We consider TAD for a -dimensional multivariate time series with length , denoted as , where represents each time-series data in -dimensional multivariate time series. In TAD, models provide anomaly scores , which increase when a time point is likely abnormal. As shown in Fig. 1, the ITF-TAD process includes three main stages: scalogram generation that uses dual mother wavelets, scalogram aggregation achieved by mapping and channel embedding, and anomaly detection using an image foundation model. The following sections delve into each of these three stages.

3.1 Scalogram Generation with Dual Mother Wavelets

In CWT, univariate time series of continuous variables are transformed into frequency-time characteristics, known as scalograms. This is achieved by translating and scaling a mother wavelet. ITF-TAD applies CWT to each dimension of the time-series data, resulting in scalograms.

Consider a moving window size and pseudo frequencies , which are equally distributed on a logarithmic scale, ranging from to Hz and consist of discrete points. The actual frequency is obtained by multiplying them by the sampling frequency of the time series. CWT can be expressed as follows:

| (1) |

where denote the transformation performed using the aforementioned process with a mother wavelet . represents the scalogram of the -th time series. This study employs the complex Morlet wavelet (Grossmann and Morlet 1984) and the Ricker wavelet (Ricker 1953) as . The dual use of complex and real mother wavelets is essential for maintaining high sensitivity in both frequency characteristics and phase shifts. Scalograms generated with complex mother wavelets exhibit high sensitivity in frequency but lose temporal accuracy while those with real wavelets, such as the Ricker wavelet, exhibit high sensitivity to phase shifts and lower frequency sensitivity. Additionally, these scalograms are normalized by using the maximum absolute value of each scalogram as follows:

| (2) |

This normalization, which keeps the origin at zero, is essential for leveraging the sparsity of scalograms during aggregation.
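As a rough illustration of this stage, the following NumPy sketch computes scalograms by direct convolution with a complex Morlet and a Ricker wavelet and then applies the maximum-absolute-value normalization. The function names, the Morlet parameter `w0`, the kernel support of five scale widths, the use of the magnitude for the complex scalogram, and the parameterization by scales rather than pseudo frequencies are all assumptions made for illustration and are not taken from the authors' implementation.

```python
import numpy as np

def morlet(t, w0=6.0):
    """Complex Morlet wavelet up to a constant factor (w0 is an assumed value)."""
    return np.exp(1j * w0 * t) * np.exp(-0.5 * t ** 2)

def ricker(t):
    """Real Ricker (Mexican hat) wavelet up to a constant factor."""
    return (1.0 - t ** 2) * np.exp(-0.5 * t ** 2)

def cwt(x, scales, wavelet):
    """Direct-convolution CWT; returns an array of shape (len(scales), len(x))."""
    x = np.asarray(x, dtype=float)
    max_half = (len(x) - 1) // 2          # keep every kernel no longer than x
    rows = []
    for s in scales:
        half = min(int(np.ceil(5 * s)), max_half)
        t = np.arange(-half, half + 1) / s
        kernel = np.conj(wavelet(t))[::-1] / np.sqrt(s)
        rows.append(np.convolve(x, kernel, mode="same"))
    return np.array(rows)

def normalize(scalogram):
    """Divide by the maximum absolute value so that zero stays at zero."""
    peak = np.max(np.abs(scalogram))
    return scalogram / peak if peak > 0 else scalogram
```

For a one-dimensional signal `x` and log-spaced `scales = np.geomspace(2.0, 32.0, 8)`, `normalize(np.abs(cwt(x, scales, morlet)))` and `normalize(cwt(x, scales, ricker))` would give the two normalized scalograms for that dimension. Because the normalization only rescales, entries that are zero remain zero, which is the property the aggregation step relies on.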

3.2 Scalogram Aggregation

To use image foundation models for TAD, the normalized scalograms need to be converted into the input format using a mapping to perform TAD. Typically, image foundation models require, . It is important to maintain the time dimension during this conversion to accurately identify anomalous segments. This mapping process remains essential even when using other foundation models, such as language foundation models. In the following, we briefly introduce the mapping function for pre-trained image foundation models.

Component-Wise PCA Mapping

When using pre-trained image foundation models, it is crucial to ensure that the condition and holds true, resulting in . This necessitates information compression. To efficiently minimize information loss during compression, we employ a principal component analysis (PCA) for each component, referred to as component-wise PCA mapping. Specifically, for any , , where the matrix denotes the concatenated eigenvectors corresponding to the first principal components. This PCA mapping considers dimensional feature vector and as the number of data points for each component . This component-wise PCA mapping ensures consistency in the time direction and alignment with the mother wavelet before and after transformation. The aggregated scalogram is given by

| (3) |

where represents the operation of concatenating along the frequency direction. The frequency resolution of the CWT is set so that . Although learnable approaches such as autoencoders could achieve this, we avoid the neural network training and the hyperparameter tuning by using PCA.
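One possible reading of component-wise PCA mapping is sketched below in NumPy. It assumes that the scalograms of one mother wavelet are stored as an array of shape `(D, F, T)` (series, frequency, time), that a separate PCA is fitted for each frequency row with the `D` series as features and the `T` time steps as samples, and that the `k` leading projections are stacked along the frequency axis. The array layout, the function names, and the choice to estimate eigenvectors from the centered covariance while projecting the uncentered data are assumptions for illustration only.

```python
import numpy as np

def fit_componentwise_pca(train, k):
    """train: (D, F, T) scalograms of one wavelet. Returns U of shape (F, D, k)."""
    D, F, T = train.shape
    U = np.empty((F, D, k))
    for f in range(F):
        X = train[:, f, :].T                  # (T, D): T samples, D features
        Xc = X - X.mean(axis=0)
        cov = Xc.T @ Xc / max(T - 1, 1)
        _, vecs = np.linalg.eigh(cov)         # eigenvalues in ascending order
        U[f] = vecs[:, ::-1][:, :k]           # k leading eigenvectors
    return U

def apply_componentwise_pca(scalograms, U):
    """Project (D, F, T) scalograms and stack components along frequency: (k * F, T)."""
    F, D, k = U.shape
    proj = np.einsum("dft,fdk->kft", scalograms, U)   # (k, F, T)
    return proj.reshape(k * F, scalograms.shape[2])
```

The projection matrices would be fitted once on training scalograms and reused for test scalograms, so that no iterative training is involved. The time axis is left untouched, which matches the stated requirement that the mapping preserve consistency in the time direction.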

Random Mapping

In TAD, the backbone structure of time-series data, characterized by principal components with high contribution rates, does not necessarily indicate anomalies. While this structure is common, anomalies are often characterized by slight deviations from this backbone. Component-wise PCA mapping emphasizes components with high contribution rates and thus highlights variations in the primary structure, but it may overlook minor anomalies outside this structure. To address this limitation, we propose another mapping method, referred to as random mapping. Here, the aggregated scalograms are obtained by the following dimensionality reduction using linear transformation with a random tensor :

| (4) |

Specifically, for each -th mother wavelet, this random tensor is uniformly generated from the Latin hypercube, characterized by less-than-or-equal-to elements to be , from the -th column of . We set in this study. Detailed ablation studies of this parameter are provided in Appendix D. However, this random mapping assumes that scalograms are typically sparse. If they are not, the aggregated results become unsuitable for effective TAD.

Channel Embedding

Next, the aggregated scalogram is transformed into a format compatible with pre-trained image foundation models, specifically RGB images, i.e., . As illustrated in Fig. 1, two channels (Red, Green) are used to input , while the remaining channel (Blue) inputs frequency indexes , referred to as frequency encoding in this study. This frequency encoding functions similarly to the positional encoding (Vaswani et al. 2017) employed in Transformers. This design matters because most image anomaly detection models decompose each image into numerous patches and perform anomaly detection on them, often ignoring positional information related to frequency, which is crucial for anomaly segmentation. As such models cannot differentiate where learned normal image patches are located within a test image, false negatives can occur if similar patches are located in different frequency bands. By incorporating frequency information in the image, any image anomaly detection model can more accurately detect such anomalies.

Additionally, the aggregated scalogram is normalized for imaging as

where is introduced to provide additional space for test data with larger absolute values in scalograms, and to identify them as anomalies. Finally, the values in each channel are converted into for imaging.
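The channel layout described above can be sketched as follows. The assignment of the Morlet scalogram to red and the Ricker scalogram to green follows the channel labels used in the ablation table; the linear rescaling, the `margin` parameter and its default value, and the function name `to_rgb` are hypothetical stand-ins, since the exact normalization formula is not reproduced here.

```python
import numpy as np

def to_rgb(agg_morlet, agg_ricker, margin=1.5):
    """Build an (H, W, 3) uint8 image: R = Morlet, G = Ricker, B = frequency index.

    Inputs are aggregated scalograms of shape (H, W) with values roughly in [-1, 1].
    `margin` (an assumed value) leaves headroom for test data with larger magnitudes.
    """
    H, W = agg_morlet.shape

    def to_unit(a):
        # Map [-margin, margin] linearly onto [0, 1]; values beyond it are clipped.
        return np.clip(0.5 + a / (2.0 * margin), 0.0, 1.0)

    # Frequency encoding: each row carries its normalized frequency index.
    freq = np.repeat(np.linspace(0.0, 1.0, H)[:, None], W, axis=1)
    rgb = np.stack([to_unit(agg_morlet), to_unit(agg_ricker), freq], axis=-1)
    return np.round(rgb * 255).astype(np.uint8)
```

With this layout, two patches with identical red and green content but located in different frequency bands differ in the blue channel, so a patch-based detector can tell them apart.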

3.3 Anomaly Detection with Image Foundation Model

By converting time-series data into images, any image anomaly detection model can be applied to TAD. In this study, we use ITF-TAD with an image foundation model, specifically employing PatchCore (Roth et al. 2022) for image anomaly detection. PatchCore is a semi-supervised learning model that detects anomalies in images without needing to train a new neural network. It utilizes a pre-trained ResNet-type classifier (He et al. 2016) as a foundation model to extract feature vectors from images. During the training process, the model selects representative points, known as a coreset, from the feature vectors of the training data. Anomaly scores are then calculated based on the distances from the nearest points in the coreset.
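The coreset idea can be illustrated with a deliberately simplified NumPy sketch: a greedy farthest-point selection over training feature vectors, followed by a nearest-neighbor distance as the anomaly score. This is not the PatchCore or Anomalib implementation; it omits feature extraction from the pre-trained network and the neighbor-based score reweighting, and the function names are hypothetical.

```python
import numpy as np

def greedy_coreset(features, n_select, seed=0):
    """Pick n_select rows of `features` (N, d) by greedy farthest-point sampling."""
    rng = np.random.default_rng(seed)
    first = int(rng.integers(len(features)))
    selected = [first]
    # Distance from every feature to its closest selected point so far.
    dist = np.linalg.norm(features - features[first], axis=1)
    for _ in range(n_select - 1):
        nxt = int(np.argmax(dist))
        selected.append(nxt)
        dist = np.minimum(dist, np.linalg.norm(features - features[nxt], axis=1))
    return features[selected]

def nearest_distance(queries, coreset):
    """Anomaly score sketch: Euclidean distance to the nearest coreset point."""
    d = np.linalg.norm(queries[:, None, :] - coreset[None, :, :], axis=2)
    return d.min(axis=1)
```

Fitting consists only of selecting points from precomputed features, which is why the approach needs no gradient-based training.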

Image Dividing

In PatchCore, image features are extracted using a ResNet-type classifier trained on ImageNet (Deng et al. 2009). This means the achievable temporal resolution for anomaly detection matches the resolution of the classifier’s input images. In this study, we use PatchCore as implemented in Anomalib (Akcay et al. 2022), employing a pre-trained Wide ResNet-50-2, whose resolution is 256, provided by Torchvision. Therefore, the entire image must be divided using a window size of . We chose and set the number of discrete points in the frequency direction to and for PCA mapping. is used for random mapping. While previous studies (Wu et al. 2022; Xu et al. 2021) used non-overlapping windows, our preliminary tests with PatchCore for ITF-TAD showed missed anomalies at image edges. To address this, we set the stride width to .
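The division into overlapping windows can be sketched as below. The `window` and `stride` arguments are placeholders for the values chosen in the study, and appending a final window flush with the end of the series is an assumption made here so that every time index is covered.

```python
def window_starts(length, window, stride):
    """Start indexes of sliding windows of size `window` over `length` time steps."""
    if length < window:
        raise ValueError("series is shorter than one window")
    starts = list(range(0, length - window + 1, stride))
    if starts[-1] != length - window:
        # Assumed convention: add a last window aligned with the end of the series.
        starts.append(length - window)
    return starts
```

A stride smaller than the window makes neighboring images overlap, so an anomaly that sits at the edge of one image also appears nearer the interior of another.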

Anomaly Score Computation

After creating images for both training and test data using the described process, PatchCore evaluates the anomaly score for each pixel and converts these scores back into a time series. For simplicity, we first consider the case where the time series is divided without overlap (). Here, the anomaly score for each image forms an matrix. When , concatenating these anomaly score matrices in the time direction yields a large anomaly score matrix . At each time index , the anomaly score is defined using the maximum value in the frequency direction as . If multiple images share the same (), the highest anomaly score among them is taken as the final anomaly score.
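The conversion from per-pixel score maps back to a time series can be sketched as follows, assuming each map has shape `(frequency, window)` and is paired with the start index of its window. The function name and argument layout are illustrative only.

```python
import numpy as np

def scores_to_series(starts, score_maps, length):
    """Collapse per-pixel score maps into one anomaly score per time index."""
    series = np.full(length, -np.inf)
    for start, score_map in zip(starts, score_maps):
        col_max = np.asarray(score_map).max(axis=0)      # maximum over frequency
        stop = start + len(col_max)
        # Where windows overlap, keep the highest score seen for that time index.
        series[start:stop] = np.maximum(series[start:stop], col_max)
    return series
```

For example, two maps of width 4 starting at indexes 0 and 2 yield a series of length 6 in which indexes 2 and 3 take the larger of the two overlapping values.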

When using scalograms, corrections are needed at the start and end points of the anomaly scores in the test data. Near the temporal edges of the scalogram, accurate expression of frequency-time characteristics is challenging owing to the influence of the time series’ end, known as the cone of influence. In practical applications, these edge regions are typically excluded from anomaly detection evaluation. Therefore, in this study, after calculating the anomaly scores, the scores within points from the start and end of the test data are replaced with the minimum anomaly score found within the training and test data. This replacement replicates the scenario where anomalies cannot be detected in these regions.
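This edge correction amounts to a few lines of code. In the sketch below, `n_edge` stands in for the number of edge points used in the study, and the function name is hypothetical.

```python
def mask_edges(test_scores, train_scores, n_edge):
    """Replace scores within n_edge points of either end with the global minimum."""
    floor = min(min(train_scores), min(test_scores))
    out = list(test_scores)
    n = min(n_edge, len(out))
    for i in range(n):
        out[i] = floor                    # start of the test data
        out[len(out) - 1 - i] = floor     # end of the test data
    return out
```

Because the replaced values equal the lowest score observed anywhere, no threshold that flags any other point can flag the edge regions.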

For actual anomaly detection, an arbitrary threshold is used to determine anomalies, where time indexes with are considered anomalous. The choice of this threshold depends on the availability of real data specific to each case, especially the availability of anomaly data, and thus is not discussed here. In the benchmark datasets used in this study, the threshold that maximizes the F1 score is adopted, as in previous research.
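Selecting the F1-maximizing threshold on a labeled benchmark can be sketched as an exhaustive search over the observed score values. Treating a score greater than or equal to the threshold as anomalous is an assumption of this sketch, as is the function name.

```python
def best_f1(scores, labels):
    """Return (best F1, threshold) over all thresholds taken from the scores."""
    best = (0.0, None)
    for thr in sorted(set(scores)):
        pred = [s >= thr for s in scores]
        tp = sum(1 for p, l in zip(pred, labels) if p and l)
        fp = sum(1 for p, l in zip(pred, labels) if p and not l)
        fn = sum(1 for p, l in zip(pred, labels) if not p and l)
        f1 = 2 * tp / (2 * tp + fp + fn) if tp else 0.0
        if f1 > best[0]:
            best = (f1, thr)
    return best
```

This search uses ground-truth labels, so it is suitable for benchmark comparison only and does not replace a threshold chosen from operational data.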

4 Experiments

4.1 Experimental Setup

Benchmark Datasets

To evaluate ITF-TAD performance, we use five univariate and multivariate time-series benchmark datasets: UCR (Eamonn Keogh and Agrawal https://compete.hexagon-ml.com/practice/competition/39/), PSM (Abdulaal, Liu, and Lancewicki 2021), SMAP (Hundman et al. 2018), MSL (Hundman et al. 2018), and SMD (Su et al. 2019). Details of these datasets are summarized in Table 1. Missing value sections included in the training interval of the PSM dataset are excluded. A duplicated subdataset in SMAP is omitted. Additional features of each dataset are provided in Appendix A.

| Dataset | Dimensions | Subdatasets | #Training data | #Test data | Anomaly rate [%] |
|---|---|---|---|---|---|
| UCR | 1 | 250 | 5302449 | 14051317 | 0.35 |
| PSM | 25 | 1 | 129784 | 87841 | 27.76 |
| SMAP | 25 | 54 | 138004 | 435826 | 12.82 |
| MSL | 55 | 27 | 58317 | 73729 | 10.48 |
| SMD | 38 | 28 | 708405 | 708420 | 4.16 |

Baselines

For comparison with ITF-TAD, we selected seven state-of-the-art baselines: GPT2-FPT (Zhou et al. 2023), TimesNet (Wu et al. 2022), DiffAD (Xiao et al. 2023b), TranAD (Tuli, Casale, and Jennings 2022), Anomaly Transformer (Xu et al. 2021), GDN (Deng and Hooi 2021), and LSTM (Hundman et al. 2018). All these baselines are based on deep learning and require neural network training or fine-tuning. If the hyperparameters for each dataset were specified in the authors’ source code, those values were used. For datasets not covered in the papers, hyperparameters from similar datasets were applied, referencing the subdataset’s data size. Detailed information on these settings is provided in Appendix B.

Hyperparameters

The hyperparameter values for ITF-TAD are consistent across all datasets. Additionally, the parameters used within PatchCore in ITF-TAD are consistent across all datasets. Specifically, the coreset sampling ratio is set to 0.01, and the number of neighbors used for calculating anomaly scores is set to 9.

Preprocessing of Anomaly Score before Evaluating Metrics

To ensure fair comparison using common metrics such as F1 score and AUCPR, we introduce a preprocessing method called score partitioning (SP) for anomaly scores. In prior research, a correction known as point adjustment (PA) has been frequently applied when calculating evaluation metrics. PA considers an entire segment of consecutive anomalies as identified if at least one point within the segment is recognized as anomalous. PA itself is a practical and reasonable correction in existing benchmark datasets, where anomaly labels are excessively lengthy or encompass normal intervals, leading to a high occurrence of false negatives without PA (see Appendix G for details). However, as pointed out by Doshi, Abudalou, and Yilmaz (2022) and Kim et al. (2022), using PA alone can be flawed, as it may achieve state-of-the-art performance even with random anomaly scores. To address this flaw, we combine SP with PA to ensure a fair comparison. The process and effects of SP on anomaly scores are illustrated in Fig. 2. First, we apply PA to anomaly scores by substituting each interval labeled as abnormal in the ground truth with the maximum anomaly score found in that interval. Following this, we employ SP to divide the anomaly scores into non-overlapping sections with a window size of . The highest anomaly score within each section is then assigned as the representative score for that section. For ground truth labels, any section containing at least one abnormal point is classified as abnormal. Since PA is applied in advance, segmenting an abnormal interval does not result in an increase in false negatives, even if labels are improperly assigned. We calculate metrics based on these representative anomaly scores.
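The two preprocessing steps described above can be sketched in plain Python. The function names and the `window` argument are illustrative; `window` stands in for the SP window size used in the study.

```python
def point_adjust(scores, labels):
    """PA: fill each labeled anomaly interval with the maximum score inside it."""
    out = list(scores)
    i, n = 0, len(labels)
    while i < n:
        if labels[i]:
            j = i
            while j < n and labels[j]:
                j += 1
            out[i:j] = [max(out[i:j])] * (j - i)
            i = j
        else:
            i += 1
    return out

def score_partition(scores, labels, window):
    """SP: keep one representative score and label per non-overlapping section."""
    sec_scores, sec_labels = [], []
    for start in range(0, len(scores), window):
        sec_scores.append(max(scores[start:start + window]))
        sec_labels.append(int(any(labels[start:start + window])))
    return sec_scores, sec_labels
```

Metrics would then be computed on the output of `score_partition(point_adjust(scores, labels), labels, window)`. After SP, a long labeled interval contributes only a handful of sections instead of many individual points, which limits how much a single lucky hit can inflate the score under PA.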

Evaluation Metrics

For evaluation metrics, we employ the best F1 score and AUCPR with SP, denoted as F1*-SP and AUCPR-SP. F1*-SP represents the highest F1 score achieved with SP when the threshold varies, and this optimal threshold is used for visualizing anomaly scores. Both F1*-SP and AUCPR-SP are calculated for each subdataset, with their averages used for comparison across datasets. In the UCR dataset, which contains a single artificially added anomaly in each subdataset, the number of correct answers serves as an evaluation metric. This metric counts the subdatasets where the highest anomaly score falls within a range of the ground truth segment, a method used for ranking participants in the KDD Cup 2021. Since these evaluation metrics alone may not fully capture model performance, we also visualize representative cases through time series of anomaly scores for a more detailed comparison.

4.2 Main Results

The results of applying ITF-TAD and seven baselines to each benchmark dataset are listed in Table 2. ITF-TAD comes in two variants: ITF-TAD-PCA, which uses PCA, and ITF-TAD-RM, which uses random matrices for mapping. For univariate time series like the UCR dataset, only ITF-TAD-PCA metrics are documented. ITF-TAD-RM, using random matrices, was run five times with different random seeds, and the mean metrics are reported. Table 2 also includes results from the two-sided Wilcoxon signed rank test without multiple testing correction for datasets consisting of multiple subdatasets (UCR, SMAP, MSL, and SMD) as five types of symbols. The symbols “”“” and “”“” indicate ITF-TAD-PCA has significantly higher and lower results at significance levels of , while the symbol “” indicates there is no significant difference. Metrics other than those in Table 2 are summarized in Appendix F.

| Dataset | Metric | ITF-TAD-PCA (Ours) | ITF-TAD-RM (Ours) | GPT2-FPT (2023) | TimesNet (2023) | DiffAD (2023) | TranAD (2022) | A.Trans. (2022) | GDN (2021) | LSTM (2018) |
|---|---|---|---|---|---|---|---|---|---|---|
| UCR | F1*-SP | 0.724 | - | 0.231 | 0.244 | 0.199 | 0.306 | 0.237 | 0.512 | 0.197 |
| UCR | AUCPR-SP | 0.819 | - | 0.504 | 0.521 | 0.487 | 0.560 | 0.526 | 0.671 | 0.513 |
| UCR | Correct | 150 | - | 22 | 33 | 23 | 37 | 17 | 99 | 20 |
| PSM | F1*-SP | 0.889 | 0.889 | 0.886 | 0.860 | 0.821 | 0.851 | 0.844 | 0.812 | 0.569 |
| PSM | AUCPR-SP | 0.915 | 0.895 | 0.949 | 0.934 | 0.842 | 0.901 | 0.913 | 0.883 | 0.435 |
| SMAP | F1*-SP | 0.720 | 0.730 | 0.651 | 0.628 | 0.518 | 0.731 | 0.577 | 0.750 | 0.620 |
| SMAP | AUCPR-SP | 0.797 | 0.798 | 0.719 | 0.715 | 0.642 | 0.803 | 0.681 | 0.798 | 0.685 |
| MSL | F1*-SP | 0.727 | 0.724 | 0.606 | 0.659 | 0.598 | 0.713 | 0.575 | 0.727 | 0.719 |
| MSL | AUCPR-SP | 0.737 | 0.756 | 0.637 | 0.672 | 0.626 | 0.751 | 0.616 | 0.768 | 0.727 |
| SMD | F1*-SP | 0.645 | 0.660 | 0.844 | 0.850 | 0.330 | 0.800 | 0.602 | 0.808 | 0.571 |
| SMD | AUCPR-SP | 0.585 | 0.620 | 0.833 | 0.829 | 0.266 | 0.790 | 0.542 | 0.779 | 0.514 |

| Dataset | Metric | ITF-TAD-PCA Full (RGB) | w/o Morlet (GB) | w/o Ricker (RB) | w/o Frequency (RG) | only Ricker (G) | only Morlet (R) |
|---|---|---|---|---|---|---|---|
| UCR | F1*-SP | 0.724 | 0.732 | 0.727 | 0.746 | 0.743 | 0.777 |
| UCR | AUCPR-SP | 0.819 | 0.828 | 0.808 | 0.835 | 0.838 | 0.847 |
| UCR | Correct | 150 | 149 | 151 | 152 | 151 | 163 |
| PSM | F1*-SP | 0.889 | 0.873 | 0.833 | 0.863 | 0.882 | 0.836 |
| PSM | AUCPR-SP | 0.915 | 0.923 | 0.860 | 0.903 | 0.924 | 0.911 |
| SMAP | F1*-SP | 0.720 | 0.607 | 0.542 | 0.587 | 0.598 | 0.588 |
| SMAP | AUCPR-SP | 0.797 | 0.718 | 0.657 | 0.691 | 0.719 | 0.680 |
| MSL | F1*-SP | 0.727 | 0.715 | 0.730 | 0.687 | 0.744 | 0.671 |
| MSL | AUCPR-SP | 0.737 | 0.744 | 0.770 | 0.705 | 0.744 | 0.707 |
| SMD | F1*-SP | 0.645 | 0.683 | 0.645 | 0.620 | 0.657 | 0.642 |
| SMD | AUCPR-SP | 0.585 | 0.640 | 0.563 | 0.560 | 0.595 | 0.574 |

ITF-TAD achieves significantly higher performance in the UCR dataset and comparable results with the best baseline models in PSM, SMAP, and MSL, all without requiring any neural network training. Specifically, the number of correct answers in UCR is remarkable; its correct answer rate of 0.6 (150/250) also outperforms the best-reported value of 0.47 by Rewicki, Denzler, and Niebling (2023). This result indicates that scalograms are more informative than raw time-series data themselves and that combining scalograms with an image foundation model yields high-performance TAD. The differences between ITF-TAD-PCA and ITF-TAD-RM are minor. Because PCA requires an eigenvalue decomposition and is therefore more computationally intensive, ITF-TAD-RM should be the more practical choice for real-world applications. The computational times for each model are shown in Appendix C.

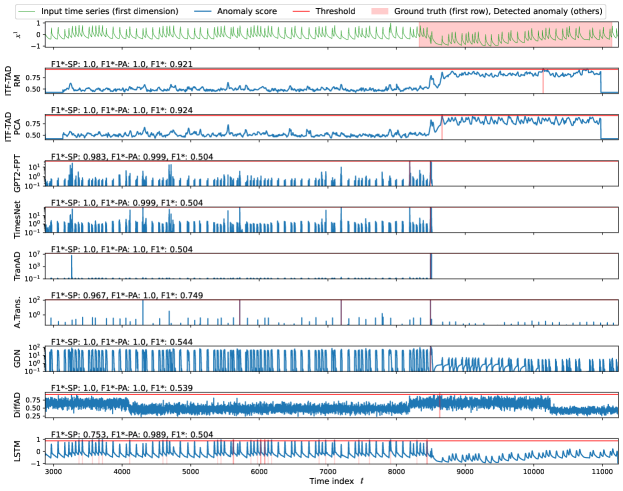

Figure 3 presents a case from the UCR dataset (UCR-060), showing the time series and anomaly scores from ITF-TAD, GPT2-FPT, TimesNet, TranAD, and GDN for the test section. The threshold used maximizes the F1*-SP shown in the top left of each model’s anomaly scores. Only ITF-TAD’s anomaly scores clearly spike at the anomaly labels. On the other hand, GDN generates two segments of false positives, and the other models generate numerous false positives. They have sufficiently low F1*-SP as intended, while the best F1 scores with PA alone (F1*-PA) shown in Fig. 3 are improperly high. These results demonstrate the validity of the correction using SP.

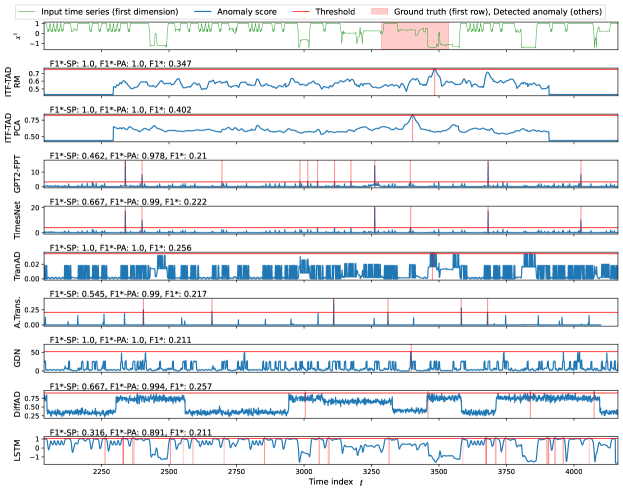

ITF-TAD has modest results in the SMD dataset, primarily owing to the unique distributions of its anomaly scores, which cause underestimations of its performance. SMD is characterized by the presence of numerous relatively short anomaly labels within each subdataset. Fig. 4 shows the time series and anomaly scores from ITF-TAD-PCA, GPT2-FPT, TimesNet, TranAD, and GDN for the test section of SMD-2-3. Although all methods detect many anomalies with few false detections, ITF-TAD-PCA has a particularly low F1*-SP score. This is because scalograms, which ITF-TAD uses, produce a wider distribution of anomaly scores around peaks, such as those near , increasing the number of false positives in metric calculations. As shown in magenta text in Fig. 4, ITF-TAD-PCA achieves the highest F1*-SP score in the interval , suggesting that the wider distribution of anomaly scores can underestimate ITF-TAD’s performance. Similar score distributions are observed in 22 out of 28 subdatasets of SMD. Although using SP can alleviate this underestimation, excessively increasing the window size for the SP metrics makes accurate evaluation difficult. Hence, qualitative comparisons with visualization are needed. The effects of on performance comparison are summarized in Appendix E.

4.3 Ablation Study

To validate the effects of dual aggregated scalograms and frequency embedding in the image via channel embedding, we conducted anomaly detection by removing each channel from ITF-TAD-PCA. The results, shown in Table 3, confirm the usefulness of each channel through the F1*-SP and AUCPR-SP metrics for SMAP, and the F1*-SP for PSM. Notably, removing the aggregated scalogram of the Ricker wavelet largely degrades performance, suggesting it is the most effective component for image-based TAD.

In the univariate UCR dataset, simpler images, such as those using only the complex Morlet wavelet, have shown improved performance. This suggests that in univariate time series, the image needs less information compared to multivariate cases, making simplicity and clarity more important. This principle may also enhance performance in multivariate TAD by focusing on clearer image mapping. Additionally, in the SMD dataset, performance improves when the aggregated scalogram of the complex Morlet wavelet is removed. Scalograms of the complex Morlet wavelet often have a wide distribution in the time direction in the lower frequency region, at the bottom of the scalograms, as shown in Fig. 1. This contributes to the wider distribution of anomaly scores observed in Fig. 4. Therefore, removing it led to improved performance. However, because the scalogram of the Ricker wavelet also has a wide distribution, the issue of underestimation still persists.

5 Conclusions

This study introduces the ITF-TAD approach, which leverages image foundation models to efficiently detect anomalies in time-series data without requiring neural network training. ITF-TAD transforms each dimension of a time series into scalograms using CWT with two types of mother wavelets. These scalograms are then aggregated into a single aggregated scalogram through PCA or random matrices. Using PatchCore as the image anomaly detection model and a pre-trained ResNet-type image classifier as the foundation model, ITF-TAD achieves superior or comparable performance across five univariate and multivariate time-series benchmark datasets, all without the need for neural network training. However, the study has some limitations. The methods for generating aggregated scalograms without neural network training still have room for improvement. Additionally, the computation time for CWT and anomaly detection using PatchCore is not significantly different from that of existing deep models, indicating that the full benefits of using foundation models have yet to be realized. Future work aims to establish criteria for information compression suitable for TAD to introduce more effective mapping methods. We also plan to explore the use of foundation models from other fields to develop more time-efficient training-free TAD solutions.

References

- Abdulaal, Liu, and Lancewicki (2021) Abdulaal, A.; Liu, Z.; and Lancewicki, T. 2021. Practical approach to asynchronous multivariate time series anomaly detection and localization. In Proceedings of the 27th ACM SIGKDD conference on knowledge discovery & data mining, 2485–2494.

- Akcay et al. (2022) Akcay, S.; Ameln, D.; Vaidya, A.; Lakshmanan, B.; Ahuja, N.; and Genc, U. 2022. Anomalib: A deep learning library for anomaly detection. In 2022 IEEE International Conference on Image Processing (ICIP), 1706–1710. IEEE.

- Anderson and Kendall (1976) Anderson, O. D.; and Kendall, M. G. 1976. Time-Series. 2nd edn. The Statistician, 25: 308.

- Aslan and Akin (2022) Aslan, Z.; and Akin, M. 2022. A deep learning approach in automated detection of schizophrenia using scalogram images of EEG signals. Physical and Engineering Sciences in Medicine, 45(1): 83–96.

- Audibert et al. (2020) Audibert, J.; Michiardi, P.; Guyard, F.; Marti, S.; and Zuluaga, M. A. 2020. USAD: UnSupervised Anomaly Detection on Multivariate Time Series. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, KDD ’20, 3395–3404. New York, NY, USA: Association for Computing Machinery. ISBN 9781450379984.

- Breunig et al. (2000) Breunig, M. M.; Kriegel, H.-P.; Ng, R. T.; and Sander, J. 2000. LOF: identifying density-based local outliers. In Proceedings of the 2000 ACM SIGMOD international conference on Management of data, 93–104.

- Chauhan and Vig (2015) Chauhan, S.; and Vig, L. 2015. Anomaly detection in ECG time signals via deep long short-term memory networks. In 2015 IEEE international conference on data science and advanced analytics (DSAA), 1–7. IEEE.

- Chen et al. (2021) Chen, Z.; Chen, D.; Zhang, X.; Yuan, Z.; and Cheng, X. 2021. Learning graph structures with transformer for multivariate time-series anomaly detection in IoT. IEEE Internet of Things Journal, 9(12): 9179–9189.

- Copiaco et al. (2023) Copiaco, A.; Himeur, Y.; Amira, A.; Mansoor, W.; Fadli, F.; Atalla, S.; and Sohail, S. S. 2023. An innovative deep anomaly detection of building energy consumption using energy time-series images. Engineering Applications of Artificial Intelligence, 119: 105775.

- Das et al. (2023) Das, A.; Kong, W.; Sen, R.; and Zhou, Y. 2023. A decoder-only foundation model for time-series forecasting. arXiv preprint arXiv:2310.10688.

- Deng and Hooi (2021) Deng, A.; and Hooi, B. 2021. Graph neural network-based anomaly detection in multivariate time series. In Proceedings of the AAAI conference on artificial intelligence, volume 35, 4027–4035.

- Deng et al. (2009) Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; and Fei-Fei, L. 2009. Imagenet: A large-scale hierarchical image database. In 2009 IEEE conference on computer vision and pattern recognition, 248–255. IEEE.

- Doshi, Abudalou, and Yilmaz (2022) Doshi, K.; Abudalou, S.; and Yilmaz, Y. 2022. Reward once, penalize once: Rectifying time series anomaly detection. In 2022 International Joint Conference on Neural Networks (IJCNN), 1–8. IEEE.

- Doshi, Yilmaz, and Uludag (2021) Doshi, K.; Yilmaz, Y.; and Uludag, S. 2021. Timely detection and mitigation of stealthy DDoS attacks via IoT networks. IEEE Transactions on Dependable and Secure Computing, 18(5): 2164–2176.

- Eamonn Keogh and Agrawal (https://compete.hexagon-ml.com/practice/competition/39/) Eamonn Keogh, U. N., Dutta Roy Taposh; and Agrawal, A. https://compete.hexagon-ml.com/practice/competition/39/. Multi-dataset time-series anomaly detection competition. Competition of International Conference on Knowledge Discovery & Data Mining 2021.

- Garcia et al. (2022) Garcia, G. R.; Michau, G.; Ducoffe, M.; Gupta, J. S.; and Fink, O. 2022. Temporal signals to images: Monitoring the condition of industrial assets with deep learning image processing algorithms. Proceedings of the Institution of Mechanical Engineers, Part O: Journal of Risk and Reliability, 236(4): 617–627.

- Grossmann and Morlet (1984) Grossmann, A.; and Morlet, J. 1984. Decomposition of Hardy functions into square integrable wavelets of constant shape. SIAM journal on mathematical analysis, 15(4): 723–736.

- He et al. (2016) He, K.; Zhang, X.; Ren, S.; and Sun, J. 2016. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, 770–778.

- Hundman et al. (2018) Hundman, K.; Constantinou, V.; Laporte, C.; Colwell, I.; and Soderstrom, T. 2018. Detecting spacecraft anomalies using lstms and nonparametric dynamic thresholding. In Proceedings of the 24th ACM SIGKDD international conference on knowledge discovery & data mining, 387–395.

- Khorrami and Moavenian (2010) Khorrami, H.; and Moavenian, M. 2010. A comparative study of DWT, CWT and DCT transformations in ECG arrhythmias classification. Expert systems with Applications, 37(8): 5751–5757.

- Kim et al. (2022) Kim, S.; Choi, K.; Choi, H.-S.; Lee, B.; and Yoon, S. 2022. Towards a Rigorous Evaluation of Time-Series Anomaly Detection. Proceedings of the AAAI Conference on Artificial Intelligence, 36(7): 7194–7201.

- Li et al. (2019) Li, D.; Chen, D.; Jin, B.; Shi, L.; Goh, J.; and Ng, S.-K. 2019. MAD-GAN: Multivariate anomaly detection for time series data with generative adversarial networks. In International conference on artificial neural networks, 703–716. Springer.

- Li et al. (2021) Li, Z.; Zhao, Y.; Han, J.; Su, Y.; Jiao, R.; Wen, X.; and Pei, D. 2021. Multivariate time series anomaly detection and interpretation using hierarchical inter-metric and temporal embedding. In Proceedings of the 27th ACM SIGKDD conference on knowledge discovery & data mining, 3220–3230.

- Malhotra et al. (2015) Malhotra, P.; Vig, L.; Shroff, G.; Agarwal, P.; et al. 2015. Long Short Term Memory Networks for Anomaly Detection in Time Series. In Esann, volume 2015, 89.

- Park, Hoshi, and Kemp (2018) Park, D.; Hoshi, Y.; and Kemp, C. C. 2018. A multimodal anomaly detector for robot-assisted feeding using an lstm-based variational autoencoder. IEEE Robotics and Automation Letters, 3(3): 1544–1551.

- Peng and Chu (2004) Peng, Z.; and Chu, F. 2004. Application of the wavelet transform in machine condition monitoring and fault diagnostics: a review with bibliography. Mechanical Systems and Signal Processing, 18(2): 199–221.

- Rewicki, Denzler, and Niebling (2023) Rewicki, F.; Denzler, J.; and Niebling, J. 2023. Is it worth it? Comparing six deep and classical methods for unsupervised anomaly detection in time series. Applied Sciences, 13(3): 1778.

- Ricker (1953) Ricker, N. 1953. The form and laws of propagation of seismic wavelets. Geophysics, 18(1): 10–40.

- Roth et al. (2022) Roth, K.; Pemula, L.; Zepeda, J.; Schölkopf, B.; Brox, T.; and Gehler, P. 2022. Towards total recall in industrial anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 14318–14328.

- Ruff et al. (2018) Ruff, L.; Vandermeulen, R.; Goernitz, N.; Deecke, L.; Siddiqui, S. A.; Binder, A.; Müller, E.; and Kloft, M. 2018. Deep one-class classification. In International conference on machine learning, 4393–4402. PMLR.

- Schölkopf et al. (2001) Schölkopf, B.; Platt, J. C.; Shawe-Taylor, J.; Smola, A. J.; and Williamson, R. C. 2001. Estimating the support of a high-dimensional distribution. Neural computation, 13(7): 1443–1471.

- Shen, Li, and Kwok (2020) Shen, L.; Li, Z.; and Kwok, J. 2020. Timeseries anomaly detection using temporal hierarchical one-class network. Advances in Neural Information Processing Systems, 33: 13016–13026.

- Song et al. (2024) Song, J.; Kim, K.; Oh, J.; and Cho, S. 2024. Memto: Memory-guided transformer for multivariate time series anomaly detection. Advances in Neural Information Processing Systems, 36.

- Su et al. (2019) Su, Y.; Zhao, Y.; Niu, C.; Liu, R.; Sun, W.; and Pei, D. 2019. Robust anomaly detection for multivariate time series through stochastic recurrent neural network. In Proceedings of the 25th ACM SIGKDD international conference on knowledge discovery & data mining, 2828–2837.

- Tax and Duin (2004) Tax, D. M.; and Duin, R. P. 2004. Support vector data description. Machine learning, 54: 45–66.

- Touvron et al. (2023) Touvron, H.; Martin, L.; Stone, K.; Albert, P.; Almahairi, A.; Babaei, Y.; Bashlykov, N.; Batra, S.; Bhargava, P.; Bhosale, S.; et al. 2023. Llama 2: Open foundation and fine-tuned chat models. arXiv preprint arXiv:2307.09288.

- Tuli, Casale, and Jennings (2022) Tuli, S.; Casale, G.; and Jennings, N. R. 2022. Tranad: Deep transformer networks for anomaly detection in multivariate time series data. arXiv preprint arXiv:2201.07284.

- Türk and Özerdem (2019) Türk, Ö.; and Özerdem, M. S. 2019. Epilepsy detection by using scalogram based convolutional neural network from EEG signals. Brain sciences, 9(5): 115.

- Vaswani et al. (2017) Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A. N.; Kaiser, Ł.; and Polosukhin, I. 2017. Attention is all you need. Advances in neural information processing systems, 30.

- Wang et al. (2023) Wang, H.; Luo, Z.; Yip, J. W.; Ye, C.; and Zhang, M. 2023. ECGGAN: A Framework for Effective and Interpretable Electrocardiogram Anomaly Detection. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 5071–5081.

- Woike, Abdul-Aziz, and Clem (2014) Woike, M.; Abdul-Aziz, A.; and Clem, M. 2014. Structural health monitoring on turbine engines using microwave blade tip clearance sensors. In Smart Sensor Phenomena, Technology, Networks, and Systems Integration 2014, volume 9062, 167–180. SPIE.

- Wu et al. (2022) Wu, H.; Hu, T.; Liu, Y.; Zhou, H.; Wang, J.; and Long, M. 2022. Timesnet: Temporal 2d-variation modeling for general time series analysis. arXiv preprint arXiv:2210.02186.

- Xiao et al. (2023a) Xiao, B.; Wu, H.; Xu, W.; Dai, X.; Hu, H.; Lu, Y.; Zeng, M.; Liu, C.; and Yuan, L. 2023a. Florence-2: Advancing a unified representation for a variety of vision tasks. arXiv preprint arXiv:2311.06242.

- Xiao et al. (2023b) Xiao, C.; Gou, Z.; Tai, W.; Zhang, K.; and Zhou, F. 2023b. Imputation-based Time-Series Anomaly Detection with Conditional Weight-Incremental Diffusion Models. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 2742–2751.

- Xu et al. (2021) Xu, J.; Wu, H.; Wang, J.; and Long, M. 2021. Anomaly transformer: Time series anomaly detection with association discrepancy. arXiv preprint arXiv:2110.02642.

- Zhang et al. (2006) Zhang, S.; Chakrabarti, A.; Ford, J.; and Makedon, F. 2006. Attack detection in time series for recommender systems. In Proceedings of the 12th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, KDD ’06, 809–814. New York, NY, USA: Association for Computing Machinery. ISBN 1595933395.

- Zhou et al. (2023) Zhou, T.; Niu, P.; Sun, L.; Jin, R.; et al. 2023. One fits all: Power general time series analysis by pretrained lm. Advances in neural information processing systems, 36: 43322–43355.

- Zong et al. (2018) Zong, B.; Song, Q.; Min, M. R.; Cheng, W.; Lumezanu, C.; Cho, D.; and Chen, H. 2018. Deep autoencoding gaussian mixture model for unsupervised anomaly detection. In International conference on learning representations.

Appendices

Appendix A Dataset Details

UCR

The UCR dataset (Eamonn Keogh and Agrawal https://compete.hexagon-ml.com/practice/competition/39/), adopted for the KDD Cup 2021, is a collection of univariate time series comprising 250 subdatasets. It consists of time-series data from various fields and contains a single artificially added anomaly in each subdataset. Although the dataset is univariate, the clarity and reliability of its anomaly labels, which result from the artificial anomalies, make it suitable for assessing the compatibility of image-based TAD.

PSM

The pooled server metrics (PSM) dataset (Abdulaal, Liu, and Lancewicki 2021) is collected internally from multiple application server nodes at eBay and includes 25 dimensions with no subdatasets. Anomalies appear in both the training and test sections, but labels are only available for the test section. This set-up requires performance evaluation as an unsupervised method rather than a semi-supervised method.

SMAP & MSL

The soil moisture active passive (SMAP) satellite and Mars science laboratory (MSL) rover datasets (Hundman et al. 2018), collected by NASA, are related to spacecraft and contain 25 and 55 dimensions, respectively. The SMAP dataset has 54 subdatasets (nominally 55, but one is omitted owing to duplication), while the MSL dataset includes 27 subdatasets. In both datasets, the first dimension is usually continuous, while the remaining dimensions are often binary, with some subdatasets containing discrete values even in the first dimension. Although CWT may not be suitable for subdatasets with many binary values, we include these datasets because spacecraft-related datasets are rare and these two are widely used.

SMD

The server machine dataset (SMD) (Su et al. 2019) is a 38-dimensional dataset acquired by a telecommunications operator and contains 28 subdatasets. This dataset was chosen because the proportion of anomalies it contains is relatively low, usually allowing for reasonable comparisons.

Appendix B Hyperparameters Used in Baselines

The hyperparameters for the baselines are detailed in Table 4. All source code was downloaded from the authors’ GitHub repositories. When configuration files for specific datasets were available, those settings were used. For TranAD and GDN, the default parameters were applied across datasets owing to the absence of dataset-specific configuration files; however, for the univariate UCR dataset, the topk parameter of GDN was set to 1. For the many baselines lacking UCR-specific configurations, settings from the SMD dataset, which has similar time-series lengths, were used, and parameters dependent on the number of dimensions were set to 1 for UCR. For TimesNet, the number of epochs was set to 3 for UCR owing to computational constraints. The Anomaly Transformer used a common configuration for PSM, SMAP, and MSL, which was also applied to UCR. For LSTM, most hyperparameters remained at their default values, but epochs and lstm_batch_size were set as specified in Table 4 to limit computational time. For UCR, the values in the table were chosen according to the length of each subdataset.

| Baseline | UCR | PSM | SMAP | MSL | SMD |

|---|---|---|---|---|---|

| GPT2-FPT | same as SMD | provided | provided | provided | provided |

| TimesNet | train_epochs: 3; others: same as SMD | provided | provided | provided | provided |

| DiffAD | same as SMD | provided | provided | provided | provided |

| TranAD | default | default | default | default | default |

| A.Trans. | same as PSM, SMAP, MSL | provided | provided | provided | provided |

| GDN | topk: 1; others: default | default | default | default | default |

| LSTM: epochs | 4 or 10 | 4 | 20 | 20 | 20 |

| LSTM: lstm_batch_size | 128, 512, 1024, or 2048 | 2048 | 64 (default) | 64 (default) | 512 |

| LSTM: others | default | default | default | default | default |

Appendix C Computational Resources and Runtime

The numerical experiments were conducted on two types of server machines, as detailed in Table 5, which also lists the benchmark datasets processed on each machine.

| Node name | V100 | A100 |

|---|---|---|

| GPU | NVIDIA V100 for NVLink 16GiB HBM2 | NVIDIA A100 for NVLink 40GiB HBM2 |

| CPU | Intel Xeon Gold 6148 Processor 2.4 GHz, 20 Cores | Intel Xeon Platinum 8360Y Processor 2.4 GHz, 36 Cores |

| Memory [GiB] | 32 | 32 |

| Dataset applied | UCR, SMAP, MSL, SMD | UCR-239 to 241, PSM |

| Dataset | Part | ITF-TAD-PCA (Ours) | ITF-TAD-RM (Ours) | GPT2-FPT (2023) | TimesNet (2023) | DiffAD (2023) | TranAD (2022) | A.Trans. (2022) | GDN (2021) | LSTM (2018) |

|---|---|---|---|---|---|---|---|---|---|---|

| PSM | Entire | 16.2 | 14.2 | 62.6 | 58.7 | 35.1 | 2.8 | 9.6 | 8.8 | 15.8 |

| PSM | Imaging | 10.7 | 8.4 | - | - | - | - | - | - | - |

| SMAP | Entire | 54 | 58.6 | 224.6 | 227.2 | 99.5 | 13.3 | 26.7 | 23.4 | 154.3 |

| SMAP | Imaging | 24.2 | 26.5 | - | - | - | - | - | - | - |

| SMD | Entire | 574.8 | 135.7 | 16.6 | 30.4 | 97.2 | 22.9 | 6.9 | 87.6 | 343.8 |

| SMD | Imaging | 530.1 | 89.7 | - | - | - | - | - | - | - |

Table 6 shows the total runtime of each method in minutes. ITF-TAD’s runtime is comparable to that of deep models. Although ITF-TAD avoids training neural networks, it requires considerable time for generating scalograms, mapping them into images, and selecting the coreset for PatchCore, which is used in image anomaly detection. Unlike deep models, which additionally require extensive time for hyperparameter optimization, ITF-TAD requires no hyperparameter tuning, which makes it practical. For SMD, which contains a large number of data points in each subdataset, the imaging computation time for ITF-TAD-PCA is particularly high, so ITF-TAD-RM is superior in terms of practicality.

The computation times for GPT2-FPT and TimesNet depend strongly on the stride of the time window used for anomaly detection. For SMD, the stride is set to 100, whereas for the other datasets it is set to 1. This results in a much shorter runtime for SMD.
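
The dependence of runtime on the stride can be made concrete with a short calculation: a window-based detector scores one window per stride step, so a larger stride means proportionally fewer windows to process. The sketch below is only an illustration; the function name `num_windows` and the series and window lengths are hypothetical and are not taken from the baselines' code.

```python
def num_windows(n_points: int, window: int, stride: int) -> int:
    """Number of sliding windows of length `window` taken every `stride` points."""
    if n_points < window:
        return 0
    return (n_points - window) // stride + 1


# Hypothetical sizes, chosen only to show the scaling with the stride.
n_points, window = 100_000, 100
for stride in (1, 100):
    print(stride, num_windows(n_points, window, stride))
```

With these numbers, a stride of 100 leaves roughly one hundredth as many windows as a stride of 1, which is why the stride setting can outweigh the dataset size in the total runtime.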

Appendix D Random Mapping

D.1 Random Matrix Generation

The binary random matrix used in the random mapping is generated using Latin hypercube sampling (LHS). Figure 5 illustrates the process for a case in which two elements taking the value of 1 are determined for each frequency through LHS. To select at most a prescribed number of dimensions (two in Fig. 5) from each frequency, sample points are first generated using LHS within the two-dimensional space spanned by the dimension and frequency axes. Next, this space is divided into regions, one for each pair of dimension and frequency; a region is assigned 1 if it contains a sample point and 0 otherwise. This process yields the random matrix used for the scalograms of a given mother wavelet. The number of elements taking the value of 1 for each frequency is at most, rather than exactly, the prescribed number because, as seen in Figure 5 at the region with indices (1, 1), multiple sample points may fall within the same region, in which case the count falls below the prescribed number. In this way, each dimension is mapped equally to the frequencies.
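
The procedure can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions rather than the code used in this study: the function name `lhs_binary_matrix` and its parameters are hypothetical, rows are taken to index dimensions and columns to index frequencies, and the number of sample points is assumed to be `k * n_freqs`, so that each frequency receives exactly `k` points.

```python
import numpy as np


def lhs_binary_matrix(n_dims: int, n_freqs: int, k: int, seed=None) -> np.ndarray:
    """Binary (n_dims, n_freqs) matrix with at most k ones per column."""
    rng = np.random.default_rng(seed)
    n = k * n_freqs  # assumed number of sample points
    # Latin hypercube sample in the unit square: each axis is split into n
    # strata, and every stratum on each axis holds exactly one point.
    u = (rng.permutation(n) + rng.random(n)) / n  # dimension axis
    v = (np.arange(n) + rng.random(n)) / n        # frequency axis
    # Map each point to the (dimension, frequency) region that contains it.
    rows = np.minimum((u * n_dims).astype(int), n_dims - 1)
    cols = np.minimum((v * n_freqs).astype(int), n_freqs - 1)
    R = np.zeros((n_dims, n_freqs), dtype=int)
    R[rows, cols] = 1  # points sharing a region collapse into a single 1
    return R


R = lhs_binary_matrix(n_dims=25, n_freqs=40, k=2, seed=0)
print(R.shape, R.sum(axis=0).min(), R.sum(axis=0).max())
```

Because the strata along the frequency axis are balanced, every column receives exactly `k` sample points, and the column sum drops below `k` only when two of them land in the same region. The stratification along the dimension axis spreads the points evenly over the dimensions.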

D.2 Effects of Random Matrix Sparsity on Performance

The random matrix in Eq. 4 contains a prescribed number of elements equal to 1 in each column, and a single setting of this number is used in the main results. We investigated the effect of this number, which controls the sparsity of the random matrix, across the PSM, SMAP, MSL, and SMD datasets, as shown in Table 7. Since the random matrix is not used for univariate time series, the UCR dataset is excluded from this investigation. When the number is 2, two elements in each column are set to one, regardless of the number of dimensions. Each experiment was repeated five times with different random seeds, and the average results were used for comparison. Although the impact of this number is limited, there was a noticeable performance drop for the MSL dataset when it was set to 2. This likely occurred because MSL has the highest number of dimensions (55), and selecting only 2 dimensions resulted in insufficient information being embedded in the images.

| Dataset | Metric | ITF-TAD-RM | | | |

|---|---|---|---|---|---|

| PSM | F1*-SP | 0.882 | 0.889 | 0.869 | 0.869 |

| PSM | AUCPR-SP | 0.890 | 0.895 | 0.907 | 0.907 |

| SMAP | F1*-SP | 0.715 | 0.730 | 0.720 | 0.720 |

| SMAP | AUCPR-SP | 0.781 | 0.798 | 0.794 | 0.794 |

| MSL | F1*-SP | 0.725 | 0.724 | 0.696 | 0.652 |

| MSL | AUCPR-SP | 0.744 | 0.756 | 0.730 | 0.705 |

| SMD | F1*-SP | 0.643 | 0.660 | 0.671 | 0.667 |

| SMD | AUCPR-SP | 0.599 | 0.620 | 0.636 | 0.642 |

Appendix E Effects of Window Size in Score Partitioning

Given that SP metrics depend on the window size, we conducted a parametric study on this variable. Table 8 shows metrics evaluated at different window sizes, some of which are defined relative to the number of test data points in each subdataset. In most cases, the ranking among models remained consistent. However, for the SMAP and MSL datasets, GDN surpasses ITF-TAD only at one of these settings. For SMAP and MSL, this setting yields a very small window, given the number of test data points in their subdatasets. Such a small window size nearly eliminates the benefit of SP, leaving only the effects of PA.

Appendix F Comparison Using Other Evaluation Metrics

To further evaluate our models, we used three variants of key metrics: the best F1 score (F1*), AUCPR, and the area under the receiver operating characteristics curve (AUROC). These variations include metrics without any adjustments, with a PA adjustment, and with a SP adjustment, denoted by suffixes such as F1*-PA and F1*-SP. All metrics were calculated for each problem in the dataset, and their average values were used for comparison. For the UCR dataset, we focused on the number of correct answers. Since participants in the KDD Cup tuned their models specifically for the UCR dataset, and some models assumed only one anomaly per problem, a direct comparison of correct answers with KDD Cup is not feasible.
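
For reference, the unadjusted and PA-adjusted variants of the best F1 score can be sketched as follows. The sketch uses the point-adjustment convention common in the TAD literature, in which every point of a labeled anomaly segment is counted as detected once any point in that segment is flagged. The function names are hypothetical, the threshold search over the unique score values is an illustrative choice, and the SP adjustment is not reproduced here.

```python
import numpy as np


def point_adjust(pred, label) -> np.ndarray:
    """Mark a whole labeled segment as detected if any point in it is flagged."""
    pred = np.asarray(pred, dtype=bool).copy()
    label = np.asarray(label, dtype=bool)
    # Locate contiguous anomaly segments [start, end) in the labels.
    edges = np.diff(np.concatenate(([False], label, [False])).astype(int))
    starts = np.flatnonzero(edges == 1)
    ends = np.flatnonzero(edges == -1)
    for s, e in zip(starts, ends):
        if pred[s:e].any():
            pred[s:e] = True
    return pred


def f1_score(pred: np.ndarray, label: np.ndarray) -> float:
    tp = np.sum(pred & label)
    fp = np.sum(pred & ~label)
    fn = np.sum(~pred & label)
    return 0.0 if tp == 0 else float(2 * tp / (2 * tp + fp + fn))


def best_f1(score, label, adjust: bool = False) -> float:
    """Best F1 over all thresholds taken from the observed score values."""
    score = np.asarray(score, dtype=float)
    label = np.asarray(label, dtype=bool)
    best = 0.0
    for th in np.unique(score):
        pred = score >= th
        if adjust:
            pred = point_adjust(pred, label)
        best = max(best, f1_score(pred, label))
    return best


# Toy example (hypothetical): one anomaly segment of length 3, hit at one point.
label = [0, 0, 1, 1, 1, 0, 0, 0]
score = [0.1, 0.2, 0.1, 0.9, 0.1, 0.1, 0.8, 0.1]
print(best_f1(score, label), best_f1(score, label, adjust=True))
```

In the toy example a single high score inside the segment is enough for the PA-adjusted score to reach its maximum, while the unadjusted score stays much lower. This gap is the reason all three variants are reported side by side.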

Table 9 presents a performance comparison of each model using these evaluation metrics. In UCR, SMAP, and MSL, ITF-TAD demonstrated excellent results in metrics without any adjustment. The PSM dataset, which lacks subdatasets, contains a mix of long and short anomalies, as shown in Fig. 7. GDN and TranAD achieved the two highest values in unadjusted metrics for PSM because their anomaly scores broadly increased during long anomalies. However, as the figure indicates, ITF-TAD excels in detecting short anomalies, making SP-adjusted metrics more appropriate for comparison. In SMD, since the anomaly labels are short, the trends in SP-adjusted and PA-adjusted metrics are similar.

Appendix G Additional Visualization

To effectively compare the models, a representative subdataset from each dataset was chosen, and the anomaly scores were visualized in Figs. 6-10. Figs. 7-9 clearly show that applying PA is essential for a valid comparison in datasets with long anomaly labels. However, PA has inherent flaws. Therefore, using SP adjustments, which address these flaws, provides the most reliable comparison.

| Window size | Method | UCR F1*-SP | UCR AUCPR-SP | PSM F1*-SP | PSM AUCPR-SP | SMAP F1*-SP | SMAP AUCPR-SP | MSL F1*-SP | MSL AUCPR-SP | SMD F1*-SP | SMD AUCPR-SP |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 256 | ITF-TAD-PCA | 0.732 | 0.839 | 0.854 | 0.897 | 0.749 | 0.779 | 0.812 | 0.821 | 0.703 | 0.677 |

| 256 | ITF-TAD-RM | - | - | 0.858 | 0.875 | 0.756 | 0.795 | 0.76 | 0.786 | 0.704 | 0.684 |

| 256 | GPT2-FPT | 0.256 | 0.522 | 0.859 | 0.929 | 0.673 | 0.695 | 0.66 | 0.688 | 0.838 | 0.829 |

| 256 | TimesNet | 0.262 | 0.535 | 0.827 | 0.912 | 0.637 | 0.700 | 0.763 | 0.765 | 0.842 | 0.822 |

| 256 | DiffAD | 0.227 | 0.507 | 0.737 | 0.771 | 0.564 | 0.641 | 0.622 | 0.667 | 0.393 | 0.282 |

| 256 | TranAD | 0.319 | 0.564 | 0.800 | 0.889 | 0.749 | 0.793 | 0.745 | 0.774 | 0.807 | 0.798 |

| 256 | A.Trans. | 0.242 | 0.530 | 0.790 | 0.893 | 0.580 | 0.664 | 0.629 | 0.650 | 0.615 | 0.578 |

| 256 | GDN | 0.514 | 0.697 | 0.809 | 0.877 | 0.778 | 0.788 | 0.741 | 0.768 | 0.821 | 0.802 |

| 256 | LSTM | 0.225 | 0.513 | 0.638 | 0.510 | 0.659 | 0.702 | 0.756 | 0.771 | 0.555 | 0.523 |

| 100 | ITF-TAD-PCA | 0.724 | 0.819 | 0.889 | 0.915 | 0.72 | 0.797 | 0.727 | 0.737 | 0.645 | 0.585 |

| 100 | ITF-TAD-RM | - | - | 0.889 | 0.895 | 0.73 | 0.798 | 0.724 | 0.756 | 0.660 | 0.620 |

| 100 | GPT2-FPT | 0.231 | 0.504 | 0.886 | 0.949 | 0.651 | 0.719 | 0.606 | 0.637 | 0.844 | 0.833 |

| 100 | TimesNet | 0.244 | 0.521 | 0.860 | 0.934 | 0.628 | 0.715 | 0.659 | 0.672 | 0.850 | 0.829 |

| 100 | DiffAD | 0.199 | 0.487 | 0.821 | 0.842 | 0.518 | 0.642 | 0.598 | 0.626 | 0.330 | 0.266 |

| 100 | TranAD | 0.306 | 0.560 | 0.851 | 0.901 | 0.731 | 0.803 | 0.713 | 0.751 | 0.800 | 0.790 |

| 100 | A.Trans. | 0.237 | 0.526 | 0.844 | 0.913 | 0.577 | 0.681 | 0.575 | 0.616 | 0.602 | 0.542 |

| 100 | GDN | 0.512 | 0.671 | 0.812 | 0.883 | 0.750 | 0.798 | 0.727 | 0.768 | 0.808 | 0.779 |

| 100 | LSTM | 0.197 | 0.513 | 0.569 | 0.435 | 0.620 | 0.685 | 0.719 | 0.727 | 0.571 | 0.514 |

| 50 | ITF-TAD-PCA | 0.727 | 0.824 | 0.907 | 0.914 | 0.732 | 0.806 | 0.686 | 0.735 | 0.625 | 0.55 |

| 50 | ITF-TAD-RM | - | - | 0.906 | 0.902 | 0.738 | 0.804 | 0.704 | 0.743 | 0.661 | 0.604 |

| 50 | GPT2-FPT | 0.256 | 0.529 | 0.901 | 0.965 | 0.668 | 0.727 | 0.611 | 0.661 | 0.865 | 0.855 |

| 50 | TimesNet | 0.271 | 0.548 | 0.879 | 0.953 | 0.642 | 0.724 | 0.643 | 0.673 | 0.850 | 0.821 |

| 50 | DiffAD | 0.214 | 0.497 | 0.862 | 0.885 | 0.545 | 0.660 | 0.603 | 0.639 | 0.363 | 0.286 |

| 50 | TranAD | 0.328 | 0.572 | 0.872 | 0.912 | 0.741 | 0.823 | 0.717 | 0.768 | 0.815 | 0.806 |

| 50 | A.Trans. | 0.269 | 0.550 | 0.867 | 0.936 | 0.588 | 0.712 | 0.553 | 0.612 | 0.645 | 0.580 |

| 50 | GDN | 0.530 | 0.689 | 0.817 | 0.889 | 0.762 | 0.810 | 0.732 | 0.778 | 0.821 | 0.788 |

| 50 | LSTM | 0.196 | 0.520 | 0.558 | 0.422 | 0.639 | 0.707 | 0.716 | 0.739 | 0.605 | 0.536 |

| | ITF-TAD-PCA | 0.725 | 0.835 | 0.871 | 0.943 | 0.723 | 0.800 | 0.700 | 0.714 | 0.684 | 0.638 |

| | ITF-TAD-RM | - | - | 0.848 | 0.925 | 0.731 | 0.792 | 0.706 | 0.742 | 0.668 | 0.646 |

| | GPT2-FPT | 0.237 | 0.513 | 0.893 | 0.958 | 0.656 | 0.718 | 0.665 | 0.687 | 0.819 | 0.809 |

| | TimesNet | 0.248 | 0.534 | 0.850 | 0.936 | 0.629 | 0.715 | 0.700 | 0.696 | 0.814 | 0.792 |

| | DiffAD | 0.205 | 0.501 | 0.790 | 0.769 | 0.532 | 0.637 | 0.632 | 0.667 | 0.357 | 0.293 |

| | TranAD | 0.298 | 0.562 | 0.821 | 0.919 | 0.731 | 0.798 | 0.769 | 0.782 | 0.792 | 0.789 |

| | A.Trans. | 0.228 | 0.508 | 0.839 | 0.917 | 0.582 | 0.682 | 0.593 | 0.632 | 0.582 | 0.546 |

| | GDN | 0.503 | 0.687 | 0.878 | 0.917 | 0.752 | 0.802 | 0.762 | 0.780 | 0.813 | 0.791 |

| | LSTM | 0.204 | 0.504 | 0.800 | 0.744 | 0.631 | 0.695 | 0.747 | 0.790 | 0.539 | 0.513 |

| | ITF-TAD-PCA | 0.728 | 0.812 | 0.891 | 0.951 | 0.764 | 0.834 | 0.738 | 0.781 | 0.632 | 0.548 |

| | ITF-TAD-RM | - | - | 0.893 | 0.94 | 0.763 | 0.820 | 0.743 | 0.781 | 0.680 | 0.610 |

| | GPT2-FPT | 0.321 | 0.583 | 0.883 | 0.950 | 0.748 | 0.787 | 0.847 | 0.823 | 0.872 | 0.853 |

| | TimesNet | 0.310 | 0.562 | 0.860 | 0.938 | 0.736 | 0.783 | 0.856 | 0.827 | 0.854 | 0.814 |

| | DiffAD | 0.246 | 0.526 | 0.822 | 0.850 | 0.677 | 0.754 | 0.805 | 0.845 | 0.431 | 0.344 |

| | TranAD | 0.358 | 0.598 | 0.854 | 0.901 | 0.813 | 0.865 | 0.873 | 0.894 | 0.835 | 0.827 |

| | A.Trans. | 0.332 | 0.602 | 0.846 | 0.910 | 0.717 | 0.787 | 0.776 | 0.831 | 0.692 | 0.623 |

| | GDN | 0.562 | 0.714 | 0.812 | 0.883 | 0.828 | 0.869 | 0.881 | 0.893 | 0.839 | 0.808 |

| | LSTM | 0.233 | 0.540 | 0.568 | 0.431 | 0.724 | 0.783 | 0.820 | 0.848 | 0.642 | 0.566 |

| Dataset | Metric | ITF-TAD-PCA (Ours) | ITF-TAD-RM (Ours) | GPT2-FPT (2023) | TimesNet (2023) | DiffAD (2023) | TranAD (2022) | A.Trans. (2022) | GDN (2021) | LSTM (2018) |

|---|---|---|---|---|---|---|---|---|---|---|

| UCR | F1*-SP | 0.724 | - | 0.231 | 0.244 | 0.199 | 0.306 | 0.237 | 0.512 | 0.197 |

| UCR | AUCPR-SP | 0.819 | - | 0.504 | 0.521 | 0.487 | 0.560 | 0.526 | 0.671 | 0.513 |

| UCR | AUROC-SP | 0.927 | - | 0.672 | 0.655 | 0.658 | 0.682 | 0.684 | 0.808 | 0.613 |

| UCR | F1*-PA | 0.744 | - | 0.531 | 0.496 | 0.402 | 0.497 | 0.644 | 0.684 | 0.342 |

| UCR | AUCPR-PA | 0.850 | - | 0.728 | 0.712 | 0.668 | 0.719 | 0.788 | 0.815 | 0.640 |

| UCR | AUROC-PA | 0.959 | - | 0.928 | 0.923 | 0.898 | 0.911 | 0.918 | 0.947 | 0.828 |

| UCR | F1* | 0.461 | - | 0.037 | 0.044 | 0.043 | 0.052 | 0.025 | 0.141 | 0.037 |

| UCR | AUCPR | 0.424 | - | 0.045 | 0.051 | 0.049 | 0.053 | 0.092 | 0.118 | 0.088 |

| UCR | AUROC | 0.900 | - | 0.529 | 0.539 | 0.562 | 0.529 | 0.502 | 0.593 | 0.491 |

| UCR | Correct | 150 | - | 22 | 33 | 23 | 37 | 17 | 99 | 20 |

| PSM | F1*-SP | 0.889 | 0.889 | 0.886 | 0.860 | 0.821 | 0.851 | 0.844 | 0.812 | 0.569 |

| PSM | AUCPR-SP | 0.915 | 0.895 | 0.949 | 0.934 | 0.842 | 0.901 | 0.913 | 0.883 | 0.435 |

| PSM | AUROC-SP | 0.961 | 0.949 | 0.963 | 0.949 | 0.894 | 0.912 | 0.932 | 0.928 | 0.660 |

| PSM | F1*-PA | 0.931 | 0.938 | 0.981 | 0.972 | 0.974 | 0.910 | 0.985 | 0.841 | 0.557 |

| PSM | AUCPR-PA | 0.893 | 0.925 | 0.995 | 0.994 | 0.992 | 0.937 | 0.993 | 0.912 | 0.443 |

| PSM | AUROC-PA | 0.987 | 0.988 | 0.998 | 0.998 | 0.996 | 0.955 | 0.992 | 0.960 | 0.727 |

| PSM | F1* | 0.441 | 0.444 | 0.435 | 0.435 | 0.436 | 0.479 | 0.434 | 0.541 | 0.435 |

| PSM | AUCPR | 0.384 | 0.426 | 0.394 | 0.391 | 0.318 | 0.456 | 0.287 | 0.500 | 0.234 |

| PSM | AUROC | 0.603 | 0.620 | 0.595 | 0.593 | 0.553 | 0.640 | 0.502 | 0.721 | 0.395 |

| SMAP | F1*-SP | 0.720 | 0.730 | 0.651 | 0.628 | 0.518 | 0.731 | 0.577 | 0.750 | 0.620 |

| SMAP | AUCPR-SP | 0.797 | 0.798 | 0.719 | 0.715 | 0.642 | 0.803 | 0.681 | 0.798 | 0.685 |

| SMAP | AUROC-SP | 0.873 | 0.865 | 0.840 | 0.830 | 0.777 | 0.863 | 0.771 | 0.869 | 0.758 |

| SMAP | F1*-PA | 0.777 | 0.773 | 0.836 | 0.844 | 0.814 | 0.883 | 0.850 | 0.877 | 0.769 |

| SMAP | AUCPR-PA | 0.841 | 0.839 | 0.857 | 0.856 | 0.877 | 0.909 | 0.898 | 0.902 | 0.836 |

| SMAP | AUROC-PA | 0.949 | 0.933 | 0.973 | 0.974 | 0.985 | 0.979 | 0.981 | 0.988 | 0.941 |

| SMAP | F1* | 0.478 | 0.473 | 0.338 | 0.343 | 0.230 | 0.391 | 0.247 | 0.342 | 0.374 |

| SMAP | AUCPR | 0.451 | 0.436 | 0.225 | 0.226 | 0.148 | 0.299 | 0.177 | 0.223 | 0.294 |

| SMAP | AUROC | 0.761 | 0.753 | 0.548 | 0.608 | 0.585 | 0.641 | 0.550 | 0.603 | 0.572 |

| MSL | F1*-SP | 0.727 | 0.724 | 0.606 | 0.659 | 0.598 | 0.713 | 0.575 | 0.727 | 0.719 |

| MSL | AUCPR-SP | 0.737 | 0.756 | 0.637 | 0.672 | 0.626 | 0.751 | 0.616 | 0.768 | 0.727 |

| MSL | AUROC-SP | 0.844 | 0.822 | 0.775 | 0.787 | 0.702 | 0.831 | 0.744 | 0.828 | 0.788 |

| MSL | F1*-PA | 0.743 | 0.749 | 0.883 | 0.886 | 0.848 | 0.896 | 0.821 | 0.900 | 0.835 |

| MSL | AUCPR-PA | 0.785 | 0.786 | 0.872 | 0.872 | 0.879 | 0.913 | 0.875 | 0.910 | 0.861 |

| MSL | AUROC-PA | 0.882 | 0.882 | 0.968 | 0.970 | 0.974 | 0.984 | 0.965 | 0.983 | 0.922 |

| MSL | F1* | 0.411 | 0.362 | 0.320 | 0.377 | 0.316 | 0.406 | 0.203 | 0.376 | 0.367 |

| MSL | AUCPR | 0.334 | 0.291 | 0.230 | 0.278 | 0.214 | 0.281 | 0.123 | 0.272 | 0.272 |

| MSL | AUROC | 0.721 | 0.688 | 0.631 | 0.676 | 0.586 | 0.629 | 0.434 | 0.641 | 0.567 |

| SMD | F1*-SP | 0.645 | 0.660 | 0.844 | 0.850 | 0.330 | 0.800 | 0.602 | 0.808 | 0.571 |

| SMD | AUCPR-SP | 0.585 | 0.620 | 0.833 | 0.829 | 0.266 | 0.790 | 0.542 | 0.779 | 0.514 |

| SMD | AUROC-SP | 0.919 | 0.907 | 0.969 | 0.969 | 0.672 | 0.950 | 0.799 | 0.959 | 0.770 |

| SMD | F1*-PA | 0.663 | 0.723 | 0.930 | 0.908 | 0.793 | 0.910 | 0.860 | 0.901 | 0.716 |

| SMD | AUCPR-PA | 0.558 | 0.648 | 0.911 | 0.881 | 0.751 | 0.898 | 0.817 | 0.869 | 0.658 |

| SMD | AUROC-PA | 0.959 | 0.969 | 0.997 | 0.996 | 0.985 | 0.991 | 0.950 | 0.990 | 0.894 |

| SMD | F1* | 0.421 | 0.457 | 0.461 | 0.474 | 0.090 | 0.450 | 0.082 | 0.504 | 0.360 |

| SMD | AUCPR | 0.333 | 0.406 | 0.412 | 0.409 | 0.050 | 0.389 | 0.036 | 0.444 | 0.309 |

| SMD | AUROC | 0.892 | 0.882 | 0.849 | 0.882 | 0.539 | 0.792 | 0.278 | 0.848 | 0.714 |