Minghao Han Dingkang Yang\footnotemark[4] Jiabei Cheng Xukun Zhang \\ Linhao Qu Zizhi Chen Lihua Zhang \\ \\ Academy for Engineering and Technology, Fudan University, Shanghai, China \\ Cognition and Intelligent Technology Laboratory, Fudan University, Shanghai, China \\ Department of Automation, Shanghai Jiaotong University, Shanghai, China \\ Fudan University, Shanghai, China \\ [email protected], [email protected], [email protected]

Towards Unified Molecule-Enhanced Pathology Image Representation Learning via Integrating Spatial Transcriptomics

Abstract

Recent advancements in multimodal pre-training models have significantly advanced computational pathology. However, current approaches predominantly rely on visual-language models, which may impose limitations from a molecular perspective and lead to performance bottlenecks. Here, we introduce a Unified Molecule-enhanced Pathology Image REpresentationn Learning framework (Umpire). Umpire aims to leverage complementary information from gene expression profiles to guide the multimodal pre-training, enhancing the molecular awareness of pathology image representation learning. We demonstrate that this molecular perspective provides a robust, task-agnostic training signal for learning pathology image embeddings. Due to the scarcity of paired data, approximately 4 million entries of spatial transcriptomics gene expression were collected to train the gene encoder. By leveraging powerful pre-trained encoders, Umpire aligns the encoders across over 697K pathology image-gene expression pairs. The performance of Umpire is demonstrated across various molecular-related downstream tasks, including gene expression prediction, spot classification, and mutation state prediction in whole slide images. Our findings highlight the effectiveness of multimodal data integration and open new avenues for exploring computational pathology enhanced by molecular perspectives. The code and pre-trained weights are available at https://github.com/Hanminghao/UMPIRE.

1 Introduction

Whole slide images (WSIs) and pathology images are considered the “gold standard” for cancer analysis due to their capacity to provide detailed information at cellular and tissue levels [21, 47]. Recent advancements in Computational Pathology (CPATH) have leveraged deep learning to achieve significant progress in various tasks, including cancer diagnosis [40, 59, 44], survival analysis [62, 36, 72], and cancer staging [70, 60]. However, most existing paradigms focus on specific tasks and train models in isolation, which can cause these meticulously designed models to fail when faced with new data or tasks requiring retraining. Some researchers argue that instead of investing considerable effort in designing complex downstream models, it is more cost-effective and scientifically sound to develop foundational models that can adapt to a wide range of downstream tasks [8, 20, 57, 77, 46, 30].

Recent research has demonstrated that utilizing a large number of noisy image-text pairs for extensive multimodal pre-training can enhance the alignment of spatial representations between images and text, as well as improve the encoder’s performance on downstream tasks [55, 41, 37, 80]. Building on this idea, several researchers have proposed contrastive learning-based pre-training frameworks that leverage pathology images and descriptive texts, including PLIP [30] and CONCH [46]. Despite the widespread of natural language in cancer pathology analysis, multimodal pre-training of image-text pairs fails to provide additional insights for cancer analysis. In contrast, gene expression data, such as RNA transcriptome, provides complementary information at the molecular level, elucidating the mechanisms of oncogenesis and facilitating personalized treatment recommendations [78, 17]. Consequently, TANGLE [35] introduced a methodology that employs bulk RNA to guide WSI representation learning. Their experimental results indicate that pre-training based on WSI and bulk RNA significantly enhances model performance on cancer subtype classification. However, their approach relies on WSIs and bulk RNA, representing only patient-level information and failing to capture the inherent heterogeneity within individual samples [42, 50].

Spatial Transcriptomics (ST) is an emerging technique that integrates pathology slides with gene expression (RNA transcriptome) analysis, enabling researchers to localize and quantify RNA expression within tissues [32]. In recent years, various ST methodologies, such as Spatial Transcriptomics [64], Visium [65], MERFISH [7], and Xenium [33], have advanced rapidly, establishing themselves as crucial links between pathology images and gene expression. Similar to image-text pairs, ST generates numerous mappings between pathology images and gene expression. Under typical conditions, pathology images specialize in the analysis of tissue structures and cell morphology [54, 60], while gene expression profiles excel in analyzing the tumor microenvironment and disease mechanisms [58, 18]. Both are crucial for cancer analysis. Recently, there has been rapid progress in the research of foundational and pre-trained models in both fields [8, 20, 57, 13, 58, 66]. However, a unified pre-training framework that integrates them is still lacking, leading to an incomplete perspective. This is due to two main factors: 1) Pathology images and gene expression data often originate from different labs and clinical environments, with varying formats and standards, which limits the construction of large-scale datasets; 2) Despite advances in visual-language models, there is no effective cross-modality learning framework for integrating pathology images with gene expression.

To address these challenges, we propose a two-stage Unified Molecule-enhanced Pathology Image REpresentationn Learning framework, termed Umpire. It is well established that gene expression plays a crucial role in regulating cellular proliferation and intercellular interactions [85]. Anomalies in gene expression correspond to discernible morphological patterns in pathology images [39]. Accordingly, we believe that leveraging gene expression to guide the representation learning of pathology images provides a more robust training signal than relying on image augmentation or text descriptions, enhancing the molecular perspective in this learning process. To our knowledge, Umpire is the first large-scale pre-training of pathology images and ST gene expression, providing a foundation for subsequent molecular perception pathological representation learning and multimodal integration models.

In this work, approximately 4M entries of ST gene expression were initially collected to pre-train a BERT-like gene encoder [16]. Then, we filtered data from the HEST dataset to obtain 697K aligned pairs. Following established multimodal contrastive learning paradigms [55, 37, 80], we aligned the vision encoder with the gene encoder during the alignment phase. Ultimately, extensive evaluations were conducted across multiple tasks, including bimodal gene expression prediction, unimodal spot/patch classification, and mutation state prediction for WSIs. Experimental results demonstrate that Umpire outperforms the baseline across all tasks. We also conducted comprehensive ablation experiments, visualization analyses, and case studies.

2 Related Work

Self-supervised Representation Learning: By generating its own supervisory signals, self-supervised learning (SSL) can operate without manual labels. This approach has gained significant attention in recent research [10, 28]. SSL gained popularity in natural language processing (NLP) with models such as GPT [4] and BERT [16], which employed SSL to learn semantic representations from text through tasks like masked language modeling. Due to similarities such as discrete sequences and context dependence, many NLP SSL techniques have been adapted for single-cell representation learning [13, 66]. SSL has also gained traction in computer vision, with methods such as SimCLR [9] and MoCo [10] learning visual representations through augmented views. This paper employs a BERT-like architecture to pre-train a gene encoder, which is then integrated into a multimodal contrastive learning framework.

Contrastive Learning: Contrastive learning is a powerful pre-training technique in the domain of SSL used to acquire task-agnostic representations. This mechanism constructs paired samples to enhance the proximity of paired embeddings in the latent space while increasing the distance between unpaired embeddings. PLIP [30] collected 208K pairs of pathology images and captions from Twitter and fine-tuned the model based on CLIP, resulting in an encoder exhibiting robust performance across various downstream tasks. CONCH [46] used over 1.17 million pathology image-caption pairs for task-agnostic pre-training, achieving excellent performance across 14 downstream benchmarks while minimizing the need for supervised fine-tuning. TANGLE [35] enhances performance on WSI level visual recognition tasks by aligning expression profiles with slide representations. Our Umpire aligns with these concepts by correlating pathology images with gene expression.

Spatial Transcriptomics in CPATH: Gene expression profiles offer a molecular perspective that complements tissue pathology, enabling researchers to better understand cancer pathogenesis and develop personalized treatment strategies [78, 17]. However, acquiring detailed gene expression profiles is time-consuming and costly [67, 32]. Given the mapping between pathology images and gene expression profiles, some researchers have proposed predicting gene expression from pathology images [25, 53, 84, 75, 49]. BLEEP [75] and mclSTExp [49] employ contrastive learning to create a low-dimensional joint embedding space, enabling the estimation of gene expression in any pathology image patch using expression profiles from a reference dataset. However, these methods depend on training from scratch with a single dataset, leading to suboptimal model performance due to limited training data. We recommend pre-training encoders on large-scale datasets and fine-tuning on downstream tasks, as this approach improves performance and reduces computational costs compared to existing methods.

3 Methodology

3.1 Data Collection

Given 1) the substantial heterogeneity of data across various sequencing platforms [58], 2) the 55-micron resolution of Visium, which aligns with the dimensions of individual tissue patches [32], 3) the wider variety of genes detectable by Visium [32], and 4) the relatively abundant and readily accessible Visium datasets [34, 6], only Visium spatial transcriptomics (ST) gene expression was selected for training. Despite being solely pre-trained on Visium data, our model successfully demonstrates transferability and generalization to other sequencing platforms (Section 4.3).

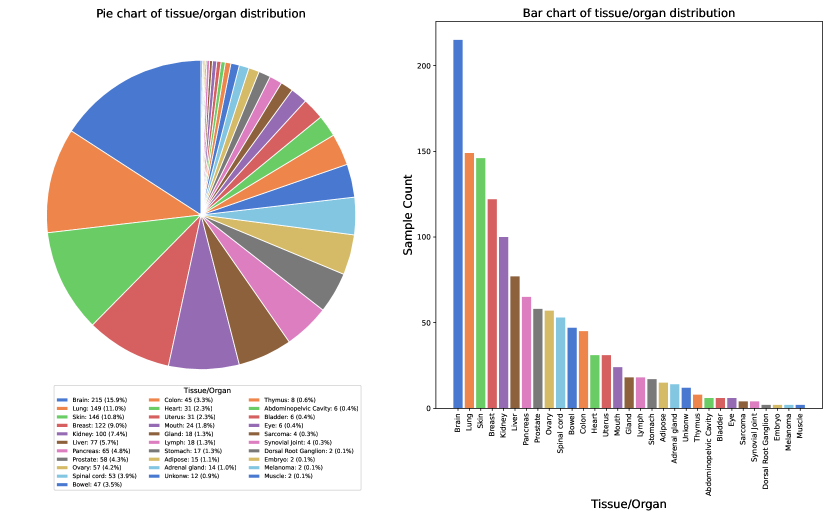

For gene expression, we collected approximately 4M ST gene expression data points from the Gene Expression Omnibus (GEO) and other public datasets [34, 6, 83, 79]. To our knowledge, this dataset represents the largest Visium-based Spatial TranscriptOmics Dataset (ViSTomics-4M), encompassing 3.94 million ST data points collected from 1,363 slides across 180 datasets and publications. For further details about ViSTomics-4M, please refer to Appendix D.1. For paired data, it was sourced from the largest pathology image and ST dataset, HEST [34]. After filtering for human samples based on Visium, 697K aligned pathology image-gene expression pairs were obtained.

3.2 Unsupervised Training for Unimodal Encoder

Although HEST is the largest dataset in the field, it contains only 329 slides and 697K data pairs after filtering, which is still insufficient compared to other multimodal pre-training models (e.g., CLIP [55] with 400M pairs and CONCH [46] with 1.17M pairs). We initialize the encoders with pre-trained weights and subsequently align them in the latent space to address this limitation. While existing models for gene expression primarily focus on single-cell [13, 66] or single-cell-level ST [58], ViSTomics-4M was collected to pre-train the gene encoder. Specifically, as shown in Figure 1a, we developed a Transformer-based gene encoder, termed Visiumformer. For comparison, Nicheformer [58] was also used as the gene encoder, though it focuses on single-cell ST data and has not been trained on Visium-based data.

Visiumformer Tokenization. We adopted a vocabulary including 20,310 genes. The average expression level for each gene across all samples was first calculated. To reduce batch effects, each gene expression value was normalized by dividing it by the average expression of the corresponding gene. Since each sequencing dataset originates from a whole tissue section, the data lacks an inherent order, rendering it order-agnostic [65]. Therefore, we normalized the gene expression values and sorted them in descending order for each gene to complete the tokenization process:

| (1) |

where and represent the index of gene in the gene vocabulary and the normalized gene expression of sample . In this study, we set to 1500, meaning that the context length for the gene encoder is 1500 tokens.

Visiumformer Pre-training. Given a tokenized ST gene expression , Visiumformer first applies an embedding process:

| (2) |

where represents the vector to be fed into the Transformer block, is the input dimension, and . Visiumformer is composed of 12 stacked Transformer blocks. Given the embedded sequence , each Transformer block processes the input sequence according to the following equations:

| (3) | |||

| (4) |

In line with BERT [16], masked language modeling (MLM) loss is utilized to optimize Visiumformer. Specifically, of the tokens are randomly masked, and the model is trained to predict these masked tokens using the unmasked tokens as context. The MLM loss can be expressed as:

| (5) |

where is the set of masked tokens, are the input tokens and is the -th masked token of .

Vision Encoder. The development of pathological visual foundation models has progressed rapidly [77, 69, 3, 71, 20, 8]. In this study, we select Phikon (ViT-B/16, 86M) [20] and UNI (ViT-L/16, 307M) [8] as our vision encoders.

3.3 Multimodal Alignment

Cross-modality Alignment. As depicted in Figure 1b, symmetric contrastive learning (SCL) loss was employed to align image embeddings with gene embeddings. Specifically, for a batch of paired pathology image-gene expression samples , where and denote the -th image and gene embedding obtained from the encoders, the loss function is defined as:

| (6) | ||||

where is the temperature parameter. The first term represents image-to-gene loss, and the second represents gene-to-image loss. The loss function aims to minimize the distance between paired embeddings while maximizing the distance between unpaired embeddings.

Other Optimization Strategy. Unlike qualitative text, gene expression is quantitative, prompting us to consider a regression approach for aligning the encoders across modalities. As a complement to the primary method, a reconstruction loss (mean squared error) is introduced, termed Umpire-Rec:

| (7) |

where represents the normalized top 1500 highly variable gene expression and denotes the image embedding. Additionally, we employed various contrastive learning loss and L1 loss for ablation studies (in Section 4.4).

3.4 Query-Reference for Expression Prediction

When attempting to learn full-dimensional gene expression from pathology images, regression-based approaches may struggle due to the “curse of dimensionality” [75, 49]. We mitigate this issue by fine-tuning, querying, and weighted aggregation (Figure 2a). Specifically, Umpire first undergoes fine-tuning on the downstream dataset. During inference, the frozen vision encoder converts pathology images into query vectors . Concurrently, all gene expression from the training set (termed reference database) is encoded into reference vectors using the frozen gene encoder. The cosine similarity between the query and reference vectors is then computed. Finally, the top references for each query are identified, and a weighted method is applied to derive the predicted gene expression:

| (8) | ||||

| (9) |

represents the predicted gene expression associated with the query image , while denotes the set of the top nearest references for this query. Additionally, signifies the authentic gene expression linked to reference .

4 Experiments and Results

4.1 Pre-training Implementation Details

We first conducted vocabulary masking pre-training[16] of Visiumformer on ViSTomics-4M, with the entire training process spanning 1 million steps and a global batch size of 256. For the vision encoder, two pathology-specific vision encoders were selected: Phikon (ViT-B/16, 86M) [20] and UNI (ViT-L/16, 307M) [8]. A linear projection head was employed to map the image and gene embeddings into a 512-dimensional latent space for alignment. Each Umpire model under different combinations was trained for ten epochs with a global batch size of 512 during alignment. All pre-training tasks were performed on four NVIDIA A800 GPUs. Please refer to Appendix B.2 for details.

| Top 50 | Method | HLT | HPC | HER2+ | Average | |||

| HVG | HEG | HVG | HEG | HVG | HEG | |||

| Regression based | ST-Net [25] | 0.0421±0.0206 | 0.0406±0.0140 | 0.2172±0.1720 | 0.0445±0.0386 | 0.1129±0.0576 | 0.0940±0.0421 | 0.0919 |

| HisToGene [53] | 0.0357±0.0213 | 0.0414±0.0322 | 0.1338±0.1093 | 0.0912±0.0451 | 0.0329±0.0416 | 0.0287±0.0387 | 0.0606 | |

| His2ST [84] | 0.0054±0.0122 | 0.0029±0.0163 | 0.0252±0.0213 | 0.0127±0.009 | 0.0443±0.0197 | 0.0328±0.0174 | 0.0206 | |

| THItoGene [38] | 0.0063±0.0098 | 0.0020±0.0106 | 0.0294±0.0316 | 0.0163±0.094 | 0.0391±0.0146 | 0.0286±0.0167 | 0.0203 | |

| Contrastive learning based | mclSTExp [49] | 0.1978±0.0326 | 0.3033±0.0216 | 0.3098±0.1628 | 0.0929±0.0151 | 0.1499±0.0814 | 0.1065±0.0491 | 0.1934 |

| BLEEP [75] | 0.1995±0.0435 | 0.2956±0.0253 | 0.3221±0.1417 | 0.0969±0.0300 | 0.1692±0.0729 | 0.1336±0.0573 | 0.2028 | |

| Umpire-Adapter (Ours) | Niche. + Phikon | 0.1925±0.0475 | 0.2955±0.0347 | 0.4082±0.1735 | 0.1912±0.0223 | 0.2713±0.0974 | 0.2276±0.0644 | 0.2644 |

| Niche. + UNI | 0.2015±0.0461 | 0.3097±0.0269 | 0.4328±0.1621 | 0.1903±0.0210 | 0.2800±0.0961 | 0.2162±0.0600 | 0.2718 | |

| Visium. + Phikon | 0.2291±0.0471 | 0.3368±0.0287 | 0.4286±0.1758 | 0.2133±0.0276 | 0.2849±0.0934 | 0.2307±0.0617 | 0.2872 | |

| Visium. + UNI | 0.2297±0.0466 | 0.3318±0.0305 | 0.4226±0.1739 | 0.1621±0.0290 | 0.2848±0.0980 | 0.2274±0.0635 | 0.2764 | |

| Umpire-Finetune (Ours) | Trans. + Phikon | 0.2246±0.0471 | 0.3315±0.0443 | 0.4216±0.1697 | 0.2137±0.0259 | 0.2389±0.0960 | 0.1726±0.0625 | 0.2672 |

| Trans. + UNI | 0.1695±0.0381 | 0.2674±0.0236 | 0.4276±0.1730 | 0.1886±0.0778 | 0.2400±0.0897 | 0.1726±0.0652 | 0.2443 | |

| Niche. + Phikon | 0.2174±0.0456 | 0.3123±0.0278 | 0.4194±0.1633 | 0.2085±0.0124 | 0.2651±0.0973 | 0.2155±0.0609 | 0.2753 | |

| Niche. + UNI | 0.2045±0.0462 | 0.3071±0.0281 | 0.4223±0.1599 | 0.2102±0.0373 | 0.2721±0.0964 | 0.2128±0.0641 | 0.2715 | |

| Visium. + Phikon | 0.2291±0.0516 | 0.3291±0.0360 | 0.4405±0.1649 | 0.2265±0.0197 | 0.2797±0.0996 | 0.2314±0.0670 | 0.2894 | |

| Visium. + UNI | 0.2364±0.0439 | 0.3343±0.0363 | 0.4317±0.1740 | 0.2220±0.0211 | 0.2843±0.1004 | 0.2324±0.0689 | 0.2902 | |

4.2 Downstream Datasets

Extensive evaluations were conducted across multiple downstream datasets to assess the capabilities of Umpire in multimodal and unimodal representation learning. All the downstream evaluation experiments included six datasets and three tasks. These tasks included bimodal gene expression prediction (Section 4.3), unimodal patch and spot type classification (Section 4.4), and WSI mutation state prediction (Section 4.5). The six downstream datasets used in these evaluations are as follows: Human Liver Tissue (HLT) [2] dataset, comprising four sections and 9,254 paired pathology images and gene expression data. Human Prostate Cancer (HPC) [19] dataset, containing five sections and 14,783 paired samples. HER2-positive breast tumor (HER2+)[1] dataset, consisting of 36 sections, with 32 reserved for training, resulting in 11,509 paired samples, as outlined in ST-Net[25]. Human Dorsolateral Prefrontal Cortex (DLPFC) [48] dataset, made up of 12 sections and 47,329 paired samples, where each spot was categorized into white matter (WM) and cortical layers L1–L6. Human Breast Cancer [76] (10X Breast) dataset, with one section and 3,789 paired samples, where each spot was categorized into four tissue subtypes. LUAD-mutation dataset, which includes 692 Fresh Frozen WSIs from 437 patients in TCGA-LUAD, used to predict mutation status (positive/negative) for four specific genes: EGFR, KRAS, STK11, and TP53, as detailed in DeepPATH [12]. For details on the downstream datasets, comparison methods, and downstream model training, please refer to Appendix B.

4.3 Multimodal Representation Learning

The multimodal representation capability of Umpire is evaluated through a bimodal gene expression prediction task (Figure 2a). As shown in Table 1, Umpire was assessed on three datasets: Human Liver Tissue dataset (HLT), Human Prostate Cancer dataset (HPC), and HER2-positive breast tumor dataset (HER2+). HLT and HPC were measured using the Visium platform [65]. The HER2+ dataset, derived from the Spatial Transcriptomics platform [64], was then used to assess the transfer learning capabilities of Umpire across different technologies and platforms.

Specifically, this task aims to predict full-dimensional gene expression based on pathology images. Two strategies were employed for evaluation: Umpire-Finetune (full-parameter fine-tuning) and Umpire-Adapter, which adds two trainable linear layers with ReLU activation to both the frozen encoders. Additionally, we included other task-specific methods, including regression-based and contrastive learning-based models. The average Pearson correlation coefficient (PCC) [11] was reported for the top 50 highly variable genes (HVG) and highly expressed genes (HEG), utilizing a leave-one-out cross-validation method. To eliminate data leakage and ensure a fair comparison, the datasets used in this section were not included in the pre-training phase.

Regression-based vs. Contrastive Learning-based: When predicting full-dimensional gene expression, regression-based methods often face the “curse of dimensionality”, causing training failures for all but ST-Net. In contrast, contrastive learning methods, using the Query-Reference paradigm, excel in full-dimensional prediction. The top-performing contrastive learning method, BLEEP, shows an average improvement of and over ST-Net on the HPC and HER2+ datasets, respectively.

Umpire vs. Contrastive Learning-based: Compared to contrastive learning-based methods, both Umpire-Adapter and Umpire-Finetune demonstrate significant improvements. Specifically, Umpire-Adapter achieves an average increase of over BLEEP, while Umpire-Finetune shows an improvement of . Apart from the HLT and HPC datasets based on Visium, Umpire also achieved outstanding performance on the HER2+ dataset, with an average improvement of . The HER2+ dataset was sequenced using the Spatial Transcriptomics platform, which was not encountered during the pre-training phase. This reflects the strong generalization capabilities of Umpire, which benefit from the diversity of data used during pre-training. Umpire performs well across various organs (liver, prostate, and breast), disease states (healthy and cancerous), and sequencing platforms (Visium and Spatial Transcriptomics). To further demonstrate that the significant performance improvement of Umpire is not solely attributable to a more powerful vision encoder, we replaced the vision encoders of ST-Net and BLEEP with Phikon. This modification leads to an improvement in the performance of ST-Net; however, it still significantly lags behind the original BLEEP. Applying the same operation to BLEEP results in a performance decrease of about due to the small training dataset, which is unsuitable for large-parameter vision encoders (please refer to Appendix A.5).

Visium. vs. Niche. vs. Trans.: For comparison, we employed three different gene encoders: our Visiumformer (Visium.), Nicheformer (Niche.) pre-trained on 100M single-cell and spatial transcriptomics data, and a randomly initialized 12-layer Transformer (Trans.). The Trans. encoder utilizes continuous gene expression values from the top 1500 highly variable genes, while both Visium. and Niche. require tokenization. Notably, our Visium. combined with vision encoders consistently outperforms the others, achieving an average PCC that is higher than that of Niche. and higher than that of Trans.. Although Niche. is not pre-trained on Visium data, it performs well after multimodal alignment pre-training. In contrast, Trans. underperforms due to the lack of pre-training in the first phase, despite participating in the second pre-training phase.

Umpire-Adapter vs. Umpire-Finetune: A key advantage of pre-trained models is their efficient performance with minimal resources, achieved through small-parameter fine-tuning on downstream tasks [55, 61]. To leverage this capability, Umpire-Adapter was introduced. Overall, the Umpire-Adapter performs worse than Umpire-Finetune by an average of . However, the Umpire-Adapter uses only to of the trainable parameters required by Umpire-Finetune. For individual datasets, the Umpire-Adapter lags behind Umpire-Finetune by on the larger HPC dataset, while it nearly matches Umpire-Finetune on the smaller HLT and HER2+ datasets. We recommend the Umpire-Adapter for limited data or computational resources and Umpire-Finetune for other scenarios to leverage Umpire fully.

Case Study: We visualized the actual expression of PIBF1 (Figure 3) and CTSC (see Appendix A.2) in the sample HPC-patient-1-H2-5, along with the expression predicted by various methods. PIBF1 and CTSC are known to influence cell proliferation and autophagy, each playing distinct roles in tumor invasion and metastasis [43, 74]. Umpire shows greater biological heterogeneity within the slices compared to ST-Net and BLEEP, especially between the tumor and the normal tissue (see red box in Figure 3).

| Method | Modality | DLPFC | 10X Breast | ||

| Bal. Acc. | Wgt. F1 | Bal. Acc. | Wgt. F1 | ||

| GeneMLP | 53.46±1.77 | 64.13±2.83 | 75.95±1.90 | 76.27±1.59 | |

| Niche. [58] | 45.12±4.50 | 56.18±3.44 | 72.56±1.49 | 74.80±1.51 | |

| Visium.(Ours) | 55.13±4.11 | 65.87±3.86 | 76.97±1.95 | 77.54±1.66 | |

| Phikon [20] | 48.17±10.76 | 56.92±8.89 | 82.10±2.35 | 83.04±2.41 | |

| UNI [8] | 53.72±10.59 | 62.84±7.12 | 81.88±4.08 | 82.92±3.83 | |

| Umpire-Rec-Phikon | 54.00±7.70 | 64.10±4.21 | 75.48±3.73 | 75.23±2.98 | |

| Umpire-Rec-UNI | 60.59±8.27 | 69.88±4.07 | 76.03±2.35 | 77.71±1.69 | |

| Umpire-Phikon | 68.53±7.14 | 76.34±4.19 | 85.06±1.19 | 86.07±1.17 | |

| Umpire-UNI | 68.76±8.17 | 76.83±3.89 | 84.31±2.98 | 85.51±2.48 | |

| Umpire-Niche. | 68.69±3.87 | 76.59±3.17 | 79.20±2.37 | 80.39±1.47 | |

| Umpire-Visium. | 70.70±3.21 | 77.97±2.76 | 82.06±1.45 | 83.17±1.23 | |

4.4 Linear Probing Classification

Table 2 presents the evaluation results of Umpire for classifying human dorsolateral prefrontal cortex (DLPFC) morphotypes and human breast cancer (10X Breast). Different brain regions show subtle visual differences, so gene expression data is typically used for spot classification. We use DLPFC to evaluate how well Umpire integrates complementary information across modalities. In contrast, the 10X Breast dataset exhibits significant visual differences between tissue types, allowing effective classification using visual information alone. This dataset helps assess whether the molecular perspective introduced by Umpire harms the original vision encoder. Following standard practices in SSL [52, 14, 5], linear probing was employed to benchmark Umpire, Umpire-Rec, and Visiumformer (Figure 2b). We also benchmarked Nicheformer [58], Phikon [20] and UNI [8] for comparison.

Gene-based vs. Image-based vs. Umpire-based: We evaluated three categories of models: gene-based (), pathology image-based (), and multimodal pre-trained models (). Gene-based models perform well on DLPFC; however, their performance declines in dataset with significant visual variations, i.e. 10X Breast. Regardless of the modality utilized, pre-training with Umpire consistently enhances model performance. Following alignment, Visiumformer demonstrated a balanced accuracy increase of on DLPFC and on 10X Breast. For the vision encoders Phikon and UNI, balanced accuracy improved by up to on DLPFC and on 10X Breast. These experiments clearly demonstrate that Umpire benefits from multimodal alignment, effectively enhancing information complementarity and significantly boosting performance.

Umpire vs. Umpire-Rec: In contrast to the improved consistency of Umpire across all datasets, the vision encoders pre-trained with reconstruction loss (Umpire-Rec) demonstrated significant performance improvements on DLPFC but experienced varying degrees of decline on 10X Breast. We believe that the high sparsity and dimensionality of gene expression restrict the vision encoders’ ability to learn effectively from the gene modality when utilizing regression and reconstruction methods.

Visium. vs. Niche. vs. GeneMLP: Analogous to the Trans. described in Section 4.3, an unpretrained GeneMLP was established as a baseline. In accordance with standard linear probing protocols, GeneMLP selects the top 1,500 highly variable gene expressions after normalization, which are subsequently processed through a linear layer for classification. Pre-training on ViSTomics-4M enabled Visiumformer to outperform other gene-based models. Conversely, Nicheformer, which lacked access to Visium platform gene expression during pre-training, performed worse than GeneMLP. After alignment, both models exhibited noticeable improvements; however, Umpire-Niche. still fell short of Umpire-Visium.. This underscores that while alignment can enhance performance, it cannot fully compensate for the degradation caused by the absence of corresponding data in the initial stage. This necessity prompted the development of ViSTomics-4M and the pre-training of Visiumformer.

Case Study: Figure 4 visualizes the linear probing classification results for sample 151673 from DLPFC, illustrating performance before (top) and after (bottom) multimodal pre-training. After pre-training, the model’s ability to differentiate between different brain regions significantly improves across both modalities, particularly among layers L1 to L6. This demonstrates that our Umpire effectively integrates information from both modalities, achieving a synergistic effect in which the combined performance exceeds the sum of the individual contributions.

Zero-shot Embedding: Following multimodal pre-training, we performed zero-shot embedding visualization to analyze the embeddings of the two modalities before and after pre-training using t-Distributed Stochastic Neighbor Embedding (t-SNE) [68]. DLPFC served as a benchmark for computing two clustering quality metrics, including the Silhouette score [56] and the Davies-Bouldin index [15]. The t-SNE visualizations and corresponding evaluation metrics are provided in Appendix A.3. Our results indicate that the embeddings after pre-training (Umpire and Umpire-Rec) are more effective at distinguishing various brain regions. Notably, pre-training enhances model performance on pathology images while also improving the results on gene expression. The integration of gene expression with pathology images further enhances the model’s ability to discern subtle features within the images and reveals previously unrecognized insights from the gene expression.

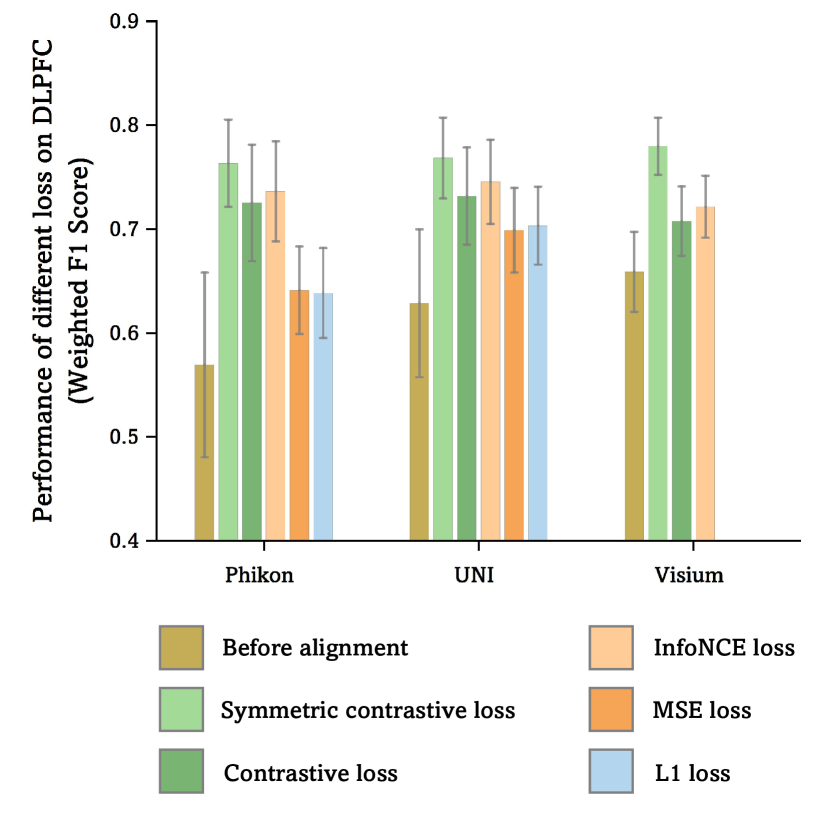

Loss Ablation: Ablation studies were conducted on DLPFC to evaluate the impact of different loss functions. When the symmetric contrastive loss was replaced with regression-based loss functions (mean squared error and L1 loss), the weighted F1 score for Phikon decreased by and , respectively. We reasonably attribute this decline to the high sparsity of the gene expression, which negatively impacts reconstruction performance. Additionally, the effects of replacing the symmetric contrastive loss with either unilateral contrastive loss [55] or InfoNCE loss [27] were investigated, both of which resulted in varying degrees of performance degradation (see Appendix A.4).

4.5 MIL-based WSI Classification

Certain cancer analyses require global WSI information; however, the large size of WSIs necessitates using Multiple Instance Learning (MIL) for WSI-level tasks. The impact of Umpire on WSI-level performance across four WSI gene mutation status classification tasks was evaluated. All tasks utilized ABMIL [31] as the instance aggregation method (see Figure 2c). All experiments were conducted using five-fold cross-validation at the patient level.

Figure 5 compares the performance of Phikon and UNI before and after alignment. Despite being self-supervised on numerous WSIs, Phikon and UNI exhibit suboptimal results in this challenging task. Umpire outperformed the original vision encoder in three sub-tasks, achieving maximum relative improvements of in AUC and in the F1 Score. In contrast, Umpire-Rec significantly underperformed compared to the original encoder. We speculate that the regression-based pre-training method caused the vision encoder to focus excessively on gene-level features, diminishing its ability to capture the original semantic information from the images. Conversely, our Umpire employs a contrastive learning approach that enhances the vision encoder’s ability to capture gene-level details while preserving its capacity to retain visual semantic information.

TANGLE [35] focuses on pre-training at the WSI level, using UNI [8] as a feature extractor and ABMIL as an aggregation module to align WSIs with bulk RNA data across 27 TCGA cohorts. To adapt TANGLE for WSI classification tasks, we utilize a frozen UNI to extract features and apply the pre-trained aggregation module from TANGLE. Our experimental results show that Umpire outperforms TANGLE in three out of four sub-tasks (see Figure 5b). In the sub-tasks involving KRAS, STK11, and TP53, Umpire demonstrates comparable performance to TANGLE in terms of AUC, surpassing it by an average of , while achieving an average improvement of in F1 Score. In the EGFR sub-task, Umpire falls short, lagging behind TANGLE by and , respectively.

5 Conclusion and Discussion

Conclusion: In this paper, we first collected and constructed the largest Visium-based spatial transcriptomics (ST) dataset and then introduced a unified molecule-enhanced pathology image representation learning framework. Our approach, Umpire, employs a two-stage pre-training process on extensive ST data and paired pathology image-ST gene expression. Comprehensive evaluations of Umpire were conducted across multiple downstream tasks, demonstrating its significant superiority over various baseline methods in all tasks. As the first attempt at a molecule-enhanced pathology image representation learning framework, Umpire will also serve as a foundational model for future research.

Future Work: These results underscore the potential of multimodal pre-training, paving the way for future advancements. Compared to other visual-language pre-training methods, the data used remains relatively small [46, 30], and future work should focus on larger-scale data collection. Additionally, while we demonstrated that models pre-trained on Visium data can be effectively transferred to other sequencing platforms, subsequent research should aim to develop a more generalized and robust model encompassing multiple sequencing technologies and platforms [58].

6 Acknowledgment

This project was funded by the National Natural Science Foundation of China 82090052.

References

- Andersson et al. [2021] Alma Andersson, Ludvig Larsson, Linnea Stenbeck, Fredrik Salmén, Anna Ehinger, Sunny Z Wu, Ghamdan Al-Eryani, Daniel Roden, Alex Swarbrick, Åke Borg, et al. Spatial deconvolution of her2-positive breast cancer delineates tumor-associated cell type interactions. Nature communications, 12(1):6012, 2021.

- Andrews et al. [2024] Tallulah S Andrews, Diana Nakib, Catia T Perciani, Xue Zhong Ma, Lewis Liu, Erin Winter, Damra Camat, Sai W Chung, Patricia Lumanto, Justin Manuel, et al. Single-cell, single-nucleus, and spatial transcriptomics characterization of the immunological landscape in the healthy and psc human liver. Journal of Hepatology, 80(5):730–743, 2024.

- Azizi et al. [2023] Shekoofeh Azizi, Laura Culp, Jan Freyberg, Basil Mustafa, Sebastien Baur, Simon Kornblith, Ting Chen, Nenad Tomasev, Jovana Mitrović, Patricia Strachan, et al. Robust and data-efficient generalization of self-supervised machine learning for diagnostic imaging. Nature Biomedical Engineering, 7(6):756–779, 2023.

- Brown [2020] Tom B Brown. Language models are few-shot learners. arXiv preprint arXiv:2005.14165, 2020.

- Caron et al. [2021] Mathilde Caron, Hugo Touvron, Ishan Misra, Hervé Jégou, Julien Mairal, Piotr Bojanowski, and Armand Joulin. Emerging properties in self-supervised vision transformers. In Proceedings of the IEEE/CVF international conference on computer vision, pages 9650–9660, 2021.

- Chen et al. [2024a] Jiawen Chen, Muqing Zhou, Wenrong Wu, Jinwei Zhang, Yun Li, and Didong Li. Stimage-1k4m: A histopathology image-gene expression dataset for spatial transcriptomics. arXiv preprint arXiv:2406.06393, 2024a.

- Chen et al. [2015] Kok Hao Chen, Alistair N Boettiger, Jeffrey R Moffitt, Siyuan Wang, and Xiaowei Zhuang. Spatially resolved, highly multiplexed rna profiling in single cells. Science, 348(6233):aaa6090, 2015.

- Chen et al. [2024b] Richard J Chen, Tong Ding, Ming Y Lu, Drew FK Williamson, Guillaume Jaume, Andrew H Song, Bowen Chen, Andrew Zhang, Daniel Shao, Muhammad Shaban, et al. Towards a general-purpose foundation model for computational pathology. Nature Medicine, 30(3):850–862, 2024b.

- Chen et al. [2020] Ting Chen, Simon Kornblith, Mohammad Norouzi, and Geoffrey Hinton. A simple framework for contrastive learning of visual representations. In International conference on machine learning, pages 1597–1607. PMLR, 2020.

- Chen et al. [2021] Xinlei Chen, Saining Xie, and Kaiming He. An empirical study of training self-supervised vision transformers. In Proceedings of the IEEE/CVF international conference on computer vision, pages 9640–9649, 2021.

- Cohen et al. [2009] Israel Cohen, Yiteng Huang, Jingdong Chen, Jacob Benesty, Jacob Benesty, Jingdong Chen, Yiteng Huang, and Israel Cohen. Pearson correlation coefficient. Noise reduction in speech processing, pages 1–4, 2009.

- Coudray et al. [2018] Nicolas Coudray, Paolo Santiago Ocampo, Theodore Sakellaropoulos, Navneet Narula, Matija Snuderl, David Fenyö, Andre L Moreira, Narges Razavian, and Aristotelis Tsirigos. Classification and mutation prediction from non–small cell lung cancer histopathology images using deep learning. Nature medicine, 24(10):1559–1567, 2018.

- Cui et al. [2024] Haotian Cui, Chloe Wang, Hassaan Maan, Kuan Pang, Fengning Luo, Nan Duan, and Bo Wang. scgpt: toward building a foundation model for single-cell multi-omics using generative ai. Nature Methods, pages 1–11, 2024.

- Darcet et al. [2023] Timothée Darcet, Maxime Oquab, Julien Mairal, and Piotr Bojanowski. Vision transformers need registers, 2023.

- Davies and Bouldin [1979] David L Davies and Donald W Bouldin. A cluster separation measure. IEEE transactions on pattern analysis and machine intelligence, (2):224–227, 1979.

- Devlin [2018] Jacob Devlin. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805, 2018.

- Ding et al. [2023] Kexin Ding, Mu Zhou, Dimitris N Metaxas, and Shaoting Zhang. Pathology-and-genomics multimodal transformer for survival outcome prediction. In MICCAI, pages 622–631. Springer, 2023.

- Elhanani et al. [2023] Ofer Elhanani, Raz Ben-Uri, and Leeat Keren. Spatial profiling technologies illuminate the tumor microenvironment. Cancer cell, 41(3):404–420, 2023.

- Erickson et al. [2022] Andrew Erickson, Mengxiao He, Emelie Berglund, Maja Marklund, Reza Mirzazadeh, Niklas Schultz, Linda Kvastad, Alma Andersson, Ludvig Bergenstråhle, Joseph Bergenstråhle, et al. Spatially resolved clonal copy number alterations in benign and malignant tissue. Nature, 608(7922):360–367, 2022.

- Filiot et al. [2023] Alexandre Filiot, Ridouane Ghermi, Antoine Olivier, Paul Jacob, Lucas Fidon, Alice Mac Kain, Charlie Saillard, and Jean-Baptiste Schiratti. Scaling self-supervised learning for histopathology with masked image modeling. medRxiv, pages 2023–07, 2023.

- Glaser et al. [2017] Adam K Glaser, Nicholas P Reder, Ye Chen, Erin F McCarty, Chengbo Yin, Linpeng Wei, Yu Wang, Lawrence D True, and Jonathan TC Liu. Light-sheet microscopy for slide-free non-destructive pathology of large clinical specimens. Nature biomedical engineering, 1(7):0084, 2017.

- Grün [2020] Dominic Grün. Revealing dynamics of gene expression variability in cell state space. Nature methods, 17(1):45–49, 2020.

- Gu et al. [2023] Jianlei Gu, Jiawei Dai, Hui Lu, and Hongyu Zhao. Comprehensive analysis of ubiquitously expressed genes in humans from a data-driven perspective. Genomics, Proteomics & Bioinformatics, 21(1):164–176, 2023.

- Hadsell et al. [2006] Raia Hadsell, Sumit Chopra, and Yann LeCun. Dimensionality reduction by learning an invariant mapping. In 2006 IEEE computer society conference on computer vision and pattern recognition (CVPR’06), pages 1735–1742. IEEE, 2006.

- He et al. [2020a] Bryan He, Ludvig Bergenstråhle, Linnea Stenbeck, Abubakar Abid, Alma Andersson, Åke Borg, Jonas Maaskola, Joakim Lundeberg, and James Zou. Integrating spatial gene expression and breast tumour morphology via deep learning. Nature biomedical engineering, 4(8):827–834, 2020a.

- He et al. [2016] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- He et al. [2020b] Kaiming He, Haoqi Fan, Yuxin Wu, Saining Xie, and Ross Girshick. Momentum contrast for unsupervised visual representation learning. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 9729–9738, 2020b.

- He et al. [2022] Kaiming He, Xinlei Chen, Saining Xie, Yanghao Li, Piotr Dollár, and Ross Girshick. Masked autoencoders are scalable vision learners. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 16000–16009, 2022.

- Huang et al. [2017] Gao Huang, Zhuang Liu, Laurens Van Der Maaten, and Kilian Q Weinberger. Densely connected convolutional networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 4700–4708, 2017.

- Huang et al. [2023] Zhi Huang, Federico Bianchi, Mert Yuksekgonul, Thomas J Montine, and James Zou. A visual–language foundation model for pathology image analysis using medical twitter. Nature Medicine, pages 1–10, 2023.

- Ilse et al. [2018] Maximilian Ilse, Jakub Tomczak, and Max Welling. Attention-based deep multiple instance learning. In International conference on machine learning, pages 2127–2136. PMLR, 2018.

- Jain and Eadon [2024] Sanjay Jain and Michael T Eadon. Spatial transcriptomics in health and disease. Nature Reviews Nephrology, pages 1–13, 2024.

- Janesick et al. [2023] Amanda Janesick, Robert Shelansky, Andrew D Gottscho, Florian Wagner, Stephen R Williams, Morgane Rouault, Ghezal Beliakoff, Carolyn A Morrison, Michelli F Oliveira, Jordan T Sicherman, et al. High resolution mapping of the tumor microenvironment using integrated single-cell, spatial and in situ analysis. Nature Communications, 14(1):8353, 2023.

- Jaume et al. [2024a] Guillaume Jaume, Paul Doucet, Andrew H. Song, Ming Y. Lu, Cristina Almagro-Perez, Sophia J. Wagner, Anurag J. Vaidya, Richard J. Chen, Drew F. K. Williamson, Ahrong Kim, and Faisal Mahmood. HEST-1k: A Dataset for Spatial Transcriptomics and Histology Image Analysis. arXiv, 2024a.

- Jaume et al. [2024b] Guillaume Jaume, Lukas Oldenburg, Anurag Jayant Vaidya, Richard J. Chen, Drew FK Williamson, Thomas Peeters, Andrew H. Song, and Faisal Mahmood. Transcriptomics-guided slide representation learning in computational pathology. In CVPR, 2024b.

- Jaume et al. [2024c] Guillaume Jaume, Anurag Vaidya, Richard Chen, Drew Williamson, Paul Liang, and Faisal Mahmood. Modeling dense multimodal interactions between biological pathways and histology for survival prediction. CVPR, 2024c.

- Jia et al. [2021] Chao Jia, Yinfei Yang, Ye Xia, Yi-Ting Chen, Zarana Parekh, Hieu Pham, Quoc Le, Yun-Hsuan Sung, Zhen Li, and Tom Duerig. Scaling up visual and vision-language representation learning with noisy text supervision. In ICML, pages 4904–4916. PMLR, 2021.

- Jia et al. [2024] Yuran Jia, Junliang Liu, Li Chen, Tianyi Zhao, and Yadong Wang. Thitogene: a deep learning method for predicting spatial transcriptomics from histological images. Briefings in Bioinformatics, 25(1):bbad464, 2024.

- Kueckelhaus et al. [2024] Jan Kueckelhaus, Simon Frerich, Jasim Kada-Benotmane, Christina Koupourtidou, Jovica Ninkovic, Martin Dichgans, Juergen Beck, Oliver Schnell, and Dieter Henrik Heiland. Inferring histology-associated gene expression gradients in spatial transcriptomic studies. Nature Communications, 15(1):7280, 2024.

- Li et al. [2021] Bin Li, Yin Li, and Kevin W Eliceiri. Dual-stream multiple instance learning network for whole slide image classification with self-supervised contrastive learning. In CVPR, pages 14318–14328, 2021.

- Li et al. [2022] Junnan Li, Dongxu Li, Caiming Xiong, and Steven Hoi. Blip: Bootstrapping language-image pre-training for unified vision-language understanding and generation. In ICML, 2022.

- Li and Wang [2021] Xinmin Li and Cun-Yu Wang. From bulk, single-cell to spatial rna sequencing. International journal of oral science, 13(1):36, 2021.

- Li et al. [2024] Xiaomin Li, Ci Ren, Anni Huang, Yue Zhao, Liming Wang, Hui Shen, Chun Gao, Bingxin Chen, Tong Zhu, Jinfeng Xiong, et al. Pibf1 regulates multiple gene expression via impeding long-range chromatin interaction to drive the malignant transformation of hpv16 integration epithelial cells. Journal of Advanced Research, 57:163–180, 2024.

- Liu et al. [2024] Mingxin Liu, Yunzan Liu, Pengbo Xu, Hui Cui, Jing Ke, and Jiquan Ma. Exploiting geometric features via hierarchical graph pyramid transformer for cancer diagnosis using histopathological images. IEEE Transactions on Medical Imaging, 2024.

- Lu et al. [2021] Ming Y Lu, Drew FK Williamson, Tiffany Y Chen, Richard J Chen, Matteo Barbieri, and Faisal Mahmood. Data-efficient and weakly supervised computational pathology on whole-slide images. Nature biomedical engineering, 5(6):555–570, 2021.

- Lu et al. [2024] Ming Y Lu, Bowen Chen, Drew FK Williamson, Richard J Chen, Ivy Liang, Tong Ding, Guillaume Jaume, Igor Odintsov, Long Phi Le, Georg Gerber, et al. A visual-language foundation model for computational pathology. Nature Medicine, 30:863–874, 2024.

- Ludwig and Weinstein [2005] Joseph A Ludwig and John N Weinstein. Biomarkers in cancer staging, prognosis and treatment selection. Nature Reviews Cancer, 5(11):845–856, 2005.

- Maynard et al. [2021] Kristen R Maynard, Leonardo Collado-Torres, Lukas M Weber, Cedric Uytingco, Brianna K Barry, Stephen R Williams, Joseph L Catallini, Matthew N Tran, Zachary Besich, Madhavi Tippani, et al. Transcriptome-scale spatial gene expression in the human dorsolateral prefrontal cortex. Nature neuroscience, 24(3):425–436, 2021.

- Min et al. [2024] Wenwen Min, Zhiceng Shi, Jun Zhang, Jun Wan, and Changmiao Wang. Multimodal contrastive learning for spatial gene expression prediction using histology images. arXiv preprint arXiv:2407.08216, 2024.

- Oksza-Orzechowski et al. [2024] Kazimierz Oksza-Orzechowski, Edwin Quinten, Shadi Darvish Shafighi, Szymon M Kiełbasa, Hugo van Kessel, Ruben AL de Groen, Joost SP Vermaat, Julieta H Seplúveda-Yáñez, Marcelo A Navarrete, Hendrik Veelken, et al. Caclust: linking genotype to transcriptional heterogeneity of follicular lymphoma using bcr and exomic variants. bioRxiv, pages 2024–04, 2024.

- Oord et al. [2018] Aaron van den Oord, Yazhe Li, and Oriol Vinyals. Representation learning with contrastive predictive coding. arXiv preprint arXiv:1807.03748, 2018.

- Oquab et al. [2023] Maxime Oquab, Timothée Darcet, Theo Moutakanni, Huy V. Vo, Marc Szafraniec, Vasil Khalidov, Pierre Fernandez, Daniel Haziza, Francisco Massa, Alaaeldin El-Nouby, Russell Howes, Po-Yao Huang, Hu Xu, Vasu Sharma, Shang-Wen Li, Wojciech Galuba, Mike Rabbat, Mido Assran, Nicolas Ballas, Gabriel Synnaeve, Ishan Misra, Herve Jegou, Julien Mairal, Patrick Labatut, Armand Joulin, and Piotr Bojanowski. Dinov2: Learning robust visual features without supervision, 2023.

- Pang et al. [2021] Minxing Pang, Kenong Su, and Mingyao Li. Leveraging information in spatial transcriptomics to predict super-resolution gene expression from histology images in tumors. BioRxiv, pages 2021–11, 2021.

- Qu et al. [2024] Linhao Qu, Yingfan Ma, Xiaoyuan Luo, Qinhao Guo, Manning Wang, and Zhijian Song. Rethinking multiple instance learning for whole slide image classification: A good instance classifier is all you need. IEEE Transactions on Circuits and Systems for Video Technology, 2024.

- Radford et al. [2021] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. Learning transferable visual models from natural language supervision. In ICML, pages 8748–8763. PMLR, 2021.

- Rousseeuw [1987] Peter J Rousseeuw. Silhouettes: a graphical aid to the interpretation and validation of cluster analysis. Journal of computational and applied mathematics, 20:53–65, 1987.

- Saillard et al. [2024] Charlie Saillard, Rodolphe Jenatton, Felipe Llinares-López, Zelda Mariet, David Cahané, Eric Durand, and Jean-Philippe Vert. H-optimus-0, 2024.

- Schaar et al. [2024] Anna Christina Schaar, Alejandro Tejada-Lapuerta, Giovanni Palla, Robert Gutgesell, Lennard Halle, Mariia Minaeva, Larsen Vornholz, Leander Dony, Francesca Drummer, Mojtaba Bahrami, et al. Nicheformer: a foundation model for single-cell and spatial omics. bioRxiv, pages 2024–04, 2024.

- Shao et al. [2021] Zhuchen Shao, Hao Bian, Yang Chen, Yifeng Wang, Jian Zhang, Xiangyang Ji, et al. Transmil: Transformer based correlated multiple instance learning for whole slide image classification. NIPS, 34:2136–2147, 2021.

- Shi et al. [2023] Jiangbo Shi, Lufei Tang, Yang Li, Xianli Zhang, Zeyu Gao, Yefeng Zheng, Chunbao Wang, Tieliang Gong, and Chen Li. A structure-aware hierarchical graph-based multiple instance learning framework for pt staging in histopathological image. IEEE Transactions on Medical Imaging, 42(10):3000–3011, 2023.

- Shi et al. [2024] Jiangbo Shi, Chen Li, Tieliang Gong, Yefeng Zheng, and Huazhu Fu. Vila-mil: Dual-scale vision-language multiple instance learning for whole slide image classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 11248–11258, 2024.

- Song et al. [2024] Andrew H Song, Richard J Chen, Guillaume Jaume, Anurag Jayant Vaidya, Alexander Baras, and Faisal Mahmood. Multimodal prototyping for cancer survival prediction. In ICML, 2024.

- Song et al. [2020] Fangda Song, Ga Ming Angus Chan, and Yingying Wei. Flexible experimental designs for valid single-cell rna-sequencing experiments allowing batch effects correction. Nature communications, 11(1):3274, 2020.

- Ståhl et al. [2016a] Patrik L Ståhl, Fredrik Salmén, Sanja Vickovic, Anna Lundmark, José Fernández Navarro, Jens Magnusson, Stefania Giacomello, Michaela Asp, Jakub O Westholm, Mikael Huss, et al. Visualization and analysis of gene expression in tissue sections by spatial transcriptomics. Science, 353(6294):78–82, 2016a.

- Ståhl et al. [2016b] Patrik L Ståhl, Fredrik Salmén, Sanja Vickovic, Anna Lundmark, José Fernández Navarro, Jens Magnusson, Stefania Giacomello, Michaela Asp, Jakub O Westholm, Mikael Huss, et al. Visualization and analysis of gene expression in tissue sections by spatial transcriptomics. Science, 353(6294):78–82, 2016b.

- Theodoris et al. [2023] Christina V Theodoris, Ling Xiao, Anant Chopra, Mark D Chaffin, Zeina R Al Sayed, Matthew C Hill, Helene Mantineo, Elizabeth M Brydon, Zexian Zeng, X Shirley Liu, et al. Transfer learning enables predictions in network biology. Nature, 618(7965):616–624, 2023.

- Tian et al. [2023] Luyi Tian, Fei Chen, and Evan Z Macosko. The expanding vistas of spatial transcriptomics. Nature Biotechnology, 41(6):773–782, 2023.

- Van der Maaten and Hinton [2008] Laurens Van der Maaten and Geoffrey Hinton. Visualizing data using t-sne. Journal of machine learning research, 9(11), 2008.

- Vorontsov et al. [2023] Eugene Vorontsov, Alican Bozkurt, Adam Casson, George Shaikovski, Michal Zelechowski, Siqi Liu, Kristen Severson, Eric Zimmermann, James Hall, Neil Tenenholtz, et al. Virchow: a million-slide digital pathology foundation model. arXiv preprint arXiv:2309.07778, 2023.

- Wang et al. [2024a] Pengyu Wang, Huaqi Zhang, Meilu Zhu, Xi Jiang, Jing Qin, and Yixuan Yuan. Mgiml: Cancer grading with incomplete radiology-pathology data via memory learning and gradient homogenization. IEEE Transactions on Medical Imaging, 2024a.

- Wang et al. [2022] Xiyue Wang, Sen Yang, Jun Zhang, Minghui Wang, Jing Zhang, Wei Yang, Junzhou Huang, and Xiao Han. Transformer-based unsupervised contrastive learning for histopathological image classification. Medical image analysis, 81:102559, 2022.

- Wang et al. [2024b] Xiyue Wang, Junhan Zhao, Eliana Marostica, Wei Yuan, Jietian Jin, Jiayu Zhang, Ruijiang Li, Hongping Tang, Kanran Wang, Yu Li, et al. A pathology foundation model for cancer diagnosis and prognosis prediction. Nature, pages 1–9, 2024b.

- Wolf et al. [2018] F Alexander Wolf, Philipp Angerer, and Fabian J Theis. Scanpy: large-scale single-cell gene expression data analysis. Genome biology, 19:1–5, 2018.

- Xiao et al. [2021] Yansen Xiao, Min Cong, Jiatao Li, Dasa He, Qiuyao Wu, Pu Tian, Yuan Wang, Shuaixi Yang, Chenxi Liang, Yajun Liang, et al. Cathepsin c promotes breast cancer lung metastasis by modulating neutrophil infiltration and neutrophil extracellular trap formation. Cancer cell, 39(3):423–437, 2021.

- Xie et al. [2024] Ronald Xie, Kuan Pang, Sai Chung, Catia Perciani, Sonya MacParland, Bo Wang, and Gary Bader. Spatially resolved gene expression prediction from histology images via bi-modal contrastive learning. NIPS, 36, 2024.

- Xu et al. [2024a] Hang Xu, Huazhu Fu, Yahui Long, Kok Siong Ang, Raman Sethi, Kelvin Chong, Mengwei Li, Rom Uddamvathanak, Hong Kai Lee, Jingjing Ling, et al. Unsupervised spatially embedded deep representation of spatial transcriptomics. Genome Medicine, 16(1):12, 2024a.

- Xu et al. [2024b] Hanwen Xu, Naoto Usuyama, Jaspreet Bagga, Sheng Zhang, Rajesh Rao, Tristan Naumann, Cliff Wong, Zelalem Gero, Javier González, Yu Gu, et al. A whole-slide foundation model for digital pathology from real-world data. Nature, pages 1–8, 2024b.

- Xu et al. [2024c] Yingxue Xu, Yihui Wang, Fengtao Zhou, Jiabo Ma, Shu Yang, Huangjing Lin, Xin Wang, Jiguang Wang, Li Liang, Anjia Han, et al. A multimodal knowledge-enhanced whole-slide pathology foundation model. arXiv preprint arXiv:2407.15362, 2024c.

- Xu et al. [2024d] Zhicheng Xu, Weiwen Wang, Tao Yang, Ling Li, Xizheng Ma, Jing Chen, Jieyu Wang, Yan Huang, Joshua Gould, Huifang Lu, et al. Stomicsdb: a comprehensive database for spatial transcriptomics data sharing, analysis and visualization. Nucleic acids research, 52(D1):D1053–D1061, 2024d.

- Yu et al. [2022] Jiahui Yu, Zirui Wang, Vijay Vasudevan, Legg Yeung, Mojtaba Seyedhosseini, and Yonghui Wu. Coca: Contrastive captioners are image-text foundation models. arXiv preprint arXiv:2205.01917, 2022.

- Yu et al. [2023] Xiaokang Yu, Xinyi Xu, Jingxiao Zhang, and Xiangjie Li. Batch alignment of single-cell transcriptomics data using deep metric learning. Nature communications, 14(1):960, 2023.

- Yuan et al. [2024] Xin Yuan, Yanran Ma, Ruitian Gao, Shuya Cui, Yifan Wang, Botao Fa, Shiyang Ma, Ting Wei, Shuangge Ma, and Zhangsheng Yu. Heartsvg: a fast and accurate method for identifying spatially variable genes in large-scale spatial transcriptomics. Nature Communications, 15(1):5700, 2024.

- Yuan et al. [2023] Zhiyuan Yuan, Wentao Pan, Xuan Zhao, Fangyuan Zhao, Zhimeng Xu, Xiu Li, Yi Zhao, Michael Q Zhang, and Jianhua Yao. Sodb facilitates comprehensive exploration of spatial omics data. Nature Methods, 20(3):387–399, 2023.

- Zeng et al. [2022] Yuansong Zeng, Zhuoyi Wei, Weijiang Yu, Rui Yin, Yuchen Yuan, Bingling Li, Zhonghui Tang, Yutong Lu, and Yuedong Yang. Spatial transcriptomics prediction from histology jointly through transformer and graph neural networks. Briefings in Bioinformatics, 23(5):bbac297, 2022.

- Zhu and Thompson [2019] Jiajun Zhu and Craig B Thompson. Metabolic regulation of cell growth and proliferation. Nature reviews Molecular cell biology, 20(7):436–450, 2019.

Appendix A More Experimental Results

A.1 Impact of Unimodal Pre-training

The pre-training process of Umpire is divided into two stages—unimodal encoder pre-training and multimodal alignment pre-training—to mitigate reliance on the quantity of paired pathology image-spatial transcriptomic gene data. Moreover, our experiments reveal that the first stage significantly accelerates the convergence of the loss function during the second stage. We conducted these experiments using Umpire, which integrates Visiumformer and Phikon [20]. Figure S1 illustrates that the convergence speed of the model’s loss is significantly impacted when Visiumformer skips the first-stage pre-training or when the pret-rained weights from Phikon are excluded.

A.2 More Results of Multimodal Representation Learning

The Results of Top 100 HEG and HVG Genes: In Section 4.3, the performance of Umpire and other methods were evaluated on the HLT, HPC, and HER2+ datasets by reporting the average Pearson correlation coefficient (PCC) for the top 50 highly expressed genes (HEG) and highly variable genes (HVG). Table S1 presents the PCC for the top 100 HEGs and HVGs across the three datasets, highlighting the consistent advantage of Umpire. Interestingly, a decline in predictive performance is observed when transitioning from the top 50 to the top 100 genes, suggesting that the model is particularly adept at identifying patterns among genes with the highest expression levels or the greatest variability. This finding underscores the model’s capacity to focus on genes that are more biologically significant and potentially more relevant in understanding complex biological processes. Furthermore, these genes are often the most informative markers of pathological alterations in tissues or tumors, emphasizing the practical utility of the approach for detecting critical molecular changes associated with disease states [82, 23, 22].

| Top 100 | Method | HLT | HPC | HER2+ | Average | |||

| HVG | HEG | HVG | HEG | HVG | HEG | |||

| Regression based | ST-Net [25] | 0.0265±0.0112 | 0.0301±0.0076 | 0.1890±0.1568 | 0.0631±0.0480 | 0.1062±0.0570 | 0.0940±0.0413 | 0.0848 |

| HisToGene [53] | 0.0344±0.0213 | 0.0387±0.0284 | 0.1172±0.0876 | 0.0888±0.0387 | 0.0301±0.0363 | 0.0228±0.0299 | 0.0553 | |

| His2ST [84] | 0.0051±0.0125 | 0.0028±0.0157 | 0.0224±2.09 | 0.0138±0.0129 | 0.0411±0.0185 | 0.0298±0.0177 | 0.0192 | |

| THItoGene [38] | 0.0055±0.0124 | 0.0023±0.0126 | 0.0311±2.84 | 0.0193±0.0246 | 0.0319±0.0135 | 0.0207±0.0098 | 0.0185 | |

| Contrastive learning based | mclSTExp [49] | 0.1530±0.0313 | 0.2561±0.0164 | 0.2738±0.1272 | 0.0967±0.0105 | 0.1324±0.0713 | 0.0929±0.0486 | 0.1675 |

| BLEEP [75] | 0.1579±0.0354 | 0.2530±0.0195 | 0.2885±0.1300 | 0.0999±0.0432 | 0.1443±0.0637 | 0.1283±0.0562 | 0.1787 | |

| Umpire-Adapter (Ours) | Niche. + Phikon | 0.1478±0.0383 | 0.2532±0.0246 | 0.3630±0.1604 | 0.1906±0.0407 | 0.2329±0.0881 | 0.2136±0.0661 | 0.2335 |

| Niche. + UNI | 0.1559±0.0365 | 0.2657±0.0184 | 0.3896±0.1481 | 0.1918±0.0166 | 0.2409±0.0872 | 0.2028±0.0626 | 0.2412 | |

| Visium. + Phikon | 0.1849±0.0370 | 0.2909±0.0193 | 0.3818±0.1611 | 0.2114±0.0376 | 0.2482±0.0846 | 0.2185±0.0635 | 0.2560 | |

| Visium. + UNI | 0.1854±0.0371 | 0.2874±0.0212 | 0.3781±0.1580 | 0.1645±0.0324 | 0.2478±0.0898 | 0.2153±0.0637 | 0.2464 | |

| Umpire-Finetune (Ours) | Trans. + Phikon | 0.1841±0.0407 | 0.2832±0.0314 | 0.3854±0.1567 | 0.2191±0.0352 | 0.2048±0.0858 | 0.1674±0.0621 | 0.2407 |

| Trans. + UNI | 0.1378±0.0353 | 0.2252±0.0194 | 0.3834±0.1541 | 0.1941±0.077 | 0.2069±0.0802 | 0.1683±0.0627 | 0.2193 | |

| Niche. + Phikon | 0.1740±0.0365 | 0.2680±0.0212 | 0.3796±0.1453 | 0.2069±0.0227 | 0.2289±0.0880 | 0.2023±0.0620 | 0.2433 | |

| Niche. + UNI | 0.1563±0.0377 | 0.2588±0.0236 | 0.3881±0.1390 | 0.2146±0.0317 | 0.2340±0.0879 | 0.1983±0.0651 | 0.2417 | |

| Visium. + Phikon | 0.1855±0.0412 | 0.2838±0.0249 | 0.3949±0.1483 | 0.2271±0.0281 | 0.2438±0.0904 | 0.2175±0.0681 | 0.2588 | |

| Visium. + UNI | 0.1919±0.0368 | 0.2913±0.0246 | 0.3898±0.1550 | 0.2207±0.0296 | 0.2467±0.0907 | 0.2177±0.0682 | 0.2597 | |

Additional Case Study: In Section 4.3, the predicted expression of the PIBF1 gene for sample patient-1-H2-5 was visualized using Umpire and other methods. Furthermore, the predicted expression levels were visualized alongside the ground truth for the CTSC (Figure S2) and H2AZ1 (Figure S3) genes for the same sample. Compared to other methods, Umpire demonstrates a superior ability to comprehensively preserve the heterogeneity of gene expression within tissue slices, particularly in distinguishing between tumor and normal regions. This enhanced capability allows clinicians and researchers to focus on areas of the tissue slices that provide greater informational value, thereby facilitating more targeted and insightful analyses.

A.3 Zero-shot Embeddings Visualization

Following pre-training, we conducted a zero-shot t-Distributed Stochastic Neighbor Embedding (t-SNE) [68] visualization on the DLPFC dataset, focusing on sample 151673, as shown in Figure S4. In addition, we evaluated the model’s performance using the Silhouette score (Silhouette) [56] and the Davies-Bouldin Index (DB Index) [15]. Prior to Umpire pre-training, the model could distinguish only the white matter (WM), with the remaining cortical layers (L1-L6) largely indistinguishable. Post-pre-training, however, the model exhibited a markedly improved capacity to differentiate among the cortical layers, accompanied by substantial improvements in both the Silhouette and DB Index, reflecting enhanced spatial and cluster separation.

A.4 Loss Function Ablation Study

As detailed in Section 4.3, we conducted an ablation study on the loss functions using the DLPFC dataset for the linear probing classification task. Replacing the symmetric contrastive loss (SCL) with reconstruction loss functions (mean squared error loss and L1 loss) resulted in a weighted F1 score reduction of and , respectively, for Phikon. Similarly, substituting SCL with Contrastive loss [24] and InfoNCE loss [51] led to weighted F1 score decreases of and , respectively. The effects of different loss functions on Umpire-UNI and Umpire-Visium were also analyzed, showing consistent performance degradation with loss function replacement. These findings are visualized in Figure S5. The superior performance of SCL can be attributed to the symmetry it introduces in contrastive learning, enabling more effective capture of bidirectional relationships within the data. This symmetry enhances the model’s generalization across diverse tasks.

A.5 Impact of Pathological Vision Encoder

In the task of gene expression prediction, our model, Umpire, achieved improvements in the PCC of and compared to ST-Net [25] and BLEEP [75], respectively. Umpire employs UNI [8] and Phikon [20] as vision encoders to encode pathology images, whereas ST-Net and BLEEP utilize DenseNet-121 [29] and ResNet-50 [26] as their respective vision encoders. To demonstrate that the significant performance improvement of Umpire is not solely attributable to using more powerful pathology-specific vision encoders, we replaced the vision encoders in ST-Net and BLEEP with Phikon and conducted the same experiments. Table S2 reports the performance changes observed when the vision encoder in ST-Net was replaced. This modification led to performance improvements in the HLT and HPC datasets. However, a decline in performance was noted on the HER2+ dataset, suggesting dataset-specific effects of the encoder replacement. Overall, the performance of the modified ST-Net improved by compared to the original ST-Net, yet it still significantly lagged behind that of the original BLEEP. Conversely, the situation was entirely different for BLEEP; when we replaced the vision encoder in BLEEP with Phikon, the performance across all three datasets decreased, with an average decline of . This phenomenon has also been observed by Xie et al. [75], who attributed it to the use of large-parameter vision encoders on small-scale datasets. They argued that such an approach might lead the network to prioritize memorizing information within its weights rather than encoding it effectively in the projection space, ultimately compromising overall performance. In contrast, Umpire addresses this challenge by incorporating pre-training on extensive large-scale datasets, which enables the model to learn more robust and transferable representations. The improvements achieved by Umpire stem from the synergistic contributions of all modules and the strategic benefits of pre-training, rather than solely from replacing the vision encoder with a pathology-specific alternative.

Appendix B Model Architecture, Experiment Settings and Comparison Methods

B.1 Model Architecture

Tokenization for Visiumformer: In biological experiments, systematic differences in measurement results, known as batch effects, can arise from variations in sample processing, experimental conditions, timing, operators, or other technical factors. These effects are particularly pronounced in high-throughput sequencing techniques, including RNA sequencing, single-cell sequencing, and spatial transcriptomics, and they can substantially influence data analysis and biological interpretation [63, 81]. To mitigate batch effects, we standardized the count data across all spots, ensuring each spot contained 10,000 counts. Subsequently, we computed the average expression value for each gene across all data, considering only non-zero values in the calculation. The final normalized data were obtained by dividing the initial normalized values by the corresponding average expression values. The normalized results were then sorted in descending order, and the indices of the top genes were selected as the tokenized gene expression data. The complete normalization and tokenization procedure is detailed in Algorithm 1.

Model Architecture of Visiumformer: Visiumformer is composed of 12 stacked Transformer blocks. As shown in Figure 1, each Transformer block primarily consists of a multi-head attention mechanism and a feed-forward network (FFN). In this work, we use 16 attention heads, set the token dimension to , and configure the hidden layer of the feed-forward network to 1024. For more details on the model architecture, please refer to Table S3.

Model Architecture of Trans.: To highlight the necessity of pre-training for Visiumformer, we designed a Transformer baseline model (Trans.), described in Section 4.3, where normalized gene expression values serve as input without any pre-training. The gene expression values for Trans. were normalized using the same method as Visiumformer. Given the high dimensionality of gene expression data, the Scanpy library [73] was employed to select the top 1,500 highly variable genes across the training dataset. A transformation was then applied to prepare the input. This processed input was also used as the regression target for Umpire-Rec. To ensure fairness in comparison, the Transformer blocks in Trans. were kept identical to those in Visiumformer.

B.2 Experiment Settings

| HLT | HPC | HER2+ | Average | ||||

| HVG | HEG | HVG | HEG | HVG | HEG | ||

| ST-Net [25] | 0.0421±0.0206 | 0.0406±0.0140 | 0.2172±0.1720 | 0.0445±0.0386 | 0.1129±0.0576 | 0.0940±0.0421 | 0.0919 |

| ST-Net-Phikon | 0.1090±0.0294 | 0.1140±0.0103 | 0.2326±0.1557 | 0.1301±0.0512 | 0.0842±0.0597 | 0.0583±0.0442 | 0.1214 |

| BLEEP [75] | 0.1995±0.0435 | 0.2956±0.0253 | 0.3221±0.1417 | 0.0969±0.0300 | 0.1692±0.0729 | 0.1336±0.0573 | 0.2028 |

| BLEEP-Phikon | 0.0149±0.0274 | 0.0240±0.0334 | 0.2598±0.1831 | 0.0786±0.0481 | 0.0804±0.0627 | 0.0513±0.0493 | 0.0848 |

Pre-training for Visiumformer: Pre-training for Visiumformer was conducted using four NVIDIA A800 GPUs. The configurations for this per-training, including hyperparameters and setup details, are thoroughly outlined in Table S3.

Pre-training for Alignment: All pre-training experiments for alignment were conducted using four NVIDIA A800 GPUs. Additional experimental configurations are provided in Table S4. In addition, gene expression hidden states were extracted from the -th Transformer block, and mean pooling was applied across the sequence length dimension to obtain the encoded gene expression embedding.

Experimental Platform for Downstream Tasks: We evaluated our Umpire on multiple downstream tasks, all of which were performed on a single NVIDIA A800 GPU.

Experiment Settings for Multimodal Representation Learning: When fine-tuning on downstream datasets, leave-one-out cross-validation was employed, using one slice as the test set, while the remaining slices were used for training and validation. The model architecture is kept identical to that during pre-training. We set the learning rate to 1e-4, weight decay to 1e-3, and did not use warmup. AdamW was used as the optimizer. In addition, of the training data is used as the training set, and the remaining is used as the validation set. All models were trained for 50 epochs, with early stopping based on the validation loss and a patience of 5. When implementing Umpire-Adapter, two linear layers with ReLU activation were incorporated following the Gene Encoder and the Vision Encoder, with a bottleneck layer dimension set to 128.

| Hyperparameter | Value | |

| Model Architecture | Vocab size | 20,310 |

| Token dimensionality | 512 | |

| FFN dimensionality | 1024 | |

| Number of Transformer layers | 12 | |

| Max sequence length | 1,500 | |

| Number of attention heads | 16 | |

| Dropout | 0.0 | |

| Hidden act | ReLU | |

| LayerNorm eps | 1e-12 | |

| Training Details | Optimizer | AdamW |

| Scheduler | CosineWarmupScheduler | |

| Max learning rate | 1e-4 | |

| Min learning rate | 1e-5 | |

| Warm up steps | 20,000 | |

| Total steps | 1,000,000 | |

| Weight decay | 0.1 | |

| Global batch size | 256 | |

| Masking probability | 0.15 |

| Hyperparameter | Values |

| Similarity function | Cosine similarity |

| Optimizer | AdamW |

| Scheduler | CosineWarmupScheduler |

| Max learning rate | 1e-4 |

| Min learning rate | 1e-5 |

| Warm up steps | 5,000 |

| Total epochs | 10 |

| Weight decay | 1e-3 |

| Globa batch size | 512 |

| Extraction layer | 12 |

| Pooling method | Mean |

Experiment Settings for Linear Probing: Since the DLPFC dataset consists of 12 slices, we similarly employed leave-one-out cross-validation. In contrast, the 10X Breast dataset contains only a single slice, so five-fold cross-validation was used for this dataset. Adam was selected as the optimizer, with the learning rate set to 1e-4. The feature encoders were frozen, and only a trainable linear layer was added. All models were trained for 50 epochs, configuring early stopping with a patience 5.

Experiment Settings for MIL-based WSI Classification: We used CLAM [45] to divide all WSIs into non-overlapping patches of pixels at 20 magnification. To meet the input requirements of the vision encoder, all patches were resized to pixels. Since each patient may have multiple WSIs, five-fold cross-validation was performed at the patient level to prevent data leakage. When a patient had multiple WSIs, the patches obtained from all WSIs were stacked into a single bag. The simple yet effective ABMIL framework [31] was utilized as the feature aggregation module, while the cross-entropy loss was employed to guide the training process. All models were set with a learning rate of 5e-4, used Adam as the optimizer, and were trained for 50 epochs with early stopping and a patience of 5.

B.3 Downstream Comparison Methods

To comprehensively evaluate the capabilities of Umpire, in Section 4.3, we compared several models, including regression-based models: ST-Net [25], HisToGene [53], His2ST [84], and THItoGene [38], as well as contrastive learning-based models: BLEEP [75], and mclSTExp [49].

ST-Net is a deep learning model developed to integrate spatial transcriptomics data with pathology images for predicting gene expression in breast cancer. The model processes hematoxylin and eosin (H&E)-stained tissue image patches of pixels, corresponding to spots approximately 100 µm in diameter. It utilizes DenseNet-121 [29] to extract image features, followed by a fully connected layer to predict the expression levels of 250 target genes. We only modified the fully connected layer to enable it to predict the full-dimensional gene expression.

HisToGene utilizes a modified Vision Transformer architecture to account for the spatial dependencies between spatial transcriptomics spots. It first extracts image patches corresponding to the spatial coordinates of each spot in the spatial transcriptomics data. These patches are then processed through a learnable linear layer to generate patch embeddings and positional embeddings to capture spatial relationships. HisToGene employs multi-head attention layers to model these dependencies and predict gene expression.

His2ST integrates Convolutional Neural Networks (CNNs) and Graph Convolutional Networks (GCNs) to predict spatial gene expression from histopathological images. CNNs are used to extract local features from the input images, capturing the tissue’s morphological characteristics. GCNs then model the spatial relationships between neighbouring regions, enabling the model to effectively capture the spatial dependencies of gene expression within the tissue.

THItoGene integrates dynamic convolutional networks, Efficient Capsule Networks, Vision Transformers, and Graph Attention Networks. By synthesizing these advanced components, THItoGene effectively captures local visual features, spatial dependencies, and inter-spot relationships. This powerful combination enables accurate high-resolution gene expression prediction from pathology images.

BLEEP is a framework that utilizes contrastive learning to predict gene expression from pathology images. The model learns a joint low-dimensional embedding space from paired pathology images and gene expression profiles. Given a query image patch, BLEEP imputes gene expression by referencing the nearest neighbours in the learned embedding space from a reference dataset. This framework enables accurate and efficient prediction of spatially resolved gene expression profiles, outperforming existing methods in terms of prediction accuracy while preserving biological heterogeneity and robustness to experimental artifacts.

mclSTExp employs a Transformer-based architecture to explicitly model spatial dependencies in spatial transcriptomics. It treats spatial transcriptomics spots as “words” in a sequence, utilizing self-attention mechanisms to integrate positional and contextual information. By incorporating image features via contrastive learning, mclSTExp improves the accuracy of spatial gene expression predictions, especially in capturing complex tissue structures.

Appendix C Complexity Analysis

C.1 Complexity Analysis of Visiumformer

Visiumformer is built on the Transformer and BERT architectures, which means that its time and space complexity bottleneck arises from the self-attention mechanism, characterized by a complexity of , where represents the context length of the tokens input into Visiumformer, denotes the number of Transformer blocks, and denotes the embedding dimension.

| Top 50 | Method |

|

|

|

||||||

| Regression based | ST-Net [25] | 27.77M | 17.27G | 0.0848 | ||||||

| HisToGene [53] | 242.35M | 1.45G | 0.0553 | |||||||

| His2ST [84] | 92.34M | 108.3G | 0.0192 | |||||||

| THItoGene [38] | 83.60M | 82.11G | 0.0185 | |||||||

| Contrastive learning based | mclSTExp [49] | 23.21M | 17.24G | 0.1675 | ||||||

| BLEEP [75] | 24.55M | 24.63G | 0.1787 | |||||||

| Umpire-Adapter (Ours) | Visium. + Phikon | 0.92M | 105.46G | 0.2560 | ||||||

| Visium. + UNI | 1.05M | 358.09G | 0.2464 | |||||||

| Umpire-Finetune (Ours) | Visium. + Phikon | 135.76M | 332.23G | 0.2588 | ||||||

| Visium. + UNI | 353.44M | 584.86G | 0.2597 |

C.2 Complexity Analysis of Umpire

Umpire primarily consists of two branches: the gene encoder, Visiumformer, and the vision encoder, ViT. Therefore, its time complexity is , where represents the context length of the vision encoder, denotes the number of Transformer blocks, and denotes the embedding dimension. For a batch of data, the time complexity of training Umpire can be expressed as: , where represents the batch size and represents the complexity involved in computing the symmetric contrastive learning loss. In the task of gene expression prediction using multimodal representation learning, the time complexity of Umpire for inferring a single image is , where denotes the number of reference embeddings, and represents the dimensionality of the aligned embeddings.

C.3 Comparison with Baseline Methods