Towards Lightweight and Stable Zero-shot TTS with Self-distilled Representation Disentanglement

Abstract

Zero-shot Text-To-Speech (TTS) synthesis shows great promise for personalized voice customization through voice cloning. However, current methods for achieving zero-shot TTS heavily rely on large model scales and extensive training datasets to ensure satisfactory performance and generalizability across various speakers. This raises concerns regarding both deployment costs and data security. In this paper, we present a lightweight and stable zero-shot TTS system. We introduce a novel TTS architecture designed to effectively model linguistic content and various speaker attributes from source speech and prompt speech, respectively. Furthermore, we present a two-stage self-distillation framework that constructs parallel data pairs for effectively disentangling linguistic content and speakers from the perspective of training data. Extensive experiments show that our system exhibits excellent performance and superior stability on the zero-shot TTS tasks. Moreover, it shows markedly superior computational efficiency, with RTFs of 0.13 and 0.012 on the CPU and GPU, respectively.

Index Terms— Text-to-speech synthesis, Voice clone, Speaker representation, Self-distillation.

1 Introduction

The recent advancements in Text-To-Speech (TTS) synthesis have catalyzed an increasing demand for personalized voice customization, especially in speaker imitation using voice cloning techniques. However, traditional TTS approaches typically require a large amount of high-quality speaker speech data as supervision signals to adapt to new speakers[1, 2]. Such approaches not only demand substantial resources to fine-tune and maintain a unique TTS model for each user, but also require users to record substantial amounts of speech data. To alleviate this challenge, recent research[3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18] has explored zero-shot voice cloning with multi-speaker TTS systems, appearing to provide a promising solution.

Zero-shot TTS provides a highly generalised model that is capable of synthesising speech that resemble a new speaker with only a brief prompt speech (typically a few seconds). This approach eliminates the need for additional model finetuning. Recent zero-shot TTS studies can be broadly categorized into two types: those leveraging speaker representation models[7, 3, 4, 5, 6, 8] and those employing in-context learning strategies[14, 15, 16, 17, 18]. Speaker representation-based approaches enable explicitly modeling a target speaker’s representation from any given speech input. This is achieved either through pre-trained speaker encoders[19, 20, 5, 21] or through speaker encoders jointly trained with the TTS model[9, 10, 8, 11, 6]. These speaker representations are then utilized as global[5, 4] or temporal[8, 12] conditional encodings within the audio generation. By thoroughly learning the speaker characteristics of numerous speakers in the training dataset, the model can map the speaker latent space to the corresponding speech properties, thus attaining zero-shot capability. Recent studies leverage superior in-context learning capabilities of diffusion models[7, 13] or Large Language Models (LLMs)[14, 15, 16, 17, 18] to learn speaker-related characteristics from the prompt speech. These approaches demonstrate the efficacy of leveraging contextually rich information to achieve speech synthesis across unseen speakers.

Despite significant progress in recent zero-shot TTS, these methods still face considerable challenges in practical applications, i.e., high resource dependence and suboptimal synthesis stability. First, current zero-shot TTS systems rely on large-scale parameters to model the inherent diversity of human voices from a massive amount of data. For example, CosyVoice[16] employs a 414M model and requires 171K hours of training data. Such an enormous model hinders their integration into resource-constrained environments. It not only increases service costs, but also raises privacy and security concerns, as users’ speech prompts still need to be uploaded to cloud servers. Second, while autoregressive speech modeling methods such as Tacotron[22] and VALL-E[14] improve in-contextual modeling and enhance speech expressiveness, they also increase vulnerability to time series prediction errors such as omissions, incorrect readings, repetitions, and even severe error accumulation, leading to suboptimal stability.

In this paper, we present a lightweight and stable zero-shot TTS system with self-distilled representation disentanglement. On the one hand, we propose a TTS architecture that effectively models the prompt speech into multi-level speaker representations, including global timbre features and temporal style features (i.e., features that change over time, including speed, pitch, and energy). By explicitly leveraging the multi-level speaker representations, the model can operate effectively with less data and simpler architectures, as these representations capture the essential characteristics of the speakers. On the other hand, we employ a self-distillation framework to enhance the model’s ability to disentangle content from speaker characteristics. We use a pre-trained teacher model to generate parallel data pairs that differ only in speaker characteristics, thereby guiding the training of a student model. This disentangles the linguistic content from the speaker-related attributes at the data level, reinforcing the model’s ability to synthesize speech conditioned solely on the provided prompt speech. Extensive objective and subjective evaluations demonstrate that our system outperforms baseline models in content integrity while showing promise in speaker similarity. Moreover, our framework exhibits the most exceptional computational efficiency, rendering it well-suited for resource-constrained environments and real-time applications. Audio samples are available at the demo website: https://zzfng9696.github.io.

2 Zeo-shot TTS Model

In this section, we introduce the model of our system. Figure 1 shows the model architecture, which comprises two main parts, i.e., content extraction and speaker adaptation.

2.1 Content Extraction

In the first part, we extract speaker-independent content representation from both text (phoneme sequence) and the corresponding ground truth speech (mel-spectrogram). To enhance the expressive capacity for complex speech content, we integrate a Mel Variational Autoencoder (VAE) with a flow-based model to construct a latent space of speech, transcending the limitations of text-only representations. We first encode both the text and speech at the phoneme level using a linguistic encoder and a mel encoder, generating representations and , respectively. We use as a condition for predicting through Volume-Preserving Flow (VP-Flow). After that, we fuse with , forming the content representation , which is then fed into the speaker adaptivation part. Specifically, the text encoder consists of multiple feed-forward Transformer blocks with relative positional encoding[23]. The mel encoder consists of multiple 2D Residential blocks (ResBlock) to downsample and encode the input mel-spectrogram, followed by average pooling to map frame-level features to phoneme-level features. Another multi-level ResNet estimates the mean and variance of the variational latent space. Note that the source speech contains not only content information, but also information related to the target speaker. This content-speaker coupling leads to the leakage of speaker information from . We address this issue using a self-distillation framework in Section 3.

2.2 Speaker Adaptation

In the second part, we explicitly model multi-level speaker-specific representations from the prompt speech, i.e., temporal style representations and global timbre representations. These representations serve as conditions for steering the synthesis of the target speech, ensuring that it accurately reflects the desired speaker characteristics.

We adopt a trainable mel encoder to extract the temporally-related style representation, . This encoder is similar to that used in HierSpeech++[10] and GPT-SoVITS[17], but it includes positional encoding and omits the final temporal averaging layer to better capture temporal information. We use as a condition for a variation adapter, which consists of three predictors for independent speech attribute predictors, i.e., duration, pitch, and energy. We integrate and align with the input sequence of the predictors through a multi-head cross-attention layer. These predictors are individually trained under supervised conditions using annotated data. To ensure the model’s stability, we separate the gradients of these predictors from the main network.

For timbre extraction, we utilize a speaker recognition model as the timbre encoder, i.e., ERes2NetV2[24], pre-trained on a large-scale multi-domain dataset, Speaker3D[25]. This encoder generates a comprehensive speaker latent space that encompasses various speakers and speaking conditions, facilitating the effective application of unseen speaker features to achieve precise modeling of their vocal styles. Additionally, a trainable linear layer is integrated into this model to yield the speaker representation . To transfer the timbre representation extracted from arbitrary speech into synthesized speech, we employ a speaker-adaptive mel Decoder based on a multi-level ResNet architecture with Adaptive Instance Normalization (AdaIN) layer.

3 Two-stage Self-distillation

In this section, we propose a self-distillation framework aimed at disentangling linguistic content and speaker characteristics from the perspective of data. The basic idea is to construct parallel data pairs featuring identical content but distinct speakers, utilizing the model’s initial zero-shot capability. Figure 2 shows the training framework, which involves the sequential training of a teacher model and a student model.

3.1 Stage 1: Training Teacher TTS

We begin by training a teacher model , which is expected to effectively reconstruct the linguistic content from the given text and preliminarily clone the speaker’s voice from the prompt speech.

Given a single speech sample , where and represent the temporal and frequency dimensions, respectively, we extract from and from the corresponding text . These two representations are combined to obtain . Next, we use the timbre encoder and the style encoder to extract and from the prompt speech . Using as a condition, we predict time-dependent speech attributes and apply these to . Finally, we input the fused feature into the Mel decoder to reconstruct the speech mel spectrogram, ensuring that the generated speech matches the target speaker’s timbre by conditioning on . We employ the following loss functions to guide the training process.

Primary Losses. A reconstructive loss () based on the -norm is employed to quantify the discrepancy between the reconstructed and original speech. Additionally, a Kullback-Leibler (KL) divergence loss () is utilized to constrain the distribution of the speech and linguistic representations. A PatchGAN-structured discriminator is employed to leverage its capability for multi-scale analysis, distinguishing synthesized mel-spectrograms from genuine audio features. This discriminator facilitates an adversarial training paradigm with the generator, utilizing the Least Squares Generative Adversarial Network (LSGAN) loss ().

Style-related Losses. A suite of loss functions refine the accuracy of our speech attribute predictors within the variance adapter. For every speech sample , our model generates predictions for the attributes, i.e., duration, pitch, and energy, denoted as , where spans {duration, pitch, energy}. The loss function, , quantifies the mean squared error (MSE) between the model’s predictions and the ground truth values.

Timbre-related Loss. A cyclic contrastive loss function is employed to enforce alignment between the output speech and the prompt audio within the speaker space of the timbre encoder. Let and denote the timbre representations of the original mel-spectrogram and reconstructed mel-spectrogram, respectively, where indexes the samples within a batch of size . The speaker representation , obtained by feeding into the speaker encoder, should closely match . Conversely, speaker representations of other utterances within the mini-batch should significantly diverge. The contrastive loss is defined as[26]:

| (1) |

where, denotes the cosine similarity between two vectors, and is an indicator function.

3.2 Stage 2: Training Student TTS

By leveraging the well-trained teacher model, we proceed to generate parallel data pairs for distilling a student model.

Given a speech sample , and the corresponding text, represented as a phoneme sequence , our method begins by employing the teacher model to synthesize a new speech sample . The synthetic speech sample preserves the textual content , but is delivered by a distinct speaker. To this end, we begin by randomly selecting a prompt speech sample from our training dataset. The sample corresponds to a different speaker than the speaker in . Then, we infer the teacher model with and for the generation of . For the strict temporal alignment of the phonemes of the and , we explicitly specify the prediction result of the duration predictor as the annotated phoneme duration of during the inference process.

After obtaining the time-aligned parallel data pair , we proceed to train the student model. The student model mirrors the same model structure as the teacher model but is initialized randomly. We input as the speech content for the student model, accompanied by as the linguistic content input. Additionally, we randomly select another speech sample as the prompt, which is uttered by the same speaker as . We optimize the student model following the same procedure as detailed in Section 3.1, using along with its detailed annotation serving as the ground truth.

To regulate the intensity of distillation, we blend real and synthesized samples within each training batch. To this end, we introduce a coefficient , which modulates the proportion of synthetic speech samples. Specifically, in each batch of speech content input, we replace randomly selected speech samples with their corresponding synthesized speech samples, while the remaining samples remain as the ground truth speech samples.

The self-distillation framework ensures that the student model’s content extraction avoids speaker-specific details, compelling it to rely solely on prompt speech for speaker adaptation, thereby enhancing the separation of the representations.

4 Evaluation

4.1 Experiemnt Setup

We train both the teacher and student models on a diverse dataset, comprising 4,678 speakers, totaling 531 hours of audio. The preprocessing pipeline involve extracting 80-dimensional mel-spectrograms from the audio clips. For audio reconstruction from mel-spectrograms, we employ a NFS-HiFiGAN model[27] as vocoder. The TTS models are trained using an AdamW optimizer and a batch size of 64 for 200,000 training steps. When training the student model, we set by default.

Our proposed model is compared against four state-of-the-art zero-shot Text-to-Speech (TTS) models: Vall-E[14], X-TTSv2[28], CosyVoice[16], and GPT-SoVITS[17]. We reproduce Vall-E based on the published methodology[14] and trained it on a speech corpus of approximately 60K hours and 19K speakers. The other systems utilize the open-source code and pre-trained models provided by their respective authors.

Prompt speeches for the test dataset are collected from 20 volunteers (10 males and 10 females) who are not part of the training set, ensuring that the baseline system’s training data does not contain these test cases. For each volunteer, we record 5 audio clips, ensuring high audio quality, clear pronunciation, and varied durations ranging from 5 to 10 seconds. For each prompt speech, we generate 100 synthetic speeches using different sentences, with lengths ranging from approximately 30 to 50 characters.

The metrics employed in the experiments are as follows,

- •

-

•

Character Error Rate(CER): We use a pre-trained ASR model[30] to infer transcripts, and then derive CERs by calculating the edit distance between the transcripts and the correct text.

-

•

Real-Time Factor(RTF): We measure RTF on a Sever platform equipped with an Intel(R) Xeon(R) Platinum [email protected] CPU and an Nvidia Tesla A100 GPU.

-

•

Mean Opinion Score (MOS): We invite 50 volunteers to score 20 groups of generated speech based on their on their subjective feelings in terms of content consistency () and speaker similarity () on a scale of 1 to 5. Final MOS scores are calculated with a 95% confidence interval.

4.2 Overall Performance Comparing with Baselines

| Model | Content Integrity | Speaker Similarity | ||

|---|---|---|---|---|

| CER | SIM | |||

| Vall-E | 2.89 | 4.170.11 | 0.72 | 3.240.12 |

| X-TTSv2 | 4.05 | 3.310.16 | 0.47 | 2.040.09 |

| GPT-SoVITS | 4.89 | 4.180.12 | 0.70 | 3.400.12 |

| CosyVoice | 3.42 | 4.350.10 | 0.84 | 3.730.11 |

| Our system | 1.80 | 4.430.09 | 0.73 | 3.310.11 |

| Model | Params | Data(h) | ||

|---|---|---|---|---|

| Vall-E | 370M | 100K | 2.61 | 0.47 |

| X-TTSv2 | 447M | N/A | 5.75 | 0.26 |

| GPT-SoVITS | 223M | 2K | 3.53 | 0.38 |

| CosyVoice | 414M | 173K | 15.3 | 1.89 |

| Our system | 22.5M | 531 | 0.13 | 0.012 |

Table 2 presents the overall performance comparison of our system with baseline systems regarding content integrity and speaker similarity. In terms of content integrity, our system achieves the lowest CER of 1.8 and the highest of 4.43, surpassing all baseline models. These results highlight our system’s ability to generate accurate and reliable content that meets expectations. In terms of speaker similarity, our system attains a SIM score of 0.73, trailing only behind the state-of-the-art model, CosyVoice, which scores 0.84. may slightly differ from the SIM results, because the objective scoring model primarily assesses timbre similarity, while human raters consider additional factors such as speaking style and emotional expressiveness. Overall, the experimental results demonstrate that our system outperforms baseline models in content integrity while showing promise in speaker similarity.

Table 2 shows the efficiency comparison of our system with baseline systems in terms of parameter scale, data requirements, and real-time performance metrics. All baseline systems exceed 200M parameters, with CosyVoice and X-TTSv2 even surpassing 400M parameters. While this extensive parameter count enables robust speaker modeling, it necessitates considerable training datasets and computational resources, as illustrated by the significant data hours and RTF involved. In contrast, our system employs a lightweright architecture with only 22.5M parameters, making it both data and computationally efficient. Regarding real-time performance, all baseline systems operate slower than real-time on CPUs, with their RTF values significantly exceeding 1. In comparison, our system achieves RTFs of 0.13 on CPUs and 0.012 on GPUs, indicating at least a tenfold improvement over the baselines.

4.3 Impact of Self-distillation Framework

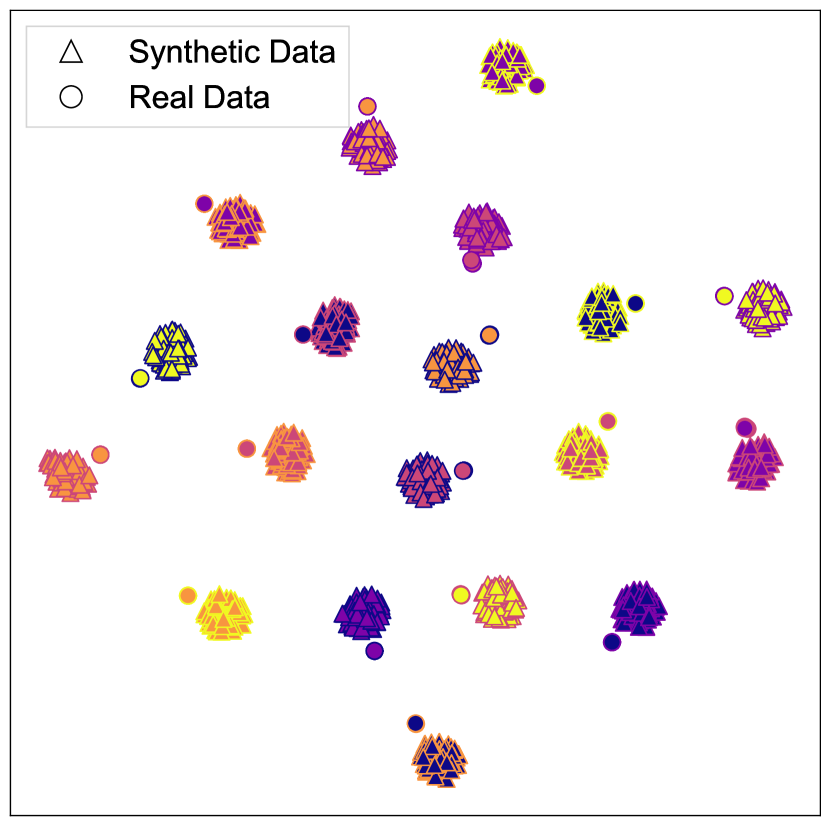

Figure 3 shows the t-SNE visualization of speaker embeddings from our system with and without self-distillation. We can obvious that, compared with real speech samples(triangles), the non-distillation model shows a clear separation between the point clusters of real speeches and synthesized speeches (circles). In contrast, the self-distilled model exhibits less separability. The distance between the feature points of real speech and synthesized speech is significantly reduced, and in some cases, they even highly overlap. This indicates a high degree of timbre consistency achieved by the self-distilled model, highlighting its effectiveness in enhancing the similarity of model speaker characteristics.

Figure 4 quantitatively illustrates the impact of the self-distillation coefficient on the SIM and the CER. As increased, the SIM score steadily improves to a maximum of 0.73 at . Further increases in led to a slight decline in the score. This trend emphasizes the importance of selecting an appropriate value to enhance speaker feature disentanglement and improve the model’s generalization capabilities. Meanwhile, CER scores exhibit stability across varying values, experiencing minimal fluctuations between and . This finding indicates that the self-distillation framework can effectively improve victim similarity without compromising the integrity of the content.

5 Conclusion

In this paper, we introduce a lightweight and stable zero-shot TTS system. We first propose a novel TTS architecture designed to effectively model linguistic content and various speaker-related attributes from source speech and prompt speech, respectively. Next, we present a two-stage self-distillation framework that constructs parallel data pairs for effectively disentangling linguistic content and speakers from the aspect of training data. Both objective and subjective evaluations demonstrate that our system matches the Baseline in zero-shot speech synthesis performance while achieving superior computational efficiency.

References

- [1] Jian Cong, Shan Yang, Lei Xie, et al., “Data Efficient Voice Cloning from Noisy Samples with Domain Adversarial Training,” in Proceedings of ISCA INTERSPEECH, Shanghai, China, 2020, pp. 811–815.

- [2] Yi Ren, Jinglin Liu, and Zhou Zhao, “Portaspeech: Portable and High-Quality Generative Text-to-Speech,” in Proceedings of MIT Press NeurIPS, Virtual Event, 2021, pp. 13963–13974.

- [3] Edresson Casanova, Christopher Shulby, Eren Gölge, et al., “Sc-Glowtts: an Efficient Zero-Shot Multi-Speaker Text-to-Speech Model,” in Proceedings of ISCA INTERSPEECH, Brno, Czechia, 2021, pp. 3645–3649.

- [4] Yihan Wu, Xu Tan, Bohan Li, et al., “Adaspeech 4: Adaptive Text to Speech in Zero-Shot Scenarios,” in Proceedings of ISCA INTERSPEECH, Incheon, Korea, 2022, pp. 2568–2572.

- [5] Edresson Casanova, Julian Weber, Christopher Dane Shulby, et al., “Yourtts: Towards Zero-Shot Multi-Speaker Tts and Zero-Shot Voice Conversion for Everyone,” in Proceedings of ACM ICML, Baltimore, Maryland, USA, 2022, pp. 2709–2720.

- [6] Yinghao Aaron Li, Cong Han, Vinay S. Raghavan, et al., “Styletts 2: Towards Human-Level Text-to-Speech through Style Diffusion and Adversarial Training with Large Speech Language Models,” in Proceedings of MIT Press NeurIPS, New Orleans, LA, USA, 2023.

- [7] Zeqian Ju, Yuancheng Wang, Kai Shen, et al., “Naturalspeech 3: Zero-Shot Speech Synthesis with Factorized Codec and Diffusion Models,” 2024.

- [8] Ziyue Jiang, Jinglin Liu, Yi Ren, et al., “Mega-Tts 2: Boosting Prompting Mechanisms for Zero-Shot Speech Synthesis,” in Proceedings of ICLR, Vienna, Austria, 2024.

- [9] Rongjie Huang, Yi Ren, Jinglin Liu, Chenye Cui, and Zhou Zhao, “Generspeech: Towards Style Transfer for Generalizable Out-of-Domain Text-to-Speech,” in Proceedings of MIT Press NeurIPS, New Orleans, LA, USA [hybrid], 2022.

- [10] Sang-Hoon Lee, Ha-Yeong Choi, Seung-Bin Kim, and Seong-Whan Lee, “Hierspeech++: Bridging the Gap Between Semantic and Acoustic Representation of Speech by Hierarchical Variational Inference for Zero-Shot Speech Synthesis,” CoRR, vol. abs/2311.12454, 2023.

- [11] Minki Kang, Dongchan Min, and Sung Ju Hwang, “Grad-Stylespeech: Any-Speaker Adaptive Text-to-Speech Synthesis with Diffusion Models,” in Proceedings of IEEE ICASSP, Rhodes Island, Greece, 2023, pp. 1–5.

- [12] Kai Shen, Zeqian Ju, Xu Tan, et al., “Naturalspeech 2: Latent Diffusion Models Are Natural and Zero-Shot Speech and Singing Synthesizers,” in Proceedings of ICLR, Vienna, Austria, 2024.

- [13] Keon Lee, Dong Won Kim, Jaehyeon Kim, and Jaewoong Cho, “Ditto-Tts: Efficient and Scalable Zero-Shot Text-to-Speech with Diffusion Transformer,” CoRR, vol. abs/2406.11427, 2024.

- [14] Chengyi Wang, Sanyuan Chen, Yu Wu, et al., “Neural codec language models are zero-shot text to speech synthesizers,” CoRR, vol. abs/2301.02111, 2023.

- [15] Sanyuan Chen, Shujie Liu, Long Zhou, et al., “Vall-E 2: Neural Codec Language Models Are Human Parity Zero-Shot Text to Speech Synthesizers,” CoRR, vol. abs/2406.05370, 2024.

- [16] Zhihao Du, Qian Chen, Shiliang Zhang, et al., “Cosyvoice: a Scalable Multilingual Zero-Shot Text-to-Speech Synthesizer Based on Supervised Semantic Tokens,” CoRR, vol. abs/2407.05407, 2024.

- [17] RVC-Boss, “Gpt-sovits,” https://github.com/RVC-Boss/GPT-SoVITS/tree/b3e8eb40c25bea1b9977195a380d40c941040419, 2024, Accessed: 2024-08-15.

- [18] Philip Anastassiou, Jiawei Chen, Jitong Chen, et al., “Seed-Tts: a Family of High-Quality Versatile Speech Generation Models,” CoRR, vol. abs/2406.02430, 2024.

- [19] Ye Jia, Yu Zhang, Ron J. Weiss, et al., “Transfer Learning from Speaker Verification to Multispeaker Text-to-Speech Synthesis,” in Proceedings of MIT Press NeurIPS, Montréal, Canada, 2018, pp. 4485–4495.

- [20] Sercan Ömer Arik, Jitong Chen, Kainan Peng, et al., “Neural Voice Cloning with a Few Samples,” in Proceedings of MIT Press NeurIPS, Montréal, Canada, 2018, pp. 10040–10050.

- [21] Neeraj Kumar, Ankur Narang, and Brejesh Lall, “Zero-Shot Normalization Driven Multi-Speaker Text to Speech Synthesis,” IEEE ACM Trans. Audio Speech Lang. Process., vol. 30, pp. 1679–1693, 2022.

- [22] Yuxuan Wang, R. J. Skerry-Ryan, Daisy Stanton, et al., “Tacotron: Towards End-to-End Speech Synthesis,” in Proceedings of ISCA INTERSPEECH, Stockholm, Sweden, 2017, pp. 4006–4010.

- [23] Peter Shaw, Jakob Uszkoreit, and Ashish Vaswani, “Self-Attention with Relative Position Representations,” in Proceedings of Association for Computational Linguistics NAACL, New Orleans, Louisiana, USA, 2018, pp. 464–468.

- [24] Yafeng Chen, Siqi Zheng, Hui Wang, et al., “Eres2netv2: Boosting short-duration speaker verification performance with computational efficiency,” 2024.

- [25] Siqi Zheng, Luyao Cheng, Yafeng Chen, et al., “3d-Speaker: a Large-Scale Multi-Device, Multi-Distance, and Multi-Dialect Corpus for Speech Representation Disentanglement,” 2023, vol. abs/2306.15354.

- [26] Ting Chen, Simon Kornblith, Mohammad Norouzi, et al., “a Simple Framework for Contrastive Learning of Visual Representations,” in Proceedings of ACM ICML, Virtual Event, 2020, pp. 1597–1607.

- [27] Xin Wang, Shinji Takaki, and Junichi Yamagishi, “Neural Source-Filter Waveform Models for Statistical Parametric Speech Synthesis,” IEEE/ACM TASLP, vol. 28, pp. 402–415, 2020.

- [28] Coqui, “Xtts-v2,” https://huggingface.co/coqui/XTTS-v2, Accessed: 2024-08-26.

- [29] Brecht Desplanques, Jenthe Thienpondt, and Kris Demuynck, “Ecapa-Tdnn: Emphasized Channel Attention, Propagation and Aggregation in Tdnn Based Speaker Verification,” in Proceedings of ISCA INTERSPEECH, Shanghai, China, 2020, pp. 3830–3834.

- [30] “Paraformer: Fast and Accurate Parallel Transformer for Non-Autoregressive End-to-End Speech Recognition,” in Proceedings of ISCA INTERSPEECH, Incheon, Korea, 2022, pp. 2063–2067.